Abstract

Cracked preserved eggs decay easily, emit a peculiar smell, and cause cross-infection. Identifying them during production is inefficient and costly. This paper proposes an online method for detecting and identifying cracked preserved eggs. First, images of preserved eggs are collected online. Then, each collected image is cut into single-egg images, and the images of different surfaces of the same preserved egg are spliced by a sequential splicing scheme and by a matrix splicing scheme. Finally, the data sets obtained with the two splicing schemes are used to build deep learning detection models. The experimental results indicate that the MobileNetV3_egg model, an improved version of the MobileNetV3_large model, achieves the best recognition of cracked preserved eggs when the matrix splicing scheme is used. Its accuracy reaches 96.3%, and its detection time for 300 images is only 4.267 s. The proposed method can meet the needs of actual production, and its application will make the identification of cracked preserved eggs more automated and intelligent.

1. Introduction

At present, the production of traditional Chinese egg products is still poorly automated [1]. In the preserved egg industry in particular, much of the work is done manually. Preserved eggs are a traditional Chinese egg product made from fresh eggs by a special pickling process. Eggs may be bumped during the handling and cleaning of raw eggs and during the transportation of preserved eggs. The resulting cracked preserved eggs are prone to spoilage, odor, and cross-infection [2] and cannot be eaten [3]. To ensure product quality, factories must remove cracked preserved eggs manually during production, which is labor-intensive. Manual sorting also reduces production efficiency and can easily cause secondary damage. Therefore, an online detection technology for preserved egg cracks is urgently needed to improve production efficiency, reduce production cost, and enable automatic and rapid removal of cracked preserved eggs in the egg industry.

Previous studies mainly used vibration signal analysis [4,5], acoustic characteristic analysis [6], machine vision [7,8], and other techniques [9] to detect cracks in fresh poultry eggs. Among the technologies applied to the online detection of poultry eggs [10,11], machine vision is the more mature [12]. In comparison, vibration signal analysis and acoustic characteristic analysis face several problems in online detection, such as difficult signal acquisition and strong signal interference. Moreover, the eggshell is corroded during the pickling of preserved eggs and becomes more fragile than that of a fresh egg [13], so such detection can easily cause secondary damage and enlarge existing cracks. Although polarization imaging [3] and acoustic characteristics [14] have been proposed for detecting cracks in preserved eggs, these methods suffer from low accuracy and high detection cost and cannot solve actual production problems.

Because of interference from the spots on the surface of preserved eggs, traditional machine vision algorithms fail to extract crack features reliably. In recent years, deep learning has developed rapidly [15], and deep learning algorithms have advantages in solving image recognition problems under a complex background [16]. Deep learning algorithms have therefore been applied to quality detection [17] and crack detection [18] of poultry eggs, and some deep learning models have been successfully applied to online detection [19,20,21]. This makes it possible to apply deep learning to the online detection of preserved egg cracks. This paper aims to introduce deep learning into the machine vision detection of preserved egg cracks. First, with preserved eggs made from duck eggs as test materials, images of preserved eggs are collected online on a three-channel egg conveyor. Then, an image preprocessing algorithm is designed so that the collected images meet the requirements of the deep learning recognition algorithm. Finally, an online deep learning detection model for preserved egg cracks is established to realize high-throughput online detection.

2. Image Acquisition

2.1. Test Materials

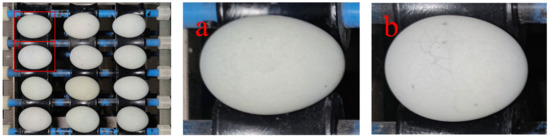

A total of 800 ordinary preserved duck eggs, including 400 cracked and 400 non-cracked eggs, were purchased from the market as test samples. After a cracked preserved egg has been stored for a period of time, the cracked region gradually turns black, so its color differs markedly from that of the rest of the eggshell. The experimental samples are shown in Figure 1.

Figure 1.

Preserved egg sample image. (a) denotes preserved egg; (b) denotes cracked preserved egg.

2.2. Image Acquisition Device

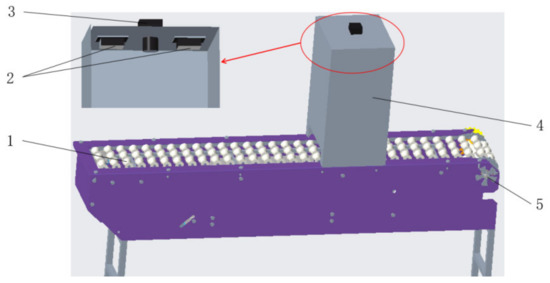

The online image acquisition device used in this paper for preserved eggs is shown in Figure 2. In industrial production, the stability and price of the camera are the main concerns. A charge-coupled device (CCD) camera is more stable than an ordinary complementary metal oxide semiconductor (CMOS) camera and is therefore better suited to industrial applications, but it is often expensive, so it is important to choose a camera that just meets the requirements. Most deep learning models take input images of 224 × 224 pixels, so this paper selects the color CCD industrial camera ui-2210re-c-hq produced by IDS (Germany), which has a resolution of 640 × 480 pixels and a frame rate of 100 fps (frames per second). Although the resolution is not high, the cracks are visible in the images, and the price is moderate, so the camera basically meets the needs of industrial production. The lens is the M0814-MP2, a fixed-focal-length industrial lens produced by COMPUTAR (Japan). The light source consists of two symmetrically placed plane light sources with adjustable brightness. The conveyor can transport 10,000 eggs per hour, and its rollers drive the preserved eggs forward while rotating them. The speed measurement device is composed of a fan blade wheel and a photoelectric sensor: the fan blades are connected to the conveyor belt so that they turn synchronously with it, and each passing blade corresponds to one station of the conveyor belt. The physical online image acquisition device is shown in Figure 3.

Figure 2.

Three-dimensional drawing of the online image acquisition device for preserved eggs. 1. Conveyor; 2. light source; 3. industrial camera; 4. black box; 5. speed measurement device.

Figure 3.

The online image acquisition device for preserved eggs.

2.3. Image Acquisition Method

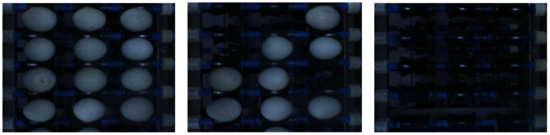

In this paper, an online automatic acquisition program is designed to obtain the images of preserved eggs. The image acquisition time is determined by the speed measurement device shown in Figure 2. The photoelectric sensor outputs a low level when it is not occluded and a high level when it is occluded. Therefore, each time the output of the photoelectric sensor switches from a low level to a high level, the conveyor belt has moved one station. Based on this working principle, the online image acquisition program is designed as follows: ① read the output level of the photoelectric sensor and send a signal to the camera whenever the output switches from a low level to a high level; ② on receiving the signal, the camera immediately captures an image of the current position and saves it to the computer. Since the conveyor belt runs at about 1 station per second, the camera used in this study easily meets the required acquisition speed. A collected image is shown in Figure 4.
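The trigger logic in steps ① and ② amounts to rising-edge detection on the sensor output. The following is a minimal sketch of that idea, assuming the sensor level has already been sampled into a sequence of 0 (low) and 1 (high) values; the function name `rising_edge_indices` and the sample data are hypothetical and are not taken from the acquisition program used in this study.

```python
from typing import Iterable, List


def rising_edge_indices(levels: Iterable[int]) -> List[int]:
    """Return the sample indices where the level switches from low (0) to high (1).

    Each returned index marks a moment at which the camera would be triggered,
    i.e. the conveyor belt has advanced by one station.
    """
    triggers = []
    previous = None  # no previous sample yet, so the first sample never triggers
    for index, level in enumerate(levels):
        if previous == 0 and level == 1:
            triggers.append(index)
        previous = level
    return triggers


# Hypothetical sampled sensor output: three low-to-high transitions.
samples = [0, 0, 1, 1, 0, 1, 0, 0, 1]
print(rising_edge_indices(samples))  # [2, 5, 8]
```

Triggering on the transition rather than on the level itself means that a blade that stays in front of the sensor for several samples still produces only one capture per station.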

Figure 4.

An image collected for preserved eggs by the online image acquisition device.

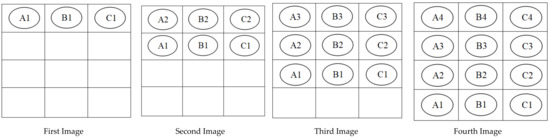

It can be seen from Figure 4 that each collected image covers four consecutive stations. The preserved egg rotates with the roller as the conveyor belt moves. Although the exact value depends on egg size, a preserved egg completes roughly one rotation cycle while moving three stations. Therefore, collecting images at four stations ensures that all surfaces of the preserved egg are captured. Because the conveyor belt runs fast, some stations may be empty, as shown in Figure 4: the preserved eggs are fed manually onto the rollers while the conveyor is running, which produces vacancies. The movement mode of the conveyor is shown in Figure 5, where A, B, and C denote the three channels and the number denotes the station; that is, A1 is the first station of the first channel. The roller moves up and down. With this acquisition process, four images of different surfaces of each preserved egg are collected, and together they contain the complete surface information of the egg.

Figure 5.

Online image acquisition process of preserved eggs.

2.4. Test Platform

This experiment is performed on a computer equipped with AMD Ryzen r5-3600 CPU (3.60 GHz), 32 GB main memory, and Geforce GTX 1070 GPU (8 GB video memory).

3. Image Preprocessing

3.1. Image Clipping and Stitching

Each collected image contains 12 stations. First, the image is cut so that each station is isolated; then, the images of the same station in four original images are spliced into one image; finally, the spliced image is used to detect and recognize cracked preserved eggs.

Unlike the existing online detection technique [22], the image acquisition method used in this study ensures that each station appears at the same position in every image. Therefore, a fixed-position clipping method is adopted to cut the original image into the images of 12 stations. Then, the images of the same station are extracted from four adjacent images and spliced into a new image. The clipping result is shown in Figure 6. The first image is numbered from station 1, and the second image is numbered from station 2. In this scheme, the collected images of preserved eggs are cut and numbered in turn.
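Because every station sits at a fixed position, the clipping step reduces to array slicing. The sketch below illustrates this under stated assumptions: the frame is 640 × 480 pixels (the camera resolution), the three channels lie side by side and the four stations follow one another vertically, and each station crop is 170 × 111 pixels (inferred from the spliced image sizes of 170 × 444 and 340 × 222 pixels reported in Section 5.1). The offsets `X0` and `Y0` and the function name `crop_stations` are hypothetical; the actual crop coordinates are not given in this paper.

```python
import numpy as np

TILE_W, TILE_H = 170, 111       # single-station crop size (inferred, see lead-in)
N_CHANNELS, N_STATIONS = 3, 4   # 3 channels x 4 stations = 12 stations per frame
X0, Y0 = 65, 18                 # hypothetical top-left offset of the station grid


def crop_stations(frame: np.ndarray, x0: int = X0, y0: int = Y0) -> dict:
    """Cut one frame into 12 fixed-position crops keyed by (channel, station)."""
    tiles = {}
    for channel in range(N_CHANNELS):
        for station in range(N_STATIONS):
            top = y0 + station * TILE_H
            left = x0 + channel * TILE_W
            tiles[(channel, station)] = frame[top:top + TILE_H, left:left + TILE_W]
    return tiles


# A blank frame with the camera resolution (height 480, width 640, 3 color planes).
frame = np.zeros((480, 640, 3), dtype=np.uint8)
tiles = crop_stations(frame)
print(len(tiles), tiles[(0, 0)].shape)  # 12 (111, 170, 3)
```

Fixed-position slicing needs no segmentation or egg localization, which is what makes the synchronized triggering in Section 2.3 useful for preprocessing.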

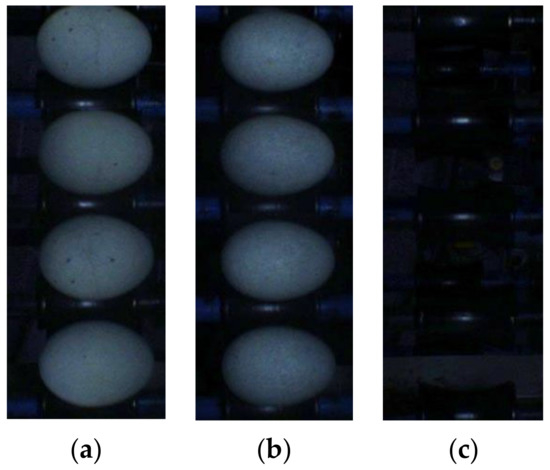

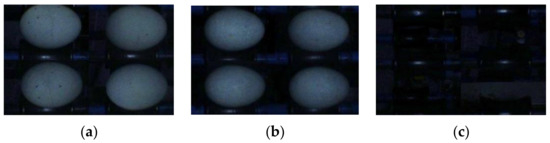

It can be seen from Figure 6 that, except in the first three images, every station number appears in four images, so the four crops with the same number can be extracted and spliced into a new image. Two splicing schemes can be used. One is the sequential splicing scheme, which is used by most existing online detection techniques [23] and retains the position information of the preserved egg; the other is the matrix splicing scheme (2 × 2). The splicing results of the sequential splicing scheme and the matrix splicing scheme are shown in Figure 7 and Figure 8, respectively.
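The two splicing schemes can be sketched with array concatenation. This is an illustrative example only: it assumes four crops of 170 × 111 pixels per egg (consistent with the spliced sizes of 170 × 444 and 340 × 222 pixels reported in Section 5.1), a top-to-bottom order for the sequential scheme, and a row-major order for the 2 × 2 matrix scheme. The function names are hypothetical.

```python
import numpy as np


def splice_sequential(views):
    """Stack the four views top to bottom (result: 170 wide x 444 high)."""
    return np.concatenate(views, axis=0)


def splice_matrix(views):
    """Arrange the four views in a 2 x 2 grid (result: 340 wide x 222 high)."""
    top = np.concatenate(views[:2], axis=1)
    bottom = np.concatenate(views[2:], axis=1)
    return np.concatenate([top, bottom], axis=0)


# Four dummy views of one egg; view k is filled with the value k.
views = [np.full((111, 170, 3), k, dtype=np.uint8) for k in range(4)]
print(splice_sequential(views).shape)  # (444, 170, 3)
print(splice_matrix(views).shape)      # (222, 340, 3)
```

The matrix layout is much closer to square than the sequential layout, which matters once the image is resized to a square network input.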

Figure 6.

The clipping process of the images of preserved eggs.

Figure 7.

The splicing result of the sequential splicing scheme. (a) Cracked preserved egg. (b) Preserved egg. (c) Vacancy.

Figure 8.

The splicing result of the matrix splicing scheme. (a) Cracked preserved egg. (b) Preserved egg. (c) Vacancy.

During the online image acquisition process, a total of 121 dynamic images were collected. The collected images were cut and spliced as described above, yielding 400 images of cracked preserved eggs, 400 images of non-cracked preserved eggs, and 651 vacancy images. To balance the classes, 400 vacancy images were randomly selected and the redundant vacancy images were discarded. The two final data sets are listed in Table 1: for each of the sequential splicing scheme and the matrix splicing scheme, there are 400 images of cracked preserved eggs, 400 images of non-cracked preserved eggs, and 400 vacancy images. The two data sets are used separately to train the deep learning models, and the appropriate splicing scheme is selected by comparing model performance.
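The class-balancing step can be illustrated as random sampling without replacement. The sketch below assumes the 651 vacancy images are identified by integer indices; the function name `subsample` and the fixed seed are hypothetical choices made only so that the example is reproducible.

```python
import random


def subsample(items, k, seed=0):
    """Randomly keep k items without replacement (seeded for reproducibility)."""
    rng = random.Random(seed)
    return rng.sample(list(items), k)


vacancy_ids = list(range(651))      # stand-ins for the 651 vacancy images
kept = subsample(vacancy_ids, 400)  # 400 images kept, 251 discarded
print(len(kept), len(vacancy_ids) - len(kept))  # 400 251
```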

Table 1.

Online inspection data set.

3.2. Data Enhancement

Training a deep learning model usually requires a large number of samples to achieve a good training effect. To improve the generalization ability of the model and to simulate preserved egg images collected under different angles and illumination conditions in the actual environment, a variety of data enhancement methods are used to expand the two data sets: (1) translation and rotation. The position and orientation of the image are changed by translating, rotating, or scaling it to simulate image data of preserved eggs at different angles; (2) horizontal and vertical flip. The images are flipped horizontally and vertically to simulate preserved eggs in different arrangement orders and placement directions; (3) random brightness and contrast enhancement. The brightness and contrast of the image are changed with random intensity to simulate different illumination intensities; (4) random hue and saturation. The hue and saturation of the image are randomly adjusted to simulate acquisition in multiple scenes.
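Two of these enhancement methods, flipping (2) and random brightness and contrast (3), can be sketched with NumPy alone. The example below is a simplified illustration and not the augmentation pipeline used in this study: the parameter ranges `max_gain` and `max_shift`, the flip probability of 0.5, and the function names are all assumptions.

```python
import numpy as np


def random_flip(image, rng):
    """Flip horizontally and/or vertically, each with probability 0.5."""
    if rng.random() < 0.5:
        image = image[:, ::-1]   # horizontal flip (reverse columns)
    if rng.random() < 0.5:
        image = image[::-1, :]   # vertical flip (reverse rows)
    return image


def random_brightness_contrast(image, rng, max_gain=0.2, max_shift=0.2):
    """Apply out = alpha * image + beta with random contrast gain and brightness shift."""
    alpha = 1.0 + rng.uniform(-max_gain, max_gain)       # contrast factor
    beta = 255.0 * rng.uniform(-max_shift, max_shift)    # brightness offset
    out = alpha * image.astype(np.float32) + beta
    return np.clip(out, 0, 255).astype(np.uint8)


rng = np.random.default_rng(0)
# Random stand-in for a matrix-spliced image (222 rows, 340 columns).
image = rng.integers(0, 256, size=(222, 340, 3), dtype=np.uint8)
augmented = random_brightness_contrast(random_flip(image, rng), rng)
print(augmented.shape, augmented.dtype)  # (222, 340, 3) uint8
```

Clipping to the range 0 to 255 before converting back to `uint8` avoids wrap-around artifacts when the brightness offset pushes pixel values out of range.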

4. Image Classification Model of Preserved Egg Crack

4.1. The Model Structure of MobileNetV3

To apply the deep learning model to devices with limited computing power, such as mobile terminals and embedded devices, a small deep learning model called MobileNet is used in this study. Its main body is a depthwise separable convolution network that splits the standard convolution into two operations: depthwise convolution and pointwise convolution. This greatly reduces the amount of computation and yields a fast and compact deep learning model. Test results indicate that the performance of the model is not significantly lower than that of a standard convolutional neural network [24]. Subsequently, Sandler et al. [25] introduced the inverted residual structure into the network and proposed the MobileNetV2 network, which improves the detection speed and reduces the number of network parameters [26]. Howard et al. [27] improved the MobileNetV2 network and proposed the MobileNetV3 network, which further improves the detection speed and detection effect.
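The reduction in computation can be made concrete with the cost comparison given in the MobileNet paper [24]. The symbols below are not used elsewhere in this article and are introduced here only for illustration: $D_K$ is the kernel size, $M$ and $N$ are the numbers of input and output channels, and $D_F$ is the spatial size of the feature map. The numerator is the cost of a depthwise convolution followed by a pointwise convolution, and the denominator is the cost of a standard convolution:

```latex
\frac{D_K^2 \, M \, D_F^2 + M \, N \, D_F^2}{D_K^2 \, M \, N \, D_F^2}
  = \frac{1}{N} + \frac{1}{D_K^2}
```

For a 3 × 3 kernel ($D_K = 3$) and a large number of output channels $N$, the ratio approaches $1/9$, so the separable form needs roughly eight to nine times fewer multiply-add operations than the standard convolution.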

The improvements of MobileNetV3 include: (1) the first 3 × 3 convolution layer of the original network has 32 kernels, which MobileNetV3 reduces to 16. It has been verified that this improves the running speed of the model without significantly reducing its performance; (2) some network layers use hard swish (H-swish) instead of the rectified linear unit (ReLU) as the activation function, which improves the detection accuracy of the model; (3) the MobileNetV3 network introduces squeeze-and-excitation (SE) blocks to improve the inverted residual structure (IRS), and it uses the resulting SE inverted residual structure (SE IRS) in some network layers to improve the recognition ability of the model. The initial form of the network is called MobileNetV3_large, and the network with fewer building blocks is called MobileNetV3_small, which has a simpler structure and runs faster. The network structure of MobileNetV3_large is shown in Table 2.
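For reference, the H-swish activation mentioned in item (2) is defined in the MobileNetV3 paper [27] as x · ReLU6(x + 3) / 6. A minimal NumPy sketch of this definition follows; the function names are chosen here for illustration.

```python
import numpy as np


def relu6(x):
    """ReLU capped at 6: min(max(x, 0), 6)."""
    return np.clip(x, 0.0, 6.0)


def hard_swish(x):
    """H-swish: x * ReLU6(x + 3) / 6, a piecewise approximation of swish."""
    return x * relu6(x + 3.0) / 6.0


x = np.array([-4.0, 0.0, 3.0, 6.0])
print(hard_swish(x))  # zero for x <= -3, equal to x for x >= 3
```

Because it uses only a clip, an addition, and a multiplication, H-swish avoids the exponential in the sigmoid-based swish, which suits devices with limited computing power.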

Table 2.

MobileNetV3_large network parameters.

4.2. Evaluation Indicators

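In this paper, precision (P), recall (R), and accuracy (A) are used as evaluation indicators to compare the models. They are calculated according to Equations (1)–(3):

$$P = \frac{TP}{TP + FP} \tag{1}$$

$$R = \frac{TP}{TP + FN} \tag{2}$$

$$A = \frac{TP + TN}{TP + TN + FP + FN} \tag{3}$$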

TP represents the number of positive samples that are correctly predicted as positive; TN represents the number of negative samples that are correctly predicted as negative; FP represents the number of negative samples that are incorrectly predicted as positive, and FN represents the number of positive samples that are incorrectly predicted as negative. Although the data set in this paper includes cracked preserved eggs, non-cracked preserved eggs, and vacancies, the main evaluation indicator of the model is the recognition accuracy of cracked preserved eggs. Therefore, only the recognition accuracy of the model for cracked preserved eggs and non-cracked preserved eggs is calculated.
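The three indicators follow directly from the four counts defined above. The sketch below computes them for one set of hypothetical counts; these numbers are illustrative only and are not results from this study.

```python
def precision_recall_accuracy(tp, tn, fp, fn):
    """Compute precision, recall, and accuracy from confusion-matrix counts.

    Returns 0.0 for an indicator whose denominator is zero.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    return precision, recall, accuracy


# Hypothetical counts, with cracked preserved eggs as the positive class.
p, r, a = precision_recall_accuracy(tp=45, tn=40, fp=10, fn=5)
print(round(p, 3), round(r, 3), round(a, 3))  # 0.818 0.9 0.85
```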

With actual production in mind, the model is packaged and called from a program written in C++. The detection time is defined as the time from the start to the end of this program when detecting 300 pictures, which includes the time spent on model calling, target detection, and result output.

4.3. Model Training

The two data sets are each divided into a training set, a validation set, and a test set at a ratio of 7:2:1. The image size is adjusted to 224 × 224 pixels, and then the models are trained. To obtain the best detection model, this paper trains several common classification models, including MobileNetV3_small, MobileNetV3_large, ResNet18, ResNet34, DarkNet53, and DenseNet121. The training results are listed in Table 3.
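A 7:2:1 split can be sketched as a seeded shuffle followed by slicing. Whether the split in this study was made per class or over the whole data set is not stated; the example below assumes a per-class split of 400 images, and the function name `split_721` and the seed are hypothetical.

```python
import random


def split_721(items, seed=0):
    """Shuffle and split items into training, validation, and test parts at 7:2:1."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n = len(items)
    n_train = n * 7 // 10   # integer arithmetic avoids floating-point rounding
    n_val = n * 2 // 10
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]
    return train, val, test


train, val, test = split_721(range(400))
print(len(train), len(val), len(test))  # 280 80 40
```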

Table 3.

The training results of different models under the two splicing schemes.

It can be seen from Table 3 that the deep learning models fully recognize the vacancy images in the data sets obtained by both splicing schemes. Meanwhile, no preserved egg image is predicted as a vacancy image, indicating that the vacancy images are clearly different and easy to recognize. In this study, the models are therefore evaluated only by their recognition accuracy for cracked and non-cracked preserved eggs. A comparison of the six deep learning models shows that the matrix splicing method yields a significantly better training effect than the sequential splicing method, and the detection accuracy of the models is higher than 90%. The results show that deep learning models can achieve online detection of cracked preserved eggs, and the model detection effect is independent of the position of preserved eggs. Moreover, the detection accuracy of the MobileNetV3_large, ResNet34, and DenseNet121 models is significantly higher than that of the other models. MobileNetV3 has an obvious advantage in detection speed, so it can better meet the running speed requirement of online detection.

5. Optimization and Discussion of the Detection Model

5.1. Effect of Image Resolution on Model Detection

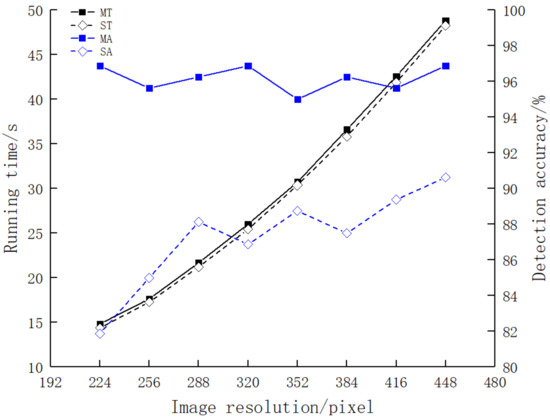

The images obtained by the sequential splicing scheme and the matrix splicing scheme are 170 × 444 and 340 × 222 pixels in size, respectively. When the sequentially spliced image is reduced to 224 × 224 pixels, too much height and orientation information is lost, which leads to the low accuracy of the model on the data set obtained by the sequential splicing scheme. To investigate the relationship between image size and model accuracy, this paper varies the image size and tests the accuracy of the MobileNetV3_large model. The test results are shown in Figure 9.
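One way to read the loss of height information is to compare the per-axis scale factors applied when each spliced image is resized to the square network input. The short calculation below uses only the image sizes stated above; the function name `resize_scale` is hypothetical.

```python
def resize_scale(width, height, target=224):
    """Return the (horizontal, vertical) scale factors for resizing to target x target."""
    return target / width, target / height


seq_sx, seq_sy = resize_scale(170, 444)  # sequential splicing scheme
mat_sx, mat_sy = resize_scale(340, 222)  # matrix splicing scheme
print(round(seq_sx, 3), round(seq_sy, 3))  # 1.318 0.505
print(round(mat_sx, 3), round(mat_sy, 3))  # 0.659 1.009
```

The sequentially spliced image is compressed to about half its height while being stretched horizontally, whereas the matrix-spliced image keeps its height almost unchanged and is only compressed horizontally.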

Figure 9.

The relationship between model running time, detection accuracy, and image size. MT denotes running time when using the matrix splicing scheme; ST denotes running time when using the sequential splicing scheme; MA denotes detection accuracy when using the matrix splicing scheme; SA denotes detection accuracy when using the sequential splicing scheme.

It can be seen from Figure 9 that the running time of the model has a quadratic relationship with the image size, with a coefficient of determination R² as high as 0.99. Meanwhile, the choice of splicing scheme has little impact on the running time of the model. When the matrix splicing scheme is used, the image size has little effect on the detection accuracy of the model, and the accuracy fluctuates slightly between 95% and 97%. When the sequential splicing scheme is used, the image size has an obvious impact on the detection accuracy, and the accuracy increases with the image size. Overall, the matrix splicing scheme yields a better detection effect than the sequential splicing scheme. Therefore, the matrix splicing scheme is finally selected in this paper, and the image size is set to 224 × 224 pixels, which ensures both high detection accuracy and high detection speed.

5.2. Detection of Cracked Preserved Eggs Based on Improved MobileNetV3_Large

The authors of MobileNetV3 have demonstrated its strong multi-class classification ability on conventional image classification data sets. The classification problem to be solved in this paper is binary classification. To further reduce the amount of computation and improve the running speed, this paper modifies the initial MobileNetV3_large network; the resulting structure (MobileNetV3_egg) is shown in Table 4. In the first convolution layer, the number of convolution kernels is reduced from 16 to 8, and the number of output feature maps is correspondingly reduced from 16 to 8, which reduces the number of features extracted by the network. To preserve the structure and depth of the original network, the number of feature maps extracted at each layer of the network is halved, and finally, the number of features extracted for classification is reduced from 1280 to 640.

Table 4.

The structure of MobileNetV3_egg.

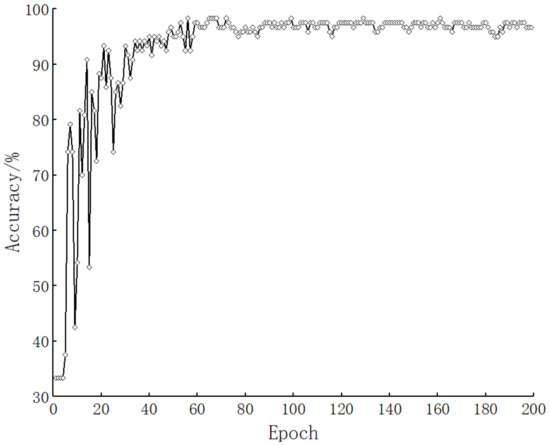

The training process of the MobileNetV3_egg model is shown in Figure 10. The accuracy increases with the number of epochs after training begins. After about 59 epochs, the accuracy of the model stabilizes, which indicates that the modified model trains successfully. Table 5 compares the MobileNetV3_egg model with the initial MobileNetV3 models. The number of parameters of the improved model is reduced from 5.153 × 10⁶ to 1.644 × 10⁶. The detection time of MobileNetV3_egg for 300 pictures is only 4.267 s, so its detection speed is more than three times that of the initial MobileNetV3, and its accuracy reaches 96.3%. Compared with the original MobileNetV3_small model, the improved MobileNetV3_egg model has fewer parameters, higher accuracy, and faster detection speed.
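The reported figures can be put in perspective with a short calculation that uses only numbers stated in this paper: 300 images in 4.267 s, a conveyor capacity of 10,000 eggs per hour, and the two parameter counts. It assumes one spliced image per egg, as in the preprocessing described in Section 3.1; the variable names are chosen here for illustration.

```python
images, seconds = 300, 4.267

per_image_ms = 1000 * seconds / images    # average time per spliced image
throughput = images / seconds             # images (eggs) processed per second
conveyor_rate = 10_000 / 3600             # eggs delivered per second by the conveyor
param_ratio = 5.153e6 / 1.644e6           # parameter reduction factor

print(round(per_image_ms, 2))   # 14.22 ms per image
print(round(throughput, 1))     # 70.3 images per second
print(round(conveyor_rate, 2))  # 2.78 eggs per second
print(round(param_ratio, 2))    # 3.13
```

Under these assumptions the model processes about 70 images per second against roughly 2.8 eggs per second delivered by the conveyor, which leaves a wide margin for image acquisition and preprocessing.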

Figure 10.

Changes in accuracy of MobileNetV3_egg network during training.

Table 5.

Comparison of the improved MobileNetV3_egg model to MobileNetV3_large and MobileNetV3_small.

5.3. Discussion

Many studies have addressed egg crack detection. At present, the main detection methods are machine vision detection and acoustic detection, each with its own advantages and disadvantages. Acoustic detection is more sensitive to cracks: it captures small cracks from differences between acoustic signals and can therefore detect small cracks that are not easily seen by the naked eye. However, the acquisition of acoustic signals is strongly affected by the surrounding environment, so a special acquisition device is often needed, and the method cannot achieve a suitable detection effect in a noisy environment. Because online detection equipment itself produces considerable noise, acoustic detection is difficult to apply online. The works of [28,29] adopt acoustic detection methods to realize crack detection. In recent years, however, the need for a successful online application of egg crack detection has become more and more urgent, and machine vision has gradually become the mainstream approach. It offers fast detection, low requirements for the working environment, and low equipment cost, but it performs poorly on cracks that are difficult for human eyes to observe. The works of [10,17] adopt machine vision to realize the online detection of eggshell cracks, and the work of [17] is the closest to our study. With the rapid development of deep learning in recent years, deep learning models have made great progress in image processing. Owing to their learning ability, they often achieve higher accuracy than traditional image processing methods while requiring a simpler programming process. At present, there are few studies on the application of deep learning models to the online detection of eggshell cracks.
Therefore, the method proposed in this paper provides a reference for implementing online detection based on deep learning models. Compared with the work of [17], the model constructed in this paper is simpler, so it offers higher detection efficiency, fewer parameters, and less computation, and it places lower demands on the computing power of online detection equipment. The image acquisition equipment used in this paper also has higher efficiency and better stability, which gives it better application prospects. A limitation of this study is its insufficient ability to detect microcracks on the preserved egg surface that are difficult for human eyes to observe. In future work, we will increase the sample size, add samples of microcracked preserved eggs, and broaden the application scope of the model.

6. Conclusions

In this paper, the images of preserved eggs are dynamically collected on the three-channel egg conveyor, and the position of each station in each collected image remains unchanged. The collected images are cut into single preserved egg images at fixed station positions, and the images of the same preserved egg in four adjacent images are spliced by the matrix splicing scheme and the sequential splicing scheme. Next, taking the images obtained by the two splicing schemes as data sets, multiple deep learning classification models are trained, and their detection speed and detection effect are comprehensively compared. The comparison indicates that MobileNetV3_large is the best detection model. Subsequently, the effects of the splicing scheme and the image size on detection speed and detection effect are investigated experimentally. It is determined that the matrix splicing scheme performs best and that the optimal input image size is 224 × 224 pixels. Finally, the deep learning model is improved for the detection task of this paper, and the improved MobileNetV3_large model (MobileNetV3_egg) has only 1.644 × 10⁶ parameters. Its detection time for 300 images is only 4.267 s, and its detection accuracy reaches 96.3%. The test results show that the detection method in this paper achieves high accuracy in identifying cracked preserved eggs and can meet actual production needs. In addition, the proposed method does not require high computing power, which makes it feasible for high-throughput online detection of cracked preserved eggs.

Author Contributions

Conceptualization, Q.W.; methodology, W.T.; software, W.T.; validation, W.T., J.H. and Q.W.; formal analysis, W.T.; investigation, J.H.; resources, Q.W.; data curation, W.T.; writing—original draft preparation, W.T.; writing—review and editing, W.T. and Q.W.; visualization, Q.W.; supervision, Q.W.; project administration, Q.W.; funding acquisition, Q.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (no. 32072302).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available.

Acknowledgments

The authors wish to thank all the reviewers who participated in the review during the preparation of this manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Yuan, J.; Li, Y.; Liu, X.M.; Zhao, X.X.; He, L.F. Structure design of egg auto-picking system and manipulator motion planning. Trans. Chin. Soc. Agric. Eng. 2016, 32, 48–55.

- Mei, Z.M.; Wang, S.C.; Zhang, R. Compression test and elastic modulus and Poisson’s ratio of preserved egg. J. Huazhong Agric. Univ. 2015, 34, 130–133.

- Wang, F.; Wen, Y.X.; Tan, Z.J.; Cheng, F.; Wei, W.; Li, Z.; Yi, W.S. Nondestructive detecting cracks of preserved eggshell using polarization technology and cluster analysis. Trans. Chin. Soc. Agric. Eng. 2014, 30, 249–255.

- Sun, L.; Feng, S.Y.; Chen, C.; Liu, X.L.; Cai, J.R. Identification of eggshell crack for hen egg and duck egg using correlation analysis based on acoustic resonance method. J. Food Process. Eng. 2020, 43, e13430.

- Lin, H.; Xu, P.T.; Sun, L.; Zhao, J.W.; Cai, J.R. Identification of eggshell crack using multiple vibration sensors and correlative information analysis. J. Food Process. Eng. 2018, 41, e12894.

- Jin, C.; Xie, L.J.; Ying, Y.B. Eggshell crack detection based on the time-domain acoustic signal of rolling eggs on a step-plate. J. Food Eng. 2015, 153, 53–62.

- Yang, J.; Xia, C.Y.; Pan, H.; Shi, Y.; Li, X.Y. Research of test model for eggshell crack detection. Adv. Mat. Res. 2014, 2863, 655–658.

- Priyadumkol, J.; Kittichaikarn, C.; Thainimit, S. Crack detection on unwashed eggs using image processing. J. Food Eng. 2017, 209, 76–82.

- Yang, J.; Pan, H.; Peng, Z.W.; Li, X.Y. Based on Vibration and improved GRNN identify eggshell crack. Appl. Mech. Mater. 2014, 2900, 404–408.

- Sun, K.; Ma, L.; Pan, L.Q.; Tu, K. Sequenced wave signal extraction and classification algorithm for duck egg crack on-line detection. Comput. Electron. Agr. 2017, 142, 429–439.

- Luo, H.; Yan, S.M.; Lu, W.; Zhang, C.Y.; Dai, D.J. Micro-cracked eggs online detection method based on force-acoustic features. Trans. Chin. Soc. Agric. Mach. 2016, 47, 224–230.

- Wang, M.; Yu, J.Y.; Hu, Y.X.; Wang, Y.G.; Liu, X.; Yu, F. Application of nondestructive testing technology in detection of egg quality. Food. Sci. Tech. 2021, 46, 268–272.

- Mei, Z.M.; Zhang, R.; Zhang, L.; Wang, S.C. Experimental study on preserved egg breakage mechanism and finite element analysis. Adv. J. Food Sci. Tech. 2016, 10, 313–325.

- Liu, L.; Fu, M.Z.; Wang, S.C. Preserved egg crack detection based on wavelet energy spectrum and BP neutral network. J. Huazhong Agric. Univ. 2015, 34, 130–133.

- Zhang, R.; Li, W.P.; Mo, T. Review of deep learning. Infor. Control. 2018, 47, 385–397.

- Yuan, Z.M.; Yuan, H.J.; Yan, Y.X. Automatic recognition and classification of field insects based on lightweight deep learning model. J. Jilin Univ. 2021, 51, 1131–1139.

- Turkoglu, M. Defective egg detection based on deep features and Bidirectional Long-Short-Term-Memory. Comput. Electron. Agr. 2021, 185, 106152.

- Ran, R.; Xu, X.H.; Qiu, S.H.; Cui, X.P.; Ou, Y.B. Review of crack detection methods based on deep convolutional neural networks. Comp. Eng. Appl. 2021, 57, 23–35.

- Wen, S.P.; Zhou, Z.J.; Zhang, X.Y.; Chen, Z.H. Online detection system of bearing roller’s surface defects based on computational vision. J. South China Univ. Tech. 2020, 48, 76–87.

- Li, S.J.; Hu, D.Y.; Gao, S.M.; Lin, J.H.; An, X.S.; Zhu, M. Real-time classification and detection of citrus based on improved single short multibox detecter. Trans. Chin. Soc. Agric. Eng. 2019, 35, 307–313.

- Yuan, P.S.; Shen, C.J.; Xu, H.L. Fine-grained mushroom phenotype recognition based on transfer learning and bilinear CNN. Trans. Chin. Soc. Agric. Mach. 2021, 52, 151–158.

- Lv, S.Z.; Du, W.L.; Chen, Z.; Chen, W.; Surigalatu. On-line measuring method of buckwheat hulling efficiency parameters based on machine vision. Trans. Chin. Soc. Agric. Mach. 2019, 50, 35–43.

- Li, L.; Peng, Y.K.; Li, Y.Y. Design and experiment on grading system for online non-destructive detection of internal and external quality of apple. Trans. Chin. Soc. Agric. Eng. 2018, 34, 267–275.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

- Li, Q.X.; Wang, Q.H.; Xiao, S.J.; Gu, W.; Ma, M.H. Detection method for fertilizing information of group duck eggs based on deep learning. Trans. Chin. Soc. Agric. Mach. 2021, 52, 193–200.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V. Searching for MobileNetV3. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27–28 October 2019.

- Wang, H.J.; Mao, J.H.; Zhang, J.Y.; Jiang, H.Y.; Wang, J.P. Acoustic feature extraction and optimization of crack detection for eggshell. J. Food Eng. 2016, 171, 240–247.

- Li, Y.Y.; Dhakal, S.; Peng, Y.K. A machine vision system for identification of micro-crack in egg shell. J. Food Eng. 2012, 109, 127–134.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).