Evaluation and Testing System for Automotive LiDAR Sensors

Abstract

1. Introduction

- An evaluation and testing platform for measuring several parameters of LiDAR sensors for automotive applications;

- A point cloud filter-based approach to evaluate several characteristics of a LiDAR sensor at the reception level;

- A desktop and an embedded approach for deploying the testing platform;

- Validation of the platform through testing and evaluation of a commercial off-the-shelf (COTS) LiDAR sensor.

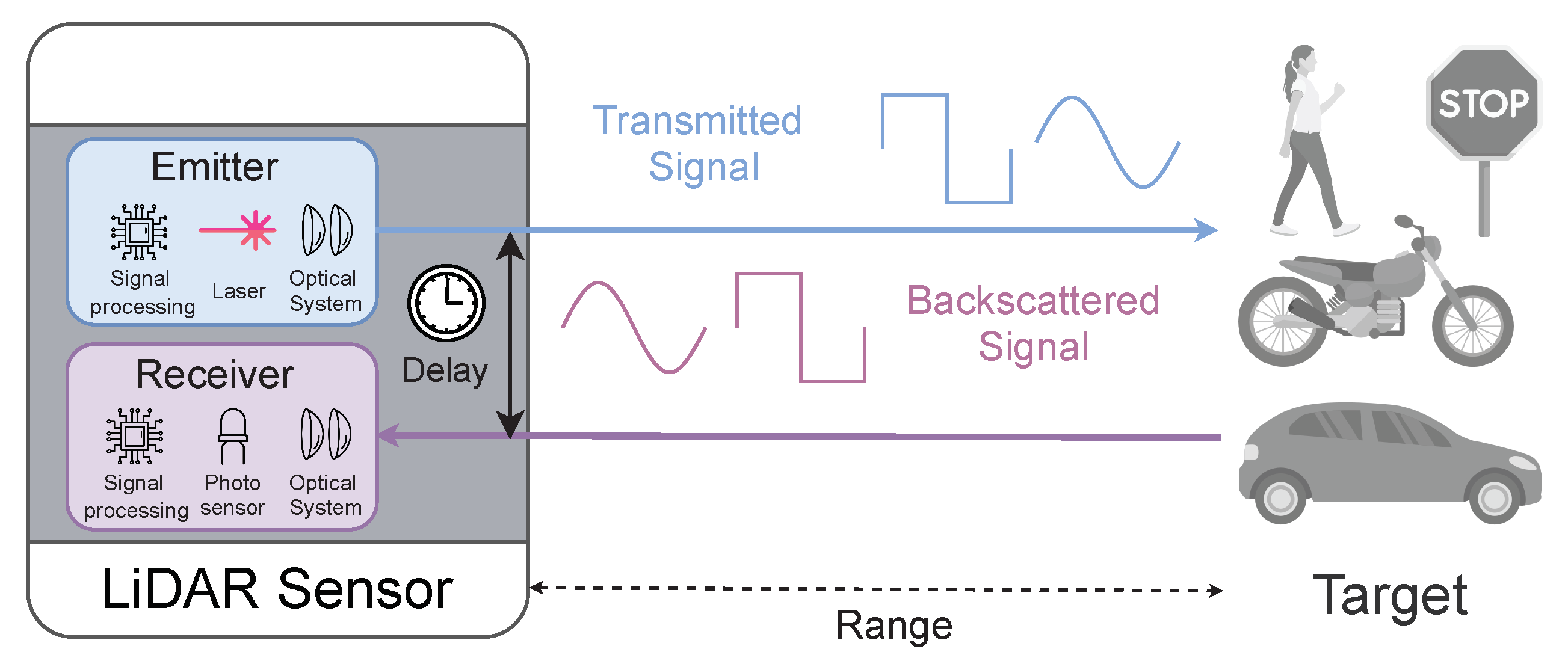

2. LiDAR Sensors for Automotive

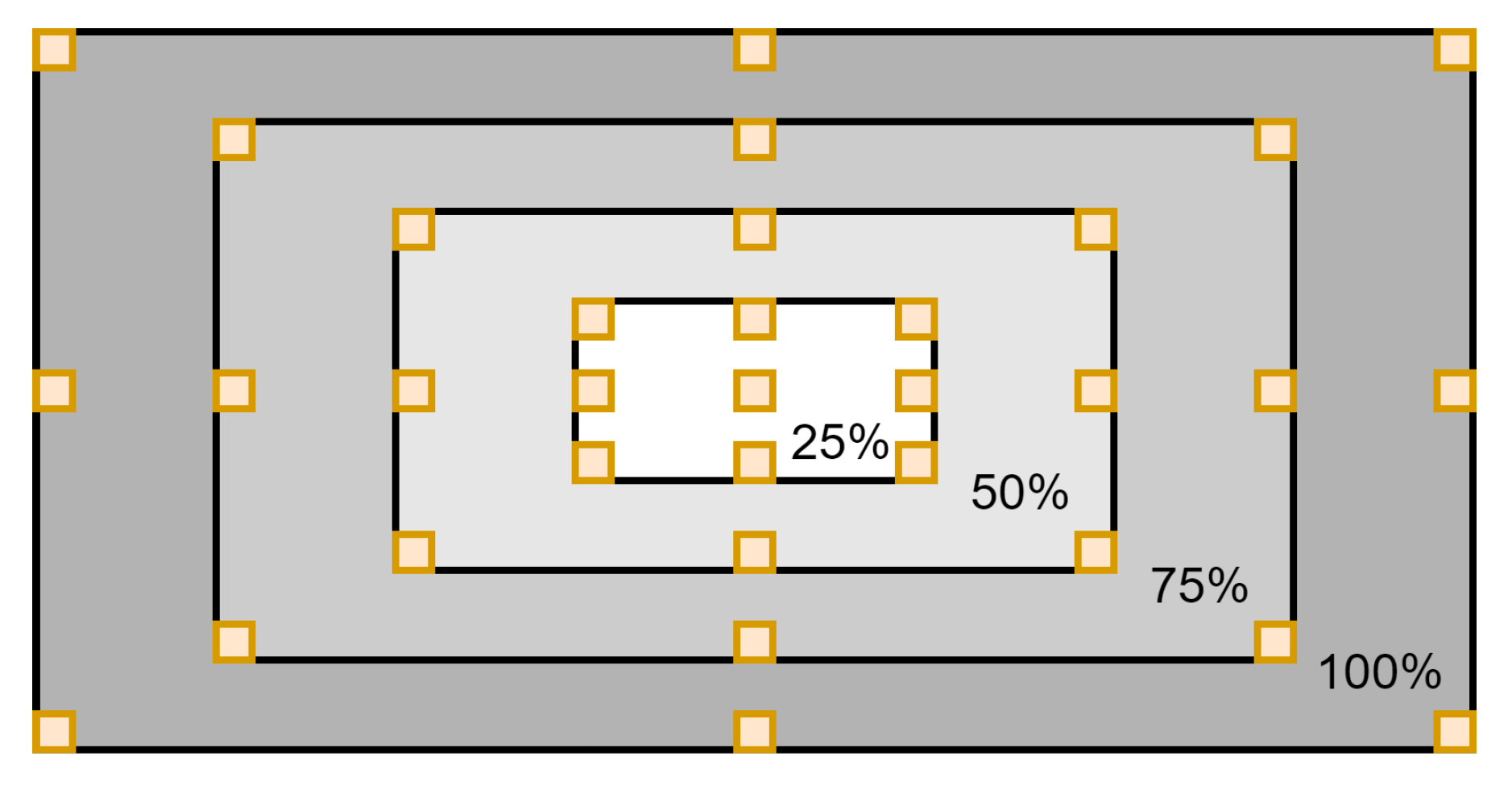

- The Field of View (FoV) defines the maximum angles at which a LiDAR sensor is able to detect objects, as shown in Figure 3. When the sensor scans along two axes, it covers a 3D area defined by the Vertical FoV (VFoV) and the Horizontal FoV (HFoV). This test is designed to identify the sensor’s maximum detection angles in order to validate its specified values.

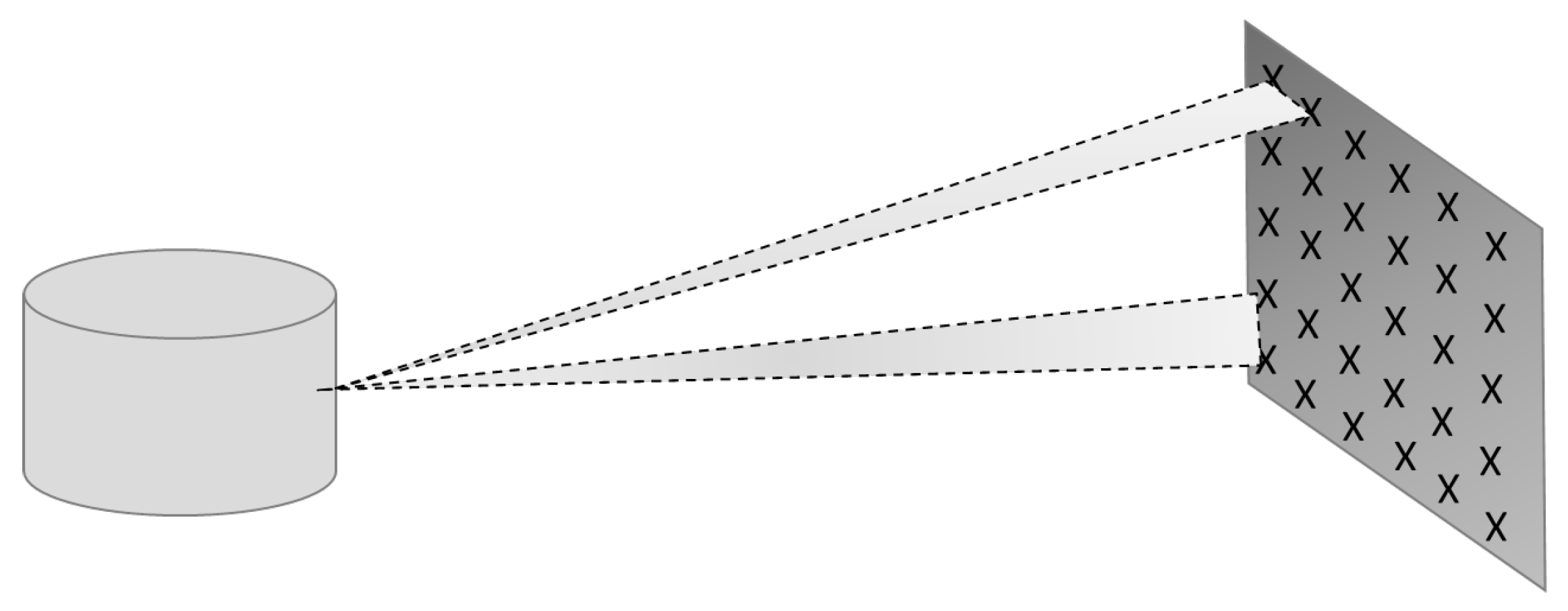

- The Angular Resolution (AR) represents the sensor’s ability to scan and detect objects within the FoV, as depicted in Figure 4; it corresponds to the angular step between consecutive measurement points. Higher resolutions (smaller angular steps) leave smaller blind spots between laser firings, enabling the detection of small objects and a more detailed view of the environment, particularly at longer detection ranges. Thus, this test is designed to identify the angular resolution both vertically and horizontally in different areas of the FoV in order to verify that the collected values match the requirements and/or the sensor’s characteristics as defined by the manufacturer.

- Background Light and Sunlight can have a severe impact on sensor behaviour. In real-world environments, LiDAR sensors may experience substantially decreased performance when exposed to external light interference, such as sunlight backscattering from targets with high reflectivity characteristics. Removing such light noise can be particularly challenging, as solar radiation is a powerful light source present in a wide range of wavelengths [35]. Therefore, it is important to evaluate sensor output when exposed to background light in a controlled environment.

- The Power Consumption test aims to monitor and analyze the power consumption of the sensor under test in different operation modes, configured parameters, and environment/target conditions.

- The Range is defined by the minimum and maximum distances at which the sensor successfully detects an object. While measuring the minimum range is fairly simple, finding the maximum range is not straightforward: it depends on the target’s reflectivity, and a target is only considered detected when it appears in at least 90% of the sensor’s data output (detection probability). For targets with a reflectivity higher than 40–50%, measuring the maximum range of high-range sensors in a straight line inside our testing laboratory (maximum range 100 m) would be impossible. However, a sensor’s maximum range can be deduced from measurements performed on lower-reflectivity targets by using the relationship between the strength of the signal returned by a target with a known reflectivity and its distance to the sensor. This method is based on the signal power arriving at the LiDAR detector, as defined by Equation (1):

  $$P = \frac{A\,\rho}{d^{2}} \tag{1}$$

  where $A$ is a constant, $\rho$ is the target’s reflectivity, and $d$ is the target’s distance. If the minimum returning signal level required for detection remains the same regardless of the target’s reflectivity, then $A\rho/d^{2} = A\rho_{s}/d_{s}^{2}$ at the detection limit, and the maximum distance can be calculated for any reflectivity value using Equation (2):

  $$d_{s} = d\,\sqrt{\frac{\rho_{s}}{\rho}} \tag{2}$$

  where $\rho_{s}$ is the target reflectivity to be simulated and $d_{s}$ is the corresponding maximum distance calculated from the maximum distance $d$ measured with reflectivity $\rho$. To reduce estimation errors, several maximum-range measurements must be performed, e.g., with targets of 10%, 20%, and 40% reflectivity.
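The relations in this list can be checked numerically. The Python sketch below is an illustration, not the platform’s implementation: it applies the 90% detection-probability criterion to hypothetical per-frame detection flags and extrapolates a measured maximum range to another reflectivity with d_s = d·sqrt(ρ_s/ρ), which follows from P = Aρ/d² at a constant minimum detectable power. Averaging the extrapolations from several reference targets is our assumption of how repeated measurements could be combined, and all function names and sample values are hypothetical. For the angular-resolution discussion, it also computes the gap between adjacent points at a given distance, 2·d·tan(Δθ/2), which is about 17.5 cm at 100 m for a 0.1° step.

```python
import math
from typing import Sequence, Tuple

DETECTION_PROBABILITY = 0.9  # target must appear in >= 90% of the frames


def is_detected(frames_with_target: Sequence[bool],
                threshold: float = DETECTION_PROBABILITY) -> bool:
    """Return True if the target was found in at least `threshold` of the frames."""
    if not frames_with_target:
        raise ValueError("at least one frame is required")
    return sum(frames_with_target) / len(frames_with_target) >= threshold


def extrapolate_max_range(d_max_m: float, rho: float, rho_s: float) -> float:
    """d_s = d * sqrt(rho_s / rho).

    Follows from P = A * rho / d**2 when the minimum detectable return power
    does not depend on the target's reflectivity.
    """
    if d_max_m <= 0 or rho <= 0 or rho_s <= 0:
        raise ValueError("distance and reflectivities must be positive")
    return d_max_m * math.sqrt(rho_s / rho)


def estimate_max_range(measurements: Sequence[Tuple[float, float]],
                       rho_s: float) -> float:
    """Average the extrapolations of several (max range [m], reflectivity) pairs.

    Averaging is an assumption; the article only states that several
    measurements (e.g., 10%, 20% and 40% targets) reduce estimation errors.
    """
    if not measurements:
        raise ValueError("at least one measurement is required")
    estimates = [extrapolate_max_range(d, rho, rho_s) for d, rho in measurements]
    return sum(estimates) / len(estimates)


def point_spacing_m(distance_m: float, resolution_deg: float) -> float:
    """Gap between two adjacent laser points at a given distance and angular step."""
    return 2.0 * distance_m * math.tan(math.radians(resolution_deg) / 2.0)


if __name__ == "__main__":
    # Hypothetical maximum ranges measured with 10%, 20% and 40% targets.
    measured = [(40.0, 0.10), (56.0, 0.20), (80.0, 0.40)]
    print(f"estimated range for an 80% target: {estimate_max_range(measured, 0.80):.1f} m")
    print(f"point spacing at 100 m, 0.1 deg: {point_spacing_m(100.0, 0.1):.3f} m")
```

Because the extrapolation grows with the square root of the reflectivity ratio, an error in a low-reflectivity measurement is amplified when projecting to highly reflective targets, which is one reason to combine several reference measurements.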

3. LiDAR Evaluation and Testing

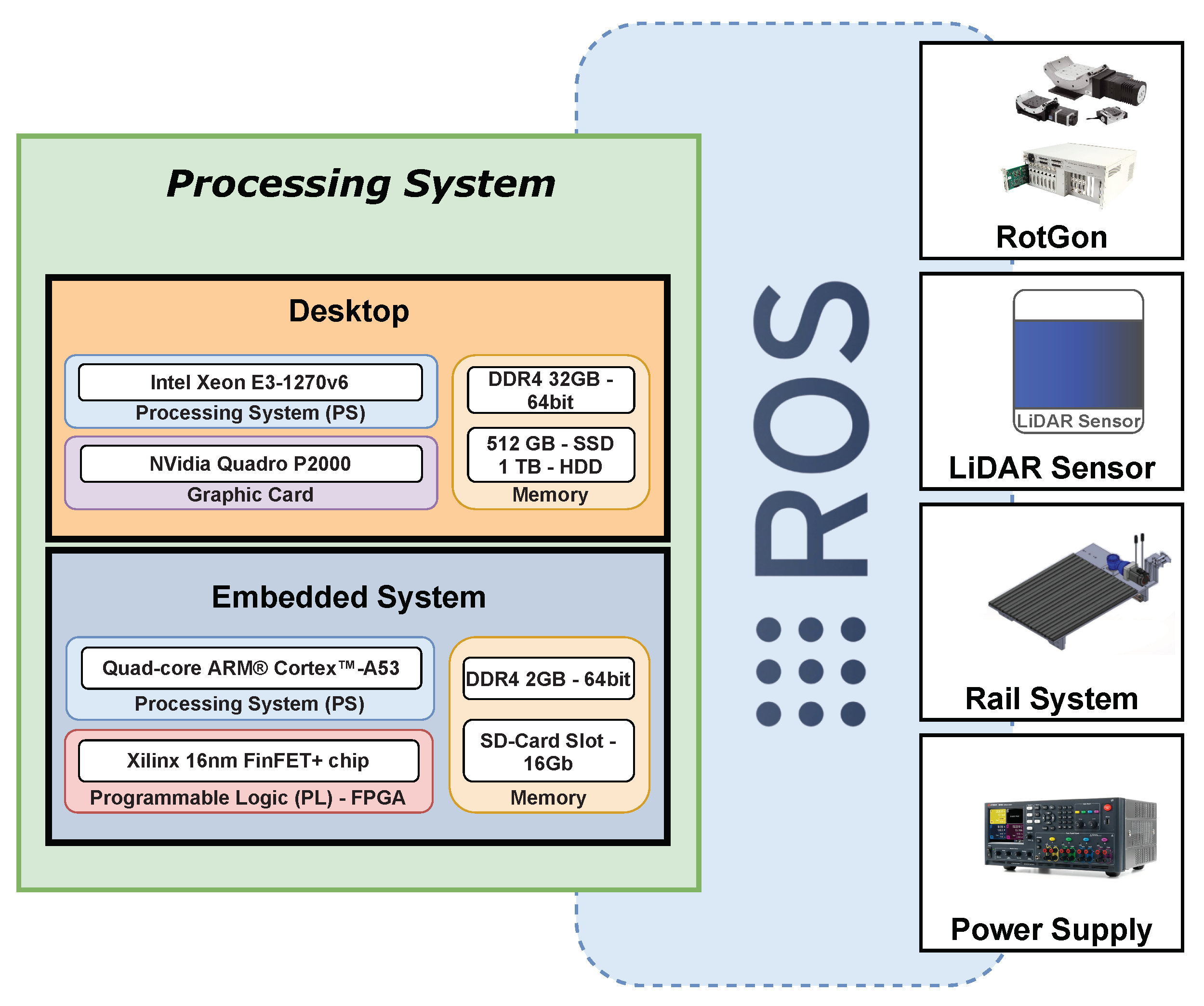

3.1. System Architecture

3.2. Lab Equipment

- RotGon: The RotGon enables tilting/rotating LiDAR sensors about three distinct axes. The rotation stage permits continuous 360° motion with a maximum speed of 2°/s and a minimum incremental motion of 0.01°. The goniometer allows an angular range between −15° and 45° and features a worm-mounted rotary encoder for improved accuracy and repeatability. Due to its high precision, the RotGon is essential for measuring the AR and the FoV.

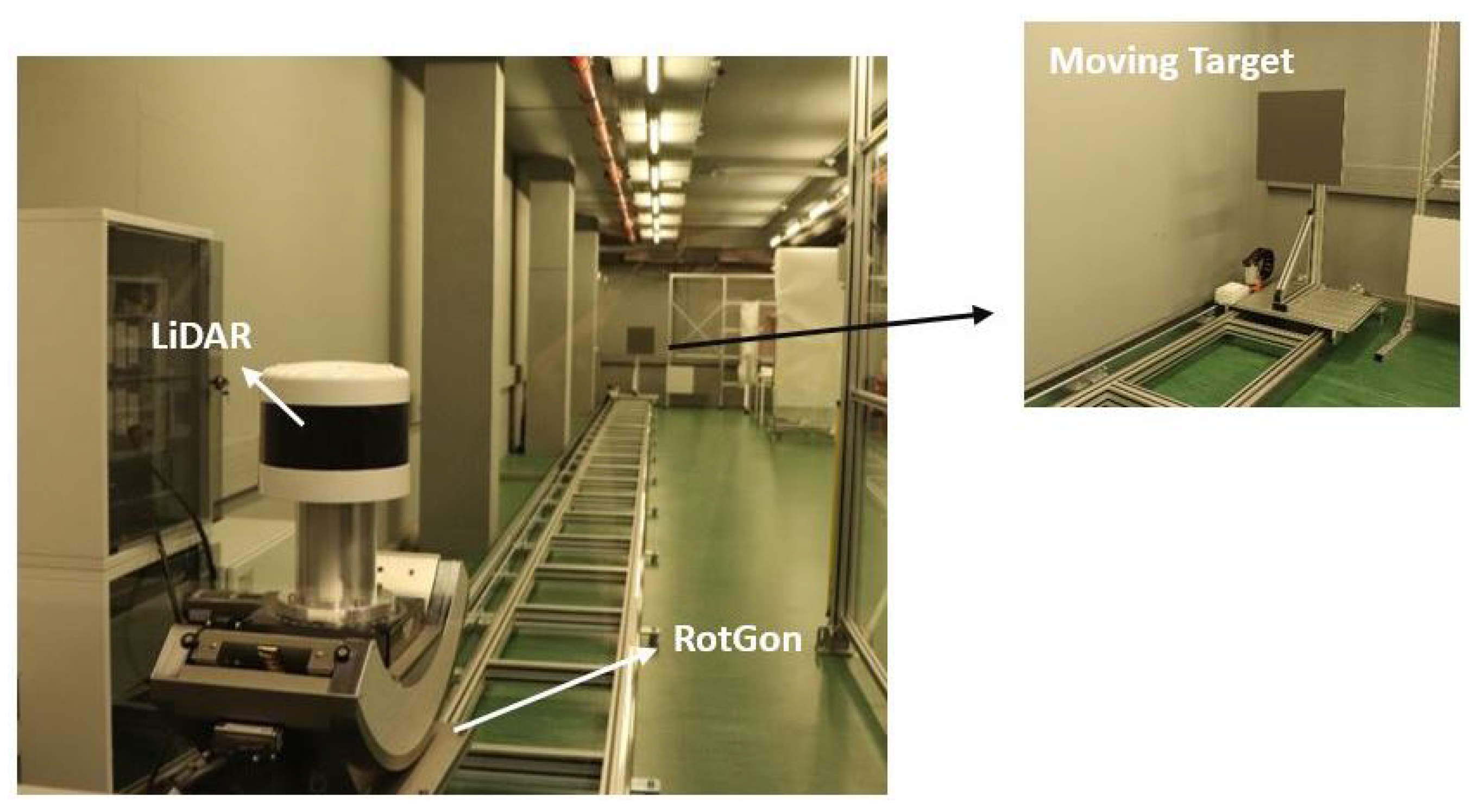

- Rail System: The rail system was designed to move a base that can carry loads of up to 30 kg and that can be programmed through external communication. In turn, the base can hold several targets with different reflectivity values. The rail system’s structure is 25 m long and is installed inside the laboratory. Figure 6 depicts the rail system with the LiDAR sensor installed on top of the RotGon (left side) and the moving platform with a mounted target at the end of the rail structure (right side). The rail system allows the velocity and acceleration/deceleration of the moving target to be set to given values (in mm/s for velocity and ±mm/s² for acceleration/deceleration). Before use, the rail system was calibrated with a rangefinder, which measured several distances to a target with 95% reflectivity mounted on the rail system. These measurements were used as reference values for the internal position detector sensor.

- Power Supply: This equipment is used to power and monitor the power consumption of the LiDAR sensor under test. The power supply used in the evaluation and testing platform is the N6705C DC Power Analyzer, which includes four independent channels that can be used to power and monitor four different connected modules. The voltage and current levels for each channel can be changed in real time, allowing further testing of the sensor’s behaviour under different power source conditions.

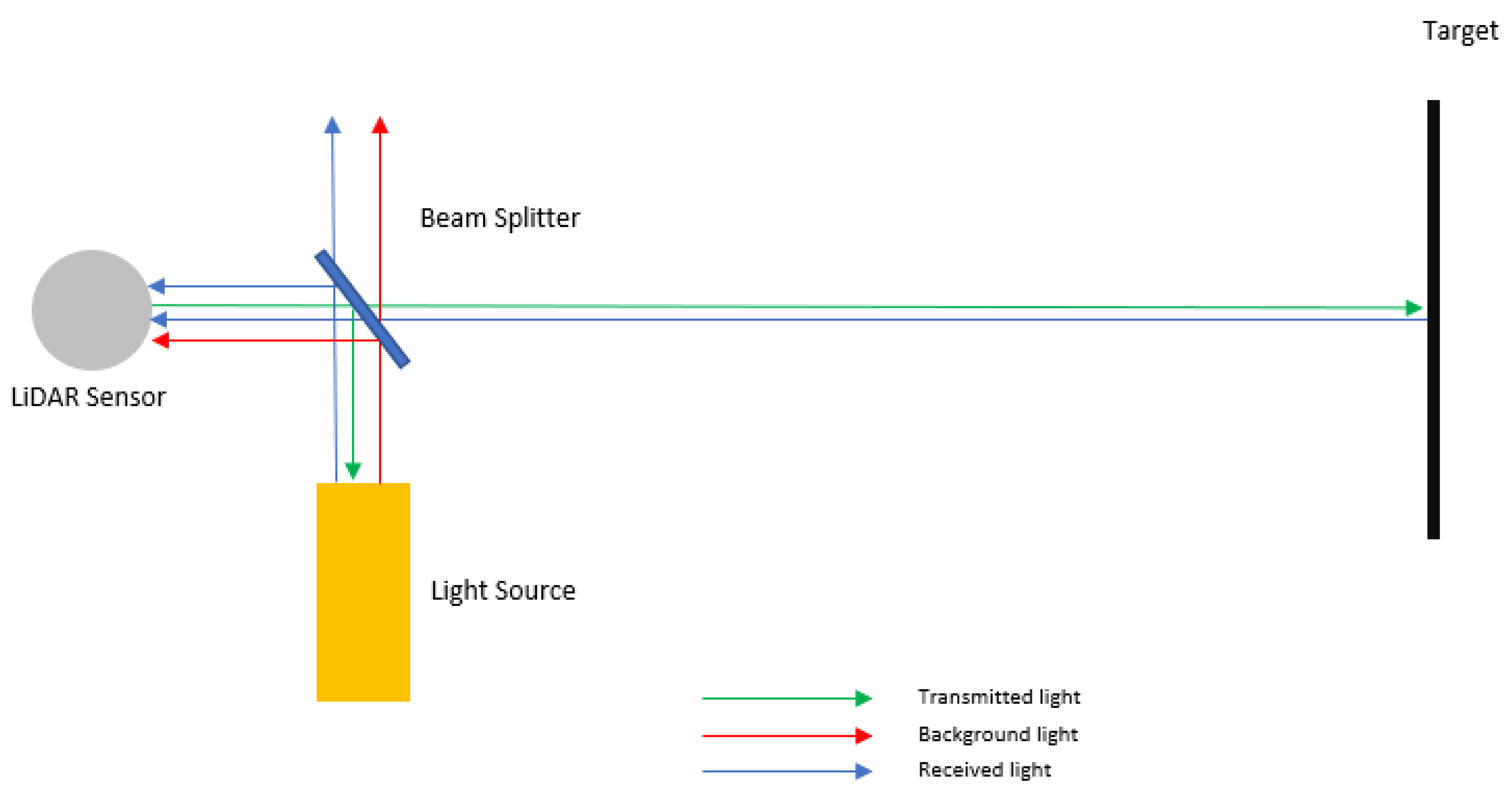

- External illumination influence (background and sunlight): Ambient light from the sun and artificial sources represents one of the major drawbacks of using LiDAR in outdoor applications. For this reason, we created the setup depicted in Figure 7, which allows the influence of the external illumination to be tested by artificially changing the behaviour of the target’s background light.
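The text does not state how the rangefinder readings were applied to the rail’s internal position detector. One simple possibility, shown below as a sketch under that assumption, is a linear least-squares fit (a scale and an offset) between paired internal readings and rangefinder distances. The function names, the millimetre units, and the sample values are hypothetical.

```python
import math
from typing import Sequence, Tuple


def fit_linear_calibration(internal_mm: Sequence[float],
                           reference_mm: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares for reference = scale * internal + offset."""
    n = len(internal_mm)
    if n < 2 or n != len(reference_mm):
        raise ValueError("need at least two paired readings")
    mean_x = sum(internal_mm) / n
    mean_y = sum(reference_mm) / n
    sxx = sum((x - mean_x) ** 2 for x in internal_mm)
    if sxx == 0.0:
        raise ValueError("internal readings must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(internal_mm, reference_mm))
    scale = sxy / sxx
    return scale, mean_y - scale * mean_x


def calibrated_position(internal: float, scale: float, offset: float) -> float:
    """Convert a raw internal position reading into a calibrated distance."""
    return scale * internal + offset


def rms_residual(internal_mm: Sequence[float], reference_mm: Sequence[float],
                 scale: float, offset: float) -> float:
    """Root-mean-square error of the fit, useful to judge calibration quality."""
    errors = [calibrated_position(x, scale, offset) - y
              for x, y in zip(internal_mm, reference_mm)]
    return math.sqrt(sum(e * e for e in errors) / len(errors))


if __name__ == "__main__":
    # Hypothetical paired readings (internal detector vs. rangefinder), in mm.
    internal = [1000.0, 5000.0, 10000.0, 20000.0]
    rangefinder = [1013.0, 5016.5, 10022.0, 20031.0]
    scale, offset = fit_linear_calibration(internal, rangefinder)
    print(f"scale={scale:.6f} offset={offset:.2f} mm "
          f"rms={rms_residual(internal, rangefinder, scale, offset):.2f} mm")
```

A large RMS residual would suggest that a single scale and offset are not enough, for example if the error varies non-linearly along the 25 m rail.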

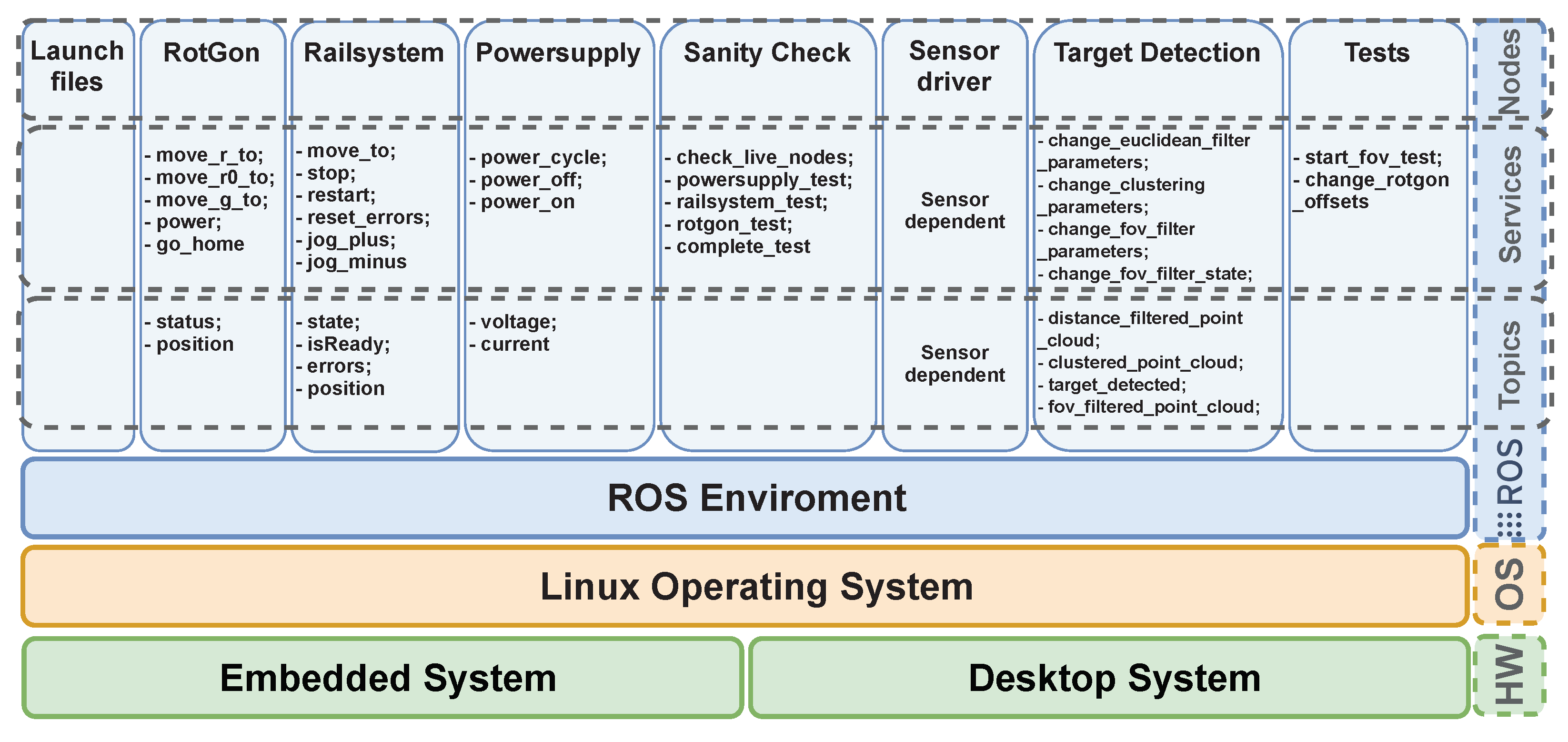

3.3. ROS Software Architecture

- Launch files package: This package was developed to ease launching the system with the correct testing setup. It allows for flexible debug sessions with different LiDAR sensors, different sensor configurations, and several system setups in which one or more components (e.g., the RotGon or the rail system) are not used. This package contains only launch files and provides no services or topics.

- RotGon: The RotGon package enables tilting/rotating LiDAR sensors about three distinct axes. To this end, it provides three services, one for each axis, to move the sensor to the desired position/angle: move_r_to, move_r0_to, and move_g_to. Additionally, it provides a self-reset service, go_home, that moves all axes to the 0° position, as well as a power service to turn the device on and off. The RotGon package publishes information on two topics: one reporting the current RotGon error status (status) and another outputting the current angular position in real time (position).

- Railsystem: This package is responsible for moving the target within the sensor’s FoV. It provides three motion services: move_to, which sends the target to a desired position; jog_plus, which moves the target away from the sensor at a constant speed and acceleration; and jog_minus, which moves the target towards the sensor. All of them can be interrupted by calling the stop service. Two more services are available: reset_errors, which handles the system’s error state, and restart, which handles communication issues. As with RotGon, this package publishes two topics: one with status information (state) and another with the platform’s current position (position).

- Powersupply: This package is responsible for controlling the sensor’s power source. It provides three services to control each channel individually: power_on, which turns the power source on; power_off, which turns it off; and power_cycle, which resets it. In addition, it can set different voltage and current values and provides real-time measurements of the channel powering the sensor.

- Sanity Check: This package consists of a set of tools used to verify the full operation of the main system used for testing a sensor, i.e., the rail system, RotGon, and power supply. It provides one service to individually test each core component, <equipment>_test; one that tests the connectivity with the nodes, check_live_nodes; and another that sequentially tests the whole setup.

- Sensor driver: This package depends on the sensor currently under test. Because most manufacturers provide an ROS-based driver and packages to interface with their sensors, the evaluation and testing platform can easily support a broad range of devices. Nonetheless, the corresponding driver package has to be manually installed and configured whenever the sensor under test or its test configuration is changed.

- Target detection: This package is required for tests that depend on the target’s visibility inside the sensor’s FoV and, consequently, in the point cloud. It provides a set of services used to enable and configure several filters applied to the point cloud, such as the target’s distance and the software-based FoV. These filters are further explained in the next section. The package can publish several topics with the filtered point clouds (one per filter) and one topic, target_detected, that continuously reports whether the target is inside the sensor’s FoV.

- Tests: The Tests package contains the tests supported by the evaluation and testing platform that use at least one of the pieces of equipment described above. For each test, e.g., FoV and AR, a service triggers the automated execution of the whole procedure. During the test, all outputs are saved in an ROS log file.
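The Power Consumption test relies on the voltage and current readings that the Powersupply package exposes. As a sketch under assumptions (the sample format, class names, and mode labels below are hypothetical and are not the package’s interface), the readings recorded for each operation mode could be reduced to mean power, peak power, and energy by integrating P = V·I with the trapezoidal rule.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass(frozen=True)
class Sample:
    """One reading of the channel powering the sensor (hypothetical format)."""
    t_s: float        # timestamp [s]
    voltage_v: float  # channel voltage [V]
    current_a: float  # channel current [A]

    @property
    def power_w(self) -> float:
        return self.voltage_v * self.current_a


@dataclass(frozen=True)
class PowerSummary:
    mean_w: float    # time-weighted mean power [W]
    peak_w: float    # highest instantaneous power [W]
    energy_j: float  # energy over the recording [J]


def summarize(samples: Sequence[Sample]) -> PowerSummary:
    """Integrate P = V * I with the trapezoidal rule over one recording."""
    if len(samples) < 2:
        raise ValueError("at least two samples are required")
    energy = 0.0
    for a, b in zip(samples, samples[1:]):
        if b.t_s <= a.t_s:
            raise ValueError("timestamps must be strictly increasing")
        energy += 0.5 * (a.power_w + b.power_w) * (b.t_s - a.t_s)
    duration = samples[-1].t_s - samples[0].t_s
    return PowerSummary(mean_w=energy / duration,
                        peak_w=max(s.power_w for s in samples),
                        energy_j=energy)


def summarize_modes(recordings: Dict[str, Sequence[Sample]]) -> Dict[str, PowerSummary]:
    """Summarize one recording per operation mode or configuration."""
    return {mode: summarize(samples) for mode, samples in recordings.items()}


if __name__ == "__main__":
    # Hypothetical 12 V recordings for two operation modes.
    standby = [Sample(t, 12.0, 0.1) for t in (0.0, 1.0, 2.0)]
    scanning = [Sample(0.0, 12.0, 1.0), Sample(1.0, 12.0, 1.2), Sample(2.0, 12.0, 1.1)]
    for mode, summary in summarize_modes({"standby": standby, "scanning": scanning}).items():
        print(f"{mode}: mean {summary.mean_w:.2f} W, peak {summary.peak_w:.2f} W")
```

Using a time-weighted mean (energy divided by duration) rather than a plain average of samples keeps the result correct if the sampling interval is irregular.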

4. System Implementation

4.1. Point Cloud Filtering for Target Detection

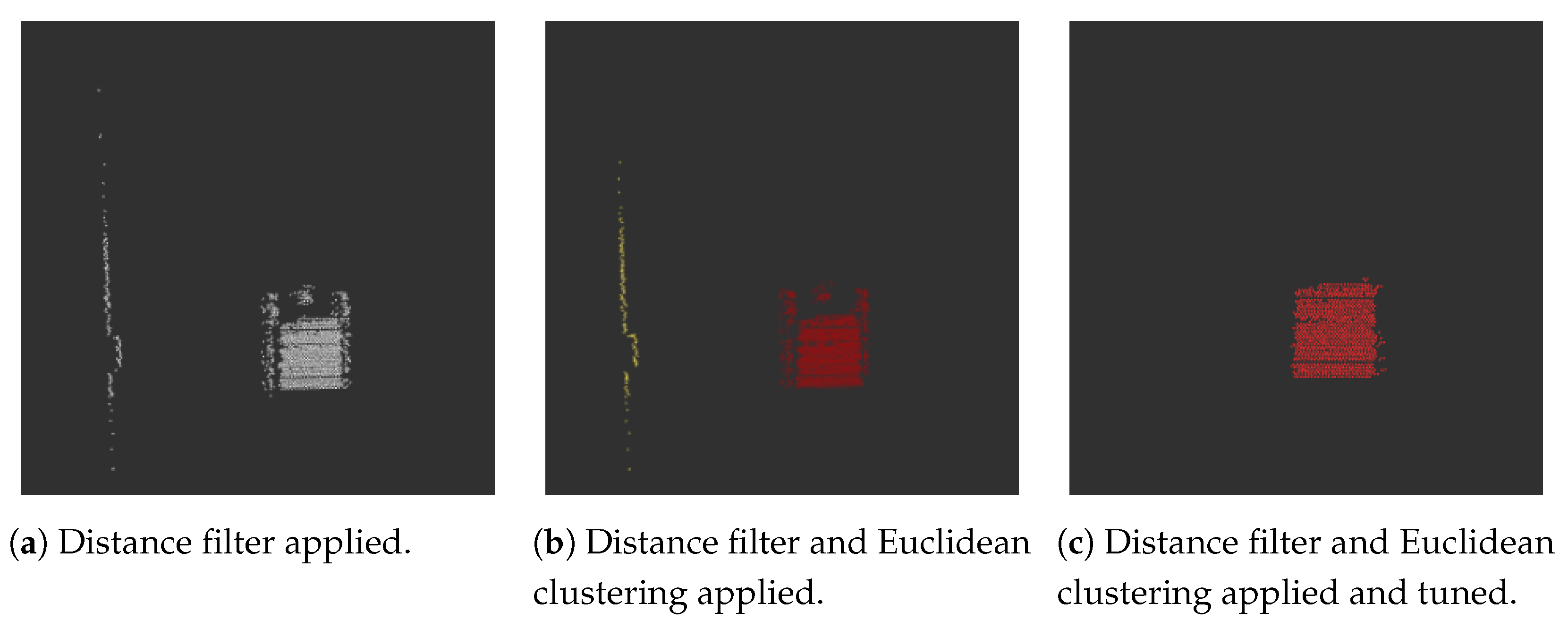

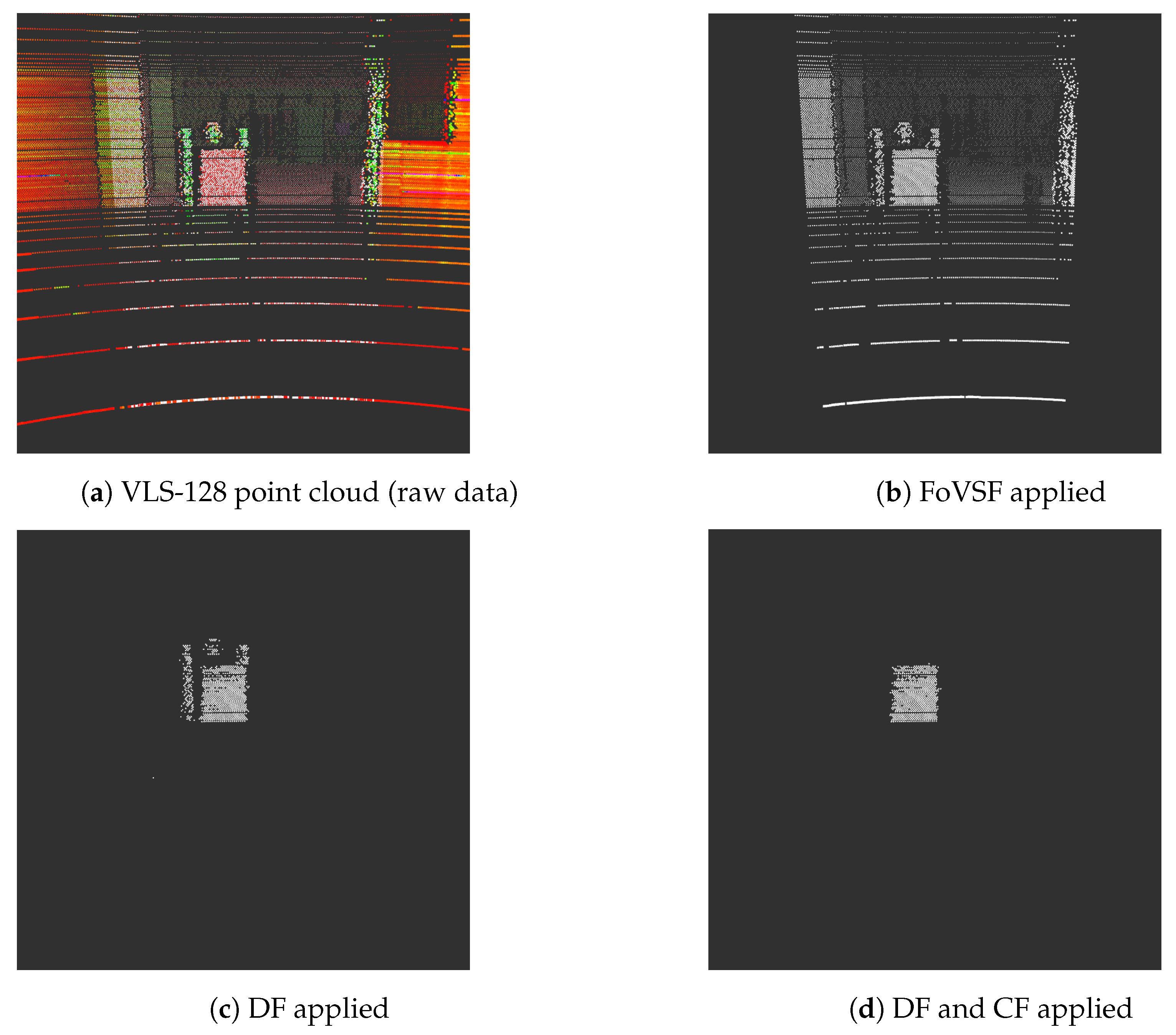

- Distance Filter (DF): Because the target is placed at a known distance from the sensor, this filter outputs a new point cloud (published to the filtered_point_cloud topic) containing only the points located at that distance ± a threshold value (the threshold avoids removing points that actually belong to the target). The result of applying the distance filter is shown in Figure 10a. This step removes undesired points and can help reduce the computational cost of subsequent tasks.

- Clustering Filter (CF): The clustering filter groups the points present in the point cloud into clusters and evaluates whether the target is within one of them. Because the target’s distance and size, as well as the sensor’s resolution, affect the clustering results, the algorithm’s parameters must be tuned accordingly. Figure 10b depicts the output of the CF algorithm with untuned parameters: two clusters were identified, represented by the yellow and red points. The yellow cluster contains points that lie at the same distance as the target, and these must be removed in the next step. To determine whether the resulting clusters represent the target, a Euclidean clustering filter is applied. Because the point density within the target’s cluster is higher than that of other objects at the same distance, this filter analyses the neighbours of each point within a defined search radius. If a neighbour point lies inside this search radius, it belongs to the same cluster and is retained in the point cloud; otherwise, it is removed. This task is performed using the EuclideanClusterExtraction.extract method provided by the Point Cloud Library (PCL) [36]. The parameters used to configure this method are:

- Cluster Tolerance: Defines the search radius. If the chosen value is too small, the same target can be divided into multiple clusters; conversely, multiple objects can be merged into a single cluster if the value is too high. This parameter accepts values between 0.01 and 1 m.

- Minimum Cluster Size: This parameter is used to define the minimum number of points required to form a cluster. It permits values between 1 and 10,000 points.

- Maximum Cluster Size: This parameter defines the maximum number of points used to form a cluster. It supports a minimum of 2 and a maximum of 50,000 points.

- FoV software filter (FoVSF): The purpose of this filter is to support any LiDAR sensor on the market, including the rotating COTS LiDAR sensors widely used in automotive applications, which usually provide a 360° horizontal FoV. Because the platform must also support LiDAR sensors with a limited FoV, the FoV software filter allows the point cloud to be cropped to a desired horizontal and vertical FoV for the purposes of testing and validating the platform. This filter runs in two steps. First, it converts each point in the point cloud from Cartesian to spherical coordinates, using Equation (3) to calculate the azimuth and Equation (4) to calculate the elevation angle:

  $$\theta = \operatorname{atan2}(y,\, x) \tag{3}$$

  $$\varphi = \operatorname{atan2}\!\left(z,\, \sqrt{x^{2} + y^{2}}\right) \tag{4}$$

  where $(x, y, z)$ are the point’s Cartesian coordinates in the sensor frame, $\theta$ is the azimuth, and $\varphi$ is the elevation angle. Second, the algorithm discards the points that are not within the desired thresholds. The output of this filter is an ROS topic with a new point cloud containing only the points within the configured FoV. An application of this filter is presented in Section 5.
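The three filters can be sketched in plain Python as follows. This is an illustration under assumptions, not the platform’s code: points are (x, y, z) tuples in metres in the sensor frame; the Euclidean clustering is a brute-force region-growing stand-in for PCL’s EuclideanClusterExtraction (which uses a k-d tree for the neighbour search); the azimuth and elevation use the common atan2 conversion, which is our assumption about the exact form of Equations (3) and (4); and target_detected is a hypothetical rule that reports the target as visible when at least one cluster survives the distance and clustering filters. The parameter names mirror the cluster tolerance and minimum/maximum cluster size described above.

```python
import math
from collections import deque
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres, sensor frame


def distance_filter(cloud: Sequence[Point], d_min: float, d_max: float) -> List[Point]:
    """DF: keep points whose range to the sensor lies within [d_min, d_max]."""
    return [p for p in cloud if d_min <= math.hypot(*p) <= d_max]


def euclidean_clusters(cloud: Sequence[Point], tolerance: float,
                       min_size: int, max_size: int) -> List[List[Point]]:
    """CF: grow clusters from points closer than `tolerance` to each other.

    Brute-force O(n^2) neighbour search; PCL uses a k-d tree instead.
    Clusters outside [min_size, max_size] points are discarded.
    """
    unvisited = set(range(len(cloud)))
    clusters: List[List[Point]] = []
    while unvisited:
        seed = unvisited.pop()
        queue = deque([seed])
        members = [seed]
        while queue:
            i = queue.popleft()
            neighbours = [j for j in unvisited
                          if math.dist(cloud[i], cloud[j]) <= tolerance]
            for j in neighbours:
                unvisited.remove(j)
                queue.append(j)
                members.append(j)
        if min_size <= len(members) <= max_size:
            clusters.append([cloud[k] for k in sorted(members)])
    return clusters


def fov_filter(cloud: Sequence[Point], az_min: float, az_max: float,
               el_min: float, el_max: float) -> List[Point]:
    """FoVSF: keep points whose azimuth/elevation (degrees) lie in the FoV.

    Azimuth is in (-180, 180]; a window crossing +/-180 deg is not handled.
    """
    kept: List[Point] = []
    for x, y, z in cloud:
        azimuth = math.degrees(math.atan2(y, x))
        elevation = math.degrees(math.atan2(z, math.hypot(x, y)))
        if az_min <= azimuth <= az_max and el_min <= elevation <= el_max:
            kept.append((x, y, z))
    return kept


def target_detected(cloud: Sequence[Point], d_min: float, d_max: float,
                    tolerance: float, min_size: int, max_size: int) -> bool:
    """Hypothetical rule: the target is visible if any cluster survives DF + CF."""
    near_target = distance_filter(cloud, d_min, d_max)
    return bool(euclidean_clusters(near_target, tolerance, min_size, max_size))
```

With the parameters listed for the experiments (cluster tolerance 0.03 m, clusters of 100 to 2000 points, distance window 5.5 to 5.6 m), the call would be `target_detected(cloud, 5.5, 5.6, 0.03, 100, 2000)`. Applying the distance filter first keeps the quadratic clustering step small.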

4.2. Implementation of the FoV Test

- P1: Last position where the target is completely outside the FoV;

- P2: First position where the target is completely inside the FoV;

- P3: Last position where the target is completely inside the FoV;

- P4: First position where the target is completely outside the FoV after P3.
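The definitions of P1 to P4 translate directly into a search over an angular sweep. The sketch below assumes, hypothetically, that each RotGon angle in the sweep has already been labelled as target completely outside, partially visible, or completely inside the FoV (for example, from the target-detection output), and that the angles are sorted in increasing order. How P1 to P4 are then converted into FoV limits depends on the target’s angular size and the rotation direction, which this sketch does not model.

```python
from enum import Enum
from typing import NamedTuple, Optional, Sequence, Tuple


class TargetState(Enum):
    OUTSIDE = "outside"   # target completely outside the FoV
    PARTIAL = "partial"   # target partially visible
    INSIDE = "inside"     # target completely inside the FoV


class FovPositions(NamedTuple):
    p1: float  # last angle with the target completely outside
    p2: float  # first angle with the target completely inside
    p3: float  # last angle with the target completely inside
    p4: float  # first angle with the target completely outside after P3


def find_fov_positions(
        sweep: Sequence[Tuple[float, TargetState]]) -> Optional[FovPositions]:
    """Locate P1..P4 in a sweep sorted by increasing angle (degrees).

    Returns None if the sweep never sees the target completely inside, or
    does not start and end with the target completely outside.
    """
    states = [state for _, state in sweep]
    try:
        i2 = states.index(TargetState.INSIDE)
    except ValueError:
        return None
    i3 = i2
    while i3 + 1 < len(states) and states[i3 + 1] is TargetState.INSIDE:
        i3 += 1
    before = [i for i in range(i2) if states[i] is TargetState.OUTSIDE]
    after = [i for i in range(i3 + 1, len(states)) if states[i] is TargetState.OUTSIDE]
    if not before or not after:
        return None
    i1, i4 = before[-1], after[0]
    return FovPositions(sweep[i1][0], sweep[i2][0], sweep[i3][0], sweep[i4][0])
```

The boundary of the FoV is crossed somewhere between P1 and P2 on one side and between P3 and P4 on the other, so a finer RotGon step narrows both intervals.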

4.3. Implementation of the AR Test

5. Results

5.1. Sanity Check

- Power supply sanity check: After checking whether the powersupply_node is alive, this test verifies whether any sensor is connected to the system by obtaining the list of connected sensors. It then evaluates whether each connected sensor’s parameters match the values reported by the power supply. There are three possible outcomes: (1) no problems were detected, i.e., the connected sensor matches the configured parameters; (2) the node is unresponsive; or (3) the power supply readings do not match the expected values.

- Rail system sanity check: Similar to the power supply routine, the rail system routine begins by testing whether its corresponding ROS node is alive. Next, the rail system is validated by sending the platform that holds the target to different positions while checking the system’s response. To this end, two dedicated services are defined, move_sequence and is_moving: the former calls the move_to service, while the latter checks whether the target is actually moving or has reached the desired position. The rail system sanity check has seven possible outcomes: (1) no problems were detected; (2) the node is unresponsive; (3) the node’s internal flags indicate a busy state, i.e., the rail system is not ready to receive commands; (4) the internal flags indicate an internal error status; (5) the target did not move after a move_to command; (6) the target did not stop after a stop command; and (7) the target is not at the expected position.

- RotGon sanity check: This routine exercises the four moving commands, go_home, move_r_to, move_r0_to, and move_g_to, and then tests whether the moving parts (one for each axis) are at the desired angles. This test can report three possible situations: (1) the RotGon is alive and running; (2) the RotGon node is unresponsive; and (3) the RotGon positions differ from the expected positions.
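The rail system routine can be expressed as a short decision procedure that returns the first failing outcome, numbered as in the list above. This is a hypothetical sketch: the RailProbe interface (standing in for the node’s services and topics, such as move_to, is_moving, and stop), the SimulatedRail stand-in, the order of the checks, and the 1 mm tolerance are our assumptions, not the platform’s implementation.

```python
from enum import IntEnum
from typing import Protocol, Sequence


class RailCheck(IntEnum):
    """Outcome codes of the rail system sanity check."""
    OK = 1
    NODE_UNRESPONSIVE = 2
    NOT_READY = 3
    INTERNAL_ERROR = 4
    NOT_MOVING = 5
    NOT_STOPPING = 6
    WRONG_POSITION = 7


class RailProbe(Protocol):
    """Hypothetical wrapper around the rail node's services and topics."""
    def is_alive(self) -> bool: ...
    def is_busy(self) -> bool: ...
    def has_errors(self) -> bool: ...
    def position_mm(self) -> float: ...
    def is_moving(self) -> bool: ...
    def move_to(self, position_mm: float) -> None: ...
    def wait_idle(self, timeout_s: float) -> None: ...
    def stop(self) -> None: ...


def rail_sanity_check(rail: RailProbe, positions_mm: Sequence[float],
                      tolerance_mm: float = 1.0,
                      timeout_s: float = 60.0) -> RailCheck:
    """Run the rail checks in order and return the first failure (or OK)."""
    if not positions_mm:
        raise ValueError("at least one test position is required")
    if not rail.is_alive():
        return RailCheck.NODE_UNRESPONSIVE
    if rail.is_busy():
        return RailCheck.NOT_READY
    if rail.has_errors():
        return RailCheck.INTERNAL_ERROR
    home = rail.position_mm()
    for target in positions_mm:
        start = rail.position_mm()
        rail.move_to(target)
        needs_motion = abs(target - start) > tolerance_mm
        moved = rail.is_moving() or abs(rail.position_mm() - start) > tolerance_mm
        if needs_motion and not moved:
            return RailCheck.NOT_MOVING
        rail.wait_idle(timeout_s)
        if abs(rail.position_mm() - target) > tolerance_mm:
            return RailCheck.WRONG_POSITION
    # Start a move back towards the initial position and interrupt it.
    rail.move_to(home)
    rail.stop()
    if rail.is_moving():
        return RailCheck.NOT_STOPPING
    return RailCheck.OK


class SimulatedRail:
    """Toy stand-in for the rail node, used only to exercise the check."""

    def __init__(self, alive=True, stuck=False, offset_mm=0.0, ignores_stop=False):
        self.alive, self.stuck = alive, stuck
        self.offset_mm, self.ignores_stop = offset_mm, ignores_stop
        self.pos, self.goal, self.moving = 0.0, 0.0, False

    def is_alive(self) -> bool: return self.alive
    def is_busy(self) -> bool: return False
    def has_errors(self) -> bool: return False
    def position_mm(self) -> float: return self.pos
    def is_moving(self) -> bool: return self.moving

    def move_to(self, position_mm: float) -> None:
        if not self.stuck:  # a stuck rail silently ignores move commands
            self.goal, self.moving = position_mm + self.offset_mm, True

    def wait_idle(self, timeout_s: float) -> None:
        if self.moving:  # simulated motion completes instantly
            self.pos, self.moving = self.goal, False

    def stop(self) -> None:
        if not self.ignores_stop:
            self.moving = False


if __name__ == "__main__":
    for rail in (SimulatedRail(), SimulatedRail(stuck=True), SimulatedRail(offset_mm=5.0)):
        result = rail_sanity_check(rail, [1000.0, 5000.0, 20000.0])
        print(int(result), result.name)
```

Injecting the probe as a parameter keeps the decision logic testable without ROS; a real implementation would back each method with the corresponding service call or topic subscription.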

5.2. FoV Test

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Daily, M.; Medasani, S.; Behringer, R.; Trivedi, M. Self-Driving Cars. Computer 2017, 50, 18–23.

- Badue, C.; Guidolini, R.; Carneiro, R.V.; Azevedo, P.; Cardoso, V.B.; Forechi, A.; Jesus, L.; Berriel, R.; Paixão, T.M.; Mutz, F.; et al. Self-driving cars: A survey. Expert Syst. Appl. 2021, 165, 113816.

- Gao, C.; Wang, G.; Shi, W.; Wang, Z.; Chen, Y. Autonomous Driving Security: State of the Art and Challenges. IEEE Internet Things J. 2022, 9, 7572–7595.

- Yurtsever, E.; Lambert, J.; Carballo, A.; Takeda, K. A Survey of Autonomous Driving: Common Practices and Emerging Technologies. IEEE Access 2020, 8, 58443–58469.

- Litman, T. Autonomous Vehicle Implementation Predictions; Victoria Transport Policy Institute Victoria: Victoria, BC, Canada, 2021.

- Society of Automotive Engineers (SAE). Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles (Surface Vehicle Recommended Practice: Superseding J3016 Jun 2018); SAE International: Warrendale, PA, USA, 2021.

- Guerrero-Ibáñez, J.; Zeadally, S.; Contreras-Castillo, J. Sensor Technologies for Intelligent Transportation Systems. Sensors 2018, 18, 1212.

- Marti, E.; de Miguel, M.A.; Garcia, F.; Perez, J. A Review of Sensor Technologies for Perception in Automated Driving. IEEE Intell. Transp. Syst. Mag. 2019, 11, 94–108.

- Shahian Jahromi, B.; Tulabandhula, T.; Cetin, S. Real-Time Hybrid Multi-Sensor Fusion Framework for Perception in Autonomous Vehicles. Sensors 2019, 19, 4357.

- Mohammed, A.S.; Amamou, A.; Ayevide, F.K.; Kelouwani, S.; Agbossou, K.; Zioui, N. The Perception System of Intelligent Ground Vehicles in All Weather Conditions: A Systematic Literature Review. Sensors 2020, 20, 6532.

- Warren, M.E. Automotive LIDAR Technology. In Proceedings of the 2019 Symposium on VLSI Circuits, Kyoto, Japan, 9–14 June 2019; pp. C254–C255.

- Li, Y.; Ibanez-Guzman, J. Lidar for Autonomous Driving: The Principles, Challenges, and Trends for Automotive Lidar and Perception Systems. IEEE Signal Process. Mag. 2020, 37, 50–61.

- Roriz, R.; Cabral, J.; Gomes, T. Automotive LiDAR Technology: A Survey. IEEE Trans. Intell. Transp. Syst. 2022, 23, 6282–6297.

- Cunha, L.; Roriz, R.; Pinto, S.; Gomes, T. Hardware-Accelerated Data Decoding and Reconstruction for Automotive LiDAR Sensors. IEEE Trans. Veh. Technol. 2022, 1–10.

- Arnold, E.; Al-Jarrah, O.Y.; Dianati, M.; Fallah, S.; Oxtoby, D.; Mouzakitis, A. A Survey on 3D Object Detection Methods for Autonomous Driving Applications. IEEE Trans. Intell. Transp. Syst. 2019, 20, 3782–3795.

- Shi, S.; Wang, X.; Li, H. PointRCNN: 3D Object Proposal Generation and Detection From Point Cloud. Available online: https://openaccess.thecvf.com/content_CVPR_2019/html/Shi_PointRCNN_3D_Object_Proposal_Generation_and_Detection_From_Point_Cloud_CVPR_2019_paper.html (accessed on 1 September 2022).

- Wu, J.; Xu, H.; Tian, Y.; Pi, R.; Yue, R. Vehicle Detection under Adverse Weather from Roadside LiDAR Data. Sensors 2020, 20, 3433.

- Wang, H.; Wang, B.; Liu, B.; Meng, X.; Yang, G. Pedestrian recognition and tracking using 3D LiDAR for autonomous vehicle. Robot. Auton. Syst. 2017, 88, 71–78.

- Peng, X.; Shan, J. Detection and Tracking of Pedestrians Using Doppler LiDAR. Remote Sens. 2021, 13, 2952.

- Huang, W.; Liang, H.; Lin, L.; Wang, Z.; Wang, S.; Yu, B.; Niu, R. A Fast Point Cloud Ground Segmentation Approach Based on Coarse-To-Fine Markov Random Field. IEEE Trans. Intell. Transp. Syst. 2022, 23, 7841–7854.

- Karlsson, R.; Wong, D.R.; Kawabata, K.; Thompson, S.; Sakai, N. Probabilistic Rainfall Estimation from Automotive Lidar. In Proceedings of the 2022 IEEE Intelligent Vehicles Symposium (IV), Aachen, Germany, 4–9 June 2022.

- Raj, T.; Hashim, F.; Huddin, B.; Ibrahim, M.; Hussain, A. A Survey on LiDAR Scanning Mechanisms. Electronics 2020, 9, 741.

- Behroozpour, B.; Sandborn, P.A.M.; Wu, M.C.; Boser, B.E. Lidar System Architectures and Circuits. IEEE Commun. Mag. 2017, 55, 135–142.

- Jiménez, J. Laser diode reliability: Crystal defects and degradation modes. Comptes Rendus Phys. 2003, 4, 663–673.

- Kwong, W.C.; Lin, W.Y.; Yang, G.C.; Glesk, I. 2-D Optical-CDMA Modulation in Automotive Time-of-Flight LIDAR Systems. In Proceedings of the 2020 22nd International Conference on Transparent Optical Networks (ICTON), Bari, Italy, 19–23 July 2020; pp. 1–4.

- Fersch, T.; Weigel, R.; Koelpin, A. A CDMA Modulation Technique for Automotive Time-of-Flight LiDAR Systems. IEEE Sensors J. 2017, 17, 3507–3516.

- Lee, H.; Kim, S.; Park, S.; Jeong, Y.; Lee, H.; Yi, K. AVM / LiDAR sensor based lane marking detection method for automated driving on complex urban roads. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 1434–1439.

- Jokela, M.; Kutila, M.; Pyykönen, P. Testing and Validation of Automotive Point-Cloud Sensors in Adverse Weather Conditions. Appl. Sci. 2019, 9, 2341.

- Vargas Rivero, J.R.; Gerbich, T.; Teiluf, V.; Buschardt, B.; Chen, J. Weather Classification Using an Automotive LIDAR Sensor Based on Detections on Asphalt and Atmosphere. Sensors 2020, 20, 4306.

- Roriz, R.; Campos, A.; Pinto, S.; Gomes, T. DIOR: A Hardware-Assisted Weather Denoising Solution for LiDAR Point Clouds. IEEE Sensors J. 2022, 22, 1621–1628.

- Chan, P.H.; Dhadyalla, G.; Donzella, V. A Framework to Analyze Noise Factors of Automotive Perception Sensors. IEEE Sensors Lett. 2020, 4, 1–4.

- Carballo, A.; Lambert, J.; Monrroy, A.; Wong, D.; Narksri, P.; Kitsukawa, Y.; Takeuchi, E.; Kato, S.; Takeda, K. LIBRE: The Multiple 3D LiDAR Dataset. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October 2020–13 November 2020; pp. 1094–1101.

- Lambert, J.; Carballo, A.; Cano, A.M.; Narksri, P.; Wong, D.; Takeuchi, E.; Takeda, K. Performance Analysis of 10 Models of 3D LiDARs for Automated Driving. IEEE Access 2020, 8, 131699–131722.

- Suss, A.; Rochus, V.; Rosmeulen, M.; Rottenberg, X. Benchmarking time-of-flight based depth measurement techniques. In Smart Photonic and Optoelectronic Integrated Circuits XVIII; He, S., Lee, E.H., Eldada, L.A., Eds.; SPIE: Bellingham, WA, USA, 2016; Volume 9751, pp. 199–217.

- Sun, W.; Hu, Y.; MacDonnell, D.G.; Weimer, C.; Baize, R.R. Technique to separate lidar signal and sunlight. Opt. Express 2016, 24, 12949–12954.

- Rusu, R.B.; Cousins, S. 3D is here: Point Cloud Library (PCL). In Proceedings of the 2011 IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 18 August 2011; pp. 1–4.

| Equipment | Result | Description |
|---|---|---|
| Powersupply | 1 | No problems detected |
| Powersupply | 2 | No node detected in the ROS environment |
| Powersupply | 3 | Detected values do not match the expected values |
| Railsystem | 1 | No problems detected |
| Railsystem | 2 | No node detected in the ROS environment |
| Railsystem | 3 | Component not ready |
| Railsystem | 4 | Component has internal errors |
| Railsystem | 5 | Component not moving after a moving command |
| Railsystem | 6 | Component not stopping after a stop command |
| Railsystem | 7 | Component not in the correct position |
| RotGon | 1 | No problems detected |
| RotGon | 2 | No node detected in the ROS environment |
| RotGon | 3 | Component not in the correct position |

| Cluster Tolerance (CF) | Cluster Size, min (CF) | Cluster Size, max (CF) | Threshold, min (DF) | Threshold, max (DF) | Target Distance |
|---|---|---|---|---|---|
| 0.03 m | 100 pt | 2000 pt | 5.5 m | 5.6 m | 5.5 m |

| Test | Setting | Horizontal FoV (min) | Horizontal FoV (max) | Vertical FoV (min) | Vertical FoV (max) |
|---|---|---|---|---|---|
| Test 1 | FoVSF | 0° | 60° | −10° | 15° |
| Test 1 | Measured FoV | 0.03° | 59.89° | −1.95° | 14.89° |
| Test 2 | FoVSF | 20° | 75° | −15° | 0° |
| Test 2 | Measured FoV | 20.02° | 74.89° | −14.79° | −1.05° |
| Test 3 | FoVSF | 0° | 135° | 0° | 17° |
| Test 3 | Measured FoV | −1.08° | 134.70° | 0.04° | 16.92° |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gomes, T.; Roriz, R.; Cunha, L.; Ganal, A.; Soares, N.; Araújo, T.; Monteiro, J. Evaluation and Testing System for Automotive LiDAR Sensors. Appl. Sci. 2022, 12, 13003. https://doi.org/10.3390/app122413003
