Comparing OBIA-Generated Labels and Manually Annotated Labels for Semantic Segmentation in Extracting Refugee-Dwelling Footprints

Abstract

1. Introduction

2. Materials and Methods

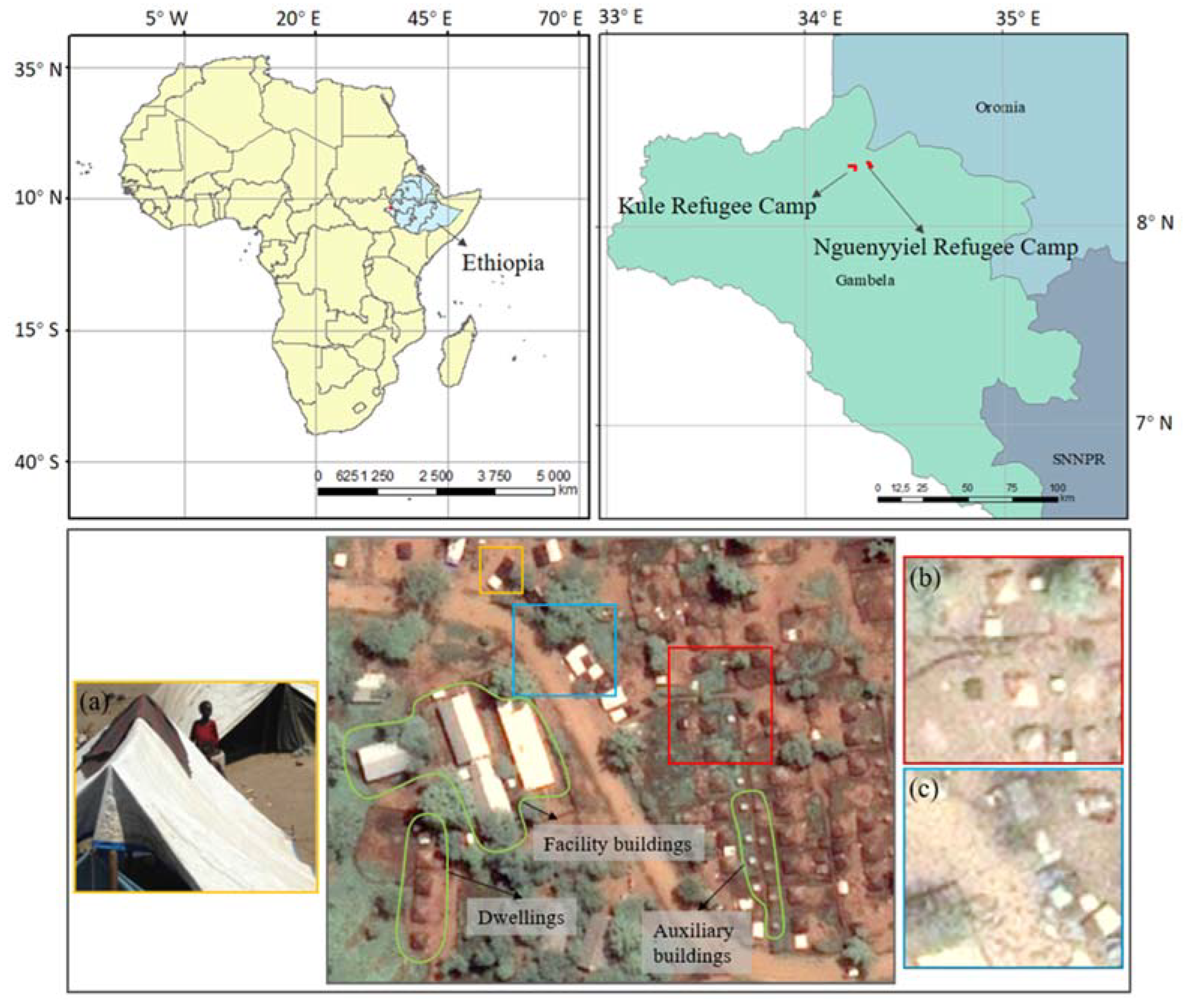

2.1. Study Sites

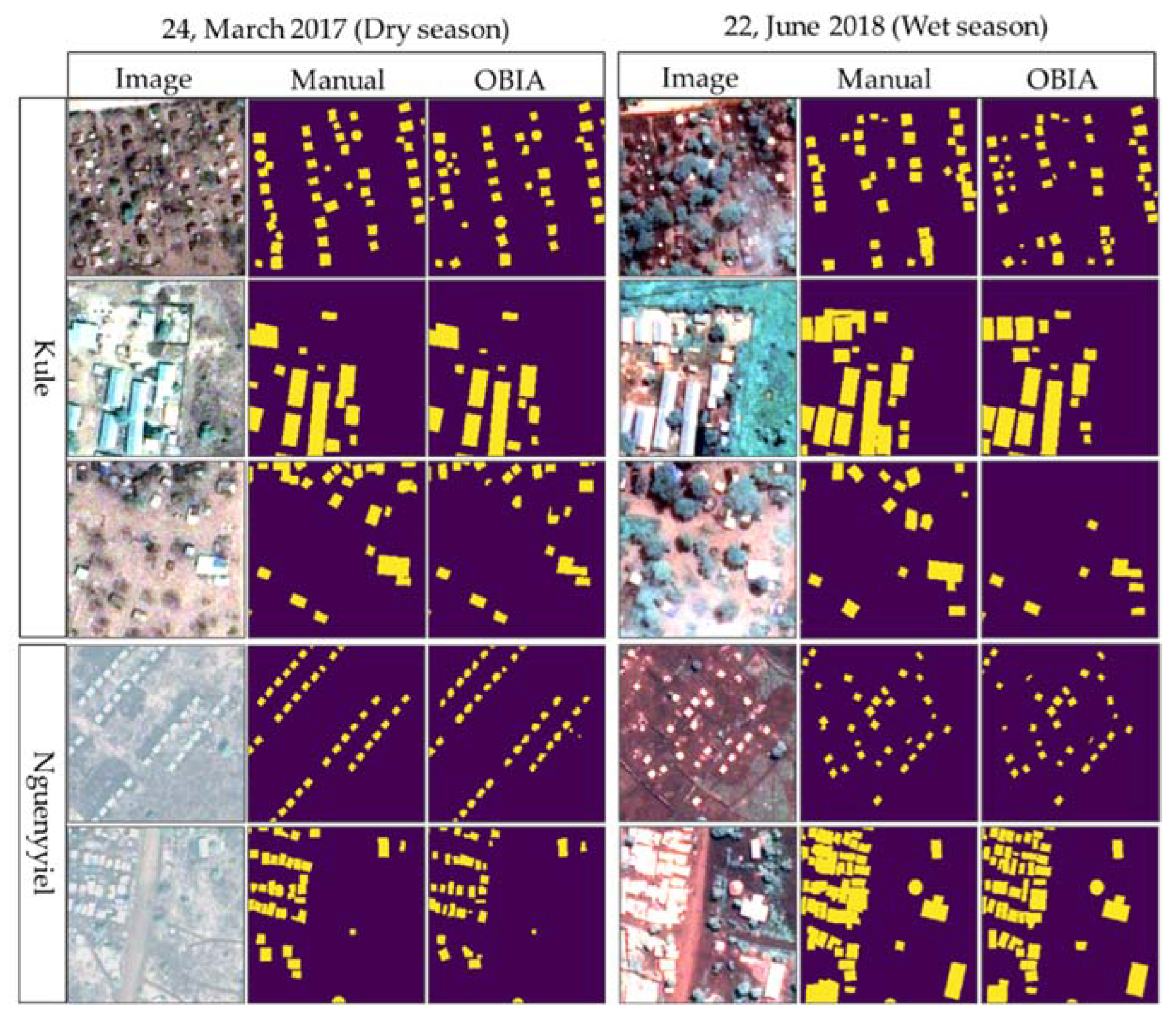

2.2. Data Preparation

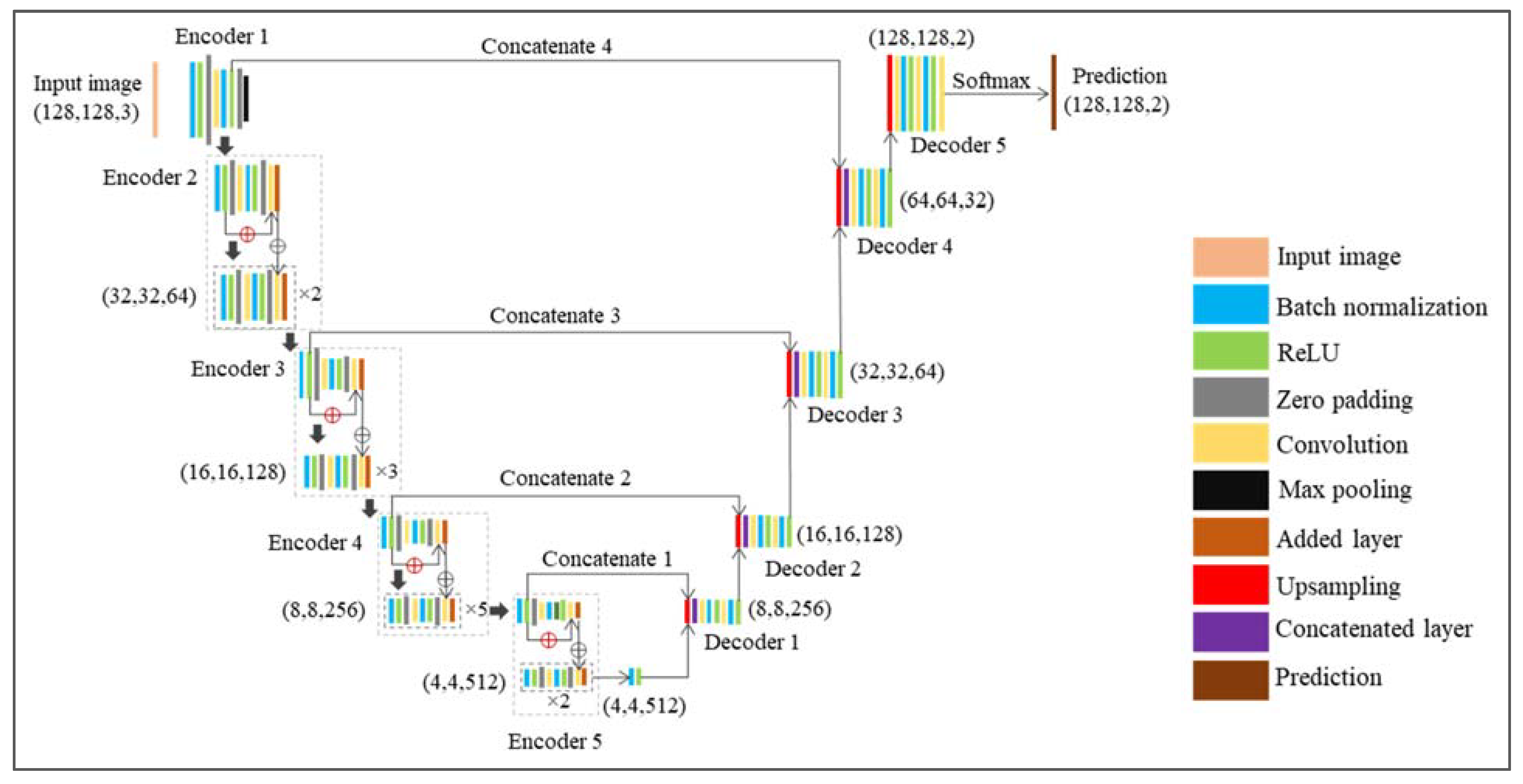

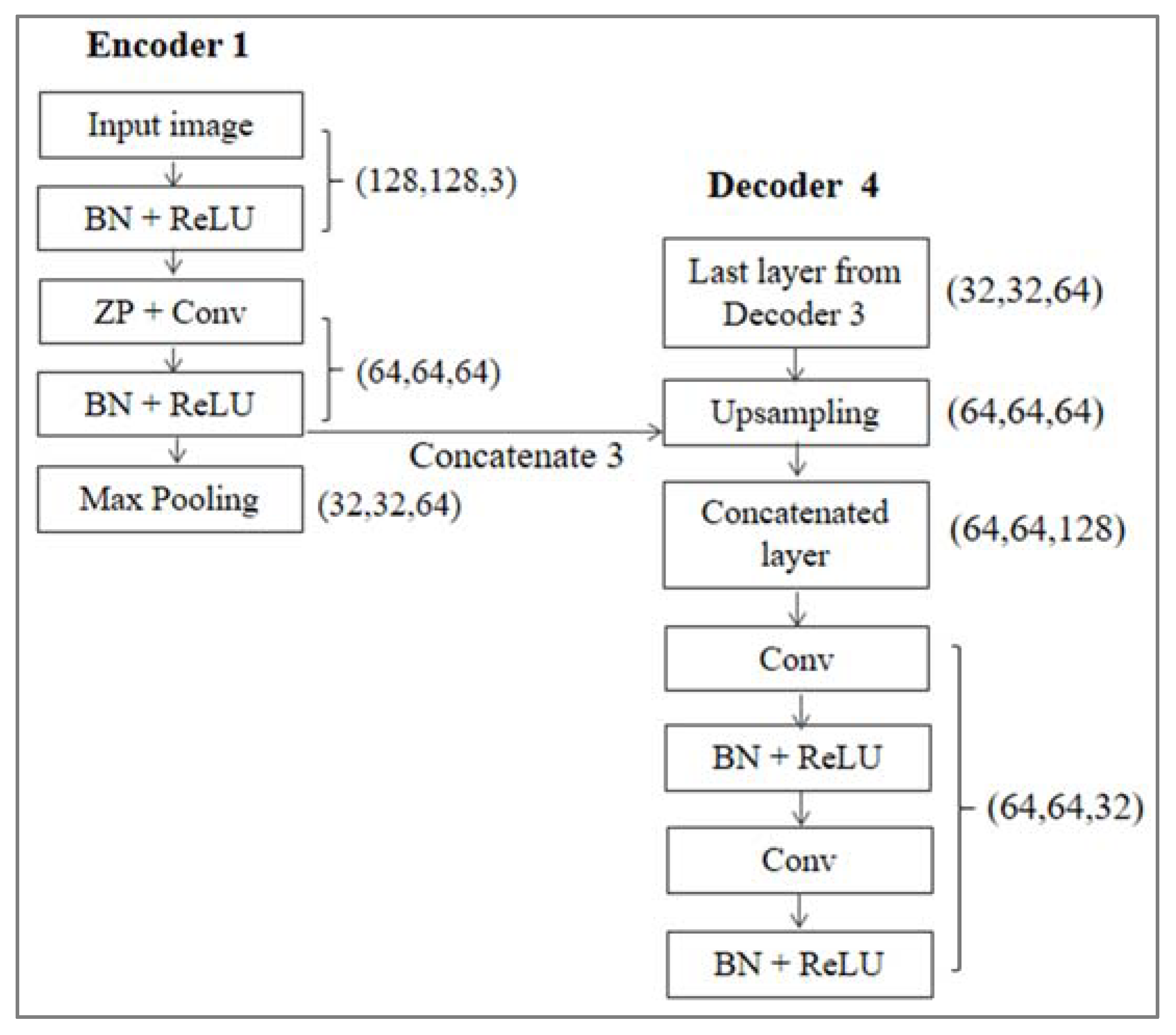

2.3. Model Structures and Hyperparameters

2.4. Accuracy Metrics

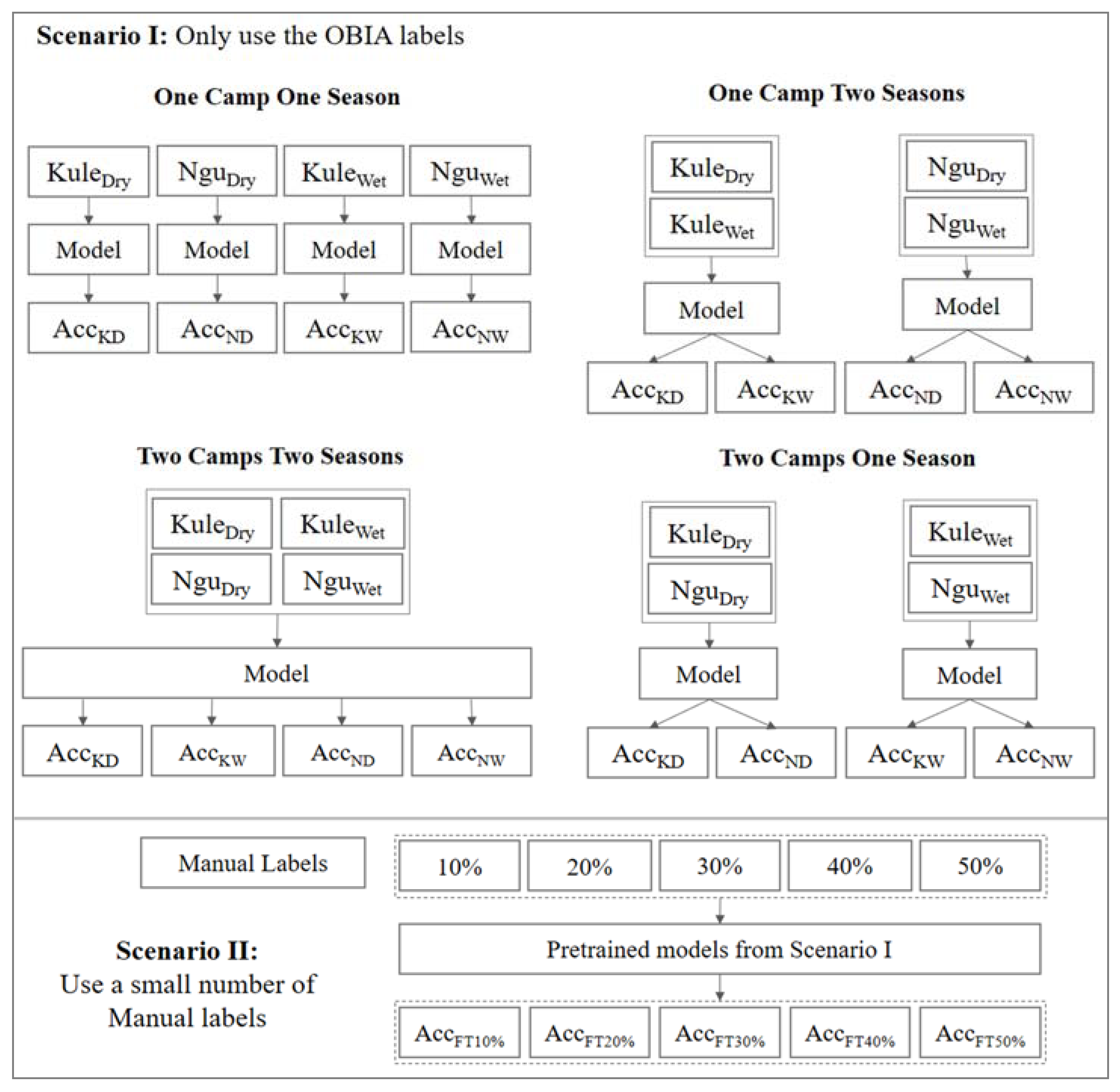

2.5. The Designation of Experiments

2.6. Set-Up

3. Results

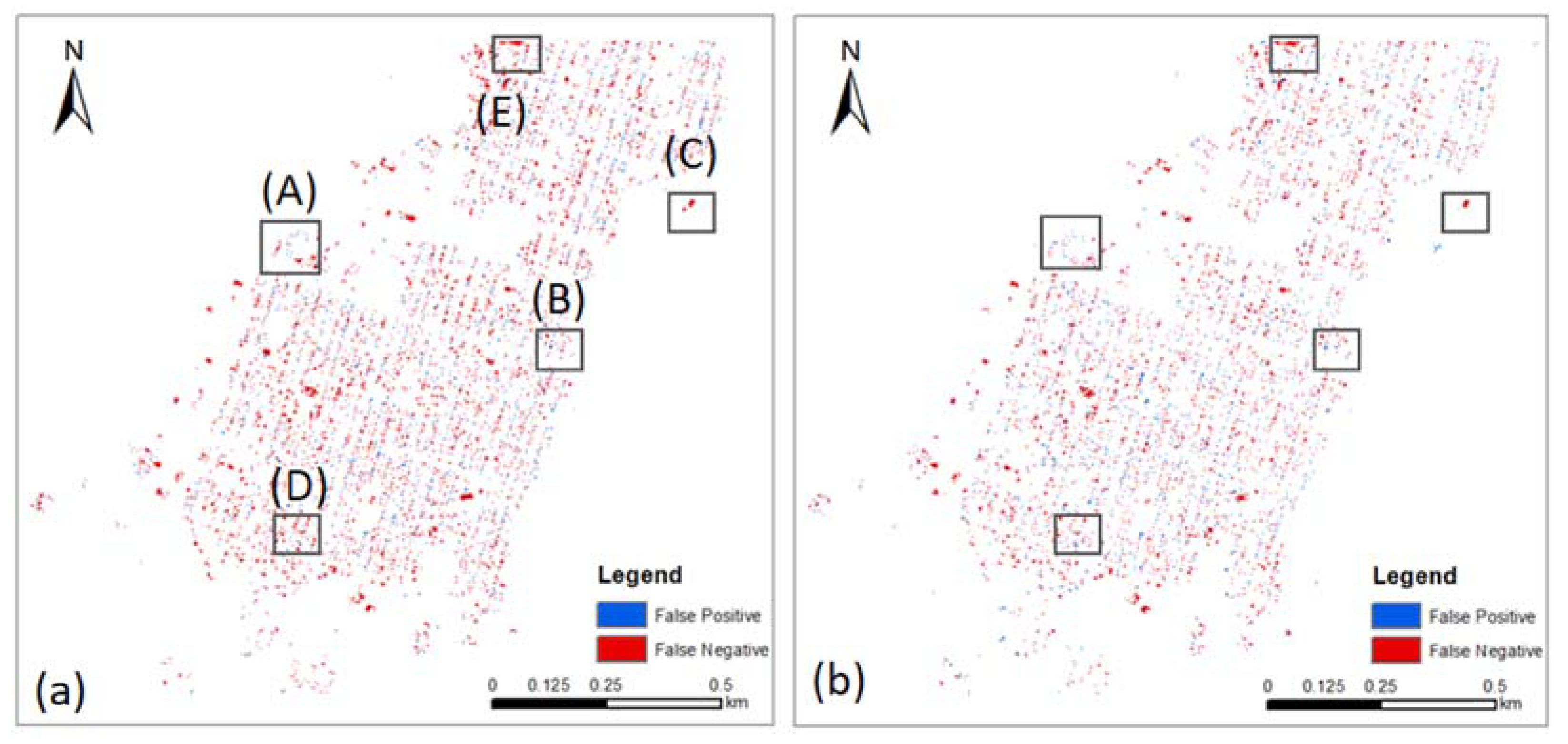

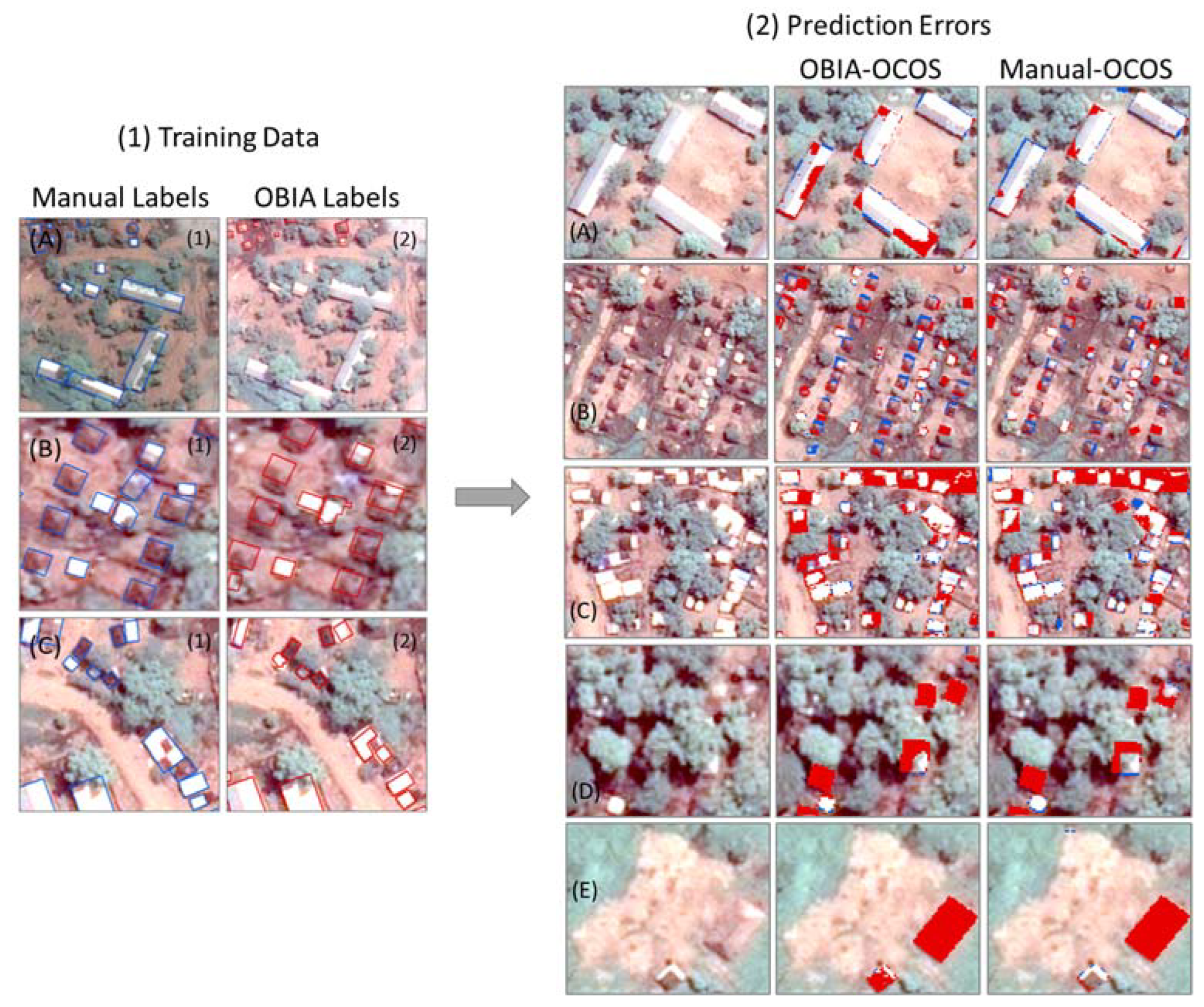

3.1. Scenario I

3.2. Scenario II

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Refugee Camp | Season | Training Strategy | Baseline: Manual | Scenario I: OBIA | Scenario II: 10% | Scenario II: 20% | Scenario II: 30% | Scenario II: 40% | Scenario II: 50% |
|---|---|---|---|---|---|---|---|---|---|
| Kule | Dry | OCOS | 0.7387 | 0.6766 | 0.7365 | 0.7526 | 0.7515 | 0.7476 | 0.7499 |
| Kule | Dry | OCTS | 0.7365 | 0.6844 | 0.7370 | 0.7442 | 0.7449 | 0.7467 | 0.7435 |
| Kule | Dry | TCOS | 0.7411 | 0.6908 | 0.7434 | 0.7443 | 0.7442 | 0.7449 | 0.7443 |
| Kule | Dry | TCTS | 0.7409 | 0.6907 | 0.7258 | 0.7379 | 0.7371 | 0.7394 | 0.7366 |
| Kule | Wet | OCOS | 0.7805 | 0.7129 | 0.7794 | 0.7908 | 0.7929 | 0.7814 | 0.7824 |
| Kule | Wet | OCTS | 0.7849 | 0.7041 | 0.7645 | 0.7797 | 0.7839 | 0.7749 | 0.7814 |
| Kule | Wet | TCOS | 0.7795 | 0.6905 | 0.7816 | 0.7856 | 0.7864 | 0.7874 | 0.7843 |
| Kule | Wet | TCTS | 0.7759 | 0.7157 | 0.7727 | 0.7750 | 0.7864 | 0.7808 | 0.7778 |
| Nguenyyiel | Dry | OCOS | 0.7787 | 0.7540 | 0.7774 | 0.7799 | 0.7801 | 0.7797 | 0.7770 |
| Nguenyyiel | Dry | OCTS | 0.7796 | 0.7558 | 0.7600 | 0.7732 | 0.7786 | 0.7781 | 0.7779 |
| Nguenyyiel | Dry | TCOS | 0.7789 | 0.7539 | 0.7755 | 0.7783 | 0.7790 | 0.7793 | 0.7786 |
| Nguenyyiel | Dry | TCTS | 0.7799 | 0.7583 | 0.7804 | 0.7874 | 0.7858 | 0.7865 | 0.7835 |
| Nguenyyiel | Wet | OCOS | 0.7858 | 0.7651 | 0.7873 | 0.7907 | 0.7895 | 0.7873 | 0.7862 |
| Nguenyyiel | Wet | OCTS | 0.7853 | 0.7630 | 0.7868 | 0.7892 | 0.7892 | 0.7864 | 0.7844 |
| Nguenyyiel | Wet | TCOS | 0.7884 | 0.7629 | 0.7957 | 0.7978 | 0.7973 | 0.7960 | 0.7990 |
| Nguenyyiel | Wet | TCTS | 0.7977 | 0.7731 | 0.7753 | 0.7618 | 0.7969 | 0.7770 | 0.7955 |

| Refugee Camp | Season | Training Strategy | Baseline: Manual | Scenario I: OBIA | Scenario II: 10% | Scenario II: 20% | Scenario II: 30% | Scenario II: 40% | Scenario II: 50% |
|---|---|---|---|---|---|---|---|---|---|
| Kule | Dry | OCOS | 0.7819 | 0.7429 | 0.7795 | 0.7908 | 0.7900 | 0.7873 | 0.7888 |
| Kule | Dry | OCTS | 0.7803 | 0.7481 | 0.7808 | 0.7853 | 0.7858 | 0.7869 | 0.7847 |
| Kule | Dry | TCOS | 0.7834 | 0.7520 | 0.7849 | 0.7854 | 0.7853 | 0.7857 | 0.7852 |
| Kule | Dry | TCTS | 0.7832 | 0.7518 | 0.7736 | 0.7813 | 0.7807 | 0.7821 | 0.7803 |
| Kule | Wet | OCOS | 0.8115 | 0.7664 | 0.8108 | 0.8186 | 0.8200 | 0.8119 | 0.8126 |
| Kule | Wet | OCTS | 0.8145 | 0.7610 | 0.8005 | 0.8109 | 0.8139 | 0.8074 | 0.8120 |
| Kule | Wet | TCOS | 0.8108 | 0.7528 | 0.8122 | 0.8151 | 0.8156 | 0.8162 | 0.8139 |
| Kule | Wet | TCTS | 0.8082 | 0.7682 | 0.8061 | 0.8077 | 0.8155 | 0.8115 | 0.8094 |
| Nguenyyiel | Dry | OCOS | 0.8157 | 0.7993 | 0.8149 | 0.8165 | 0.8166 | 0.8163 | 0.8145 |
| Nguenyyiel | Dry | OCTS | 0.8163 | 0.8004 | 0.8030 | 0.8119 | 0.8156 | 0.8153 | 0.8151 |
| Nguenyyiel | Dry | TCOS | 0.8158 | 0.7992 | 0.8134 | 0.8154 | 0.8159 | 0.8161 | 0.8156 |
| Nguenyyiel | Dry | TCTS | 0.8164 | 0.8021 | 0.8169 | 0.8216 | 0.8205 | 0.8210 | 0.8189 |
| Nguenyyiel | Wet | OCOS | 0.8174 | 0.8033 | 0.8185 | 0.8208 | 0.8199 | 0.8184 | 0.8177 |
| Nguenyyiel | Wet | OCTS | 0.8170 | 0.8018 | 0.8182 | 0.8197 | 0.8198 | 0.8178 | 0.8163 |
| Nguenyyiel | Wet | TCOS | 0.8192 | 0.8019 | 0.8245 | 0.8259 | 0.8256 | 0.8246 | 0.8268 |
| Nguenyyiel | Wet | TCTS | 0.8258 | 0.8088 | 0.8102 | 0.8004 | 0.8252 | 0.8110 | 0.8242 |

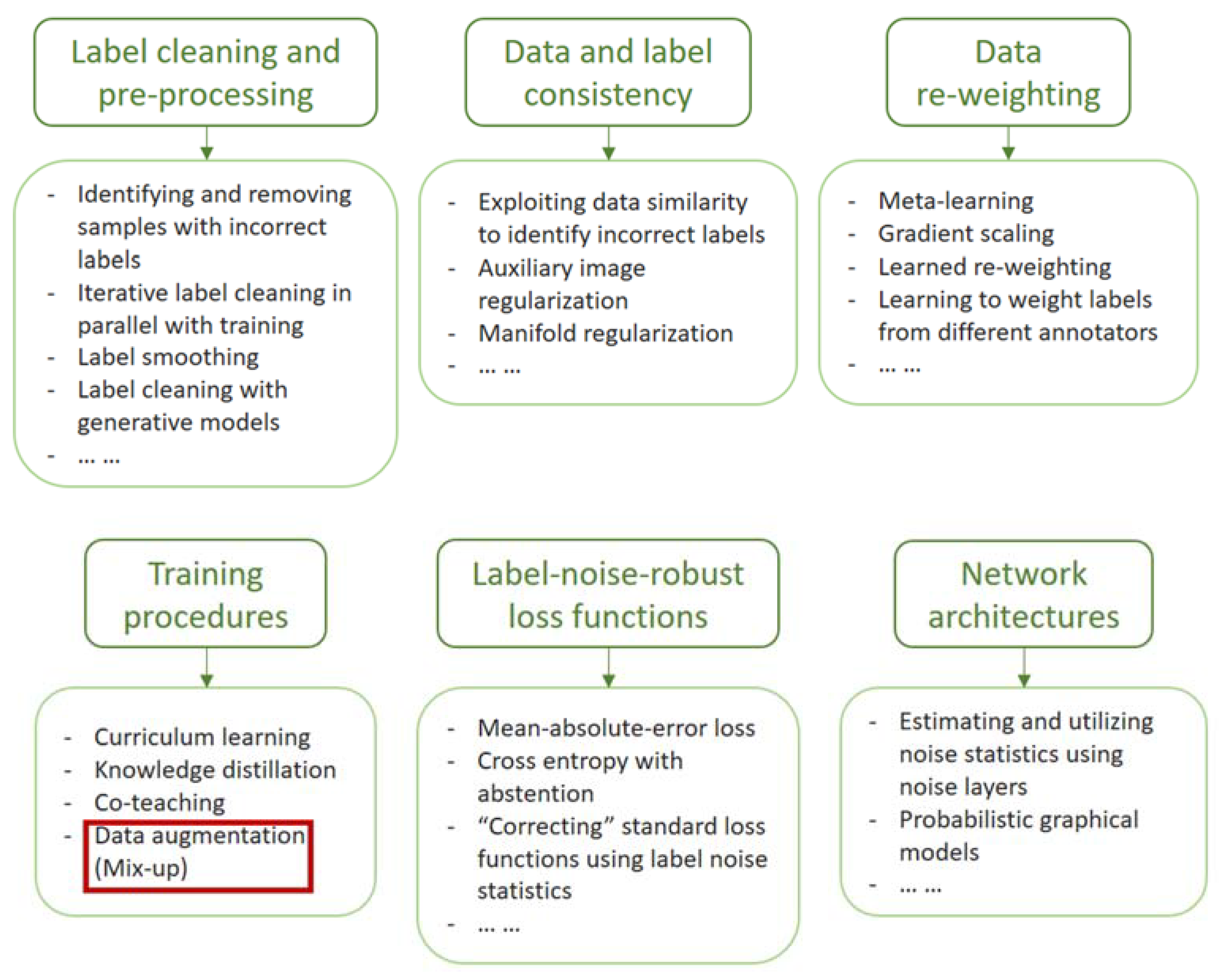


| Refugee Camp | Season | Training: OBIA | Training: Manual | Validation: OBIA | Validation: Manual |
|---|---|---|---|---|---|
| Kule | Dry | 8253 | 8286 | 917 | 921 |
| Kule | Wet | 7930 | 8109 | 881 | 901 |
| Nguenyyiel | Dry | 6806 | 6745 | 756 | 749 |
| Nguenyyiel | Wet | 8106 | 8145 | 901 | 905 |
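The counts in the table above can be checked for the share held out for validation. The following is a minimal sketch, not the authors' code: the dictionary layout and the helper name `validation_fraction` are hypothetical, and only the numbers are taken from the table. It suggests that roughly 10% of each camp/season/label-source set is used for validation.

```python
# Counts copied from the table above: (training, validation) per label source.
# The dictionary layout and helper name are illustrative assumptions.
counts = {
    ("Kule", "Dry"): {"OBIA": (8253, 917), "Manual": (8286, 921)},
    ("Kule", "Wet"): {"OBIA": (7930, 881), "Manual": (8109, 901)},
    ("Nguenyyiel", "Dry"): {"OBIA": (6806, 756), "Manual": (6745, 749)},
    ("Nguenyyiel", "Wet"): {"OBIA": (8106, 901), "Manual": (8145, 905)},
}


def validation_fraction(n_train: int, n_val: int) -> float:
    """Share of all counted items that falls into the validation set."""
    return n_val / (n_train + n_val)


# Compute the validation share for every camp, season, and label source.
fractions = {
    (camp, season, source): validation_fraction(n_train, n_val)
    for (camp, season), by_source in counts.items()
    for source, (n_train, n_val) in by_source.items()
}

for key, frac in fractions.items():
    print(key, f"{frac:.4f}")
```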

| Refugee Camp | Season | Training Strategy | Baseline: Manual | Scenario I: OBIA | Scenario II: 10% | Scenario II: 20% | Scenario II: 30% | Scenario II: 40% | Scenario II: 50% |
|---|---|---|---|---|---|---|---|---|---|
| Kule | Dry | OCOS | 0.5857 | 0.5113 | 0.5829 | 0.6033 | 0.6019 | 0.5969 | 0.5999 |
| Kule | Dry | OCTS | 0.5828 | 0.5202 | 0.5836 | 0.5927 | 0.5936 | 0.5958 | 0.5917 |
| Kule | Dry | TCOS | 0.5887 | 0.5277 | 0.5916 | 0.5927 | 0.5927 | 0.5934 | 0.5927 |
| Kule | Dry | TCTS | 0.5884 | 0.5276 | 0.5696 | 0.5847 | 0.5836 | 0.5866 | 0.5830 |
| Kule | Wet | OCOS | 0.6400 | 0.5539 | 0.6386 | 0.6541 | 0.6569 | 0.6412 | 0.6425 |
| Kule | Wet | OCTS | 0.6459 | 0.5434 | 0.6188 | 0.6389 | 0.6446 | 0.6325 | 0.6413 |
| Kule | Wet | TCOS | 0.6387 | 0.5273 | 0.6415 | 0.6470 | 0.6480 | 0.6493 | 0.6451 |
| Kule | Wet | TCTS | 0.6339 | 0.5573 | 0.6296 | 0.6327 | 0.6480 | 0.6404 | 0.6364 |
| Nguenyyiel | Dry | OCOS | 0.6377 | 0.6051 | 0.6359 | 0.6393 | 0.6394 | 0.6389 | 0.6353 |
| Nguenyyiel | Dry | OCTS | 0.6388 | 0.6074 | 0.6129 | 0.6302 | 0.6375 | 0.6369 | 0.6366 |
| Nguenyyiel | Dry | TCOS | 0.6379 | 0.6051 | 0.6333 | 0.6371 | 0.6380 | 0.6385 | 0.6375 |
| Nguenyyiel | Dry | TCTS | 0.6391 | 0.6107 | 0.6399 | 0.6493 | 0.6471 | 0.6481 | 0.6440 |
| Nguenyyiel | Wet | OCOS | 0.6472 | 0.6196 | 0.6491 | 0.6538 | 0.6522 | 0.6493 | 0.6477 |
| Nguenyyiel | Wet | OCTS | 0.6465 | 0.6168 | 0.6486 | 0.6517 | 0.6518 | 0.6480 | 0.6452 |
| Nguenyyiel | Wet | TCOS | 0.6507 | 0.6167 | 0.6608 | 0.6636 | 0.6630 | 0.6611 | 0.6653 |
| Nguenyyiel | Wet | TCTS | 0.6634 | 0.6302 | 0.6330 | 0.6152 | 0.6624 | 0.6353 | 0.6604 |
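The entries of the table above and of the first table in Appendix A appear to be linked by the standard identity between intersection over union (IoU) and the F1 (Dice) score, F1 = 2·IoU / (1 + IoU). Which metric each table reports is an assumption here, since the tables carry no captions; the sketch below only checks that a few corresponding cells agree with the identity to within rounding. The helper name `f1_from_iou` is hypothetical.

```python
# Identity between IoU and F1 (Dice) for a single confusion matrix:
#   IoU = TP / (TP + FP + FN),  F1 = 2*TP / (2*TP + FP + FN)
#   =>  F1 = 2*IoU / (1 + IoU)
# Assumption: the table above holds IoU values and the first Appendix A
# table holds the matching F1 values; neither table is captioned.


def f1_from_iou(iou: float) -> float:
    """Convert an IoU value to the equivalent F1 (Dice) score."""
    return 2.0 * iou / (1.0 + iou)


# (value from the table above, value from the first Appendix A table)
# for the same camp, season, training strategy, and column.
pairs = [
    (0.5857, 0.7387),  # Kule, Dry, OCOS, Baseline
    (0.5113, 0.6766),  # Kule, Dry, OCOS, Scenario I
    (0.6634, 0.7977),  # Nguenyyiel, Wet, TCTS, Baseline
    (0.6152, 0.7618),  # Nguenyyiel, Wet, TCTS, Scenario II 20%
]

# Largest gap between the converted value and the tabulated one;
# four-decimal rounding in both tables allows a small discrepancy.
max_gap = max(abs(f1_from_iou(iou) - f1) for iou, f1 in pairs)
print(f"largest discrepancy: {max_gap:.5f}")
```

Because both tables are rounded to four decimals, agreement is expected only up to about one unit in the last digit.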

| Refugee Camp | Season | Training Strategy | Baseline: Manual | Scenario I: OBIA | Scenario II: 10% | Scenario II: 20% | Scenario II: 30% | Scenario II: 40% | Scenario II: 50% |
|---|---|---|---|---|---|---|---|---|---|
| Kule | Dry | OCOS | 0.6840 | 0.5912 | 0.7370 | 0.7313 | 0.7335 | 0.7299 | 0.7377 |
| Kule | Dry | OCTS | 0.6910 | 0.5790 | 0.6798 | 0.7066 | 0.7071 | 0.7180 | 0.7135 |
| Kule | Dry | TCOS | 0.6940 | 0.5879 | 0.7002 | 0.7035 | 0.7076 | 0.7135 | 0.7180 |
| Kule | Dry | TCTS | 0.6959 | 0.5950 | 0.6553 | 0.6912 | 0.6922 | 0.7007 | 0.6960 |
| Kule | Wet | OCOS | 0.7116 | 0.6162 | 0.7470 | 0.7551 | 0.7324 | 0.7324 | 0.7349 |
| Kule | Wet | OCTS | 0.7245 | 0.6005 | 0.6766 | 0.7120 | 0.7225 | 0.7228 | 0.7298 |
| Kule | Wet | TCOS | 0.7148 | 0.5755 | 0.7209 | 0.7243 | 0.7307 | 0.7422 | 0.7377 |
| Kule | Wet | TCTS | 0.7124 | 0.6234 | 0.6966 | 0.7051 | 0.7338 | 0.7281 | 0.7249 |
| Nguenyyiel | Dry | OCOS | 0.7760 | 0.7093 | 0.7536 | 0.7628 | 0.7623 | 0.7668 | 0.7679 |
| Nguenyyiel | Dry | OCTS | 0.7657 | 0.7110 | 0.7493 | 0.7552 | 0.7683 | 0.7635 | 0.7651 |
| Nguenyyiel | Dry | TCOS | 0.7698 | 0.7043 | 0.7863 | 0.7659 | 0.7667 | 0.7688 | 0.7677 |
| Nguenyyiel | Dry | TCTS | 0.7713 | 0.7186 | 0.7562 | 0.7779 | 0.7782 | 0.7825 | 0.7784 |
| Nguenyyiel | Wet | OCOS | 0.7553 | 0.7042 | 0.7474 | 0.7595 | 0.7694 | 0.7628 | 0.7553 |
| Nguenyyiel | Wet | OCTS | 0.7608 | 0.7045 | 0.7487 | 0.7592 | 0.7621 | 0.7595 | 0.7552 |
| Nguenyyiel | Wet | TCOS | 0.7638 | 0.6890 | 0.7627 | 0.7712 | 0.7717 | 0.7724 | 0.7747 |
| Nguenyyiel | Wet | TCTS | 0.7712 | 0.7138 | 0.7245 | 0.7626 | 0.7754 | 0.7667 | 0.7703 |

| Refugee Camp | Season | Training Strategy | Baseline: Manual | Scenario I: OBIA | Scenario II: 10% | Scenario II: 20% | Scenario II: 30% | Scenario II: 40% | Scenario II: 50% |
|---|---|---|---|---|---|---|---|---|---|
| Kule | Dry | OCOS | 0.7909 | 0.8029 | 0.7360 | 0.7752 | 0.7704 | 0.7661 | 0.7625 |
| Kule | Dry | OCTS | 0.7882 | 0.8367 | 0.8047 | 0.7862 | 0.7871 | 0.7777 | 0.7760 |
| Kule | Dry | TCOS | 0.7950 | 0.8374 | 0.7923 | 0.7901 | 0.7849 | 0.7791 | 0.7726 |
| Kule | Dry | TCTS | 0.7921 | 0.8232 | 0.8132 | 0.7914 | 0.7882 | 0.7827 | 0.7822 |
| Kule | Wet | OCOS | 0.8642 | 0.8455 | 0.8402 | 0.8346 | 0.8374 | 0.8374 | 0.8364 |
| Kule | Wet | OCTS | 0.8562 | 0.8511 | 0.8787 | 0.8616 | 0.8568 | 0.8352 | 0.8410 |
| Kule | Wet | TCOS | 0.8573 | 0.8629 | 0.8535 | 0.8583 | 0.8512 | 0.8385 | 0.8372 |
| Kule | Wet | TCTS | 0.8518 | 0.8402 | 0.8674 | 0.8603 | 0.8471 | 0.8418 | 0.8389 |
| Nguenyyiel | Dry | OCOS | 0.7815 | 0.8048 | 0.8028 | 0.7979 | 0.7987 | 0.7930 | 0.7863 |
| Nguenyyiel | Dry | OCTS | 0.7940 | 0.8066 | 0.7710 | 0.7921 | 0.7891 | 0.7934 | 0.7912 |
| Nguenyyiel | Dry | TCOS | 0.7883 | 0.8111 | 0.7650 | 0.7911 | 0.7918 | 0.7902 | 0.7899 |
| Nguenyyiel | Dry | TCTS | 0.7886 | 0.8026 | 0.8063 | 0.7971 | 0.7935 | 0.7905 | 0.7886 |
| Nguenyyiel | Wet | OCOS | 0.8189 | 0.8376 | 0.8316 | 0.8245 | 0.8106 | 0.8135 | 0.8198 |
| Nguenyyiel | Wet | OCTS | 0.8114 | 0.8320 | 0.8291 | 0.8215 | 0.8183 | 0.8154 | 0.8159 |
| Nguenyyiel | Wet | TCOS | 0.8147 | 0.8545 | 0.8318 | 0.8263 | 0.8247 | 0.8210 | 0.8249 |
| Nguenyyiel | Wet | TCTS | 0.8260 | 0.8432 | 0.8337 | 0.7610 | 0.8196 | 0.7876 | 0.8224 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gao, Y.; Lang, S.; Tiede, D.; Gella, G.W.; Wendt, L. Comparing OBIA-Generated Labels and Manually Annotated Labels for Semantic Segmentation in Extracting Refugee-Dwelling Footprints. Appl. Sci. 2022, 12, 11226. https://doi.org/10.3390/app122111226
