Using Artificial Intelligence Techniques to Predict Intrinsic Compressibility Characteristic of Clay

Abstract

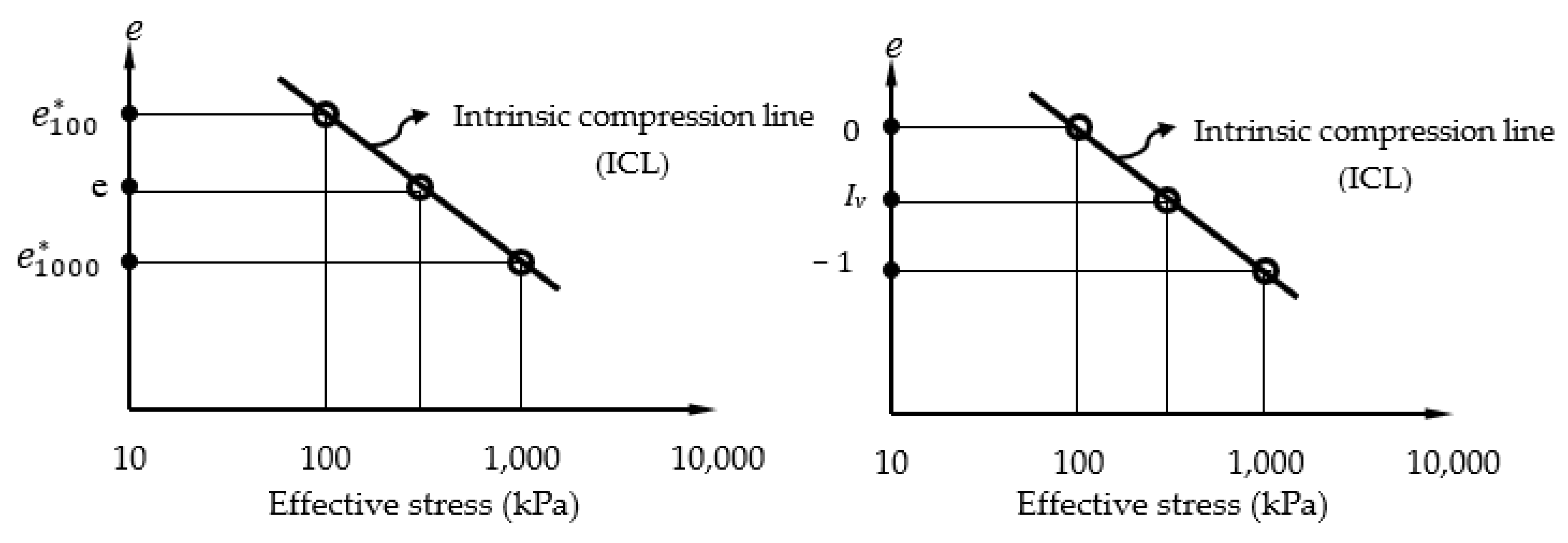

1. Introduction

2. Methodology

2.1. Database Generation and Pre-Processing

2.2. ML Cross-Validation

k-Fold and Monte Carlo Cross-Validation Techniques (KFCV and MCCV)

3. Supervised ML Models

3.1. Tree-Based Ensembles and Decision Forest Classifier Models

3.1.1. Random Decision Forest (RDF) and Boosted Decision Trees (BDT)

3.1.2. Meta-Heuristic Ensembles and Voting Ensemble (VE)

3.2. Stand-Alone Algorithms

3.2.1. Linear Regression (REG)

3.2.2. Logistic Regression (LR)

3.2.3. Bayesian Linear Regressor (BLR)

3.2.4. Artificial Neural Networks (ANN)

3.3. ML Model Implementation and Multi-Class Evaluation Metrics

4. Results and Discussion

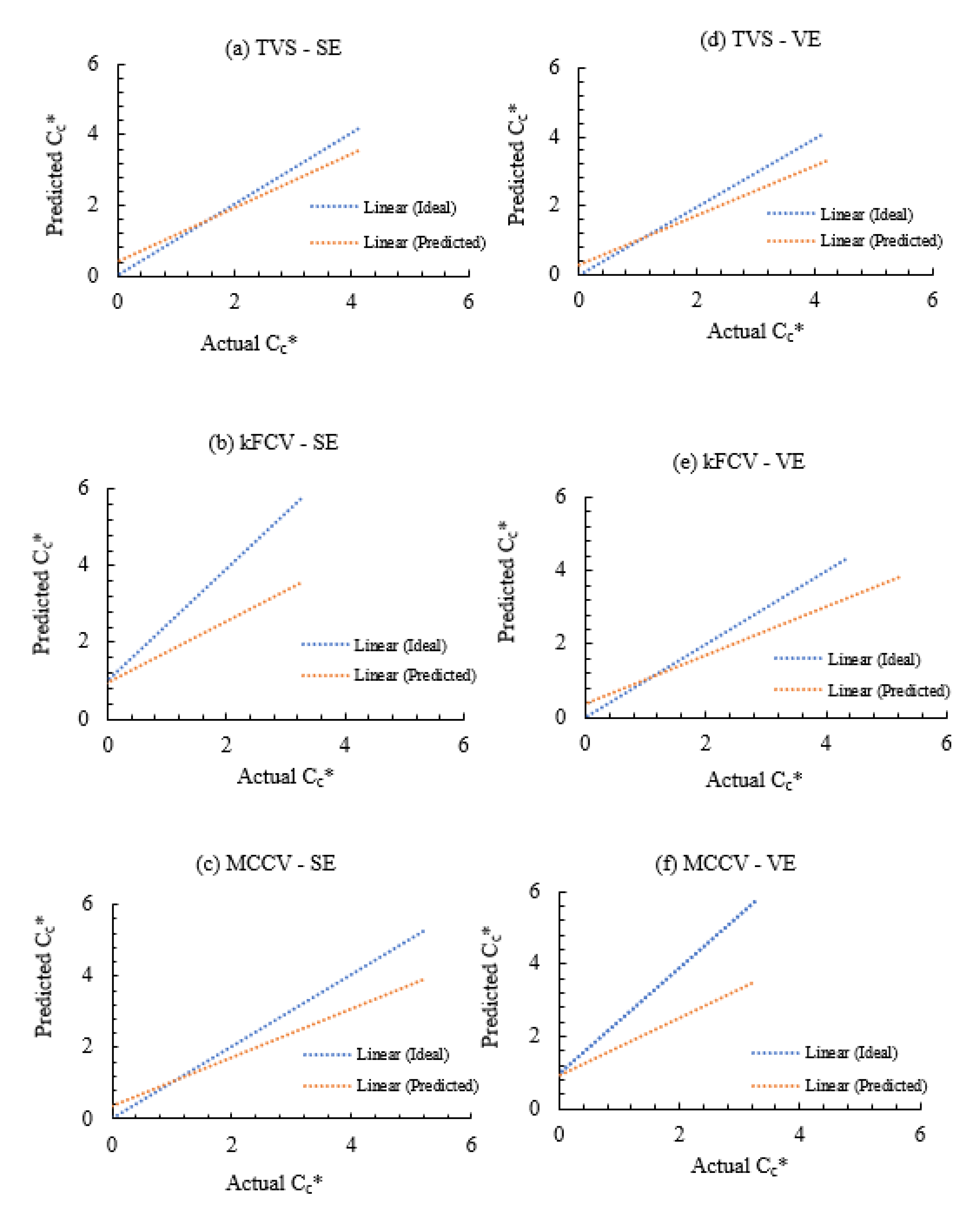

4.1. ML Regression

4.2. Sensitivity Analysis of the Meta-Ensembles

4.2.1. Comparison of Cross-Validation Techniques

4.2.2. Model Residuals

4.2.3. Distribution of Residuals

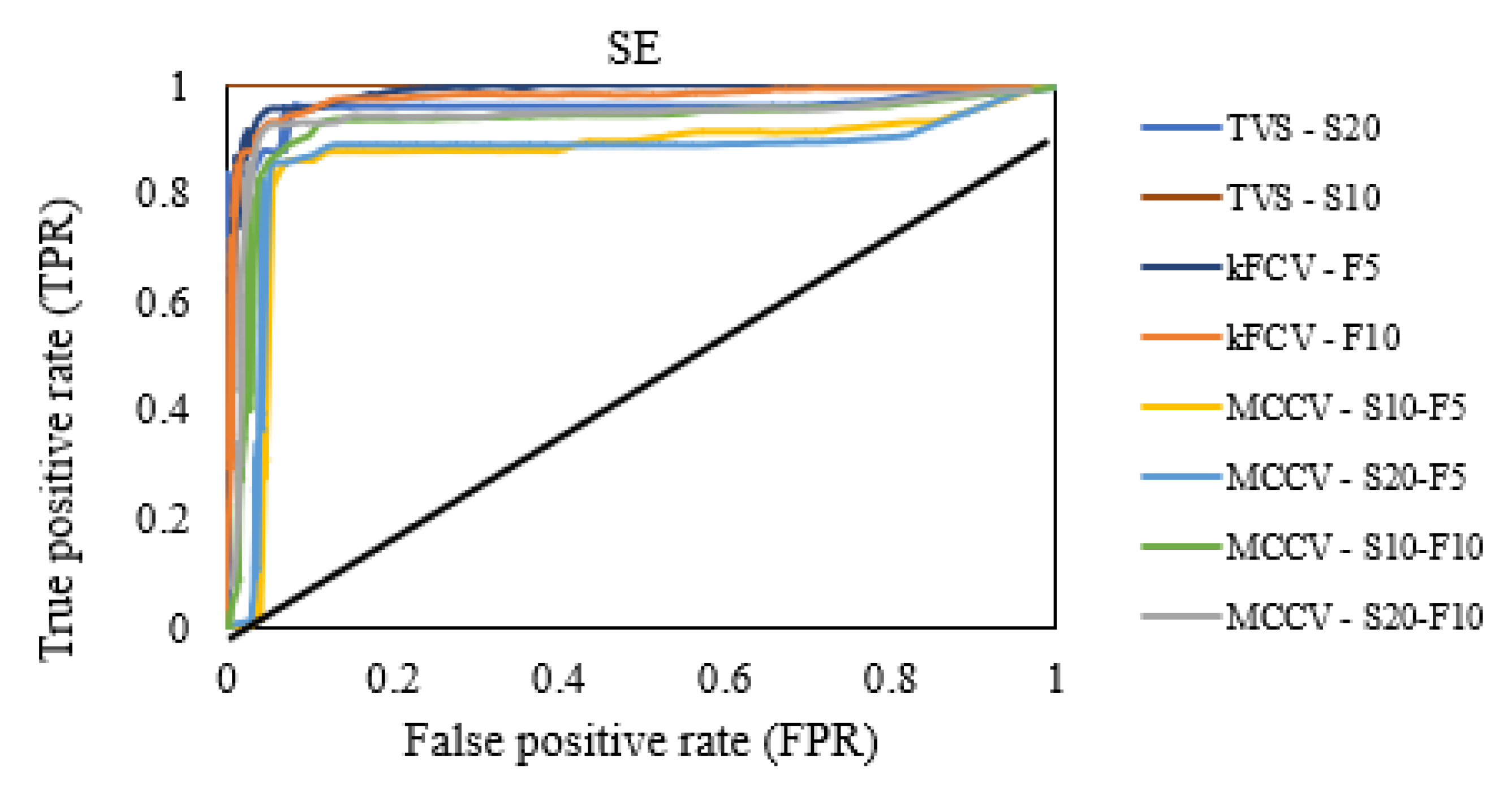

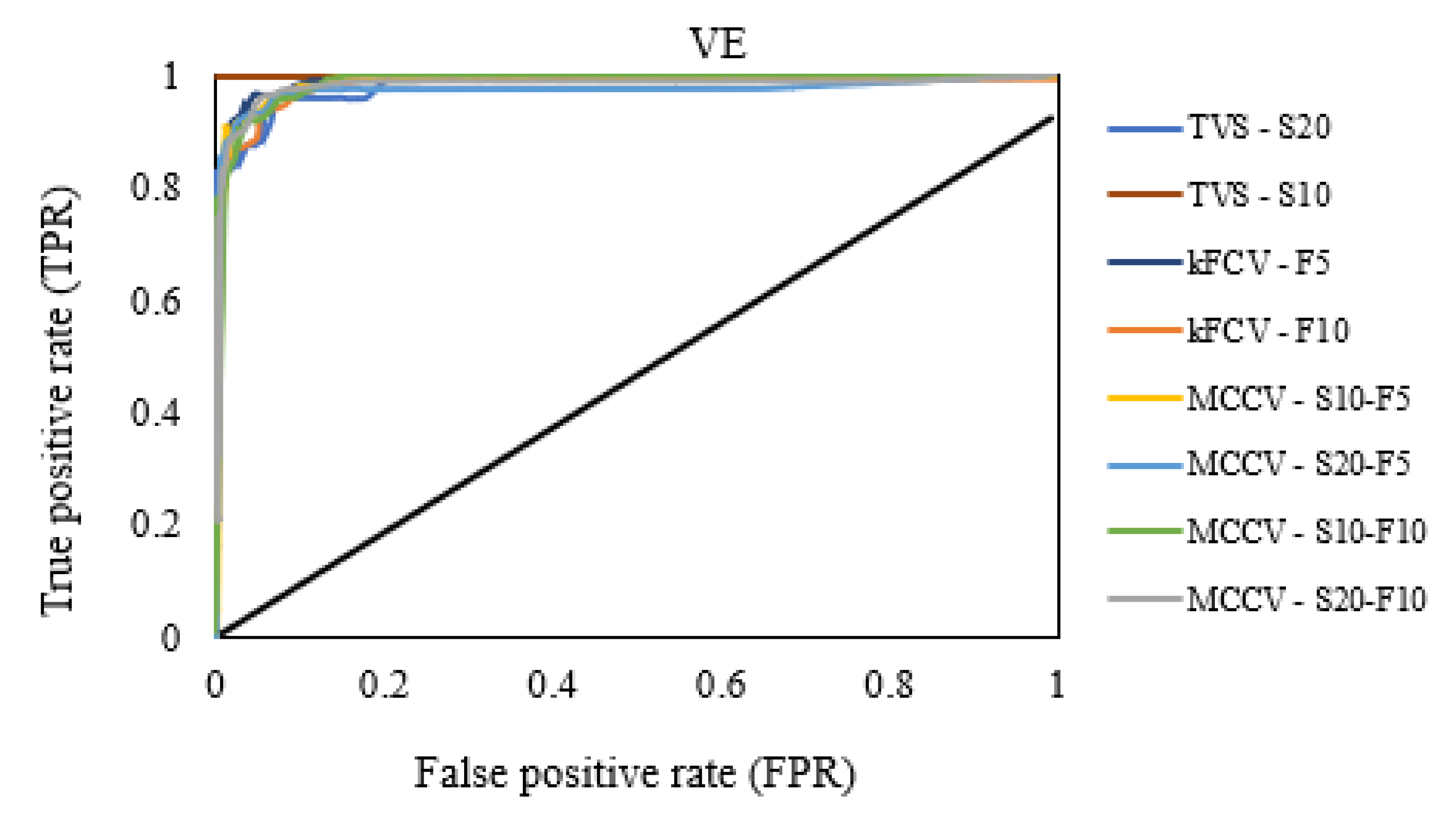

4.3. ML Classification

4.3.1. Receiver Operating Characteristic Curve (ROC) and Area under Curve (AUC)

4.3.2. Comparison between Meta-Heuristic Ensembles and Traditional Classifiers

4.3.3. Feature Importance

5. Study Significance and Implementation

6. Conclusions

- Among the traditional stand-alone ML regression models, BLR gave the lowest accuracy in predicting the intrinsic compressibility index of soil (R2 = 0.19, RMSE = 1.14) compared with REG and ANN. More generally, linear stand-alone models such as REG and BLR appear unable to capture complex interactions among the input variables because of their underlying assumption of linearity.

- The tree ensembles (RDF and BDT) generally outperformed the stand-alone models: RDF achieved an R2 of 0.86 and RMSE of 0.48, and BDT an R2 of 0.822 and RMSE of 0.53. The ensemble techniques inherent in these models, bootstrap aggregation ('bagging') in RDF and boosting in BDT, most likely account for this gain in accuracy.

- Ensemble averaging, in which the predictions of several models are combined in a meta-heuristic ensemble through voting (VE) and stacking (SE), gave the best overall performance in predicting the intrinsic compressibility index of soils, with an average R2 of 0.9 and RMSE of 0.34.

- Sensitivity analysis and diagnostic tests of the training, testing and validation procedure and its outcomes showed that Monte Carlo cross-validation gave the best results for ML prediction.

- Analysis of the features that directly influence the ML predictions showed that the void ratio at an effective stress of 100 kPa was the most significant variable, followed by the soil's Atterberg limits, while specific gravity was the least important variable in forecasting the intrinsic compressibility index of soils, C*c.
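The two ensemble-averaging schemes named above, voting and stacking, can be illustrated with a short Python sketch. It assumes the base models (for example REG, ANN, BDT and RDF) have already been trained and that their predictions of C*c on a common set of samples are available as plain lists. The function names are hypothetical, and the ordinary-least-squares meta-learner used for stacking is an illustrative assumption; the source does not specify which meta-learner the authors used.

```python
from typing import List, Sequence


def voting_average(predictions: Sequence[Sequence[float]]) -> List[float]:
    """Voting (averaging) ensemble: per-sample mean of the base-model predictions."""
    n_models = len(predictions)
    return [sum(column) / n_models for column in zip(*predictions)]


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the square system a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_stacking_weights(predictions: Sequence[Sequence[float]],
                         targets: Sequence[float]) -> List[float]:
    """Stacking with an assumed linear meta-learner: y ~ w0 + sum_k w_k * p_k (OLS fit)."""
    rows = [[1.0] + list(sample) for sample in zip(*predictions)]  # intercept column first
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * y for r, y in zip(rows, targets)) for i in range(p)]
    return _solve(xtx, xty)  # normal equations (X^T X) w = X^T y


def stacking_predict(weights: Sequence[float],
                     predictions: Sequence[Sequence[float]]) -> List[float]:
    """Apply the fitted meta-learner to new base-model predictions."""
    return [weights[0] + sum(w * p for w, p in zip(weights[1:], sample))
            for sample in zip(*predictions)]
```

With two base models whose predictions are `p1 = [1, 2, 3, 4]` and `p2 = [2, 1, 4, 3]`, `voting_average([p1, p2])` returns `[1.5, 1.5, 3.5, 3.5]`. Voting gives every model the same say, whereas stacking learns one weight per model plus an intercept, so a stronger base model can receive a larger share.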

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


| Mean | Standard Error | Standard Deviation | Kurtosis | Skewness | Min. | Max. | Range |
|---|---|---|---|---|---|---|---|
| 0.789244 | 0.124782 | 1.36121 | −0.0914 | 0.123457 | 0.08 | 8.5 | 8.42 |

| Statistic | G | LL | PL | PI | | | |
|---|---|---|---|---|---|---|---|
| Mean | 2.71 | 87.62 | 31.11 | 56.50 | 2.37 | 0.84 | 1.51 |
| Standard Error | 0.01 | 8.375 | 1.196 | 7.82 | 0.22 | 0.03 | 0.10 |
| Standard Deviation | 0.10 | 91.36 | 13.05 | 85.25 | 2.43 | 0.36 | 1.08 |
| Kurtosis | 0.86 | 13.93 | 9.15 | 16.11 | 12.94 | 11.59 | 9.53 |
| Skewness | −0.5 | 3.60 | 2.05 | 3.94 | 3.49 | 2.36 | 2.78 |
| Range | 0.56 | 538 | 96 | 503 | 13.68 | 2.75 | 6.65 |
| Min. | 2.37 | 22 | 12 | 5 | 0.59 | 0.31 | 0.45 |
| Max. | 2.93 | 560 | 108 | 508 | 14.27 | 3.06 | 7.10 |
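The summary statistics in the two tables above can be reproduced for any input variable with a sketch such as the following. It assumes the sample (n − 1) standard deviation, a standard error of s/√n, and the bias-adjusted skewness and excess-kurtosis estimators used by spreadsheet functions such as Excel's SKEW and KURT. The source does not state which estimators were applied, and `describe` is a hypothetical helper.

```python
import math
import statistics
from typing import Dict, Sequence


def describe(values: Sequence[float]) -> Dict[str, float]:
    """Descriptive statistics matching the columns of the tables above (assumed estimators)."""
    n = len(values)
    if n < 4:
        raise ValueError("at least four observations are needed for the kurtosis estimator")
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation, n - 1 denominator
    z = [(x - mean) / sd for x in values]  # standardised values
    # Adjusted Fisher-Pearson skewness (assumption: same form as Excel's SKEW).
    skew = n / ((n - 1) * (n - 2)) * sum(t ** 3 for t in z)
    # Bias-adjusted excess kurtosis (assumption: same form as Excel's KURT); 0 for a normal sample.
    kurt = (n * (n + 1) / ((n - 1) * (n - 2) * (n - 3)) * sum(t ** 4 for t in z)
            - 3 * (n - 1) ** 2 / ((n - 2) * (n - 3)))
    return {
        "mean": mean,
        "standard_error": sd / math.sqrt(n),
        "standard_deviation": sd,
        "kurtosis": kurt,
        "skewness": skew,
        "min": min(values),
        "max": max(values),
        "range": max(values) - min(values),
    }
```

For the symmetric sample `[1, 2, 3, 4, 5]` this sketch gives a skewness of zero (up to rounding) and an excess kurtosis of −1.2, the flat-topped shape expected for evenly spaced values.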

| Model | Parameter | Option/value |
|---|---|---|
| Stand-Alone Algorithms | | |
| REG | Regularisation wt. (L2) | 0.001 |
| REG | Method | Ordinary least squares (OLS) |
| LR | Optimisation tolerance | 1 × 10⁻⁷ |
| LR | Regularisation wt. (L1 & L2) | 1.00 |
| BLR | Regularisation wt. (L2) | 1.00 |
| ANN | Normaliser | min-max |
| ANN | No. of hidden nodes | 100 |
| ANN | No. of learning iterations | 100 |
| ANN | Hidden layer spec. | Full connection |
| Tree Ensembles | | |
| Boosted decision tree (BDT) | Constructed trees | 100 |
| BDT | Tree-forming training instances | 10 |
| BDT | Leaves/tree (max.) | 20 |
| Random decision forest (RDF) | Tree depth (max.) | 32 |
| RDF | Constructed trees | 8 |
| RDF | Method of resampling | Bagging |
| RDF | Samples/leaf node (min.) | 1 |
| RDF | Randomised splits/node | 128 |
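The "Bagging" resampling listed for RDF can be sketched as bootstrap aggregation over simple base learners. This is a simplification under stated assumptions: each base learner is a single-split regression stump on one feature rather than a full decision tree, the randomised split selection of RDF is not modelled, and `n_models=8` merely mirrors the number of constructed trees in the table above. All names are hypothetical.

```python
import random
from statistics import mean
from typing import Callable, List, Optional, Sequence, Tuple

Predictor = Callable[[float], float]


def fit_stump(x: Sequence[float], y: Sequence[float]) -> Predictor:
    """One-split regression stump minimising squared error (stand-in for a full tree)."""
    pairs = sorted(zip(x, y))
    best: Optional[Tuple[float, float, float, float]] = None  # (sse, threshold, left, right)
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate equal feature values
        left = [v for _, v in pairs[:i]]
        right = [v for _, v in pairs[i:]]
        lm, rm = mean(left), mean(right)
        sse = sum((v - lm) ** 2 for v in left) + sum((v - rm) ** 2 for v in right)
        if best is None or sse < best[0]:
            best = (sse, (pairs[i - 1][0] + pairs[i][0]) / 2, lm, rm)
    if best is None:  # all sampled feature values identical: predict the mean
        constant = mean(y)
        return lambda q: constant
    _, threshold, lm, rm = best
    return lambda q: lm if q <= threshold else rm


def fit_bagged_stumps(x: Sequence[float], y: Sequence[float],
                      n_models: int = 8, seed: int = 0) -> Predictor:
    """Bagging: fit each base learner on a bootstrap sample, then average their outputs."""
    rng = random.Random(seed)
    n = len(x)
    models: List[Predictor] = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # n draws with replacement
        models.append(fit_stump([x[i] for i in idx], [y[i] for i in idx]))
    return lambda q: mean(m(q) for m in models)
```

Because each bootstrap sample omits some observations and repeats others, the individual stumps disagree slightly; averaging them reduces the variance of the combined prediction, which is the effect credited to bagging in the conclusions.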

| Model | R2 | RMSE (%) | MAE (%) |
|---|---|---|---|
| REG | 0.67 | 0.73 | 0.54 |
| ANN | 0.59 | 0.81 | 0.61 |
| BLR | 0.19 | 1.14 | 1.02 |
| BDT | 0.82 | 0.53 | 0.44 |
| RDF | 0.86 | 0.48 | 0.38 |
| SE | 0.93 | 0.34 | 0.29 |
| VE | 0.93 | 0.33 | 0.26 |
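The three regression metrics in the table above are sketched below using their standard definitions: R2 = 1 − SS_res/SS_tot, RMSE = √(SS_res/n) and MAE = mean |y − ŷ|. The source does not give the formulas, so these definitions, and the helper name `regression_metrics`, are assumptions.

```python
import math
from typing import Sequence, Tuple


def regression_metrics(y_true: Sequence[float],
                       y_pred: Sequence[float]) -> Tuple[float, float, float]:
    """Return (R2, RMSE, MAE) for paired observed and predicted values."""
    n = len(y_true)
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    mean_true = sum(y_true) / n
    ss_res = sum(r * r for r in residuals)                  # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)      # total sum of squares
    r2 = 1.0 - ss_res / ss_tot                              # coefficient of determination
    rmse = math.sqrt(ss_res / n)                            # penalises large errors more
    mae = sum(abs(r) for r in residuals) / n                # average absolute error
    return r2, rmse, mae
```

For observations `[1, 2, 3, 4]` and predictions `[1.5, 2, 2.5, 4]` the sketch gives R2 = 0.9, RMSE ≈ 0.354 and MAE = 0.25. RMSE is always at least as large as MAE, and the gap widens when a few predictions are far off, which is why both are usually reported together.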

| Model | CV Method | Set | No. of Folds | R2 | RMSE (%) | MAE (%) |
|---|---|---|---|---|---|---|
| SE | TVS | 20 | - | 0.93 | 0.34 | 0.29 |
| SE | TVS | 10 | - | 0.67 | 0.66 | 0.62 |
| SE | KFCV | - | 5 | 0.88 | 0.44 | 0.33 |
| SE | KFCV | - | 10 | 0.86 | 0.44 | 0.33 |
| SE | MCCV | 10 | 5 | 0.89 | 0.40 | 0.31 |
| SE | MCCV | 20 | 5 | 0.88 | 0.46 | 0.35 |
| SE | MCCV | 10 | 10 | 0.88 | 0.43 | 0.34 |
| SE | MCCV | 20 | 10 | 0.88 | 0.46 | 0.36 |
| VE | TVS | 10 | - | 0.91 | 0.34 | 0.29 |
| VE | TVS | 20 | - | 0.93 | 0.33 | 0.26 |
| VE | KFCV | - | 5 | 0.88 | 0.43 | 0.32 |
| VE | KFCV | - | 10 | 0.86 | 0.43 | 0.32 |
| VE | MCCV | 10 | 5 | 0.90 | 0.40 | 0.32 |
| VE | MCCV | 20 | 5 | 0.88 | 0.45 | 0.35 |
| VE | MCCV | 10 | 10 | 0.87 | 0.44 | 0.35 |
| VE | MCCV | 20 | 10 | 0.88 | 0.46 | 0.36 |
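The difference between the two resampling schemes compared above can be sketched as index generators. In k-fold CV (KFCV) the data are shuffled once and split into k disjoint folds, so every sample is tested exactly once; in Monte Carlo CV (MCCV) each repetition draws a fresh random train/test partition, so test sets may overlap and some samples may never be tested. The meaning of the "Set" column is not defined in this excerpt, so the parameters `test_fraction` and `repeats` are hypothetical stand-ins rather than the authors' settings.

```python
import random
from typing import Iterator, List, Tuple

Split = Tuple[List[int], List[int]]  # (train indices, test indices)


def k_fold_splits(n: int, k: int, seed: int = 0) -> Iterator[Split]:
    """KFCV: shuffle once, cut into k disjoint folds; each fold is the test set once."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


def monte_carlo_splits(n: int, test_fraction: float, repeats: int,
                       seed: int = 0) -> Iterator[Split]:
    """MCCV: every repeat is an independent random partition; test sets may overlap."""
    rng = random.Random(seed)
    n_test = max(1, round(test_fraction * n))
    if n_test >= n:
        raise ValueError("test_fraction leaves no training data")
    for _ in range(repeats):
        idx = list(range(n))
        rng.shuffle(idx)
        yield idx[n_test:], idx[:n_test]
```

A model is then refitted and scored on each split, and the metrics are averaged. MCCV decouples the number of repetitions from the test-set size, at the cost of uneven coverage of individual samples.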

| Model | CV Method | Set | No. of Folds | AUC (Micro) | AUC (Macro) | AUC (Weighted) |
|---|---|---|---|---|---|---|
| SE | TVS | 20 | - | 0.957 | 0.869 | 0.975 |
| SE | TVS | 10 | - | 1.000 | 1.000 | 1.000 |
| SE | KFCV | - | 5 | 0.989 | 0.982 | 0.992 |
| SE | KFCV | - | 10 | 0.982 | 0.986 | 0.992 |
| SE | MCCV | 10 | 5 | 0.861 | 0.813 | 0.833 |
| SE | MCCV | 20 | 5 | 0.890 | 0.843 | 0.911 |
| SE | MCCV | 10 | 10 | 0.923 | 0.887 | 0.904 |
| SE | MCCV | 20 | 10 | 0.946 | 0.929 | 0.946 |

| Model | CV Method | Set | No. of Folds | AUC (Micro) | AUC (Macro) | AUC (Weighted) |
|---|---|---|---|---|---|---|
| VE | TVS | 20 | - | 0.986 | 0.986 | 0.996 |
| VE | TVS | 10 | - | 1.000 | 1.000 | 1.000 |
| VE | KFCV | - | 5 | 0.992 | 0.984 | 0.993 |
| VE | KFCV | - | 10 | 0.991 | 0.996 | 0.998 |
| VE | MCCV | 10 | 5 | 0.950 | 0.964 | 0.973 |
| VE | MCCV | 20 | 5 | 0.974 | 0.969 | 0.984 |
| VE | MCCV | 10 | 10 | 0.966 | 0.976 | 0.979 |
| VE | MCCV | 20 | 10 | 0.983 | 0.982 | 0.990 |
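The micro, macro and weighted AUC values above summarise one-vs-rest ROC curves in different ways. A minimal sketch, assuming the common one-vs-rest definitions (the source does not state how averaging was done): macro averages the per-class AUCs equally, weighted averages them by class support, and micro pools every (sample, class) pair into a single binary problem. AUC is computed here through its rank interpretation rather than by integrating an explicit ROC curve, and the function names are hypothetical.

```python
from typing import Dict, Sequence


def binary_auc(labels: Sequence[bool], scores: Sequence[float]) -> float:
    """AUC = probability a random positive outscores a random negative (ties count 1/2)."""
    pos = [s for is_pos, s in zip(labels, scores) if is_pos]
    neg = [s for is_pos, s in zip(labels, scores) if not is_pos]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


def multiclass_auc(y_true: Sequence[int],
                   proba: Sequence[Sequence[float]]) -> Dict[str, float]:
    """Micro-, macro- and support-weighted one-vs-rest AUC for class labels 0..C-1."""
    n_classes = len(proba[0])
    per_class, support = [], []
    for c in range(n_classes):
        labels = [y == c for y in y_true]  # class c versus all other classes
        per_class.append(binary_auc(labels, [row[c] for row in proba]))
        support.append(sum(labels))
    # Micro: flatten every (sample, class) pair into one binary problem.
    flat_labels = [y == c for y in y_true for c in range(n_classes)]
    flat_scores = [row[c] for row in proba for c in range(n_classes)]
    return {
        "micro": binary_auc(flat_labels, flat_scores),
        "macro": sum(per_class) / n_classes,
        "weighted": sum(a * s for a, s in zip(per_class, support)) / len(y_true),
    }
```

Macro AUC is pulled down by poorly separated minority classes, whereas weighted and micro AUC are dominated by the most frequent classes; this is one reason the three columns can differ for the same model.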

| Model | CV Method | Set (S) | No. of Folds (F) | Accuracy (Overall) | Accuracy (Weighted) | Recall (Micro) | Recall (Macro) | Recall (Weighted) | Precision (Micro) | Precision (Macro) | Precision (Weighted) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| SE | TVS | 20 | - | 0.833 | 0.938 | 0.833 | 0.563 | 0.833 | 0.833 | 0.575 | 0.775 |
| SE | TVS | 10 | - | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 |
| SE | KFCV | - | 5 | 0.924 | 0.972 | 0.924 | 0.758 | 0.924 | 0.924 | 0.787 | 0.908 |
| SE | KFCV | - | 10 | 0.933 | 0.964 | 0.933 | 0.856 | 0.933 | 0.933 | 0.855 | 0.923 |
| SE | MCCV | 10 | 5 | 0.817 | 0.856 | 0.817 | 0.756 | 0.817 | 0.817 | 0.734 | 0.776 |
| SE | MCCV | 20 | 5 | 0.858 | 0.911 | 0.858 | 0.746 | 0.858 | 0.858 | 0.745 | 0.809 |
| SE | MCCV | 10 | 10 | 0.858 | 0.893 | 0.858 | 0.805 | 0.858 | 0.858 | 0.765 | 0.820 |
| SE | MCCV | 20 | 10 | 0.908 | 0.946 | 0.908 | 0.806 | 0.908 | 0.908 | 0.803 | 0.875 |
| VE | TVS | 20 | - | 0.875 | 0.957 | 0.875 | 0.625 | 0.875 | 0.875 | 0.683 | 0.850 |
| VE | TVS | 10 | - | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 |
| VE | KFCV | - | 5 | 0.958 | 0.982 | 0.958 | 0.856 | 0.958 | 0.958 | 0.865 | 0.946 |
| VE | KFCV | - | 10 | 0.941 | 0.975 | 0.941 | 0.861 | 0.941 | 0.941 | 0.859 | 0.928 |
| VE | MCCV | 10 | 5 | 0.917 | 0.936 | 0.917 | 0.865 | 0.917 | 0.917 | 0.883 | 0.922 |
| VE | MCCV | 20 | 5 | 0.925 | 0.969 | 0.925 | 0.796 | 0.925 | 0.925 | 0.803 | 0.901 |
| VE | MCCV | 10 | 10 | 0.900 | 0.935 | 0.900 | 0.824 | 0.900 | 0.900 | 0.816 | 0.877 |
| VE | MCCV | 20 | 10 | 0.921 | 0.964 | 0.921 | 0.781 | 0.921 | 0.921 | 0.778 | 0.897 |
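Precision and recall can be averaged across classes in the same three ways. The sketch below uses the standard definitions, which are an assumption since the source does not state them. Under these definitions, for single-label multi-class data the micro-averaged precision and recall, and the support-weighted recall, all reduce to overall accuracy, which is consistent with the identical values in those columns of the table above. The helper name is hypothetical.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence


def averaged_precision_recall(y_true: Sequence[Hashable],
                              y_pred: Sequence[Hashable]) -> Dict[str, float]:
    """Micro, macro and support-weighted precision and recall for single-label data."""
    classes = sorted(set(y_true) | set(y_pred))
    tp = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # true positives per class
    pred_count = Counter(y_pred)   # predicted positives per class
    true_count = Counter(y_true)   # actual members (support) per class
    precision = {c: tp[c] / pred_count[c] if pred_count[c] else 0.0 for c in classes}
    recall = {c: tp[c] / true_count[c] if true_count[c] else 0.0 for c in classes}
    n = len(y_true)
    micro = sum(tp.values()) / n   # pooled counts: equals overall accuracy here
    return {
        "micro_precision": micro,
        "micro_recall": micro,
        "macro_precision": sum(precision.values()) / len(classes),
        "macro_recall": sum(recall.values()) / len(classes),
        "weighted_precision": sum(precision[c] * true_count[c] for c in classes) / n,
        "weighted_recall": sum(recall[c] * true_count[c] for c in classes) / n,
    }
```

Macro averages treat every class equally, so they expose weak performance on rare classes; this is why the macro columns in the table are the lowest for most rows.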

| Metric | BDT (10 CV) | BDT (5 CV) | RDF (10 CV) | RDF (5 CV) | LR (10 CV) | LR (5 CV) | ANN (10 CV) | ANN (5 CV) | VE (10 CV) | VE (5 CV) | SE (10 CV) | SE (5 CV) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Accuracy | 0.898 | 0.907 | 0.898 | 0.866 | 0.747 | 0.731 | 0.721 | 0.690 | 0.941 | 0.958 | 0.933 | 0.924 |
| Precision | 0.869 | 0.805 | 0.836 | 0.772 | 0.531 | 0.523 | 0.523 | 0.455 | 0.859 | 0.865 | 0.856 | 0.787 |
| Recall | 0.878 | 0.836 | 0.842 | 0.715 | 0.634 | 0.570 | 0.585 | 0.520 | 0.861 | 0.856 | 0.856 | 0.758 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Abbey, S.J.; Eyo, E.U.; Booth, C.A. Using Artificial Intelligence Techniques to Predict Intrinsic Compressibility Characteristic of Clay. Appl. Sci. 2022, 12, 9940. https://doi.org/10.3390/app12199940