OkeyDoggy3D: A Mobile Application for Recognizing Stress-Related Behaviors in Companion Dogs Based on Three-Dimensional Pose Estimation through Deep Learning

Abstract

1. Introduction

- “Related Work” introduces contemporary deep learning-based dog behavior recognition systems and dog disease diagnosis systems and establishes the theoretical understanding of behavioral disorders in dogs;

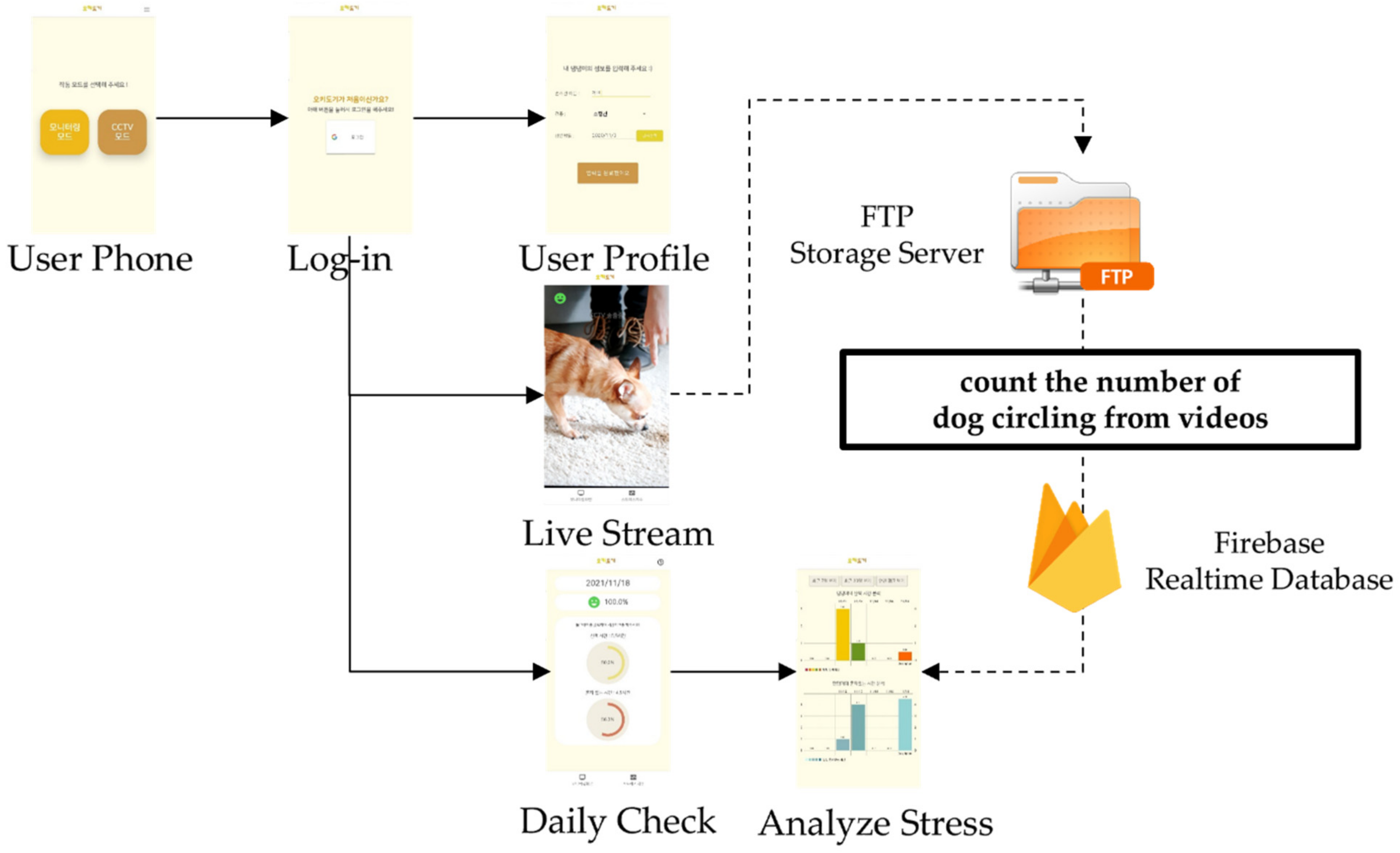

- “Architecture of OkeyDoggy3D” presents the roles and main results of the step-by-step algorithm used to develop this mobile application and provides a structural understanding of the mobile application;

- “Evaluation” discusses the quantitative and qualitative assessments of the developed mobile application;

- “Conclusions and Future Work” summarizes this paper, describes the main findings, and suggests future directions based on the limitations of this study.

2. Related Work

2.1. Canine Behavior Recognition Systems Based on Deep Learning

2.2. Canine Disease Diagnosis Systems Based on Deep Learning

2.3. Behavioral Disorders in Dogs

3. Architecture of OkeyDoggy3D

3.1. Design Concept

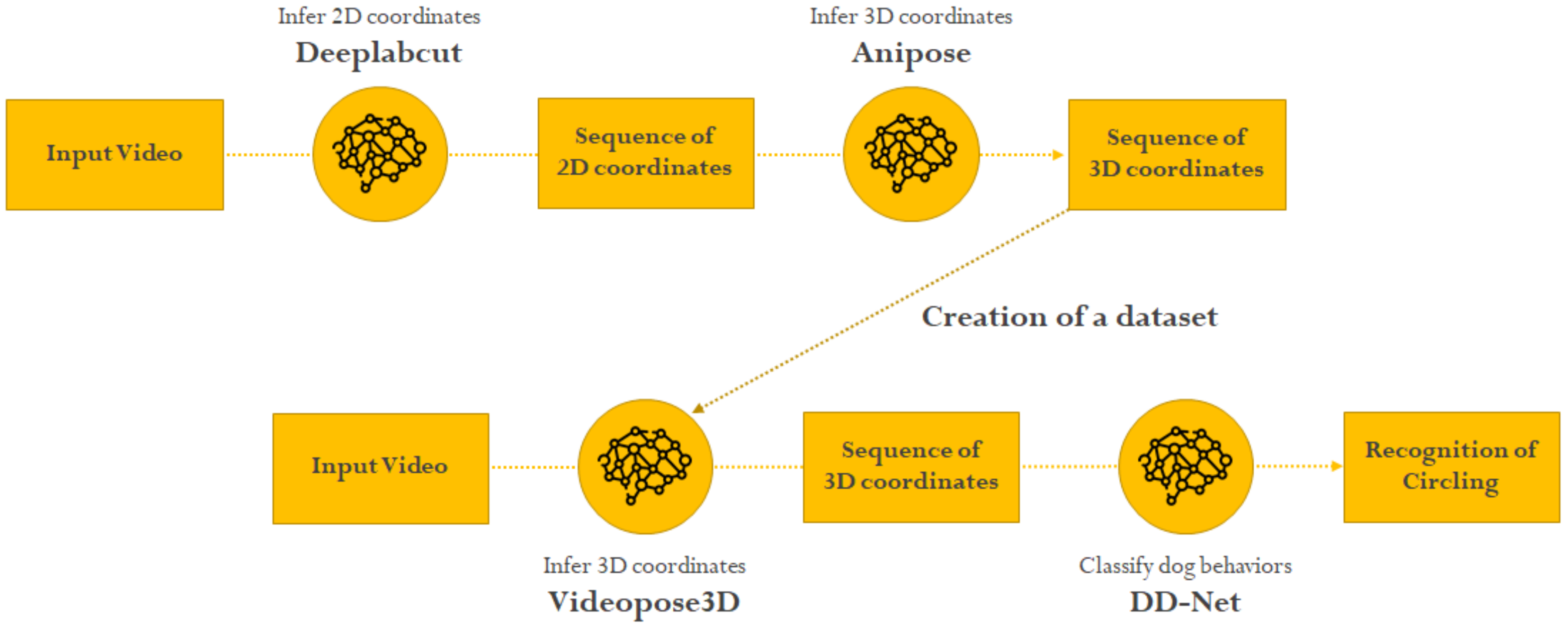

3.2. Creation of the Dataset

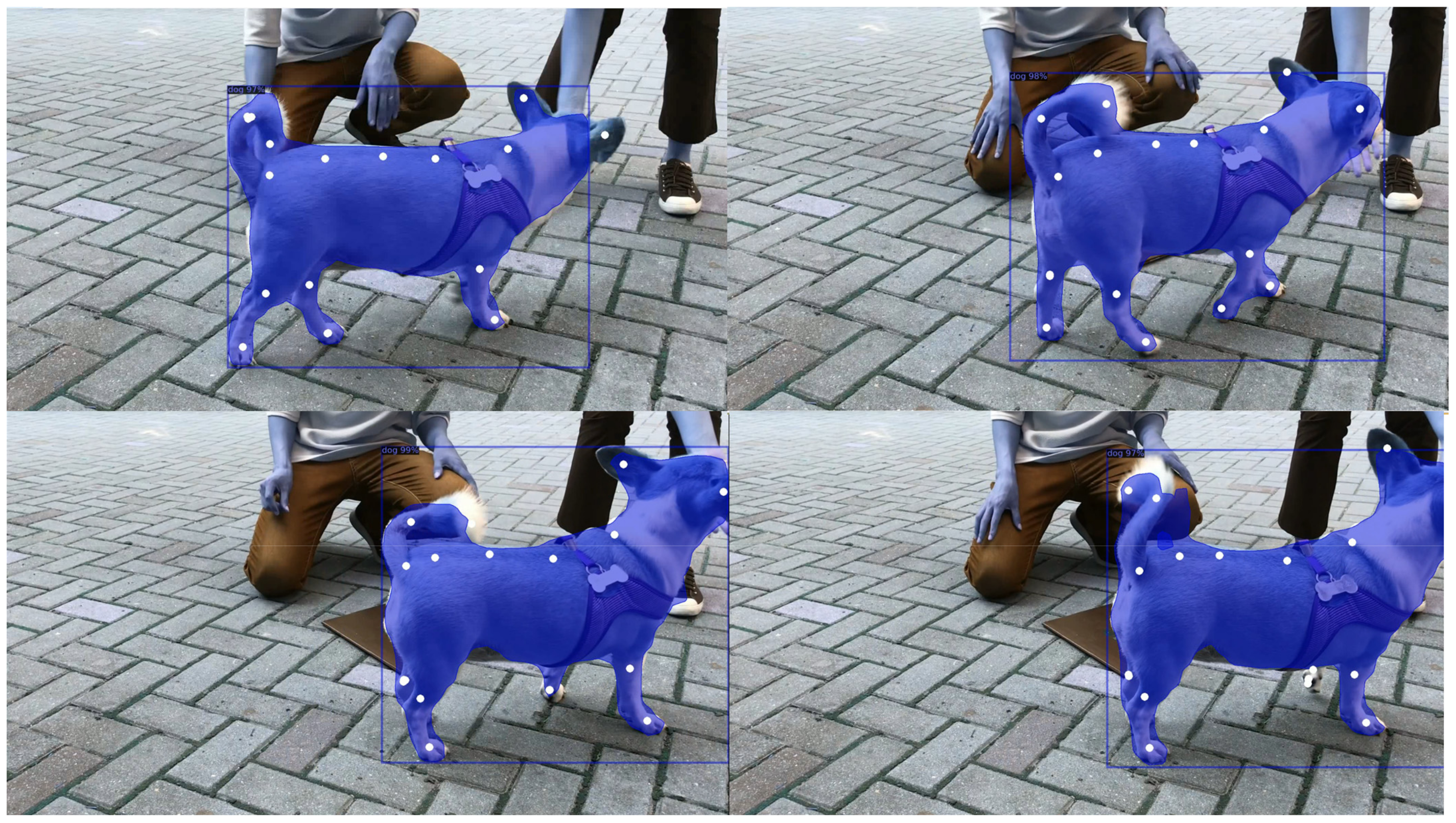

3.2.1. 2D Dog-Pose Estimation

3.2.2. Multi-View 3D Dog-Pose Estimation

3.3. Identification of Problematic Behaviors in Dogs

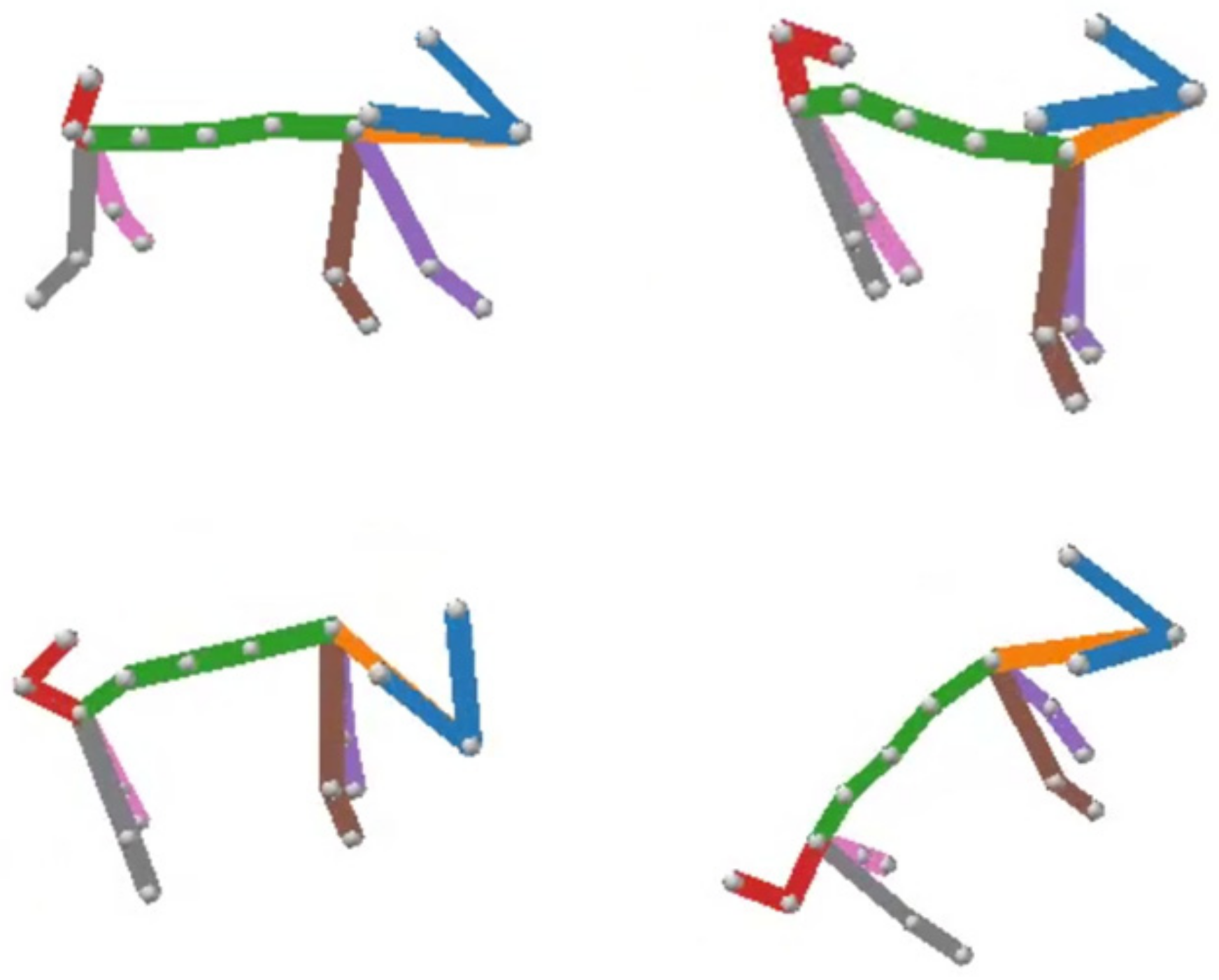

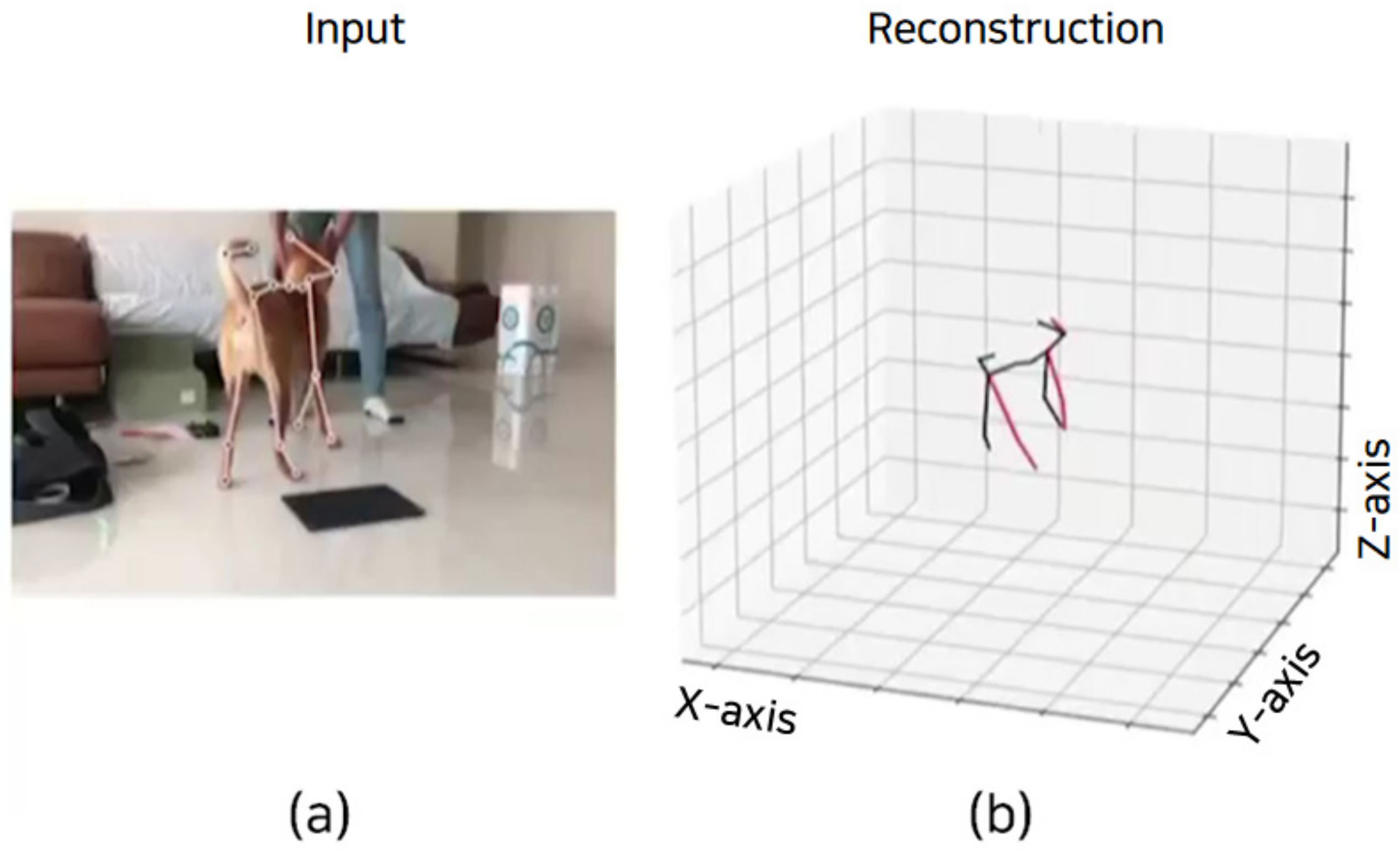

3.3.1. Single-View 3D Dog-Pose Estimation

3.3.2. Recognition of Problematic Behaviors

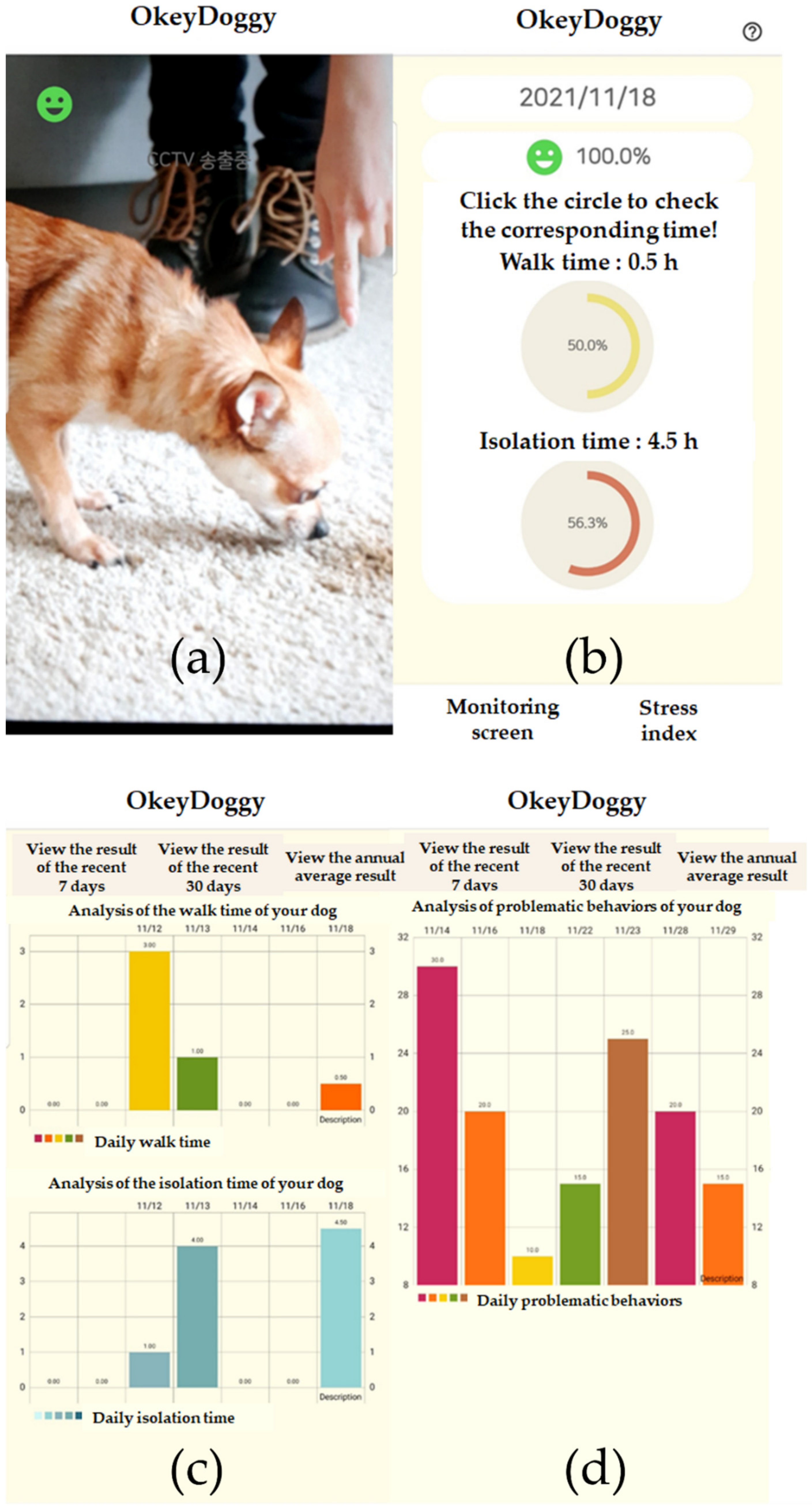

3.4. Prototype of the Mobile App

4. Evaluation

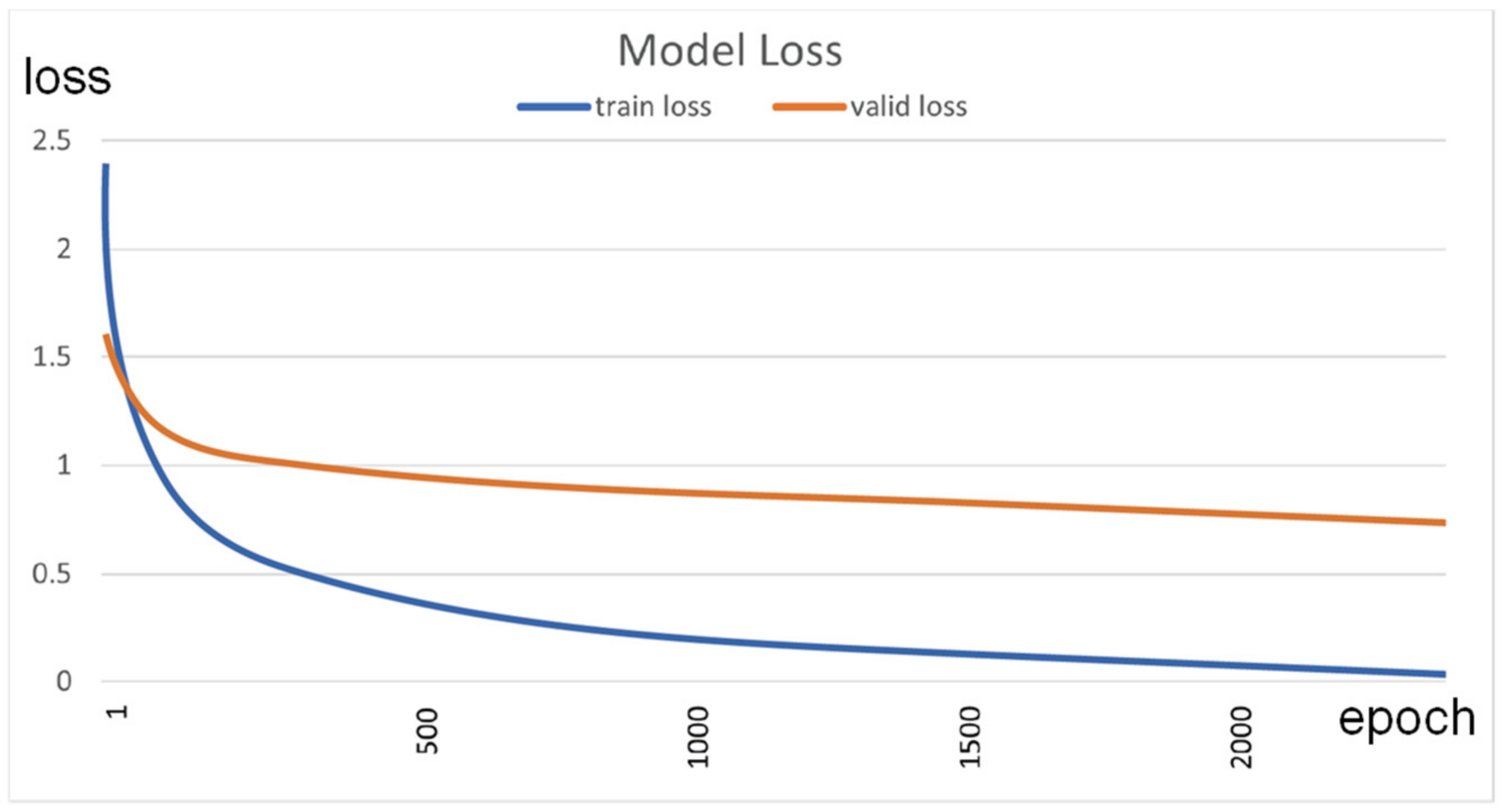

4.1. AI Model Evaluation Result

4.2. Qualitative Evaluation by Experts

4.3. Results of Qualitative Evaluation by Experts

“It appears that the mobile app performs measurement well.”

“It seems that the number of times the dog circles is being measured correctly.”

“I have never seen any mobile apps that can analyze canine behavior. This mobile app is advantageous in that users can be easily informed about the meaning of their dogs’ behaviors.”

“I find it positive that the developed mobile app provides users with the stress index of their dogs. The bar graph (referring to the times that companion dogs spend walking and being left alone) is helpful because users can understand how much affection they devote to their dogs at a glance.”

“It is my first time experiencing a mobile app that can analyze behaviors of companion dogs. Other mobile apps provide only descriptions related to their behaviors. By contrast, this mobile app analyzes their behaviors based on their recorded videos. For this reason, I find this mobile app better than other mobile apps.”

“By simply installing the developed mobile app on their smartphones, users can automatically ascertain the problematic behaviors of their companion dogs. I believe that this function will help users easily identify problematic behaviors of their dogs.”

“The accessibility of the mobile app is high enough to allow me to use this app without inconvenience. I can also search detailed information easily.”

“In general, the developed mobile app operates smoothly. However, when my smartphone was installed at a certain point, this device detected my dog from only a certain angle. For this reason, problematic behaviors of my dog were not analyzed when my dog was not detected. I wish the app could improve this functionality.”

“It is also crucial to analyze whether companion dogs naturally like people or only their owners.”

“I would like to recommend including additional information on the main owner for the companion dog among family members.”

“It is recommended to include information on the amount of time that people spend playing with their dogs at home.”

“I believe that the developed mobile app can analyze the stress of companion dogs more accurately if it detects behaviors and facial expressions of these dogs simultaneously.”

“It will be useful if the mobile app sends friendly messages on the analytic result derived by this app to users when the stress index of their dogs reaches a certain level.”

“It will be appreciated if the developed mobile app provides a function for enabling users to solve quizzes about causes of problematic behaviors of their dogs displayed on this app to increase the awareness of users.”

“I hope that the developed mobile app can provide additional information on basic solutions for users to cope with situations where their dogs exhibit problematic behaviors.”

“It will be useful if the developed mobile app could provide a function for enabling users to record the amount of time that they spent playing with their dogs. I suggest that this app should allow users to identify not only the amount of time that they spend walking their dogs but also the amount of time that they spend playing with their dogs.”

“It is recommended to analyze a relationship between the stress of the dog with not only the dog walk time but also behaviors of the dog shown during the dog walk time.”

“Abandoned dogs might perform circling because of past trauma, and certain companion dogs might perform circling because of SAD.”

“Companion dogs show different tendencies. Some companion dogs might be satisfied with a short walk time, whereas other companion dogs might not be satisfied with even a long walk time.”

“Companion dogs suffering from SAD can exhibit several problematic behaviors, including circling, when they are separated from their owners.”

“When companion dogs are separated alone from their owners, they suffer from considerable stress.”

“If puppies did not learn how to live independently during the period of independently leaving their mother dogs, they will suffer from SAD. I think that highly independent dogs might not be stressed even when they are left alone without their owners.”

“The relationship between companion dogs and their owners and tendencies of these dogs can be regarded as important stress-related environmental information.”

“The amount of time people spend playing with their dogs at home and caring about their dogs is as important as the time they spend walking their dogs.”

“The same behaviors of dogs might have different meanings. The meaning of the same circling behavior can vary according to a minor difference of ears, tails, pupils, and hair.”

“It would be difficult to analyze the stress of companion dogs without considering their tendencies.”

“It is required to inspect situations observed when problematic behaviors of companion dogs occur as well as behaviors that they perform instantly before they exhibit such problematic behaviors.”

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameter | Value |
|---|---|
| Stride | 1 |
| Epochs | 60 |
| batch-size | 1024 |
| Dropout | 0.25 |
| lr (learning rate) | 0.001 |
| lr-decay | 0.95 |
| Architecture | 3, 3, 3, 3, 3 |
| Channels | 1024 |
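To make two of the rows above concrete, the following minimal sketch shows how such values are commonly interpreted in temporal-convolution pose models. It rests on assumptions the table does not state: that "Architecture" lists one temporal filter width per convolutional block, that each block's dilation equals the product of the preceding widths, and that "lr-decay" is a multiplicative factor applied once per epoch. The function names `receptive_field` and `learning_rate` are hypothetical and chosen for illustration only.

```python
def receptive_field(filter_widths):
    """Number of input frames that influence one output pose.

    Assumes block i uses a dilation equal to the product of all
    earlier filter widths (an assumption, not stated in the table).
    """
    frames = 1
    dilation = 1
    for width in filter_widths:
        frames += (width - 1) * dilation
        dilation *= width
    return frames


def learning_rate(initial_lr, decay, epoch):
    """Learning rate at a given epoch (0-based), assuming the decay
    factor is applied multiplicatively once per epoch."""
    return initial_lr * decay ** epoch


if __name__ == "__main__":
    # Values taken from the hyperparameter table.
    print(receptive_field([3, 3, 3, 3, 3]))  # 243
    print(learning_rate(0.001, 0.95, 0))     # 0.001
```

Under these assumptions, five blocks of width 3 cover 243 consecutive frames per predicted pose, and the learning rate shrinks by 5% after every epoch.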

| Category | Value [mm] |
|---|---|
| MPJPE | 75.9 |
| P-MPJPE | 45.6 |
| N-MPJPE | 70.9 |
| MPJVE | 4.11 |
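For readers unfamiliar with the first metric, MPJPE (mean per-joint position error) is conventionally the average Euclidean distance between each predicted 3D joint and its ground-truth position. The sketch below illustrates that standard definition only; it is not the evaluation code used in this study, and the function name `mpjpe` and the toy coordinates are hypothetical. P-MPJPE and N-MPJPE are usually the same quantity computed after rigid alignment and after scale normalization, respectively, and are not reproduced here.

```python
import math


def mpjpe(predicted, target):
    """Mean Euclidean distance between corresponding joints.

    predicted, target: sequences of frames; each frame is a sequence
    of (x, y, z) joint coordinates in the same unit (e.g. mm).
    """
    total = 0.0
    count = 0
    for pred_frame, true_frame in zip(predicted, target):
        for pred_joint, true_joint in zip(pred_frame, true_frame):
            total += math.dist(pred_joint, true_joint)
            count += 1
    if count == 0:
        raise ValueError("no joints to compare")
    return total / count


if __name__ == "__main__":
    # Toy example: one frame, two joints (made-up coordinates).
    pred = [[(0.0, 0.0, 0.0), (3.0, 4.0, 0.0)]]
    true = [[(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]]
    print(mpjpe(pred, true))  # 2.5
```

In the toy example the per-joint errors are 0 and 5, so the mean is 2.5 in whatever unit the coordinates use.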

| Category | Value |
|---|---|
| Training Loss | 0.0344 |
| Training Accuracy | 1.0000 |
| Validation Loss | 0.7443 |
| Validation Accuracy | 0.8182 |

| Sample | Gender | Age | Experience |
|---|---|---|---|
| A | Male | In his 20s | Level 2 dog-training certificate; animal-assisted psychotherapist; pet sitter and handler certificates |
| B | Female | In her 20s | Level 3 dog-training certificate |
| C | Male | In his 20s | Level 3 companion dog-care certificate; Level 3 companion dog-training certificate |
| D | Male | In his 40s | Six years of experience in dog training; companion dog trainer |
| E | Female | In her 30s | Level 3 companion dog-training certificate |

| Order | Task |
|---|---|
| 1 | Run the mobile app, check the live video |
| 2 | Input the walking time and isolation time |
| 3 | Check the stress index |

| Sample | 1 | 2 | 3 |
|---|---|---|---|
| A | 00:15 | 00:23 | 01:02 |
| B | 00:18 | 00:24 | 00:33 |
| C | 00:21 | 00:21 | 00:43 |
| D | 00:15 | 00:17 | 00:50 |
| E | 00:17 | 00:38 | 00:48 |
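The per-participant times above can be summarized as a mean per column. The sketch below is illustrative arithmetic only and makes two assumptions that the table does not state explicitly: that the entries are in `mm:ss` format, and that columns 1 to 3 correspond to the three tasks in the task-order table. The names `to_seconds`, `mean_seconds_per_task`, and `TIMES` are hypothetical.

```python
def to_seconds(mmss):
    """Convert an 'mm:ss' string to a number of seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


# Values copied from the table; keys are the participant labels.
TIMES = {
    "A": ["00:15", "00:23", "01:02"],
    "B": ["00:18", "00:24", "00:33"],
    "C": ["00:21", "00:21", "00:43"],
    "D": ["00:15", "00:17", "00:50"],
    "E": ["00:17", "00:38", "00:48"],
}


def mean_seconds_per_task(times):
    """Average each column over all participants, in seconds."""
    rows = list(times.values())
    n_tasks = len(rows[0])
    return [
        sum(to_seconds(row[i]) for row in rows) / len(rows)
        for i in range(n_tasks)
    ]


if __name__ == "__main__":
    for task, mean in enumerate(mean_seconds_per_task(TIMES), start=1):
        print(f"column {task}: {mean:.1f} s")
```

Under the stated assumptions, the column means come out to about 17.2 s, 24.6 s, and 47.2 s, so the third column takes roughly twice as long as the second.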

| No. | Category | Question |
|---|---|---|
| Q1 | Validity | Were the walk time of the dog and the number of circles it performed related to its stress? |
| Q2 | Validity | Were the isolation time of the dog and the number of circles it performed related to its stress? |
| Q3 | Validity | Was the number of circles taken by the dog measured accurately? |
| Q4 | Validity | What types of stress-related environmental information for dogs should be additionally included? |
| Q5 | Usability | Do you think the proposed mobile app can analyze the stress of the dog? |
| Q6 | Usability | What is the difference between the developed mobile app and other mobile apps? |
| Q7 | Usability | What do you think about the usability of the developed mobile app? |
| Q8 | Usability | What types of functions should be additionally included? |

| Opinions | Category |
|---|---|
| It appears that the mobile app performs measurements well. | Experience |
| It seems that the number of times the dog circles is being measured correctly. | Experience |
| I have never seen any mobile apps that can analyze canine behavior. This mobile app is advantageous in that users can be easily informed about the meaning of their dogs’ behaviors. | Experience |
| I find it positive that the developed mobile app provides users with the stress index of their dogs. The bar graph (referring to the times that companion dogs spend walking and being left alone) is helpful because users can understand how much affection they devote to their dogs at a glance. | Experience |
| It is my first time experiencing a mobile app that can analyze the behaviors of companion dogs. Other mobile apps provide only descriptions related to their behaviors. By contrast, this mobile app analyzes their behaviors based on their recorded videos. For this reason, I find this mobile app better than other mobile apps. | Experience |
| By simply installing the developed mobile app on their smartphones, users can automatically ascertain the problematic behaviors of their companion dogs. I believe that this function will help users easily identify problematic behaviors of their dogs. | Experience |
| The accessibility of the mobile app is high enough to allow me to use this app without inconvenience. I can also search detailed information easily. | Experience |
| In general, the developed mobile app operates smoothly. However, when it was installed on my smartphone, at a certain point, this device detected my dog from only a certain angle. For this reason, the problematic behaviors of my dog were not analyzed when my dog was not detected. I wish the app could improve this functionality. | Experience |
| It is also crucial to analyze whether companion dogs naturally like people or only their owners. | Idea |
| I would like to recommend including additional information on the main owner for the companion dog among family members. | Idea |
| It is recommended to include information on the amount of time that people spend playing with their dogs at home. | Idea |
| I believe that the developed mobile app can analyze the stress of companion dogs more accurately if it detects the behaviors and facial expressions of these dogs simultaneously. | Idea |
| It would be useful if the mobile app sent friendly messages on the analytic results derived by this app to users when the stress index of their dogs reaches a certain level. | Idea |
| It will be appreciated if the developed mobile app provides a function for enabling users to solve quizzes about the causes of problematic behaviors of their dogs displayed on this app to increase the awareness of users. | Idea |
| I hope that the developed mobile app can provide additional information on basic solutions for users to cope with situations where their dogs exhibit problematic behaviors. | Idea |
| It would be useful if the developed mobile app could provide a function for enabling users to record the amount of time that they spent playing with their dogs. I suggest that this app should allow users to identify not only the amount of time that they spent walking their dogs but also the amount of time that they spent playing with their dogs. | Idea |
| It is recommended to analyze a relationship between the stress of the dog with not only the dog walk time but also the behaviors of the dog shown during the dog walk time. | Relevance |
| Abandoned dogs might perform circling because of past trauma, and certain companion dogs might perform circling because of SAD. | Relevance |
| Companion dogs show different tendencies. Some companion dogs might be satisfied with a short walk time, whereas other companion dogs might not be satisfied with even a long walk time. | Relevance |
| Companion dogs suffering from SAD can exhibit several problematic behaviors, including circling, when they are separated from their owners. | Relevance |
| When companion dogs are isolated from their owners, they suffer from considerable stress. | Relevance |
| If puppies did not learn how to live independently during the period of independently leaving their mother dogs, they will suffer from SAD. I think that highly independent dogs might not be stressed even when they are left alone without their owners. | Relevance |
| The relationship between companion dogs and their owners and the tendencies of these dogs can be regarded as important stress-related environmental information. | Relevance |
| The amount of time people spend playing with their dogs at home and caring about their dogs is as important as the time they spend walking their dogs. | Relevance |
| The same behaviors of dogs might have different meanings. The meaning of the same circling behavior can vary according to a minor difference in the ears, tails, pupils, and hair. | Relevance |
| It would be difficult to analyze the stress of companion dogs without considering their tendencies. | Relevance |
| It is required to inspect situations observed when problematic behaviors of companion dogs occur as well as behaviors that they perform instantly before they exhibit such problematic behaviors. | Relevance |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yu, R.; Choi, Y. OkeyDoggy3D: A Mobile Application for Recognizing Stress-Related Behaviors in Companion Dogs Based on Three-Dimensional Pose Estimation through Deep Learning. Appl. Sci. 2022, 12, 8057. https://doi.org/10.3390/app12168057
