A Neural Network-Based Navigation Approach for Autonomous Mobile Robot Systems

Abstract

1. Introduction

- An autonomous mobile robot navigation problem was formulated with a finite number of motion types, obstacle avoidance requirements in 2D space, and no global environmental information.

- An implementation algorithm was provided that describes how to process the collected data and train a neural network model by supervised learning so that it approximates the human decision-making process.

- A simulator was built in Python to model each step of an exemplary mobile robot navigation task with visualized outcomes, and the human decision data were collected in the same navigation scenario within the simulator.

- The proposed algorithm used these human decision data to train a neural network model that achieves high estimation accuracy.

- The trained neural network model was validated in the simulator, where it performed the required task with the desired performance and resolved the real-time computation issue.
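The supervised learning idea in the list above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions, not the authors' implementation: a single softmax layer stands in for the neural network, and the four motion types, the two-element feature vector `(dx, dy)`, and the sample decisions are all hypothetical. The only element taken from the paper is the use of a cross-entropy loss over a finite set of motion types.

```python
import math
import random

# Hypothetical encoding (not from the paper): four motion types and a
# two-element feature vector (dx, dy) pointing from the robot to the goal.
MOTIONS = ["up", "down", "left", "right"]

# Made-up stand-in for recorded human decisions: (features, motion index).
SAMPLES = [
    ([0.0, 1.0], 0), ([0.3, 0.9], 0),
    ([0.0, -1.0], 1), ([-0.2, -0.8], 1),
    ([-1.0, 0.0], 2), ([-0.9, 0.3], 2),
    ([1.0, 0.0], 3), ([0.8, -0.2], 3),
]


def softmax(scores):
    """Turn raw scores into probabilities (shifted by the max for stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, label):
    """Cross-entropy loss for one sample with a single correct label."""
    return -math.log(max(probs[label], 1e-12))


def scores_for(W, b, x):
    """Linear scores, one per motion type."""
    return [sum(w * xi for w, xi in zip(row, x)) + bias for row, bias in zip(W, b)]


def train(samples, n_features, n_motions, epochs=200, lr=0.5, seed=0):
    """Fit a single softmax layer by stochastic gradient descent."""
    rng = random.Random(seed)
    W = [[rng.uniform(-0.1, 0.1) for _ in range(n_features)] for _ in range(n_motions)]
    b = [0.0] * n_motions
    for _ in range(epochs):
        for x, y in samples:
            probs = softmax(scores_for(W, b, x))
            for k in range(n_motions):
                # Gradient of the cross-entropy loss with respect to score k.
                grad = probs[k] - (1.0 if k == y else 0.0)
                for j in range(n_features):
                    W[k][j] -= lr * grad * x[j]
                b[k] -= lr * grad
    return W, b


def predict(W, b, x):
    """Return the index of the most likely motion type."""
    s = scores_for(W, b, x)
    return max(range(len(s)), key=s.__getitem__)


W, b = train(SAMPLES, n_features=2, n_motions=len(MOTIONS))
accuracy = sum(predict(W, b, x) == y for x, y in SAMPLES) / len(SAMPLES)
print(f"training accuracy: {accuracy:.2f}")
print("goal to the right ->", MOTIONS[predict(W, b, [1.0, 0.0])])
```

Once trained, choosing a motion is a single forward pass (`predict`), which is the property that makes this kind of model attractive for real-time use.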

2. Problem Formulation

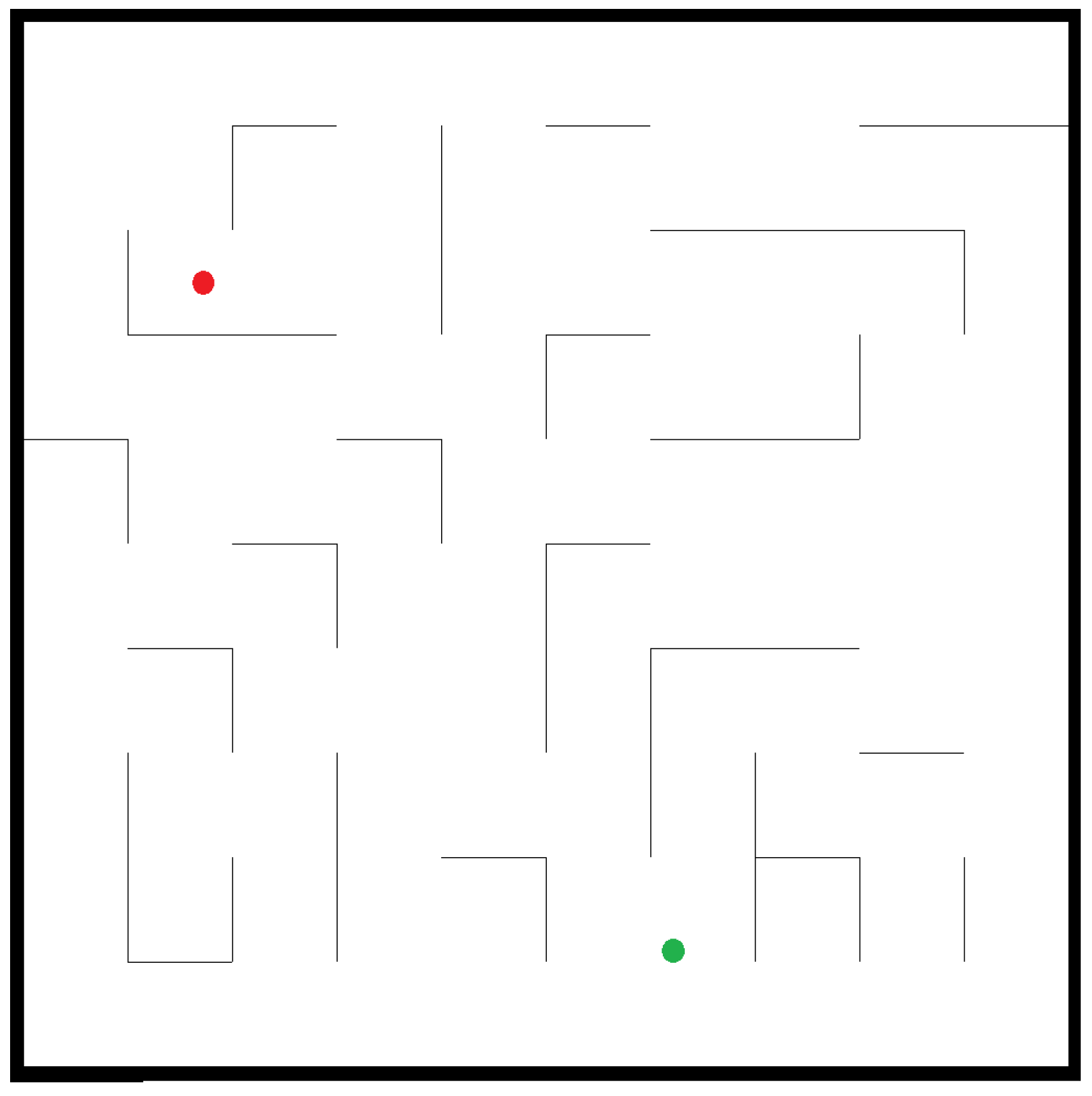

2.1. Navigation Task Description

2.2. Task Design Objective and Problem Definition

3. Process for Training a Neural Network

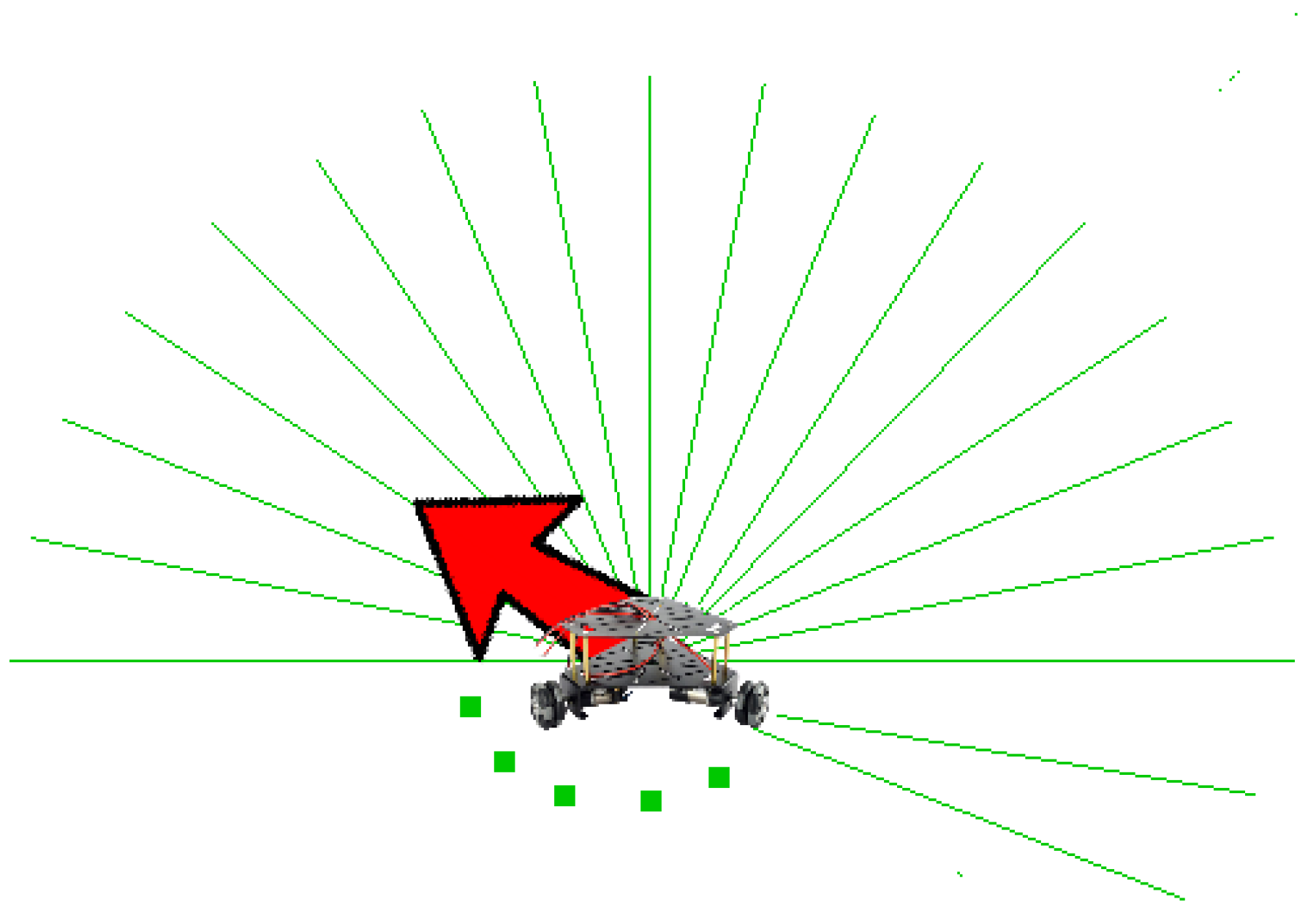

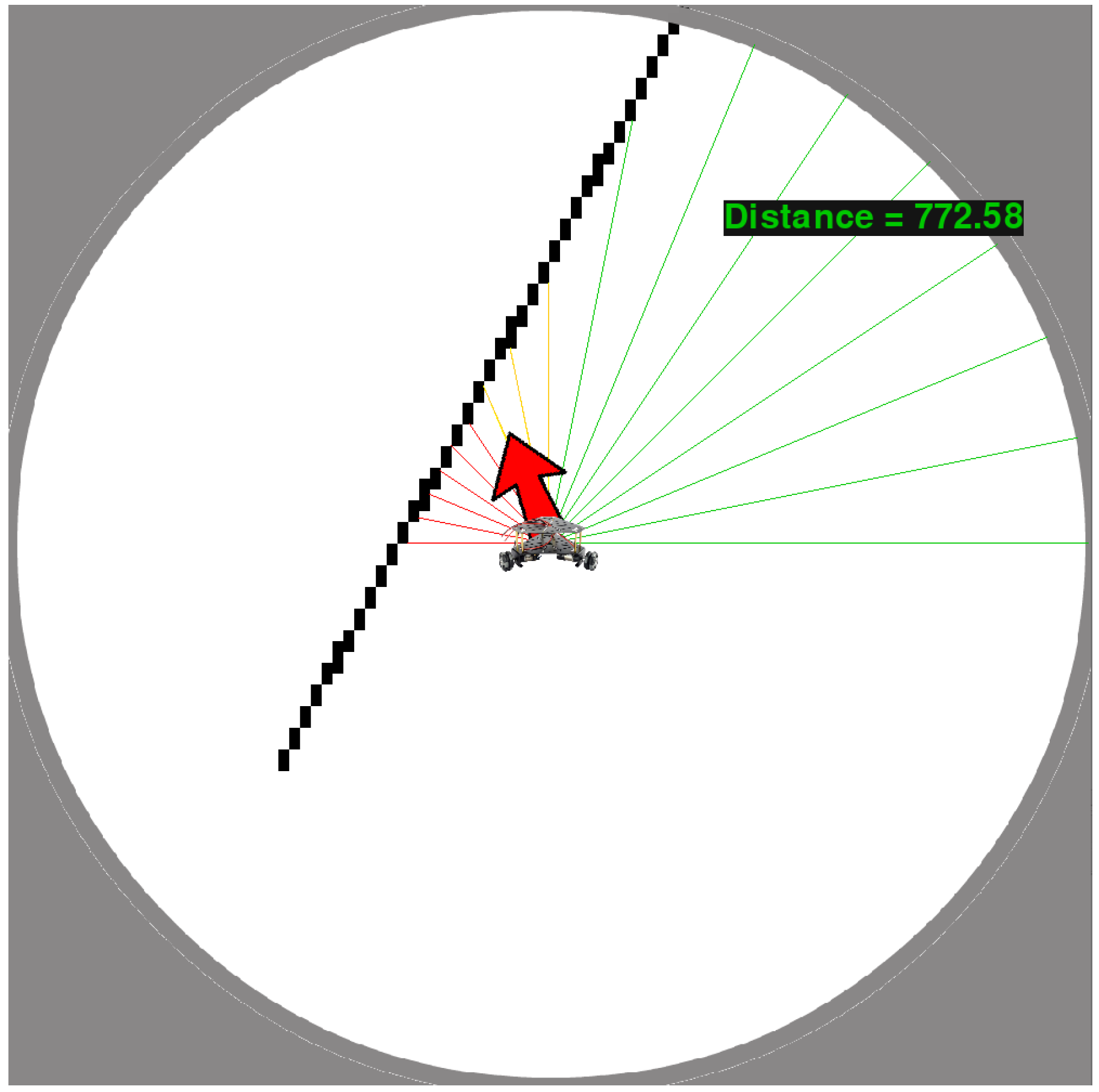

3.1. User Data Collection and Problem Reformulation

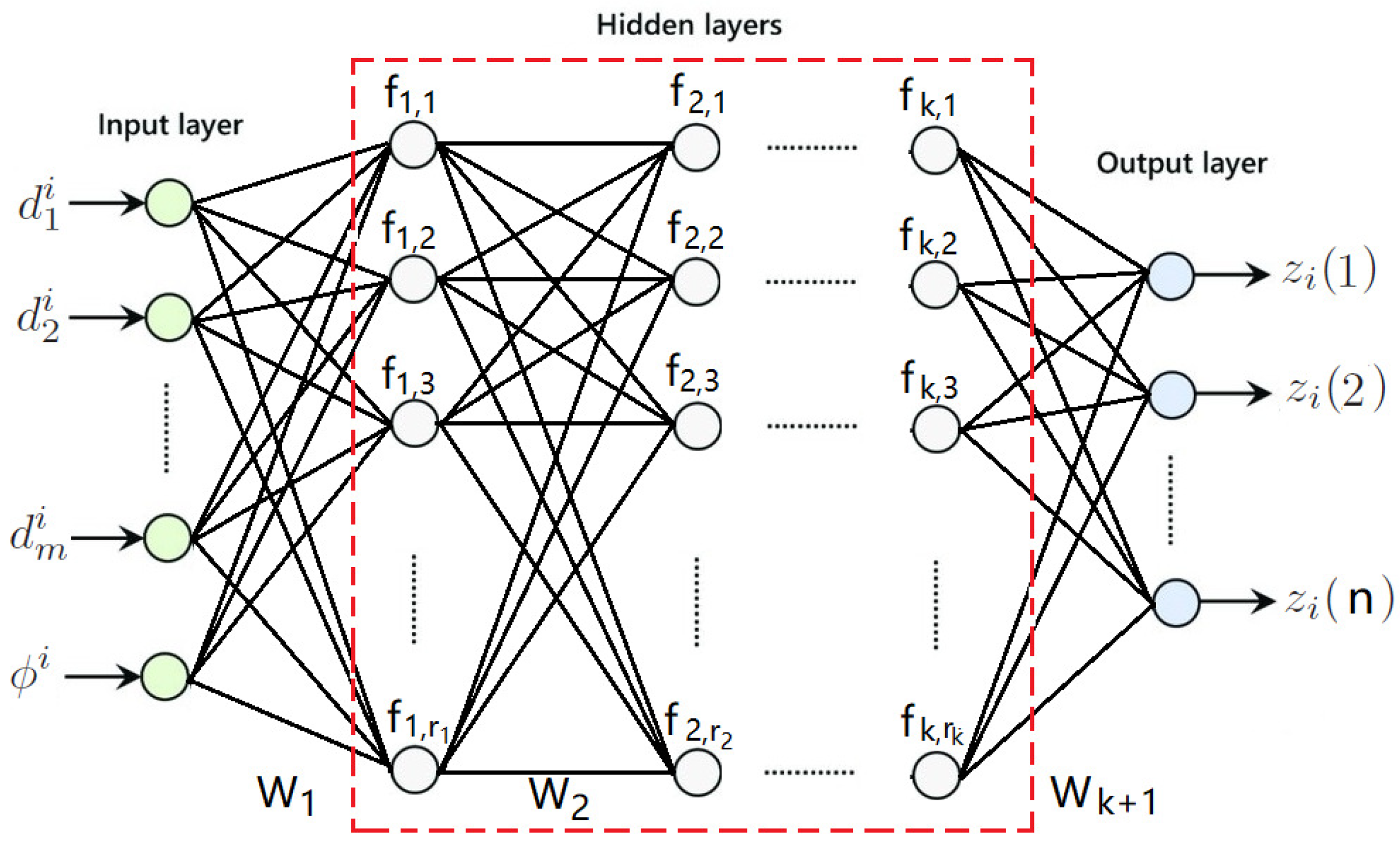

3.2. Neural Network Structure

3.3. A Supervised Learning Algorithm

| Algorithm 1 Training process for neural network model |

4. Mobile Robot Simulator Platform

5. Numerical Simulation Results

5.1. Design Parameter Specifications

5.2. Training Performance Analysis

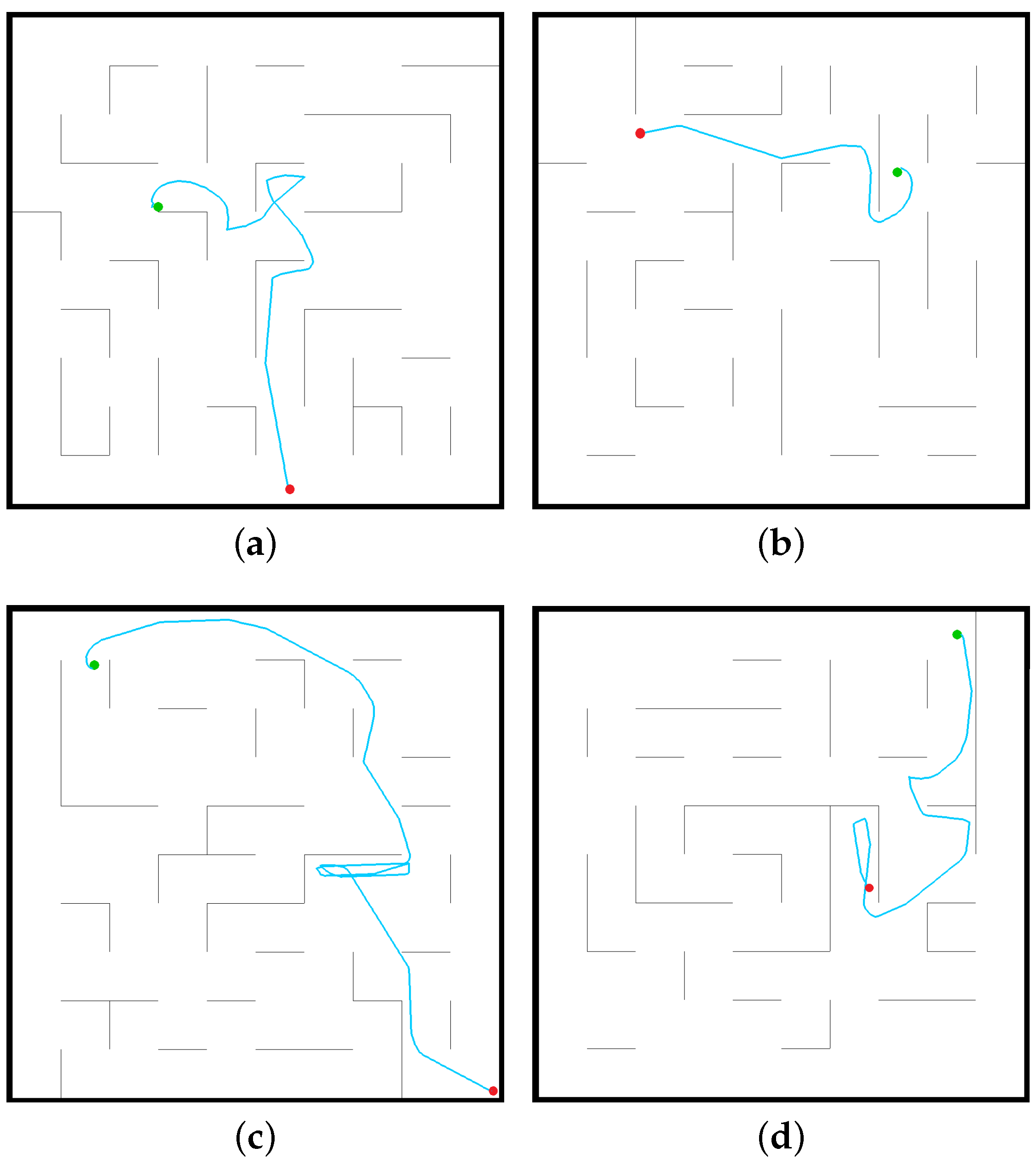

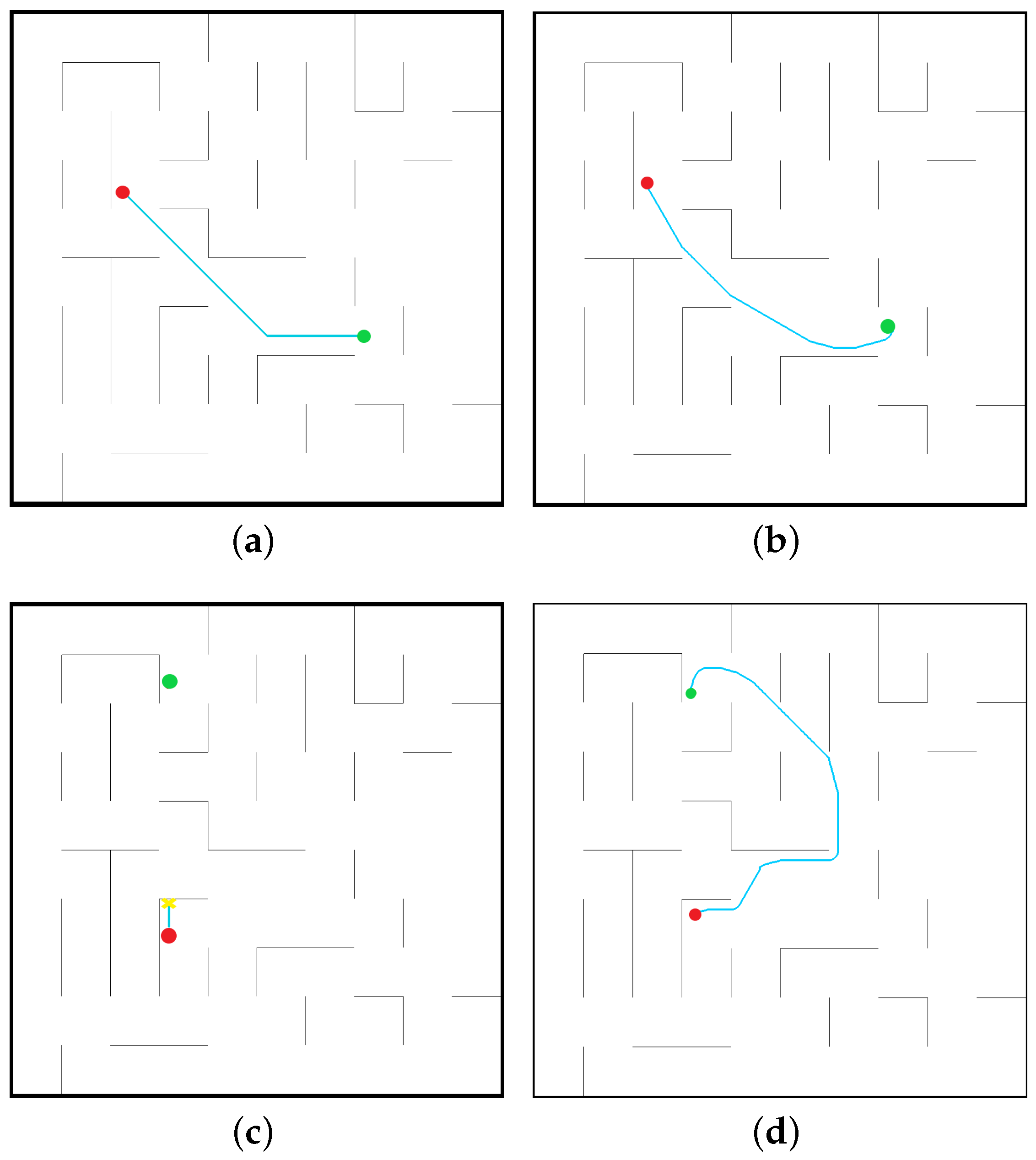

5.3. Verification on the Simulator

5.4. Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviations | Description |
|---|---|
| CUDA | Compute unified device architecture |
| GPU | Graphics processing unit |
| t-SNE | t-Distributed Stochastic Neighbor Embedding |

| Symbols | Description |
|---|---|
| $\mathbb{R}^n$ | is an n-dimensional Euclidean vector space |
| | is a real matrix space |
| | is the i-th motion type of a mobile robot |
| | is the i-th state change function of a mobile robot |
| | is the cross entropy loss function |
| t | is the sample decision-making time index |
| | is the training data set |
| | is the testing data set |

| Number | Consumed Time (s) | | | | | | Mean (s) |
|---|---|---|---|---|---|---|---|
| 1 | 0.00123 | 0.00100 | 0.00100 | 0.00100 | 0.00119 | 0.00100 | 0.00107 |
| 2 | 0.00100 | 0.00099 | 0.00100 | 0.00099 | 0.00100 | 0.00100 | 0.00100 |
| 3 | 0.00100 | 0.00099 | 0.00200 | 0.00100 | 0.00100 | 0.00100 | 0.001165 |
| 4 | 0.00100 | 0.00100 | 0.00100 | 0.00099 | 0.00100 | 0.00100 | 0.00100 |
| 5 | 0.00100 | 0.00100 | 0.00100 | 0.00200 | 0.00099 | 0.00100 | 0.001165 |
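The final value in each row of the timing table is consistent with the arithmetic mean of the six preceding consumed-time values. The short check below recomputes those means from the numbers in the table; the names `ROWS` and `check_row` and the rounding tolerance are illustrative choices, not part of the original work.

```python
from statistics import mean

# Timings copied from the table: six consumed-time values per row (seconds),
# followed by the value listed in the table's last column.
ROWS = {
    1: ([0.00123, 0.00100, 0.00100, 0.00100, 0.00119, 0.00100], 0.00107),
    2: ([0.00100, 0.00099, 0.00100, 0.00099, 0.00100, 0.00100], 0.00100),
    3: ([0.00100, 0.00099, 0.00200, 0.00100, 0.00100, 0.00100], 0.001165),
    4: ([0.00100, 0.00100, 0.00100, 0.00099, 0.00100, 0.00100], 0.00100),
    5: ([0.00100, 0.00100, 0.00100, 0.00200, 0.00099, 0.00100], 0.001165),
}


def check_row(timings, listed, tol=5e-6):
    """True if the listed value matches the mean within rounding tolerance."""
    return abs(mean(timings) - listed) <= tol


for number, (timings, listed) in ROWS.items():
    print(number, f"{mean(timings):.6f}", listed, check_row(timings, listed))
```

Every row averages to roughly one millisecond, with single 2 ms readings accounting for the slightly higher means in rows 3 and 5.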

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, Y.; Cheng, C.; Zhang, Y.; Li, X.; Sun, L. A Neural Network-Based Navigation Approach for Autonomous Mobile Robot Systems. Appl. Sci. 2022, 12, 7796. https://doi.org/10.3390/app12157796
