1. Introduction

Time series forecasting is of interest in many research and application domains, such as energy [1,2,3], economics [4,5], health [6,7], agriculture [8,9], education [10,11], infrastructure [12,13], defense [14], technology [15], hydrology [16,17], and many others. A time series is generally modeled as a stochastic process whose values are observed at consecutive points in time [18]. Time series forecasting is the process of predicting future values of a sequence from its historical data [19]. With increasing digitization and ever larger volumes of historical data, more powerful and cross-platform-compatible forecasting methods are highly desirable [20,21].

Pattern-sequence-based forecasting (PSF) is a univariate time series forecasting method proposed in 2011 [22]. It was developed to predict discrete time series, and it uses clustering to transform a time series into a sequence of labels. Since then, several researchers have proposed modifications to improve it [23,24,25,26], and an implementation in the form of an R package has also been released [27,28]. PSF has been used successfully in various domains, including wind speed [29], solar power [26], water demand [13], electricity prices [30], CO2 emissions [31], and cognitive radio [32].

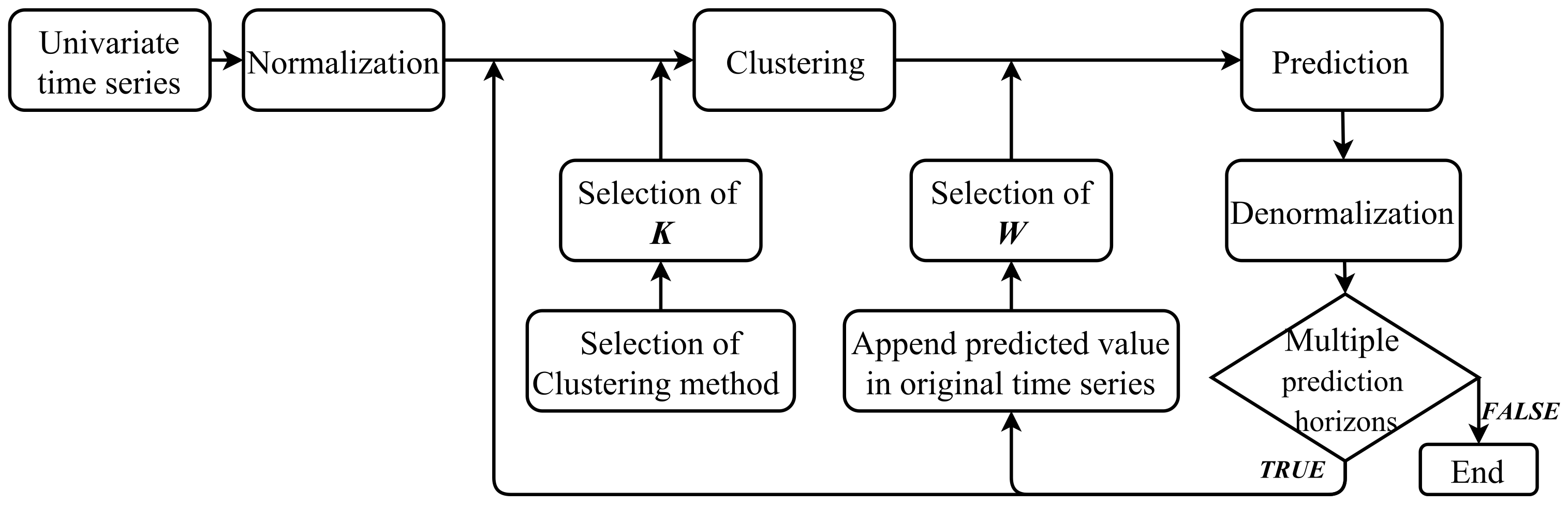

The PSF algorithm consists of several processes, which fall into two broad steps: clustering the data and then, based on the clustered data, performing the forecast. Each predicted value is appended to the end of the original data, and the extended series is used to forecast subsequent values. This makes PSF a closed-loop algorithm, which allows it to predict over longer horizons. PSF can also forecast more than one value at a time, i.e., it handles prediction horizons of arbitrary length. Note that the algorithm was developed specifically for data that contain recurring patterns.

Figure 1 shows the steps involved in the PSF method.

The goal of the clustering step is to discover clusters in the data and label them accordingly. It consists of normalizing the data, selecting the optimal number of clusters, and applying k-means clustering with that number of clusters. Normalization is an important part of any data processing technique. The data are normalized as follows:

$$X'_j = \frac{X_j - \min(X)}{\max(X) - \min(X)} \tag{1}$$

where $X = (X_1, \dots, X_N)$ is the input time series and $X'_j$ denotes the normalized value of $X_j$, for $j = 1, \dots, N$. The k-means clustering technique is used to cluster and label the data. However, k-means requires the number of clusters ($k$) as an input, and the optimum value of $k$ is selected with the silhouette index. The clustering step outputs the time series as a series of labels, which are used for forecasting.
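As a rough illustration of the clustering step, the sketch below applies min-max normalization, cuts the normalized series into consecutive blocks of `cycle` values, and labels the blocks with a plain k-means loop. It is not the PSF_Py source: treating each cycle as one pattern, the helper names (`minmax_normalize`, `denormalize`, `kmeans_labels`), and the hand-written k-means used in place of sklearn's `KMeans` are all assumptions made to keep the example small.

```python
import numpy as np

def minmax_normalize(x):
    """Scale a 1-D series to [0, 1]; also return dmin and dmax for inverting."""
    x = np.asarray(x, dtype=float)
    dmin, dmax = x.min(), x.max()
    return (x - dmin) / (dmax - dmin), dmin, dmax

def denormalize(z, dmin, dmax):
    """Map normalized values back to the original scale."""
    return np.asarray(z, dtype=float) * (dmax - dmin) + dmin

def kmeans_labels(patterns, k, n_iter=100, seed=0):
    """Plain Lloyd's k-means on the rows of `patterns`; returns one label per row."""
    rng = np.random.default_rng(seed)
    centers = patterns[rng.choice(len(patterns), size=k, replace=False)]
    for _ in range(n_iter):
        # Squared Euclidean distance from every pattern to every center.
        dist = ((patterns[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        new_centers = np.array([
            patterns[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels

# Hypothetical toy series: period 4, alternating between a low and a high regime.
series = np.array([1, 2, 3, 2, 9, 8, 9, 8, 1, 2, 3, 2, 9, 8, 9, 8], dtype=float)
z, dmin, dmax = minmax_normalize(series)
cycle = 4
patterns = z.reshape(-1, cycle)        # assumption: one row (pattern) per cycle
labels = kmeans_labels(patterns, k=2)  # low-regime and high-regime cycles get different labels
```

Keeping `dmin` and `dmax` allows forecasts made on the normalized scale to be mapped back to the original units.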

Next, the last $w$ labels are selected from the series of labels produced by the clustering step, and this sequence of $w$ labels is searched for within the series of labels. If the sequence is not found, the search is repeated with the last $w-1$ labels. Selecting the optimum value of $w$ is crucial for accurate predictions. Formally, the optimum window size is the window size that minimizes the forecasting error during training. The error function is given in Equation (2):

$$\sum_{t \in TS} \left\| \hat{X}(t) - X(t) \right\| \tag{2}$$

where $\hat{X}(t)$ is the predicted value at time $t$, $X(t)$ is the measured value at the same time instant, and $TS$ represents the time series under study.
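The label search with its fallback to shorter windows, together with the absolute-error criterion used to compare window sizes, can be sketched as follows. The function names `matching_positions` and `window_error` are hypothetical, and the sketch only locates the matches; turning them into a forecast is a separate step.

```python
def matching_positions(labels, w):
    """Find earlier occurrences of the last w labels.

    Returns (w_used, hits), where hits are start indices i such that
    labels[i:i + w_used] equals the final w_used labels and at least one
    label follows the match. Falls back to w-1, w-2, ... if nothing is found.
    """
    labels = list(labels)
    while w > 0:
        target = labels[-w:]
        # i + w <= len(labels) - 1 guarantees a "next" label after the match.
        hits = [i for i in range(len(labels) - w) if labels[i:i + w] == target]
        if hits:
            return w, hits
        w -= 1
    return 0, []

def window_error(predicted, measured):
    """Sum of absolute errors, used to compare candidate window sizes."""
    return sum(abs(p - m) for p, m in zip(predicted, measured))

labels = [1, 2, 1, 2, 3, 1, 2]
w_used, hits = matching_positions(labels, 2)
next_labels = [labels[i + w_used] for i in hits]   # labels that followed each match
```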

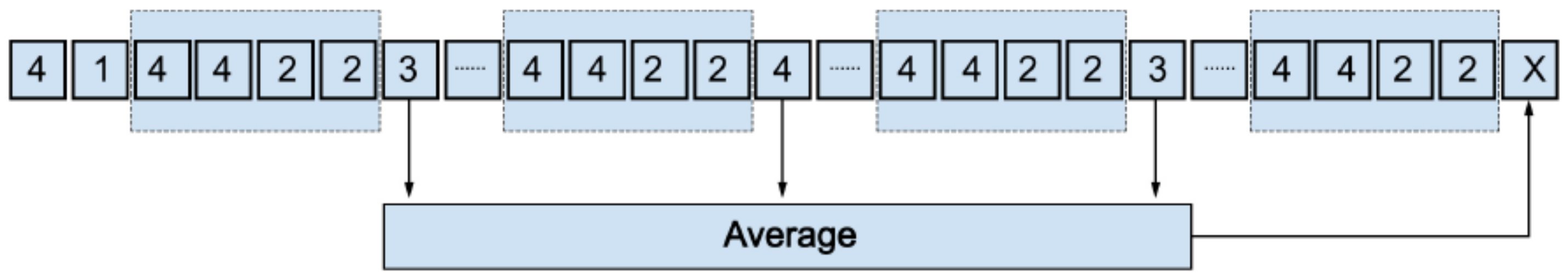

After the optimum window size ($w$) has been selected, the last $w$ labels are searched for in the series of labels, and the labels that immediately follow each occurrence of this sequence are stored in a new vector called $ES$. The data corresponding to these labels are retrieved from the original time series, and the future value is predicted by averaging them, as in Equation (3):

$$\hat{X}(t) = \frac{1}{\mathrm{size}(ES)} \sum_{j=1}^{\mathrm{size}(ES)} ES(j) \tag{3}$$

where $ES(j)$ here denotes the original time-series data corresponding to the $j$-th label stored in $ES$.

This predicted value is appended to the original time series, and the process is repeated to predict the next value, as shown in Figure 2. This allows PSF to make long-term predictions.
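The averaging and closed-loop steps can be sketched in NumPy under two stated assumptions: each label stands for one cycle of values, and a newly predicted cycle is given the label of the nearest existing cluster mean instead of re-running the full clustering on the extended series, which is what the description above implies. `predict_next_cycle` and `forecast` are illustrative names, not PSF_Py functions.

```python
import numpy as np

def predict_next_cycle(cycles, labels, w):
    """Average the cycles that followed earlier occurrences of the last w labels.
    Falls back to w-1, w-2, ... when the sequence is not found."""
    labels = list(labels)
    while w > 0:
        target = labels[-w:]
        hits = [i for i in range(len(labels) - w) if labels[i:i + w] == target]
        if hits:
            # The cycle right after each match plays the role of ES(j).
            following = np.array([cycles[i + w] for i in hits], dtype=float)
            return following.mean(axis=0)
        w -= 1
    # Assumption: with no match at all, fall back to the mean of all cycles.
    return np.mean(np.asarray(cycles, dtype=float), axis=0)

def forecast(cycles, labels, w, n_cycles):
    """Closed-loop forecast of n_cycles cycles; each prediction is appended and reused."""
    cycles = [np.asarray(c, dtype=float) for c in cycles]
    labels = list(labels)
    predicted = []
    for _ in range(n_cycles):
        nxt = predict_next_cycle(cycles, labels, w)
        # Assumption: label the new cycle by its nearest existing cluster mean.
        means = {lab: np.mean([c for c, l in zip(cycles, labels) if l == lab], axis=0)
                 for lab in set(labels)}
        new_label = min(means, key=lambda lab: float(np.sum((means[lab] - nxt) ** 2)))
        cycles.append(nxt)
        labels.append(new_label)
        predicted.append(nxt)
    return np.concatenate(predicted)

# Toy data: two alternating cycle shapes A = [0, 1] and B = [5, 6].
out = forecast([[0, 1], [5, 6]] * 3, [0, 1] * 3, w=2, n_cycles=2)
```

Because each predicted cycle is fed back into the label sequence, the second forecast cycle is found by matching a window that already contains the first prediction.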

The main aim of the present investigation was to develop a new Python package for modeling univariate time series data characterized by natural stochasticity. Such a tool can support monitoring, assessment, and decision making for practitioners who deal with time-series-related problems. Among time series engineering problems, hydrological time series forecasting has recently attracted considerable attention [34,35,36]. Hydrological processes are complex and stochastic, and they require robust techniques to capture their underlying mechanisms. Hence, in this research, several hydrological time series were used to validate the proposed methodology.

2. Difference Pattern-Sequence-Based Forecasting (DPSF) Method

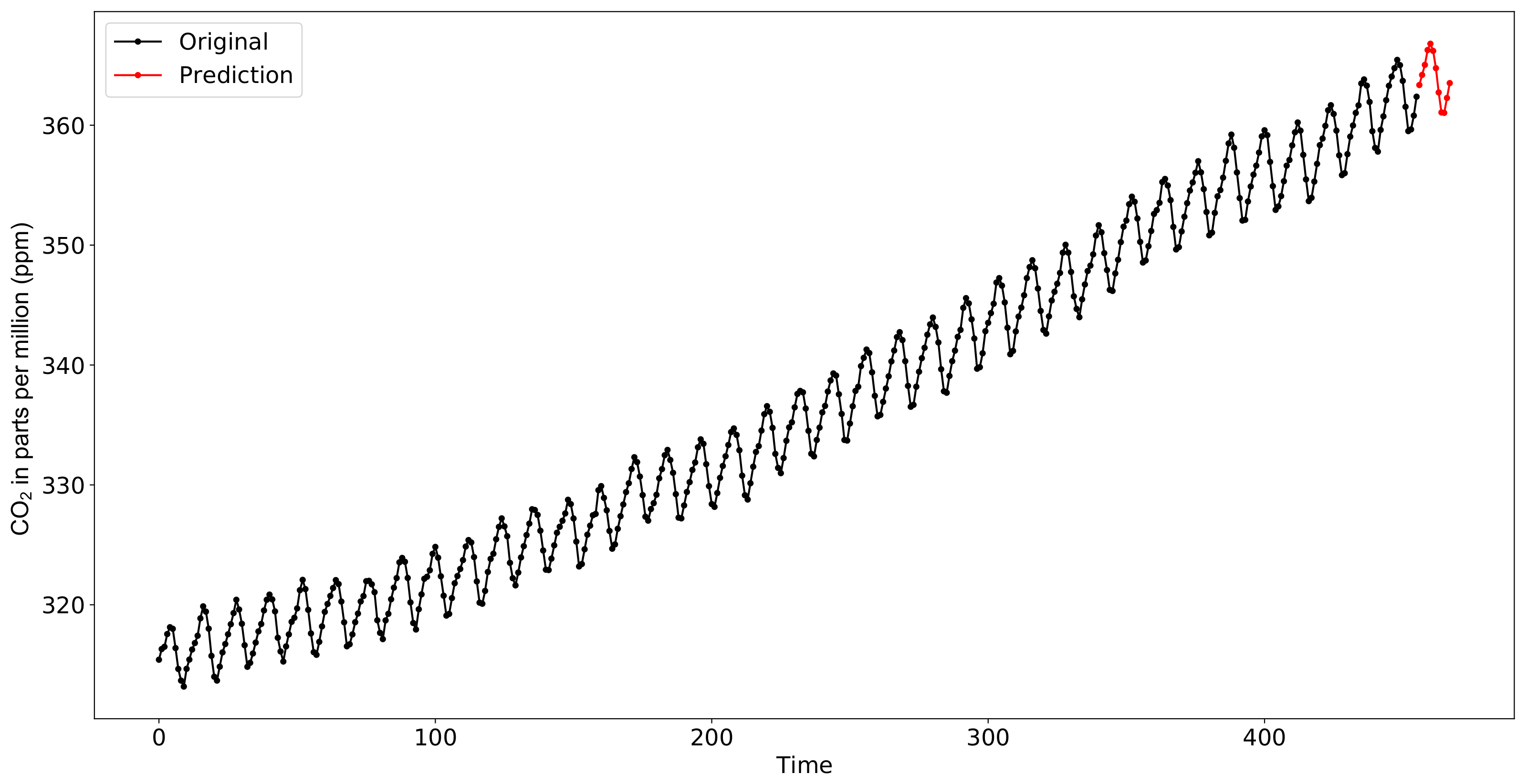

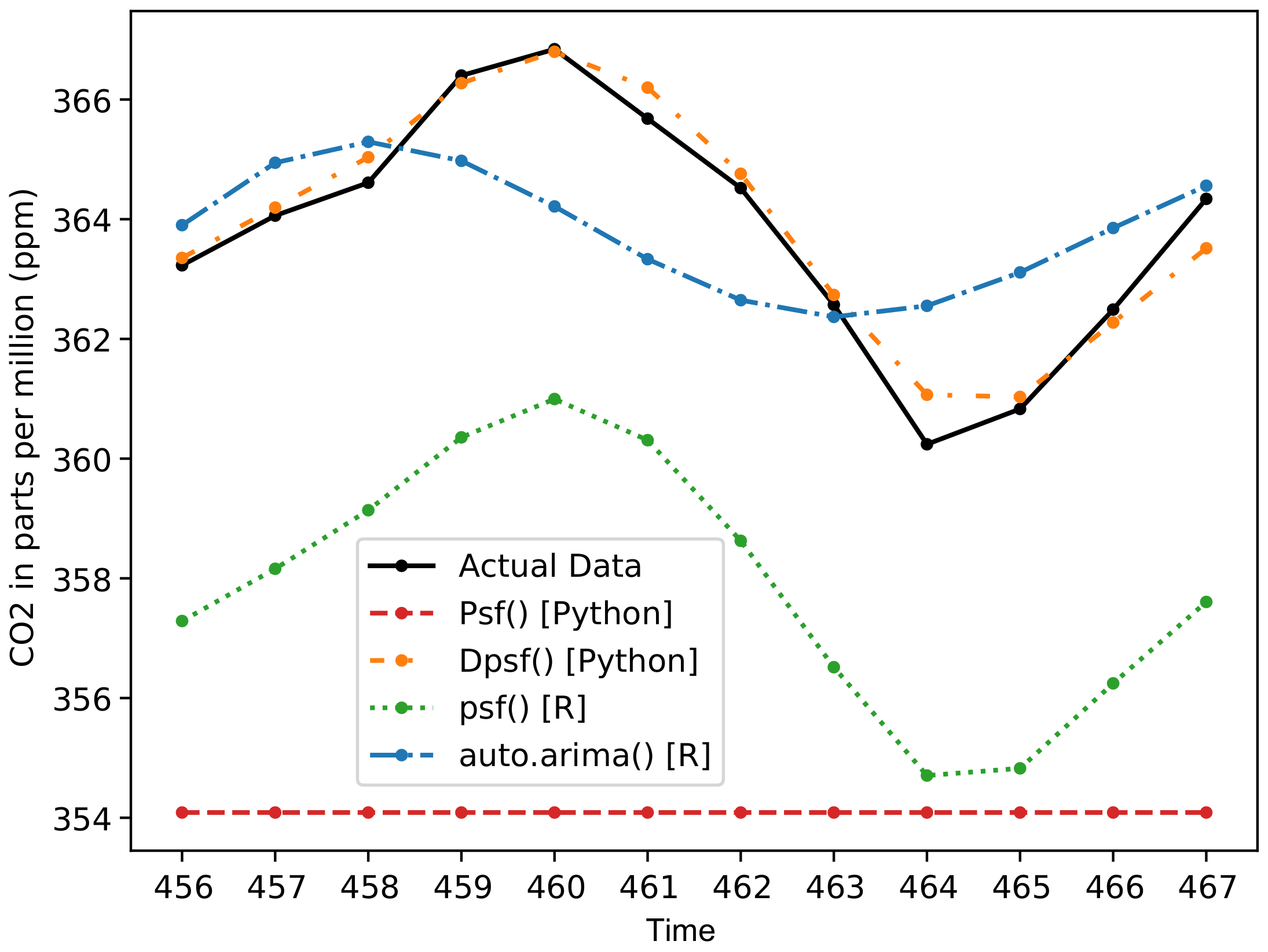

The PSF algorithm was developed specifically to forecast time series that contain patterns or seasonality, for which its prediction error is very small. However, if the time series follows a trend or is not seasonal, the error increases. This can be observed in the illustrative examples in later sections. The “nottem” dataset is strongly seasonal, and the predictions of PSF for it are better than those of ARIMA. In contrast, for the “CO2” dataset, PSF performs worse than ARIMA, because the “CO2” dataset follows an upward trend. PSF forecasts degrade when the series has a positive or negative trend. The DPSF model was proposed to tackle this problem [3].

The DPSF method is a modification of the PSF algorithm. The time series is first differenced once, and the PSF algorithm is applied to the differenced series. The predicted values are appended to the differenced time series. Finally, the forecasts are returned to the original scale by reversing the first-order differencing, i.e., by cumulatively adding the predicted differences to the last observed value.
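A minimal sketch of the DPSF wrapper follows. The PSF forecaster itself is abstracted as a callable `diff_model(d, n_ahead)` (a hypothetical interface), and the usage line plugs in a naive "repeat the mean difference" model purely to keep the example short; the point is the differencing and the cumulative-sum inversion around it.

```python
import numpy as np

def difference(x):
    """First-order differencing: d[t] = x[t+1] - x[t]."""
    return np.diff(np.asarray(x, dtype=float))

def undifference(last_value, diff_forecast):
    """Invert first-order differencing for forecasts that start right after
    `last_value`: the h-th forecast is last_value plus the first h differences."""
    return last_value + np.cumsum(diff_forecast)

def dpsf_forecast(x, n_ahead, diff_model):
    """DPSF skeleton: forecast the differenced series, then integrate back.
    `diff_model(d, n_ahead)` is a hypothetical stand-in for a PSF forecaster."""
    d = difference(x)
    d_hat = np.asarray(diff_model(d, n_ahead), dtype=float)
    return undifference(float(x[-1]), d_hat)

def mean_difference_model(d, n_ahead):
    """Toy model used only for illustration: repeat the average difference."""
    return np.full(n_ahead, d.mean())

trend = [10.0, 12.0, 14.0, 16.0]
print(dpsf_forecast(trend, 3, mean_difference_model))   # [18. 20. 22.]
```

On a purely linear trend the differences are constant, so even this naive model continues the trend, which illustrates why differencing helps a pattern-based forecaster on trending data.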

The DPSF method gives better results for data with positive or negative trends, whereas the PSF method does not work well on such datasets and is better suited to seasonal ones. This can be observed in the examples in Section 4.1 and Section 4.2. The example in Section 4.1 uses a seasonal dataset (nottem), for which the PSF results are better than the DPSF results. Section 4.2 uses the CO2 dataset, which shows a positive trend; there, the DPSF results are significantly better than those of PSF.

3. Description of the Python Package for PSF (PSF_Py)

The proposed Python package for PSF (PSF_Py) is available from the Python Package Index (PyPI), which lists its license, version, and required dependencies [37]. The package can be installed using the command in Listing 1.

Listing 1. Command to install PSF_Py package.

The package makes use of the “pandas”, “numpy”, “matplotlib”, and “sklearn” packages. The various tasks are accomplished by functions such as psf(), predict(), psf_predict(), optimum_k(), optimum_w(), cluster_labels(), neighbour(), psf_model(), and psf_plot(). The code for all the functions is available on GitHub [38]. These functions were made private and are not meant to be accessed directly by the user. Instead, the user creates an object of the class Psf, which takes as inputs the time series, the cycle, the window size (w), and the number of clusters (k) to be formed. The values of k and w are optional; if they are not specified, they are calculated internally using the optimum_k() and optimum_w() functions. Once the PSF model has been created, predictions can be made with the predict() method, which takes as input the number of predictions to make (n_ahead). For the DPSF model, the user uses the class Dpsf instead; the remaining process is the same as for Psf.

After the predictions are made using the predict() method of class Psf, the model can be viewed using the model_print() method. The original time series and predicted values can be plotted with the psf_plot() or dpsf_plot() functions or, alternatively, with “matplotlib” functions.

3.1. optimum_k()

The optimum_k() function calculates the optimum number of clusters for forecasting. PSF uses the k-means algorithm to cluster the data, and this algorithm requires the number of clusters as an input. The function takes as inputs the time series and a tuple of candidate values for k. For each candidate, it performs k-means clustering using KMeans() from the sklearn package, computes the silhouette score using the silhouette_score() function, and returns the value of k with the maximum score.
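The selection rule described for optimum_k() can be reproduced without sklearn, as in the sketch below: cluster for each candidate k, score the result with the silhouette coefficient (for each point, (b - a)/max(a, b), where a is the mean distance to its own cluster and b the mean distance to the nearest other cluster), and keep the best k. The small `kmeans` and `silhouette` helpers are stand-ins written for illustration, since the package itself is described as using sklearn's KMeans() and silhouette_score(); the deterministic initialization is an assumption chosen so the result is reproducible.

```python
import numpy as np

def kmeans(X, k, n_iter=100):
    """Lloyd's k-means on the rows of X with a deterministic initialization."""
    X = np.asarray(X, dtype=float)
    order = np.argsort(X.sum(axis=1))                  # rows ordered by their sum
    centers = X[order[np.linspace(0, len(X) - 1, k).astype(int)]]
    for _ in range(n_iter):
        dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                        for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return labels

def silhouette(X, labels):
    """Mean silhouette coefficient with Euclidean distances (singletons score 0)."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    D = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2))
    scores = []
    for i in range(len(X)):
        same = labels == labels[i]
        if same.sum() == 1:
            scores.append(0.0)
            continue
        a = D[i, same].sum() / (same.sum() - 1)        # mean distance to own cluster
        b = min(D[i, labels == c].mean()                # nearest other cluster
                for c in np.unique(labels) if c != labels[i])
        scores.append(0.0 if max(a, b) == 0 else (b - a) / max(a, b))
    return float(np.mean(scores))

def optimum_k(X, candidates=(2, 3, 4, 5)):
    """Return the candidate k with the highest silhouette score."""
    best_k, best_score = None, -np.inf
    for k in candidates:
        if not 1 < k < len(X):
            continue
        labels = kmeans(X, k)
        if len(np.unique(labels)) < 2:                  # silhouette needs >= 2 clusters
            continue
        score = silhouette(X, labels)
        if score > best_score:
            best_k, best_score = k, score
    return best_k

# One-dimensional data must be shaped as a column (one row per sample).
values = np.array([0.0, 0.1, 0.2, 5.0, 5.1, 5.2, 10.0, 10.1, 10.2]).reshape(-1, 1)
best = optimum_k(values, candidates=(2, 3, 4))
```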

3.2. optimum_w()

The optimum_w() function calculates the optimum window size, a critical parameter for accurate predictions. It performs a cross-validation in which the time series is divided into a training set and a test set: the test set consists of the last cycle of values (as many values as the cycle length), and the training set consists of the remaining values. PSF is applied to the training set to predict one cycle of values, and the error between these predictions and the test set is calculated as the mean absolute error (MAE). The function returns the value of w with the minimum error.

The functions for calculating the optimum window size and for clustering the data may yield different results in R and Python, so the predictions made in R and Python can vary in some cases. Furthermore, the default candidate window sizes in optimum_w() range from 5 to 20 in Python but from 1 to 10 in R. In some cases, the optimum number of clusters was observed to be calculated more accurately in Python. Overall, the predicted values were very similar to those from R.
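The hold-out search performed by optimum_w() can be sketched as follows. The forecaster is passed in as a hypothetical callable `forecaster(train, w, n_ahead)`, the default candidates assume that the Python range of 5 to 20 is inclusive, and the usage line substitutes a trivial "repeat the last w values" forecaster for PSF.

```python
import numpy as np

def optimum_w(series, cycle, forecaster, candidates=range(5, 21)):
    """Hold out the last `cycle` values, forecast them with each candidate
    window size, and return the window with the smallest MAE."""
    series = np.asarray(series, dtype=float)
    train, test = series[:-cycle], series[-cycle:]
    errors = {}
    for w in candidates:
        pred = np.asarray(forecaster(train, w, cycle), dtype=float)
        errors[w] = float(np.mean(np.abs(pred - test)))   # MAE on the held-out cycle
    # Ties are resolved in favor of the first (smallest) candidate.
    return min(errors, key=errors.get)

def repeat_last(train, w, n_ahead):
    """Toy stand-in for PSF: tile the last w training values to length n_ahead."""
    return np.resize(train[-w:], n_ahead)

series = np.tile([1.0, 2.0, 3.0, 4.0], 5)   # period-4 toy series
best_w = optimum_w(series, cycle=4, forecaster=repeat_last, candidates=range(2, 9))
```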

3.3. get_ts()

Several time series are included in the Python package for PSF, namely “nottem”, “AirPassengers”, “Nile”, “morley”, “penguin”, “sunspots”, and “wineind”. Note that the package does not provide the entire data frames (datasets); it provides only a 1D array containing the values of each time series. These can be accessed using the get_ts() function, which takes the name of the time series as input.

3.4. predict()

The predict() method performs the forecasting and returns a numpy array of values predicted by the PSF algorithm (or by the DPSF algorithm, if a DPSF model is used). The actual calculations take place in the psf_predict() function, which was made private and is not intended to be called directly by the user. If the user has not supplied values for k and w, predict() also calculates their optimum values using the optimum_k() and optimum_w() functions described above; if a tuple of values is passed instead of an integer, the optimum k and w are chosen from those values. The data are also normalized within this method.
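The argument handling described for predict() (an integer is used as given, while a tuple, or no value at all, triggers a search) might look like the sketch below. `resolve` and its `search` callback are hypothetical names; in the package, the search would correspond to optimum_k() or optimum_w().

```python
def resolve(value, default_candidates, search):
    """Hypothetical helper: an int is used as given; a tuple (or None, meaning
    "use the defaults") is handed to `search`, which returns the best candidate."""
    if isinstance(value, int):
        return value
    candidates = default_candidates if value is None else tuple(value)
    return search(candidates)

# Toy search that simply prefers the largest candidate.
k = resolve(None, (2, 3, 4, 5), max)       # no value given: search the defaults
w = resolve((8, 12), range(5, 21), max)    # tuple given: search only these values
fixed = resolve(3, (2, 3, 4, 5), max)      # integer given: used unchanged
```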

Some other functions are available to users. The model_print() method prints the original time series, the predicted values, the values of k and w used for prediction, and the cycle of the time series; it returns nothing and only prints the data and parameters. The psf_plot() and dpsf_plot() functions take the model and the predicted values as inputs and plot them using the “matplotlib” package.

4. Demonstration

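Following several established research works in the literature, a new soft-computing methodology should be validated on real time series datasets [39,40,41,42]. The proposed package was examined on six different time series datasets. The performance of the forecasting methods was compared using the root-mean-square error (RMSE), mean absolute error (MAE), mean absolute percentage error (MAPE), and Nash–Sutcliffe efficiency (NSE) [43,44]. These error metrics are defined in Equations (4)–(7), respectively:

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{t=1}^{N}\left(X_t - \hat{X}_t\right)^2} \tag{4}$$

$$\mathrm{MAE} = \frac{1}{N}\sum_{t=1}^{N}\left|X_t - \hat{X}_t\right| \tag{5}$$

$$\mathrm{MAPE} = \frac{100}{N}\sum_{t=1}^{N}\left|\frac{X_t - \hat{X}_t}{X_t}\right| \tag{6}$$

$$\mathrm{NSE} = 1 - \frac{\sum_{t=1}^{N}\left(X_t - \hat{X}_t\right)^2}{\sum_{t=1}^{N}\left(X_t - \bar{X}\right)^2} \tag{7}$$

where $X_t$ and $\hat{X}_t$ are the measured and predicted data at time $t$, $\bar{X}$ is the mean of the measured data, and $N$ is the number of predicted values.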
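Equations (4)–(7) translate directly into NumPy; the sketch below is one straightforward way to compute them and is not taken from the package. MAPE is expressed here in percent and is undefined when any measured value is zero.

```python
import numpy as np

def rmse(obs, pred):
    """Root-mean-square error."""
    obs, pred = np.asarray(obs, float), np.asarray(pred, float)
    return float(np.sqrt(np.mean((obs - pred) ** 2)))

def mae(obs, pred):
    """Mean absolute error."""
    obs, pred = np.asarray(obs, float), np.asarray(pred, float)
    return float(np.mean(np.abs(obs - pred)))

def mape(obs, pred):
    """Mean absolute percentage error, in percent."""
    obs, pred = np.asarray(obs, float), np.asarray(pred, float)
    return float(100 * np.mean(np.abs((obs - pred) / obs)))

def nse(obs, pred):
    """Nash–Sutcliffe efficiency: 1 is a perfect fit, 0 is no better than the mean."""
    obs, pred = np.asarray(obs, float), np.asarray(pred, float)
    return float(1 - np.sum((obs - pred) ** 2) / np.sum((obs - obs.mean()) ** 2))

measured, predicted = [2, 4, 6, 8], [3, 4, 5, 8]
scores = {f.__name__: f(measured, predicted) for f in (rmse, mae, mape, nse)}
```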

For each of the examples demonstrated, the original dataset was divided into training and test data. The number of observations used as test data is stated in each example. Once the forecasted values were calculated, they were compared against the test data.

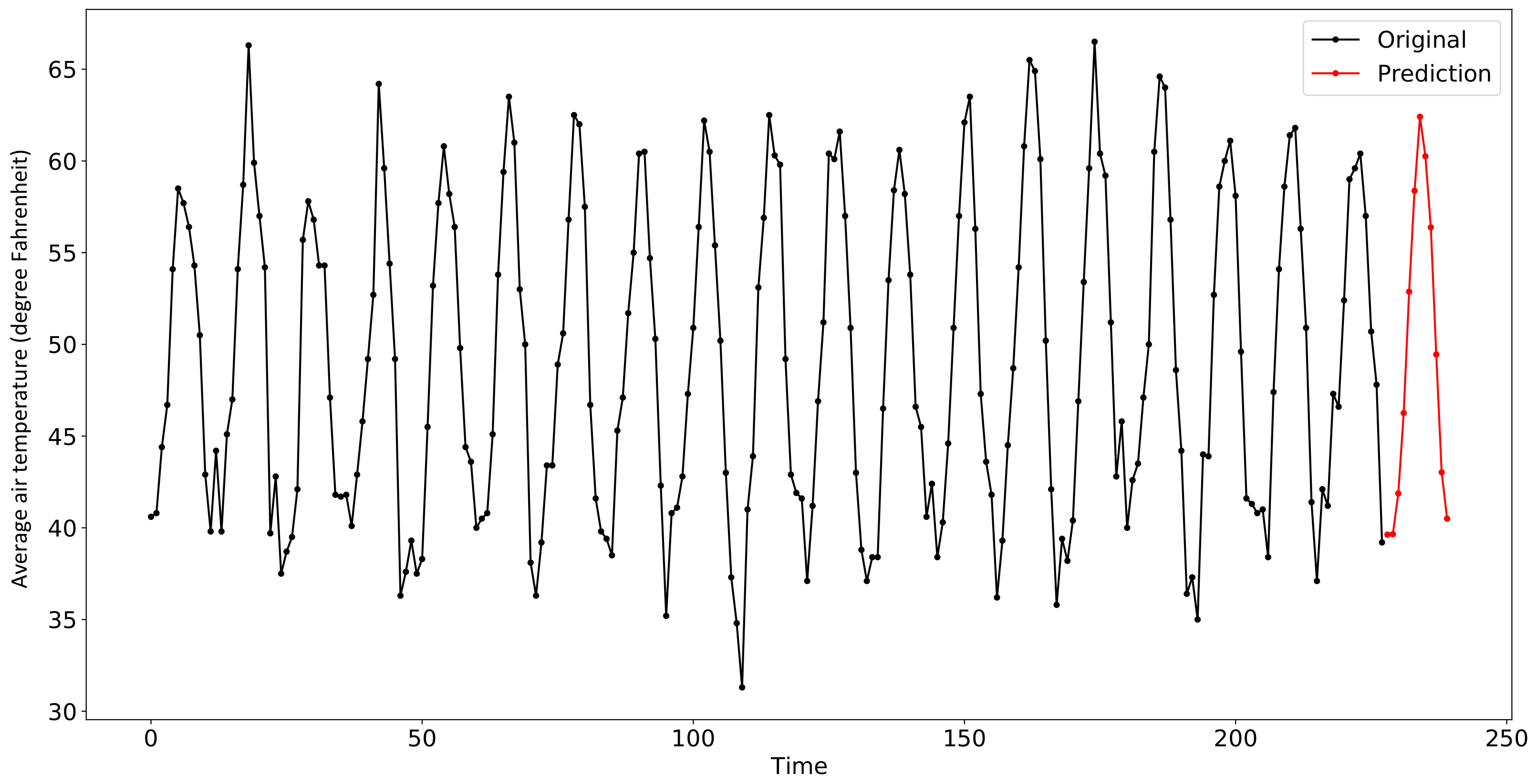

4.1. Example 1: Nottem Dataset

In the following example, the “nottem” time series is used for model training, forecasting, and plotting. It contains the monthly average air temperatures at Nottingham Castle, in degrees Fahrenheit, over 20 years [45]. The procedure is the same for other univariate time series. Table 1 reports the statistical characteristics of the time series.

The package contains the get_ts() function, which can be used to access the univariate time series included in the package, as shown in Listing 2.

Listing 2. get_ts() function to access univariate time series included in the package.

```python
# From the package, import the class Psf and
# the functions get_ts() and psf_plot()
>>> from PSF_Py import Psf, get_ts, psf_plot

# Get the time series 'nottem'
>>> ts = get_ts('nottem')
```

We split the time series into training and test parts: the test part contains the last 12 values of the time series, and the training part contains the remaining data. A Psf model was then created using the training set, as shown in Listing 3.

Listing 3. Command to create a Psf model.

```python
>>> train, test = ts[:len(ts)-12], ts[len(ts)-12:]

# Create a PSF model for prediction
>>> a = Psf(data=train, cycle=12)
```

The model can be printed using the model_print() method, as shown in Listing 4.

Listing 4. Command to print the model.

```python
>>> a.model_print()

Original time-series :
0      40.6
1      40.8
2      44.4
3      46.7
4      54.1
5      58.5
6      57.7
7      56.4
8      54.3
9      50.5
10     42.9
...
219    46.6
220    52.4
221    59.0
222    59.6
223    60.4
224    57.0
225    50.7
226    47.8
227    39.2
Length: 228, dtype: float64
k = 2
w = 12
cycle = 12
dmin = 31.3
dmax = 66.5
type = <class 'PSF_Py.psf.Psf'>
```

Listing 5 then shows how the actual prediction was performed using this PSF model.

Listing 5. Command to predict using PSF model.

```python
# Perform prediction using the predict() method of
# class Psf
>>> b = a.predict(n_ahead=12)
>>> b
array([39.62727273, 39.65454545, 41.87272727,
       46.25454545, 52.87272727, 58.37272727, 62.40909091,
       60.25454545, 56.38, 49.45, 43.02857143, 40.5])
```

where b contains the predicted values.

The model and predictions can be plotted with the psf_plot() function, as shown in Listing 6; the resulting plot is shown in Figure 3.

Listing 6. Command to plot the original and predicted values.

A similar procedure was carried out in R using the PSF library. Several error metrics were calculated between the predicted values and the test set. The performance of the Python and R implementations is compared in Table 2 and Figure 4.

4.2. Example 2: CO2 Dataset

This example demonstrates the use of the DPSF algorithm. The dataset consists of atmospheric concentrations of CO2 expressed in parts per million (ppm) and reported in the preliminary 1997 SIO manometric mole fraction scale [46]. The values for February, March, and April of 1964 were missing and were obtained by linear interpolation between the values for January and May of 1964.
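The gap filling described above, with three missing months between two known values, corresponds to straight-line interpolation. A minimal NumPy sketch follows; `fill_linear` is a hypothetical helper, and the values in the usage line are made up for illustration rather than taken from the CO2 record.

```python
import numpy as np

def fill_linear(values):
    """Replace NaN gaps by straight-line interpolation between known neighbours."""
    y = np.asarray(values, dtype=float).copy()
    idx = np.arange(len(y))
    missing = np.isnan(y)
    y[missing] = np.interp(idx[missing], idx[~missing], y[~missing])
    return y

# Hypothetical January..May values with February to April missing.
filled = fill_linear([10.0, np.nan, np.nan, np.nan, 14.0])
```

Each missing month moves one quarter of the way from the January value to the May value.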

Table 3 contains the statistical characteristics of the time series.

The time series data were divided into training and testing datasets: the testing dataset contained the last 12 values, and the training set contained the remaining data. A Dpsf model was created using the training dataset, and the next 12 values were forecast, as shown in Figure 5. The corresponding commands are shown in Listing 7. These predictions were then compared with the testing dataset; the comparisons are provided in Table 4 and Figure 6.

Listing 7. Command to create and use Dpsf model.

```python
# Import Dpsf, get_ts(), and dpsf_plot()
# from the PSF_Py package
>>> from PSF_Py import Dpsf, dpsf_plot, get_ts

# Load the time series CO2
>>> ts = get_ts('co2')

# Divide the time series into training and testing
>>> train, test = ts[:len(ts)-12], ts[len(ts)-12:]

# Create Dpsf model
>>> a = Dpsf(data=train, cycle=12)

# The created model can be displayed using the
# model_print() method
>>> a.model_print()
Original time-series :
0      315.42
1      316.31
2      316.50
3      317.56
4      318.13
5      318.00
6      316.39
7      314.65
8      313.68
9      313.18
10     314.66
...
448    365.45
449    365.01
450    363.70
451    361.54
452    359.51
453    359.65
454    360.80
455    362.38
Length: 456, dtype: float64
k = 2
w = 18
cycle = 12
dmin = 313.18
dmax = 365.45
type = <class 'PSF_Py.dpsf.Dpsf'>

# Perform prediction using predict() method
>>> b = a.predict(n_ahead=12)
>>> b
array([363.35347826, 364.19434783, 365.03521739,
       366.27304348, 366.79695652, 366.19913043, 364.75913043,
       362.73458498, 361.06708498, 361.03143281, 362.2740415,
       363.51445817])

# Plot the model and predicted values
>>> dpsf_plot(a, b)
```

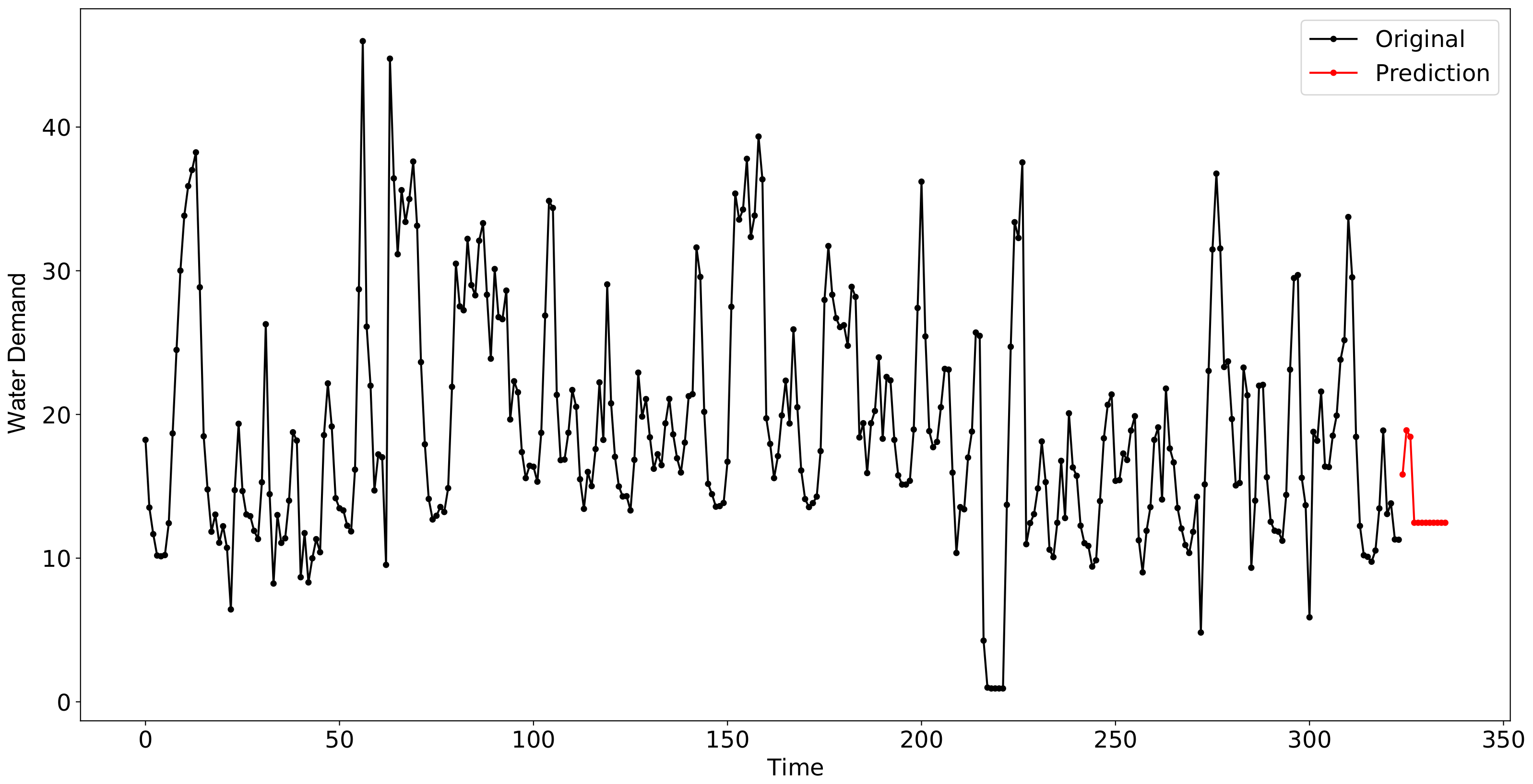

4.3. Example 3: Water Demand Dataset

The PSF and DPSF algorithms were applied to forecast water demand on the given dataset. Investigating such highly complex time series data is important for water management [47]. Table 5 contains the statistical characteristics of the time series.

A comparison of different error metrics is given in Table 6. The error of the Psf() model in Python was found to be lower than that of the other algorithms adopted in this study. The dataset and the predictions of Psf() and Dpsf() are plotted in Figure 7 and Figure 8, and the forecasts of the various methods are compared in Figure 9.

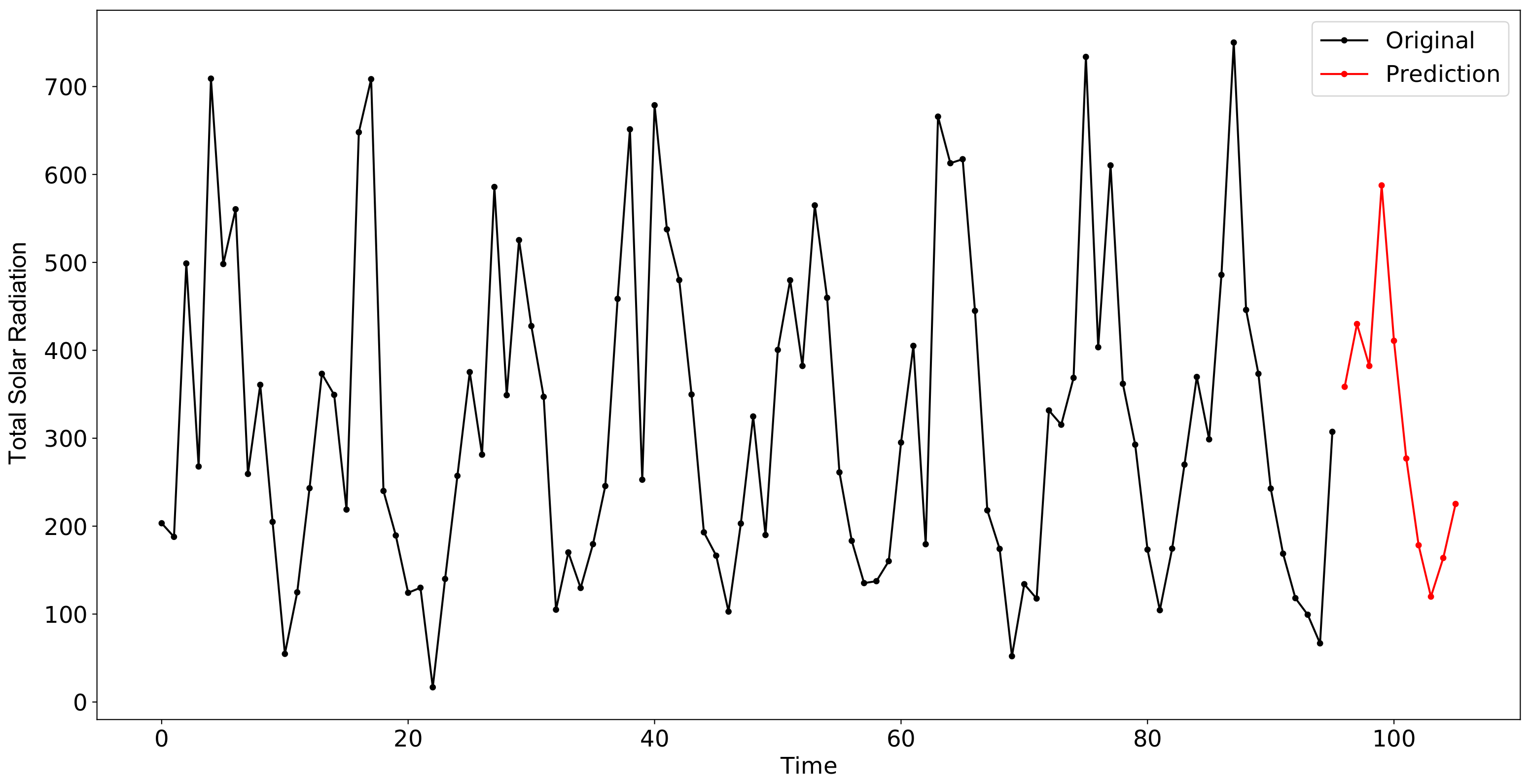

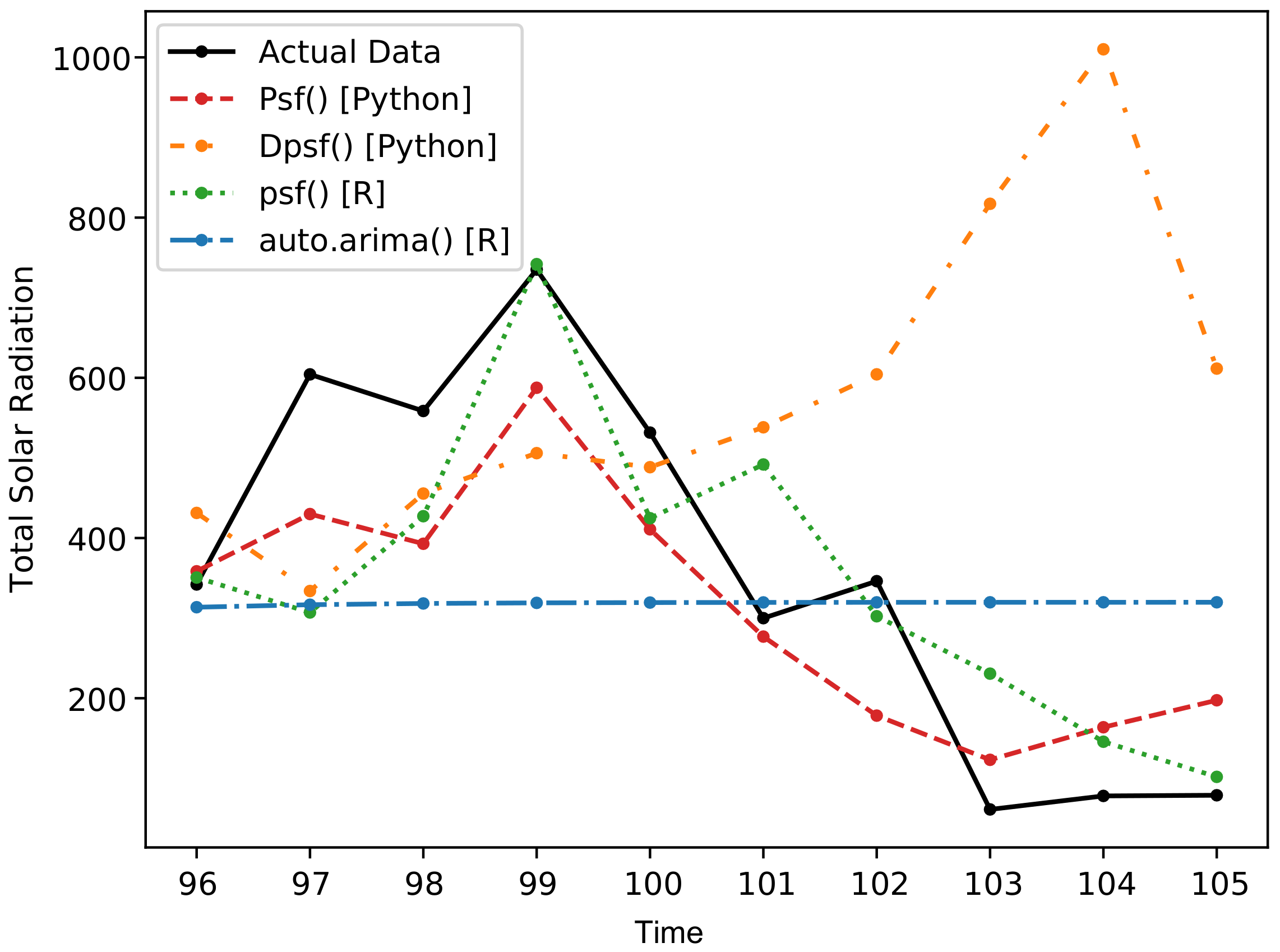

4.4. Example 4: Total Solar Radiation Dataset

Among climatological time series, total solar radiation is one of the essential processes. A robust soft-computing methodology for forecasting solar radiation can contribute substantially to the use of clean, environmentally friendly energy sources [48]. The dataset consists of daily solar radiation readings from 2010 to 2018 at Baker station in North Dakota. Before the algorithms were applied, the dataset was reduced to monthly values by taking the mean of the daily values in each month.
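The monthly aggregation step could be written with the standard library alone, as in the sketch below. The `(date, value)` input format and the `monthly_means` name are assumptions, and missing or invalid daily readings are not handled.

```python
from collections import defaultdict
from datetime import date
from statistics import mean

def monthly_means(daily):
    """Collapse (date, value) pairs into one mean per calendar month, in time order."""
    buckets = defaultdict(list)
    for day, value in daily:
        buckets[(day.year, day.month)].append(value)
    return [(year_month, mean(vals)) for year_month, vals in sorted(buckets.items())]

# Hypothetical readings for illustration only.
readings = [(date(2010, 1, 1), 10.0), (date(2010, 1, 2), 20.0), (date(2010, 2, 1), 5.0)]
monthly = monthly_means(readings)
```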

Table 7 presents the statistical characteristics of the time series, and the performance of the various methods is tabulated in Table 8. The results of psf_plot() and dpsf_plot() for the “Total Solar Radiation” dataset are shown in Figure 10 and Figure 11, and the forecasts of the different methods are compared in Figure 12.

The error of Psf() was significantly lower than those of auto.arima() and Dpsf(); the errors are listed in Table 8.

4.5. Example 5: Average Bare Soil Temperature

Soil temperature is an important variable in geoscience engineering [49]. It has highly nonstationary features owing to the influence of soil morphology, climate, and hydrology [50,51]. Hence, forecasting soil temperature as a time series is useful for multiple geoscience engineering applications [52]. The data were obtained from the same station as in Example 4 (“Baker station”) and cover the same period, “2010–2018”. A modeling procedure similar to that in Section 4.4 was implemented.

Table 9 reports the statistical characteristics of the soil temperature time series, and the performance of various methods are tabulated in

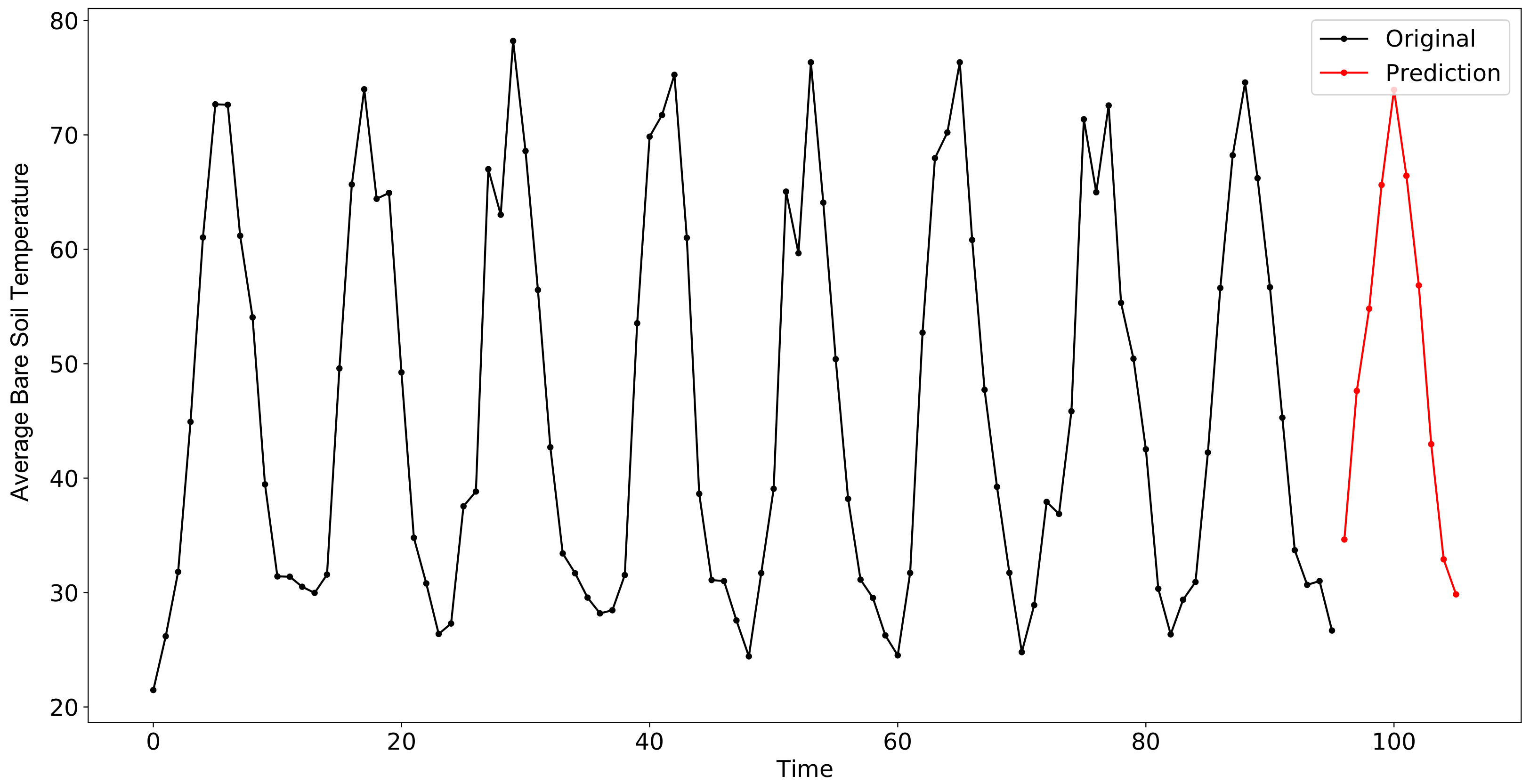

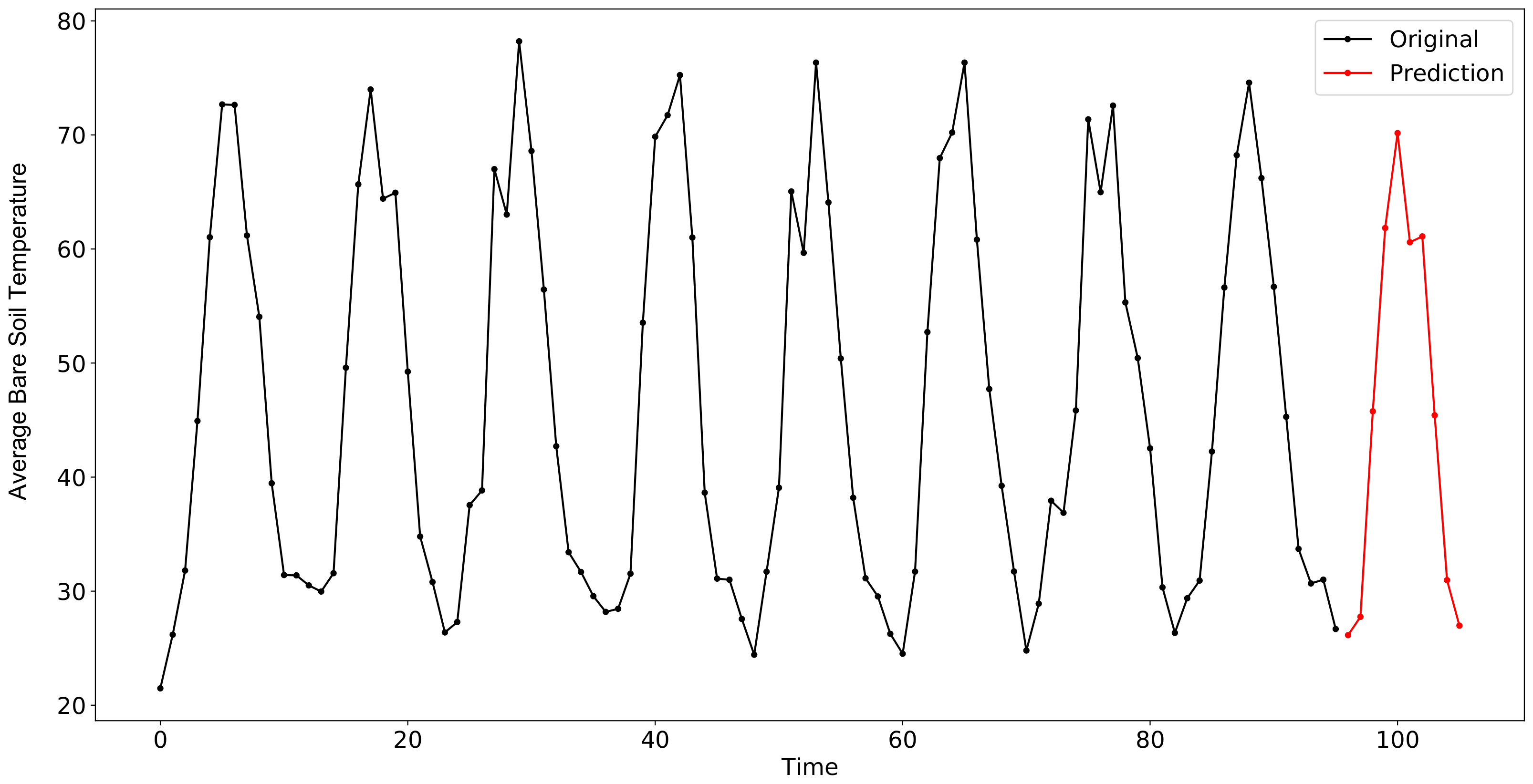

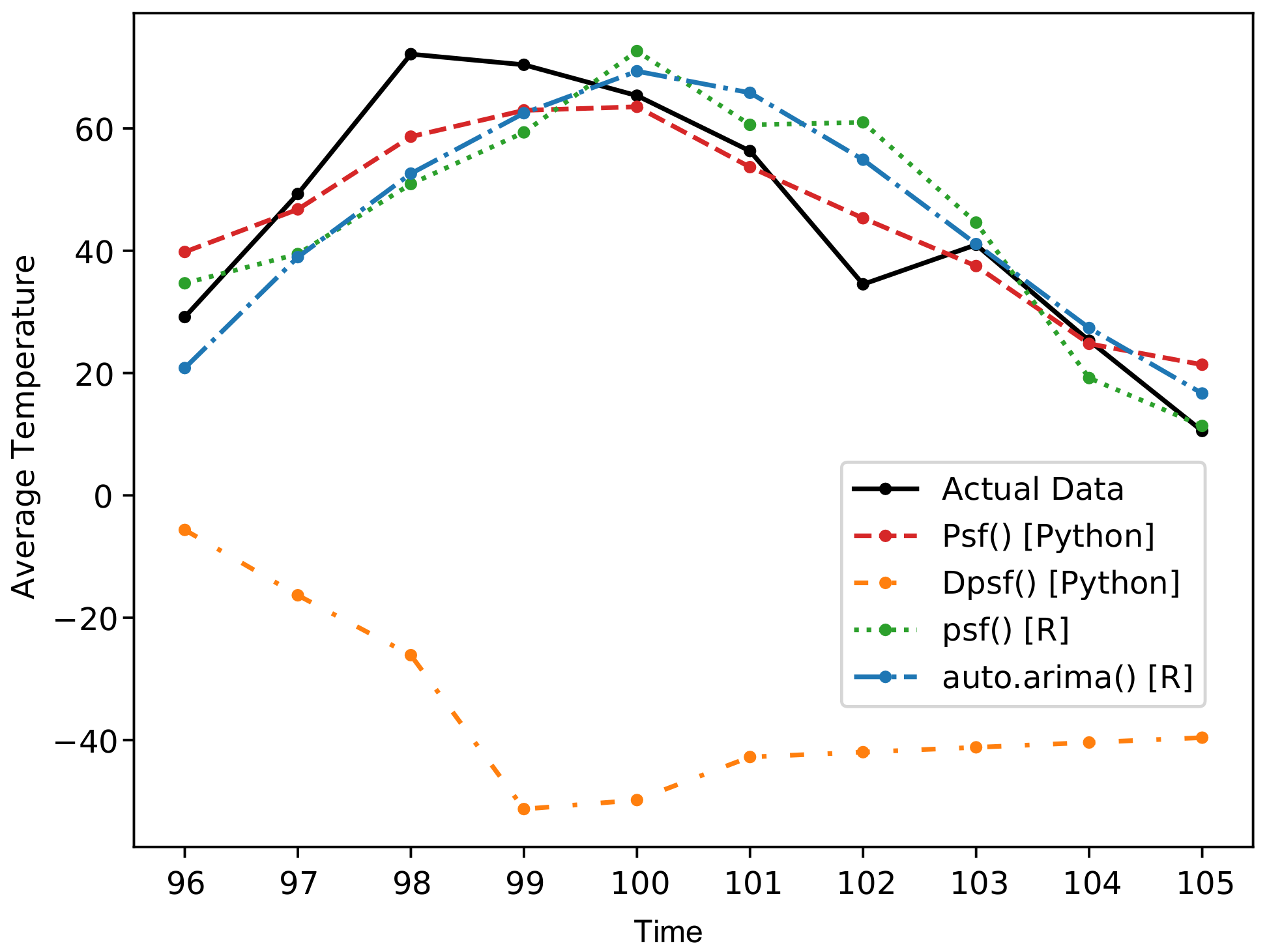

Table 10. Results of

psf_plot() and

dpsf_plot() for the “Average Bare Soil Temperature” dataset are shown in

Figure 13 and

Figure 14. Further, the comparison of forecasted values with different methods are shown in

Figure 15.

4.6. Example 6: Average Temperature

The final example in this research models air temperature. A reliable and robust technique for forecasting air temperature is essential for diverse water resources and hydrological processes [53,54], for instance, in agriculture, water body evaporation, and crop production [55]. As in Examples 4 and 5, the air temperature data come from the same station, region, and period, and the same procedure was followed.

Table 11 presents the statistical characteristics of the time series, and the performance of the various methods is tabulated in Table 12. The result of psf_plot() for the “Average Temperature” dataset is shown in Figure 16, and the forecasts of the different methods are compared in Figure 17.

5. Discussion and Conclusions

This paper described the PSF_Py package in detail and demonstrated its use for implementing the PSF and DPSF algorithms on real time series forecasting datasets from diverse applications. The package makes it easy to make predictions using the PSF algorithm, and its syntax is similar to that of the R package and easy to understand. The examples above suggest that the results from the Python package are comparable to those in R, although the selected window size and number of clusters may differ between the two packages. The algorithm worked exceptionally well for time series containing periodic patterns. The forecasting error of the DPSF method implemented in the proposed package was much smaller than that of the benchmark ARIMA model. Besides accuracy, the complexity of a model is another critical aspect. We compared the time and space complexities of the models in the case studies with the GuessCompx tool [56,57], which empirically estimates the computational complexity of a function in Big-O notation. It runs the function on multiple samples of increasing size drawn from the given dataset and selects the best-fitting complexity according to the leave-one-out mean squared error (LOO-MSE). The “nottem” dataset was used to calculate the complexities. The results, summarized in Table 13, show that both the PSF and DPSF models are computationally efficient and use memory efficiently while achieving better accuracy.
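The model-selection idea behind GuessCompx can be illustrated in a few lines of NumPy. This is not GuessCompx itself (an R tool that also measures memory and draws its own samples); it only sketches the selection step, assuming the run times (or memory figures) for several input sizes have already been measured: fit cost = a + b*f(N) for each candidate complexity f and keep the candidate with the smallest leave-one-out mean squared error. The candidate list and function names are assumptions.

```python
import numpy as np

# Candidate complexity classes (an assumed, non-exhaustive list).
CANDIDATES = {
    "O(1)": lambda n: np.zeros_like(n),
    "O(log N)": np.log,
    "O(N)": lambda n: n,
    "O(N log N)": lambda n: n * np.log(n),
    "O(N^2)": lambda n: n ** 2,
}

def loo_mse(x, y):
    """Leave-one-out MSE of the least-squares line y = a + b*x."""
    errs = []
    for i in range(len(x)):
        keep = np.arange(len(x)) != i
        A = np.column_stack([np.ones(keep.sum()), x[keep]])
        coef, *_ = np.linalg.lstsq(A, y[keep], rcond=None)
        errs.append((coef[0] + coef[1] * x[i] - y[i]) ** 2)
    return float(np.mean(errs))

def guess_complexity(sizes, costs):
    """Return the candidate class whose fit has the smallest LOO-MSE."""
    n = np.asarray(sizes, dtype=float)
    y = np.asarray(costs, dtype=float)
    scores = {name: loo_mse(f(n), y) for name, f in CANDIDATES.items()}
    return min(scores, key=scores.get)

# Synthetic "measurements" that grow linearly with the input size.
sizes = [100, 200, 400, 800, 1600]
guess = guess_complexity(sizes, [3 * n + 5 for n in sizes])
```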

This research proposed a reliable and robust computational technique that can be implemented for online and offline forecasting in diverse hydrological and climatological applications [58,59]. In future work, hybrid models [33,60] of the PSF method could be incorporated into the proposed Python package to further improve forecasting accuracy. In addition, the package could be applied in new data-driven research domains, and further hydrological or other engineering time series datasets could be investigated to assess how well the proposed package generalizes.