Abstract

Person re-identification (re-ID) technology has attracted extensive interest in critical applications of daily life, such as autonomous surveillance systems and intelligent control. However, lightweight and efficient person re-ID solutions are rare because limited computing resources cannot guarantee both accuracy and efficiency in detecting person features, which inevitably results in a performance bottleneck in real-time applications. To address this research challenge, this study developed a lightweight framework for generating the person multi-attribute feature. The framework mainly consists of three sub-networks, each conforming to a convolutional neural network architecture: (1) the accessory attribute network (a-ANet) grasps the person ornament information for an accessory descriptor; (2) the body attribute network (b-ANet) captures the person region structure for a body descriptor; and (3) the color attribute network (c-ANet) forms the color descriptor to maintain the consistency of the color of the person(s). Inspired by the human visual processing mechanism, these descriptors (each “descriptor” corresponds to an attribute of an individual person) are integrated via a tree-based feature-selection method to construct a global “feature”, i.e., a multi-attribute descriptor of the person that serves as the key to identifying the person. Distance learning is then exploited to measure person similarity for the final person re-identification. Experiments have been performed on four public datasets to evaluate the proposed framework: CUHK-01, CUHK-03, Market-1501, and VIPeR. The results indicate that (1) the multi-attribute feature outperforms most of the existing feature-representation methods by 5–10% at rank@1 in terms of the cumulative matching curve criterion; and (2) the time required for recognition is low enough for real-time person re-ID applications.

1. Introduction

Person re-identification (re-ID) aims to match (associate) an individual identity across non-overlapping physical locations or times, and it plays a vital role in maintaining public security in the fields of smart cities and artificial intelligence [1]. Owing to its strong performance in tracking suspects and identifying their status, it underpins many crucial applications such as criminal investigation [1], action recognition [2], and object tracking [3]. Given a query image of a person taken from one camera view, re-ID is essentially an image retrieval problem: it searches for images of the same person captured by other camera views and generates a final ranking list in which the top-ranked results are more likely to show the same person as the query [1]. However, owing to great variations in illumination, camera view, image resolution, and pose, an individual’s appearance usually changes dramatically in harsh real-world scenarios (see Figure 1). This inevitably leads to unstable person re-ID performance in real applications, and the problem remains unsolved and challenging.

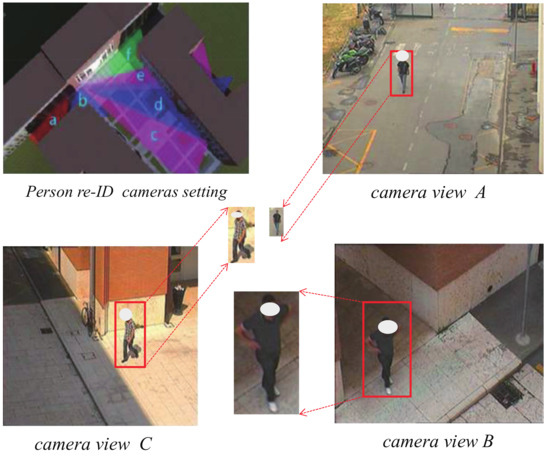

Figure 1.

The real surveillance scenes for the person re-ID application. There are multiple camera views for the same person with different representations that are in great variations in illumination (camera C), scales (camera A), and person poses (camera B) in harsh real-world scenarios.

Recent deep-learning algorithms have significantly enhanced the performance of various computer vision tasks [4]. Person re-ID based on deep learning has also become prevalent [4,5]. However, deep-learning models usually demand significant computing resources to optimize numerous parameters and very complex networks. This inevitably causes a performance bottleneck in real-time applications, where trade-offs between recognition accuracy and efficiency are often a must [6]. In addition, due to the chained training criterion of existing deep modules, forward information propagation is prone to gradient dispersion as network architectures become deeper and deeper [7]. The primary input information gradually weakens during forward propagation, and the model converges very slowly after a few layers [8]. In terms of network architecture, the middle layers of neural networks still retain the multiple-attribute information (such as color or body-region information) of the person [9]. It is very difficult to ensure the robustness and discrimination of the person’s features without properly optimizing the network. A pressing need therefore exists for a method that generates robust and discriminative features to achieve accurate and efficient person re-ID [10,11].

This study developed a lightweight framework for generating the person multi-attribute feature. The framework mainly consists of three sub-networks, each conforming to a convolutional neural network architecture (Section 3): (1) the accessory attribute network (a-ANet) grasps the person ornament information for an accessory descriptor by dividing the person appearance into several regions of interest; (2) the body attribute network (b-ANet) captures the person region structure for a body descriptor; and (3) the color attribute network (c-ANet) forms the color descriptor that maintains the consistency of a person’s color. Inspired by the visual processing mechanism of human beings, these descriptors are then integrated via a tree-based feature-selection method to construct a global person feature, the multi-attribute descriptor, which serves as the key to depict the person representation.

Experiments have been performed on four public datasets to evaluate the proposed framework: CUHK-01, CUHK-03, Market-1501, and VIPeR (Section 4). Furthermore, the lightweight framework has a low computational complexity: it takes less than 3 s to identify 5000 individuals on a Huawei tablet PC.

This study thus makes the following major contributions:

- A lightweight feature-generation method is proposed for accurate person re-ID. The method requires only marginal computing resources for training and operation, yet it significantly outperforms conventional counterparts such as ResNet [12].

- The study demonstrates that the human visual processing mechanism can be exploited to optimize neural networks and thus significantly improve the performance of person re-ID.

2. Related Work

This section introduces the mainstream research directions of person re-ID, including (1) traditional methods for person re-ID and (2) deep-learning-based methods for person re-ID. The most salient works in this field are discussed.

2.1. Traditional Person Re-ID Methods

Traditional research methods for person re-ID can be classified into feature representation and metric learning.

Typical feature-representation methods are color and texture, which are used for general content retrieval and person re-identification tasks. Gray et al. [13] first partitioned person images into several horizontal strips and then extracted both the color channels and texture filters from each strip. The Adaboost learning model was used to combine these features for the final person representation.

Si et al. [14] proposed a spatial-pyramid-based statistical feature-extraction method. The cascade feature learning manner was adapted to evaluate the configuration and strategy for person re-identification tasks. Due to the challenges of real surveillance scenes, it was extremely difficult for feature-representation methods to identify persons captured in previous camera views [15].

To measure distances, Bai et al. [16] proposed a method that uses the unsupervised re-ranking procedure for object retrieval and person re-ID with a specific concentration on an ensemble of multiple metrics. They fused the metrics by using different strategies and analyzed the pros and cons of these strategies for person re-ID.

Muhammad et al. [17] initially extracted three types of handcrafted features on the input images including shape, color, and texture for person re-ID. They exploited a feature fusion and selection technique to integrate these handcrafted features for clustering.

However, in the real world, interference like low image resolution, partial occlusion, and view changes could cause significant variations in person appearance. It is extremely challenging to design a discriminative feature and a scaling function for accurate and efficient person re-ID tasks.

2.2. Deep-Learning Person Re-ID Methods

Recently, deep-learning-based methods for person re-ID have become popular and offer high performance. These methods are inspired by the perception mechanism of humans, who are equipped with multiple neural nodes for this problem. Huang et al. [8] designed a lightweight person re-ID model, called the Multi-Scale Focusing Attention Network (MSFANet), to capture robust and elaborate multi-scale re-ID features with a lower floating-point computation cost and a higher performance. Their method uses a multi-branch depth-wise separable convolution module to extract and fuse multi-scale features independently.

Liu et al. [18] proposed a non-local temporal pooling (NLTP) method for temporal feature aggregation in person re-ID. Their method effectively captures long-range and global dependencies among the frames of a video.

Herzog et al. [19] proposed a lightweight network that combines global, part-based, and channel features in a unified multi-branch architecture for person re-ID. Their network obtains the pedestrian feature mainly by applying pooling operations (MaxPool and AvgPool) to the initial feature maps. In our work, we instead extract three kinds of attributes (descriptors), namely the accessory feature, the body contour, and the appearance color, to re-identify specific persons. Each descriptor has a specific meaning for each person and is produced by a dedicated network architecture, which is essentially different from the design in [19].

Tay et al. [9] incorporated person attribute information into a classification framework, called AANet, with three sub-networks: the Global Feature Network (GFN), the Part Feature Network (PFN), and the Attribute Feature Network (AFN). This method mines multiple attributes of persons in general rather than attributes tied to a specific person.

In this work, the term multi-attribute has the same meaning as descriptor. Specifically, we use the accessory attribute network to grasp the person accessory descriptor. The body attribute network learns the person region structure with a spatial pyramid operation to capture the body descriptor, and the color attribute network maintains the color consistency of each person for a color descriptor. Each of these descriptors has a specific meaning for each person in our work, which is essentially different from AANet [9].

The present study differs from previous works in the following major ways: (1) it proposes a lightweight multi-attribute feature-generation method for person re-ID, and (2) it explores the applicability of the human visual perception mechanism in person re-ID technology.

3. Efficiency Multi-Attribute Feature Generation Framework

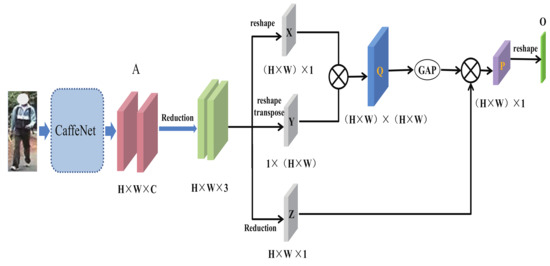

This section describes the lightweight and efficient multi-attribute feature generation framework for person re-ID, which is shown in Figure 2. First, the notations and the problem statement for person re-ID are introduced. Then, the architecture of the multi-attribute feature generation framework is presented, followed by the optimization of the network. The section ends with the tree-based feature selection method for a global person representation.

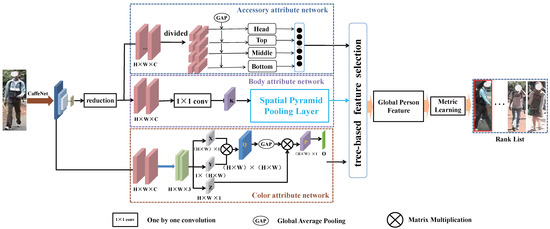

Figure 2.

The person multi-attribute feature generation framework for person re-ID. The framework is equipped with three modules, the accessory attribute network, the body attribute network, and the color attribute network, which generate the accessory descriptor, the body descriptor, and the color descriptor, respectively. Then, the descriptors are fused via a tree-based feature selection method into a global person feature. Metric learning is used to obtain the final person re-ID results.

3.1. Notations and Problem Statement

As mentioned above, person re-ID can be regarded as a retrieval problem. Its core task is to find the occurrences of a probe (query image) in the gallery (the candidate set), where the probe and the gallery are captured from different camera views. Given a query image with its identity label and a set of gallery images with their labels, person re-ID aims to retrieve all the gallery images whose label matches that of the query. M and N denote the sizes of the gallery and the query set, respectively. In this study, the person multi-attribute feature generation framework consists of an a-ANet, a b-ANet, and a c-ANet, which generate the accessory, the body, and the color descriptors, respectively, for each person. The three kinds of generated descriptors are then fused via a tree-based feature selection method to construct a global person feature f.
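The retrieval view of re-ID can be illustrated with a minimal sketch. It assumes that every image has already been mapped to a global feature vector and, for simplicity, ranks the gallery by plain Euclidean distance; the framework itself relies on a learned distance, so the metric, the function name `rank_gallery`, and the toy values below are illustrative assumptions only.

```python
import numpy as np

def rank_gallery(query_feat, gallery_feats):
    """Return gallery indices sorted from most to least similar to the query."""
    # Euclidean distance between the query feature and every gallery feature
    # (assumption: a learned metric would replace this in the real pipeline).
    dists = np.linalg.norm(gallery_feats - query_feat, axis=1)
    # A stable sort keeps the original gallery order for tied distances.
    return np.argsort(dists, kind="stable")

# Toy example: 4 gallery features of dimension 3 (illustrative values).
gallery = np.array([[0.0, 1.0, 0.0],
                    [1.0, 0.0, 0.0],
                    [0.9, 0.1, 0.0],
                    [0.0, 0.0, 1.0]])
query = np.array([1.0, 0.0, 0.0])
ranking = rank_gallery(query, gallery)
```

The first entries of `ranking` are the gallery images most likely to show the same person as the query.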

3.2. The Multi-Attribute Feature Generation Framework

The architecture of the multi-attribute feature generation framework is shown in Figure 2. The backbone network is CaffeNet [20], which is well known for both its efficiency and its performance in feature generation. The convolutional feature map extracted by CaffeNet is reduced to a smaller map characterized by its spatial size and its number of channels C. This reduced convolutional feature map is fed to the a-ANet, b-ANet, and c-ANet, which learn three types of descriptors:

- The a-ANet is designed to grasp the person accessory descriptor. It divides the reduced convolutional feature map into four regions of interest (ROIs: Head, Top, Middle, and Bottom) using peak activation detection and pooling. Then, a global average pooling operation is performed on the four ROIs to capture the accessory descriptor for each person. The accessory attributes may be hair, coats, trousers, shoes, etc.

- The b-ANet learns the person region structure to capture the body descriptor. A convolutional layer applied to the reduced convolutional feature map brings the dimension down to K, and it is followed by a spatial pyramid pooling layer that extracts hierarchical region information for a body descriptor, including the head, torso, legs, etc.

- The c-ANet is devoted to maintaining the color consistency of each person for a color descriptor, in which the feature map is reduced into the dimension . The channels are decomposed into three single maps, X, Y, and Z, respectively. Then, the element-wise addition is implemented on the single map that performs the global average pooling operation for the person color descriptor.

In addition, in Figure 2, “1 × 1” denotes the one-by-one convolution and “GAP” denotes the global average pooling, which is performed on the feature maps for dimensionality reduction. The three types of person descriptors are then fused via the tree-based feature selection method to form the global person feature. After that, person re-ID is performed with metric learning to obtain the final results (rank lists).

3.3. The Accessory Attribute Network

The accessory attribute network (a-ANet) is used to excavate the decoration information of a person wearing something that is striking to the human beings. Its architecture is shown in Figure 3, where the convolutional feature map is extracted firstly. Then, the body part detector [9] divides the person feature map A into four horizontal parts (head, top, middle, and bottom), which are denoted as the regions of interest (ROIs) for each person via Equation (1) correspondingly, where is the activation value at location on the th feature channel.

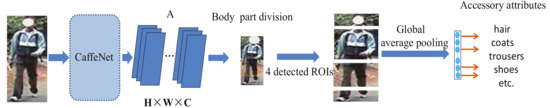

Figure 3.

The accessory attribute network divides the convolutional feature map A into four ROIs (regions of interest) using peak activation detection and pooling, as in [9]. Features from the four ROIs are further used for person accessory descriptor extraction.

A global average pooling operation is performed on the four horizontal parts for further feature dimension reduction. Then, the person’s wearing information is generated from the head, top, middle, and bottom part vectors, which are extracted from the reduced feature maps. Following the method of AFN [9], the person accessory attributes (such as hair, clothing, trousers, and shoes) are classified with the Softmax function, which is used to optimize the network. Back-propagation is driven by the Cross-entropy loss computed on the Softmax output.
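The part-wise pooling described above can be sketched as follows. This is a simplified illustration and not the authors' implementation: equal-height horizontal strips stand in for the ROIs found by peak activation detection, and the function name `part_descriptors` and the toy feature map are hypothetical.

```python
import numpy as np

def part_descriptors(feature_map, num_parts=4):
    """Split a (C, H, W) feature map into horizontal strips and apply GAP to each."""
    # Assumption: equal-height strips replace the peak-activation-based ROIs.
    strips = np.array_split(feature_map, num_parts, axis=1)
    # Global average pooling over the spatial axes gives one C-dim vector per strip.
    return np.stack([s.mean(axis=(1, 2)) for s in strips])

# Toy feature map with 2 channels, height 8, and width 3.
fmap = np.arange(2 * 8 * 3, dtype=float).reshape(2, 8, 3)
parts = part_descriptors(fmap)           # rows: head, top, middle, bottom
accessory_descriptor = parts.reshape(-1)  # concatenated part vectors
```

Each row of `parts` would then feed an attribute classifier trained with the Softmax and Cross-entropy loss.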

3.4. The Body Attribute Network

The body attribute network (b-ANet) is designed to grasp person region information for a body descriptor. Its architecture, shown in Figure 4, consists of a convolution operation and a spatial pyramid pooling layer (the pooling maps are 256-dimensional). The numbers of pooling bins are set to 16, 9, 4, and 1, which enables down-sampling at four levels for extracting the hierarchical region information of a person. Then, the multi-level sampled features are concatenated to represent the person’s body attributes, including the head, torso, legs, and profile, in this study.

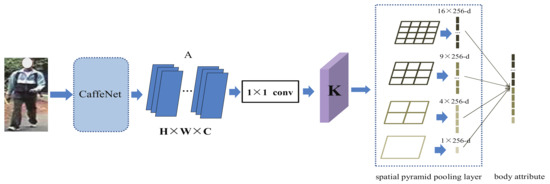

Figure 4.

The body attribute network consists of a convolution operation and a spatial pyramid pooling layer for the personal body attribute recognition.

Traditional CNNs fix the input size for convolutional feature map extraction, which yields a high-dimensional feature vector (e.g., for AlexNet). In contrast, a convolution layer can reduce the dimension of the feature map with a simple operation. In addition, the spatial pyramid layer can adapt to inputs of various scales for person body representation. The four kinds of spatial down-sampling operations are performed on the reduced feature maps K to form the hierarchical multi-region information of the person for body descriptor learning. As with the a-ANet, the network is optimized with a Softmax function and a Cross-entropy loss via back-propagation.
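A minimal sketch of spatial pyramid pooling may help make the four-level down-sampling concrete. It assumes that the 16, 9, 4, and 1 bins correspond to 4 × 4, 3 × 3, 2 × 2, and 1 × 1 grids and that max pooling is used inside each bin; both choices, as well as the function name and the input size, are assumptions for illustration.

```python
import numpy as np

def spatial_pyramid_pool(feature_map, grid_sizes=(4, 3, 2, 1)):
    """Max-pool a (C, H, W) map over n x n grids and concatenate the results."""
    _, h, w = feature_map.shape
    pooled = []
    for n in grid_sizes:
        # Bin edges cover the whole map even when H or W is not divisible by n
        # (requires H >= n and W >= n so that no bin is empty).
        rows = np.linspace(0, h, n + 1).astype(int)
        cols = np.linspace(0, w, n + 1).astype(int)
        for i in range(n):
            for j in range(n):
                cell = feature_map[:, rows[i]:rows[i + 1], cols[j]:cols[j + 1]]
                pooled.append(cell.max(axis=(1, 2)))
    return np.concatenate(pooled)

# 256 channels on an 8 x 6 map (hypothetical spatial size).
fmap = np.random.default_rng(0).random((256, 8, 6))
body_descriptor = spatial_pyramid_pool(fmap)  # length 256 * (16 + 9 + 4 + 1)
```

Because the number of bins is fixed, the output length stays the same for any input size, which is what lets the layer adapt to inputs of various scales.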

3.5. The Color Attribute Network

The color attribute network (c-ANet) is used to extract the low-level color information for each person. Its architecture is shown in Figure 5, where the extracted convolutional feature map is first transferred into for dimension reduction. Then, the three channels feature maps are further reshaped into the single channel map , , and . The map features X and Y performed the matrix multiplication operation to form the feature map . After that, the global average pooling (GAP) is executed to the Q, and the result is performed with the matrix multiplication operation with the to obtain the feature map . At last, the feature P is reshaped in to O for the final person color descriptor.

Figure 5.

The color attribute network reduces the extracted convolutional feature map into correspondingly. Then, the three channels feature map is divided into X, Y, and Z for the global average pooling operation, respectively.

In Figure 5, the color attribute network yields an 800-dimensional feature vector from each single person image, which is calculated by subtracting the image mean and performing a forward propagation. The network is optimized by back-propagation through the Softmax function, and the Cross-entropy loss is likewise used for updating the parameters.

3.6. Optimization

In this study, Softmax regression was used for optimizing the whole network. In the training phase, the three kinds of attribute networks described above were trained via the Softmax function, followed by a Cross-entropy loss. The stochastic gradient descent (SGD) algorithm was used for network training. Training errors were calculated on each mini-batch and back-propagated to the earlier layers. Each single attribute network has its own loss, given in Equations (2)–(4), with its own set of parameters, and r, s, and t denote the number of attributes for each network. In this study, r is 4, which corresponds to four kinds of accessory attributes (head, top, middle, and bottom) for each person; s is 4, which corresponds to four types of body attributes (profile, head, torso, and legs); and t is 3, which corresponds to three kinds of color attributes.

The gradient used to update each network is given in Equation (5). It is a vector whose l-th element is the partial derivative of the Cross-entropy loss of the corresponding single attribute (descriptor) network with respect to the l-th element of that network’s parameters. The proposed method can be seen as a multi-task learning framework. The whole loss is described in Equation (6),

where the three weighting coefficients are the accessory, body, and color attribute factors, respectively.
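The optimization can be illustrated with a small numerical sketch. It assumes the standard Softmax with Cross-entropy loss for each attribute network and assumes that the whole loss is a weighted sum of the three single-network losses; the function names, the default factors of 1.0, and the toy inputs are hypothetical and not taken from the original equations.

```python
import numpy as np

def softmax_cross_entropy(logits, label):
    """Return the Cross-entropy loss and its gradient with respect to the logits."""
    shifted = logits - logits.max()  # subtract the max for numerical stability
    probs = np.exp(shifted) / np.exp(shifted).sum()
    loss = -np.log(probs[label])
    grad = probs.copy()
    grad[label] -= 1.0               # d(loss)/d(logits) = probs - one_hot(label)
    return loss, grad

def total_loss(losses, factors=(1.0, 1.0, 1.0)):
    """Weighted sum of the accessory, body, and color losses (assumed form)."""
    return sum(f * l for f, l in zip(factors, losses))

# Accessory network with r = 4 classes and uniform logits.
loss_a, grad_a = softmax_cross_entropy(np.zeros(4), label=0)
overall = total_loss((1.0, 2.0, 3.0), factors=(0.5, 1.0, 2.0))
```

With all factors equal to 1.0, `total_loss` reduces to the plain summation of the three losses.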

3.7. Tree-Based Feature Selection Method

Because the descriptors of each person contain redundancy, it is necessary to select suitable descriptors to form robust person features. In this study, a decision-tree-based feature ranking method is used to select and rank the relevant features, as in [21]. The tree-based feature selection method is easy to visualize and understand in terms of feature discrimination. The learned features, which help identify a specific person, are visualized in Figure 6. In addition, this algorithm is completely unaffected by data scaling, since each feature is processed separately and the division of the data does not depend on scaling. It therefore needs no feature preprocessing, such as normalization or standardization. Since our work extracts three different kinds of attributes (descriptors) that require no such preprocessing, the tree-based feature selection method was adopted. Comparative experiments with other relevant feature selection methods are left for future work.

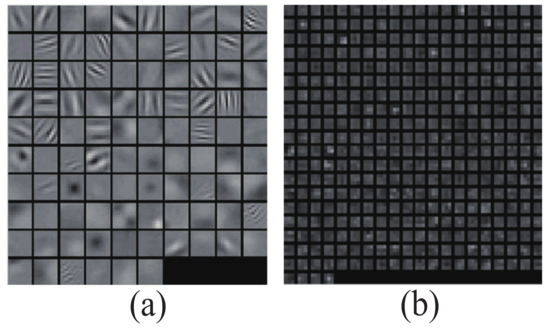

Figure 6.

Some typical weights of the convolutional layers. (a) The weight for the first convolutional layer, which has 96 kernels. (b) The weight for the fourth convolutional layer that includes 384 kernels.

The information of the descriptors is obtained by Equation (7), which indicates the suitable attributes to select. It gives the average information needed to identify the class label of a vector in F, based on the probability that an arbitrary vector in F belongs to each attribute class. This information is encoded in bits.
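As an illustration of how such an information measure can rank features, the sketch below computes the Shannon entropy of the class labels in bits and the information gain of a single threshold split, which is the quantity a decision tree typically maximizes. It is assumed here that Equation (7) takes this standard form; the function names and toy data are hypothetical.

```python
import numpy as np

def entropy_bits(labels):
    """Shannon entropy of a label vector, in bits."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-(p * np.log2(p)).sum())

def information_gain(feature, labels, threshold):
    """Entropy reduction obtained by splitting the samples at feature <= threshold."""
    left = labels[feature <= threshold]
    right = labels[feature > threshold]
    n = len(labels)
    # Weighted entropy of the two child nodes; empty children are skipped.
    remainder = sum(len(part) / n * entropy_bits(part)
                    for part in (left, right) if len(part))
    return entropy_bits(labels) - remainder

labels = np.array([0, 0, 1, 1])
informative = np.array([0.1, 0.2, 0.8, 0.9])    # separates the two classes
uninformative = np.array([0.1, 0.8, 0.2, 0.9])  # mixes the two classes
gain_good = information_gain(informative, labels, threshold=0.5)
gain_bad = information_gain(uninformative, labels, threshold=0.5)
```

Features with a higher gain are ranked higher, so the uninformative feature would be among the first to be dropped.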

Once the person attribute feature ranking list has been generated by the tree-based selection, the lowest-ranked elements (attributes) are eliminated directly. Then, a normalization layer is used to normalize the features, as described in Formula (8),

where the input is the remaining attribute feature vector of dimension k.
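The selection and normalization steps can be sketched together. The sketch assumes that the normalization is an L2 normalization, which is a common choice before distance-based matching but is not confirmed by the text; the helper names, the importance scores, and the toy values are hypothetical.

```python
import numpy as np

def select_top_k(features, scores, k):
    """Keep the k highest-scoring elements, preserving their original order."""
    top = np.argsort(-scores, kind="stable")[:k]
    return features[np.sort(top)]

def l2_normalize(features, eps=1e-12):
    """Scale a feature vector to unit L2 norm (assumed form of the normalization)."""
    norm = np.linalg.norm(features)
    return features / max(norm, eps)  # eps guards against division by zero

features = np.array([10.0, 3.0, 30.0, 4.0])
scores = np.array([0.1, 0.9, 0.5, 0.7])  # illustrative importance scores
kept = select_top_k(features, scores, k=2)
normalized = l2_normalize(kept)
```

After this step, every person is represented by a unit-length vector of dimension k.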

4. Experiments and Results

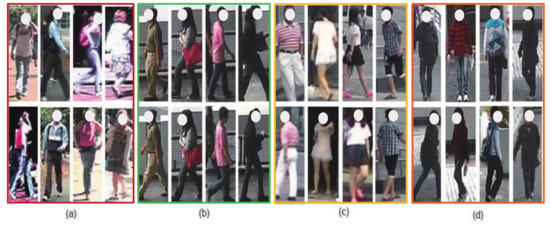

In this section, we evaluate the proposed algorithm on public person re-identification datasets. Some typical samples of these public datasets are shown in Figure 7. First, the datasets and evaluation protocols are introduced. Then, the experimental results are presented and compared with both traditional and deep-learning methods. In addition, an ablation study is presented to demonstrate the effectiveness of the proposed method. After that, the efficiency of the method is discussed. The details are given in the following subsections.

Figure 7.

Some typical samples from the four public datasets. Each column shows two images of the same person from two different cameras under significant changes of scale and illumination. (a) The VIPeR dataset exhibits significant differences between different views. (b) The CUHK-03 dataset, labeled version. (c) The Market-1501 dataset has significant and consistent lighting changes. (d) The CUHK-01 dataset is similar to CUHK-03.

4.1. Datasets, Implementation Details, and Evaluation Protocols

4.1.1. Datasets

The experiments were implemented on four public datasets, which include CUHK-01 [22], CUHK-03 [23], Market-1501 [24], and VIPeR [25]. Each dataset is described briefly as follows.

CUHK-01 dataset: The CUHK-01 Campus dataset [22] contains 1816 persons and five pairs of camera views (P1-P5, ten camera views). They have 971, 306, 107, 193, and 239 persons, respectively. Each person has two images in each camera view. This dataset is used to evaluate the performance when camera views in the test are different than those in training. All images are normalized to .

CUHK-03 dataset: The CUHK-03 dataset [23] contains 13,164 images of 1360 identities. All pedestrians are captured by six cameras, and each person is taken from only two camera views. The dataset provides both manually cropped bounding boxes and bounding boxes cropped automatically with a pedestrian detector [26]. We report results on both of these versions of the data. Following the protocol used in [23], we randomly partitioned the dataset into a training set of 1160 persons and a test set of 100 persons. This random partition was repeated 20 times to compute the averaged performance.

Market-1501 dataset: Market-1501 [24] contains 32,668 detected bounding boxes of 1501 persons, with each of them captured by six cameras at most and two cameras at least. Similar to the CUHK-01 dataset, it also employs a DPM detector [26]. We used the provided fixed training and the test set, containing 750 and 751 identities, respectively, to conduct experiments.

VIPeR dataset: The widely used VIPeR dataset was collected by Gray and Tao [25] and contains 1264 outdoor images obtained from two views of 632 persons. Each pair is made up of images of the same person from two different cameras, under different viewpoints, poses, and light conditions, respectively. All images were normalized to pixels. View changes were the matched image pairs containing a viewpoint change of 90 degrees.

4.1.2. Implementation Details

Our framework was implemented on Ubuntu 18.04 with an NVIDIA-1080 (11G), which was made by NVIDIA Corporation in Santa Clara, USA. This work used current popular deep-learning tools, namely Python (3.6.13), PyTorch (1.8.1), and torchvision (0.9.1), to run the modules. As described above, CaffeNet is adopted as the backbone network for learning the attributes (descriptors). Specifically, the input person image is resized to 128 × 48, and each of the three sub-networks has its own loss. The overall loss, which is the summation of the three losses, was used to train the proposed framework.

4.1.3. Evaluation and Data Augmentation

This section describes the evaluation metrics, the training strategy, and the data augmentation used for the proposed algorithm.

Evaluation protocol: We adopted the widely used cumulative match curve (CMC) approach [25] for quantitative evaluation. This measure indicates how the matching performance of the algorithm improves as the number of returned images increases. The second measure is the mean average precision (mAP) [24], which reflects the overall ranking quality rather than looking at the top n positions only.
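Both measures can be computed from a ranked list of gallery labels, as in the following sketch for a single query. The function name and the toy labels are illustrative; rank@k and mAP are then obtained by averaging the per-query CMC curves and average precisions over all queries.

```python
import numpy as np

def cmc_and_ap(ranked_labels, query_label, max_rank=5):
    """CMC curve and average precision for one query, given ranked gallery labels."""
    matches = (np.asarray(ranked_labels) == query_label).astype(float)
    if matches.sum() == 0:
        raise ValueError("query identity does not appear in the gallery")
    # CMC: 1 at rank k if a correct match appears within the top k results.
    cmc = (np.cumsum(matches)[:max_rank] > 0).astype(float)
    # AP: mean of the precision values at the positions of the correct matches.
    precision = np.cumsum(matches) / np.arange(1, len(matches) + 1)
    ap = float((precision * matches).sum() / matches.sum())
    return cmc, ap

# Gallery labels in ranked order for a query with identity 1 (toy values).
cmc, ap = cmc_and_ap([2, 1, 3, 1], query_label=1, max_rank=3)
```

In this toy case the first correct match appears at rank 2, so the CMC curve is 0 at rank 1 and 1 from rank 2 onward.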

Data Augmentation: In the training set, the matched sample pairs (positive samples) are far fewer than the mismatched pairs (negative samples). If they are directly used to train the deep network, the model tends to predict all inputs as being non-matched. The most common method to avoid this issue is to enlarge the positive samples and randomly reduce the negative ones by label-preserving transformation artificially. In this study, we used a data augmentation strategy to extract random patches from previous image pairs [20]. For an original image of the size , we sampled ten identically sized images around the center in the range .
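The patch-sampling idea can be sketched as follows. The crop size and the maximum shift around the center are not recoverable from the text, so the values used here are hypothetical placeholders, as are the function name and the fixed random seed; only the 128 × 48 input size and the ten patches per image come from the paper.

```python
import numpy as np

def sample_center_patches(image, crop_hw, max_shift, num_patches=10, seed=0):
    """Sample equally sized patches whose position is jittered around the center."""
    rng = np.random.default_rng(seed)
    h, w = image.shape[:2]
    ch, cw = crop_hw
    top0, left0 = (h - ch) // 2, (w - cw) // 2  # top-left corner of the centered crop
    patches = []
    for _ in range(num_patches):
        dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
        # Clip so that every patch stays inside the image.
        top = int(np.clip(top0 + dy, 0, h - ch))
        left = int(np.clip(left0 + dx, 0, w - cw))
        patches.append(image[top:top + ch, left:left + cw])
    return np.stack(patches)

# 128 x 48 RGB input; crop size (120, 40) and shift 4 are assumed values.
image = np.zeros((128, 48, 3))
patches = sample_center_patches(image, crop_hw=(120, 40), max_shift=4)
```

Every sampled patch keeps the identity label of its source image, so the transformation is label-preserving.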

4.2. Performance Results

4.2.1. Results on CUHK-01 Dataset

Firstly, we pre-trained the proposed network structure on the entire dataset of Market-1501 and CUHK-03. Then, the pre-trained model was fine-tuned on 485 identities of CUHK-01 to produce the final model. At the fine-tuning stage, the learning rate was set as for the first epochs, then it decreased to . The momentum was set as , and the weight decay was . The parameters were shared for each sub-network. Multi-shot matching was used to sum the scores of multiple images of the same person. The experimental results are shown in Table 1. It shows that our method reached the best performance for rank@1.

Table 1.

Experimental results on CUHK-01.

A variety of methods are used for comparison, including traditional methods such as MLF [27] and kLFDA [28], and deep-learning methods such as FPNN [23], IDLA [29], DeepRank [30], MuDeep [5], and MVLDML+ [31]. As shown in Table 1, the rank@1 accuracy of our method reached 83.92%, outperforming all compared methods.

4.2.2. Results on CUHK-03 Dataset

The CUHK-03 dataset includes both manually cropped images (referred to as the labeled version) and automatically cropped images by the detector [32] (denoted as the detected version).

Following the protocol in [23], we randomly divided the dataset into the training set of 1160 persons and the set of 100 persons. The experiments were conducted with five random splits with the results computed by the single shot-setting. The learning rate was set as for the first epochs; then, it decreased to . The momentum was set as , and the weight decay was . The batch-size was set as 256. The experimental results for the detected version and the labeled version are shown in Table 2 and Table 3, respectively. It shows that our method reached the best performance for rank@1.

Table 2.

Experimental results for CUHK-03 on the detected version.

Table 3.

Experimental results for CUHK-03 on the labeled version.

We compared our method with existing methods such as FPNN [23], MGCA [35], DeepSimilarity [33], HA-CNN [32], and MuDeep [5]. As shown in Table 2 and Table 3, the rank@1 matching rate of our method exceeded those of its counterparts, reaching 78.50% and 82.50%, respectively. For the labeled version in Table 3, our matching rates at rank@5, 10, and 20 were lower than those of LOMO+SSM [36]. Such methods regard person re-identification as a binary classification task and consider person image pairs, whereas our method considers only the deep features of a single person image.

4.2.3. Results on Market-1501 Dataset

Pedestrians in the Market-1501 dataset are detected by the deformable part model (DPM), so the dataset contains false detections. In addition, because the images are captured from six camera views, the relationships between pedestrians are more complicated than in the other datasets. The experimental results are shown in Table 4.

Table 4.

Experimental results on Market-1501.

The compared methods include existing methods such as LOMO+XQDA [34], Zheng et al. [24], PersonNet [42], End-2-End CAN [45], Hybrid [44], MVLDML+ [31], and PT [46]. As shown in Table 4, our method achieves the best rank@1 matching rate.

4.2.4. Results on VIPeR Dataset

The VIPeR [25] contains 1264 pedestrian images taken from arbitrary viewpoints under varied illumination conditions. We randomly split the data set into two halves, 316 for training and the remaining 316 for testing. This procedure will be repeated 10 times to obtain an average result. As this dataset is too small to train the proposed network directly, we first pre-trained the model on the dataset of Market-1501 [24] and CUHK-03. Then, the fine-tune strategy was exploited to use the 316 training identities. The learning rate was set as for the first epochs. After that, it decreased to . The momentum was set as , and the weight decay was . The experimental results are tabulated in Table 5.

Table 5.

Experimental results on VIPeR.

We compared our method with existing methods such as MLF [27], kLFDA [28], LOMO+XQDA [34], FPNN [23], IDLA [29], DeepFeature [53], DeepList [54], and TJAIDL [52]. As Table 5 shows, our method gives the best rank@1 matching rate.
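The evaluation protocol described for VIPeR (a random half/half split of identities, repeated 10 times and averaged) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the names `split_identities` and `average_over_trials`, the per-trial seeding, and the `evaluate` callback (which stands in for fine-tuning and testing the network) are hypothetical, and the total of 632 identities is inferred from the 316/316 split.

```python
import random
from statistics import mean


def split_identities(identity_ids, seed):
    """Randomly split identities into two equal halves (train, test)."""
    ids = list(identity_ids)
    random.Random(seed).shuffle(ids)  # seeded so each trial is reproducible
    half = len(ids) // 2
    return ids[:half], ids[half:]


def average_over_trials(identity_ids, evaluate, num_trials=10):
    """Repeat the random split and average the score returned by `evaluate`."""
    scores = []
    for trial in range(num_trials):
        train_ids, test_ids = split_identities(identity_ids, seed=trial)
        scores.append(evaluate(train_ids, test_ids))
    return mean(scores)


# Assumption: 632 identities in total, giving 316 for training and 316 for testing.
viper_ids = range(632)
train_ids, test_ids = split_identities(viper_ids, seed=0)


# Hypothetical placeholder for "fine-tune on train_ids, report rank@1 on test_ids".
def dummy_evaluate(train, test):
    return len(test) / (len(train) + len(test))


average = average_over_trials(viper_ids, dummy_evaluate, num_trials=10)
```

In a real experiment, `dummy_evaluate` would be replaced by a routine that fine-tunes the pre-trained model on the training identities and returns the matching rate on the test identities.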

4.3. Ablation Study

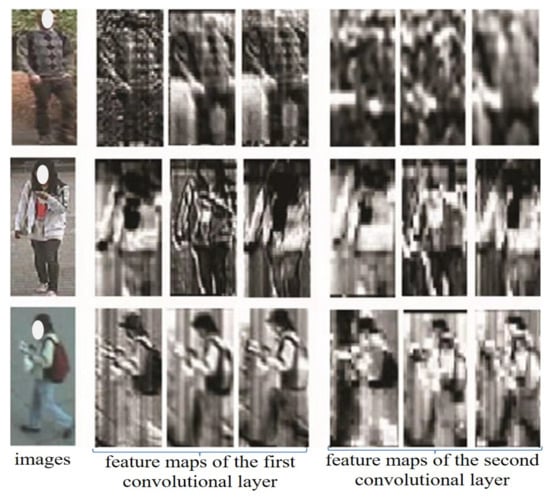

As mentioned above, the proposed method provides the best rank@1 accuracy. In this section, we visualize the middle-layer descriptors of each attribute network to show the low-level visual descriptors (e.g., color and body information). Furthermore, we evaluate the performance of each single-attribute network.

Figure 8 shows the visualized descriptors, which indicate that the low-level visual information of a person (e.g., color and region information) is retained in the first two convolutional layers (the shallow part of the network). The feature maps of these two layers also show that the person representation (color and body information) becomes vaguer as the number of hidden layers increases. In fact, the color descriptor is quite obvious in the feature maps of the first convolutional layer and fades away after a few layers of the deep network [32]. In addition, the region information for each person is also visible in Figure 8.

Figure 8.

The feature maps of the first and second convolutional layers.

In addition, we visualized the weights (i.e., the coefficients of the kernel filters) in Figure 6, which shows the 96 kernels of the first convolutional layer and the 384 kernels of the fourth layer. Each square in Figure 6 is a hidden unit. Different hidden units represent different edge positions and orientations in the image. Generally, the sharper the edge is, the more discriminative the descriptor is.

Table 6 gives the performance of each single-attribute network, i.e., the recognition result for each descriptor. From Table 6, we can see that the color attribute network (c-ANet) and the body attribute network (b-ANet) perform better than the accessory attribute network (a-ANet). The multi-attribute network constructs a global person feature inspired by the visual processing mechanism of human beings, which serves as the key to the person representation.

Table 6.

The performance of the single sub-Net.

Furthermore, we conducted an ablation study on the VIPeR dataset for the attribute weight factors. The results are shown in Table 7. Generally, we expect the accessory attribute to carry more robust information than the color attribute, which is more fragile when the environment changes. In this ablation study, we therefore progressively increased the weight of the accessory attribute.

Table 7.

The attribute factors learning on VIPeR dataset.
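To make the role of the attribute weight factors concrete, the sketch below scales each attribute descriptor by a weight before concatenation and compares two persons by Euclidean distance. This is only an illustration under loud assumptions: the paper integrates descriptors via a tree-based feature-selection method and does not specify here how the weights enter, so the weighted concatenation, the function names, the toy descriptors, and the weight values are all hypothetical.

```python
import math


def fuse_descriptors(accessory, body, color, weights=(1.0, 1.0, 1.0)):
    """Scale each attribute descriptor by its weight factor and concatenate.

    Assumption: weighted concatenation is a stand-in for the paper's fusion step.
    """
    w_a, w_b, w_c = weights
    return ([w_a * x for x in accessory]
            + [w_b * x for x in body]
            + [w_c * x for x in color])


def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


# Toy descriptors of the same person under two lighting conditions:
# accessory and body agree, only the (fragile) color descriptor differs.
view_a = dict(accessory=[1.0, 0.0], body=[0.5], color=[0.9])
view_b = dict(accessory=[1.0, 0.0], body=[0.5], color=[0.1])

# Equal weights versus a larger accessory weight and a smaller color weight.
d_equal = euclidean(fuse_descriptors(**view_a), fuse_descriptors(**view_b))
d_weighted = euclidean(
    fuse_descriptors(**view_a, weights=(2.0, 1.0, 0.5)),
    fuse_descriptors(**view_b, weights=(2.0, 1.0, 0.5)),
)
```

In this toy case, down-weighting the color descriptor halves the distance between the two views of the same person, which mirrors the intuition that a more robust attribute should contribute more to the global feature.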

4.4. Discussion

As mentioned above, this study developed a lightweight person multi-attribute feature-generation framework for person re-ID. While maintaining accuracy, the proposed framework has fewer parameters than ResNet for the global person feature representation. Person re-ID algorithms are commonly evaluated by their recognition accuracy, which is measured with the cumulative match characteristic (CMC) curve in real-scene applications. The value of CMC@k indicates the percentage of queries whose correct match is ranked in the top k. For each query person image, all gallery images are ranked by the similarity of the features generated by the person re-ID algorithm. In this case, the efficiency of feature generation is very important for real pedestrian surveillance and recognition. The time complexity for optimizing the proposed framework is as low as for feature generation, which is quite suitable for applications on mobile terminal devices that have limited computing resources [56]. The MagicBook Pro series smart Tablet PC, made by HuaWei Tech. Co., is an example of this type of device; it can match more than 5000 special instances in 3 s. This device is adapted to the high-speed video processing and analysis needed for retrieving person instances. Therefore, the proposed algorithm, with its fewer parameters, is advantageous for real person re-ID applications.
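The CMC@k criterion described above can be sketched in a few lines. The example assumes a single-shot setting in which a query counts as recognized at rank k if its first correct gallery match appears within the top k; the function name `cmc_curve`, the toy distance matrix, and the identity labels are illustrative and not taken from the paper.

```python
def cmc_curve(distances, query_ids, gallery_ids, max_rank=20):
    """Return CMC@1..max_rank as fractions in [0, 1].

    distances[i][j] is the distance between query i and gallery image j
    (a smaller distance means a higher feature similarity).
    """
    hits = [0] * max_rank
    for row, qid in zip(distances, query_ids):
        # Rank gallery images by ascending distance to the query.
        order = sorted(range(len(row)), key=lambda j: row[j])
        # 0-based rank of the first correct match, or None if there is none.
        first = next(
            (rank for rank, j in enumerate(order) if gallery_ids[j] == qid),
            None,
        )
        if first is not None:
            # A match at rank r counts for every CMC@k with k > r.
            for k in range(first, max_rank):
                hits[k] += 1
    return [h / len(query_ids) for h in hits]


# Toy example: three queries, three gallery images, one image per identity.
distances = [
    [0.1, 0.9, 0.5],  # query of identity 1: correct match ranked first
    [0.8, 0.7, 0.2],  # query of identity 2: correct match ranked second
    [0.6, 0.3, 0.4],  # query of identity 3: correct match ranked second
]
cmc = cmc_curve(distances, query_ids=[1, 2, 3], gallery_ids=[1, 2, 3], max_rank=3)
```

Here `cmc[0]` is the rank@1 matching rate (one of three queries) and `cmc[1]` is the rank@2 rate (all three queries), so the curve is non-decreasing in k by construction.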

In addition, the parameters in our framework are all shared for unified training. The holistic network structure of CaffeNet is almost the simplest among the common deep neural networks, except for LeNet [57]. However, LeNet cannot achieve the performance that CaffeNet does. We therefore consider our proposed framework a lightweight algorithm that can be deployed on mobile devices. The comparative results are shown in Table 8 (“ins” denotes “instances”). It can be seen that the proposed method, with CaffeNet as the backbone network, is “lightweight” when weighing various factors (Params, accuracy, and time).

Table 8.

The time comparison with existing methods on VIPeR dataset.
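As a rough illustration of how the "Params" factor is counted, the sketch below computes the number of learnable parameters of a convolutional layer from its kernel count. The kernel counts (96 for the first convolutional layer, 384 for the fourth) come from Section 4.3; the kernel sizes, input channels, and grouping are assumptions based on the standard CaffeNet/AlexNet design [20] and may differ from the authors' sub-networks. The helper name `conv_params` is hypothetical.

```python
def conv_params(out_channels, in_channels, kernel_size, groups=1):
    """Weights plus biases of a 2D convolution layer with square kernels."""
    weights = out_channels * (in_channels // groups) * kernel_size * kernel_size
    biases = out_channels
    return weights + biases


# Assumed layer shapes (standard CaffeNet/AlexNet, not confirmed by the paper):
# conv1: 96 kernels of size 11x11 over 3 input channels.
conv1 = conv_params(out_channels=96, in_channels=3, kernel_size=11)
# conv4: 384 kernels of size 3x3 over 384 input channels, split into 2 groups.
conv4 = conv_params(out_channels=384, in_channels=384, kernel_size=3, groups=2)
```

Summing such per-layer counts over a whole network gives the kind of parameter total that a comparison like Table 8 would weigh against accuracy and time.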

5. Conclusions

Taking on the challenges of real autonomous unmanned system applications, this study developed a lightweight person multi-attribute feature-generation framework for person re-ID. The framework mainly consists of three sub-networks (a-ANet, b-ANet, and c-ANet) to generate multi-attribute descriptors (the accessory descriptor, the body descriptor, and the color descriptor), which construct the global feature for each person by mimicking the visual processing mechanism of humans.

The experimental results indicate that (1) the multi-attribute feature outperforms most of the existing feature-representation methods by 5–10% at rank@1 in terms of the cumulative matching curve criterion; (2) for network optimization, the method converges more easily than most existing deep-learning methods, and the time complexity of optimizing a multi-attribute network is as low as , which makes the method more suitable for real autonomous surveillance applications; and (3) use of the multi-attribute feature boosts the performance of person representation compared with the traditional single-attribute counterparts.

Overall, the proposed multi-attribute feature-generation method significantly outperformed most of the person re-ID algorithms in the context of real autonomous applications. It thus has the potential for use in real surveillance-video analysis with light-weight deep neural networks.

Author Contributions

Writing—original draft, M.X., Z.G., R.H. (Ruimin Hu), J.C., R.H. (Ruhan He), H.C. and T.P.; Conceptualization, M.X. and Z.G.; methodology, M.X.; software, M.X., Z.G. and J.C.; validation, M.X., Z.G. and J.C.; formal analysis, M.X.; investigation, M.X., R.H. (Ruimin Hu) and J.C.; resources, M.X.; data curation, M.X. and J.C.; writing—review and editing, M.X., Z.G. and J.C.; visualization, H.C. and T.P.; supervision, R.H. (Ruhan He); project administration, M.X.; funding acquisition, M.X. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of Hubei Province (No. 2021CFB568 and No. 2020CFB801) and the National Natural Science Foundation of China (No. U1903214 and No. 62071339).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Ye, M.; Shen, J.; Lin, G.; Xiang, T.; Shao, L.; Hoi, S.C.H. Deep Learning for Person Re-identification: A Survey and Outlook. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 119, 104394.

2. Koniusz, P.; Wang, L.; Cherian, A. Tensor representations for action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 648–665.

3. Zhang, Y.; Sun, P.; Jiang, Y.; Yu, D.; Yuan, Z.; Luo, P.; Liu, W.; Wang, X. ByteTrack: Multi-Object Tracking by Associating Every Detection Box. arXiv 2021, arXiv:2110.06864.

4. Yang, Q.; Yu, H.-X.; Zheng, W.S. Patch-based Discriminative Feature Learning for Unsupervised Person Re-identification. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3633–3642.

5. Fu, Y.; Qian, X.; Jiang, Y.; Xiang, T.; Xue, X. Multi-scale deep learning architectures for person re-identification. In Proceedings of the International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5409–5418.

6. Chen, P.; Dai, P.; Liu, J.; Zheng, F.; Tian, Q.; Ji, R. Dual distribution alignment network for generalizable person re-identification. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 6, pp. 711–719.

7. Choi, S.; Kim, T.; Jeong, M.; Park, H.; Kim, C. Meta batch-instance normalization for generalizable person re-identification. In Proceedings of the International Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 3425–3435.

8. Huang, W.; Li, Y.; Zhang, K.; Hou, X.; Xu, J.; Su, R.; Xu, H. An Efficient Multi-Scale Focusing Attention Network for Person Re-Identification. Appl. Sci. 2021, 11, 2010.

9. Chiat-Pin Tay, S.R.; Yap, K.H. AANet: Attribute Attention Network for Person Re-Identifications. In Proceedings of the Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7134–7143.

10. Hou, R.; Ma, B.; Chang, H.; Gu, X.; Shan, S.; Chen, X. Interaction-And-Aggregation Network for Person Re-Identification. In Proceedings of the International Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9309–9318.

11. Bao, L.; Ma, B.; Chang, H.; Chen, X. Preserving structural relationships for person re-identification. In Proceedings of the 2019 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Shanghai, China, 8–12 July 2019; pp. 120–125.

12. He, K.; Zhang, X.; Ren, S.; Jian, S. Deep Residual Learning for Image Recognition. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

13. Gray, D.; Tao, H. Viewpoint Invariant Pedestrian Recognition with an Ensemble of Localized Features. In Proceedings of the European Conference on Computer Vision, Marseille, France, 12–18 October 2008; pp. 262–275.

14. Si, J.; Zhang, H.; Li, C.G.; Guo, J. Spatial Pyramid-Based Statistical Features for Person Re-Identification: A Comprehensive Evaluation. IEEE Trans. Syst. Man Cybern. Syst. 2018, 48, 1140–1154.

15. Chang, X.; Hospedales, T.M.; Tao, X. Multi-Level Factorisation Net for Person Re-Identification. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2109–2118.

16. Bai, S.; Tang, P.; Torr, P.H.; Latecki, L.J. Re-Ranking via Metric Fusion for Object Retrieval and Person Re-Identification. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 740–749.

17. Fayyaz, M.; Yasmin, M.; Sharif, M.; Shah, J.H.; Raza, M.; Iqbal, T. Person re-identification with features-based clustering and deep features. Neural Comput. Appl. 2020, 32, 10519–10540.

18. Liu, Z.; Du, F.; Li, W.; Liu, X.; Zou, Q. Non-local spatial and temporal attention network for video-based person re-identification. Appl. Sci. 2020, 10, 5385.

19. Herzog, F.; Ji, X.; Teepe, T.; Hörmann, S.; Gilg, J.; Rigoll, G. Lightweight multi-branch network for person re-identification. In Proceedings of the International Conference on Image Processing, Anchorage, AK, USA, 19–22 September 2021; pp. 1129–1133.

20. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2012; Volume 25, pp. 1097–1105.

21. Jotheeswaran, J.; Koteeswaran, S. Decision tree based feature selection and multilayer perceptron for sentiment analysis. J. Eng. Appl. Sci. 2015, 10, 5883–5894.

22. Li, W.; Wang, X. Locally Aligned Feature Transforms across Views. In Proceedings of the Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 3594–3601.

23. Li, W.; Zhao, R.; Xiao, T.; Wang, X. DeepReID: Deep Filter Pairing Neural Network for Person Re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 152–159.

24. Zheng, L.; Shen, L.; Tian, L.; Wang, S.; Wang, J.; Tian, Q. Scalable Person Re-identification: A Benchmark. In Proceedings of the International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1116–1124.

25. Gray, D.; Brennan, S.; Tao, H. Evaluating appearance models for recognition, reacquisition, and tracking. In Proceedings of the International Workshop on Performance Evaluation for Tracking and Surveillance (PETS), Rio de Janeiro, Brazil, 14 October 2007; Volume 3.

26. Felzenszwalb, P.F.; Girshick, R.B.; Mcallester, D.; Ramanan, D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1627–1645.

27. Zhao, R.; Ouyang, W.; Wang, X. Learning Mid-level Filters for Person Re-identification. In Proceedings of the Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 144–151.

28. Xiong, F.; Gou, M.; Camps, O.; Sznaier, M. Person Re-Identification Using Kernel-Based Metric Learning Methods. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 1–16.

29. Ahmed, E.; Jones, M.; Marks, T.K. An improved deep learning architecture for person re-identification. In Proceedings of the International Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 25–34.

30. Chen, S.Z.; Guo, C.C.; Lai, J.H. Deep Ranking for Person Re-Identification via Joint Representation Learning. Trans. Image Process. 2016, 25, 2353–2367.

31. Yang, X.; Wang, M.; Tao, D. Person re-identification with metric learning using privileged information. IEEE Trans. Image Process. 2017, 27, 791–805.

32. Wei, L.; Zhu, X.; Gong, S. Harmonious Attention Network for Person Re-Identification. In Proceedings of the International Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1–10.

33. Guo, Y.; Tao, D.; Yu, J.; Li, Y. Deep Similarity Feature Learning for Person Re-Identification; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 386–396.

34. Liao, S.; Hu, Y.; Zhu, X.; Li, S.Z. Person re-identification by Local Maximal Occurrence representation and metric learning. In Proceedings of the Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2197–2206.

35. Song, C.; Huang, Y.; Ouyang, W.; Wang, L. Mask-Guided Contrastive Attention Model for Person Re-identification. In Proceedings of the International Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1179–1188.

36. Song, B.; Xiang, B.; Qi, T. Scalable Person Re-identification on Supervised Smoothed Manifold. In Proceedings of the International Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2530–2539.

37. Sun, Y.; Zheng, L.; Yang, Y.; Tian, Q.; Wang, S. Beyond part models: Person retrieval with refined part pooling (and a strong convolutional baseline). In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 480–496.

38. Yang, W.; Huang, H.; Zhang, Z.; Chen, X.; Huang, K.; Zhang, S. Towards rich feature discovery with class activation maps augmentation for person re-identification. In Proceedings of the International Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1389–1398.

39. Zheng, M.; Karanam, S.; Wu, Z.; Radke, R.J. Re-Identification With Consistent Attentive Siamese Networks. In Proceedings of the International Conference on Computer Vision and Pattern Recognition, Seoul, Korea, 27 October–2 November 2019; pp. 5728–5737.

40. Guo, J.; Yuan, Y.; Huang, L.; Zhang, C.; Yao, J.G.; Han, K. Beyond Human Parts: Dual Part-Aligned Representations for Person Re-Identification. In Proceedings of the International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 3641–3650.

41. Zhang, Z.; Lan, C.; Zeng, W.; Chen, Z. Densely Semantically Aligned Person Re-Identification. In Proceedings of the International Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 667–676.

42. Wu, L.; Shen, C.; Hengel, A.V.D. PersonNet: Person Re-identification with Deep Convolutional Neural Networks. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1–7.

43. Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2242–2251.

44. Wu, L.; Shen, C.; Hengel, A.V.D. Deep linear discriminant analysis on fisher networks: A hybrid architecture for person re-identification. Pattern Recognit. 2016, 65, 238–250.

45. Liu, H.; Feng, J.; Qi, M.; Jiang, J.; Yan, S. End-to-End Comparative Attention Networks for Person Re-identification. Trans. Image Process. 2017, 26, 3492–3506.

46. Liu, J.; Ni, B.; Yan, Y.; Zhou, P.; Cheng, S.; Hu, J. Pose transferrable person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4099–4108.

47. Zhao, F.; Liao, S.; Xie, G.S.; Zhao, J.; Zhang, K.; Shao, L. Unsupervised domain adaptation with noise resistible mutual-training for person re-identification. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 526–544.

48. Jin, X.; Lan, C.; Zeng, W.; Chen, Z. Global distance-distributions separation for unsupervised person re-identification. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 735–751.

49. Zhai, Y.; Ye, Q.; Lu, S.; Jia, M.; Ji, R.; Tian, Y. Multiple expert brainstorming for domain adaptive person re-identification. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 594–611.

50. Miao, J.; Wu, Y.; Liu, P.; Ding, Y.; Yang, Y. Pose-Guided Feature Alignment for Occluded Person Re-Identification. In Proceedings of the International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 542–551.

51. Kalayeh, M.M.; Basaran, E.; Gokmen, M.; Kamasak, M.E.; Shah, M. Human Semantic Parsing for Person Re-identification. In Proceedings of the International Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1062–1071.

52. Wang, J.; Zhu, X.; Gong, S.; Wei, L. Transferable Joint Attribute-Identity Deep Learning for Unsupervised Person Re-Identification. In Proceedings of the Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 719–728.

53. Ding, S.; Lin, L.; Wang, G.; Chao, H. Deep feature learning with relative distance comparison for person re-identification. Pattern Recognit. 2015, 48, 2993–3003.

54. Wang, J.; Wang, Z.; Gao, C.; Sang, N. DeepList: Learning Deep Features with Adaptive Listwise Constraint for Person Re-identification. Trans. Circuits Syst. Video Technol. 2017, 27, 513–524.

55. Luo, P.; Zhang, R.; Ren, J.; Peng, Z.; Li, J. Switchable normalization for learning-to-normalize deep representation. Trans. Pattern Anal. Mach. Intell. 2019, 43, 712–728.

56. Lan, H.; Chan, Y.; Xu, K.; Schmidt, B.; Peng, S.; Liu, W. Parallel Algorithms for Large-Scale Biological Sequence Alignment on Xeon-Phi Based Clusters; BMC Bioinformatics: London, UK, 2016; Volume 17, pp. 11–23.

57. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).