A Sequential and Intensive Weighted Language Modeling Scheme for Multi-Task Learning-Based Natural Language Understanding

Abstract

1. Introduction

2. Related Work

3. Methodology

3.1. Multi-Task Learning (MTL)

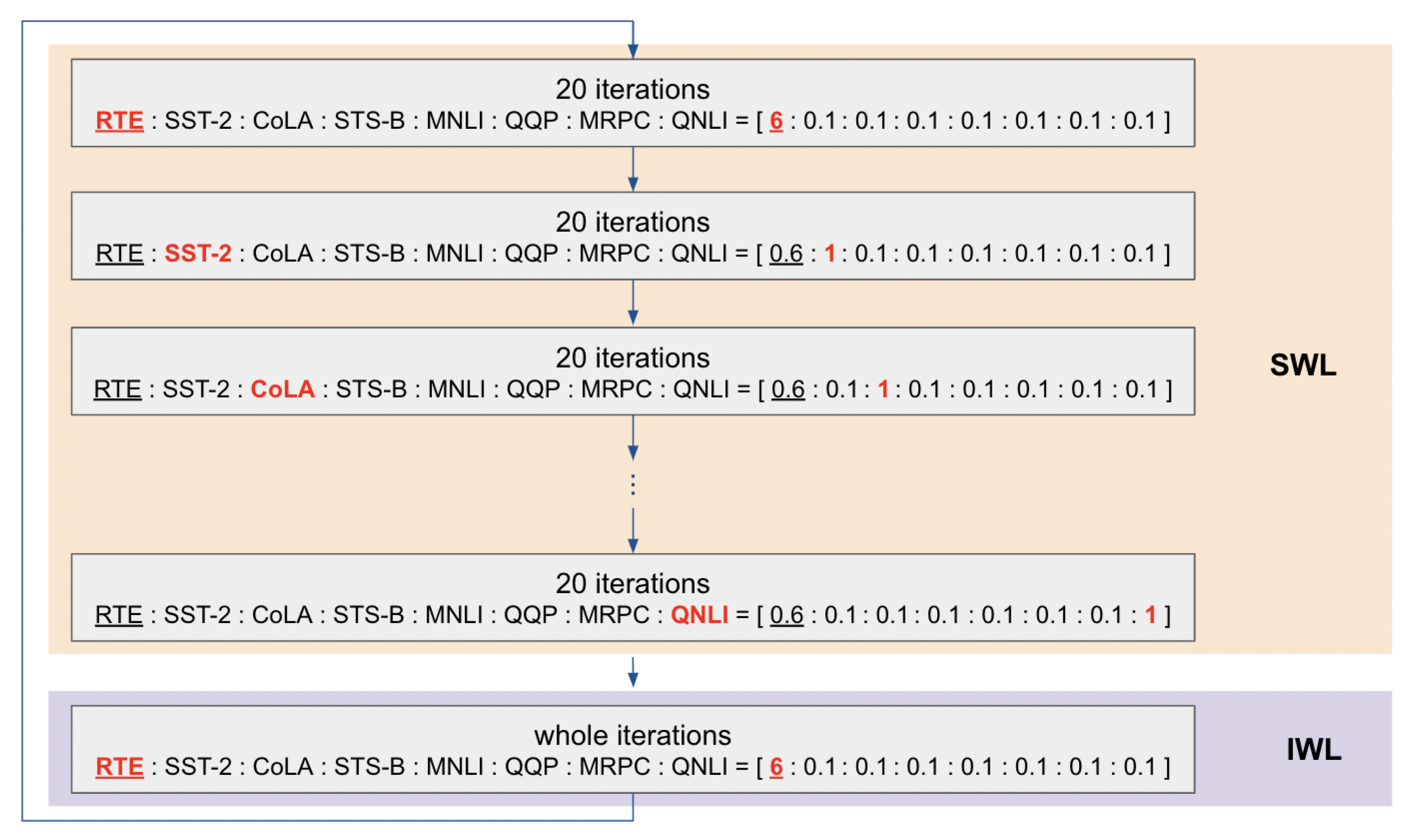

3.2. Sequential and Intensive Weighted Language Modeling (SIWLM)

Algorithm 1. Sequential and intensive weighted learning.

3.3. Fine-Tuning

4. Experiments

4.1. Datasets and Metrics

4.1.1. Single-Sentence Classification

4.1.2. Pairwise Text Similarity

4.1.3. Pairwise Text Classification

4.1.4. Pairwise Ranking

4.2. Experiment Setting

4.2.1. Competing Models

- BERT: The uncased BERT-base model with an additional task-specific layer. We fine-tune the pretrained model on each GLUE dataset.

- BAM: A model that performs MTL using knowledge distillation and achieves high performance on the GLUE benchmark.

- MT-DNN: An MTL model that uses a pretrained BERT as an encoder and learns each task in parallel.

- SMART: A model that outperforms MT-DNN in many NLP tasks by applying a new fine-tuning technique to MT-DNN.

- MTDNN-SIWLM: An MT-DNN model that uses our SIWLM scheme.
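
The MTL models listed above combine several per-task losses during training. As a minimal sketch, and not the authors' implementation of Algorithm 1, the snippet below shows one way a task weight could scale the loss of a single task while every other task keeps a weight of 1. The helper name `weighted_mtl_loss`, the idea of one "central" task per step, and the example loss values are all assumptions made for illustration.

```python
from typing import Dict


def weighted_mtl_loss(task_losses: Dict[str, float],
                      central_task: str,
                      task_weight: float) -> float:
    """Sum per-task losses, scaling only the central task's loss.

    Hypothetical helper: this weighting rule is an assumption made for
    illustration, not the procedure defined in the paper.
    """
    if central_task not in task_losses:
        raise KeyError(f"unknown task: {central_task}")
    total = 0.0
    for task, loss in task_losses.items():
        # Every task contributes with weight 1 except the central one.
        weight = task_weight if task == central_task else 1.0
        total += weight * loss
    return total


# Made-up mini-batch losses for three GLUE tasks (illustrative only).
losses = {"MNLI": 0.50, "RTE": 0.25, "CoLA": 0.25}
print(weighted_mtl_loss(losses, central_task="RTE", task_weight=3.0))
```

With a task weight of 1 the function reduces to a plain sum of the losses, which corresponds to treating all tasks equally.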

4.2.2. Implementation Details

5. Results and Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

1. Jang, B.; Kim, M.; Harerimana, G.; Kang, S.u.; Kim, J.W. Bi-LSTM model to increase accuracy in text classification: Combining Word2vec CNN and attention mechanism. Appl. Sci. 2020, 10, 5841.

2. Wang, A.; Singh, A.; Michael, J.; Hill, F.; Levy, O.; Bowman, S.R. GLUE: A multi-task benchmark and analysis platform for natural language understanding. arXiv 2018, arXiv:1804.07461.

3. Peters, M.E.; Neumann, M.; Iyyer, M.; Gardner, M.; Clark, C.; Lee, K.; Zettlemoyer, L. Deep contextualized word representations. arXiv 2018, arXiv:1802.05365.

4. Liu, X.; He, P.; Chen, W.; Gao, J. Multi-task deep neural networks for natural language understanding. arXiv 2019, arXiv:1901.11504.

5. Clark, K.; Luong, M.T.; Khandelwal, U.; Manning, C.D.; Le, Q.V. BAM! Born-again multi-task networks for natural language understanding. arXiv 2019, arXiv:1907.04829.

6. Yang, Y.; Gao, R.; Tang, Y.; Antic, S.L.; Deppen, S.; Huo, Y.; Sandler, K.L.; Massion, P.P.; Landman, B.A. Internal-transfer weighting of multi-task learning for lung cancer detection. In Medical Imaging 2020: Image Processing; International Society for Optics and Photonics: Bellingham, WA, USA, 2020; Volume 11313, p. 1131323.

7. Jiang, H.; He, P.; Chen, W.; Liu, X.; Gao, J.; Zhao, T. SMART: Robust and efficient fine-tuning for pre-trained natural language models through principled regularized optimization. arXiv 2019, arXiv:1911.03437.

8. Lee, C.; Yang, K.; Whang, T.; Park, C.; Matteson, A.; Lim, H. Exploring the Data Efficiency of Cross-Lingual Post-Training in Pretrained Language Models. Appl. Sci. 2021, 11, 1974.

9. Jwa, H.; Oh, D.; Park, K.; Kang, J.M.; Lim, H. exBAKE: Automatic fake news detection model based on bidirectional encoder representations from transformers (BERT). Appl. Sci. 2019, 9, 4062.

10. Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. arXiv 2019, arXiv:1910.10683.

11. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

12. Yang, Z.; Dai, Z.; Yang, Y.; Carbonell, J.; Salakhutdinov, R.; Le, Q.V. XLNet: Generalized autoregressive pretraining for language understanding. arXiv 2019, arXiv:1906.08237.

13. Wang, W.; Bi, B.; Yan, M.; Wu, C.; Bao, Z.; Xia, J.; Peng, L.; Si, L. StructBERT: Incorporating language structures into pre-training for deep language understanding. arXiv 2019, arXiv:1908.04577.

14. Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A robustly optimized BERT pretraining approach. arXiv 2019, arXiv:1907.11692.

15. He, P.; Liu, X.; Gao, J.; Chen, W. DeBERTa: Decoding-enhanced BERT with disentangled attention. arXiv 2020, arXiv:2006.03654.

16. Zhang, Y.; Yang, Q. A survey on multi-task learning. arXiv 2017, arXiv:1707.08114.

17. Guo, H.; Pasunuru, R.; Bansal, M. Soft layer-specific multi-task summarization with entailment and question generation. arXiv 2018, arXiv:1805.11004.

18. Ruder, S.; Bingel, J.; Augenstein, I.; Søgaard, A. Latent multi-task architecture learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 4822–4829.

19. Ruder, S. An overview of multi-task learning in deep neural networks. arXiv 2017, arXiv:1706.05098.

20. Sun, Y.; Wang, S.; Li, Y.K.; Feng, S.; Tian, H.; Wu, H.; Wang, H. ERNIE 2.0: A Continual Pre-Training Framework for Language Understanding. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 8968–8975.

21. Bingel, J.; Søgaard, A. Identifying beneficial task relations for multi-task learning in deep neural networks. arXiv 2017, arXiv:1702.08303.

22. Sanh, V.; Wolf, T.; Ruder, S. A hierarchical multi-task approach for learning embeddings from semantic tasks. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 6949–6956.

23. Wu, S.; Zhang, H.R.; Ré, C. Understanding and Improving Information Transfer in Multi-Task Learning. arXiv 2020, arXiv:2005.00944.

24. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. arXiv 2017, arXiv:1706.03762.

25. Warstadt, A.; Singh, A.; Bowman, S.R. Neural network acceptability judgments. Trans. Assoc. Comput. Linguist. 2019, 7, 625–641.

26. Socher, R.; Perelygin, A.; Wu, J.; Chuang, J.; Manning, C.D.; Ng, A.Y.; Potts, C. Recursive deep models for semantic compositionality over a sentiment treebank. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, Seattle, WA, USA, 18–21 October 2013; pp. 1631–1642.

27. Cer, D.; Diab, M.; Agirre, E.; Lopez-Gazpio, I.; Specia, L. SemEval-2017 task 1: Semantic textual similarity-multilingual and cross-lingual focused evaluation. arXiv 2017, arXiv:1708.00055.

28. Williams, A.; Nangia, N.; Bowman, S.R. A broad-coverage challenge corpus for sentence understanding through inference. arXiv 2017, arXiv:1704.05426.

29. Dagan, I.; Glickman, O.; Magnini, B. The PASCAL recognising textual entailment challenge. In Machine Learning Challenges Workshop; Springer: Berlin/Heidelberg, Germany, 2005; pp. 177–190.

30. Haim, R.B.; Dagan, I.; Dolan, B.; Ferro, L.; Giampiccolo, D.; Magnini, B.; Szpektor, I. The second PASCAL recognising textual entailment challenge. In Proceedings of the Second PASCAL Challenges Workshop on Recognising Textual Entailment, Venezia, Italy, 10 April 2006.

31. Giampiccolo, D.; Magnini, B.; Dagan, I.; Dolan, B. The third PASCAL recognizing textual entailment challenge. In Proceedings of the ACL-PASCAL Workshop on Textual Entailment and Paraphrasing, Prague, Czech Republic, 28–29 June 2007; pp. 1–9.

32. Bentivogli, L.; Clark, P.; Dagan, I.; Giampiccolo, D. The Fifth PASCAL Recognizing Textual Entailment Challenge. In Proceedings of the Text Analysis Conference, Gaithersburg, MD, USA, 16–17 November 2009.

33. Dolan, W.B.; Brockett, C. Automatically constructing a corpus of sentential paraphrases. In Proceedings of the Third International Workshop on Paraphrasing (IWP2005), Jeju Island, Korea, 14 October 2005.

34. Rajpurkar, P.; Zhang, J.; Lopyrev, K.; Liang, P. SQuAD: 100,000+ questions for machine comprehension of text. arXiv 2016, arXiv:1606.05250.

35. Kochkina, E.; Liakata, M.; Zubiaga, A. All-in-one: Multi-task learning for rumour verification. arXiv 2018, arXiv:1806.03713.

36. Majumder, N.; Poria, S.; Peng, H.; Chhaya, N.; Cambria, E.; Gelbukh, A. Sentiment and sarcasm classification with multitask learning. IEEE Intell. Syst. 2019, 34, 38–43.

37. Crichton, G.; Pyysalo, S.; Chiu, B.; Korhonen, A. A neural network multi-task learning approach to biomedical named entity recognition. BMC Bioinform. 2017, 18, 368.

38. Wang, A.; Pruksachatkun, Y.; Nangia, N.; Singh, A.; Michael, J.; Hill, F.; Levy, O.; Bowman, S.R. SuperGLUE: A stickier benchmark for general-purpose language understanding systems. arXiv 2019, arXiv:1905.00537.

| Dataset | Number of Training Examples |
|---|---|
| MNLI | 393 k |
| QQP | 364 k |
| QNLI | 108 k |
| SST-2 | 67 k |
| CoLA | 8.5 k |
| STS-B | 7 k |
| MRPC | 3.7 k |
| RTE | 2.5 k |
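
The training sets differ in size by more than two orders of magnitude (393 k examples for MNLI versus 2.5 k for RTE). As a rough illustration of what this imbalance implies, the sketch below computes each task's share of the combined training examples. Pooling all datasets and drawing examples in proportion to dataset size is an assumption made here for illustration, and the helper name `task_shares` is hypothetical.

```python
# Training-set sizes in thousands of examples, copied from the table above.
TRAIN_SIZES_K = {
    "MNLI": 393, "QQP": 364, "QNLI": 108, "SST-2": 67,
    "CoLA": 8.5, "STS-B": 7, "MRPC": 3.7, "RTE": 2.5,
}


def task_shares(sizes):
    """Return each task's fraction of the pooled training examples."""
    total = sum(sizes.values())
    return {task: n / total for task, n in sizes.items()}


shares = task_shares(TRAIN_SIZES_K)
for task, share in shares.items():
    # Print the share as a percentage with two decimals.
    print(f"{task:6s} {share:6.2%}")
```

Under this assumption MNLI alone accounts for roughly 41% of the pooled examples, while RTE contributes less than 0.3%, which is one reason small tasks can be under-served when all tasks are treated equally.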

| Task Weight | MNLI-m/mm Acc | QQP Acc/F1 | QNLI Acc | SST-2 Acc | CoLA Acc/Mcc | STS-B P/S Corr | MRPC Acc/F1 | RTE Acc |
|---|---|---|---|---|---|---|---|---|
| 1 | 84.65/84.30 | 90.61/87.36 | 91.01 | 92.54 | 80.92/52.65 | 88.01/87.97 | 87.00/90.20 | 76.17 |
| 3 | 84.52/84.35 | 90.76/87.56 | 91.23 | 92.54 | 81.20/53.39 | 88.05/88.16 | 86.76/90.14 | 75.81 |
| 6 | 84.34/84.60 | 90.80/87.61 | 91.14 | 92.88 | 81.11/53.18 | 87.83/87.90 | 86.76/90.21 | 77.25 |
| 9 | 84.51/84.55 | 90.73/87.49 | 91.36 | 92.66 | 80.72/52.06 | 88.02/88.20 | 87.99/90.90 | 77.61 |
| 12 | 84.37/84.46 | 90.79/87.61 | 91.06 | 92.77 | 81.30/53.64 | 87.91/87.99 | 87.99/91.04 | 77.25 |
| 15 | 84.52/84.54 | 90.59/87.70 | 91.15 | 92.20 | 80.92/52.69 | 87.83/87.98 | 87.01/90.31 | 77.98 |
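
No single task weight is best for every task in the table above. The snippet below is a small sketch, not part of the authors' pipeline, that looks up which task weight gives the highest accuracy for the three smallest datasets. The accuracy values are copied from the table; the dictionary layout and the helper `best_weights` are hypothetical.

```python
# Accuracy per task weight for the three smallest datasets,
# copied from the Acc entries of the table above.
ACCURACY = {
    "RTE":  {1: 76.17, 3: 75.81, 6: 77.25, 9: 77.61, 12: 77.25, 15: 77.98},
    "MRPC": {1: 87.00, 3: 86.76, 6: 86.76, 9: 87.99, 12: 87.99, 15: 87.01},
    "CoLA": {1: 80.92, 3: 81.20, 6: 81.11, 9: 80.72, 12: 81.30, 15: 80.92},
}


def best_weights(scores):
    """Return every task weight that reaches the top score (ties kept)."""
    top = max(scores.values())
    return sorted(w for w, s in scores.items() if s == top)


for task, scores in ACCURACY.items():
    print(task, best_weights(scores))
```

For these rows the best weights are 15 for RTE, 9 and 12 (tied) for MRPC, and 12 for CoLA, so the preferred weight depends on the task.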

| Model | MNLI-m/mm Acc | QQP Acc | QNLI Acc | SST-2 Acc | CoLA Mcc | STS-B Avg | MRPC Acc | RTE Acc |
|---|---|---|---|---|---|---|---|---|
| BERT [11] | 84.1 | 90.8 | 91.2 | 93.0 | 52.8 | 83.7 | 86.5 | 67.1 |
| BAM [5] | 84.9 | 91.0 | 91.4 | 92.8 | 56.3 | 86.9 | 88.5 | 82.7 |
| MT-DNN [4] | 84.0 | 89.5 | 91.4 | 92.1 | 53.1 | 89.6 | 89.7 | 78.7 |
| SMART [7] | 84.9 | 91.4 | 91.8 | 91.6 | 58.5 | 85.7 | 87.2 | 79.7 |
| MTDNN-SIWLM | 84.8 | 91.5 | 92.0 | 93.2 | 57.0 | 86.7 | 90.4 | 79.0 |

| Model | MNLI-m/mm Acc | QQP Acc/F1 | QNLI Acc | SST-2 Acc | CoLA Mcc | STS-B P/S Corr | MRPC Acc/F1 | RTE Acc |
|---|---|---|---|---|---|---|---|---|
| MT-DNN [4] | 84.2/83.0 | 71.4/89.1 | 90.9 | 93.1 | 50.1 | 87.4/86.8 | 86.4/89.8 | 75.5 |
| SMART [7] | 84.7/83.2 | 89.5/71.6 | 91.7 | 92.5 | 55.2 | 82.6/81.0 | 86.6/89.8 | 72.7 |
| MTDNN-SIWLM | 84.8/84.0 | 89.4/71.7 | 91.7 | 93.8 | 53.1 | 83.8/82.3 | 90.7/87.6 | 77.1 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Son, S.; Hwang, S.; Bae, S.; Park, S.J.; Choi, J.-H. A Sequential and Intensive Weighted Language Modeling Scheme for Multi-Task Learning-Based Natural Language Understanding. Appl. Sci. 2021, 11, 3095. https://doi.org/10.3390/app11073095
