Enhanced K-Nearest Neighbor for Intelligent Fault Diagnosis of Rotating Machinery

Abstract

1. Introduction

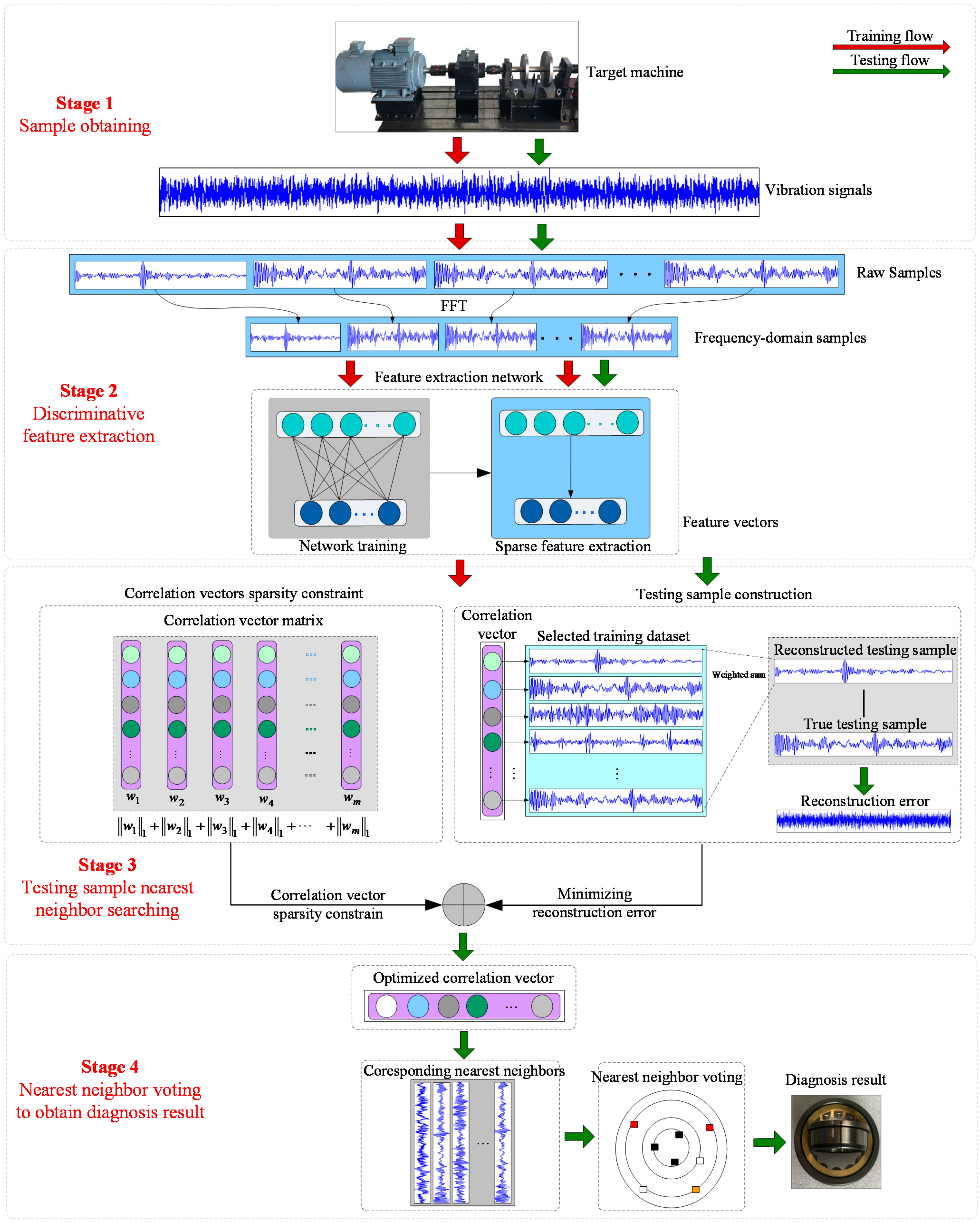

- (1) For bearing fault diagnosis, a novel four-stage data-driven fault diagnosis method is developed, which combines the advantages of parameter-based and case-based methods and addresses the shortcomings of common KNN-based fault diagnosis methods. For feature extraction, it uses a sparse feature extraction method to extract discriminative features. For feature classification, inspired by sparse coding [36], a novel method called EKNN is proposed to locate nearest neighbors automatically.

- (2) EKNN can also use the training dataset in the testing stage, which retains the advantages of case-based methods in feature classification. When new faults occur, EKNN can fuse the related data with the training dataset to train a new diagnosis network suitable for all faults.

- (3) To solve the nearest neighbor location problem in common KNN-based fault diagnosis methods, EKNN uses a reconstruction method to determine the nearest neighbors of each testing sample. It reconstructs each testing sample from the training samples and obtains the correlation vector of that sample in the process, which distinguishes it from existing methods.
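The reconstruction idea in contribution (3) can be sketched roughly as follows. This is an illustration under stated assumptions, not the formulation used in the paper: it assumes the correlation vector comes from an L1-regularized least-squares reconstruction of a testing feature from the training features, solved here with plain ISTA (iterative soft thresholding). The names `correlation_vector`, `eknn_predict`, `rho1`, and the vote by summed absolute weights per class are hypothetical choices made for this example.

```python
import numpy as np

def soft_threshold(v, t):
    """Element-wise soft thresholding, the proximal operator of the L1 norm."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def correlation_vector(D, x, rho1=0.1, n_iter=500):
    """Approximately minimize 0.5*||x - D a||^2 + rho1*||a||_1 with ISTA.

    D: (d, n) matrix whose columns are training features (assumed layout).
    x: (d,) testing feature. Returns the (n,) weight vector a.
    """
    step = 1.0 / (np.linalg.norm(D, 2) ** 2)  # 1 / Lipschitz constant of the gradient
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        grad = D.T @ (D @ a - x)              # gradient of the quadratic term
        a = soft_threshold(a - step * grad, step * rho1)
    return a

def eknn_predict(D, labels, x, rho1=0.1):
    """Vote with the reconstruction weights: the class with the largest total weight wins."""
    a = correlation_vector(D, x, rho1)
    classes = np.unique(labels)
    scores = np.array([np.abs(a[labels == c]).sum() for c in classes])
    return classes[np.argmax(scores)], a

# Toy data (made up for illustration): two classes clustered around two axes.
rng = np.random.default_rng(0)
centers = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
D = np.hstack([c[:, None] + 0.05 * rng.standard_normal((3, 5)) for c in centers])
labels = np.repeat([0, 1], 5)
x = np.array([0.02, 0.98, 0.01])              # testing sample close to class 1

pred, a = eknn_predict(D, labels, x)
print(pred)
```

Because the L1 penalty drives most weights to zero, the number of contributing neighbors is determined by the reconstruction itself rather than by a fixed K, which is the property the text refers to as automatic nearest neighbor location.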

2. Principal Knowledge

2.1. Sparse Filtering

- (1) Feature extraction via Equation (1);

- (2) Feature matrix normalization with the L2 norm by Equation (2);

- (3) Optimization by minimizing the L1 norm in the objective function, namely Equation (3), to maximize the sparsity of the normalized feature matrix. The weight matrix updates of SF can be derived step by step by backpropagation.
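The three steps above can be sketched in a few lines of NumPy. The sketch follows the standard sparse filtering formulation of Ngiam et al. (soft-absolute activation, normalization of each feature across samples, L2 normalization of each sample, then an L1 objective); Equations (1) to (3) of this paper are not reproduced here, so the exact activation and the small constant `eps` are assumptions, and the function name `sparse_filtering_objective` is hypothetical.

```python
import numpy as np

def sparse_filtering_objective(W, X, eps=1e-8):
    """Return the sparse filtering objective and the normalized feature matrix.

    W: (N1, n_in) weight matrix; X: (n_in, M) samples stored as columns.
    """
    # Step 1: feature extraction with a soft-absolute activation.
    F = np.sqrt((W @ X) ** 2 + eps)
    # Normalize each feature (row) across all samples.
    F = F / np.linalg.norm(F, axis=1, keepdims=True)
    # Step 2: L2-normalize each sample (column) so it lies on the unit sphere.
    F = F / np.linalg.norm(F, axis=0, keepdims=True)
    # Step 3: the L1 norm of the normalized features is the quantity to minimize.
    return np.abs(F).sum(), F

# Random data used only to exercise the function.
rng = np.random.default_rng(0)
X = rng.standard_normal((20, 50))   # 50 samples with 20 inputs each
W = rng.standard_normal((8, 20))    # N1 = 8 features
objective, F = sparse_filtering_objective(W, X)
print(objective)
```

In practice the objective would be passed to a gradient-based optimizer to update `W`; only the forward computation is shown here.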

2.2. Sparse Coding

3. Proposed Method

3.1. Stage 1: Sample Obtaining

3.2. Stage 2: Discriminative Feature Extraction

3.3. Stage 3: Nearest Neighbor Searching

3.4. Stage 4: Nearest Neighbor Voting to Obtain Diagnosis Result

4. Fault Diagnosis Case Investigation Utilizing the Proposed EKNN

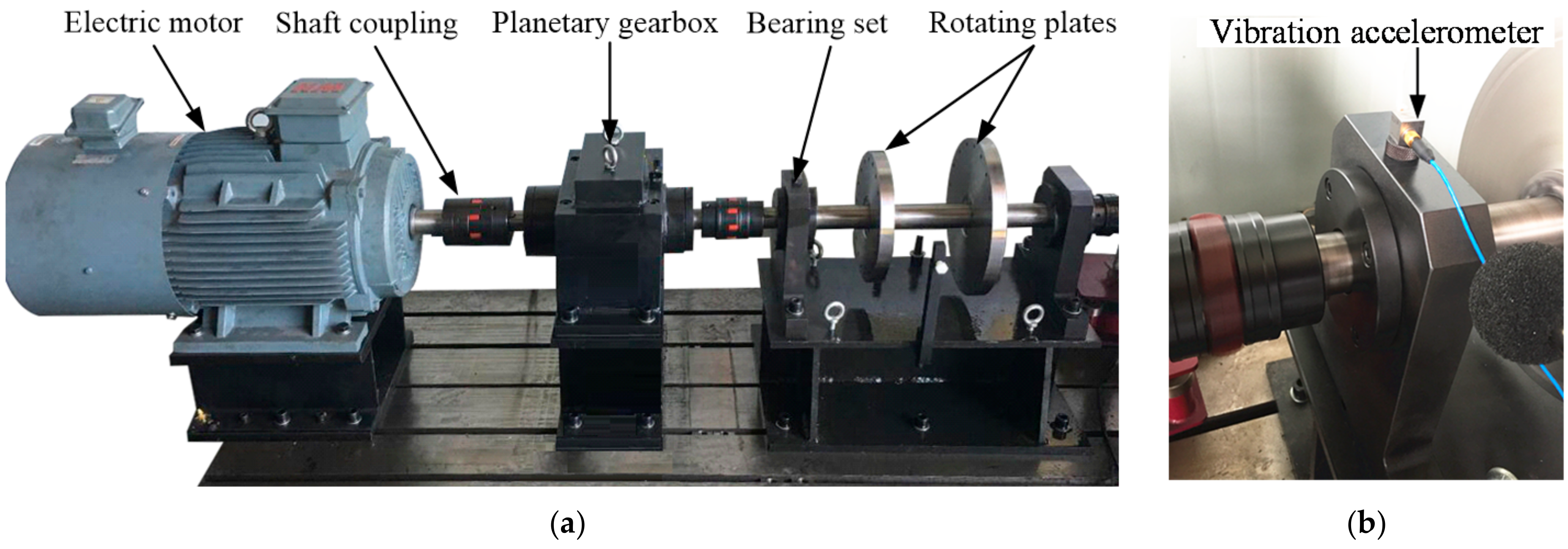

4.1. Dataset Description

- (1) Normal condition, denoted as NO;

- (2) Three inner race faults with different fault severities (0.2, 0.6 and 1.2 mm), denoted as IF02, IF06 and IF12, respectively;

- (3) Three outer race faults with different fault severities (0.2, 0.6 and 1.2 mm), denoted as OF02, OF06 and OF12, respectively;

- (4) Three roller faults with different fault severities (0.2, 0.6 and 1.2 mm), denoted as BF02, BF06 and BF12, respectively.
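As a small illustration, the ten health conditions listed above can be generated from the fault type and severity. The integer indices assigned in `CLASS_INDEX` are an assumption made for this example; the source does not state how classes are encoded.

```python
SEVERITIES_MM = (0.2, 0.6, 1.2)
FAULT_PREFIXES = ("IF", "OF", "BF")  # inner race, outer race, roller

def severity_code(mm):
    """Map a fault size in mm to the two-digit code used in the labels, e.g. 0.2 -> '02'."""
    return f"{round(mm * 10):02d}"

CLASS_NAMES = ["NO"] + [
    prefix + severity_code(mm) for prefix in FAULT_PREFIXES for mm in SEVERITIES_MM
]
# Hypothetical integer encoding of the class names, in listing order.
CLASS_INDEX = {name: i for i, name in enumerate(CLASS_NAMES)}

print(CLASS_NAMES)
```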

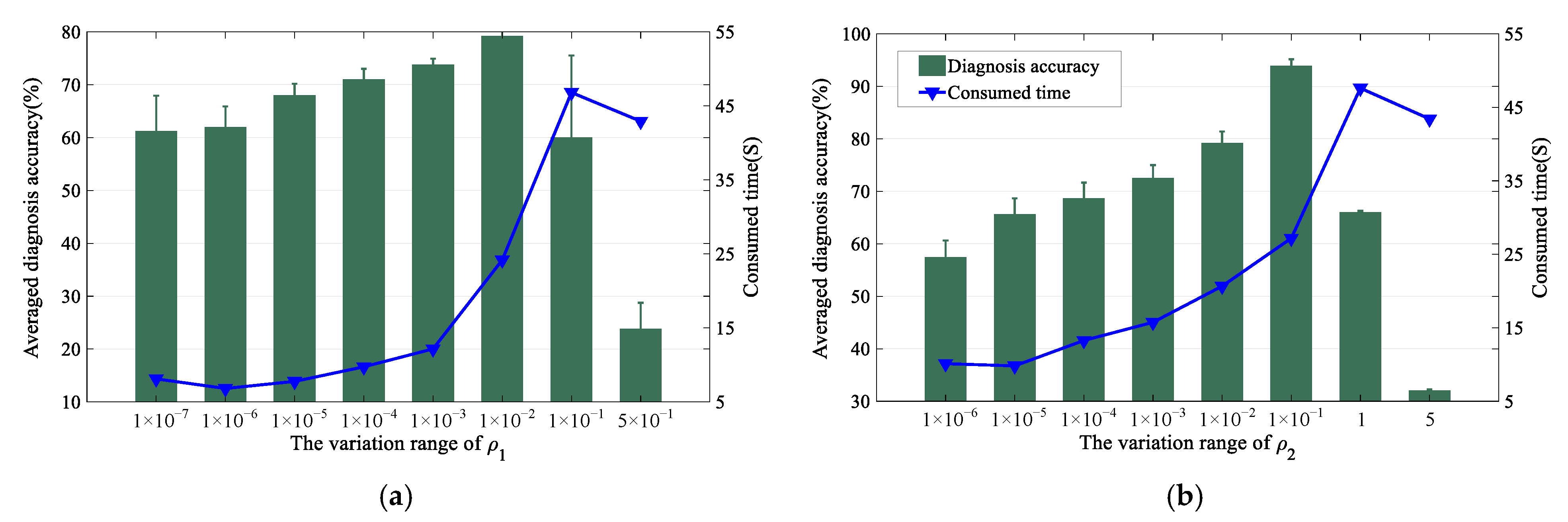

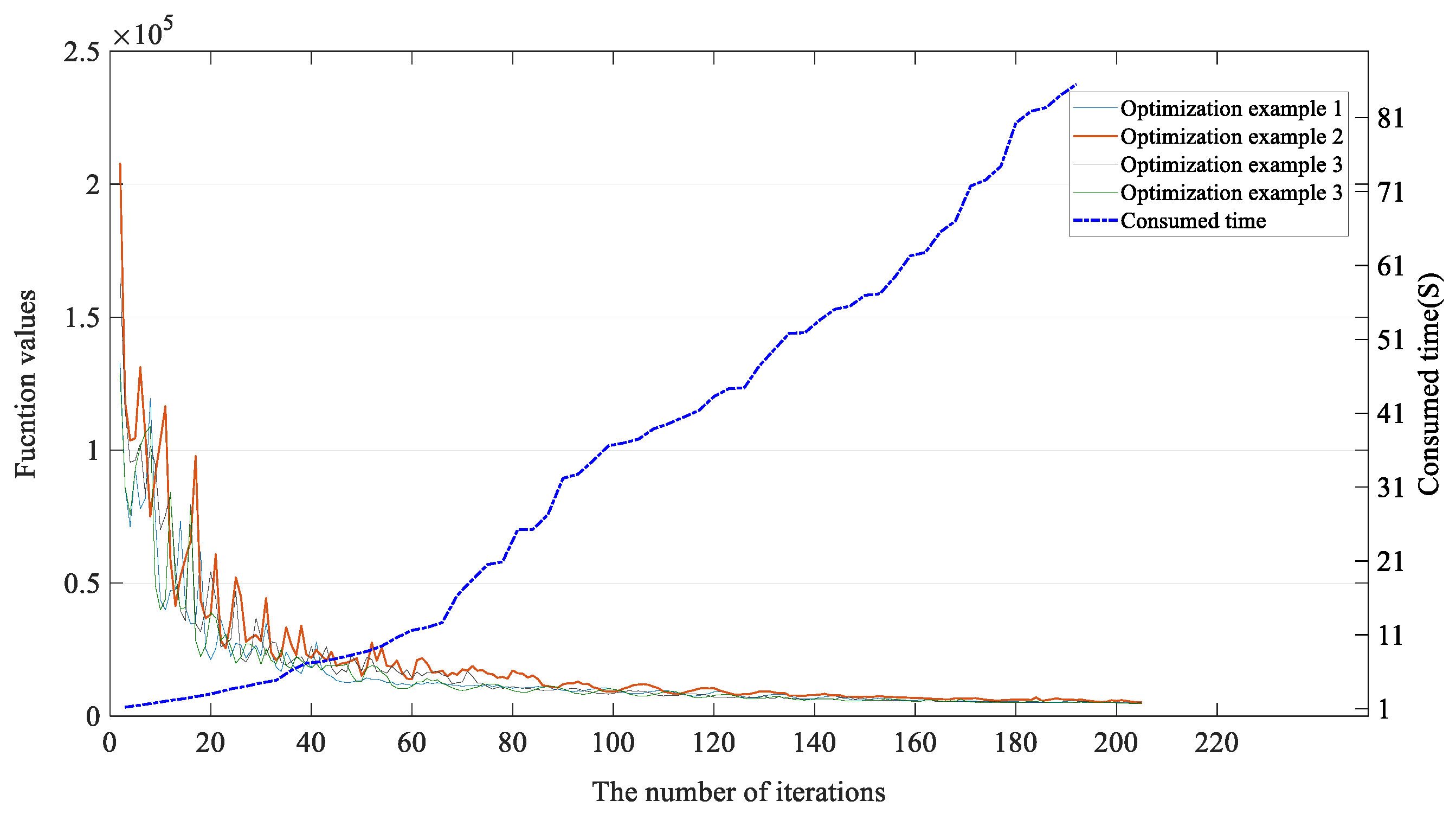

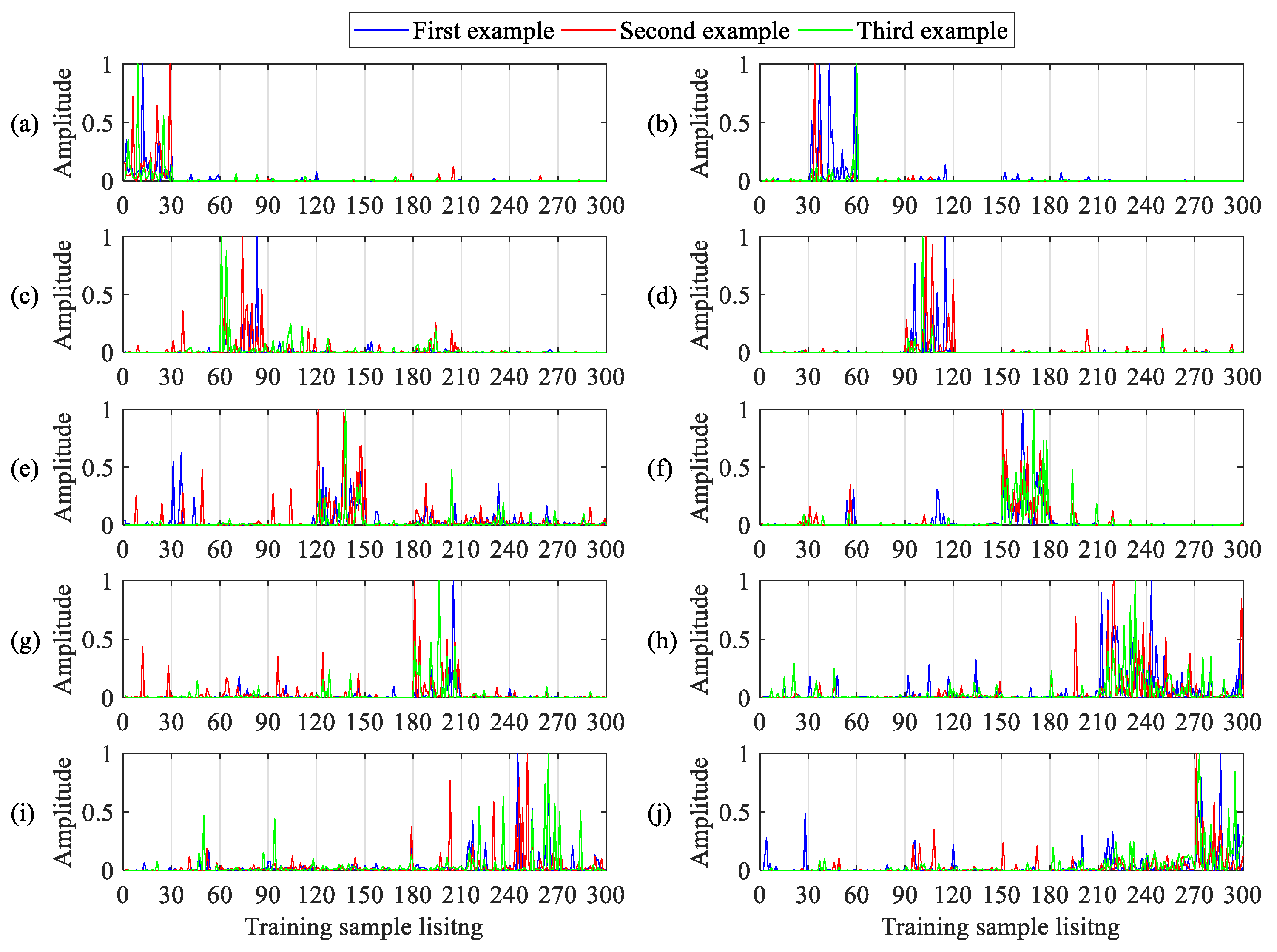

4.2. Parameter Tuning and Sensitivity Investigation

- (1) The feature dimension N1 in the feature layer of SF;

- (2) The regularization parameter ρ1 for the L1 norm in Stage 3;

- (3) The regularization parameter ρ2 for similarity evaluation in Stage 3.

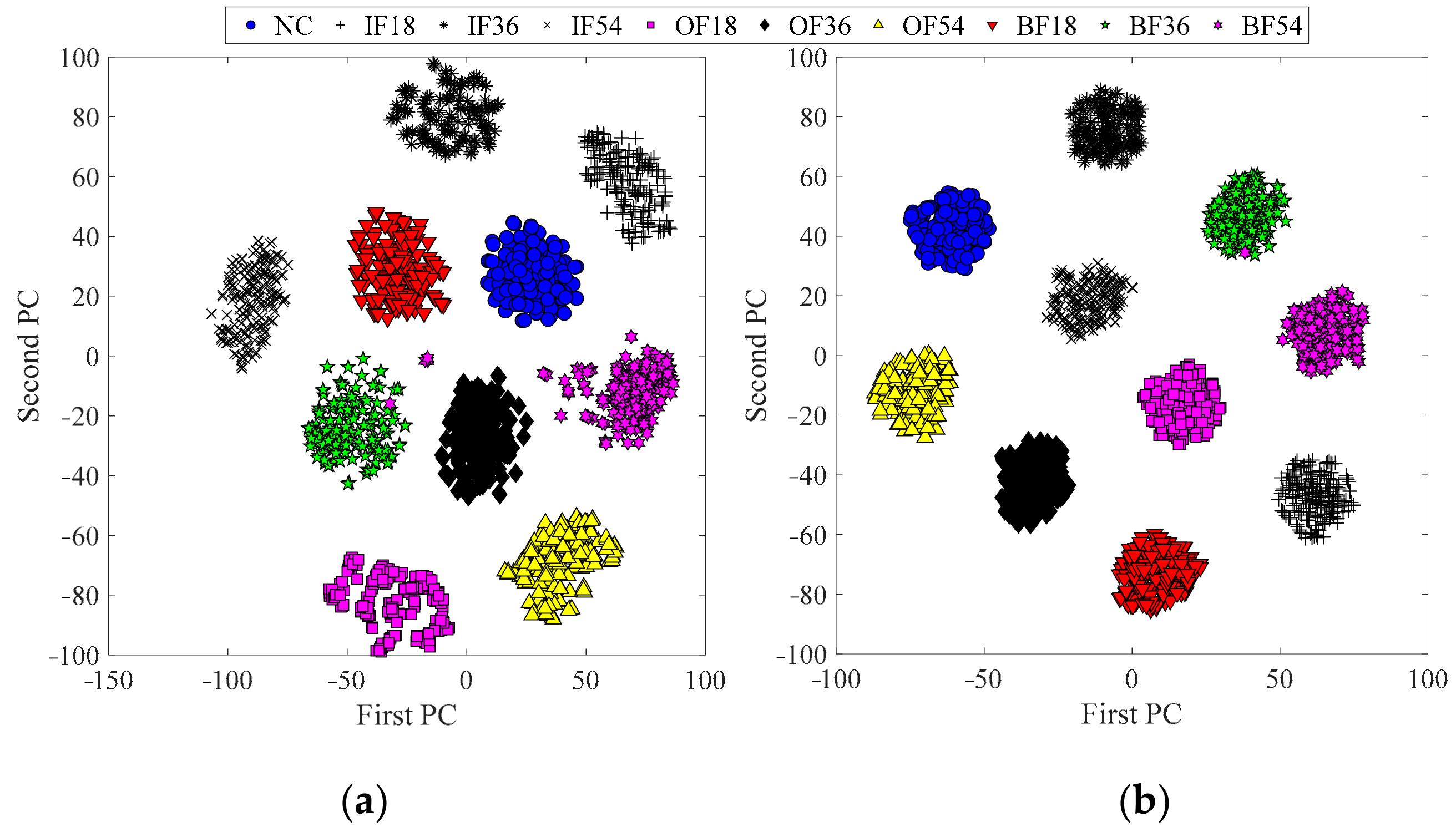

4.3. Diagnosis Results and Comparisons

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Jiang, X.; Li, S.; Wang, Y. Study on nature of crossover phenomenon with application to gearbox fault diagnosis. Mech. Syst. Signal Process. 2017, 83, 272–295.

- Guo, S.; Yang, T.; Gao, W.; Zhang, C. A novel fault diagnosis method for rotating machinery based on a convolutional neural network. Sensors 2018, 18, 1429.

- Liu, J.; Tang, C.; Shao, Y. An innovative dynamic model for vibration analysis of a flexible roller bearing. Mech. Mach. Theory 2019, 135, 27–39.

- Wang, Z.; Zhou, J.; Wang, J.; Du, W.; Wang, J.; Han, X.; He, G. A Novel Fault Diagnosis Method of Gearbox Based on Maximum Kurtosis Spectral Entropy Deconvolution. IEEE Access 2019, 7, 29520–29532.

- Huang, W.; Gao, G.; Li, N.; Jiang, X.; Zhu, Z. Time-Frequency Squeezing and Generalized Demodulation Combined for Variable Speed Bearing Fault Diagnosis. IEEE Trans. Instrum. Meas. 2019, 68, 2819–2829.

- Shen, C.; Wang, D.; Kong, F.; Tse, P.W. Fault diagnosis of rotating machinery based on the statistical parameters of wavelet packet paving and a generic support vector regressive classifier. Measurement 2013, 46, 1551–1564.

- Han, T.; Liu, C.; Yang, W.; Jiang, D. Learning transferable features in deep convolutional neural networks for diagnosing unseen machine conditions. ISA Trans. 2019, 93, 341–353.

- Liu, J.; Hu, Y.; Wang, Y.; Wu, B.; Fan, J.; Hu, Z. An integrated multi-sensor fusion-based deep feature learning approach for rotating machinery diagnosis. Meas. Sci. Technol. 2018, 29, 055103.

- Yang, B.; Lei, Y.; Jia, F.; Xing, S. An intelligent fault diagnosis approach based on transfer learning from laboratory bearings to locomotive bearings. Mech. Syst. Signal Process. 2019, 122, 692–706.

- Lei, Y.; Jia, F.; Lin, J.; Xing, S.; Ding, S.X. An Intelligent Fault Diagnosis Method Using Unsupervised Feature Learning Towards Mechanical Big Data. IEEE Trans. Ind. Electron. 2016, 63, 3137–3147.

- Ngiam, J.; Chen, Z.; Bhaskar, S.A.; Koh, P.W.; Ng, A.Y. Sparse Filtering. In Proceedings of the 24th International Conference on Neural Information Processing Systems (NIPS), Granada, Spain, 12–17 December 2011; pp. 1125–1133.

- Qian, W.; Li, S.; Wang, J.; Wu, Q. A novel supervised sparse feature extraction method and its application on rotating machine fault diagnosis. Neurocomputing 2018, 320, 129–140.

- Qian, W.; Li, S.; Jiang, X. Deep transfer network for rotating machine fault analysis. Pattern Recognit. 2019, 96, 106993.

- Wen, L.; Li, X.; Gao, L.; Zhang, Y. A New Convolutional Neural Network-Based Data-Driven Fault Diagnosis Method. IEEE Trans. Ind. Electron. 2018, 65, 5990–5998.

- Jia, F.; Lei, Y.; Guo, L.; Lin, J.; Xing, S. A neural network constructed by deep learning technique and its application to intelligent fault diagnosis of machines. Neurocomputing 2018, 272, 619–628.

- Liu, R.; Meng, G.; Yang, B.; Sun, C.; Chen, X. Dislocated Time Series Convolutional Neural Architecture: An Intelligent Fault Diagnosis Approach for Electric Machine. IEEE Trans. Ind. Inform. 2017, 13, 1310–1320.

- Shao, H.; Junsheng, C.; Hongkai, J.; Yu, Y.; Zhantao, W. Enhanced deep gated recurrent unit and complex wavelet packet energy moment entropy for early fault prognosis of bearing. Knowl. Based Syst. 2019, 188, 105022.

- Miao, Q.; Zhang, X.; Liu, Z.; Zhang, H. Condition multi-classification and evaluation of system degradation process using an improved support vector machine. Microelectron. Reliab. 2017, 75, 223–232.

- Qian, W.; Li, S.; Yi, P.; Zhang, K. A novel transfer learning method for robust fault diagnosis of rotating machines under variable working conditions. Measurement 2019, 138, 514–525.

- Zhao, R.; Yan, R.; Chen, Z.; Mao, K.; Wang, P.; Gao, R.X. Deep Learning and Its Applications to Machine Health Monitoring: A Survey. arXiv 2016, arXiv:1612.07640.

- Berredjem, T.; Benidir, M. Bearing faults diagnosis using fuzzy expert system relying on an Improved Range Overlaps and Similarity method. Expert Syst. Appl. 2018, 108, 134–142.

- Yuan, K.; Deng, Y. Conflict evidence management in fault diagnosis. Int. J. Mach. Learn. Cybern. 2017, 10, 1–10.

- Shi, P.; Yuan, D.; Han, D.; Zhang, Y.; Fu, R. Stochastic resonance in a time-delayed feedback tristable system and its application in fault diagnosis. J. Sound Vib. 2018, 424, 1–14.

- Imani, M.B.; Heydarzadeh, M.; Khan, L.; Nourani, M. A Scalable Spark-Based Fault Diagnosis Platform for Gearbox Fault Diagnosis in Wind Farms. In Proceedings of the Information Reuse and Integration Conference, San Diego, CA, USA, 4–6 August 2017; IEEE: Piscataway, NJ, USA, 2017.

- Mohapatra, S.; Khilar, P.M.; Swain, R.R. Fault diagnosis in wireless sensor network using clonal selection principle and probabilistic neural network approach. Int. J. Commun. Syst. 2019, 32, e4138.

- Wang, Q.; Liu, Y.B.; He, X.; Liu, S.Y.; Liu, J.H. Fault Diagnosis of Bearing Based on KPCA and KNN Method. Adv. Mater. Res. 2014, 986, 1491–1496.

- Jiang, X.; Li, S. A dual path optimization ridge estimation method for condition monitoring of planetary gearbox under varying-speed operation. Measurement 2016, 94, 630–644.

- Qian, W.; Li, S.; Wang, J.; An, Z.; Jiang, X. An intelligent fault diagnosis framework for raw vibration signals: Adaptive overlapping convolutional neural network. Meas. Sci. Technol. 2018, 29, 095009.

- Gorunescu, F.; Belciug, S. Intelligent Decision Support Systems in Automated Medical Diagnosis. In Advances in Biomedical Informatics; Holmes, D., Jain, L., Eds.; Springer: Cham, Switzerland, 2018; Volume 137, pp. 161–186.

- Du, W.; Zhou, W. An Intelligent Fault Diagnosis Architecture for Electrical Fused Magnesia Furnace Using Sound Spectrum Submanifold Analysis. IEEE Trans. Instrum. Meas. 2018, 67, 2014–2023.

- Melin, P.; Prado-Arechiga, G. New Hybrid Intelligent Systems for Diagnosis and Risk Evaluation of Arterial Hypertension. In Springer Briefs in Applied Sciences and Technology; Springer: Berlin/Heidelberg, Germany, 2018.

- Guo, G.; Wang, H.; Bell, D.A.; Bi, Y.; Greer, K. KNN Model-Based Approach in Classification. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2003.

- Lei, Y.; Zuo, M.J. Gear crack level identification based on weighted K nearest neighbor classification algorithm. Mech. Syst. Signal Process. 2009, 23, 1535–1547.

- Zhang, M.-L.; Zhou, Z.-H. ML-KNN: A lazy learning approach to multi-label learning. Pattern Recognit. 2007, 40, 2038–2048.

- Chen, J.; Fang, H.; Saad, Y. Fast Approximate kNN Graph Construction for High Dimensional Data via Recursive Lanczos Bisection. J. Mach. Learn. Res. 2009, 10, 1989–2012.

- Luo, W.; Liu, W.; Gao, S. A Revisit of Sparse Coding Based Anomaly Detection in Stacked RNN Framework. In Proceedings of the 2017 IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; IEEE: Piscataway, NJ, USA, 2017; Volume 1, pp. 341–349.

- Vlcek, J.; Lukšan, L. Generalizations of the limited-memory BFGS method based on the quasi-product form of update. J. Comput. Appl. Math. 2013, 241, 116–129.

- Pezzotti, N.; Lelieveldt, B.P.F.; Van Der Maaten, L.; Höllt, T.; Eisemann, E.; Vilanova, A. Approximated and User Steerable tSNE for Progressive Visual Analytics. IEEE Trans. Vis. Comput. Graph. 2015, 23, 1739–1752.

| Fault Classes | TP | FP | TN | FN | Precision | Recall |
|---|---|---|---|---|---|---|
| NO | 1000 | 1.25 | 8844.9 | 0 | 0.999 | 1.000 |
| IF02 | 999.95 | 8.5 | 8844.95 | 0.05 | 0.992 | 1.000 |
| IF06 | 998.9 | 3.95 | 8846 | 1.1 | 0.996 | 0.999 |
| IF12 | 999.9 | 7.85 | 8845 | 0.1 | 0.992 | 1.000 |
| OF02 | 999.85 | 6.35 | 8845.05 | 0.15 | 0.994 | 1.000 |
| OF06 | 999.9 | 8.75 | 8845 | 0.1 | 0.991 | 1.000 |
| OF12 | 999.05 | 10 | 8845.85 | 0.95 | 0.990 | 0.999 |
| BF02 | 925.5 | 11.65 | 8919.4 | 75.05 | 0.988 | 0.925 |
| BF06 | 983.95 | 95.65 | 8860.95 | 16.05 | 0.911 | 0.984 |
| BF12 | 937.9 | 1.7 | 8907 | 62.1 | 0.998 | 0.938 |

| Fault Classes | TP | FP | TN | FN | Precision | Recall |
|---|---|---|---|---|---|---|
| NO | 1000 | 0.05 | 8993.1 | 0 | 1.000 | 1.000 |
| IF02 | 1000 | 0.3 | 8993.1 | 0 | 1.000 | 1.000 |
| IF06 | 999.95 | 0.8 | 8993.15 | 0.05 | 0.999 | 1.000 |
| IF12 | 1000 | 0.7 | 8993.1 | 0 | 0.999 | 1.000 |
| OF02 | 999.95 | 0.55 | 8993.15 | 0.05 | 0.999 | 1.000 |
| OF06 | 999.3 | 0.1 | 8993.8 | 0.7 | 1.000 | 0.999 |
| OF12 | 998.9 | 0.3 | 8994.2 | 1.1 | 1.000 | 0.999 |
| BF02 | 999.5 | 4.55 | 8993.6 | 1.2 | 0.995 | 0.999 |
| BF06 | 995.5 | 0.6 | 8997.6 | 5.1 | 0.999 | 0.995 |
| BF12 | 1000 | 0.25 | 8993.1 | 0 | 1.000 | 1.000 |
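The Precision and Recall columns follow from the TP, FP and FN counts through the usual definitions, precision = TP / (TP + FP) and recall = TP / (TP + FN). The short check below recomputes two rows from the tables above; the function name `precision_recall` is chosen for this example only.

```python
def precision_recall(tp, fp, fn):
    """Compute precision and recall from true positives, false positives and false negatives."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return precision, recall

# BF06 row of the first table.
p1, r1 = precision_recall(tp=983.95, fp=95.65, fn=16.05)
# BF02 row of the second table.
p2, r2 = precision_recall(tp=999.5, fp=4.55, fn=1.2)

print(f"{p1:.3f} {r1:.3f}")  # 0.911 0.984
print(f"{p2:.3f} {r2:.3f}")  # 0.995 0.999
```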

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lu, J.; Qian, W.; Li, S.; Cui, R. Enhanced K-Nearest Neighbor for Intelligent Fault Diagnosis of Rotating Machinery. Appl. Sci. 2021, 11, 919. https://doi.org/10.3390/app11030919