Jupyter Notebooks in Undergraduate Mobile Robotics Courses: Educational Tool and Case Study

Abstract

Featured Application

1. Introduction

2. Theoretical Background

3. Purpose of the Research

- To know and explain the fundamental problems that must be solved for a robot to be considered autonomous.

- To know the components of a robot (sensors, actuators, software, mechanical components, etc.) and how they operate in isolation and as components of a system.

- To understand how the most used sensors for gathering information from the environment work, as well as their associated probabilistic models.

- To comprehend the localization and map building process for a mobile robot.

- To understand and develop motion planning algorithms.

- To program a mobile robot so it navigates a 2D environment.

- RQ1. Do Jupyter notebooks enhance learning in undergraduate mobile robotics courses?

- RQ2. Do they represent an improvement over the traditional problem-posing approach?

- RQ3. Are they a suitable framework for designing hands-on sessions from the lecturers’ point of view?

4. Methods

4.1. Procedure

4.2. Educational Tool

4.2.1. Used Technologies

Jupyter Notebook

- Notebook format. It organizes the code into a number of cells, which can be modified and run on demand. The outputs generated by these executions are displayed just beneath the code cell, and they can include not only text but also plots, graphics, or mathematical formulas. Besides the code cells, there are text cells written in the Markdown markup language (more information on that below).

- Web application. It works as both a text and code editor and a computing platform. Being a web application, it allows a lecturer to set up a server to give ready access to the platform if needed, although it can also be easily installed on a local machine, e.g., using Anaconda (https://www.anaconda.com/distribution/).

- Kernel. A language backend designed to execute code on request, using a common, documented protocol. Initially, only a kernel for the Python programming language was available, yet the popularity of the platform and its open-source nature have encouraged the development of an increasing number of kernels, now covering over 50 additional languages (https://github.com/jupyter/jupyter/wiki/Jupyter-kernels).
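The cell-based notebook format described above can be illustrated with a minimal sketch that builds a notebook document by hand. It assumes the JSON layout of notebook format version 4 (a list of cells plus metadata); the helper names `markdown_cell`, `code_cell`, and `make_notebook` are hypothetical and only serve this illustration.

```python
import json


def markdown_cell(text):
    """Text cell: narrative written in Markdown."""
    return {"cell_type": "markdown", "metadata": {}, "source": text}


def code_cell(code):
    """Code cell: source plus the (initially empty) outputs shown beneath it."""
    return {
        "cell_type": "code",
        "execution_count": None,
        "metadata": {},
        "outputs": [],
        "source": code,
    }


def make_notebook(cells):
    """Wrap the cells in a minimal version-4 notebook structure."""
    return {
        "cells": cells,
        "metadata": {
            # The kernelspec tells the web application which kernel to start.
            "kernelspec": {
                "name": "python3",
                "display_name": "Python 3",
                "language": "python",
            }
        },
        "nbformat": 4,
        "nbformat_minor": 4,
    }


notebook = make_notebook([
    markdown_cell("# Gaussian samples\nDraw samples and inspect their mean."),
    code_cell(
        "import random\n"
        "samples = [random.gauss(0, 1) for _ in range(1000)]\n"
        "sum(samples) / len(samples)"
    ),
])

# Serialized form; writing this string to a file ending in .ipynb
# should give a document that the web application can open.
notebook_json = json.dumps(notebook, indent=1)
```

In practice notebooks are created through the web application itself; the sketch only makes visible that a notebook is a plain JSON document in which text cells, code cells, and their outputs are stored together.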

or HTML, is Markdown’s rather minimal syntax, which allows newcomers to start working without facing a steep learning curve. Nonetheless, if greater control over the content of the document is needed, HTML can also be used within a text cell. This can be a powerful tool, as most of the capabilities of HTML translate directly to Jupyter notebooks, such as embedding a video within them. The next section describes the programming language used in the code cells.

Python

- NumPy. The de facto library for matrix and array computation in Python. It provides a large collection of high-level mathematical functions, such as matrix multiplication, trigonometric functions, etc.

- SciPy. A library for mathematical computation, which was originally NumPy’s parent project. It brings additional utilities for statistics, linear algebra, and signal processing, which are basic building blocks in the developed notebooks.

- Matplotlib. Visualizing the problem plays a huge part in the learning process, especially in the domain of mobile robots. Matplotlib is a 2D plotting library that lets us create different data visualizations throughout the exercises. It has native support in Jupyter notebooks, displaying and updating the figures inline.

4.2.2. The Collection of Jupyter Notebooks

- Introduction. Description of the topic to be addressed in the notebook, including relevant theoretical concepts introduced as text, figures, equations, etc. This section also puts the notebook in the context of a real problem (e.g., robot localization in a shopping mall).

- Imports. Code cell importing all the external modules needed to complete the assignment.

- Utils (optional). Depending on the complexity of the exercise, some code can be provided to the student to assist the implementation.

- Issues. Each exercise comprises a number of issues or points to be solved, each one made up of a text cell describing it and some incomplete code cells.

- Demos. In order to test the correctness of the assignment and illustrate the concepts of the exercise, there will be mostly complete code cells to create visualizations.

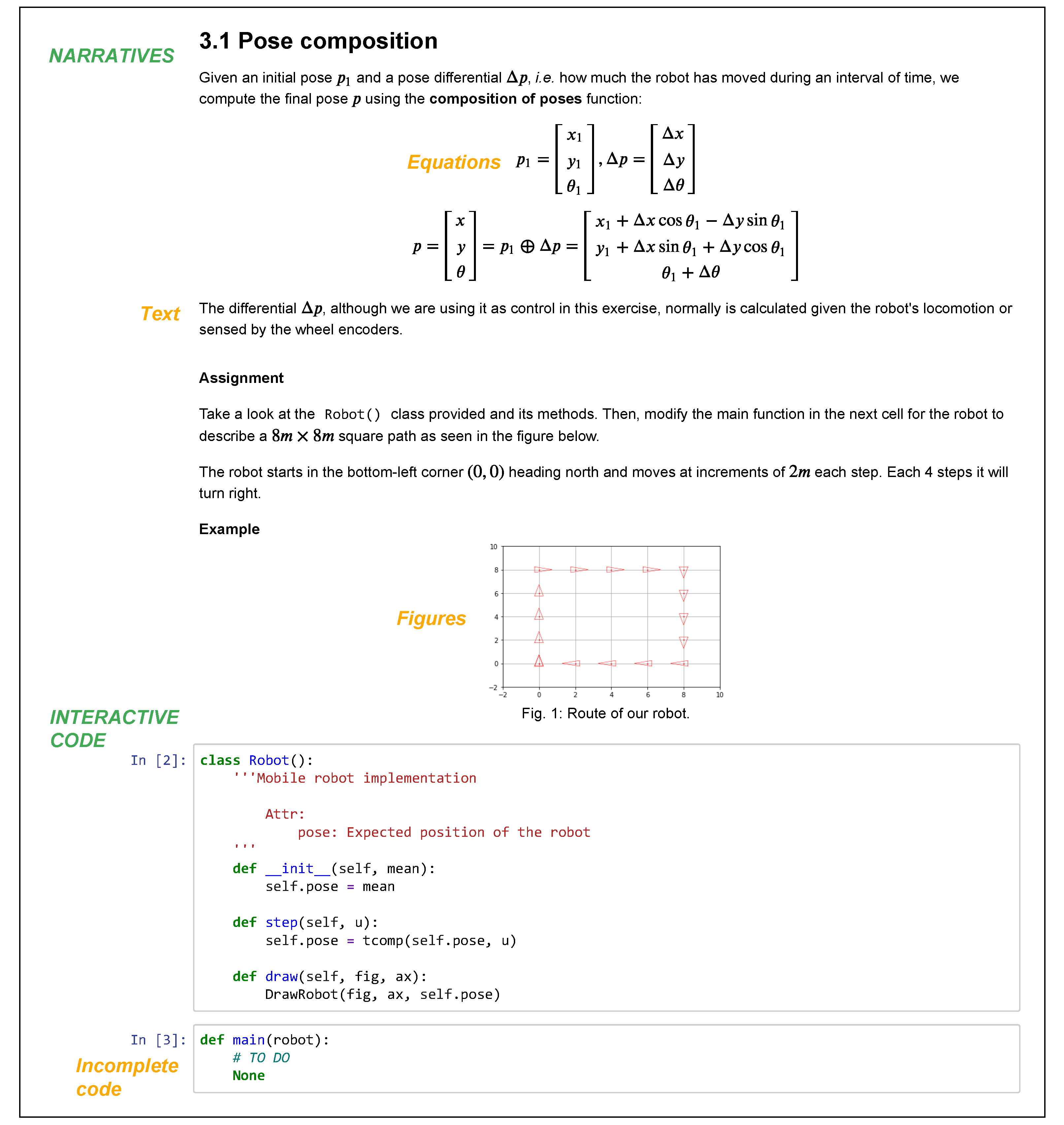

- images: directory containing the figures used in the notebooks’ narratives (see Figure 1 for an example).

- utils: directory grouping together a number of utilities (Python .py files) that ease students’ implementations if needed, so they can focus on more relevant parts of the algorithms addressed in the notebooks (recall the third point in the previous list). Examples of these utilities could be a function for drawing a triangle representing a robot at a certain position and with a given orientation, a method for plotting the uncertainty about robot/landmarks localization by means of ellipses, a method for composing poses, another one building complex Jacobian matrices, etc.

- .ipynb files: the Jupyter notebooks themselves.

- LICENSE: a file describing the notebooks’ public license (GNU General Public License v3.0).

- README: a file briefly describing the notebooks and their high-level dependencies.

- requirements: a text file containing the packages on which the designed notebooks depend. It can be used to automatically install such dependencies by means of pip or Anaconda.

Lab. 1: Probability Fundamentals

- Gaussian distribution. This notebook introduces the student to some basic concepts of interest, for instance: how a Gaussian distribution is defined and the generation of random samples from it (see Figure 2).

- Properties. It illustrates different properties and operations of Gaussians, such as the central limit theorem, sum, product, linear transformation, etc., letting the student experiment with different distributions.

- Multidimensional Distributions. It mirrors similar issues from the previous two parts, using multidimensional distributions instead.
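Some of the Gaussian properties listed above can be checked numerically. The following is a minimal sketch, not the notebooks' own code: it uses only the Python standard library to stay dependency-free (the notebooks themselves rely on NumPy and SciPy), and all parameter values are arbitrary choices made for illustration.

```python
import random
import statistics

random.seed(0)  # fixed seed so the experiment is repeatable


def sample_gaussian(mu, sigma, n):
    """Draw n independent samples from N(mu, sigma^2)."""
    return [random.gauss(mu, sigma) for _ in range(n)]


n = 20000
x = sample_gaussian(2.0, 0.5, n)   # X ~ N(2, 0.5^2)
y = sample_gaussian(-1.0, 1.2, n)  # Y ~ N(-1, 1.2^2), independent of X

# Linear transformation: a*X + b ~ N(a*mu + b, (a*sigma)^2)
a, b = 3.0, 1.0
z = [a * xi + b for xi in x]
z_mean, z_std = statistics.fmean(z), statistics.stdev(z)  # theory: 7.0 and 1.5

# Sum of independent Gaussians: means add, variances add
s = [xi + yi for xi, yi in zip(x, y)]
s_mean, s_std = statistics.fmean(s), statistics.stdev(s)  # theory: 1.0 and 1.3

# Central limit theorem: the mean of 12 uniform(0, 1) samples is close to
# Gaussian, with mean 0.5 and standard deviation 1/12
clt = [statistics.fmean(random.random() for _ in range(12)) for _ in range(n)]
clt_mean, clt_std = statistics.fmean(clt), statistics.stdev(clt)
```

The empirical means and standard deviations should approach the theoretical values in the comments as `n` grows; plotting a histogram of `clt` is a natural next step inside a notebook.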

Lab. 2: Robot Motion

- Velocity-based. Motion model where the robot is controlled by a pair of linear and angular velocities applied during a certain amount of time.

- Odometry-based. This model abstracts the complexity of the robot kinematics, being most useful when wheel encoders are present, although other sensors like laser scanners can be used to compute the required pose increments [63]. In this case, the motion commands are expressed as an increment of the pose (in 2D, an increment in position and heading).

- Composition of noisy poses. The first assignment is to implement robot movement using pose composition and Gaussian noise, and then to generate a number of movement commands to traverse a square route, something which will become a familiar routine in the following tasks.

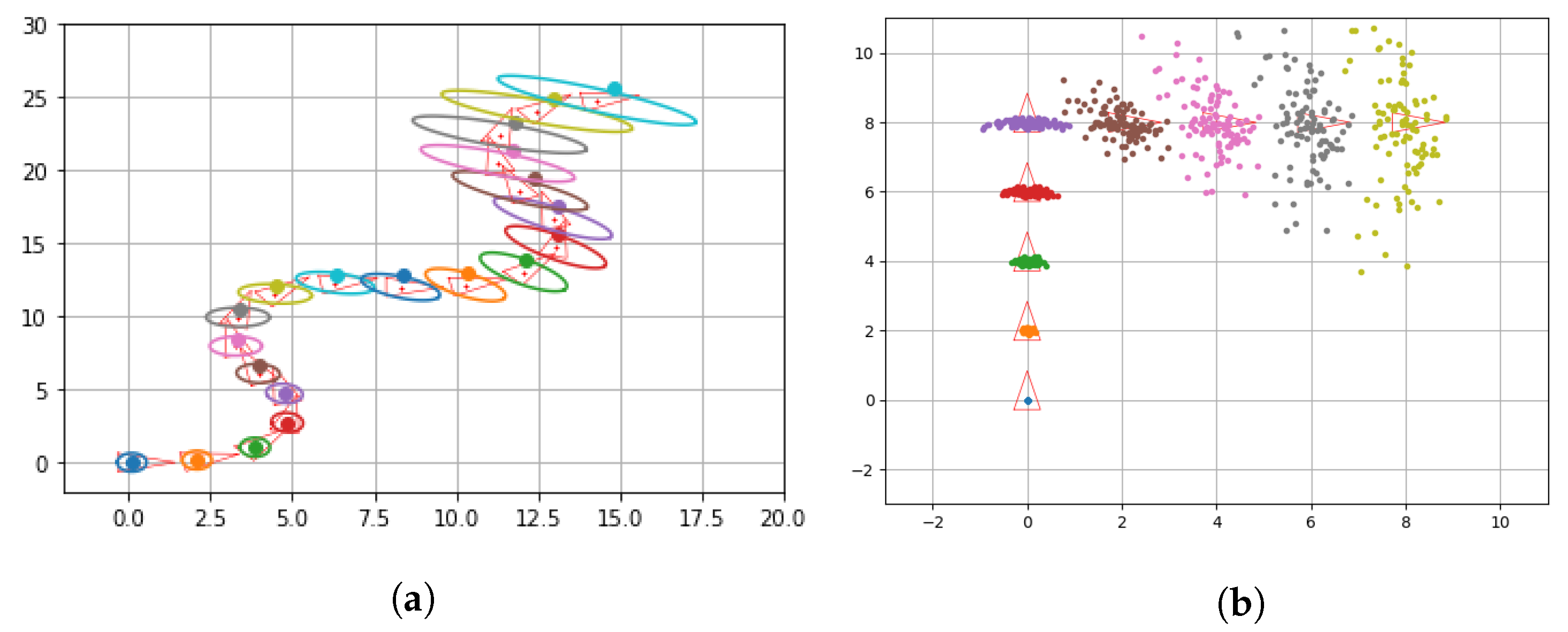

- Differential motion with velocity commands. The following notebook introduces the equations for the differential motion model. It then asks the student to use velocity commands to move the robot along a serpentine trajectory, as seen in Figure 3a.

- Differential drive with odometry commands. Lastly, in order to reinforce the concepts of odometry commands, the students apply them in both the analytical form and the sample form. The latter is of special interest because of its use in particle filters [59]. It also better represents the real movement of a robot base (see Figure 3b).
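The square-route exercise based on pose composition can be sketched as follows. This is an illustrative outline under stated assumptions rather than the notebooks' implementation: poses are `(x, y, theta)` tuples, the noise is independent Gaussian noise on each component of the increment, and the names `compose`, `noisy`, and `square_route`, as well as the noise levels, are hypothetical.

```python
import math
import random


def wrap_angle(angle):
    """Normalize an angle to the interval (-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def compose(pose, increment):
    """Pose composition: apply an increment given in the robot frame."""
    x, y, theta = pose
    dx, dy, dtheta = increment
    return (
        x + dx * math.cos(theta) - dy * math.sin(theta),
        y + dx * math.sin(theta) + dy * math.cos(theta),
        wrap_angle(theta + dtheta),
    )


def noisy(increment, sigmas, rng):
    """Sample form: perturb each component with zero-mean Gaussian noise."""
    return tuple(v + rng.gauss(0.0, s) for v, s in zip(increment, sigmas))


def square_route(side, steps_per_side):
    """Commands for a square: straight steps, turning 90 degrees at corners."""
    step = side / steps_per_side
    commands = []
    for _ in range(4):
        commands += [(step, 0.0, 0.0)] * (steps_per_side - 1)
        commands.append((step, 0.0, math.pi / 2))
    return commands


rng = random.Random(42)
sigmas = (0.05, 0.05, 0.02)  # assumed noise levels (m, m, rad)
commands = square_route(side=4.0, steps_per_side=4)

ideal_pose = (0.0, 0.0, 0.0)
noisy_pose = (0.0, 0.0, 0.0)
for u in commands:
    ideal_pose = compose(ideal_pose, u)                      # noise-free motion
    noisy_pose = compose(noisy_pose, noisy(u, sigmas, rng))  # one noisy sample
```

Without noise the robot returns to its starting pose, whereas the noisy pose drifts away from it. Repeating the noisy loop many times yields a cloud of final poses, which is the idea that particle filters build upon.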

Lab. 3: Robot Sensing

- Proprioceptive sensors: those measuring the internal status of the robot: battery, position, acceleration, inclination. Some examples are optical encoders, heading sensors (compass, gyroscopes), accelerometers, Inertial Measurement Units (IMUs), potentiometers, etc.

- Exteroceptive sensors: sensors gathering information from the environment, like distance and/or angle to objects, light intensity reflected by the environment, etc. Examples of this kind of sensor are range sensors (sonar, laser scanner [64], infrared, etc.) and vision-based sensors (cameras or RGB-D cameras [65]).
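As an illustration of an exteroceptive, landmark-based sensor model, the sketch below computes the distance and angle from a robot pose to a known landmark and adds Gaussian noise to both. It assumes a range-bearing sensor with independent noise on each measurement; the function names and noise parameters are hypothetical and not taken from the notebooks.

```python
import math
import random


def range_bearing(pose, landmark):
    """Ideal observation: distance and relative angle to a landmark."""
    x, y, theta = pose
    dx, dy = landmark[0] - x, landmark[1] - y
    distance = math.hypot(dx, dy)
    bearing = math.atan2(dy, dx) - theta
    # Keep the bearing in (-pi, pi]
    bearing = math.atan2(math.sin(bearing), math.cos(bearing))
    return distance, bearing


def noisy_range_bearing(pose, landmark, sigma_r, sigma_b, rng):
    """Observation corrupted by zero-mean Gaussian noise (assumed model)."""
    distance, bearing = range_bearing(pose, landmark)
    return distance + rng.gauss(0.0, sigma_r), bearing + rng.gauss(0.0, sigma_b)


rng = random.Random(1)
pose = (1.0, 1.0, math.pi / 2)  # robot at (1, 1), facing the +y direction
landmark = (1.0, 4.0)           # landmark straight ahead, 3 m away

z_ideal = range_bearing(pose, landmark)
z_noisy = noisy_range_bearing(pose, landmark, sigma_r=0.1, sigma_b=0.02, rng=rng)
```

The ideal observation here is a range of 3 m and a bearing of zero, since the landmark lies directly in front of the robot; the noisy one is a single draw around those values.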

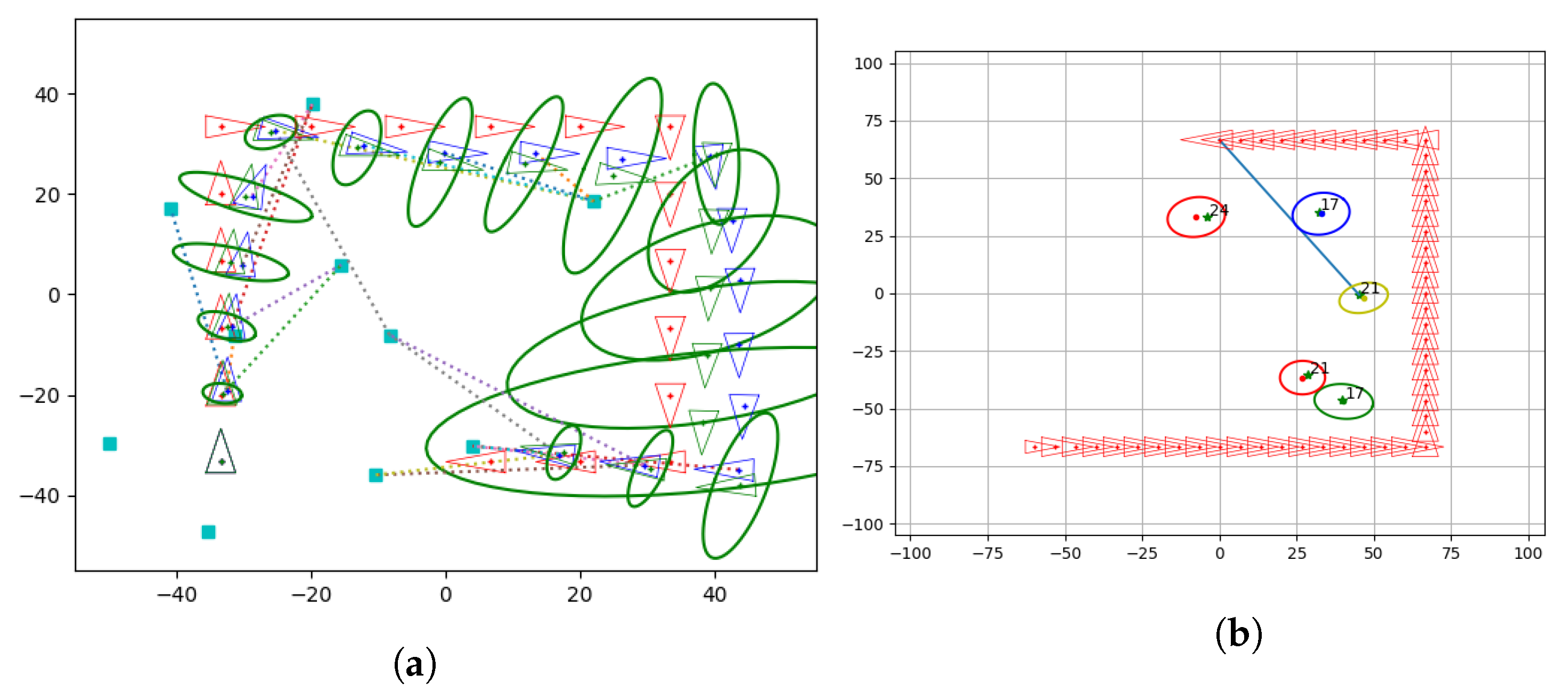

Lab. 4: Robot Localization

Lab. 5: Mapping

Lab. 6: SLAM

Lab. 7: Motion Planning

- Reactive navigation. It handles obstacle avoidance in the environment, relying on a constant flow of information from the sensors. Virtual Force Field (VFF), Vector Field Histogram (VFH), and PT-Space are commonly used techniques for dealing with this problem.

- Global navigation. It is the optimization problem of searching for the best viable path to accomplish the current goal. It mostly relies on the information from the available map. Examples of techniques addressing this problem are visibility graphs, Voronoi diagrams, cell decomposition, and probabilistic roadmaps, among others.
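The course outline lists potential fields as the technique practiced in the motion planning lab. A minimal sketch of the idea follows, assuming the classic formulation with a quadratic attractive potential towards the goal and a repulsive potential that acts only within an influence radius around point obstacles. The gains, the radius, the step size, and the function names are illustrative assumptions.

```python
import math


def attractive_force(position, goal, k_att=1.0):
    """Force pulling the robot towards the goal, proportional to distance."""
    return (k_att * (goal[0] - position[0]), k_att * (goal[1] - position[1]))


def repulsive_force(position, obstacle, k_rep=1.0, influence=2.0):
    """Force pushing the robot away from an obstacle within its influence."""
    dx, dy = position[0] - obstacle[0], position[1] - obstacle[1]
    d = math.hypot(dx, dy)
    if d >= influence or d == 0.0:
        return (0.0, 0.0)
    magnitude = k_rep * (1.0 / d - 1.0 / influence) / d**2
    return (magnitude * dx / d, magnitude * dy / d)


def plan(start, goal, obstacles, step=0.05, tolerance=0.1, max_iters=2000):
    """Follow the resulting force with fixed-length steps."""
    position = start
    path = [position]
    for _ in range(max_iters):
        if math.dist(position, goal) < tolerance:
            break  # close enough to the goal
        fx, fy = attractive_force(position, goal)
        for obstacle in obstacles:
            rx, ry = repulsive_force(position, obstacle)
            fx, fy = fx + rx, fy + ry
        norm = math.hypot(fx, fy)
        if norm == 0.0:
            break  # forces cancel out: the robot is stuck in a local minimum
        position = (position[0] + step * fx / norm,
                    position[1] + step * fy / norm)
        path.append(position)
    return path


free_path = plan(start=(0.0, 0.0), goal=(5.0, 5.0), obstacles=[])
path_with_obstacle = plan(start=(0.0, 0.0), goal=(5.0, 5.0),
                          obstacles=[(2.5, 3.0)])
```

With no obstacles the path is a straight line to the goal. With obstacles, convergence is not guaranteed, because the method can oscillate or get trapped in local minima, which is why the loop is bounded by `max_iters`.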

4.3. Data Sources

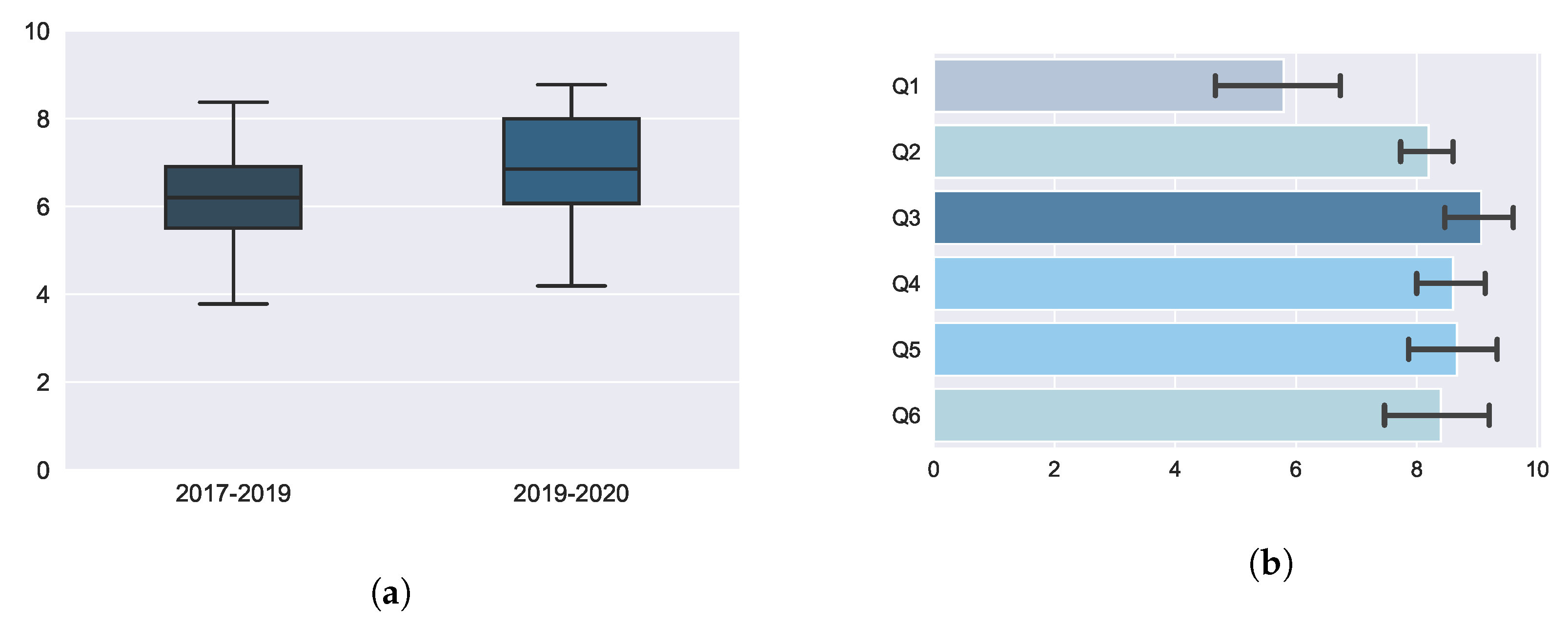

5. Results and Discussion

5.1. Learning Performance According to Students’ Grades

5.2. Analyzing Students’ and Lecturers’ Output

5.3. Limitations of the Study

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Kluyver, T.; Ragan-Kelley, B.; Pérez, F.; Granger, B.E.; Bussonnier, M.; Frederic, J.; Kelley, K.; Hamrick, J.B.; Grout, J.; Corlay, S.; et al. Jupyter Notebooks-a Publishing Format for Reproducible Computational Workflows; IOS Press: Clifton, NJ, USA, 2016; pp. 87–90.

- Klever, N. Jupyter Notebook, JupyterHub and Nbgrader. In Becoming Greener—Digitalization in My Work; The Publication Series of LAB University of Applied Sciences; LAB University of Applied Sciences: Lahti, Finland, 2020; pp. 37–43.

- Barba, L.A.; Barker, L.J.; Blank, D.; Brown, J.; Downey, A.; George, T.; Heagy, L.; Mandli, K.; Moore, J.K.; Lippert, D.; et al. Teaching and Learning with Jupyter. 2019. Available online: https://jupyter4edu.github.io/jupyter-edu-book/ (accessed on 22 June 2020).

- Perez, F.; Granger, B.E. Project Jupyter: Computational narratives as the engine of collaborative data science. Retrieved Sept. 2015, 11, 108.

- Forehand, M. Bloom’s taxonomy. Emerg. Perspect. Learn. Teach. Technol. 2010, 41, 47–56.

- Robins, B.; Dautenhahn, K.; Te Boekhorst, R.; Billard, A. Robotic assistants in therapy and education of children with autism: Can a small humanoid robot help encourage social interaction skills? Univ. Access Inf. Soc. 2005, 4, 105–120.

- Broadbent, E.; Stafford, R.; MacDonald, B. Acceptance of healthcare robots for the older population: Review and future directions. Int. J. Soc. Robot. 2009, 1, 319.

- Song, G.; Yin, K.; Zhou, Y.; Cheng, X. A surveillance robot with hopping capabilities for home security. IEEE Trans. Consum. Electron. 2009, 55, 2034–2039.

- Zhou, T.T.; Zhou, D.T.; Zhou, A.H. Unmanned Drone, Robot System for Delivering Mail, Goods, Humanoid Security, Crisis Negotiation, Mobile Payments, Smart Humanoid Mailbox and Wearable Personal Exoskeleton Heavy Load Flying Machine. U.S. Patent Application No. 14/285,659, 11 September 2014.

- Bogue, R. Growth in e-commerce boosts innovation in the warehouse robot market. Ind. Robot Int. J. 2016, 43, 583–587.

- Horizon 2020. Available online: https://ec.europa.eu/programmes/horizon2020/ (accessed on 3 July 2020).

- Hanson Robotics Research. Available online: https://www.hansonrobotics.com/research/ (accessed on 10 July 2020).

- Thrun, S. Probabilistic Robotics. Commun. ACM 2002, 45, 52–57.

- Sanders, M. STEM, STEM Education, STEMmania. Technol. Eng. Teach. 2008, 68, 20.

- Byhee, B. Advancing STEM education: A 2020 vision. Technol. Eng. Teach. 2010, 70, 30–35.

- González-Jiménez, J.; Galindo, C.; Ruiz-Sarmiento, J. Technical improvements of the Giraff telepresence robot based on users’ evaluation. In Proceedings of the 2012 IEEE RO-MAN: The 21st IEEE International Symposium on Robot and Human Interactive Communication, Paris, France, 9–13 September 2012; pp. 827–832.

- Newman, P.M. C4B—Mobile Robotics. An Introduction to Estimation and Its Application to Navigation. 2004. Available online: http://www.robots.ox.ac.uk/~pnewman/Teaching/C4CourseResources/C4BMobileRobotics2004.pdf (accessed on 5 August 2020).

- Python Software Foundation. Python Language Reference, Version 3.X. Available online: https://www.python.org/ (accessed on 3 July 2020).

- Bloom, B.S. Taxonomy of Educational Objectives; Longmans, Green Co.: New York, NY, USA, 1964; Volume 1.

- Masapanta-Carrión, S.; Velázquez-Iturbide, J.A. A Systematic Review of the Use of Bloom’s Taxonomy in Computer Science Education. In Proceedings of the 49th ACM Technical Symposium on Computer Science Education; Association for Computing Machinery, SIGCSE ’18, New York, NY, USA, 16–22 February 2018; pp. 441–446.

- Anderson, L.W.; Krathwohl, D.R.E. A Taxonomy for Learning, Teaching and Assessing: A Revision of Bloom’s Taxonomy of Educational Objectives: Complete Edition; Longmans: New York, NY, USA, 2001.

- Armstrong, P. Bloom’s Taxonomy; Vanderbilt University Center for Teaching: Nashville, TN, USA, 2016.

- Chen, J.C.; Huang, Y.; Lin, K.Y.; Chang, Y.S.; Lin, H.C.; Lin, C.Y.; Hsiao, H.S. Developing a hands-on activity using virtual reality to help students learn by doing. J. Comput. Assist. Learn. 2020, 36, 46–60.

- Burbaite, R.; Stuikys, V.; Marcinkevicius, R. The LEGO NXT robot-based e-learning environment to teach computer science topics. Elektron. Elektrotechnika 2012, 18, 113–116.

- Baltanas-Molero, S.F.; Ruiz-Sarmiento, J.R.; Gonzalez-Jimenez, J. Empowering Mobile Robotics Undergraduate Courses by Using Jupyter Notebooks. In Proceedings of the 14th Annual International Technology, Education and Development Conference, Valencia, Spain, 2–4 March 2020.

- Yaniv, Z.; Lowekamp, B.C.; Johnson, H.J.; Beare, R. SimpleITK image-analysis notebooks: A collaborative environment for education and reproducible research. J. Digit. Imag. 2018, 31, 290–303.

- Chen, C.H.; Yang, Y.C. Revisiting the effects of project-based learning on students’ academic achievement: A meta-analysis investigating moderators. Educ. Res. Rev. 2019, 26, 71–81.

- Chu, S.K.W.; Reynolds, R.B.; Tavares, N.J.; Notari, M.; Lee, C.W.Y. 21st Century Skills Development through Inquiry-Based Learning; Springer: Singapore, 2017; Volume 1007, pp. 978–981.

- Munir, M.; Baroutian, S.; Young, B.R.; Carter, S. Flipped classroom with cooperative learning as a cornerstone. Educ. Chem. Eng. 2018, 23, 25–33.

- Vaughan, K. The role of apprenticeship in the cultivation of soft skills and dispositions. J. Vocat. Educ. Train. 2017, 69, 540–557.

- Moon, J.A. A Handbook of Reflective and Experiential Learning: Theory and Practice; Psychology Press: Hove, East Sussex, UK, 2004.

- Kolb, D.A. Experiential Learning: Experience as the Source of Learning and Development; FT Press: Upper Saddle River, NJ, USA, 2014.

- Dewey, J. Experience and Nature; Courier Corporation: North Chelmsford, MA, USA, 1958; Volume 471.

- Beard, C. Dewey in the world of experiential education. New Dir. Adult Contin. Educ. 2018, 2018, 27–37.

- Elliott, S.; Littlefield, J. Educational Psychology: Effective Teaching, Effective Learning; WCB: Edmonton, AL, Canada; McGraw-Hill: New York, NY, USA, 1995.

- Taber, K. Constructivism in Education: Interpretations and Criticisms from Science Education. In Early Childhood Development: Concepts, Methodologies, Tools, and Applications; IGI Global: Hershey, PA, USA, 2019; pp. 312–342.

- Dale, E. Audiovisual Methods in Teaching; Dryden Press: New York, NY, USA, 1969.

- Lee, S.J.; Reeves, T.C. Edgar dale and the cone of experience. Foun. Lear. Instruct. Des. Technol. 2017, 47, 56.

- O’Hara, K.; Blank, D.; Marshall, J. Computational notebooks for AI education. In Proceedings of the Twenty-Eighth International Flairs Conference, Hollywood, FL, USA, 18–20 May 2015.

- Jacko, J.A. Human Computer Interaction Handbook: Fundamentals, Evolving Technologies, and Emerging Applications; CRC Press: Boca Raton, FL, USA, 2012.

- Rebmann, A.; Beuther, A.; Schumann, S.; Fettke, P. Hands-on Process Discovery with Python-Utilizing Jupyter Notebook for the Digital Assistance in Higher Education. In Proceedings of the Modellierung 2020 Short, Workshop and Tools & Demo Papers, Vienna, Austria, 19–21 February 2020; pp. 65–76.

- Clark, R.C.; Nguyen, F.; Sweller, J. Efficiency in Learning: Evidence-Based Guidelines to Manage Cognitive Load; John Wiley & Sons: Hoboken, NJ, USA, 2011.

- Chen, O.; Kalyuga, S.; Sweller, J. The worked example effect, the generation effect, and element interactivity. J. Educ. Psychol. 2015, 107, 689.

- Sweller, J. The worked example effect and human cognition. In Learning and Instruction; APA: Washington, DC, USA, 2006.

- Merrill, C.; Daugherty, J. STEM education and leadership: A mathematics and science partnership approach. J. Technol. Educ. 2010, 21, 21.

- Fan, S.C.; Yu, K.C. How an integrative STEM curriculum can benefit students in engineering design practices. Int. J. Technol. Des. Educ. 2017, 27, 107–129.

- Capraro, R.M.; Capraro, M.M.; Morgan, J.R. STEM Project-Based Learning: An Integrated Science, Technology, Engineering, and Mathematics (STEM) Approach; Springer: Berlin/Heidelberg, Germany, 2013.

- Nehmzow, U. Mobile Robotics: A Practical Introduction; Springer: Berlin/Heidelberg, Germany, 2012.

- Grabarnik, G.; Kim-Tyan, L.; Yaskolko, S. Addressing Prerequisites for STEM Classes Using an Example of Linear Algebra for a Course in Machine Learning. In Proceedings of the 12th International Conference on Mobile, Hybrid, and On-Line Learning, St. Maarten, The Netherlands, 10–16 February 2020; pp. 21–26.

- Kennedy, T.; Odell, M. Engaging students in STEM education. Sci. Educ. Int. 2014, 25, 246–258.

- Krischer, L.; Aiman, Y.A.; Bartholomaus, T.; Donner, S.; van Driel, M.; Duru, K.; Garina, K.; Gessele, K.; Gunawan, T.; Hable, S.; et al. seismo-live: An Educational Online Library of Jupyter Notebooks for Seismology. Seismol. Res. Lett. 2018, 89, 2413–2419.

- Quigley, M.; Conley, K.; Gerkey, B.; Faust, J.; Foote, T.; Leibs, J.; Wheeler, R.; Ng, A.Y. ROS: An open-source Robot Operating System. In Proceedings of the ICRA Workshop on Open Source Software, Kobe, Japan, 12–17 May 2009; Volume 3, p. 5.

- Cervera, E. Interactive ROS Tutorials with Jupyter Notebooks. In Proceedings of the 2018—European Robotics Forum 2018 Workshop Teaching Robotics with ROS, Tampere, Finland, 15 March 2018; pp. 1–11.

- Ruiz-Sarmiento, J.; Galindo, C.; Gonzalez-Jimenez, J. Experiences on a Motivational Learning Approach for Robotics in Undergraduate Courses. In Proceedings of the IATED, 11th International Technology, Education and Development Conference, Valencia, Spain, 6–8 March 2017; pp. 3803–3811.

- Users’Guide, E. European credit transfer and accumulation system and the diploma supplement. In Directorate-General for Education and Culture; European Commission: Brussels, Belgium, 2004; Volume 45.

- Peers, S. Statistics on Women in Engineering; Women’s Engineering Society: London, UK, 2018; pp. 1–19.

- ACM. ACM Software System Award. 2017. Available online: https://awards.acm.org/about/2017-technical-awards (accessed on 3 July 2020).

- TIOBE Index. TIOBE-The Software Quality Company. 2020. Available online: https://www.tiobe.com/tiobe-index/ (accessed on 30 June 2020).

- Fernández-Madrigal, J.A.; Blanco, J.L. Simultaneous Localization and Mapping for Mobile Robots: Introduction and Methods; IGI Global: Hershey, PA, USA, 2012.

- Siegwart, R.; Nourbakhsh, I.R.; Scaramuzza, D. Introduction to Autonomous Mobile Robots; MIT Press: Cambridge, MA, USA, 2011.

- Monroy, J.; Ruiz-Sarmiento, J.R.; Moreno, F.A.; Melendez-Fernandez, F.; Galindo, C.; Gonzalez-Jimenez, J. A semantic-based gas source localization with a mobile robot combining vision and chemical sensing. Sensors 2018, 18, 4174.

- Choset, H.M.; Hutchinson, S.; Lynch, K.M.; Kantor, G.; Burgard, W.; Kavraki, L.E.; Thrun, S. Principles of Robot Motion: Theory, Algorithms, and Implementation; MIT Press: Cambridge, MA, USA, 2005.

- Jaimez, M.; Monroy, J.; Lopez-Antequera, M.; Gonzalez-Jimenez, J. Robust Planar Odometry based on Symmetric Range Flow and Multi-Scan Alignment. IEEE Trans. Robot. 2018, 1623–1635.

- Ruiz-Sarmiento, J.R.; Galindo, C.; González-Jiménez, J. Olt: A toolkit for object labeling applied to robotic RGB-D datasets. In Proceedings of the 2015 European Conference on Mobile Robots (ECMR), Lincoln, UK, 2–4 September 2015; pp. 1–6.

- Zuñiga-Noël, D.; Ruiz-Sarmiento, J.; Gomez-Ojeda, R.; Gonzalez-Jimenez, J. Automatic Multi-Sensor Extrinsic Calibration for Mobile Robots. IEEE Robot. Autom. Lett. 2019, 4, 2862–2869.

- Julier, S.J.; Uhlmann, J.K. Unscented filtering and nonlinear estimation. Proc. IEEE 2004, 92, 401–422.

- Doğançay, K. Bearings-only target localization using total least squares. Signal Proc. 2005, 85, 1695–1710.

- Gonzalez-Jimenez, J.; Galindo, C.; Melendez-Fernandez, F.; Ruiz-Sarmiento, J. Building and exploiting maps in a telepresence robotic application. In Proceedings of the 10th International Conference on Informatics in Control, Automation and Robotics (ICINCO), Reykjavik, Iceland, 29–31 July 2013.

- Ruiz-Sarmiento, J.R.; Galindo, C.; González-Jiménez, J. Robot@home, a robotic dataset for semantic mapping of home environments. Int. J. Robot. Res. 2017, 36, 131–141.

- Ruiz-Sarmiento, J.R.; Galindo, C.; Gonzalez-Jimenez, J. Building multiversal semantic maps for mobile robot operation. Knowl.-Based Syst. 2017, 119, 257–272.

- Thrun, S. Learning occupancy grid maps with forward sensor models. Auton. Robot. 2003, 15, 111–127.

- Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.; Reid, I.; Leonard, J.J. Past, present, and future of simultaneous localization and mapping: Toward the robust-perception age. IEEE Trans. Robot. 2016, 32, 1309–1332.

- Younes, G.; Asmar, D.; Shammas, E.; Zelek, J. Keyframe-based monocular SLAM: Design, survey, and future directions. Robot. Auton. Syst. 2017, 98, 67–88.

- Moreno, F.A.; Monroy, J.; Ruiz-Sarmiento, J.R.; Galindo, C.; Gonzalez-Jimenez, J. Automatic Waypoint Generation to Improve Robot Navigation Through Narrow Spaces. Sensors 2020, 20, 240.

- LaValle, S.M. Planning Algorithms; Cambridge University Press: Cambridge, UK, 2006.

- Koren, Y.; Borenstein, J. Potential field methods and their inherent limitations for mobile robot navigation. In Proceedings of the 1991 IEEE International Conference on Robotics and Automation, Sacramento, CA, USA, 9–11 April 1991; pp. 1398–1404.

- Welch, B.L. On the comparison of several mean values: An alternative approach. Biometrika 1951, 38, 330–336.

- Maxwell, S.E.; Delaney, H.D.; Kelley, K. Designing Experiments and Analyzing Data: A Model Comparison Perspective; Routledge: London, UK, 2017.

| Lesson | Topic | Number of sessions |
|---|---|---|
| Theory 1 | Introduction to Autonomous Robotics | 1 |
| Theory 2 | Probability and Statistics Bases for Robotics | 3 |
| Lab. 1 | Probability fundamentals (Gaussian distribution) | 2 |
| Theory 3 | Robot Motion | 3 |
| Lab. 2 | Movement of a robot using velocity and odometry commands | 2 |
| Theory 4 | Robot Sensing | 2 |
| Lab. 3 | Landmark-based models for sensing | 1 |
| Theory 5 | Robot Localization | 2 |
| Lab. 4 | Least Squares and EKF for localization | 2 |
| Theory 6 | Mapping | 1 |
| Lab. 5 | EKF for robot mapping | 2 |
| Theory 7 | SLAM | 1 |
| Lab. 6 | EKF for Simultaneous Localization and Mapping | 1 |
| Theory 8 | Motion planning | 1 |
| Lab. 7 | Motion planning by means of Potential Fields | 1 |
| Theory 9 | Robot Control Architecture + ROS | 2 |
| Lab. 8 | Implementing a robotic explorer using Python and ROS | 2 |

| Id | Question |
|---|---|
| Q1 | Indicate your degree of understanding about the topics in the subject after theory sessions. |
| Q2 | Indicate your degree of understanding about the topics in the subject after lab sessions. |
| Q3 | I consider that the utilization of Jupyter notebooks in hands-on sessions empowered my learning to a greater extent than following a traditional approach (e.g., statement-solution). |
| Q4 | I consider that the provided Jupyter notebooks have helped me to pass the final exam. |
| Q5 | I consider that the Jupyter Notebook learning curve in the subject is appropriate. |
| Q6 | I consider that the Python programming language is suited for completing the practical sessions. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ruiz-Sarmiento, J.-R.; Baltanas, S.-F.; Gonzalez-Jimenez, J. Jupyter Notebooks in Undergraduate Mobile Robotics Courses: Educational Tool and Case Study. Appl. Sci. 2021, 11, 917. https://doi.org/10.3390/app11030917
