Split-Based Algorithm for Weighted Context-Free Grammar Induction

Abstract

1. Introduction

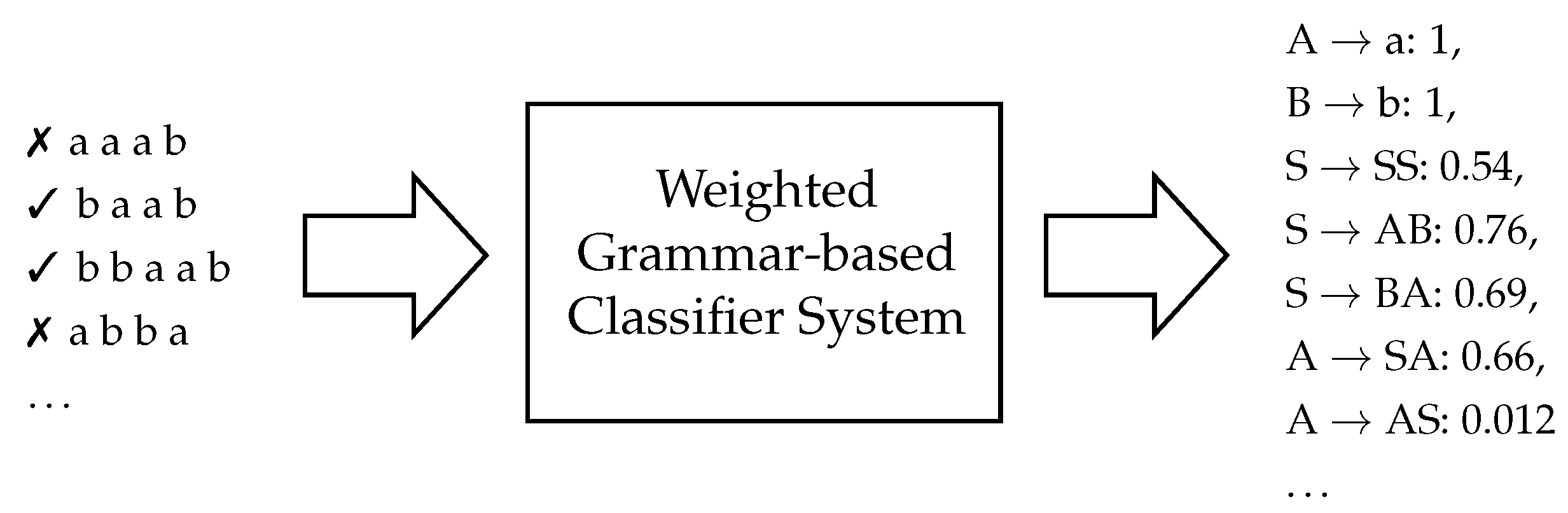

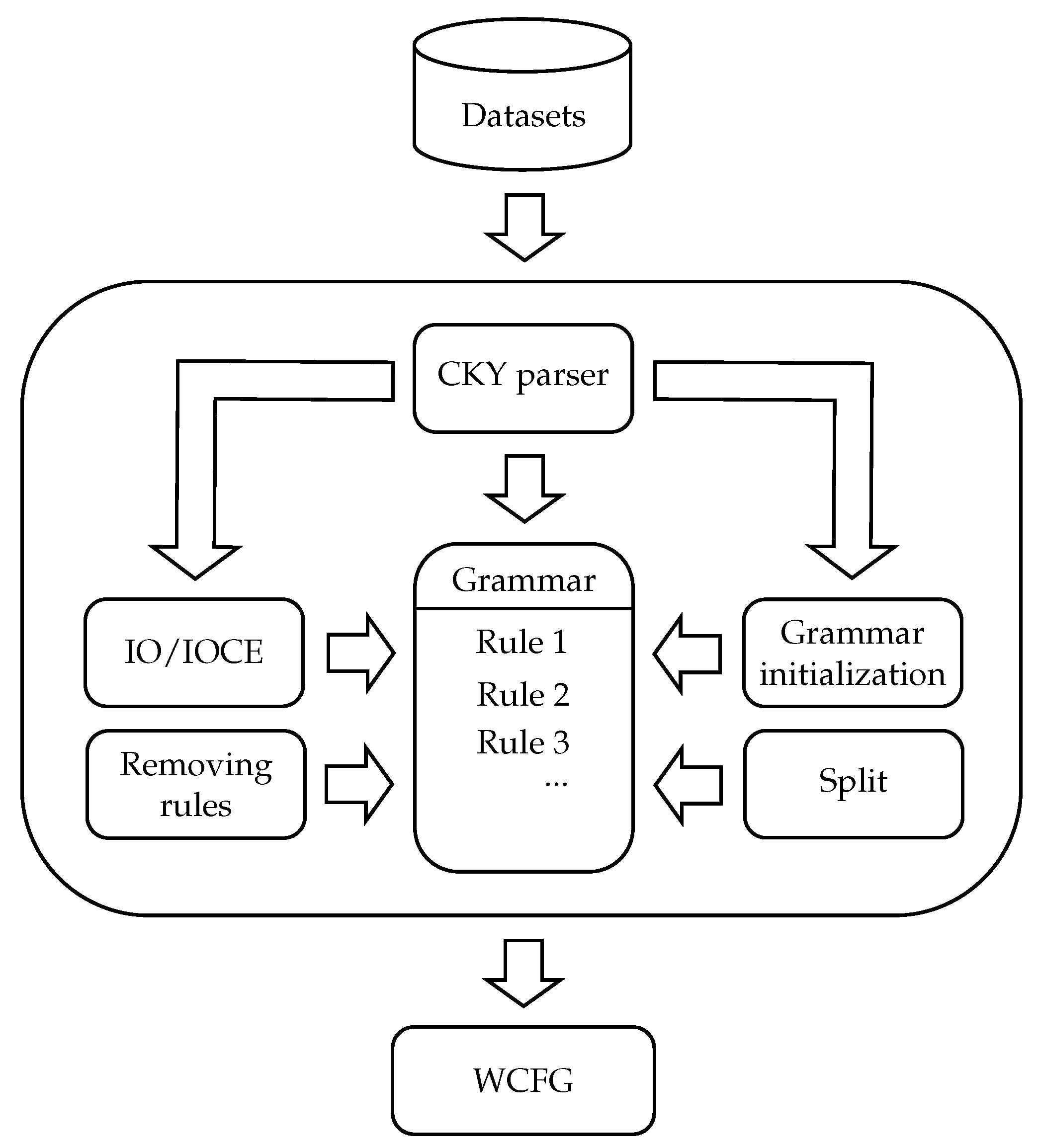

2. Weighted Grammar-Based Classifier System

2.1. Weighted Context-Free Grammar

2.2. Grammar Initialisation

2.3. Stochastic CKY Parser

| Algorithm 1: Stochastic CKY |

|

2.4. Split Algorithm

- For symbols and , create all possible rules (see line 6 in Algorithm 2). Since the rules are in CNF, their number is 8.

- For all nonterminal rules with in the form , where or is , create new untimely rules in the same form, replacing with . For multiple occurrences of in a rule, create all combinations (see lines 7–20 in Algorithm 2).

| Algorithm 2: Split algorithm |

|

- From Y and split symbol Z create: , , , , , , , (line 6 of Algorithm 2).

- From create new following rules and from rules (lines 7–21 of Algorithm 2).

2.5. Rule Weight Estimation

2.5.1. Inside–Outside

- Probability/weight of the rule:

- –

- for nonterminal rules:

- –

- for terminal rules:

- Probability/weight of deriving a sentence from grammar:where ⇒ stands for sentence derivation, W stands for sentence output, and are the individual words of the sentence W.

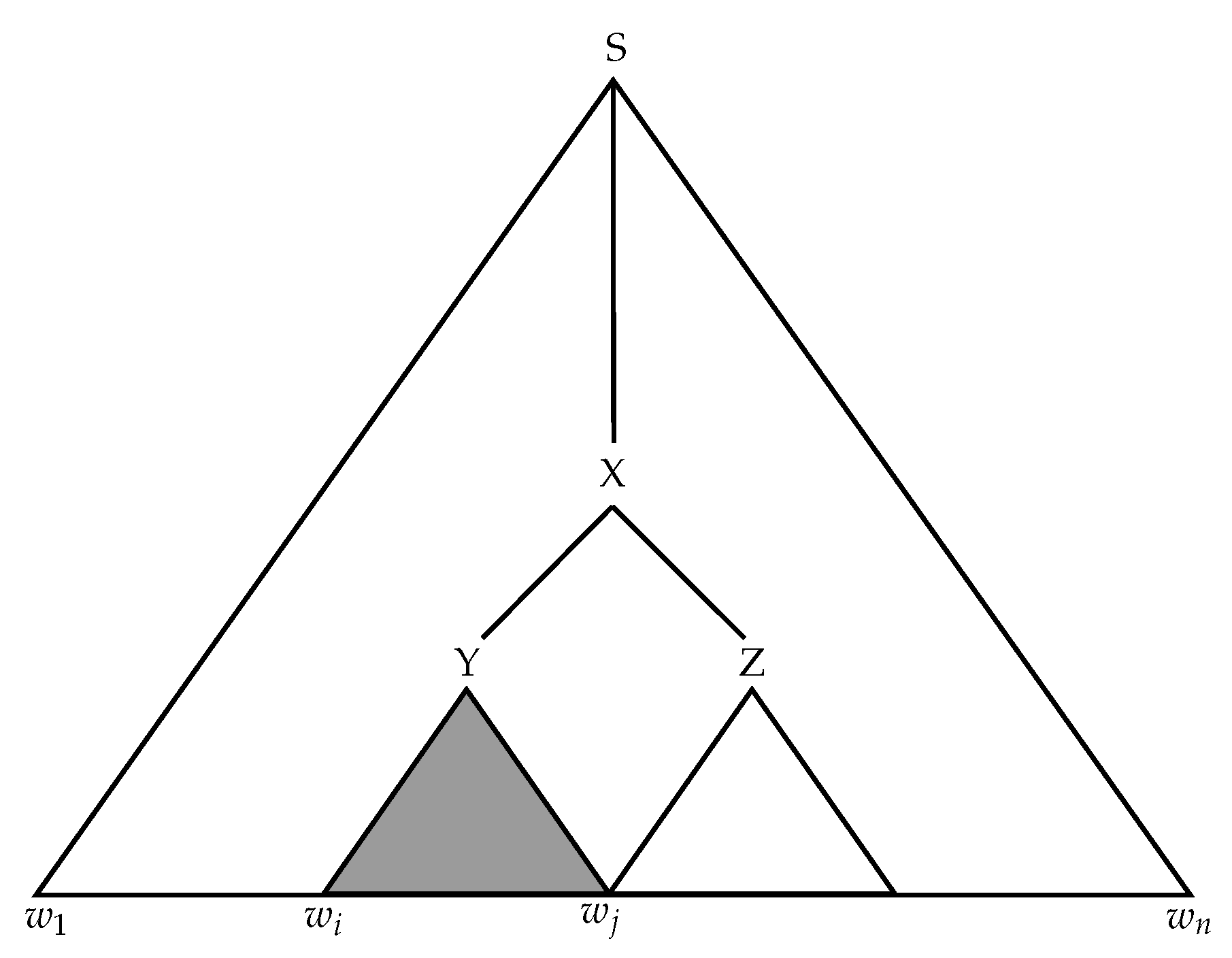

- The inside probability is the probability of deriving from a given symbol the nonterminal sequence of words from the sentence :where X is any nonterminal grammar symbol.Figure 3 shows the graphical interpretation of the inside probability for the nonterminal Y symbol, .

- The outside probability is the probability of deriving from the starting symbol the string from the sentence :Figure 4 shows the graphical representation of the outside probability for the nonterminal symbol Y, .

- Estimated number of uses of the rule determines how often the rule occurs for a single sentence:

- –

- for terminal rules:

- –

- for nonterminal rules:

- The total estimated number of uses of the rule determines how often the rule occurs in all sentences in the training set:where stands for a terminal or nonterminal rule and for successive sentences in the training set.

- The new weight/probability of a rule is calculated as the ratio of the total estimated uses of a given rule to the sum of the estimated total uses of a rule with the same left-hand symbol:where stands for a rule with the same left-hand side symbol as the rule in the numerator and any right-hand side form () of a rule in a CNF, either a terminal symbol or two nonterminal symbols.

2.5.2. Inside–Outside Contrastive Estimation

2.6. Removing Rules with Low Weight

2.7. Experimental Protocol and Complexity Analysis

| Algorithm 3: Experimental protocol |

|

3. Benchmarks

3.1. Datasets

- : balanced parentheses

- :

- :

- :

- : the language of ukasiewicz ()

3.2. Brief Description of Other Approaches

4. Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| IO | Inside-Outside algorithm |

| CFG | Context-Free Grammar |

| CNF | Chomsky Normal Form |

| CKY | Cocke–Kasami–Younger parser |

| WCFG | Weighted Context-Free Grammar |

| WGCS | Weighted Grammar-based Classifier System |

| IOCE | Inside-Outside Contrastive Estimation algorithm |

References

- Flasiński, M. Introduction to Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2016. [Google Scholar]

- de la Higuera, C. Grammatical Inference: Learning Automata and Grammars; Cambridge University Press: Cambridge, UK, 2010. [Google Scholar] [CrossRef]

- Gold, E.M. Language identification in the limit. Inf. Control. 1967, 10, 447–474. [Google Scholar] [CrossRef]

- Horning, J.J. A Study of Grammatical Inference; Technical Report; Stanford University California Department of Computer Science: Stanford, CA, USA, 1969. [Google Scholar]

- Unold, O.; Gabor, M.; Wieczorek, W. Unsupervised Statistical Learning of Context-free Grammar. In Proceedings of the 12th International Conference on Agents and Artificial Intelligence—Volume 1: NLPinAI; INSTICC; SciTePress: Setúbal, Portugal, 2020; pp. 431–438. [Google Scholar]

- Unold, O.; Gabor, M.; Dyrka, W. Unsupervised Grammar Induction for Revealing the Internal Structure of Protein Sequence Motifs. In Proceedings of the International Conference on Artificial Intelligence in Medicine, Minneapolis, MN, USA, 25–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 299–309. [Google Scholar]

- Unold, O. Context-free grammar induction with grammar-based classifier system. Arch. Control. Sci. 2005, 15, 681–690. [Google Scholar]

- Unold, O. Fuzzy grammar-based prediction of amyloidogenic regions. In Proceedings of the International Conference on Grammatical Inference, College Park, MD, USA, 5–8 September 2012; pp. 210–219. [Google Scholar]

- Unold, O.; Gabor, M. How implicit negative evidence improve weighted context-free grammar induction. In Proceedings of the International Conference on Artificial Intelligence and Soft Computing, Zakopane, Poland, 16–20 June 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 595–606. [Google Scholar]

- Van Zaanen, M. ABL: Alignment-based learning. In Proceedings of the 18th Conference on Computational Linguistics, Saarbrucken, Germany, 31 July–4 August 2000; Volume 2, pp. 961–967. [Google Scholar]

- Adriaans, P.; Vervoort, M. The EMILE 4.1 grammar induction toolbox. In Proceedings of the International Colloquium on Grammatical Inference, Amsterdam, The Netherlands, 23–25 September 2002; Springer: Berlin/Heidelberg, Germany, 2002; pp. 293–295. [Google Scholar]

- Solan, Z.; Horn, D.; Ruppin, E.; Edelman, S. Unsupervised learning of natural languages. Proc. Natl. Acad. Sci. USA 2005, 102, 11629–11634. [Google Scholar] [CrossRef]

- Wieczorek, W. A Local Search Algorithm for Grammatical Inference. In Grammatical Inference: Theoretical Results and Applications, Proceedings of the 10th International Colloquium (ICGI 2010), Valencia, Spain, 13–16 September 2010; Lecture Notes in Computer Science; Jose, M., Sempere, P.G., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; Volume 6339, pp. 217–229. [Google Scholar]

- Urbanowicz, R.J.; Moore, J.H. Learning classifier systems: A complete introduction, review, and roadmap. J. Artif. Evol. Appl. 2009, 2009, 1. [Google Scholar] [CrossRef]

- Sakakibara, Y. Learning context-free grammars using tabular representations. Pattern Recognit. 2005, 38, 1372–1383. [Google Scholar] [CrossRef]

- Kasami, T. An Efficient Recognition and Syntax-Analysis Algorithm for Context-Free Languages; Coordinated Science Laboratory Report No. R-257; University of Illinois at Urbana-Champaign: Champaign, IL, USA, 1966. [Google Scholar]

- Younger, D.H. Recognition and parsing of context-free languages in time n3. Inf. Control 1967, 10, 189–208. [Google Scholar] [CrossRef]

- Ney, H. Dynamic programming parsing for context-free grammars in continuous speech recognition. IEEE Trans. Signal Process. 1991, 39, 336–340. [Google Scholar] [CrossRef]

- Hogenhout, W.R.; Matsumoto, Y. A fast method for statistical grammar induction. Nat. Lang. Eng. 1998, 4, 191–209. [Google Scholar] [CrossRef]

- Kurihara, K.; Sato, T. Variational Bayesian grammar induction for natural language. In Proceedings of the International Colloquium on Grammatical Inference, Tokyo, Japan, 20–22 September 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 84–96. [Google Scholar]

- Baker, J.K. Trainable grammars for speech recognition. J. Acoust. Soc. Am. 1979, 65, S132. [Google Scholar] [CrossRef]

- Lari, K.; Young, S.J. The estimation of stochastic context-free grammars using the inside-outside algorithm. Comput. Speech Lang. 1990, 4, 35–56. [Google Scholar] [CrossRef]

- Smith, N.A.; Eisner, J. Contrastive estimation: Training log-linear models on unlabeled data. In Proceedings of the 43rd Annual Meeting on Association for Computational Linguistics, Ann Arbor, MI, USA, 25–30 June 2005; pp. 354–362. [Google Scholar]

- Smith, N.A.; Eisner, J. Guiding unsupervised grammar induction using contrastive estimation. In Proceedings of the IJCAI Workshop on Grammatical Inference Applications, Edinburgh, UK, 31 July 2005; pp. 73–82. [Google Scholar]

- Unold, O.; Kaczmarek, A.; Culer, Ł. Iterative method of generating artificial context-free grammars. arXiv 2019, arXiv:1911.05801. [Google Scholar]

- Nakamura, K.; Matsumoto, M. Incremental learning of context free grammars based on bottom-up parsing and search. Pattern Recognit. 2005, 38, 1384–1392. [Google Scholar] [CrossRef]

- Eyraud, R.; de la Higuera, C.; Janodet, J.C. LARS: A learning algorithm for rewriting systems. Mach. Learn. 2007, 66, 7–31. [Google Scholar] [CrossRef]

- Solan, Z.; Ruppin, E.; Horn, D.; Edelman, S. Automatic Acquisition and Efficient Representation of Syntactic Structures. In Neural Information Processing Systems 15, Proceedings of the Neural Information Processing Systems (NIPS 2002), Vancouver, BC, Canada, 9–14 December 2002; Becker, S., Thrun, S., Obermayer, K., Eds.; MIT Press: Cambridge, MA, USA, 2002; pp. 91–98. [Google Scholar]

- Unold, O. jGCS. 2019. Available online: https://github.com/ounold/jGCS (accessed on 22 January 2021).

- Rey, D.; Neuhäuser, M. Wilcoxon-Signed-Rank Test. In International Encyclopedia of Statistical Science; Lovric, M., Ed.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 1658–1659. [Google Scholar] [CrossRef]

- Zaanen, M.; Noord, N. Model merging versus model splitting context-free grammar induction. In Proceedings of the International Conference on Grammatical Inference, College Park, MD, USA, 5–8 September 2012; pp. 224–236. [Google Scholar]

| Dataset | Size | Positive Sentences | Negative Sentences | Max. Length of Sentence | Min. Length of Sentence | Number of Terminals |

|---|---|---|---|---|---|---|

| 1 | 213 | 113 | 100 | 18 | 3 | 4 |

| 2 | 220 | 120 | 100 | 18 | 2 | 2 |

| 3 | 204 | 104 | 100 | 14 | 4 | 2 |

| 4 | 240 | 140 | 100 | 20 | 4 | 5 |

| 5 | 208 | 108 | 100 | 20 | 8 | 4 |

| 6 | 200 | 100 | 100 | 20 | 12 | 2 |

| 7 | 198 | 98 | 100 | 20 | 4 | 4 |

| 8 | 200 | 100 | 100 | 14 | 3 | 2 |

| 9 | 200 | 100 | 100 | 16 | 6 | 2 |

| 10 | 200 | 100 | 100 | 20 | 11 | 2 |

| 11 | 200 | 100 | 100 | 20 | 1 | 2 |

| 12 | 204 | 104 | 100 | 20 | 4 | 6 |

| 13 | 205 | 105 | 100 | 20 | 3 | 4 |

| 14 | 200 | 100 | 100 | 20 | 3 | 3 |

| 15 | 200 | 100 | 100 | 20 | 3 | 5 |

| 16 | 216 | 116 | 100 | 20 | 2 | 7 |

| 17 | 197 | 97 | 100 | 20 | 3 | 3 |

| 18 | 206 | 106 | 100 | 20 | 5 | 5 |

| 19 | 240 | 140 | 100 | 12 | 2 | 2 |

| 20 | 209 | 109 | 100 | 20 | 6 | 4 |

| 21 | 213 | 113 | 100 | 20 | 5 | 6 |

| 22 | 205 | 105 | 100 | 20 | 7 | 4 |

| 23 | 209 | 109 | 100 | 20 | 5 | 5 |

| 24 | 199 | 99 | 100 | 20 | 3 | 3 |

| 25 | 207 | 107 | 100 | 16 | 3 | 6 |

| 26 | 200 | 100 | 100 | 20 | 2 | 2 |

| 27 | 190 | 90 | 100 | 16 | 2 | 5 |

| 28 | 224 | 124 | 100 | 18 | 5 | 6 |

| Set | WGCS | LS | ADIOS | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| |G| | Pr | Rc | F1 | Time | |G| | Pr | Rc | F1 | Time | |G| | Pr | Rc | F1 | Time | |

| 1 | 25 | 0.99 | 1.00 | 0.99 | 19:18 | 16.8 | 1.00 | 0.99 | 0.99 | 26:59:07 | 28.2 | 0.90 | 0.98 | 0.94 | 1.2 |

| 2 | 47 | 0.99 | 0.98 | 0.99 | 14:54 | 75.0 | 0.98 | 0.88 | 0.93 | 97:54:37 | 33.8 | 0.63 | 1.00 | 0.77 | 2.0 |

| 3 | 16 | 1.00 | 1.00 | 1.00 | 1:43 | 34.8 | 0.99 | 0.90 | 0.94 | 56:44:14 | 34.4 | 0.51 | 1.00 | 0.68 | 2.4 |

| 4 | 40.6 | 0.98 | 0.99 | 0.97 | 16:12 | 19.4 | 1.00 | 1.00 | 1.00 | 7:22:35 | 26.4 | 0.60 | 1.00 | 0.75 | 3.0 |

| 5 | 38 | 0.98 | 0.98 | 0.98 | 1:41:53 | 10.2 | 1.00 | 1.00 | 1.00 | 19:06:57 | 30.0 | 0.52 | 1.00 | 0.68 | 4.5 |

| 6 | 11.2 | 1.00 | 1.00 | 1.00 | 5:43 | 132.0 | 1.00 | 0.48 | 0.61 | 4:37:01 | 25.2 | 0.5 | 1.00 | 0.67 | 4.3 |

| 7 | 19.2 | 1.00 | 1.00 | 1.00 | 48:31 | 28.2 | 1.00 | 0.94 | 0.97 | 2:50:37 | 26.4 | 0.49 | 1.00 | 0.66 | 4.7 |

| 8 | 56.6 | 0.94 | 0.95 | 0.94 | 2:21:43 | 92.9 | 0.79 | 0.51 | 0.61 | 89:22:16 | 24.2 | 0.50 | 1.00 | 0.67 | 4.0 |

| 9 | 41.2 | 0.76 | 0.90 | 0.81 | 1:51:15 | 192.4 | 0.20 | 0.02 | 0.04 | 2:24:38 | 20.6 | 0.50 | 1.00 | 0.67 | 6.9 |

| 10 | 42.4 | 0.85 | 0.93 | 0.88 | 4:13:26 | 192.2 | 0.20 | 0.01 | 0.02 | 2:21:31 | 31.0 | 0.50 | 1.00 | 0.67 | 5.9 |

| 11 | 7.8 | 1.00 | 0.99 | 0.99 | 1:05:39 | 67.4 | 0.99 | 0.78 | 0.86 | 16:53:53 | 29.6 | 0.50 | 1.00 | 0.67 | 6.0 |

| 12 | 21.4 | 0.97 | 0.99 | 0.98 | 1:23:47 | 16.0 | 1.00 | 0.99 | 0.99 | 1:59:20 | 24.2 | 0.56 | 1.00 | 0.72 | 6.0 |

| 13 | 40 | 0.97 | 0.99 | 0.98 | 1:05:18 | 9.8 | 1.00 | 0.99 | 0.99 | 15:26:20 | 27.8 | 0.51 | 1.00 | 0.68 | 7.8 |

| 14 | 42.2 | 0.99 | 0.98 | 0.98 | 1:27:58 | 84.0 | 0.96 | 0.71 | 0.80 | 49:18:12 | 27.8 | 0.50 | 1.00 | 0.67 | 8.7 |

| 15 | 57 | 0.96 | 0.91 | 0.93 | 1:22:26 | 26.0 | 0.99 | 0.92 | 0.95 | 31:39:10 | 23.2 | 0.50 | 1.00 | 0.67 | 7.7 |

| 16 | 57.2 | 0.98 | 0.93 | 0.96 | 47:29 | 12.8 | 1.00 | 1.00 | 1.00 | 4:18:35 | 32.2 | 0.56 | 0.98 | 0.71 | 10.5 |

| 17 | 40.2 | 0.97 | 0.99 | 0.98 | 52:33 | 93.5 | 0.98 | 0.82 | 0.88 | 86:43:13 | 20.6 | 0.49 | 1.00 | 0.66 | 8.4 |

| 18 | 27.6 | 1.00 | 1.00 | 1.00 | 5:53 | 26.2 | 1.00 | 0.91 | 0.95 | 2:46:58 | 21.2 | 0.51 | 1.00 | 0.68 | 9.3 |

| 19 | 65.4 | 0.96 | 0.89 | 0.92 | 17:03 | 82.9 | 0.84 | 0.61 | 0.70 | 88:28:50 | 23.4 | 0.58 | 0.00 | 0.73 | 8.5 |

| 20 | 64 | 0.96 | 0.90 | 0.93 | 1:56:21 | 12.4 | 1.00 | 0.99 | 0.99 | 75:31:18 | 20.8 | 0.52 | 1.00 | 0.69 | 10.2 |

| 21 | 46.8 | 0.99 | 1.00 | 0.99 | 55:27 | 15.2 | 1.00 | 1.00 | 1.00 | 62:32:23 | 25.0 | 0.60 | 1.00 | 0.74 | 8.5 |

| 22 | 40.2 | 0.98 | 1.00 | 0.99 | 1:08:24 | 14.0 | 1.00 | 0.96 | 0.98 | 17:09:24 | 24.0 | 0.51 | 1.00 | 0.68 | 10.7 |

| 23 | 23.4 | 0.97 | 0.99 | 0.98 | 20:59 | 14.8 | 1.00 | 0.99 | 0.99 | 46:38:02 | 25.0 | 0.52 | 1.00 | 0.69 | 10.5 |

| 24 | 50.6 | 0.88 | 0.95 | 0.91 | 1:17:20 | 75.8 | 0.98 | 0.71 | 0.82 | 28:55:28 | 28.0 | 0.50 | 1.00 | 0.66 | 11.5 |

| 25 | 54.6 | 0.98 | 0.88 | 0.92 | 16:08 | 17.0 | 1.00 | 0.99 | 0.99 | 38:25:13 | 24.2 | 0.52 | 1.00 | 0.68 | 12.7 |

| 26 | 48 | 0.87 | 0.97 | 0.91 | 2:15:20 | 122.0 | 0.99 | 0.53 | 0.68 | 84:07:51 | 28.4 | 0.50 | 1.00 | 0.67 | 16.0 |

| 27 | 29.8 | 0.99 | 0.98 | 0.98 | 4:43 | 18.2 | 1.00 | 0.96 | 0.98 | 18:43:47 | 21.0 | 0.47 | 1.00 | 0.64 | 12.3 |

| 28 | 30.4 | 0.96 | 0.97 | 0.98 | 18:07 | 25.8 | 1.00 | 1.00 | 1.00 | 18:21:58 | 23.4 | 0.55 | 1.00 | 0.71 | 11.2 |

| Avg | 38.7 | 0.96 | 0.97 | 0.96 | 1:01:59 | 54.6 | 0.92 | 0.81 | 0.85 | 35:37:59 | 26.1 | 0.54 | 0.96 | 0.70 | 7.7 |

| WGCS vs. LS | WGCS vs. ADIOS | LS vs. ADIOS |

|---|---|---|

| 1.87 × 10−2 | 3.74 × 10−6 | 1.81 × 10−3 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gabor, M.; Wieczorek, W.; Unold, O. Split-Based Algorithm for Weighted Context-Free Grammar Induction. Appl. Sci. 2021, 11, 1030. https://doi.org/10.3390/app11031030

Gabor M, Wieczorek W, Unold O. Split-Based Algorithm for Weighted Context-Free Grammar Induction. Applied Sciences. 2021; 11(3):1030. https://doi.org/10.3390/app11031030

Chicago/Turabian StyleGabor, Mateusz, Wojciech Wieczorek, and Olgierd Unold. 2021. "Split-Based Algorithm for Weighted Context-Free Grammar Induction" Applied Sciences 11, no. 3: 1030. https://doi.org/10.3390/app11031030

APA StyleGabor, M., Wieczorek, W., & Unold, O. (2021). Split-Based Algorithm for Weighted Context-Free Grammar Induction. Applied Sciences, 11(3), 1030. https://doi.org/10.3390/app11031030