Immersive Virtual Reality Experience of Historical Events Using Haptics and Locomotion Simulation

Abstract

1. Introduction

1.1. Related Work

1.2. Design and Contribution

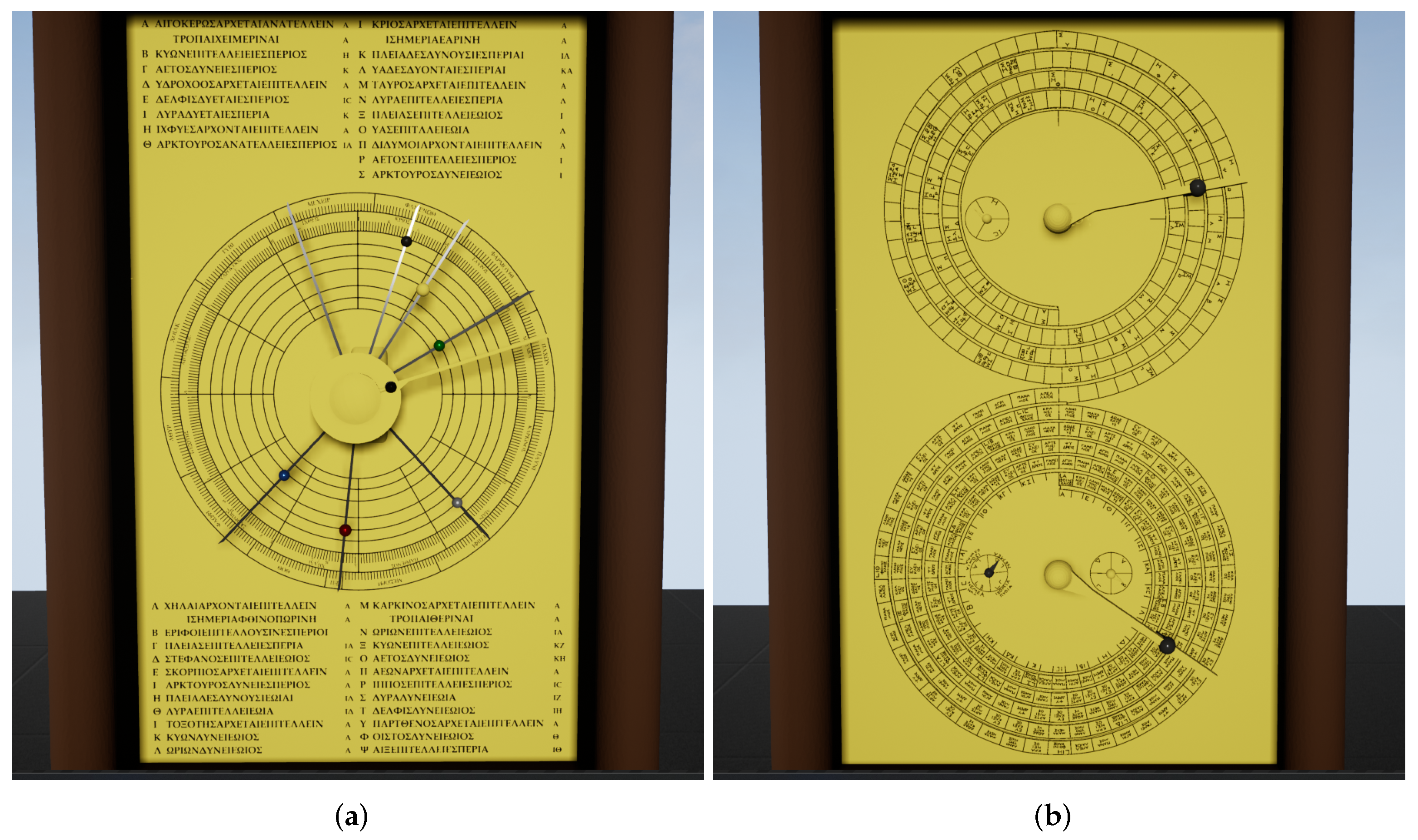

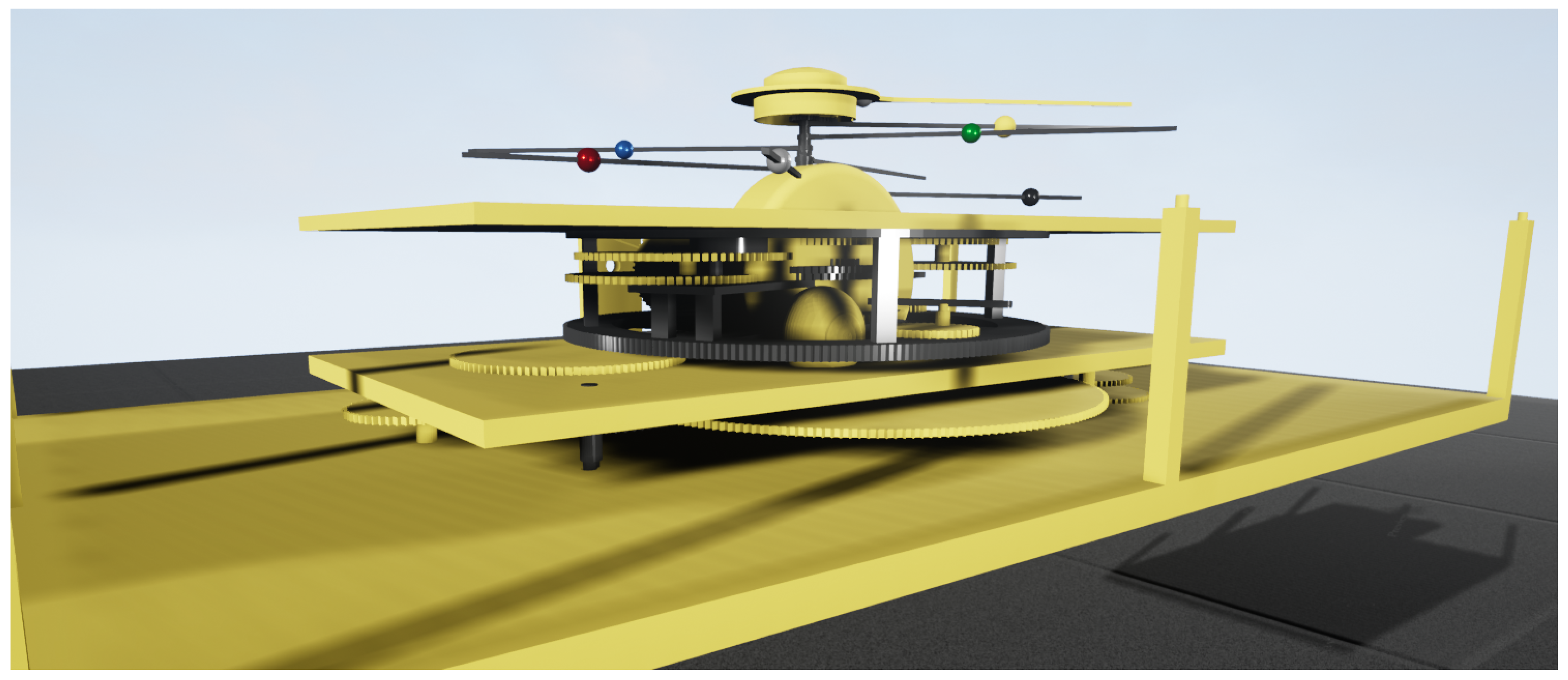

1.3. The Antikythera Mechanism

2. Materials and Methods

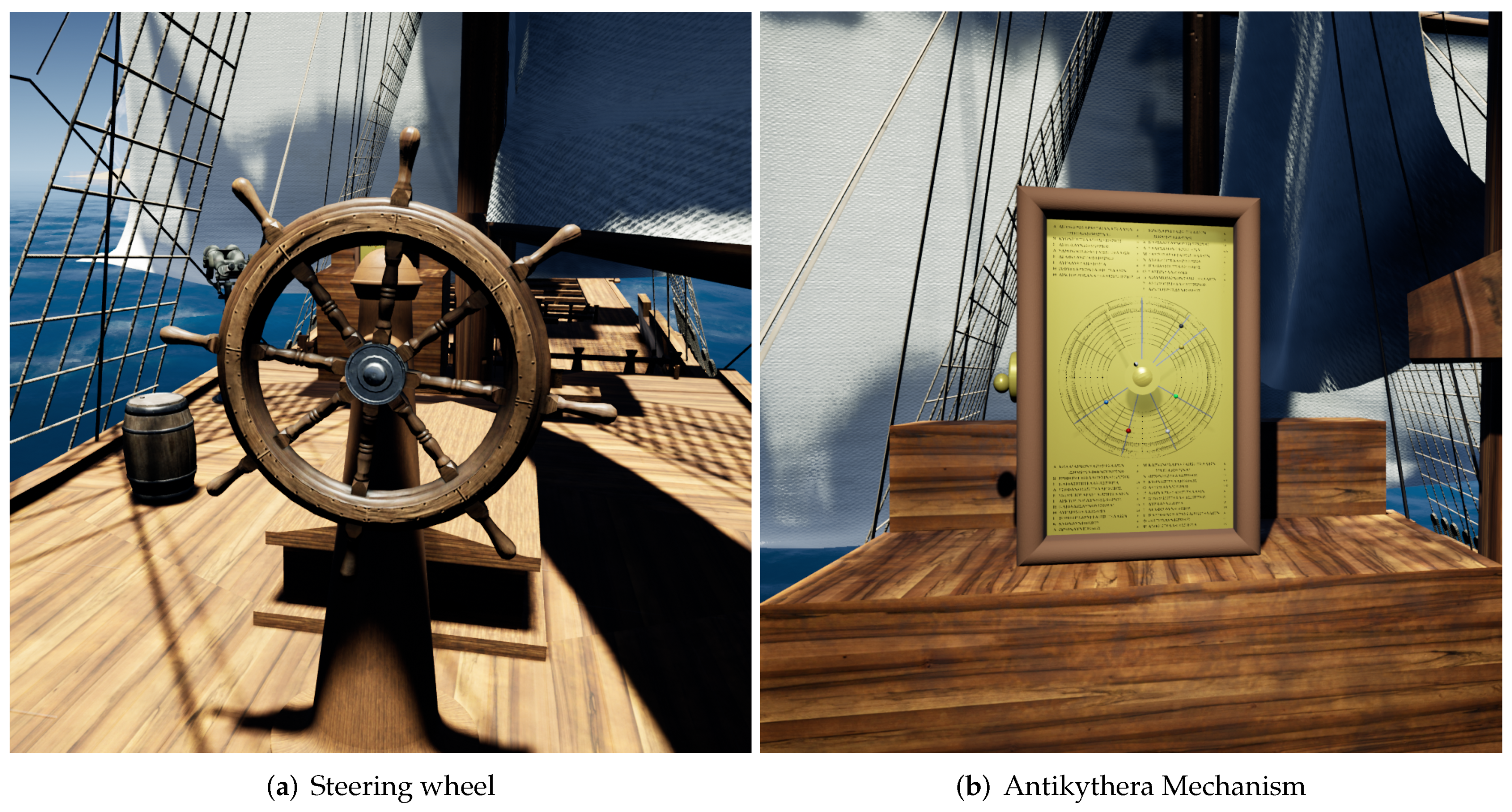

2.1. Design of the Virtual Environment

- Look through the binoculars: When the user walks up to the binoculars, they can look through them. A render texture, captured by a separate camera with a higher depth ratio so that everything appears closer, is added to the viewport;

- Use the Antikythera Mechanism: The user can grab the reconstructed mechanism and rotate it to see the positions of the various stars and planets. Additional information helps the user understand how the mechanism works and what each gear represents (Figure 3b).

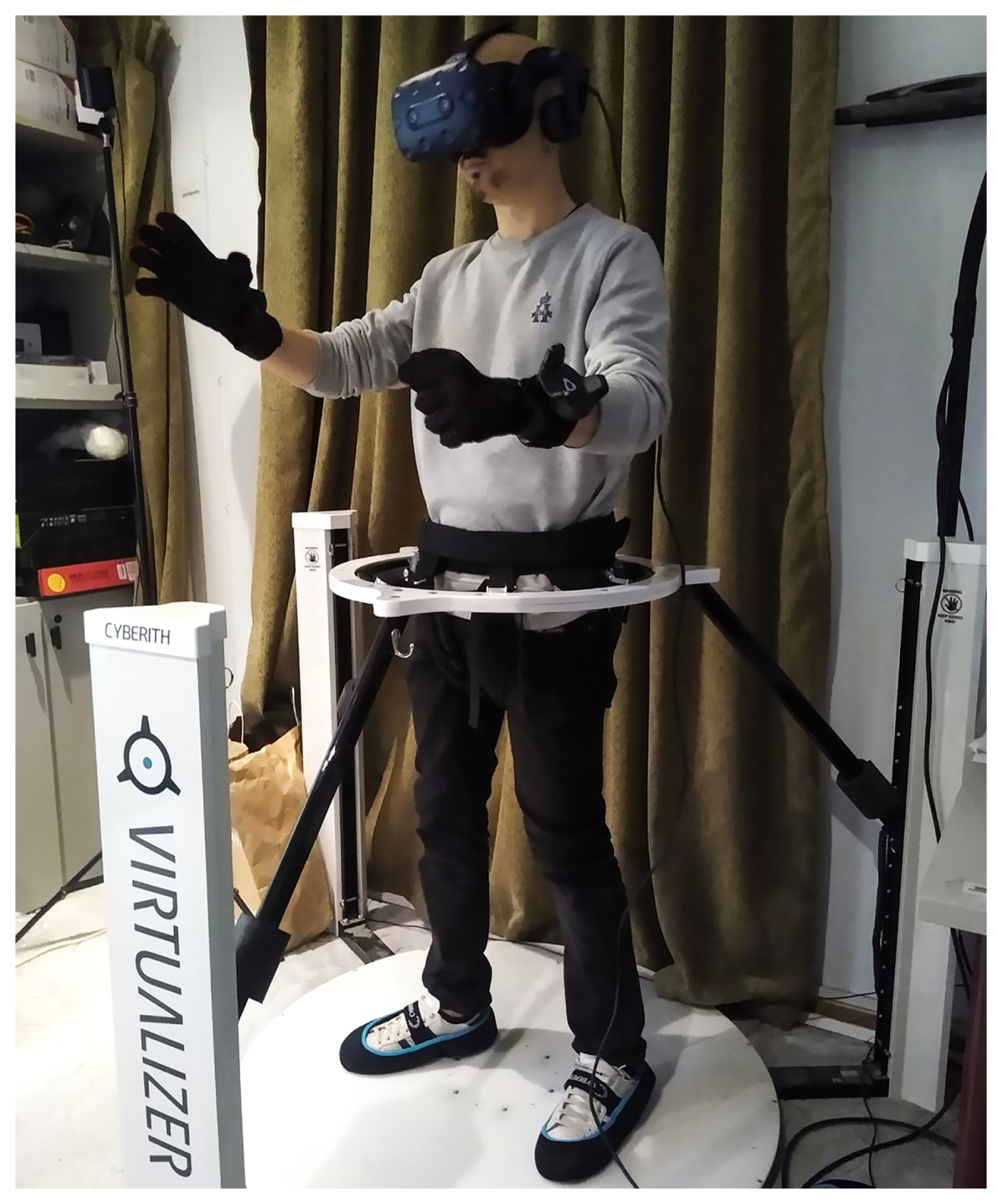

2.2. Locomotion Simulation

- MovementVector: a 3-dimensional vector describing the movement speed along each of the three axes. The MovementVector is multiplied by a user-defined variable called speedMultiplier so that the movement speed can be adjusted to each individual walking style;

- OrientationVector: a 4-dimensional vector describing the user’s orientation as a quaternion. The OrientationVector is transferred to the player’s local coordinate system by multiplying it by the corresponding transformation matrix or by using an appropriate function provided by Unreal’s library;

- UserLocation: a 3-dimensional vector defining the user’s position along each of the three axes.
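The three quantities above can be combined into a single per-frame position update. The following is a minimal sketch in plain Python rather than Unreal code, under several assumptions that the text does not state: the quaternion is stored as (w, x, y, z) and has unit length, the orientation is used to rotate the scaled MovementVector before it is added to UserLocation, and the update is integrated over a frame time `dt`. The function names `rotate_by_quaternion` and `step_location` are hypothetical.

```python
import math

Vec3 = tuple[float, float, float]
Quat = tuple[float, float, float, float]  # assumed layout: (w, x, y, z), unit length


def rotate_by_quaternion(v: Vec3, q: Quat) -> Vec3:
    """Rotate vector v by the unit quaternion q."""
    w, x, y, z = q
    vx, vy, vz = v
    # t = 2 * cross(q_xyz, v)
    tx = 2.0 * (y * vz - z * vy)
    ty = 2.0 * (z * vx - x * vz)
    tz = 2.0 * (x * vy - y * vx)
    # v' = v + w * t + cross(q_xyz, t)
    return (
        vx + w * tx + (y * tz - z * ty),
        vy + w * ty + (z * tx - x * tz),
        vz + w * tz + (x * ty - y * tx),
    )


def step_location(
    user_location: Vec3,
    movement_vector: Vec3,
    orientation_vector: Quat,
    speed_multiplier: float,
    dt: float,
) -> Vec3:
    """Advance the user's position by one frame (assumed update rule)."""
    # Scale the raw movement speed to the individual walking style.
    scaled = tuple(speed_multiplier * c for c in movement_vector)
    # Align the movement with the direction the user is facing.
    oriented = rotate_by_quaternion(scaled, orientation_vector)
    # Integrate over the frame time.
    return tuple(p + d * dt for p, d in zip(user_location, oriented))


# Example: the user faces 90 degrees to the left (rotation about the z axis)
# and walks "forward" along the local x axis.
quarter_turn = (math.sqrt(0.5), 0.0, 0.0, math.sqrt(0.5))
new_location = step_location((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), quarter_turn, 2.0, 0.5)
```

In this example the forward motion along x ends up along y, so `new_location` is approximately (0, 1, 0). In an engine, the rotation step would normally be delegated to the engine's own quaternion type instead of being written by hand.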

2.3. Hand Tracking and Haptic Feedback

2.4. Educational Content

2.5. User Evaluation

3. Results and Discussion

3.1. Level of Immersion

3.2. Aspects of Immersion

3.3. VR for Cultural Heritage

3.4. Limitations

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Lee, H.; Jung, T.H.; tom Dieck, M.C.; Chung, N. Experiencing immersive virtual reality in museums. Inf. Manag. 2020, 57, 103229.

- Britain, S.; Liber, O. A Framework for Pedagogical Evaluation of Virtual Learning Environments. In JISC Technology Applications Programme. Report 41; 1999. Available online: https://files.eric.ed.gov/fulltext/ED443394.pdf (accessed on 26 October 2021).

- Weller, M. Virtual Learning Environments: Using, Choosing and Developing Your VLE; Routledge: Abingdon, UK, 2007.

- Drossis, G.; Birliraki, C.; Stephanidis, C. Interaction with immersive cultural heritage environments using virtual reality technologies. In Proceedings of the International Conference on Human-Computer Interaction, Las Vegas, NV, USA, 15–20 July 2018; pp. 177–183.

- Moustakas, K.; Strintzis, M.G.; Tzovaras, D.; Carbini, S.; Bernier, O.; Viallet, J.E.; Raidt, S.; Mancas, M.; Dimiccoli, M.; Yagci, E.; et al. Masterpiece: Physical interaction and 3D content-based search in VR applications. IEEE MultiMedia 2006, 13, 92–100.

- Kersten, T.P.; Tschirschwitz, F.; Deggim, S.; Lindstaedt, M. Virtual reality for cultural heritage monuments—From 3d data recording to immersive visualisation. In Proceedings of the Euro-Mediterranean Conference, Nicosia, Cyprus, 29 October–3 November 2018; pp. 74–83.

- Paladini, A.; Dhanda, A.; Reina Ortiz, M.; Weigert, A.; Nofal, E.; Min, A.; Gyi, M.; Su, S.; Van Balen, K.; Santana Quintero, M. Impact of virtual reality experience on accessibility of cultural heritage. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 929–936.

- Cao, D.; Li, G.; Zhu, W.; Liu, Q.; Bai, S.; Li, X. Virtual reality technology applied in digitalization of cultural heritage. Clust. Comput. 2019, 22, 10063–10074.

- Tsita, C.; Satratzemi, M. A serious game design and evaluation approach to enhance cultural heritage understanding. In Proceedings of the International Conference on Games and Learning Alliance, Athens, Greece, 27–29 November 2019; pp. 438–446.

- Volkmar, G.; Wenig, N.; Malaka, R. Memorial Quest-A Location-based Serious Game for Cultural Heritage Preservation. In Proceedings of the 2018 Annual Symposium on Computer-Human Interaction in Play Companion Extended Abstracts, Melbourne, Australia, 28–31 October 2018; pp. 661–668.

- Luigini, A.; Basso, A. Heritage education for primary age through an immersive serious game. In From Building Information Modelling to Mixed Reality; Springer: Cham, Switzerland, 2021; pp. 157–174.

- Čejka, J.; Zsíros, A.; Liarokapis, F. A hybrid augmented reality guide for underwater cultural heritage sites. Pers. Ubiquitous Comput. 2020, 24, 1–14.

- Bruno, F.; Lagudi, A.; Barbieri, L.; Muzzupappa, M.; Mangeruga, M.; Cozza, M.; Cozza, A.; Ritacco, G.; Peluso, R. Virtual reality technologies for the exploitation of underwater cultural heritage. In Latest Developments in Reality-Based 3D Surveying and Modelling; Remondino, F., Georgopoulos, A., González-Aguilera, D., Agrafiotis, P., Eds.; MDPI: Basel, Switzerland, 2018; pp. 220–236.

- Ruddle, R.A.; Lessels, S. For efficient navigational search, humans require full physical movement, but not a rich visual scene. Psychol. Sci. 2006, 17, 460–465.

- Frommel, J.; Sonntag, S.; Weber, M. Effects of controller-based locomotion on player experience in a virtual reality exploration game. In Proceedings of the 12th International Conference on the Foundations of Digital Games, Hyannis, MA, USA, 14–17 August 2017; pp. 1–6.

- Schäfer, A.; Reis, G.; Stricker, D. Controlling Teleportation-Based Locomotion in Virtual Reality with Hand Gestures: A Comparative Evaluation of Two-Handed and One-Handed Techniques. Electronics 2021, 10, 715.

- Caggianese, G.; Capece, N.; Erra, U.; Gallo, L.; Rinaldi, M. Freehand-steering locomotion techniques for immersive virtual environments: A comparative evaluation. Int. J. Hum.-Comput. Interact. 2020, 36, 1734–1755.

- Nilsson, N.C.; Serafin, S.; Steinicke, F.; Nordahl, R. Natural walking in virtual reality: A review. Comput. Entertain. (CIE) 2018, 16, 1–22.

- Cherni, H.; Nicolas, S.; Métayer, N. Using virtual reality treadmill as a locomotion technique in a navigation task: Impact on user experience–case of the KatWalk. Int. J. Virtual Real. 2021, 21, 1–14.

- Oh, K.; Stanley, C.J.; Damiano, D.L.; Kim, J.; Yoon, J.; Park, H.S. Biomechanical evaluation of virtual reality-based turning on a self-paced linear treadmill. Gait Posture 2018, 65, 157–162.

- Peruzzi, A.; Zarbo, I.R.; Cereatti, A.; Della Croce, U.; Mirelman, A. An innovative training program based on virtual reality and treadmill: Effects on gait of persons with multiple sclerosis. Disabil. Rehabil. 2017, 39, 1557–1563.

- Rose, T.; Nam, C.S.; Chen, K.B. Immersion of virtual reality for rehabilitation—Review. Appl. Ergon. 2018, 69, 153–161.

- Kreimeier, J.; Hammer, S.; Friedmann, D.; Karg, P.; Bühner, C.; Bankel, L.; Götzelmann, T. Evaluation of different types of haptic feedback influencing the task-based presence and performance in virtual reality. In Proceedings of the 12th ACM International Conference on PErvasive Technologies Related to Assistive Environments, Rhodes, Greece, 5–7 June 2019; pp. 289–298.

- Hosseini, M.; Sengül, A.; Pane, Y.; De Schutter, J.; Bruyninck, H. Exoten-glove: A force-feedback haptic glove based on twisted string actuation system. In Proceedings of the 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing, China, 27–31 August 2018; pp. 320–327.

- Moustakas, K.; Nikolakis, G.; Tzovaras, D.; Strintzis, M.G. A geometry education haptic VR application based on a new virtual hand representation. In Proceedings of the IEEE Proceedings. VR 2005. Virtual Reality, Bonn, Germany, 12–16 March 2005; pp. 249–252.

- Butt, A.L.; Kardong-Edgren, S.; Ellertson, A. Using game-based virtual reality with haptics for skill acquisition. Clin. Simul. Nurs. 2018, 16, 25–32.

- CURVE. Available online: https://curve.gr/en/ (accessed on 26 October 2021).

- Efstathiou, K.; Efstathiou, M. Celestial Gearbox. Mech. Eng. 2018, 140, 31–35.

- Kaltsas, N.E.; Vlachogianni, E.; Bouyia, P. The Antikythera Shipwreck: The Ship, the Treasures, the Mechanism; National Archaeological Museum, April 2012–April 2013; Kapon Editions: Athens, Greece, 2013.

- Epic Games. Unreal Engine. 2019. Available online: https://www.unrealengine.com (accessed on 26 October 2021).

- Mastrocinque, A. The Antikythera shipwreck and Sinope’s culture during the Mithridatic wars. In Mithridates VI and the Pontic Kingdom; Højte, J.M., Ed.; Aarhus University Press: Aarhus, Denmark, 2009; pp. 313–319.

- Kougianos, G.; Moustakas, K. Large-scale ray traced water caustics in real-time using cascaded caustic maps. Comput. Graph. 2021, 98, 255–267.

- Slater, M.; Usoh, M.; Steed, A. Taking steps: The influence of a walking technique on presence in virtual reality. ACM Trans. Comput.-Hum. Interact. (TOCHI) 1995, 2, 201–219.

- Cannavò, A.; Calandra, D.; Pratticò, F.G.; Gatteschi, V.; Lamberti, F. An evaluation testbed for locomotion in virtual reality. IEEE Trans. Vis. Comput. Graph. 2020, 27, 1871–1889.

- Cakmak, T.; Hager, H. Cyberith Virtualizer: A Locomotion Device for Virtual Reality. In Proceedings of the ACM SIGGRAPH 2014 Emerging Technologies, Vancouver, BC, Canada, 10–14 August 2014; p. 1.

- Pakzad, A.; Iacoviello, F.; Ramsey, A.; Speller, R.; Griffiths, J.; Freeth, T.; Gibson, A. Improved X-ray computed tomography reconstruction of the largest fragment of the Antikythera Mechanism, an ancient Greek astronomical calculator. PLoS ONE 2018, 13, e0207430.

- Freeth, T.; Jones, A. The Cosmos in the Antikythera Mechanism; ISAW Papers; ISAW: New York, NY, USA, 2012.

- Witmer, B.G.; Singer, M.J. Measuring presence in virtual environments: A presence questionnaire. Presence 1998, 7, 225–240.

- Albert, J.; Sung, K. User-centric classification of virtual reality locomotion. In Proceedings of the 24th ACM Symposium on Virtual Reality Software and Technology, Tokyo, Japan, 28 November–1 December 2018; pp. 1–2.

- Kreimeier, J.; Götzelmann, T. First steps towards walk-in-place locomotion and haptic feedback in virtual reality for visually impaired. In Proceedings of the Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–6.

- Han, S.; Kim, J. A study on immersion of hand interaction for mobile platform virtual reality contents. Symmetry 2017, 9, 22.

- Kang, H.; Lee, G.; Han, J. Visual manipulation for underwater drag force perception in immersive virtual environments. In Proceedings of the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, 23–27 March 2019; pp. 38–46.

| Gear Name | Function of the Gear/Pointer | Mechanism Formula | Gear Direction |
|---|---|---|---|
| x | Year gear | 1 (by definition) | cw |
| b | Moon’s orbit | Time(b) = Time(x) × (c1/b2) × (d1/c2) × (e2/d2) × (k1/e5) × (e6/k2) × (b3/e1) | cw |
| r | Lunar phase display | Time(r) = 1/((1/Time(b2 [mean sun] or sun3 [true sun])) − (1/Time(b))) | |
| n* | Metonic pointer | Time(n) = Time(x) × (l1/b2) × (m1/l2) × (n1/m2) | ccw |
| o* | Games dial pointer | Time(o) = Time(n) × (o1/n2) | cw |
| q* | Callippic pointer | Time(q) = Time(n) × (p1/n3) × (q1/p2) | ccw |
| e* | Lunar orbit precession | Time(e) = Time(x) × (l1/b2) × (m1/l2) × (e3/m3) | ccw |
| g* | Saros cycle | Time(g) = Time(e) × (f1/e4) × (g1/f2) | ccw |
| i* | Exeligmos pointer | Time(i) = Time(g) × (h1/g2) × (i1/h2) | ccw |
| The following gearing is proposed in the 2012 Freeth and Jones reconstruction: | | | |
| sun3* | True sun pointer | Time(sun3) = Time(x) × (sun3/sun1) × (sun2/sun3) | cw |
| mer2* | Mercury pointer | Time(mer2) = Time(x) × (mer2/mer1) | cw |
| ven2* | Venus pointer | Time(ven2) = Time(x) × (ven1/sun1) | cw |
| mars4* | Mars pointer | Time(mars4) = Time(x) × (mars2/mars1) × (mars4/mars3) | cw |
| jup4* | Jupiter pointer | Time(jup4) = Time(x) × (jup2/jup1) × (jup4/jup3) | cw |
| sat4* | Saturn pointer | Time(sat4) = Time(x) × (sat2/sat1) × (sat4/sat3) | cw |
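Each formula in the table multiplies the period of the year gear by a chain of tooth-count ratios. As a worked example, the sketch below evaluates the Metonic pointer formula, Time(n) = Time(x) × (l1/b2) × (m1/l2) × (n1/m2), with exact fractions. The tooth counts in `TEETH` are not given in this text; they are assumed values taken from commonly published reconstructions of the mechanism, and the helper name `train_period` is hypothetical.

```python
from fractions import Fraction

# Assumed tooth counts (not stated in this article).
TEETH = {"b2": 64, "l1": 38, "l2": 53, "m1": 96, "m2": 15, "n1": 53}

# Ratio chain from the table row for the Metonic pointer:
# Time(n) = Time(x) * (l1/b2) * (m1/l2) * (n1/m2)
METONIC_TRAIN = [("l1", "b2"), ("m1", "l2"), ("n1", "m2")]


def train_period(input_period, pairs, teeth):
    """Multiply an input period by each (numerator, denominator) tooth ratio."""
    period = Fraction(input_period)
    for numerator, denominator in pairs:
        period *= Fraction(teeth[numerator], teeth[denominator])
    return period


# Time(x) = 1 by definition, i.e. one turn of the year gear per year.
metonic_period = train_period(1, METONIC_TRAIN, TEETH)
print(metonic_period)  # 19/5
```

With these assumed tooth counts the pointer needs 19/5 = 3.8 years per turn, so five turns span the 19 years of the Metonic cycle. The other rows of the table can be evaluated the same way once their tooth counts are supplied.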

| Evaluate the Level of Control and Immersion You Perceived during Your Experience. | Mean Value | Standard Deviation |
|---|---|---|
| How aware were you of events occurring in the real world around you? | 2.70 | 1.08 |
| How much were you able to control events in the virtual world? | 3.90 | 0.72 |
| How responsive was the environment to actions that you initiated (or performed)? | 3.85 | 0.88 |
| How natural did your interactions with the environment seem? | 3.75 | 0.64 |
| How much did the visual aspects of the environment contribute to your overall immersion? | 4.65 | 0.49 |
| How much did the auditory aspects of the environment contribute to your overall immersion? | 4.15 | 1.04 |
| How natural was the mechanism which controlled movement through the environment? | 3.65 | 0.81 |
| How compelling was your sense of moving around inside the virtual environment? | 3.80 | 0.77 |
| How much did your experiences in the virtual environment seem consistent with your real-world experiences? | 3.45 | 1.10 |
| How involved were you in the virtual environment experience? | 4.30 | 0.66 |

| Evaluate the Importance of the Following for a More Immersive VR Experience. | Mean Value | Standard Deviation |
|---|---|---|
| Haptic Feedback | 3.90 | 0.85 |
| Locomotion through the treadmill | 3.75 | 1.02 |
| Interacting with objects in the scenes | 4.40 | 0.75 |

| Virtual Reality for Cultural Heritage | Mean Value | Standard Deviation |
|---|---|---|
| I think that my interest in courses and educational content would be higher if interactive content and VR systems were used. | 4.45 | 0.83 |
| It is easier to remember a historic fact if it is visualized. | 4.60 | 0.75 |
| I believe that VR systems could be utilized in other fields (science, art etc.). | 4.70 | 0.66 |
| After this experience I am more intrigued about learning about cultural heritage. | 4.00 | 0.86 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chrysanthakopoulou, A.; Kalatzis, K.; Moustakas, K. Immersive Virtual Reality Experience of Historical Events Using Haptics and Locomotion Simulation. Appl. Sci. 2021, 11, 11613. https://doi.org/10.3390/app112411613
