1. Introduction

Recently, due to the worldwide COVID-19 pandemic, the use of remote collaboration has increased [1,2]. Various conventional video conferencing solutions [3,4,5] for remote collaboration are limited in how realistically they can deliver the user’s work [6,7]. To overcome this, research on applying mixed reality (MR) to remote collaboration has recently been conducted [8,9]. In remote MR collaboration, end-to-end latency can arise from various sources, such as tracking, application, image generation, display, or network [10,11,12,13]. Latency is difficult to remove completely, since most of these sources are essential operations for MR. However, because latency affects both the usability (e.g., interaction satisfaction) and the efficiency of remote MR collaborations, it needs to be reduced.

Due to this need, various studies have been conducted to reduce latency. Conventional studies have focused on reducing the latency between the moment an interaction is performed and the moment information about that interaction is delivered. To identify a performed interaction in a remote MR collaboration, an action such as contact between a human and an object must be detected. In other words, in remote MR collaborations, it is difficult to determine whether the user has performed an interaction before such an action is detected. If interaction latency is defined as the time needed to confirm that an interaction has been performed according to the user’s intention in a remote MR collaboration, conventional solutions are limited because they can hardly reduce this latency.

In this paper, we propose an interaction prediction method for reducing interaction latency in remote MR collaborations. The proposed method reduces interaction latency by predicting interactions between a human and a virtual object, using consecutive hand joint information as input.

This paper is organized as follows. In Section 2, we describe how frequently used gestures in remote MR collaboration were derived from conventional studies and how the gestures for this study were selected. In Section 3, we propose a prediction method for the selected gestures. In Section 4, we conduct experiments with and without the proposed method and compare the results to examine its effectiveness. Finally, in Section 5, we present conclusions and future work.

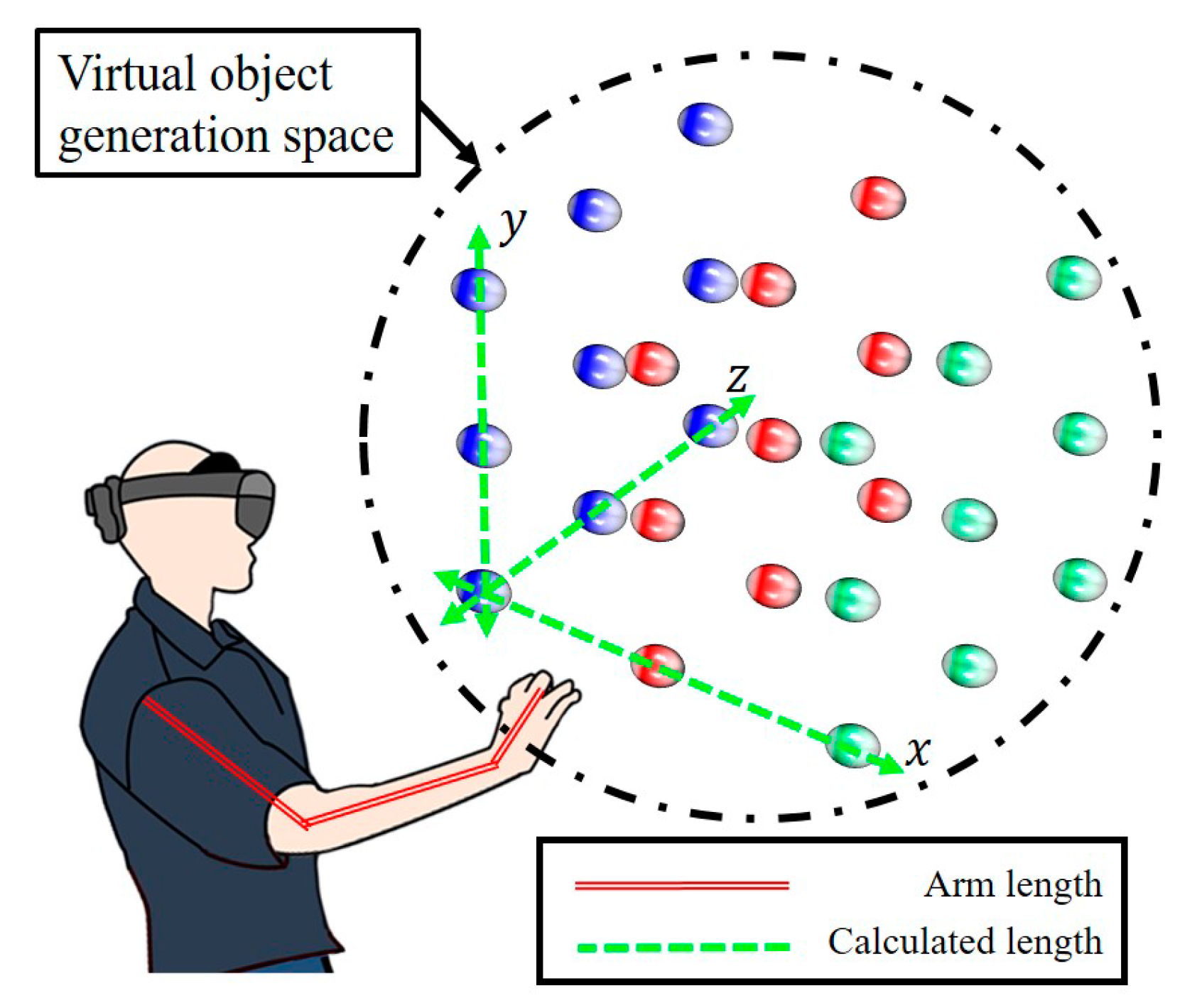

3. Proposed Method

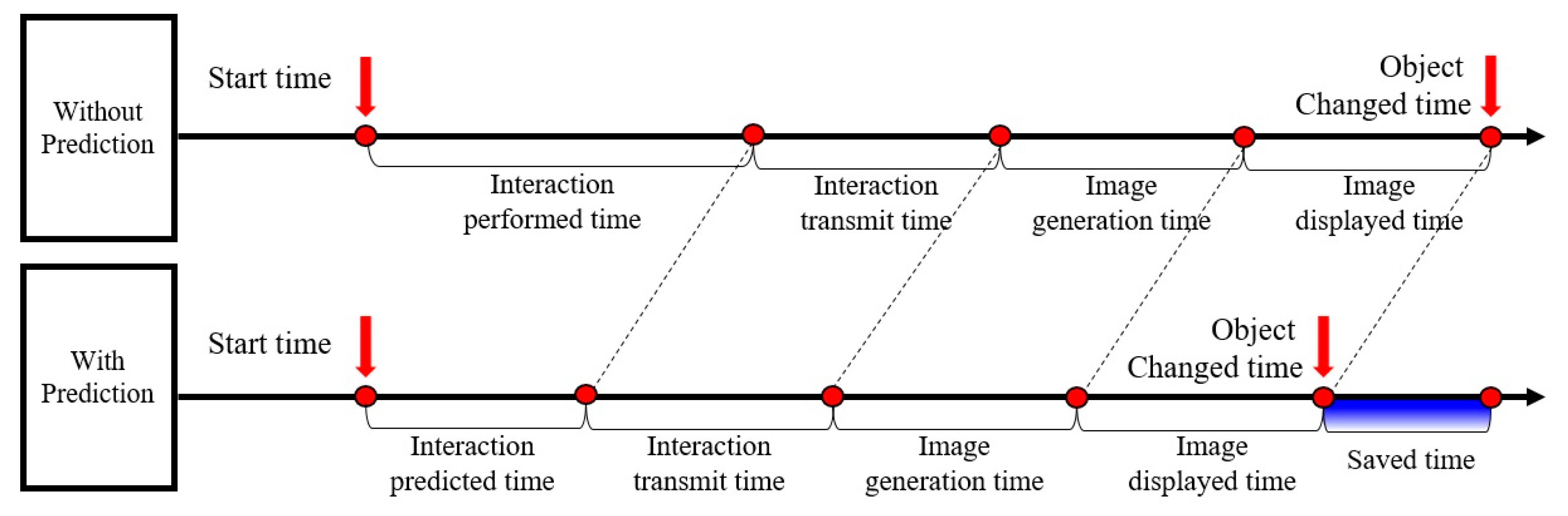

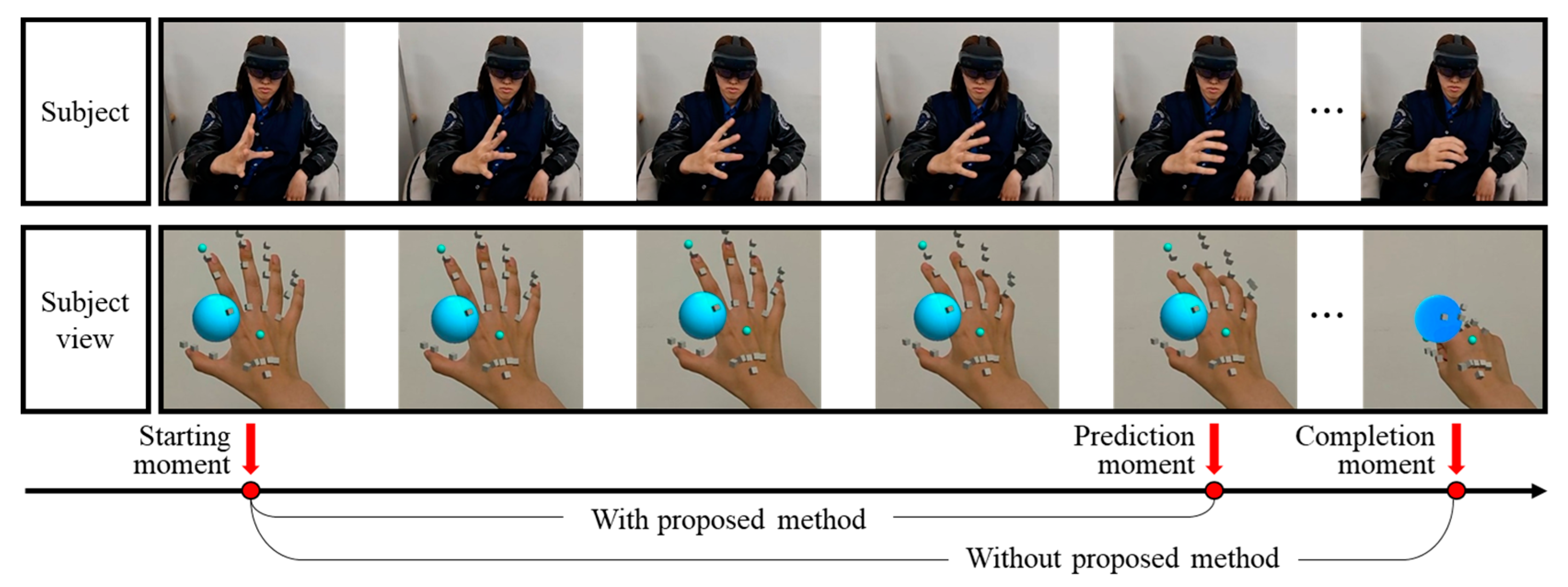

In this section, we describe a method for reducing interaction latency in human–virtual object (human–VO) interactions. In general, in remote MR collaborations that include human–VO interactions, remote users cannot know the other user’s intentions until an interaction is performed. Since a user’s intentions include the interaction target, the interaction type, etc., knowing them in advance makes it possible to predict changes in the virtual object and the environment (e.g., the object’s color or a sound effect). If these changes are predictable, the time until the change caused by the interaction is shown to the user can be shortened by the ‘saved time’ highlighted in blue in Figure 1.

To reduce interaction latency, this study proposes a method that obtains interaction information in advance in remote MR collaborations: it predicts the occurrence of an interaction before the human–VO interaction is actually performed.

There are many types of human–VO interactions used in MR environments [24]. One representative type, which requires no tools or devices other than see-through displays or HMDs, is gesture-based interaction. This study mainly targets gesture-based interactions because they are not tied to a specific device and can be widely applied to remote MR collaborations. Meanwhile, the gestures that can be used for interactions such as manipulating virtual objects in remote MR collaborations are diverse, and in many cases they even differ between applications. This study focused on gestures mainly used to transform a target object in human–VO interactions, such as grab and pinch [21].

For interaction prediction, the target gestures must be classified correctly. In the proposed method, k-nearest neighbor (k-NN) [25], which executes quickly on a relatively small dataset without significantly compromising accuracy, was used for gesture classification. Because the purpose of this study was to investigate the feasibility of the proposed method, a relatively small and simple k-NN algorithm was adopted.
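To make the classification step concrete, here is a minimal sketch of a plain k-NN majority vote over joint-angle feature vectors in pure Python. The function name, the Euclidean distance, and the toy angle values are assumptions for illustration; the text does not specify the distance metric or the implementation.

```python
import math
from collections import Counter

def knn_classify(sample, train_x, train_y, k=3):
    """Return the majority label among the k training samples closest to `sample`."""
    # Rank training indices by Euclidean distance to the query (assumed metric).
    order = sorted(range(len(train_x)), key=lambda i: math.dist(sample, train_x[i]))
    votes = Counter(train_y[i] for i in order[:k])
    # most_common keeps first-seen order for equal counts, so a tie goes to the nearer neighbor's label.
    return votes.most_common(1)[0][0]

# Toy feature vectors: made-up joint angles in degrees, one per finger.
train_x = [[60, 60, 60, 60, 60], [62, 58, 61, 59, 60],
           [150, 100, 170, 170, 170], [148, 102, 168, 171, 169]]
train_y = ["grab", "grab", "pinch", "pinch"]

print(knn_classify([61, 59, 60, 60, 60], train_x, train_y, k=3))      # grab
print(knn_classify([149, 101, 169, 170, 170], train_x, train_y, k=3))  # pinch
```

With k = 3 and only two classes, a strict majority always exists, so the tie-breaking rule only matters once more classes are added.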

A k-NN algorithm can misclassify an undefined hand gesture (hereafter, none) as one of the defined hand gestures (grab or pinch). Therefore, the existing k-NN was partially adjusted so that it could additionally classify undefined hand gestures. Because the performance of k-NN may vary with the value of k, three values (3, 5, and 7) were selected for k.
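The text does not say how k-NN was adjusted to output none. One possible adjustment, sketched here purely as an assumption, rejects a sample when even its nearest training sample is farther than a hypothetical threshold `reject_dist`, or when no label wins a strict majority of the k votes. The sketch then tries each of the three k values (3, 5, 7). Both rejection rules, the threshold value, and the toy data are hypothetical.

```python
import math
from collections import Counter

def knn_with_none(sample, train_x, train_y, k=5, reject_dist=30.0):
    """k-NN that may return "none" (hypothetical rejection rules, not from the paper)."""
    # (distance, label) pairs for the k nearest training samples.
    nearest = sorted((math.dist(sample, x), y) for x, y in zip(train_x, train_y))[:k]
    # Assumed rule 1: the sample is far from every defined gesture.
    if nearest[0][0] > reject_dist:
        return "none"
    # Assumed rule 2: the winning label must hold a strict majority of the k votes.
    label, count = Counter(y for _, y in nearest).most_common(1)[0]
    if 2 * count <= len(nearest):
        return "none"
    return label

# Toy single-frame joint angles in degrees (made-up values, 4 samples per gesture).
train_x = [
    [60, 60, 60, 60, 60], [62, 58, 61, 59, 60], [59, 61, 60, 62, 58], [61, 60, 59, 60, 61],
    [150, 100, 170, 170, 170], [148, 102, 168, 171, 169],
    [151, 99, 171, 169, 170], [149, 101, 169, 170, 171],
]
train_y = ["grab"] * 4 + ["pinch"] * 4

for k in (3, 5, 7):
    print(k, knn_with_none([61, 59, 60, 61, 60], train_x, train_y, k=k))
print(knn_with_none([100, 180, 30, 30, 30], train_x, train_y, k=3))  # far from both gestures
```

The distance rule catches hand shapes unlike any recorded gesture, while the majority rule catches shapes that sit between two gestures; larger k makes the majority rule stricter in absolute vote count.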

A dataset is essential for classifying gestures with k-NN. In MR, the hand is usually represented by a 20-keypoint model in which each keypoint corresponds to a joint. If all of this information is used, the dataset becomes very large as the number of gesture samples increases. Therefore, before creating the dataset for gesture classification, we derived a representative value of the hand from the joint information.
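A quick count shows why a compact representative value helps. Assuming each of the 20 keypoints carries an (x, y, z) position and each sample spans the 5 frames used later in this section, the per-sample and whole-dataset sizes compare as follows. The 3-coordinate assumption is ours, and the numbers are arithmetic, not measurements.

```python
KEYPOINTS = 20   # 20-keypoint hand model mentioned in the text
COORDS = 3       # x, y, z per keypoint (assumed 3D positions)
FINGERS = 5      # one joint angle per finger
FRAMES = 5       # frames per joint angle set (the 5-frame window described later)
SETS = 300       # joint angle sets in the dataset

raw_values = KEYPOINTS * COORDS * FRAMES   # values per sample with raw keypoints
angle_values = FINGERS * FRAMES            # values per sample with joint angles

print(raw_values, angle_values)                # 300 25
print(raw_values * SETS, angle_values * SETS)  # 90000 7500
```

Every k-NN query compares against all stored samples, so the twelvefold reduction per sample also shortens each distance computation.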

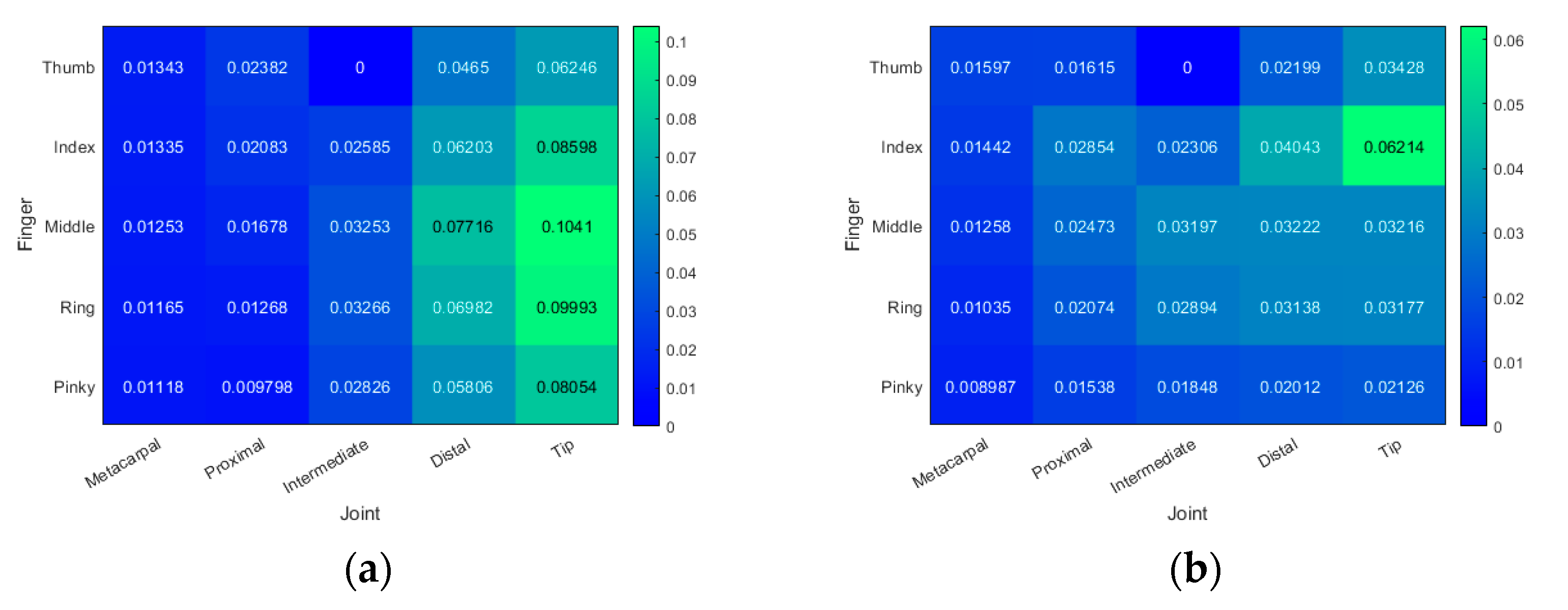

Since the representative value is used to classify the gesture, it should indicate whether or not the gesture is performed. In other words, the value that changes the most when the gesture is performed should be selected as the representative value. To find it, the grab and pinch gestures targeted in this study were performed, and the recorded joint trajectories are shown in Figure 2.

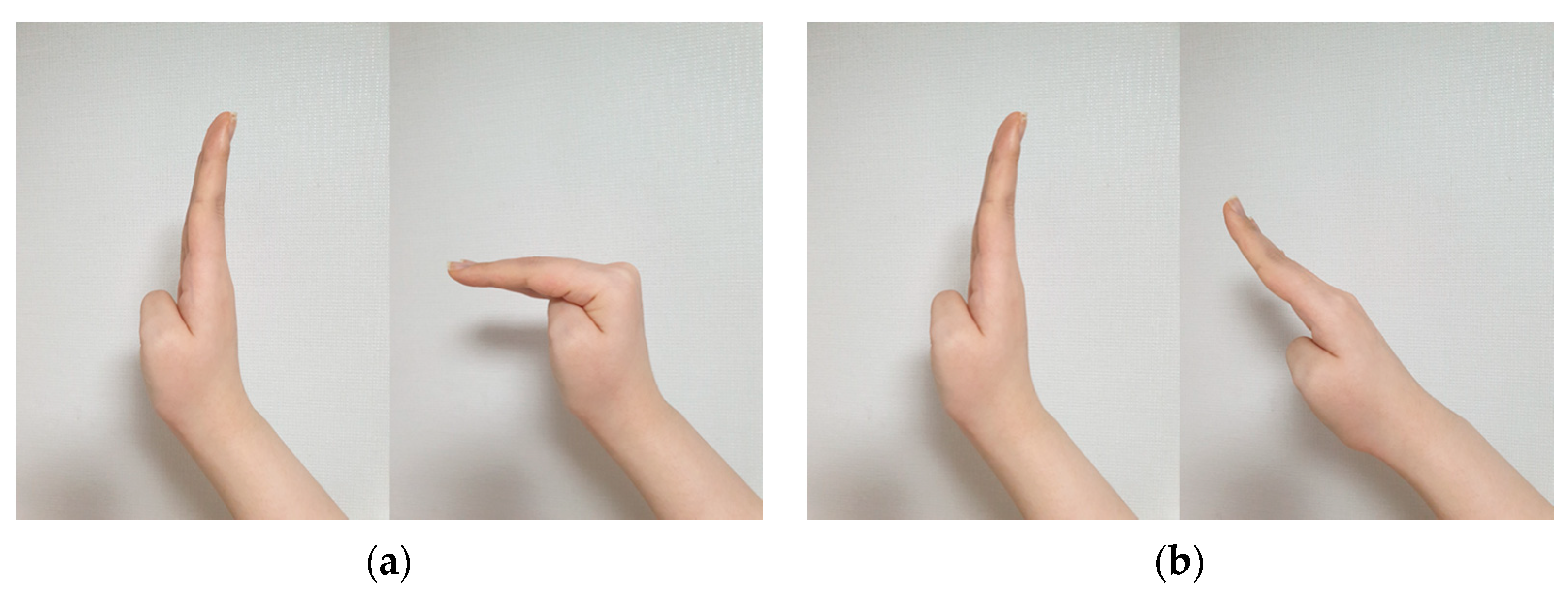

Figure 2 shows the joint trajectories for the grab and pinch gestures. To examine the degree of change, each joint’s information was recorded separately at the starting moment of the gesture (Figure 2a,d) and at the ending moment (Figure 2b,e). Figure 2c,f shows the starting and ending moments together; the dashed line represents the hand at the starting moment, and the solid line represents the hand at the ending moment. Although Figure 2c,f gives an approximate view of how much the joint information changed during each gesture, it is difficult to read off the degree of change of each individual joint. Therefore, the degree of change of each joint was calculated to confirm it more accurately.
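The text does not state how the degree of change was computed. A minimal sketch, assuming it is the Euclidean displacement of each joint between the starting and ending moments, is shown below. The function name, the joint-name keys (taken from the joints discussed in this section), and the toy coordinates are hypothetical.

```python
import math

# Joint names per finger, following the joints discussed in the text.
JOINTS = ("metacarpal", "proximal", "intermediate", "distal", "tip")

def joint_changes(start, end, joints=JOINTS):
    """Degree of change of each joint between a gesture's start and end.

    `start` and `end` map joint names to (x, y, z) positions. The change is
    measured as Euclidean displacement (an assumption). A joint that does not
    exist for this finger gets 0, mirroring how the text treats the thumb's
    missing intermediate joint.
    """
    changes = {}
    for name in joints:
        if name in start and name in end:
            changes[name] = math.dist(start[name], end[name])
        else:
            changes[name] = 0.0
    return changes

# Toy thumb positions (made-up; the thumb has no intermediate joint).
thumb_start = {"metacarpal": (0, 0, 0), "proximal": (1, 0, 0), "distal": (2, 0, 0), "tip": (3, 0, 0)}
thumb_end = {"metacarpal": (0, 0, 0), "proximal": (1, 0, 0), "distal": (2, 1, 0), "tip": (3, 4, 0)}
print(joint_changes(thumb_start, thumb_end))
```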

Figure 3 shows the degree of change of each joint for the grab and pinch gestures. Because the thumb has no intermediate joint, 0 was assigned to the thumb’s intermediate position. The other joints are colored according to their degree of change, from blue for smaller changes (0 in Figure 3a,b) to green for larger changes (0.1 in Figure 3a and 0.05 in Figure 3b). As a result, for grab, the tip joint changed greatly. For pinch, the tip joint and the distal joint of the index finger changed greatly; in addition, the tip joints of the other fingers tended to change considerably more than the other joints of each finger. Based on this, we selected the tip joint of each finger as a representative value of the hand for classifying grab and pinch gestures. However, additional information is required because the hand’s movement cannot be determined from the tip joint alone. For example, Figure 4a,b shows two cases in which the tip joint changed greatly; these cases are difficult to distinguish using only the information that the tip joint changed greatly.
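The joint selection in the text was made by inspecting Figure 3. As an illustration only, the same reasoning can be expressed as a ranking: average each joint type’s change over the fingers, take the largest as the representative joint, and take the smallest ones as anchors. The function name, the averaging step, and the numbers are assumptions. The sketch deliberately skips the second-ranked joint (distal in the toy data), matching the decision described next in the text.

```python
from statistics import mean

def select_joints(per_finger_changes, n_anchor=2):
    """Pick the joint type with the largest average change and the n_anchor smallest.

    per_finger_changes: {finger: {joint: change}} for one gesture.
    """
    joint_names = list(next(iter(per_finger_changes.values())))
    # Average each joint type's change over all fingers.
    avg = {j: mean(f[j] for f in per_finger_changes.values()) for j in joint_names}
    ranked = sorted(avg, key=avg.get, reverse=True)  # largest change first
    return ranked[0], ranked[-n_anchor:]

# Toy changes for two fingers (made-up numbers, not the values in Figure 3).
changes = {
    "index":  {"metacarpal": 0.001, "proximal": 0.010, "intermediate": 0.040, "distal": 0.060, "tip": 0.090},
    "middle": {"metacarpal": 0.001, "proximal": 0.012, "intermediate": 0.050, "distal": 0.070, "tip": 0.100},
}
print(select_joints(changes))  # ('tip', ['proximal', 'metacarpal'])
```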

Even when additional information is used, the representativeness of the tip joint should be maintained. Therefore, based on the results in Figure 3, the metacarpal joint and the proximal joint, which showed the least change, were additionally selected. These joints help classify the gesture without compromising the representativeness of the tip joint; in particular, they help capture the overall degree of bending of the hand. Meanwhile, the more joints included when classifying a gesture, the larger the dataset and the longer the computation time. Therefore, we did not select the distal joint, which showed the largest change after the tip joint, because its change was almost the same as that of the tip. Eventually, in this study, the joint angle of each finger was calculated from the tip, metacarpal, and proximal joints using Equations (1)–(3):

$$\mathbf{v}_1 = P_M - P_P \tag{1}$$

$$\mathbf{v}_2 = P_T - P_P \tag{2}$$

$$\theta = \arccos\left(\frac{\mathbf{v}_1 \cdot \mathbf{v}_2}{\lVert \mathbf{v}_1 \rVert \, \lVert \mathbf{v}_2 \rVert}\right) \tag{3}$$

where $P_M$, $P_P$, and $P_T$ are the 3D points of the metacarpal joint, the proximal joint, and the tip joint, respectively, and $\theta$ is the internal angle formed by the metacarpal, proximal, and tip joints. The five joint angles calculated in this way ($\theta_1$–$\theta_5$), one per finger, are shown in Figure 5 and were used as representative values of the hand.
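A minimal sketch of the per-finger joint angle follows, assuming the internal angle is measured at the proximal joint between the vectors pointing to the metacarpal and tip joints, and reported in degrees (the text does not state the unit). The function names and the finger dictionary layout are hypothetical.

```python
import math

def internal_angle(p_m, p_p, p_t):
    """Angle in degrees at the proximal joint between the metacarpal and tip joints."""
    v1 = [m - p for m, p in zip(p_m, p_p)]  # proximal -> metacarpal
    v2 = [t - p for t, p in zip(p_t, p_p)]  # proximal -> tip
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("coincident joints: angle is undefined")
    dot = sum(a * b for a, b in zip(v1, v2))
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))

def hand_angles(fingers):
    """Five joint angles (theta_1..theta_5), one per finger, in the given finger order."""
    return [internal_angle(f["metacarpal"], f["proximal"], f["tip"]) for f in fingers]

# Toy fingers (made-up coordinates): one straight, four bent at a right angle.
straight = {"metacarpal": (0, 0, 0), "proximal": (0, 1, 0), "tip": (0, 3, 0)}
bent = {"metacarpal": (0, 0, 0), "proximal": (0, 1, 0), "tip": (1, 1, 0)}
print(hand_angles([straight, bent, bent, bent, bent]))
```

A straight finger gives about 180 degrees and a curled finger gives a smaller angle, which is what lets five numbers summarize how closed the hand is.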

Information from a single moment cannot capture a consecutive movement, so it is difficult to infer the user’s intention from it when classifying a gesture. Because classifications based only on a single moment are highly likely to fail, this study used consecutive joint angles for interaction prediction.

Figure 6 shows an example of deriving consecutive joint angles. The joint angles of the five fingers are calculated for each frame (frames 1 to 5), and the values calculated from 5 consecutive frames form one joint angle set. In general, a human gesture lasts 0.5 to 1 s [26]. For the proposed method to be meaningful as a prediction, we judged that the time required for the prediction itself should not exceed about half the duration of a gesture. Therefore, predictions were performed using 5 frames at 30 fps (about 0.17 s).
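The window arithmetic and the grouping of frames into joint angle sets can be sketched as below. The sliding (overlapping) window, the frame-major flattening order, and the class name are assumptions; the text only states that 5 consecutive frames form one set.

```python
from collections import deque

FPS = 30     # capture rate stated in the text
WINDOW = 5   # frames per joint angle set

# 5 frames at 30 fps span about 0.17 s, below half of a 0.5 to 1 s gesture.
print(round(WINDOW / FPS, 2))  # 0.17

class AngleWindow:
    """Sliding buffer that turns per-frame joint angles into joint angle sets (hypothetical)."""

    def __init__(self, size=WINDOW):
        self.frames = deque(maxlen=size)  # the oldest frame drops out automatically

    def push(self, angles):
        """Add one frame's five angles; return a flattened set once the buffer is full."""
        self.frames.append(list(angles))
        if len(self.frames) < self.frames.maxlen:
            return None
        # Frame 1 first, frame 5 last: 5 fingers x 5 frames = 25 values.
        return [a for frame in self.frames for a in frame]

win = AngleWindow()
for t in range(6):
    out = win.push([t] * 5)
    print(t, None if out is None else out[:3])
```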

The dataset used in this study consists of joint angle sets calculated as described above. The sets were created by 3 users performing grab and pinch gestures on virtual objects generated at random locations. Each joint angle set in the dataset was derived from the 5 consecutive frames starting when the gesture began. Each of the 3 users performed grab and pinch gestures 100 times, yielding a dataset of 300 finger joint angle sets for gesture interactions in total. The procedure for performing the proposed interaction prediction is shown in Figure 7, and the detailed description is as follows:

In this study, ‘interaction prediction’ means that the user’s gesture is classified as one of the defined gestures through the above process. The first frame among the consecutive frames used for classification is regarded as the moment the user’s gesture starts. We checked the procedure by comparing the classified hand gestures with the gestures actually performed; this additional test confirmed that the proposed method could classify users’ hand gestures as grab, pinch, or none.
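Putting the steps of this section together, a hedged sketch of an online prediction loop might look as follows: per-frame joint angles are buffered into 5-frame sets, each set is classified by k-NN, and the first frame of the window is reported as the gesture’s starting moment. The nearest-neighbor rejection rule, the `reject_dist` value, the toy data, and all names are assumptions, not the procedure in Figure 7.

```python
import math
from collections import Counter, deque

def knn_label(sample, train_x, train_y, k=3, reject_dist=30.0):
    """k-NN vote; "none" when even the nearest sample is farther than reject_dist (assumed rule)."""
    nearest = sorted((math.dist(sample, x), y) for x, y in zip(train_x, train_y))[:k]
    if nearest[0][0] > reject_dist:
        return "none"
    return Counter(y for _, y in nearest).most_common(1)[0][0]

def predict_stream(angle_frames, train_x, train_y, window=5, k=3):
    """Yield (start_frame_index, label) for each full window of per-frame joint angles."""
    buf = deque(maxlen=window)
    for i, angles in enumerate(angle_frames):
        buf.append(angles)
        if len(buf) == window:
            sample = [a for frame in buf for a in frame]  # 25-value joint angle set
            # The first frame of the window is treated as the gesture's starting moment.
            yield i - window + 1, knn_label(sample, train_x, train_y, k)

# Toy 25-value joint angle sets (made-up values).
train_x = [[60] * 25, [61] * 25,
           [150, 100, 170, 170, 170] * 5, [149, 101, 169, 171, 170] * 5]
train_y = ["grab", "grab", "pinch", "pinch"]

print(list(predict_stream([[60] * 5] * 6, train_x, train_y)))
print(list(predict_stream([[100, 180, 30, 30, 30]] * 5, train_x, train_y)))
```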