Abstract

The widespread use of mobile devices and sensors has motivated data-driven applications that can leverage the power of big data to benefit many aspects of our daily life, such as health, transportation, economy, and environment. In the context of smart cities, intelligent transportation systems (ITS), as a main building block of modern cities, and edge computing (EC), as an emerging computing service that aims to address the limitations of cloud computing, have attracted increasing attention in the research community in recent years. It is widely believed that the application of EC in ITS will bring considerable benefits to transportation systems in terms of efficiency, safety, and sustainability. Despite the growing trend in ITS and EC research, a big gap exists in the literature: the intersection between these two promising directions is far from well explored. In this paper, we focus on a critical part of ITS, i.e., sensing, and conduct a review of the recent advances in ITS sensing and EC applications in this field. The key challenges in ITS sensing and future directions with the integration of edge computing are discussed.

1. Introduction

The data explosion has been posing unprecedented opportunities and challenges to our cities. To utilize big data to better allocate urban resources for the purpose of improving life quality and city management, the concept of Smart City was introduced as an emerging topic to society and the research community. Cloud computing alone is not expected to fully meet the increasing demand for data processing, and thus the community has realized the need for a new form of computing, i.e., edge computing (EC). Edge computing processes sensor data closer to where the data are generated, thereby balancing the computing load and saving network resources. At the same time, edge computing has the potential for improved privacy protection because not all of the raw data need to be transmitted to cloud datacenters.

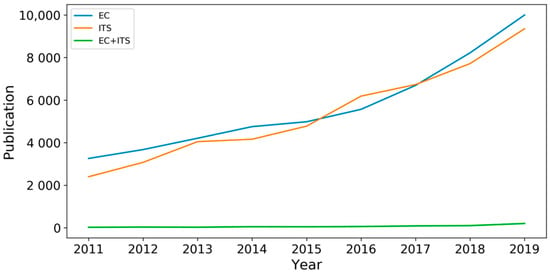

Transportation is a key building block of our city, and the concept of intelligent transportation systems (ITS) is a critical component of Smart City. Research studies and engineering implementations on ITS have been attracting attention in recent years. According to the Web of Science, we surveyed the number of publications with the keywords intelligent transportation, intelligent vehicle, or smart transportation. It can be seen from Figure 1 (the orange line) that the number of publications on ITS increased from 2011 to 2019 by almost five times. We also searched the publications with keywords edge computing, and the trend is similar to that of ITS (the blue line). However, when we searched the combined keywords, including edge computing + transportation and edge computing + vehicle, the related publications were in very small numbers: 21 publications in 2011 and 199 publications in 2019.

Figure 1.

The number of publications from 2011 to 2019 on the topics of (1) edge computing, (2) intelligent transportation systems, and (3) edge computing + transportation. The statistics are from the Web of Science.

From the numbers themselves, we might conclude that EC and ITS are two unrelated research fields. A careful investigation, however, shows that this is not the case. In several of the most highly cited survey papers on edge computing [1,2,3], the summarized key applications of edge computing included smart transportation, connected vehicles, wireless sensing, smart city, traffic video analytics, and so on. These are core research topics in ITS. The authors of these surveys envisioned that, with the widespread adoption of mobile phones, sensors, network cameras, connected cars, etc., cloud computing would no longer be suitable for many city-wide applications, whereas edge computing would be able to leverage the large amount of data produced by these devices. In addition, we observed a growing number of studies and articles on applying edge computing to ITS (e.g., [4,5,6,7,8,9]). Though still relatively few in number, these studies are innovative and show great potential to branch out into new ideas and solutions.

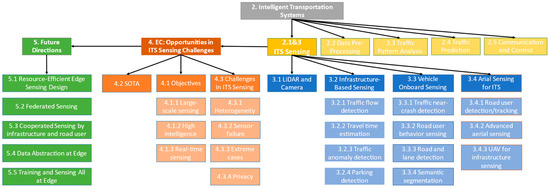

Therefore, the statistics in Figure 1 actually unveil a big gap and high demand in future research on the combination of EC and ITS. In this paper, we conduct a survey on recent advances in ITS, especially ITS sensing technologies; we then propose the challenges in ITS sensing and how EC may help address them, as well as future research opportunities in applying EC to the area of ITS sensing. Note that EC could benefit not only ITS sensing but also other components of ITS, such as data pre-processing, traffic pattern analysis, and control strategies; however, in this paper, we mainly focus on EC’s application in ITS sensing and present a detailed survey. The structure of the content in this paper is displayed in Figure 2.

Figure 2.

The structure of the content in this review paper.

2. Intelligent Transportation Systems (ITS)

ITS is a combination of cutting-edge information and communication technologies for the advancement of traffic management. Examples include traffic signal control, smart parking management, electronic toll collection, variable speed limit, route optimization, and, more recently, connected and automated vehicles. Regardless of the specific application, ITS is typically composed of five major components: traffic sensing, data pre-processing, data pattern analysis, information communication, and control. Other components, such as traffic prediction, may also be necessary for certain tasks. In this section, we review the ITS components and their functions.

2.1. Sensing

Sensing is essentially the detection of certain types of signals in the real world and the conversion of them into readable data. Traffic sensors generate data that support the analysis, prediction, and decision-making of intelligent transportation systems. There are various sensors for different data collection purposes and scenarios. The most commonly seen traffic sensors in today’s roadway networks and transportation infrastructures include, but are not limited to, inductive loop detectors [10,11,12], magnetic sensors [13,14], cameras [15,16], infrared sensors [17], LiDAR sensors [18], acoustic sensors [19], Bluetooth [20], Wi-Fi [21], mobile phones [22], and probe vehicle sensors [23,24,25]. These sensors measure characteristic quantities of objects or scenarios in transportation systems, such as road users, traffic flow parameters, congestion, crashes, queue length at intersections, and automobile emissions.

For most sensor signals, while there is a lot of valuable information that can be mined from them, the conversion process is straightforward and can be completed based on some simple rules. For example, loop detectors measure the change in inductance when vehicles pass over them for traffic volume and occupancy detection; Bluetooth sensors capture the radio communication signal together with a unique device identifier, i.e., the media access control (MAC) address, so that they can estimate the number of devices (usually associated with the number of road users) or travel time; acoustic sensors generate acoustic waves to detect the existence of objects at a certain location, without the ability to tell the object type. For some sensors, such as cameras and LiDAR, the conversion of the raw signals (i.e., the digital images and the 3D point cloud) to useful data can be quite complicated, and thus advanced algorithms have been widely applied to the conversion of LiDAR and camera signals.
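As a minimal illustration of such a rule-based conversion, the sketch below derives a vehicle count and an occupancy ratio from a sampled loop-detector signal by simple thresholding. The signal values, the threshold, and the function name `count_and_occupancy` are hypothetical and chosen only for illustration; deployed detectors rely on device-specific calibration.

```python
def count_and_occupancy(samples, threshold):
    """Count vehicles and compute occupancy from a sampled detector signal.

    samples: relative inductance change per sampling interval (hypothetical units).
    threshold: value above which the loop is treated as occupied (an assumption).
    """
    occupied = [s > threshold for s in samples]
    # A vehicle is counted at every transition from unoccupied to occupied.
    count = 0
    previous = False
    for state in occupied:
        if state and not previous:
            count += 1
        previous = state
    # Occupancy is the fraction of sampling intervals in which the loop is occupied.
    occupancy = sum(occupied) / len(samples) if samples else 0.0
    return count, occupancy


signal = [0.0, 0.1, 0.9, 1.0, 0.8, 0.1, 0.0, 0.7, 0.9, 0.0]
vehicles, occupancy = count_and_occupancy(signal, threshold=0.5)
print(vehicles, occupancy)
```

The same pattern (a fixed rule applied to a one-dimensional signal) is what makes these sensors inexpensive to process, in contrast to camera and LiDAR data.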

2.2. Data Pre-Processing

Data pre-processing, when necessary, is conducted right after the sensing task. It can address data quality issues that are hard to address at the sensing stage. Noisy data and missing data are two major problems in traffic data pre-processing and cleaning. While data denoising can be done with satisfactory performance using traditional methods, such as the wavelet filter, moving average model, and Butterworth filter [26], missing data imputation is much harder since it has to add information that is not present in the data. Another commonly applied traffic data denoising task is trajectory data map matching. The most popular models for correcting map matching errors are often based on the Hidden Markov Model [27,28,29]. There have been considerable efforts in deep learning-based missing data imputation lately. These state-of-the-art methods often focus on learning spatial-temporal features using deep learning models so that they are able to infer the missing values from the existing values [30,31,32,33,34,35]. Given the spatial-temporal property of traffic data, the Convolutional Neural Network (CNN) is a natural choice due to its ability to learn image-like patches. Zhuang et al. designed a CNN-based method for loop traffic flow data imputation and demonstrated its improved performance over the state-of-the-art methods [32]. The generative adversarial network (GAN) is another deep learning method that is appropriate for traffic data imputation, given its recent advances in image-like data generation. Chen et al. proposed a GAN algorithm to generate time-dependent traffic flow data. They made two modifications to the standard GAN: using the real data and introducing a representation loss [31]. GANs have also been tested for travel time imputation using probe vehicle trajectory data. Zhang et al. developed a travel time imputation GAN (TTI-GAN) considering the network-wide spatial-temporal correlations [35].
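To make the traditional denoising step concrete, the following is a minimal sketch of a centered moving average applied to a short speed series. It is a generic textbook filter, not the specific model used in [26]; the function name, window size, and sample values are assumptions for illustration.

```python
def moving_average(values, window):
    """Centered moving average; near the edges, only the available samples are used."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        smoothed.append(sum(values[lo:hi]) / (hi - lo))
    return smoothed


# Hypothetical spot speeds (km/h) with measurement noise.
speeds = [60.0, 64.0, 59.0, 65.0, 60.0]
smoothed = moving_average(speeds, window=3)
print(smoothed)
```

A filter of this kind only redistributes information that is already in the series, which is why it cannot substitute for imputation when values are missing altogether.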

2.3. Traffic Pattern Analysis

With the data organized, the next step for an ITS is to learn the traffic patterns, understand traffic status, and make traffic predictions. There are two critical steps in most tasks for traffic pattern learning: (1) feature selection and (2) model design. Essentially, feature selection forms the original data space, and model design converts the original space to a new space that is learnable for classification, regression, clustering, or other tasks. On the one hand, traffic sensing is so important that, without original data properly collected, it is almost impossible to construct a new space for pattern learning from a poor original data space. On the other hand, once the traffic sensing is done with good design and quality, it is then necessary to focus on designing models to extract useful information for the task at hand. Machine learning has been widely applied for a variety of traffic pattern learning tasks, such as driver and passenger classification using smartphone data [36], K-means clustering for truck bottleneck identification using GPS data [37], estimation of the number of bus passengers using deep learning [38], and fault detection in vehicular cyber-physical systems [39].
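As a small, hedged illustration of the model-design step, the sketch below runs a one-dimensional K-means on hypothetical GPS spot speeds to separate slow (potential bottleneck) observations from free-flow observations. It is not the method of [37]; the deterministic initialization, the function name `kmeans_1d`, and the data are assumptions made for illustration.

```python
def kmeans_1d(values, k=2, iterations=20):
    """Plain K-means on scalar values with a deterministic initialization (k >= 2)."""
    lo, hi = min(values), max(values)
    # Assumption: spread the initial centers evenly over the data range.
    centers = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for v in values:
            # Assign each value to its nearest center.
            nearest = min(range(k), key=lambda j: abs(v - centers[j]))
            clusters[nearest].append(v)
        # Recompute centers; keep the old center if a cluster is empty.
        centers = [sum(c) / len(c) if c else centers[j]
                   for j, c in enumerate(clusters)]
    return centers, clusters


# Hypothetical truck GPS speeds (km/h) on one road segment.
gps_speeds = [8.0, 10.0, 12.0, 55.0, 60.0, 58.0]
centers, clusters = kmeans_1d(gps_speeds, k=2)
print(centers, clusters)
```

Here the single feature (speed) defines the original data space, and the clustering step maps it to two groups; a practical analysis would typically use more features and validate the number of clusters.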

A traditional group of studies is transportation mode recognition. Models are developed to recognize the mode of travelers, such as walking, biking, running, and driving. This can be achieved by identifying travel features, such as speed, distance, and acceleration. Jahangiri and Rakha applied multiple traditional machine learning techniques for mode recognition using mobile phone data and found Random Forest (RF) and Support Vector Machine (SVM) to have the best performances [40]. Ashqar et al. enhanced the mode recognition accuracy by designing a two-layer hierarchical classifier and extracting new frequency domain features [41]. Another work introduced an online sequential extreme learning machine (ELM), which focuses on transfer learning techniques for mode recognition. It was trained with both labeled and unlabeled data for better training efficiency and classification accuracy. Recently, deep learning models were also developed for mode recognition [42]. Jeyakumar et al. developed a convolutional bidirectional Long Short-Term Memory (LSTM) model for transportation mode recognition. Feature extraction includes time domain and frequency domain features from the raw data [43].

Another representative group in data-driven pattern analysis is traffic accident detection. It is beneficial for transportation management agencies and travelers to have real-time information on traffic accidents, including where they occur and what the situation is. Otherwise, an accident may cause severe congestion and other issues beyond the accident itself. This group of work often extracts features from traffic flow data, weather data, and so on to identify the traffic pattern change or differences around the accident location. Parsa et al. implemented eXtreme Gradient Boosting (XGBoost) to detect the occurrence of accidents using real-time data, including traffic flow, road network, demographic, land use, and weather information. The Shapley Additive exPlanation (SHAP) is employed to interpret the results and analyze the importance of individual features [44]. They also led another study that showed the superiority of probabilistic neural networks for accident detection on freeways using imbalanced data. It revealed that the speed difference between the upstream and downstream of the accident was very significant [45]. In addition to traffic flow data, social media data is also shown to be effective for traffic accident detection. Zhang et al. employed the Deep Belief Network (DBN) and LSTM in the detection of traffic accidents using Twitter data in Northern Virginia and New York City. They found that nearly 66% of the accident-related tweets can be located by the accident log and over 80% can be linked to abnormal traffic data nearby [46]. Another sub-category is to detect accidents in real-time from a vehicle’s perspective. For example, Dogru and Subasi studied the possibility of applying RF, SVM, and a neural network for accident detection based on an individual vehicle’s speed and location in the context of Vehicular Ad-hoc Network (VANET) [47].

2.4. Traffic Prediction

Traffic pattern analysis is fundamental to traffic prediction. In some cases, traffic prediction is critical for an ITS if decision-making requires information in advance. In traffic prediction, the models extract features and learn the current pattern of traffic in order to predict some measurements. Traffic prediction is crucial for the intelligence of an ITS, and it is one of the areas in which artificial intelligence, especially deep learning, has been heavily applied. Traffic prediction has covered many tasks in transportation systems. Examples are traffic flow prediction [26,48,49,50,51,52,53,54], transit demand prediction [55,56], taxi or ride-hailing demand prediction [57,58,59], bike sharing-related prediction [60,61], parking occupancy prediction [62], pedestrian behavior prediction [63], and lane change behavior prediction [64]. LSTM, CNN, GAN, and graph neural networks are some of the most widely used deep learning methods for traffic prediction. The current trend in traffic prediction is toward larger scale, higher resolution, higher prediction accuracy, and real-time speed. For instance, Ma et al. investigated the feasibility of applying LSTM for single-spot traffic flow data prediction [51]. Their work is a milestone in this field and has laid the foundation for sophisticated models that can capture network-wide features for large-scale traffic speed prediction [65,66,67].

2.5. Information Communication and Control

There are two purposes of information communication: (1) gathering information to support decision making and (2) disseminating the decisions and control strategies to devices and road users. Traditional communication relies heavily on wired connections. Actuated traffic signal control collects vehicle arrival data from loop detectors underneath the roadway surface and pedestrian signal data via push buttons at intersections [68]. This information is gathered through wires into the signal controller cabinet, which is usually located at the roadside near an intersection. A similar communication method is used for ramp metering control at freeway entrances [69]. Loop detectors are located underneath the freeway mainstream lanes and sometimes also underneath ramp lanes, and they communicate with the cabinet through wires [70]. Using an Ethernet cable is another common method for wired communication in ITS. It can either connect devices to the Internet or serve as a medium for local communication, such as video streaming [71]. Controller Area Network (CAN bus) is a standard vehicle bus designed for microcontroller communications without a host computer [72], which enables the parts within vehicles to communicate with each other. Wired communication through CAN is an important way to test vehicle onboard innovations and solutions in ITS studies.

Wireless communication has been widely used in different applications, thanks to the rapid development of general communication technologies. Probe vehicle data can be available in real-time through vehicle-cloud communications. Companies such as INRIX [73] and Wejo [74] hold connected vehicle data of this kind, including trajectories and driver events, given their good connections to the vehicle OEMs. Similar vehicle and traffic data are available via devices other than the vehicle itself, such as smartphones, to provide real-time information for drivers via phone apps, such as Google Maps and Waze [75]. A connected vehicle, in many other scenarios, refers to not only vehicle-cloud communication but also vehicle-to-vehicle (V2V), vehicle-to-infrastructure (V2I), and even vehicle-to-everything (V2X) [76]. Dedicated Short Range Communication (DSRC) was a standard communication protocol for V2X applications [77]; however, recently, C-V2X has been proposed as a new communication protocol with the emergence of 5G for high bandwidth, low latency, and highly reliable communication among a broad range of devices in ITS [78]. Information, such as variable speed limits set by a control strategy, work zone information, and real-time travel time, can be disseminated from traffic management centers to road users via variable message signs on the roadways in an Advanced Traffic Management System (ATMS) or Advanced Traveler Information System (ATIS) [79,80].

3. ITS Sensing

This section reviews the state-of-the-art in ITS sensing in detail from a unique angle. First, existing ITS sensing works using camera and LiDAR are briefly introduced in Section 3.1, since those two sensors often require complicated methods for converting input signals into useful data. The authors then summarize ITS sensing into infrastructure-based traffic sensing, vehicle onboard sensing, and aerial sensing for surface traffic from Section 3.2 to Section 3.4, for two reasons: (1) From the transportation system functionality perspective, infrastructure and road users are the two crucial elements that form the ground transportation system; the ground transportation system’s functionality is further extended with the emergence of aerial-based surveillance in civil utilization; (2) From the methodological perspective, the sensor properties of these three transportation system components require different solutions. Taking video sensing as an example, surveillance video, vehicle onboard video, and aerial video have different video background motion patterns, so each of the three groups needs its own video analytics algorithms for foreground extraction.

3.1. LiDAR and Camera

LiDAR has been used predominantly in autonomous vehicles rather than in transportation infrastructure systems. The LiDAR signal is a 3D point cloud, and it can be used for 3D object detection, 3D object tracking, lane detection, obstacle detection, traffic sign detection, and 3D mapping in autonomous vehicles’ perception systems [81]. For example, Qi et al. proposed PointNets, a deep learning framework for 3D object detection from RGB-D data that learned directly from the raw point clouds to extract 3D bounding boxes of vehicles [82]. Allodi et al. proposed using machine learning on combined LiDAR/stereo vision data to perform tracking and obstacle detection at the same time [83]. Jung et al. designed an expectation-maximization-based method for real-time 3D road lane detection using raw LiDAR signals from a probe vehicle [84]. Guan developed a traffic sign classifier based on a supervised Gaussian-Bernoulli deep Boltzmann machine model, which used LiDAR point cloud and images as input [85]. There are also some representative works providing critical insights into the application of LiDAR as an infrastructure-based sensor. Zhao et al. proposed a clustering method for detecting and tracking pedestrians and vehicles using roadside LiDAR [18]. The findings are helpful for both researchers and transportation engineers.

Camera collects images or videos, and these raw data are essentially 2D matrices with quantized pixel numbers that are samples of the real-world visual signals. New techniques are applied to convert these complex 2D matrices into traffic-related data. One fundamental application is object detection. Researchers in the engineering and computer science fields have spent a lot of effort designing smart and fast object detectors using traditional statistics/learning [15] and deep learning techniques [86,87]. Object detection localizes and classifies cars, trucks, pedestrians, bicyclists, etc., in traffic camera images and enables different data collection tasks. There are also datasets being collected and published specifically for object detection and classification in traffic surveillance images, which has generated much interest [88]. The AI City Challenge is a leading workshop and competition in the field of traffic surveillance video data processing [89]. It has guided video-based traffic sensing, such as traffic volume counting, vehicle re-identification, multiple-vehicle tracking, and traffic anomaly detection. Since the camera sensor is a critical component of autonomous vehicles, advanced traffic sensing techniques have been heavily deployed in autonomous vehicles’ perception systems to understand the environment [81]. Traffic events, congestion levels, road users, road regions, infrastructure information, road user interactions, etc., are all meaningful data that can be extracted from the raw images using machine learning. Datasets have also been published and widely recognized to facilitate the design of cutting-edge methods for converting camera data into readable traffic-related data [90,91,92,93].

3.2. Infrastructure-Based ITS Sensing

A key objective of the ITS concept is to leverage the existing civil infrastructures to improve traffic performance. Transport infrastructure refers to roads, bridges, tunnels, terminals, railways, traffic controllers, traffic signs, other roadside units, and so on. Sensors installed on transport infrastructure monitor certain locations in a transportation system, such as intersections, roadway segments, freeway entrances/exits, and parking facilities.

3.2.1. Traffic Flow Detection

One of the fundamental functions of infrastructure-based ITS sensing is traffic flow detection and classification at certain locations. Vehicle counts, flow rate, speed, density, trajectories, classes, and many other valuable data can be available through traffic flow detection and classification. Chen et al. [94] proposed a traffic flow detection method using optimized YOLO (You Only Look Once) for vehicle detection and DeepSORT (Deep Simple Online and Realtime Tracking) for vehicle tracking and implemented the method on Nvidia edge device Jetson TX2. Haferkamp et al. [95] proposed a method by applying machine learning (KNN and SVM) to radio attenuation signals and were able to achieve success in traffic flow detection and classification. If processed with advanced signal processing methods, traditional traffic sensors, such as loop detectors and radar, can also expand their detection categories and performance. Ho and Chung [96] applied Fast Fourier Transform (FFT) to radar signals to detect traffic flow at the roadside. Ke et al. [97] developed a method for traffic flow bottleneck detection using Wavelet Transform on loop detector data. Distributed sensing with acoustic sensing, traffic flow outlier detection, deep learning, and robust traffic flow detection in congestion are examples of other state-of-the-art studies in this sub-field [98,99,100,101].

3.2.2. Travel Time Estimation

Coupled with traffic flow detection, travel time estimation is another task in ITS sensing. Accurate travel time estimation needs multi-location sensing and re-identification of road users. Bluetooth sensing is a primary way to detect travel time since Bluetooth detection comes with a MAC address of a device so it can naturally re-identify the road users that carry the device. Vehicle travel time [102] and pedestrian travel time [103] can both be extracted with Bluetooth sensing. Bluetooth sensing has generated privacy concerns. With the advance in computer vision and deep learning, travel time estimation has been advanced with road user re-identification using surveillance cameras. Deep image features are extracted for vehicles and pedestrians and are compared among region-wide surveillance cameras for multi-camera tracking [104,105,106,107,108]. An effective and efficient pedestrian re-identification method was developed by Han et al. [108], called KISS+ (Keep It Simple and Straightforward Plus), in which multi-feature fusion and feature dimension reduction are conducted based on the original KISS method. Sometimes it is not necessary to estimate travel time for every single road user. In those cases, more conventional detectors and methods could achieve good results. Oh et al. [109] proposed a method to estimate link travel time, as early as in the year 2002, using loop detectors. The key idea was based on road section density that can be acquired by observing in-and-out traffic flows between two loop stations. While no re-identification was realized, these methods had reasonably good performances and provided helpful travel time information for traffic management and users [109,110,111].
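The re-identification idea behind Bluetooth-based travel time estimation can be sketched as follows: detections at an upstream and a downstream sensor are matched by device identifier, and the timestamp difference gives the travel time. This is a minimal sketch under stated assumptions, not the procedure of [102] or [103]; the identifiers, timestamps, and function name are hypothetical, and a real deployment would hash or anonymize MAC addresses and filter outliers.

```python
from statistics import median


def travel_times(upstream, downstream):
    """Match detections by device identifier and return travel times in seconds.

    upstream, downstream: dicts mapping an (anonymized) MAC address to the
    detection timestamp in seconds at each sensor location.
    """
    times = []
    for mac, t_up in upstream.items():
        t_down = downstream.get(mac)
        # Keep only devices seen at both sensors, in the expected order.
        if t_down is not None and t_down > t_up:
            times.append(t_down - t_up)
    return times


# Hypothetical detections at two roadside sensors.
upstream = {"aa:01": 0.0, "aa:02": 5.0, "aa:03": 9.0}
downstream = {"aa:01": 62.0, "aa:02": 70.0, "aa:04": 80.0}
matched = travel_times(upstream, downstream)
print(matched, median(matched))
```

Using the median rather than the mean is one simple design choice for limiting the influence of devices that stop between the two sensors.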

3.2.3. Traffic Anomaly Detection

Another topic in infrastructure-based sensing is traffic anomaly detection. As the name suggests, traffic anomalies are abnormal incidents in an ITS. They rarely occur, and examples include vehicle breakdown, collision, near-crash, wrong-way driving, and so forth. Two major challenges in traffic anomaly detection are (1) the lack of sufficient anomaly data for algorithm development and (2) the wide variety of anomalies, which lack a clear definition. Anomaly detection is achieved mainly using surveillance cameras given the requirement for rich information, though time series data is also feasible in some relatively simple anomaly detection tasks [112]. Traffic anomaly detection methods can be divided into three categories: supervised learning, unsupervised learning, and semi-supervised learning. Supervised learning methods are useful when the number of classes is clearly defined and training data is large enough to make the model statistically significant; however, supervised learning requires manual labeling, which demands both data and labor, and it cannot detect unforeseen anomalies [113,114,115]. Unsupervised learning has no requirement for labeling data and is more generalizable to unforeseen anomalies as long as sufficient normal data is given; however, anomaly detection will be hard when the nature of the data changes over time (e.g., if a surveillance camera keeps changing angle and direction) [116]. Li et al. [117] designed an unsupervised method based on multi-granularity tracking, and their method won first place in the 2020 AI City Challenge. Semi-supervised learning needs only weak labels. Chakraborty et al. [118] proposed a semi-supervised model for freeway traffic trajectory classification using YOLO, SORT, and maximum-likelihood-based Contrastive Pessimistic Likelihood Estimation (CPLE). This model detects anomalies based on trajectories and improves the accuracy by 14%. Sultani et al. [119] considered videos as bags and video segments as instances in multiple instance learning and automatically learned an anomaly ranking model with weakly labeled data. Lately, traffic anomaly detection has been advanced not only by the design of new learning methods but also by object tracking methods. It is interesting to see that, in the 2021 AI City Challenge, all top-ranking methods made some contribution to the tracking part [120,121,122].
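For the simpler time-series setting mentioned above, the unsupervised idea of learning only from normal data can be sketched with a basic z-score rule: a profile is estimated from normal observations, and new observations that deviate strongly from it are flagged. This is a deliberately simple stand-in, not the method of any cited work; the threshold of three standard deviations, the function names, and the data are assumptions.

```python
from statistics import mean, stdev


def fit_normal_profile(normal_values):
    """Estimate the mean and sample standard deviation from normal-only data."""
    return mean(normal_values), stdev(normal_values)


def is_anomalous(value, profile, z_threshold=3.0):
    """Flag a value whose z-score against the normal profile exceeds the threshold."""
    mu, sigma = profile
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > z_threshold


# Hypothetical normal segment speeds (km/h), no labels required.
normal_speeds = [58.0, 60.0, 61.0, 59.0, 62.0, 60.0]
profile = fit_normal_profile(normal_speeds)
flags = [is_anomalous(v, profile) for v in [60.5, 12.0]]
print(flags)
```

The sketch also shows the limitation noted above: if the normal conditions drift over time, the stored profile no longer describes them and the rule starts to misfire.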

3.2.4. Parking Detection

Alongside roadway monitoring, parking facility monitoring, as another typical scene in the urban area, plays a crucial role in infrastructure-based sensing. Infrastructure-based parking space detection can be divided into two categories from the sensor functionality perspective: the wireless sensor network (WSN) solution and the camera-based solution. The WSN solution has one sensor for each parking space, and the sensors need to be low power, sturdy, and affordable [8,123,124,125,126,127,128,129,130,131,132]. The WSN solution has pros and cons: algorithm-wise, it is often straightforward, and a thresholding method would work in most cases, but a relatively simple detection method may lead to a high false detection rate. A unique feature of the WSN is that it is robust to sensor failure due to the large number of sensors. That means, even if a few stop working, the WSN still covers most of the spaces. However, a large number of sensors does incur a high labor and maintenance cost in large-scale installations. Magnetic nodes, infrared sensors, ultrasonic sensors, light sensors, and inductance loops are the most popular sensors. For example, Sifuentes et al. [131] developed a cost-effective parking space detection algorithm based on magnetic nodes, which integrates a wake-up function with optical sensors. The camera-based solution has been increasingly popular with advances in video sensing, machine learning, and data communication technologies [8,123,133,134,135,136,137,138,139,140,141,142,143,144]. Compared to the WSN, one camera covers multiple parking spaces; thus, the cost per space is reduced. It is also more manageable regarding maintenance, since the installation of camera systems is non-intrusive. Additionally, as mentioned earlier, video contains more information than the output of other sensors and therefore has the potential to support more complicated tasks in parking. Bulan et al. proposed to use background subtraction and SVM for street parking detection, which achieved a promising performance and was not sensitive to occlusion [133]. Nurullayev et al. designed a pipeline with a unique dilated convolutional neural network (CNN) structure. The design was validated to be robust and suitable for parking detection [136].
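The per-space thresholding used in many WSN solutions, together with the robustness to individual sensor failures, can be sketched as below. The readings, the threshold, and the function name `parking_status` are hypothetical; this is an illustrative sketch rather than the algorithm of [131] or any other cited system.

```python
def parking_status(readings, threshold):
    """Classify each parking space from its own sensor reading.

    readings: dict mapping a space id to a sensor reading (hypothetical
    magnetic disturbance level), or None when the sensor has failed.
    """
    status = {}
    for space, value in readings.items():
        if value is None:
            # A failed sensor affects only its own space.
            status[space] = "unknown"
        elif value > threshold:
            status[space] = "occupied"
        else:
            status[space] = "vacant"
    return status


readings = {"A1": 0.82, "A2": 0.05, "A3": None, "A4": 0.40}
status = parking_status(readings, threshold=0.3)
print(status)
```

A fixed threshold keeps the node-side computation trivial, which suits low-power sensors, but readings close to the threshold (such as space A4 here) are where false detections tend to arise.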

3.3. Vehicle Onboard Sensing

Vehicle onboard sensing is complementary to infrastructure-based sensing. It happens on the road user side. The sensors move with road users and are therefore more flexible and cover larger areas. Additionally, vehicle onboard sensors are the eyes of an intelligent vehicle, enabling the vehicle to see and understand its surroundings. These properties pose opportunities for urban sensing and autonomous driving technologies, but at the same time create challenges for innovation. A major technical challenge is the irregular movement of sensors. Traditional ITS sensing with infrastructure-mounted sensors deals with stationary backgrounds and relatively stable environment settings. For instance, radar sensors for speed measurement know where the traffic is supposed to be. Camera sensors have a fixed video background so that traditional background modeling algorithms can be applied. Onboard sensors enjoy neither advantage; therefore, in order to benefit from vehicle onboard sensing, it is necessary to address the challenges caused by sensor movement.

3.3.1. Traffic Near-Crash Detection

A traffic near-crash, or traffic near-miss, is a conflict between road users that has the potential to develop into a collision. Near-crash detection using onboard sensors is the first step for multiple ITS applications: near-crash data serves as (1) surrogate safety data for traffic safety study, (2) corner-case data for autonomous vehicle testing, and (3) input to collision avoidance systems. There were some pioneering studies on automatic near-crash data extraction on the infrastructure side using LiDAR and camera [145,146,147]. In recent years, near-crash detection systems and algorithms using onboard sensors have been developed at a fast pace. Ke et al. [148] and Yamamoto et al. [149] each applied conventional machine learning models (SVM and random forest) in their near-crash detection frameworks and achieved fairly good detection accuracy and efficiency on regular computers. The state-of-the-art methods tend to use deep learning for near-crash detection. The integration of CNN, LSTM, and attention mechanisms was demonstrated to be superior in recent studies [149,150,151]. Ibrahim et al. showed that a bi-directional LSTM with self-attention outperformed a single LSTM with a normal attention mechanism [150]. Another feature in recent studies was the combination of onboard camera sensor input and onboard telematics input, such as vehicle speed, acceleration, and location, to either improve the near-crash detection performance or increase the output data diversity [9,149,152]. Ke et al. mainly used onboard video for near-crash detection but also collected telematics and vehicle CAN data for post analysis [9].

3.3.2. Road User Behavior Sensing

Human drivers can recognize and predict other road users’ behaviors, e.g., pedestrians crossing the street, vehicle changing lanes. For intelligent or autonomous vehicles, automating this kind of behavior recognition process is expected to be part of the onboard sensing functions [153,154,155,156,157]. Stanford University [157] published an article on pedestrian intent recognition using onboard videos. They built a graph CNN to exploit spatio-temporal relationships in the videos, which was able to show the relationships between different objects. While, for now, the intent prediction just focused on crossing the street or not, the research direction is clearly promising. They also published over 900 h of onboard videos online. Another study proposed by Brehar et al. [154] on pedestrian action recognition used an infrared camera, which compensates for regular cameras in the nighttime, on foggy days, and on rainy days. They built a framework composed of a pedestrian detector, an original tracking method, road segmentation, and LSTM-based action recognition. They also introduced a new dataset named CROSSIR. Likewise, vehicle behavior recognition is of the same importance for intelligent or autonomous vehicles [158,159,160,161,162]. Wang et al. [159] lately developed a method using fuzzy inference and LSTM for vehicles’ lane changing behavior recognition. The recognition results were used for a new intelligent path planning method to ensure the safety of autonomous driving. The method was trained and tested by NGSIM data. Another study on vehicle trajectory prediction using onboard sensors in a connected-vehicle environment was conducted. It improved the effectiveness of the Advanced Driver Assistant System (ADAS) in cut-in scenarios by establishing a new collision warning model based on lane-changing intent recognition, LSTM for driving trajectory prediction, and oriented bounding box detection [158]. 
Another type of road user-related sensing is passenger sensing, which serves different purposes, e.g., transit ridership sensing using wireless technologies [163] and car passenger occupancy detection using thermal images for carpool enforcement [164].

3.3.3. Road and Lane Detection

In addition to road user-related sensing tasks, road and lane detection are often performed for lane departure warning, adaptive cruise control, road condition monitoring, and autonomous driving. The state-of-the-art methods mostly apply deep learning models for onboard camera sensors, LiDAR, and depth sensors for road and lane detection [165,166,167,168,169,170]. Chen et al. [165] proposed a novel progressive LiDAR adaption approach-aided road detection method to adapt LiDAR point cloud to visual images. The adaption contains two modules, i.e., data space adaptation and feature space adaptation. This camera-LiDAR fusion model currently stays at the top of the KITTI road detection leaderboard. Fan et al. [166] designed a deep learning architecture that consists of a surface normal estimator, an RGB encoder, a surface normal encoder, and a decoder with connected skip connections. It applied road detection to the RGB image and depth image and achieved state-of-the-art accuracy. Alongside road region detection, an ego-lane detection model proposed by Wang et al. outperformed other state-of-the-art models in this sub-field by exploiting prior knowledge from digital maps. Specifically, they employed OpenStreetMap’s road shape file to assist lane detection [167]. Multi-lane detection has been more challenging and rarely addressed in existing works. Still, Luo et al. [168] were able to achieve pretty good multi-lane detection results by adding five constraints to Hough Transform: length constraint, parallel constraint, distribution constraint, pair constraint, and uniform width constraint. A dynamic programming approach was operated after the Hough Transform to select the final candidates.

3.3.4. Semantic Segmentation

Detecting the road regions at the pixel level is a type of image segmentation focusing on the road instance. There has been a trend in onboard sensing to segment the entire video frame at the pixel level into different object categories. This is called semantic segmentation and is considered a must for advanced robotics, especially autonomous driving [171,172,173,174,175,176,177,178,179]. Compared to other tasks, which can usually be fulfilled using different types of onboard sensors, semantic segmentation is strictly realized using visual data. Nvidia researchers [172] proposed a hierarchical multi-scale attention mechanism for semantic segmentation based on the observation that certain failure modes in the segmentation can be resolved at a different scale. The design of their attention was hierarchical so that memory usage was four times more efficient in the training process. The proposed method ranked top on two segmentation benchmark datasets. Semantic segmentation is relatively computationally expensive; thus, achieving real-time segmentation is a challenge [171,174]. Siam et al. [171] proposed a general framework for real-time segmentation that ran at 15 fps on the Nvidia Jetson TX2. Labeling at the pixel level is time-consuming and is another challenge for semantic segmentation. There are some benchmark datasets available for algorithm testing, such as Cityscapes [180]. Efficient labeling for semantic segmentation and unsupervised/semi-supervised learning for semantic segmentation are interesting topics worth exploring [173,175,176].

3.4. Aerial Sensing for ITS

Aerial sensing using drones, i.e., unmanned aerial vehicles (UAVs), has been performed in the military for years and has recently become increasingly explored in civil applications, such as agriculture, transportation, goods delivery, and security. Automation and smartness of surface traffic cannot be fulfilled with ground transportation alone. UAVs extend the functionality of existing ground transportation systems with their high mobility, top-view perspective, wide view range, and autonomous operation [181]. A role for UAVs is envisaged in many ITS scenarios, such as flying accident report agents [182], traffic enforcement [183], traffic monitoring [184], and vehicle navigation [185]. While there are regulations to be completed and practical challenges to be addressed, such as safety concerns, privacy issues, and short battery life, the application of UAVs in ITS is envisioned to be one step forward towards transportation network automation [181]. On the road user side, UAVs extend the functionality of ground transportation systems by detecting vehicles, pedestrians, and cyclists from the top view, which has a wider view range and better view angle (no occlusion) than surveillance cameras and onboard cameras. UAVs also detect road users’ interactions and traffic theory-based parameters, thereby supporting applications in traffic management and user experience improvement.

3.4.1. Road User Detection and Tracking

Road user detection and tracking are the initialization processes for traffic interaction detection, pattern recognition, and traffic parameter estimation. Conventional UAV-based road user detection often uses background subtraction and handcrafted features, assuming UAV is not moving or stitching frames in the first step [186,187,188,189,190,191]. Recent studies tended to develop deep learning detectors for UAV surveillance [192,193,194,195,196]. Road user detection itself can acquire traffic flow parameters, such as density and counts, without any need for motion estimation or vehicle tracking. Zhu et al. [196] proposed an enhanced Single Shot Multibox Detector (SSD) for vehicle detection with manually annotated data, resulting in high detection accuracy and a new dataset. Wang et al. [197] identified the challenge in UAV-based pedestrian detection, particularly at night time, and proposed an image enhancement method and a CNN for pedestrian detection at nighttime. In order to conduct more advanced tasks in UAV sensing on the road user side, road user tracking is a must because it connects individual detection results. Efforts have been made on UAV-based vehicle tracking and motion analysis [186,187,188,198,199,200]. In many previous works, existing tracking methods, such as particle filter and SORT, were directly applied and had fairly good tracking performance. Recently, Ke et al. [201] developed a new tracking algorithm that incorporated lane detection information and improved tracking accuracy.
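
The way tracking connects individual detection results can be illustrated with a small association step. The following is a minimal sketch, not the method of any cited work: SORT combines Kalman-filter prediction with Hungarian assignment, whereas this example substitutes a simpler greedy match on bounding-box overlap (IoU). The function names, the box format (x1, y1, x2, y2), and the 0.3 threshold are assumptions made for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, iou_threshold=0.3):
    """Greedily match existing track boxes to new detection boxes.

    tracks: dict mapping track id -> last known box
    detections: list of boxes detected in the current frame
    Returns (matches, unmatched), where matches maps track id -> detection
    index and unmatched lists detection indices that start new tracks.
    """
    # Score every (track, detection) pair and visit the best overlaps first.
    pairs = sorted(
        ((iou(box, det), tid, j)
         for tid, box in tracks.items()
         for j, det in enumerate(detections)),
        reverse=True,
    )
    matches, used = {}, set()
    for score, tid, j in pairs:
        if score < iou_threshold:
            break  # all remaining pairs overlap even less
        if tid in matches or j in used:
            continue
        matches[tid] = j
        used.add(j)
    unmatched = [j for j in range(len(detections)) if j not in used]
    return matches, unmatched
```

Detections left unmatched would typically initialize new tracks, and tracks without a match for several frames would be dropped. On a moving UAV, the boxes would first need to be compensated for camera motion, which this sketch does not attempt.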

3.4.2. Advanced Aerial Sensing

Road user detection and tracking support advanced aerial ITS sensing applications. For example, in [192,202], the researchers developed new methods for traffic shock-wave identification and synchronized traffic flow pattern recognition under oversaturated traffic conditions. Chen et al. [203] conducted a thorough study on traffic conflict based on extracted road user trajectories from UAV. The Safety Space Boundary concept in the paper is an informative design for conflict analysis. One of the most useful applications using UAV is traffic flow parameter estimation: Traditional research in this field focused on using static UAV videos for macroscopic parameters extraction. McCord et al. [204] led a pioneering research work to extract a variety of critical macroscopic traffic parameters, such as annual average daily traffic (AADT). Later on, a new method was proposed by Shastry et al. [205], in which they adopted image registration and motion information to stitch images and obtain fundamental traffic flow parameters. Lately, Ke et al. developed a series of efficient and robust frameworks to estimate aggregated traffic flow parameters (speed, density, and volume) [206,207,208]. Because of the potential benefits of higher-resolution data in ITS, microscopic traffic parameter estimation has been conducted [209]. Barmpounakis et al. proposed a method to extract naturalistic trajectory data from UAV videos at a relatively less congested intersection using static UAV video [194]. Ke et al. [201] developed an advanced framework composed of lane detection, vehicle detection, vehicle tracking, and traffic parameter estimation that can estimate 10 different macroscopic and microscopic parameters from a moving UAV.
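
To make the aggregated parameters concrete, the sketch below computes density, space-mean speed, and flow for one road segment from the vehicles visible in a single UAV frame. It relies only on the fundamental relation of traffic flow theory (flow = density × speed) and is not the estimation framework of any cited work; the function name, argument names, and units are assumptions chosen for illustration.

```python
def macroscopic_parameters(speeds_kmh, segment_length_km, lanes=1):
    """Estimate density, space-mean speed, and flow for one road segment.

    speeds_kmh: speeds of the vehicles on the segment at one instant (km/h)
    segment_length_km: length of the observed segment (km)
    lanes: number of lanes, used to report per-lane values
    """
    if segment_length_km <= 0 or lanes <= 0:
        raise ValueError("segment length and lane count must be positive")
    n = len(speeds_kmh)
    if n == 0:
        return {"density": 0.0, "speed": 0.0, "flow": 0.0}
    density = n / segment_length_km / lanes  # veh/km/lane
    speed = sum(speeds_kmh) / n              # space-mean speed, km/h
    flow = density * speed                   # veh/h/lane
    return {"density": density, "speed": speed, "flow": flow}
```

Because the speeds are sampled over space at one instant, their arithmetic mean is the space-mean speed, which is the quantity the fundamental relation requires.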

3.4.3. UAV for Infrastructure Sensing

On the infrastructure side, UAV has been utilized for some ITS sensing services, such as road detection, lane detection, and infrastructure inspection. UAV is extremely helpful at locations where it is hard for humans to reach. Road detection is used to localize the regions where traffic appears from UAV sensing data. It is crucial to support applications such as navigation and task scheduling [210,211,212]. For instance, Zhou et al. [213,214] designed two of the popular methods for road detection in UAV imagery. While there were some studies before these two, the paper [213] was the first targeting speeding up the road localization part with a proposed tracking method. Reference [214] presented a fully automatic approach that detects roads from a single UAV image with two major components: road/non-road seeds generation and seeded road segmentation. Their methods were tested on challenging scenes. UAV has been intensely used for infrastructure inspection, particularly bridge inspection [215,216,217,218] and road surface inspection [219,220,221]. Manual inspection for bridges and road surfaces is costly in terms of both time and labor. Bolourian et al. [217] proposed a high-level framework for bridge inspection using LiDAR-equipped UAVs. It contained planning a collision-free optimized path and a data analysis framework for point cloud processing. Bicici and Zeybek [219] developed an approach with verticality features, DBSCAN clustering, and robust plane fitting to process point cloud for automated extraction of road surface distress.

4. Edge Computing: Opportunities in ITS Sensing Challenges

Despite the massive advances in ITS sensing both in methodology and application, there are various challenges to be addressed towards a truly smart city and smart transportation system. We envision the major objectives of future ITS sensing to be large-scale sensing, high intelligence, and real-time capability. These three properties would lay the foundation for high automation of city-wide transportation systems. On the other hand, we summarize the challenges into a few categories: heterogeneity, high probability of sensor failure, sensing in extreme cases, and privacy concern. In review of the emerging works in using edge computing for ITS tasks, it is reasonable to consider that edge computing will be a primary component of the solutions to these challenges.

4.1. Objectives

4.1.1. Large-Scale Sensing

ITS sensing in smart cities is expected to cover a large network of microsites. Without edge computing, the cost for large-scale cloud computing services (e.g., AWS and Azure) is significant and will eventually reach the upper limit of network resources (bandwidth, computation, and storage) [9]. Sending network-wide data over a limited bandwidth to a centralized cloud is counterproductive. Edge computing could significantly improve network efficiency by transporting non-raw data in smaller amounts or providing edge functions to eliminate irrelevant data onsite. Systems and algorithms will need to be developed to address the high probability of sensor failure across a wide variety of large-scale real-world scenarios, as well as the maintenance and support facilities that such deployments require.

4.1.2. High Intelligence

Intelligence in ITS sensing means that transportation systems understand the surrounding environment through intelligent sensing functions, thus providing valuable information for efficient and effective decision-making. Many ITS environments today still have unreliable or unpredictable network connectivity. These could include buses, planes, parking facilities, traffic signal facilities, and general infrastructures under extreme conditions. Edge computing functions can be designed as self-contained, thereby neatly supporting these environments by allowing autonomous or semi-autonomous operation without network connectivity. One existing example could be ADAS functions, which automatically run onboard vehicles. Without Internet connection, it may not serve as a data collection point for other services but is still able to warn and protect drivers in risky scenarios. However, high intelligence often requires high-complexity methods and computation power. Concerns exist in the resource constraint on edge devices, the ability to handle corner cases that the machines never encountered, and other general challenges in AI.

4.1.3. Real-Time Sensing

Sensing in real-time is critical for many future ITS applications. Connected infrastructure, autonomous vehicles, smart traffic surveillance, short-term traffic prediction, and so on, all expect real-time capability, and they cannot tolerate even milliseconds of delay in processing due to effectiveness and safety. These tasks that require fast response time, low latency, and high efficiency, especially when on a large scale, cannot be achieved without edge computing architecture. However, there is always a tradeoff between real-time sensing and high intelligence: as intelligence increases, efficiency commonly decreases. This conflict stands out in edge computing, given the limited resources at the edge. Sometimes, the input data itself is intense, such as video data and sensor fusion data, which puts additional burdens on the edge computing devices. Careful design in system architectures that balance the computation load between edge and cloud is expected to move towards this goal. Algorithm design that targets innovation in light-weight neural network structures and other models has shown effectiveness in reducing computation load at the edge while maintaining a good sensing performance. In summary, concerns are a tradeoff between real-time sensing and high intelligence, the network and computation resource constraint, and intense data input at the edge.

4.2. State of the Art

In this subsection, we summarize state-of-the-art models in edge computing for ITS sensing. The benefit of edge computing lies in the improvement in computation efficiency, network bandwidth usage, response time, cyber security, and privacy [1]. However, the resource constraints on edge devices are the key bottleneck for the implementation of high intelligence. Zhou et al. conducted a comprehensive survey on edge AI and considered edge computing is paving the last mile of AI [2]. In terms of AI model optimization at the edge, the compression of deep neural networks using pruning and quantization techniques is significant [222,223].
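
As a rough illustration of the two compression techniques named above, the sketch below applies magnitude pruning and symmetric 8-bit quantization to a flat list of weights. It is a toy example under stated assumptions, not the procedure of the cited works: real frameworks operate on tensors, often prune structurally, and fine-tune afterwards. All function names are hypothetical.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the smallest-magnitude fraction of the weights."""
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    threshold = sorted(abs(w) for w in weights)[k - 1]
    pruned, removed = [], 0
    for w in weights:
        # Cap removals at k so ties at the threshold do not over-prune.
        if abs(w) <= threshold and removed < k:
            pruned.append(0.0)
            removed += 1
        else:
            pruned.append(w)
    return pruned


def quantize_int8(weights):
    """Symmetric uniform quantization to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map quantized integers back to approximate float weights."""
    return [v * scale for v in q]
```

Pruning reduces the number of non-zero parameters, while quantization reduces the bits stored per parameter; the reconstruction error of each weight after dequantization is bounded by half the scale.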

In ITS applications, there have not been many pioneering studies that explore the design of both system architectures and algorithms for certain transportation scenarios using edge computing. It is widely known that edge computing with machine learning is a trend for ITS. Ferdowsi et al. introduced a new ITS architecture that relies on edge computing and deep learning to enhance computation, latency, and reliability [224]. They investigated the potential of using edge deep learning to solve multiple ITS challenges, including data heterogeneity, path planning, autonomous vehicle and platoon control, and cyber security.

Crowdsensing with the Internet of Vehicles (IoV) is one category of research using edge computing for ITS. Vehicles are individual nodes in the traffic network with local data collection and processing units. As a whole, they form the IoV network that can perform crowdsensing. One example is monitoring urban street parking spaces with in-vehicle edge video analytics [225]. In this work, smart phones serve as the data producer; the edge unit detects cars, signs, and GPS and uploads the ego-vehicle location and road identifier to the cloud for data aggregation. Another study uses a crowdsensing scheme and edge machine learning for road surface condition classification. A multi-classifier is applied at the edge to recognize road surface type and anomaly situation [226]. Liu et al. proposed SafeRNet, a safe transportation routing computation framework that utilized the Bayesian network to analyze crowdsensing traffic data to infer safe routes and deliver them to users in real time [227].

Some other studies focus on managing and optimizing the resources of the system to ensure efficient message delivery, computation, caching, and so on for IoV [6,7,228,229,230]. Dai et al. [7] exploited reinforcement learning (RL) to formulate a new architecture that dynamically optimizes edge computing and caching resources. Yuan et al. [229] proposed a two-level edge computing architecture for efficient content delivery of large-volume, time-varying, location-dependent, and delay-constrained automated driving services. Ji et al. [6] developed a relay cooperative transmission algorithm of IoV with aggregated interference at the destination node.

Another group of research on this topic focuses on developing machine learning methods for certain ITS tasks with edge computing, instead of for resource management in crowdsensing. Real-time video analytics, as the killer app for edge computing, has generated challenges and thereby huge interest for research [5,231]. Microsoft Research has explored a new architecture with deep learning and edge computing techniques for intersection traffic monitoring and potential conflict detection [5]. Ke et al. [4] designed a new architecture that splits the computation load into a cloud part and an edge part for smart parking surveillance. On the edge device, a Raspberry Pi, background subtraction and an SSD vehicle detector were implemented, and only the bounding box-related information was sent back to the cloud for object tracking and occupancy judgment. The proposed work improved efficiency, accuracy, and reliability of the sensing system in adverse weather conditions.
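
The bandwidth benefit of sending only bounding-box information instead of video can be sketched as follows. This is an illustrative example, not the message format used in [4]: the JSON field names, the (x, y, w, h, score) box layout, and the function names are assumptions.

```python
import json


def edge_message(camera_id, timestamp, boxes):
    """Serialize only detection metadata for upload to the cloud.

    boxes: list of (x, y, w, h, score) tuples produced by an edge detector
    """
    payload = {
        "camera": camera_id,
        "t": timestamp,
        "boxes": [
            {"x": x, "y": y, "w": w, "h": h, "score": round(s, 3)}
            for (x, y, w, h, s) in boxes
        ],
    }
    # Compact separators keep the message as small as plain JSON allows.
    return json.dumps(payload, separators=(",", ":")).encode("utf-8")


def raw_frame_bytes(width, height, channels=3):
    """Size in bytes of one uncompressed 8-bit frame."""
    return width * height * channels
```

For a 640 × 480 color frame, the uncompressed image is 921,600 bytes, while a message carrying a handful of boxes is a few hundred bytes. Video compression narrows this gap in practice, but the metadata message remains far smaller and contains no pixel data.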

Detecting parking space occupancy by lightweight CNN models on edge devices has also been investigated by different researchers [135,143,232]. Another lightweight CNN that comprised factorization convolution layers and compression layers was developed for edge computing and multiple object detection on an Nvidia Jetson device for transportation cyber-physical systems [232]. Cyber-attacks can also be detected in transportation cyber-physical systems using machine learning. Chen et al. proposed a deep belief network structure to achieve attack detection in a transportation mobile edge computing environment [233]. A UAV can also serve as an edge unit for attack detection for smart vehicles [234]. Another interesting application of edge machine learning is detecting road surface quality issues onboard a vehicle [235,236]. Traditional machine learning methods, such as random forest, appeared to perform well with high accuracy and real-time operation for this task.

4.3. Challenges in ITS Sensing

4.3.1. Challenge 1: Heterogeneity

Developing advanced ITS applications requires the adoption of different sensors and sensing methods. On a large scale, heterogeneity resides in many aspects, e.g., hardware, software, power supply, and data. Sensor hardware comes in a large variety for different ITS tasks. Magnetic sensors, radar sensors, infrared sensors, LiDAR, cameras, etc., are common sensor types that each offer unique advantages in certain scenarios. These sensors differ in cost, size, material, reliability, working environment, sensing capability, and so on. Not only is there a large variety of sensors themselves, but the hardware supporting the sensing functions for storage and protection is also diverse. The associated hardware may limit the applicability of sensors as well. A sensor with local storage is able to store data onsite for later use; a sensor with a waterproof shell is able to work outdoors, while those without may be usable only for indoor monitoring.

Even within the same type of sensors, there can be significant variance in detailed configurations, which influences the effectiveness and applicability of the sensors. Camera resolution is an example: high-resolution cameras are suitable for some tasks that are not possible for low-resolution cameras, such as small object detection, while low-resolution cameras may support less complicated tasks with better efficiency and lower cost. The installation locations of the same sensors also vary. As mentioned above, sensors onboard a vehicle or carried by a pedestrian have different functions from those installed on infrastructure. Some sensors can only be installed on infrastructure, and some are appropriate for onboard sensing. For example, loop detectors and magnetic nodes are most often on or underneath the road surface, while sensors for collision avoidance need to be onboard cars, buses, or trucks.

Software is another aspect that poses heterogeneity in ITS sensing. There is open-source software and proprietary software. Open-source software is free and flexible and can be customized for specific tasks; however, there is a relatively high risk that some open-source software is unreliable and may solely work in specific settings. There are many open-source codes on platforms such as GitHub. A good open-source tool can generate massive influence on the research community, such as open codes for Mask R-CNN [237], which has been widely applied for traffic object instance segmentation. Proprietary software is generally more reliable, and some software comes with customer services from the company who develop the software. These software tools are usually not free and have less flexibility in being customized. It is also hard to know the internal sensing algorithms or design. When an ITS system is composed of multiple software tools, which is likely the case most of the time, and these tools lack transparency or flexibility regarding communication, there will be hurdles in developing efficient and advanced ITS applications.

Heterogeneous settings in ITS sensing inevitably collect a heterogeneous mix of data, such as vehicle dynamics, traffic flow, driver behavior, safety measurements, and environmental features. There are uncertainties, noises, and missing patches in ITS data. Modern ITS applications would require data to be of high quality, integrated, and sometimes in real-time. Despite improving sensing functionality for individual sensors at a single location, new challenges arise in the integration of heterogeneous data. New technologies also pose challenges in data collection as some data under traditional settings will be redundant, and at the same time, new data will be required for some tasks, e.g., CAV safety and mixed autonomy.

Edge computing is promising in terms of improving data integration of different data sources. For example, Ke et al. [238] developed an onboard edge computing system based on Nvidia Jetson TX2 for near-crash detection based on video streams. The system leveraged edge computing for real-time video analytics and communicated with another LiDAR-based system onboard; while the sensor sets and data generated from the two onboard systems were very different, the designed edge data fusion framework was able to address the data heterogeneity of the two groups neatly through a CAN-based triggering mechanism. In future ITS, data heterogeneity problems are expected to be more complex, involving not only data from different sensors on the same entity but also data with completely different characteristics and generation processes. Edge computing will make it one step closer to an ideal solution by formatting the data immediately after they are produced.

4.3.2. Challenge 2: High Probability of Sensor Failure

Large-scale and real-time sensing requirements will have little tolerance for sensor failure because it may cause severe problems to the operation and safety of ITS. A representative example is sensor failure in an autonomous vehicle, which could lead to property damage, injuries, and even fatality. When ITS becomes more advanced, where one functional system will likely consist of multiple coordinated modules, the failure of one sensor could malfunction the entire system. For instance, a connected vehicle and infrastructure system may stop working because some infrastructure-mounted sensors have no readings, so the data flow for the system would be interrupted.

Sensor failure may rarely occur for any individual sensor, but according to probability theory, if the failure probability of one sensor is p during a specific period and sensors fail independently, the probability that at least one failure occurs among N sensors will be 1 − (1 − p)^N. When N is large enough, the probability of sensor failure will be very high. This problem is similar to fault tolerance in cloud computing, which is about designing a blueprint for continuing ongoing work when a few machines are down. However, in ITS sensing, sensor fault tolerance is more challenging due to: (1) the hardware, software, and data heterogeneity mentioned in the last sub-section; (2) the fact that, unlike cloud computing settings, sensors are connected to different networks or even not connected to any network; (3) the potential cost and loss from a sensor fault, which could be much more serious than one in cloud computing.
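
How quickly this probability grows with N can be checked numerically. The sketch below assumes that sensors fail independently with the same probability p, so that the chance of at least one failure among N sensors is 1 − (1 − p)^N; the function name is hypothetical, and correlated failures (e.g., a shared power outage) are not modeled.

```python
def prob_any_failure(p, n):
    """Probability that at least one of n independent sensors fails.

    p: failure probability of a single sensor during the period
    n: number of sensors
    """
    if not 0.0 <= p <= 1.0 or n < 0:
        raise ValueError("p must be in [0, 1] and n must be non-negative")
    # Complement of the event that all n sensors survive.
    return 1.0 - (1.0 - p) ** n
```

For example, with p = 0.001 and N = 1000, the probability of at least one failure is roughly 0.63, even though each individual sensor is 99.9% reliable over the period.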

This problem is inherent and hard to eliminate because, as discussed, even when the failure probability for a single sensor is very low, in city-scale ITS sensing applications, where there are hundreds or even thousands of heterogeneous sensors, the probability goes up significantly. Furthermore, it is not realistic to reduce the failure probability of a single sensor or device to zero in the real world. Edge computing could help improve the situation. On the one hand, an edge computing device or an edge server can make the network edge itself more robust to sensor faults with fault detection designs. A sensor directly connected to the network cannot notify the datacenter of its own failure, but when a sensor mounted on an edge device fails, the edge device would know and communicate with the datacenter. On the other hand, backup sensor sets could be deployed within an edge computing platform. With computing capability at the edge, the backup sensor set could be called in case of sensor failure. Nevertheless, more comprehensive solutions to sensor failure are still under exploration.
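
The two roles described above, fault detection at the edge and switching to a backup sensor, can be sketched with a simple heartbeat check. This is a hypothetical design for illustration only: the class name, the timeout value, and the alert strings are assumptions, and a real deployment would also need to validate reading quality rather than only the presence of readings.

```python
class EdgeSensorMonitor:
    """Tracks sensor heartbeats on an edge device and falls back to a backup."""

    def __init__(self, primary, backup, timeout_s=5.0):
        self.primary = primary
        self.backup = backup
        self.timeout_s = timeout_s
        self.last_seen = {}  # sensor id -> time of the most recent reading

    def record_reading(self, sensor_id, now):
        """Note that a reading arrived from sensor_id at time now (seconds)."""
        self.last_seen[sensor_id] = now

    def is_alive(self, sensor_id, now):
        """A sensor is alive if it produced a reading within the timeout."""
        last = self.last_seen.get(sensor_id)
        return last is not None and (now - last) <= self.timeout_s

    def check(self, now):
        """Return (active_sensor, alerts); alerts are meant for the datacenter."""
        alerts = []
        if self.is_alive(self.primary, now):
            return self.primary, alerts
        alerts.append(f"sensor {self.primary} silent for more than {self.timeout_s}s")
        if self.is_alive(self.backup, now):
            return self.backup, alerts
        alerts.append(f"backup {self.backup} also unavailable")
        return None, alerts
```

The edge device would call `check` periodically, forward any alerts to the datacenter, and read from whichever sensor is returned as active.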

4.3.3. Challenge 3: Sensing in Extreme Cases

ITS sensing tasks that seem simple could become extraordinarily complicated or unreliable in extreme cases, such as during adverse weather, due to occlusion, and at nighttime. A typical example is video sensing, which is sensitive to lighting changes, shadow, reflection, and so forth. In smart parking surveillance, a recent study showed that video-based detectors performed more reliably indoors than outdoors due to extreme lighting conditions and adverse weather [4]. Due to low-lighting conditions, even the cutting-edge video-based ADAS products on the market are not recommended for operation at night [239]. The LiDAR sensor is one of the most reliable sensors for ITS; however, LiDAR sensing performance downgrades in rainy and snowy weather, and it is also sensitive to objects with reflective surfaces. GPS sensors experience signal obstruction due to surrounding buildings, trees, tunnels, mountains, and even human bodies. Therefore, GPS sensors work well in open areas but not in areas where obstructions are unavoidable, such as downtown.

In ITS, especially in automated vehicle testing, extreme cases can also refer to corner cases that an automated and intelligent vehicle has not encountered before. For example, a pedestrian crossing the freeway at night may not be a common case that is thoroughly covered in the database, so a vehicle might not understand the sensing results enough to proceed confidently; therefore, it would cause uncertainty in the real-time decision making. Some corner cases may be created by attackers. Adding noise that is unnoticeable by human eyes to a traffic sign image could result in a missed detection of the sign [240]; these adversarial examples threaten the security and robustness of ITS sensing. Corner case detection appears to be one of the hurdles that slow down the pace towards L-5 autonomous driving. The first question is: how does a vehicle know when it encounters a corner case? The second question is: how should it handle the unforeseen situation? We expect that corner case handling will not only be an issue for the automated vehicle but is also faced by the broad ITS sensing components.

There have been research studies that focused on addressing extreme case challenges. Li et al. [241] developed a domain adaptation method that used UAV sensing data from daytime to train detectors for traffic sensing at nighttime. The transfer learning method is a promising direction to address extreme cases in sensing. With edge computing, the machine is expected to be able to collect onsite data and improve the sensing functions over time. A particular edge device at one certain location could overfit itself for improved sensing performance at that certain location, though overfitting is not good in traditional machine learning.

4.3.4. Challenge 4: Privacy Protection

Privacy protection is another major challenge. As ITS sensing becomes more advanced, more and more detailed information is available, and there have been increasing concerns regarding the use of the data and possible invasion of privacy. Bluetooth sensing detects the MAC addresses of devices such as cell phones and, in some applications, tracks the devices, which risks exposing not only people’s identities but also their location information. Camera images, when not properly protected, may contain private information, such as faces and license plates. These data are often stored on the cloud and are not owned by the people whose private information they contain.

Edge computing is a promising solution to privacy challenges. Data are collected and processed at the edge, and raw data containing private information are not transmitted to the cloud. In [238], video and other sensor data are processed onboard the vehicles, and most of the data are discarded in real time. While the primary purpose was to save network and cloud resources, privacy protection was achieved as a by-product of edge computing. Federated learning [2] is a learning mechanism for privacy protection that assumes that users at different locations/agencies cannot share all their data with the cloud datacenter, so learning with new data has to happen at the edge first, and only some intermediate values are then transmitted to the cloud.
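For the Bluetooth example, one way to keep raw identifiers at the edge is to replace each MAC address with a keyed hash before anything is uploaded. The following is a minimal sketch under stated assumptions: the key handling, the record fields, and the sensor name are hypothetical, and pseudonymization of this kind reduces exposure but does not by itself guarantee anonymity.

```python
import hashlib
import hmac
import secrets

# Hypothetical per-deployment secret; it stays on the edge devices and is rotated regularly.
DEVICE_KEY = secrets.token_bytes(32)

def pseudonymize_mac(mac_address, key=DEVICE_KEY):
    """Return a keyed hash of a MAC address so the raw value never leaves the device."""
    canonical = mac_address.strip().lower().replace("-", ":")
    digest = hmac.new(key, canonical.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]  # a truncated token is enough to match repeated sightings

def to_upload_record(mac_address, sensor_id, timestamp):
    """Build the record sent to the cloud; the raw MAC address is not included."""
    return {"token": pseudonymize_mac(mac_address), "sensor": sensor_id, "t": timestamp}

record = to_upload_record("AA-BB-CC-11-22-33", "bt_reader_07", 1700000000)
print(record)
```

Readers that must match the same device across locations (e.g., for travel time estimation) would need to share the key, and rotating it limits how long any token can be linked to a device.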

5. Future Research Directions

5.1. Resource-Efficient Edge Sensing Design

The state-of-the-art sensing models, especially those based on AI, are mostly resource-intensive and consume substantial computation, storage, network bandwidth, and power. Abundant hardware and network support is crucial for boosting the performance of the latest sensing methods. However, edge devices are resource-constrained. This sharp contrast naturally calls for the design of resource-friendly algorithms and system architectures for ITS sensing. There have been quite a few studies on AI model compression techniques, e.g., network pruning and quantization, that aim to reduce the size of neural networks. In addition to resizing existing AI models, another solution is to exploit AutoML and neural architecture search methods to search over the model parameter space while considering the edge hardware constraints [242,243]. Alongside general designs of AI models, it is sometimes beneficial to leverage the characteristics of certain ITS scenes and theories, which can simplify the models and even improve the overall robustness when incorporated appropriately. System design is also vital for edge sensing: system architecture design includes designs at the edge and designs across the edge and cloud. The purpose of system architecture design is to optimize the resource allocation to support the requirements of different sensing tasks.
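The two compression techniques named above can be illustrated on a plain list of weights. This is a toy sketch rather than a production pipeline: it assumes unstructured magnitude pruning and symmetric 8-bit quantization, and the weight values are made up.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned

def quantize_int8(weights):
    """Symmetric uniform quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    return [int(round(w / scale)) for w in weights], scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the integer codes."""
    return [q * scale for q in quantized]

weights = [0.82, -0.03, 0.40, 0.01, -1.27, 0.06]  # hypothetical layer weights
pruned = magnitude_prune(weights, sparsity=0.5)
quantized, scale = quantize_int8(pruned)
print(pruned)     # [0.82, 0.0, 0.4, 0.0, -1.27, 0.0]
print(quantized)  # [82, 0, 40, 0, -127, 0]
```

Pruning yields zeros that can be stored sparsely, and quantization stores each remaining weight in one byte instead of four; in practice both are usually followed by fine-tuning to recover accuracy.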

5.2. Federated Sensing

Federated learning, proposed by Google in 2016, enables multiple edge devices to jointly learn a centralized model from their data. The learning occurs both on the edge devices and in the centralized cloud. In ITS sensing, federated learning can be leveraged in many research areas (e.g., IoV sensing). Agents of the same type often run the same sensing model for a specific task. It would be helpful to update the model as multiple agents collect new data; this is expected to improve the overall sensing performance by training on more samples. In large-scale applications, new data accumulate quickly, so the general model could be retrained iteratively. However, at present, these valuable data are often discarded or stored for offline analysis. There is also a hurdle regarding data sharing among edge devices due to privacy issues or technology constraints. In the future, federated sensing schemes are expected to be devised to support real-time data sharing from edge devices and to enhance ITS sensing applications.
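The cloud-side aggregation step of federated averaging can be sketched as a sample-weighted mean of the model weights reported by each device. The sketch assumes that local training has already happened on every device and that all devices share the same model shape; the numbers are illustrative only.

```python
def federated_average(client_updates):
    """Sample-weighted average of client weight vectors (FedAvg aggregation).

    client_updates: list of (weights, num_samples) pairs, one per edge device.
    """
    total_samples = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    global_weights = [0.0] * dim
    for weights, n in client_updates:
        for i, w in enumerate(weights):
            global_weights[i] += w * n / total_samples
    return global_weights

# Hypothetical updates from three roadside devices after local training.
updates = [
    ([1.0, 2.0], 100),
    ([3.0, 4.0], 300),
    ([5.0, 0.0], 100),
]
print(federated_average(updates))  # approximately [3.0, 2.8]
```

Only model weights and sample counts cross the network in this scheme, so the raw sensor data stay on the devices that collected them.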

5.3. Cooperative Sensing by Infrastructure and Road Users

While federated learning will benefit multi-agent sensing for the same sensing task, sensor data integration across different ITS components is expected to be another research direction. At present, sensing tasks are carried out by individual road users or individual infrastructure, e.g., roadside radars for speed enforcement, surveillance cameras for traffic flow detection, and onboard LiDAR for collision avoidance. Even sensor fusion techniques are mostly about sensor signal integration at an individual agent, e.g., camera and LiDAR fusion onboard a vehicle. However, sensor data from different types of ITS components could provide richer information from different angles on the same problem. For example, for a freeway segment of interest, individual loop detectors are distributed at fixed locations, sampling the traffic flow (speed, volume, occupancy) about every 0.5–1 mile. There is no ground truth data regarding what goes on at locations not covered by the loop detectors. If we can develop a cooperative sensing mechanism that integrates vehicle telematics data or other onboard data, tasks such as congestion management and incident localization would benefit greatly. Another example is jointly detecting objects of interest (e.g., street parking spaces). From a certain angle, either from a road user or some roadside infrastructure, a certain parking space may be occluded; cooperative sensing on the edge could help improve the detection accuracy and reliability.
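For the freeway example, one textbook way to combine a loop-detector-based estimate with a probe-vehicle estimate of the same quantity is inverse-variance weighting. The sketch below assumes the two measurements are independent and that their variances are known; the speeds and variances are hypothetical.

```python
def fuse_estimates(estimates):
    """Inverse-variance weighted fusion of independent measurements.

    estimates: list of (value, variance) pairs describing the same quantity.
    Returns (fused_value, fused_variance).
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return fused, 1.0 / total

# Speed (mph) at a location between two loop detectors:
loop_interpolated = (55.0, 16.0)  # interpolated from detectors, less certain
probe_vehicles = (45.0, 4.0)      # reported by vehicles on the spot, more certain
print(fuse_estimates([loop_interpolated, probe_vehicles]))  # (47.0, 3.2)
```

The fused value leans towards the more certain source, and the fused variance is smaller than either input variance, which is the expected benefit of combining complementary sensors.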

5.4. ITS Sensing Data Abstraction at Edge

There will be a huge number of edge devices for ITS sensing. The large amount of data provided at the edge, even when it is not raw data, still needs further abstraction to a level that balances the workload and resources. A few questions may guide the exploration. First, to what extent should the edge devices conduct data abstraction? Second, data from different devices may be in different formats, e.g., the cooperative sensing data, so what are the abstraction and fusion frameworks for multi-source data? Third, if the data abstraction layer sits on top of the sensor layer, then, for a given application, how would the data abstraction strategies change as the sensor distributions change? We envision that appropriate data abstraction is the foundation for supporting advanced tools and application development in ITS sensing. Good data abstraction strategies at the edge will not only balance resource usage and information availability but also make the upper layers of pattern analysis and decision-making easier.
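As one concrete and deliberately simple instance of abstraction at the edge, per-vehicle detections can be reduced to per-interval volume and mean speed before upload. The record layout, field names, and interval length below are assumptions made for illustration.

```python
from collections import defaultdict

def abstract_detections(detections, interval_s=60):
    """Summarize per-vehicle detections into per-interval volume and mean speed.

    detections: iterable of (timestamp_s, speed_mph) tuples from one sensor.
    """
    buckets = defaultdict(list)
    for timestamp, speed in detections:
        buckets[int(timestamp // interval_s)].append(speed)
    summary = []
    for index in sorted(buckets):
        speeds = buckets[index]
        summary.append({
            "start_s": index * interval_s,
            "volume": len(speeds),
            "mean_speed_mph": sum(speeds) / len(speeds),
        })
    return summary

# Hypothetical detections from one roadside camera over two minutes.
detections = [(3, 60.0), (20, 58.0), (59, 62.0), (61, 40.0), (118, 44.0)]
for row in abstract_detections(detections):
    print(row)
# {'start_s': 0, 'volume': 3, 'mean_speed_mph': 60.0}
# {'start_s': 60, 'volume': 2, 'mean_speed_mph': 42.0}
```

Choosing `interval_s` is one form of the first question above: a longer interval saves bandwidth but hides short-lived events such as a sudden slowdown.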

5.5. Training and Sensing All at Edge

A previous survey on edge computing [2] summarized six levels in the development of edge intelligence, ranging from level-1 cloud-edge co-inference to level-6 both training and inference on edge devices. We agree on this point and envision that ITS sensing with edge computing will follow a similar path of development. At present, most edge computing applications in ITS are at level-1 to level-3, where the training happens on the cloud and models are deployed to the edge devices with or without compression/optimization. Sometimes the sensing function is performed collaboratively by the edge and the cloud. Since federated sensing is expected to emerge in the future and to benefit greatly from a steady data input for updating the general model, a natural extension is for each device to also update a customized sensing model online at the edge. Compared to a general model, all-at-edge training and sensing is more flexible and intelligent. However, this does not mean that centralized learning from distributed devices is not useful; even in the era of level-5 or level-6, we expect that there will be both model updating on single devices and, to some extent, aggregated learning for optimal sensing performance.
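To make the idea of online updating at the edge concrete, the sketch below trains a small logistic regression model one sample at a time with stochastic gradient descent, so no batch of data needs to be stored or uploaded. The model, the single occupancy feature, and the labels are hypothetical stand-ins for a real sensing model.

```python
import math

class OnDeviceClassifier:
    """Logistic regression updated one sample at a time on the edge device."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, features):
        """Probability of the positive class for one feature vector."""
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, features, label):
        """One stochastic gradient step on the log loss for a single sample."""
        error = self.predict_proba(features) - label
        self.weights = [w - self.learning_rate * error * x
                        for w, x in zip(self.weights, features)]
        self.bias -= self.learning_rate * error

# Hypothetical stream: feature = detector occupancy, label = 1 if congested.
stream = [([0.9], 1), ([0.8], 1), ([0.1], 0), ([0.2], 0)]
model = OnDeviceClassifier(n_features=1)
for _ in range(200):  # replaying the stream stands in for data arriving over time
    for features, label in stream:
        model.update(features, label)
print(model.predict_proba([0.9]) > 0.5)  # True
print(model.predict_proba([0.1]) < 0.5)  # True
```

The locally updated weights could still be shared periodically for aggregation, which matches the expectation that single-device updating and aggregated learning will coexist.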

6. Conclusions

The intersection between ITS and EC is expected to have enormous potential in smart city applications. This paper first reviewed the key components of ITS, including sensing, data pre-processing, pattern analysis, traffic prediction, information communication, and control. This was followed by a detailed review of the recent advances in ITS sensing, which summarized ITS sensing from three perspectives: infrastructure-based sensing, vehicle onboard sensing, and aerial sensing; under each of the three corresponding subsections, we further divided these perspectives into representative applications. Based on the review of state-of-the-art models in ITS sensing, the next section summarized three objectives of future ITS sensing (large-scale sensing, high intelligence, and real-time capability) and was followed by a review of recent edge computing applications in ITS sensing. Several key challenges in ITS sensing (heterogeneity, high probability of sensor failure, sensing in extreme cases, and privacy protection) and how edge computing could help address them were then discussed. Five future research directions were envisioned in Section 5: resource-efficient edge sensing design, federated sensing, cooperative sensing by infrastructure and road users, ITS sensing data abstraction at edge, and training and sensing all at edge. Edge computing applications in ITS sensing, as well as in other ITS components, are still in their infancy. The road ahead is full of opportunities and challenges.

Author Contributions

X.Z. and R.K. conceptualized and presented the idea. X.Z., R.K., H.Y. and C.L. studied the state-of-the-art methods and summarized the literature on ITS sensing and edge computing. X.Z. and R.K. took the lead in organizing and writing the manuscript, with the participation of H.Y. and C.L. All authors provided feedback, helped shape the research, and contributed to the final manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge Computing: Vision and Challenges. IEEE Internet Things J. 2016, 3, 637–646.

- Zhou, Z.; Chen, X.; Li, E.; Zeng, L.; Luo, K.; Zhang, J. Edge intelligence: Paving the last mile of artificial intelligence with edge computing. Proc. IEEE 2019, 107, 1738–1762.

- Abbas, N.; Zhang, Y.; Taherkordi, A.; Skeie, T. Mobile edge computing: A survey. IEEE Internet Things J. 2017, 5, 450–465.

- Ke, R.; Zhuang, Y.; Pu, Z.; Wang, Y. A Smart, Efficient, and Reliable Parking Surveillance System with Edge Artificial Intelligence on IoT Devices. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4962–4974.

- Ananthanarayanan, G.; Bahl, P.; Bodik, P.; Chintalapudi, K.; Philipose, M.; Ravindranath, L.; Sinha, S. Real-time video analytics: The killer app for edge computing. Computer 2017, 50, 58–67.

- Ji, B.; Han, Y.; Wang, Y.; Cao, D.; Tao, F.; Fu, Z.; Li, P.; Wen, H. Relay Cooperative Transmission Algorithms for IoV Under Aggregated Interference. IEEE Trans. Intell. Transp. Syst. 2021, 1–14.

- Dai, Y.; Xu, D.; Maharjan, S.; Qiao, G.; Zhang, Y. Artificial Intelligence Empowered Edge Computing and Caching for Internet of Vehicles. IEEE Wirel. Commun. 2019, 3, 12–18.

- Al-Turjman, F.; Malekloo, A. Smart parking in IoT-enabled cities: A survey. Sustain. Cities Soc. 2019, 49, 101608.

- Ke, R. Real-Time Video Analytics Empowered by Machine Learning and Edge Computing for Smart Transportation Applications; University of Washington: Seattle, WA, USA, 2020.

- Ban, X.J.; Herring, R.; Margulici, J.D.; Bayen, A.M. Optimal Sensor Placement for Freeway Travel Time Estimation. In Transportation and Traffic Theory 2009: Golden Jubilee; Springer: Berlin/Heidelberg, Germany, 2009; pp. 697–721.

- Sharma, A.; Bullock, D.M.; Bonneson, J.A. Input-output and hybrid techniques for real-time prediction of delay and maximum queue length at signalized intersections. Transp. Res. Rec. 2007, 2035, 69–80.

- Wang, Y.; Nihan, N.L. Freeway traffic speed estimation with single-loop outputs. Transp. Res. Rec. 2000, 1727, 120–126.

- Cheung, S.Y.; Coleri, S.; Dundar, B.; Ganesh, S.; Tan, C.W.; Varaiya, P. Traffic measurement and vehicle classification with single magnetic sensor. Transp. Res. Rec. 2005, 1917, 173–181.

- Haoui, A.; Kavaler, R.; Varaiya, P. Wireless magnetic sensors for traffic surveillance. Transp. Res. Part C Emerg. Technol. 2008, 16, 294–306.

- Buch, N.; Velastin, S.A.; Orwell, J. A review of computer vision techniques for the analysis of urban traffic. IEEE Trans. Intell. Transp. Syst. 2011, 12, 920–939.

- Datondji, S.R.E.; Dupuis, Y.; Subirats, P.; Vasseur, P. A Survey of Vision-Based Traffic Monitoring of Road Intersections. IEEE Trans. Intell. Transp. Syst. 2016, 17, 2681–2698.

- Odat, E.; Shamma, J.S.; Claudel, C. Vehicle Classification and Speed Estimation Using Combined Passive Infrared/Ultrasonic Sensors. IEEE Trans. Intell. Transp. Syst. 2018, 19, 1593–1606.

- Zhao, J.; Xu, H.; Liu, H.; Wu, J.; Zheng, Y.; Wu, D. Detection and tracking of pedestrians and vehicles using roadside LiDAR sensors. Transp. Res. Part C Emerg. Technol. 2019, 100, 68–87.

- Sen, R.; Siriah, P.; Raman, B. RoadSoundSense: Acoustic sensing based road congestion monitoring in developing regions. In Proceedings of the 8th Annual IEEE Communications Society Conference on Sensor, Mesh and Ad Hoc Communications and Networks, Salt Lake City, UT, USA, 27–30 June 2011; pp. 125–133.

- Malinovskiy, Y.; Saunier, N.; Wang, Y. Analysis of pedestrian travel with static bluetooth sensors. Transp. Res. Rec. 2012, 2299, 137–149.