Automatic Handgun Detection with Deep Learning in Video Surveillance Images

Abstract

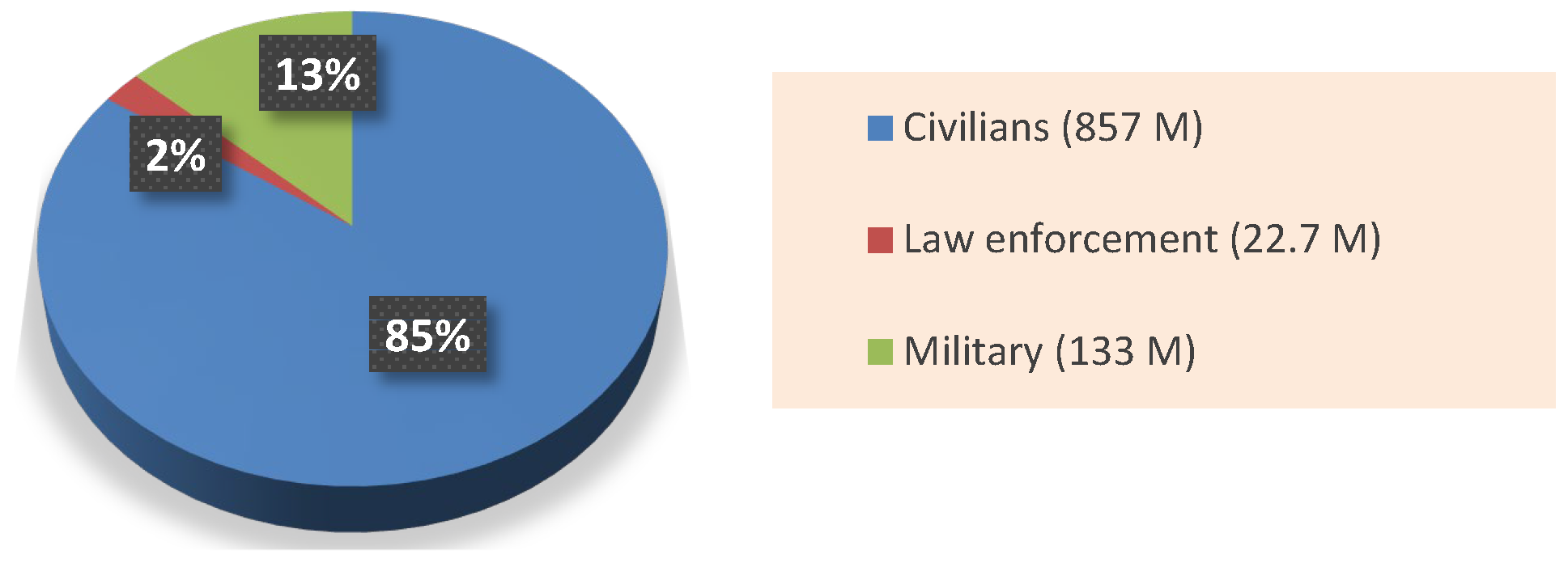

1. Introduction

- CCTV video surveillance;

- Patrol of security agents;

- Scanning luggage through X-rays;

- Active metal detection;

- Individual frisking of people.

- Be able to perform real-time detection;

- Have a very low rate of undetected visible weapons (false negative rate (FNR)).

2. Related Works

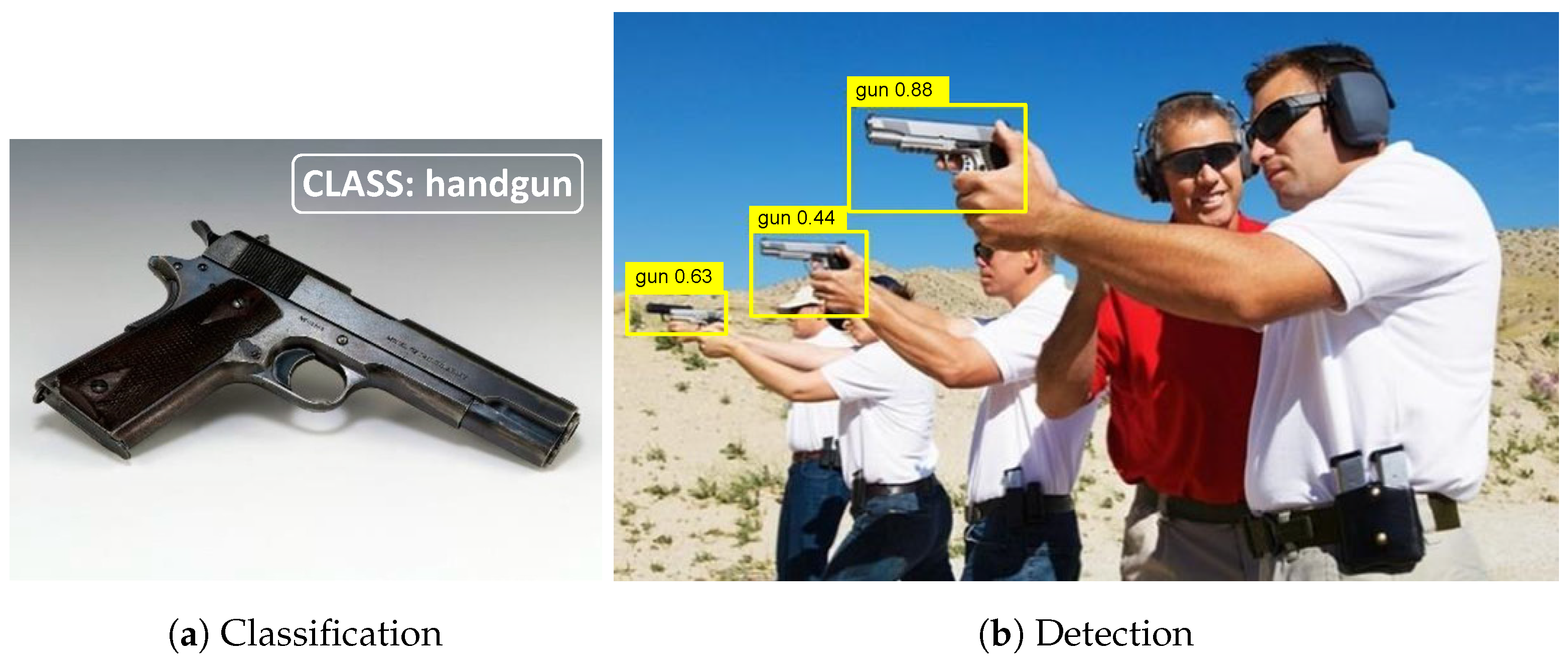

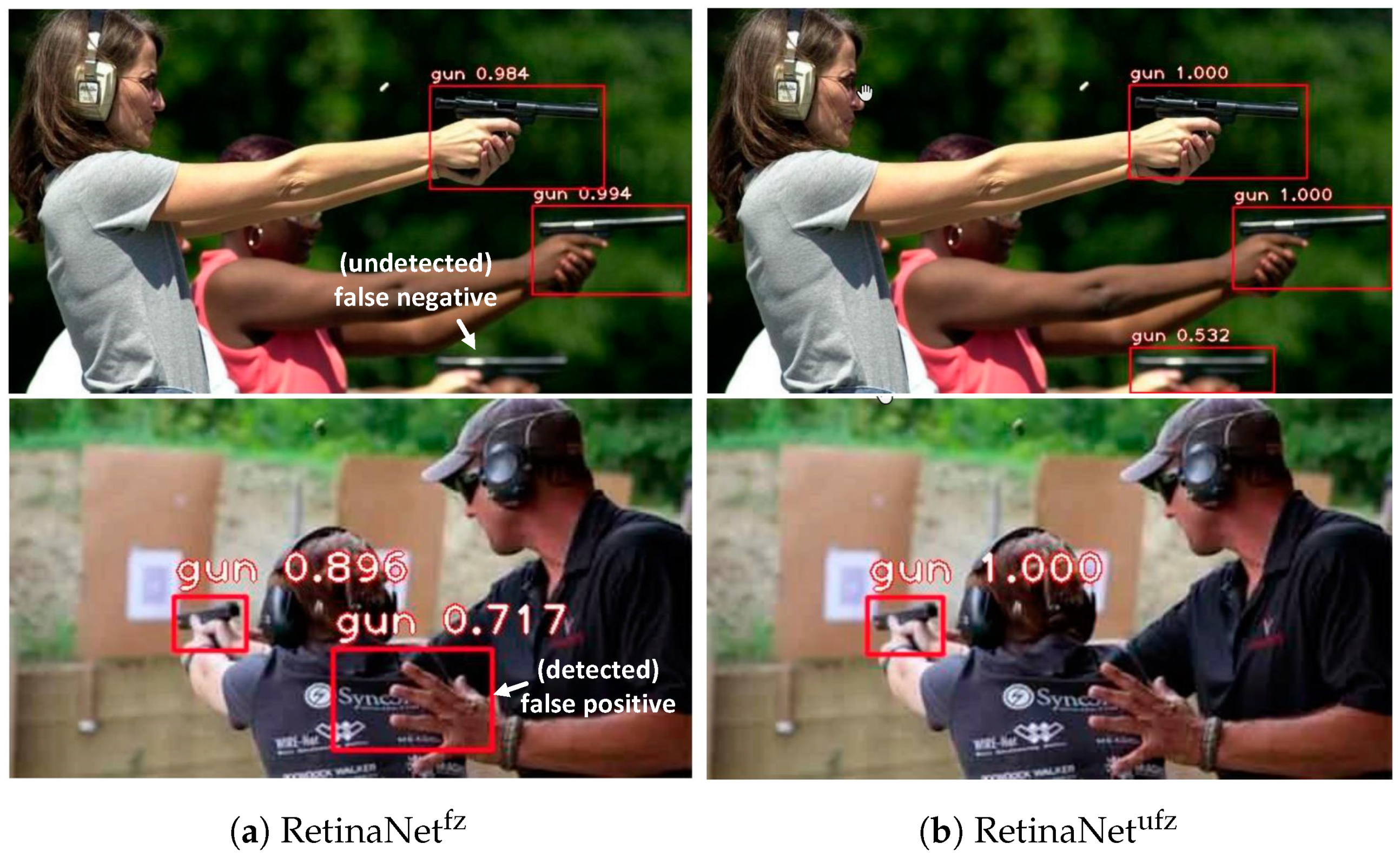

- Detection [8,10]: Given an image with multiple objects present in it, each object must be located by marking in the image the bounding box (bbox) that contains it. A label indicating the type of object contained and a certainty value (between zero and one) for that prediction are added to each bbox (see the example in Figure 2b). It is common to consider a prediction valid, whether it is correct or not, when its certainty or confidence score exceeds a threshold value (e.g., 0.5);

- Segmentation: Given an image, each pixel must be labeled with the class of the object to which that pixel belongs.

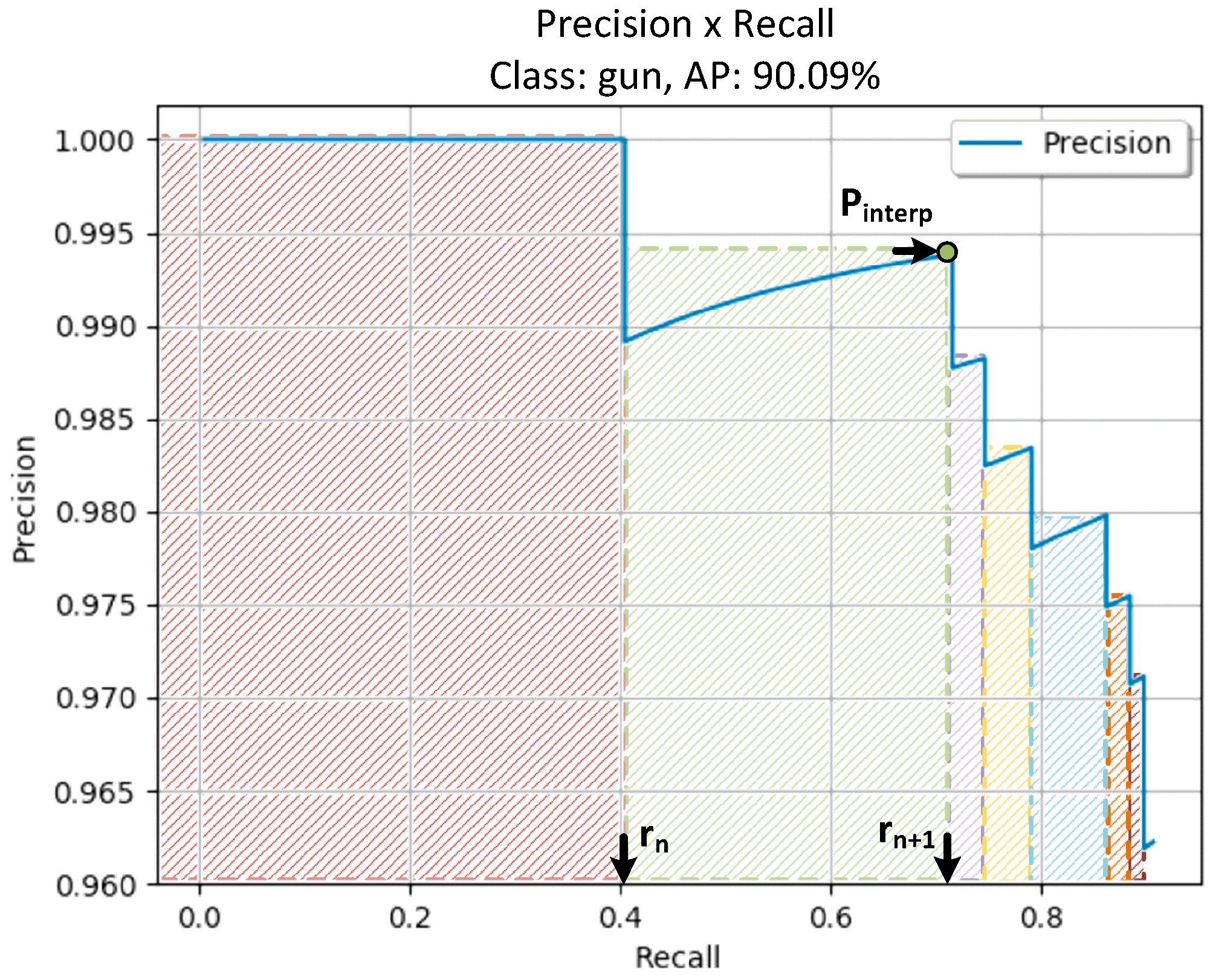

2.1. Performance Metrics

- Confidence score of the detection: This is the value in the range [0, 1] produced by the algorithm, which represents the certainty that the object within the box belongs to the indicated class;

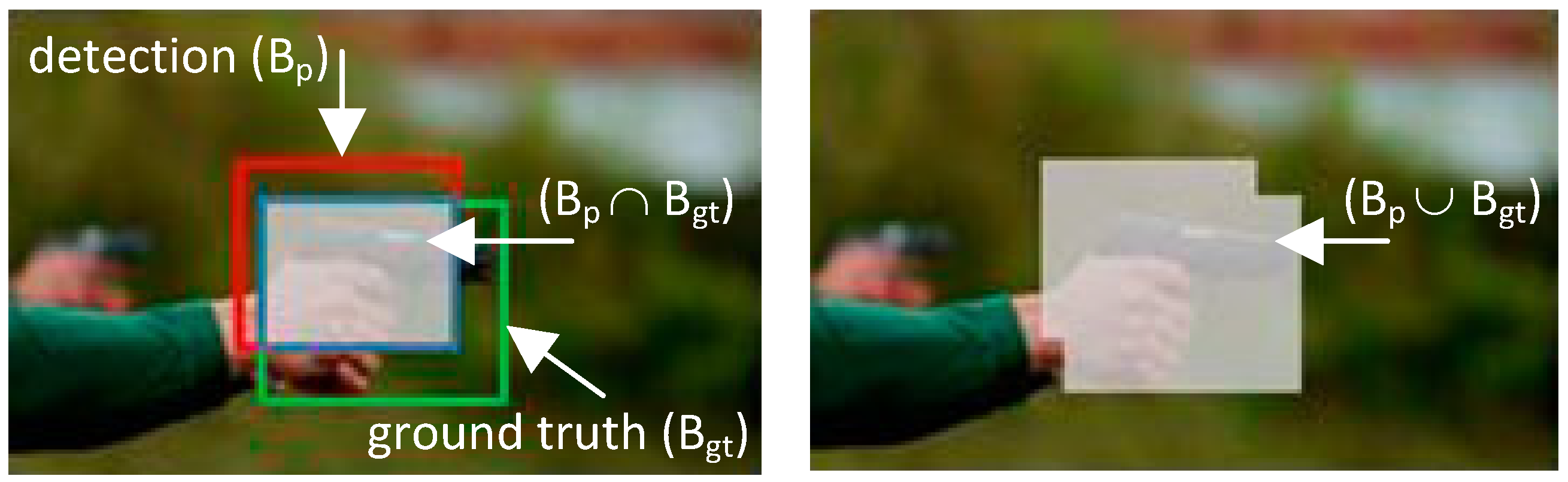

- Intersection over union (IoU): This takes into account the area of the object bbox in the ground truth and that of the bbox obtained by the detection algorithm when both areas overlap. It is calculated as the ratio of the area of their intersection to the area of their union (see Equation (1) and Figure 3). By definition, it is a value in the range [0, 1].

- The confidence score for the detection is greater than a threshold value;

- The class that is predicted for the detected object matches the class included in the ground truth (GT) for that object;

- The IoU value for the detected object exceeds a threshold (usually ≥0.5).
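The three conditions above can be sketched in a few lines of Python. This is only an illustration, not the evaluation code used in the study. It assumes boxes are given as `(x_min, y_min, x_max, y_max)` tuples; the function names `iou` and `is_true_positive` and the default thresholds of 0.5 are hypothetical choices made for the example.

```python
from typing import Tuple

# Assumed box convention for this sketch: (x_min, y_min, x_max, y_max).
Box = Tuple[float, float, float, float]


def area(box: Box) -> float:
    """Area of an axis-aligned box; empty or inverted boxes count as zero."""
    x_min, y_min, x_max, y_max = box
    return max(0.0, x_max - x_min) * max(0.0, y_max - y_min)


def iou(gt_box: Box, pred_box: Box) -> float:
    """Intersection over union of two boxes, a value in [0, 1]."""
    # The intersection is itself a box (possibly empty).
    inter_box = (
        max(gt_box[0], pred_box[0]),
        max(gt_box[1], pred_box[1]),
        min(gt_box[2], pred_box[2]),
        min(gt_box[3], pred_box[3]),
    )
    inter = area(inter_box)
    union = area(gt_box) + area(pred_box) - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(
    pred_box: Box,
    pred_class: str,
    score: float,
    gt_box: Box,
    gt_class: str,
    score_thr: float = 0.5,  # hypothetical default
    iou_thr: float = 0.5,    # hypothetical default
) -> bool:
    """Apply the three conditions: confidence, class match, and IoU."""
    return (
        score > score_thr
        and pred_class == gt_class
        and iou(gt_box, pred_box) >= iou_thr
    )


if __name__ == "__main__":
    gt = (0.0, 0.0, 10.0, 10.0)
    pred = (0.0, 0.0, 10.0, 8.0)
    print(iou(gt, pred))
    print(is_true_positive(pred, "handgun", 0.9, gt, "handgun"))
```

A detection that fails any one of the three checks would be counted as a false positive, and a ground truth object left without a matching detection as a false negative.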

2.2. Two-Stage Detectors

2.3. Single-Stage Detectors

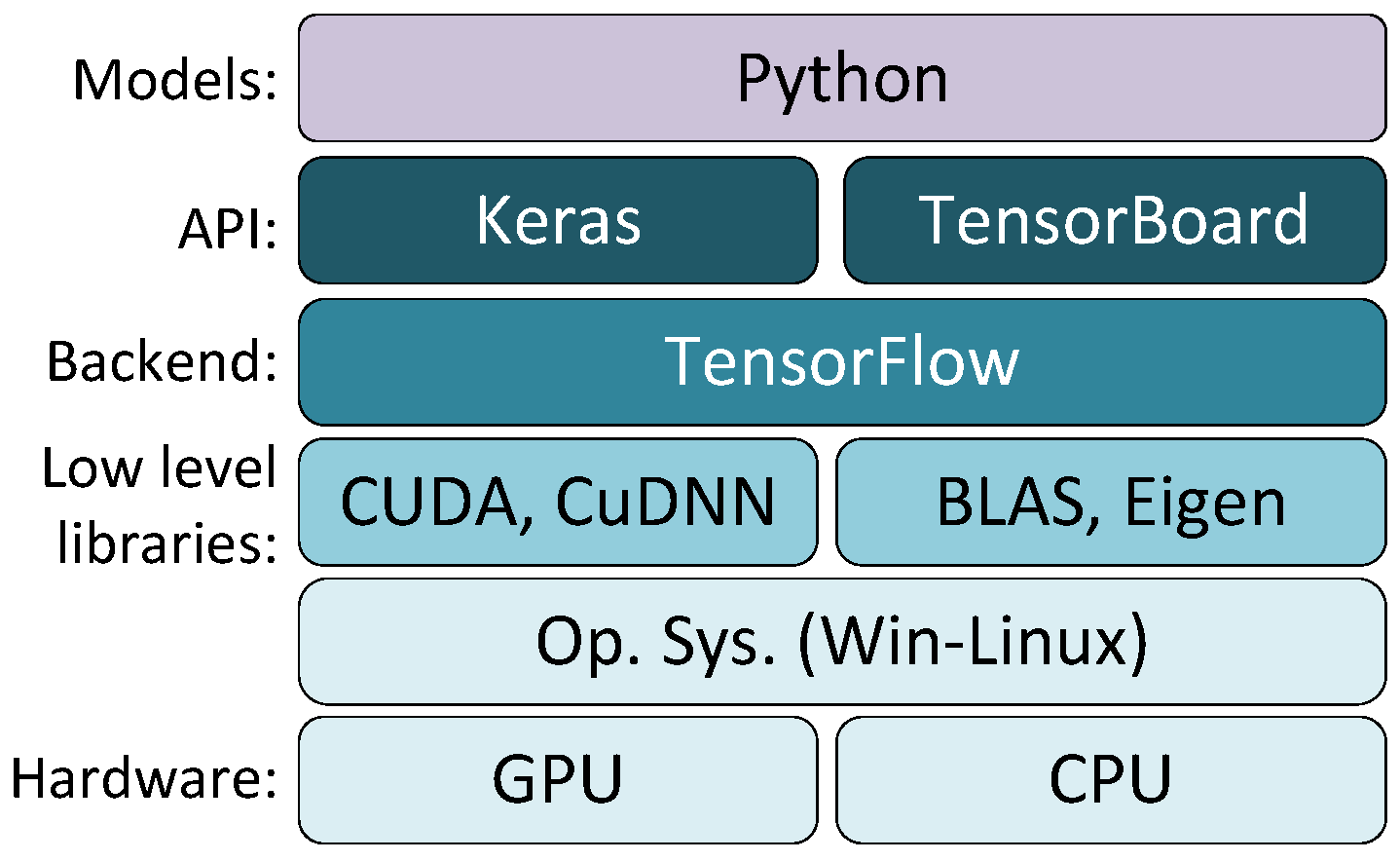

2.4. Components of Detection Architectures

- Neck: This is the part of the network that strengthens the results by offering invariance to scale, through a network that takes feature maps at different scales as its input. Common implementations are the feature pyramid network (FPN) [40] and the multilevel feature pyramid network (MLFPN) [41];

- Detection head: This is the output layer that provides the location prediction of the bbox that delimits each object and the confidence score for a particular class prediction.

2.5. Detection of Weapons and the Associated Pose

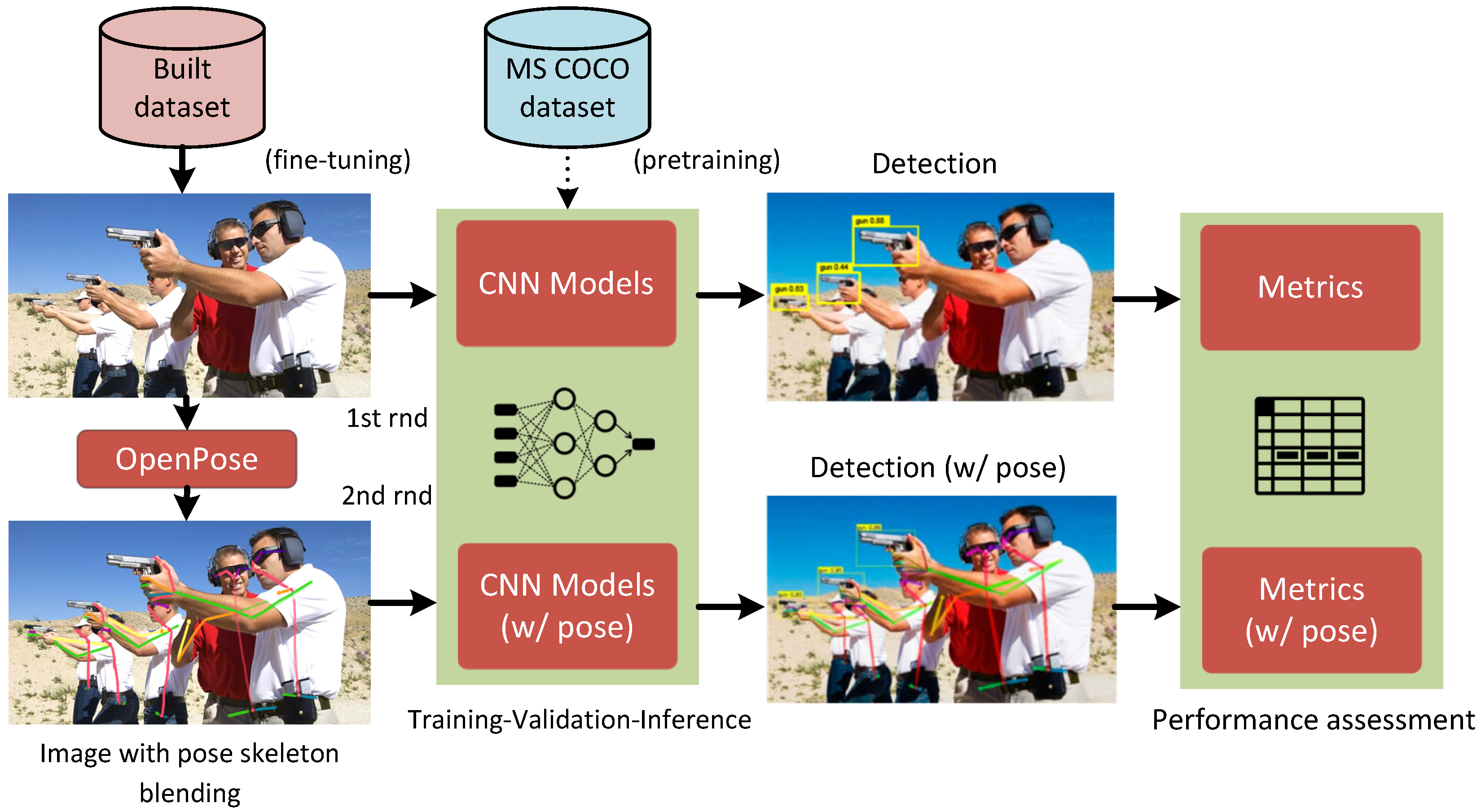

3. Materials and Methods

- The image/frame should not be a close-up of a handgun (as in the datasets used in classification problems). Handguns should be part of the scene, and they may be small relative to the whole image;

- If possible, the images should be representative of true scenes captured by video surveillance systems;

- Images should correspond to situations that give the models enough generalization capacity; that is, the images should cover situations from different perspectives, displaying several people in various poses, even with more than one visible gun;

- Noisy and low-quality images should be avoided. This favors using fewer data with high-quality information over more data with low-quality information.

4. Results

5. Conclusions

- Compare the performance of the three models;

- Analyze the influence of fine-tuning with an unfrozen/frozen backbone network for the RetinaNet model;

- Analyze the improvement of the detection quality obtained by training the models on the dataset with pose information associated with held handguns, incorporated through a simple method of blending the pose skeletons into the input images.

- RetinaNet trained with an unfrozen backbone on images without the pose information (exp. 5) obtained the best results in terms of the average precision (96.36%) and recall (97.23%);

- YOLOv3 (Experiments 7 and 8) obtained the best precision (94.79–96.23%) and F1 score (91.74–93.36%) values;

- Training on images with pose-related information, added by blending the pose skeletons generated by OpenPose into the input images, gave worse detection results for the Faster R-CNN and RetinaNet models (exp. 2, 4, and 6). However, in Experiment 8, YOLOv3 consistently improved every assessment metric when trained on images incorporating the explicit pose information (precision ↑ 1.44, recall ↑ 1.78, F1 ↑ 1.62, and AP ↑ 1.60). This promising result encouraged us to further our studies on how to improve the way pose information is incorporated into the models;

- When the models were trained on the dataset including the pose information, our method of blending the pose skeletons obtained better results than the previous alternative methods.
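The blending step mentioned in these conclusions is not specified in detail here, so the following is only one plausible reading. It assumes the pose skeleton has already been rendered (for example, from OpenPose keypoints) as an RGB image of the same size as the frame, with a black background. The function name `blend_pose` and the weight `alpha` are hypothetical and are not taken from the original work.

```python
import numpy as np


def blend_pose(image: np.ndarray, skeleton: np.ndarray, alpha: float = 0.5) -> np.ndarray:
    """Alpha-blend a rendered pose skeleton into an image.

    Both inputs are assumed to be uint8 arrays of shape (H, W, 3).
    Pixels where the skeleton image is black (assumed background)
    are left unchanged, so only the drawn limbs alter the frame.
    """
    if image.shape != skeleton.shape:
        raise ValueError("image and skeleton must have the same shape")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")

    img = image.astype(np.float32)
    skl = skeleton.astype(np.float32)

    # True wherever any channel of the skeleton image is non-zero.
    drawn = skeleton.any(axis=-1, keepdims=True)

    # Weighted sum on skeleton pixels, original values elsewhere.
    mixed = (1.0 - alpha) * img + alpha * skl
    out = np.where(drawn, mixed, img)
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)


if __name__ == "__main__":
    frame = np.full((4, 4, 3), 100, dtype=np.uint8)
    pose = np.zeros((4, 4, 3), dtype=np.uint8)
    pose[1, 1] = (200, 0, 0)  # a single "limb" pixel
    print(blend_pose(frame, pose)[1, 1])
```

The blended frame keeps the same shape and type as the input, so under this assumption it could be fed to a detector without changing the network input layer.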

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AP | Average precision |
| API | Application programming interface |
| bbox | Bounding box |
| CCTV | Closed-circuit television |
| CNN | Convolutional neural network |
| FN | False negative |
| FNR | False negative rate |
| FP | False positive |
| FPN | Feature pyramid network |
| fps | Frames per second |
| GT | Ground truth |
| GT_P | Ground truth positives (labeled in the ground truth) |
| IoU | Intersection over union |
| LSTM | Long short-term memory |
| mAP | Mean average precision |
| MLFPN | Multilevel feature pyramid network |
| PPV | Positive predictive value (precision) |
| PxR | Precision × recall (curve) |
| R-CNN | Region-based convolutional neural network |
| SSD | Single shot multibox detector |
| SVM | Support vector machine |
| TP | True positive |
| TPR | True positive rate (recall) |

References

1. Karp, A. Estimating Global Civilian-Held Firearms Numbers. Briefing Paper in Small Arms Survey. 2018. Available online: http://www.smallarmssurvey.org/ (accessed on 4 March 2021).

2. Spagnolo, P.; Mazzeo, P.L.; Distante, C. (Eds.) Human Behavior Understanding in Networked Sensing; Springer International Publishing: Cham, Switzerland, 2014.

3. Leo, M.; Spagnolo, P.; D’Orazio, T.; Mazzeo, P.L.; Distante, A. Real-time smart surveillance using motion analysis. Expert Syst. 2010, 27, 314–337.

4. Bianco, V.; Mazzeo, P.; Paturzo, M.; Distante, C.; Ferraro, P. Deep learning assisted portable IR active imaging sensor spots and identifies live humans through fire. Opt. Lasers Eng. 2020, 124, 105818.

5. Xiao, Z.; Lu, X.; Yan, J.; Wu, L.; Ren, L. Automatic detection of concealed pistols using passive millimeter wave imaging. In Proceedings of the 2015 IEEE International Conference on Imaging Systems and Techniques (IST), Macau, China, 16–18 September 2015.

6. Tiwari, R.K.; Verma, G.K. A Computer Vision based Framework for Visual Gun Detection Using Harris Interest Point Detector. Procedia Comput. Sci. 2015, 54, 703–712.

7. Grega, M.; Matiolański, A.; Guzik, P.; Leszczuk, M. Automated Detection of Firearms and Knives in a CCTV Image. Sensors 2016, 16, 47.

8. Sultana, F.; Sufian, A.; Dutta, P. A Review of Object Detection Models Based on Convolutional Neural Network. In Advances in Intelligent Systems and Computing; Springer: Singapore, 2020; pp. 1–16.

9. Khan, A.; Sohail, A.; Zahoora, U.; Qureshi, A.S. A survey of the recent architectures of deep convolutional neural networks. Artif. Intell. Rev. 2020, 53, 5455–5516.

10. Zhao, Z.; Zheng, P.; Xu, S.T.; Wu, X. Object Detection With Deep Learning: A Review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

11. Rawat, W.; Wang, Z. Deep Convolutional Neural Networks for Image Classification: A Comprehensive Review. Neural Comput. 2017, 29, 2352–2449.

12. Goodfellow, I.; Bengio, Y.; Courville, A.; Bach, F. Deep Learning; MIT Press Ltd.: London, UK, 2016; ISBN 9780262035613.

13. Khan, M.A.; Kim, J. Toward Developing Efficient Conv-AE-Based Intrusion Detection System Using Heterogeneous Dataset. Electronics 2020, 9, 1771.

14. Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

15. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Computer Vision—ECCV 2014; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2014; Volume 8693, pp. 740–755.

16. Kuznetsova, A.; Rom, H.; Alldrin, N.; Uijlings, J.; Krasin, I.; Pont-Tuset, J.; Kamali, S.; Popov, S.; Malloci, M.; Kolesnikov, A.; et al. The Open Images Dataset V4: Unified image classification, object detection, and visual relationship detection at scale. arXiv 2018, arXiv:1811.00982.

17. Padilla, R.; Passos, W.L.; Dias, T.L.B.; Netto, S.L.; da Silva, E.A.B. A Comparative Analysis of Object Detection Metrics with a Companion Open-Source Toolkit. Electronics 2021, 10, 279.

18. Padilla, R.; Netto, S.L.; da Silva, E.A.B. A Survey on Performance Metrics for Object-Detection Algorithms. In Proceedings of the 2020 International Conference on Systems, Signals and Image Processing (IWSSIP), Niteroi, Brazil, 1–3 July 2020.

19. Gelana, F.; Yadav, A. Firearm Detection from Surveillance Cameras Using Image Processing and Machine Learning Techniques. In Smart Innovations in Communication and Computational Sciences; Springer: Singapore, 2018; pp. 25–34.

20. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

21. Uijlings, J.R.R.; van de Sande, K.E.A.; Gevers, T.; Smeulders, A.W.M. Selective Search for Object Recognition. Int. J. Comput. Vis. 2013, 104, 154–171.

22. Elsner, J.; Fritz, T.; Henke, L.; Jarrousse, O.; Taing, S.; Uhlenbrock, M. Automatic Weapon Detection in Social Media Image Data Using a Two-Pass Convolutional Neural Network; European Law Enforcement Research Bulletin: Budapest, Hungary, 2018; (4 SCE); pp. 61–65.

23. Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

24. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

25. Verma, G.K.; Dhillon, A. A Handheld Gun Detection using Faster R-CNN Deep Learning. In Proceedings of the 7th International Conference on Computer and Communication Technology-ICCCT-2017, Allahabad, India, 24–26 November 2017; ACM Press: New York, NY, USA, 2017; pp. 84–88.

26. Olmos, R.; Tabik, S.; Herrera, F. Automatic handgun detection alarm in videos using deep learning. Neurocomputing 2018, 275, 66–72.

27. Salazar González, J.L.; Zaccaro, C.; Álvarez García, J.A.; Soria Morillo, L.M.; Sancho Caparrini, F. Real-time gun detection in CCTV: An open problem. Neural Netw. 2020, 132, 297–308.

28. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

29. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

30. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision–ECCV 2016; Springer: Cham, Switzerland, 2016; pp. 21–37.

31. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal Loss for Dense Object Detection. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

32. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

33. Romero, D.; Salamea, C. Convolutional Models for the Detection of Firearms in Surveillance Videos. Appl. Sci. 2019, 9, 2965.

34. Kanehisa, R.; Neto, A. Firearm Detection using Convolutional Neural Networks. In Proceedings of the 11th International Conference on Agents and Artificial Intelligence-Volume 2: ICAART, Prague, Czech Republic, 19–21 February 2019; INSTICC. SciTePress: Setubal, Portugal, 2019; pp. 707–714.

35. Warsi, A.; Abdullah, M.; Husen, M.N.; Yahya, M.; Khan, S.; Jawaid, N. Gun Detection System Using YOLOv3. In Proceedings of the 2019 IEEE International Conference on Smart Instrumentation, Measurement and Application (ICSIMA), Kuala Lumpur, Malaysia, 27–29 August 2019.

36. Sumit, S.S.; Watada, J.; Roy, A.; Rambli, D. In object detection deep learning methods, YOLO shows supremum to Mask R-CNN. J. Phys. Conf. Ser. 2020, 1529, 042086.

37. Warsi, A.; Abdullah, M.; Husen, M.N.; Yahya, M. Automatic Handgun and Knife Detection Algorithms: A Review. In Proceedings of the 2020 14th International Conference on Ubiquitous Information Management and Communication (IMCOM), Taichung, Taiwan, 3–5 January 2020.

38. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

39. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv 2015, arXiv:1512.03385.

40. Lin, T.Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

41. Zhao, Q.; Sheng, T.; Wang, Y.; Tang, Z.; Chen, Y.; Cai, L.; Ling, H. M2Det: A Single-Shot Object Detector Based on Multi-Level Feature Pyramid Network. Proc. AAAI Conf. Artif. Intell. 2019, 33, 9259–9266.

42. Elmir, Y.; Laouar, S.A.; Hamdaoui, L. Deep Learning for Automatic Detection of Handguns in Video Sequences. In Proceedings of the 3rd edition of the National Study Day on Research on Computer Sciences (JERI 2019), Saida, Algeria, 27 April 2019.

43. Lim, J.; Jobayer, M.I.A.; Baskaran, V.M.; Lim, J.M.; Wong, K.; See, J. Gun Detection in Surveillance Videos using Deep Neural Networks. In Proceedings of the 2019 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Lanzhou, China, 18–21 November 2019.

44. Velasco Mata, A. Human Pose Information as an Improvement Factor for Handgun Detection. Master’s Thesis, Escuela Superior de Informática, Univ. de Castilla-La Mancha, Ciudad Real, Spain, 2020.

45. Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.E.; Sheikh, Y. OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 172–186.

46. Xu, Y. Faster R-CNN (Object Detection) Implemented by Keras for Custom Data from Google’s Open Images . . . . Towards Data Science. 2018. Available online: https://towardsdatascience.com/faster-r-cnn-object-detection-implemented-by-keras-for-custom-data-from-googles-open-images-125f62b9141a (accessed on 29 June 2021).

47. Gaiser, H.; Vries, M.D.; Lacatusu, V.; Vcarpani; Williamson, A.; Liscio, E.; Andras; Henon, Y.; Jjiun; Gratie, C.; et al. fizyr/keras-retinanet 0.5.1. 2019. GitHub. Available online: https://github.com/fizyr/keras-retinanet (accessed on 4 March 2021).

48. Balsys, R. pythonlessons/YOLOv3-Object-Detection-Tutorial. 2019. Available online: https://pylessons.com/YOLOv3-TF2-introduction/ (accessed on 18 March 2021).

49. Darrenl. Tzutalin/Labelimg. 2018. GitHub. Available online: https://github.com/tzutalin/labelImg (accessed on 4 March 2021).

50. Lee, K.; Lee, I.; Lee, S. Propagating LSTM: 3D Pose Estimation Based on Joint Interdependency. In Computer Vision–ECCV 2018; Springer: Cham, Switzerland, 2018; pp. 123–141.

| Exp. | Model | #TP | #FP | #FN | Precision (%) | Recall (%) | F1 (%) | AP (%) |
|---|---|---|---|---|---|---|---|---|
| 1 | Faster R-CNN | 194 | 88 | 31 | 68.79 | 86.22 | 76.53 | 81.43 |
| 2 | Faster R-CNN (with pose) | 190 | 71 | 35 | 72.80 | 84.44 | 78.19 | 80.79 |
| 3 | RetinaNet, frozen backbone | 211 | 25 | 16 | 89.41 | 92.89 | 91.54 | 93.12 |
| 4 | RetinaNet, frozen backbone (with pose) | 203 | 29 | 22 | 87.50 | 90.22 | 88.84 | 89.71 |
| 5 | RetinaNet, unfrozen backbone | 219 | 35 | 6 | 86.22 | 97.23 | 91.44 | 96.36 |
| 6 | RetinaNet, unfrozen backbone (with pose) | 210 | 25 | 15 | 89.36 | 93.33 | 91.30 | 92.82 |
| 7 | YOLOv3 | 200 | 11 | 25 | 94.79 | 88.89 | 91.74 | 88.49 |
| 8 | YOLOv3 (with pose) | 204 | 8 | 21 | 96.23 | 90.67 | 93.36 | 90.09 |
| [44] | Velasco’s work (with pose) | 158 | 2 | 39 | 98.75 | 80.20 | 88.51 | 83.6 |
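The precision, recall, and F1 columns in the table above follow from the #TP, #FP, and #FN counts using the standard definitions. The sketch below recomputes them for the two YOLOv3 rows; the function name `detection_metrics` is hypothetical. AP is not included because it depends on the full precision × recall curve and cannot be recovered from the counts alone.

```python
from typing import Dict


def detection_metrics(tp: int, fp: int, fn: int) -> Dict[str, float]:
    """Precision, recall, F1 and FNR (all in %) from detection counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    fnr = fn / (tp + fn) if (tp + fn) else 0.0  # false negative rate = 1 - recall
    return {
        "precision": 100 * precision,
        "recall": 100 * recall,
        "f1": 100 * f1,
        "fnr": 100 * fnr,
    }


if __name__ == "__main__":
    # Counts taken from rows 7 and 8 of the table.
    yolo = detection_metrics(tp=200, fp=11, fn=25)
    yolo_pose = detection_metrics(tp=204, fp=8, fn=21)
    for name in ("precision", "recall", "f1"):
        gain = round(yolo_pose[name], 2) - round(yolo[name], 2)
        print(f"{name}: {yolo[name]:.2f} -> {yolo_pose[name]:.2f} (gain {gain:.2f})")
```

The same function also gives the false negative rate (FNR), the share of visible weapons that go undetected.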

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Salido, J.; Lomas, V.; Ruiz-Santaquiteria, J.; Deniz, O. Automatic Handgun Detection with Deep Learning in Video Surveillance Images. Appl. Sci. 2021, 11, 6085. https://doi.org/10.3390/app11136085
