Toward Future Automatic Warehouses: An Autonomous Depalletizing System Based on Mobile Manipulation and 3D Perception

Abstract

1. Introduction

2. Related Work

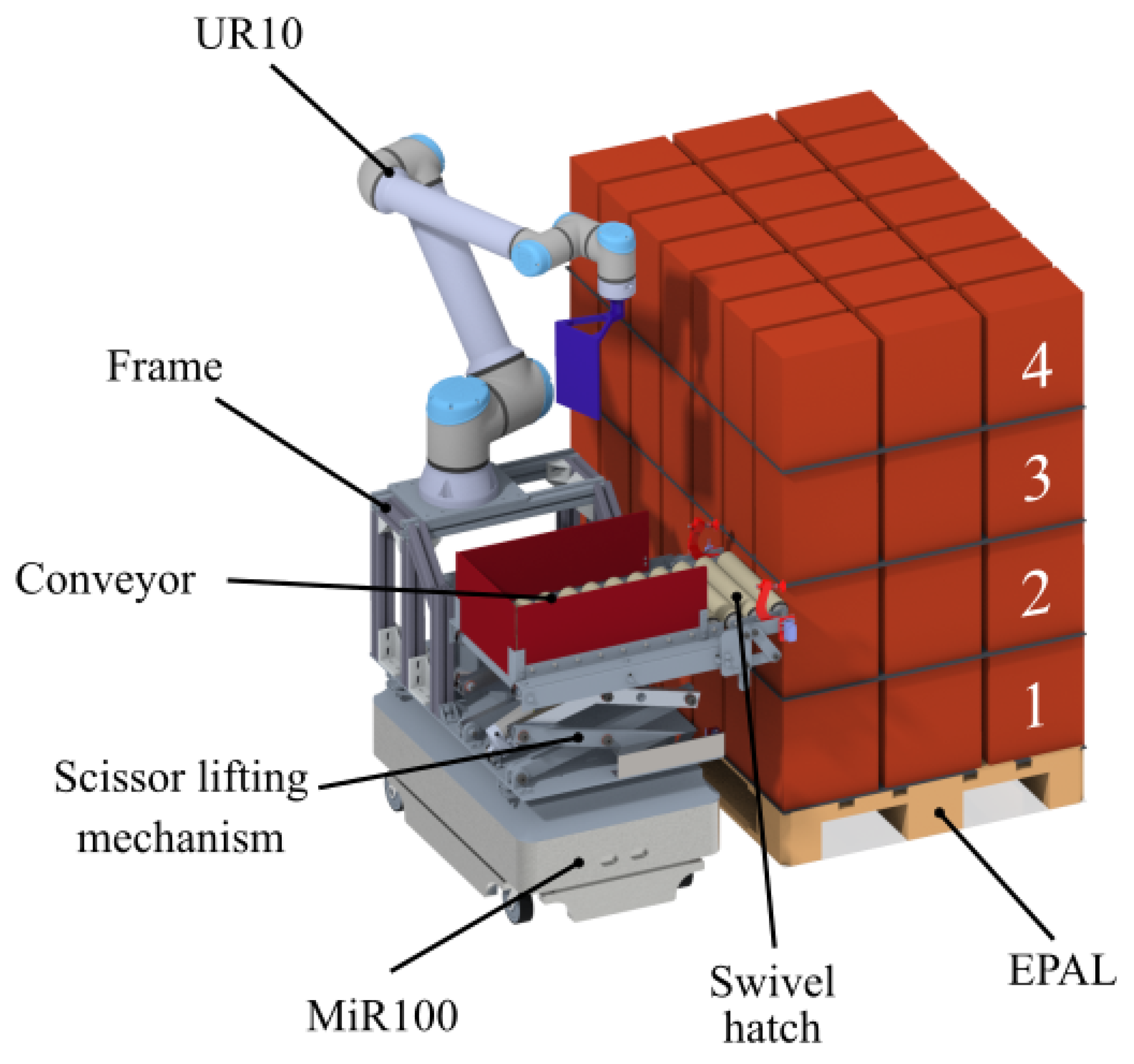

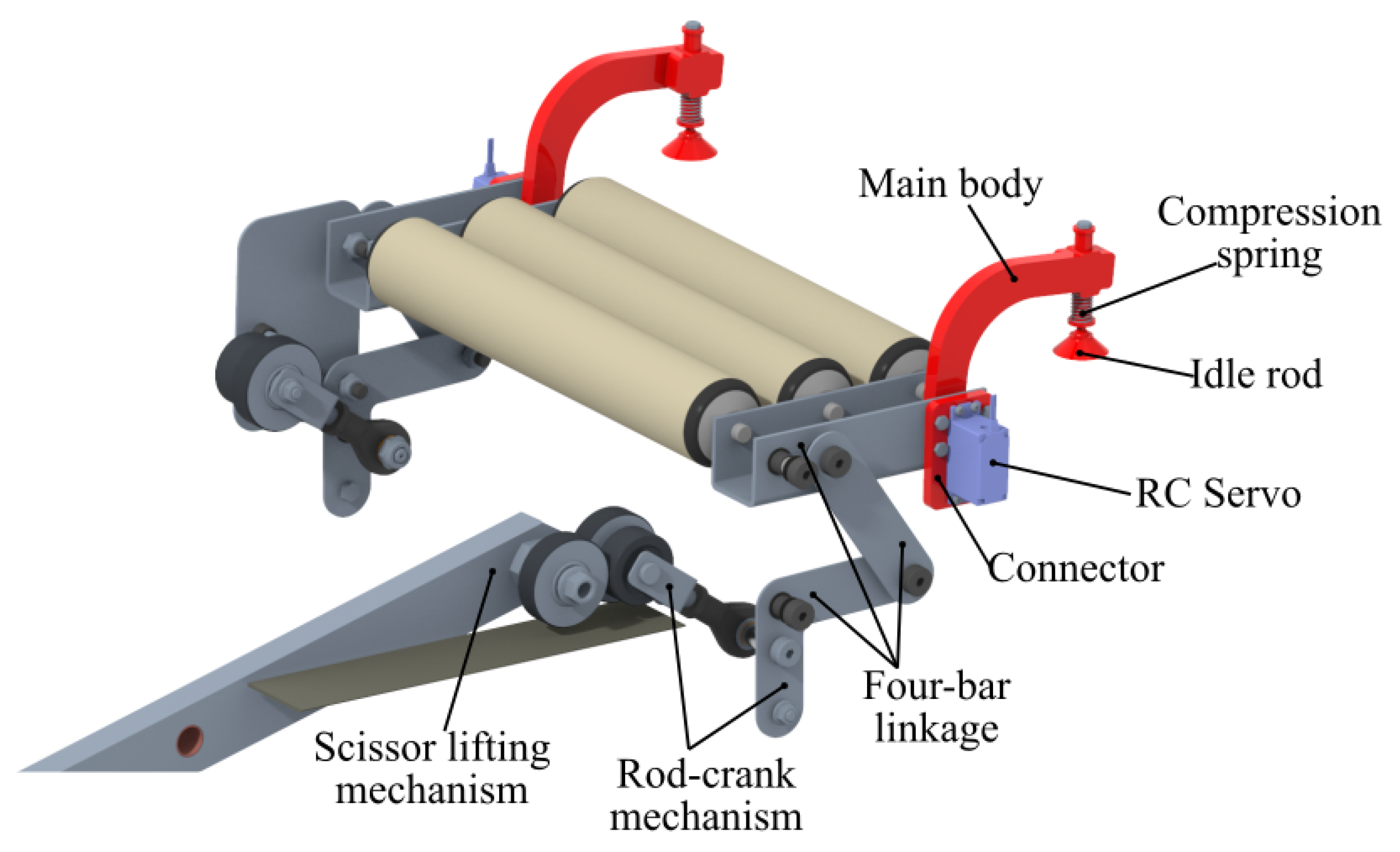

3. Concept and Mechanical Design

- Robot’s self-localization within the workspace;

- Autonomous navigation towards the desired pallet;

- Pose detection of boxes on the top layer of the pallet;

- Extraction of boxes from the pallet and placement aboard the robot.
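The four tasks listed above presumably run in the order given for each pallet visit. As a minimal sketch of that sequencing (the `Task` enum, the `run_cycle` function, and the stub handlers are hypothetical names introduced for illustration, not part of the described system):

```python
from enum import Enum


class Task(Enum):
    """Tasks from the list above; the identifiers are illustrative assumptions."""
    SELF_LOCALIZATION = 1
    NAVIGATION_TO_PALLET = 2
    BOX_POSE_DETECTION = 3
    BOX_EXTRACTION = 4


def run_cycle(handlers):
    """Run each task in listed order; stop at the first handler that fails.

    `handlers` maps each Task to a zero-argument callable returning True on
    success. Returns the list of tasks that completed.
    """
    completed = []
    for task in Task:  # Enum iteration follows definition order
        if not handlers[task]():
            break
        completed.append(task)
    return completed


if __name__ == "__main__":
    # Stub handlers that always succeed, only to show the ordering.
    stubs = {task: (lambda: True) for task in Task}
    print([t.name for t in run_cycle(stubs)])
```

Stopping at the first failed task is an assumed design choice; a real system might retry or re-plan instead.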

4. Simulations

| Procedure 1: one-box dragging motion sequence, shovel-shaped tool |
|---|
| Point-to-point motion to box target frame |
| Linear downward approaching movement (200 mm) |
| Linear dragging movement (600 mm) |
| Linear upward movement (300 mm) |
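Procedure 1 can be written down as plain data, which makes the linear travel per box easy to compute. The sketch below is illustrative only: the `Motion` class, the `PROCEDURE_1` list, and `linear_travel_mm` are assumed names, and the point-to-point move carries no length because the procedure does not give one.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Motion:
    kind: str                     # "ptp" or "linear"
    description: str
    distance_mm: Optional[float]  # None when the procedure gives no length


# Procedure 1 as listed above (shovel-shaped tool, one box).
PROCEDURE_1 = [
    Motion("ptp", "move to box target frame", None),
    Motion("linear", "downward approach", 200.0),
    Motion("linear", "drag", 600.0),
    Motion("linear", "upward movement", 300.0),
]


def linear_travel_mm(procedure):
    """Sum the lengths of the linear segments; moves without a length are skipped."""
    return sum(m.distance_mm for m in procedure if m.distance_mm is not None)


print(linear_travel_mm(PROCEDURE_1))  # 1100.0
```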

- Tj is the time required to move the AMR from the initial pallet to the storage pallet;

- Ts = 1 s is the time required to move the AMR from the current column of the grid to the next.

| Procedure 2: one-box picking/placing, gripper tool |
|---|
| Point-to-point motion to box target frame |
| Linear downward approaching movement (200 mm) |
| Close the gripper |
| Linear upward approaching movement (200 mm) |
| Linear movement (600 mm) |
| Linear downward approaching movement (200 mm) |
| Open the gripper |
| Linear upward movement (300 mm) |
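Procedure 2 interleaves gripper commands with linear moves, so a quick sanity check is that every close is followed by an open and that the cycle ends with the gripper open. The following is a sketch under assumptions: the action labels, `check_gripper_sequence`, and `linear_travel_mm` are hypothetical names, and the gripper is assumed to start open.

```python
# Procedure 2 as listed above: (action label, length in mm or None).
PROCEDURE_2 = [
    ("ptp", None),
    ("linear_down", 200),
    ("close_gripper", None),
    ("linear_up", 200),
    ("linear", 600),
    ("linear_down", 200),
    ("open_gripper", None),
    ("linear_up", 300),
]


def check_gripper_sequence(procedure):
    """Return True if close/open commands alternate and the gripper ends open.

    Assumes the gripper is open when the procedure starts.
    """
    closed = False
    for action, _ in procedure:
        if action == "close_gripper":
            if closed:
                return False
            closed = True
        elif action == "open_gripper":
            if not closed:
                return False
            closed = False
    return not closed


def linear_travel_mm(procedure):
    """Sum the stated lengths of all linear moves."""
    return sum(length for _, length in procedure if length is not None)


print(check_gripper_sequence(PROCEDURE_2))  # True
print(linear_travel_mm(PROCEDURE_2))        # 1500
```

The stated linear travel adds up to 1500 mm per box, against 200 + 600 + 300 = 1100 mm for the dragging sequence of Procedure 1.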

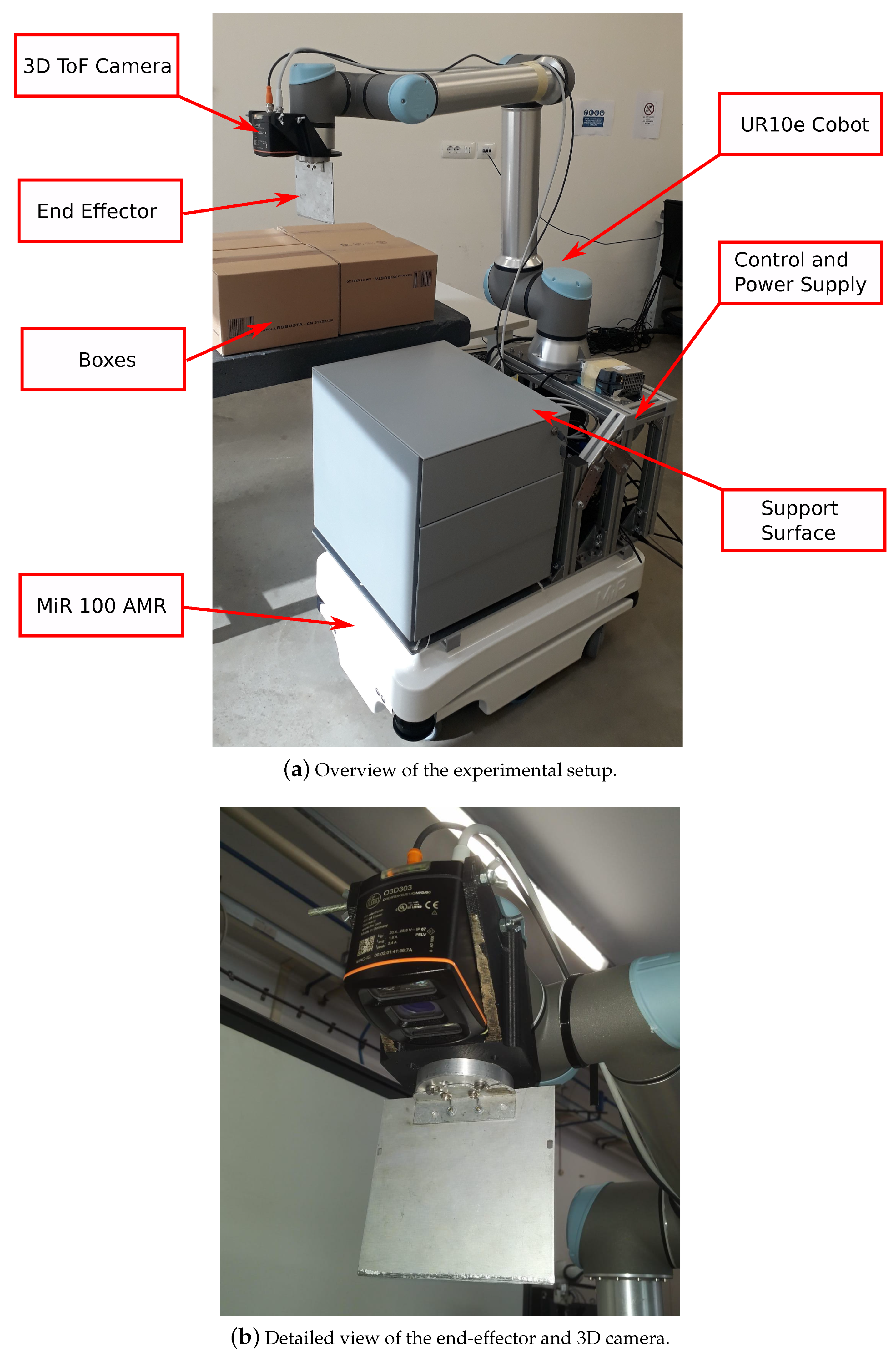

5. Experimental Setup, Perception and Control System

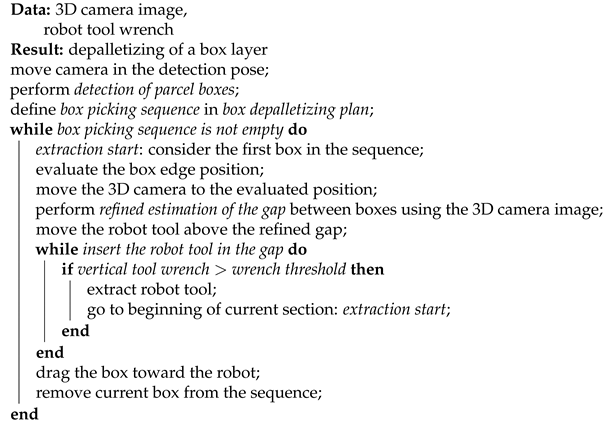

| Algorithm 1: Robotized Depalletizing Algorithm |

|

5.1. Detection of Parcel Boxes

5.1.1. Edge Detection and Candidate Boxes Computation

5.1.2. Genetic Optimization Algorithm

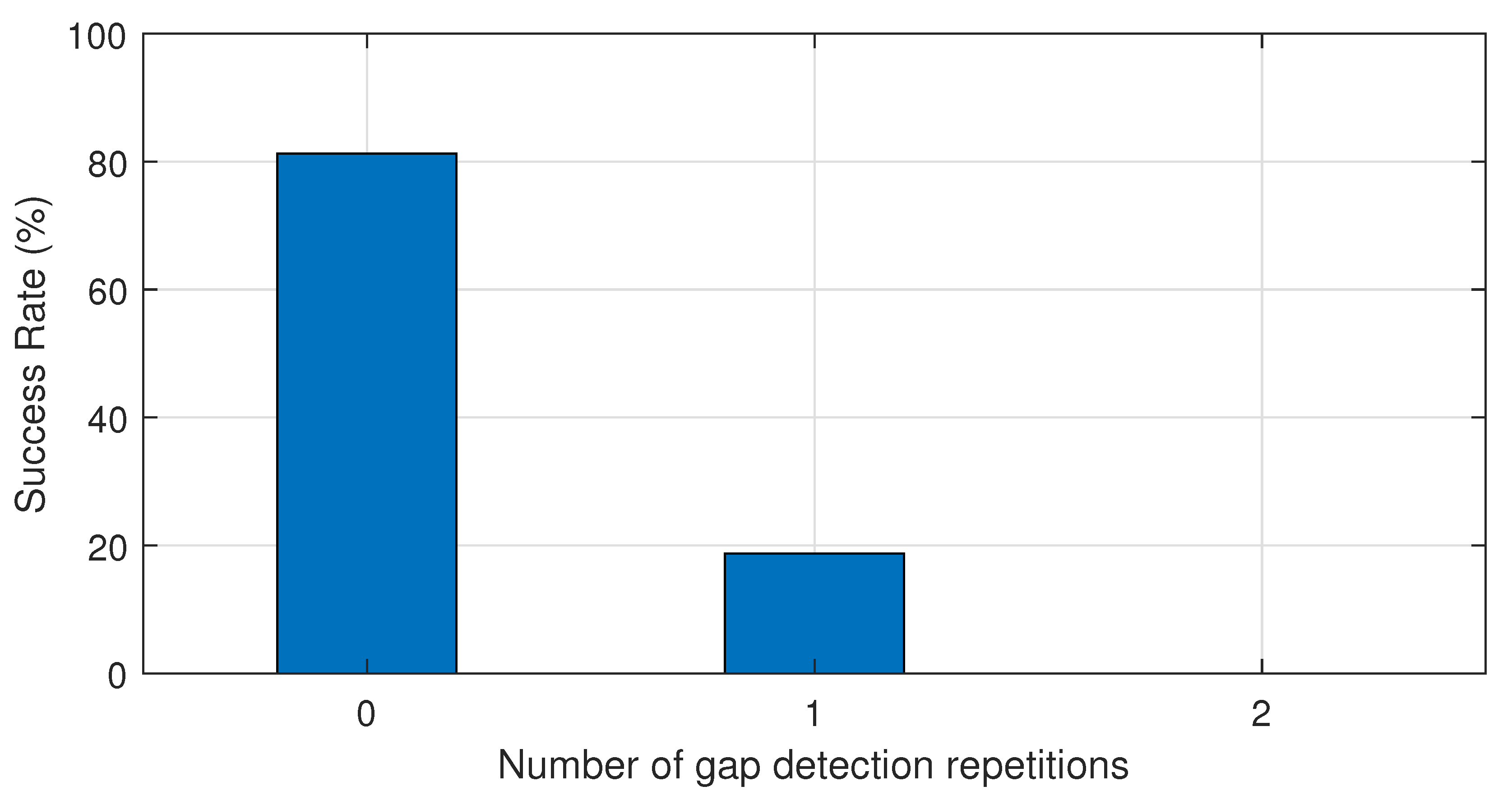

5.2. Refined Estimation of the Gap between Two Boxes

5.3. Controller Design

6. Experimental Results

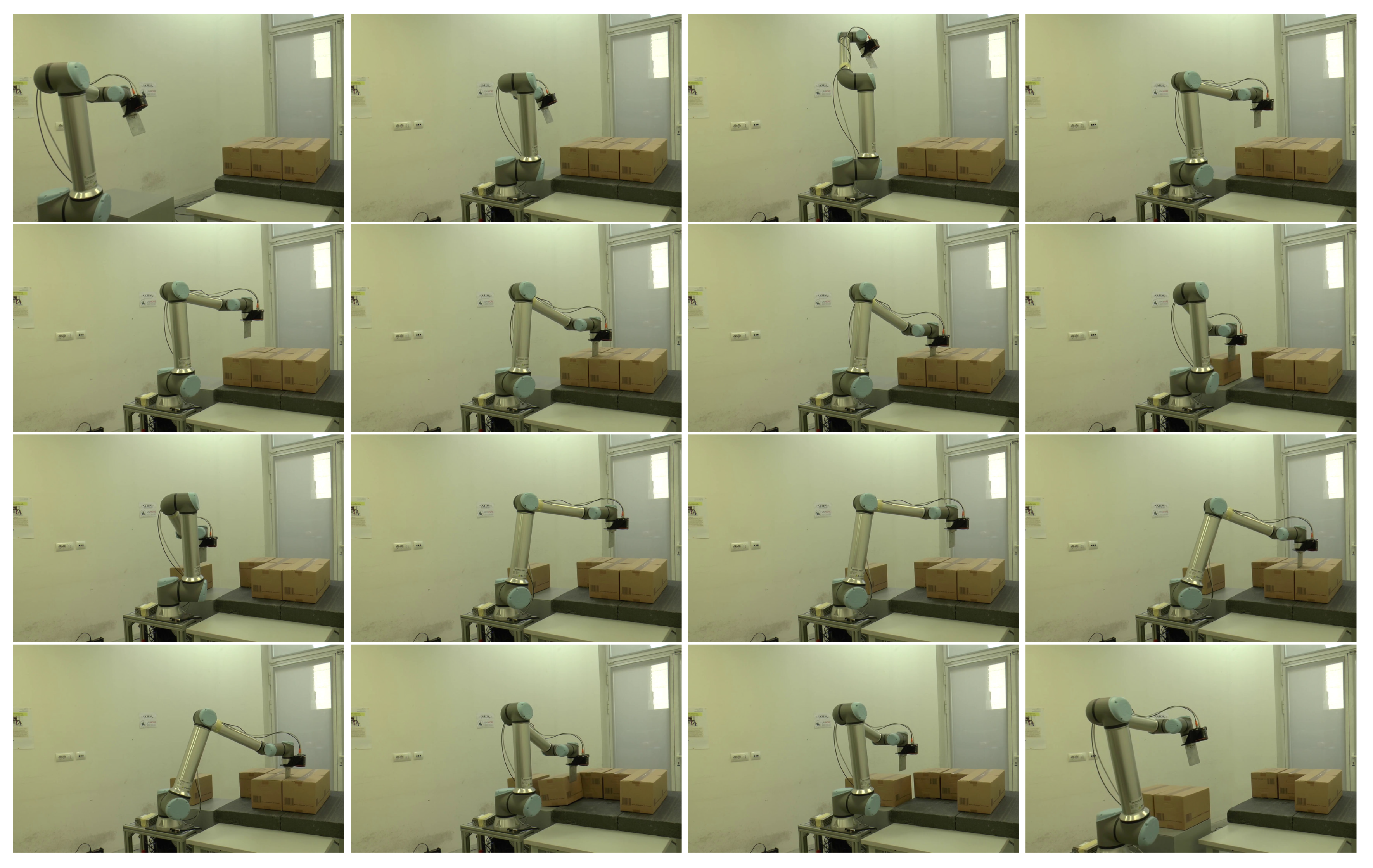

6.1. 2-Boxes Depalletizing and Transportation

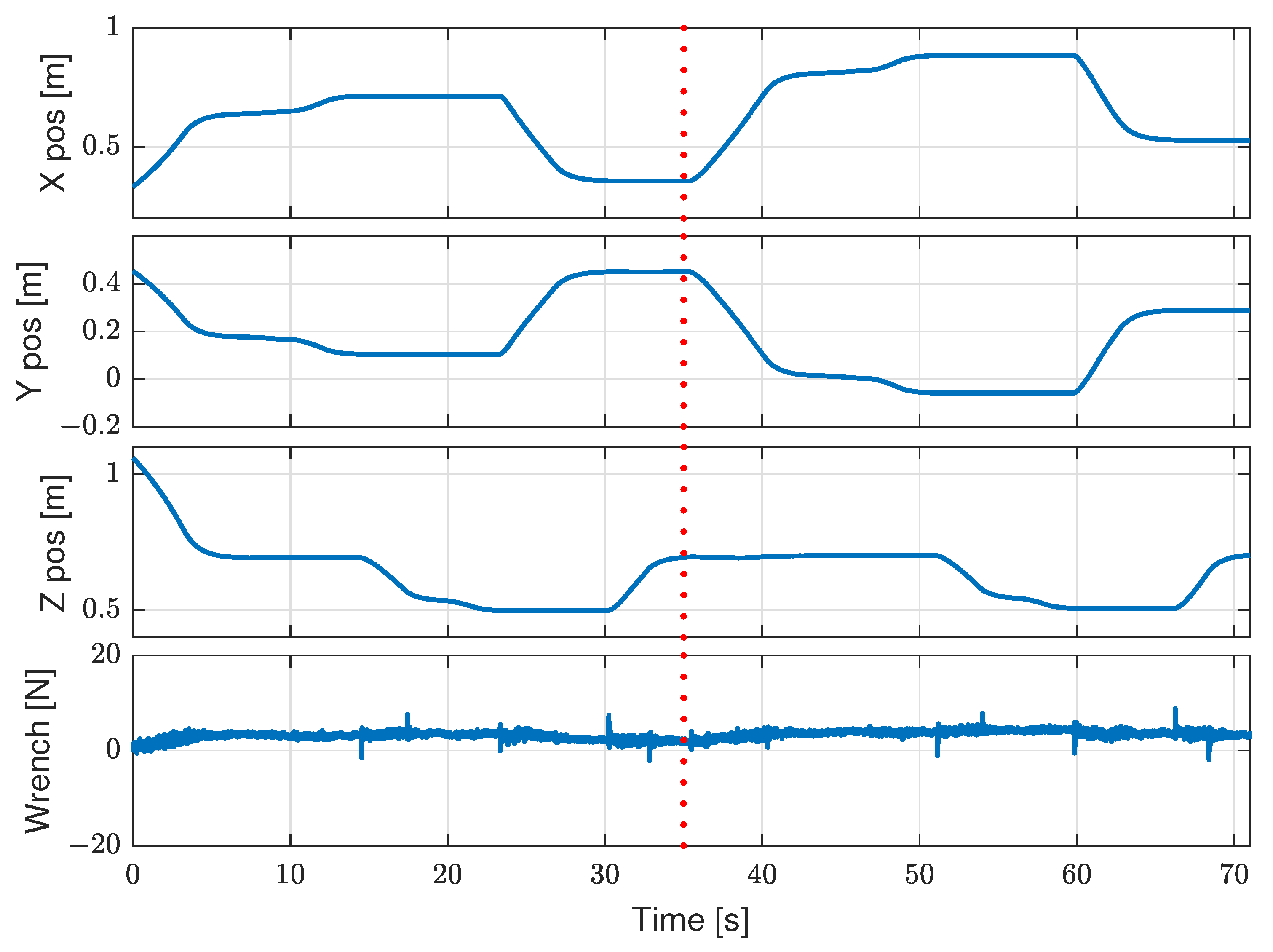

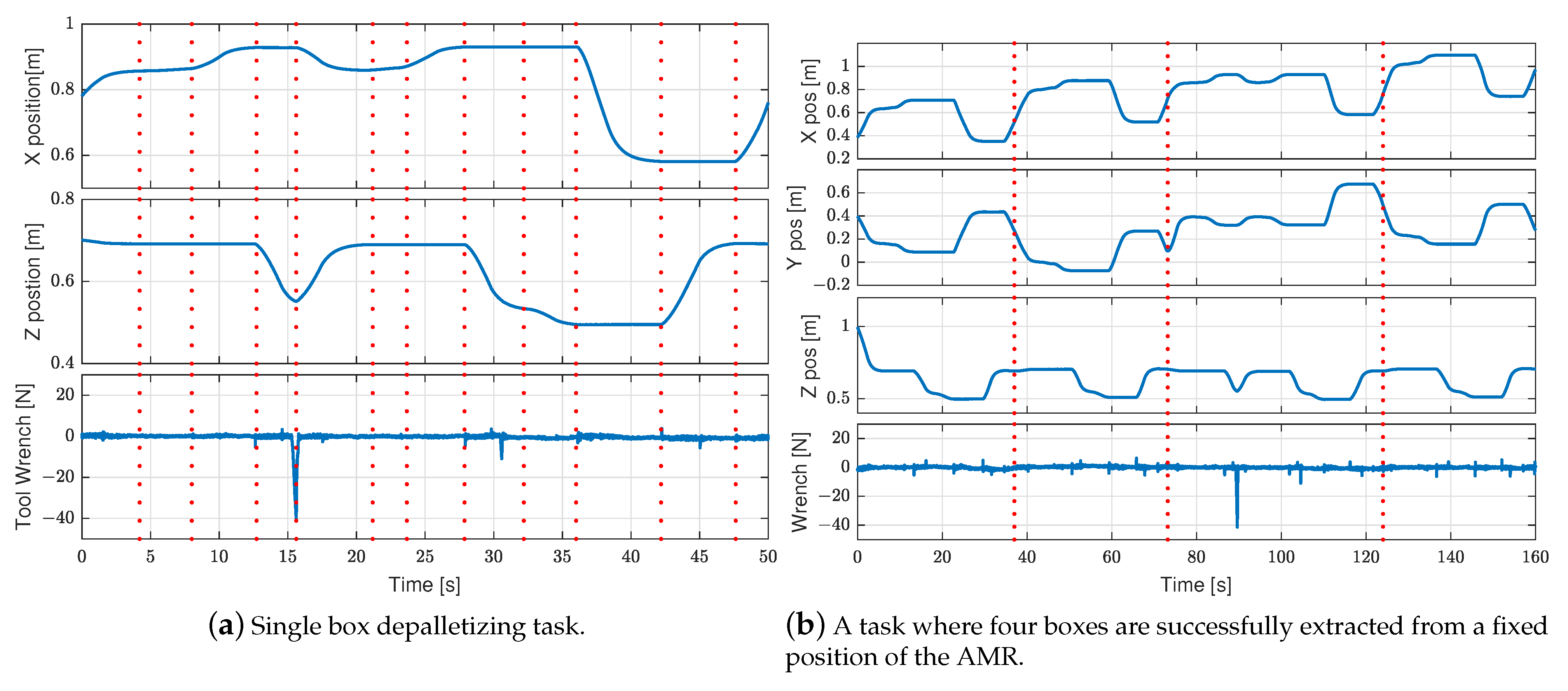

6.2. Single Box Extraction

6.3. Complete Layer Depalletizing

7. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest


| Box | Motion | Loading time (s) | Unloading time (s) |
|---|---|---|---|
| Box 1 | 1 | 5.75 | 5.55 |
| | 2 | 1.04 | 1.04 |
| | 3 | 3.10 | 3.05 |
| | 4 | 1.55 | 1.55 |
| Partial | | sTl1 = 11.44 s | sTu1 = 11.19 s |
| Box 2 | 1 | 5.25 | 3.55 |
| | 2 | 1.04 | 1.04 |
| | 3 | 3.10 | 3.05 |
| | 4 | 1.55 | 1.6 |
| Total | | sTl = 22.38 s | sTu = 20.43 s |
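The partial and total times in the table above are the sums of the per-motion entries. A short check, offered as a sketch (the dictionary layout and the `phase_total` helper are assumptions made for illustration), reproduces the four printed figures:

```python
import math

# Per-motion times in seconds, copied from the table above.
LOADING = {"Box 1": [5.75, 1.04, 3.10, 1.55], "Box 2": [5.25, 1.04, 3.10, 1.55]}
UNLOADING = {"Box 1": [5.55, 1.04, 3.05, 1.55], "Box 2": [3.55, 1.04, 3.05, 1.6]}


def phase_total(times_by_box):
    """Sum all motion times over all boxes for one phase."""
    return sum(sum(times) for times in times_by_box.values())


assert math.isclose(sum(LOADING["Box 1"]), 11.44)    # sTl1
assert math.isclose(sum(UNLOADING["Box 1"]), 11.19)  # sTu1
assert math.isclose(phase_total(LOADING), 22.38)     # sTl
assert math.isclose(phase_total(UNLOADING), 20.43)   # sTu
print(round(phase_total(LOADING), 2), round(phase_total(UNLOADING), 2))  # 22.38 20.43
```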

| Box | Motion | Loading time (s) | Unloading time (s) |
|---|---|---|---|
| Box 1 | 1 | 4.25 | 5.90 |
| | 2 | 1.05 | 1.05 |
| | 4 | 1.05 | 1.05 |
| | 5 | 3.09 | 3.10 |
| | 7 | 1.05 | 1.05 |
| | 8 | 1.55 | 1.54 |
| Partial | | vTl1 = 12.04 s | vTu1 = 13.69 s |
| Box 2 | 1 | 5.65 | 3.35 |
| | 2 | 1.05 | 1.05 |
| | 4 | 1.05 | 1.05 |
| | 5 | 3.10 | 3.10 |
| | 7 | 1.05 | 1.10 |
| | 8 | 1.54 | 1.54 |
| Total | | vTl = 23.48 s | vTu = 24.88 s |
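The same row-sum check can be applied to the table above. The sketch below uses assumed helper names (`row_sums`, `mismatches`) and an assumed tolerance of 0.005 s. With the values as transcribed here, three of the four printed figures are reproduced, while the loading rows sum to 25.48 s against the printed vTl = 23.48 s; the table alone does not show which entry is responsible for the difference.

```python
# Per-motion times in seconds, copied from the table above.
LOADING = {
    "Box 1": [4.25, 1.05, 1.05, 3.09, 1.05, 1.55],
    "Box 2": [5.65, 1.05, 1.05, 3.10, 1.05, 1.54],
}
UNLOADING = {
    "Box 1": [5.90, 1.05, 1.05, 3.10, 1.05, 1.54],
    "Box 2": [3.35, 1.05, 1.05, 3.10, 1.10, 1.54],
}
# Partial and total figures as printed in the table.
PRINTED = {"vTl1": 12.04, "vTu1": 13.69, "vTl": 23.48, "vTu": 24.88}


def row_sums():
    """Recompute the partial and total times from the per-motion rows."""
    return {
        "vTl1": sum(LOADING["Box 1"]),
        "vTu1": sum(UNLOADING["Box 1"]),
        "vTl": sum(sum(times) for times in LOADING.values()),
        "vTu": sum(sum(times) for times in UNLOADING.values()),
    }


def mismatches(tol=0.005):
    """Return recomputed values that differ from the printed ones by more than tol."""
    return {
        key: round(value, 2)
        for key, value in row_sums().items()
        if abs(value - PRINTED[key]) > tol
    }


print(mismatches())  # {'vTl': 25.48}
```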

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Aleotti, J.; Baldassarri, A.; Bonfè, M.; Carricato, M.; Chiaravalli, D.; Di Leva, R.; Fantuzzi, C.; Farsoni, S.; Innero, G.; Lodi Rizzini, D.; Melchiorri, C.; Monica, R.; Palli, G.; Rizzi, J.; Sabattini, L.; Sampietro, G.; Zaccaria, F. Toward Future Automatic Warehouses: An Autonomous Depalletizing System Based on Mobile Manipulation and 3D Perception. Appl. Sci. 2021, 11, 5959. https://doi.org/10.3390/app11135959
