Supervised Learning Based Peripheral Vision System for Immersive Visual Experiences for Extended Display

Abstract

1. Introduction

- It offers only limited content and a limited field of view, because the limited screen size and image formats leave no room for a peripheral vision system.

- It restricts the visual experience to the virtual scene boxed in the frame of the display and neglects the physical environment surrounding the user.

- (1) The design of a system that augments the area surrounding the TV with a projected context image, creating immersive visual experiences.

- (2) Replacing the traditional video display with an immersive visual experience at low cost, using a TV and a projector, components that are readily available.

- (3) Quantitative and qualitative evaluation of our system against state-of-the-art approaches.

2. Related Prior Research

- (1)

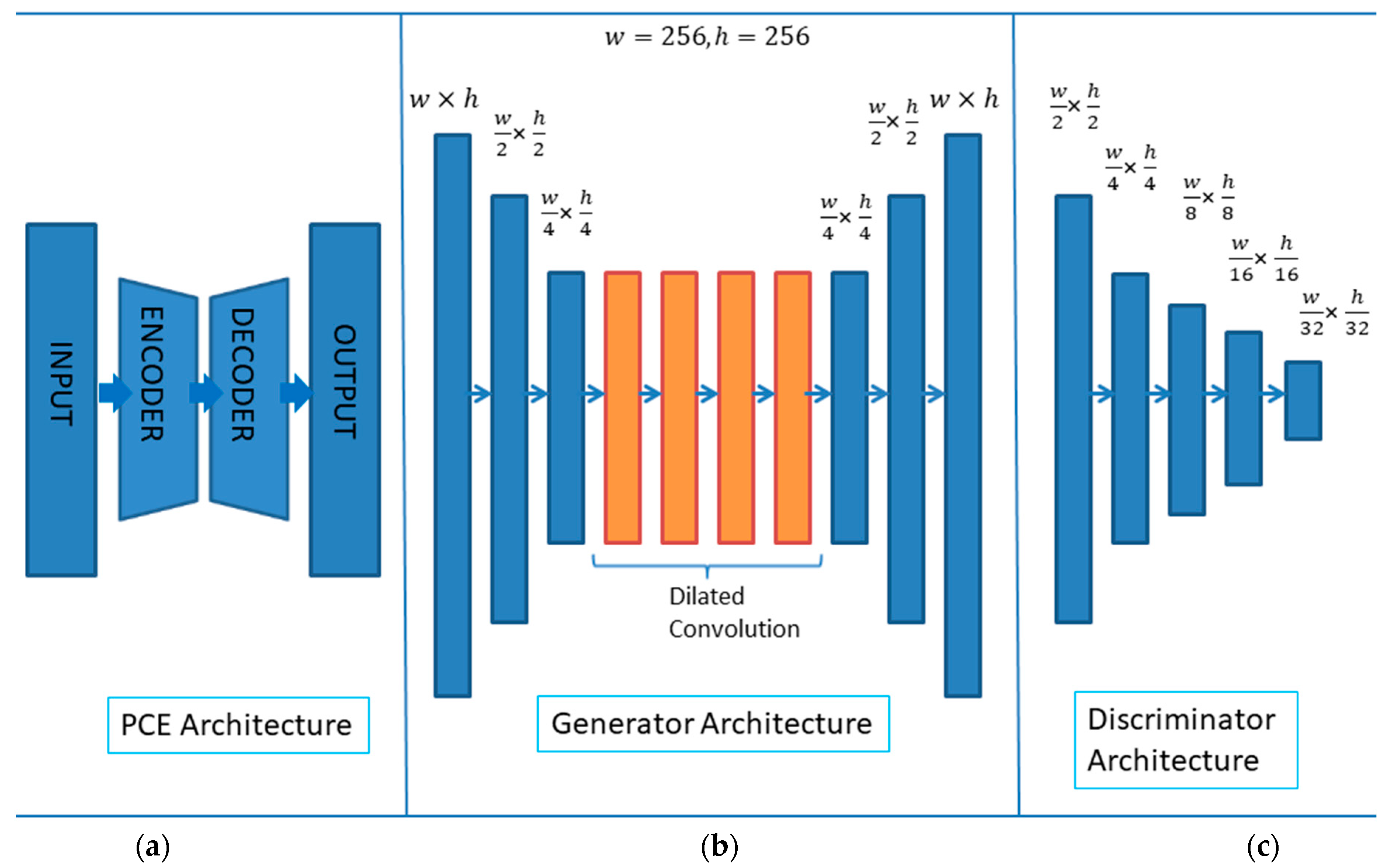

- Utilization of the PCE network for peripheral vision system (PCE was not previously used for peripheral vision system).

- (2)

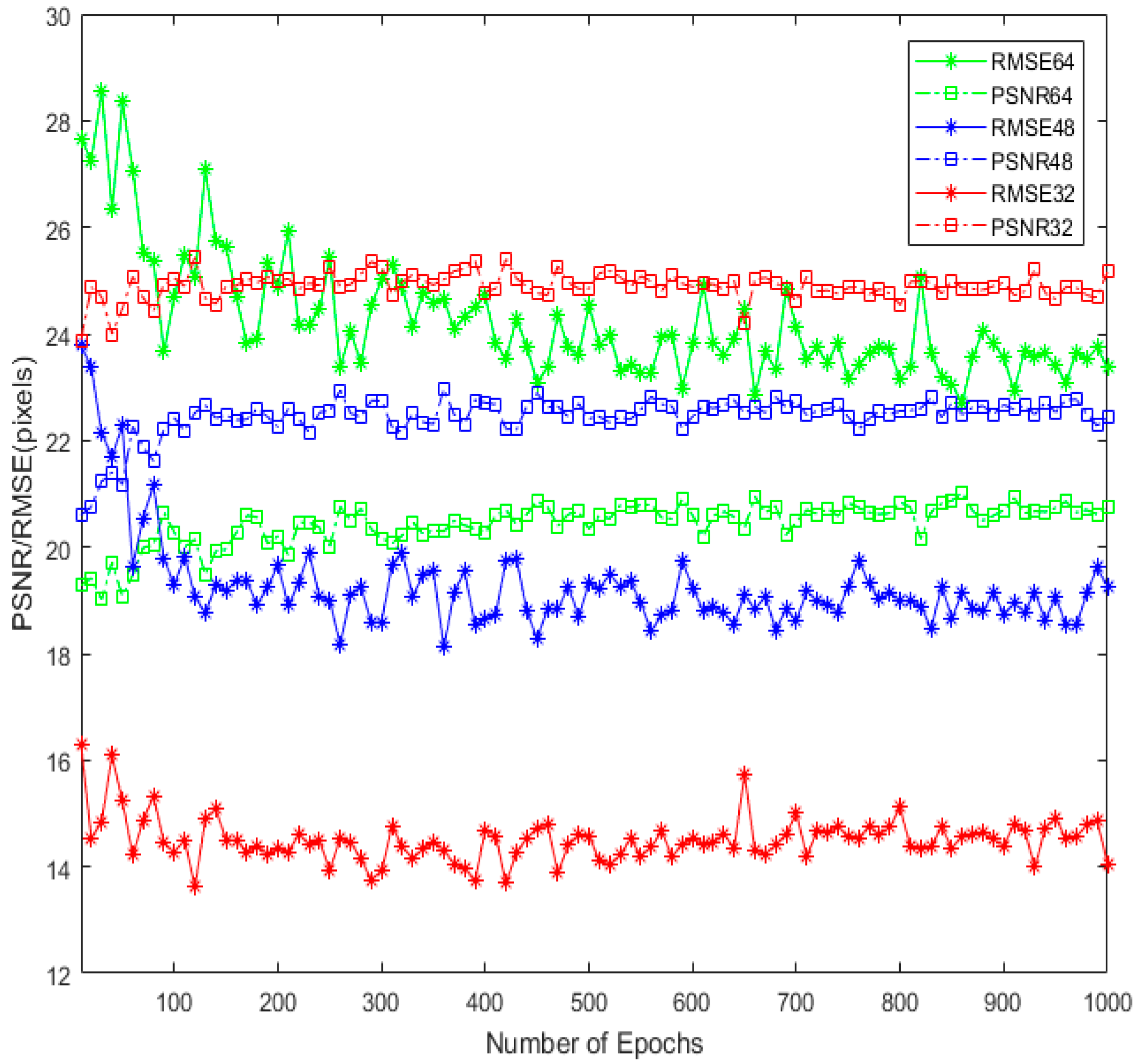

- We performed the investigation of the effect of different sizes of the extended area on the network’s accuracy and reported that larger size of the extended area results in less PSNR value (see Table 3).

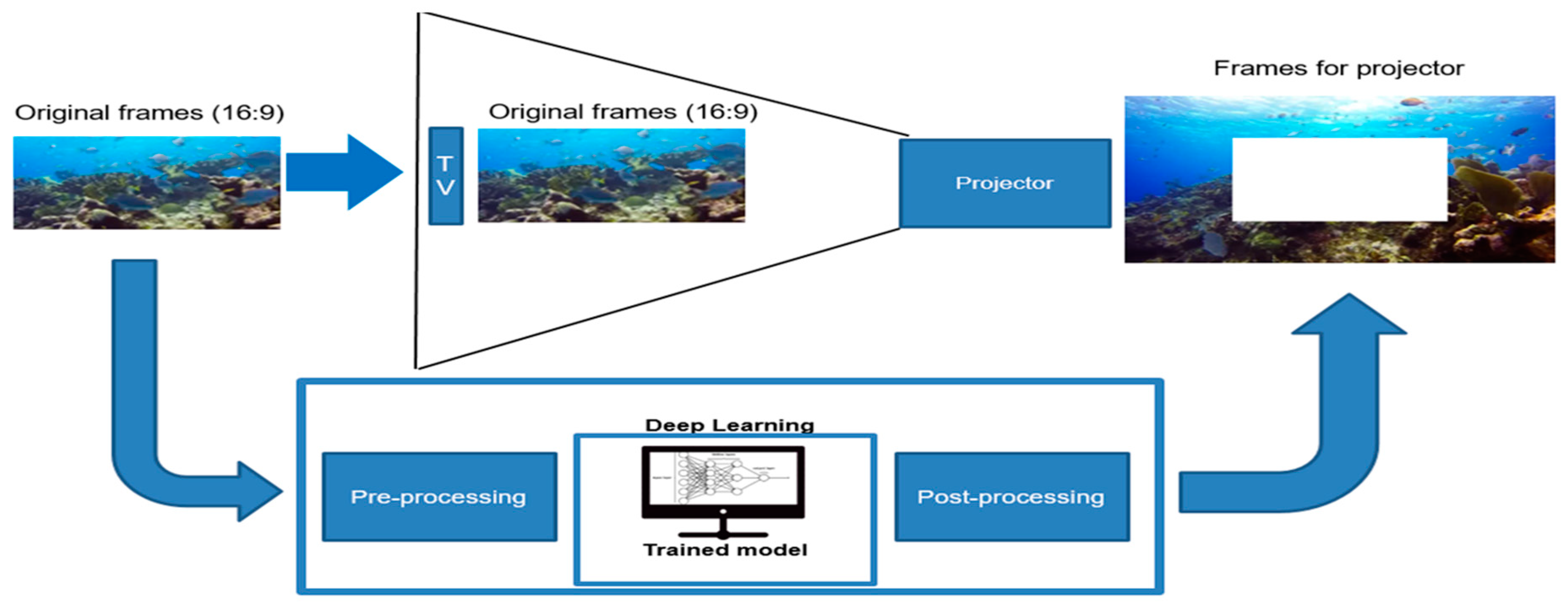

3. Proposed Approach

3.1. Focus + Context Design Scheme

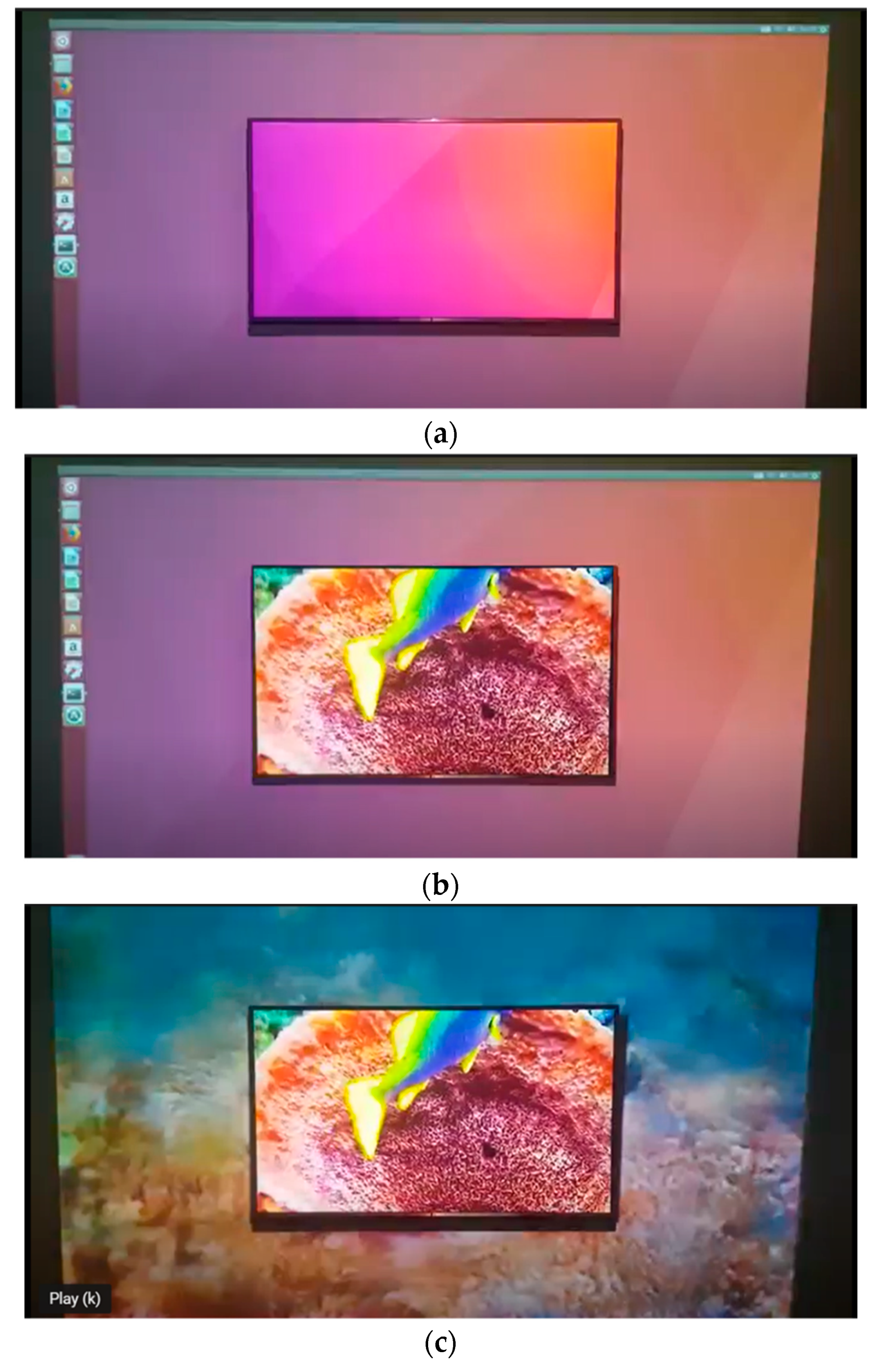

3.2. Proposed Peripheral System

3.3. PCE Architecture

3.4. Mathematical Expression for the Loss Function

4. Experiments and Results

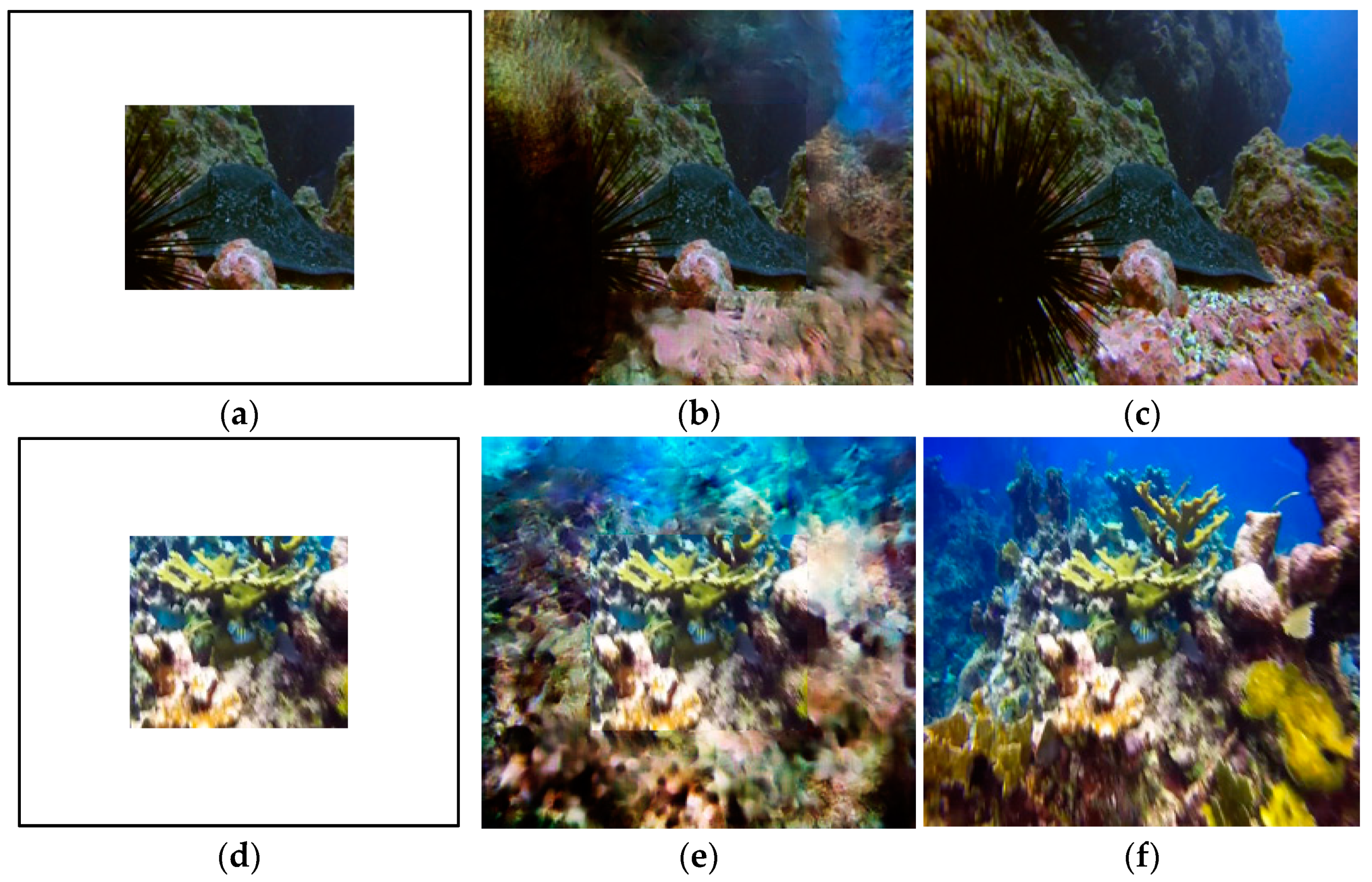

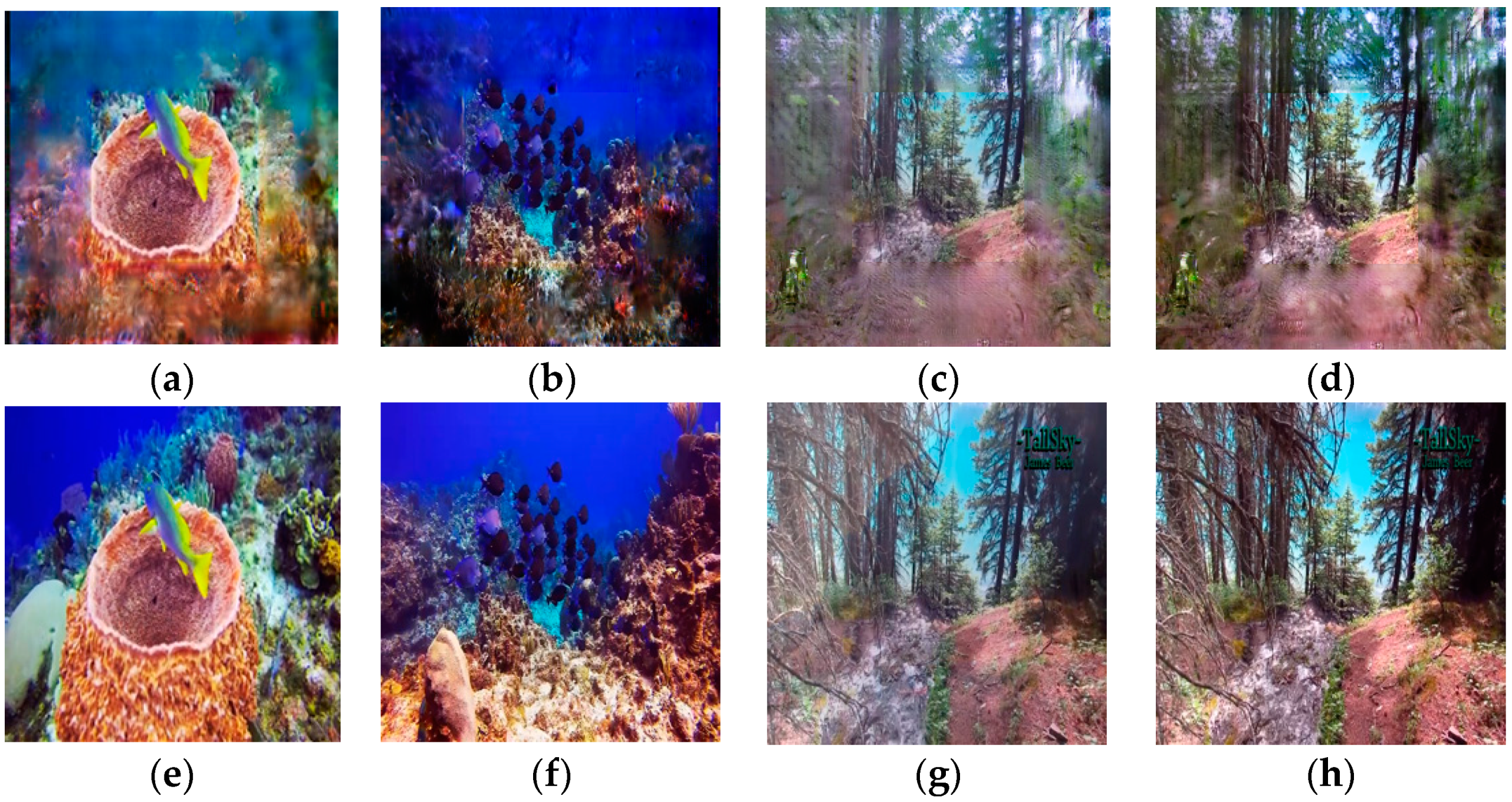

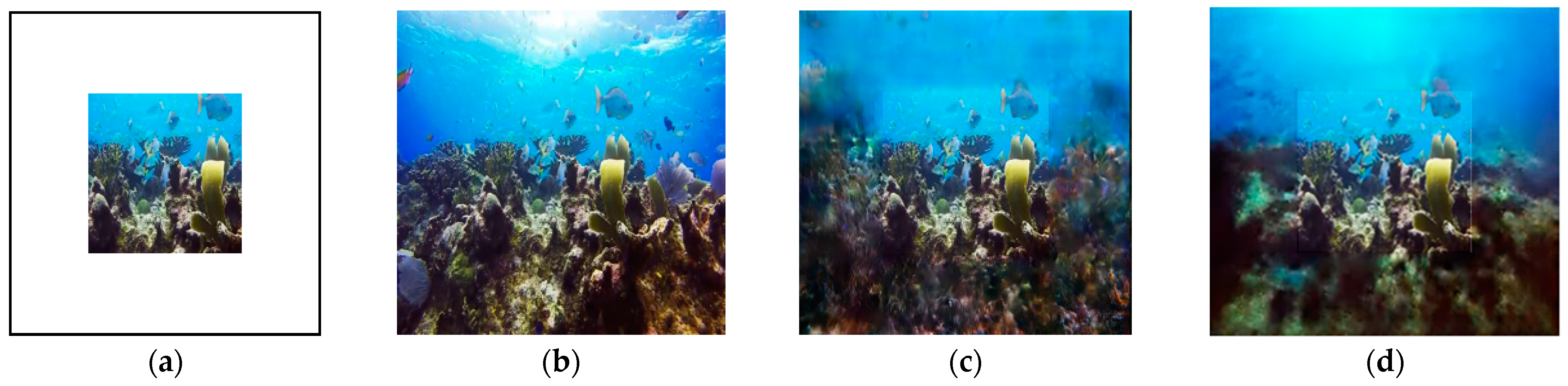

4.1. PCE for Ocean and Forest Dataset

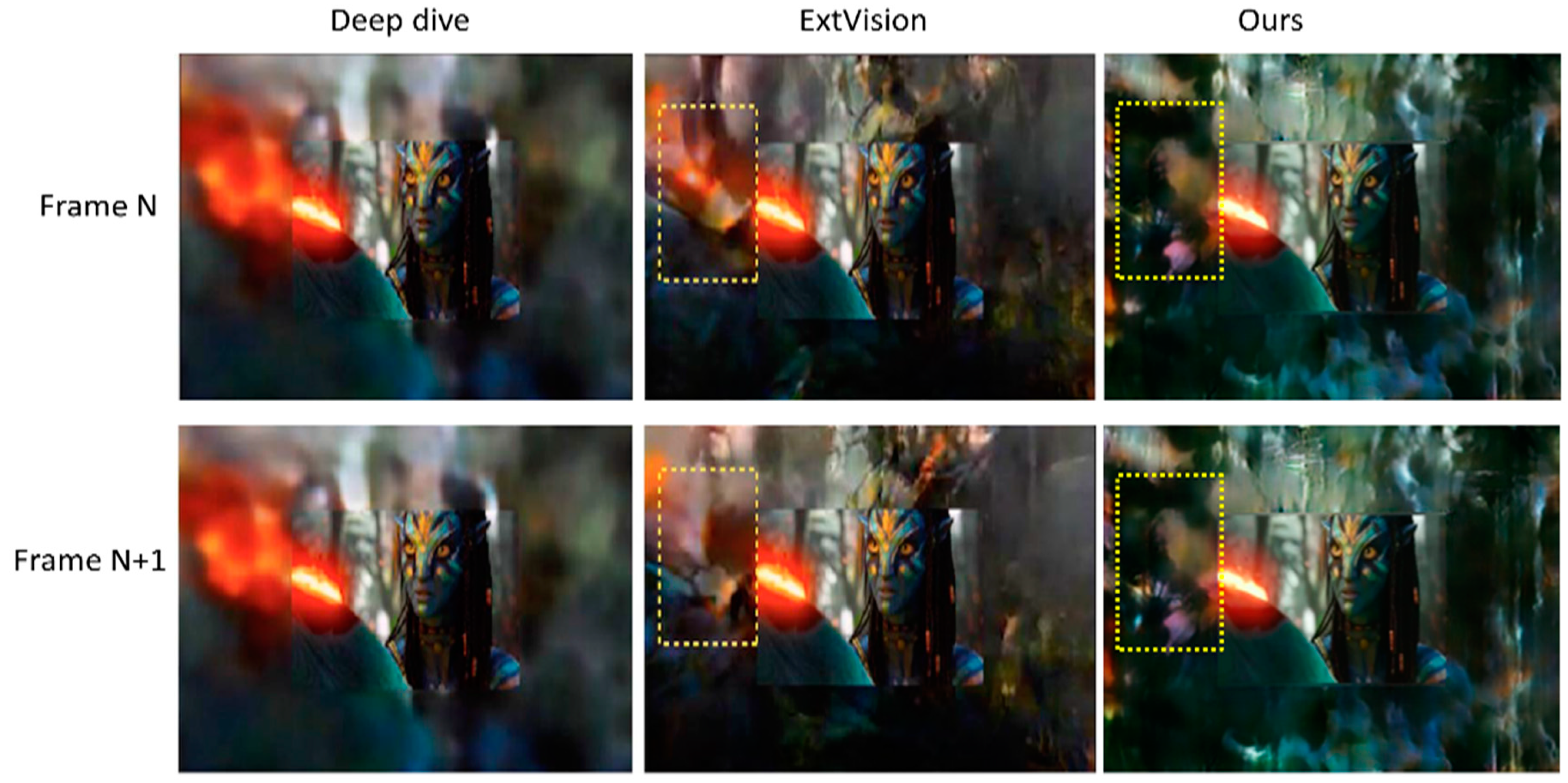

4.2. Comparison with the State of the Art

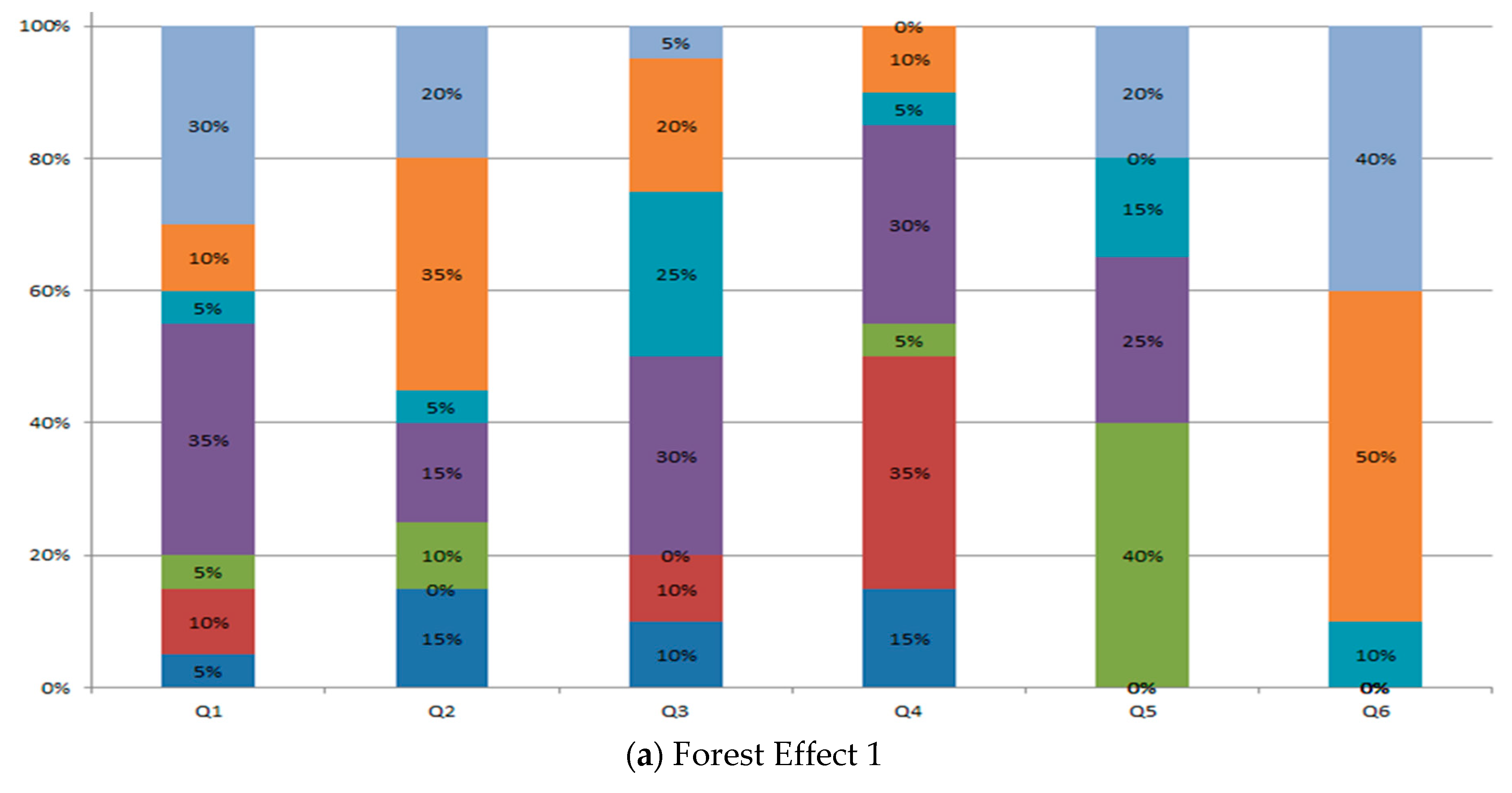

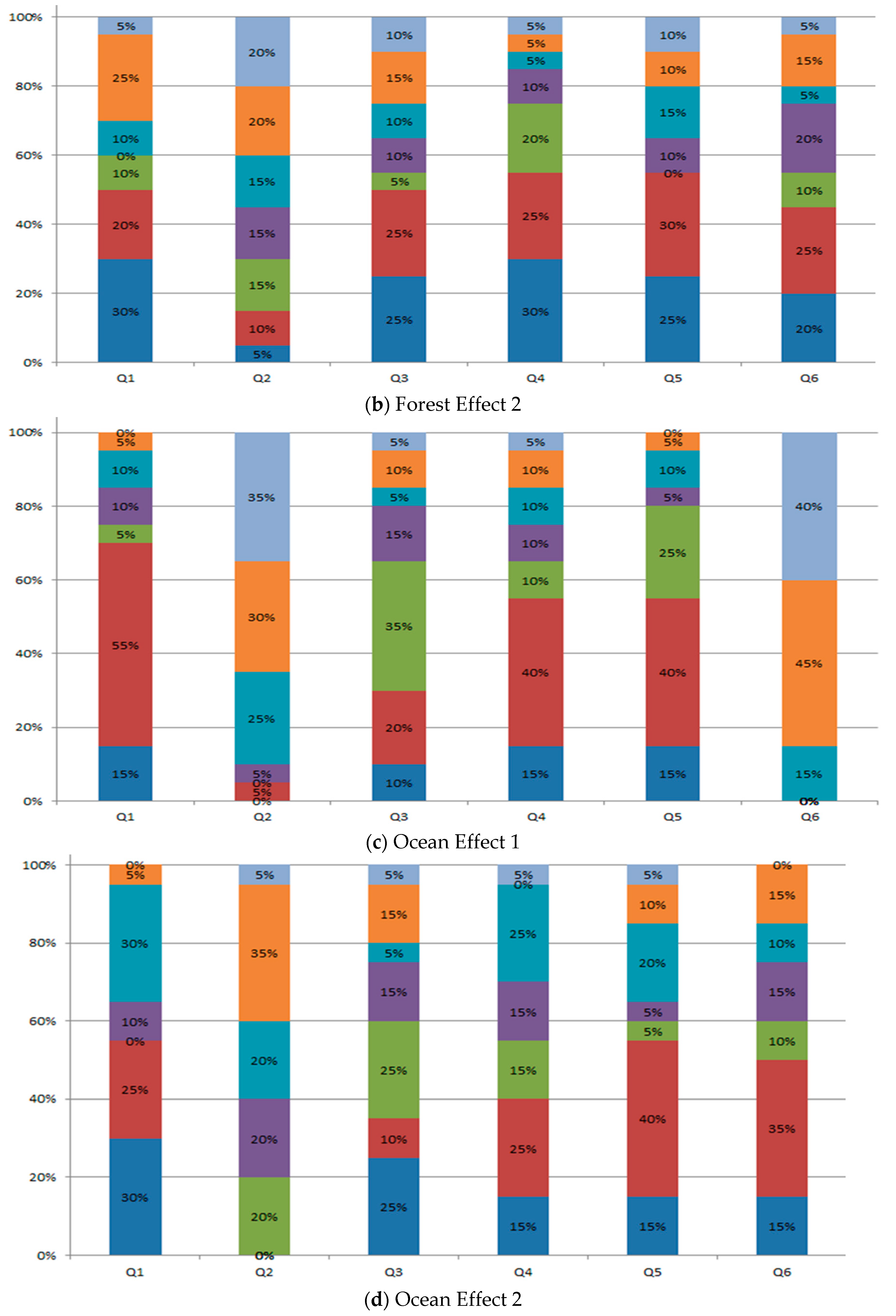

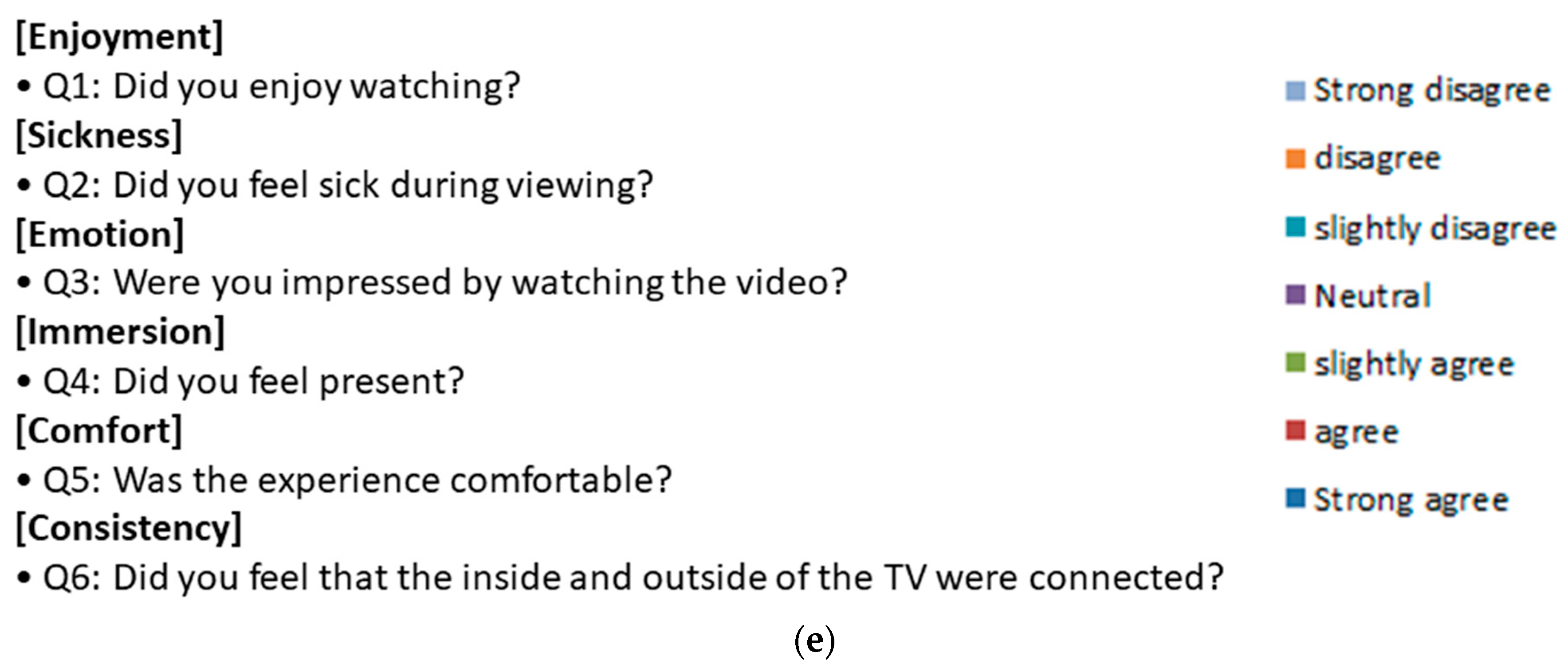

4.3. User Study

- M = Mean score

- Scale = 1 to 7 (SD = 1, strongly disagree; D = 2, disagree; SLD = 3, slightly disagree; N = 4, neutral; SLA = 5, slightly agree; A = 6, agree; SA = 7, strongly agree)

- Response = number of responses at each scale point, from strongly disagree to strongly agree

- n = number of participants.

- Q1: Did you enjoy watching?

- Q2: Did you feel sick during viewing?

- Q3: Were you impressed by watching the video?

- Q4: Did you feel present?

- Q5: Was the experience comfortable?

- Q6: Did you feel that the inside and outside of the TV were connected?

| Measurement | Dataset | Q1 | Q2 | Q3 | Q4 | Q5 | Q6 |
|---|---|---|---|---|---|---|---|
| Mean | Forest Effect 1 | 3 | 3 | 4 | 5 | 4 | 2 |
| Mean | Forest Effect 2 | 5 | 3 | 5 | 5 | 5 | 5 |
| Mean | Ocean Effect 1 | 5 | 2 | 5 | 5 | 5 | 2 |
| Mean | Ocean Effect 2 | 5 | 3 | 5 | 5 | 5 | 5 |
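The mean score M in the table above can be obtained from the per-option response counts described in the legend. The following is a minimal sketch of that arithmetic; the function name `likert_mean` and the response counts are hypothetical illustrations, since the source reports only the resulting means.

```python
def likert_mean(counts):
    """Mean score on a 1-to-7 scale.

    counts[i] is the number of participants who chose score i + 1,
    ordered from strongly disagree (1) to strongly agree (7).
    """
    if len(counts) != 7:
        raise ValueError("expected one count per scale point (7 values)")
    n = sum(counts)  # n = number of participants
    if n == 0:
        raise ValueError("no responses")
    total = sum(score * count for score, count in enumerate(counts, start=1))
    return total / n


# Hypothetical response counts for one question (not data from the study).
example_counts = [0, 0, 1, 2, 4, 2, 1]
print("n =", sum(example_counts), "M =", likert_mean(example_counts))
```

When reading such means, note that Q2 asks about feeling sick, so a lower score is the favorable outcome for that question, unlike the other five.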

- “I really enjoyed the videos. This effect can be used in cinemas and living room to enhance the visual experiences.” (positive comment),

- “The videos give dizziness or sickness feeling, either it’s going too fast or something else is an issue.” (negative comment).

- “Colors are vibrant and stimulating. The illumination seems great making visual experiences immersive and entertaining.” (positive comment),

- “The projector resolution seems low disturbing the feeling of emotion and immersion.” (negative comment).

- “The effects are enchanting and attractive.” (positive comment),

- “The visual experience causes some kind of flicker movement.” (negative comment).

- “The contrast as well as the resolution seems enhanced. The bigger screen immerses the user fully in the scene, while the smaller screen focuses on the content and delivering the information.”

- “There is a synchronization problem between the video on TV and projected content as the projected video is slower than the one playing on TV, it’s hard to relate to both at a time.”

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| S. No. | Focus + Context Scheme | Merits | Demerits |
|---|---|---|---|
| 1. | F + C Full | The user pays attention to the LED TV screen and the visual experience is enhanced by the peripheral projection on the whole background. | It uses a non-flat, non-white projection surface with radiometric compensation. Limited ability to compensate for the existing surface color. |
| 2. | F + C Edges | Robustness to the ambient light in the room with enhanced optical flow. | Projection of black-and-white edge information instead of colored content. |
| 3. | F + C Seg | This scheme allows projection on a specific area of the background, such as the flat rear wall surrounding the television. | This scheme does not cover the whole background with peripheral projection. |
| 4. | F + C Sel | This scheme allows certain elements to escape the TV screen, creating feelings of surprise and immersion. | This scheme does not cover the whole background with peripheral projection. |

| S. No. | Device | Specifications |
|---|---|---|
| 1. | LED TV | Display Type: LED; Resolution: 3840 × 2160; Display Format: 4K UHD 2160p; Diagonal Size: 54.6 inches; Refresh Rate: True Motion 120 (Refresh Rate 60 Hz) |
| 2. | Digital Projector | Projector Type: DLP projector; Resolution: 1920 × 1080; Brightness: 3500 ANSI lumens; Projection ratio: 1.48 to 1.62:1; Size (W × H × D): 314 × 224 × 100 mm |
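The specifications above are enough for a rough estimate of how wide the projected context image is relative to the TV. The sketch below assumes that the listed projection ratio is the usual throw ratio (projection distance divided by image width) and that the TV panel is 16:9, as its 3840 × 2160 resolution implies. The 3 m projection distance and the function names are hypothetical and are not taken from the source.

```python
import math

INCH_TO_M = 0.0254


def screen_width(diagonal, aspect_w=16, aspect_h=9):
    """Width of a flat screen from its diagonal and aspect ratio (same unit)."""
    return diagonal * aspect_w / math.hypot(aspect_w, aspect_h)


def projected_width(distance, throw_ratio):
    """Projected image width, assuming throw ratio = distance / image width."""
    if throw_ratio <= 0:
        raise ValueError("throw ratio must be positive")
    return distance / throw_ratio


tv_width_m = screen_width(54.6) * INCH_TO_M  # 54.6-inch diagonal from the table
distance_m = 3.0  # hypothetical projector-to-wall distance

for ratio in (1.48, 1.62):  # zoom range listed for the projector
    width_m = projected_width(distance_m, ratio)
    print(f"throw ratio {ratio}: projected width {width_m:.2f} m, "
          f"{width_m / tv_width_m:.2f} x TV width")
```

Under these assumptions the projection is wider than the TV at either end of the zoom range, which is the precondition for surrounding the screen with context imagery.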

| S. No. | Extended Region (pixel) | RMSE (1 fps) | RMSE (30 fps) | PSNR (1 fps) | PSNR (30 fps) |
|---|---|---|---|---|---|
| 1. | 32 | 13.63 | 13.62 | 25.43 | 25.44 |
| 2. | 48 | 17.96 | 18.12 | 23.04 | 22.96 |
| 3. | 64 | 22.51 | 22.68 | 21.08 | 21.01 |
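The RMSE and PSNR columns above are linked by the standard definition PSNR = 20 · log10(peak / RMSE). The sketch below applies that definition with an assumed 8-bit peak value of 255; the source does not state the peak, and the function names are illustrative. With this assumption, the PSNR computed from each reported RMSE (1 fps) lands within a few hundredths of a dB of the reported PSNR, which is consistent with, but does not confirm, an 8-bit intensity range.

```python
import math


def rmse(reference, generated):
    """Root-mean-square error between two equal-length pixel sequences."""
    if len(reference) != len(generated) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_error = sum((r - g) ** 2 for r, g in zip(reference, generated))
    return math.sqrt(squared_error / len(reference))


def psnr_from_rmse(rmse_value, peak=255.0):
    """PSNR in dB for a given RMSE; peak=255 is an assumption (8-bit images)."""
    if rmse_value <= 0:
        return math.inf  # identical images
    return 20.0 * math.log10(peak / rmse_value)


# (extended region in pixels, RMSE at 1 fps, PSNR at 1 fps) from the table.
reported = [(32, 13.63, 25.43), (48, 17.96, 23.04), (64, 22.51, 21.08)]

for region, rmse_value, reported_psnr in reported:
    computed = psnr_from_rmse(rmse_value)
    print(f"region {region}px: computed {computed:.2f} dB, "
          f"reported {reported_psnr:.2f} dB")
```

Because PSNR falls as RMSE grows, the table's trend of rising RMSE with a wider extended region directly produces the falling PSNR.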


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shirazi, M.A.; Uddin, R.; Kim, M.-Y. Supervised Learning Based Peripheral Vision System for Immersive Visual Experiences for Extended Display. Appl. Sci. 2021, 11, 4726. https://doi.org/10.3390/app11114726
