Telelocomotion—Remotely Operated Legged Robots

Abstract

Featured Application

1. Introduction

- (I)

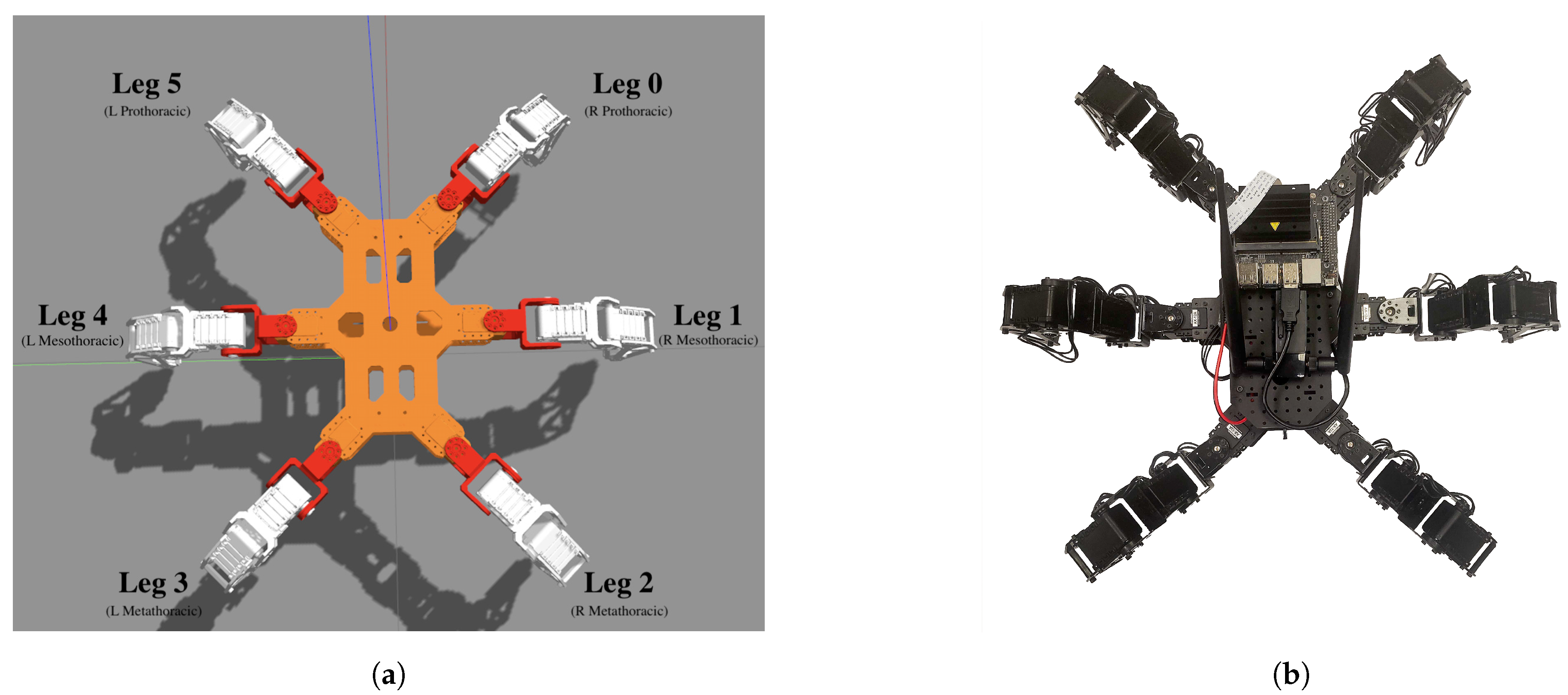

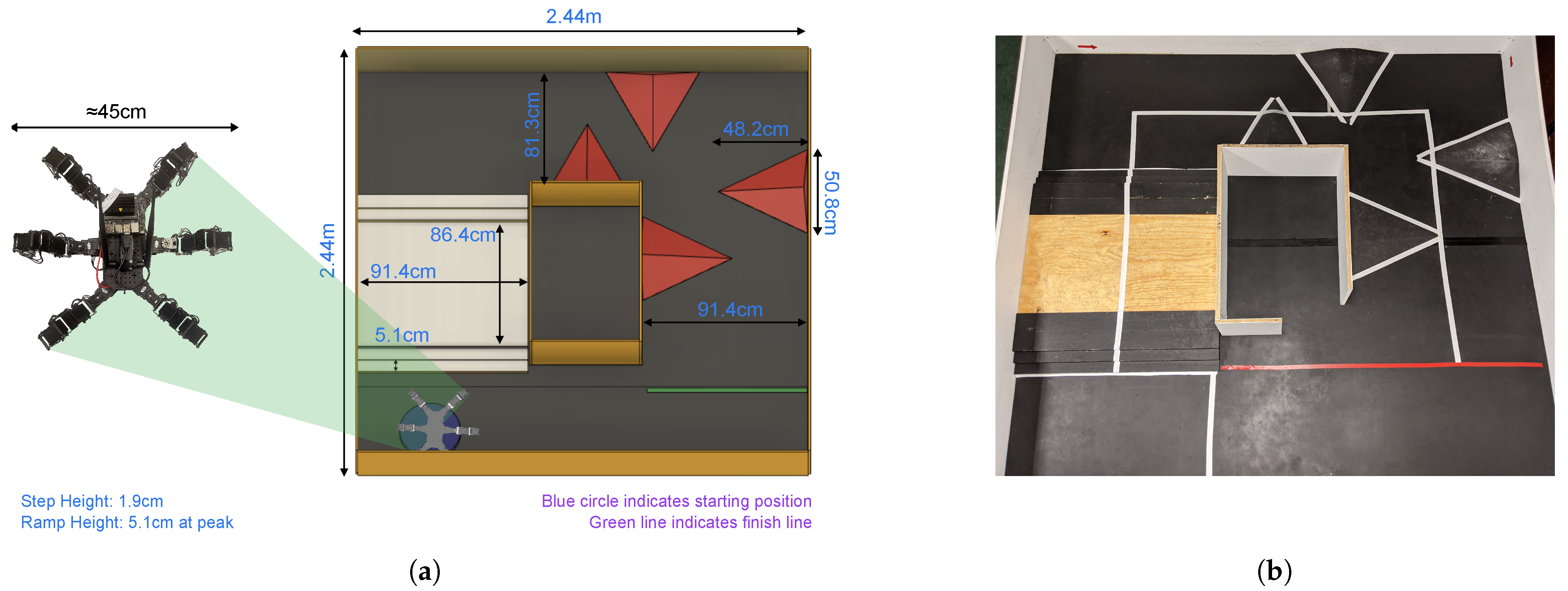

- simulated servo-driven hexapedal telerobotic platform, as shown in Figure 1a, to compare the proposed haptic interface with commonly used alternatives for controlling locomotion in varying levels of traversal task difficulty;

- (II)

- physical implementation of hexapedal telerobotic platform, as shown in Figure 1b, to evaluate the haptic virtual fixtures developed.

1.1. Teleoperation

1.2. Haptic Feedback in Teleoperation

1.3. Dynamic Locomotion

1.3.1. DARPA Robotics Challenge

1.3.2. Bio-inspired Locomotion

1.4. Contributions

2. Methods

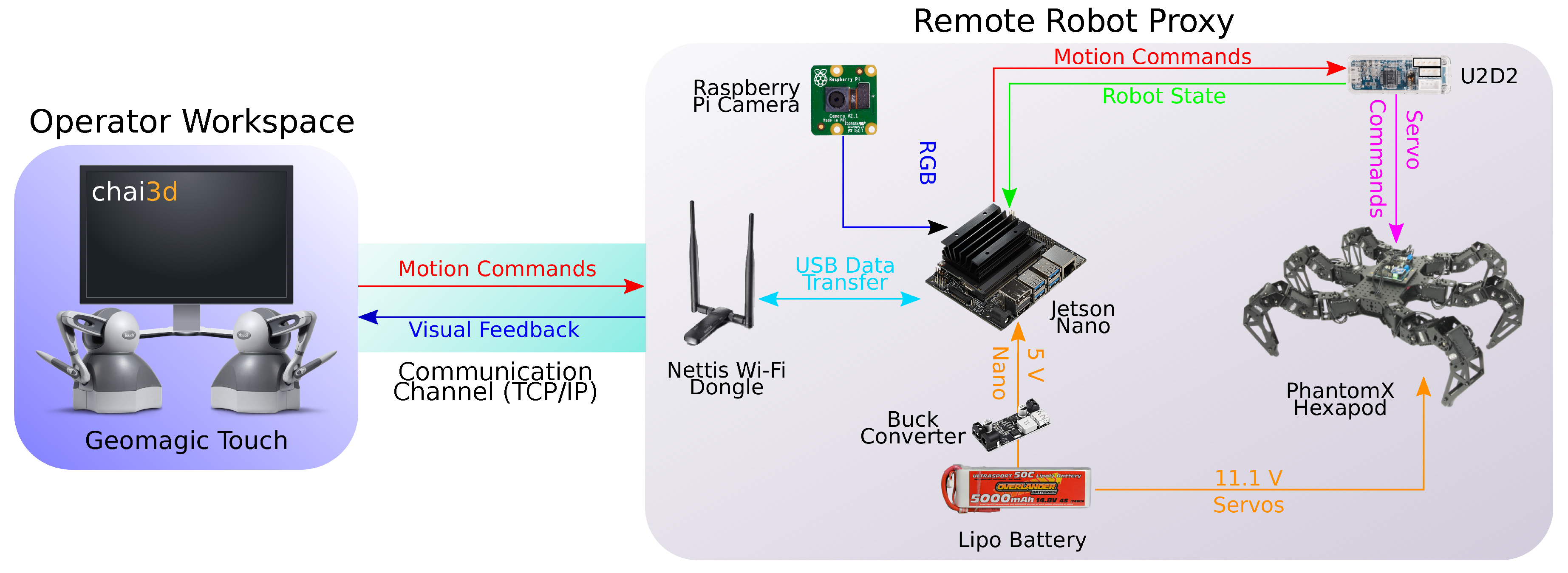

2.1. Teleoperated Platform

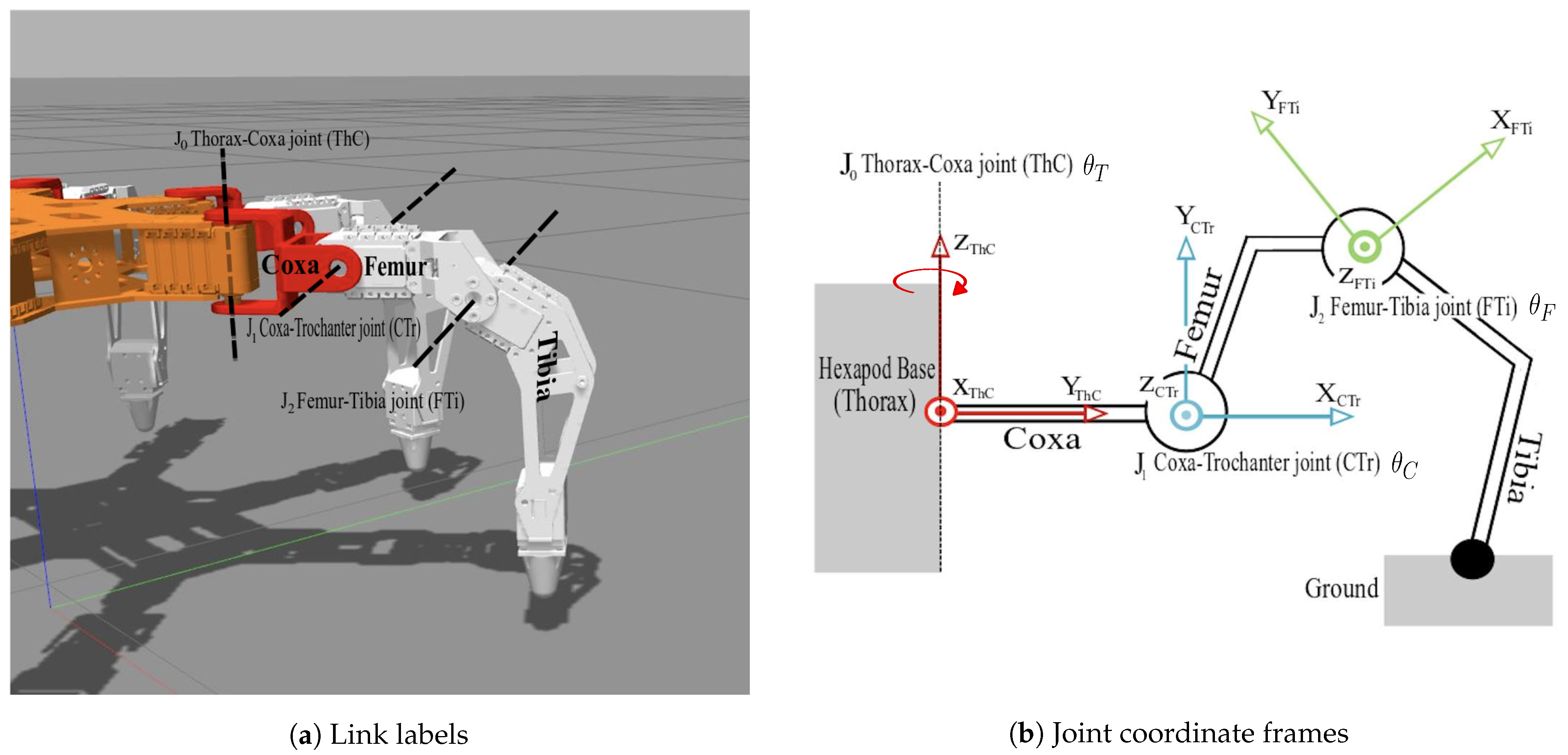

2.1.1. Hexapod Legged Mechanism

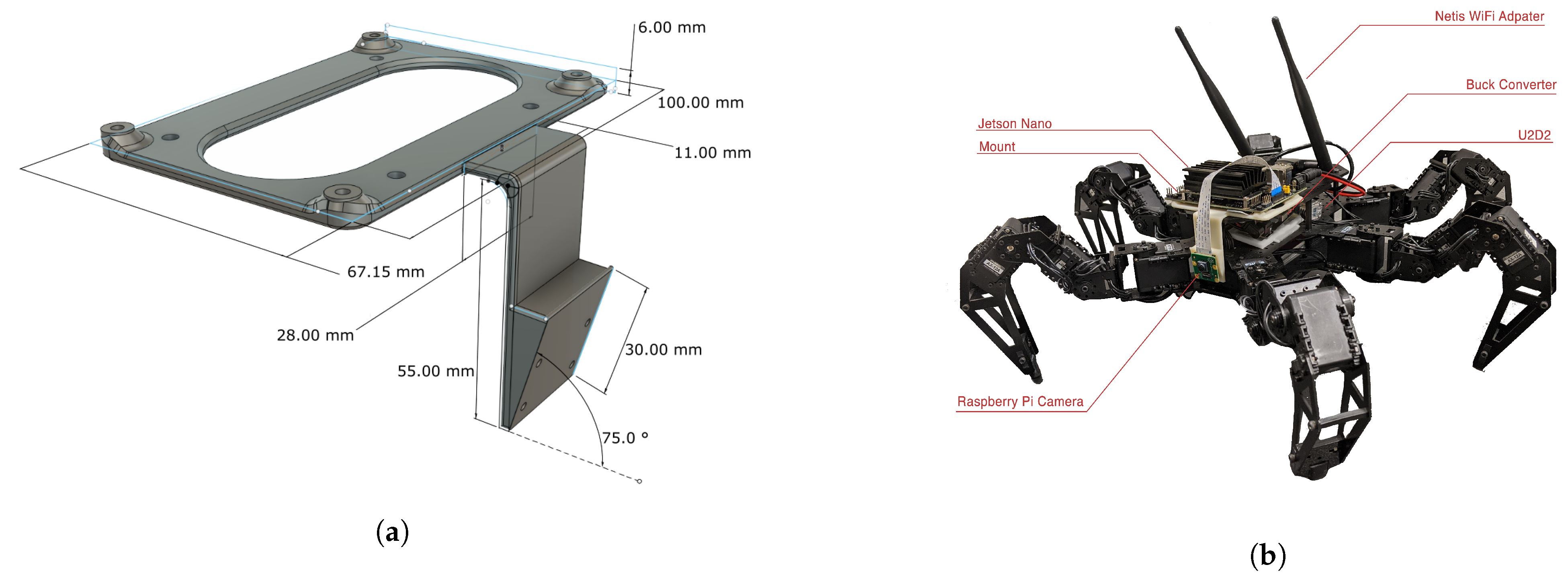

2.1.2. Peripherals and Augmentations

2.2. Operator Interface

2.2.1. Visual Feedback

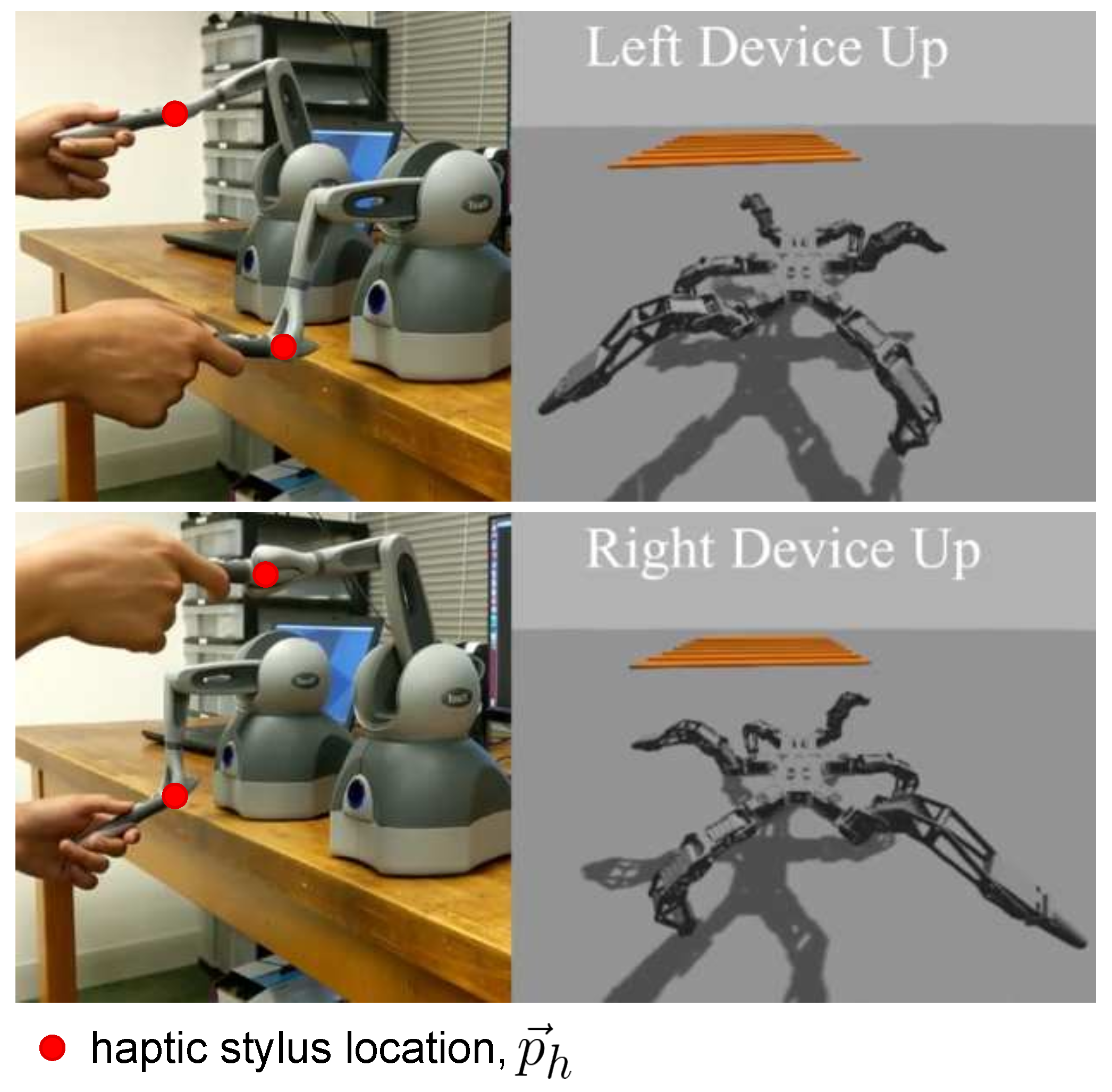

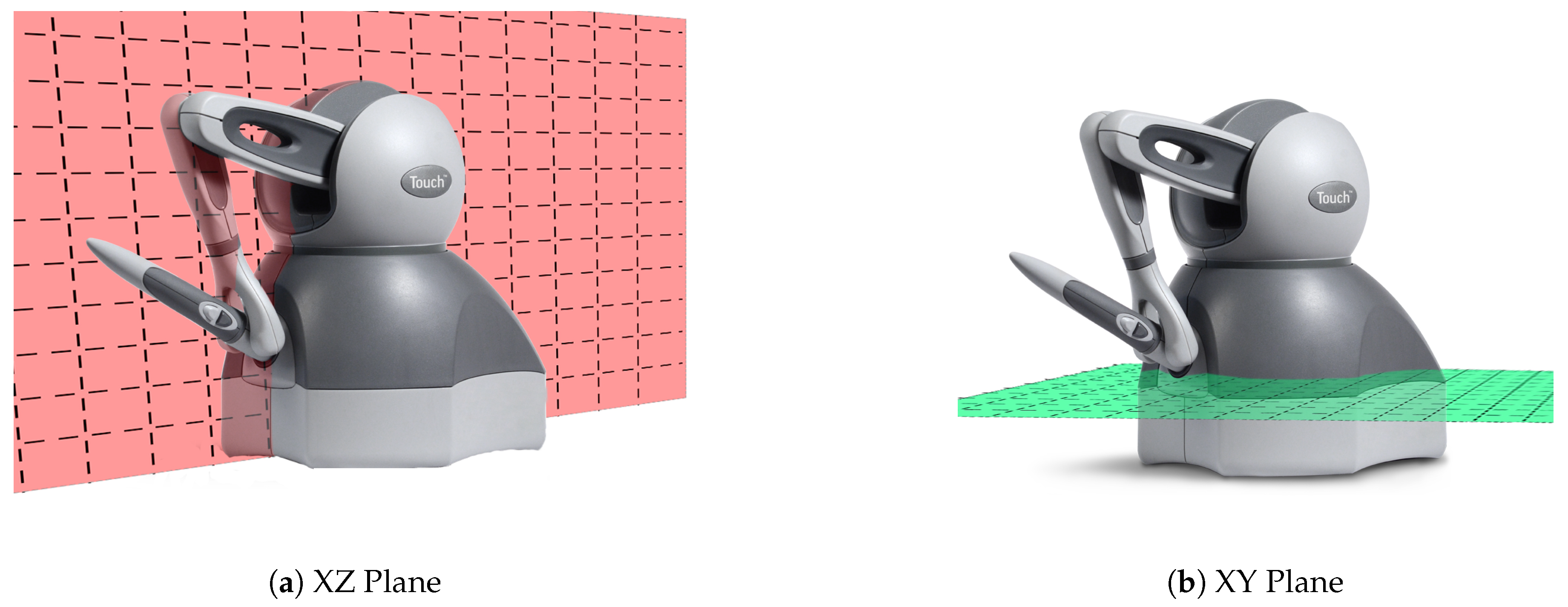

2.2.2. Haptic Interface

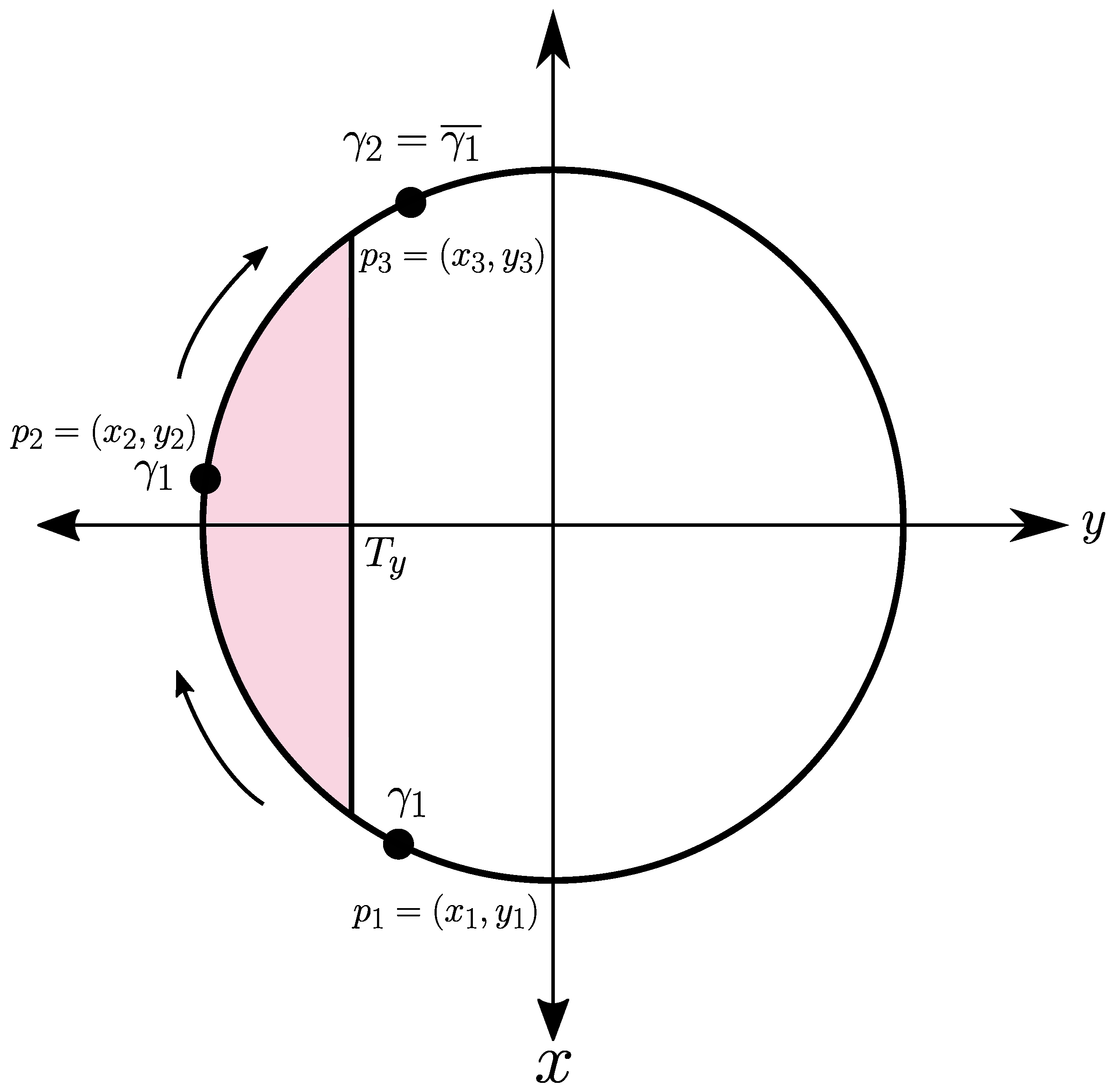

Algorithm 1. Translational motion.

Algorithm 2. Pivoting motion.

3. Experimental Protocol

- (I) simulation-based comparison of competing interface types with varying task complexity;

- (II) physical implementation of the haptic interface, evaluating the efficacy of the virtual fixtures.

3.1. Experiment I—Telelocomotion Interface Types with Varying Traversal Complexity

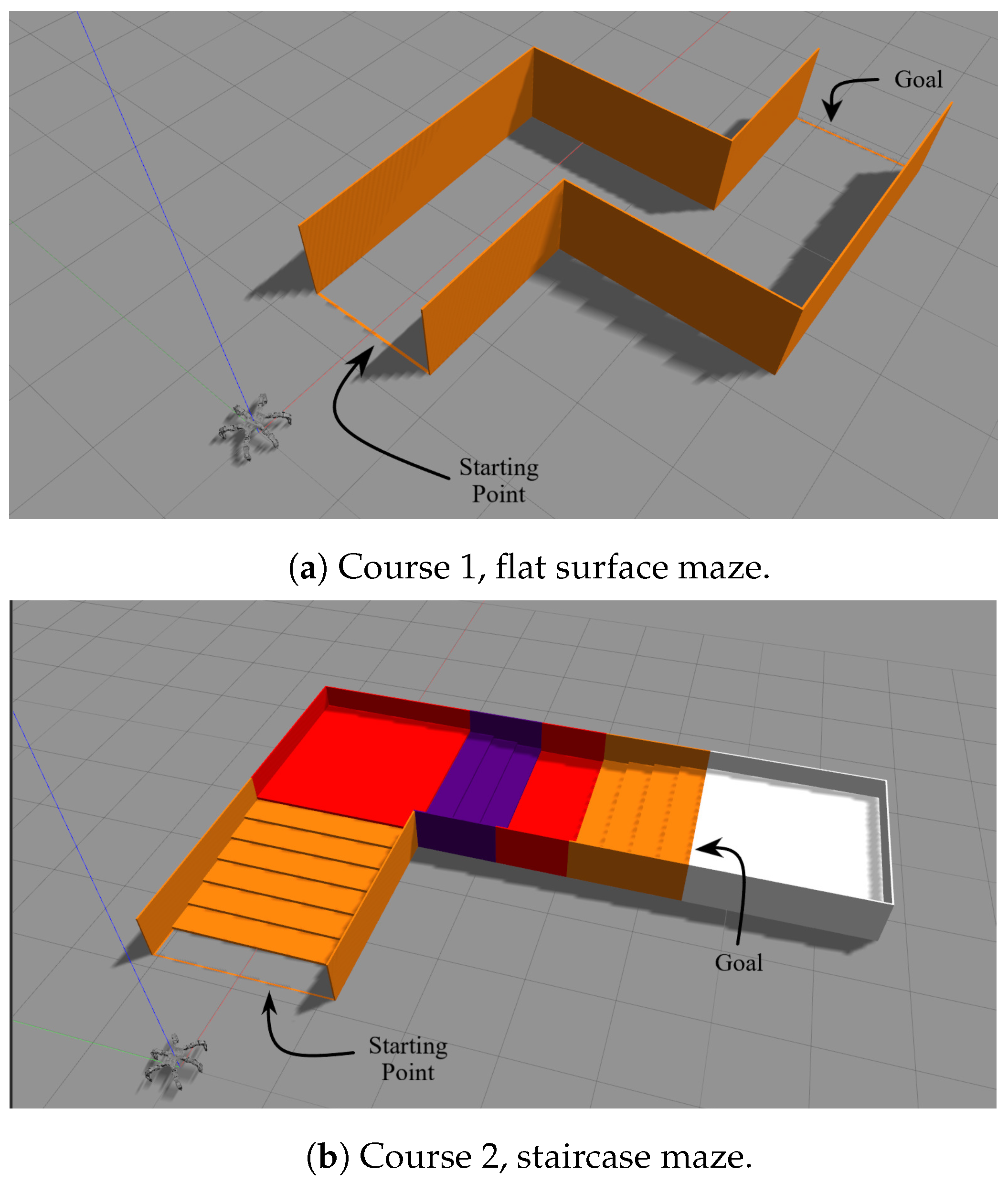

3.1.1. Simulation Environment

3.1.2. Experimental Task

3.1.3. Evaluated Operator Interface Types

- (i) standard computer keyboard, K;

- (ii) standard gaming controller, J;

- (iii) Sensable PHANToM Omni 3 DOF haptic device, H.

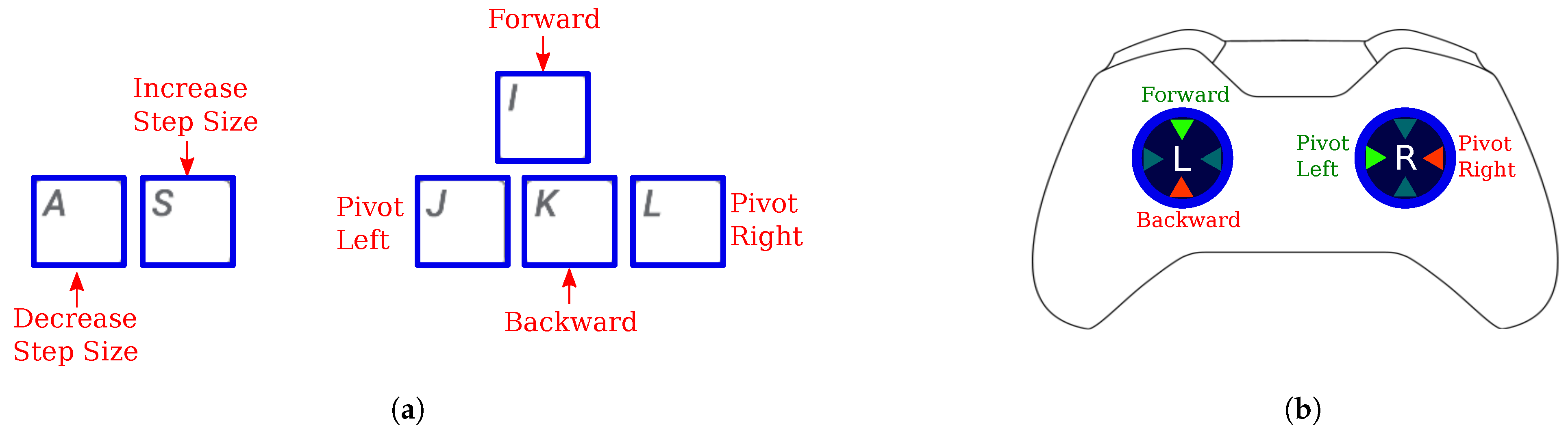

Computer Keyboard, K

Gaming Controller, J

3.1.4. Subject Recruitment

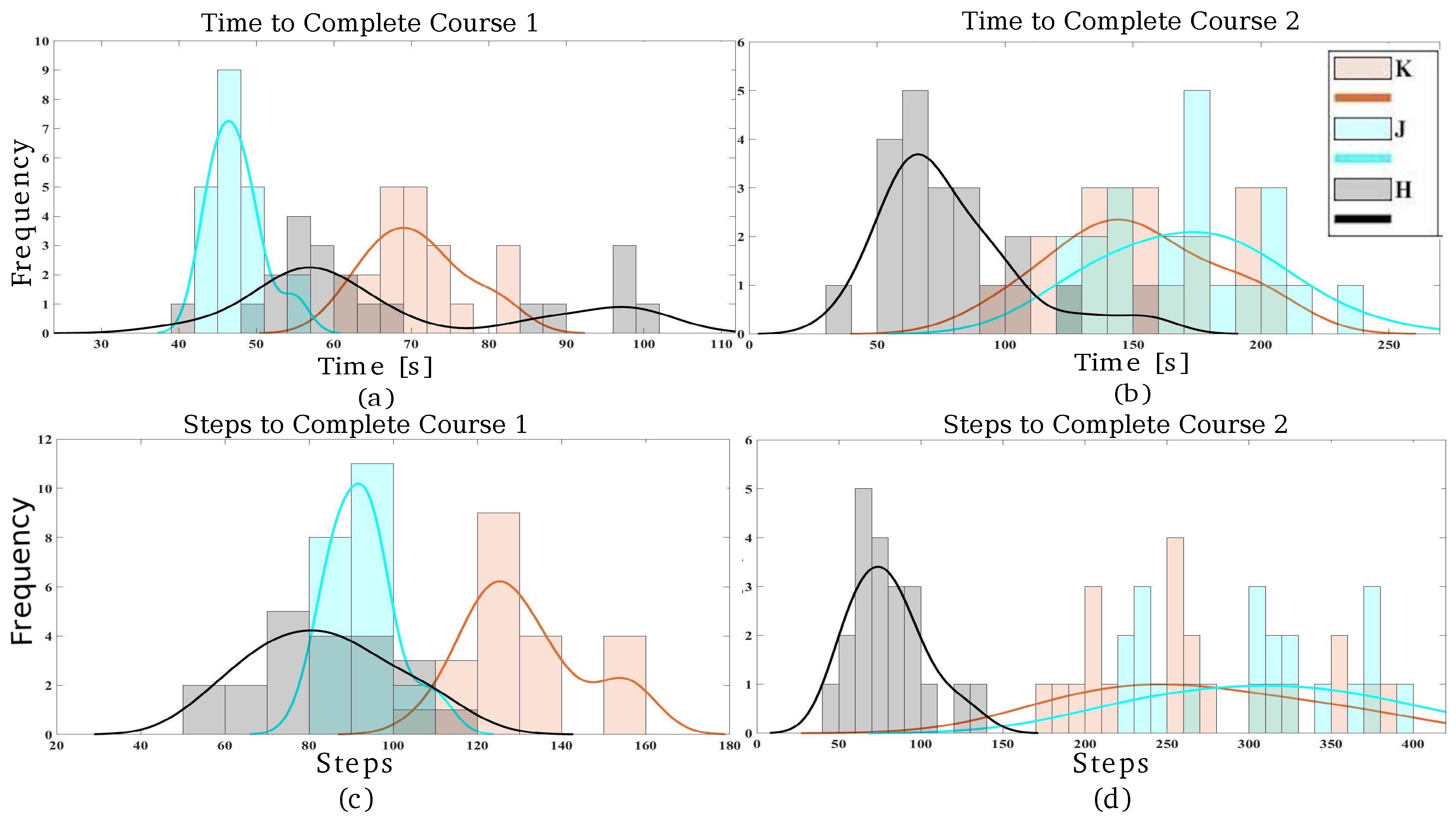

3.1.5. Metrics

- (i) time taken to complete the navigation task (s);

- (ii) number of steps taken to complete the navigation task.

3.1.6. Procedure

3.2. Experiment II—Physical Implementation

3.2.1. Experimental Task

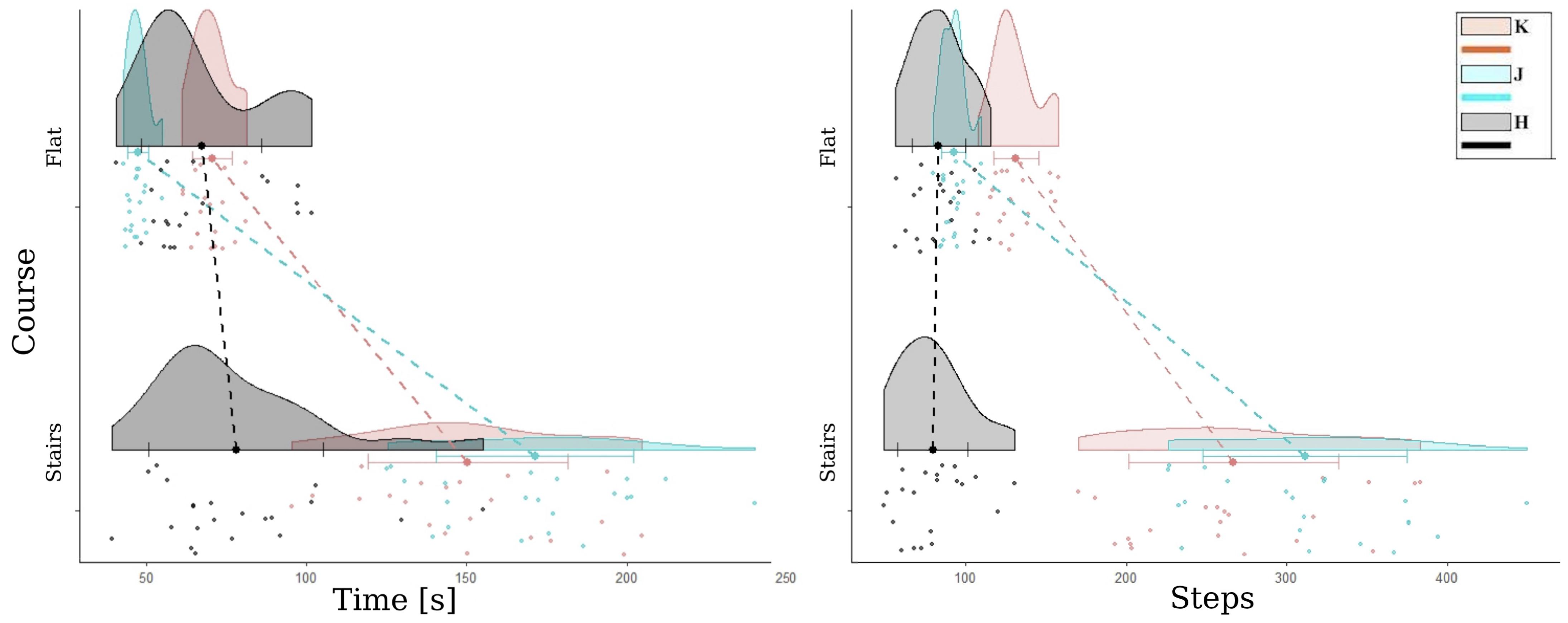

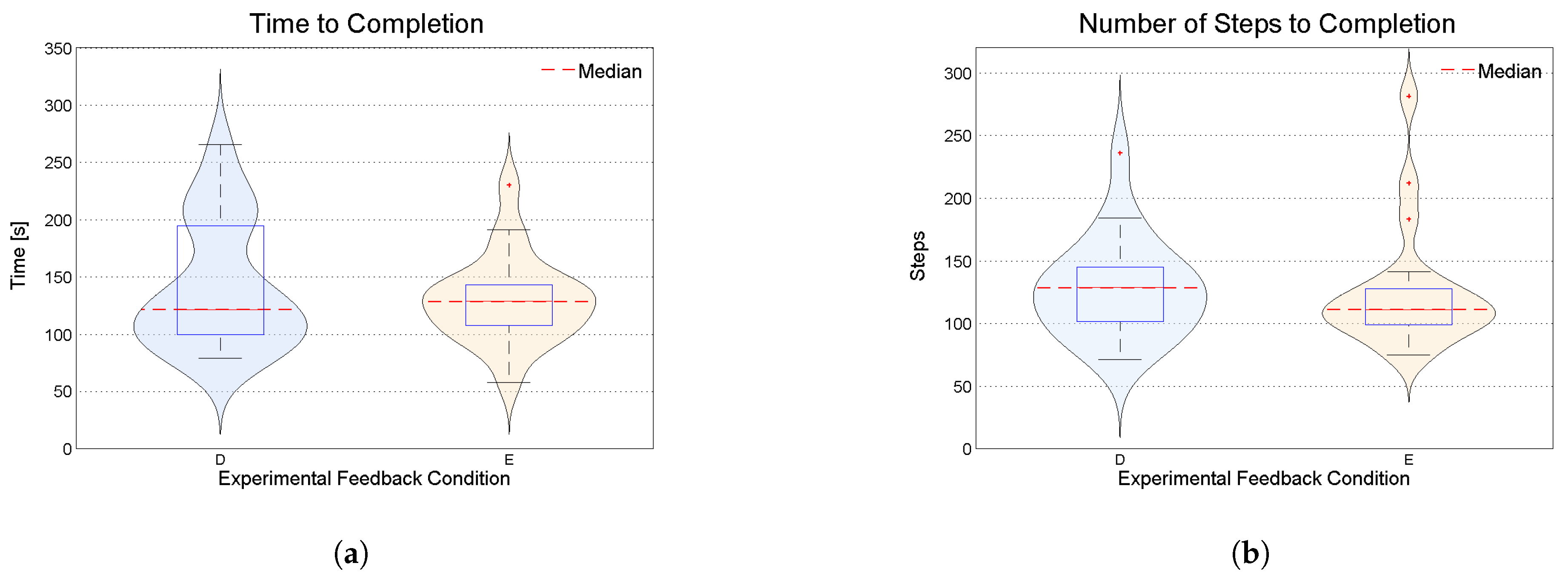

3.2.2. Experimental Conditions

- (i) haptic virtual fixtures disabled, D;

- (ii) haptic virtual fixtures enabled, E.

3.2.3. Subject Recruitment

3.2.4. Metrics

3.2.5. Procedure

4. Results

4.1. Experiment I—Telelocomotion Interface Types with Varying Traversal Complexity

4.2. Experiment II—Physical Implementation

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Course | Metric | K | J | H | p |
|---|---|---|---|---|---|
| Course 1 (Flat) | Time [s] | 70.655 | 47.342 | 67.265 | |
| | Steps | 131.30 | 92.449 | 83.125 | |
| Course 2 (Stairs) | Time [s] | 150.30 | 171.38 | 78.048 | |
| | Steps | 266.87 | 331.31 | 79.571 | |

| Course | Metric | K-J | K-H | J-H |
|---|---|---|---|---|
| Course 1 (Flat) | Time [s] | 7.547 × 10 | 0.226 | 4.319 × 10 |
| | Steps | 6.009 × 10 | 1.7843 × 10 | 0.264 |
| Course 2 (Stairs) | Time [s] | 0.321 | 2.328 × 10 | 1.119 × 10 |
| | Steps | 0.412 | 2.422 × 10 | 2.589 × 10 |

| Metric | D (Virtual Fixtures Disabled) | E (Virtual Fixtures Enabled) | D-E (Improvement with Fixtures) |
|---|---|---|---|
| Time [s] | 141.5 | 130 | 11.5 |
| Steps | 127.7 | 124.95 | 2.75 |
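The D-E column in the Experiment II table can be reproduced with a few lines of arithmetic. The sketch below is illustrative only: the values are copied from that table, while the `improvement` helper, the `results` and `summary` names, and the relative-percentage figure are assumptions added here and are not reported in the article.

```python
# Values copied from the Experiment II table
# (D = haptic virtual fixtures disabled, E = enabled).
results = {
    "Time [s]": {"D": 141.5, "E": 130.0},
    "Steps": {"D": 127.7, "E": 124.95},
}


def improvement(d: float, e: float) -> tuple:
    """Return (absolute, relative-percent) reduction going from D to E.

    The relative figure is a derived illustration, not a value from the article.
    """
    absolute = d - e
    relative = 100.0 * absolute / d
    return absolute, relative


# Hypothetical summary structure: metric name -> (absolute, relative %).
summary = {metric: improvement(v["D"], v["E"]) for metric, v in results.items()}

for metric, (absolute, relative) in summary.items():
    print(f"{metric}: D-E = {absolute:.2f} ({relative:.1f}% of D)")
# Time [s]: D-E = 11.50 (8.1% of D)
# Steps: D-E = 2.75 (2.2% of D)
```

The absolute differences match the tabulated D-E column. The percentages only put those differences on a common scale and say nothing about statistical significance.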

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Huang, K.; Subedi, D.; Mitra, R.; Yung, I.; Boyd, K.; Aldrich, E.; Chitrakar, D. Telelocomotion—Remotely Operated Legged Robots. Appl. Sci. 2021, 11, 194. https://doi.org/10.3390/app11010194