Impacts of Weather on Short-Term Metro Passenger Flow Forecasting Using a Deep LSTM Neural Network

Abstract

1. Introduction

2. Input Variables

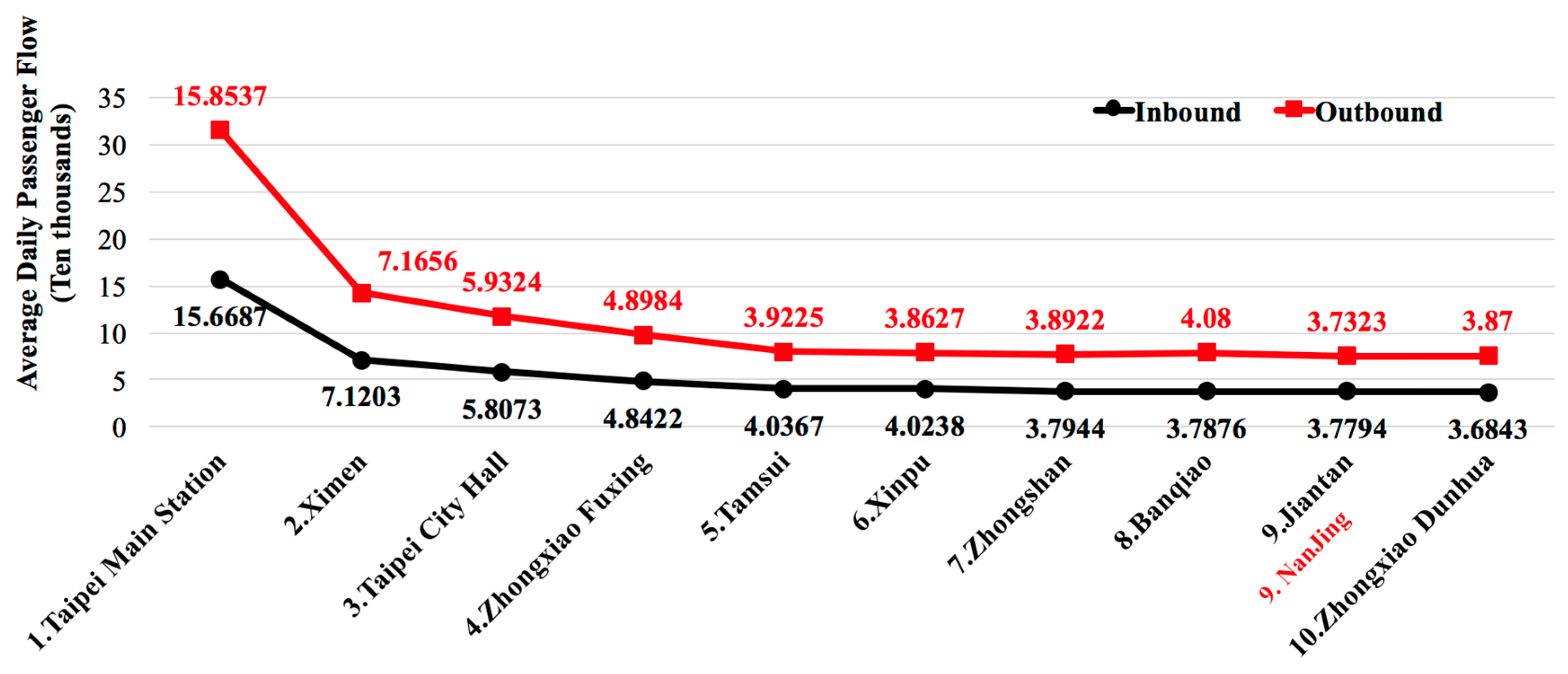

2.1. Station-level Data Description

2.2. Endogenous Input Variables

2.3. Exogenous Input Variables

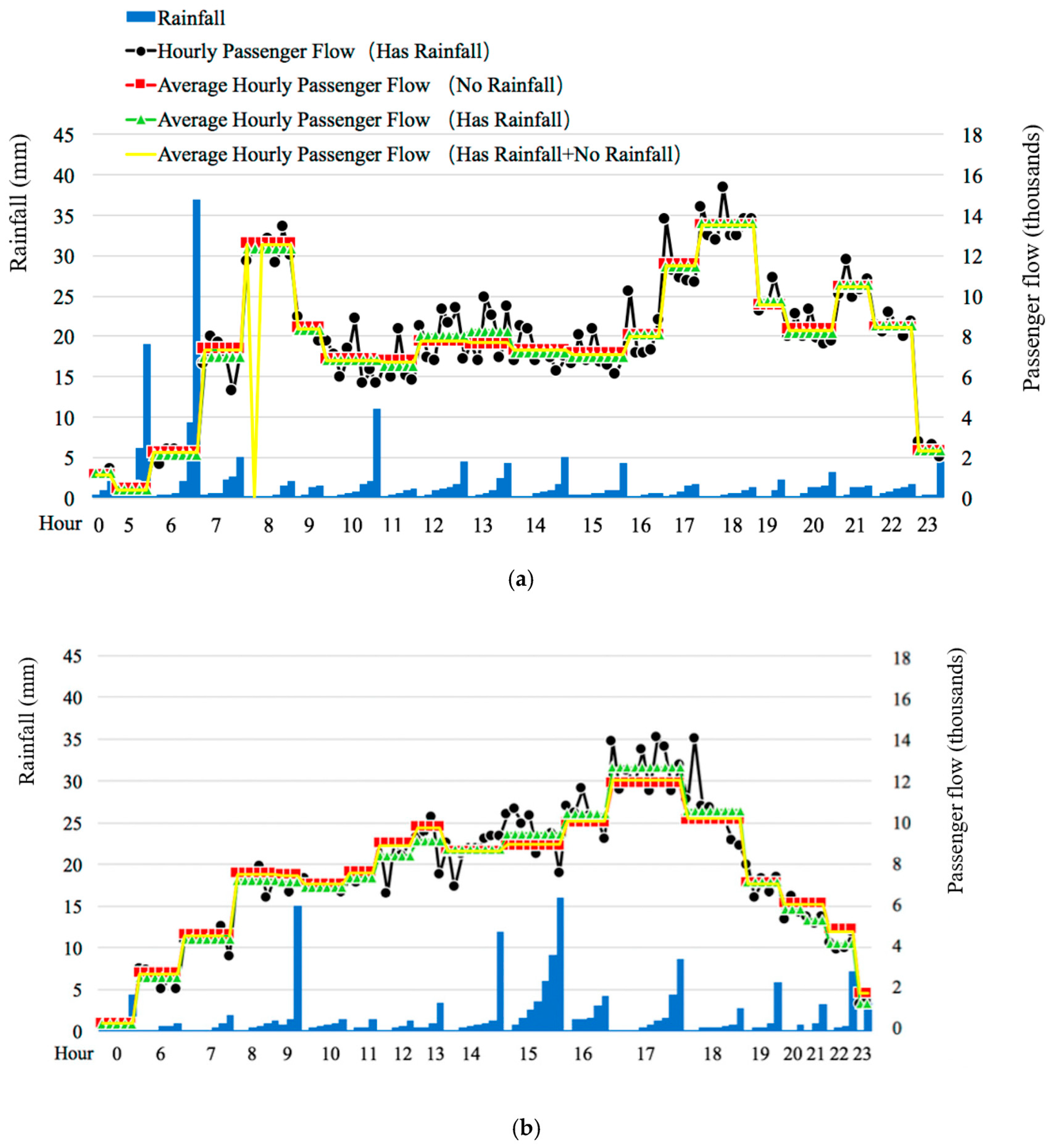

2.4. Analysis of Impacts of Weather Conditions on Hourly Passenger Flow

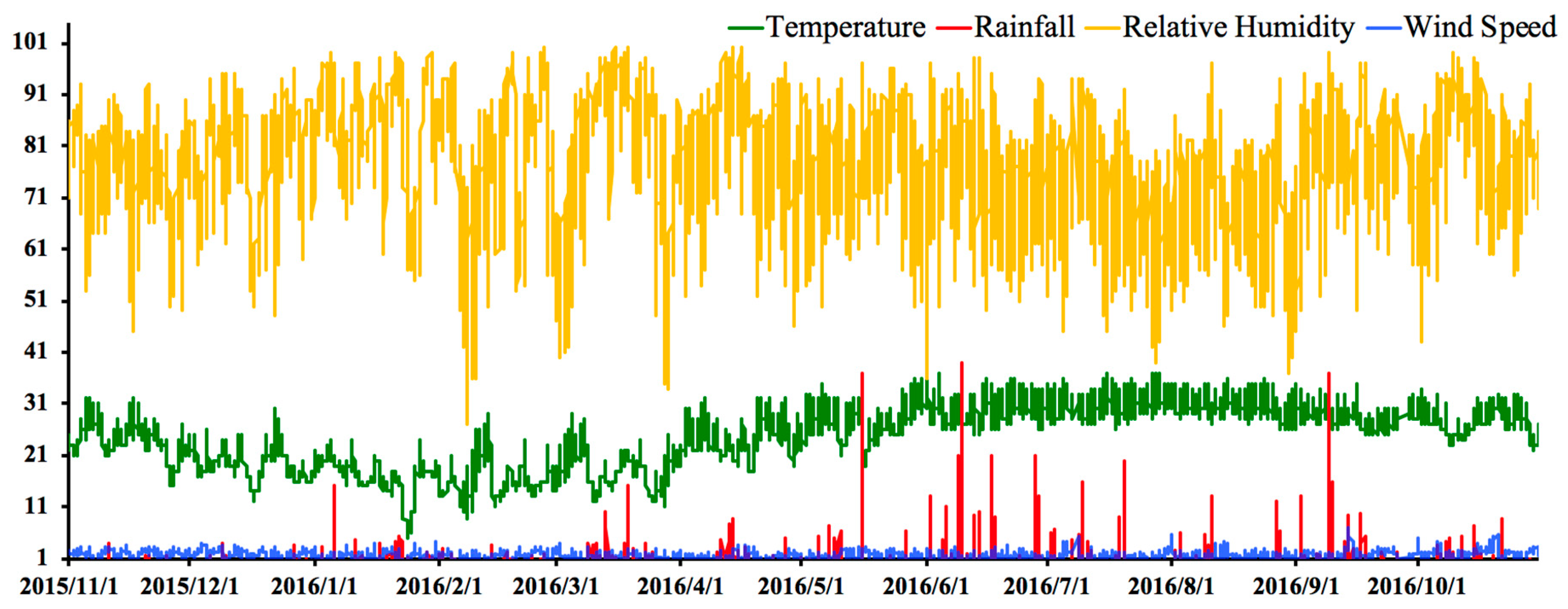

2.4.1. Overview of Annual Weather Conditions in Taipei

2.4.2. Impact of Rainfall on Hourly Passenger Flow

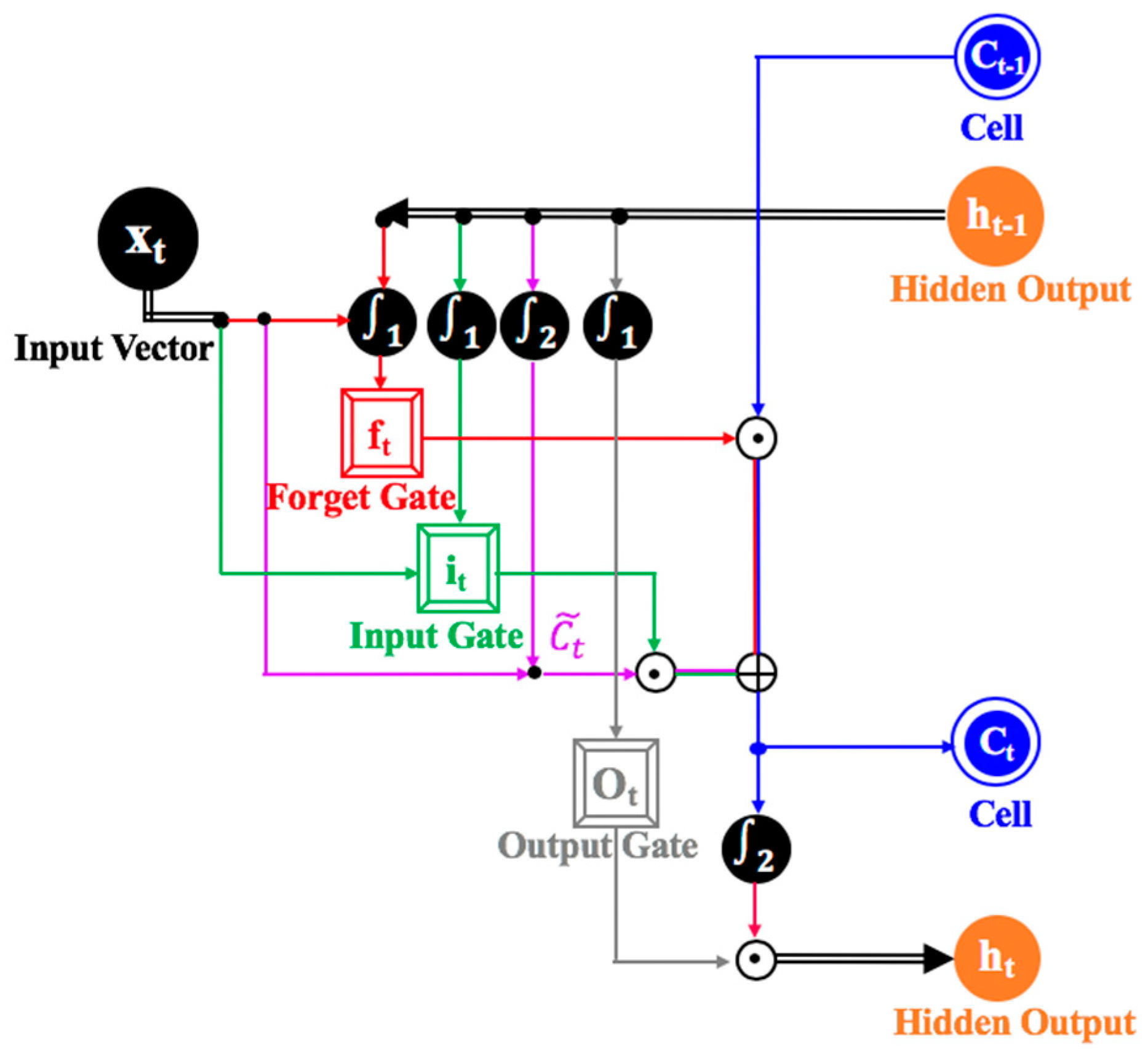

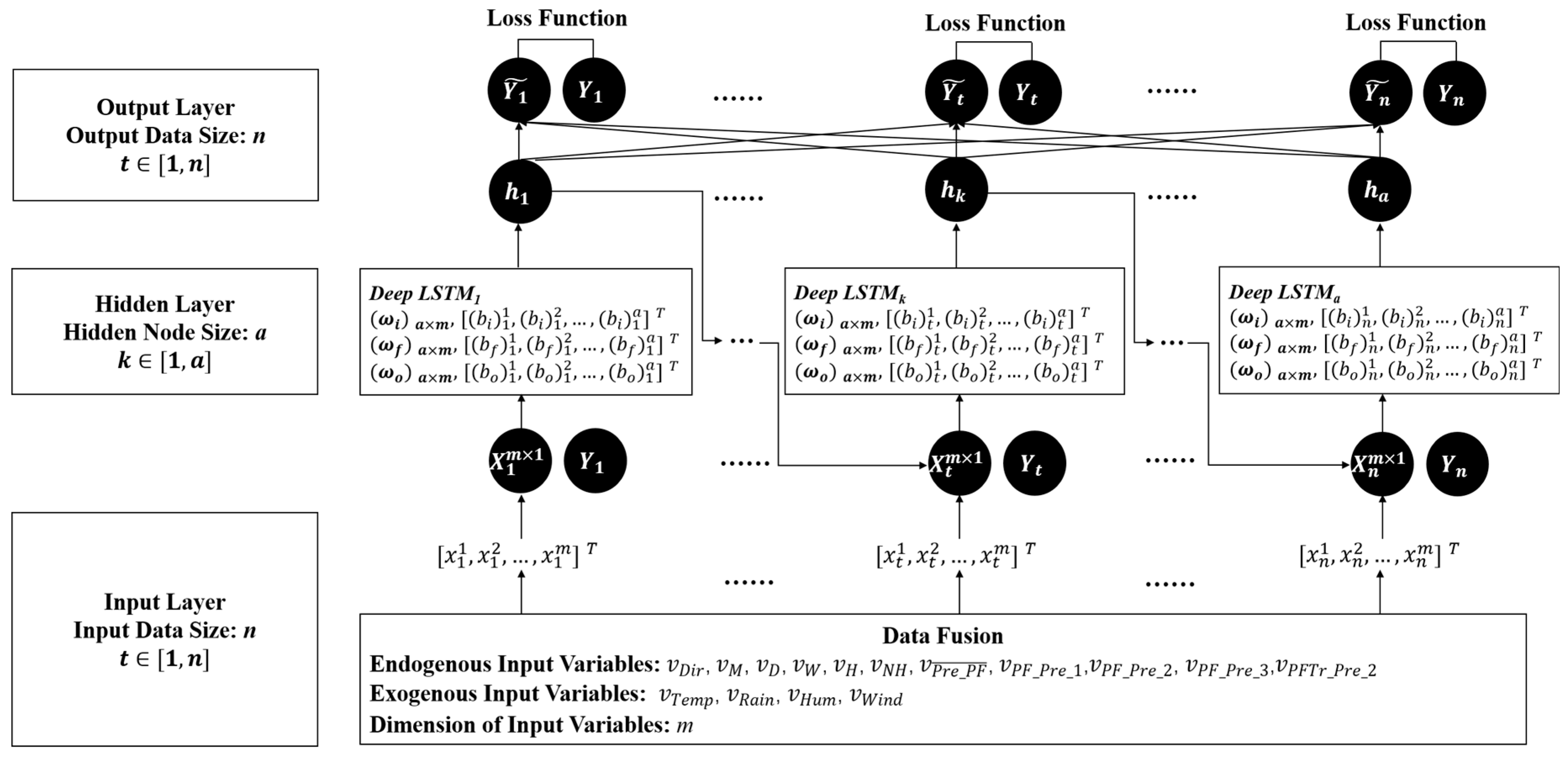

3. Methodology

4. Experimental Results and Discussion

4.1. Data Specification

4.2. Results of not Using any Weather Variables

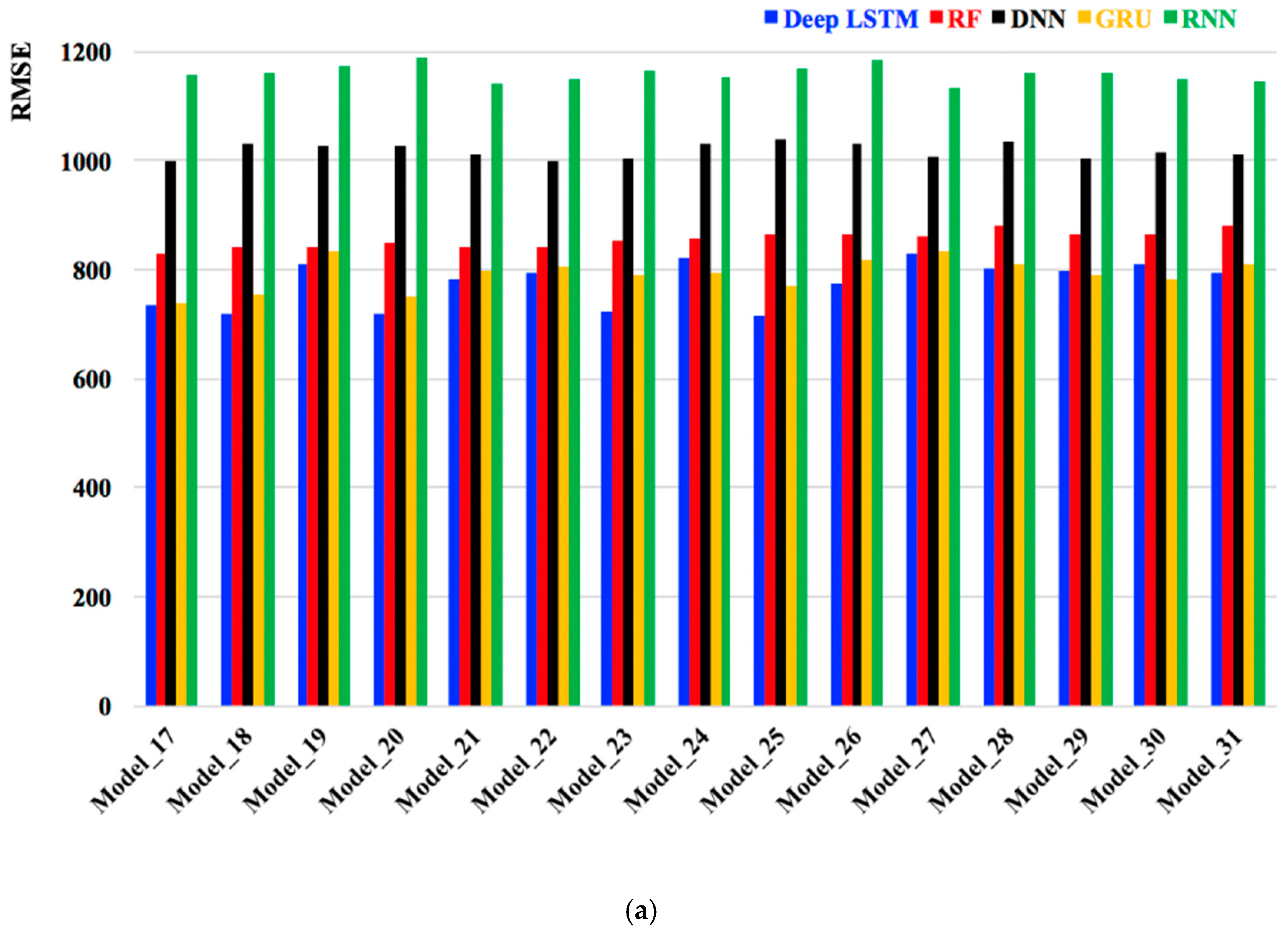

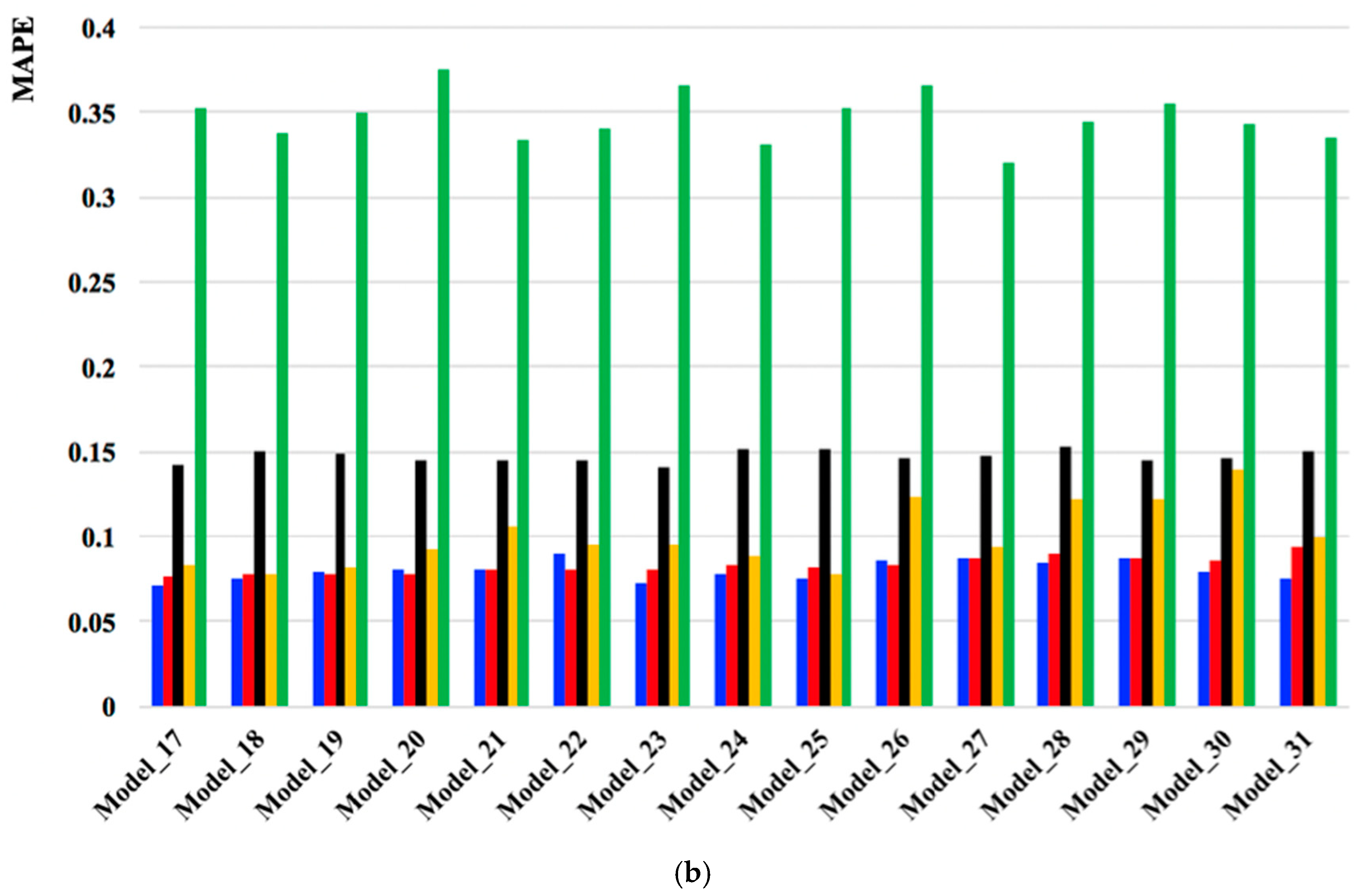

4.3. Results of Using Weather Variables

4.4. Comparisons

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Item | | Training Dataset | Testing Dataset |
|---|---|---|---|
| Duration | | 1 November 2015–31 October 2016 | 1 November 2016–31 March 2017 |
| Outlier | | 8 July 2016; 27 September 2016; 28 September 2016 | None |
| Number of days | | 363 | 151 |
| Number of national holidays | | 31 | 13 |
| Operating hours | Inbound | 0:00, 5:00–23:00 | 0:00, 5:00–23:00 |
| Operating hours | Outbound | 0:00, 6:00–23:00 | 0:00, 6:00–23:00 |
| Special examples to be added | Inbound | 1:00–4:00 on 1 January 2016 | 1:00–4:00 on 1 January 2017 |
| Special examples to be added | Outbound | 1:00–5:00 on 1 January 2016 | 1:00–5:00 on 1 January 2017 |
| Special examples to be deleted | | 0:00 on 1 November 2015 | |
| Time interval | | One hour | One hour |
| Total examples | Inbound | 7263 | 3024 |
| Total examples | Outbound | 6901 | 2874 |
| Total examples | National holiday | 1218 | 516 |
| Total examples | Non-national holiday | 12,946 | 5382 |
| Total examples | Total | 14,164 | 5898 |
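The totals in the table follow from its other rows: number of days times operating hours, plus the extra New Year's hours, minus the deleted example. A minimal Python check is sketched below. The function name `expected_examples` is hypothetical, and the sketch assumes that the deleted 0:00 example on 1 November 2015 is removed once per direction and only from the training set.

```python
def expected_examples(days, hours_per_day, added, deleted):
    """Hourly examples = days x operating hours + added - deleted."""
    return days * hours_per_day + added - deleted


# Operating hours per day, read from the table:
# inbound 0:00 and 5:00-23:00, outbound 0:00 and 6:00-23:00.
INBOUND_HOURS = 1 + len(range(5, 24))   # 20 hourly slots
OUTBOUND_HOURS = 1 + len(range(6, 24))  # 19 hourly slots

# Added examples: 1:00-4:00 (4 hours) inbound, 1:00-5:00 (5 hours) outbound.
# Deleted examples: assumed to be one per direction, training set only.
train_in = expected_examples(363, INBOUND_HOURS, added=4, deleted=1)
train_out = expected_examples(363, OUTBOUND_HOURS, added=5, deleted=1)
test_in = expected_examples(151, INBOUND_HOURS, added=4, deleted=0)
test_out = expected_examples(151, OUTBOUND_HOURS, added=5, deleted=0)

print(train_in, train_out, train_in + train_out)
print(test_in, test_out, test_in + test_out)
```

Under these assumptions the sketch reproduces the listed inbound and outbound counts, and their sums match the listed totals of 14,164 and 5898.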

| No. | Input Variables | Encoded Values | Descriptions |
|---|---|---|---|
| 1 | | 0, 1 | 0: inbound; 1: outbound |
| 2 | | 1–12 | January–December |
| 3 | | 1–31 | 31 days per month |
| 4 | | 1–7 | Monday–Sunday |
| 5 | | 0–23 | 24 hours per day |
| 6 | | Table 3 | |
| 7 | | calculated | (1) Normal days: the average value of hourly passenger flow on all non-national holidays; (2) National holidays: the average value of hourly passenger flow on all national holidays. |
| 8 | | actual | Previous 1-hour passenger flow |
| 9 | | actual | Previous 2-hour passenger flow |
| 10 | | actual | Previous 3-hour passenger flow |
| 11 | | calculated | (Previous 1-hour passenger flow) − (Previous 2-hour passenger flow) |
| 12 | | actual | Actual hourly atmospheric temperature (°C) |
| 13 | | actual | Actual hourly rainfall (mm) |
| 14 | | actual | Actual hourly relative humidity (%) |
| 15 | | actual | Actual hourly average wind speed (m/s) |
| 16 | | actual | Actual hourly passenger flow |
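Variables 8–11 are derived from the passenger flow series itself. As a rough illustration, the sketch below builds the three lagged flows and their first difference from a plain list of hourly counts. The function name, the dictionary keys, and the sample numbers are all hypothetical. The sketch also assumes that consecutive list entries are consecutive operating hours, which ignores the overnight gap in the real data.

```python
def build_lag_features(flows):
    """Build lagged-flow features from hourly counts given in time order.

    Each output row holds the previous 1-, 2- and 3-hour flows, the
    difference (previous 1-hour flow) - (previous 2-hour flow), and the
    current hour's flow as the prediction target.
    """
    rows = []
    # The first three hours have no complete 3-hour history, so start at index 3.
    for t in range(3, len(flows)):
        prev1, prev2, prev3 = flows[t - 1], flows[t - 2], flows[t - 3]
        rows.append({
            "prev_1h": prev1,
            "prev_2h": prev2,
            "prev_3h": prev3,
            "prev_diff": prev1 - prev2,
            "target": flows[t],
        })
    return rows


# Made-up hourly counts, used only to exercise the function.
sample_flows = [100, 120, 150, 130, 90]
features = build_lag_features(sample_flows)
for row in features:
    print(row)
```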

| National Holiday | Order | Durations | Encoded Values |
|---|---|---|---|
| New Year’s Day | 1 | About 3 or 4 days | 11, 12, 13, 19 |
| Lunar New Year’s Day | 2 | About 6–9 days | 21–29 |
| Peace Memorial Day | 3 | About 3 days | 31, 32, 39 |
| Tomb-Sweeping Day | 4 | About 3 or 4 days | 41, 42, 43, 49 |
| Dragon Boat Day | 5 | About 3 or 4 days | 51, 52, 53, 59 |
| Mid-Autumn Day | 6 | About 3 or 4 days | 61, 62, 63, 69 |
| National Day | 7 | About 3 days | 71, 72, 79 |
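The table lists the codes but not the rule that assigns them to individual days. One reading that is consistent with every row is that the tens digit is the holiday's order, the units digit counts the days of the break from 1, and the final day always takes the units digit 9. The sketch below encodes that reading only. Both the rule and the function name `encode_holiday_days` are assumptions, not something the table states.

```python
def encode_holiday_days(order, n_days):
    """Return one code per day of a holiday break.

    Assumed rule (an interpretation of the table, not a stated method):
    code = order * 10 + day position, except that the last day uses 9.
    """
    if not 1 <= order <= 7:
        raise ValueError("order must be between 1 and 7")
    if not 2 <= n_days <= 9:
        raise ValueError("n_days must be between 2 and 9")
    leading_days = [order * 10 + k for k in range(1, n_days)]
    return leading_days + [order * 10 + 9]


print(encode_holiday_days(order=3, n_days=3))  # Peace Memorial Day, 3 days
print(encode_holiday_days(order=1, n_days=4))  # New Year's Day, 4 days
print(encode_holiday_days(order=2, n_days=9))  # Lunar New Year's Day, 9 days
```

Under this reading a 3-day break of order 3 yields 31, 32, 39 and a 4-day break of order 1 yields 11, 12, 13, 19, matching the listed values.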

| No. | Parameters | Values |
|---|---|---|
| 1 | epochs | {500, 1000, 1500, 2000, 2500, 3000, 3500, 4000, 4500, 5000} |
| 2 | batch size | {32, 64, 128, 256, 512} |
| 3 | activation | {relu, tanh, sigmoid} |
| 4 | recurrent_activation | {relu, tanh, sigmoid, hard_sigmoid} |
| 5 | learning rate | {0.001, 0.002, 0.003, 0.004, 0.005} |
| 6 | hidden nodes | {(50), (100), (200), (250), (256,128,64,10), (256,128,64,32), (256,128,64,32,10), (256,128,64), (256,256,256), (258,126)} |
| 7 | number of hidden layers | {1, 2, 3, 4} |
| 8 | optimizer | {RMSprop, adam, adamax, adagrad, SGD} |
| 9 | dropout | {0.05, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9} |
| 10 | momentum | {0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9} |
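To give a sense of the size of this search space, the sketch below stores the candidate values as a Python dictionary, counts the combinations, and draws one random configuration. The table does not say how the space was searched, so the random draw is purely illustrative. The dictionary keys and `sample_config` are hypothetical names, and treating every row as an independent axis is an assumption.

```python
import math
import random

# Candidate values copied from the table. Treating every row as an
# independent axis is an assumption of this sketch.
SEARCH_SPACE = {
    "epochs": [500, 1000, 1500, 2000, 2500, 3000, 3500, 4000, 4500, 5000],
    "batch_size": [32, 64, 128, 256, 512],
    "activation": ["relu", "tanh", "sigmoid"],
    "recurrent_activation": ["relu", "tanh", "sigmoid", "hard_sigmoid"],
    "learning_rate": [0.001, 0.002, 0.003, 0.004, 0.005],
    "hidden_nodes": [
        (50,), (100,), (200,), (250,),
        (256, 128, 64, 10), (256, 128, 64, 32), (256, 128, 64, 32, 10),
        (256, 128, 64), (256, 256, 256), (258, 126),
    ],
    "num_hidden_layers": [1, 2, 3, 4],
    "optimizer": ["RMSprop", "adam", "adamax", "adagrad", "SGD"],
    "dropout": [0.05, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9],
    "momentum": [0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9],
}

# Number of combinations if every axis is crossed with every other axis.
naive_grid_size = math.prod(len(values) for values in SEARCH_SPACE.values())


def sample_config(rng):
    """Draw one value per parameter, uniformly and independently."""
    return {name: rng.choice(values) for name, values in SEARCH_SPACE.items()}


print(naive_grid_size)
print(sample_config(random.Random(0)))
```

The naive count is 60,000,000 combinations. It overstates the real space, because each hidden-node tuple already implies a layer count.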

| No. | Parameters | Best Tuning Results: Deep LSTM_NN | Best Tuning Results: One-layer LSTM_NN | Best Tuning Results: RF |
|---|---|---|---|---|
| 1 | epochs | 1000 | 3500 | ✕ |
| 2 | batch size | 256 | 128 | ✕ |
| 3 | activation | tanh | relu | ✕ |
| 4 | recurrent_activation | hard_sigmoid | hard_sigmoid | ✕ |
| 5 | learning rate | 0.001 | 0.001 | ✕ |
| 6 | hidden nodes | (256,128,64) | (100) | ✕ |
| 7 | number of hidden layers | 3 | 1 | ✕ |
| 8 | optimizer | adam | adam | ✕ |
| 9 | dropout | 0.05 | 0.05 | ✕ |
| 10 | momentum | 0.9 | 0 | ✕ |
| 11 | max_features | ✕ | ✕ | 3 |
| 12 | n_estimators | ✕ | ✕ | 6500 |
| Evaluation criteria | RMSE | 490.77 | 517.63 | 538.75 |
| Evaluation criteria | MAPE | 0.0547 | 0.0629 | 0.0555 |
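The table does not define the two evaluation criteria. Assuming the standard definitions of root mean squared error and mean absolute percentage error (the latter as a fraction, which matches values such as 0.0547), they can be sketched in plain Python as follows. The function names and the sample numbers are illustrative only.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error: sqrt(mean((actual - predicted)^2))."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def mape(actual, predicted):
    """Mean absolute percentage error as a fraction (0.05 means 5%)."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    ratios = [abs(a - p) / abs(a) for a, p in zip(actual, predicted)]
    return sum(ratios) / len(ratios)


# Made-up hourly flows, used only to exercise the two functions.
actual_flows = [100, 200, 400]
predicted_flows = [110, 190, 400]
print(rmse(actual_flows, predicted_flows))
print(mape(actual_flows, predicted_flows))
```

RMSE is expressed in passengers per hour and weights large misses heavily, while MAPE is scale-free. This is one reason the two criteria can rank models differently, as they do for the one-layer LSTM_NN and RF columns above.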

| Dataset | Total Examples | Raining Examples | Ratio (Raining Examples/Total Examples) |
|---|---|---|---|
| Training dataset | 14,164 | 1748 | 12.34% |
| Testing dataset | 5898 | 524 | 11.26% |
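The ratio column can be recomputed from the two count columns. The short sketch below does so with the figures as listed; the dictionary layout is a hypothetical convenience. The training row reproduces 12.34%. The testing counts as listed give about 8.88% rather than 11.26%, so the testing row is not internally consistent as it stands.

```python
# (raining examples, total examples), copied from the table.
rain_counts = {
    "training": (1748, 14164),
    "testing": (524, 5898),
}

# Ratio of raining examples to total examples, in percent.
rain_ratio_pct = {
    name: 100 * raining / total
    for name, (raining, total) in rain_counts.items()
}

for name, pct in rain_ratio_pct.items():
    print(f"{name}: {pct:.2f}%")
```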

| Models | Optimized Traditional Input Variables | | | | | Deep LSTM_NN RMSE | Deep LSTM_NN MAPE |
|---|---|---|---|---|---|---|---|
| 1 | • | ✕ | ✕ | ✕ | ✕ | 753.88 | 0.0741 |
| 2 | • | • | ✕ | ✕ | ✕ | 754.76 | 0.0730 |
| 3 | • | ✕ | • | ✕ | ✕ | 726.76 | 0.0760 |
| 4 | • | ✕ | ✕ | • | ✕ | 770.12 | 0.0732 |
| 5 | • | ✕ | ✕ | ✕ | • | 745.83 | 0.0815 |
| 6 | • | • | • | ✕ | ✕ | 805.87 | 0.0818 |
| 7 | • | • | ✕ | • | ✕ | 771.99 | 0.0846 |
| 8 | • | • | ✕ | ✕ | • | 748.08 | 0.0757 |
| 9 | • | ✕ | • | • | ✕ | 820.02 | 0.0787 |
| 10 | • | ✕ | • | ✕ | • | 787.60 | 0.0864 |
| 11 | • | ✕ | ✕ | • | • | 793.76 | 0.0760 |
| 12 | • | • | • | • | ✕ | 829.60 | 0.0987 |
| 13 | • | ✕ | • | • | • | 855.64 | 0.0801 |
| 14 | • | • | ✕ | • | • | 785.41 | 0.1001 |
| 15 | • | • | • | ✕ | • | 804.82 | 0.0734 |
| 16 | • | • | • | • | • | 823.14 | 0.0849 |
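The sixteen models correspond to every subset of the four weather columns added on top of the optimized traditional inputs (2^4 = 16). The sketch below enumerates those subsets and picks out the lowest RMSE and the lowest MAPE among the listed results. The slot names `w1`–`w4` are placeholders, because the weather column labels are not preserved in the table. The enumeration order is that of `itertools.combinations`, which need not match the model numbering.

```python
from itertools import combinations

# Placeholder names for the four weather columns (labels not given in the table).
WEATHER_SLOTS = ("w1", "w2", "w3", "w4")

# Every subset of the four weather columns, from none to all four.
weather_subsets = [
    subset
    for size in range(len(WEATHER_SLOTS) + 1)
    for subset in combinations(WEATHER_SLOTS, size)
]

# (RMSE, MAPE) per model number, copied from the table.
RESULTS = {
    1: (753.88, 0.0741), 2: (754.76, 0.0730), 3: (726.76, 0.0760),
    4: (770.12, 0.0732), 5: (745.83, 0.0815), 6: (805.87, 0.0818),
    7: (771.99, 0.0846), 8: (748.08, 0.0757), 9: (820.02, 0.0787),
    10: (787.60, 0.0864), 11: (793.76, 0.0760), 12: (829.60, 0.0987),
    13: (855.64, 0.0801), 14: (785.41, 0.1001), 15: (804.82, 0.0734),
    16: (823.14, 0.0849),
}

best_by_rmse = min(RESULTS, key=lambda model: RESULTS[model][0])
best_by_mape = min(RESULTS, key=lambda model: RESULTS[model][1])

print(len(weather_subsets))
print(best_by_rmse, RESULTS[best_by_rmse])
print(best_by_mape, RESULTS[best_by_mape])
```

On the listed figures the two criteria disagree: model 3 has the lowest RMSE (726.76) and model 2 the lowest MAPE (0.0730).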

| Models | Optimized Traditional Input Variables | | | | | Deep LSTM_NN RMSE | Deep LSTM_NN MAPE |
|---|---|---|---|---|---|---|---|
| 1 | • | ✕ | ✕ | ✕ | ✕ | 753.88 | 0.0741 |
| 17 | • | • | ✕ | ✕ | ✕ | 735.79 | 0.0711 |
| 18 | • | ✕ | • | ✕ | ✕ | 718.83 | 0.0752 |
| 19 | • | ✕ | ✕ | • | ✕ | 809.57 | 0.0793 |
| 20 | • | ✕ | ✕ | ✕ | • | 718.83 | 0.0813 |
| 21 | • | • | • | ✕ | ✕ | 782.14 | 0.0806 |
| 22 | • | • | ✕ | • | ✕ | 794.34 | 0.0902 |
| 23 | • | • | ✕ | ✕ | • | 722.79 | 0.0734 |
| 24 | • | ✕ | • | • | ✕ | 823.00 | 0.0780 |
| 25 | • | ✕ | • | ✕ | • | 713.60 | 0.0757 |
| 26 | • | ✕ | ✕ | • | • | 773.20 | 0.0863 |
| 27 | • | • | • | • | ✕ | 831.89 | 0.0873 |
| 28 | • | ✕ | • | • | • | 802.04 | 0.0852 |
| 29 | • | • | ✕ | • | • | 796.50 | 0.0873 |
| 30 | • | • | • | ✕ | • | 810.28 | 0.0798 |
| 31 | • | • | • | • | • | 794.13 | 0.0758 |
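One way to read this table is against model 1, which uses no weather variables. The sketch below lists the models that beat that baseline on RMSE, and those that beat it on both RMSE and MAPE. The variable names are hypothetical, and the choice of model 1 as the comparison point is an assumption of this illustration.

```python
# Baseline: model 1 (optimized traditional input variables only).
BASELINE_RMSE, BASELINE_MAPE = 753.88, 0.0741

# (RMSE, MAPE) per model number, copied from the table.
WEATHER_RESULTS = {
    17: (735.79, 0.0711), 18: (718.83, 0.0752), 19: (809.57, 0.0793),
    20: (718.83, 0.0813), 21: (782.14, 0.0806), 22: (794.34, 0.0902),
    23: (722.79, 0.0734), 24: (823.00, 0.0780), 25: (713.60, 0.0757),
    26: (773.20, 0.0863), 27: (831.89, 0.0873), 28: (802.04, 0.0852),
    29: (796.50, 0.0873), 30: (810.28, 0.0798), 31: (794.13, 0.0758),
}

# Models with a lower RMSE than the baseline.
better_rmse = sorted(
    model for model, (rmse, _) in WEATHER_RESULTS.items()
    if rmse < BASELINE_RMSE
)

# Models that improve on the baseline in both criteria.
better_both = sorted(
    model for model, (rmse, mape) in WEATHER_RESULTS.items()
    if rmse < BASELINE_RMSE and mape < BASELINE_MAPE
)

print(better_rmse)
print(better_both)
```

On the listed figures, models 17, 18, 20, 23 and 25 lower the RMSE, but only models 17 and 23 improve on model 1 in both criteria.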

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, L.; Chen, R.-C.; Zhu, S. Impacts of Weather on Short-Term Metro Passenger Flow Forecasting Using a Deep LSTM Neural Network. Appl. Sci. 2020, 10, 2962. https://doi.org/10.3390/app10082962
