Predicting Reputation in the Sharing Economy with Twitter Social Data

Abstract

1. Introduction

- RQ1. Can social digital footprints be used for predicting bad user behavior on sharing economy sites?

- RQ2. What are the most relevant social features for predicting reputation in the Sharing Economy?

2. Background

2.1. Trust and Reputation Notions and Computational Models

2.2. Trust and Reputation in the Sharing Economy

2.3. Characterizing and Interlinking User Profiles in Social Networks

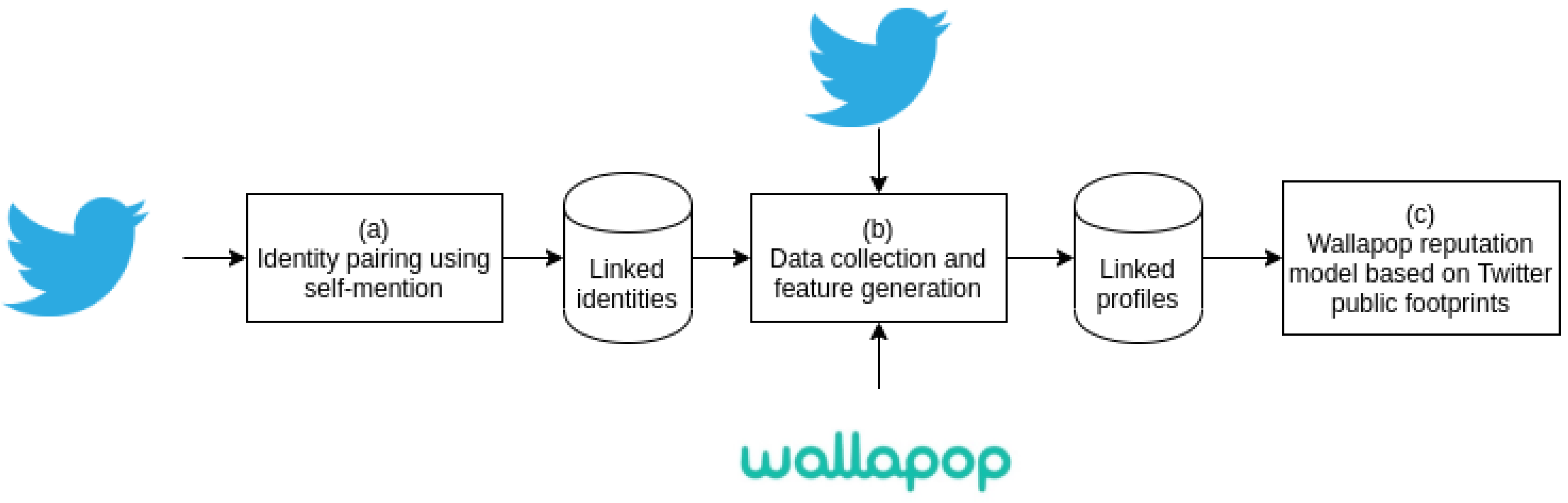

3. Case Study and Methodology

3.1. Identity Pairing

- Contain a link to a Wallapop product that we can use to reach the Wallapop profile of the seller.

- Tweets similar to the default tweet message, including keywords as selling or hashtags as #wallapop.

3.2. Data Collection and Feature Generation

3.2.1. Data Collection

- users/show: profile characteristics as name, picture, or description.

- statuses/user_timeline: list of tweets posted by the user.

- followers/list: list of followers.

- friends/list: list of friends.

- Search: a list of items available near a given location.

- Item: description of an item (including price and seller profile).

- User: user public profile, including data such as the number of reviews and verifications.

- User reviews: full set of reviews given or received by a user.

3.2.2. Feature Generation

- Profile: we selected the account creation date (in seconds since epoch) based on the hypothesis that users with active accounts for a long time are likely to be more trustable [82].

- Behavior: based on previous research [83,84], we have selected activity metrics: most frequent tweeting hours, tweets count, number of tweets marked as favorites by the user, and the tweet average length. Other authors [85,86] use other network metrics such as centrality metrics since they analyze trust networks. We have not used them since our dataset is very sparse, based on the followed data collection strategy.

- Linguistic: the average count of bad words by tweet has been calculated using a publicly available list (https://github.com/LDNOOBW/List-of-Dirty-Naughty-Obscene-and-Otherwise-Bad-Words) of known bad words in Spanish and English (the two majority languages in our dataset). Then, we checked the presence of each tweet word in this list.

- Social: the Twitter network is unweighted and directed, with edges formed through the following action. Thus, we can analyze these edges separating them between the inner (followers) and outer edges (friends). The extracted features include features such as the count of friends and followers.

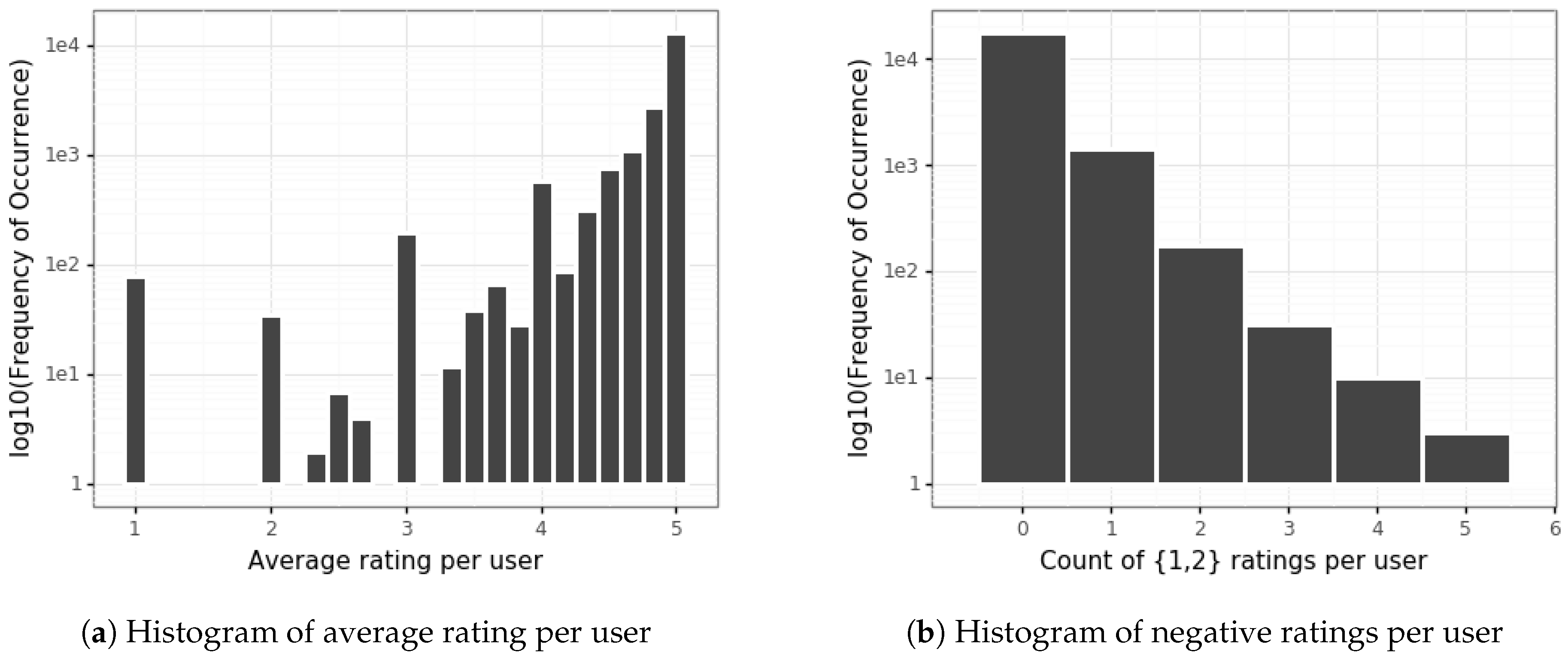

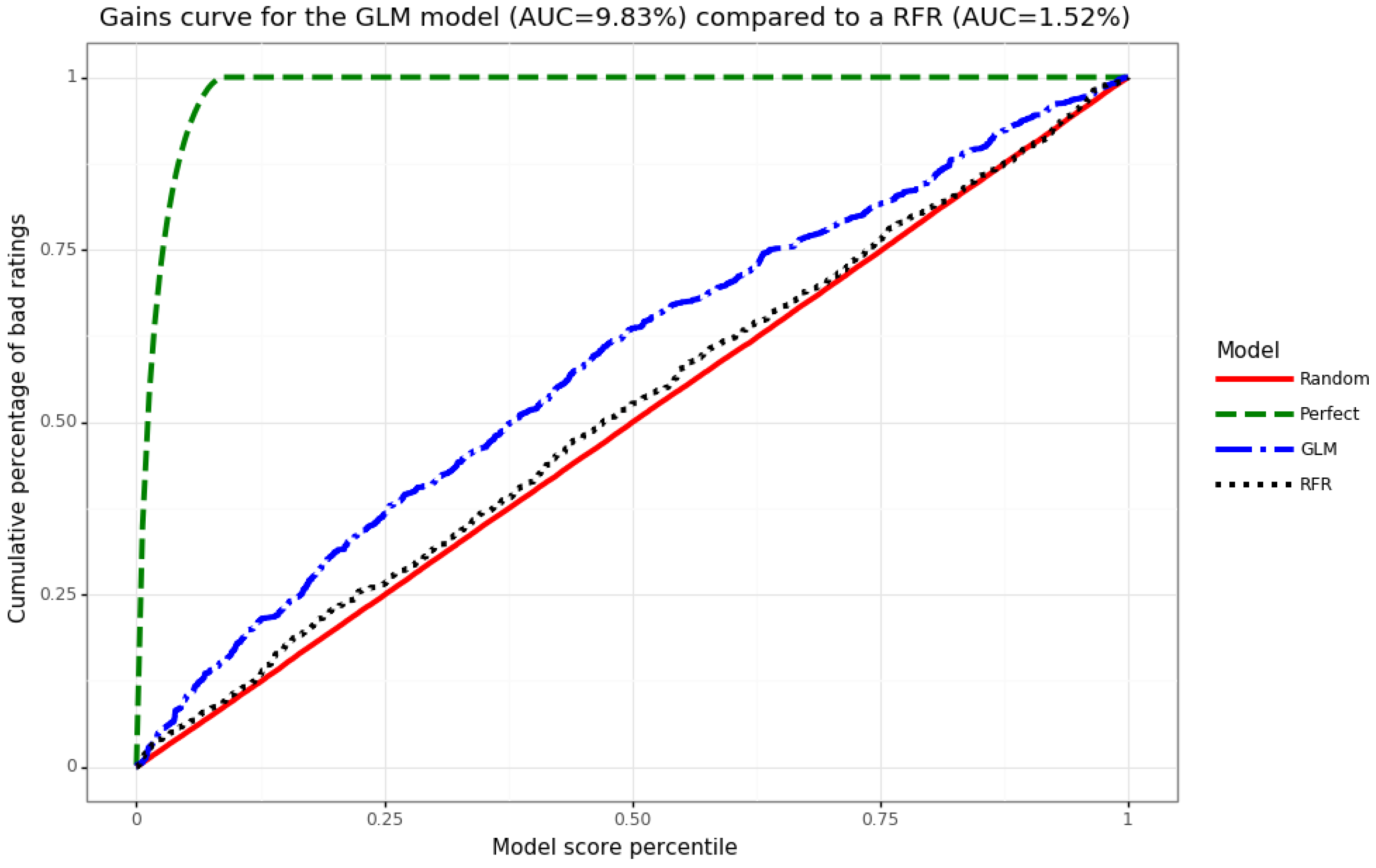

4. Prediction Model

5. Conclusions and Future Works

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| API | Application Programming Interface |

| AUC | Area Under the Curve |

| C2C | Consumer to Consumer |

| FOAF | Friend-Of-A-Friend |

| ICT | Information and Communications technology |

| GLM | Generalized Linear Model |

| OSN | Online Social Network |

| P2P | Peer to Peer |

| RFR | Random Forest Regressor |

| SVM | Support Vector Machine |

References

- Qualman, E. Socialnomics: How Social Media Transforms the Way We Live and Do Business; John Wiley & Sons: Hoboken, NJ, USA, 2010. [Google Scholar]

- Statista. Social Media User Generated Content. 2018. Available online: https://www.statista.com/statistics/253577/number-of-monthly-active-instagram-users/ (accessed on 16 April 2020).

- Zhang, D.; Guo, B.; Li, B.; Yu, Z. Extracting social and community intelligence from digital footprints: An emerging research area. In Proceedings of the International Conference on Ubiquitous Intelligence and Computing; Springer: Berlin/Heidelberg, Germany, 2010; pp. 4–18. [Google Scholar]

- Muhammad, S.S.; Dey, B.L.; Weerakkody, V. Analysis of factors that influence customers’ willingness to leave big data digital footprints on social media: A systematic review of literature. Inf. Syst. Front. 2018, 20, 559–576. [Google Scholar] [CrossRef]

- Sobolevsky, S.; Sitko, I.; Grauwin, S.; Combes, R.T.D.; Hawelka, B.; Arias, J.M.; Ratti, C. Mining urban performance: Scale-independent classification of cities based on individual economic transactions. arXiv 2014, arXiv:1405.4301. [Google Scholar]

- Psomakelis, E.; Aisopos, F.; Litke, A.; Tserpes, K.; Kardara, M.; Campo, P.M. Big IoT and social networking data for smart cities: Algorithmic improvements on Big Data Analysis in the context of RADICAL city applications. arXiv 2016, arXiv:1607.00509. [Google Scholar]

- Malhotra, A.; Totti, L.; Meira, W., Jr.; Kumaraguru, P.; Almeida, V. Studying user footprints in different online social networks. In Proceedings of the 2012 IEEE Computer Society International Conference on Advances in Social Networks Analysis and Mining (ASONAM 2012), Istanbul, Turkey, 26–29 August 2012; pp. 1065–1070. [Google Scholar]

- Vosecky, J.; Hong, D.; Shen, V.Y. User identification across multiple social networks. In Proceedings of the IEEE 2009 1st International Conference on Networked Digital Technologies, NDT 2009, Ostrava, Czech Republic, 28–31 July 2009; pp. 360–365. [Google Scholar]

- Li, Y.; Zhang, Z.; Peng, Y.; Yin, H.; Xu, Q. Matching user accounts based on user generated content across social networks. Future Gener. Comput. Syst. 2018, 83, 104–115. [Google Scholar] [CrossRef]

- Möhlmann, M. Collaborative consumption: Determinants of satisfaction and the likelihood of using a sharing economy option again. J. Consum. Behav. 2015, 14, 193–207. [Google Scholar] [CrossRef]

- Cusumano, M.A. How Traditional Firms Must Compete in the Sharing Economy. Commun. ACM 2014, 58, 32–34. [Google Scholar] [CrossRef]

- Belk, R. You are what you can access: Sharing and collaborative consumption online. J. Bus. Res. 2014, 67, 1595–1600. [Google Scholar] [CrossRef]

- Walsh, G.; Bartikowski, B.; Beatty, S.E. Impact of customer-based corporate reputation on non-monetary and monetary outcomes: The roles of commitment and service context risk. Br. J. Manag. 2014, 25, 166–185. [Google Scholar] [CrossRef]

- Jøsang, A. Robustness of trust and reputation systems: Does it matter? In IFIP Advances in Information and Communication Technology; Springer: Berlin/Heidelberg, Germany, 2012; Volume 374, pp. 253–262. [Google Scholar]

- Zervas, G.; Proserpio, D.; Byers, J. A First, Look at Online Reputation on Airbnb, Where Every Stay Is Above Average. 2015. Available online: http://dx.doi.org/10.2139/ssrn.2554500 (accessed on 3 April 2020).

- Fei, G.; Li, H.; Liu, B. Opinion Spam Detection in Social Networks. In Sentiment Analysis in Social Networks; Pozzi, F., Fersini, E., Messina, E., Liu, B., Eds.; Morgan Kauffman: Burlington, MA, USA, 2017; Chapter 9; pp. 141–156. [Google Scholar]

- Rousseau, D.M.; Sitkin, S.B.; Burt, R.S.; Camerer, C. Not so different after all: A cross-discipline view of trust. Acad. Manag. Rev. 1998, 23, 393–404. [Google Scholar] [CrossRef]

- Zucker, L.G. Production of trust: Institutional sources of economic structure, 1840–1920. Res. Organ. Behav. 1986, 8, 53–111. [Google Scholar]

- Rotter, J.B. A new scale for the measurement of interpersonal trust 1. J. Personal. 1967, 35, 651–665. [Google Scholar] [CrossRef] [PubMed]

- Corsín Jiménez, A. Trust in anthropology. Anthropol. Theory 2011, 11, 177–196. [Google Scholar] [CrossRef]

- Levi, M.; Stoker, L. Political trust and trustworthiness. Annu. Rev. Political Sci. 2000, 3, 475–507. [Google Scholar] [CrossRef]

- Faulkner, P.; Simpson, T. The Philosophy of Trust; Oxford University Press: Oxford, UK, 2017. [Google Scholar]

- Dormandy, K. Trust in Epistemology; Routledge: Abingdon, UK, 2019. [Google Scholar]

- Mindus, P.; Gkouvas, T. Trust in Law. In Routledge Handbook of Trust and Philosophy; Simon, J., Ed.; Routledge: Abingdon, UK, 2020. [Google Scholar]

- Williamson, O.E. Calculativeness, trust, and economic organization. J. Law Econ. 1993, 36, 453–486. [Google Scholar] [CrossRef]

- Suryanarayana, G.; Taylor, R.N. A Survey of Trust Management and Resource Discovery Technologies in Peer-to-Peer Applications; Technical Report UCI-ISR-04-06; Institute for Software Research, University of California: Irvine, CA, USA, 2004. [Google Scholar]

- Sabater, J.; Sierra, C. Review on computational trust and reputation models. Artif. Intell. Rev. 2005, 24, 33–60. [Google Scholar] [CrossRef]

- Ruohomaa, S.; Kutvonen, L. Trust management survey. In International Conference on Trust Management; Springer: Berlin/Heidelberg, Germany, 2005; pp. 77–92. [Google Scholar]

- Artz, D.; Gil, Y. A survey of trust in computer science and the semantic web. J. Web Semant. 2007, 5, 58–71. [Google Scholar] [CrossRef]

- Ahmad, M.; Salam, A.; Wahid, I. A survey on Trust and Reputation-Based Clustering Algorithms in Mobile Ad-hoc Networks. J. Inf. Commun. Technol. Robot. Appl. 2018, 9, 59–72. [Google Scholar]

- Pinyol, I.; Sabater-Mir, J. Computational trust and reputation models for open multi-agent systems: A review. Artif. Intell. Rev. 2013, 40, 1–25. [Google Scholar] [CrossRef]

- Jøsang, A.; Ismail, R.; Boyd, C. A survey of trust and reputation systems for online service provision. Decis. Support Syst. 2007, 43, 618–644. [Google Scholar] [CrossRef]

- Momani, M.; Challa, S. Survey of trust models in different network domains. arXiv 2010, arXiv:1010.0168. [Google Scholar] [CrossRef]

- Beatty, P.; Reay, I.; Dick, S.; Miller, J. Consumer trust in e-commerce web sites: A meta-study. ACM Comput. Surv. CSUR 2011, 43, 1–46. [Google Scholar] [CrossRef]

- Sherchan, W.; Nepal, S.; Paris, C. A survey of trust in social networks. ACM Comput. Surv. CSUR 2013, 45, 1–33. [Google Scholar] [CrossRef]

- Rahimi, H.; Bekkali, H.E. State of the art of Trust and Reputation Systems in E-Commerce Context. arXiv 2017, arXiv:1710.10061. [Google Scholar]

- Sztompka, P. Trust: A Sociological Theory; Cambridge University Press: Cambridge, UK, 1999. [Google Scholar]

- Yamagishi, T. Trust as a form of social intelligence. In Trust in Society; Cook, K., Ed.; Russell Sage Foundation: New York, NY, USA, 2001; Chapter 4; pp. 121–147. [Google Scholar]

- Ert, E.; Fleischer, A.; Magen, N. Trust and reputation in the sharing economy: The role of personal photos in Airbnb. Tour. Manag. 2016, 55, 62–73. [Google Scholar] [CrossRef]

- Tavakolifard, M.; Almeroth, K.C. A taxonomy to express open challenges in trust and reputation systems. J. Commun. 2012, 7, 538–551. [Google Scholar] [CrossRef][Green Version]

- Golbeck, J. Trust on the world wide web: A survey. Found. Trends Web Sci. 2008, 1, 131–197. [Google Scholar] [CrossRef]

- Ramchurn, S.D.; Huynh, D.; Jennings, N.R. Trust in multi-agent systems. Knowl. Eng. Rev. 2004, 19, 1–25. [Google Scholar] [CrossRef]

- Bonatti, P.; Duma, C.; Olmedilla, D.; Shahmehri, N. An integration of reputation-based and policy-based trust management. Networks 2007, 2, 10. [Google Scholar]

- Kolar, M.; Fernandez-Gago, C.; Lopez, J. Policy Languages and Their Suitability for Trust Negotiation. In IFIP Annual Conference on Data and Applications Security and Privacy; Springer: Berlin/Heidelberg, Germany, 2018; pp. 69–84. [Google Scholar]

- Paci, F.; Bauer, D.; Bertino, E.; Blough, D.M.; Squicciarini, A.; Gupta, A. Minimal credential disclosure in trust negotiations. Identity Inf. Soc. 2009, 2, 221–239. [Google Scholar] [CrossRef]

- Sharples, M.; Domingue, J. The blockchain and kudos: A distributed system for educational record, reputation and reward. In Proceedings of the European Conference on Technology Enhanced Learning; Springer: Berlin/Heidelberg, Germany, 2016; pp. 490–496. [Google Scholar]

- Veloso, B.; Leal, F.; Malheiro, B.; Moreira, F. Distributed Trust & Reputation Models using Blockchain Technologies for Tourism Crowdsourcing Platforms. Procedia Comput. Sci. 2019, 160, 457–460. [Google Scholar]

- Bellini, E.; Iraqi, Y.; Damiani, E. Blockchain-Based Distributed Trust and Reputation Management Systems: A Survey. IEEE Access 2020, 8, 21127–21151. [Google Scholar] [CrossRef]

- Alahmadi, D.H.; Zeng, X.J. ISTS: Implicit social trust and sentiment based approach to recommender systems. Expert Syst. Appl. 2015, 42, 8840–8849. [Google Scholar] [CrossRef]

- Yan, Z.; Yan, R. Formalizing trust based on usage behaviors for mobile applications. In Proceedings of the International Conference on Autonomic and Trusted Computing; Springer: Berlin/Heidelberg, Germany, 2009; pp. 194–208. [Google Scholar]

- Xiong, L.; Liu, L. A reputation-based trust model for peer-to-peer e-commerce communities. In Proceedings of the IEEE International Conference on E-Commerce (CEC 2003), Newport Beach, CA, USA, 24–27 June 2003; pp. 275–284. [Google Scholar]

- Brickley, D.; Miller, L. FOAF Vocabulary Specification 0.91. 2007. Available online: http://xmlns.com/foaf/spec/20071002.html (accessed on 3 April 2020).

- Golbeck, J.A. Computing and Applying Trust in Web-Based Social Networks. Ph.D. Thesis, University of Maryland, College Park, MD, USA, 2005. [Google Scholar]

- Golbeck, J.; Rothstein, M. Linking Social Networks on the Web with FOAF: A Semantic Web Case Study. In Proceedings of the 23rd AAAI Conference on Artificial Intelligence; AAAI Press: Menlo Park, CA, USA; Chicago, IL, USA, 2008; Volume 8, pp. 1138–1143. [Google Scholar]

- Nepal, S.; Sherchan, W.; Paris, C. Strust: A trust model for social networks. In Proceedings of the 2011 IEEE 10th International Conference on Trust, security and Privacy in Computing and Communications, Changsha, China, 16–18 November 2011; pp. 841–846. [Google Scholar]

- Hang, C.W.; Singh, M.P. Trust-based recommendation based on graph similarity. In Proceedings of the 13th International Workshop on Trust in Agent Societies (TRUST), Toronto, ON, Canada, 10 May 2010; Volume 82. [Google Scholar]

- Liu, S.; Zhang, L.; Yan, Z. Predict pairwise trust based on machine learning in online social networks: A survey. IEEE Access 2018, 6, 51297–51318. [Google Scholar] [CrossRef]

- Tadelis, S. Reputation and Feedback Systems in Online Platform Markets. Annu. Rev. Econ. 2016, 8, 321–340. [Google Scholar] [CrossRef]

- Zloteanu, M.; Harvey, N.; Tuckett, D.; Livan, G. Digital Identity: The Effect of Trust and Reputation Information on User Judgement in the Sharing Economy. 2018. Available online: http://dx.doi.org/10.2139/ssrn.3136514 (accessed on 3 April 2020).

- Slee, T. What’s Yours Is Mine: Against the Sharing Economy; Or Books: New York, NY, USA, 2017. [Google Scholar]

- Mauri, A.G.; Minazzi, R.; Nieto-García, M.; Viglia, G. Humanize your business. The role of personal reputation in the sharing economy. Int. J. Hosp. Manag. 2018, 73, 36–43. [Google Scholar] [CrossRef]

- Ter Huurne, M.; Ronteltap, A.; Guo, C.; Corten, R.; Buskens, V. Reputation effects in socially driven sharing economy transactions. Sustainability 2018, 10, 2674. [Google Scholar] [CrossRef]

- Zhang, J. Trust Transfer in the Sharing Economy-A Survey-Based Approach. J. Manag. Sci. 2018, 3, 1–32. [Google Scholar]

- Lunden, I.; Lomas, N. Wallapop and LetGo, Two Craigslist Rivals, Merge to Take on the U.S. Market, Raise $100M More. 2016. Available online: https://techcrunch.com/2016/05/10/wallapop-and-letgo-two-craigslist-rivals-plan-merger-to-take-on-the-u-s-market/?guccounter=1 (accessed on 16 April 2020).

- Mackin, S. Could Wallapop Be Barcelona’s First Billion Dollar Startup? 2015. Available online: http://www.barcinno.com/could-wallapop-be-barcelonas-first-billion-dollar-startup/ (accessed on 16 April 2020).

- Eagle, N.; Pentland, A.S.; Lazer, D. Inferring friendship network structure by using mobile phone data. Proc. Natl. Acad. Sci. USA 2009, 106, 15274–15278. [Google Scholar] [CrossRef]

- Dong, Y.; Yang, Y.; Tang, J.; Chawla, N.V. Inferring User Demographics and Social Strategies in Mobile Social Networks. In Proceedings of the ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD), New York, NY, USA, 24–27 August 2014; pp. 15–24. Available online: http://keg.cs.tsinghua.edu.cn/jietang/publications/KDD14-Dong-WhoAmI.pdf (accessed on 17 April 2020).

- Yun, G.W.; David, M.; Park, S.; Joa, C.Y.; Labbe, B.; Lim, J.; Lee, S.; Hyun, D. Social media and flu: Media Twitter accounts as agenda setters. Int. J. Med. Inform. 2016, 91, 67–73. [Google Scholar] [CrossRef]

- Sánchez-Rada, J.F.; Torres, M.; Iglesias, C.A.; Maestre, R.; Peinado, E. A Linked Data Approach to Sentiment and Emotion Analysis of Twitter in the Financial Domain. In CEUR Workshop Proceedings Joint Proceedings of the Second International Workshop on Semantic Web Enterprise Adoption and Best Practice and Second International Workshop on Finance and Economics on the Semantic Web Co-located with 11th European Semantic Web Conference, WaSABi-FEOSW@ESWC 2014, Anissaras, Greece, 26 May 2014; CEUR: Aachen, Germany, 2014; Volume 1240, pp. 51–62. [Google Scholar]

- Llorente, A.; Garcia-Herranz, M.; Cebrian, M.; Moro, E. Social Media Fingerprints of Unemployment. PLoS ONE 2015, 10, e0128692. [Google Scholar] [CrossRef]

- Gosling, S.D.; Augustine, A.A.; Vazire, S.; Holtzman, N.; Gaddis, S. Manifestations of personality in online social networks: Self-reported Facebook-related behaviors and observable profile information. Cyberpsychol. Behav. Soc. Netw. 2011, 14, 483–488. [Google Scholar] [CrossRef]

- Jurgens, D. That’s What Friends Are For: Inferring Location in Online Social Media Platforms Based on Social Relationships. In Proceedings of the 7th International AAAI Conference on ICWSM ’13 Weblogs and Social Media, Cambridge, MA, USA, 8–11 July 2013; pp. 273–282. [Google Scholar]

- Mislove, A.; Lehmann, S.; Ahn, Y.Y.; Onnela, J.P.; Rosenquist, J.N. Understanding the Demographics of Twitter Users. In Proceedings of the Fifth International AAAI Conference on Weblogs and Social Media, Barcelona, Spain, 17–21 July 2011; pp. 554–557. [Google Scholar]

- Lindamood, J.; Heatherly, R.; Kantarcioglu, M.; Thuraisingham, B. Inferring private information using social network data. In Proceedings of the 18th International Conference on World Wide Web WWW 09, Madrid, Spain, 20–24 April 2009; Volume 10, p. 1145. [Google Scholar]

- Jain, P.; Kumaraguru, P.; Joshi, A. @i seek ’fb. me’: Identifying users across multiple online social networks. In Proceedings of the Second International Workshop on Web of Linked Entities (WoLE) held in conjunction with the 22th International World Wide Web Conference, Rio de Janeiro, Brazil, 13 May 2013; pp. 1259–1268. [Google Scholar]

- Raad, E.; Chbeir, R.; Dipanda, A. User Profile Matching in Social Networks. In Proceedings of the 2010 13th International Conference on Network-Based Information Systems, Takayama, Japan, 14–16 September 2010; NBIS’10. IEEE Computer Society: Washington, DC, USA, 2010; pp. 297–304. [Google Scholar]

- Bennacer, N.; Jipmo, C.N.; Penta, A.; Quercini, G. Matching User Profiles Across Social Networks. In International Conference on Advanced Information Systems Engineering; Springer: Berlin/Heidelberg, Germany, 2014; pp. 424–438. [Google Scholar]

- Zhang, Y.; Tang, J.; Yang, Z.; Pei, J.; Yu, P.S. COSNET: Connecting Heterogeneous Social Networks with Local and Global Consistency. In Proceedings of the 21th ACM SIGKDD International Conference on KDD ’15 Knowledge Discovery and Data Mining, Sydney, Australia, 10–13 August 2015; ACM: New York, NY, USA, 2015; pp. 1485–1494. [Google Scholar]

- Correa, D.; Sureka, A.; Sethi, R. WhACKY!-What anyone could know about you from Twitter. In Proceedings of the 2012 Tenth Annual International Conference on IEEE Privacy, Security and Trust (PST), Paris, France, 16–18 July 2012; pp. 43–50. [Google Scholar]

- Pennacchiotti, M.; Popescu, A.M. A Machine Learning Approach to Twitter User Classification. ICWSM 2011, 11, 281–288. [Google Scholar]

- Zheng, R.; Li, J.; Chen, H.; Huang, Z. A Framework for Authorship Identification of Online Messages: Writing-style Features and Classification Techniques. J. Am. Soc. Inf. Sci. Technol. 2006, 57, 378–393. [Google Scholar] [CrossRef]

- Almendra, V. Finding the needle: A risk-based ranking of product listings at online auction sites for non-delivery fraud prediction. Expert Syst. Appl. 2013, 40, 4805–4811. [Google Scholar] [CrossRef]

- Klassen, M. Twitter data preprocessing for spam detection. In Proceedings of the Future Computing 2013, The Fifth International Conference on Future Computational Technologies and Applications, Valencia, Spain, 27 May–1 June 2013; pp. 56–61. [Google Scholar]

- Ahmed, C.; Elkorany, A. Enhancing link prediction in Twitter using semantic user attributes. In Proceedings of the 2015 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining 2015, Paris, France, 25 August 2015; pp. 1155–1161. [Google Scholar]

- Ceolin, D.; Potenza, S. Social network analysis for trust prediction. In IFIP International Conference on Trust Management; Springer: Berlin/Heidelberg, Germany, 2017; pp. 49–56. [Google Scholar]

- Meo, P.D.; Musial-Gabrys, K.; Rosaci, D.; Sarnè, G.M.; Aroyo, L. Using centrality measures to predict helpfulness-based reputation in trust networks. ACM Trans. Internet Technol. TOIT 2017, 17, 1–20. [Google Scholar] [CrossRef]

- Ahn, S. An Interpretation of the Mixture of Poisson Distributions with a Gamma Distributed Parameter. In Proceedings of the Society of Korea Industrial and System Engineering (SKISE) Spring Conference, Dongan-gu, Korea, 16 May 2003; pp. 275–278. [Google Scholar]

- Lemaire, J. Automobile Insurance: Actuarial Models; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2013; Volume 4. [Google Scholar]

- Goldburd, M.; Khare, A.; Tevet, D. Generalized Linear Models for Insurance Rating, 2nd ed.; Technical Report 5; Casualty Actuarial Society: Arlington, VA, USA, 2016. [Google Scholar]

- Haberman, S.; Renshaw, A.E. Generalized linear models and actuarial science. J. R. Stat. Soc. Ser. D Stat. 1996, 45, 407–436. [Google Scholar] [CrossRef]

- Evans, J.D. Straightforward Statistics for the Behavioral Sciences; Brooks/Cole: Andover, UK, 1996. [Google Scholar]

- Barlett, C.P.; Anderson, C.A. Direct and indirect relations between the Big 5 personality traits and aggressive and violent behavior. Personal. Individ. Differ. 2012, 52, 870–875. [Google Scholar] [CrossRef]

- Berry, J.; Hemming, G.; Matov, G.; Morris, O. Report of the model validation and monitoring in personal lines pricing working party. In Proceedings of General Insurance Convention (GIRO 2009); Institute and Faculty of Actuaries: London, UK, 2009; pp. 1–54. [Google Scholar]

- Fu, L.; Wang, H. Estimating insurance attrition using survival analysis. Variance. Adv. Sci. Risk 2014, 8, 55–82. [Google Scholar]

- Ling, C.X.; Li, C. Data Mining for Direct Marketing: Problems and Solutions. In Proceedings of the Fourth International Conference on Knowledge Discovery and Data Mining (KDD’98), New York, NY, USA, 27–31 August 1998; Volume 98, pp. 73–79. [Google Scholar]

- Mittal, S.; Gupta, P.; Jain, K. Neural network credit scoring model for micro enterprise financing in India. Qual. Res. Financ. Mark. 2011, 3, 224–242. [Google Scholar] [CrossRef]

- Statista. Number of Facebook Users in India from 2015 to 2018 with a Forecast until 2023. 2018. Available online: https://www.statista.com/statistics/304827/number-of-facebook-users-in-india/ (accessed on 17 April 2020).

- Kannadhasan, M. Retail investors’ financial risk tolerance and their risk-taking behavior: The role of demographics as differentiating and classifying factors. IIMB Manag. Rev. 2015, 27, 175–184. [Google Scholar] [CrossRef]

| Type | Features |

|---|---|

| Profile | Account creation date |

| Behavior | Tweets count, Favourites count, Most frequent tweeting hours, Tweets average length |

| Linguistic | Bad words ratio |

| Social | Times added to list, Avg. retweeted per tweet, Avg. favourited per tweet, Followers count, Friends count, Followers’ followers avg. count, Followers’ friends avg. count, Followers’ tweets avg. count, Friends’ friends avg. count, Friends’ tweets avg. count |

| Mean | std | Min | Max | |

|---|---|---|---|---|

| Account creation date | - | - | 2006-12-26 | 2017-03-14 |

| Tweets count | 7086.63 | 16,277.20 | 0 | 556,756 |

| Bad words ratio | 0.02 | 0.02 | 0 | 0.46 |

| Times added to list | 9.76 | 42.45 | 0 | 2735 |

| Favourites count | 3066.26 | 11,826.47 | 0 | 399,054 |

| Avg. retweeted per tweet | 1397.67 | 5039.60 | 0 | 365,745.75 |

| Avg. favourited per tweet | 0.49 | 9.77 | 0 | 906.60 |

| Most frequent tweeting hours | 15.24 | 6.83 | 0 | 23 |

| Followers count | 556.66 | 5519.25 | 0 | 458,789 |

| Friends count | 476.43 | 2035.52 | 0 | 258,934 |

| Tweets average length | 88.96 | 26.75 | 0 | 179.53 |

| Followers’ followers avg. count | 12,194.23 | 30,909.87 | 0 | 1,990,020 |

| Followers’ friends avg.count | 6945.60 | 12,969.62 | 0 | 441345.57 |

| Followers’ tweets avg. count | 7120.12 | 8148.27 | 0 | 327347.40 |

| Friends’ followers avg. count | 941,730.90 | 1,921,375.52 | 0 | 105,297,483 |

| Friends’ friends avg.count | 4323.63 | 8205.70 | 0 | 477,768.33 |

| Friends’ tweets avg. count | 17,643.89 | 12,186.34 | 0 | 391,207 |

| Coef | Std Err | P > |z| | |

|---|---|---|---|

| Intercept | −7.8458 | 0.657 | <0.001 |

| Account creation date | 2.957 × 10 | 4.77 × 10 | <0.001 |

| Tweets count | 1.867 × 10 | 2.2 × 10 | 0.932 |

| Bad words ratio | 2.8823 | 1.246 | 0.021 |

| Times added to list | −0.0013 | 0.001 | 0.350 |

| Favourites count | 8.7 × 10 | 2.59 × 10 | 0.737 |

| Avg. retweeted per tweet | −4.387 × 10 | 7.57 × 10 | 0.562 |

| Avg. favourited per tweet | 0.0037 | 0.002 | 0.056 |

| Most frequent tweeting hours | 0.0052 | 0.005 | 0.259 |

| Followers count | −4.28 × 10 | 3.63e-05 | 0.238 |

| Friends count | 6.774 × 10 | 3.84 × 10 | 0.078 |

| Tweets average length | −0.0078 | 0.001 | <0.001 |

| Followers’ followers avg. count | −1.384 × 10 | 2.21 × 10 | 0.531 |

| Followers’ friends avg. count | 8.631 × 10 | 3.86 × 10 | 0.025 |

| Followers’ tweets avg. count | −4.663 × 10 | 4.21 × 10 | 0.268 |

| Friends’ followers avg. count | 4.773 × 10 | 1.16 × 10 | <0.001 |

| Friends’ friends avg.count | 1.236 × 10 | 3.46 × 10 | 0.721 |

| Friends’ tweets avg. count | 3.512 × 10 | 2.23 × 10 | 0.115 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Prada, A.; Iglesias, C.A. Predicting Reputation in the Sharing Economy with Twitter Social Data. Appl. Sci. 2020, 10, 2881. https://doi.org/10.3390/app10082881

Prada A, Iglesias CA. Predicting Reputation in the Sharing Economy with Twitter Social Data. Applied Sciences. 2020; 10(8):2881. https://doi.org/10.3390/app10082881

Chicago/Turabian StylePrada, Antonio, and Carlos A. Iglesias. 2020. "Predicting Reputation in the Sharing Economy with Twitter Social Data" Applied Sciences 10, no. 8: 2881. https://doi.org/10.3390/app10082881

APA StylePrada, A., & Iglesias, C. A. (2020). Predicting Reputation in the Sharing Economy with Twitter Social Data. Applied Sciences, 10(8), 2881. https://doi.org/10.3390/app10082881