Visual Information Requirements for Remotely Supervised Autonomous Agricultural Machines

Abstract

1. Introduction

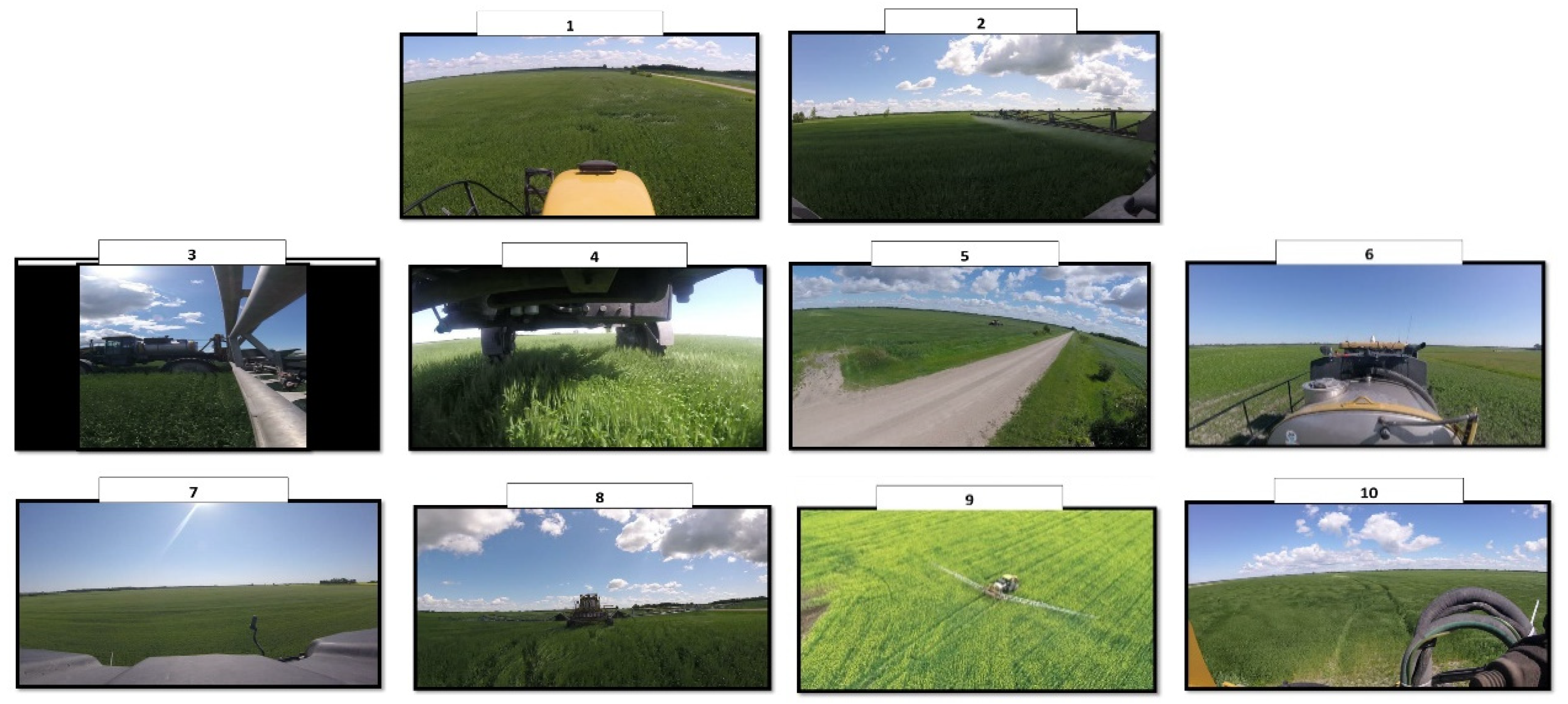

2. Materials and Methods

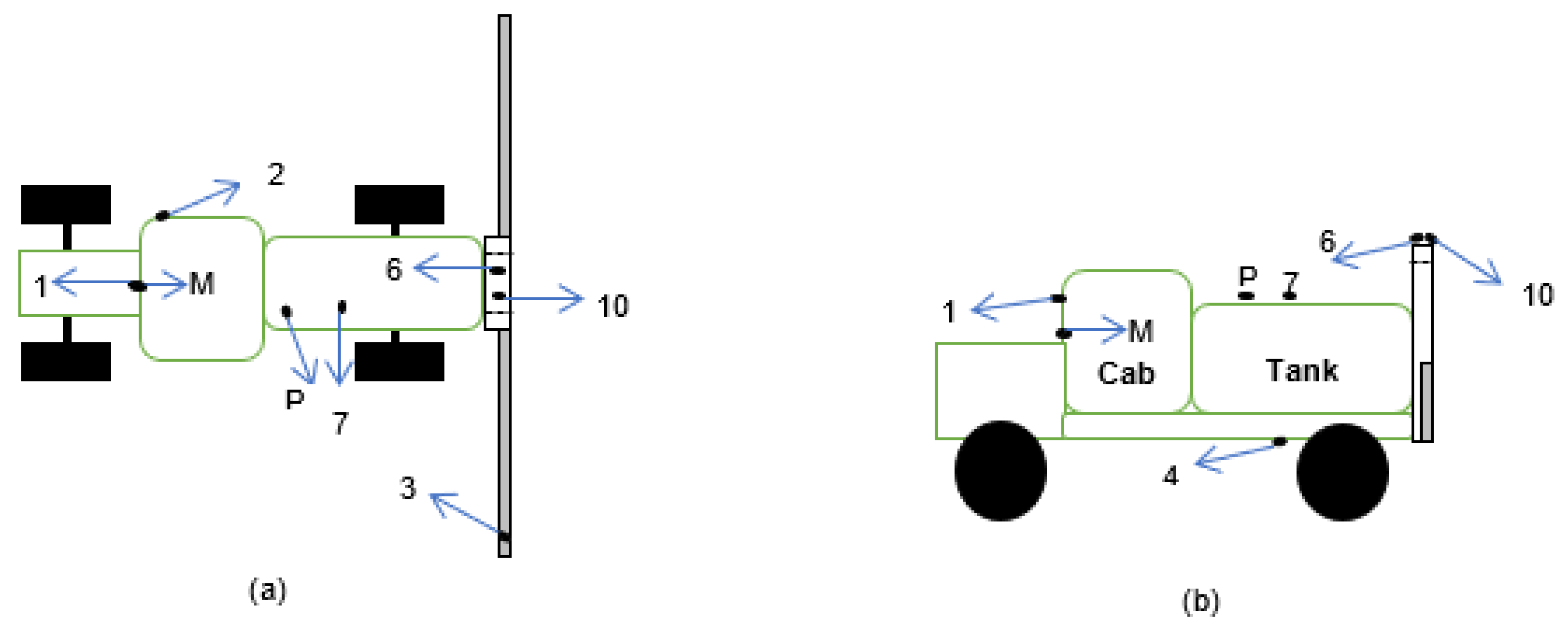

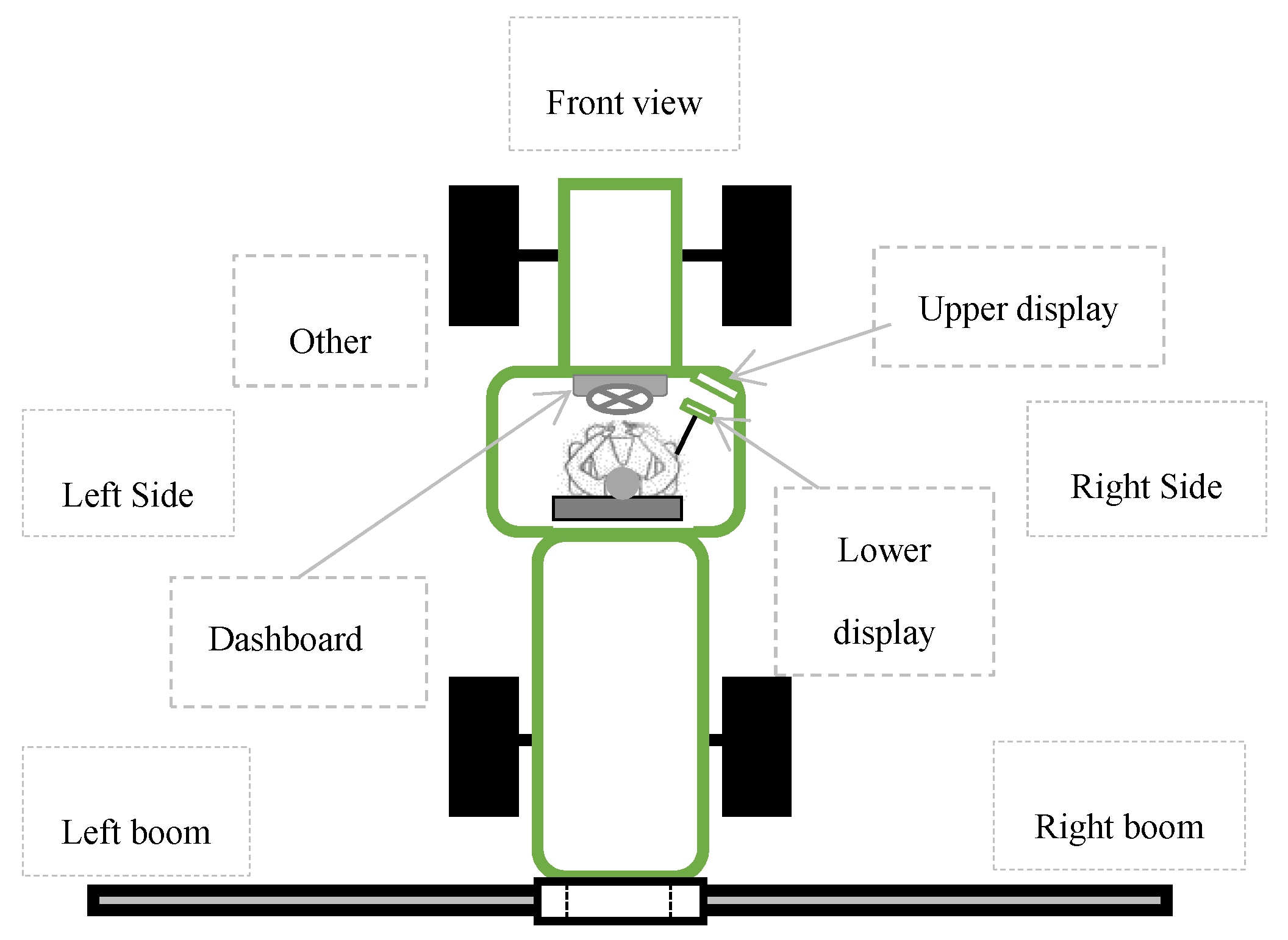

2.1. Identification of Look Zones during Manual Sprayer Operation

2.2. Determination of Relevant Visual Information

3. Results and Discussion

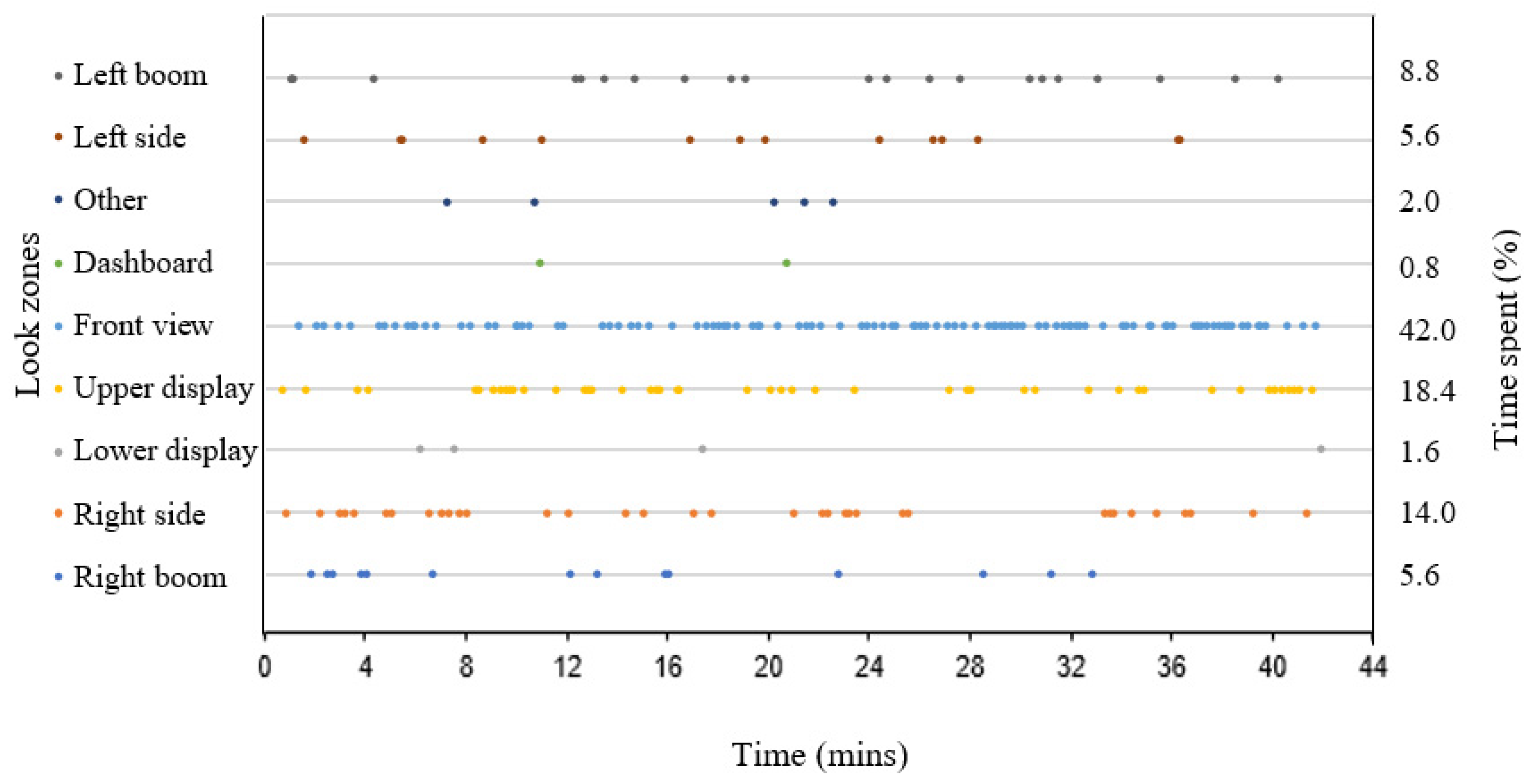

3.1. Identification of Look Zones during Manual Sprayer Operation

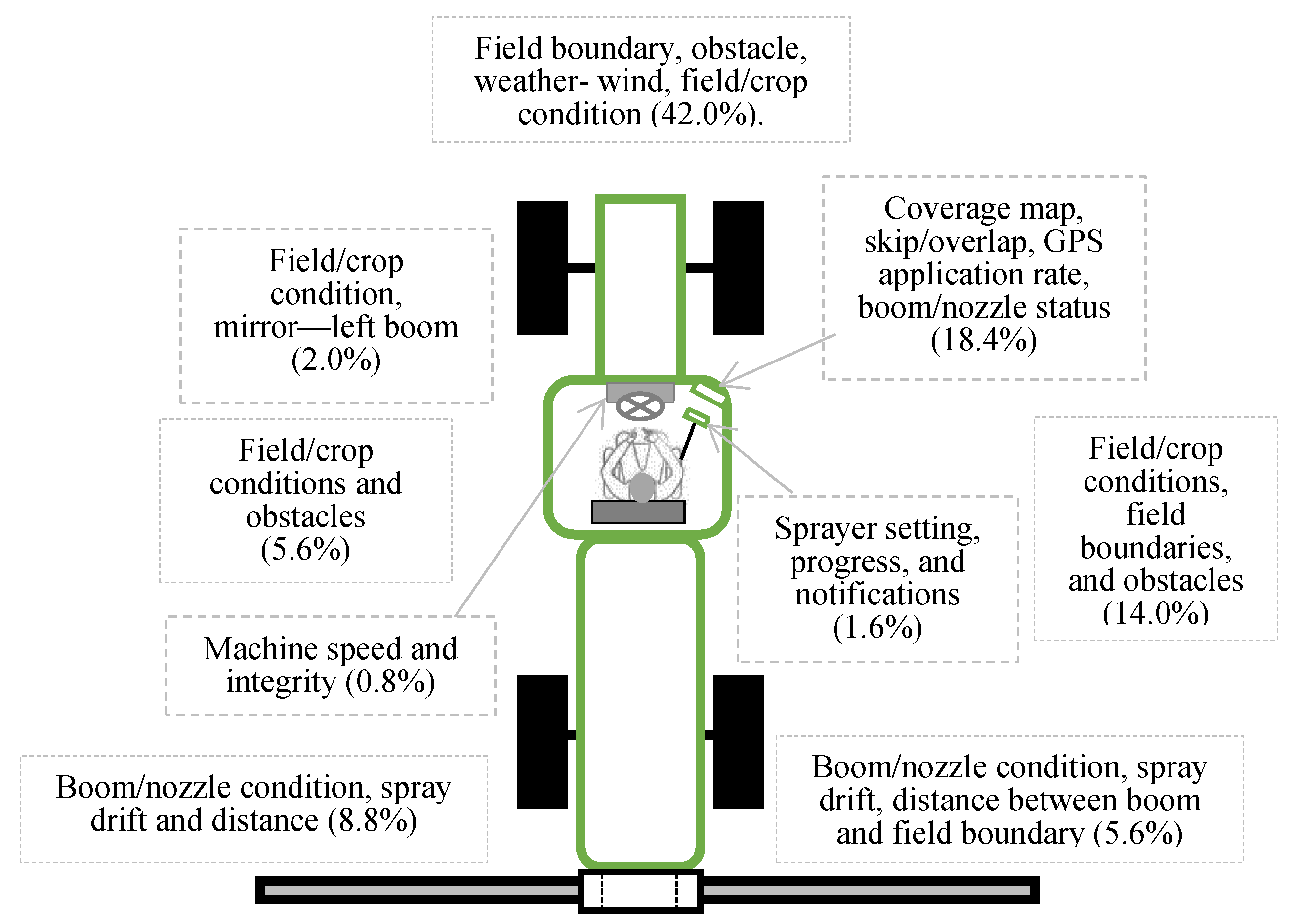

3.2. Determination of Relevant Visual Information

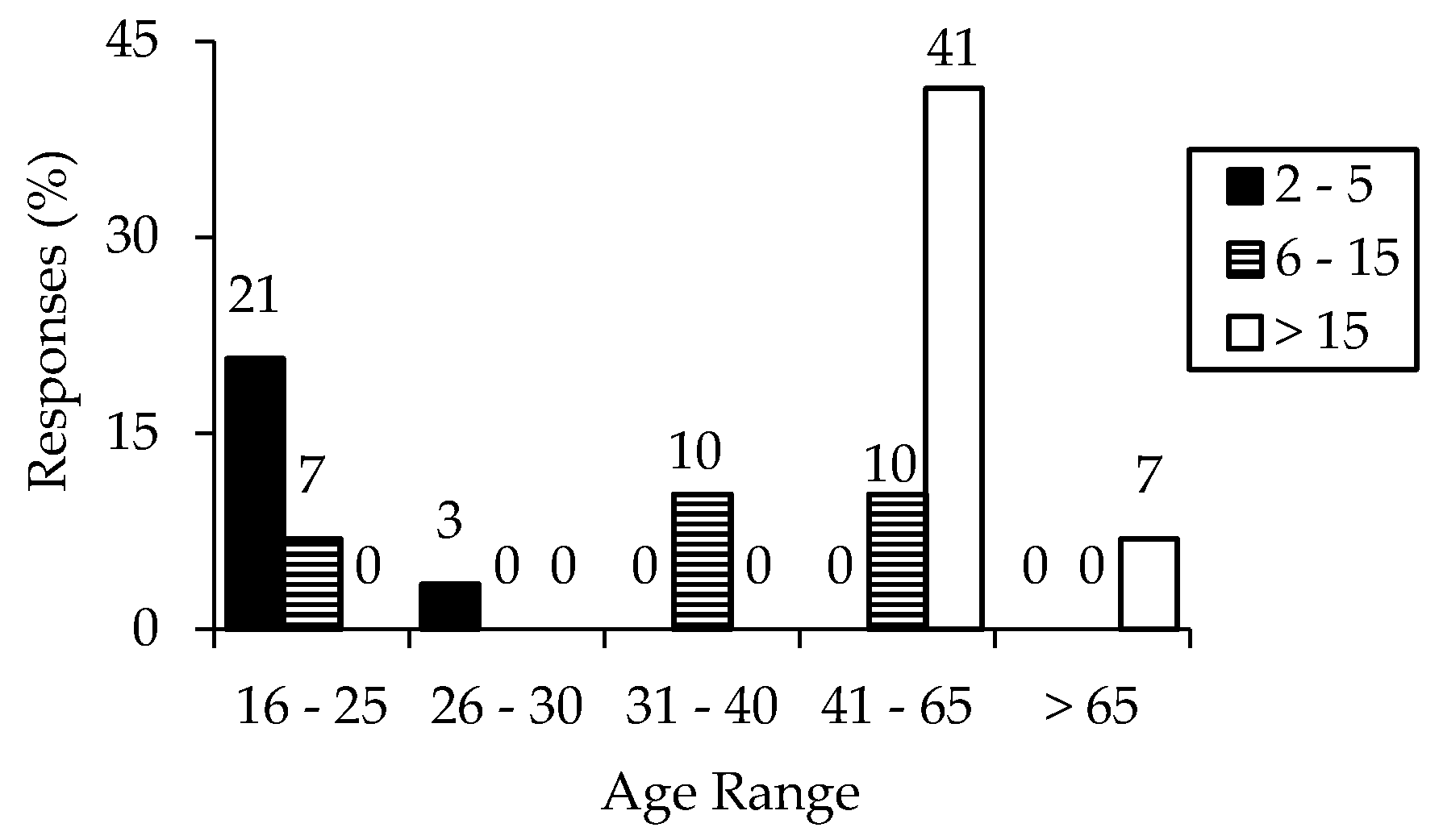

3.2.1. Participant Demographics

3.2.2. Preliminary Observations from the Practice Clip

3.2.3. Information Gained from Test Clips

3.2.4. Effect of Operators’ Experience on Information Gained

3.2.5. Influence of Camera Position

3.2.6. Alternate Camera Placement

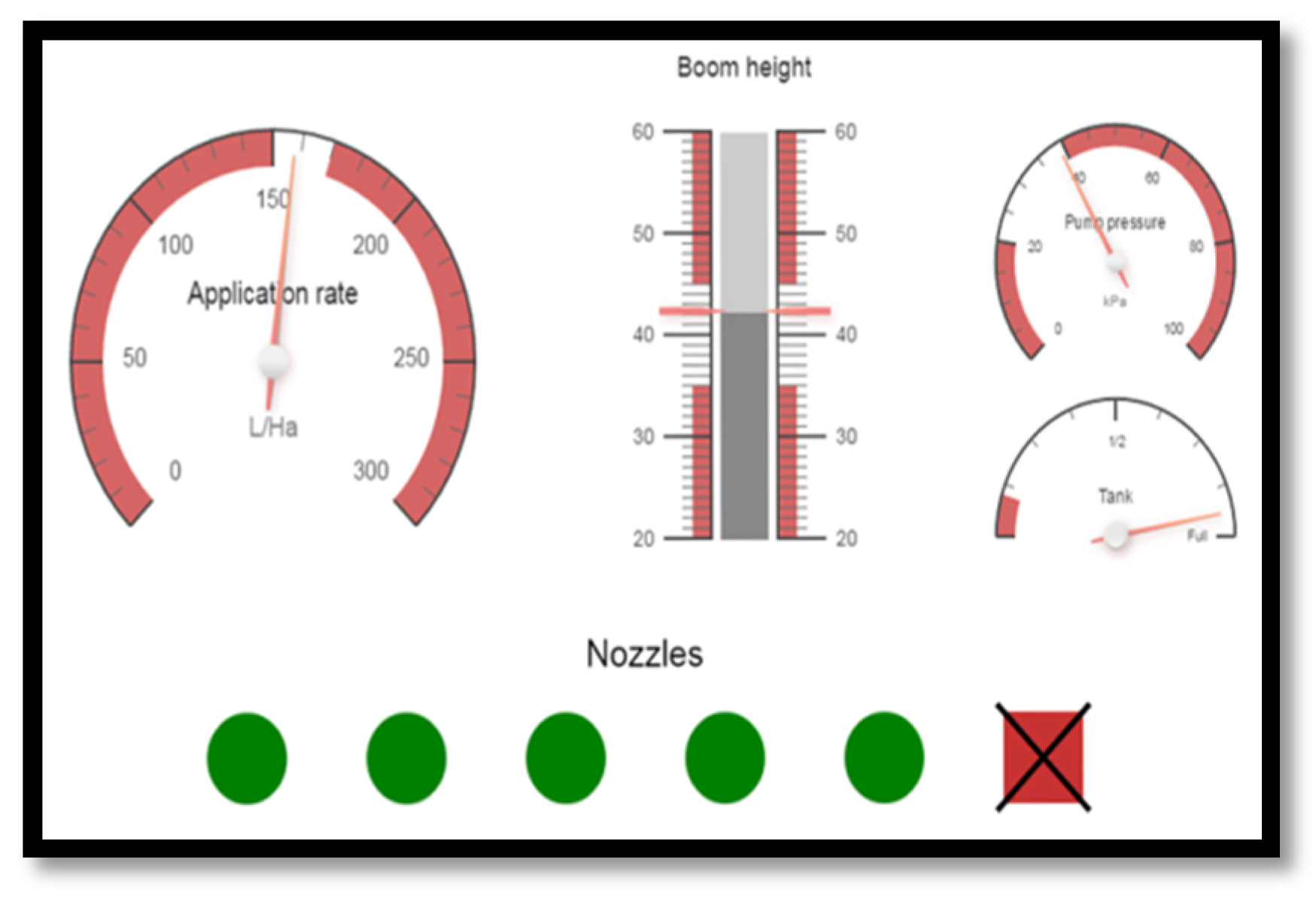

3.2.7. Live Video or Graphical Display of Information

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Video Clip | Clip Description | Information Gained (Multiple Responses Allowed; %) | How Information Gained Can Be Used (Multiple Responses Allowed) | Typically Viewed; % | Usefulness to “No” Participants; % | Level of Importance (LoI) Ranking; % | Replacing Clip with Display (a = Live Video, b = Graphical Display, c = No Substantial Impact; %) |
|---|---|---|---|---|---|---|---|
| 1 | Front view from sprayer cabin (operator’s sitting position). | Too windy to be spraying: 48; Staying on track: 28; Turning at headland: 28; Field condition: 17; Approximate travel speed: 10; Obstacle: 10; Crop condition: 3; No comment: 10 | Increase droplet size; Lower the boom; Avoid obstacle; Control speed at headland; Prevent overlap/miss; Utilize old track | Yes: 97; No: 3 | Yes: 0; No: 100; n = 1 | L1 = 7; L2 = 0; L3 = 14; L4 = 24; L5 = 55 | a = 55; b = 21; c = 24 |
| 2 | Right boom spraying chemical. | No plugged nozzle: 38; Boom height: 28; Section control: 21; How close the boom end is to the field boundary: 21; Spray pattern: 17; Crop condition: 10; Obstacle: 10; Approximate travel speed: 10; Boom stability: 3; Sharp turning: 3; No comment: 3 | Clean plugged nozzles; Set/control boom height; Turn off boom; Avoid obstacle; Avoid spraying another field | Yes: 93; No: 7 | Yes: 100; No: 0; n = 2 | L1 = 3; L2 = 3; L3 = 10; L4 = 28; L5 = 55 | a = 62; b = 17; c = 21 |
| 3 | Left side of sprayer. | No information gained: 48; Machine integrity: 10; Travel speed: 10; Sprayer clearance: 7; No leaks: 7; Boom height: 7; Sprayer response to field topography: 7; Boom is still attached: 3; Crop condition: 3; Boom stability: 3; Field condition: 3; No comment: 7 | Not useful; Stop if a leak is identified; Change travel speed | Yes: 10; No: 90 | Yes: 23; No: 65; Blank: 12; n = 26 | L1 = 54; L2 = 14; L3 = 14; L4 = 14; L5 = 4 | a = 21; b = 10; c = 69 |
| 4 | Underside of sprayer. | No leaks: 31; No information gained: 24; Sprayer clearance: 17; Machine integrity: 17; Travel speed: 10; Crop condition: 10; Wheel alignment with previous path/track: 10; Crop response to tire: 7; Field condition: 3; Sprayer is still moving: 3 | Keep straight; Stop if there is a leak; Keep wheels in the existing sprayer tracks; Not useful; Adjust travel speed; Decide how high to set the center boom | Yes: 3; No: 97 | Yes: 32; No: 64; Blank: 4; n = 28 | L1 = 31; L2 = 34; L3 = 21; L4 = 7; L5 = 7 | a = 41; b = 10; c = 48 |
| 5 | Distant sprayer working in a windy field. | No information gained: 34; Windy: 31; Sprayer is in the right field: 10; Path taken by sprayer: 10; Sprayer is still moving: 7; Spray drift: 3; How close the boom end is to the field boundary: 3; Boom height: 3; Field condition: 3; Size of the field: 3 | Not useful; Possibly stop spraying; Confirm that the proper crop is being sprayed and that the machine is in the correct field | Yes: 7; No: 93 | Yes: 22; No: 74; Blank: 4; n = 27 | L1 = 48; L2 = 31; L3 = 10; L4 = 3; L5 = 7 | a = 28; b = 21; c = 48 |
| 6 | View of spray/rinse tank and field. | No information: 59; Leaks on rinse tank: 17; Weather: 7; Driving straight: 7; Sprayer is still moving: 3; Obstacles: 3; Travel speed: 3; Obstacle: 3; Travel speed: 3; Field condition: 3; Machine integrity: 3; Tank latch is secure: 3 | Assist when passing a low-hanging wire or shed; Manage leaks/open lid | Yes: 0; No: 100 | Yes: 17; No: 79; Blank: 3; n = 29 | L1 = 59; L2 = 21; L3 = 10; L4 = 10; L5 = 0 | a = 28; b = 7; c = 66 |
| 7 | Left side of sprayer showing crop. | No information: 66; Obstacles: 10; Sprayer is still moving: 7; Field condition: 7; Crop condition: 3; Awareness of another vehicle when driving off field: 3; Weather: 3; Side of sprayer: 3; Travel speed: 3; Identify the field: 3; Sprayer location: 3 | Assist when turning onto a roadway; Plan for low spots/ditches; Avoid obstacles | Yes: 28; No: 72 | Yes: 14; No: 81; Blank: 5; n = 21 | L1 = 62; L2 = 24; L3 = 10; L4 = 3; L5 = 0 | a = 34; b = 0; c = 59 |
| 8 | Back of sprayer driving up a field. | No information: 31; Nozzle status: 28; Boom height: 17; Centre boom/nozzle: 10; Spray pattern: 10; Weather (wind): 7; Spray drift: 7; Boom is fully open: 7; Machine integrity: 3; Obstacles: 3; Leaks: 3; Field condition: 3; Travel speed: 3 | Proper boom height adjustment; Adjust travel speed; Reduce time spent checking for plugged nozzles in the center section | Yes: 10; No: 90 | Yes: 50; No: 46; Blank: 4; n = 26 | L1 = 34; L2 = 10; L3 = 14; L4 = 38; L5 = 3 | a = 24; b = 31; c = 41 |
| 9 | Aerial (drone) view of sprayer operating in a canola field. | How close the boom end is to the field boundary: 31; Sprayer’s location: 28; Field condition: 28; Obstacle: 14; Crop condition: 14; Spray pattern: 7; Path followed: 7; Boom is fully open: 7; No information gained: 7; Machine integrity: 3; Travel speed: 3; Section control: 3; Field boundary: 3; Spray drift: 3; Boom height: 3 | Plan routes to avoid hidden obstacles; Adjust to stay within field boundary; Know how much work has been done | Yes: 3; No: 97 | Yes: 82; No: 14; Blank: 4; n = 28 | L1 = 7; L2 = 21; L3 = 41; L4 = 24; L5 = 7 | a = 52; b = 14; c = 31 |
| 10 | Field behind the sprayer while it is moving. | No information gained: 31; Spray drift: 31; Wheel alignment with old track/path: 28; Travel speed: 10; Front and rear wheels aligned properly: 7; Leaks: 7; Weather (wind): 7; Nozzle status based on tramlines: 3; Spray pattern: 3; Sprayer’s location: 3; Object behind sprayer: 3; Crop condition: 3; Field condition: 3 | When backing out; Align wheels properly; Select/change droplet size or spray pressure; Decide whether to continue spraying | Yes: 14; No: 86 | Yes: 48; No: 48; Blank: 4; n = 25 | L1 = 21; L2 = 28; L3 = 21; L4 = 14; L5 = 17 | a = 41; b = 3; c = 52 |


| Camera | Rationale |
|---|---|
| M | Ability to observe the operator’s head and eye movement (from his seated position) during the spraying operation. |
| 1 | To capture the field ahead of the sprayer and other environmental cues. |
| P and 2 | To capture the view of the right and left boom, respectively, as perceived from the typical seated position of an operator in the cab of a self-propelled sprayer. |
| 3, 4, 6, 7, 8, and 10 | To capture regions around the sprayer that are currently not visible to operators (from their seated position). |
| 5 and 9 | To capture off-field (third party) views and assess their usefulness to the remote supervisor of the autonomous sprayer(s). |

| Video Clip | Typically Viewed (%) | High Level of Importance * (%) | Preference: Live Video (%) | Preference: Graphical Display (%) | Preference: No Substantial Impact (%) |
|---|---|---|---|---|---|
| 1 | 97 | 79 | 55 | 21 | 24 |
| 2 | 93 | 83 | 62 | 17 | 21 |
| 3 | 10 | 18 | 21 | 10 | 69 |
| 4 | 3 | 14 | 41 | 10 | 48 |
| 5 | 7 | 10 | 28 | 21 | 48 |
| 6 | 0 | 10 | 28 | 7 | 66 |
| 7 | 28 | 3 | 34 | 0 | 59 |
| 8 | 10 | 41 | 24 | 31 | 41 |
| 9 | 3 | 31 | 52 | 14 | 31 |
| 10 | 14 | 31 | 41 | 3 | 52 |
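The "High Level of Importance" column in the summary table appears to equal the sum of the L4 and L5 percentages reported for each clip in Appendix A. That definition is an inference from the numbers, not something stated in the tables themselves. The following minimal Python sketch checks the inference against the reported values; the names `LOI`, `REPORTED_HIGH`, and `high_importance` are hypothetical and introduced only for illustration.

```python
# Level-of-importance (LoI) percentages per clip, copied from Appendix A
# in the order (L1, L2, L3, L4, L5).
LOI = {
    1: (7, 0, 14, 24, 55),
    2: (3, 3, 10, 28, 55),
    3: (54, 14, 14, 14, 4),
    4: (31, 34, 21, 7, 7),
    5: (48, 31, 10, 3, 7),
    6: (59, 21, 10, 10, 0),
    7: (62, 24, 10, 3, 0),
    8: (34, 10, 14, 38, 3),
    9: (7, 21, 41, 24, 7),
    10: (21, 28, 21, 14, 17),
}

# "High Level of Importance" percentages as reported in the summary table.
REPORTED_HIGH = {1: 79, 2: 83, 3: 18, 4: 14, 5: 10,
                 6: 10, 7: 3, 8: 41, 9: 31, 10: 31}


def high_importance(loi):
    """Assumed definition: percentage of participants who chose L4 or L5."""
    return loi[3] + loi[4]


# Recompute the column and collect any clip where it disagrees with the table.
computed = {clip: high_importance(levels) for clip, levels in LOI.items()}
mismatches = {
    clip: (computed[clip], REPORTED_HIGH[clip])
    for clip in LOI
    if computed[clip] != REPORTED_HIGH[clip]
}

print(mismatches)  # an empty dict means every row is consistent with L4 + L5
```

Under this assumed definition, all ten clips reproduce the reported column, which supports reading the asterisked heading as "ranked L4 or L5".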

| Clip | General Description | < 5 Years Experience | 5–15 Years Experience | > 15 Years Experience |
|---|---|---|---|---|
| 1 | Front view from sprayer cabin. | Turning, speed, track path. | Turning, track path, windy. | Turning, windy, obstacle. |
| 2 | Right boom spraying chemical. | Nozzle status, boom height. | Nozzle status, boom height, distance from field boundary, spray pattern. | Sprayer is operating, crop condition, boom height, obstacles, travel speed, distance from field edge. |
| 3 | Left side of sprayer. | Leak, how deep you can sink, very little. | Topography, leaks, teaching moment, machine integrity, not much. | Boom is still attached, travel speed, boom stability, not much. |
| 4 | Underside of sprayer. | Leaks, crop response, crop height. | Field condition, leaks, machine integrity, not much. | Crop conditions, leaks, crop height, crop damage, travel speed, clearance, nothing much. |
| 5 | Distant sprayer working in a windy field. | Field variability, size of field, boom height, not much. | Wind condition, amount of drift, sprayer location, not much. | Weather, sprayer is in the desired field, windy, sprayer is still moving, not much. |
| 6 | View of spray/rinse tank and field. | Tank is full, sprayer is driving straight, nothing much. | Machine integrity, weather, nothing. | Leaks, field conditions, sprayer is operating, making turns, obstacles, nothing. |
| 7 | Left side of sprayer showing crop. | Side view of sprayer, nothing. | Beneficial for off-field operation, nothing. | Travel speed, crop condition, weather, field conditions, obstacles, nothing. |
| 8 | Back of sprayer driving up a field. | Nozzle status, nothing. | Nozzle status, spray pattern, boom height, spray drift, leaks, nothing. | Sprayer is operating, field and weather conditions, nozzle status, wind, travel speed, spray drift. |
| 9 | Aerial (drone) view of sprayer operating in a canola field. | Field conditions, sprayer’s location, sprayer is applying chemical within boundary. | Distance of boom from field boundary, field condition, sprayer’s location, obstacles. | Sprayer is operating, following field edge, obstacles, field conditions, location of sprayer, boom is fully open, travel speed. |
| 10 | Field behind the sprayer while it is moving. | Spray drift, crop damage due to tire. | Spray drift, travel speed, field condition, crop condition, staying on track, wheel alignment, not much. | Spray drift, weather, spray pattern, travel speed, track alignment with previous path, front and rear wheel alignment. |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Edet, U.; Mann, D. Visual Information Requirements for Remotely Supervised Autonomous Agricultural Machines. Appl. Sci. 2020, 10, 2794. https://doi.org/10.3390/app10082794
