Research on 2D Laser Automatic Navigation Control for Standardized Orchard

Abstract

1. Introduction

2. Materials and Methods

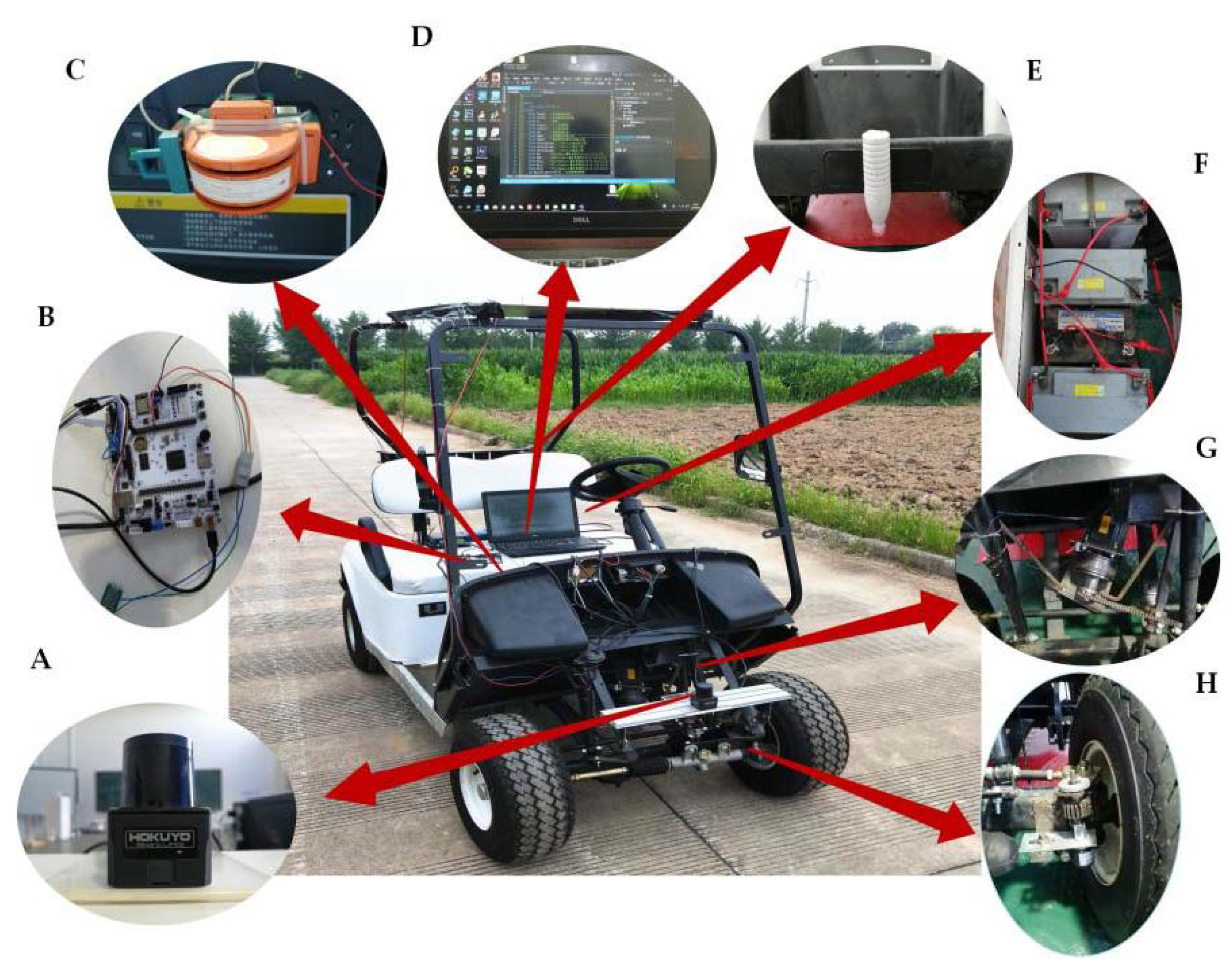

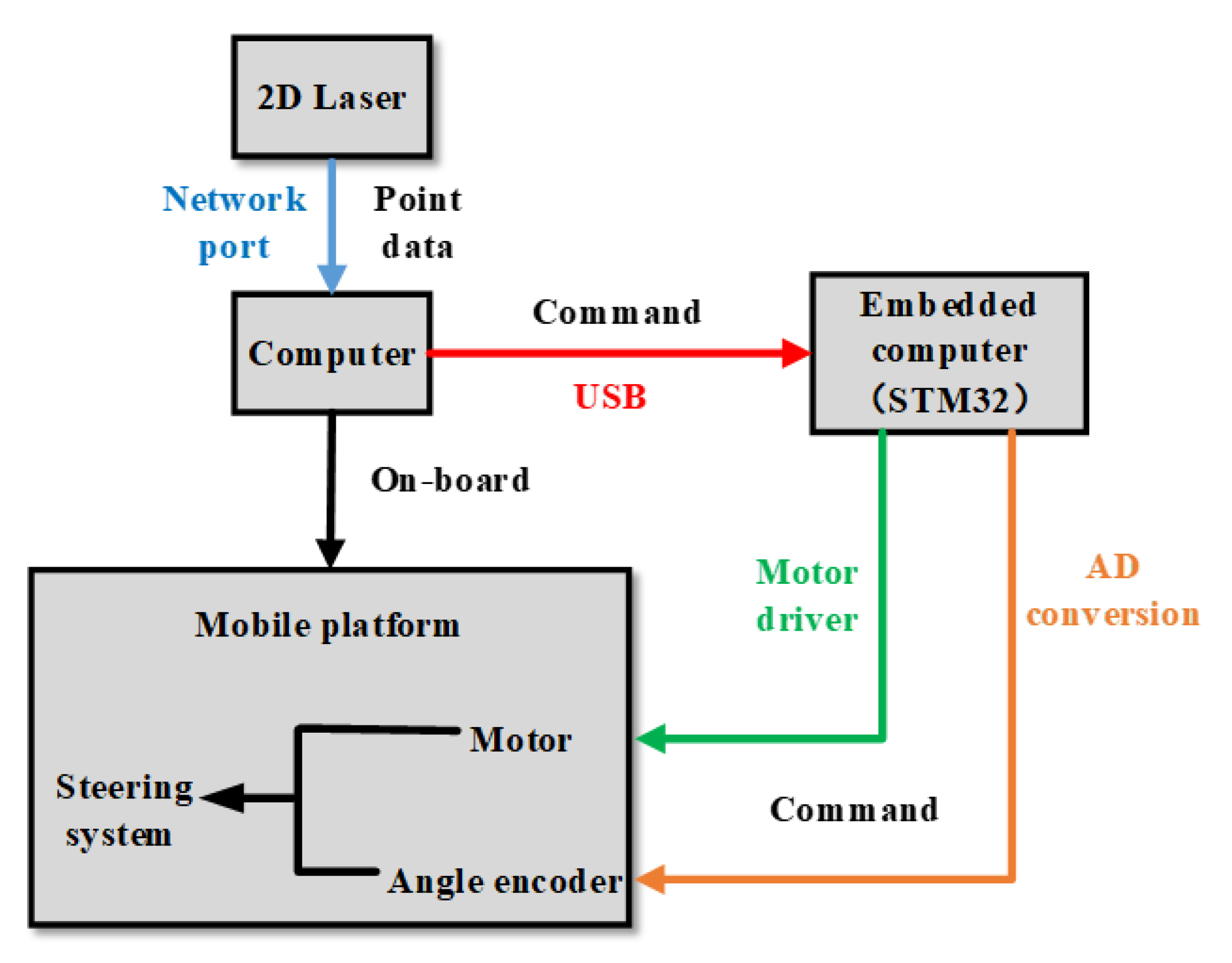

2.1. System Composition

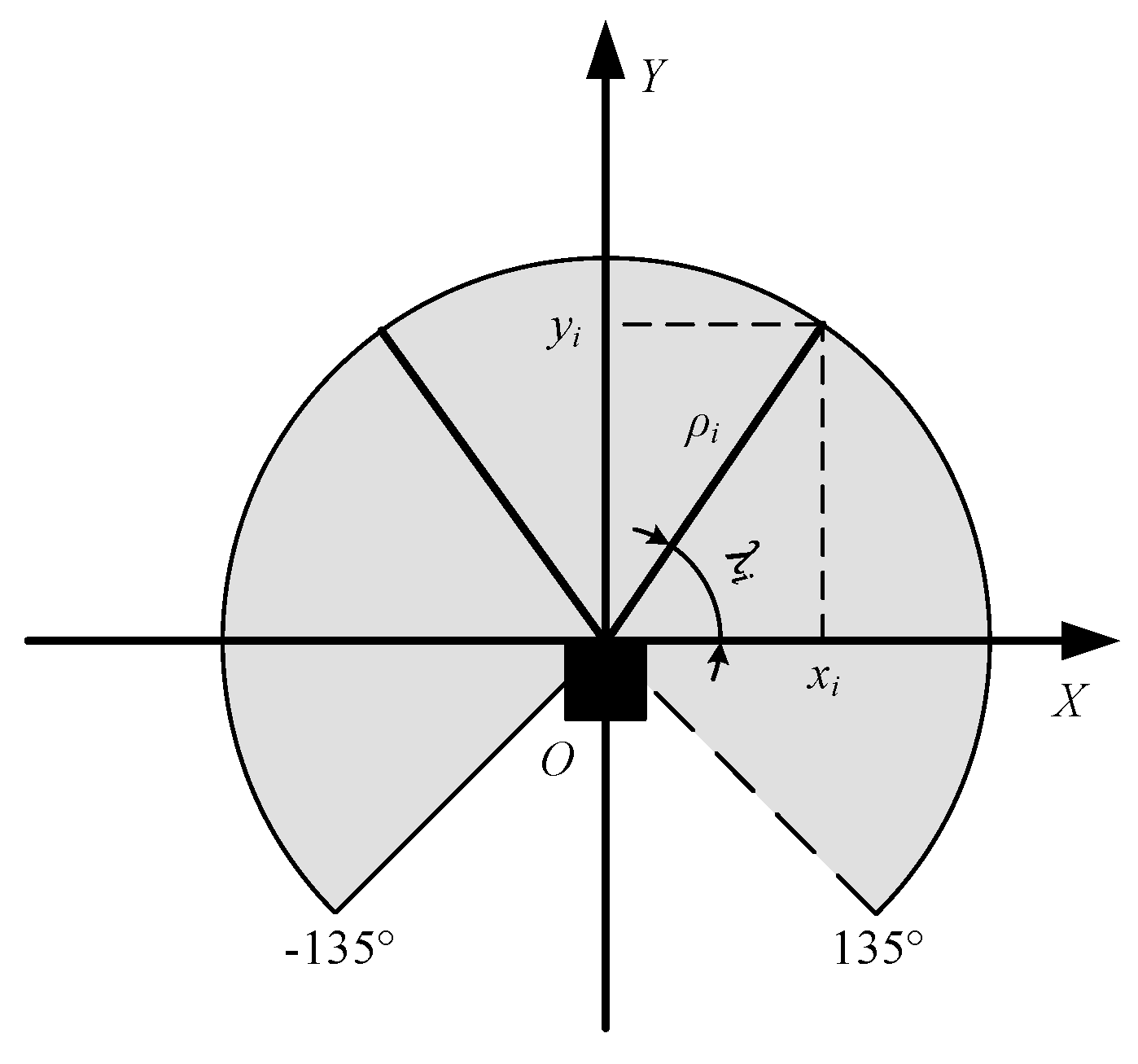

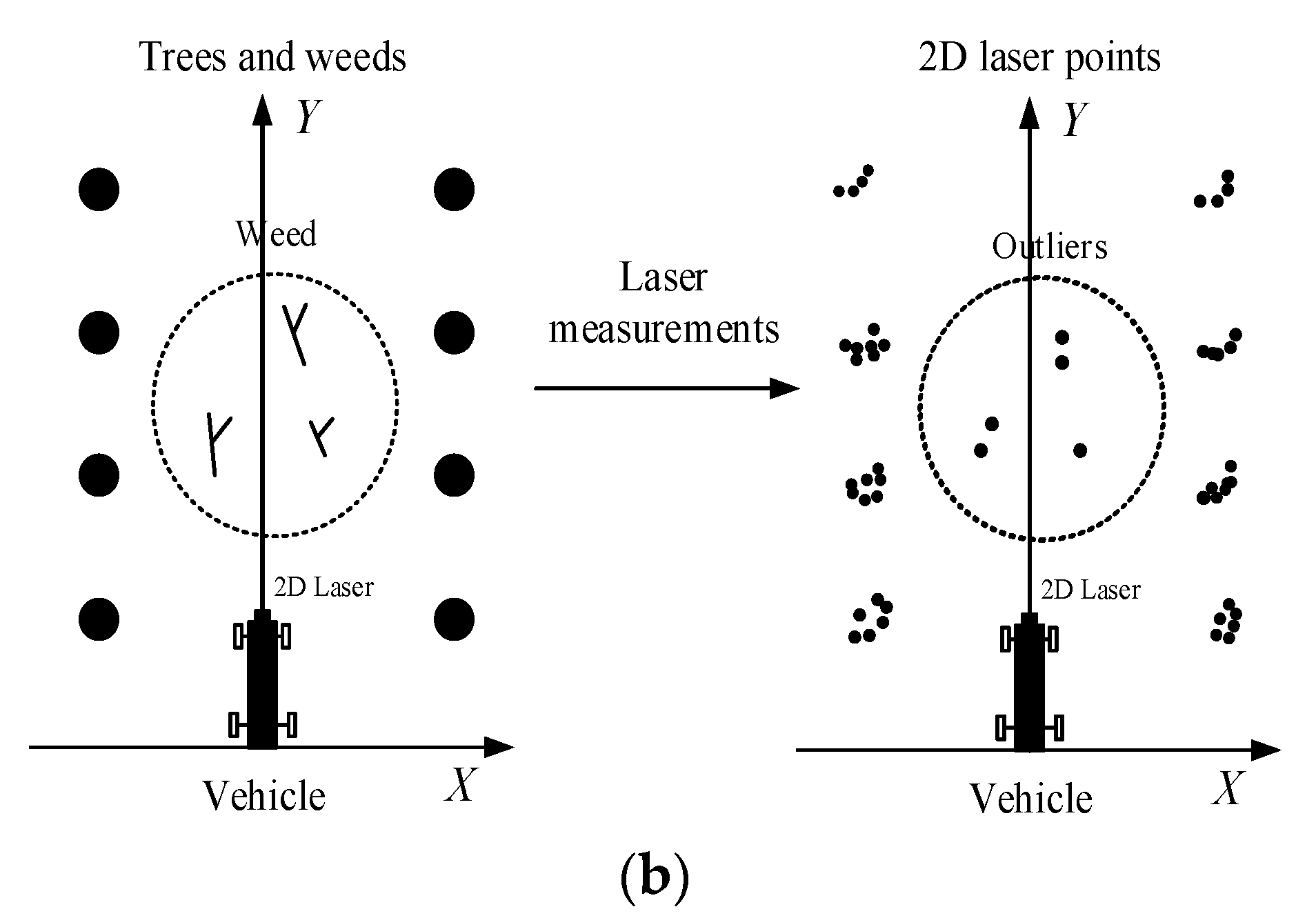

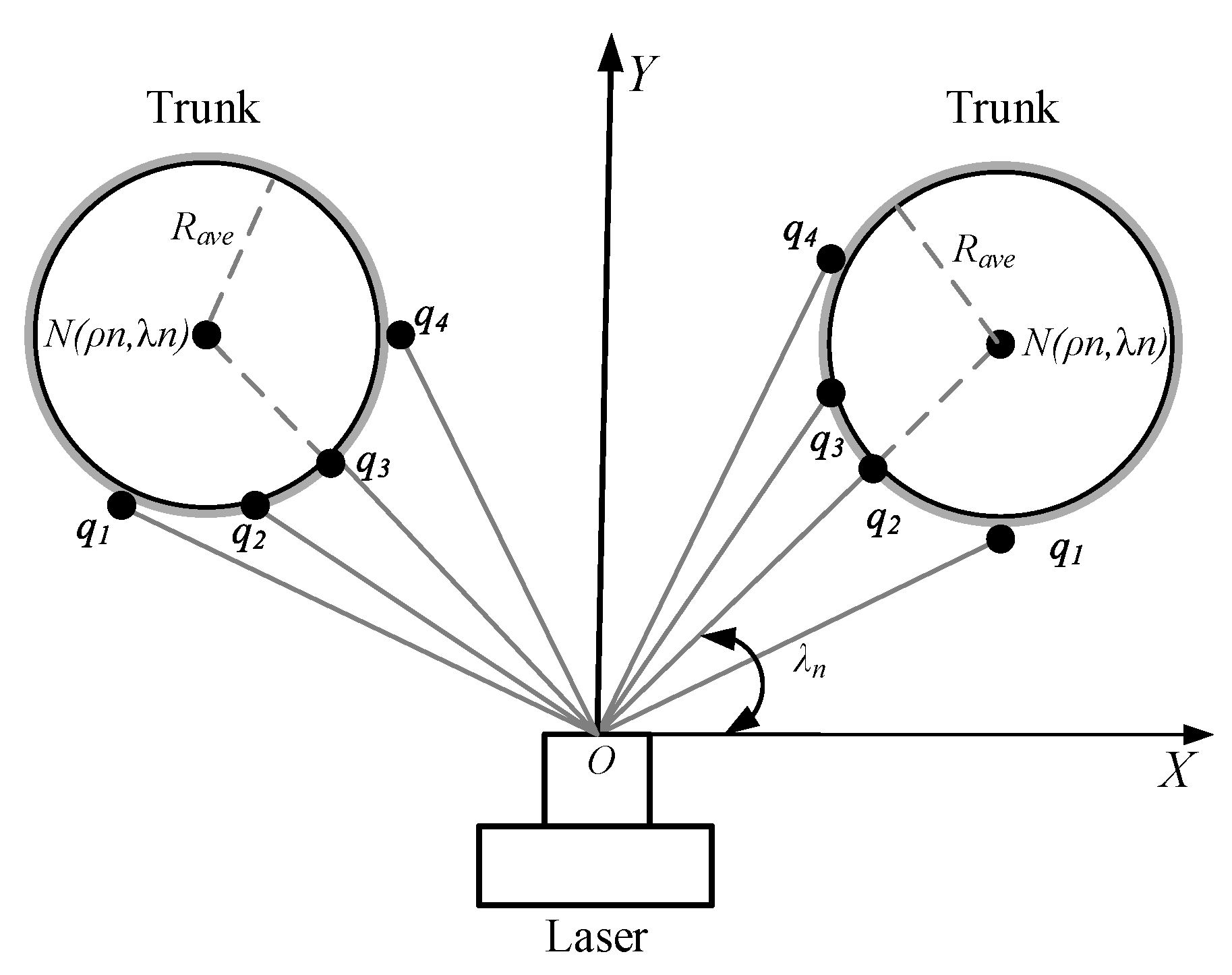

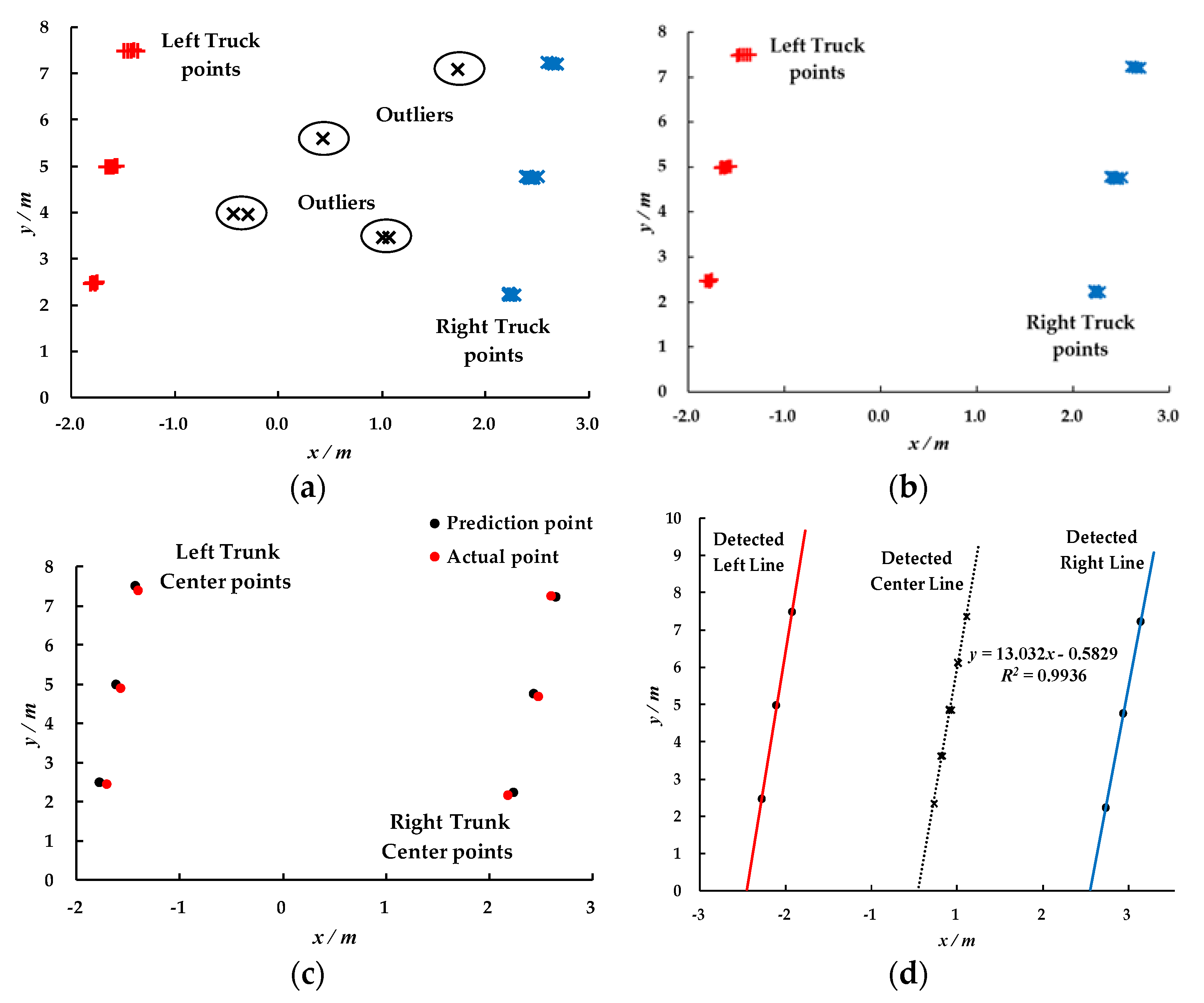

2.2. Fruit Tree Position Information Determination

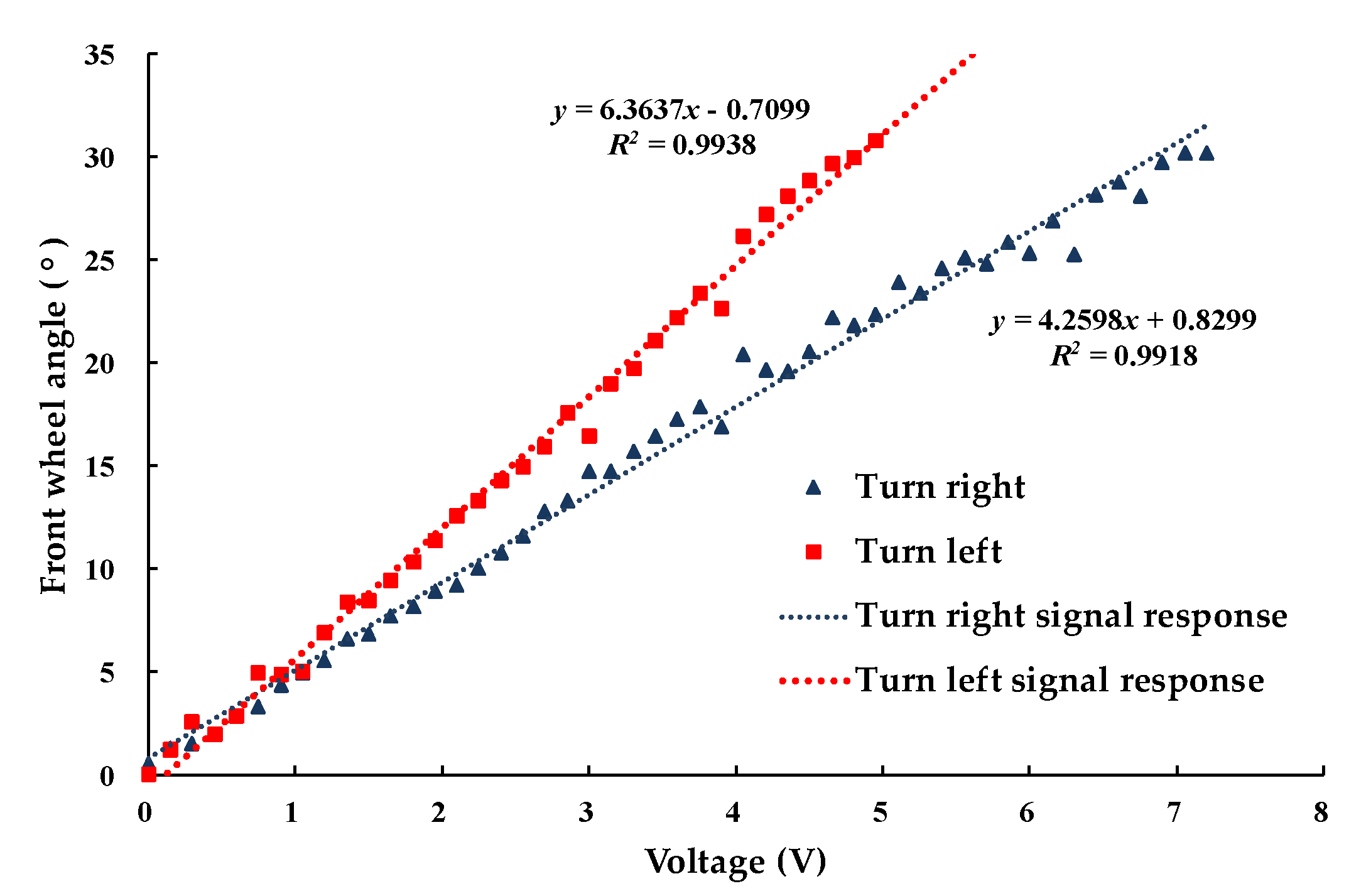

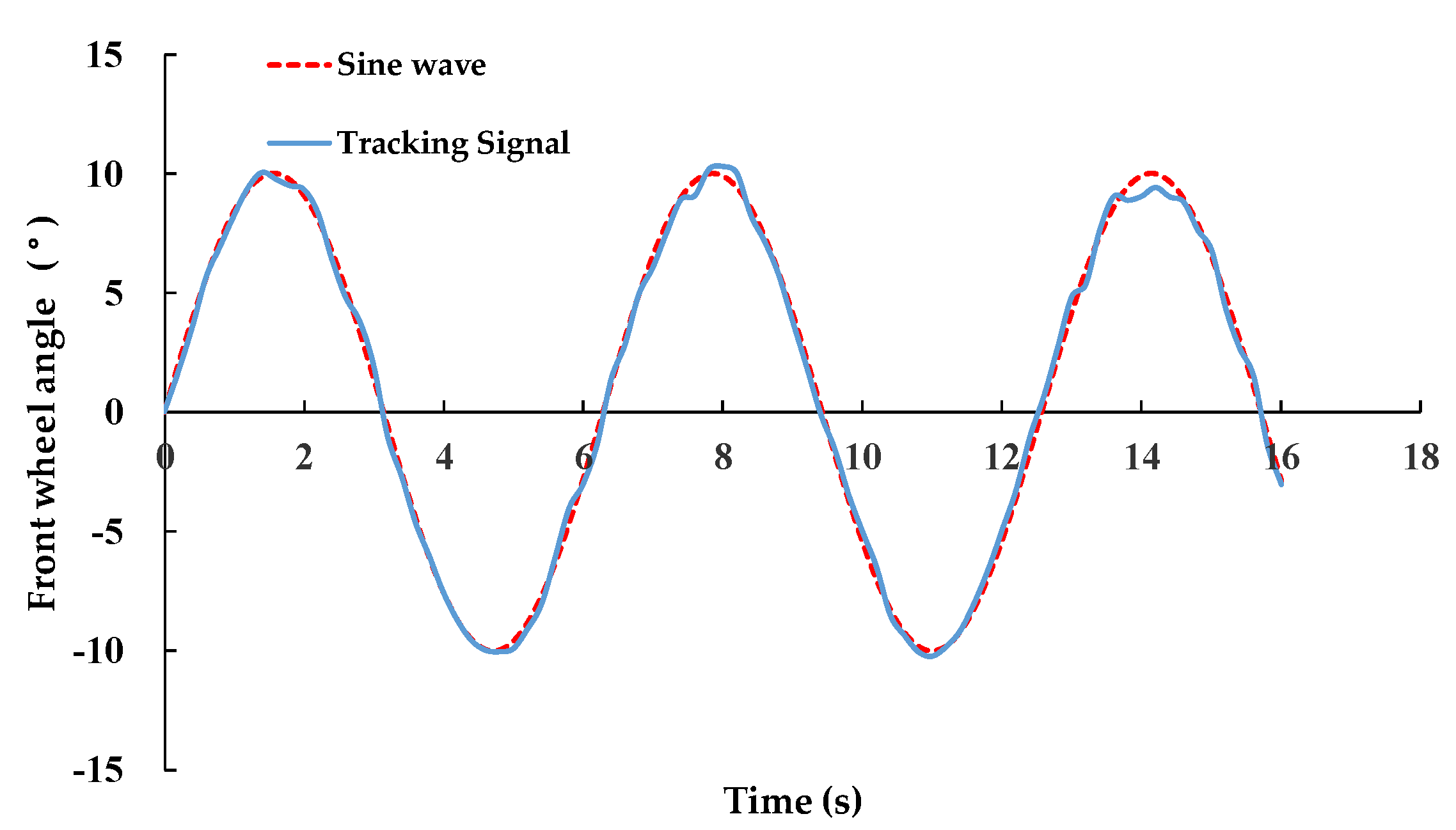

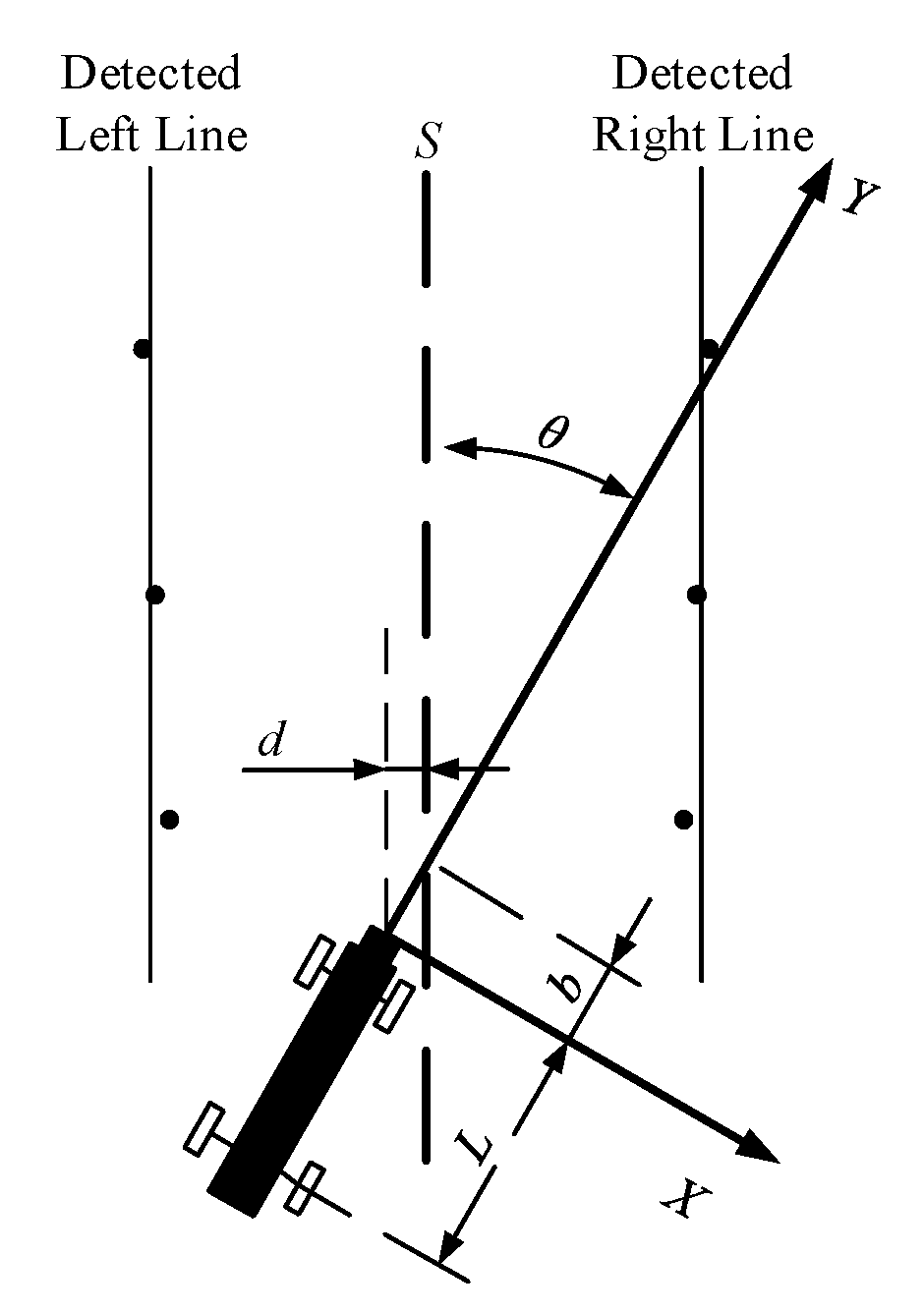

2.3. Navigation Control Parameter Acquisition

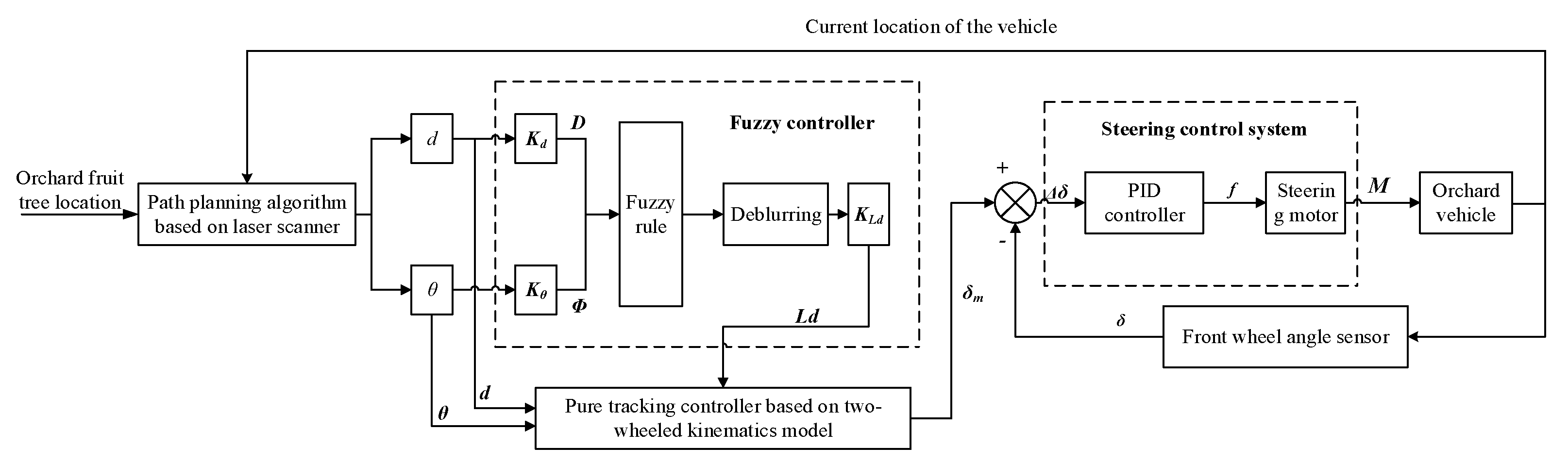

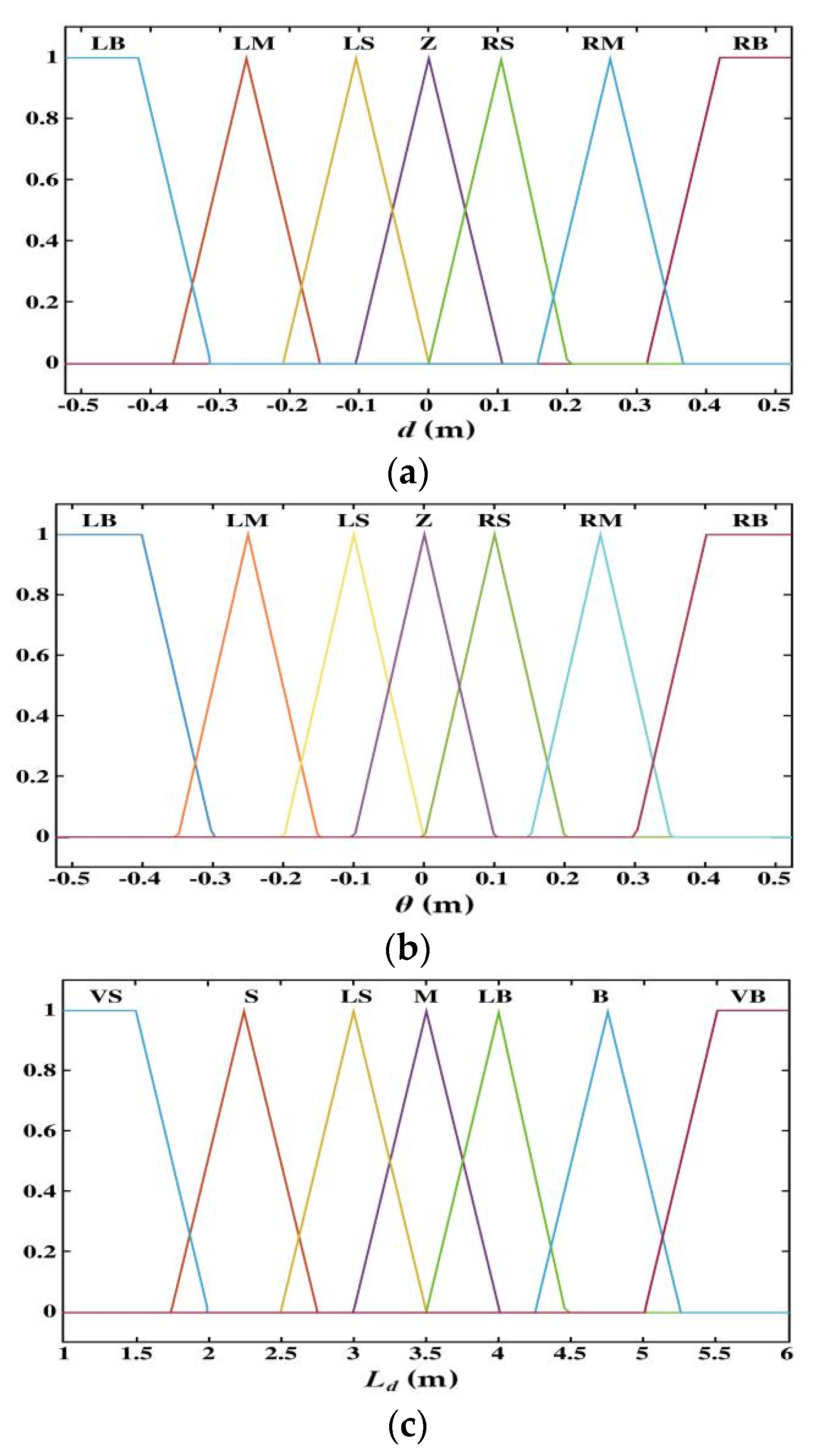

2.4. Navigation Controller Design

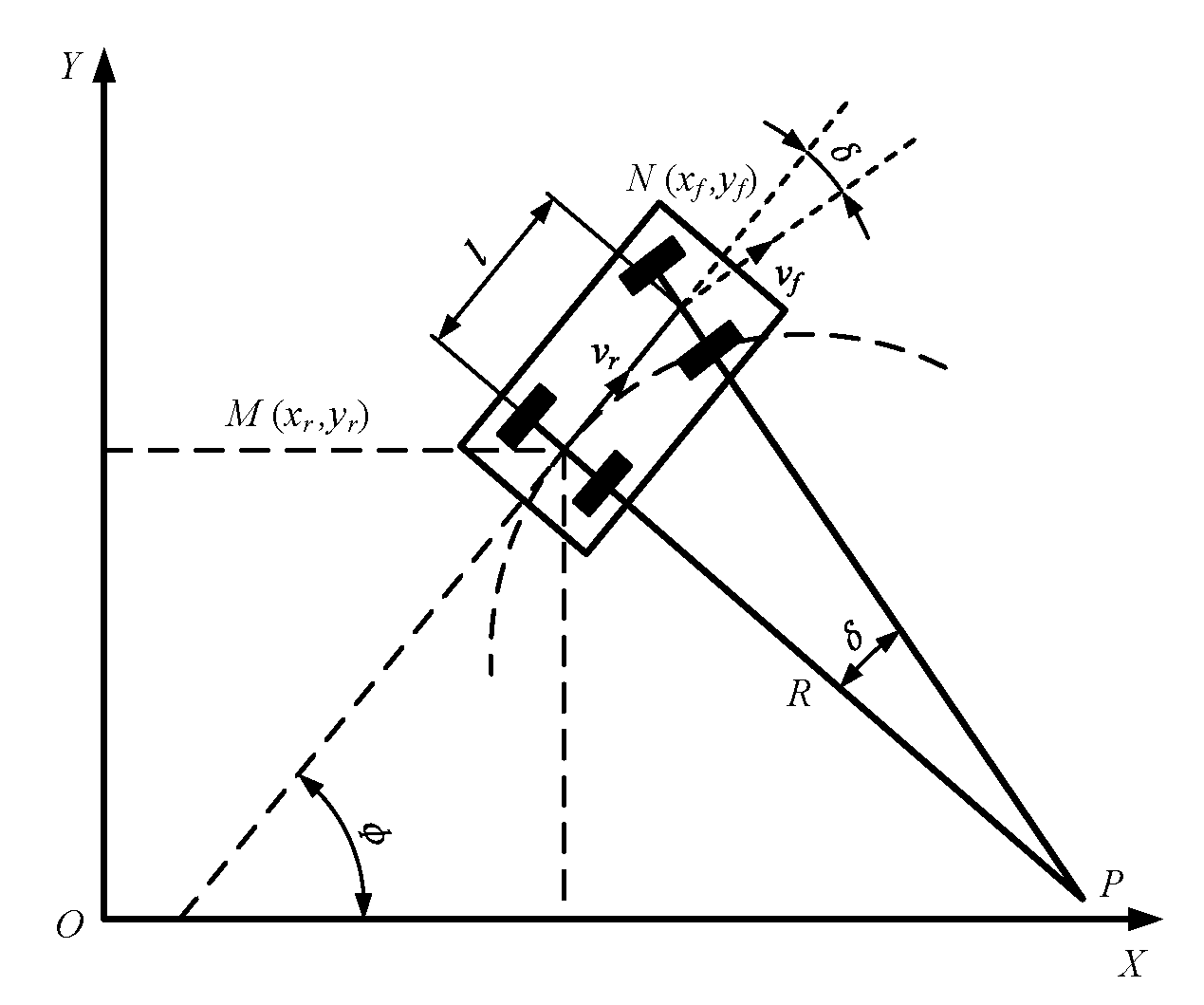

2.4.1. Calculating the Target Front Wheel Angle

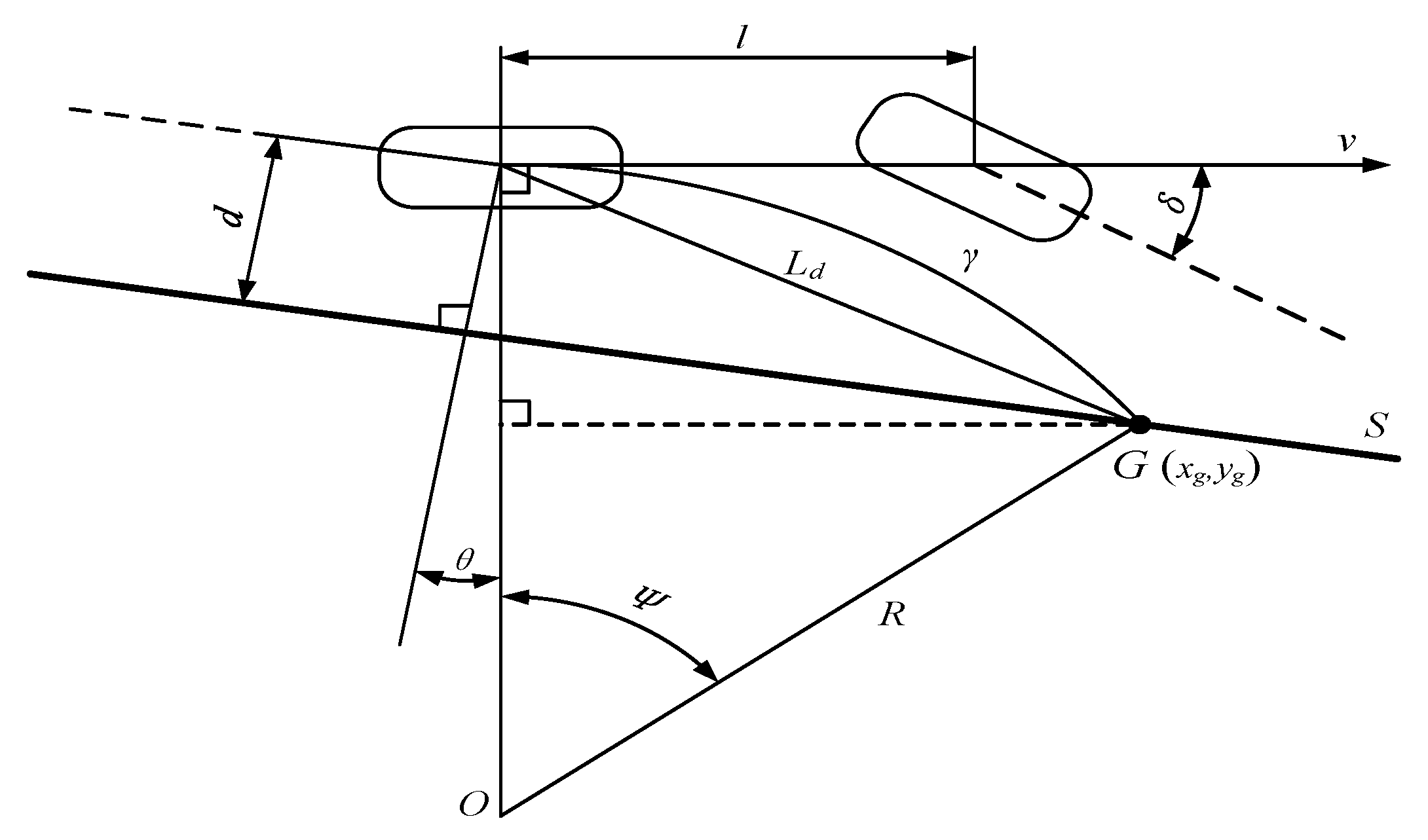

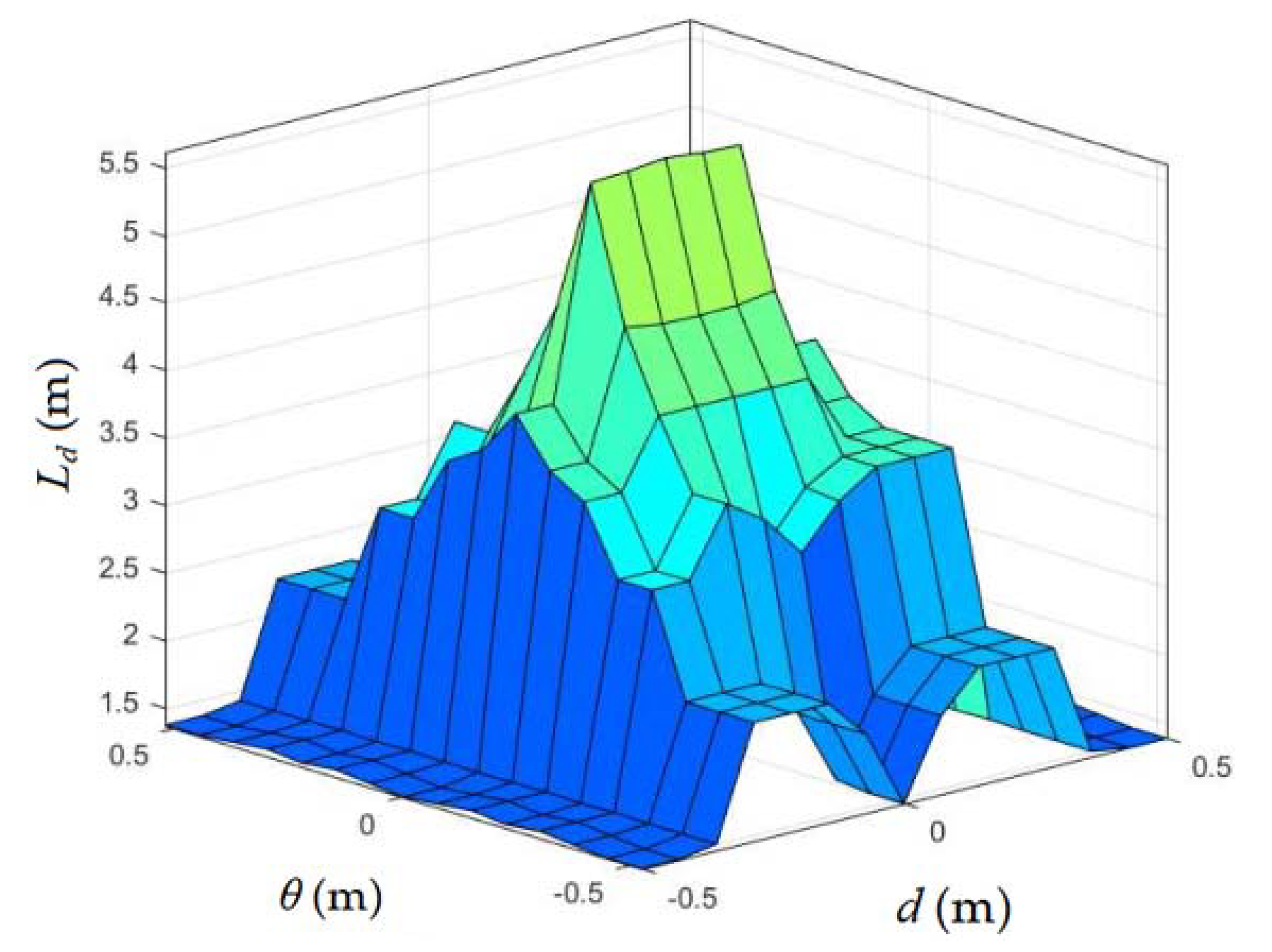

2.4.2. Adaptive Pure Tracking Model Controller Design

3. Results and Discussion

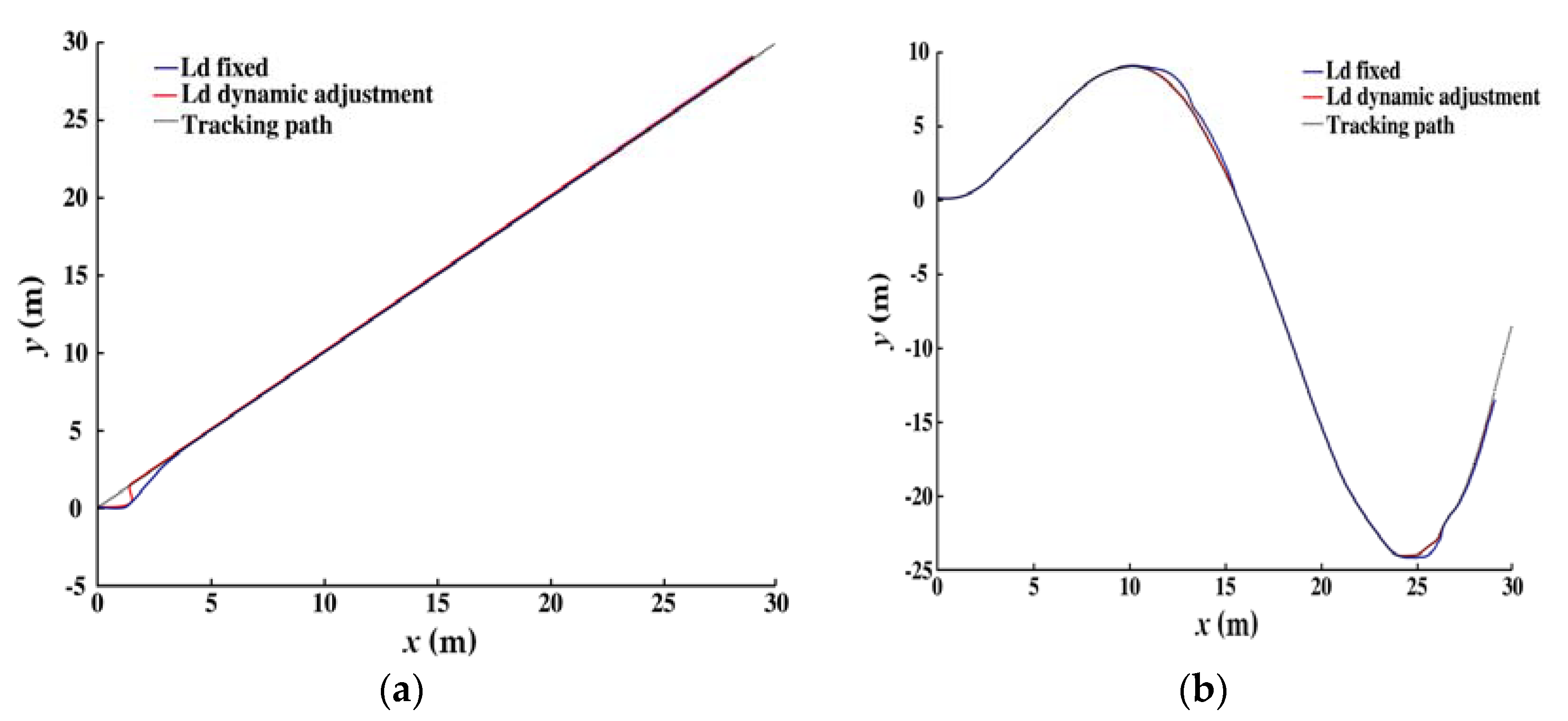

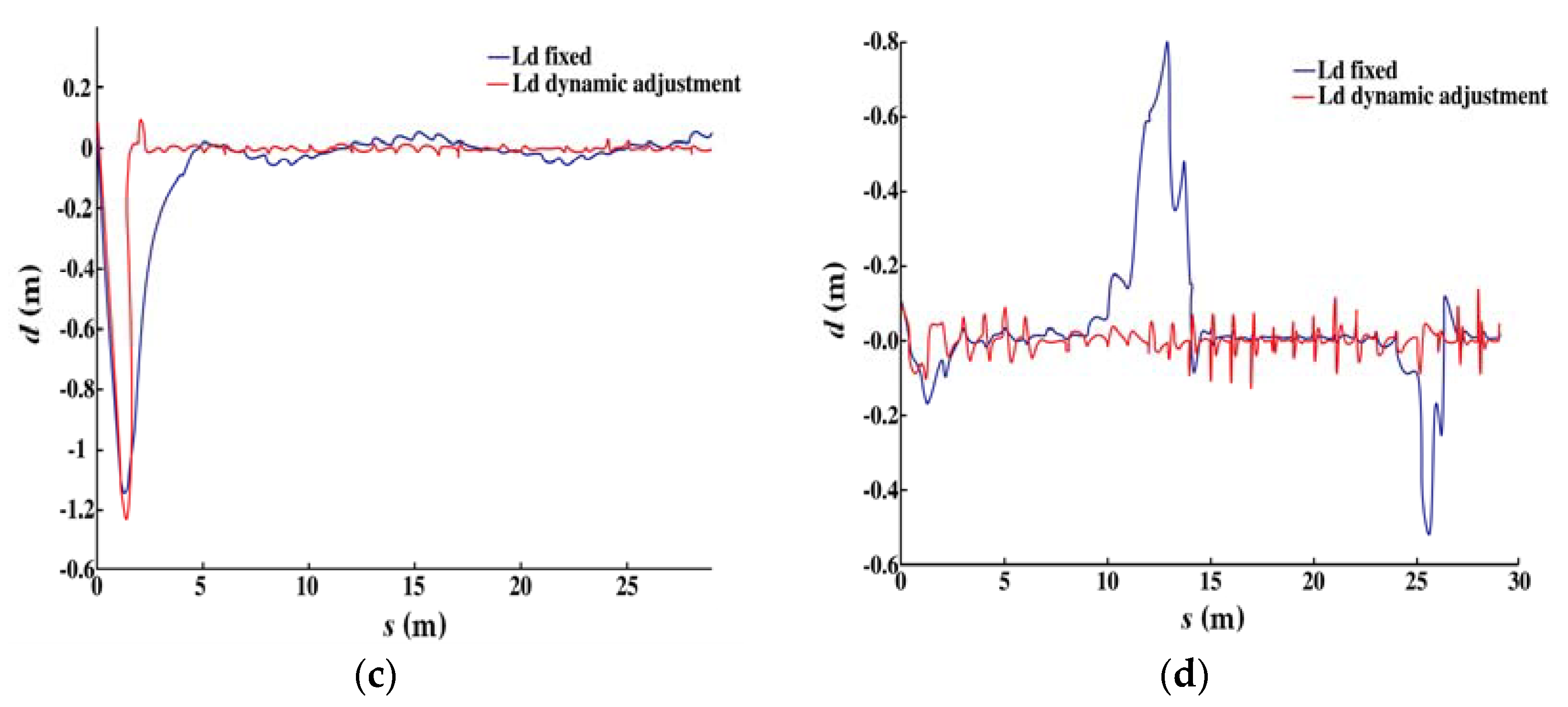

3.1. Path-Tracking Simulation Test

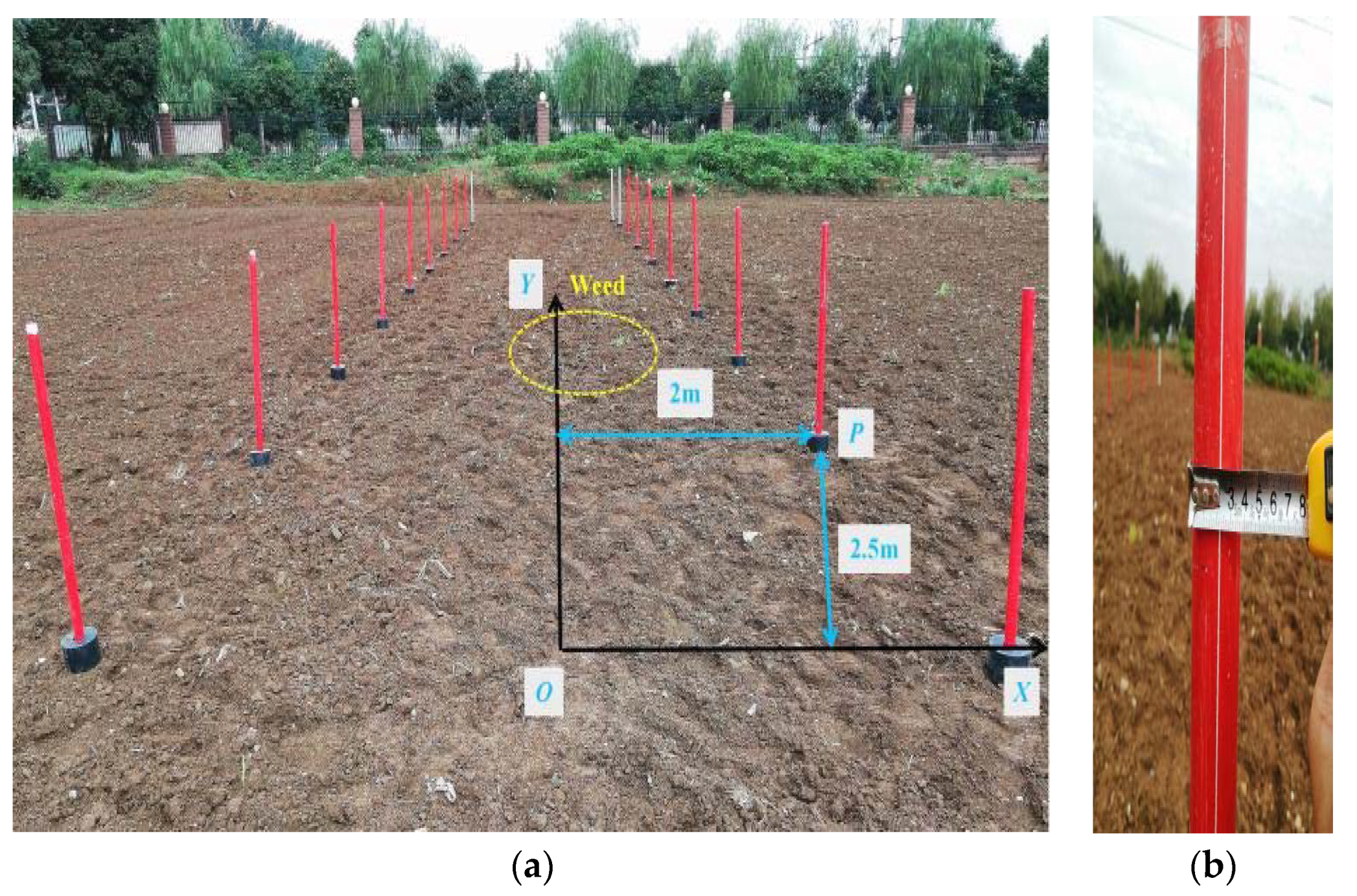

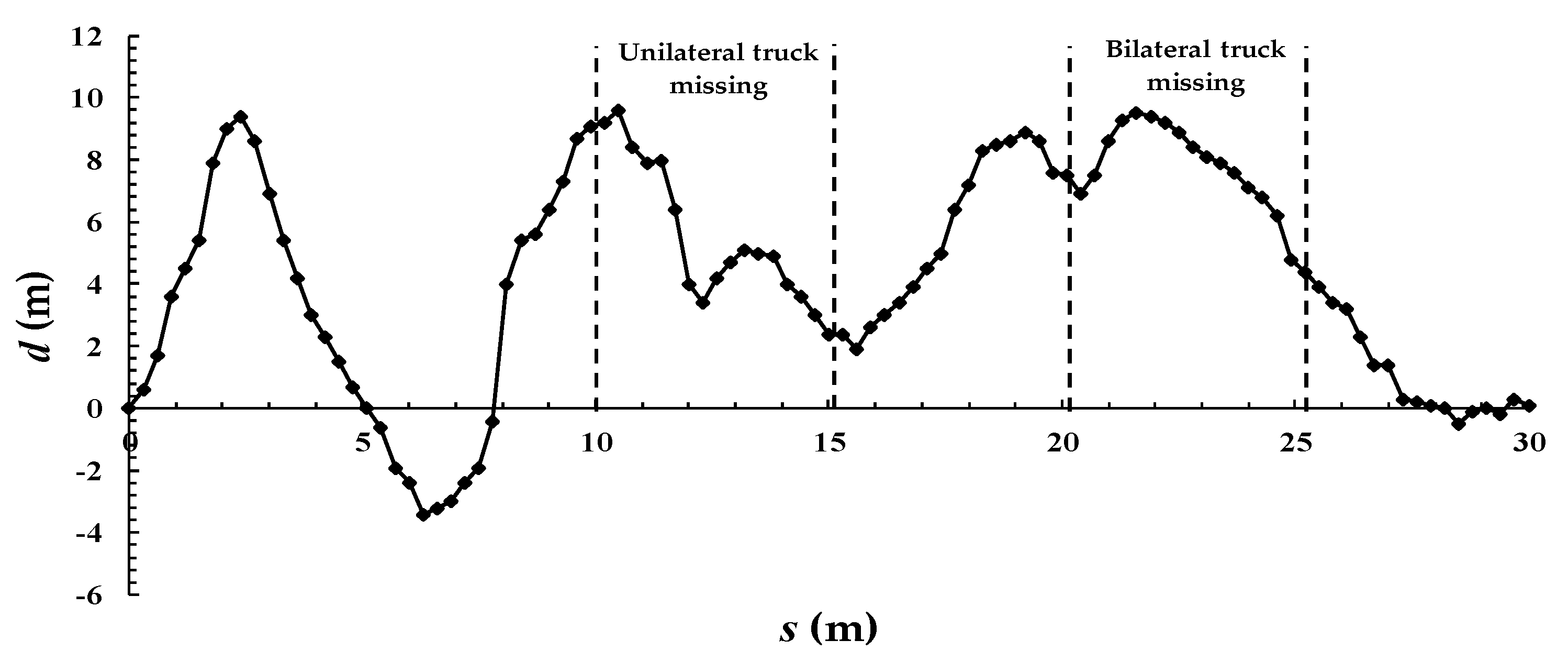

3.2. Feature Map and Navigation Parameter Acquisition Accuracy Test

3.3. Path-Tracking Accuracy Test

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Description | Parameter |
|---|---|
| Light source | Semiconductor laser (905 nm) |
| Measuring range | 0.06–8 m (maximum detection distance 60 m) |
| Ranging accuracy | 40 mm |
| Angular resolution | 0.25° |
| Maximum scanning range | 270° |
| Scanning period | 25 ms |

| θ (rows) / d (columns) | LB | LM | LS | Z | RS | RM | RB |
|---|---|---|---|---|---|---|---|
| LB | VS | S | S | VS | S | S | VS |
| LM | VS | LS | M | LS | M | M | VS |
| LS | VS | M | LB | LB | LB | M | VS |
| Z | VS | LB | VB | VB | VB | LB | VS |
| RS | VS | M | LB | LB | LB | M | VS |
| RM | VS | LS | M | LS | M | LS | VS |
| RB | VS | S | S | VS | LS | S | VS |

Ld (m) by θ (rad, rows) and d (m, columns):

| θ (rad) / d (m) | −6 | −5 | −4 | −3 | −2 | −1 | 0 | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| −6 | 1.38 | 1.38 | 1.70 | 2.25 | 2.25 | 2.06 | 1.38 | 2.06 | 2.25 | 2.25 | 1.70 | 1.38 | 1.38 |
| −5 | 1.38 | 1.38 | 1.70 | 2.25 | 2.25 | 2.06 | 1.38 | 2.06 | 2.25 | 2.25 | 1.70 | 1.38 | 1.38 |
| −4 | 1.43 | 1.43 | 1.72 | 2.27 | 2.30 | 2.05 | 1.46 | 2.05 | 2.29 | 2.28 | 1.74 | 1.43 | 1.43 |
| −3 | 1.39 | 1.39 | 1.95 | 3.00 | 3.34 | 3.40 | 3.00 | 3.40 | 3.50 | 3.50 | 2.12 | 1.39 | 1.39 |
| −2 | 1.46 | 1.46 | 2.34 | 3.26 | 3.57 | 3.57 | 3.51 | 3.57 | 3.76 | 3.50 | 2.24 | 1.46 | 1.46 |
| −1 | 1.39 | 1.39 | 2.37 | 3.58 | 4.28 | 4.32 | 4.31 | 4.32 | 4.28 | 3.58 | 2.37 | 1.39 | 1.39 |
| 0 | 1.38 | 1.38 | 2.28 | 4.00 | 5.05 | 5.61 | 5.62 | 5.61 | 5.05 | 4.00 | 2.28 | 1.38 | 1.38 |
| 1 | 1.39 | 1.39 | 2.37 | 3.58 | 4.28 | 4.32 | 4.31 | 4.32 | 4.28 | 3.58 | 2.37 | 1.39 | 1.39 |
| 2 | 1.46 | 1.46 | 2.34 | 3.26 | 3.57 | 3.57 | 3.51 | 3.57 | 3.57 | 3.26 | 2.34 | 1.46 | 1.46 |
| 3 | 1.39 | 1.39 | 1.95 | 3.00 | 3.34 | 3.40 | 3.00 | 3.40 | 3.34 | 3.00 | 1.95 | 1.39 | 1.39 |
| 4 | 1.43 | 1.43 | 1.72 | 2.27 | 2.30 | 2.05 | 1.46 | 2.54 | 2.75 | 2.27 | 1.72 | 1.43 | 1.43 |
| 5 | 1.38 | 1.38 | 1.70 | 2.25 | 2.25 | 2.06 | 1.38 | 2.61 | 2.74 | 2.25 | 1.70 | 1.38 | 1.38 |
| 6 | 1.38 | 1.38 | 1.70 | 2.25 | 2.25 | 2.06 | 1.38 | 2.61 | 2.74 | 2.25 | 1.70 | 1.38 | 1.38 |
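The table above maps a pair (θ, d) to a value of Ld, so it can be used as a plain lookup table at run time. The following is a minimal sketch of such a lookup, not the authors' implementation. It assumes that θ and d have already been scaled onto the table's −6 to 6 grid, that off-grid inputs are snapped to the nearest grid level, and that out-of-range inputs are clamped to the table edge. The names `LD_TABLE`, `grid_index`, and `lookup_ld` are hypothetical.

```python
import math

# Ld values transcribed from the table above.
# Rows are theta = -6..6, columns are d = -6..6.
LD_TABLE = [
    [1.38, 1.38, 1.70, 2.25, 2.25, 2.06, 1.38, 2.06, 2.25, 2.25, 1.70, 1.38, 1.38],  # theta = -6
    [1.38, 1.38, 1.70, 2.25, 2.25, 2.06, 1.38, 2.06, 2.25, 2.25, 1.70, 1.38, 1.38],  # theta = -5
    [1.43, 1.43, 1.72, 2.27, 2.30, 2.05, 1.46, 2.05, 2.29, 2.28, 1.74, 1.43, 1.43],  # theta = -4
    [1.39, 1.39, 1.95, 3.00, 3.34, 3.40, 3.00, 3.40, 3.50, 3.50, 2.12, 1.39, 1.39],  # theta = -3
    [1.46, 1.46, 2.34, 3.26, 3.57, 3.57, 3.51, 3.57, 3.76, 3.50, 2.24, 1.46, 1.46],  # theta = -2
    [1.39, 1.39, 2.37, 3.58, 4.28, 4.32, 4.31, 4.32, 4.28, 3.58, 2.37, 1.39, 1.39],  # theta = -1
    [1.38, 1.38, 2.28, 4.00, 5.05, 5.61, 5.62, 5.61, 5.05, 4.00, 2.28, 1.38, 1.38],  # theta = 0
    [1.39, 1.39, 2.37, 3.58, 4.28, 4.32, 4.31, 4.32, 4.28, 3.58, 2.37, 1.39, 1.39],  # theta = 1
    [1.46, 1.46, 2.34, 3.26, 3.57, 3.57, 3.51, 3.57, 3.57, 3.26, 2.34, 1.46, 1.46],  # theta = 2
    [1.39, 1.39, 1.95, 3.00, 3.34, 3.40, 3.00, 3.40, 3.34, 3.00, 1.95, 1.39, 1.39],  # theta = 3
    [1.43, 1.43, 1.72, 2.27, 2.30, 2.05, 1.46, 2.54, 2.75, 2.27, 1.72, 1.43, 1.43],  # theta = 4
    [1.38, 1.38, 1.70, 2.25, 2.25, 2.06, 1.38, 2.61, 2.74, 2.25, 1.70, 1.38, 1.38],  # theta = 5
    [1.38, 1.38, 1.70, 2.25, 2.25, 2.06, 1.38, 2.61, 2.74, 2.25, 1.70, 1.38, 1.38],  # theta = 6
]


def grid_index(value: float) -> int:
    """Snap a grid-scaled value to the nearest level in -6..6 and return its list index."""
    level = math.floor(value + 0.5)   # round half up (assumed convention)
    level = max(-6, min(6, level))    # clamp to the table edge (assumed convention)
    return level + 6                  # level -6 is index 0


def lookup_ld(theta: float, d: float) -> float:
    """Return the tabulated Ld (m) for grid-scaled theta and d."""
    return LD_TABLE[grid_index(theta)][grid_index(d)]


print(lookup_ld(0, 0))       # centre of the table
print(lookup_ld(0.4, -1.2))  # snaps to theta = 0, d = -1
```

Nearest-level snapping keeps the sketch simple; interpolating between neighbouring cells would be an alternative if a smoother Ld is needed.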

| Serial Number | θ Actual Value (°) | θ Measurement (°) | θ Error (°) | Δd (cm) |
|---|---|---|---|---|
| 1 | −30 | −30.13 | 0.13 | 2.75 |
| 2 | −25 | −25.40 | −0.40 | −2.18 |
| 3 | −20 | −19.09 | −0.91 | 1.62 |
| 4 | −15 | −14.34 | −0.66 | 1.04 |
| 5 | −10 | −10.57 | 0.57 | −2.00 |
| 6 | −5 | −5.88 | 0.88 | −2.14 |
| 7 | 0 | 0.89 | 0.89 | 4.66 |
| 8 | 5 | 4.45 | −0.55 | −1.06 |
| 9 | 10 | 10.58 | 0.58 | −1.14 |
| 10 | 15 | 14.24 | −0.76 | 2.88 |
| 11 | 20 | 19.05 | −0.95 | −2.71 |
| 12 | 25 | 24.17 | −0.83 | 2.20 |
| 13 | 30 | 30.75 | 0.75 | −1.17 |
| MAD | | | 0.682 | 2.119 |
| SD | | | 0.237 | 1.010 |
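The MAD row can be cross-checked against the 13 test rows. The short sketch below assumes that MAD here means the mean of the absolute values of each column (the θ error and Δd); under that assumption the recomputed figures agree with the table, which also indicates that they carry the column units (° and cm). The function name `mean_absolute_deviation` is illustrative only.

```python
# Error values transcribed from the 13 test rows of the table above.
theta_errors_deg = [0.13, -0.40, -0.91, -0.66, 0.57, 0.88, 0.89,
                    -0.55, 0.58, -0.76, -0.95, -0.83, 0.75]
delta_d_cm = [2.75, -2.18, 1.62, 1.04, -2.00, -2.14, 4.66,
              -1.06, -1.14, 2.88, -2.71, 2.20, -1.17]


def mean_absolute_deviation(values):
    """Mean of the absolute values (assumed definition of MAD)."""
    return sum(abs(v) for v in values) / len(values)


mad_theta = mean_absolute_deviation(theta_errors_deg)
mad_delta_d = mean_absolute_deviation(delta_d_cm)

print(f"MAD of theta error: {mad_theta:.3f} deg")  # 0.682
print(f"MAD of delta d:     {mad_delta_d:.3f} cm")  # 2.119
```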

| Number | Maximum Deviation (m) | Average Deviation (m) | Standard Deviation (m) |
|---|---|---|---|
| 1 | 0.09 | 0.05 | 0.05 |
| 2 | 0.13 | 0.08 | 0.04 |
| 3 | −0.07 | −0.04 | 0.03 |
| 4 | −0.10 | 0.04 | 0.03 |
| 5 | 0.09 | −0.03 | 0.02 |
| MAD (m) | 0.096 | 0.048 | 0.034 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, S.; Guo, C.; Gao, Z.; Sugirbay, A.; Chen, J.; Chen, Y. Research on 2D Laser Automatic Navigation Control for Standardized Orchard. Appl. Sci. 2020, 10, 2763. https://doi.org/10.3390/app10082763
