Abstract

This paper proposes a method for obtaining explicit expressions of quantities associated with the performance analysis of the maximum likelihood (ML) direction-of-arrival (DOA) algorithm in the presence of additive Gaussian noise on the antenna elements. The motivation is to analyze the ML DOA algorithm quantitatively in the case of multiple incident signals. We present a simple method, based on the Taylor series expansion and approximation, to derive closed-form expressions of the mean square errors (MSEs) of the azimuth estimates of all incident signals. The validity of the derived expressions is shown by comparing the analytic results with the simulation results.

1. Introduction

Direction-of-arrival (DOA) estimation has been studied extensively [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19]. Our interest in this paper is the performance analysis of the maximum likelihood (ML)-based DOA estimation algorithm.

In [8], a performance analysis of the ML DOA estimation algorithm for low SNR and small number of snapshots is considered. A threshold effect in the ML DOA algorithm is exploited, and the authors derive approximations to the mean square error and probability of outlier.

If the noise variance at each sensor in the array antenna system is equal, the noise covariance matrix is a scalar multiple of the identity matrix. In [9], nonuniform white noise, whose covariance matrix is an arbitrary diagonal matrix, is considered. A new ML DOA algorithm for nonuniform noise is proposed, and a performance analysis of the proposed algorithm is also presented.

In [10], the authors addressed the DOA estimation using sparse sensor arrays, where the sensor noises can be uncorrelated between different subarrays due to large intersubarray spacings. The authors proposed a new maximum-likelihood estimator, which can be extended to the uncalibrated arrays with sensor gain and phase mismatch.

A new computationally efficient ML DOA algorithm exploiting spatial aliasing is proposed in [11]. Generally, spatial aliasing is undesirable since it degrades the DOA estimation accuracy. In [11], the authors adopted a nested array structure with a doubly spaced aperture. The computational burden of the ML DOA estimation algorithm is reduced by the highly compressed search range and the small number of candidate angles to be searched. The authors also presented Monte Carlo simulation based mean square error (MSE). However, analytic performance analysis of the proposed scheme is not presented in [11].

A new ML DOA algorithm for use with a uniform linear array (ULA) is proposed in [12]. The scheme is superior to the conventional ML algorithm when the true DOAs of two incident signals are very close to each other. The formulation of the new DOA algorithm is based on an asymptotic approximation of the unconditional maximum likelihood (UML) procedure when two closely spaced signals are incident on the ULA. A Taylor approximation is also adopted in the derivation of the new algorithm. The empirical MSE is illustrated to validate the proposed scheme. However, the authors do not present an analytic performance analysis of the new DOA algorithm.

To overcome the large computational complexity of implementing the ML DOA estimation algorithm, an efficient ML DOA estimator based on a spatially overcomplete array output formulation is proposed in [13]. Empirical performance from Monte Carlo simulation is presented to illustrate the superiority of the proposed scheme over the other DOA algorithms. Analytic performance analysis is not given.

Although the ML estimator is known to be optimal in DOA estimation, its computational cost can be quite prohibitive, especially for a large number of incident signals. To solve this problem, in [14], three kinds of natural computing algorithms, differential evolution, clonal selection algorithm, and the particle swarm optimization, are applied for implementation of the multivariable nonlinear optimization of the cost function of the ML DOA estimation algorithm. It turns out that all three natural computing algorithms are capable of optimizing the ML DOA cost function, irrespective of the number of incident signals and their nature. In addition, the number of points evaluated by natural computing algorithms is much smaller than that associated with exhaustive grid search-based algorithms, justifying the application of these natural computing algorithms to the optimization of the cost function of the ML DOA estimation algorithm.

In [15], a new implementation of ML DOA estimation, which outperforms the other DOA algorithms for closely spaced incident signals, is proposed. The concept of Monte Carlo importance sampling is applied. The superiority of the proposed scheme comes from its better convergence to a global maximum in comparison with other iterative approaches. Although analytical performance analysis of the proposed scheme is not presented, empirical performance of the proposed algorithm and the other DOA algorithms is given. Note that Monte Carlo simulation is employed to get empirical performance in terms of the MSE of the DOA estimate.

A heuristic optimization algorithm, called gravitational search algorithm, is presented to optimize the cost function of the ML DOA estimation algorithm for a uniform circular array [16]. It is empirically shown that the proposed algorithm is superior to the MUSIC algorithm and particle swarm optimization-based ML algorithm. Analytic performance analysis of the proposed scheme is not presented in [16].

To reduce the computational burden of optimizing the cost function of the ML DOA estimation algorithm, the artificial bee colony (ABC) algorithm is applied to maximize the cost function [17]. It is empirically shown that the proposed scheme is superior to other ML-based DOA estimation methods in terms of computational efficiency and statistical performance. Analytic performance analysis of the proposed scheme is not presented in [17].

DOA estimation of narrowband sources in unknown nonuniform white noise is considered in [18]. The stepwise concentration of the log-likelihood function with respect to the signal parameters and noise parameters is obtained by alternating minimization of the Kullback–Leibler divergence. Closed-form expressions for the signal parameters and noise parameters are derived, implying that the proposed scheme results in significant reduction in computational complexity in comparison with exhaustive multidimensional search-based ML DOA algorithms.

In [19], a new wideband ML DOA estimation algorithm for an unknown nonuniform sensor noise is proposed to reduce the performance degradation due to nonuniformity of the noise. Two associated implementation schemes are proposed: one is iterative and the other is non-iterative. Simulation results show that the performance of two processing algorithms is consistent with the Cramer–Rao lower bound. Analytic performance analysis, more specifically the Cramer–Rao lower bound, of the proposed algorithm is presented in [19].

In this paper, we are concerned with a quantitative study of how much estimation error is induced by additive Gaussian noise on array antennas. More specifically, the mean-squared error (MSE) of direction-of-arrival estimation is derived in terms of the standard deviation of the additive noise. A performance analysis of azimuth estimation using a uniform linear array (ULA) is presented.

In this paper, the estimate with no superscript denotes the estimate of the original ML algorithm; no approximation is used in obtaining it. Two further estimates are distinguished by superscripts: one is obtained by using the first approximation only, and the other is obtained by using both the first approximation and the second approximation.

The difference between the estimate with no superscript and the estimate obtained with the first approximation only quantifies the error due to the first approximation. Similarly, the difference between the estimate obtained with the first approximation only and the estimate obtained with both approximations quantifies the error due to the second approximation. Based on this intuition, by comparing these three estimates, we can easily determine which approximation results in the dominant approximation error. This insight cannot be obtained from the schemes presented in the previous studies [7,8,9,19].

In this paper, Gaussian noise is used to model measurement uncertainty. The effect of Gaussian noise on the accuracy of the azimuth estimate is rigorously derived. Furthermore, an explicit expression of the MSE of the azimuth estimate is also derived. In comparison with the previous studies on the performance analysis of the maximum likelihood algorithm [7,8,9,19], a more explicit representation of the MSE of the azimuth estimate is proposed in this paper.

Many previous studies on the ML DOA estimation algorithm focused on how the performance of the ML DOA estimation algorithm can be improved by proposing new algorithms or by modifying the ML DOA estimation algorithm [9,10,11,12,13,14,15,16,17,18,19]. Note that our contribution in this paper does not lie in how much improvement can be achieved by proposing an improved ML DOA algorithm. Our contribution lies in a reduction in the computational cost of obtaining the MSE of an existing ML DOA algorithm by adopting an analytic approach rather than Monte Carlo simulation, under measurement uncertainty that is assumed to be Gaussian distributed. That is, the scheme describes how the analytic MSE can be obtained with much less computational complexity than the Monte Carlo simulation-based MSE.

In this paper, the derivation is based on the Taylor series expansion of the sample covariance matrix since the cost function of the ML DOA estimation algorithm can be explicitly written in terms of the sample covariance matrix. The difference between the sample covariance matrix associated with noisy measurements and the noiseless sample covariance matrix is explicitly expressed in terms of the additive noise on the antenna array. The azimuth estimation error is then explicitly expressed in terms of the additive noise. Finally, the MSE of the azimuth estimate is given in terms of the statistics of the additive noise. To the best of our knowledge, no previous study presented these explicit expressions of the azimuth estimation error and the MSE of the azimuth estimate in terms of the statistics of an additive noise.

The proposed scheme can be used to predict how accurate the estimate of the ML DOA estimation algorithm is without a computationally intensive Monte Carlo simulation. The performance of the ML algorithm depends on various parameters, including the number of snapshots, the number of antenna elements in the array, the inter-element spacing between adjacent antenna elements, and the SNR. Monte Carlo simulations for different values of these parameters can therefore be computationally intensive, and the scheme presented in this paper can be adopted instead to predict the accuracy of the ML DOA algorithm over a range of parameter values.

2. Maximum Likelihood Algorithm

In this section, the maximum likelihood (ML) algorithm for use with the uniform linear array (ULA) is briefly described.

In the case of ULA, for the incident signal from , the array vector associated with the m-th antenna can be written as

where is wavelength and is the distance between two neighboring elements.
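As an illustration of the array vector, the following minimal Python sketch evaluates it for an M-element ULA. The phase convention (element index starting at zero, azimuth measured from broadside, phase proportional to the sine of the azimuth) and the names `steering_vector` and `spacing_over_wavelength` are assumptions made for this sketch and may differ from the convention used in the paper.

```python
import numpy as np

def steering_vector(theta_deg, num_elements, spacing_over_wavelength=0.5):
    """Array vector of a ULA for a plane wave arriving from azimuth theta_deg.

    Assumed convention (not taken from the paper): elements m = 0..M-1,
    azimuth measured from broadside, phase exp(j*2*pi*(d/lambda)*m*sin(theta)).
    """
    theta = np.deg2rad(theta_deg)
    m = np.arange(num_elements)
    return np.exp(1j * 2.0 * np.pi * spacing_over_wavelength * m * np.sin(theta))

# Array vector of a 10-element, half-wavelength-spaced ULA for a 20 degree azimuth.
a = steering_vector(20.0, num_elements=10)
```

Every entry has unit magnitude, so only the phase progression across the elements carries the azimuth information.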

Projection matrix on to the column space of can be expressed as [6]
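The projection matrix can be formed numerically as in the sketch below. It assumes the standard form $A(A^H A)^{-1}A^H$ for an array matrix $A$ with full column rank; the helper names and the sine-based phase convention are assumptions of this sketch.

```python
import numpy as np

def steering_matrix(thetas_deg, num_elements, spacing_over_wavelength=0.5):
    """M x d matrix whose columns are ULA array vectors (assumed convention)."""
    m = np.arange(num_elements)[:, None]
    s = np.sin(np.deg2rad(np.asarray(thetas_deg, dtype=float)))[None, :]
    return np.exp(1j * 2.0 * np.pi * spacing_over_wavelength * m * s)

def projection_matrix(A):
    """Orthogonal projector onto the column space of A (full column rank)."""
    gram = A.conj().T @ A
    # Solve gram @ Z = A^H instead of forming the inverse explicitly.
    return A @ np.linalg.solve(gram, A.conj().T)

A = steering_matrix([10.0, 25.0], num_elements=8)
P = projection_matrix(A)
```

The result is Hermitian and idempotent, leaves the columns of `A` unchanged, and has trace equal to the number of incident signals.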

The incident signals on the array antenna elements can be written as, for ,

The noisy incident signals can be expressed as

It is assumed that the entries of the Gaussian vector are independent and identically distributed Gaussian random variables with the same mean and the same variance. Note that the noise is complex-valued and that the real part and the imaginary part of the noise are independent Gaussian random variables with the same non-zero mean. The variance of the real part is denoted by $\sigma^2$, which is equal to the variance of the imaginary part.
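The noise model described above can be sketched as follows. The function name `complex_gaussian_noise`, the parameter names `mean` and `sigma`, and the numerical values are illustrative assumptions.

```python
import numpy as np

def complex_gaussian_noise(shape, mean, sigma, rng):
    """Complex noise whose real and imaginary parts are independent N(mean, sigma^2)."""
    real = rng.normal(mean, sigma, size=shape)
    imag = rng.normal(mean, sigma, size=shape)
    return real + 1j * imag

rng = np.random.default_rng(0)
# One column per snapshot: 10 antenna elements, 1000 snapshots (illustrative sizes).
noise = complex_gaussian_noise((10, 1000), mean=0.2, sigma=0.1, rng=rng)
```

Adding such a matrix to the noiseless array output gives the noisy incident signals used in the sample covariance matrix.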

Let L denote the number of the snapshots. is a sample covariance matrix given by

where is given by (4).
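A minimal sketch of the sample covariance matrix computation, assuming the usual definition as the average of the outer products of the L snapshots with themselves (the data layout, one snapshot per column, is an assumption of this sketch):

```python
import numpy as np

def sample_covariance(X):
    """Sample covariance (1/L) * sum_t x(t) x(t)^H for an M x L snapshot matrix X."""
    num_snapshots = X.shape[1]
    return (X @ X.conj().T) / num_snapshots

rng = np.random.default_rng(0)
X = rng.normal(size=(4, 200)) + 1j * rng.normal(size=(4, 200))
R_hat = sample_covariance(X)

# Single-snapshot sanity check: the result is the outer product x x^H.
x = np.array([[1.0], [1.0j]])
R_single = sample_covariance(x)
```

The matrix is Hermitian by construction, which is what allows the cost function to be written as a real-valued function of its entries.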

From (5), we get

is defined as

In the ML algorithm, the estimates, , are obtained from

where is given by , and can be written as

where M is the number of antenna elements.
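For two incident signals, the ML search can be sketched as a coarse grid search. The sketch assumes the widely used deterministic ML criterion, which maximizes the trace of the projection matrix times the sample covariance matrix; the grid, the helper names, and the noiseless test data are illustrative assumptions and not the authors' implementation.

```python
import itertools
import numpy as np

def steering_matrix(thetas_deg, num_elements, spacing_over_wavelength=0.5):
    """M x d matrix of ULA array vectors (assumed sine-based phase convention)."""
    m = np.arange(num_elements)[:, None]
    s = np.sin(np.deg2rad(np.asarray(thetas_deg, dtype=float)))[None, :]
    return np.exp(1j * 2.0 * np.pi * spacing_over_wavelength * m * s)

def ml_cost(thetas_deg, R, num_elements):
    """Assumed ML cost: trace of (projector onto span of A(theta)) times R."""
    A = steering_matrix(thetas_deg, num_elements)
    P = A @ np.linalg.solve(A.conj().T @ A, A.conj().T)
    return float(np.real(np.trace(P @ R)))

def ml_grid_search(R, num_elements, grid_deg):
    """Return the pair of distinct grid angles that maximizes the cost."""
    best = max(itertools.combinations(grid_deg, 2),
               key=lambda pair: ml_cost(pair, R, num_elements))
    return tuple(float(t) for t in best)

rng = np.random.default_rng(1)
M, L = 8, 50
A_true = steering_matrix([10.0, 25.0], M)
S = rng.normal(size=(2, L)) + 1j * rng.normal(size=(2, L))  # source waveforms
X = A_true @ S                                              # noiseless snapshots
R = X @ X.conj().T / L
# The grid stops at +/-80 degrees so that no two grid angles share an array vector.
estimate = ml_grid_search(R, M, np.arange(-80, 81, 5))
```

With noiseless data and true angles that lie on the grid, the search returns the true angles, which is the property the error analysis in the next section relies on.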

3. Closed-Form Expression of Estimation Error

Using (12), can be written as

denotes adjoint of a matrix. -th element of the adjoint of can be expressed as

where the determinant can be obtained in many ways, one of which is a cofactor expansion.
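The adjoint-based inverse can be checked numerically with a cofactor expansion. The sketch below is a generic illustration; the function name `adjugate` and the example matrix are assumptions.

```python
import numpy as np

def adjugate(matrix):
    """Adjoint (adjugate) of a square matrix built from its cofactors."""
    n = matrix.shape[0]
    cofactors = np.empty((n, n), dtype=complex)
    for i in range(n):
        for j in range(n):
            # Minor: delete row i and column j, then take the determinant.
            minor = np.delete(np.delete(matrix, i, axis=0), j, axis=1)
            cofactors[i, j] = (-1) ** (i + j) * np.linalg.det(minor)
    # The adjugate is the transpose of the cofactor matrix.
    return cofactors.T

# A 2 x 2 Hermitian example, the size that arises for two incident signals.
G = np.array([[8.0, 1.0 + 2.0j], [1.0 - 2.0j, 8.0]])
G_inv = adjugate(G) / np.linalg.det(G)
```

For a 2 x 2 matrix the adjoint simply swaps the diagonal entries and negates the off-diagonal entries, which is why restricting the number of signals to two keeps the expressions manageable.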

Generally, the number of incident signals is d. From (14) and (15), an explicit expression of the entry at the k-th row and l-th column of can be obtained. However, it is very complicated to express the determinant in (15) in terms of the entries of for all and . In addition, due to very complicated expression, expressing each entry of in terms of the entries of may impair the readability of this paper. Therefore, the number of signals in this paper is set to two.

The numerator of is defined as :

Let and denote the true incident angles of two incident signals and let and denote the estimates of two incident signals. From (9) and (10), and in Appendix I, the estimates, , satisfy

The derivatives of ML cost function with respect to and should be zero at the true incident angles for the noiseless sample covariance matrix:

Substituting and with the Taylor series approximation in Appendix J, and using

we have

where the first order derivatives of and with respect to and are given in Appendix A.

We define and as follows:

The solution of (28) and the associated estimates are given by

where the superscript indicates that the first order Taylor expansion is used. This approximation is called U approximation.
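The idea behind a first-order expansion of the stationarity condition can be illustrated numerically. The sketch below is a generic stand-in and not the closed-form solution derived in this paper: it assumes the trace-based ML cost, replaces the analytic derivatives of the appendices with finite differences, and linearizes the gradient of the noisy cost about the true angles. All names and numerical values are assumptions.

```python
import numpy as np

def steering_matrix(thetas_deg, num_elements, spacing_over_wavelength=0.5):
    m = np.arange(num_elements)[:, None]
    s = np.sin(np.deg2rad(np.asarray(thetas_deg, dtype=float)))[None, :]
    return np.exp(1j * 2.0 * np.pi * spacing_over_wavelength * m * s)

def ml_cost(thetas_deg, R):
    A = steering_matrix(thetas_deg, R.shape[0])
    P = A @ np.linalg.solve(A.conj().T @ A, A.conj().T)
    return float(np.real(np.trace(P @ R)))

def gradient_and_hessian(cost, theta, step=1e-3):
    """Central finite differences (in degrees) of a scalar cost."""
    theta = np.asarray(theta, dtype=float)
    n = theta.size
    e = np.eye(n) * step
    grad = np.zeros(n)
    hess = np.zeros((n, n))
    for i in range(n):
        grad[i] = (cost(theta + e[i]) - cost(theta - e[i])) / (2.0 * step)
        for j in range(n):
            hess[i, j] = (cost(theta + e[i] + e[j]) - cost(theta + e[i] - e[j])
                          - cost(theta - e[i] + e[j]) + cost(theta - e[i] - e[j])
                          ) / (4.0 * step ** 2)
    return grad, hess

def first_order_estimate(theta_true_deg, R):
    """Solve 0 = grad + hess @ delta for the angle error delta (first order)."""
    grad, hess = gradient_and_hessian(lambda th: ml_cost(th, R), theta_true_deg)
    return np.asarray(theta_true_deg, dtype=float) - np.linalg.solve(hess, grad)

rng = np.random.default_rng(2)
M, L = 8, 100
theta_true = np.array([10.0, 25.0])
S = rng.normal(size=(2, L)) + 1j * rng.normal(size=(2, L))
X_clean = steering_matrix(theta_true, M) @ S
X_noisy = X_clean + 0.05 * (rng.normal(size=(M, L)) + 1j * rng.normal(size=(M, L)))
R_clean = X_clean @ X_clean.conj().T / L
R_noisy = X_noisy @ X_noisy.conj().T / L

theta_clean = first_order_estimate(theta_true, R_clean)  # no noise: no error
theta_lin = first_order_estimate(theta_true, R_noisy)    # linearized noisy estimate
```

With the noiseless covariance matrix the gradient vanishes at the true angles, so the predicted error is zero; with noise, the 2 x 2 linear system gives the first-order angle errors.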

At high SNR, it is true that is much larger than :

This approximation is called V approximation.

Using (31) in (25) yields

where the superscript indicates that both U approximation and V approximation are used to get the estimates.

Let be defined by

4. Closed-Form Expression of Mean Square Error of the Azimuth Estimates

From (34), analytic mean square errors (MSEs) of and are given by

where the subscript 11 represents the entry at the first row and at the first column. The subscript 22 is similarly defined. From (27), can be expressed as

where is given in Appendix G.

5. Numerical Results

In Section 3, the U approximation and the V approximation have been described, and the analytic mean square errors (MSEs) have been derived in Section 4. Empirical MSEs of the azimuths are defined as

where W denotes the number of repetitions. The subscript (w) denotes the estimate associated with the w-th repetition out of W repetitions.
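The empirical MSE can be computed as in the following sketch, assuming the usual definition as the average over the W repetitions of the squared difference between the estimate and the true azimuth. The function name and the sample values are illustrative only.

```python
def empirical_mse(estimates, true_value):
    """Average squared error of the estimates over all repetitions."""
    num_repetitions = len(estimates)
    return sum((est - true_value) ** 2 for est in estimates) / num_repetitions

# Four hypothetical repetitions for a true azimuth of 10 degrees.
estimates_deg = [10.1, 9.9, 10.2, 9.8]
mse = empirical_mse(estimates_deg, true_value=10.0)  # about 0.025 deg^2
```

The same function applies unchanged to the estimates of the original ML algorithm and to the estimates obtained with the approximations, which is how the three simulated curves are compared.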

and in (39) and (42) are given by (9). Similarly, and in (40) and (43) are given by (29) and (30). and in (41) and (44) are obtained from (34) and (35).

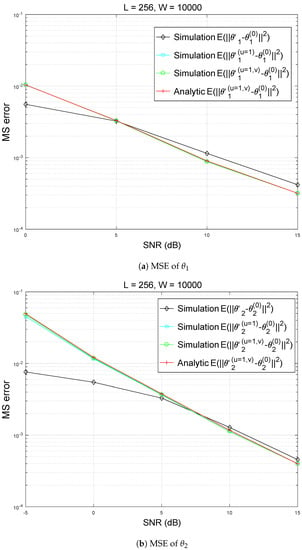

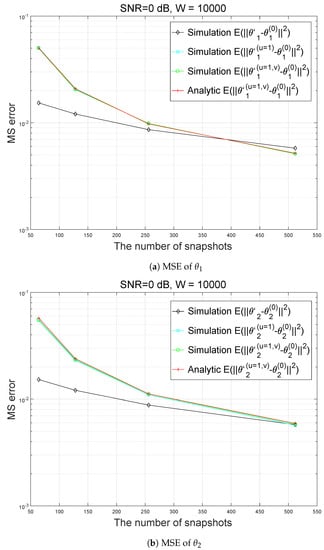

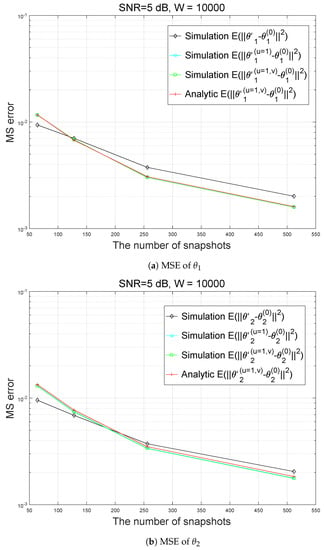

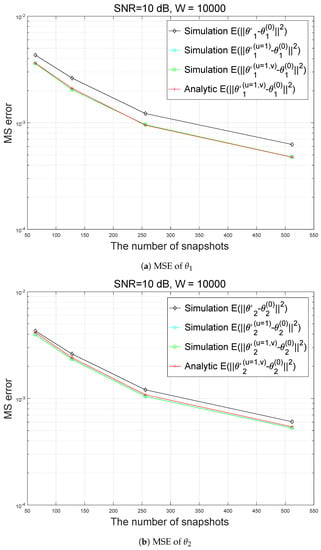

In Figure 1, Figure 2, Figure 3 and Figure 4, we illustrate the accuracy of estimation of azimuths. In the simulation, an additive noise is assumed to be zero-mean Gaussian-distributed. In Figure 1, Figure 2, Figure 3 and Figure 4, the results with ‘Simulation ’, ‘Simulation ’, ‘Simulation ’, and ‘Analytic ’ are obtained from (39), (40), (41), and (36), respectively.

Figure 1. Analytic and simulated MSEs (mean square errors) of the two azimuth estimates with respect to SNR (signal-to-noise ratio).

Figure 2. Analytic and simulated MSEs of the two azimuth estimates with respect to the number of snapshots (SNR = 0 dB).

Figure 3. Analytic and simulated MSEs of the two azimuth estimates with respect to the number of snapshots (SNR = 5 dB).

Figure 4. Analytic and simulated MSEs of the two azimuth estimates with respect to the number of snapshots (SNR = 10 dB).

Similarly, the results with ‘Simulation ’, ‘Simulation ’, ‘Simulation ’, and ‘Analytic ’ are obtained from (42), (43), (44) and (36), respectively.

For all the results in Figure 1, Figure 2, Figure 3 and Figure 4, the difference between ‘Simulation ’ and ‘Simulation ’ is much larger than that between ‘Simulation ’ and ‘Simulation ’, which implies that U approximation results in much greater error than V approximation. Therefore, to improve DOA estimation performance, second-order Taylor expansion, which corresponds to , can be used.

Actually, in all the results in Figure 1, Figure 2, Figure 3 and Figure 4, ‘Simulation ’ and ‘Simulation ’ are approximately equal.

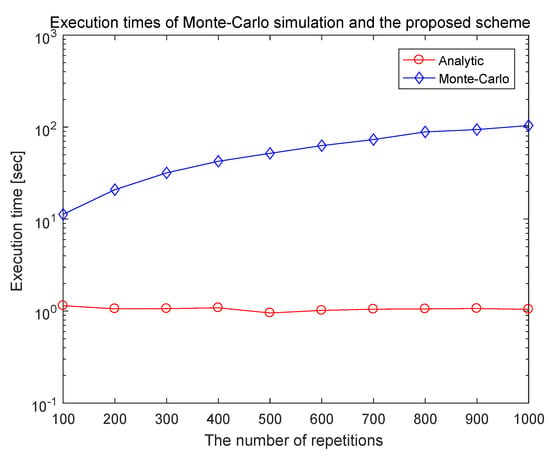

To quantify how computationally efficient the proposed algorithm is, the execution time is measured both for the analytically derived MSE and for the Monte Carlo simulation-based MSE.

The number of incident signals is two, where two signals are incident from and . The number of antenna elements is 10, and the number of snapshots is 1000. In getting the Monte Carlo simulation-based MSE, since the computational complexity is nearly proportional to the number of repetitions, the number of repetitions varies from 100 to 1000 in increments of 100.

Figure 5 shows how computationally efficient the proposed algorithm is. The execution times of the simulation-based MSEs and analytic MSEs are illustrated with respect to the number of repetitions. Note that the execution time for analytically derived MSE is essentially independent of the number of repetitions.

Figure 5. Comparison of the execution times of the Monte Carlo simulation-based MSEs and the analytic MSEs.

Figure 5 clearly shows that the execution time for the Monte Carlo simulation-based MSE is much greater than that for the analytically derived MSE, even with only 100 repetitions. Obtaining the analytically derived MSE is therefore much less computationally intensive than obtaining the Monte Carlo simulation-based MSE, which justifies the use of the analytically derived MSEs for performance analysis.

6. Conclusions and Summary

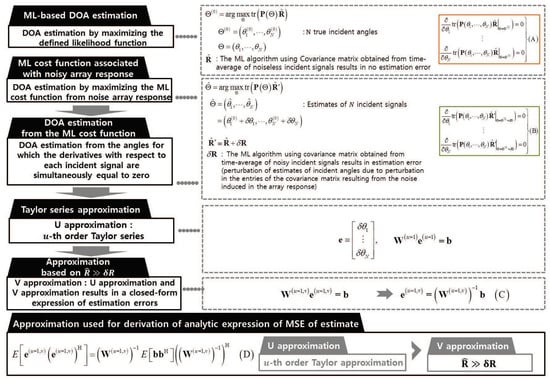

In Figure 6, the proposed algorithm is outlined. Note that Equations (A)–(D) in this section refer to Equations (A)–(D) in Figure 6, respectively. The constraints used for derivation of estimation errors are equations (A) and (B): equation (A) is valid since, in a noiseless environment, no estimation error occurs in the azimuth estimates. The estimation error due to an additive noise in noisy environment is formulated as equation (B).

Figure 6. Outline of the proposed performance analysis scheme.

To quantify the estimation error due to an additive noise, equations (A) and (B) and the two approximations, U approximation and V approximation, are used. Note that the covariance matrix in equation (A) and that in equation (B) are associated with the noiseless response and the noisy response, respectively. Applying the Taylor series approximation in equation (B) and using equation (A) in the approximated expression, the azimuth estimation error in equation (C) can be derived. To get an explicit expression of the MSE of the azimuth estimate, V approximation is applied, and the closed-form expression of the MSE of the estimate is obtained from equation (D).

To the best of our knowledge, no previous study used explicit equations (A) and (B) to derive the azimuth error in equation (C). One of the novelties of this paper is that the derivation is based on the observation that the azimuth error in the subscript in equation (B) can be analytically derived since equation (A) is true for noiseless covariance matrix.

In summary, applying U approximation to equation (B) and using the constraint in equation (A), the estimation error in equation (C) can be obtained. The MSE of the azimuth estimation error in equation (D) is obtained from equation (C) and V approximation. Note that, to get equation (D) from equation (C), the statistics of an additive noise should also be exploited.

Quantitative study on the estimation error for direction-of-arrival estimation in terms of standard deviation of an additive noise has been addressed in this paper.

In this paper, for the case of estimating the azimuths of multiple incident signals, the closed-form expression of the MSE of the DOA estimate for the ML algorithm has been derived by stepwise approximations. The antenna array is assumed to be a ULA. The closed form of the MSE has been derived by using the Taylor series approximation, which linearizes the nonlinear parts of the array vector, and an additional approximation based on the assumption that the estimation error is very small at high SNR. The closed form of the MSE has been verified through numerical results. All of the stepwise approximated simulation results and the results obtained from the closed form of the MSE show good agreement.

Although the formulation and the numerical results are presented for two incident signals in this paper, an extension to more than two incident signals is conceptually straightforward.

In this paper, we rigorously derive how the MSE of the ML algorithm for direction-of-arrival can be expressed in terms of various parameters, which include the number of sensor elements, the number of incident signals, the number of snapshots, and the variance of additive noises on the antenna elements. Although, for convenience, the statistics of additive noise is assumed to be non-zero-mean Gaussian distributed, the derivation in the Appendices can be extended to the case where noise can be modeled as any other random variable as long as the moments of the random variable are analytically available.

Author Contributions

J.W.P. and K.-H.L. made a Matlab implementation of the proposed algorithm. J.-H.L. formulated the proposed algorithm. J.-H.L., J.W.P., and K.-H.L. wrote an initial draft, and J.-H.L. and J.W.P. revised the manuscript. J.W.P. and K.-H.L. derived the results in the appendices. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (2018R1D1A1B07048294). The authors gratefully acknowledge the support from Electronic Warfare Research Center at Gwangju Institute of Science and Technology (GIST), originally funded by Defense Acquisition Program Administration (DAPA) and Agency for Defense Development (ADD).

Conflicts of Interest

The authors declare no conflict of interest.

Notation

| Hermitian matrix transpose | |
| Noisy quantity | |
| Difference between noisy quantity and the corresponding noiseless quantity | |
| Matrix whose columns are array vector for and | |
| Estimate of the c-th incident signal | |
| True azimuth of c-th incident signal | |
| Noisy signals on the antenna array at | |
| Sample covariance matrix of the noiseless signal | |
| The entry at the k-th row and the l-th column of | |
| Sample covariance matrix of the noisy signal | |
| The entry at the k-th row and the l-th column of the | |
| Difference between and | |
| Difference between and | |
| First-order U approximation of based on Taylor expansion | |
| First-order U approximation of | |
| Difference between and | |
| V approximation of | |
| Difference between and | |
| associated with w-th repetition out of W repetitions. | |
| associated with w-th repetition out of W repetitions. | |
| associated with w-th repetition out of W repetitions. | |

Abbreviations

The following abbreviations are used in this manuscript:

| DOA | Direction-of-Arrival |
| ML | Maximum Likelihood |
| MSE | Mean Square Error |
| SNR | Signal-to-Noise Ratio |
| ULA | Uniform Linear Array |

Appendix A. First Order Derivative of $f_{kl}(\theta_1,\theta_2)$ and $g_{kl}(\theta_1,\theta_2)$

Note that the first and the second order derivatives of are given in Appendix B and first and second order derivatives of are given in Appendix C.

Appendix B. First and Second Order Derivatives of $\mathrm{Det}(\theta_1,\theta_2)$

Appendix C. First and Second Order Derivatives of $B(\theta_1,\theta_2)$

The first order and the second order derivatives of and are given in Appendix D.

Appendix D. First and Second Order Derivatives of $a_k(\theta_1)$ and $a_k(\theta_2)$

Appendix E. Derivations of $f_{kl}(\theta_1,\theta_2)$ and $g_{kl}(\theta_1,\theta_2)$

Let and denote the partial derivatives of with respect to and , respectively:

Appendix F. Derivations of $f_{kl}(\theta_1,\theta_2)$ and $g_{kl}(\theta_1,\theta_2)$ with the Taylor Series Approximation

Appendix G. Derivation of

From (A55), is given by

Finally, when , is given by

for and and .

for and and .

for and and .

for and and .

for and and .

for and and .

for and and .

for and and .

for and and and .

for and and and .

for and and and .

for and and and .

for and and and .

for and and and .

otherwise. When , is given by

for and and .

for and and .

for and and .

for and and .

for .

otherwise.

Appendix H. Fourth Order Non-Central Moment of Non-Zero-Mean Complex Gaussian Random Variables with Variance $\sigma^2$

- (a)

For , (A78) can be written as

The first term of (A79) is given by

- (b)

Using a similar way to get (A88), for , is given by

Appendix I. Third Order Non-Central Moment of Non-Zero-Mean Complex Gaussian Random Variable with Variance $\sigma^2$

We define ten cases depending on how , and e are related:

- and and

- and and

- and and

- and and

- and and

- and and

- and and

- and and

- and and

- and and

- for Case I can be written as: Note that, in deriving (A91) and (A92), we used the fact that the real part and the imaginary part of noise are independent and identically distributed with . Using the same manipulation used in obtaining (A92), for Case II–Case X, can be shown as

- In a similar way to get (A94), we get

Appendix J. Second Order Non-Central Moment of Non-Zero-Mean Complex Gaussian Random Variable with Variance $\sigma^2$

Depending on how a, b, d and e are related, we define four cases:

- and

- and

- and

- and

- For Case I, is given by Similarly, it can be shown that is identically for Case II–Case IV:

- Using the same algebraic manipulation used in evaluating , it can be shown that is equal to for Case I–Case IV:

- Using the same algebraic manipulation used in evaluating , it can be shown that is equal to for Case I–Case IV:
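Second-order moments of this kind can be checked by simulation. The sketch below assumes a single noise sample whose real and imaginary parts are independent Gaussian variables with the same mean and the same variance, as in Section 2; the symbols `mean` and `sigma`, the numerical values, and the two closed forms in the comments are worked out for this sketch from that assumption and are not copied from the appendix.

```python
import numpy as np

def complex_noise(num_samples, mean, sigma, rng):
    """Samples n = x + j*y with independent x, y ~ N(mean, sigma^2)."""
    return (rng.normal(mean, sigma, num_samples)
            + 1j * rng.normal(mean, sigma, num_samples))

mean, sigma = 0.5, 1.0
rng = np.random.default_rng(3)
n = complex_noise(200_000, mean, sigma, rng)

# E[n n*] = E[x^2] + E[y^2] = 2 (sigma^2 + mean^2)
expected_abs_sq = 2.0 * (sigma ** 2 + mean ** 2)
# E[n n] = E[x^2] - E[y^2] + 2j E[x] E[y] = 2j mean^2
expected_sq = 2j * mean ** 2

empirical_abs_sq = np.mean(np.abs(n) ** 2)
empirical_sq = np.mean(n ** 2)
```

For zero-mean noise the second quantity vanishes, which is the familiar circular case; the non-zero mean is what introduces the extra terms.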

References

- Del Rio, J.E.F.; Catedra-Perez, M.F. A comparison between matrix pencil and Root-MUSIC for direction-of-arrival estimation making use of uniform linear arrays. Digit. Signal Process. 1997, 7, 153–162.

- Silverstein, S.D.; Zoltowski, M.D. The mathematical basis for element and fourier beamspace MUSIC and root-MUSIC algorithms. Digit. Signal Process. 1991, 1, 161–175.

- Weng, Z.; Djuric, P.M. A search-free DOA estimation algorithm for coprime arrays. Digit. Signal Process. 2014, 24, 27–33.

- He, Z.-Q.; Shi, Z.-P.; Huang, L. Covariance sparsity-aware DOA estimation for nonuniform noise. Digit. Signal Process. 2014, 24, 75–81.

- Li, J.F.; Zhang, X.F. Two-dimensional angle estimation for monostatic MIMO arbitrary array with velocity receive sensors and unknown locations. Digit. Signal Process. 2014, 24, 34–41.

- Anton, H. Elementary Linear Algebra, 11th ed.; Wiley: Hoboken, NJ, USA, 2019.

- Stoica, P.; Gershman, A.B. Maximum-likelihood DOA estimation by data-supported grid search. IEEE Signal Process. Lett. 1999, 6, 273–275.

- Athley, F. Threshold region performance of maximum likelihood direction of arrival estimators. IEEE Trans. Signal Process. 2005, 53, 1359–1373.

- Pesavento, M.; Gershman, A.B. Maximum-likelihood direction-of-arrival estimation in the presence of unknown nonuniform noise. IEEE Trans. Signal Process. 2001, 49, 1310–1324.

- Vorobyov, S.A.; Gershman, A.B.; Wong, K.M. Maximum likelihood direction-of-arrival estimation in unknown noise fields using sparse sensor arrays. IEEE Trans. Signal Process. 2005, 53, 34–43.

- Shin, J.W.; Lee, Y.-J.; Kim, H.-N. Reduced-complexity maximum likelihood direction-of-arrival estimation based on spatial aliasing. IEEE Trans. Signal Process. 2014, 62, 6568–6581.

- Vincent, F.; Besson, O.; Chaumette, E. Approximate unconditional maximum likelihood direction of arrival estimation for two closely spaced targets. IEEE Signal Process. Lett. 2015, 22, 86–89.

- Liu, Z.-M.; Huang, Z.-T.; Zhou, Y.-Y. An efficient maximum likelihood method for direction-of-arrival estimation via sparse Bayesian learning. IEEE Trans. Wirel. Commun. 2012, 11, 3607–3617.

- Boccato, L.; Krummenauer, R.; Attux, R.; Lopes, A. Application of natural computing algorithms to maximum likelihood estimation of direction of arrival. Signal Process. 2012, 92, 1338–1352.

- Wang, H.; Kay, S.; Saha, S. An importance sampling maximum likelihood direction of arrival estimator. IEEE Trans. Signal Process. 2008, 56, 5082–5092.

- Magdy, A.; Mahmoud, K.R.; Abdel-Gawad, S.G.; Ibrahim, I.I. Direction of arrival estimation based on maximum likelihood criteria using gravitational search algorithm. In Proceedings of the Progress in Electromagnetics Research Symposium, Taipei, Taiwan, 25–28 March 2013; pp. 1162–1167.

- Zhang, Z.; Lin, J.; Shi, Y. Application of artificial bee colony algorithm to maximum likelihood DOA estimation. J. Bionic Eng. 2013, 10, 100–109.

- Seghouane, A.K. A Kullback–Leibler methodology for unconditional ML DOA estimation in unknown nonuniform noise. IEEE Trans. Aerosp. Electron. Syst. 2011, 47, 3012–3021.

- Chen, C.E.; Lorenzelli, F.; Hudson, R.E.; Yao, K. Maximum likelihood DOA estimation of multiple wideband sources in the presence of nonuniform sensor noise. EURASIP J. Adv. Signal Process. 2008, 14, 1–12.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).