A Study of the Effectiveness Verification of Computer-Based Dementia Assessment Contents (Co-Wis): Non-Randomized Study

Abstract

Featured Application

1. Introduction

2. Materials and Methods

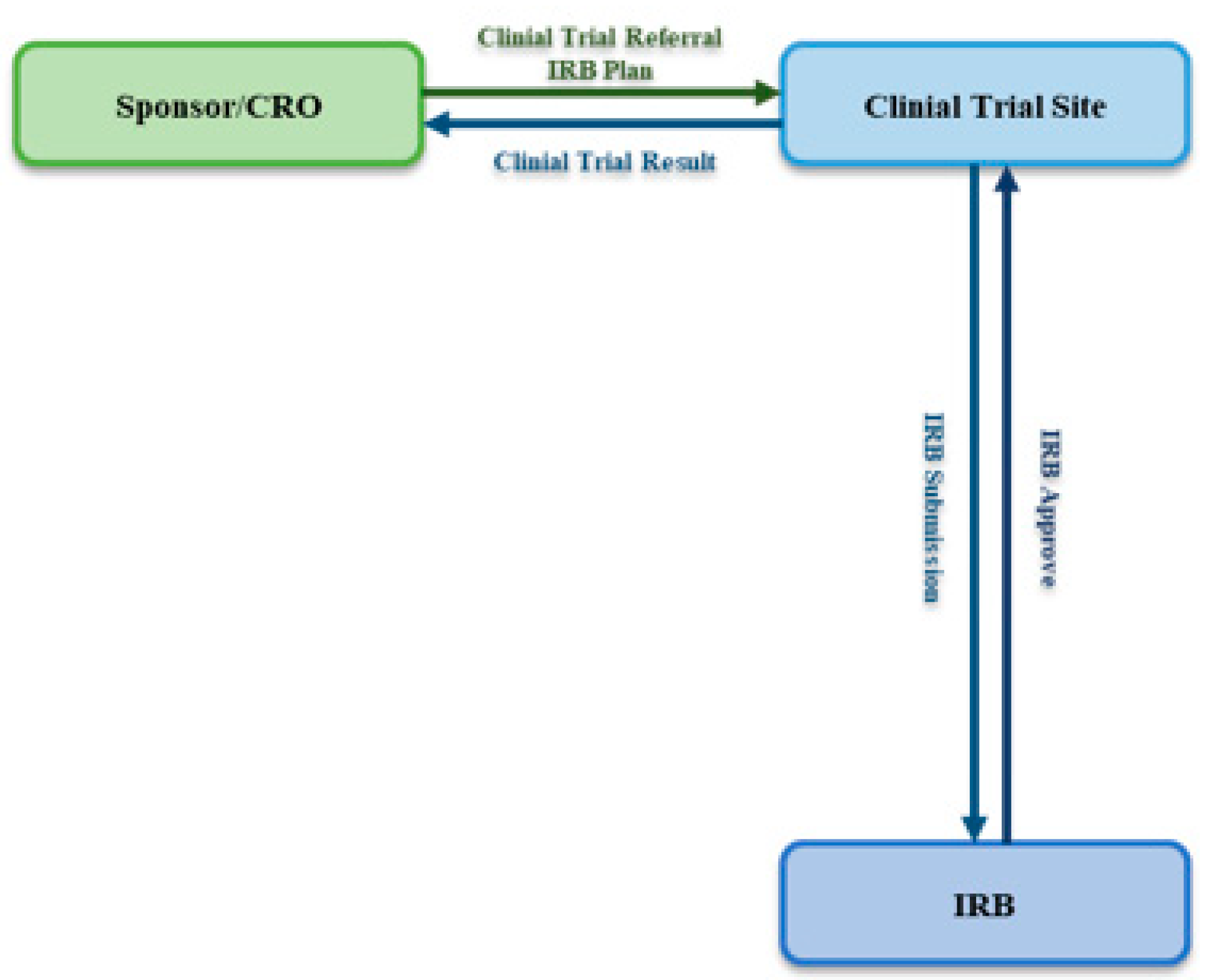

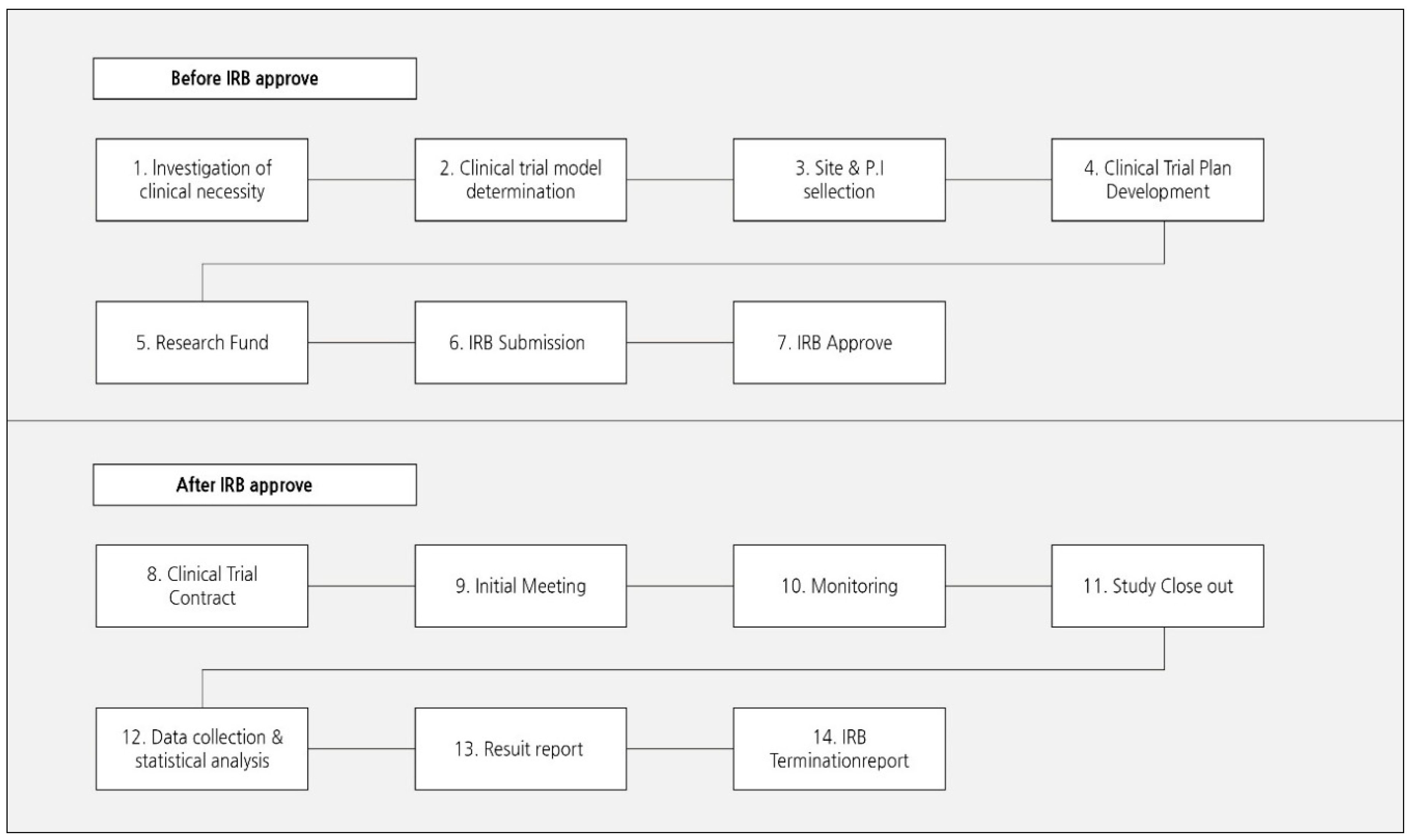

2.1. Clinical Trial Ethics (Institutional Review Board Approval)

2.2. Clinical Trial Design

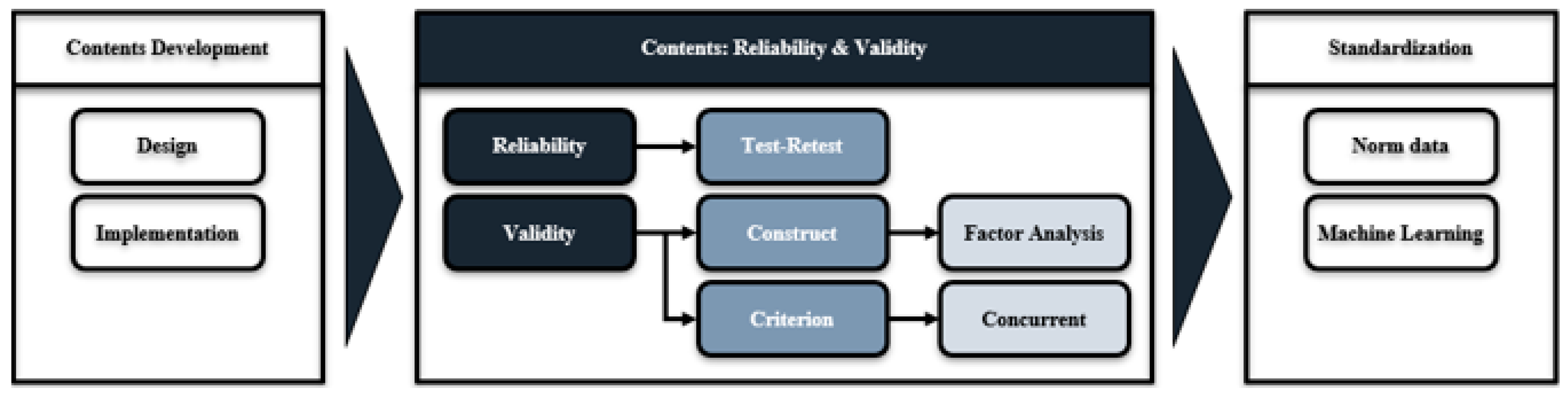

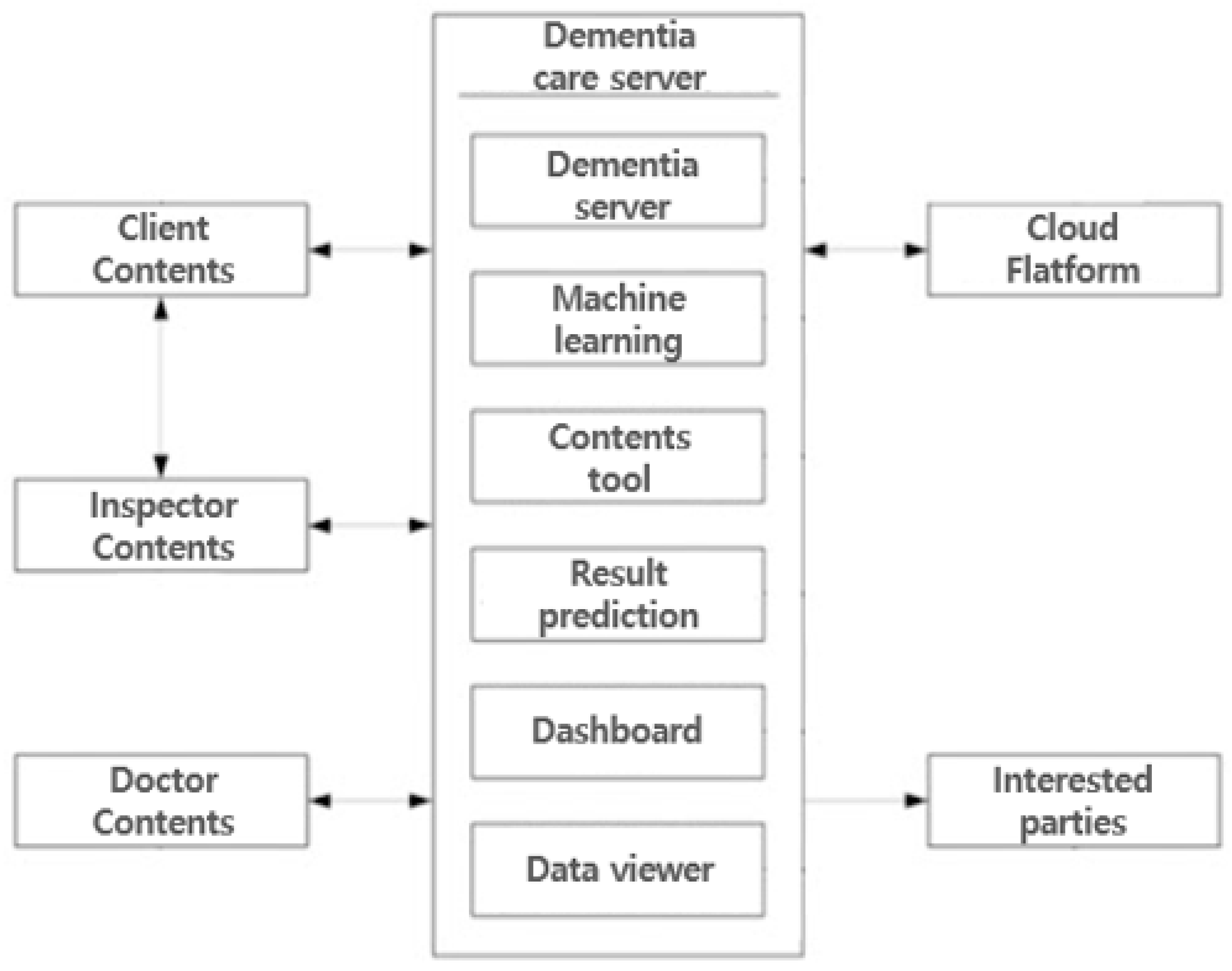

2.3. Computer-Based Dementia Assessment Contents (Co-Wis)

2.4. Data Analysis

3. Results

3.1. Descriptive Statistics

3.2. Concurrent Validity

3.3. Test–Retest Reliability

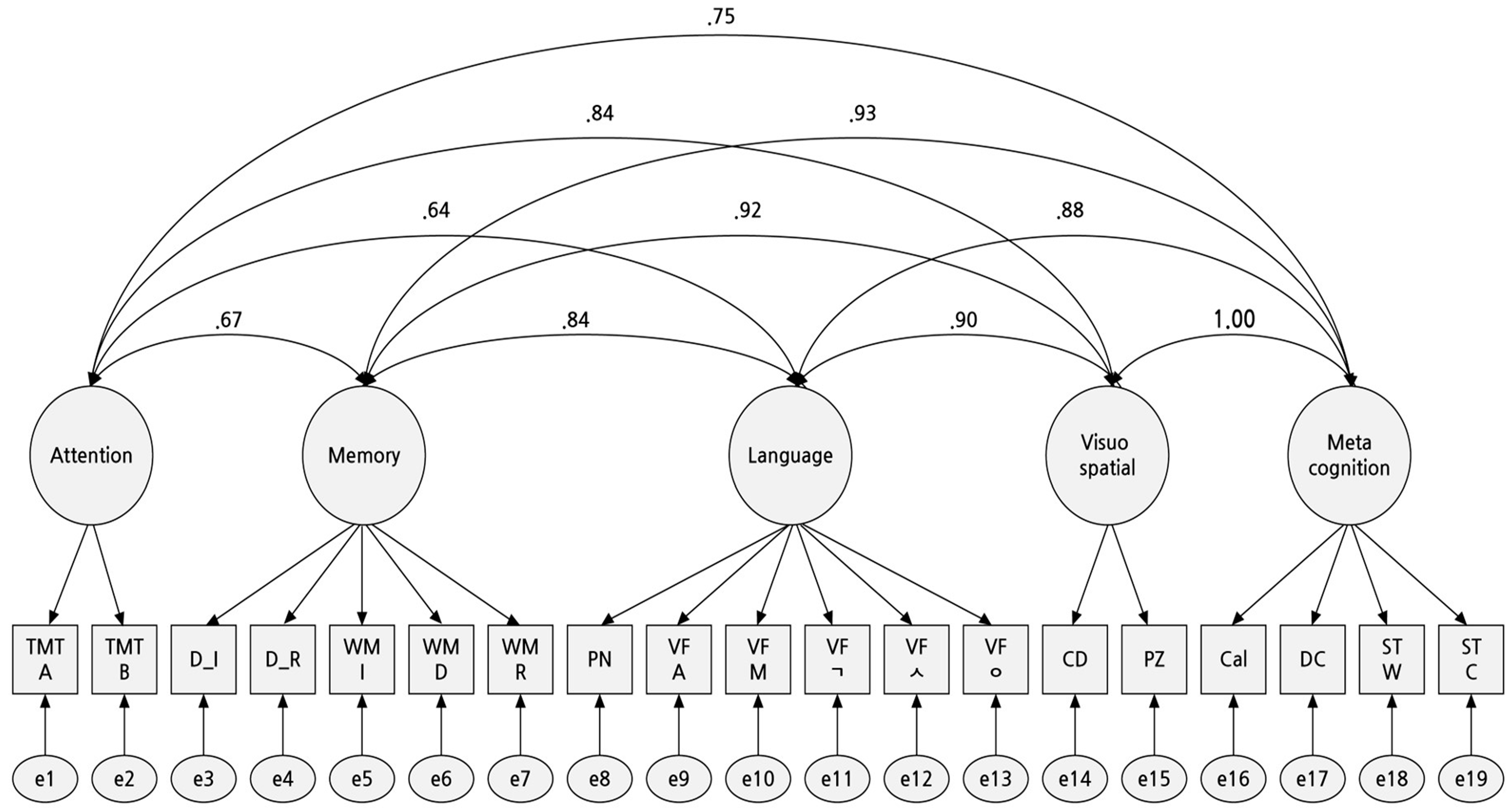

3.4. Construct Validity

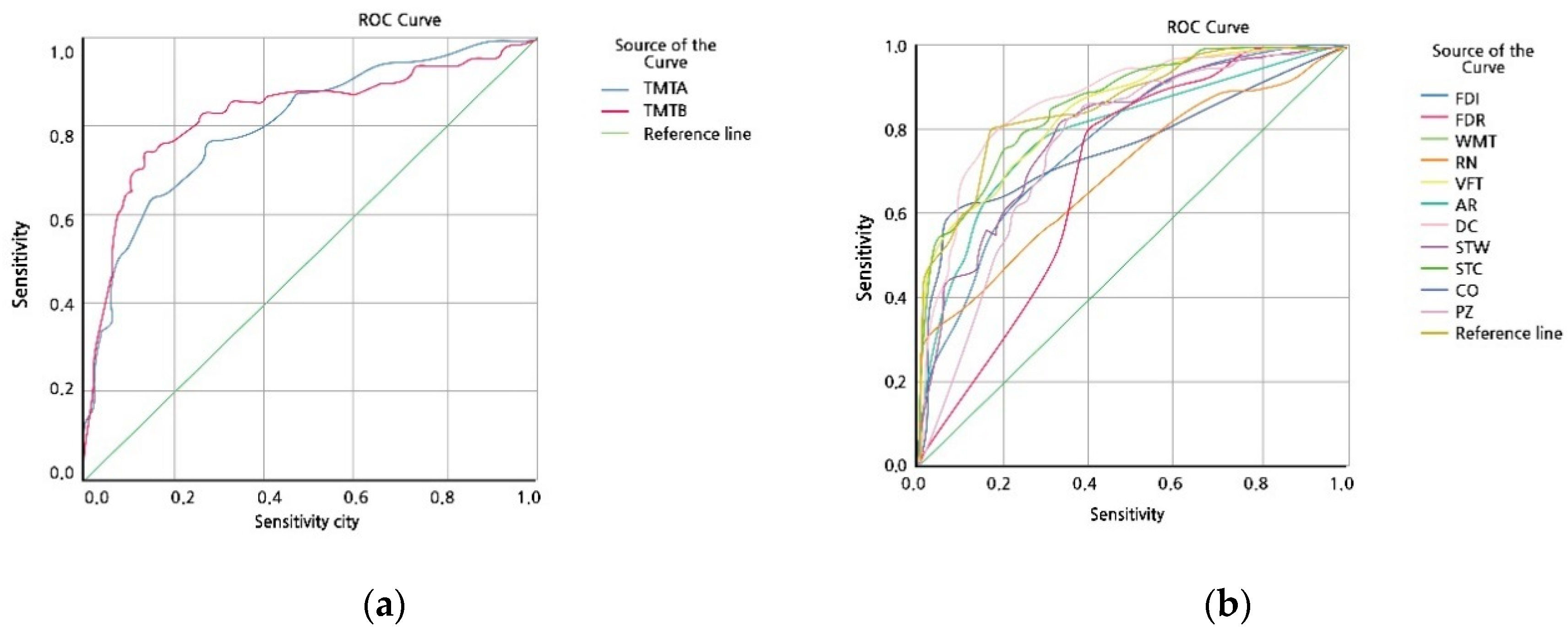

3.5. Signal Detection Analysis

4. Discussion

5. Patents

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Cognition Domain | Subtest | Subtest Item |
|---|---|---|
| Attention | Trail Making Test | A, B type |
| Memory | Figure Drawing | Immediate, Recall |
| | Word Memory | Immediate, Delay, Recognition |
| Language | Picture Naming | |
| | Verbal Fluency | Animal, Market, Initial Sound |
| Visuospatial | Clock Drawing | |
| | Puzzle Construction | |
| Executive Function | Four Fundamental Arithmetic | Add, Subtract, Multiply, Divide |
| | Digit Coding | |
| | Stroop | Word, Color |

| Demographics | Male, N (%) or Mean (SD) | Female, N (%) or Mean (SD) | Total, N (%) or Mean (SD) |
|---|---|---|---|
| Sex | 46 (40.71) | 67 (59.29) | 113 (100) |
| Age (years) | | | |
| Range | 47–86 | 45–86 | 45–86 |
| 45–59 | 7 (15.22) | 7 (10.45) | 14 (12.39) |
| 60–69 | 7 (15.22) | 21 (31.34) | 28 (24.78) |
| 70–79 | 27 (58.70) | 27 (40.30) | 54 (47.79) |
| 80 and over | 5 (10.87) | 12 (17.91) | 17 (15.04) |
| Mean (SD) | 70.11 (10.29) | 71.08 (8.63) | 70.68 (9.31) |
| Education (years) | | | |
| 0–10 | 17 (36.96) | 52 (77.61) | 69 (61.06) |
| 11–20 | 29 (63.04) | 15 (22.39) | 44 (38.94) |
| Mean (SD) | 11.50 (4.35) | 7.37 (4.12) | 9.05 (4.67) |
| K-MMSE | | | |
| 10–18 | 6 (13.04) | 12 (17.91) | 18 (15.93) |
| 19–26 | 40 (86.96) | 55 (82.09) | 95 (84.07) |
| Mean (SD) | 23.22 (3.58) | 22.99 (3.63) | 23.08 (3.60) |
| CDR | | | |
| 0.5 | 28 (60.87) | 37 (55.22) | 65 (57.52) |
| 1 | 13 (28.26) | 25 (37.31) | 38 (33.63) |
| 2 | 5 (10.87) | 5 (7.46) | 10 (8.85) |
| Mean (SD) | 0.80 (0.48) | 0.80 (0.42) | 0.80 (0.44) |
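Each percentage in the demographics table is a column percentage (the count divided by that column's N), and the mean CDR in the last row is the mean of a discrete score, so both can be recomputed from the counts alone. A minimal Python sketch; the helper names are illustrative and not part of Co-Wis or the study software:

```python
# Recompute column percentages and grouped means from the demographics counts.
# Helper names are illustrative; they are not part of Co-Wis.


def column_percent(count: int, column_n: int) -> float:
    """Percentage of a column total, rounded to two decimals as in the table."""
    return round(100 * count / column_n, 2)


def grouped_mean(counts: dict) -> float:
    """Mean of a discrete score given a mapping {score: number of participants}."""
    n = sum(counts.values())
    return sum(score * k for score, k in counts.items()) / n


# Column sizes from the Sex row: 46 men, 67 women, 113 in total.
N_MALE, N_FEMALE, N_TOTAL = 46, 67, 113

# Age band 45-59: 7 men, 7 women, 14 overall.
age_45_59 = (
    column_percent(7, N_MALE),
    column_percent(7, N_FEMALE),
    column_percent(14, N_TOTAL),
)

# CDR distribution for each column: {CDR score: count}.
cdr = {
    "male": {0.5: 28, 1.0: 13, 2.0: 5},
    "female": {0.5: 37, 1.0: 25, 2.0: 5},
    "total": {0.5: 65, 1.0: 38, 2.0: 10},
}

if __name__ == "__main__":
    print(age_45_59)  # (15.22, 10.45, 12.39)
    for column, counts in cdr.items():
        print(column, f"{grouped_mean(counts):.2f}")  # 0.80 in every column
```

The same check applies to any band: the counts in a column should sum to that column's N.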

| Co-Wis Subtest | Co-Wis Mean (SD) | SNSB-II Subtest (Traditional Assessment) | SNSB-II Mean (SD) | r |
|---|---|---|---|---|
| Word memory (immediate) | 11.39 (4.98) | SVLT-E (immediate) | 13.87 (5.64) | 0.607 ** |
| Word memory (delay) | 1.69 (2.20) | SVLT-E (delay) | 2.99 (2.77) | 0.534 ** |
| Word memory (recognition) | 5.88 (2.49) | SVLT-E (recognition) | 8.53 (3.02) | 0.409 ** |
| Word memory (total) | 18.96 (8.39) | SVLT-E (total) | 25.39 (9.76) | 0.654 ** |
| Figure drawing (immediate) | 14.81 (4.78) | RCFT (copy) | 26.14 (10.45) | 0.501 ** |
| Figure drawing (recall) | 4.65 (5.46) | RCFT (delay) | 8.50 (8.03) | 0.592 ** |
| Clock drawing | 2.24 (0.98) | Clock drawing | 2.32 (0.91) | 0.639 ** |
| Puzzle construction | 1.84 (1.82) | Clock drawing | 2.32 (0.91) | 0.468 ** |
| Four fundamental arithmetic (total) | 6.19 (3.30) | Calculation (total) | 8.74 (3.18) | 0.776 ** |
| Digit coding | 7.91 (6.83) | Digit coding | 8.74 (3.18) | 0.776 ** |
| Stroop (word) | 40.72 (17.15) | K-CWST-60 (word) | 51.87 (26.93) | 0.494 ** |
| Stroop (color) | 18.56 (14.97) | K-CWST-60 (color) | 27.89 (16.78) | 0.655 ** |
| Picture naming | 6.01 (1.99) | S-K-BNT | 10.11 (2.98) | 0.664 ** |
| Verbal fluency (total) | 31.06 (16.99) | COWAT (total) | 38.47 (21.17) | 0.793 ** |
| Trail making (A type) | 47.91 (56.66) | Trail making (A type) | 56.05 (55.88) | 0.422 ** |
| Trail making (B type) | 135.04 (115.67) | Trail making (B type) | 145.04 (112.74) | 0.798 ** |
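The concurrent-validity table pairs each Co-Wis subtest with an SNSB-II counterpart and reports r. Assuming r is a Pearson product-moment correlation computed across participants (this section does not name the coefficient), it can be sketched as follows; the five score pairs are hypothetical, not study data:

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between paired scores (one pair per participant)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 pairs")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    dx = [xi - mean_x for xi in x]
    dy = [yi - mean_y for yi in y]
    cov = sum(a * b for a, b in zip(dx, dy))  # co-deviation sum
    ss_x = sum(a * a for a in dx)             # sum of squares, x
    ss_y = sum(b * b for b in dy)             # sum of squares, y
    return cov / math.sqrt(ss_x * ss_y)


# Hypothetical scores for five participants (NOT study data):
# Co-Wis word memory (total) paired with SNSB-II SVLT-E (total).
co_wis = [12, 18, 20, 25, 30]
snsb = [15, 22, 27, 30, 38]

if __name__ == "__main__":
    print(round(pearson_r(co_wis, snsb), 3))
```

Because r depends only on how participants are ordered and spaced on each test, it can be high even when the two tests have different means, as in every row of the table.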

| Co-Wis Subtest | Co-Wis Visit 1 Mean (SD) | Co-Wis Visit 2 Mean (SD) | r |
|---|---|---|---|
| Word memory (immediate) | 11.39 (4.95) | 13.42 (6.22) | 0.772 ** |
| Word memory (delay) | 1.69 (2.20) | 2.72 (2.79) | 0.626 ** |
| Word memory (recognition) | 5.88 (2.49) | 6.46 (2.42) | 0.683 ** |
| Word memory (total) | 18.96 (8.39) | 22.60 (10.47) | 0.816 ** |
| Figure drawing (immediate) | 14.81 (4.78) | 15.48 (5.10) | 0.564 ** |
| Figure drawing (recall) | 4.65 (5.46) | 8.82 (6.87) | 0.553 ** |
| Clock drawing | 2.24 (0.98) | 2.38 (0.81) | 0.588 ** |
| Puzzle construction | 1.84 (1.82) | 1.90 (1.86) | 0.529 ** |
| Four fundamental arithmetic | 6.19 (3.30) | 6.48 (3.39) | 0.759 ** |
| Digit coding | 7.91 (6.83) | 9.02 (7.64) | 0.872 ** |
| Stroop (word) | 40.72 (17.15) | 41.49 (15.82) | 0.587 ** |
| Stroop (color) | 18.56 (14.97) | 21.39 (15.57) | 0.765 ** |
| Picture naming | 6.01 (1.99) | 6.47 (1.99) | 0.754 ** |
| Verbal fluency (total) | 31.06 (16.99) | 32.75 (17.05) | 0.829 ** |
| Trail making (A type) | 47.91 (56.66) | 39.53 (40.35) | 0.251 ** |
| Trail making (B type) | 135.04 (115.67) | 109.49 (105.60) | 0.696 ** |
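A retest correlation measures how consistently participants are ranked across the two visits, not whether their scores shift. The visit 1 and visit 2 means in the test–retest table show that shift directly. The sketch below, with illustrative names, computes it for a few rows, assuming Trail Making is scored as completion time so that a lower mean is better:

```python
# Mean change between Co-Wis visit 1 and visit 2, using means from the
# test-retest table. Assumption: Trail Making is a completion time, so lower
# is better there; all other listed subtests are treated as higher-is-better.

VISITS = {
    # subtest: (visit 1 mean, visit 2 mean, higher_is_better)
    "Word memory (total)": (18.96, 22.60, True),
    "Figure drawing (recall)": (4.65, 8.82, True),
    "Verbal fluency (total)": (31.06, 32.75, True),
    "Trail making (A type)": (47.91, 39.53, False),
    "Trail making (B type)": (135.04, 109.49, False),
}


def mean_change(visit1: float, visit2: float) -> float:
    """Visit 2 mean minus visit 1 mean, rounded to two decimals."""
    return round(visit2 - visit1, 2)


def improved(visit1: float, visit2: float, higher_is_better: bool) -> bool:
    """True if the visit 2 mean is better than visit 1 in the subtest's direction."""
    return visit2 > visit1 if higher_is_better else visit2 < visit1


if __name__ == "__main__":
    for name, (v1, v2, hib) in VISITS.items():
        print(f"{name}: change {mean_change(v1, v2):+.2f}, improved={improved(v1, v2, hib)}")
```

Reading r together with the mean change separates rank-order stability from a shift in level between visits.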

| Measure | Result Value |
|---|---|
| Kaiser–Meyer–Olkin (KMO) measure of sampling adequacy | 0.928 |
| Bartlett's test of sphericity, p | < 0.001 |
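The KMO measure and Bartlett's test check whether a correlation matrix is suitable for factor analysis. Below is a NumPy sketch of the standard formulas; it is not the authors' software, and the data are simulated. If the analysis used the 19 subtest scores listed in the communalities table, Bartlett's test would have p(p - 1)/2 = 171 degrees of freedom.

```python
import numpy as np


def bartlett_sphericity(corr: np.ndarray, n: int) -> tuple:
    """Bartlett's test that a p x p correlation matrix is an identity matrix.

    chi2 = -(n - 1 - (2p + 5) / 6) * ln|R|, with df = p(p - 1) / 2.
    Returns (chi2, df).
    """
    p = corr.shape[0]
    _, logdet = np.linalg.slogdet(corr)  # ln|R| without under/overflow
    chi2 = -(n - 1 - (2 * p + 5) / 6) * logdet
    return float(chi2), p * (p - 1) // 2


def kmo(corr: np.ndarray) -> float:
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy.

    Compares squared correlations with squared partial correlations; the
    partial correlations come from the inverse of the correlation matrix.
    """
    inv = np.linalg.inv(corr)
    scale = np.sqrt(np.diag(inv))
    partial = -inv / np.outer(scale, scale)
    off_diag = ~np.eye(corr.shape[0], dtype=bool)
    r2 = np.sum(corr[off_diag] ** 2)
    q2 = np.sum(partial[off_diag] ** 2)
    return float(r2 / (r2 + q2))


# Simulated example (NOT study data): 113 cases, 6 scores sharing one factor.
rng = np.random.default_rng(0)
latent = rng.normal(size=(113, 1))
scores = latent + 0.5 * rng.normal(size=(113, 6))
R_sim = np.corrcoef(scores, rowvar=False)

if __name__ == "__main__":
    print(bartlett_sphericity(R_sim, n=113), round(kmo(R_sim), 3))
```

If SciPy is available, `scipy.stats.chi2.sf(chi2, df)` turns the statistic into a p value. KMO approaches 1 when partial correlations are small relative to the raw correlations, which is the pattern shared variance produces.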

| Co-Wis Subtest | Initial | Extraction |
|---|---|---|
| Word memory (immediate) | 1.000 | 0.636 |
| Word memory (delay) | 1.000 | 0.635 |
| Word memory (recognition) | 1.000 | 0.456 |
| Figure drawing (immediate) | 1.000 | 0.374 |
| Figure drawing (recall) | 1.000 | 0.502 |
| Clock drawing | 1.000 | 0.574 |
| Puzzle construction | 1.000 | 0.527 |
| Four fundamental arithmetic | 1.000 | 0.610 |
| Digit coding | 1.000 | 0.734 |
| Stroop (word) | 1.000 | 0.641 |
| Stroop (color) | 1.000 | 0.691 |
| Picture naming | 1.000 | 0.576 |
| Verbal fluency (category: animal) | 1.000 | 0.645 |
| Verbal fluency (category: market) | 1.000 | 0.636 |
| Verbal fluency (initial sound: ㄱ) | 1.000 | 0.783 |
| Verbal fluency (initial sound: ㅅ) | 1.000 | 0.748 |
| Verbal fluency (initial sound: ㅇ) | 1.000 | 0.728 |
| Trail making (A type) | 1.000 | 0.577 |
| Trail making (B type) | 1.000 | 0.710 |
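Every subtest has an initial communality of 1.000, which is what principal component extraction gives before any components are dropped; this suggests, though the section does not state it, a principal-component extraction. A variable's extraction communality is the sum of its squared loadings on the retained components, and an orthogonal rotation such as varimax leaves it unchanged. The sketch assumes five retained components to mirror the five-factor confirmatory model reported below; the number actually retained is not given here, and the data are simulated.

```python
import numpy as np


def pca_communalities(corr: np.ndarray, n_components: int) -> np.ndarray:
    """Communalities after keeping the n_components largest principal components.

    Loadings are eigenvectors scaled by sqrt(eigenvalue); a variable's
    communality is the sum of its squared loadings on the kept components.
    """
    eigvals, eigvecs = np.linalg.eigh(corr)             # ascending eigenvalues
    top = np.argsort(eigvals)[::-1][:n_components]      # largest first
    loadings = eigvecs[:, top] * np.sqrt(eigvals[top])  # unrotated loadings
    return np.sum(loadings ** 2, axis=1)


# Simulated example (NOT study data): 113 cases, 19 subtest-like scores.
rng = np.random.default_rng(1)
factors = rng.normal(size=(113, 5))
weights = rng.uniform(0.3, 0.8, size=(5, 19))
scores = factors @ weights + rng.normal(size=(113, 19))
R = np.corrcoef(scores, rowvar=False)

initial = pca_communalities(R, n_components=19)    # all components kept: 1.0 each
extraction = pca_communalities(R, n_components=5)  # hypothetical 5-component solution

if __name__ == "__main__":
    print(np.round(initial, 3))
    print(np.round(extraction, 3))
```

A low extraction value, such as the 0.374 for Figure drawing (immediate), means the retained components account for a smaller share of that subtest's variance.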

| Model | χ² | df | CMIN/df | TLI | CFI | RMSEA |
|---|---|---|---|---|---|---|
| 5-factor | 264.696 | 142 | 1.864 | 0.876 | 0.897 | 0.088 |
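CMIN/df and RMSEA follow directly from the reported χ² and df. Assuming the confirmatory model was fitted to all N = 113 participants (the section does not say), the usual RMSEA formula with N - 1 in the denominator reproduces the reported 0.088. TLI and CFI also need the baseline (independence) model χ², which is not reported here, so the sketch only gives their formulas:

```python
import math

# Fit indices for a CFA model. chi2 and df come from the fit table; N = 113 is
# an assumption. Baseline-model values for CFI/TLI are NOT reported, so any
# baseline numbers passed to those functions are hypothetical.


def cmin_df(chi2: float, df: int) -> float:
    """Normed chi-square, CMIN/df."""
    return chi2 / df


def rmsea(chi2: float, df: int, n: int) -> float:
    """RMSEA with N - 1 in the denominator (the convention assumed here)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_m: float, df_m: int, chi2_b: float, df_b: int) -> float:
    """Comparative fit index of model m against baseline model b."""
    d_m = max(chi2_m - df_m, 0.0)
    d_b = max(chi2_b - df_b, d_m)
    return 1.0 if d_b == 0 else 1.0 - d_m / d_b


def tli(chi2_m: float, df_m: int, chi2_b: float, df_b: int) -> float:
    """Tucker-Lewis index (non-normed fit index)."""
    ratio_b = chi2_b / df_b
    return (ratio_b - chi2_m / df_m) / (ratio_b - 1.0)


if __name__ == "__main__":
    print(f"CMIN/df = {cmin_df(264.696, 142):.3f}")     # 1.864
    print(f"RMSEA   = {rmsea(264.696, 142, 113):.3f}")  # 0.088
```

Working the RMSEA by hand: (264.696 - 142) / (142 × 112) ≈ 0.00771, whose square root is about 0.0878.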

| Co-Wis Subtest | Area Under the Curve | Standard Error | p Value | 95% CI Lower | 95% CI Upper |
|---|---|---|---|---|---|
| Trail making (A type) | 0.800 | 0.043 | 0.001 | 0.715 | 0.885 |
| Trail making (B type) | 0.824 | 0.043 | 0.001 | 0.739 | 0.909 |
| Figure drawing (immediate) | 0.779 | 0.043 | 0.001 | 0.598 | 0.791 |
| Figure drawing (recall) | 0.694 | 0.049 | 0.001 | 0.770 | 0.912 |
| Word memory (total) | 0.860 | 0.035 | 0.001 | 0.791 | 0.929 |
| Picture naming | 0.702 | 0.051 | 0.001 | 0.602 | 0.801 |
| Verbal fluency (total) | 0.848 | 0.037 | 0.001 | 0.776 | 0.920 |
| Four fundamental arithmetic | 0.800 | 0.044 | 0.001 | 0.714 | 0.886 |
| Digit coding | 0.856 | 0.036 | 0.001 | 0.785 | 0.927 |
| Stroop (semantic) | 0.784 | 0.044 | 0.001 | 0.698 | 0.871 |
| Stroop (color) | 0.840 | 0.037 | 0.001 | 0.768 | 0.912 |
| Clock drawing | 0.767 | 0.049 | 0.001 | 0.672 | 0.862 |
| Puzzle construction | 0.753 | 0.047 | 0.001 | 0.661 | 0.845 |
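The 95% CI columns in most rows agree with a normal-approximation interval, AUC ± 1.96 × SE: for Trail making (A type), 0.800 ± 1.96 × 0.043 gives about 0.716 to 0.884, against the reported 0.715 to 0.885. The section does not say how the AUC itself was estimated; the sketch below uses the Mann–Whitney form (the probability that a randomly chosen case from the flagged group scores higher than one from the other group) as an assumption:

```python
from typing import Sequence, Tuple

Z_95 = 1.959964  # two-sided 95% standard normal quantile


def auc_mann_whitney(positives: Sequence[float], negatives: Sequence[float]) -> float:
    """AUC as P(positive > negative), with ties counted as one half.

    Orient scores so that higher means more impaired; for subtests where a
    lower score is worse, pass negated scores for both groups.
    """
    wins = 0.0
    for p in positives:
        for q in negatives:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


def wald_ci(auc: float, se: float, z: float = Z_95) -> Tuple[float, float]:
    """Normal-approximation confidence interval: AUC +/- z * SE."""
    return auc - z * se, auc + z * se


if __name__ == "__main__":
    lo, hi = wald_ci(0.800, 0.043)  # Trail making (A type) row
    print(f"{lo:.3f} to {hi:.3f}")  # 0.716 to 0.884
```

The small differences from the reported bounds are consistent with the SE being rounded to three decimals in the table.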

| Co-Wis Subtest | Sensitivity (%) | Specificity (%) | Cut-Off Score |
|---|---|---|---|
| Trail making (A type) | 75.0 | 72.3 | 31 |
| Trail making (B type) | 77.1 | 78.5 | 89 |
| Figure drawing (immediate) | 70.8 | 70.8 | 16 |
| Figure drawing (recall) | 68.8 | 63.1 | 3 |
| Word memory (total) | 81.3 | 83.1 | 18 |
| Picture naming | 70.8 | 53.8 | 7 |
| Verbal fluency (total) | 77.1 | 72.3 | 29 |
| Four fundamental arithmetic | 79.2 | 72.3 | 7 |
| Digit coding | 83.3 | 76.9 | 7 |
| Stroop (semantic) | 70.8 | 72.3 | 43 |
| Stroop (color) | 79.2 | 73.8 | 16 |
| Clock drawing | 66.7 | 75.4 | 3 |
| Puzzle construction | 83.3 | 58.5 | 3 |
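Each cut-off score comes with one sensitivity and specificity. Maximizing Youden's J = sensitivity + specificity - 1 is a common rule for choosing such a cut-off; this section does not state which rule was used, so the sketch is an assumption. Every reported sensitivity is a multiple of 1/48 and every specificity a multiple of 1/65 (for example, 81.3% ≈ 39/48 and 83.1% ≈ 54/65), which matches the CDR 1–2 (38 + 10 = 48) and CDR 0.5 (65) counts in the demographics table; the comparison may therefore be CDR ≥ 1 versus CDR 0.5, though that is an inference. The scores in the usage example are hypothetical:

```python
from typing import Sequence, Tuple


def sens_spec(
    positives: Sequence[float],
    negatives: Sequence[float],
    cutoff: float,
    higher_is_positive: bool = True,
) -> Tuple[float, float]:
    """Sensitivity and specificity when scores at or beyond 'cutoff' are flagged."""
    if higher_is_positive:
        tp = sum(s >= cutoff for s in positives)
        tn = sum(s < cutoff for s in negatives)
    else:
        tp = sum(s <= cutoff for s in positives)
        tn = sum(s > cutoff for s in negatives)
    return tp / len(positives), tn / len(negatives)


def youden_cutoff(
    positives: Sequence[float],
    negatives: Sequence[float],
    higher_is_positive: bool = True,
) -> Tuple[float, float, float, float]:
    """Cut-off maximizing Youden's J = sensitivity + specificity - 1.

    Returns (cutoff, sensitivity, specificity, J). Candidate cut-offs are the
    observed scores; J values within 1e-12 are treated as ties, and ties keep
    the smallest cut-off.
    """
    best = None
    for cutoff in sorted(set(positives) | set(negatives)):
        sens, spec = sens_spec(positives, negatives, cutoff, higher_is_positive)
        j = sens + spec - 1.0
        if best is None or j > best[3] + 1e-12:
            best = (cutoff, sens, spec, j)
    return best


if __name__ == "__main__":
    # Hypothetical word-memory totals (NOT study data); impaired group scores lower.
    impaired = [8, 11, 13, 15, 17, 20]
    milder = [16, 19, 21, 24, 26, 30]
    print(youden_cutoff(impaired, milder, higher_is_positive=False))
```

Other rules, such as fixing a minimum sensitivity for screening, can pick a different cut-off from the same ROC curve.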

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Song, S.I.; Jeong, H.S.; Park, J.P.; Kim, J.Y.; Bai, D.S.; Kim, G.H.; Cho, D.H.; Koo, B.H.; Kim, H.G. A Study of the Effectiveness Verification of Computer-Based Dementia Assessment Contents (Co-Wis): Non-Randomized Study. Appl. Sci. 2020, 10, 1579. https://doi.org/10.3390/app10051579