1. Introduction

The use of biometric technology in everyday life is increasing. This increase is driven not only by the need for additional security, but also by the need for better usability. In information security, fingerprints are one of the most commonly used biometric modalities [1]. Fingerprint recognition is used for laptop and mobile device login, airport check-in, e-banking, ATM access control, and access control in general (locks on doors, deposit boxes, etc.).

Fingerprints are a representation of the epidermis of the finger skin. The combination of ridges and valleys makes each fingerprint unique [2]. As the skin epidermis is formed through a combination of hereditary and environmental factors, even identical twins have different fingerprints [3].

Fingerprint image quality is affected by several factors. Environmental conditions such as illumination, humidity, and temperature impact fingerprint acquisition. Physiological characteristics of a person (age, gender, physical damage, etc.) can also affect fingerprint quality. Moreover, the technology used for fingerprint recognition influences biometric system performance: better algorithms and high-quality fingerprint sensors lead to higher precision.

Designing a good biometric system can be a challenging task, as system designers need expertise in several different areas. Different combinations of sensors, algorithms, and supporting software can lead to diverse results, not all of which are favorable. User interaction with the biometric system is another important aspect that needs to be taken into consideration.

At the University of Belgrade’s Faculty of Organizational Sciences, the importance of biometric technologies was recognized. One of the goals set by the University was to provide students with insights into different aspects of biometric technologies. Hence, various aspects of these technologies, from biometric acquisition to matching algorithms and human-computer interaction, are taught as part of a course on biometric technologies.

As lecturers, we became aware that certain aspects of biometric systems are particularly challenging for students. We recognized the need for more practical experience and hands-on exercises in order to improve student motivation and learning outcomes. Consequently, we decided to establish a biometric acquisition laboratory supported by specially developed software tools to address this issue.

Our biometric laboratory provides students with an opportunity to work with an assortment of hardware devices such as fingerprint sensors, humidity and temperature sensors, heaters, etc. All of these sensors and acquisition devices are components of the biometric acquisition surface. In our laboratory, biometric data is collected from several biometric acquisition surfaces and made available for later analysis and processing. All of the sensors and devices used in our biometric laboratory are part of a network, which in itself is an application of IoT concepts for educational purposes.

The application of IoT concepts to improve the educational process is a logical extension of ICT (Information and Communication Technology) applications in the field of education. In e-education, for example, technology is applied in many different ways: multimedia learning, VLE (Virtual Learning Environment), and CAI (Computer-Assisted Instruction) are just some of the examples. However, using technology in education does not always mean simply applying new hardware or software components. For example, the PLE (Personal Learning Environment) approach focuses on technology application in education with the main goal of allowing students to take control of their educational environment [4]. The technology used for this purpose can vary, from distributed Web 2.0 and cloud services to mobile phones and the semantic web. In order to allow students to make the best use of the resources available in our biometric laboratory, we decided to apply IoT concepts in combination with the flipped classroom approach.

In Section 2 of our paper, a state-of-the-art review is given. Section 3 contains the problem definition. In Section 4, a description of the fingerprint acquisition laboratory is presented. The accompanying tools are described in Section 5 and Section 6. The evaluation of the laboratory's impact on student motivation and learning outcomes is laid out in Section 7 and Section 8. Conclusions and suggestions for further research are given in Section 9.

2. State of the Art

2.1. Fingerprint Acquisition

Fingerprints are the most widely used modality for biometric recognition [5]. Scars, cuts, or other significant damage to the fingers can be caused by a person's age or profession. Such damage can seriously affect the quality of the raw biometric image, and consequently lower the performance of a biometric system based on the fingerprint modality.

Fingerprint recognition can sometimes result in low accuracy. In most cases, the reason for such behavior is poor image quality, which can be caused by the acquisition environment or by the state of the finger skin. There may be blisters, cuts, or wrinkles on the fingerprint area, and the skin can be coarse, too dry, or too wet. All these states affect recognition accuracy. Human behavior also has a significant impact on the fingerprint acquisition process, and human–computer interaction deals with these behavioral aspects of fingerprint acquisition [6]. For example, the user of a fingerprint biometric system can press the sensor too hard or too lightly, fingers can be improperly aligned with the working area of the fingerprint sensor, or the duration of finger contact with the acquisition sensor may be too short.

Authors from China [7] have also analyzed the impact of image quality on fingerprint recognition precision. The experiment participants were aged between 21 and 25 years. Acquisition was performed from December 2013 to May 2017, and data was collected from 1000 participants per month. Seasonal factors were monitored, as well as their impact on fingerprint image quality, ESR (Enrollment Success Rate), and CPR (Capture Success Rate). Among the conclusions were that ESR and CPR follow the same variation trends and that image quality varies between seasons. Better image quality was obtained in the summer months (July and August) than in the winter months.

Conditions of the acquisition environment, such as a dirty fingerprint sensor area, extremely high temperatures, or unusual lighting, can affect fingerprint image quality. Some of the more common conditions tested in a controlled environment are cold finger, cold-wet finger, heated finger, soaked finger, glued finger, and dirty finger [8]. The experiment in [8] was conducted on four different fingerprint readers, with 80 participants aged between 21 and 66 years. Testing was performed in twenty acquisition cycles under controlled conditions. The results showed that the average FRR (False Rejection Rate) is, in practice, higher than the value quoted by the fingerprint sensor manufacturers.

The fact that fingerprint quality impacts biometric system performance was recognized by Olsen et al. [9]. In their research, the authors collected a database of 6600 fingerprints from 33 persons using five different optical fingerprint sensors. Different skin states, such as wet, wrinkled, and dry, were simulated. The authors concluded that both the choice of sensor and the skin state affect system performance.

In their experiment, Modi and Elliott studied two groups of examinees. The first group had 79 participants aged between 18 and 25 years, and the second group consisted of 62 participants over 62 years of age. The authors used the NFIQ (NIST Fingerprint Image Quality) [10] tool to assess the quality of the fingerprint images. The results showed that participant age has a significant impact on fingerprint image quality: a large number of low-quality images was collected from the group of older participants. Reliable detection of minutiae is very hard in low-quality images, which has a direct impact on biometric system precision [11,12]. The younger population had more high-quality fingerprint images, which resulted in greater system precision.

Kang, Lee, Kim, Shin, and Kim [12] studied the impact of the type of fingerprint reader on biometric system performance. They tested four fingerprint reader acquisition technologies: optical, semiconductor, tactile, and thermal. For each type of reader, they measured the impact on fingerprint quality under different acquisition conditions. They concluded that human interaction aspects have more impact on fingerprint quality than environmental conditions.

2.2. Internet of Things and Smart Classroom

The first smart classroom applications aimed to give remote students a real, live learning experience. In [13], the authors integrated voice recognition, computer vision, and other technologies in order to provide both remote students and teachers with more ways to interact. The classroom contains several cameras: some are used to track the teacher's movements, while others show the classroom from different views. Artificial intelligence is used to choose which camera broadcasts video to the students at a given time. Speech recognition allows teachers to control the classroom system by voice. Furthermore, the teaching board is a touch-sensitive screen, and the teacher's pen also contains a laser pointer, which is used as an interactive tool. The identities of teachers and students are verified with face and voice biometrics.

A use of IoT in a classroom environment was described in [14]. The authors used affordable sensors to measure the impact of environmental conditions on the students' learning focus. Five parameters were tracked: CO₂, temperature, humidity, noise level, and the lecturer's voice. The study included data collected from 197 students during 14 different lectures. The authors extracted 22 features from the lecturer's voice, combined them with the other parameters, and applied various classifiers in order to find out which was best at correctly separating focused and unfocused segments of student attention. AdaBoost.M1 had the best recognition accuracy. The results of the study were intended to be used later to implement a smart classroom able to determine whether the classroom environment is optimized to maximize student attention.

The research study in [15] presents a smart classroom based on NFC (Near Field Communication) technology. NFC was used in combination with LED (Light-Emitting Diode) displays and multi-touch displays for attendance management, tracking student location, and enabling real-time student feedback. The effect of the proposed system on students' attitudes towards science education was evaluated. The aspects evaluated were self-concept in computer science, learning computer science outside of school, learning computer science at school, future participation in computer science, and the importance of computer science. The case study results showed an improvement in students' attitudes.

In [16], an IoT approach was used for classroom management. Arduino and NFC were used for teacher authentication; the collected data is transmitted via radio frequency to a master node, which stores it in cloud storage. The collected data is sent to the Xively server, an IoT platform owned by Google. A web app that uses the Xively API and Google Maps was created to visualize the collected data. Social networks were also used to propagate the collected information: Zapier, a service for combining several web applications, was used to publish data from Xively to Twitter. The applied approach showed that data collected from classrooms can easily be used by different applications.

An application of IoT in the education domain is described in [17]. The authors developed a system that allows students to interact with objects in the laboratory. RFID (Radio-Frequency Identification) and NFC are used to identify objects. Students use their mobile phones to receive information about the objects in front of them and are assigned tasks related to those objects. The system supports multimedia content, such as animations, pictures, audio, and video, which is uploaded online by the teaching staff. The system was evaluated with two groups, experimental and control: the experimental group used the system for learning, while the control group used a traditional learning approach. The evaluation showed a positive impact of IoT as a tool for enhancing the teaching process.

Hamidi et al. [18] described an approach to smart health based on biometrics and IoT. The authors developed a new standard for applying biometrics in the development of IoT-based smart healthcare solutions. The approach was tailored to allow high data access capacity while remaining easy to use. The use of biometric sensors adds an additional layer of security to user authentication.

3. Problem Definition

Biometric data acquisition is an integral part of the biometric recognition process. For feature extraction to take place, a biometric sensor has to provide raw biometric data [19]. The quality of the provided biometric data has a significant impact on matching precision. For biometric system evaluation, Phillips et al. [20] defined three types of evaluations: technological, scenario, and operational. Technological evaluations measure only the precision of the biometric algorithm on a predefined dataset. Scenario evaluations measure algorithm precision for specific types of application, while operational evaluations measure the performance of a concrete use case. Both scenario and operational evaluations are affected by the acquisition process, as systems use different acquisition sensors and operate under various environmental conditions.

The process of biometric acquisition is part of the curriculum of the biometric technology course taught at the graduate level at the University of Belgrade. Although biometrics is now commonly used for practical purposes, most of our students have limited experience with biometric systems (in most cases, only the acquisition of biometric data for personal ID documents). When faced with practical problems, they tended to focus on matching algorithms and application design, while overlooking the parts of the acquisition process they were less familiar with. This led to somewhat unsatisfactory learning outcomes.

Also, students tended to focus on the predefined topics, and lacked motivation to explore more on their own. The traditional lecturing style placed some restrictions on student creativity and their participation in the learning process. Therefore, we have identified the need for an alternative approach to teaching the process of biometric acquisition.

4. Laboratory Overview

With the aim of introducing students to biometric acquisition, we designed a laboratory that simulates the entire acquisition process. In order to take better advantage of the practical equipment, we decided to apply the flipped classroom approach, which inverts the classical approach to lecturing and homework. As stated in [21], when a classroom is “flipped”, what is usually done for homework is done in class, and what is usually done in class is done for homework. In this way, students can benefit from the help of instructors when solving more complex tasks, such as case studies or laboratory exercises. In our case, before each lesson, students were given lecture notes with the basic concepts required for the class, along with instructions for the following laboratory exercise. Our course consisted of two parts, the acquisition process and the result evaluation, and each part had two lessons.

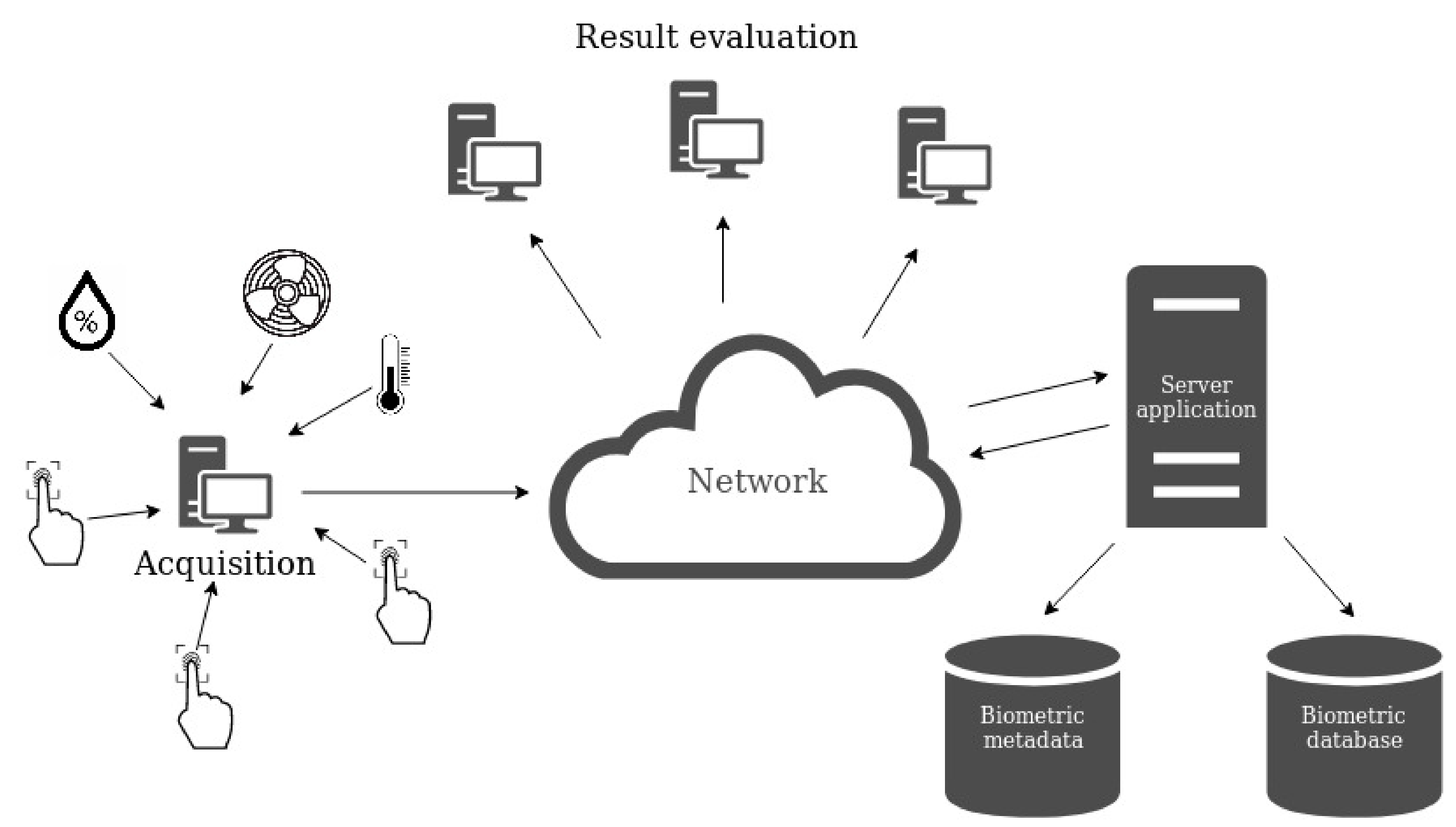

In the acquisition phase, biometric sensors, a temperature sensor, and a humidity sensor were connected to a Raspberry Pi 4. The biometric sensors were connected via the USB interface, while the humidity and temperature sensor sent data via a radio connection. Together, the Raspberry Pi, the sensors, and the other integrated components form a biometric acquisition surface, and the laboratory contains several such surfaces. From each surface, the extracted biometric templates are sent together with environment metadata over the network to the server application, which stores them in the database. Environmental conditions can be controlled from the central server console provided by the server application, which can pass instructions to the biometric acquisition surfaces in order to create the desired acquisition environment. For example, a heater can be turned on to raise the temperature to the desired level. The collected data is available for further evaluation, as students can access it with appropriate tools during the second part of the course. The architecture of the fingerprint acquisition laboratory is shown in Figure 1.
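The text does not specify how a template and its environment metadata travel from an acquisition surface to the server. As an illustration only, the sketch below assumes a JSON message; the `AcquisitionRecord` class and all of its field names are hypothetical and are not taken from the laboratory's software.

```python
import base64
import json
from dataclasses import asdict, dataclass


@dataclass
class AcquisitionRecord:
    """One fingerprint sample plus environment metadata (hypothetical schema)."""
    surface_id: str        # which acquisition surface produced the sample
    user_id: int           # ID assigned to the enrolled user
    hand: str              # "left" or "right"
    finger: str            # "index" or "middle"
    sensor: str            # e.g. "Digital Persona", "HF-7000", "UPEK Eikon"
    finger_state: str      # "normal", "wet", "dirty", "greasy", or "wrinkled"
    temperature_c: float   # reading from the temperature sensor
    humidity_pct: float    # reading from the humidity sensor
    template: bytes        # extracted biometric template, treated as opaque bytes

    def to_json(self) -> str:
        """Serialize for transmission; the binary template is Base64-encoded."""
        payload = asdict(self)
        payload["template"] = base64.b64encode(self.template).decode("ascii")
        return json.dumps(payload)

    @classmethod
    def from_json(cls, text: str) -> "AcquisitionRecord":
        """Inverse of to_json, as a receiving server might apply it."""
        payload = json.loads(text)
        payload["template"] = base64.b64decode(payload["template"])
        return cls(**payload)
```

In this sketch a surface would call `to_json()` before sending and the server would call `from_json()` before storing the record. JSON is chosen here only because it is easy to inspect during a lab exercise, and a binary format would work equally well.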

In the acquisition phase, each participant provides several samples of the index and middle fingers of both hands under various environmental conditions. The acquisition phase has three parts. In the first part, participants provide fingerprints under normal environmental conditions, at a temperature between 20 and 24 °C. In the second part, wet, greasy, and wrinkled fingerprint images are collected in addition to fingerprints taken under normal conditions; the laboratory temperature is again between 20 and 24 °C. The third and final part is done in a warmer environment, at a temperature between 35 and 40 °C. To reach this higher temperature, a special environment for fingerprint acquisition was created. Other conditions are similar to those in the second acquisition part.

For acquiring images at higher temperatures, a Plexiglas box was built. The box is 40 cm high, 70 cm long, and 45 cm wide, and has a round hole 15 cm in diameter through which the participants can access the fingerprint sensors. For heat insulation, the box was wrapped in Styrofoam. A Raspberry Pi was used to control three heaters (RC016-8W) and a power rectifier. The heaters were placed on a metal grid inside the metal box. Several fans were placed on the other side of the grid to move the heated air to the other parts of the Plexiglas box. Temperature sensors were placed at two locations inside the Plexiglas box and connected to the Raspberry Pi. If the temperature falls below the value designated for the acquisition scenario, the heaters are turned on. The Plexiglas box and the sensors are shown in Figure 2.
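The heater rule above is essentially a thermostat. The sketch below is a minimal version under stated assumptions: it averages the two box sensors, switches the heaters on below the lower bound of the 35 to 40 °C range and off above the upper bound, and keeps the current state in between. The switch-off rule, the averaging, and the names `HeaterController` and `HEATED_RANGE` are all hypothetical. No GPIO library is used, so the actual Raspberry Pi code will differ.

```python
from dataclasses import dataclass
from typing import Sequence

# Target range (°C) for the third, heated acquisition part described above.
HEATED_RANGE = (35.0, 40.0)


@dataclass
class HeaterController:
    """On/off (bang-bang) heater control with a hysteresis band (hypothetical)."""
    low: float               # switch heaters on below this temperature
    high: float              # switch heaters off above this temperature
    heaters_on: bool = False

    def update(self, readings: Sequence[float]) -> bool:
        """Update and return the heater state from the latest sensor readings."""
        current = sum(readings) / len(readings)  # mean of the box sensors
        if current < self.low:
            self.heaters_on = True    # too cold for the scenario: heat
        elif current > self.high:
            self.heaters_on = False   # above the range: stop heating
        # Within [low, high] the previous state is kept, which avoids
        # switching the heaters on and off on every small fluctuation.
        return self.heaters_on


if __name__ == "__main__":
    controller = HeaterController(*HEATED_RANGE)
    for pair in [(30.1, 31.0), (36.0, 37.2), (40.5, 41.0), (38.0, 38.4)]:
        print(pair, controller.update(pair))
```

In the demo loop the heaters switch on at the first reading pair, stay on while the mean climbs through the target range, switch off once it exceeds 40 °C, and stay off at 38.2 °C. The band between the two bounds is what keeps the heaters from chattering.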

Depending on the environmental conditions, the quality of the biometric sample can vary. In this laboratory, five different fingerprint conditions are simulated: normal, wet, dirty, greasy, and wrinkled. Fingerprint conditions are considered normal when the finger is clean and the skin has its usual humidity. The wet fingerprint state is simulated using a wet sponge: the user touches the wet area of the sponge, then the dry area of the sponge, and then the fingerprint sensor. A greasy fingerprint is created by applying baby oil to the sponge; the user first touches the greasy area, then the dry area of the sponge, and finally the fingerprint sensor. To create a dirty fingerprint, baby talcum powder is used. Finally, for wrinkled fingerprints, the user has to keep their fingers in hot water for 7 to 15 min. The actual duration differs for each person, because individuals have different skin characteristics. After that, the dried wrinkled finger is applied to the fingerprint sensor area.

For the acquisition, we used two types of sensors: capacitive and optical. An optical sensor has a specialized digital camera for capturing the ridges and valleys of the fingerprint. These types of sensors generate only two-dimensional images [22]. Capacitive fingerprint sensors use electricity to create the image of the fingerprint: the ridge pattern is identified by the strength of the electric field between the finger and the acquisition sensor. When interacting with the optical sensor, the system user needs to press their finger on the sensor area. When the capacitive sensor is used, the finger is swiped across the sensor area. In our laboratory, we used two models of optical sensors, Digital Persona (Palm Beach Gardens, FL, USA) [23,24] and HF-7000 (HFSecurity Hui Fan Technology, Chongqing, China) [25], and a single capacitive sensor, UPEK Eikon [26].

For the extraction and matching of the collected fingerprints, we used two open-source solutions. The first was a combination of the NIST (National Institute of Standards and Technology) tools mindtct and bozorth3, both of which are part of the NBIS software package, version 5.0.0 [10]. The second, SourceAFIS (version 3.7) by Robert Vazan [27], was also used and is available as an alternative for result analysis.
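The NBIS workflow mentioned above runs mindtct to extract minutiae into an `.xyt` file and bozorth3 to compare two such files. The sketch below uses only the basic invocations `mindtct <image> <output_root>` and `bozorth3 <probe.xyt> <gallery.xyt>`. It assumes that the NBIS binaries are on `PATH` and that the input images are in a format mindtct accepts, such as WSQ. Because the exact output layout depends on bozorth3's options, taking the first integer printed as the score is also an assumption. The helper names are hypothetical.

```python
import re
import shutil
import subprocess
from pathlib import Path


def mindtct_command(image: Path, output_root: Path) -> list[str]:
    """Command that writes minutiae to <output_root>.xyt (among other files)."""
    return ["mindtct", str(image), str(output_root)]


def bozorth3_command(probe_xyt: Path, gallery_xyt: Path) -> list[str]:
    """Command that compares two .xyt minutiae files."""
    return ["bozorth3", str(probe_xyt), str(gallery_xyt)]


def parse_score(stdout: str) -> int:
    """Extract the first integer printed by bozorth3 (assumed to be the score)."""
    match = re.search(r"\d+", stdout)
    if match is None:
        raise ValueError(f"no score in bozorth3 output: {stdout!r}")
    return int(match.group())


def match_images(probe_img: Path, gallery_img: Path, workdir: Path) -> int:
    """Extract minutiae from both images and return the bozorth3 score."""
    if shutil.which("mindtct") is None or shutil.which("bozorth3") is None:
        raise RuntimeError("NBIS binaries not found on PATH")
    roots = [workdir / "probe", workdir / "gallery"]
    for img, root in zip((probe_img, gallery_img), roots):
        subprocess.run(mindtct_command(img, root), check=True)
    result = subprocess.run(
        bozorth3_command(roots[0].with_suffix(".xyt"), roots[1].with_suffix(".xyt")),
        check=True, capture_output=True, text=True,
    )
    return parse_score(result.stdout)
```

Building the command lists separately from running them keeps the parts that do not need NBIS installed easy to check in isolation.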

For our laboratory, we have designed two separate learning tools. The first tool is used for simulating biometric fingerprint enrollment and the second tool for remote result evaluation. In this way, classroom participants can gain deeper insights into the different parts of the biometric recognition process.

5. Biometric Data Acquisition Tool

The biometric acquisition process requires adequate software to be successfully completed. We have developed an application which enables students to place themselves in the role of a biometric system operator. The tool guides students through all phases of biometric data acquisition, and allows management of collected data.

Each biometric acquisition surface is supplied with the biometric data acquisition tool. Biometric templates are extracted from data acquired by the use of biometric sensors. Templates are sent over the network together with environment metadata gathered by the temperature and humidity sensors, and stored by the server application in appropriate databases.

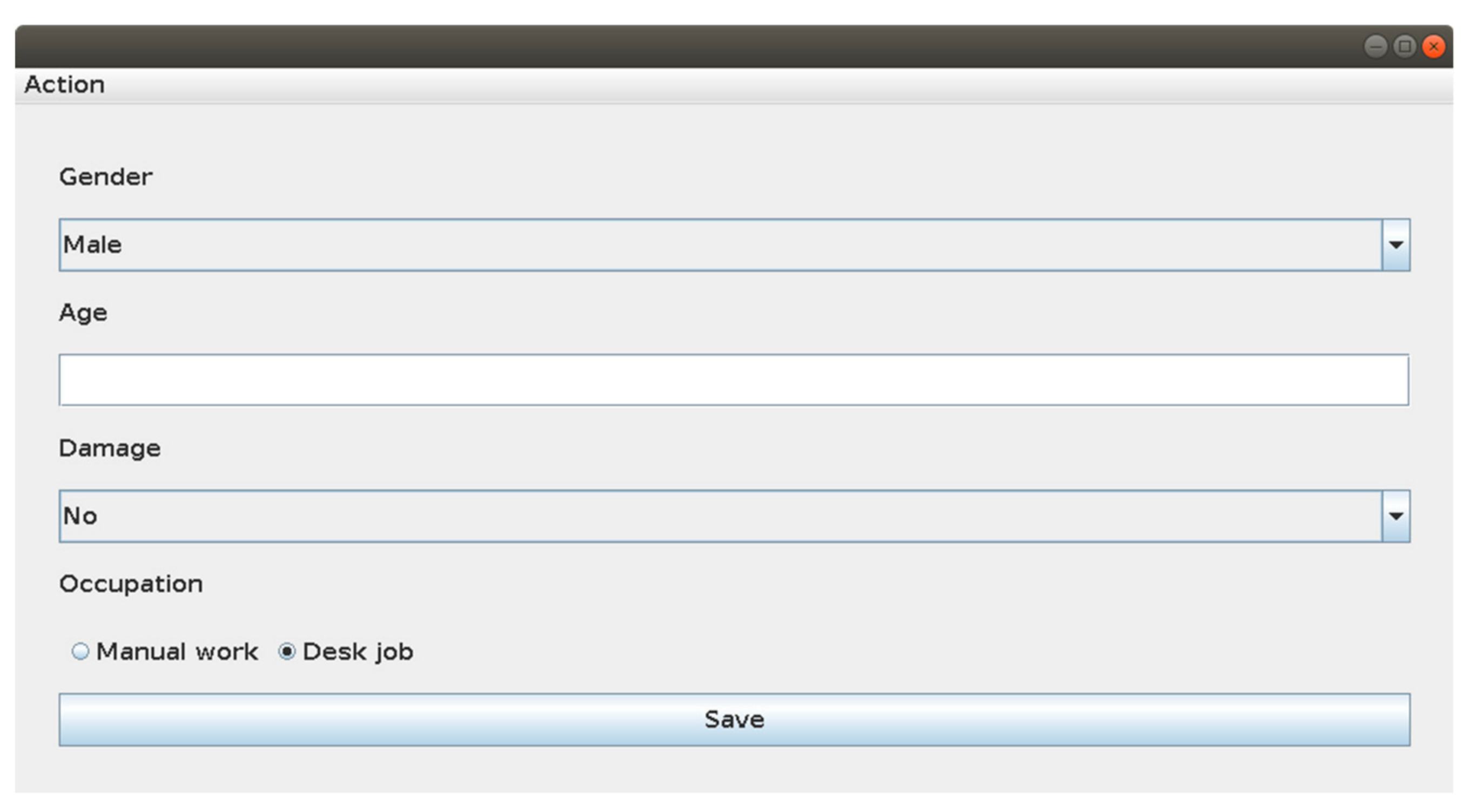

Figure 3 presents the form for entering new users, which is part of the first tool mentioned previously. For each user, basic demographic data is collected, such as gender, age, potential physical damage to the fingerprints, and occupation.

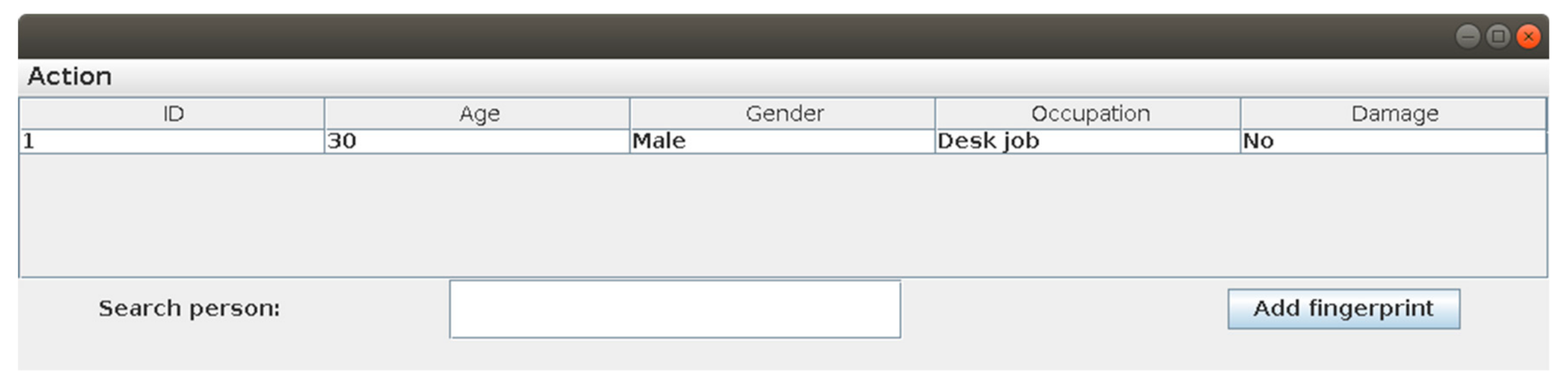

After the system operator fills out the form, the user data is saved to the database and the user is assigned an ID. Figure 4 shows the data of all enrolled users, who can be searched by various available parameters. After selecting an enrolled user, the operator can click the “Add fingerprint” button.

In

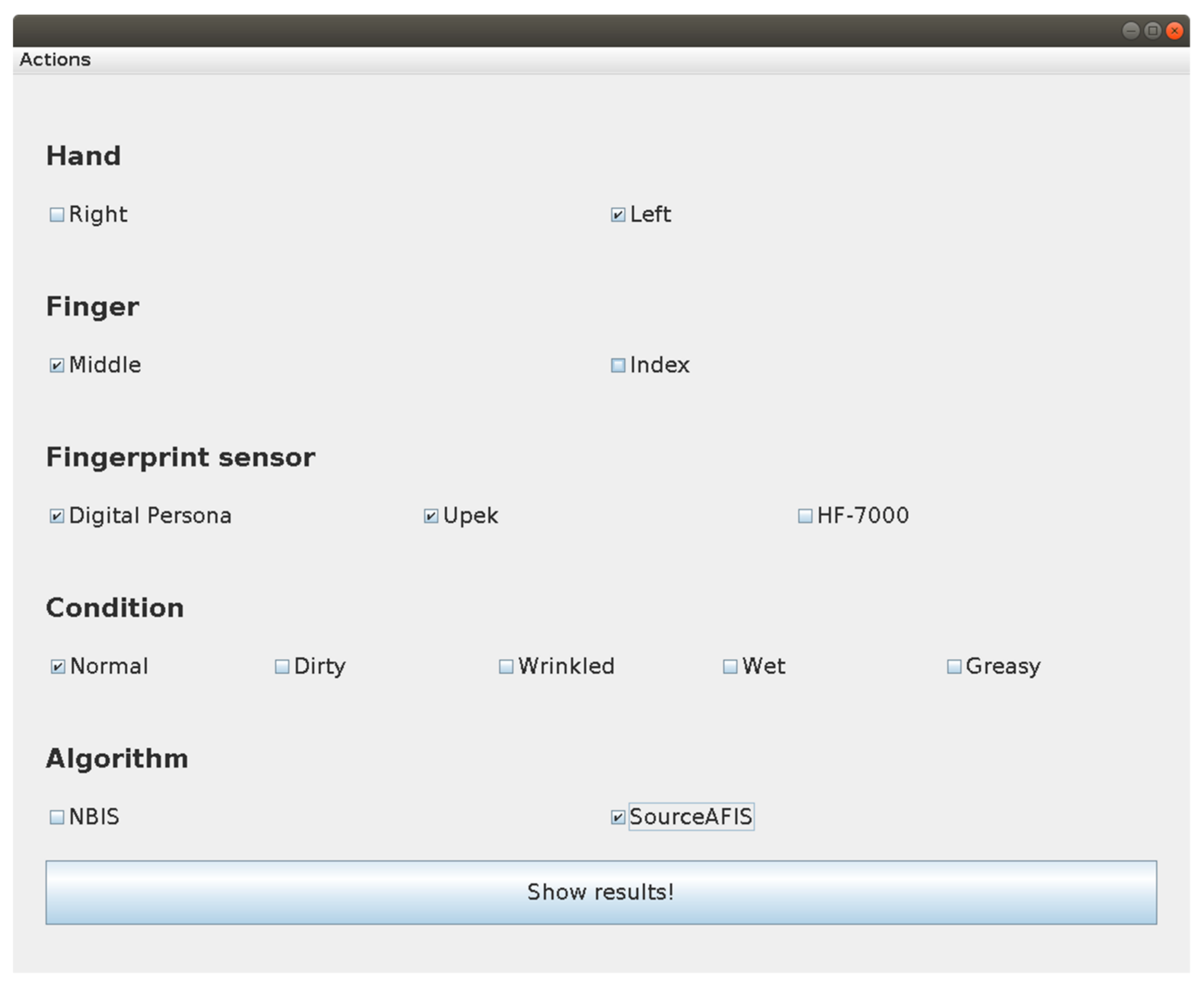

Figure 5, the application form for collecting fingerprints is displayed. System operator can choose between different hands, fingers, types of fingerprint reader, and expected states of the collected fingerprint. After clicking the “Save!” button, the user is able to leave the fingerprint on the chosen biometric sensor. If the fingerprint was acquired successfully, it is saved with its metadata in the database.
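The enrollment workflow in Figures 3 to 5 implies two related kinds of records: users and their fingerprint samples. The sketch below shows one possible relational layout using Python's built-in `sqlite3` module. The table and column names are hypothetical, and the laboratory's actual database may be organized differently.

```python
import sqlite3

# Hypothetical layout; column names mirror the form fields described above.
SCHEMA = """
CREATE TABLE IF NOT EXISTS users (
    id          INTEGER PRIMARY KEY AUTOINCREMENT,
    gender      TEXT,
    age         INTEGER,
    damage      TEXT,   -- potential physical damage to the fingerprints
    occupation  TEXT
);
CREATE TABLE IF NOT EXISTS fingerprints (
    id            INTEGER PRIMARY KEY AUTOINCREMENT,
    user_id       INTEGER NOT NULL REFERENCES users(id),
    hand          TEXT NOT NULL,
    finger        TEXT NOT NULL,
    reader        TEXT NOT NULL,  -- fingerprint reader type
    state         TEXT NOT NULL
                  CHECK (state IN ('normal', 'wet', 'dirty', 'greasy', 'wrinkled')),
    temperature_c REAL,
    humidity_pct  REAL,
    template      BLOB
);
"""


def add_user(conn: sqlite3.Connection, gender, age, damage, occupation) -> int:
    """Insert a user and return the automatically assigned ID."""
    cur = conn.execute(
        "INSERT INTO users (gender, age, damage, occupation) VALUES (?, ?, ?, ?)",
        (gender, age, damage, occupation),
    )
    return cur.lastrowid


def add_fingerprint(conn: sqlite3.Connection, user_id, hand, finger, reader,
                    state, temperature_c, humidity_pct, template) -> int:
    """Store one fingerprint sample with its acquisition metadata."""
    cur = conn.execute(
        "INSERT INTO fingerprints (user_id, hand, finger, reader, state,"
        " temperature_c, humidity_pct, template)"
        " VALUES (?, ?, ?, ?, ?, ?, ?, ?)",
        (user_id, hand, finger, reader, state, temperature_c, humidity_pct, template),
    )
    return cur.lastrowid
```

The `CHECK` constraint rejects fingerprint states outside the five simulated conditions at the database level, so a typo in the form cannot silently create a sixth category. In practice, the connection would be created with `sqlite3.connect(...)` and the schema applied once with `conn.executescript(SCHEMA)`.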

6. Biometric System Evaluation Tool

The second tool is used for biometric system evaluation and has server and client components. The collected fingerprints are analyzed, and various queries are available to the system operator, who uses the client part of the tool. The server application executes the query and sends the results back to the client. Students can monitor system performance for different subsets of the collected data: they can choose between normal, dirty, greasy, wet, and wrinkled fingerprints, and between the three fingerprint sensors used in our laboratory. Moreover, students can analyze the impact of different feature extraction and fingerprint matching algorithms.

When the biometric system operates in verification mode, it makes its decision on the basis of the matching score, which is the result of comparing two biometric templates. Feature extraction algorithms create templates from raw fingerprint images. When a similarity metric is used for score representation, a score greater than the predefined system threshold means that the user was successfully verified by the system, while a score lower than the designated threshold means that the user was rejected. As a user can falsely claim the identity of another person, there are four possible outcomes: acceptance of a genuine template, rejection of a genuine template, acceptance of an imposter template, and rejection of an imposter template.
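The decision rule and its four outcomes can be written down directly. The sketch below assumes a similarity score, where higher means more similar. Because the text covers only scores strictly above or strictly below the threshold, treating a score exactly equal to the threshold as a rejection is an assumption of this sketch.

```python
from enum import Enum


class Outcome(Enum):
    GENUINE_ACCEPT = "acceptance of a genuine template"
    GENUINE_REJECT = "rejection of a genuine template"
    IMPOSTER_ACCEPT = "acceptance of an imposter template"
    IMPOSTER_REJECT = "rejection of an imposter template"


def verify(score: float, threshold: float) -> bool:
    """Accept the identity claim if the similarity score exceeds the threshold."""
    return score > threshold


def classify(score: float, threshold: float, is_genuine: bool) -> Outcome:
    """Combine the system decision with the ground truth into one of four outcomes."""
    accepted = verify(score, threshold)
    if is_genuine:
        return Outcome.GENUINE_ACCEPT if accepted else Outcome.GENUINE_REJECT
    return Outcome.IMPOSTER_ACCEPT if accepted else Outcome.IMPOSTER_REJECT
```

Only `verify` is available to a deployed system. `classify` additionally needs the ground truth (`is_genuine`), which is known in the laboratory because each sample's owner is recorded at enrollment.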

Various metrics are used to describe biometric system performance. For example, GAR (Genuine Acceptance Rate) is the percentage of genuine user templates that were accepted as genuine by the system, and FMR (False Match Rate) is the percentage of imposter templates that were accepted. Both parameters depend on the value of the system threshold. If the threshold is lower, more genuine and more imposter templates are accepted. Conversely, when the threshold is higher, fewer imposter templates are accepted, but so are fewer genuine ones [19]. Tradeoffs between different threshold values are shown by ROC (Receiver Operating Characteristic) curves.
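Given lists of genuine and imposter scores, GAR and FMR at a threshold are simple proportions, and sweeping the threshold yields the points of an ROC curve. The sketch below is a minimal illustration that follows the "accept if score > threshold" rule from the text. Using every distinct observed score as a candidate threshold, plus one value below the minimum, is a design choice of this sketch and not necessarily what the evaluation tool does.

```python
from typing import Sequence


def rates(genuine: Sequence[float], imposter: Sequence[float],
          threshold: float) -> tuple[float, float]:
    """Return (GAR, FMR) in percent for one threshold (accept if score > threshold)."""
    gar = 100.0 * sum(s > threshold for s in genuine) / len(genuine)
    fmr = 100.0 * sum(s > threshold for s in imposter) / len(imposter)
    return gar, fmr


def roc_points(genuine: Sequence[float],
               imposter: Sequence[float]) -> list[tuple[float, float, float]]:
    """Sweep thresholds over all observed scores; return (threshold, FMR, GAR)."""
    thresholds = sorted(set(genuine) | set(imposter))
    # A threshold below the smallest score accepts everything: the (100, 100) corner.
    points = [(thresholds[0] - 1, 100.0, 100.0)]
    for t in thresholds:
        gar, fmr = rates(genuine, imposter, t)
        points.append((t, fmr, gar))
    return points
```

As the threshold increases along the returned list, both GAR and FMR can only stay the same or decrease. This is exactly the tradeoff that an ROC curve visualizes.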

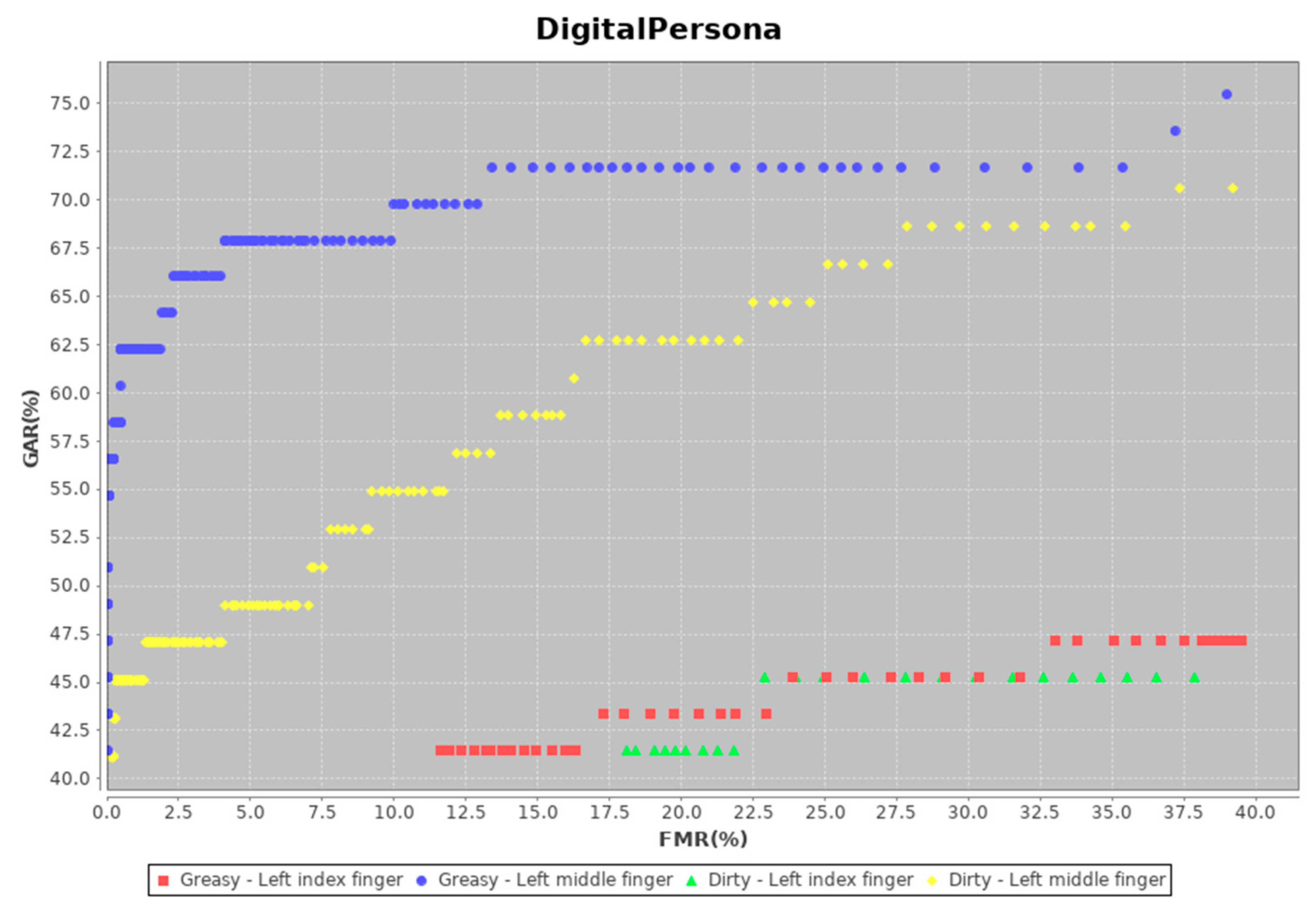

Figure 6 presents the options available to students for performing a system evaluation. After the desired options are selected, an ROC curve of the system performance is displayed, as depicted in Figure 7. The points on the curve represent the tradeoff between GAR and FMR, with a different system threshold value applied for each point. The GAR and FMR values for each threshold were based on matching scores calculated from the collected fingerprint templates. Since computing scores for a large number of fingerprints can take a long time on desktop computers, even with parallelization, query scores are cached to improve the user experience. The combinations of fingerprint instances and underlying enrollment conditions for each curve are shown in the legend of Figure 7.
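The caching of query results described above can be sketched with memoization keyed by the query parameters. In the sketch below, `ScoreQuery` and `compute_scores` are hypothetical stand-ins. `compute_scores` is a placeholder for the tool's expensive, possibly parallelized, matching step and returns dummy values. Because `functools.lru_cache` requires hashable arguments, the query is modeled as a frozen dataclass.

```python
from dataclasses import dataclass
from functools import lru_cache


@dataclass(frozen=True)
class ScoreQuery:
    """Parameters that determine one set of matching scores (hypothetical)."""
    sensor: str         # e.g. "HF-7000"
    finger_state: str   # e.g. "wet"
    algorithm: str      # e.g. "bozorth3" or "SourceAFIS"


CALLS = {"count": 0}  # instrumentation for the example only


def compute_scores(query: ScoreQuery) -> tuple[float, ...]:
    """Placeholder for the expensive all-pairs matching step."""
    CALLS["count"] += 1
    return (0.0,)  # dummy value; real code would run the matcher here


@lru_cache(maxsize=256)
def cached_scores(query: ScoreQuery) -> tuple[float, ...]:
    """Return scores for a query, computing them only on the first request."""
    return compute_scores(query)
```

An in-memory cache like this one is lost when the server restarts. Persisting cached scores, for example in the database, would be a natural alternative when the same queries recur across lab sessions. The result is returned as a tuple so that callers cannot mutate the cached value.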

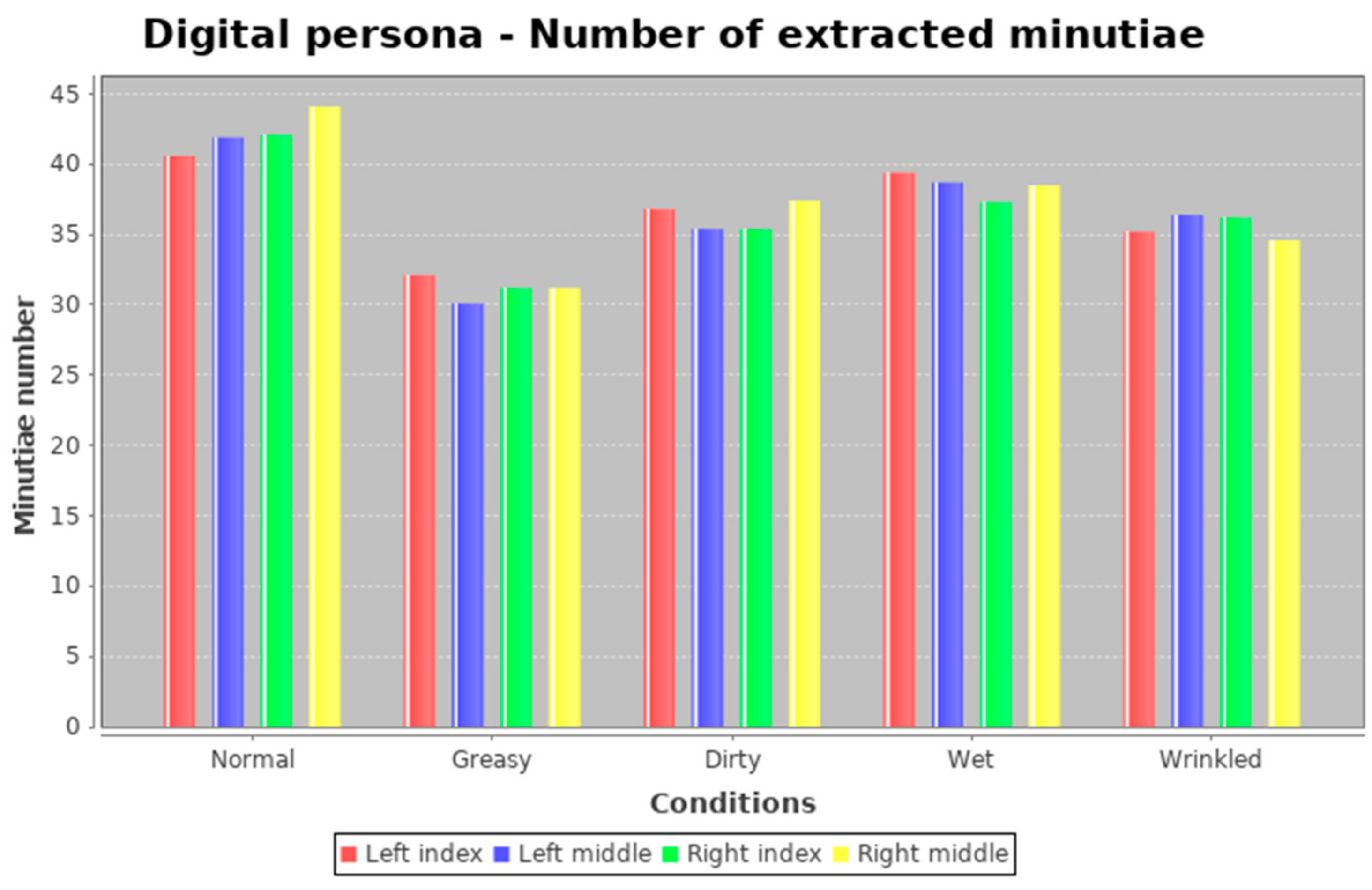

Another important functionality of the biometric evaluation tool is the analysis of the average number of minutiae per collected fingerprint instance. Minutiae are fingerprint ridge endings or bifurcations. They are used in fingerprint recognition algorithms because they are generally stable and robust to fingerprint impression conditions. However, a small sensor area or poor fingerprint quality can lead to partial capture of fingerprint minutiae, which can result in fewer minutiae correspondences when comparing fingerprints taken from the same finger [28]. This can lead to false rejects and impact the performance of a fingerprint matching algorithm.

As the impact of the fingerprint state and the applied algorithm is also important for this system component, all the options available for generating ROC curves can be used here as well. The resulting bar chart with the average number of minutiae is displayed in Figure 8.
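When mindtct is used for extraction, the average number of minutiae can be computed directly from its `.xyt` output. Each line of that file describes one minutia through its x and y coordinates, direction, and quality. The sketch below assumes the file contents are already loaded and paired with a group label, such as a fingerprint state. The function names are hypothetical.

```python
from collections import defaultdict
from typing import Iterable


def count_minutiae(xyt_text: str) -> int:
    """Count minutiae in .xyt content: one non-empty line per minutia."""
    return sum(1 for line in xyt_text.splitlines() if line.strip())


def average_minutiae(samples: Iterable[tuple[str, str]]) -> dict[str, float]:
    """Average minutiae count per group.

    `samples` yields (group, xyt_text) pairs, where the group label is, for
    example, a fingerprint state such as "wet" or "wrinkled".
    """
    counts: dict[str, list[int]] = defaultdict(list)
    for group, xyt_text in samples:
        counts[group].append(count_minutiae(xyt_text))
    return {group: sum(c) / len(c) for group, c in counts.items()}
```

The resulting dictionary maps each group to one bar of a chart like Figure 8. The pairs could be built as `(state, path.read_text())` for each stored `.xyt` file.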

7. Research Study

7.1. Purpose of the Study

To test the value of learning a specific topic in a laboratory environment, we focus on two targets: learning motivation and learning outcomes.

Although authors often use the term engagement when describing learning or working activities, we prefer the word motivation here because it encompasses more aspects of human behavior. Of course, it is necessary to operationalize the concept, so that it is clear what is meant by it. Engagement is more observable, as it is demonstrated through behavior, but in this context we are not observing students; rather, we ask them about their experience while learning. Views on the relationship between the two concepts differ: in [29], for example, the authors see engagement as a wider concept than motivation.

On the other hand, when Deci and Ryan speak about engagement [30], they see it as something intrinsic, while motivation is more extrinsic. We expect students to be more intrinsically motivated in what is expected to be a more engaging context, but they might also be motivated by other reasons, such as expecting some incentive for their activity. Thus, we decided to use the more traditional concept of motivation, defined broadly, while focusing on a specific model for researching learning motivation.

Because motivation as traditionally defined, based on the well-known dichotomy between intrinsic and extrinsic motivation, is too broad to follow, in our research we use the theoretical framework offered by Keller [31]. This framework provides clues for developing behavioral indicators of the level of students' learning motivation. In this context, the bond between learning and motivation is achieved through four principles: attention, relevance, confidence, and satisfaction. This means that, in order to be seen as motivating by students, a learning activity has to be arousing, perceived as relevant, achievable, and personally satisfying.

Learning motivation encompasses the mechanisms that initiate the learning activity in the first place, connect learners with their learning goals, and keep them energized throughout the process [32]. From this point of view, the learning context is clearly a relevant factor not only for providing a motivating experience, but also for attaining learning goals.

However, students learn in order to gain certain knowledge, and the ultimate test of the effectiveness of a learning method is the level of knowledge they have acquired. Although these two variables are not independent, we treat them separately here. There are different ways to measure students' achievement, but the traditional and most common one is their grades. Alternatives include measuring the learning time, the number of errors made, or the number of trials needed before a task is performed.

In order to achieve our research goal of exploring the effects of a flipped classroom setting on students' motivation and learning outcomes, we conducted an experiment with students. We postulated two hypotheses regarding the difference between students learning through the traditional lecture-based approach and those learning in a flipped classroom laboratory.

With regard to student motivation, some researchers corroborate the superiority of non-traditional concepts [33,34], while others do not [35]. Nevertheless, we postulated the first Hypothesis (H1): students who attend the flipped classroom laboratory environment will exhibit more motivation (engagement) than students who learn in the traditional way.

As most research studies show that student learning outcomes are often better when unorthodox teaching methods are applied [35,36,37,38,39], the second Hypothesis (H2) postulates that students who attend the flipped classroom laboratory environment will have better learning outcomes than students learning the same topics in the traditional way.

7.2. Participants and Settings

Forty-two participants were involved in the experiment. The first group of students (N = 21) learned about biometric acquisition through the traditional lecture-based approach, while the second group (N = 21) participated in a flipped classroom setting, which involved the use of our laboratory and accompanying tools. The students were randomly assigned to the two groups. It should be noted that, with regard to the features relevant to our experiment, such as previous experience and level of specific knowledge, all of the participants were equivalent. To evaluate whether there was a difference between the two groups, an entry test in the form of a multiple-choice test was given to members of both groups. The test questions covered basic biometric concepts, metrics, and algorithms, so as to see whether the students were already familiar with some of these concepts.

The concept of the experiment was that one group of students learned the lesson on biometric acquisition in the flipped classroom laboratory. After they completed it, their level of motivation was evaluated with a questionnaire measuring their subjective perception of engagement during the learning process.

The second group learned the same material traditionally through lectures delivered by professors. After they were “treated” with the conventional learning methodology, their engagement and learning outcomes were evaluated in the same way as with the first group.

The learning material included topics such as biometric sensor characteristics, the biometric acquisition process, storage of biometric data, feature extraction and matching algorithms, the impact of acquisition on system precision, and system performance visualization techniques.

The level of students’ engagement was measured with a questionnaire on learning motivation, based on one we had previously used for similar purposes in different learning contexts [40]. The questionnaire has 12 items and uses a seven-point Likert scale. Studies using the ARCS (Attention, Relevance, Confidence, Satisfaction) model [31], as well as Keller himself, often use yes/no categories or at most four degrees of agreement. We believe that our respondents would have had difficulty giving categorical answers to concrete questions, because they have to weigh different factors when deciding whether a learning experience is more or less satisfying, arousing, etc. It has been argued that when people make fine distinctions, the potential information gain increases with the number of scale points [41]. Judging a motivational experience is more a matter of gradual differences than a “black and white” situation.

On the other hand, studies using Likert scales often use five- or seven-point scales. As the methodological literature notes, there is no standard for the number of points on rating scales [41]. We constructed a relatively equidistant and stable continuum covering different shades of experience. Dawes [42], in a different context, found little difference in results between five- and seven-point rating scales in marketing research, which was not the case with a ten-point scale; we therefore consider seven points to be the upper limit here. We chose seven levels because this gives respondents more choice and diminishes the effect of the middle points of the scale [43].

The questionnaire is provided in Appendix A, Table A1. Our questions cover various aspects of motivation according to Keller’s model of classifying indicators [44], which is widely used in various educational settings [31]. Attention means provoking curiosity in students and is covered by questions 1, 5, and 6. Relevance is connected with a sense of purpose regarding the topics (questions 2, 3, and 4). Confidence develops from the dynamic and interactive manner of learning with natural feedback (questions 7, 8, and 9). Satisfaction is the students’ emotional reaction to the experience and is covered by questions 10, 11, and 12.
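The item-to-category mapping described above can be expressed as a small scoring routine. The sketch below is illustrative only: the function name `arcs_subscales`, the input layout (one list of 12 answers per respondent, coded 1 to 7), and the example answers are assumptions, not the authors' instrument or data.

```python
from statistics import mean

# ARCS category -> questionnaire items (1-based), as listed in the text.
ARCS_ITEMS = {
    "Attention": (1, 5, 6),
    "Relevance": (2, 3, 4),
    "Confidence": (7, 8, 9),
    "Satisfaction": (10, 11, 12),
}


def arcs_subscales(responses):
    """Mean seven-point score per ARCS category for one respondent.

    `responses` holds 12 integers in 1..7; responses[0] answers question 1.
    """
    if len(responses) != 12:
        raise ValueError("expected answers to all 12 questions")
    if any(not 1 <= r <= 7 for r in responses):
        raise ValueError("answers must lie on the 1-7 scale")
    return {
        category: mean(responses[q - 1] for q in items)
        for category, items in ARCS_ITEMS.items()
    }


# Hypothetical respondent, not data from the study.
print(arcs_subscales([6, 5, 7, 6, 7, 6, 5, 5, 6, 7, 7, 6]))
```

Keeping the mapping in a single dictionary makes it easy to check against the questionnaire in the appendix and to reuse the same grouping for every respondent.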

To measure students’ learning accomplishments, we tested their acquired knowledge with a multiple choice test (both groups took the same test). The test covered all topics and assessed how well students remembered facts about the topic, understood the concepts, and were able to apply their knowledge. Traditional testing appears to be the most complete measure of students’ achievement if it covers different learning goals (remembering, i.e., memorizing facts; understanding the memorized facts; and applying the acquired knowledge). To test the hypotheses, we used the independent samples t-test.
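As a reference for how the independent samples t-test is computed, the following sketch implements Student's pooled-variance t statistic using only the Python standard library. It assumes equal variances (the classic Student form) and returns the statistic and degrees of freedom rather than a p-value, because the standard library has no t-distribution CDF; the function name `pooled_t_test` is hypothetical. A library routine such as `scipy.stats.ttest_ind(a, b, equal_var=True)` would also report the p-value.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t_test(a, b):
    """Student's independent samples t statistic (equal variances assumed).

    Returns (t, df). statistics.variance is the sample variance (n - 1 denominator).
    """
    n1, n2 = len(a), len(b)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two observations")
    df = n1 + n2 - 2
    # Pooled estimate of the common variance, weighted by each group's degrees of freedom.
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    # Standard error of the difference between the two group means.
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean(a) - mean(b)) / se, df


# Hypothetical scores, not data from the study.
t, df = pooled_t_test([1, 2, 3, 4, 5], [3, 4, 5, 6, 7])
print(f"t = {t:.2f}, df = {df}")  # prints: t = -2.00, df = 8
```

With two groups of 21 participants, as in this study, the degrees of freedom are 21 + 21 - 2 = 40, and the two-tailed critical value at the 0.05 level is about 2.021, so an absolute t above that value corresponds to p < 0.05.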

8. Research Results

To test whether our questions measure the same construct, we calculated Cronbach’s alpha, which was 0.92. Since this indicates high internal consistency, we used each participant’s mean score across the questionnaire items in our analysis.
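For readers who want to reproduce this kind of reliability check, Cronbach's alpha for k items is k / (k - 1) * (1 - sum of item variances / variance of respondents' total scores). The standard-library sketch below computes it together with the per-participant mean score; the function names and the toy matrix are illustrative assumptions, not the study's data.

```python
from statistics import mean, variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a respondents-by-items matrix (list of equal-length lists)."""
    k = len(rows[0])
    if len(rows) < 2 or k < 2:
        raise ValueError("need at least two respondents and two items")
    # Sample variance of each item (column) across respondents.
    item_vars = [variance(column) for column in zip(*rows)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def participant_means(rows):
    """Mean item score per respondent, used as that respondent's motivation score."""
    return [mean(row) for row in rows]


# Toy matrix: 3 hypothetical respondents answering 2 items on a 1-7 scale.
answers = [[1, 2], [2, 1], [3, 3]]
print(round(cronbach_alpha(answers), 3))  # prints 0.667
print(participant_means(answers))
```

Sample variances are used throughout; because alpha is a ratio of variances, using population variances consistently would give the same value.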

Our first hypothesis (H1) was that the use of our laboratory in a flipped classroom setting would result in higher student motivation than the traditional lecture approach. To check whether our data are normally distributed, we performed the Shapiro–Wilk test; the results are presented in Table 1. The test showed no significant deviation from a normal distribution, so we proceeded to the independent samples t-test (the results are given in Table 2).

Data in Table 3 show that the group average motivation values for the flipped classroom and traditional approach were 5.88 and 5.01, respectively. Levene’s test for equality of variances showed no significant difference between the group variances, so equal variances were assumed. As the t-test significance is lower than 0.05, the difference between the groups is statistically significant, which supports our first hypothesis (H1).
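Levene's test checks the equal-variance assumption behind the pooled t-test. One common formulation (the original, mean-centred version) computes a one-way ANOVA F statistic on the absolute deviations of each observation from its group mean. The sketch below follows that formulation; the function name `levene_w` is hypothetical, and the text does not state which variant of the test was used. Note that `scipy.stats.levene` centres on the median by default, so `center='mean'` would be needed to match this version.

```python
from statistics import mean


def levene_w(*groups):
    """Levene's W (mean-centred) for two or more groups of observations.

    W is the one-way ANOVA F statistic on |y - group mean|; under the null
    hypothesis of equal variances it is compared with F(k - 1, N - k).
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k:
        raise ValueError("need at least two groups and more observations than groups")
    # Absolute deviations from each group's own mean.
    z = []
    for g in groups:
        g_mean = mean(g)
        z.append([abs(y - g_mean) for y in g])
    z_means = [mean(zi) for zi in z]
    z_grand = mean([v for zi in z for v in zi])
    # Between-group and within-group sums of squares of the deviations.
    between = sum(len(zi) * (m - z_grand) ** 2 for zi, m in zip(z, z_means))
    within = sum((v - m) ** 2 for zi, m in zip(z, z_means) for v in zi)
    if within == 0:
        raise ValueError("W is undefined when deviations do not vary within groups")
    return (n_total - k) / (k - 1) * between / within


# Hypothetical groups, not data from the study.
print(round(levene_w([1, 2, 3], [2, 4, 6]), 3))  # prints 0.8
```

With two groups of 21, W would be compared with F(1, 40), whose 5% critical value is about 4.08; a W below that value corresponds to a non-significant Levene's test at the 0.05 level.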

To determine whether there were any differences in prior knowledge (i.e., knowledge before taking this course), students from both groups were given an entry test on basic biometric concepts. The Shapiro–Wilk test showed that the entry-test data did not deviate significantly from a normal distribution (Table 4), so we continued our analysis with the independent samples t-test. The results are presented in Table 5. The flipped classroom group had an average result of 63.33 points, while the traditional group had an average result of 60.52 points.

As the t-test significance is greater than our chosen significance level, we concluded that there was no statistically significant difference in prior knowledge between the two groups. Both groups demonstrated some knowledge of biometrics.

After the completion of the acquisition part of the course, we used students’ grades as indicators of learning outcomes. The results of the Shapiro–Wilk test (Table 6) showed no significant deviation from a normal distribution, so we proceeded to the independent samples t-test, whose results are presented in Table 7.

The traditional group had an average result of 76.05 points, while the flipped classroom group had an average result of 85.8 points. As the significance value is lower than 0.05, there is a statistically significant difference in results between the two groups, which supports our second hypothesis (H2).

In summary, the analysis supports our first hypothesis (H1): there is a statistically significant difference in motivation (engagement) between the students who attended the flipped classroom laboratory environment and those who learned in the traditional way. The analysis also supports our second hypothesis (H2): there is a statistically significant difference in learning outcomes between the students who attended the flipped classroom laboratory environment and those who learned in the traditional way.

9. Conclusions

In this paper, a fingerprint acquisition laboratory with specially developed software tools was presented. The laboratory provided a simulation of various environmental conditions and their impact on fingerprint image quality. The IoT approach allowed students to gain more insights into the biometric acquisition process. Different types of sensors were used to collect data, which was stored in a database in order to be available for further evaluation. Visualization tools were provided for students, in order to allow them to analyze the impact of environmental conditions on biometric recognition precision.

Results show that students learning about biometric acquisition in our laboratory were more motivated during the learning process than those learning in the traditional way. This is consistent with previous research [33,34]. Learning in an environment designed for the topic, with more engagement in the learning process and timely feedback on their progress, leads to an increase in student satisfaction. Consequently, students are also likely to be more motivated. Such an environment triggers intrinsic motivation [30], and this result may be considered evidence supporting the value of Keller’s ARCS model.

Whether “unconventional” learning approaches yield benefits often depends on the syllabus and, of course, on the methodology used. In this paper, we demonstrated the advantage of our concept for the Biometric Technologies course. We believe that this is the result of a good match between the course subject and our teaching method, which brings students into real-life situations (as outlined in this paper), thereby giving them a better understanding of the usage and relevance of more abstract concepts. Therefore, we conclude that the flipped classroom approach, together with the specially created tools, led to better learning outcomes for the flipped classroom group.

As our experiment had a specific domain and environment, the described approach might not be useful for other purposes. It should also be taken into consideration that various learning (and teaching) approaches bring diverse advantages depending on individual differences among students. For example, cognitive style [32] and learning style [45] have been shown to be relevant.

Moreover, as the use of the fingerprint acquisition laboratory has proved beneficial for the Biometric Technologies course, extending it to other biometric modalities would be a good direction for further work. Future versions of such laboratory settings should also explore ways to promote teamwork among students, as biometrics is a multidisciplinary field and practitioners will usually have to collaborate with experts in other fields.