Analyzing and Predicting Students’ Performance by Means of Machine Learning: A Review

Abstract

1. Introduction

2. Methodology

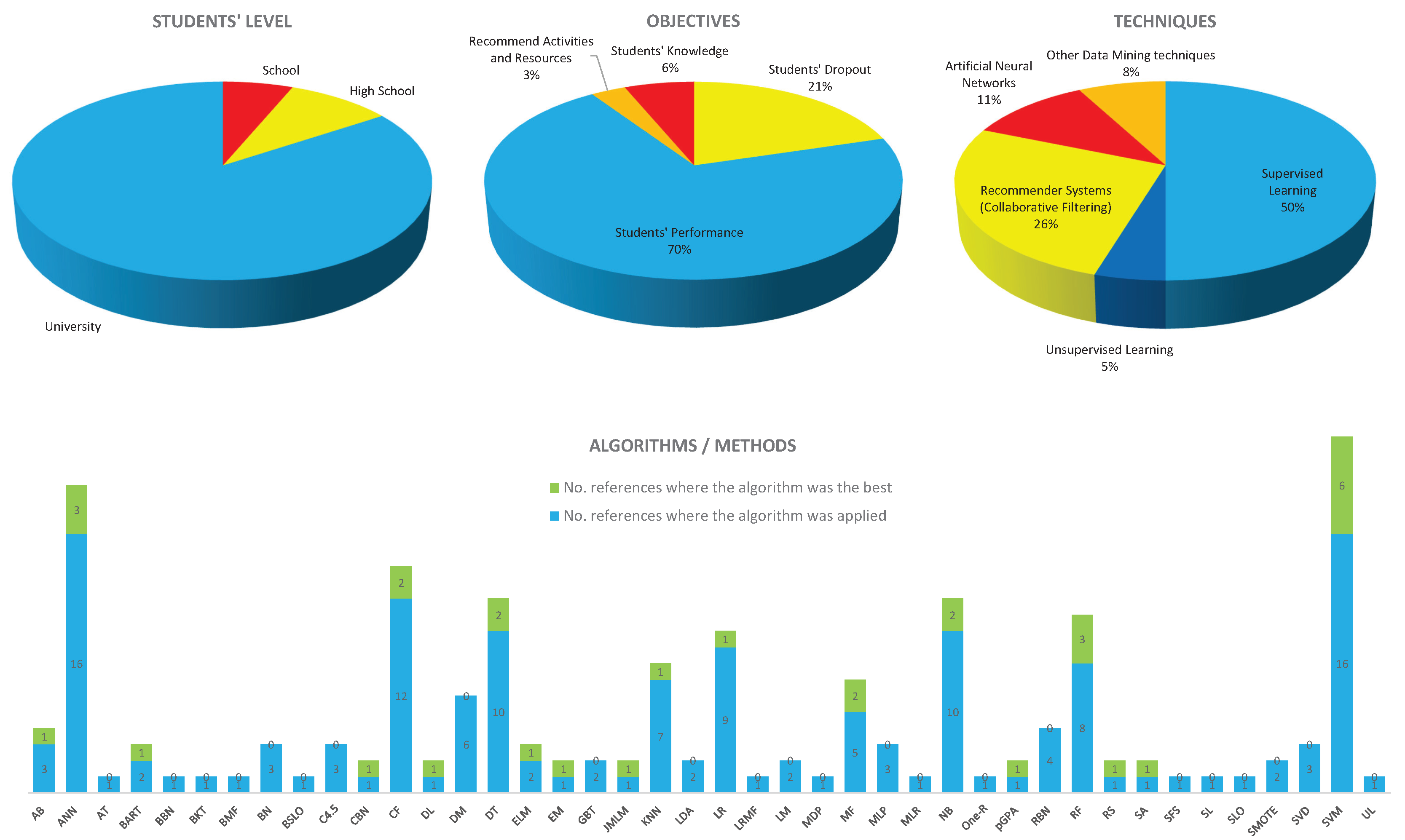

- Students’ level: Each reviewed work analyzes datasets built from students at a particular level. We consider three broad levels: School (S), High School (HS), and University (U).

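- Objectives: The objectives relate to the interests and risks in students’ learning processes. They fall into four groups: student dropout, student performance, recommendation of activities and resources, and students’ knowledge.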

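- Techniques: The techniques comprise the algorithms, methods, and tools that process the data to analyze and predict the above objectives. They are grouped into supervised learning, unsupervised learning, recommender systems (collaborative filtering), artificial neural networks, and data mining techniques.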

- Algorithms and methods: The main algorithms and computational methods applied in each case are detailed in Table 1. Related algorithms, or other versions of those listed, that are not shown in the table may also have been applied. Shaded cells mark the best algorithms found when several methods were compared for the same purpose.
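The classification scheme above can be pictured as one record per reviewed work. The Python sketch below is a hypothetical illustration, not the authors’ tooling: the `ReviewedWork` class, its field names, and the `by_level` helper are assumptions made for this example. Only the students’ level of each reference is taken from Table 1 as transcribed here; the single fully populated record is invented purely to show how objectives, techniques, algorithms, and the shaded "best" algorithm could be stored together.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass, field

# Students' level codes used in Table 1.
LEVELS = {"S": "School", "HS": "High School", "U": "University"}


@dataclass
class ReviewedWork:
    """One row of a Table 1-style classification (hypothetical structure)."""

    reference: int
    level: str  # one of the keys of LEVELS
    objectives: set[str] = field(default_factory=set)
    techniques: set[str] = field(default_factory=set)
    algorithms: set[str] = field(default_factory=set)
    # The "shaded cell": the best algorithm when several were compared, if any.
    best_algorithm: str | None = None

    def __post_init__(self) -> None:
        # Reject level codes outside the three broad levels.
        if self.level not in LEVELS:
            raise ValueError(f"unknown students' level: {self.level!r}")
        # A best algorithm only makes sense if it was among those compared.
        if self.best_algorithm is not None and self.best_algorithm not in self.algorithms:
            raise ValueError("best_algorithm must be one of the compared algorithms")


def by_level(works: list[ReviewedWork], level: str) -> list[int]:
    """Return the reference numbers of the works at the given students' level."""
    return [w.reference for w in works if w.level == level]


# Students' level per reference [2]-[65], as transcribed from Table 1.
SCHOOL_REFS = {14, 25, 59, 62}
HIGH_SCHOOL_REFS = {3, 20, 23, 24, 33}
rows = [
    ReviewedWork(ref, "S" if ref in SCHOOL_REFS else "HS" if ref in HIGH_SCHOOL_REFS else "U")
    for ref in range(2, 66)
]
level_counts = Counter(w.level for w in rows)

# A hypothetical, fully populated record (reference 0 is not a reviewed work).
example = ReviewedWork(
    reference=0,
    level="U",
    objectives={"Students' Dropout"},
    techniques={"Supervised Learning"},
    algorithms={"DT", "NB", "SVM"},
    best_algorithm="SVM",
)

if __name__ == "__main__":
    for code, name in LEVELS.items():
        print(f"{name} ({code}): {level_counts[code]} references")
    print("School-level references:", by_level(rows, "S"))
```

Run as a script, it reports 55 University, 5 High School, and 4 School references among [2]–[65], following the level column as it appears in this version of Table 1. Using sets for objectives, techniques, and algorithms reflects that a single work can be marked in several columns of each group.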

3. Techniques

3.1. Machine Learning

3.1.1. Supervised Learning

3.1.2. Unsupervised Learning

3.2. Recommender Systems

Collaborative Filtering

3.3. Artificial Neural Networks

3.4. Impact of the Techniques

4. Objectives

4.1. Student Dropout

4.2. Student Performance

4.3. Recommending Activities and Resources

4.4. Students’ Knowledge

5. Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AB | AdaBoost |
| ANN | Artificial Neural Networks |
| AT | Answer Tree |
| BART | Bayesian Additive Regression Trees |
| BBN | Bayesian Belief Network |
| BKT | Bayesian Knowledge Tracing |
| BMF | Biased Matrix Factorization |
| BN | Bayesian Networks |
| BSLO | Bipolar Slope One |
| CBN | Combination of Multiple Classifiers |
| CF | Collaborative Filtering |
| DL | Deep Learning |
| DM | Data Mining |
| DT | Decision Tree |
| ELM | Extreme Learning Machine |
| EM | Expectation-Maximization |
| GBT | Gradient Boosted Tree |
| JMLM | Jacobian Matrix-Based Learning Machine |
| KNN | K-Nearest Neighbor |
| LDA | Latent Dirichlet Allocation |
| LR | Logistic Regression |
| LRMF | Low-Rank Matrix Factorization |
| LM | Linear Models |
| MDP | Markov Decision Process |
| MF | Matrix Factorization |
| ML | Machine Learning |
| MLP | Multilayer Perceptron |
| MLR | Multiple Linear Regression |
| NB | Naïve Bayes |
| pGPA | Grade Prediction Advisor |
| RBN | Radial Basis Networks |
| RF | Random Forests |
| RS | Recommender Systems |
| SA | Survival Analysis |
| SFS | Sequential Forward Selection |
| SL | Supervised Learning |
| SLO | Slope One |
| SMOTE | Synthetic Minority Over-Sampling Technique |
| SVD | Singular Value Decomposition |
| SVM | Support Vector Machine |
| UL | Unsupervised Learning |

References

1. Han, J.; Kamber, M.; Pei, J. Data Mining: Concepts and Techniques, 3rd ed.; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2011.

2. Kotsiantis, S.; Pierrakeas, C.; Pintelas, P. Predicting Students’ Performance in Distance Learning using Machine Learning Techniques. Appl. Artif. Intell. 2004, 18, 411–426.

3. Navamani, J.; Kannammal, A. Predicting performance of schools by applying data mining techniques on public examination results. Res. J. Appl. Sci. Eng. Technol. 2015, 9, 262–271.

4. Moseley, L.; Mead, D. Predicting who will drop out of nursing courses: A machine learning exercise. Nurse Educ. Today 2008, 28, 469–475.

5. Nandeshwar, A.; Menzies, T.; Nelson, A. Learning patterns of university student retention. Expert Syst. Appl. 2011, 38, 14984–14996.

6. Thammasiri, D.; Delen, D.; Meesad, P.; Kasap, N. A critical assessment of imbalanced class distribution problem: The case of predicting freshmen student attrition. Expert Syst. Appl. 2014, 41, 321–330.

7. Dewan, M.; Lin, F.; Wen, D.; Kinshuk. Predicting dropout-prone students in e-learning education system. In Proceedings of the 2015 IEEE 12th Intl Conference on Ubiquitous Intelligence and Computing and 2015 IEEE 12th Intl Conference on Autonomic and Trusted Computing and 2015 IEEE 15th Intl Conference on Scalable Computing and Communications and Its Associated Workshops (UIC-ATC-ScalCom), Beijing, China, 14 August 2016; pp. 1735–1740.

8. Tan, M.; Shao, P. Prediction of student dropout in E-learning program through the use of machine learning method. Int. J. Emerg. Technol. Learn. 2015, 10, 11–17.

9. Sultana, S.; Khan, S.; Abbas, M. Predicting performance of electrical engineering students using cognitive and non-cognitive features for identification of potential dropouts. Int. J. Electr. Eng. Educ. 2017, 54, 105–118.

10. Chen, Y.; Johri, A.; Rangwala, H. Running out of STEM: A comparative study across STEM majors of college students At-Risk of dropping out early. In Proceedings of the 8th International Conference on Learning Analytics and Knowledge, Sydney, Australia, 7–9 March 2018; pp. 270–279.

11. Nagy, M.; Molontay, R. Predicting Dropout in Higher Education Based on Secondary School Performance. In Proceedings of the 2018 IEEE 22nd International Conference on Intelligent Engineering Systems (INES), Las Palmas de Gran Canaria, Spain, 21 June 2018; pp. 000389–000394.

12. Serra, A.; Perchinunno, P.; Bilancia, M. Predicting student dropouts in higher education using supervised classification algorithms. Lect. Notes Comput. Sci. 2018, 10962 LNCS, 18–33.

13. Gray, C.; Perkins, D. Utilizing early engagement and machine learning to predict student outcomes. Comput. Educ. 2019, 131, 22–32.

14. Chung, J.; Lee, S. Dropout early warning systems for high school students using machine learning. Child. Youth Serv. Rev. 2019, 96, 346–353.

15. Hussain, M.; Zhu, W.; Zhang, W.; Abidi, S.; Ali, S. Using machine learning to predict student difficulties from learning session data. Artif. Intell. Rev. 2018, 52, 1–27.

16. Huang, S.; Fang, N. Predicting student academic performance in an engineering dynamics course: A comparison of four types of predictive mathematical models. Comput. Educ. 2013, 61, 133–145.

17. Slim, A.; Heileman, G.L.; Kozlick, J.; Abdallah, C.T. Predicting student success based on prior performance. In Proceedings of the 2014 IEEE Symposium on Computational Intelligence and Data Mining (CIDM), Singapore, 16 April 2015; pp. 410–415.

18. Zhao, C.; Yang, J.; Liang, J.; Li, C. Discover learning behavior patterns to predict certification. In Proceedings of the 2016 11th International Conference on Computer Science & Education (ICCSE), Nagoya, Japan, 23 August 2016; pp. 69–73.

19. Chaudhury, P.; Mishra, S.; Tripathy, H.; Kishore, B. Enhancing the capabilities of student result prediction system. In Proceedings of the Second International Conference on Information and Communication Technology for Competitive Strategies, Udaipur, India, 4–5 March 2016.

20. Nespereira, C.; Elhariri, E.; El-Bendary, N.; Vilas, A.; Redondo, R. Machine learning based classification approach for predicting students performance in blended learning. Adv. Intell. Syst. Comput. 2016, 407, 47–56.

21. Sagar, M.; Gupta, A.; Kaushal, R. Performance prediction and behavioral analysis of student programming ability. In Proceedings of the 2016 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Jaipur, India, 21 September 2016; pp. 1039–1045.

22. Verhun, V.; Batyuk, A.; Voityshyn, V. Learning Analysis as a Tool for Predicting Student Performance. In Proceedings of the 2018 IEEE 13th International Scientific and Technical Conference on Computer Sciences and Information Technologies (CSIT), Lviv, Ukraine, 11 September 2018; Volume 2, pp. 76–79.

23. Backenköhler, M.; Wolf, V. Student performance prediction and optimal course selection: An MDP approach. Lect. Notes Comput. Sci. 2018, 10729 LNCS, 40–47.

24. Hsieh, Y.Z.; Su, M.C.; Jeng, Y.L. The Jacobian matrix-based learning machine in student. Lect. Notes Comput. Sci. 2017, 10676 LNCS, 469–474.

25. Han, M.; Tong, M.; Chen, M.; Liu, J.; Liu, C. Application of Ensemble Algorithm in Students’ Performance Prediction. In Proceedings of the 2017 6th IIAI International Congress on Advanced Applied Informatics (IIAI-AAI), Hamamatsu, Japan, 16 November 2017; pp. 735–740.

26. Shanthini, A.; Vinodhini, G.; Chandrasekaran, R. Predicting students’ academic performance in the University using meta decision tree classifiers. J. Comput. Sci. 2018, 14, 654–662.

27. Ma, C.; Yao, B.; Ge, F.; Pan, Y.; Guo, Y. Improving prediction of student performance based on multiple feature selection approaches. In Proceedings of the ICEBT 2017, Toronto, ON, Canada, 10–12 September 2017; pp. 36–41.

28. Tekin, A. Early prediction of students’ grade point averages at graduation: A data mining approach [Öğrencinin mezuniyet notunun erken tahmini: Bir veri madenciliği yaklaşımı]. Egit. Arastirmalari Eurasian J. Educ. Res. 2014, 207–226.

29. Pushpa, S.; Manjunath, T.; Mrunal, T.; Singh, A.; Suhas, C. Class result prediction using machine learning. In Proceedings of the 2017 International Conference On Smart Technologies For Smart Nation (SmartTechCon), Bengaluru, India, 19 August 2018; pp. 1208–1212.

30. Howard, E.; Meehan, M.; Parnell, A. Contrasting prediction methods for early warning systems at undergraduate level. Internet High. Educ. 2018, 37, 66–75.

31. Villagrá-Arnedo, C.; Gallego-Duran, F.; Compan-Rosique, P.; Llorens-Largo, F.; Molina-Carmona, R. Predicting academic performance from Behavioural and learning data. Int. J. Des. Nat. Ecodyn. 2016, 11, 239–249.

32. Sorour, S.; Goda, K.; Mine, T. Estimation of Student Performance by Considering Consecutive Lessons. In Proceedings of the 4th International Congress on Advanced Applied Informatics, Okayama, Japan, 12 June 2016; pp. 121–126.

33. Guo, B.; Zhang, R.; Xu, G.; Shi, C.; Yang, L. Predicting Students Performance in Educational Data Mining. In Proceedings of the 2015 International Symposium on Educational Technology (ISET), Wuhan, China, 24 March 2016; pp. 125–128.

34. Rana, S.; Garg, R. Prediction of students performance of an institute using ClassificationViaClustering and ClassificationViaRegression. Adv. Intell. Syst. Comput. 2017, 508, 333–343.

35. Anand, V.K.; Abdul Rahiman, S.K.; Ben George, E.; Huda, A.S. Recursive clustering technique for students’ performance evaluation in programming courses. In Proceedings of the 2018 Majan International Conference (MIC), Muscat, Oman, 19 March 2018; pp. 1–5.

36. Bydžovská, H. Student performance prediction using collaborative filtering methods. Lect. Notes Comput. Sci. 2015, 9112, 550–553.

37. Bydžovská, H. Are collaborative filtering methods suitable for student performance prediction? Lect. Notes Comput. Sci. 2015, 9273, 425–430.

38. Park, Y. Predicting personalized student performance in computing-related majors via collaborative filtering. In Proceedings of the 19th Annual SIG Conference on Information Technology Education, Fort Lauderdale, FL, USA, 3 October 2018; p. 151.

39. Liou, C.H. Personalized article recommendation based on student’s rating mechanism in an online discussion forum. In Proceedings of the 2016 49th Hawaii International Conference on System Sciences (HICSS), Koloa, HI, USA, 5–8 January 2016; pp. 60–65.

40. Elbadrawy, A.; Karypis, G. Domain-aware grade prediction and top-n course recommendation. In Proceedings of the 10th ACM Conference on Recommender Systems, Boston, MA, USA, 15 September 2016; pp. 183–190.

41. Pero, Š.; Horváth, T. Comparison of collaborative-filtering techniques for small-scale student performance prediction task. Lect. Notes Electr. Eng. 2015, 313, 111–116.

42. Song, Y.; Jin, Y.; Zheng, X.; Han, H.; Zhong, Y.; Zhao, X. PSFK: A student performance prediction scheme for first-encounter knowledge in ITS. Lect. Notes Comput. Sci. 2015, 9403, 639–650.

43. Xu, K.; Liu, R.; Sun, Y.; Zou, K.; Huang, Y.; Zhang, X. Improve the prediction of student performance with hint’s assistance based on an efficient non-negative factorization. IEICE Trans. Inf. Syst. 2017, E100D, 768–775.

44. Sheehan, M.; Park, Y. pGPA: A personalized grade prediction tool to aid student success. In Proceedings of the Sixth ACM Conference on Recommender Systems, Dublin, Ireland, 3 September 2012; pp. 309–310.

45. Lorenzen, S.; Pham, N.; Alstrup, S. On predicting student performance using low-rank matrix factorization techniques. In Proceedings of the 9th European Conference on E-Learning (ECEL 2010), Porto, Portugal, 5 November 2010; pp. 326–334.

46. Houbraken, M.; Sun, C.; Smirnov, E.; Driessens, K. Discovering hidden course requirements and student competences from grade data. In Proceedings of the UMAP ’17: Adjunct Publication of the 25th Conference on User Modeling, Adaptation and Personalization, Bratislava, Slovakia, 9–10 July 2017; pp. 147–152.

47. Gómez-Pulido, J.; Cortés-Toro, E.; Durán-Domínguez, A.; Crawford, B.; Soto, R. Novel and Classic Metaheuristics for Tunning a Recommender System for Predicting Student Performance in Online Campus. Lect. Notes Comput. Sci. 2018, 11314 LNCS, 125–133.

48. Chavarriaga, O.; Florian-Gaviria, B.; Solarte, O. A recommender system for students based on social knowledge and assessment data of competences. Lect. Notes Comput. Sci. 2014, 8719 LNCS, 56–69.

49. Jembere, E.; Rawatlal, R.; Pillay, A. Matrix Factorisation for Predicting Student Performance. In Proceedings of the 2017 7th World Engineering Education Forum (WEEF), Kuala Lumpur, Malaysia, 16 November 2018; pp. 513–518.

50. Sweeney, M.; Lester, J.; Rangwala, H. Next-term student grade prediction. In Proceedings of the 2015 IEEE International Conference on Big Data (Big Data), Santa Clara, CA, USA, 1 November 2015; pp. 970–975.

51. Adán-Coello, J.; Tobar, C. Using collaborative filtering algorithms for predicting student performance. Lect. Notes Comput. Sci. 2016, 9831 LNCS, 206–218.

52. Rechkoski, L.; Ajanovski, V.; Mihova, M. Evaluation of grade prediction using model-based collaborative filtering methods. In Proceedings of the 2018 IEEE Global Engineering Education Conference (EDUCON), Santa Cruz de Tenerife, Spain, 17 April 2018; pp. 1096–1103.

53. Adewale Amoo, M.; Olumuyiwa, A.; Lateef, U. Predictive modelling and analysis of academic performance of secondary school students: Artificial Neural Network approach. Int. J. Sci. Technol. Educ. Res. 2018, 9, 1–8.

54. Gedeon, T.; Turner, H. Explaining student grades predicted by a neural network. In Proceedings of the 1993 International Conference on Neural Networks, Nagoya, Japan, 25 October 1993; Volume 1, pp. 609–612.

55. Arsad, P.M.; Buniyamin, N.; Manan, J.A. A neural network students’ performance prediction model (NNSPPM). In Proceedings of the 2013 IEEE International Conference on Smart Instrumentation, Measurement and Applications (ICSIMA), Kuala Lumpur, Malaysia, 27 November 2013; pp. 1–5.

56. Iyanda, A.; D. Ninan, O.; Ajayi, A.; G. Anyabolu, O. Predicting Student Academic Performance in Computer Science Courses: A Comparison of Neural Network Models. Int. J. Mod. Educ. Comput. Sci. 2018, 10, 1–9.

57. Dharmasaroja, P.; Kingkaew, N. Application of artificial neural networks for prediction of learning performances. In Proceedings of the 2016 12th International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery (ICNC-FSKD), Changsha, China, 13 August 2016; pp. 745–751.

58. Musso, M.; Kyndt, E.; Cascallar, E.; Dochy, F. Predicting general academic performance and identifying the differential contribution of participating variables using artificial neural networks. Frontline Learn. Res. 2013, 1, 42–71.

59. Mala Sari Rochman, E.; Rachmad, A.; Damayanti, F. Predicting the Final result of Student National Test with Extreme Learning Machine. Pancar. Pendidik. 2018, 7.

60. Villegas-Ch, W.; Lujan-Mora, S.; Buenano-Fernandez, D.; Roman-Canizares, M. Analysis of web-based learning systems by data mining. In Proceedings of the 2017 IEEE Second Ecuador Technical Chapters Meeting (ETCM), Guayas, Ecuador, 16 October 2018; pp. 1–5.

61. Karagiannis, I.; Satratzemi, M. An adaptive mechanism for Moodle based on automatic detection of learning styles. Educ. Inf. Technol. 2018, 23, 1331–1357.

62. Masci, C.; Johnes, G.; Agasisti, T. Student and school performance across countries: A machine learning approach. Eur. J. Oper. Res. 2018, 269, 1072–1085.

63. Johnson, W. Data mining and machine learning in education with focus in undergraduate cs student success. In Proceedings of the 2018 ACM Conference on International Computing Education Research, Espoo, Finland, 13–15 August 2018; pp. 270–271.

64. Liu, D.; Richards, D.; Froissard, C.; Atif, A. Validating the effectiveness of the moodle engagement analytics plugin to predict student academic performance. In Proceedings of the 21st Americas Conference on Information Systems (AMCIS 2015), Fajardo, Puerto Rico, 13 August 2015.

65. Saqr, M.; Fors, U.; Tedre, M. How learning analytics can early predict under-achieving students in a blended medical education course. Med. Teach. 2017, 39, 757–767.

66. Kotsiantis, S. Supervised machine learning: A review of classification techniques. Informatica 2007, 31, 249–268.

67. Hosmer, D.; Lemeshow, S.; Sturdivant, R. Applied Logistic Regression; Wiley Series in Probability and Statistics; Wiley: Hoboken, NJ, USA, 2013.

68. Gentleman, R.; Carey, V.J. Unsupervised Machine Learning. In Bioconductor Case Studies; Springer: New York, NY, USA, 2008; pp. 137–157.

69. Bobadilla, J.; Ortega, F.; Hernando, A.; Gutiérrez, A. Recommender systems survey. Knowl. Based Syst. 2013, 46, 109–132.

| Objectives | Techniques | Algorithms and Methods (2)(3) | ||||||||||||||||||||||||||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Reference | Students’ Level (1) | Students’ Dropout | Students’ Performance | Recommend Activities and Resources | Students’ Knowledge | Supervised Learning | Unsupervised Learning | Recommender Systems (C. Filtering) | Artificial Neural Networks | Data Mining Techniques | AB | ANN | AT | BART | BBN | BKT | BMF | BN | BSLO | C4.5 | CBN | CF | DL | DM | DT | ELM | EM | GBT | JMLM | KNN | LDA | LR | LRMF | LM | MDP | MF | MLP | MLR | NB | One-R | pGPA | RBN | RF | RS | SA | SFS | SL | SLO | SMOTE | SVD | SVM | UL |

| [2] | U | × | × | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||||

| [3] | HS | × | × | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||

| [4] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [5] | U | × | × | × | × | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||

| [6] | U | × | × | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||||

| [7] | U | × | × | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||||

| [8] | U | × | × | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||

| [9] | U | × | × | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||||

| [10] | U | × | × | × | × | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||

| [11] | U | × | × | × | × | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||

| [12] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [13] | U | × | × | × | × | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||

| [14] | S | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [15] | U | × | × | × | × | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||

| [16] | U | × | × | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||||

| [17] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [18] | U | × | × | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||

| [19] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [20] | HS | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||||||

| [21] | U | × | × | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||

| [22] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [23] | HS | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [24] | HS | × | × | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||

| [25] | S | × | × | × | × | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||

| [26] | U | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||||||

| [27] | U | × | × | × | × | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||

| [28] | U | × | × | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||

| [29] | U | × | × | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||||

| [30] | U | × | × | × | × | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||

| [31] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [32] | U | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||||||

| [33] | HS | × | × | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||||

| [34] | U | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||||||

| [35] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [36] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [37] | U | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||||||

| [38] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [39] | U | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||||||

| [40] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [41] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [42] | U | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||||||

| [43] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [44] | U | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||||||

| [45] | U | × | × | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||

| [46] | U | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||||||

| [47] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [48] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [49] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [50] | U | × | × | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||

| [51] | U | × | × | × | × | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||

| [52] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [53] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [54] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [55] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [56] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [57] | U | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||||||

| [58] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [59] | S | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||||||

| [60] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [61] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [62] | S | × | × | × | × | |||||||||||||||||||||||||||||||||||||||||||||||

| [63] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [64] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

| [65] | U | × | × | × | ||||||||||||||||||||||||||||||||||||||||||||||||

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Rastrollo-Guerrero, J.L.; Gómez-Pulido, J.A.; Durán-Domínguez, A. Analyzing and Predicting Students’ Performance by Means of Machine Learning: A Review. Appl. Sci. 2020, 10, 1042. https://doi.org/10.3390/app10031042
