Abstract

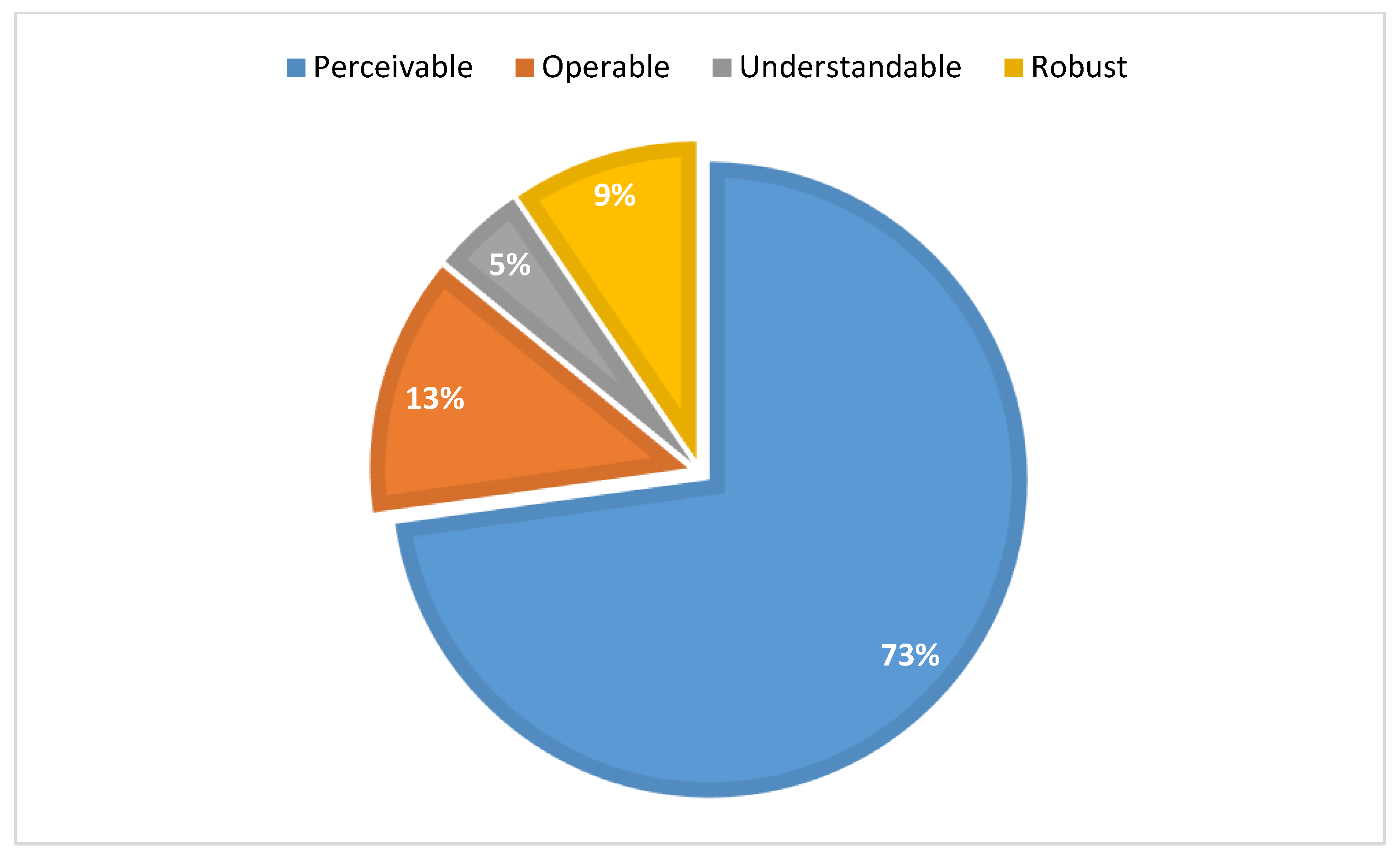

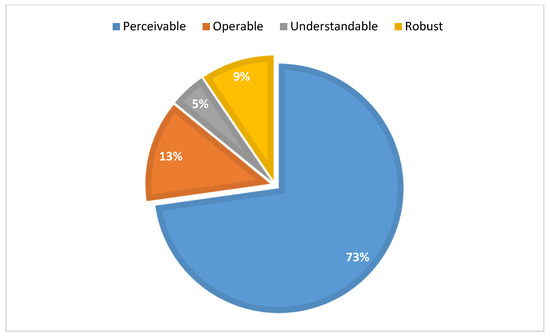

The Saudi government pays great attention to the usability and accessibility issues of e-government systems. E-government educational systems, such as Noor, Faris, and iEN systems, are some of the most rapidly developing e-government systems. In this study, we used a mixed-methods approach (usability testing and automated tools evaluation) to investigate the degree of difficulty faced by teachers with visual impairment while accessing such systems. The usability testing was conducted with four visually impaired teachers. In addition, four automated tools, namely, AChecker, HTML_CodeSniffer, SortSite, and Total Validator, were utilized in this study. The results showed that all three systems failed to support screen readers effectively, which was the main issue reported by the participants. In addition, the automated evaluation tools helped to identify the most prominent accessibility issues of these systems. The perceivable principle was the principle most violated by the three systems, followed by operable, and then robust. The errors corresponding to the perceivable principle alone represented around 73% of the total errors. Moreover, further analysis revealed that most of the detected errors violated even the lowest level of accessibility conformance, which reflected the failure of these systems to pass the accessibility evaluation.

1. Introduction

Digital transformation is a challenge that is facing governments around the world, forcing them to re-engineer their processes and explore new models for providing services in order to provide reliable and cost-effective information and knowledge services [1].

The concept of e-government was introduced in the field of public administration in the late 1990s. It can be defined as the use of information communication technologies (ICTs), such as computers and the Internet, to provide citizens and businesses with services and information in a more convenient and effective way [2].

To define the scope of e-government studies, Fang [2] defined eight different models in an e-government system: government-to-citizen (G2C), citizen-to-government (C2G), government-to-business (G2B), business-to-government (B2G), government-to-government (G2G), government-to-nonprofit (G2N), nonprofit-to-government (N2G), and government-to-employee (G2E).

In recent years, the implementation of e-government has grown significantly. In Saudi Arabia, many e-government systems are provided in different sectors, including human resources, education, health, and trading. These systems have radically transformed communication between citizens and the public sector. According to the United Nations e-Government Survey [2], Saudi Arabia was ranked 43rd among 193 countries in 2020, with an e-Government Development Index equal to 0.799. In 2008, by comparison, Saudi Arabia was ranked 70th among 193 countries, with an e-Government Development Index equal to 0.4935. This improvement reflects the considerable attention that the Saudi government has paid to e-government development.

In the educational sector, e-government systems, such as Noor, Faris, and iEN, have been utilized to provide many services to teachers, students, and parents. Noor is an educational management system that serves all schools managed by the Saudi Ministry of Education (MOE) directorates. The system provides a wide range of online services for students, teachers, parents, and the schools’ directors. These services include students’ registration, entering and auditing students’ grades, class schedules, and generating all required reports on the educational processes. The Faris system is one of the essential services that target teachers and school administrators. It provides them with essential human resources services, such as vacation requests, salary queries, and employee information. iEN, on the other hand, is a national educational portal that provides distance- and e-learning services for teachers, students, and parents. In recent years, the MOE in Saudi Arabia has shown a growing interest in the inclusion of visually impaired (VI) students and teachers in public schools. Despite the efforts that the MOE has been exerting to facilitate the educational process for visually impaired teachers [3], the e-government systems in the educational sector still have some limitations regarding their accessibility and usability for this group.

According to the International Organization for Standardization (ISO) [4], accessibility is defined as “the usability of a product, service, environment or facility by people with the widest range of capabilities.” Accessibility and usability are closely related aspects of developing a website that works for all. Usability is considered a broad umbrella that includes accessibility [4]. This implies that for a website to be accessible for specific users, it should be usable by those users, where the users in our case are VI teachers. Therefore, this study aimed to evaluate the accessibility of Saudi e-government systems for teachers, identify the accessibility and usability issues from a visually impaired user’s perspective, and answer the following research questions:

- Q1: How accessible are the Saudi e-government systems for visually impaired teachers?

- Q2: What are the key difficulties faced by visually impaired teachers while using Saudi e-government systems for teachers?

First, we conducted an automatic evaluation using different accessibility evaluation tools, namely, Total Validator [5], HTML_CodeSniffer [6], SortSite [7], and AChecker [8], to assess the evaluated systems against the Web Content Accessibility Guidelines (WCAG) 2.0, which have three levels: A, AA, and AAA. Level A is the basic level of web accessibility features and has priority. Level AA involves the intermediate guidelines that deal with the most common barriers for disabled users. The highest level, which is level AAA, deals with the most complex issues in web accessibility. Second, we conducted a usability evaluation with four VI participants to measure the efficiency, effectiveness, and users’ satisfaction [4] with the Noor, Faris, and iEN systems. We hope that this research will have a positive impact on raising awareness of the importance of designing accessible solutions that guarantee equivalent access to all users. The contribution of this study is that it is the first to evaluate Arabic transactional websites for the visually impaired.
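To illustrate how findings reported against WCAG 2.0 can be grouped by principle and conformance level, the following is a minimal sketch. It relies on the WCAG 2.0 numbering scheme, in which the first digit of a success criterion identifies its principle. The `LEVELS` table covers only a small subset of criteria, and the `sample` list is hypothetical data chosen for illustration, not the findings of this study; the function names are likewise assumptions.

```python
from collections import Counter

# WCAG 2.0 numbers each success criterion as principle.guideline.criterion;
# the first digit identifies the principle.
PRINCIPLES = {
    "1": "perceivable",
    "2": "operable",
    "3": "understandable",
    "4": "robust",
}

# Conformance levels for a small subset of WCAG 2.0 success criteria.
LEVELS = {
    "1.1.1": "A", "1.3.1": "A", "1.4.3": "AA", "1.4.6": "AAA",
    "2.4.4": "A", "3.1.1": "A", "4.1.1": "A", "4.1.2": "A",
}


def summarize(findings):
    """Count findings per principle and per conformance level.

    findings: iterable of success-criterion ids such as "1.1.1".
    """
    by_principle = Counter(PRINCIPLES[sc.split(".")[0]] for sc in findings)
    by_level = Counter(LEVELS[sc] for sc in findings)
    return by_principle, by_level


def share(counter, key):
    """Return the fraction of all counted findings that fall under `key`."""
    total = sum(counter.values())
    return counter[key] / total if total else 0.0


# Hypothetical findings (NOT the study's data).
sample = ["1.1.1", "1.1.1", "1.3.1", "1.4.3", "2.4.4", "4.1.2"]
by_principle, by_level = summarize(sample)
print(by_principle)
print(by_level)
print(round(share(by_principle, "perceivable"), 2))
```

A share computed this way corresponds to the kind of per-principle percentage reported in the abstract, and the per-level counts show how many findings fall at level A, the lowest conformance level.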

The rest of this paper is structured as follows. The next section will present the related work. Section 3 will describe our methods in detail, including automatic tools evaluation and usability testing. Section 4 will show the results of the evaluation. Finally, we conclude the paper with some recommendations and suggestions for future work.

2. Related Work

The previous literature has evaluated the accessibility of websites using a variety of methods, including heuristic evaluation, user testing, or a combination of both. In this section, we present studies that are related to our present work. We tried to cover a variety of studies that conducted an accessibility evaluation of educational, governmental, and transactional websites for VI users.

2.1. Accessibility Testing Using Heuristic Evaluation

Heuristic evaluation is the use of manual or automated tools to measure the accessibility of websites. Automated heuristic evaluation tools have grabbed the attention of software developers as an effective mechanism for evaluating web accessibility and usability. Automated tools are online services or software programs that are designed to check whether the code or content of web pages complies with accessibility guidelines or not [9].

Several usability and accessibility studies evaluated e-government websites by checking their compliance with international standards, such as the WCAG 2.0 or Nielsen usability guidelines [10]. In [11], a variety of e-government sites in 12 countries in the European Union (EU), Asia, and Africa were examined for their level of disability accessibility. Similar government ministries, in total six for each country, were selected for evaluation. These websites were evaluated automatically using the TAW [12] tool against the WCAG 1.0. The evaluation results showed that most of the evaluated sites did not meet the WCAG 1.0 checkpoint standards. However, the results showed that the websites of EU countries outperformed Asian and African websites in terms of compliance with all three WCAG priority levels. The authors attributed this difference in performance to the existence of strong internal disability laws in EU countries. Similarly, Wan et al. [13] evaluated the usability and accessibility of 155 government web portals in Malaysia. The results showed that these websites needed to be more accessible and usable for users. Similar results were also found in [9], in which the authors evaluated the accessibility of 45 governmental websites in Pakistan for visually impaired users. Two evaluation tools were used in this study: Functional Accessibility Evaluator and Total Validator, but the evaluations were limited to the home pages only. Some similar studies on the accessibility of government websites were also conducted by Acosta et al. [14] and Pribeanu et al. [15].

Several studies have been conducted to evaluate different governmental websites in the Arab region. In [16], the authors examined a set of common Saudi and Omani e-government websites (13 from Saudi Arabia and 14 ministries’ sites from Oman). They examined accessibility guidelines, evaluation methods, and the analysis tools used. The evaluation was restricted to the conformance to an “A” rating. Using the Bobby tool for the evaluation, the results showed that none of the evaluated websites conformed to all “A” rating checkpoints. Following up on a previous study [17] conducted in 2010, Al-Khalifa et al. [18] re-evaluated several Saudi government websites against the WCAG 2.0 guidelines to check whether the accessibility of these websites had improved since 2010. They examined 34 websites and selected three representative web pages from each site in both versions: Arabic and English. Using automatic tools, such as AChecker, Total Validator, and WAVE, the results showed an improvement in the accessibility of the evaluated websites compared to 2010; however, the authors recommended more training to enhance the websites’ accessibility. A recent study was conducted by Al-Faries et al. [19]. The study evaluated the usability and accessibility of a representative sample of the top 20 services provided by different e-government websites in Saudi Arabia, including Foreign Affairs, Civil Services, Real Estate Development, Interior, and Public Pension websites. Based on an expert’s review and automated tool assessment, the results showed that all examined websites/services had one or more accessibility violation(s) and none of them fully conformed to the WCAG 2.0 standards. In contrast, most of the tested websites/services were user-friendly and well designed. Other studies were also conducted to evaluate governmental website accessibility in Dubai [20] and Libya [21]. In [20], the authors evaluated the accessibility of 21 Dubai e-government websites to check to what extent these websites complied with accessibility guidelines (WCAG 1.0). The results showed that the most commonly detected accessibility barriers were related to the non-text elements and dynamic contents. As for [21], the authors analyzed ten government websites in Libya according to the accessibility guidelines (WCAG 2.0). The evaluation results using AChecker and TAW tools showed that almost none of the Libyan government websites complied with accessibility guidelines. Similar to [20], the most commonly identified issues were related to the non-text elements.

2.2. Accessibility Evaluation Using User Testing

While the automated tools have been extensively used in the literature to assess the websites’ accessibility, other studies have focused on the user’s perspective regarding the usability and the accessibility experiences to identify more accurate findings.

Several studies that explored the online behaviors of VI users uncovered several accessibility issues that can negatively affect VI users’ experiences. For instance, a user test was conducted by Sulong et al. [22], along with interviews, recorded observations, and task-browsing activities, to determine the main challenges encountered by the VI users while interacting with different types of websites (e.g., Facebook and email). The study results showed that the complexity of the web page’s layout could affect the learning process and task completion of a VI person. Similar findings were also reported in [23], where a complex page layout was shown to be a serious problem for website accessibility. In these studies, the authors recommended integrating VI users throughout the design process to eliminate these accessibility issues.

New web trends, such as interactive web elements, which establish an interaction between the user and the content, were found to lack accessibility for both VI and sighted users, according to Tiago et al. [24]. In their work, the authors conducted an empirical study that was focused on investigating the accessibility in new web design trends for VI users by comparing the task performance of VI and sighted users in responsive and non-responsive websites. The study revealed that there was an obvious relationship between the website accessibility and its structure, where shallower and wider navigational structures were better than deeper ones.

The accessibility of an online job application for VI users has been investigated by Lazar et al. [25]. The results demonstrate that participants with VI faced considerable obstacles while submitting their online applications. Thus, the authors provided recommendations for employers to ensure that their online employment applications are accessible and usable for all individuals, including individuals with disabilities. Another user test [26] has been conducted to evaluate the accessibility of the University of Ankara website for visually impaired students. Along with the user test, a satisfaction survey and interviews were also conducted. The results showed that although most of the evaluated tasks were completed successfully, some accessibility issues were identified, such as the lack of descriptive labels and captions, which made some pages less accessible. Students also reported that they had to ask for help from volunteers or university staff for their daily tasks. Yi [27] presented an evaluation of the accessibility of government and public agency healthcare websites in Korea through a user test. The study recruited VI and second-level sight-impaired people to perform multiple tasks with ten healthcare websites. The results revealed some issues and problems across the four dimensions of accessibility: perceivable, operable, understandable, and robust.

2.3. Accessibility Evaluation Using Mixed Methods

In the literature, several studies have examined the accessibility of a wide range of websites for VI users using a mixed-method approach that incorporates several methods, such as automated testing and expert evaluation and/or user testing methods.

Following this approach, some studies showed that the results obtained from automated and user testing were inconsistent. For example, in [28], the authors investigated the difficulties in accessing web-based educational resources provided by Thailand Cyber University (TCU) for VI users. The study employed two evaluation methods to collect data: automated testing and user testing. A total of 13 selected pages were evaluated. The results of the automated testing using AChecker and ASTEC tools showed that all selected web pages failed to meet the minimum requirements of the WCAG 2.0; however, eight VI participants rated only one of the 13 pages as inaccessible. Similarly, Kuakiatwong [29] presented an exploratory study that followed a mixed-methods approach to understand the best practices for the accessible design of academic library websites for VI users. Three library websites, two academic and one public, were tested for their usability and accessibility with six VI persons. The websites were also tested for standards-compliant code using AChecker. After analyzing the test results, the authors noticed that most of the barriers identified during the usability testing were not related to any of the errors identified by the AChecker tool; rather, the barriers were more related to semantics and navigational design. Abu-Doush et al. [30] evaluated the accessibility and usability of an e-commerce website using several methods: automatic testing using the SortSite tool, manual testing, expert evaluation, and user testing. The results showed that expert and manual evaluations discovered some accessibility and usability problems that were not detected by the automatic tool. Moreover, the results of user testing with a group of 20 VI users showed that the site had some accessibility and usability issues that negatively affected the effectiveness, efficiency, and satisfaction of users.

Moreover, several studies have evaluated a wide range of educational, governmental, and transactional websites and revealed some critical issues affecting their accessibility for VI users. For instance, Park et al. [31] presented a study in which several massive open online courses (MOOC) websites were evaluated for their accessibility using manual evaluation and user testing methods. The result of this study illustrates that some critical barriers appear to have a serious impact on the completion of the assigned tasks, which were mainly related to dropdown menus, repetitive elements, and dynamic webpage structure. Aside from educational websites, Fuglerud et al. [32] evaluated the accessibility of several prototypes of e-voting systems for users with different types of impairment (low vision, totally blind, and deaf). The study used three different methods for its evaluation purposes: automated testing, expert testing, and user testing. Each prototype was tested against two scenarios with 24 participants with different levels of impairment. As a result, many usability and accessibility issues were identified. However, the overall experience of the participants was positive. Moreover, the accessibility of nine Jordanian e-government websites by VI users was broadly studied in [33]. The study was conducted using three different approaches: questionnaire, user testing, and an expert’s evaluation. Results showed that most of the evaluated e-government websites had some accessibility issues, which made most of the tested tasks impossible to complete.

Finally, Navarrete and Luján-Mora [34] proposed a design for an open educational resources (OER) website that aims to enhance accessibility through personalization of the whole OER environment based on a user profile that includes self-identification of the user’s disability status. As a proof of concept, they conducted an accessibility test using an automatic tool and user experience (UX) test for users who had different disabilities. The results confirmed the assumptions of the proposed work that claimed the UX could be enhanced by focusing on the user’s profile.

Relevant literature clearly shows the importance of accessibility evaluations to uncover different types of issues that may negatively affect the online experiences of VI users. It also reveals that, despite the importance of the websites’ accessibility for those users, most of the evaluated websites were found to be inaccessible and had many problems that require more investigation. While previous research demonstrates the ability of automated tools to identify several technical issues, user testing is very effective at identifying additional problems from the user’s perspective. Thus, in this study, we aimed to conduct an evaluation of three e-government systems in Saudi Arabia (Faris, Noor, and iEN) using a combination of both heuristic and usability testing methods. We focused on these systems as they are particularly essential for teachers to perform their regular tasks, such as registration, grading, and human resources services. Furthermore, many services provided by these systems involve confidential information, which makes it more difficult for VI users to rely on external assistance.

3. Materials and Methods

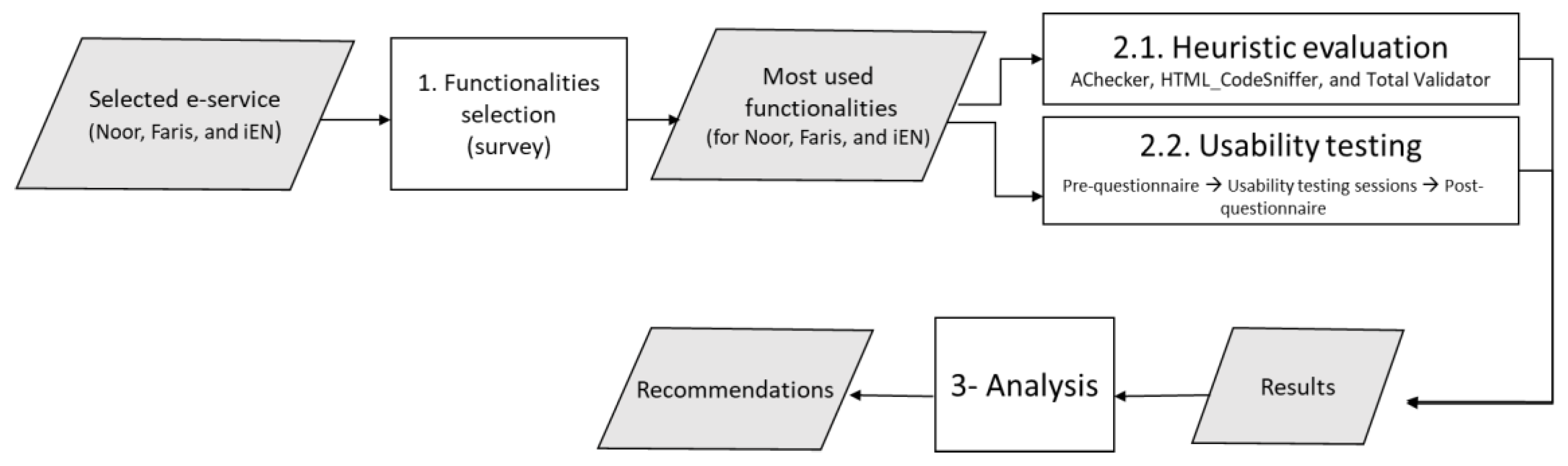

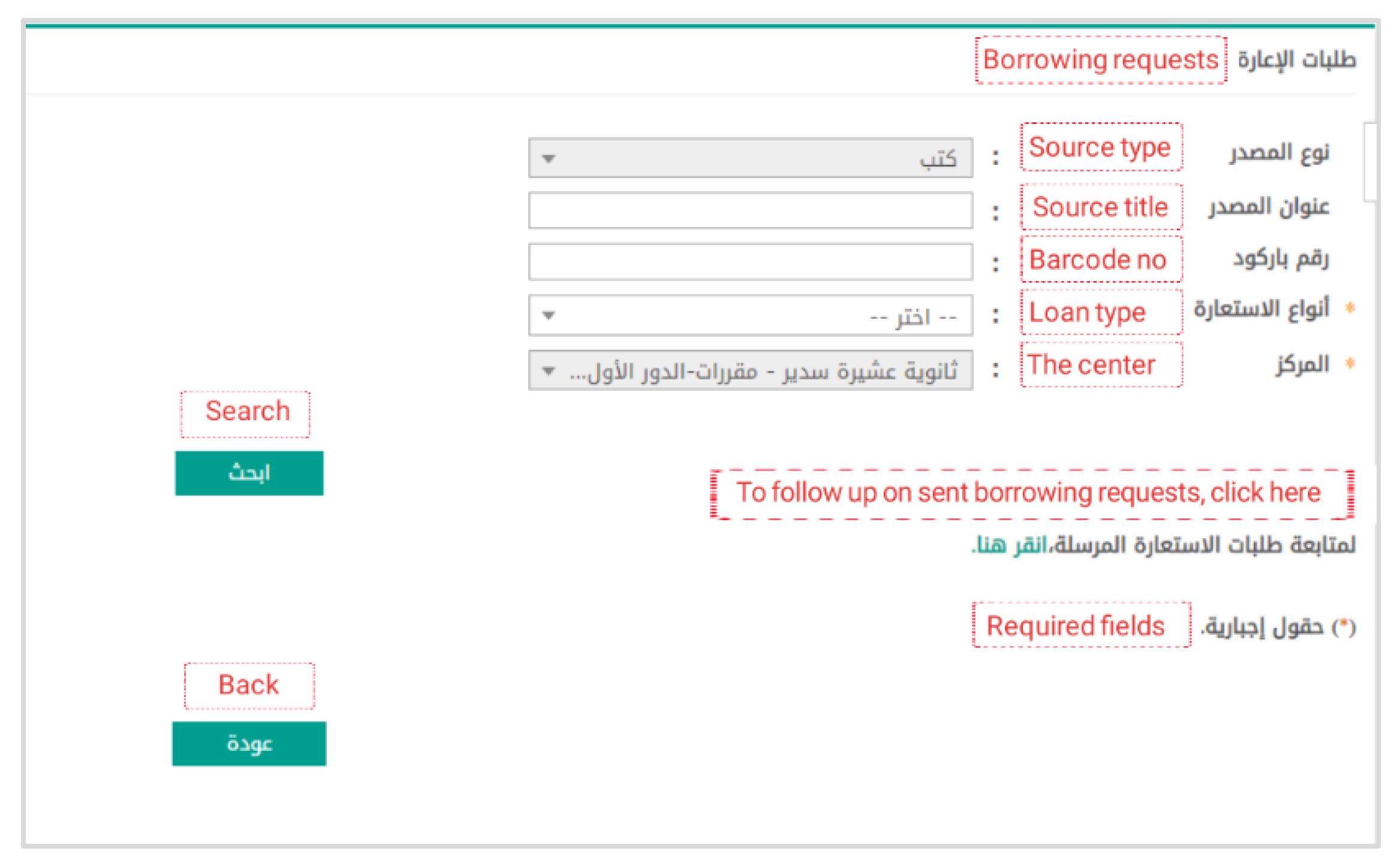

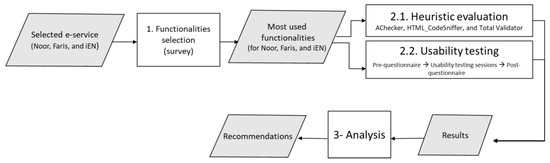

To evaluate the accessibility of the e-government systems for VI users, we first narrowed down the evaluated systems’ functionalities into the most commonly used ones. Based on the selected functionalities, we employed a heuristic evaluation approach in which we used several automated tools to assess the systems’ conformance to the WCAG 2.0 accessibility guidelines. We also performed usability testing with four VI participants. The methodology workflow is depicted in Figure 1. In the next subsection, we discuss each step in more detail.

Figure 1.

Methodology flowchart.

3.1. E-Government Systems and the Functionalities Selection Criteria

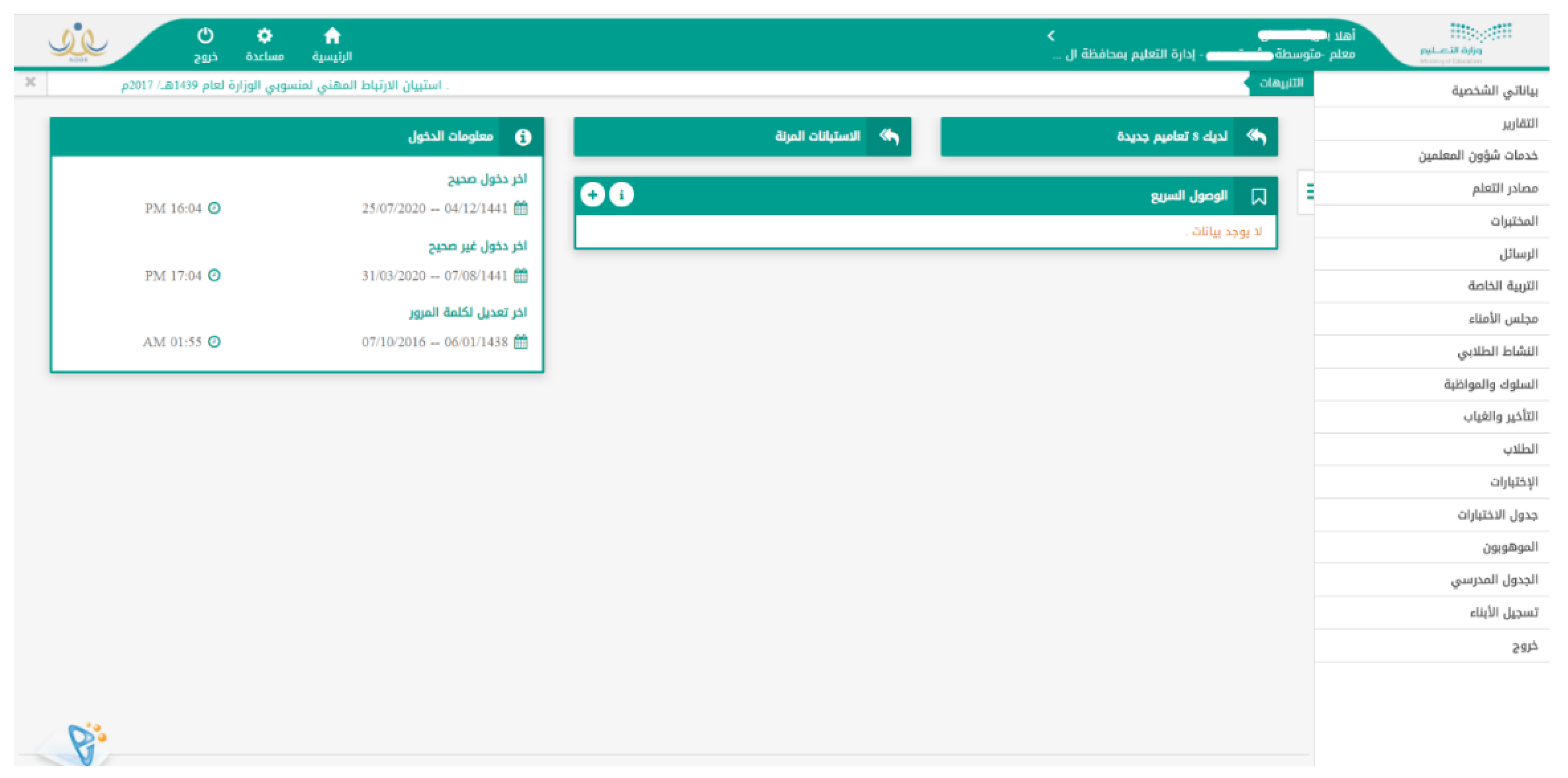

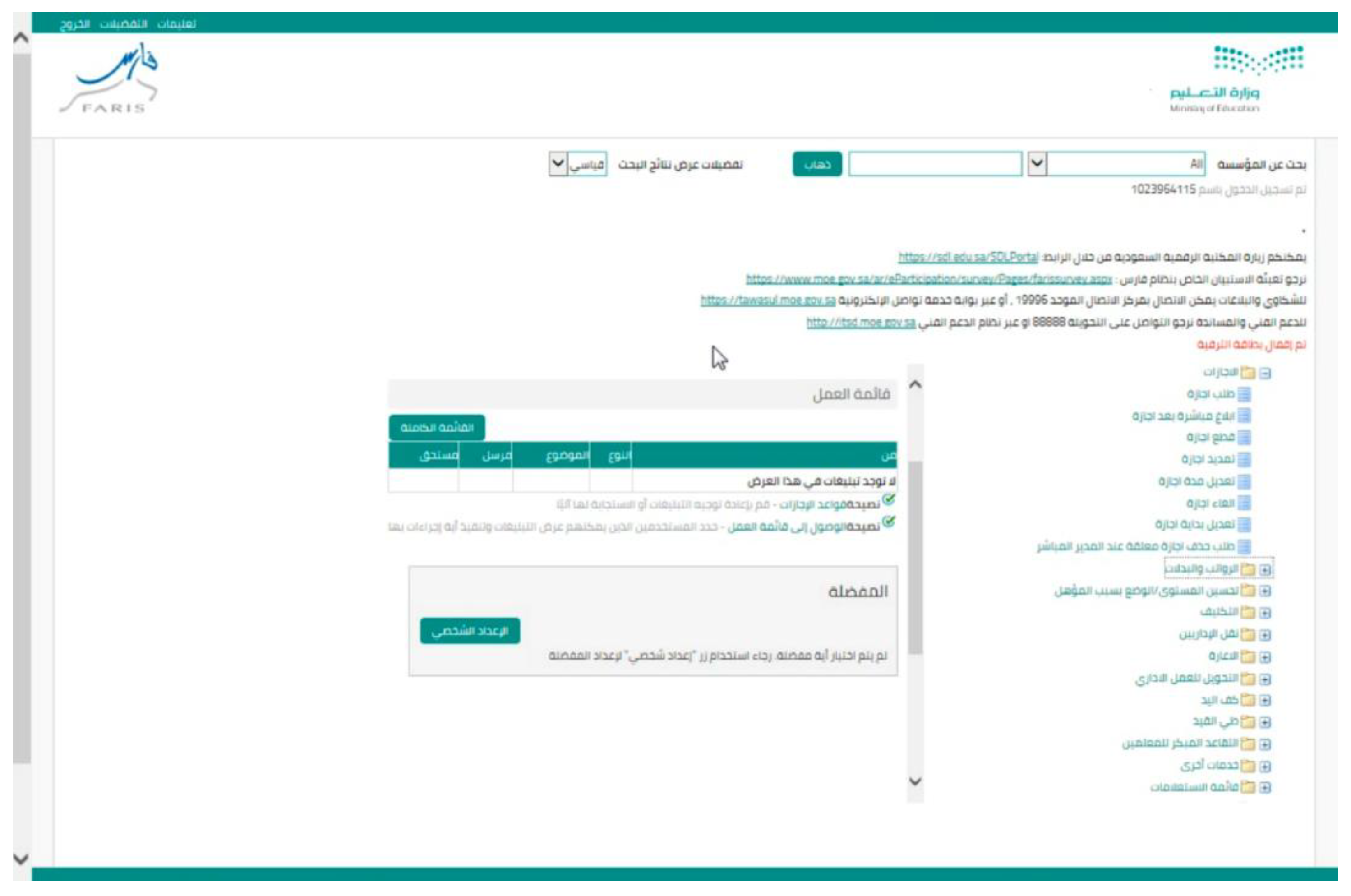

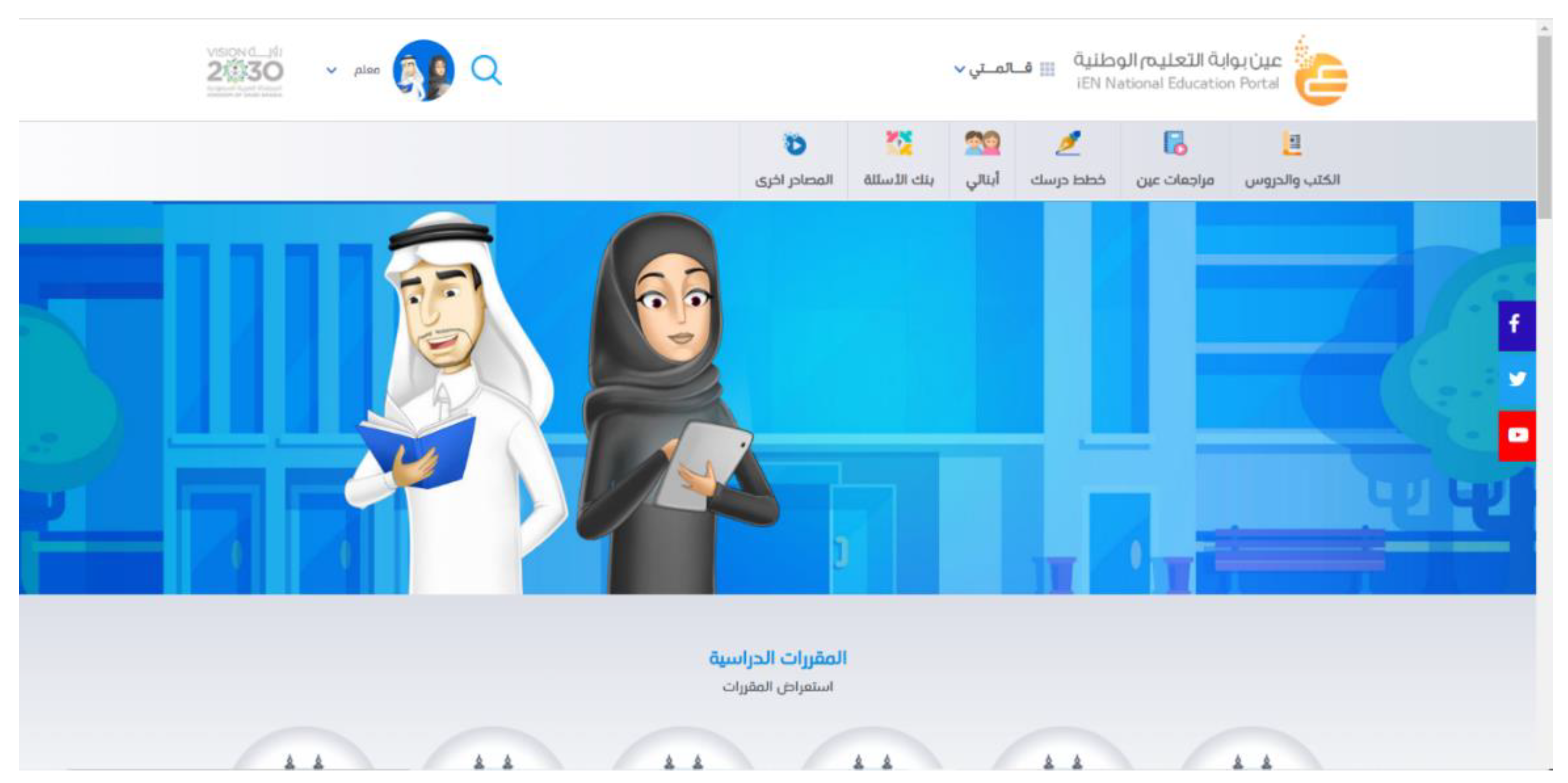

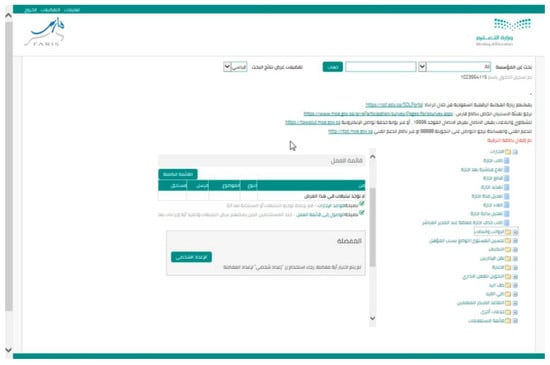

The MOE has provided over 15 systems for teachers, parents, and students in both general and higher education [35]. In this study, we opted to evaluate three main services that are mandatory for teachers and must be used to accomplish different tasks, ranging from checking their schedule to requesting leave and retirement. The selected systems were Noor, Faris, and iEN, and a screenshot for each system’s homepage is presented in Figure 2, Figure 3 and Figure 4, respectively. Noor is an educational administration management system that provides a wide range of functionalities for teachers to manage class schedules, grades, assignments, etc. Faris is a human resources information system that offers various services for teachers, such as vacation requests, salary information, and leave requests. Finally, the iEN system is an educational e-learning system that delivers online course materials, digital tools, and communication channels to facilitate the distance learning process for teachers and students.

Figure 2.

Noor homepage.

Figure 3.

Faris homepage.

Figure 4.

iEN homepage.

To understand the most important functionalities in each system, we developed a survey for teachers to rate the most commonly used functionalities in each system (see Appendix A). The survey was publicly distributed through social media and we received a total of 46 responses from teachers. For the Noor system, we found that the top three most used functionalities based on users’ rankings were: (1) entering skills/grades, (2) teachers’ affairs services, and (3) borrowing books. However, after examining the teachers’ affairs services, we found that the related functionalities were enabled only during a limited time in the year; thus, we excluded them from our test plan. Therefore, the final selected tasks were: (1) logging in, (2) entering skills/grades, and (3) borrowing books. The second task (entering skills/grades) differed depending on the grade that the participant was teaching. For elementary school teachers, the task was to enter the students’ skills throughout the semester, while for secondary school teachers, the task was to enter the students’ exam grades at the end of the semester. Accordingly, different scenarios for this task were created. As for the iEN system, we found that (1) playing interactive content, (2) searching course plans, and (3) downloading course content were the top three most used functionalities. Thus, the final selected tasks were as follows: (1) logging in, (2) playing interactive content, (3) searching course plans, and (4) downloading course content. Finally, the selected functionalities for the Faris system were (1) logging in, (2) requesting a vacation, and (3) requesting a salary identification. The final selected functionalities are illustrated in Table 1.

Table 1.

The final selected functionalities for each system.

3.2. Heuristic Evaluation Using Automated Tools

Heuristic evaluation is a method for assessing computer software usability based on specific principles. It can be done by either usability experts or through automated tools. Many automated tools can assist with identifying accessibility issues. According to [36], the effectiveness of automated tools depends on reaching a high level of three aspects: coverage, completeness, and correctness. However, the performance of a single tool can vary at achieving different levels of these aspects. As an example, SortSite and Total Validator show the highest coverage and completeness, while AChecker has high correctness. Therefore, to increase the tool’s effectiveness, the authors of [36] suggested employing different approaches. Accordingly, four evaluation tools, namely, Total Validator, HTML_CodeSniffer, SortSite, and AChecker, were adopted to perform the accessibility evaluation tests. These tools were the most commonly used tools for accessibility assessments in the reviewed studies, including [6,9,12,21].
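The benefit of combining several tools can be illustrated with a small sketch. The working definitions used here are assumptions made for illustration and may differ from the exact definitions in [36]: coverage is taken as the fraction of considered success criteria on which a tool reports at least one finding, completeness as the fraction of true violations that the tool finds, and correctness as the fraction of the tool's findings that are true violations. All data and names (`tool_metrics`, `tool_a`, `tool_b`) are hypothetical.

```python
def tool_metrics(reported, actual, all_criteria):
    """Compute (coverage, completeness, correctness) for one tool.

    reported:     set of (page, criterion) pairs flagged by the tool
    actual:       set of (page, criterion) pairs that are true violations,
                  e.g. established by a manual audit
    all_criteria: set of success criteria considered in the evaluation
    """
    true_positives = reported & actual
    covered = {criterion for _, criterion in reported} & all_criteria
    coverage = len(covered) / len(all_criteria)
    completeness = len(true_positives) / len(actual) if actual else 1.0
    correctness = len(true_positives) / len(reported) if reported else 1.0
    return coverage, completeness, correctness


# Hypothetical audit data (NOT the study's results).
criteria = {"1.1.1", "1.3.1", "1.4.3", "2.4.4", "4.1.2"}
actual = {
    ("login", "1.1.1"), ("login", "2.4.4"),
    ("grades", "1.3.1"), ("grades", "4.1.2"),
}
tool_a = {("login", "1.1.1"), ("grades", "1.3.1"), ("grades", "1.4.3")}
tool_b = {("login", "1.1.1"), ("login", "2.4.4")}

metrics_a = tool_metrics(tool_a, actual, criteria)
metrics_b = tool_metrics(tool_b, actual, criteria)
# Using several tools together corresponds to taking the union of their reports.
metrics_combined = tool_metrics(tool_a | tool_b, actual, criteria)

for name, m in [("A", metrics_a), ("B", metrics_b), ("A+B", metrics_combined)]:
    print(name, [round(x, 2) for x in m])
```

In this toy example, the union of the two reports finds more of the true violations than either tool alone, at the cost of inheriting the false positive reported by one of them.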

Total Validator is an accessibility tool that comprises an all-in-one validator. It helps to ensure that a website is accessible based on the WCAG and US Section 508 guidelines, uses valid HTML and CSS, has no broken links, and is free from spelling mistakes. Total Validator has three versions: the test version (the lowest version), which performs accessibility and HTML validations and only outputs a summary; the basic version, which also performs spell checking and link checking and provides detailed results; and the pro version, which validates an entire website or multiple websites in one go, checks the CSS against World Wide Web Consortium (W3C) standards, and checks the password-protected areas of websites. In our study, we used the basic version because it provided the required features to complete the evaluation process.

HTML_CodeSniffer, which is an open-source, online checker tool, is written in the JavaScript language; therefore, it checks the code by using the browser without the need for any server-side handling. Furthermore, it evaluates websites based on different standards, including the WCAG 2.0 and Section 508, and provides step-by-step correction methods.

The third tool was SortSite, which is a one-click website testing tool used by federal agencies, Fortune 100 corporations, and independent consultancies. The tool is available as a desktop application for Mac or Windows and is also available as a web application. It was released in 2007 and has been widely used by many entities since then. The web version assesses 10 pages of the entered URL at a time, whereas the desktop version checks only the uploaded page.

Finally, AChecker, which is an open-source automatic tool, assesses compliance with different accessibility guidelines, including Barrierefreie Informationstechnik-Verordnung (Barrier-Free Information Technology Regulation; Germany) (BITV) 1.0 (level 2), Section 508, Stanca Act, WCAG 1.0, and WCAG 2.0. Moreover, it provides three methods for checking the accessibility of a specific website: pasting a URL, uploading an HTML page, and pasting the source code of the HTML page.

To evaluate the systems, we selected the web pages of the three most used functionalities (besides the log-in task), as described in Section 3.1. Therefore, we chose the corresponding pages of the most used tasks in the three systems, as shown in Table 2.

Table 2.

Selected pages of the three systems.

3.3. Usability Testing

In this section, we describe the conducted usability testing, including the recruited participants, data collection methods, measurements, and finally, the procedure.

3.3.1. Participants

In this study, four VI teachers were recruited to participate in the evaluation. We recruited them by calling several schools that employed VI teachers (elementary, intermediate, and secondary public schools). After that, we asked each teacher individually if she was interested in participating in the study. We were not able to recruit a large number of participants for this study and only four female VI teachers volunteered to participate. However, previous usability studies have stated that three to four participants are sufficient to detect 80% of design usability problems [37,38].
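The relationship between the number of participants and the share of problems detected is often described in the usability literature with a cumulative binomial model, in which the expected proportion of problems found by n participants is 1 − (1 − p)^n, where p is the average probability that a single participant encounters a given problem. The sketch below is illustrative only: the values of p are assumptions, not figures taken from this study or from [37,38], and the function names are hypothetical.

```python
import math


def proportion_found(p, n):
    """Expected share of usability problems found by n participants.

    p: assumed average probability that one participant hits a given problem.
    """
    return 1 - (1 - p) ** n


def participants_needed(p, target):
    """Smallest n for which proportion_found(p, n) reaches `target`."""
    return math.ceil(math.log(1 - target) / math.log(1 - p))


# Assumed per-participant detection probabilities, for illustration only.
for p in (0.31, 0.35, 0.40):
    print(p, participants_needed(p, 0.80),
          [round(proportion_found(p, n), 2) for n in range(1, 6)])
```

Under this model, the number of participants required to reach a given detection rate depends strongly on p, so a small sample is more defensible when individual participants are likely to encounter most problems.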

Three of the participants were totally blind and one was partially blind. They were aged between 30 and 50, held a bachelor’s degree, and had 6 to 18 years of working experience. As for their computer skills, three participants were occasional users of computers, while one of them stated that she always used the computer. As for Internet usage rates, two participants were daily users of the Internet while the other two occasionally used the Internet. Two of the participants were teaching elementary school and the other two were teaching secondary school (Table 3).

Table 3.

Profiles of participants.

3.3.2. Data Collection

Various data collection methods were used to collect both quantitative and qualitative data. These methods included pre- and post-questionnaires and direct observation during the test sessions.

- Pre-questionnaire: A pre-questionnaire was used to collect data on the participants’ usage of the Noor, Faris, and iEN systems (see Appendix B). Participants were asked about the tasks they usually performed on the three systems and their usage rate. The pre-questionnaire also included questions about the hardware and software tools they used to access the three systems. We also asked the participants to rate the systems from different perspectives.

- Usability testing sessions: During the usability testing sessions, several types of data were collected while observing the participants performing a total of ten developed task scenarios; three each for Noor and Faris and four for iEN. These data included quantitative data, such as the time to complete the task and the task completion status. Qualitative data, which included difficulties encountered by the participants when performing the tasks and the feedback from the participants, was also recorded through written notes.

- Post-questionnaire: After performing the tasks for each system, users were asked to fill in a post-questionnaire in order to assess their satisfaction with the tested system. A separate form for each system was created (see Appendix C). Preferences information was gathered using seven five-point Likert scale questions. Furthermore, five open-ended questions were created to collect some qualitative data regarding the participants’ opinions and feelings toward the tested systems.

3.3.3. Measurements

We measured three usability attributes, namely efficiency, effectiveness, and satisfaction. The efficiency was measured using the completion time and the effectiveness was measured using the completion status. As for the satisfaction, it was assessed using different qualitative data, such as the observation notes, user comments, the open-ended questionnaire, and the Likert scale ratings.
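As an illustration of how these measurements could be computed from session records, the following minimal sketch derives a completion rate (effectiveness), a mean completion time (efficiency), and a mean Likert rating (one quantitative input to satisfaction). The record structure, the choice to average time over completed tasks only, and all sample values are assumptions made for this example; they are not the study's data or its exact procedure.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskResult:
    participant: str
    task: str
    completed: bool
    seconds: float  # time spent on the task


def effectiveness(results):
    """Share of task attempts that were completed successfully."""
    return sum(r.completed for r in results) / len(results)


def efficiency(results):
    """Mean completion time in seconds over completed attempts only.

    Returns None when no attempt was completed (assumed convention).
    """
    times = [r.seconds for r in results if r.completed]
    return mean(times) if times else None


def mean_likert(answers):
    """Mean rating over five-point Likert items (1 = lowest, 5 = highest)."""
    if not all(1 <= a <= 5 for a in answers):
        raise ValueError("Likert answers must be between 1 and 5")
    return mean(answers)


# Hypothetical records for one task (NOT the study's data).
results = [
    TaskResult("P1", "logging in", True, 120.0),
    TaskResult("P2", "logging in", False, 300.0),
    TaskResult("P3", "logging in", True, 180.0),
    TaskResult("P4", "logging in", True, 150.0),
]
likert = [4, 3, 5, 2, 4, 3, 4]  # seven five-point items from one participant

print(effectiveness(results))
print(efficiency(results))
print(round(mean_likert(likert), 2))
```

Averaging time over completed attempts only keeps abandoned tasks from distorting the efficiency figure; reporting the completion rate alongside it preserves the information about failures.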

3.3.4. Procedure

The usability testing sessions were conducted at a usability lab. The participants were asked to perform the pre-defined tasks using a laptop with speakers on which the NonVisual Desktop Access (NVDA) screen reader was installed. To access the systems, all participants were asked to use their credentials to log into the system. To observe and record the sessions, we used the Morae recorder on the participant’s laptop and the Morae observer on the observer’s laptop. The observer was sitting in the same room in which the sessions were conducted but at an adequate distance from the participant.

As for the materials, both the pre- and post-questionnaires were created using Google forms, which helped us to collect the data from the participants. As for the consent forms, they were translated into braille so participants could read and sign them independently.

To build the task scenarios, we selected the three most used functionalities in the Noor, Faris, and iEN systems (besides the log-in task, as described in Section 3.1). For the complete task scenarios, please refer to Table 4, Table 5 and Table 6.

Table 4.

Noor system task scenarios.

Table 5.

Faris system task scenarios.

Table 6.

iEN system task scenarios.

Before starting the testing sessions, participants were asked to read and sign the consent form that acknowledged that their participation was voluntary and that the session could be videotaped and used for research purposes only. After this step, pre-questionnaires with basic questions about the profile and background of each participant, as well as their usage of the tested systems, were sent to the participants’ mobiles to be filled in. After filling in the questionnaires, the aim and the procedure of the study were explained verbally to all participants. Each participant engaged in three separate test sessions (one for each system), separated by a 10 to 20 min break. The test sessions were conducted in a different order for each participant. During each test session, the moderator read aloud each scripted task scenario, one at a time, to the participant. The tasks within each test session were tested in a different order for each participant. A Morae recorder [39] was used to record the sessions on the participant’s laptop, while the Morae observer was used by the observer to watch the participant’s actions in real time. For all participants, the first task was the log-in task. The participants were allowed to express their opinions and feedback during and after the execution of tasks. The test sessions lasted between 20 and 50 min. During the testing session, the Noor system was down for almost two hours; because of this, only three participants were able to engage in the Noor test sessions.

4. Results

The results of the heuristic evaluation using the automated tools are presented in the following Section 4.1, followed by the results of the usability evaluation.

4.1. Heuristic Evaluation Using Automated Tools

In this section, we describe the results of the automatic accessibility evaluation. The analysis addressed three different aspects:

- Principles, which addressed the issues related to the four principles of the WCAG 2.0, namely, perceivable, operable, understandable, and robust. It began with the common issues in the three systems, and after that, it moved to the uncommon issues, which appeared specifically in one system.

- Tool performance, which compared the used automated tools in terms of the similarities and differences.

- System performance, which mentioned the issues identified in each of the three systems and presented the pages that had the highest number of errors.

Table 7 summarizes the accessibility evaluation results for the three automatic tools (AChecker, HTML_CodeSniffer, and Total Validator) that were applied to the three systems (Noor, Faris, and iEN). Regarding the Noor system, most of the errors were at level A (376 errors) and the rest of them (83 errors) were at level AA. Furthermore, the Faris system had 715 level A errors, 64 level AA errors, and 9 level AAA errors. The iEN system had 555 level A and 64 level AA errors. Thus, we observed that most of the errors violated even the lowest level of accessibility conformance, which reflected the failure of these systems to pass the accessibility evaluation.

Table 7.

Total number of errors based on the principles.
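The claim that most errors fall at the lowest conformance level can be checked directly from the counts quoted above. The short Python sketch below uses only the figures stated in the text; the variable and function names are illustrative.

```python
# Error counts per WCAG conformance level, as quoted in the text above.
errors = {
    "Noor": {"A": 376, "AA": 83},
    "Faris": {"A": 715, "AA": 64, "AAA": 9},
    "iEN": {"A": 555, "AA": 64},
}


def level_a_share(levels):
    """Percentage of a system's reported errors that are level A."""
    return 100 * levels["A"] / sum(levels.values())


for system, levels in errors.items():
    print(f"{system}: {level_a_share(levels):.1f}% of errors are level A")

total_a = sum(levels["A"] for levels in errors.values())
total = sum(sum(levels.values()) for levels in errors.values())
print(f"Overall: {total_a} of {total} ({100 * total_a / total:.1f}%)")
```

With these counts, level A accounts for roughly 82% of the Noor errors, 91% of the Faris errors, and 90% of the iEN errors, or 1646 of 1866 errors (about 88%) overall.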

4.1.1. Principles

In this section, we start with the issues common to the three systems. Of the four principles, perceivable was the most violated by all the systems, followed by operable, then robust. As shown in Figure 5, the errors corresponding to the perceivable principle alone represented about 73% of the total errors. This principle concerns the ability of the user to recognize the displayed information using any of their senses. Most of the perceivable errors violated two success criteria.

Figure 5.

Overall comparison of the violated principles.

Guideline 1.1 (Text Alternatives)

1.1.1: Non-text Content (Level A)

Images were used widely as links; however, these images did not have an ALT attribute. The ALT attribute provides alternative text that can be used to describe the purpose of the link. This issue is critical since screen readers cannot describe an image link that has no ALT text; accordingly, VI users will not recognize the existence or purpose of the link, which increases the time they need for browsing or performing tasks. Moreover, the absence of the ALT attribute in image tags is a serious issue when the image contains important information, such as statistical data or important announcements.
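To make this failure concrete, the following Python sketch flags images inside links that have no usable alternative text. It is an illustration only, not the logic of any of the evaluation tools used in the study; the class name and sample markup are hypothetical, and it deliberately ignores cases where a link also contains visible text.

```python
from html.parser import HTMLParser


class ImageLinkAltChecker(HTMLParser):
    """Collect <img> elements inside <a> that lack a non-empty alt attribute."""

    def __init__(self):
        super().__init__()
        self.link_depth = 0  # how many <a> elements are currently open
        self.missing = []    # src values of the offending images

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a":
            self.link_depth += 1
        elif tag == "img" and self.link_depth > 0:
            if not (attrs.get("alt") or "").strip():
                self.missing.append(attrs.get("src", "(no src)"))

    def handle_endtag(self, tag):
        if tag == "a" and self.link_depth > 0:
            self.link_depth -= 1


# Hypothetical markup: the first image link has no alt text.
sample = (
    '<a href="/grades"><img src="grades.png"></a>'
    '<a href="/home"><img src="home.png" alt="Home"></a>'
)
checker = ImageLinkAltChecker()
checker.feed(sample)
print(checker.missing)
```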

Guideline 1.3 (Adaptable)

1.3.1: Info and Relationships (Level A)

Many pages used regular text as headings and some of them had more than one h1 element. The correct usage of headings allows screen readers to identify headings and announce each one with its level, thus allowing VI users to get to the content of interest quickly. To overcome this issue, text that acts as a heading must be marked up with a heading tag, and the heading tag must not be used unless the text is intended to be a heading. Moreover, a proper h1–h6 hierarchy should be used to denote the level (importance) of the headings by making the title of the main content h1 and changing other h1 elements to a lower heading level. The remaining errors did not comply with the following success criteria.
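A simple structural check along these lines can be sketched in Python. The snippet below reports pages with more than one h1 and headings that skip a level; the function name and sample markup are hypothetical, and skipped levels are treated here as a warning sign rather than a formal WCAG failure. It cannot detect regular text that is merely styled to look like a heading.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Record the level of every h1-h6 element in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_problems(html):
    """Return a list of human-readable heading structure problems."""
    parser = HeadingOutline()
    parser.feed(html)
    problems = []
    if parser.levels.count(1) > 1:
        problems.append("more than one h1")
    for prev, cur in zip(parser.levels, parser.levels[1:]):
        if cur > prev + 1:
            problems.append(f"h{prev} followed by h{cur}")
    return problems


print(heading_problems("<h1>Noor</h1><h1>Services</h1><h3>Grades</h3>"))
```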

Guideline 3.1 (Readable)

3.1.1: Language of the Page (Level A)

The default language of many pages was declared as English, while the main language of these pages was Arabic. Moreover, the lang attribute was missing from some pages. The lang attribute is important for many reasons, including the fact that it allows screen readers to pronounce words in the appropriate accent and offers definitions using a dictionary. Therefore, the lang attribute must be present on every page and must reflect the main language of that page so that screen readers can pronounce words correctly.
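As a rough illustration, the Python sketch below reads the lang attribute of the html element and compares its primary subtag with an expected language. The function name is hypothetical, and the expected value "ar" is an assumption based on the pages being mainly in Arabic.

```python
from html.parser import HTMLParser


class LangFinder(HTMLParser):
    """Capture the lang attribute of the first <html> element."""

    def __init__(self):
        super().__init__()
        self.seen_html = False
        self.lang = None

    def handle_starttag(self, tag, attrs):
        if tag == "html" and not self.seen_html:
            self.seen_html = True
            self.lang = dict(attrs).get("lang")


def check_page_language(html, expected="ar"):
    """Return 'ok' or a short description of the language problem."""
    finder = LangFinder()
    finder.feed(html)
    if not finder.lang:
        return "missing lang attribute"
    primary = finder.lang.lower().split("-")[0]  # "ar-SA" -> "ar"
    if primary != expected:
        return f"lang is '{finder.lang}', expected '{expected}'"
    return "ok"


print(check_page_language('<html lang="en"><body></body></html>'))
```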

In the previous subsection, we discussed the common accessibility issues identified in all three systems. In addition, we were able to identify other violations of accessibility principles in some of the systems. Next, we will discuss these errors in detail.

Guideline 4.1 (Compatible)

4.1.1: Parsing (Level A)

In the iEN system, there were many duplications of the id attribute; therefore, some elements had the same id value. The duplication of id affects screen readers that depend on the id attribute to detect the relationships between different contents. Therefore, assigning a unique value to each id attribute is essential.
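Duplicate id values are straightforward to detect mechanically. The following Python sketch counts id values in a page and lists those that occur more than once; the function name and sample markup are hypothetical.

```python
from collections import Counter
from html.parser import HTMLParser


class IdCollector(HTMLParser):
    """Count how often each id value appears in the document."""

    def __init__(self):
        super().__init__()
        self.ids = Counter()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == "id" and value:
                self.ids[value] += 1


def duplicate_ids(html):
    """Return the sorted id values that are used more than once."""
    collector = IdCollector()
    collector.feed(html)
    return sorted(value for value, n in collector.ids.items() if n > 1)


print(duplicate_ids('<div id="menu"></div><ul id="menu"></ul><p id="intro"></p>'))
```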

4.1.2: Name, Role, and Value (Level A)

In the Noor system, many input elements did not have the value attribute. Specifying the name, role, and value of interactive HTML elements is important because it allows for keyboard operation and enables assistive technologies to interact with these elements.

Guideline 2.4 (Navigable)

2.4.8: Location (Level AAA)

In the Faris system, many pages located the link element outside the head section of the web page. The link element usually provides metadata and must be written inside of the head section.
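The placement rule described here can be checked with a small parser. The Python sketch below lists link elements that appear outside the head section; it is a simplified illustration with hypothetical names and sample markup, and it applies the rule exactly as stated above without distinguishing between link types.

```python
from html.parser import HTMLParser


class LinkPlacementChecker(HTMLParser):
    """Collect <link> elements that appear outside <head>."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.misplaced = []  # href values of links found outside <head>

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "link" and not self.in_head:
            self.misplaced.append(dict(attrs).get("href", "(no href)"))

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


# Hypothetical page: one stylesheet link is correctly placed, one is not.
page = (
    '<html><head><link rel="stylesheet" href="main.css"></head>'
    '<body><link rel="stylesheet" href="late.css"><p>Vacations</p></body></html>'
)
placement = LinkPlacementChecker()
placement.feed(page)
print(placement.misplaced)
```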

4.1.2. Tool Performance

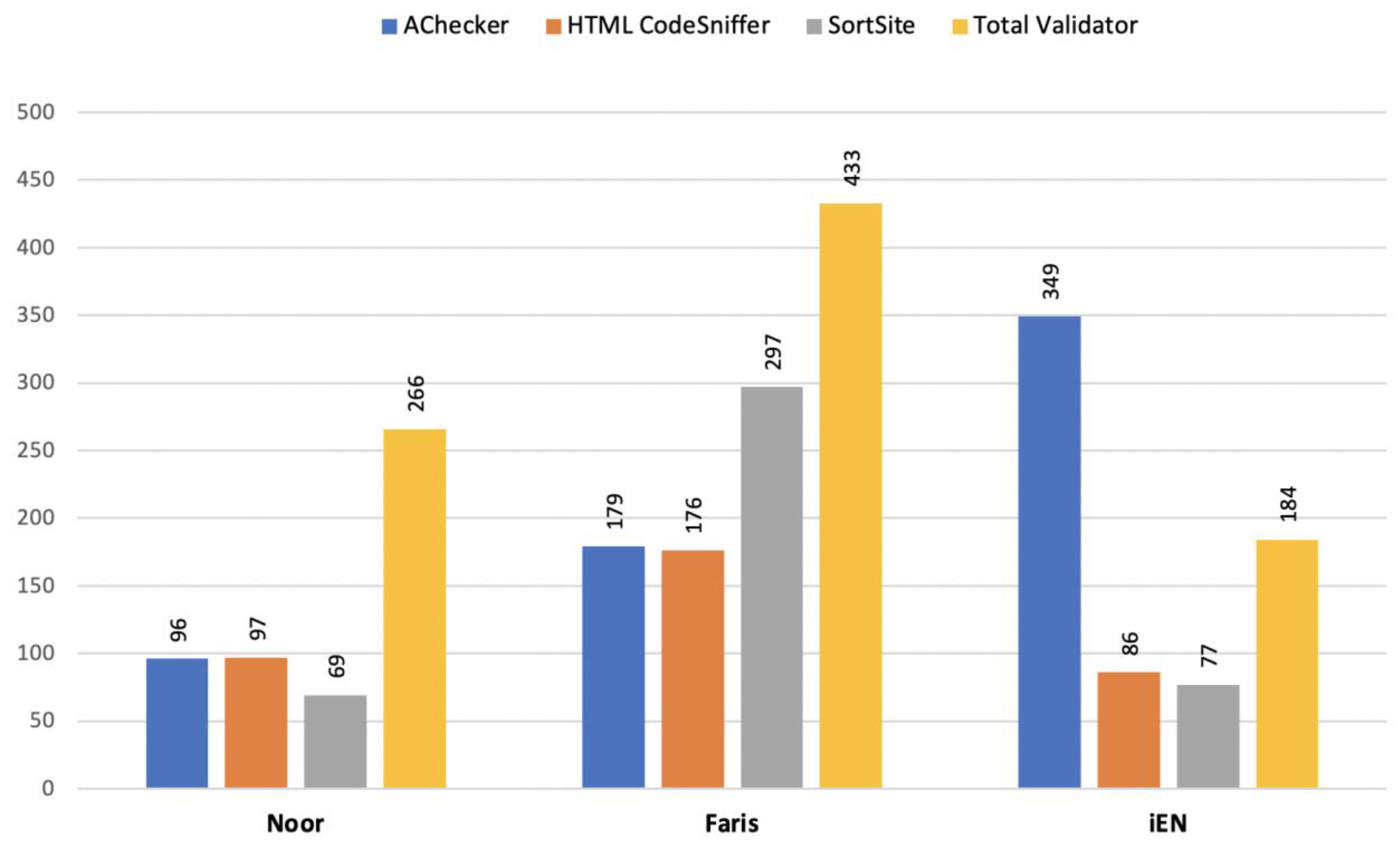

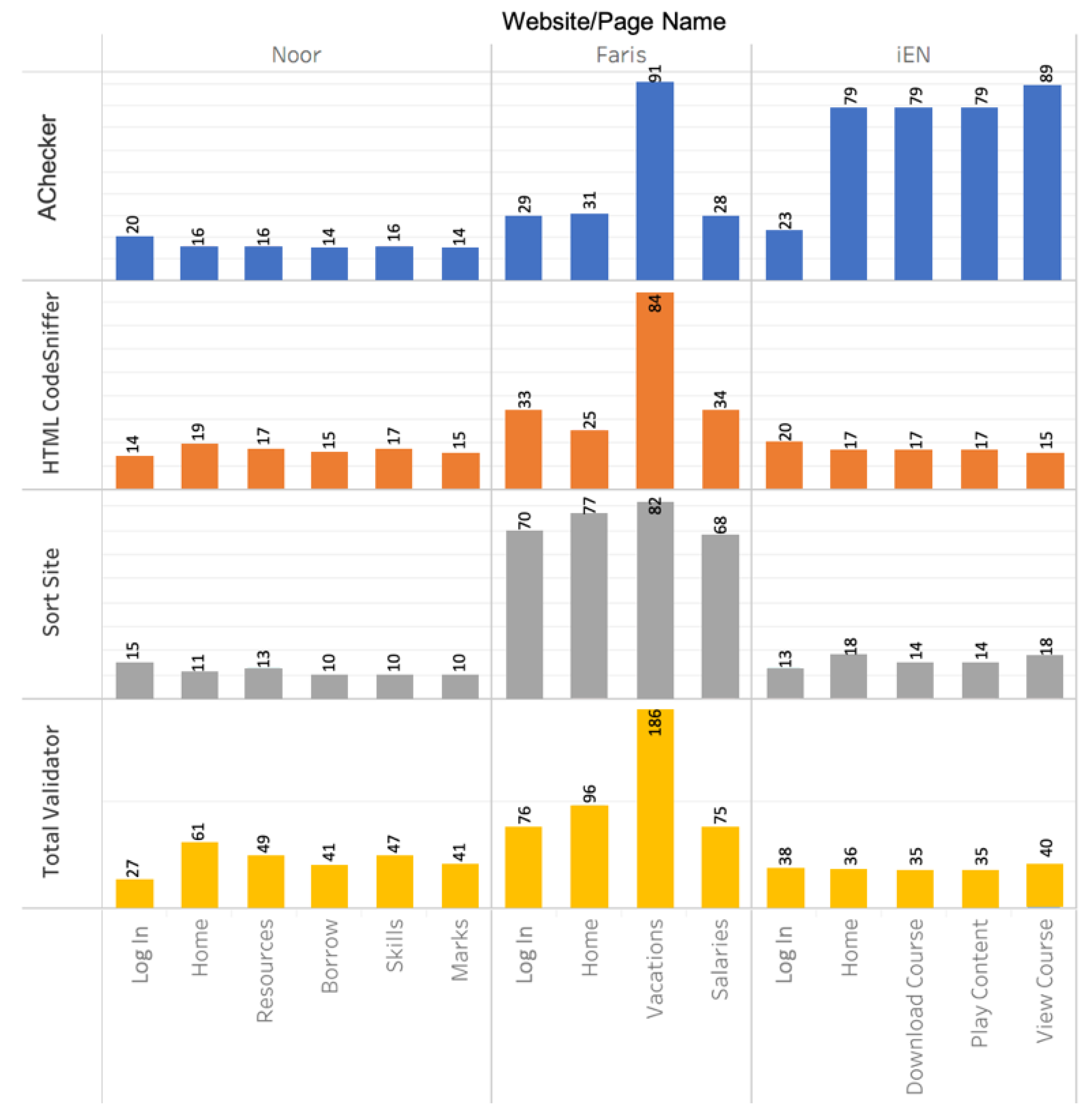

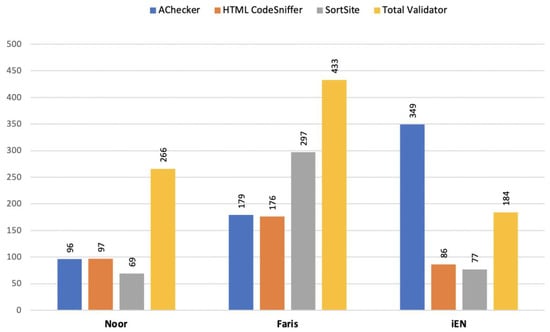

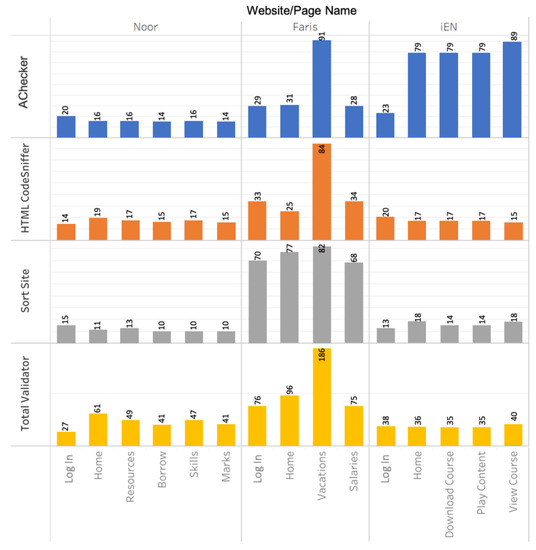

Regarding the tools’ performance, we can see from Table 8 that it differed according to the system. The performances of the AChecker and HTML_CodeSniffer tools were similar; the difference between their totals in terms of the number of detected errors did not exceed one and three errors for the Noor and Faris systems, respectively. Furthermore, the results for the HTML_CodeSniffer and SortSite tools were similar for the iEN system; the difference between their totals did not exceed nine. Generally, the performances of the three tools AChecker, HTML_CodeSniffer, and SortSite were similar. However, the Total Validator tool reported far more errors than the others; after we investigated this gap, we found that the tool counted every repeated occurrence of an error, which explained the huge numbers it generated. Figure 6 illustrates the performance of the tools for the three systems. Moreover, returning to Table 7, we can see that the AChecker tool failed to detect the violations corresponding to the robust principle for the Noor system and the operable principle for the Faris and iEN systems. Furthermore, the HTML_CodeSniffer tool did not detect any errors regarding the operable principle in the iEN system. The observed discrepancies in the performances of different tools highlighted the importance of employing different tools to increase the effectiveness of the evaluation [36].

Table 8.

Total number of errors based on the pages examined.

Figure 6.

Comparison based on the tools’ performances (total number of errors).

4.1.3. System Performance

We also addressed the systems’ performance aspect. According to Table 8, the Faris system had the highest number of errors (1085 errors), followed by iEN (696 errors), and finally, Noor (528 errors). The pages that had the most errors in each system were the Home page in the Noor system, the Vacations page in the Faris system, and the View Course page in the iEN system. This was expected, as some of these pages were quite complex with many form elements and some of them were transactional pages with many functions to be performed. Figure 7 shows the performances of the three systems; it also presents the pages that had the most errors in each system.

Figure 7.

Comparison based on the systems’ performances (number of errors).

Finally, according to the SortSite tool, the Noor and iEN systems were compatible with major browsers, such as Firefox, Safari, and Chrome; however, users who used old versions of these browsers might face minor issues while using the Faris system. It is worth mentioning that the SortSite tool provides a different form of analysis based on accessibility, compatibility, search, standards, and usability, as shown in Table 9.

Table 9.

SortSite tool results.

4.2. Usability Testing

As mentioned earlier, we started our evaluation with accessibility testing: we performed a heuristic evaluation using automated tools, and the results were discussed in the previous subsections. The next step was to perform a usability test to gain more insight into the usability and accessibility levels of the three systems for VI users. The process and results of the usability testing are discussed in the next subsections.

4.2.1. Pre-Questionnaire

In the pre-questionnaire, we asked the participants several questions regarding their experience with the three systems (Noor, Faris, and iEN). The questionnaires also gathered information about the assistive tools (hardware and/or software) that VI teachers usually use. One of the asked questions was “How often do you use the system?”. Regarding the Noor system, the four participants answered the question as rarely, occasionally, often, and always, respectively. Meanwhile, for the Faris system, two participants answered the question with always, and two of them answered with occasionally. Unfortunately, three participants did not use the iEN system and the fourth rarely used it; she was using the system to search for course plans. Moreover, she found that the system did not support the NVDA screen reader.

As for the most frequently used tasks, participants stated that they usually used Noor to enter skills/grades and check/update their personal information (which was part of the teachers’ affairs services). Moreover, requesting vacation and requesting salary identification were the most frequently used tasks in the Faris system. Finally, participants mentioned that they usually used iEN to download course content, play interactive content, and search for course plans.

Three participants indicated that they used the Noor and Faris systems with the help of someone else, such as school administration, a colleague, or a facilitator, and one said that she used the systems unaided using her computer and mobile phone. However, all participants stated that they used the iEN system with the help of someone. As for the participants’ ratings of the three systems, all participants considered them moderate to difficult to use and to learn.

Regarding the effectiveness of iEN, Noor, and Faris, most participants rated the systems as moderately effective. However, for each system, one participant answered that it was not effective.

Two participants found Noor to be accessible, while the other two found it inaccessible. Furthermore, three of the participants found Faris accessible and one found it inaccessible. Lastly, one participant found iEN to be accessible, while two found it moderately accessible and the last participant found it inaccessible.

Regarding the main barriers of the three systems, most participants stated that the main problem was that the systems did not support their screen readers, which resulted in difficulty using them.

As for the most useful features of the Noor and Faris systems, the participants liked the easy log-in process and the availability of many options and features. Regarding the iEN system, the participants liked the features that were provided for both teachers and students, such as getting the curriculum. However, one of the participants stated that the systems had no useful features that helped to perform the needed tasks.

4.2.2. Usability Testing

In this section, we present the usability testing results according to the effectiveness (task completion), efficiency (time to complete a task), and satisfaction (participants’ feelings, comments, and encountered difficulties). For all three systems, we tested many tasks on the four participants (one VI and three totally blind). However, regarding the Noor system, only three participants were able to perform the test due to system downtime.

Effectiveness

The effectiveness results are presented in Table 10. We noticed that none of the tasks were performed successfully without any help, except for only one participant in the iEN system. The participant was able to complete the log-in task without any help. Moreover, most of the participants either completed the task with help or did not complete it. This was due to many elements in the user interface being unreadable by the screen readers and/or unreachable by the tab key.

Table 10.

Frequency of the completion status for each task: completed (C), not completed (NC), or completed with help (CH).

Furthermore, the log-in task was the only one that was completed (with help) by all participants in all three systems; this might be due to it being a common task in all the systems. However, we found that the step of entering the CAPTCHA image code was not performed by any of the participants due to the screen reader’s inability to read it. Because of that, the moderator had to read the code aloud for the participants. Moreover, the participants faced difficulties while typing the password as they were not familiar with the English keyboard, so they had difficulties in locating the characters. Furthermore, the VI participants were unable to switch the language to English to enter the username and password.

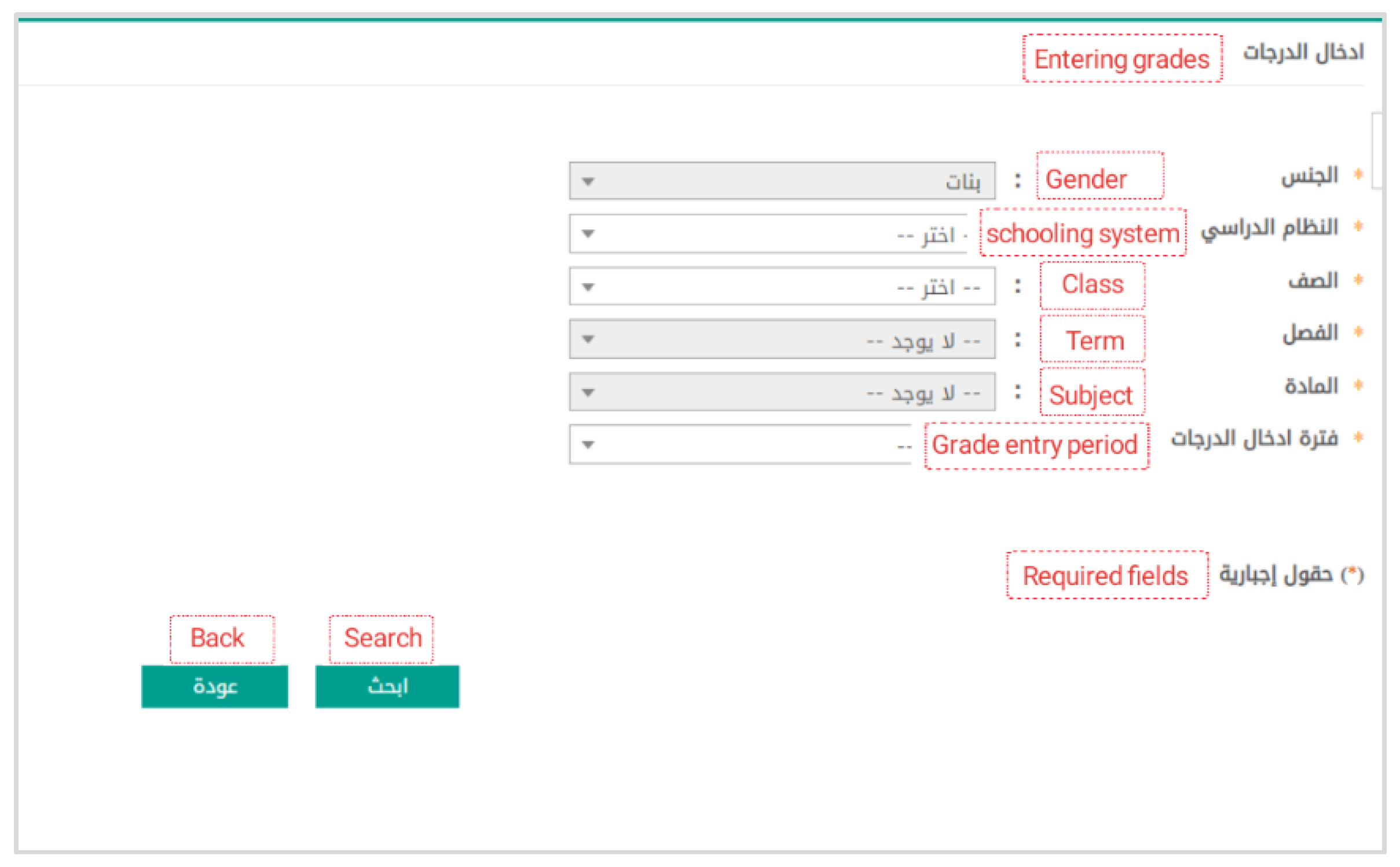

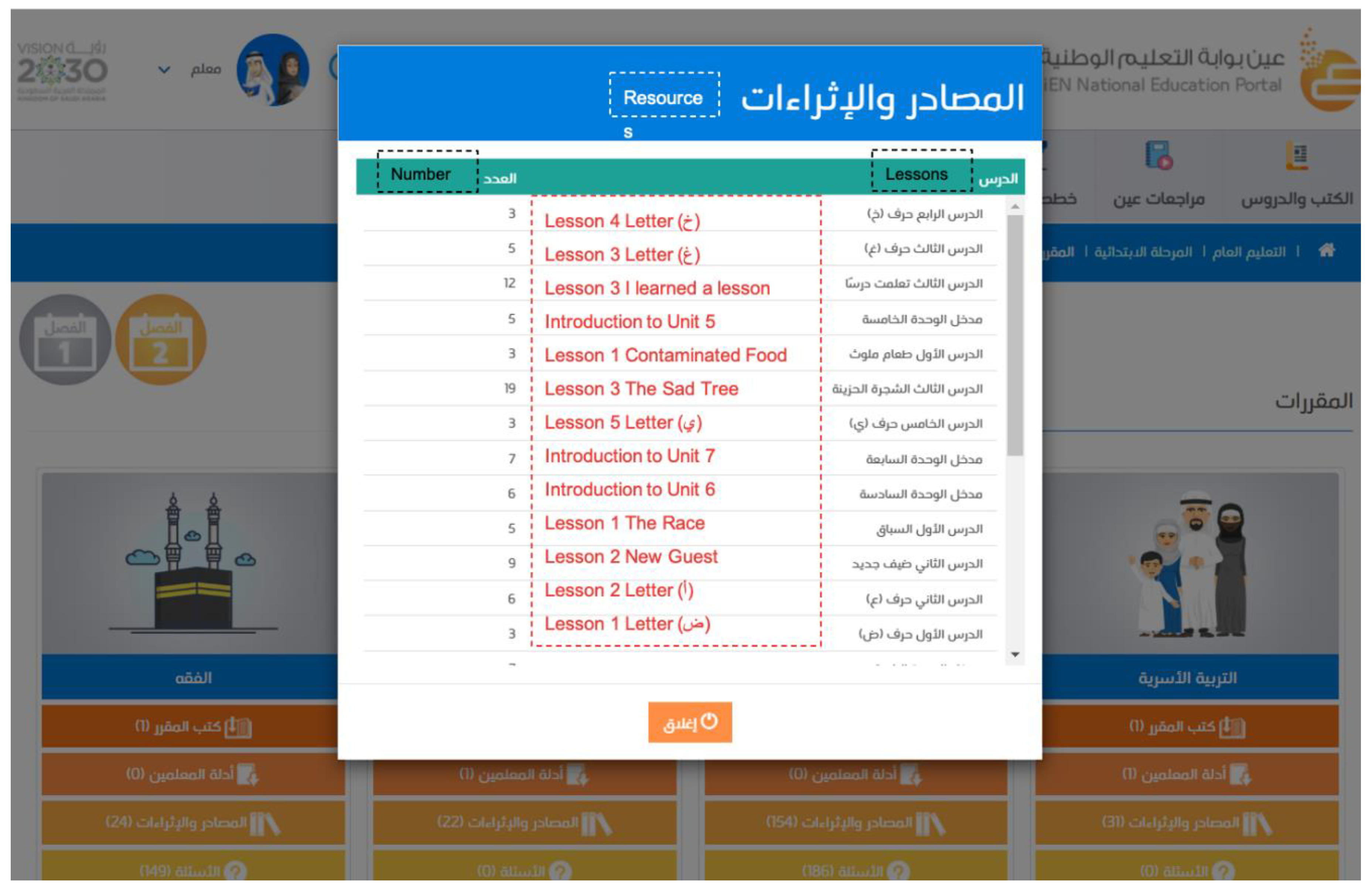

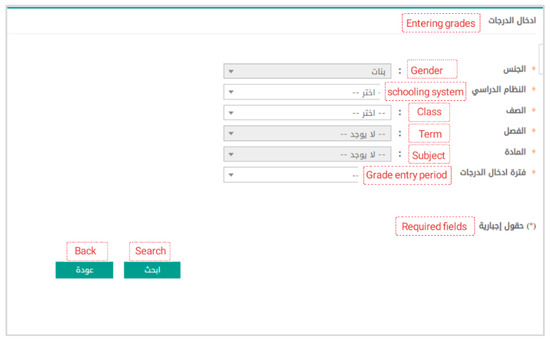

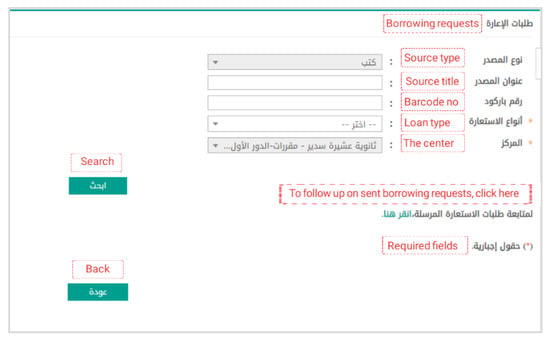

In the next paragraphs, we discuss the results of the remaining tasks that were related to each system separately. First, we show the results of the Noor system tasks. It should be noted before presenting the results that one of the participants was partially blind, so she preferred to use the mouse as the main input device during the session. The other two participants were totally blind and therefore they used only the keyboard when performing the tasks. In task 2 (enter skills/grades) and task 3 (borrow a book), the two keyboard-using participants failed to complete the task’s last step, which was the step of clicking the search button (see Figure 8 and Figure 9). This was due to the search button being unreachable by the tab key. However, the third participant, who was a mouse user and partially blind, was able to successfully complete the task. Still, it was completed after the help of the moderator, who read the unreadable dropdown menu elements and labels.

Figure 8.

Entering grades task with an overlay translation in red.

Figure 9.

Borrowing a book task with an overlay translation in red.

Regarding the Faris system tasks (“request a vacation” and “request a salary identification”), we observed a variation in the users’ performances, which may be due to the differences in their experience. However, we noticed that all participants (novice and expert) faced difficulties and needed instructions when they reached the “fill in the request form” step. According to our observations, this step had many fields that needed to be filled in, especially the start and end dates of the vacation, which used a calendar that the screen reader was not able to read. Moreover, it is worth mentioning that one of the participants failed to complete the tasks, and according to the pre-questionnaire, she had not used the Faris system before. Furthermore, in the “request a vacation” task, we found that some participants did not know the correct type of leave (some of them chose “emergency leave” when the scenario was about “sick leave”).

As for the results of the iEN system with the “download course content,” “play interactive content,” and “search for a course plan” tasks, we found the following. Only two of the participants completed the tasks. One of them was the visually impaired user and she completed the tasks with hints, while the second was one of the three blind participants and completed the tasks with help from the moderator. Moreover, two of the blind participants refused to complete the tasks.

Efficiency

The efficiency was measured using the completion time, as shown in Table 11.

Table 11.

Average completion time (CT) per task for each system in minutes.

Regarding the Noor system, it had the lowest completion time compared to the other systems. Moreover, we found that the “enter skills/grades” task had the highest completion time.

As for the Faris system, we observed that the “request a vacation” task was the most time-consuming task, with an average of 22 min. This result may encourage the developers to find alternative ways to reduce the time needed to accomplish this task. In addition, we found that the participant with good experience accomplished all the tasks in the least overall time (29 min).

In the iEN system, the VI participant completed the task in the least amount of time compared to other participants, while two blind participants took more time to try but did not complete three tasks. In addition, one participant completed all tasks in 49 min because they had previous experience in using the system.

Satisfaction

Satisfaction was assessed using observation notes, user comments, and open-ended questionnaires, as well as the quantitative data collected using Likert scale ratings. Table 12 presents the satisfaction results, where most of the participants were happy and confident during the log-in task, as it is an easy and common task. However, they were confused and anxious about the rest of the tasks. Therefore, as one of the important goals of the study was to understand and gather users’ comments and feedback, we present our collected observations during the test sessions. The aim behind this was to assess their satisfaction and to identify the barriers faced when using the three systems.

Table 12.

User feelings criteria: happy and confident (H), a little bit confused and worried (W), and afraid and not happy (A).

First, the incompatibility with the screen readers was the main issue that users mentioned in all systems, as the screen reader could not read the forms’ labels, the dropdown menu choices, or the buttons. According to our investigation, this issue can happen for two reasons. First, these features lacked some attributes, such as the “tabindex” attribute and the ALT attribute, or some elements, such as form labels. Second, some elements, such as “input,” “label,” “option,” and “select,” were used improperly and were defined within many levels of nested elements. This can be considered a very critical problem since it prevented the participants from completing the tasks successfully. We observed that because of this problem, the participants were entering arbitrary grades just to complete the “enter skills/grades” task in the Noor system and refusing to complete the last three tasks in the iEN system.
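The missing form labels mentioned above are one of the easier causes to detect automatically. The Python sketch below lists form controls that have no associated label, no enclosing label, and no ARIA naming attribute. It is a simplified illustration with hypothetical names and sample markup, not the procedure we or the automated tools followed, and it does not cover every way a control can receive an accessible name.

```python
from html.parser import HTMLParser


class LabelChecker(HTMLParser):
    """Find form controls that have no programmatic label."""

    CONTROLS = {"input", "select", "textarea"}
    # Input types that are hidden or take their name from value/alt instead.
    SKIPPED_TYPES = {"hidden", "submit", "button", "reset", "image"}

    def __init__(self):
        super().__init__()
        self.label_targets = set()  # ids referenced by <label for="...">
        self.label_depth = 0        # open <label> elements (wrapping labels)
        self.controls = []          # (tag, id, already_named)

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "label":
            self.label_depth += 1
            if attrs.get("for"):
                self.label_targets.add(attrs["for"])
        elif tag in self.CONTROLS:
            if (attrs.get("type") or "").lower() in self.SKIPPED_TYPES:
                return
            named = self.label_depth > 0 or bool(
                attrs.get("aria-label") or attrs.get("aria-labelledby")
            )
            self.controls.append((tag, attrs.get("id"), named))

    def handle_endtag(self, tag):
        if tag == "label" and self.label_depth > 0:
            self.label_depth -= 1


def unlabeled_controls(html):
    """Return (tag, id) pairs for controls without any detected label."""
    checker = LabelChecker()
    checker.feed(html)
    return [
        (tag, control_id)
        for tag, control_id, named in checker.controls
        if not named and control_id not in checker.label_targets
    ]


# Hypothetical log-in form: only the username field is labeled.
form = (
    '<label for="user">Username</label><input id="user">'
    '<input id="captcha"><select name="grade"></select>'
    '<input type="submit" value="Log in">'
)
print(unlabeled_controls(form))
```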

Second, the log-in task was a common task for all systems and could be considered as one of the easiest tasks for the participants; however, all of the users expressed their disappointment because no audible CAPTCHA alternative was provided. In the same task, we noticed that even though the labels of the forms were unreadable by the screen reader, all participants were able to complete the task.

As for the Noor system, the VI participant who used the mouse for navigation ended up using the tab key because the navigation elements were unreadable by the screen reader when using the mouse. She stated that the “Noor system should support every type of input, including the mouse because it is easier for me.” Another participant suggested changing the navigation of the Noor system to a hierarchy and replacing it with fewer options and elements. Moreover, due to the problem caused by the improper setting of “tabindex” in task 2 and task 3, the participants appeared a little frustrated because they had to navigate a long list of navigation elements, corporate icons, site searches, and other elements before arriving at the main content, and they did this every time they needed to start the task over.

The Faris system contains private information, such as salaries, status, and vacations, which led some participants to feel uncomfortable, especially during the “request a salary identification” task. Moreover, the participants appeared to be a little disappointed because most pages were laid out as tables. When the screen reader began to read a table, it said “row 1, column 1,” and then started reading the content; however, some of the participants did not wait until the screen reader had finished and instead jumped quickly to the next element. Thus, we think some of them needed training on using screen readers. Furthermore, we noticed that experience played a big role in the confidence of the participants.

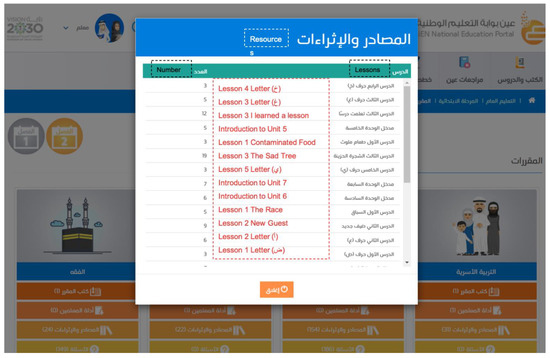

As for the iEN system, we noticed that the participants were not satisfied with the “play interactive content” task as the drop-down lists appeared after each selection (see Figure 10). This problem could be solved by displaying a new page to select the desired topic. Moreover, the similarity between the names of the functions could lead to a wrong selection during the task’s performance.

Figure 10.

Play interactive content task.

Overall, the participants showed a positive willingness to accomplish the tasks by themselves, and thus preserve their privacy.

4.2.3. Post-Questionnaire

After the usability testing session, the moderator asked the participants to fill out the post-session questionnaire to measure the users’ satisfaction and collect their suggestions for the three systems. Regarding the Noor system, only three participants filled in the form because, as we mentioned before, one of the participants could not perform the tasks due to the unforeseen downtime of the Noor system. The participants were asked to rate the three systems based on seven statements using a five-point Likert scale (totally disagree to totally agree).

Two participants said that they wanted to use the three systems again; however, the other two participants stated that they did not want to use the iEN system.

One of the participants stated that she did not face any problem with the screen readers when using the Noor and Faris systems, and it is worth mentioning that this participant was partially blind. The rest of the participants stated that they had problems, while one of them was neutral, i.e., she did not have an opinion.

As for the ease of use, the answers varied; half of the participants stated that they found the systems easy to use or they were neutral about that, while the other half found the systems difficult to use.

Most of the participants said that they totally agreed that they needed someone to help them to use the three systems, while few of the participants had a neutral feeling about that for the Noor and Faris systems. Moreover, one participant did not agree that she needed someone to help her to use the iEN system.

Furthermore, when we asked the participants if they thought they would learn to use the three systems quickly, the answers ranged between “totally agree” and “neutral” regarding the Noor and Faris systems. However, all participants answered “totally disagree” and “disagree” regarding the iEN system. About half of the participants stated that they felt confident while using the systems; however, three of them did not feel confident regarding the iEN system. All participants agreed that they needed to learn a lot of things before they could get going with the systems.

As for the open-ended questions, when we asked about what they liked least about the systems, all participants said that they did not like how the systems did not support screen readers. As for the things they liked, one participant said she liked the easy login process in the Noor and Faris systems. Another said that she liked to use the two systems to “be independent and maintain the privacy of my information.” However, no participants liked anything regarding the iEN system.

The participants stated three reasons that would make them use the systems again, which were: maintaining privacy, training, and independence.

Finally, all participants emphasized the importance of achieving high levels of accessibility in the three systems and considering designing the systems for screen readers’ compatibility. Another important issue that was identified by participants was to provide suitable training for VI teachers to help them use the systems with the same level of independence and privacy as anyone else.

5. Discussion

An important observation we found was that the partially blind participant made fewer errors compared to the totally blind participants. On the other hand, the participants showed a positive willingness while using the systems to accomplish their tasks unaided. However, Table 13 presents a list of some of the difficulties faced by the participants while using the three systems.

Table 13.

Difficulty descriptions and their reasons.

Table 14 shows some of the most frequent accessibility issues identified in the Noor, Faris, and iEN systems with some recommendations for resolving them.

Table 14.

Common accessibility issues and suggested corrections.

6. Conclusions

The Saudi government has recently been focusing on the usability and accessibility issues of e-government systems [40,41]. Systems such as Noor, Faris, and iEN are some of the most rapidly developed and used e-government systems by teachers. Consequently, in this study, we investigated the degree of accessibility VI teachers had in accessing and using these systems based on a mixed-methods approach. The usability testing was done on four participants (three totally blind and one visually impaired teacher). In addition, four automated tools, namely, AChecker, HTML_CodeSniffer, SortSite, and Total Validator, were utilized in this study.

Theoretically, we adopted a mixed-methods approach that combined automated testing and user testing. This approach was similar to that of previous research, such as [24,25,26], and proved to be an effective methodology for highlighting the major accessibility issues faced by VI users.

Practically, the results obtained from the usability testing sessions showed that none of the systems supported screen readers effectively, which was the main issue reported by all participants. This issue can happen for many reasons, such as missing HTML attributes or improper usage of some elements. Regarding automated tools, the evaluation helped us to identify the most important accessibility issues of the three systems. We found that the perceivable principle was the most violated principle by all systems, followed by operable, and then robust. The errors corresponding to the perceivable principle alone represented around 73% of the total errors. Moreover, we observed that most of the errors violated even the lowest level of accessibility conformance, which reflected the failure of these systems to pass the accessibility evaluation.

The limitations of this study include the small number of VI participants, which reflects the difficulty of finding VI teachers who were able to use computers with screen readers efficiently. Another limitation was the number of functions covered in each system, since some functions were time-bound (open only on specific dates in the year).

Therefore, we propose the following recommendations to enhance the usability and accessibility of the three systems.

- Usability Recommendations:

- The systems need to be more accessible and usable for any type of screen readers and input devices.

- The CAPTCHA image can be replaced with a verification message that is delivered to the user’s phone, thus preserving privacy.

- Categorizing the elements of the main menu based on user-related tasks will help with reducing the needed steps to do a certain task. Furthermore, adding a breadcrumb trail makes the return to the previous page much easier.

- Accessibility Recommendations:

Different solutions were proposed by the tools; therefore, here we will present some of the proposed solutions to solve the previously mentioned problems.

- Providing alternative text for all non-text content (1.1.1: Non-text Content).

- Specifying the right structure, such as using h1–h6 to identify the headings (1.3.1: Info and Relationships).

- Defining the default language of the website by using the language attribute `<html lang="…">` (3.1.1: Language of Page).

- Determining the name and role of the components to enable keyboard operations (4.1.2: Name, Role, and Value).

- Putting all link elements in the head section of the document (2.4.8: Location).

- Assigning a unique value to each id attribute (4.1.1: Parsing).

Future work will involve conducting a large-scale usability study with VI participants. Furthermore, we plan to revisit the system for another heuristic evaluation using other automated tools and with evaluations from accessibility experts. Our future work will also involve evaluating the accessibility of other e-government systems in the educational sector.

Author Contributions

Idea, methodology, writing and reviewing, D.A.; writing and reviewing, H.A. (Hend Alkhalifa); writing and reviewing, H.A. (Hind Alotaibi); literature review, accessibility test and usability study, R.A.; analysis, accessibility test and usability study, N.A.-M.; literature review, accessibility test and usability study, S.A.; analysis, accessibility test and usability study, H.T.B.; analysis, accessibility test and usability study, A.M.A. All authors have read and agreed to the published version of the manuscript.

Funding

The authors wish to extend their appreciation to the Research Center for Humanity, Deanship of Scientific Research at King Saud University for funding this research (group no. HRGP-1-19-03).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Functionalities Selection Survey

- General Information:

  - Your name ______________________________________________

  - What grades are you currently teaching?
    - ◦ Elementary
    - ◦ Intermediate
    - ◦ Secondary

  - Condition
    - ◦ Sighted
    - ◦ Blind
    - ◦ Partially blind

  - Do you use Noor, Faris, and iEN independently, or do you need assistance when using them?
    - ◦ I use them independently
    - ◦ I need an assistant

  - If you need assistance, then for what exactly?

    _____________________________________________________________

- Noor System

  - On a scale from 1 to 6, rank the services you use in the Noor system from the most to the least used, where number 1 represents the most used service.

Table A1. Rank the services you use in the Noor system.

| | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| Teacher affairs | ◦ | ◦ | ◦ | ◦ | ◦ | ◦ |
| Learning resources | ◦ | ◦ | ◦ | ◦ | ◦ | ◦ |
| Labs | ◦ | ◦ | ◦ | ◦ | ◦ | ◦ |
| Exams | ◦ | ◦ | ◦ | ◦ | ◦ | ◦ |
| Talents | ◦ | ◦ | ◦ | ◦ | ◦ | ◦ |
| Skills | ◦ | ◦ | ◦ | ◦ | ◦ | ◦ |

- Faris System

  - On a scale from 1 to 6, rank the services you use in the Faris system from the most to the least used, where number 1 represents the most used service.

Table A2. Rank the services you use in the Faris system.

| | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| Vacations | ◦ | ◦ | ◦ | ◦ | ◦ | ◦ |
| Salaries and allowances | ◦ | ◦ | ◦ | ◦ | ◦ | ◦ |
| Labs | ◦ | ◦ | ◦ | ◦ | ◦ | ◦ |
| Exams | ◦ | ◦ | ◦ | ◦ | ◦ | ◦ |
| Talents | ◦ | ◦ | ◦ | ◦ | ◦ | ◦ |
| Skills | ◦ | ◦ | ◦ | ◦ | ◦ | ◦ |

- iEN System

Table A3. Rank the services you use in the iEN system.

| | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| Vacations | ◦ | ◦ | ◦ | ◦ | ◦ | ◦ |
| Salaries and allowances | ◦ | ◦ | ◦ | ◦ | ◦ | ◦ |
| Qualifications | ◦ | ◦ | ◦ | ◦ | ◦ | ◦ |
| Assignments | ◦ | ◦ | ◦ | ◦ | ◦ | ◦ |
| Inquiries | ◦ | ◦ | ◦ | ◦ | ◦ | ◦ |
| Transfer | ◦ | ◦ | ◦ | ◦ | ◦ | ◦ |

Appendix B. Pre-Questionnaires

Usability Evaluation Testing: Pre-test Interview Questions [42].

- General Information

  1. Age
     - ◦ 20–30
     - ◦ 30–40
     - ◦ 40–50
     - ◦ 50–60
     - ◦ over 60

  2. Education
     - □ Bachelor’s degree
     - □ Master’s degree
     - □ Doctorate

  3. How long have you been teaching?

     ____ years

  4. What grades are you currently teaching?
     - □ Elementary
     - □ Intermediate
     - □ Secondary–terms
     - □ Secondary–courses

- Computer skills

  5. How often do you use your personal computer?

     | Never | Rarely | Occasionally | Frequently | Very Frequently |

  6. How often do you browse the Internet?

     | Never | Rarely | Occasionally | Frequently | Very Frequently |

- Experience with the Noor system

  7. How often do you use the Noor system?

     | Never | Rarely | Occasionally | Frequently | Very Frequently |

  8. What are the main tasks you perform using the Noor system?

     ____________________________________________________________

  9. Do you use the Noor system by yourself? If no, who helps you?
     - ◦ Yes
     - ◦ No, ----------------

     If yes, how do you usually access Noor (phone/tablet/desktop)? Do you use any assistive technology (devices/apps)? What do you use most?

     ____________________________________________________________

  10. How do you rate the ease-of-use of the Noor system?

      | (1) | (2) | (3) | (4) | (5) |
      |---|---|---|---|---|
      | Very difficult | | | | Very easy |

  11. How do you rate the ease-of-learning of the Noor system?

      | (1) | (2) | (3) | (4) | (5) |
      |---|---|---|---|---|
      | Very difficult | | | | Very easy |

  12. How do you rate the efficacy of the Noor system?

      | (1) | (2) | (3) | (4) | (5) |
      |---|---|---|---|---|
      | Inefficient | | | | Efficient |

  13. How well does the Noor system support the tasks you want to achieve?

      | (1) | (2) | (3) | (4) | (5) |
      |---|---|---|---|---|
      | Little support | | | | Good support |

  14. What are the main features in the Noor system, if any, that you find helpful when you perform your common tasks?

      ____________________________________________________________

      ____________________________________________________________

      ____________________________________________________________

- Experience with the Faris system

  1. How often do you use the Faris system?

     | Never | Rarely | Occasionally | Frequently | Very Frequently |

  2. What are the main tasks that you perform using the Faris system?

     ____________________________________________________________

  3. Do you use the Faris system by yourself? If no, who helps you?
     - ◦ Yes
     - ◦ No, ----------------

     If yes, how do you usually access Faris (phone/tablet/desktop)? Do you use any assistive technology (devices/apps)? What do you use most?

     ____________________________________________________________

  4. How do you rate the ease-of-use of the Faris system?

     | (1) | (2) | (3) | (4) | (5) |
     |---|---|---|---|---|
     | Very difficult | | | | Very easy |

  5. How do you rate the ease-of-learning of the Faris system?

     | (1) | (2) | (3) | (4) | (5) |
     |---|---|---|---|---|
     | Very difficult | | | | Very easy |

  6. How do you rate the efficacy of the Faris system?

     | (1) | (2) | (3) | (4) | (5) |
     |---|---|---|---|---|
     | Inefficient | | | | Efficient |

  7. How well does the Faris system support the tasks you want to achieve?

     | (1) | (2) | (3) | (4) | (5) |
     |---|---|---|---|---|
     | Little support | | | | Good support |

  8. What are the main features in the Faris system, if any, that you find helpful when you perform your common tasks?

     ____________________________________________________________

     ____________________________________________________________

     ____________________________________________________________

- Experience with the iEN system

  1. How often do you use the iEN system?

     | Never | Rarely | Occasionally | Frequently | Very Frequently |

  2. What are the main tasks you perform using the iEN system?

     ____________________________________________________________

  3. Do you use the iEN system by yourself? If no, who helps you?
     - ◦ Yes
     - ◦ No, ----------------

     If yes, how do you usually access iEN (phone/tablet/desktop)? Do you use any assistive technology (devices/apps)? What do you use most?

     ____________________________________________________________

  4. How do you rate the ease-of-use of the iEN system?

     | (1) | (2) | (3) | (4) | (5) |
     |---|---|---|---|---|
     | Very difficult | | | | Very easy |

  5. How do you rate the ease-of-learning of the iEN system?

     | (1) | (2) | (3) | (4) | (5) |
     |---|---|---|---|---|
     | Very difficult | | | | Very easy |

  6. How do you rate the efficacy of the iEN system?

     | (1) | (2) | (3) | (4) | (5) |
     |---|---|---|---|---|
     | Inefficient | | | | Efficient |

  7. How well does the iEN system support the tasks you want to achieve?

     | (1) | (2) | (3) | (4) | (5) |
     |---|---|---|---|---|
     | Little support | | | | Good support |

  8. What are the main features of the iEN system, if any, that you find helpful when you perform your common tasks?

     ____________________________________________________________

     ____________________________________________________________

     ____________________________________________________________

Appendix C. Post-Questionnaires

Usability Evaluation Testing: Post-Test Questionnaires [43].

| | Strongly Disagree | Somewhat Disagree | Neutral | Somewhat Agree | Strongly Agree |
|---|---|---|---|---|---|
| 1. I think I would like to use this tool frequently. | ◦ | ◦ | ◦ | ◦ | ◦ |
| 2. I found the tool unnecessarily complex. | ◦ | ◦ | ◦ | ◦ | ◦ |
| 3. I thought the tool was easy to use. | ◦ | ◦ | ◦ | ◦ | ◦ |
| 4. I think that I would need the support of a technical person to be able to use this system. | ◦ | ◦ | ◦ | ◦ | ◦ |
| 5. I found that the various functions in this tool were well integrated. | ◦ | ◦ | ◦ | ◦ | ◦ |
| 6. I thought there was too much inconsistency in this tool. | ◦ | ◦ | ◦ | ◦ | ◦ |
| 7. I would imagine that most people would learn to use this tool very quickly. | ◦ | ◦ | ◦ | ◦ | ◦ |
| 8. I found the tool very cumbersome to use. | ◦ | ◦ | ◦ | ◦ | ◦ |
| 9. I felt very confident using the tool. | ◦ | ◦ | ◦ | ◦ | ◦ |
| 10. I needed to learn a lot of things before I could get going with this tool. | ◦ | ◦ | ◦ | ◦ | ◦ |

- In general, what do you like the most about the system?

- In general, what do you like the least about the system?

- Do you have any recommendations to improve the system?

- Do you have any further comments?
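The ten rated statements above follow the wording of the System Usability Scale (SUS). As an illustration only, the sketch below assumes the standard SUS scoring scheme, with responses coded 1 (Strongly Disagree) to 5 (Strongly Agree); this appendix does not state how responses were scored, and the function name `sus_score` is hypothetical.

```python
def sus_score(responses):
    """Return the SUS score (0-100) for one participant.

    `responses` holds ten integers from 1 (Strongly Disagree) to
    5 (Strongly Agree), in questionnaire order (items 1 to 10).
    """
    if len(responses) != 10:
        raise ValueError("expected exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for index, response in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 are positively worded: contribute (response - 1).
            total += response - 1
        else:
            # Items 2, 4, 6, 8, 10 are negatively worded: contribute (5 - response).
            total += 5 - response
    # Each item contributes 0-4, so the sum ranges 0-40; scale it to 0-100.
    return total * 2.5
```

Under this scheme, a participant who answers Neutral to every item receives a score of 50, and the alternating wording of the items keeps uniform agreement from inflating the result.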

References

- Gronlund, A.; Horan, T. Developing a generic framework for e-Government. Commun. Assoc. Inf. Syst. 2005, 15, 713–729.

- Fang, Z. E-government in digital era: Concept, practice, and development. Int. J. Comput. Internet Manag. 2002, 10, 1–22.

- Ministry of Education—Help Offered to Differently Abled Persons. Available online: https://pbs.twimg.com/profile_images/882666205/mohe_new_logo_400x400.png (accessed on 8 October 2020).

- ISO 9241-161:2016(en), Ergonomics of Human-System Interaction—Part 161: Guidance on Visual User-Interface Elements. Available online: https://www.iso.org/obp/ui/#iso:std:iso:9241:-161:ed-1:v1:en (accessed on 28 April 2019).

- Validator, T. Web Accessibility Checker | Total Validator. Available online: https://www.totalvalidator.com/ (accessed on 24 October 2020).

- HTML_CodeSniffer. Available online: https://squizlabs.github.io/HTML_CodeSniffer/ (accessed on 24 October 2020).

- Website Error Checker: Accessibility & Link Checker—SortSite. Available online: https://www.powermapper.com/products/sortsite/ (accessed on 24 October 2020).

- IDI Web Accessibility Checker: Web Accessibility Checker. Available online: https://achecker.ca/checker/index.php (accessed on 24 October 2020).

- Bakhsh, M.; Mehmood, A. Web Accessibility for Disabled: A Case Study of Government Websites in Pakistan. In Proceedings of the 2012 10th International Conference on Frontiers of Information Technology, Islamabad, Pakistan, 17–19 December 2012; pp. 342–347.

- Nielsen, J. Ten Usability Heuristics. Available online: http://www.nngroup.com/articles/ten-usability-heuristics/ (accessed on 8 October 2005).

- Kuzma, J.; Dorothy, Y.; Oestreicher, K. Global e-government Web Accessibility: An Empirical Examination of EU, Asian and African Sites. In Proceedings of the Second International Conference on Information and Communication Technologies and Accessibility, Hammamet, Tunisia, 7–9 May 2009.

- TAW Tools. Available online: https://www.tawdis.net/proj (accessed on 8 October 2020).

- Mohd Isa. Assessing the Usability and Accessibility of Malaysia E-Government Website. Am. J. Econ. Bus. Adm. 2011, 3, 40–46.

- Acosta, T.; Acosta-Vargas, P.; Luján-Mora, S. Accessibility of eGovernment Services in Latin America. In Proceedings of the 2018 International Conference on eDemocracy & eGovernment (ICEDEG), Ambato, Ecuador, 4–6 April 2018; pp. 67–74.

- Pribeanu, C.; Fogarassy-Neszly, P.; Pătru, A. Municipal web sites accessibility and usability for blind users: Preliminary results from a pilot study. Univers. Access Inf. Soc. 2014, 13, 339–349.

- Abanumy, A.; Al-Badi, A.; Mayhew, P. e-Government Website accessibility: In-depth evaluation of Saudi Arabia and Oman. Electron. J. E-Gov. 2005, 3, 99–106.

- Al-Khalifa, H.S. Heuristic Evaluation of the Usability of e-Government Websites: A Case from Saudi Arabia. In Proceedings of the 4th International Conference on Theory and Practice of Electronic Governance, Beijing, China, 25–28 October 2010; ACM: New York, NY, USA, 2010; pp. 238–242.

- Al-Khalifa, H.S.; Baazeem, I.; Alamer, R. Revisiting the accessibility of Saudi Arabia government websites. Univers. Access Inf. Soc. 2017, 16, 1027–1039.

- Al-Faries, A.; Al-Khalifa, H.S.; Al-Razgan, M.S.; Al-Duwais, M. Evaluating the accessibility and usability of top Saudi e-government services. In Proceedings of the 7th International Conference on Theory and Practice of Electronic Governance—ICEGOV ’13, Seoul, Korea, 22–25 October 2013; ACM Press: New York, NY, USA, 2013; pp. 60–63.

- Al Mourad, M.B.; Kamoun, F. Accessibility Evaluation of Dubai e-Government Websites: Findings and Implications. JEGSBP 2013, 1–15.

- Karaim, N.A.; Inal, Y. Usability and accessibility evaluation of Libyan government websites. Univers. Access Inf. Soc. 2019, 18, 207–216.

- Sulong, S.; Sulaiman, S. Exploring Blind Users’ Experience on Website to Highlight the Importance of User’s Mental Model. In User Science and Engineering; Abdullah, N., Wan Adnan, W.A., Foth, M., Eds.; Springer: Singapore, 2018; Volume 886, pp. 105–113. ISBN 9789811316272.

- Swierenga, S.J.; Sung, J.; Pierce, G.L.; Propst, D.B. Website Design and Usability Assessment Implications from a Usability Study with Visually Impaired Users. In Universal Access in Human-Computer Interaction. Users Diversity; Stephanidis, C., Ed.; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6766, pp. 382–389. ISBN 978-3-642-21662-6.

- do Carmo Nogueira, T.; Ferreira, D.J.; de Carvalho, S.T.; de Oliveira Berretta, L.; Guntijo, M.R. Comparing sighted and blind users task performance in responsive and non-responsive web design. Knowl. Inf. Syst. 2019, 58, 319–339.

- Lazar, J.; Olalere, A.; Wentz, B. Investigating the Accessibility and Usability of Job Application Web Sites for Blind Users. J. Usabil. Stud. 2012, 7, 68–87.