Abstract

Recently, many data analysts have faced an insufficiency of historical observations in real situations. To address this problem, a self-starting forecasting process can be used. A self-starting forecasting process continuously updates the base models as new observations are recorded, which helps to mitigate the inaccurate predictions caused by insufficient historical observations. This study compared the properties of several exponentially weighted moving average (EWMA) methods as base models for the self-starting forecasting process. EWMA methods are among the most widely used forecasting techniques because of their strong performance and computational efficiency. We compared the performance of a self-starting forecasting process using different existing EWMA methods under various simulation scenarios and on real case datasets. Based on these comparisons, this study provides a guideline for determining which EWMA method works best for the self-starting forecasting process.

1. Introduction

A time series is a sequence of observations, typically measured at successive, uniformly spaced points in time. Time series arise in many situations, including the daily closing values of the stock market, manufacturing processes, the health status of patients, and economic indicators [1]. Using such time series data, forecasting future events has been of considerable interest in various fields, including control charts [2,3,4,5], health care surveillance [6,7,8], inventory control [9], stock market prediction [10,11,12], pandemic occurrence prediction [13], and electricity demand forecasting [14]. In these industrial areas, accurate forecasting of future time series values helps establish more effective decisions and management policies. To achieve satisfactory forecasting performance, a number of time series forecasting methods have been proposed, including exponentially weighted moving average (EWMA; [15,16,17,18]), autoregressive integrated moving average (ARIMA; [19]), generalized autoregressive conditionally heteroskedastic (GARCH; [20]), and vector autoregression (VAR; [21,22]) methods. In recent years, neural network-based approaches [23,24] have been widely applied to time series forecasting tasks [25,26,27]. Among these time series forecasting methods, this study focuses on the EWMA model.

The EWMA model assigns different weights to individual observations collected over time, with more recent observations weighted more heavily than remote ones; hence, the dynamics of a time series can be effectively reflected in the forecasting model. Furthermore, the EWMA model has a recursive form, which makes it computationally efficient. Finally, EWMA models do not require any distributional assumptions or prior knowledge about the time series [1,28]. Because of these advantages, a number of EWMA models have been proposed, including the single exponential smoothing (single ES; [15,16]) model, double exponential smoothing (double ES; [17]) model, triple exponential smoothing (triple ES; [18]) model, and two-stage exponentially weighted moving average (two-stage EWMA; [29,30]) model. Section 2.1 describes these existing EWMA models in more detail.

To ensure satisfactory forecasting performance of EWMA models, sufficient historical time series observations must be accumulated. However, many data analysts face an insufficiency of historical observations in real situations [31]. For example, predicting the demand for newly launched products is difficult because there are no sales records for these products. Likewise, the prognosis of a manufacturing system's status is difficult immediately after preventive maintenance because meaningful process data have not yet accumulated. Accordingly, the insufficiency of historical observations has been regarded as one of the challenging problems in time series data analysis [28].

To address the insufficiency of initial time series data, self-starting approaches [32,33,34] can be incorporated into the EWMA model. In the self-starting EWMA modeling process, an initial EWMA model is constructed using only a small number of initial time series observations, and the model is continuously updated as each new observation is recorded. In this way, the forecasting performance of the EWMA model can improve over time because historical records continuously accumulate during the forecasting process. In addition, the self-starting forecasting process can accommodate dynamically changing, time-varying patterns because the forecasting model is updated each time the most recently recorded observation is added to the data. Section 2.2 presents more details on the self-starting forecasting process.

The main purpose of this study was to examine the characteristics of EWMA models as base forecasting models for the self-starting forecasting process. Although various properties of existing EWMA models have been thoroughly examined in previous studies, their characteristics as base models for self-starting forecasting have not been systematically analyzed. Hence, to the best of our knowledge, this study is the first attempt to comprehensively investigate the properties of EWMA models as base models of the self-starting forecasting process.

The remainder of this paper is organized as follows. In Section 2, we describe the characteristics of existing EWMA models and the self-starting forecasting process. In Section 3, we present a simulation study that compares the methods under various scenarios with only a small amount of initial time series data. In Section 4, we present comparative results on real case data, and Section 5 presents concluding remarks.

2. Background

2.1. Exponentially Weighted Moving Average Model

In 1956, the single ES model was first proposed [15] to forecast demand in inventory control systems. The single ES model assumes that the time series data, Y1, Y2, …, Yt, have no trend or seasonal pattern, and the level factor at time t, $\ell_t$, is given by

$$\ell_t = \alpha Y_t + (1-\alpha)\,\ell_{t-1}, \tag{1}$$

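where $\ell_1$ is the initial level factor computed from the initial observation, and α is a smoothing parameter between 0 and 1. Equation (1) can be rewritten as follows:

$$\ell_t = \alpha \sum_{i=0}^{t-2} (1-\alpha)^{i}\, Y_{t-i} + (1-\alpha)^{t-1}\,\ell_1. \tag{2}$$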

Thus, the single ES model can be regarded as a weighted average of the current and all past observations. Because the weight of an observation is multiplied by an additional factor (1 − α), which is smaller than one for any α in (0, 1), for every step further into the past, the weights decay geometrically and the current observation receives more weight than past observations. Finally, the forecast s steps ahead of time t is given by

$$\hat{Y}_{t+s} = \ell_t. \tag{3}$$
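As a minimal sketch of Equations (1) to (3), the Python code below computes the single ES level recursively and also evaluates the weighted-sum form of Equation (2) to confirm that both give the same level. It assumes the initial level is the first observation ($\ell_1 = Y_1$), a common choice that may differ from the exact initialization used in this study; the function names are illustrative only.

```python
from typing import List, Sequence


def single_es_levels(y: Sequence[float], alpha: float) -> List[float]:
    """Level factors from the recursion l_t = alpha * Y_t + (1 - alpha) * l_{t-1}.

    Assumption (illustrative): the initial level is the first observation.
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie in (0, 1)")
    levels = [float(y[0])]
    for obs in y[1:]:
        levels.append(alpha * obs + (1.0 - alpha) * levels[-1])
    return levels


def single_es_forecast(y: Sequence[float], alpha: float, s: int) -> float:
    """Every horizon s >= 1 receives the same flat forecast, the last level l_t."""
    if s < 1:
        raise ValueError("s must be a positive horizon")
    return single_es_levels(y, alpha)[-1]


def unrolled_level(y: Sequence[float], alpha: float) -> float:
    """The same level as a geometrically weighted sum (assumes l_1 = Y_1)."""
    t = len(y)
    weighted = sum(alpha * (1.0 - alpha) ** i * y[t - 1 - i] for i in range(t - 1))
    return weighted + (1.0 - alpha) ** (t - 1) * y[0]


if __name__ == "__main__":
    series = [10.0, 12.0, 11.0, 13.0]
    print(single_es_forecast(series, alpha=0.5, s=3))  # 12.0
    print(abs(unrolled_level(series, 0.5) - single_es_levels(series, 0.5)[-1]) < 1e-12)  # True
```

Because the forecast ignores s, the sketch makes the flat-forecast limitation discussed next easy to see.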

As shown in Equation (3), single ES forecasts all future observations as a constant value. Hence, this method might not yield satisfactory prediction performance in many real situations, where time series change dynamically over time. To accommodate a time-varying pattern with a linear trend, the double ES model was proposed [17]. The double ES model assumes that the time series, Y1, Y2, …, Yt, exhibits a linear trend with no seasonal pattern. In this model, the level factor and trend factor at time t, denoted as $\ell_t$ and $b_t$, respectively, are calculated by the following equations:

$$\ell_t = \alpha Y_t + (1-\alpha)\,(\ell_{t-1} + b_{t-1}), \tag{4}$$

$$b_t = \beta\,(\ell_t - \ell_{t-1}) + (1-\beta)\,b_{t-1}, \tag{5}$$

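where $\ell_1$ and $b_1$ are the initial level and trend factors computed from the initial observations, and 0 < α < 1, 0 < β < 1. The parameter α is the smoothing parameter for the level factor, and β is the smoothing parameter for the trend factor. The forecast s steps ahead of time t is given by

$$\hat{Y}_{t+s} = \ell_t + s\,b_t. \tag{6}$$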

The double ES model has worked successfully for forecasting time series with a constant trend; however, it does not work properly for time series whose patterns vary over time, such as those with changing trends or seasonal variations.
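The double ES level and trend recursions, together with the straight-line forecast, can be sketched in Python as follows. The initialization $\ell_1 = Y_1$ and $b_1 = Y_2 - Y_1$ is an assumption (a common choice, and one of the options listed in the NIST/SEMATECH e-Handbook of Statistical Methods); it shows why two observations are enough to start the model. The function names are illustrative.

```python
from typing import Sequence, Tuple


def double_es_state(y: Sequence[float], alpha: float, beta: float) -> Tuple[float, float]:
    """Run the double ES recursions over y and return the final (level, trend).

    Assumed initialization: l_1 = Y_1 and b_1 = Y_2 - Y_1.
    """
    if len(y) < 2:
        raise ValueError("double ES needs at least two observations")
    level, trend = float(y[0]), float(y[1] - y[0])
    for obs in y[1:]:
        prev_level = level
        # l_t = alpha * Y_t + (1 - alpha) * (l_{t-1} + b_{t-1})
        level = alpha * obs + (1.0 - alpha) * (prev_level + trend)
        # b_t = beta * (l_t - l_{t-1}) + (1 - beta) * b_{t-1}:
        # the trend is smoothed from successive *estimated levels*
        trend = beta * (level - prev_level) + (1.0 - beta) * trend
    return level, trend


def double_es_forecast(y: Sequence[float], alpha: float, beta: float, s: int) -> float:
    """Straight-line extrapolation: Y_hat_{t+s} = l_t + s * b_t."""
    level, trend = double_es_state(y, alpha, beta)
    return level + s * trend


if __name__ == "__main__":
    line = [1.0, 2.0, 3.0, 4.0, 5.0]
    print(double_es_forecast(line, alpha=0.3, beta=0.2, s=3))  # approximately 8.0
```

On a noise-free linear series the assumed initialization already holds the exact slope, so the forecast follows the line; with noisy early observations, the same initialization propagates the noise into every later trend update.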

To account for seasonal variations, Winters [18] proposed the triple ES model to forecast time series with both a linear trend and seasonality. Unlike the aforementioned methods, the triple ES method additionally requires the period and the seasonal factors to be estimated from historical data. This model is composed of three factors: the level factor, trend factor, and seasonal factor at time t, denoted as $\ell_t$, $b_t$, and $c_t$, respectively. Each factor is expressed as

$$\ell_t = \alpha\,(Y_t - c_{t-L}) + (1-\alpha)\,(\ell_{t-1} + b_{t-1}), \tag{7}$$

$$b_t = \beta\,(\ell_t - \ell_{t-1}) + (1-\beta)\,b_{t-1}, \tag{8}$$

$$c_t = \gamma\,(Y_t - \ell_t) + (1-\gamma)\,c_{t-L}, \tag{9}$$

where the initial level, trend, and seasonal factors are estimated from the historical data, and 0 < α < 1, 0 < β < 1, 0 < γ < 1. The parameter α is the smoothing parameter for the level factor, β is for the linear trend factor, γ is for the seasonal factor, and L represents the period. The forecast s steps ahead of the current time t (for s ≤ L) can be computed as follows:

$$\hat{Y}_{t+s} = \ell_t + s\,b_t + c_{t+s-L}. \tag{10}$$

If a time series has a seasonal pattern whose variation increases over time, the multiplicative triple ES method is more appropriate than the additive model described above. The calculation procedure of the multiplicative method is almost the same as that of the additive model except for some minor modifications: in the multiplicative method, $Y_t - c_{t-L}$ in Equation (7) and $Y_t - \ell_t$ in Equation (9) are replaced with $Y_t / c_{t-L}$ and $Y_t / \ell_t$, respectively.
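The sketch below implements the additive triple ES recursions and, through a flag, the multiplicative variant just described. Following the simulation study in Section 3, which plugs in ground-truth values, the period and the initial level, trend, and seasonal factors are passed in as known inputs rather than estimated. The multiplicative forecast $(\ell_t + s\,b_t)\,c$ is the usual Holt-Winters form and is an assumption here, because the text above specifies only the changes to Equations (7) and (9); the function name is illustrative.

```python
from typing import List, Sequence


def triple_es_forecast(
    y: Sequence[float],
    alpha: float,
    beta: float,
    gamma: float,
    period: int,
    init_level: float,
    init_trend: float,
    init_season: Sequence[float],
    s: int,
    multiplicative: bool = False,
) -> float:
    """Update level, trend, and seasonal factors over y; return the s-step forecast.

    init_season[k] is the seasonal factor for phase k (time index mod period)
    in the cycle preceding y[0]; it is treated as known, not estimated.
    """
    if len(init_season) != period:
        raise ValueError("need exactly one initial factor per phase")
    season: List[float] = [float(c) for c in init_season]
    level, trend = float(init_level), float(init_trend)
    for t, obs in enumerate(y):
        k = t % period  # season[k] currently holds c_{t-L}
        prev_level = level
        deseasonalized = obs / season[k] if multiplicative else obs - season[k]
        level = alpha * deseasonalized + (1 - alpha) * (prev_level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
        detrended = obs / level if multiplicative else obs - level
        season[k] = gamma * detrended + (1 - gamma) * season[k]
    # Most recent factor for the target's phase (equals c_{t+s-L} when s <= L).
    c = season[(len(y) - 1 + s) % period]
    if multiplicative:
        return (level + s * trend) * c
    return level + s * trend + c


if __name__ == "__main__":
    factors = [1.0, -1.0, 2.0, -2.0]
    y = [10.0 + factors[t % 4] for t in range(12)]
    print(triple_es_forecast(y, 0.3, 0.1, 0.2, 4, 10.0, 0.0, factors, s=1))  # approximately 11.0
```

The sketch only forecasts well because the true period and seasonal factors are supplied; with two initial observations and no prior knowledge, these inputs would have to be guessed, which is the difficulty raised in the next paragraph.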

However, it is not easy to estimate these parameters accurately when historical data are insufficient or when prior knowledge about the time series is unavailable. Thus, the triple ES model might not be an appropriate base model for the self-starting forecasting process when initial historical observations are insufficient. In addition, these traditional EWMA models assume that future observations will follow a pattern similar to that of past observations. However, in various fields, such as signal processing, the time-varying patterns of future observations are unknown and can differ considerably from historical patterns [29,30]. Consequently, because of this assumption, these traditional models often produce forecasts that are biased relative to the true future observations.

To address this drawback of the traditional EWMA models, Ryu and Han proposed the two-stage EWMA model, which mimics the double ES model [29,30]. The two-stage EWMA model can be formulated with three factors: a level factor, an adjustment factor, and a drift factor. In the first step, the level factor at time t can be computed as follows:

where . Then, the adjustment factor at time t, , can be estimated as follows:

where $D_t$ is the first-order difference between the observations at times t and t−1, defined as follows:

$$D_t = Y_t - Y_{t-1}.$$

In addition, . Finally, we estimate the drift factors of time t by

where . The final forecast for s further times from the current time t can be computed by the following equation:

The drift factor plays a role similar to that of the trend factor in the double ES model. However, the drift factor is based on the difference between two successive observations, whereas the trend factor is based on the difference between the estimated level factors at two successive time points; hence, the drift factor can reflect dynamically changing patterns more accurately. Moreover, this method includes the adjustment factor to alleviate the effects of sudden and unexpected changes in pattern. Hence, the two-stage EWMA method can be expected to outperform the double ES method.
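To make this contrast concrete, the sketch below compares the raw ingredient of the drift factor, the first-order difference $Y_t - Y_{t-1}$, with the level difference $\ell_t - \ell_{t-1}$ and trend $b_t$ of the double ES model on a hypothetical series with a sudden level shift. It does not implement the two-stage EWMA itself, whose full equations are not reproduced here; the double ES initialization $\ell_1 = Y_1$, $b_1 = Y_2 - Y_1$ and the function name are assumptions.

```python
from typing import List, Sequence, Tuple


def compare_changes(
    y: Sequence[float], alpha: float, beta: float
) -> List[Tuple[int, float, float, float]]:
    """Return (t, Y_t - Y_{t-1}, l_t - l_{t-1}, b_t) for 0-based t = 1, ..., len(y) - 1."""
    level, trend = float(y[0]), float(y[1] - y[0])  # assumed double ES initialization
    rows = []
    for t in range(1, len(y)):
        prev_level = level
        level = alpha * y[t] + (1 - alpha) * (prev_level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
        rows.append((t, y[t] - y[t - 1], level - prev_level, trend))
    return rows


if __name__ == "__main__":
    # Hypothetical series: flat at 5.0, then a jump to 8.0 at 0-based index 10.
    series = [5.0] * 10 + [8.0] * 10
    for row in compare_changes(series, alpha=0.5, beta=0.5)[8:13]:
        print(row)
```

At the jump, the first-order difference registers the full shift of 3.0 immediately and returns to 0.0 on the next step, whereas the level difference captures only 1.5 (α times the jump) and the trend keeps growing for one more step (0.75, then 0.9375), so the double ES level later overshoots 8.0.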

2.2. Self-Starting Forecasting Process with EWMA Model

To ensure satisfactory performance of these EWMA models, sufficient historical time series observations should be prepared beforehand. However, in many real situations, only a small number of observations are available. One possible solution to this problem is a self-starting approach, in which forecasting and model updating are performed simultaneously as each new time series observation is added to the training data. With this approach, the base EWMA model is built without requiring a large set of initial observations. In addition, the models can effectively accommodate time-varying patterns because the base EWMA models are continuously updated as new observations are recorded. It should be noted that other forecasting methods, including ARIMA, GARCH, and VAR, cannot be used in the self-starting forecasting process: these methods estimate a number of parameters, and estimating them requires more time series observations than there are parameters [35,36]. Conversely, as described in Section 2.1, only a small number of observations (two) are required to estimate the initial factors of EWMA models; therefore, we consider only EWMA models as base models for the self-starting forecasting process.

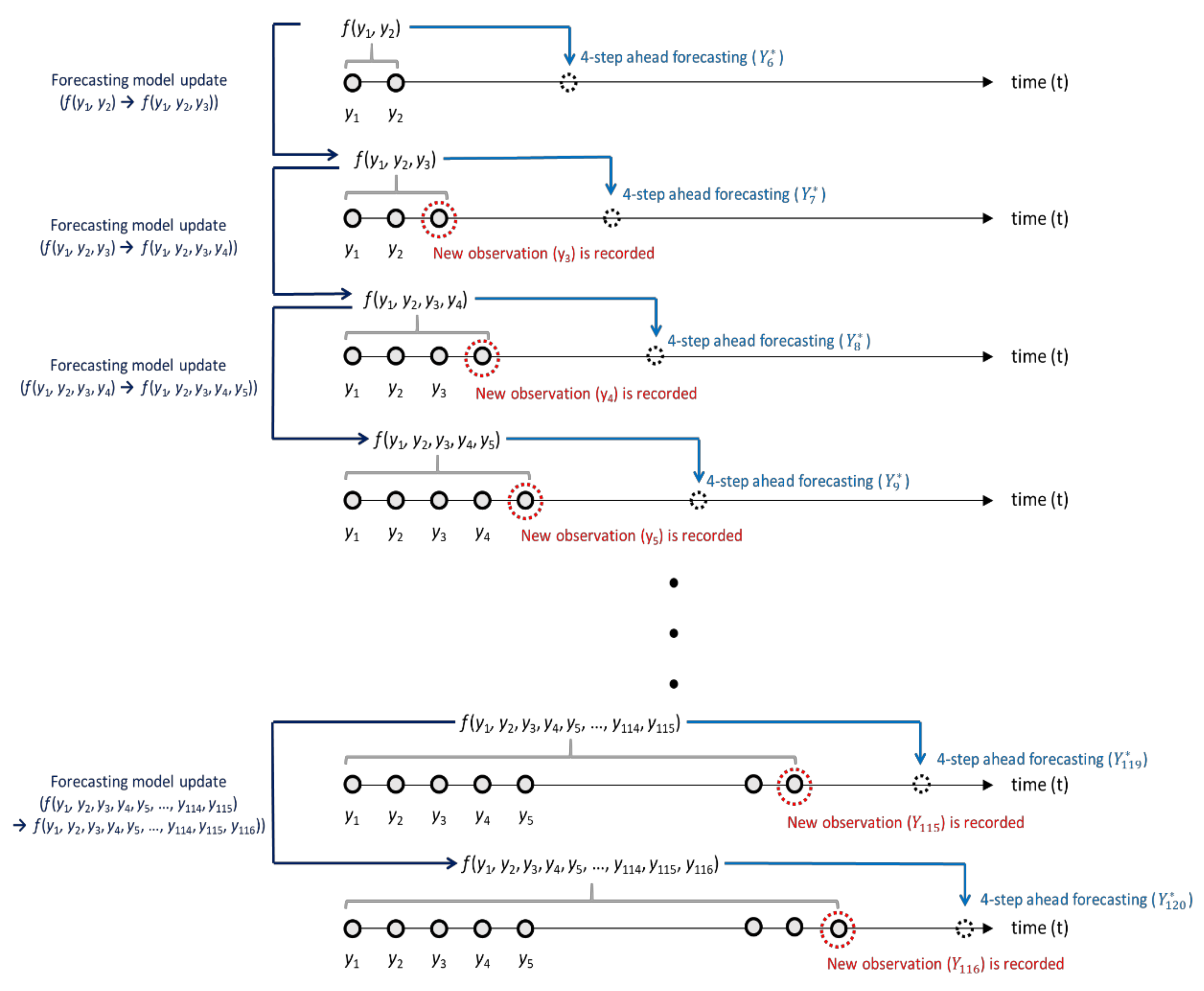

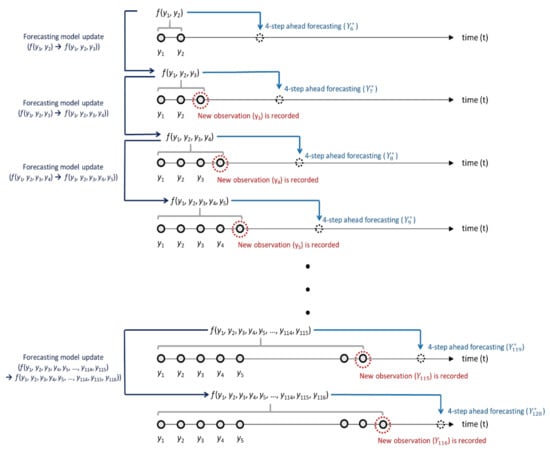

Because the self-starting forecasting process was proposed for situations in which few or no initial training observations are available, it starts with only the minimum number of observations required by the base model as initial training data. Accordingly, we constructed the initial base EWMA model using only the first two observations. Then, with the base EWMA model, we forecasted the observation s steps ahead (i.e., the forecasting window is denoted as s). The EWMA model was continuously updated whenever a new observation was added, and s-step-ahead forecasting was continuously conducted with the updated model. Figure 1 illustrates the self-starting forecasting process considered in this study when the forecasting window, s, is set to 4 and the total length of the time series is 120.

Figure 1.

Graphical illustration of self-starting forecasting process when the forecasting window (s) and total time series length are 4 and 120, respectively.

As shown in Figure 1, the initial EWMA model f(y1, y2) is constructed from only the two initial observations (y1 and y2), and f(y1, y2) generates the four-step-ahead forecast, $\hat{y}_6$. Then, when the third observation, y3, is recorded, the initial forecasting model is updated to f(y1, y2, y3). This forecasting and model updating is repeated until (120 − s) = 116 time series observations have been accumulated, that is, until the 116th observation is recorded.
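The self-starting procedure of Figure 1 can be sketched as a generic loop around any base forecaster. This is a minimal sketch, assuming a hypothetical callable `forecaster(train, s)`; for concreteness it plugs in double ES with the assumed initialization $\ell_1 = Y_1$, $b_1 = Y_2 - Y_1$ and fixed, illustrative smoothing parameters (α = β = 0.5). For clarity it refits on each growing prefix; because EWMA models are recursive, a practical implementation would instead apply one update per new observation.

```python
import math
from typing import Callable, List, Sequence

Forecaster = Callable[[Sequence[float], int], float]


def holt_forecast(train: Sequence[float], s: int, alpha: float = 0.5, beta: float = 0.5) -> float:
    """Double ES fitted to `train` (at least two observations), forecasting s steps ahead."""
    level, trend = train[0], train[1] - train[0]  # assumed initialization
    for obs in train[1:]:
        prev_level = level
        level = alpha * obs + (1 - alpha) * (prev_level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return level + s * trend


def self_starting_errors(
    y: Sequence[float], s: int, forecaster: Forecaster, n_init: int = 2
) -> List[float]:
    """Forecast errors of the self-starting process.

    The model is first built on y_1, ..., y_{n_init}; with t training
    observations it predicts y_{t+s} (1-based), then the training set grows by one.
    """
    errors = []
    for t in range(n_init, len(y) - s + 1):
        prediction = forecaster(y[:t], s)
        errors.append(y[t + s - 1] - prediction)  # y[t+s-1] is y_{t+s} in 1-based terms
    return errors


def rmse(errors: Sequence[float]) -> float:
    return math.sqrt(sum(e * e for e in errors) / len(errors))


if __name__ == "__main__":
    trend_line = [3.0 + 2.0 * i for i in range(120)]
    errs = self_starting_errors(trend_line, s=4, forecaster=holt_forecast)
    print(len(errs), rmse(errs))
```

For the setting of Figure 1 (s = 4 and 120 observations), the loop produces 115 forecasts: the first from f(y1, y2) for y6 and the last from the model built on 116 observations for y120.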

3. Simulation Study

3.1. Simulation Setup

We conducted simulation studies under nine time series patterns to evaluate the performance of four EWMA methods: (1) single ES; (2) double ES; (3) triple ES; and (4) two-stage EWMA methods. The simulation was conducted under the following nine patterns:

- Pattern 1: ;

- Pattern 2: ;

- Pattern 3: ;

- Pattern 4:;

- Pattern 5: ;

- Pattern 6: ;

- Pattern 7: ;

- Pattern 8: ;

- Pattern 9: .

The length of each time series is 120, and noise generated from a normal distribution with mean zero was added to each baseline. In this study, we investigated the performance of the EWMA methods under both homoscedastic and heteroscedastic noise. Hence, the standard deviation of the noise was defined in one of the following five ways:

- Small noise (homoscedastic): ;

- Medium noise (homoscedastic): ;

- Large noise (homoscedastic): ;

- Increasing noise (heteroscedastic): ;

- Decreasing noise (heteroscedastic): .

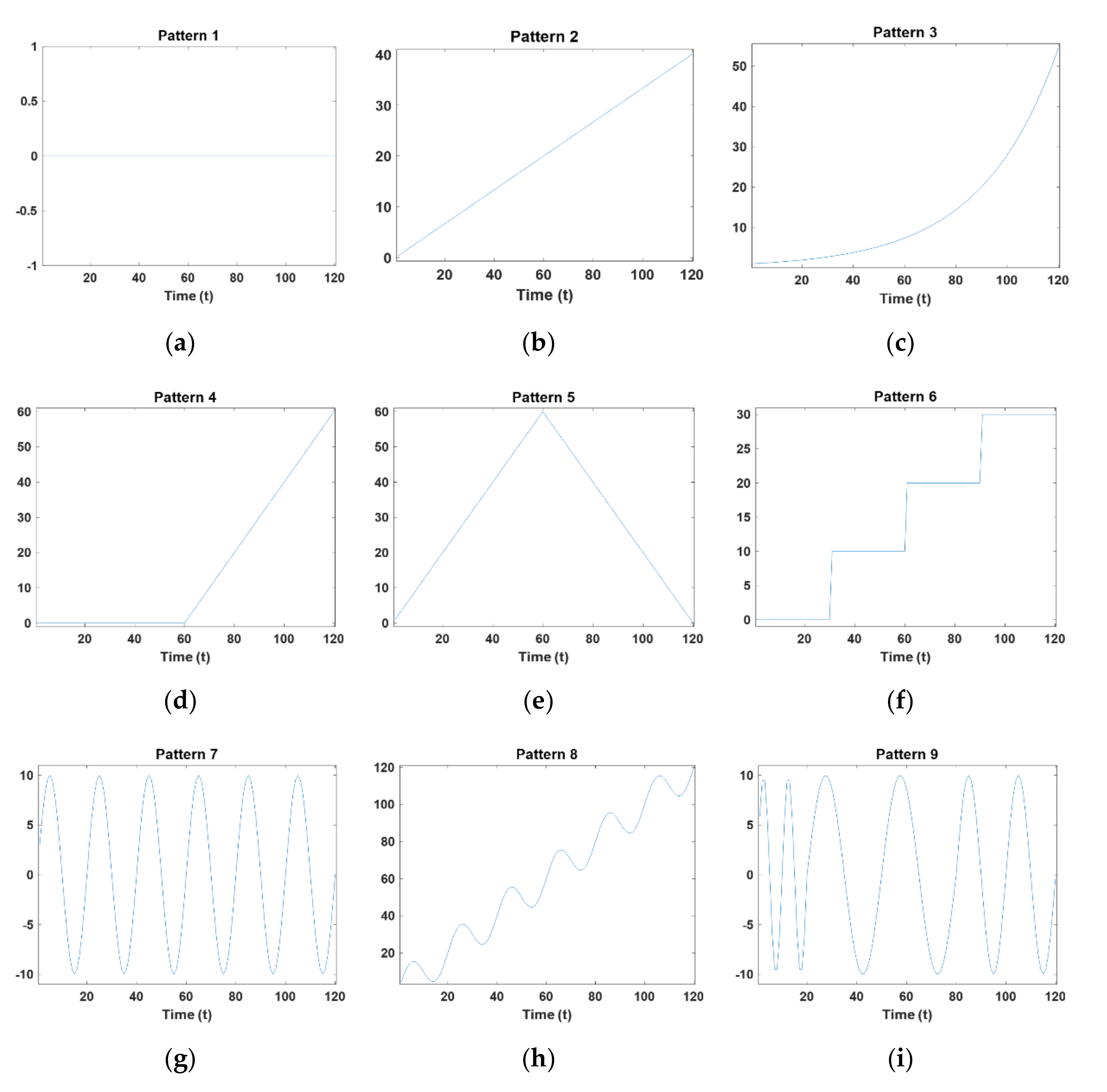

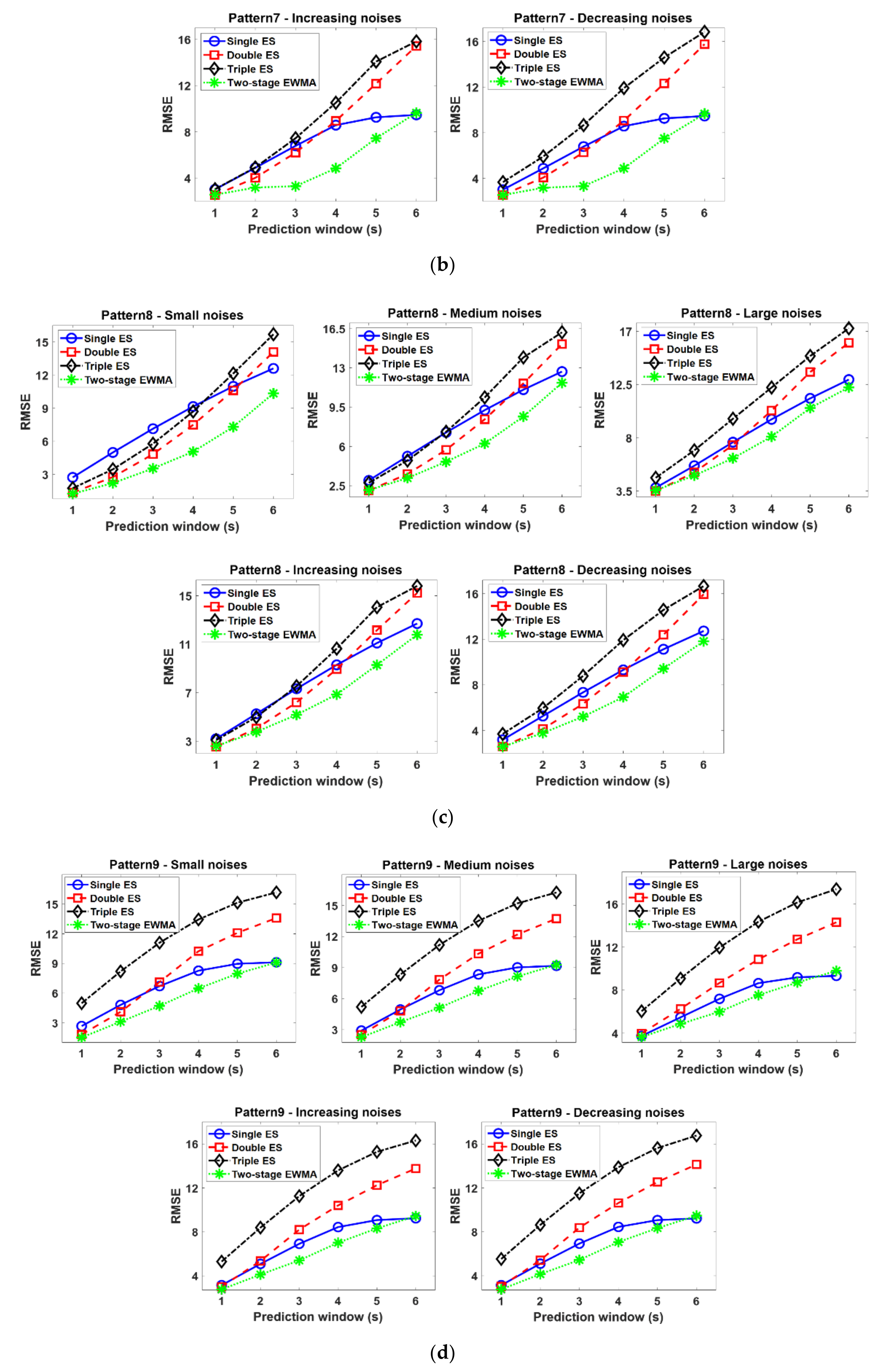

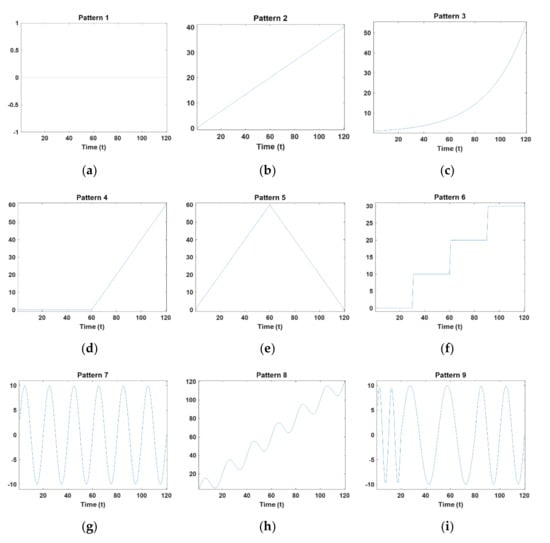

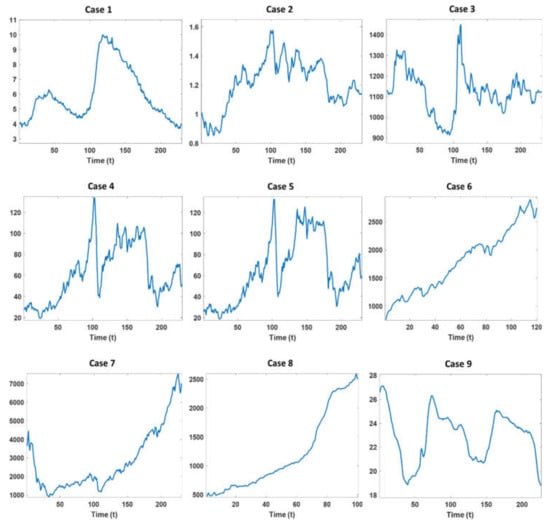

Figure 2 shows the baseline of the time series in each pattern.

Figure 2.

Baselines of nine time series patterns.

Patterns 1 and 2 represent the simplest time series patterns: a stationary pattern and a linear trend, respectively. Pattern 3 is a time series with an exponential trend, one of the best-known nonlinear time-varying patterns, and Pattern 4 represents a time series that changes from stationary to nonstationary. More specifically, the series is stationary from time 1 to 60 and then follows a constantly increasing trend from time 61 to 120. In Pattern 5, the direction of the trend changes from increasing to decreasing: the series increases constantly from time 1 to 60 and then decreases constantly from time 61 to 120. The simulated data in Pattern 6 consist of four stationary segments with step-like increments every 30 time points. Pattern 7 considers a time series with constant seasonal variation whose period is 20, and Pattern 8 considers a time series with seasonal variation (period 20) and a linearly increasing trend. Finally, Pattern 9 considers a time series with seasonal variation whose period changes; in this scenario, the periods are 10, 20, and 30.
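The pattern descriptions above can be turned into baseline generators. The study's exact formulas and noise levels are not reproduced here, so every level, slope, amplitude, and standard deviation below is a hypothetical placeholder; only the structure (change point between t = 60 and 61, steps every 30 time points, period 20) follows the text. Pattern 9 is omitted because the lengths of its 10-, 20-, and 30-period segments are not stated.

```python
import math
import random
from typing import Callable, Dict, List

N = 120  # length of each simulated series

# Hypothetical baselines for t = 1, ..., N; all constants are placeholders.
BASELINES: Dict[str, Callable[[int], float]] = {
    "pattern1": lambda t: 10.0,  # stationary
    "pattern2": lambda t: 10.0 + 0.5 * t,  # linear trend
    "pattern3": lambda t: 10.0 * math.exp(0.02 * t),  # exponential trend
    "pattern4": lambda t: 10.0 if t <= 60 else 10.0 + 0.5 * (t - 60),  # flat, then rising
    "pattern5": lambda t: 0.5 * t if t <= 60 else 30.0 - 0.5 * (t - 60),  # rising, then falling
    "pattern6": lambda t: 10.0 * ((t - 1) // 30),  # four flat steps of length 30
    "pattern7": lambda t: 10.0 + 5.0 * math.sin(2 * math.pi * t / 20),  # period 20
    "pattern8": lambda t: 10.0 + 0.5 * t + 5.0 * math.sin(2 * math.pi * t / 20),
}

# Hypothetical noise standard deviations for the five settings.
NOISE_SD: Dict[str, Callable[[int], float]] = {
    "small": lambda t: 0.5,
    "medium": lambda t: 1.5,
    "large": lambda t: 3.0,
    "increasing": lambda t: 0.5 + 2.5 * (t - 1) / (N - 1),
    "decreasing": lambda t: 3.0 - 2.5 * (t - 1) / (N - 1),
}


def simulate(pattern: str, noise: str, seed: int = 0) -> List[float]:
    """One series: baseline plus zero-mean Gaussian noise with time-dependent sd."""
    rng = random.Random(seed)
    base, sd = BASELINES[pattern], NOISE_SD[noise]
    return [base(t) + rng.gauss(0.0, sd(t)) for t in range(1, N + 1)]
```

Calling `simulate("pattern4", "increasing", seed=r)` for r = 0, ..., 99 would mimic the 100 repetitions per setting with reproducible random draws.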

In each simulation, we applied the self-starting EWMA model to each simulation case. That is, we built the initial EWMA models using only the first two observations as training data and forecasted the observation s steps ahead using these initial models. Then, the EWMA models were continuously updated as each successive observation was added to the training data, and the updated models forecasted the observation s steps ahead. This continuous model updating and forecasting process was repeated until (120 − s) time series observations had been accumulated.

In this simulation study, the forecasting window, s, was varied from one to six. For each s value, we repeated the experiment 100 times and reported the average root mean square error (RMSE) of the s-step-ahead forecasts over these 100 repetitions. To use the EWMA methods, appropriate smoothing parameters must be determined. In this simulation, we varied the smoothing parameters from 0.1 to 0.9 with a step size of 0.1 and reported the lowest average RMSE among all smoothing parameter combinations. A smoothing parameter of 0.1 is small enough to reduce the risk of incorporating the noise of the latest observation, and a value of 0.9 is large enough to capture the impact of the most recent observations. For this reason, previous works have set the range of smoothing parameter values between 0.1 and 0.9 [28,37]. Because the simulation data for Patterns 1 to 6 have no seasonality, we conducted the experiments for these patterns without the triple ES model. Finally, because the self-starting forecasting process uses only two initial observations, the period and seasonal factors of the triple ES method cannot be accurately estimated from so few data. Hence, in this study, we set these factors to their ground-truth values.
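The smoothing-parameter search described above can be sketched as a grid search. This sketch assumes double ES as the base model with the initialization $\ell_1 = Y_1$, $b_1 = Y_2 - Y_1$, and it uses the recursive form so that each new observation costs a single update instead of a refit; the function names are illustrative.

```python
import itertools
import math
from typing import List, Sequence, Tuple

GRID = [round(0.1 * k, 1) for k in range(1, 10)]  # 0.1, 0.2, ..., 0.9


def self_starting_rmse(y: Sequence[float], s: int, alpha: float, beta: float) -> float:
    """RMSE of s-step-ahead self-starting double ES forecasts (one update per observation)."""
    level, trend = y[0], y[1] - y[0]  # assumed initialization from y_1 and y_2
    sq_err, count = 0.0, 0
    for t in range(1, len(y) - s):  # t: 0-based index of the newest observation
        prev_level = level
        level = alpha * y[t] + (1 - alpha) * (prev_level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
        err = y[t + s] - (level + s * trend)
        sq_err += err * err
        count += 1
    return math.sqrt(sq_err / count)


def best_average_rmse(replicates: List[Sequence[float]], s: int) -> Tuple[float, float, float]:
    """Lowest RMSE averaged over replicates, searched over the (alpha, beta) grid."""

    def avg_rmse(params: Tuple[float, float]) -> float:
        alpha, beta = params
        return sum(self_starting_rmse(y, s, alpha, beta) for y in replicates) / len(replicates)

    alpha, beta = min(itertools.product(GRID, GRID), key=avg_rmse)
    return avg_rmse((alpha, beta)), alpha, beta
```

Note that the parameters are selected by the same average RMSE that is reported, so the returned value is the best case over the grid for each model.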

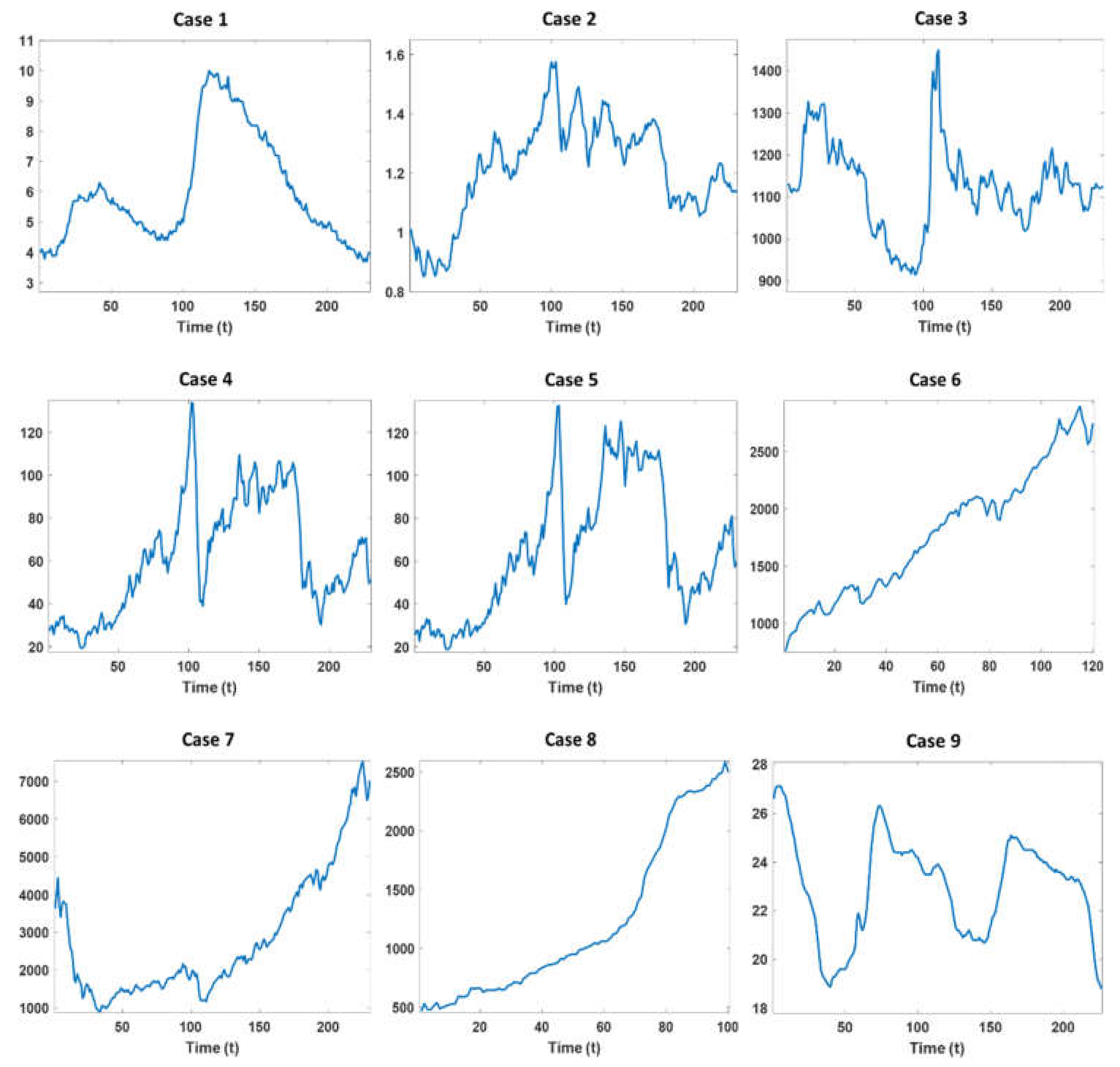

3.2. Simulation Results

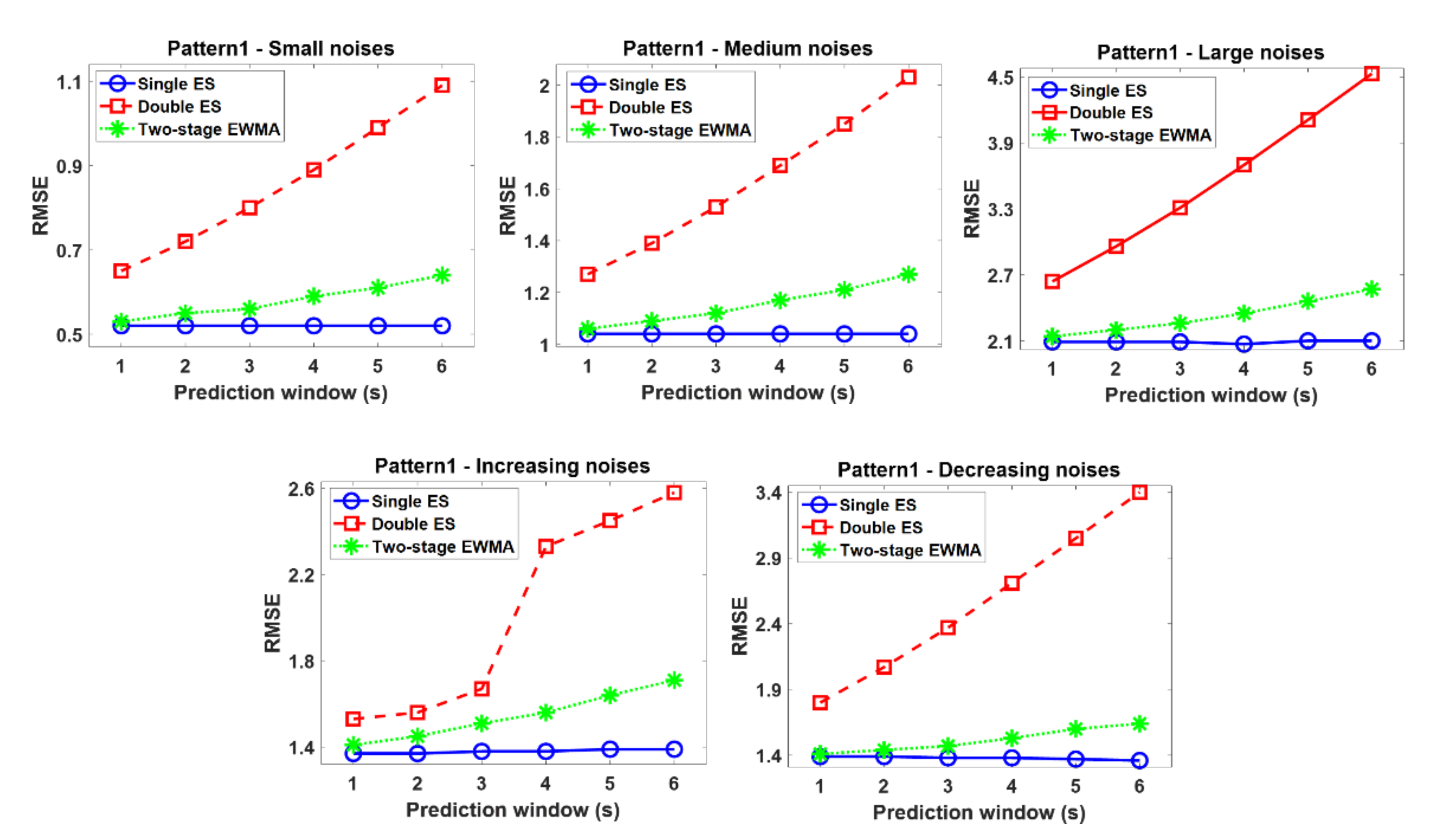

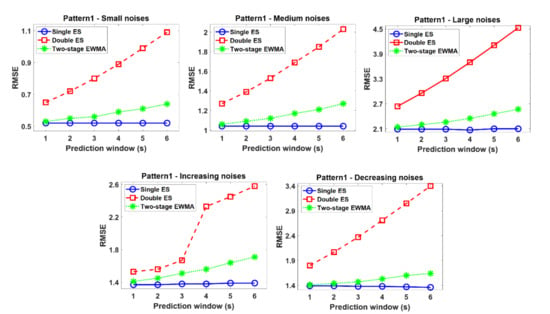

In Figures 3 to 5, the x-axis represents the prediction window, and the y-axis represents the average RMSE obtained from 100 repeated experiments. Figure 3 shows the RMSE curves for Pattern 1. For this pattern, the single ES yields a smaller RMSE than the other two methods because the single ES is designed for stationary patterns. In addition, the trend factors in the double ES method tend to be overestimated, and these inappropriately estimated trend factors lead to poor forecasting results. On the other hand, the two-stage EWMA method produced better forecasting performance than the double ES method because its adjustment factor alleviates the effect of overestimated trend factors.

Figure 3.

Comparison results between a single exponential smoothing (ES), double ES, and two-stage EWMA in Pattern 1.

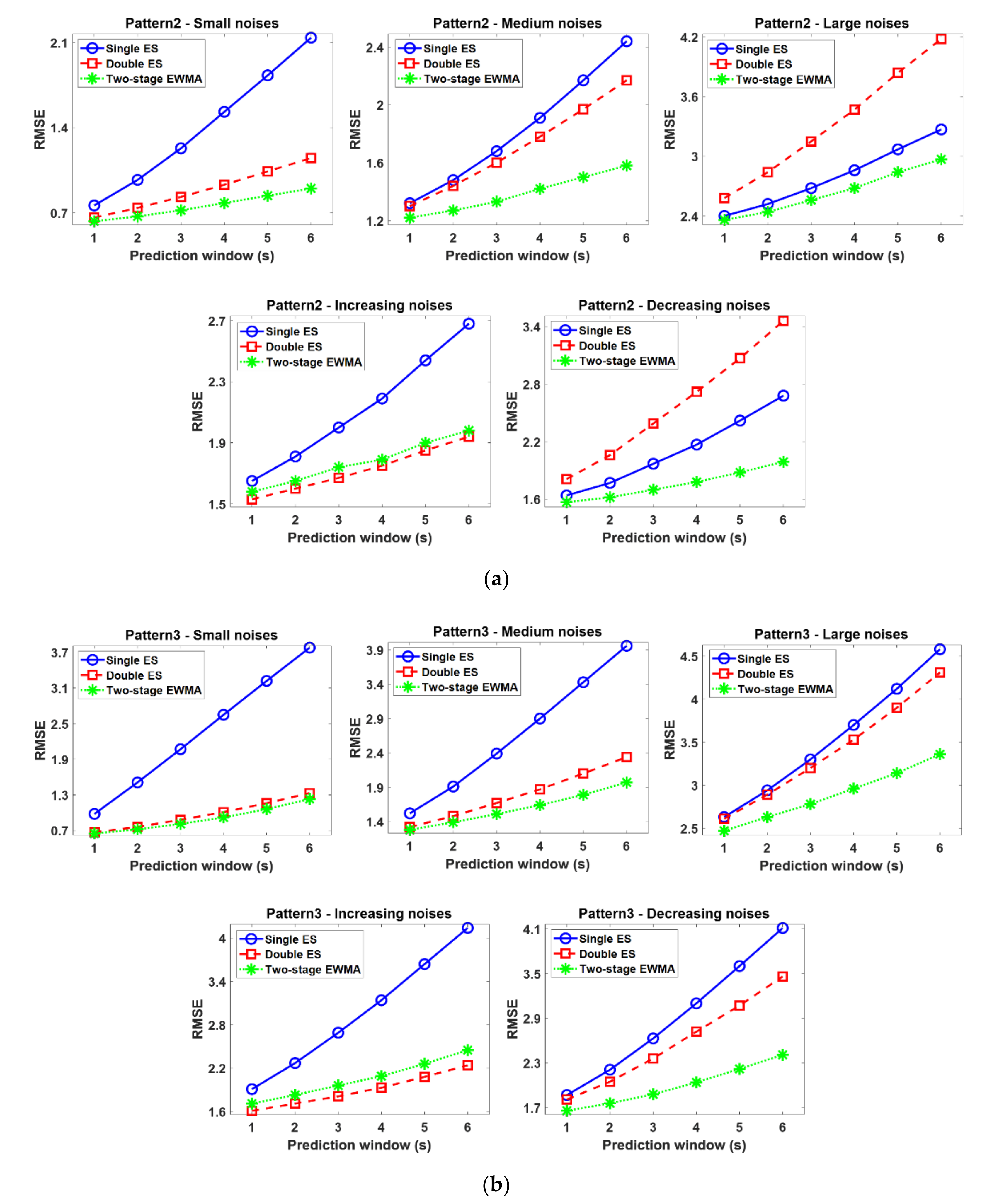

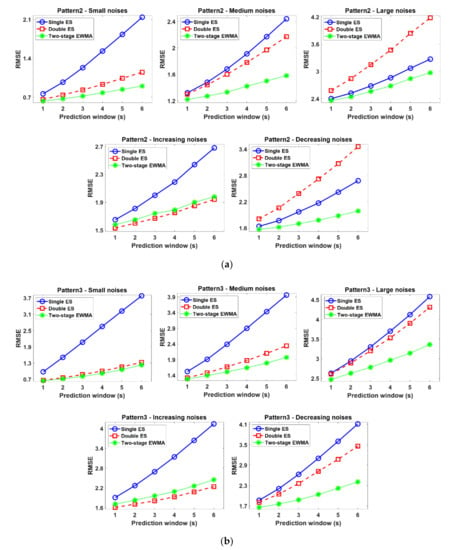

Figure 4.

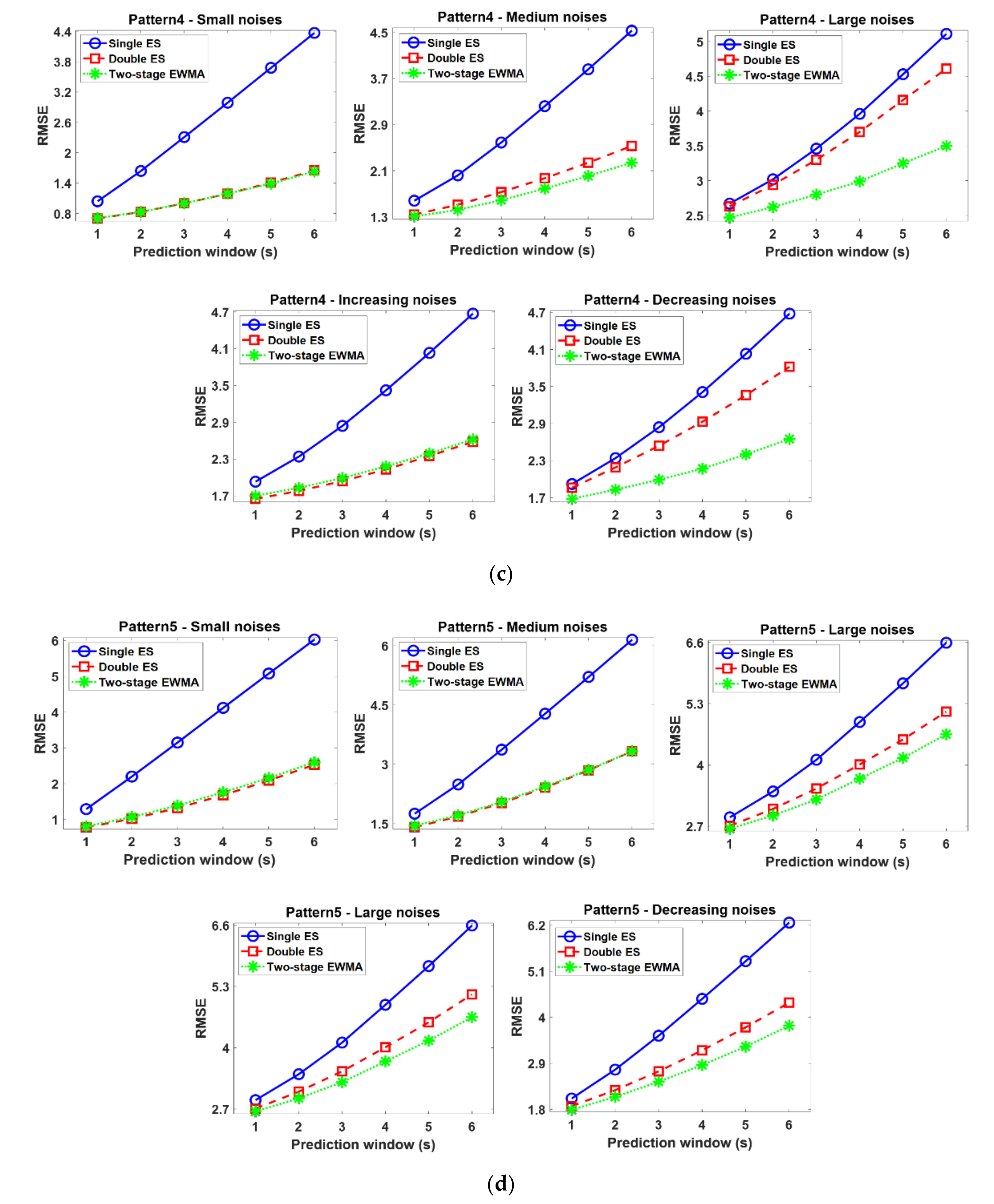

Comparison results between the single ES, double ES, and the two-stage EWMA in (a) Pattern 2, (b) Pattern 3, (c) Pattern 4 and (d) Pattern 5.

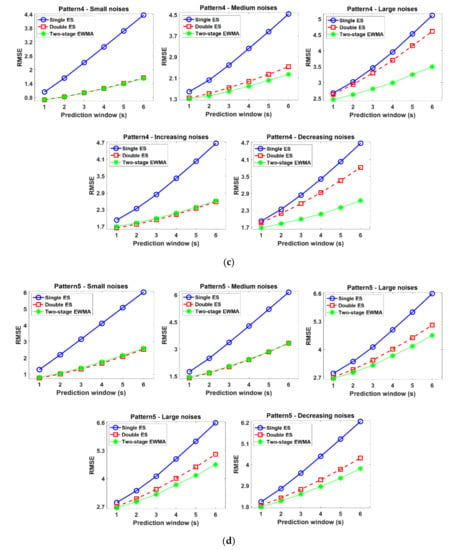

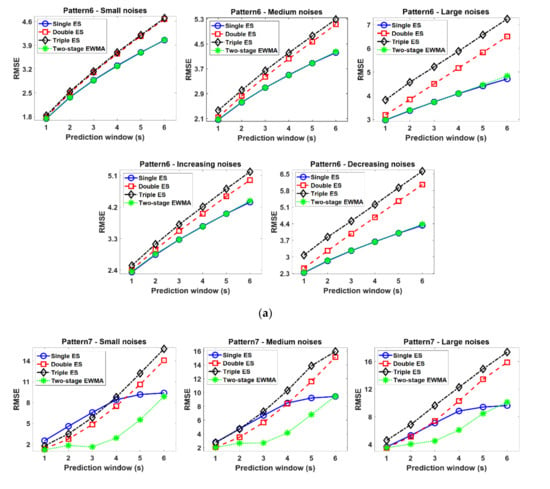

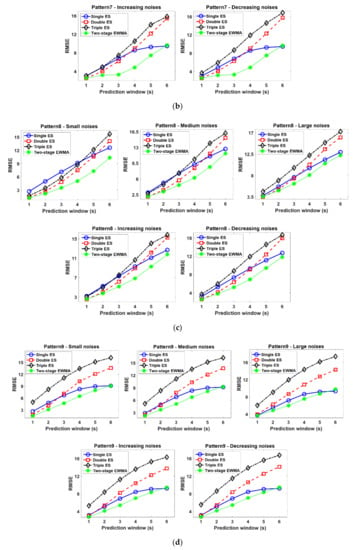

Figure 5.

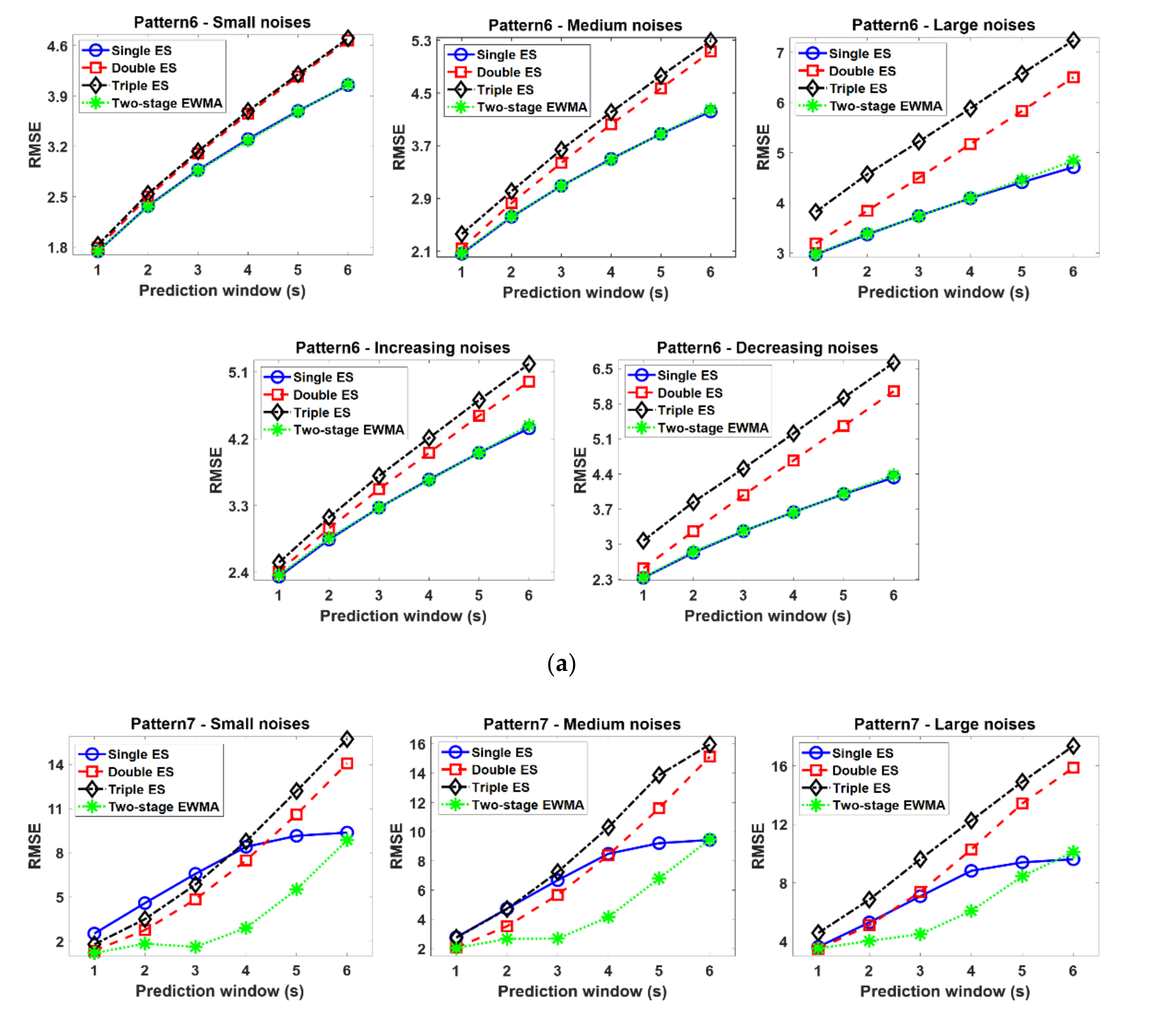

Comparison results between the single ES, double ES, triple ES, and two-stage EWMA in (a) Pattern 6, (b) Pattern 7, (c) Pattern 8 and (d) Pattern 9.

Figure 4 shows the comparative results for Patterns 2 to 5, which are nonstationary time series with monotonically changing parts but no seasonal pattern. When the noise is not large, the double ES model performs comparably with the other two methods because these patterns are composed of monotonically changing parts. In addition, the single ES model produced the worst results because it cannot account for nonstationary time series. The two-stage EWMA model also performed comparably with the double ES model because it, too, is basically designed for forecasting monotonically changing time series. In particular, the two-stage EWMA method outperformed the other two methods in Pattern 3 because it can properly handle a nonlinearly increasing pattern by reducing the bias through its adjustment term. On the other hand, when large noise is added, the double ES model performs the worst because of its inaccurately estimated trend factors. That is, the number of initial observations is too small to properly estimate the initial trend factors in noisy time series, and these poorly estimated trend factors propagate through the successive model updates of the double ES model. Conversely, the two-stage EWMA model produces better forecasting performance because the adjustment factors can reduce the risk of inaccurately estimating trend factors due to noise and the small number of initial observations. In addition, as mentioned earlier, the drift factor of the two-stage EWMA model helps to accommodate the dynamics of the time series because it is computed from the difference between two successive observations, not from the difference between the estimated level factors as in the double ES model. These results imply that the two-stage EWMA model is robust to noise because the adjustment factor can successfully compensate for inaccurately estimated trend factors.

We also investigated the properties of the three EWMA models when the noise level varies over time (heteroscedastic settings). When the initial noise is small, the trend factors in the double ES model are accurately estimated in the early phase and are appropriately updated without being affected by noise early in the update process. Although the noise increases over time, the double ES model still tends to forecast accurately because these accurately estimated early trend factors can alleviate the effects of noise. Conversely, the two-stage EWMA model showed smaller RMSE values when the time series started with large noise because, as in the homoscedastic settings, the adjustment factor can successfully compensate for inaccurately estimated trend factors. The simulation results in the heteroscedastic settings demonstrate that the initial condition of the time series observations can significantly affect the performance of a self-starting EWMA model when the time series pattern has a monotonically increasing or decreasing part.

Figure 5 shows the comparative results for Patterns 6 to 9, which have a seasonality pattern. Note that the initial seasonal factors in the triple ES model should be carefully estimated for accurate forecasting. However, in our problem setting, the number of initial historical observations is too small to estimate the initial seasonal factors accurately. Moreover, when the noise becomes large, the seasonal factors also tend to be inaccurately estimated. For these reasons, the triple ES model showed the worst forecasting performance in these scenarios, although it was proposed for forecasting seasonal time series. These results imply that the triple ES model is not effective for seasonal data when the initial time series is insufficient and the data contain large noise. Finally, when the period of the seasonality changes (Pattern 9), the triple ES model performs the worst because it assumes a constant period. The double ES model also yielded unsatisfactory forecasting results because it was designed to consider only linear trend factors. In addition, as mentioned earlier, this model is quite sensitive to noise; hence, when large noise is added to the time series, its forecasting accuracy deteriorates. Conversely, the two-stage EWMA model tends to produce the best forecasting results among all EWMA models because the adjustment factor helps correct the bias in the estimated level factors for patterns whose rate of change between successive observations is not constant. However, the two-stage EWMA model cannot forecast accurately for seasonal data when the forecasting window becomes large, because this method was basically proposed for forecasting monotonically changing patterns. Thus, these results suggest that the two-stage EWMA can be effectively applied to short-term forecasting of seasonal time series data.

In addition, the experimental results in the heteroscedastic settings are similar to those in the homoscedastic settings. That is, because the seasonal factors cannot be accurately estimated from insufficient time series observations, the triple ES model cannot produce satisfactory forecasting performance regardless of how the noise varies. Moreover, the single ES and double ES models were proposed for stationary and monotonically changing time series patterns, respectively; thus, they cannot yield superior performance on seasonal time series patterns, whether the noise is homoscedastic or heteroscedastic.

4. Case Study

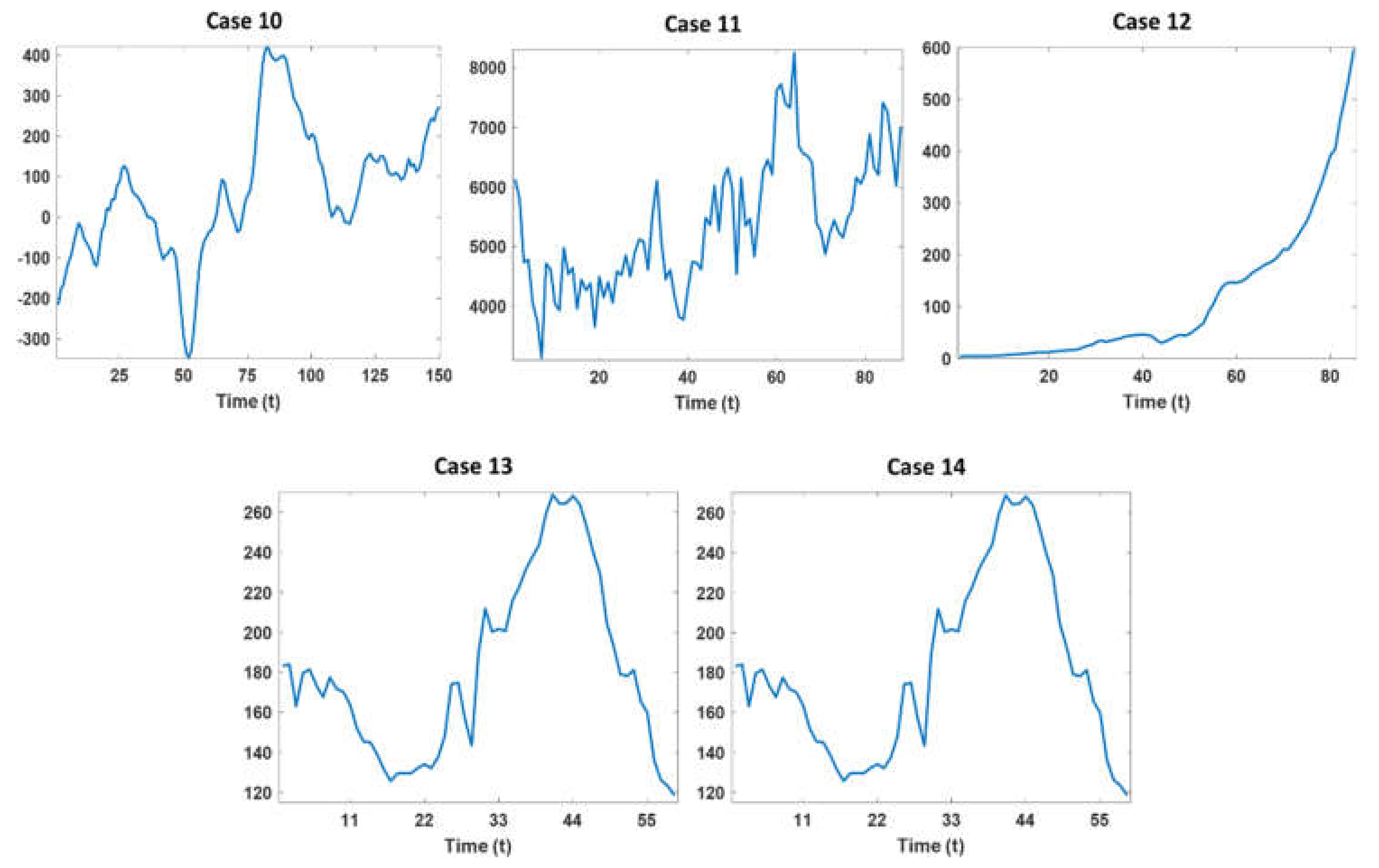

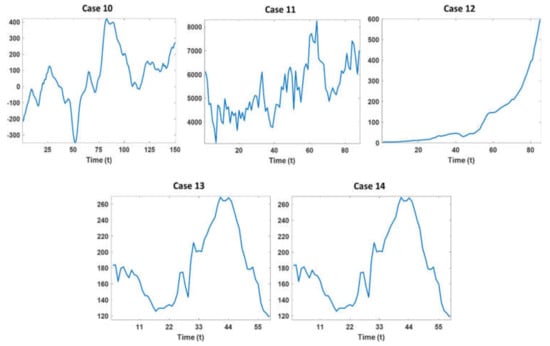

In this section, we investigated the performance of EWMA methods using 18 real time series datasets. Table 1 and Figure 6 show the characteristics and time series patterns of these real data, respectively. These real data are available in public data repositories (Federal Reserve Bank of St. Louis (https://research.stlouisfed.org/); https://datamarket.com/data/list/?q=provider%3Atsdl; https://datahub.io/core/global-temp#data).

Table 1.

Summary of real cases.

Figure 6.

Time series data of 14 real cases.

As shown in Figure 6, most of the real time series are nonstationary and contain large noise. In real situations, prior knowledge of the period and seasonal factors required by the triple ES is not available. Moreover, the simulation study already showed that the triple ES is not effective in our problem setting. Hence, we considered only the single ES, double ES, and two-stage EWMA models in the real cases.

In the case study, we applied the same experimental setting as in the simulation study. First, we constructed the initial EWMA models using only the first two observations as training data and forecasted the observation s steps ahead using these initial models. Next, we updated the EWMA models by adding each successive new observation to the training data, and the updated model forecasted the observation s steps ahead. This continuous model updating process was repeated until (T − s) time series observations had been accumulated, where T is the length of the entire time series. In addition, as in the simulation study, we considered smoothing parameter values from 0.1 to 0.9 with a step size of 0.1 and reported the lowest average RMSE among all smoothing parameter combinations. Finally, the RMSE values were computed by varying the forecasting window (s) from one to six.

Table 2 shows the comparison results between three EWMA models.

Table 2.

RMSE values of EWMA models in 14 real cases varying forecasting windows.

In Table 2, the lowest RMSE value for each case and prediction window is highlighted in bold and underlined to indicate the best EWMA model. As shown in Table 2, the experimental results in most cases are almost the same as those in the simulation studies. That is, the double ES model tends to show the worst forecasting results because its trend factors are sensitive to large noise. Moreover, the single ES model also showed unsatisfactory performance because it cannot accommodate the nonstationary time series encountered in many real situations. On the other hand, as in the simulation study, the two-stage EWMA model shows the best performance in most cases because its adjustment factor helps to lessen the effect of noise and the forecast bias caused by insufficient time series observations. Besides, the two-stage EWMA model yields much smaller RMSE values than the double ES model because the drift factor models the changing patterns of the time series better than the trend factor in the double ES model. In other words, the drift factor is calculated as the first-order difference of two successive observations, which makes it more effective at accommodating the inherent dynamics of the time series than the trend factor, which is defined as the difference of two successive estimated level factors.

5. Conclusions

In this study, we examined the properties of EWMA models as base forecasting models of a self-starting forecasting process. To this end, we conducted simulation studies in situations where only a small amount of initial time series data was available (two initial observations). In the simulation study, we generated a number of scenarios reflecting a variety of practical situations, with different time series patterns and noise levels. In addition to the simulation study, we applied self-starting forecasting with the EWMA models to various real case datasets. Based on these comparative experiments, the characteristics of EWMA models as base models of the self-starting forecasting process can be summarized as follows:

- Single ES performs best only for stationary time series and yields unsatisfactory results for nonstationary patterns. Thus, this model is not suitable as the base model for the self-starting forecasting process because, in many real situations, there is no assurance that the time series remains stationary.

- Double ES shows comparable or better performance than the other EWMA models when the time series observations increase or decrease monotonically. However, this model is vulnerable to noise when there are not enough time series observations to compensate for its effect. In other words, the trend factor in the double ES model cannot be accurately estimated when large noise is added to insufficient time series observations, and these poorly estimated trend factors propagate through the successive model updates of the double ES model. Therefore, the double ES model might not be suitable as a base model of the self-starting forecasting process because time series data in many real situations often contain large noise.

- The seasonal factors in the triple ES model must be carefully estimated for accurate forecasting. However, the seasonal factors are poorly estimated when the initial time series observations are insufficient; thus, the triple ES model shows the worst performance even though it is designed to handle seasonal patterns. In addition, prior knowledge of the true period is not available when time series observations have not sufficiently accumulated. For these reasons, the triple ES model is not appropriate as a base model of the self-starting forecasting process.

- In contrast, the two-stage EWMA model tends to yield comparable or better performance than the other EWMA models in all cases. In particular, as a base model for the self-starting forecasting process, it outperforms the other EWMA models on complex (i.e., nonstationary and noisy) time series because of its drift factor and adjustment factor. The drift factor, calculated as the first-order difference of two successive observations, helps to accommodate the dynamics of the time series, and the adjustment factor helps to lessen the intrinsic bias caused by noise and insufficient initial time series data. These appropriately estimated factors, in turn, lead to desirable EWMA model updates in the self-starting process.
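The contrast drawn in the first two bullets can be reproduced with a small sketch: single ES lags behind a linear trend, double ES (Holt's linear method) tracks it, and one noisy value among the two initial observations corrupts the double ES trend seed. The function names, the smoothing constants, and the seeding rule (trend initialized as y1 − y0) are assumptions for illustration, not the paper's simulation setup.

```python
from typing import List


def ses_forecasts(y: List[float], alpha: float = 0.3) -> List[float]:
    """One-step-ahead single ES forecasts for y[2:], self-started from y[0] and y[1]."""
    level = alpha * y[1] + (1 - alpha) * y[0]  # seed with y0, then fold in y1
    out = []
    for t in range(2, len(y)):
        out.append(level)
        level = alpha * y[t] + (1 - alpha) * level
    return out


def holt_forecasts(y: List[float], alpha: float = 0.3, beta: float = 0.3) -> List[float]:
    """One-step-ahead double ES (Holt's linear) forecasts for y[2:]."""
    level, trend = y[1], y[1] - y[0]  # trend seeded from the only available difference
    out = []
    for t in range(2, len(y)):
        out.append(level + trend)
        prev_level = level
        level = alpha * y[t] + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return out


trend_series = [2.0 * t for t in range(20)]  # noise-free linear trend with slope 2
ses_err = [y - f for y, f in zip(trend_series[2:], ses_forecasts(trend_series))]
holt_err = [y - f for y, f in zip(trend_series[2:], holt_forecasts(trend_series))]
print(round(ses_err[-1], 2))                  # 6.66: SES lag approaches slope / alpha
print(max(abs(e) for e in holt_err) < 1e-9)   # True: Holt tracks the trend

noisy = list(trend_series)
noisy[1] += 5.0                  # one large noise spike in the second initial observation
print(holt_forecasts(noisy)[0])  # 14.0: level 7 plus seeded trend 7
```

On the noisy series the true next value is 4, but the seeded trend of 7 drives the first forecast to 14, and this error is removed only gradually by subsequent updates.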
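The initialization problem in the third bullet is visible even in the simplest additive triple ES (Holt-Winters) sketch: the seasonal indices are read off a full first season, so the period `m` must be known and at least `m` observations must exist before the first forecast. The initialization used here (level from the first season's mean, zero trend) is one common assumption among several; the function name and parameter values are illustrative.

```python
from typing import List


def holt_winters_additive(y: List[float], m: int, alpha: float = 0.3,
                          beta: float = 0.1, gamma: float = 0.1) -> List[float]:
    """One-step-ahead additive triple ES forecasts for y[m:]."""
    if m < 2 or len(y) < m:
        raise ValueError(
            f"triple ES needs a known period and at least {m} observations, got {len(y)}")
    # Assumed initialization: first season's mean as level, zero trend,
    # and seasonal indices as deviations of the first season from that mean.
    level = sum(y[:m]) / m
    trend = 0.0
    seasonal = [y[i] - level for i in range(m)]
    out = []
    for t in range(m, len(y)):
        s = seasonal[t % m]                # index for this position in the cycle
        out.append(level + trend + s)      # forecast made before y[t] is observed
        prev_level = level
        level = alpha * (y[t] - s) + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
        seasonal[t % m] = gamma * (y[t] - level) + (1 - gamma) * s
    return out


pattern = [1.0, 3.0, 2.0, 0.0]
series = pattern * 5                       # period 4, no trend, no noise
fc = holt_winters_additive(series, m=4)
print(max(abs(a - b) for a, b in zip(series[4:], fc)) < 1e-9)  # True

try:
    holt_winters_additive([10.0, 12.0], m=12)  # two observations, monthly period
except ValueError as exc:
    message = str(exc)
print(message)  # triple ES needs a known period and at least 12 observations, got 2
```

With only two initial observations, the model cannot even be initialized without guessing both the period and the seasonal indices, which is consistent with the poor self-starting performance reported for triple ES.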
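The drift and adjustment factors described in the last bullet can be made concrete with a small sketch. It is a hypothetical reading of that verbal description, not the two-stage EWMA equations defined earlier in the paper: here the drift is an EWMA of first-order differences seeded with y1 − y0, and the adjustment is an EWMA of the base forecast errors added back as a bias correction. The name `drift_adjusted_ewma` and the parameters `alpha`, `beta`, and `gamma` are illustrative assumptions.

```python
from typing import List


def drift_adjusted_ewma(y: List[float], alpha: float = 0.3, beta: float = 0.3,
                        gamma: float = 0.2) -> List[float]:
    """Hypothetical drift-plus-adjustment EWMA forecaster for y[2:].

    Illustrative reading of the verbal description only; NOT the paper's
    two-stage EWMA equations.
    """
    if len(y) < 2:
        raise ValueError("need at least two initial observations")
    level = y[1]
    drift = y[1] - y[0]  # drift seed: first-order difference of two successive observations
    adjust = 0.0         # adjustment: EWMA of the base forecast errors (bias estimate)
    out = []
    for t in range(2, len(y)):
        base = level + drift
        out.append(base + adjust)  # forecast made before y[t] is observed
        base_err = y[t] - base
        # Stage 1 (assumed): smooth the level around the drift-extrapolated value.
        level = alpha * y[t] + (1 - alpha) * base
        # Stage 2 (assumed): smooth the raw first difference y[t] - y[t-1].
        drift = beta * (y[t] - y[t - 1]) + (1 - beta) * drift
        # Adjustment (assumed): track systematic base error and add it back.
        adjust = gamma * base_err + (1 - gamma) * adjust
    return out


trend_series = [2.0 * t for t in range(20)]
fc = drift_adjusted_ewma(trend_series)
print(len(fc), max(abs(a - b) for a, b in zip(trend_series[2:], fc)) < 1e-9)  # 18 True
```

Tracking the base model's error separately, rather than the error of the adjusted forecast, lets the adjustment settle on the full systematic offset instead of only part of it.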

Based on these comparative results, we suggest using the two-stage EWMA model as the base model of the self-starting forecasting process. In future work, we will investigate the effects of the smoothing parameters on self-starting forecasting performance in more detail. We will also study a systematic way to select the optimal smoothing parameters of the EWMA model for the self-starting forecasting process. Finally, to exploit these advantages of the two-stage EWMA as a base model for the self-starting approach, we will attempt to apply it to self-starting control chart techniques.
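One simple candidate for the systematic parameter selection mentioned above is a grid search that scores each smoothing constant by its one-step-ahead squared errors in self-starting mode. The sketch assumes single ES and a fixed grid; the function names and grid values are hypothetical, and with very short series the selected value can itself be unreliable, so it illustrates the mechanics rather than a recommended procedure.

```python
from typing import Iterable, List, Tuple


def ses_one_step_sse(y: List[float], alpha: float) -> float:
    """Sum of squared one-step-ahead errors of self-started single ES on y[2:]."""
    level = alpha * y[1] + (1 - alpha) * y[0]  # self-start from the first two values
    sse = 0.0
    for t in range(2, len(y)):
        sse += (y[t] - level) ** 2  # error of the forecast made before y[t] arrived
        level = alpha * y[t] + (1 - alpha) * level
    return sse


def select_alpha(y: List[float], grid: Iterable[float]) -> Tuple[float, float]:
    """Return (alpha, sse) with the smallest one-step SSE over the grid."""
    return min(((a, ses_one_step_sse(y, a)) for a in grid), key=lambda pair: pair[1])


trend_series = [2.0 * t for t in range(20)]
best_alpha, best_sse = select_alpha(trend_series, [0.1, 0.3, 0.5, 0.7, 0.9])
print(best_alpha)  # 0.9
```

On a noise-free trend, a larger `alpha` always shrinks the single ES lag, so the largest grid value wins; with noisy data the trade-off between responsiveness and noise filtering typically moves the optimum lower.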

Author Contributions

J.Y. is responsible for the paper as a whole (conceptualization, methodology, formal analysis, and writing of the original draft), S.B.K. is responsible for review and editing, J.B. is responsible for validation and formal analysis, and S.W.H. is responsible for formal analysis, review and editing, and supervision. All authors have read and agreed to the published version of the manuscript.

Funding

The fourth author (S.W.H.) of this research was supported by the Korea Institute for Advancement of Technology (KIAT) grant funded by the Korea Government (MOTIE) (P0008691, The Competency Development Program for Industry Specialist).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bowerman, B.L.; O’Connell, R.T.; Koehler, A.B. Forecasting, Time Series, and Regression: An Applied Approach; Thomson Brooks/Cole: Pacific Grove, CA, USA, 2005.

- Hunter, J.S. The Exponentially Weighted Moving Average. J. Qual. Technol. 1986, 18, 203–210.

- Crowder, S.V. A Simple Method for Studying Run Length Distributions of Exponentially Weighted Moving Average Control Charts. Technometrics 1987, 29, 401–407.

- Crowder, S.V. Design of Exponentially Weighted Moving Average Schemes. J. Qual. Technol. 1989, 21, 155–162.

- Lucas, J.M.; Saccucci, M.S. Exponentially Weighted Moving Average Control Schemes: Properties and Enhancements. Technometrics 1990, 32, 1–12.

- Fricker, R.D.; Knitt, M.C.; Hu, C.X. Comparing Directionally Sensitive MCUSUM and MEWMA Procedures with Application to Biosurveillance. Qual. Eng. 2008, 20, 478–494.

- Joner, M.D.; Woodall, W.H.; Reynolds, M.R.; Fricker, R.D. A One-sided MEWMA Chart for Health Surveillance. Qual. Reliab. Eng. Int. 2008, 24, 503–519.

- Han, S.W.; Tsui, K.-L.; Ariyajunya, B.; Kim, S.B. A Comparison of CUSUM, EWMA, and Temporal Scan Statistics for Detection of Increases in Poisson Rates. Qual. Reliab. Eng. Int. 2010, 26, 279–289.

- Snyder, R.D.; Koehler, A.B.; Ord, J.K. Forecasting for Inventory Control with Exponential Smoothing. Int. J. Forecast. 2002, 18, 5–18.

- De Faria, E.L.; Albuquerque, M.P.; Gonzalez, J.L.; Cavalcante, J.T.P.; Albuquerque, M.P. Predicting the Brazilian Stock Market through Neural Networks and Adaptive Exponential Smoothing Methods. Expert Syst. Appl. 2009, 36, 12506–12509.

- Rundo, F.; Trenta, F.; di Stallo, A.L.; Battiato, S. Machine Learning for Quantitative Finance Applications: A Survey. Appl. Sci. 2019, 9, 5574.

- Jiang, H.; Fang, D.; Spicher, K.; Cheng, F.; Li, B. A New Period-Sequential Index Forecasting Algorithm for Time Series Data. Appl. Sci. 2019, 9, 4386.

- Shilbayeh, S.A.; Abonamah, A.; Masri, A.A. Partially versus Purely Data-Driven Approaches in SARS-CoV-2 Prediction. Appl. Sci. 2020, 10, 5696.

- Taylor, J.W. Short-term Electricity Demand Forecasting using Double Seasonal Exponential Smoothing. J. Oper. Res. Soc. 2003, 54, 799–805.

- Brown, R.G. Exponential Smoothing for Predicting Demand; Arthur D. Little Inc.: Cambridge, MA, USA, 1956.

- Brown, R.G. Statistical Forecasting for Inventory Control; McGraw-Hill: New York, NY, USA, 1959.

- Holt, C.C. Forecasting Seasonals and Trends by Exponentially Weighted Moving Averages. Int. J. Forecast. 2004, 20, 5–10.

- Winters, P.R. Forecasting Sales by Exponentially Weighted Moving Averages. Manag. Sci. 1960, 6, 324–342.

- Box, G.E.; Jenkins, G.M.; Reinsel, G.C.; Ljung, G.M. Time Series Analysis: Forecasting and Control; John Wiley & Sons: Hoboken, NJ, USA, 2015.

- Akgiray, V. Conditional Heteroscedasticity in Time Series of Stock Returns: Evidence and Forecasts. J. Bus. 1989, 62, 55–80.

- Sims, C.A. Macroeconomics and Reality. Econom. J. Econom. Soc. 1980, 48, 1–48.

- Watson, M.W. Vector Autoregressions and Cointegration. Handb. Econom. 1994, 4, 2843–2915.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Yang, H.; Pan, Z.; Tao, Q. Robust and Adaptive Online Time Series Prediction with Long Short-Term Memory. Comput. Intell. Neurosci. 2017, 2017, 1–9.

- Divina, F.; Torres Maldonado, J.F.; García-Torres, M.; Martínez-Álvarez, F.; Troncoso, A. Hybridizing Deep Learning and Neuroevolution: Application to the Spanish Short-Term Electric Energy Consumption Forecasting. Appl. Sci. 2020, 10, 5487.

- Hao, Y.; Gao, Q. Predicting the Trend of Stock Market Index Using the Hybrid Neural Network Based on Multiple Time Scale Feature Learning. Appl. Sci. 2020, 10, 3961.

- Montgomery, D.C. Statistical Quality Control, 5th ed.; Wiley: New York, NY, USA, 2004.

- Ryu, E.S.; Han, S.W. The Slice Group Based SVC Rate Adaptation Using Channel Prediction Model. IEEE COMSOC MMTC E Lett. 2011, 6, 39–41.

- Ryu, E.S.; Han, S.W. Two-Stage EWMA-Based H.264 SVC Bandwidth Adaptation. Electron. Lett. 2012, 48, 127–1272.

- Thomassey, S.; Fiordaliso, A. A Hybrid Sales Forecasting System Based on Clustering and Decision Trees. Decis. Support Syst. 2006, 42, 408–421.

- Zou, C.; Zhou, C.; Wang, Z.; Tsung, F. A Self-starting Control Chart for Linear Profiles. J. Qual. Technol. 2007, 39, 364–375.

- Hawkins, D.M. Self-starting CUSUM charts for Location and Scale. J. R. Stat. Soc. Ser. D 1987, 36, 299–316.

- Menzefricke, U. Control Charts for the Variance and Coefficient of Variation Based on Their Predictive Distribution. Commun. Stat. Theory Methods 2010, 39, 2930–2941.

- Linden, A.; Adams, J.L.; Roberts, N. Evaluating Disease Management Program Effectiveness: An Introduction to Time-Series Analysis. Dis. Manag. 2003, 6, 243–255.

- Hyndman, R.J.; Kostenko, A.V. Minimum Sample Size Requirements for Seasonal Forecasting Models. Foresight 2007, 6, 12–15.

- Kang, J.H.; Yu, J.; Kim, S.B. Adaptive Nonparametric Control Chart for Time-varying and Multimodal Processes. J. Process Control. 2016, 37, 34–45.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).