Discrimination of Earthquake-Induced Building Destruction from Space Using a Pretrained CNN Model

Abstract

1. Introduction

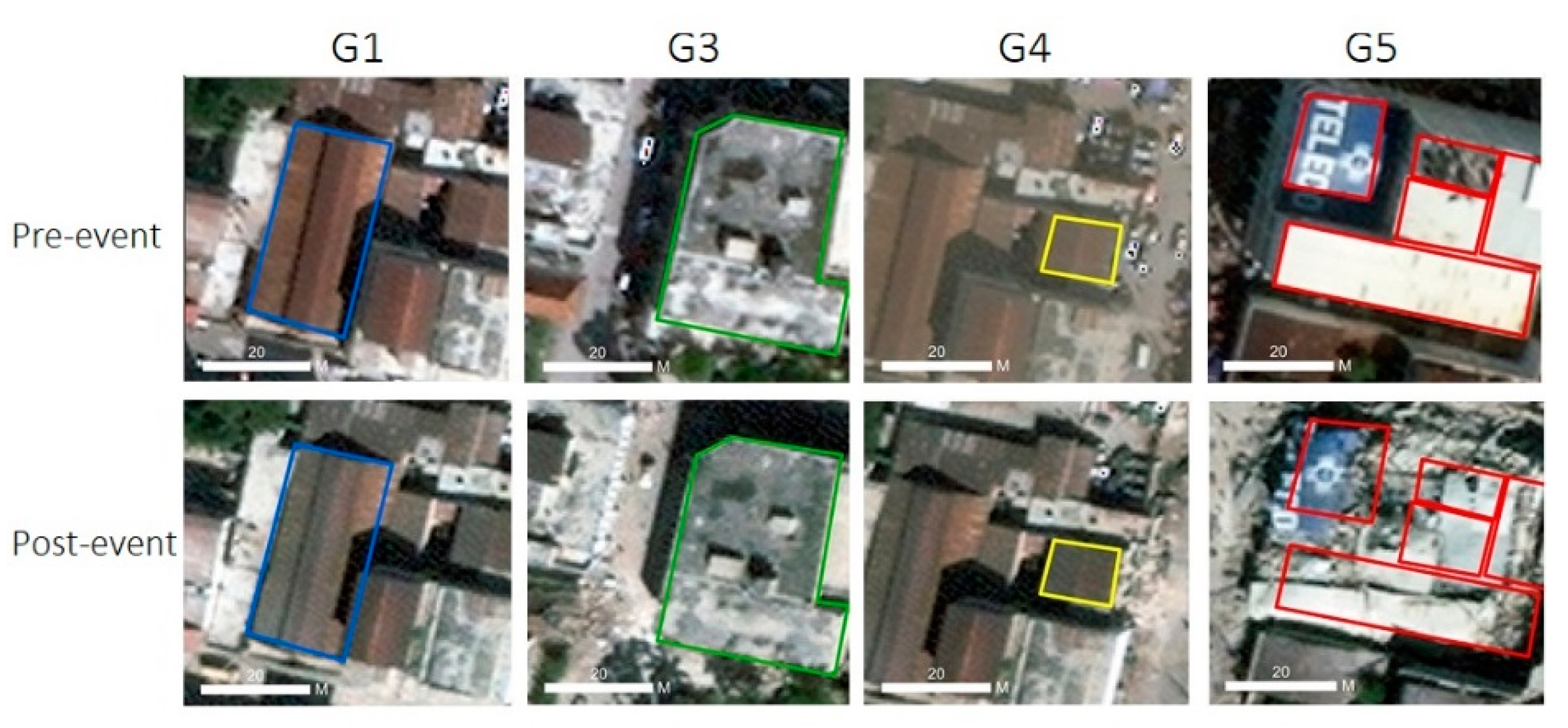

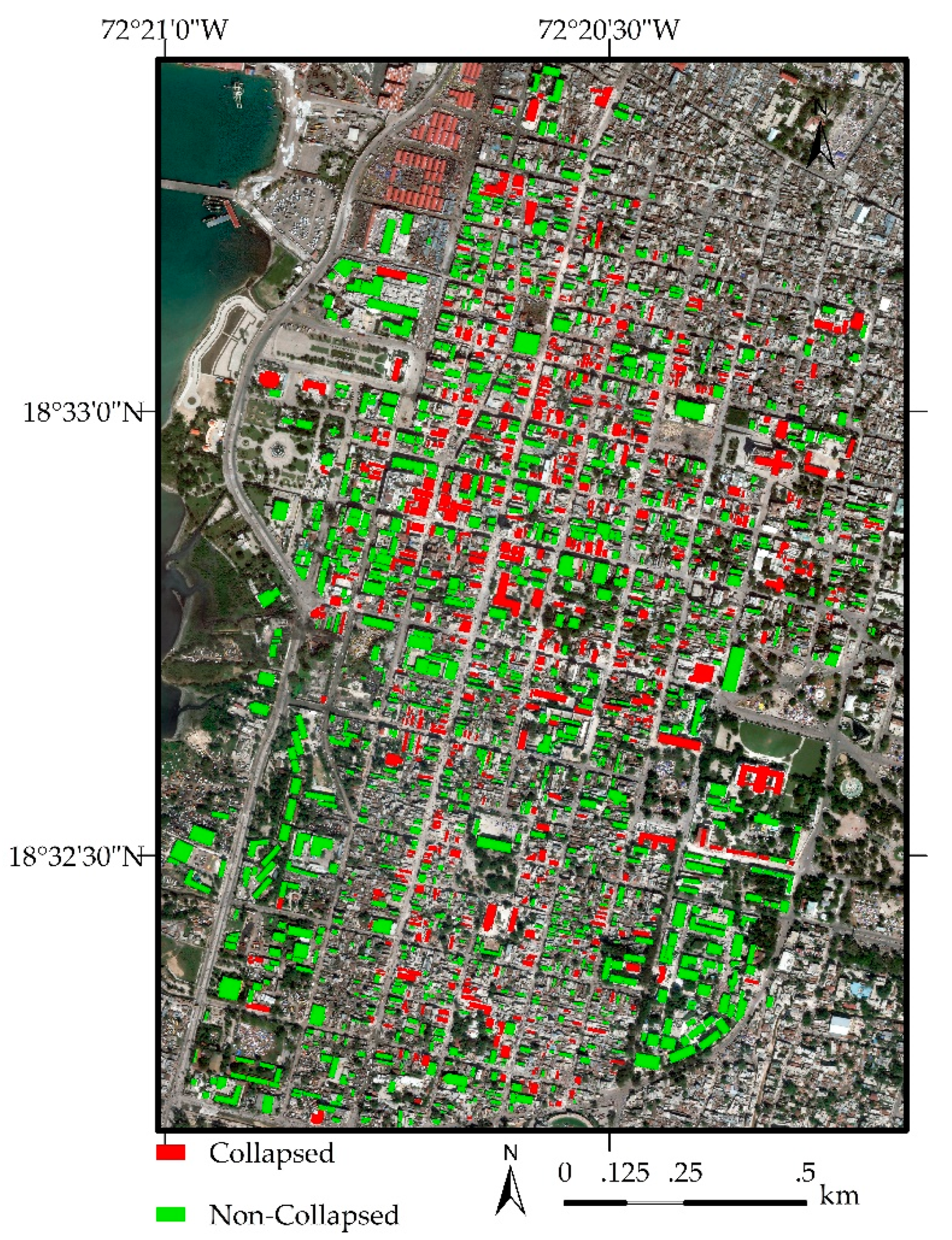

2. Study Area and Data Sources

3. Methodology

3.1. CNNs

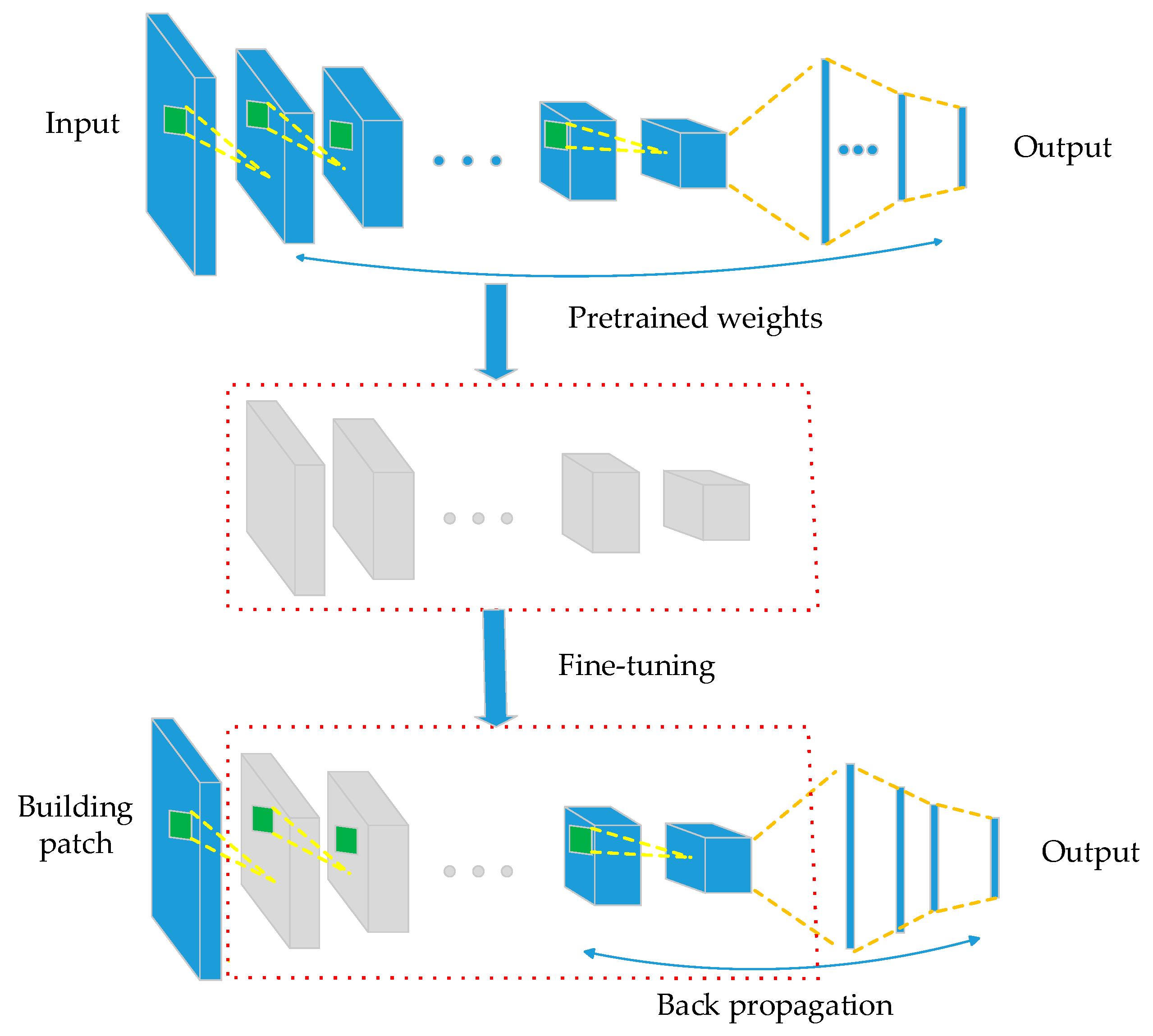

3.2. Fine-Tuning with the Pretrained CNN Model

3.3. Dataset Augmentation

3.4. Evaluation Metrics
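The result tables report overall accuracy (OA), the kappa coefficient, and the per-class producer accuracy (PA) and user accuracy (UA). For reference, the standard definitions are sketched below. They assume a confusion matrix $n_{ij}$ whose rows $i$ are predicted classes and whose columns $j$ are ground-truth classes, as in the collapsed/non-collapsed confusion matrix reported with the results. $k$ is the number of classes, $N$ the total number of samples, $n_{i+}=\sum_j n_{ij}$ a row total and $n_{+j}=\sum_i n_{ij}$ a column total. This notation is ours, not necessarily the authors'.

$$
\mathrm{OA} = p_o = \frac{1}{N}\sum_{i=1}^{k} n_{ii}, \qquad
\mathrm{PA}_j = \frac{n_{jj}}{n_{+j}}, \qquad
\mathrm{UA}_i = \frac{n_{ii}}{n_{i+}},
$$

$$
\kappa = \frac{p_o - p_e}{1 - p_e}, \qquad
p_e = \frac{1}{N^{2}}\sum_{i=1}^{k} n_{i+}\, n_{+i}.
$$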

4. Experimental Results and Discussion

4.1. Pretrained VGGNet Model for Collapsed Building Detection

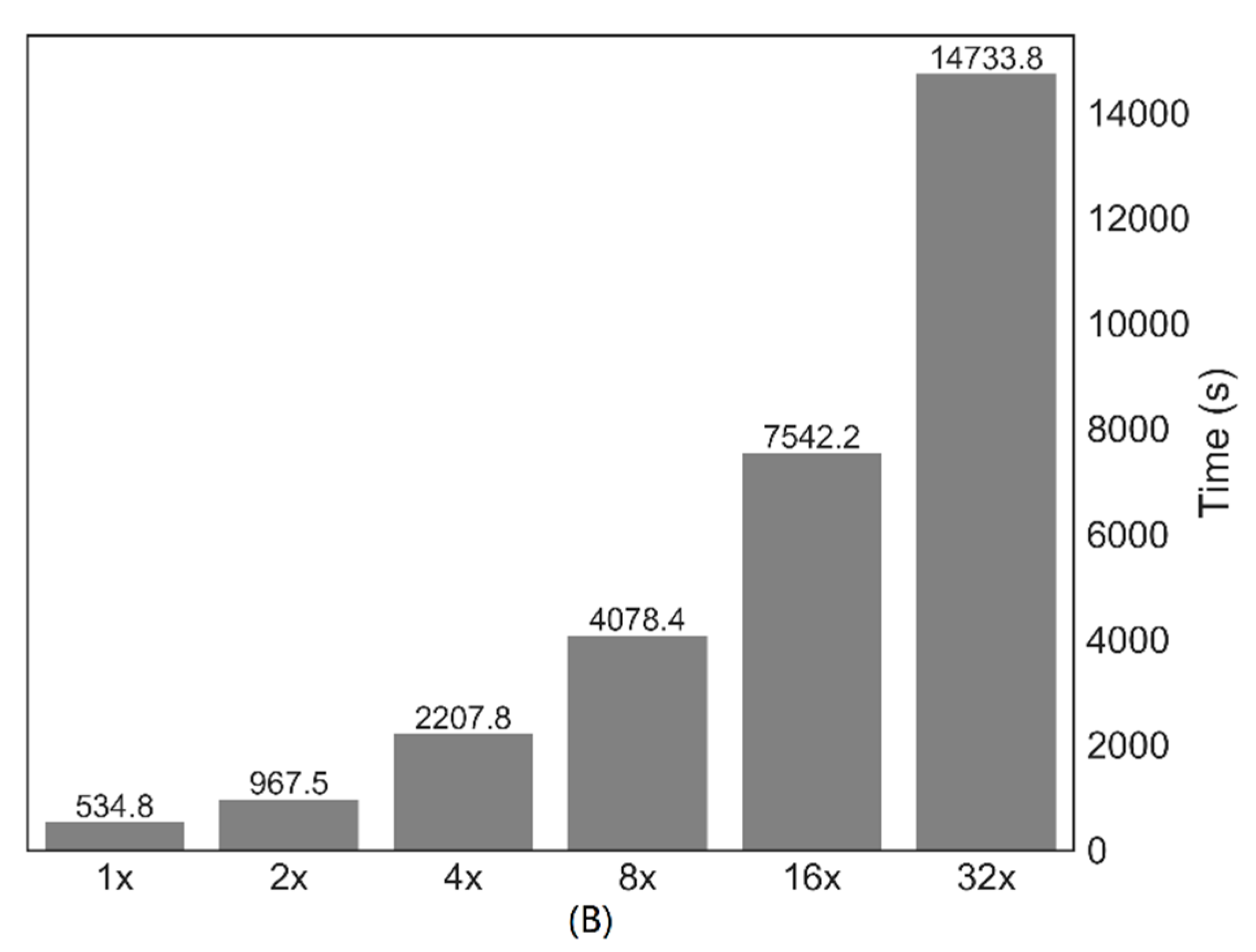

4.2. Impact of the Dataset Augmentation

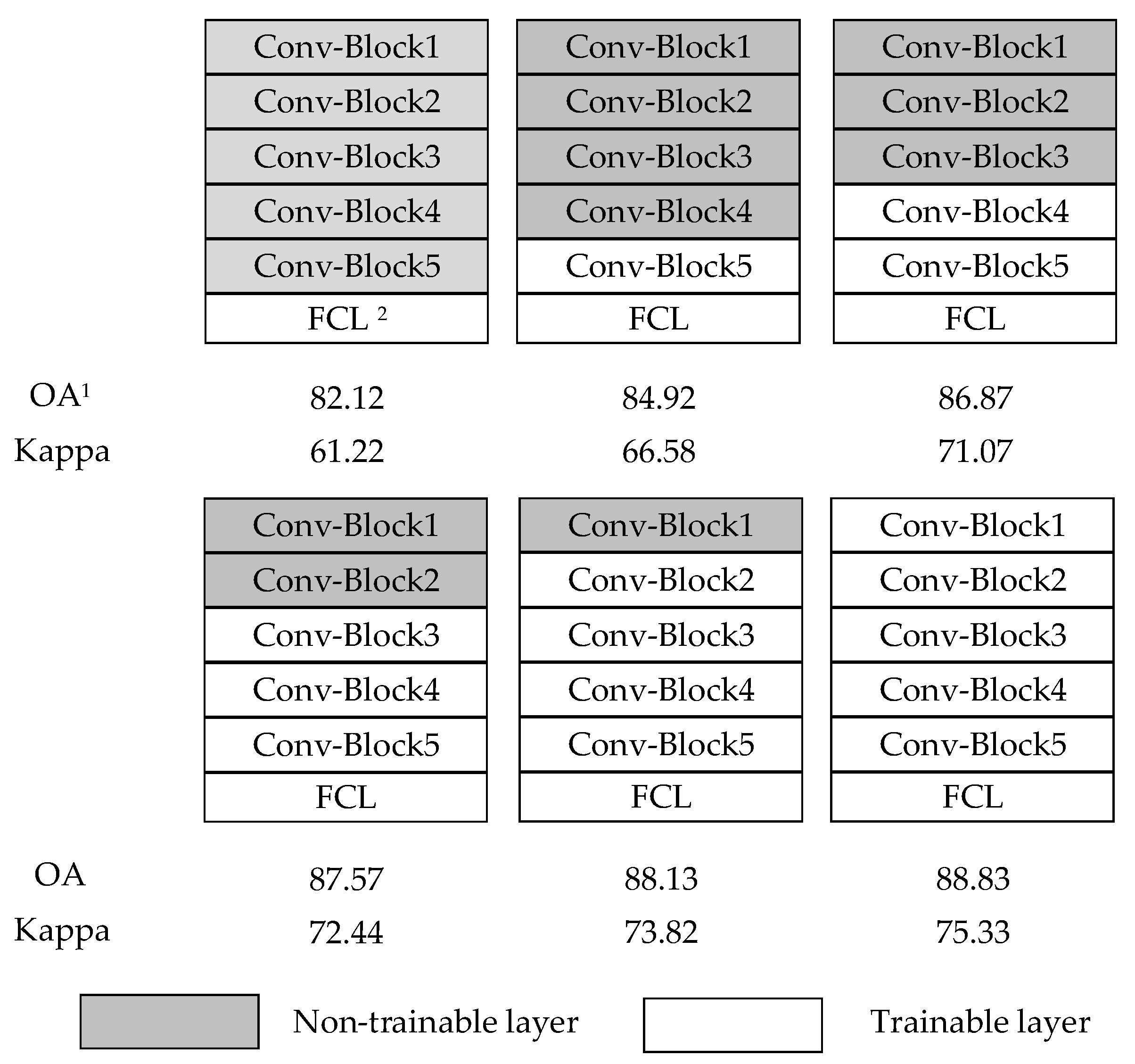

4.3. Effect of Fine-Tuning Different Layers for Detecting Collapsed Buildings

4.4. Performance of Pretrained VGGNet for the Detection of Collapsed Buildings

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNNs | Convolutional Neural Networks |
| DHM | Digital Height Model |
| EMS | European Macroseismic Scale |
| FCL | Fully Connected Layer |
| OA | Overall Accuracy |
| PA | Producer Accuracy |
| UA | User Accuracy |


| Method | OA (%) | Kappa (%) | Collapsed PA (%) | Collapsed UA (%) | Non-Collapsed PA (%) | Non-Collapsed UA (%) |
|---|---|---|---|---|---|---|
| Pretrained VGGNet | 85.19 | 67.14 | 81.70 | 75.29 | 86.90 | 90.67 |
| VGGNet | 83.38 | 60.69 | 59.21 | 90.96 | 96.75 | 81.09 |

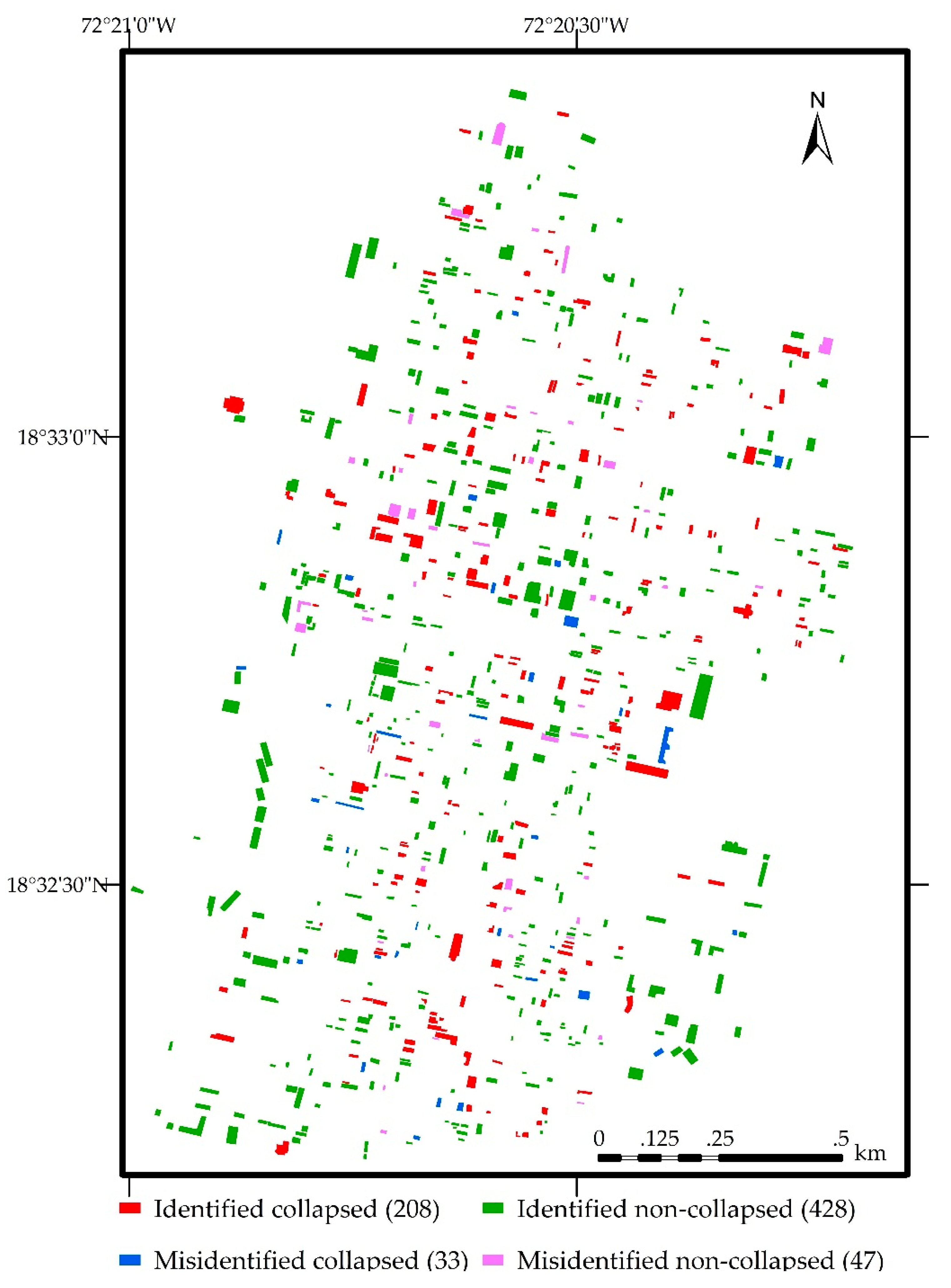

| Building damage grade (rows: predicted; columns: ground truth) | Collapsed | Non-collapsed | UA (%) |
|---|---|---|---|
| Collapsed | 208 | 47 | 81.57 |
| Non-collapsed | 33 | 428 | 92.84 |
| PA (%) | 86.31 | 90.11 | |
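As a check on how the PA and UA values in the confusion matrix follow from its counts, the following minimal sketch (plain Python, no external libraries) computes OA, Cohen's kappa, PA and UA from a square confusion matrix. It assumes rows are predicted classes and columns are ground-truth classes, as in the table. The function name `accuracy_metrics` is hypothetical and is not taken from the paper.

```python
from typing import Dict, List


def accuracy_metrics(cm: List[List[int]]) -> Dict[str, object]:
    """Compute OA, Cohen's kappa, PA and UA from a square confusion matrix.

    Assumption: rows are predicted classes and columns are ground-truth
    classes, following the layout of the confusion matrix above.
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    row_totals = [sum(cm[i]) for i in range(k)]                       # predicted totals n_{i+}
    col_totals = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # ground-truth totals n_{+j}
    diag = [cm[i][i] for i in range(k)]                               # correctly classified counts

    oa = sum(diag) / n
    # Chance agreement expected from the row and column marginal totals.
    pe = sum(row_totals[i] * col_totals[i] for i in range(k)) / (n * n)
    kappa = (oa - pe) / (1 - pe)
    pa = [diag[j] / col_totals[j] for j in range(k)]  # producer accuracy per ground-truth class
    ua = [diag[i] / row_totals[i] for i in range(k)]  # user accuracy per predicted class
    return {"OA": oa, "Kappa": kappa, "PA": pa, "UA": ua}


# Counts from the confusion matrix above: class 0 = collapsed, class 1 = non-collapsed.
cm = [[208, 47],
      [33, 428]]
m = accuracy_metrics(cm)
print({key: ([round(100 * v, 2) for v in val] if isinstance(val, list) else round(100 * val, 2))
       for key, val in m.items()})
# prints {'OA': 88.83, 'Kappa': 75.33, 'PA': [86.31, 90.11], 'UA': [81.57, 92.84]}
```

The recomputed PA and UA percentages reproduce the values listed in the table. Keeping the row/column convention explicit matters because some libraries place ground truth on the rows instead, which swaps PA and UA.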

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ji, M.; Liu, L.; Zhang, R.; Buchroithner, M.F. Discrimination of Earthquake-Induced Building Destruction from Space Using a Pretrained CNN Model. Appl. Sci. 2020, 10, 602. https://doi.org/10.3390/app10020602
