Auditory Coding of Reaching Space

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

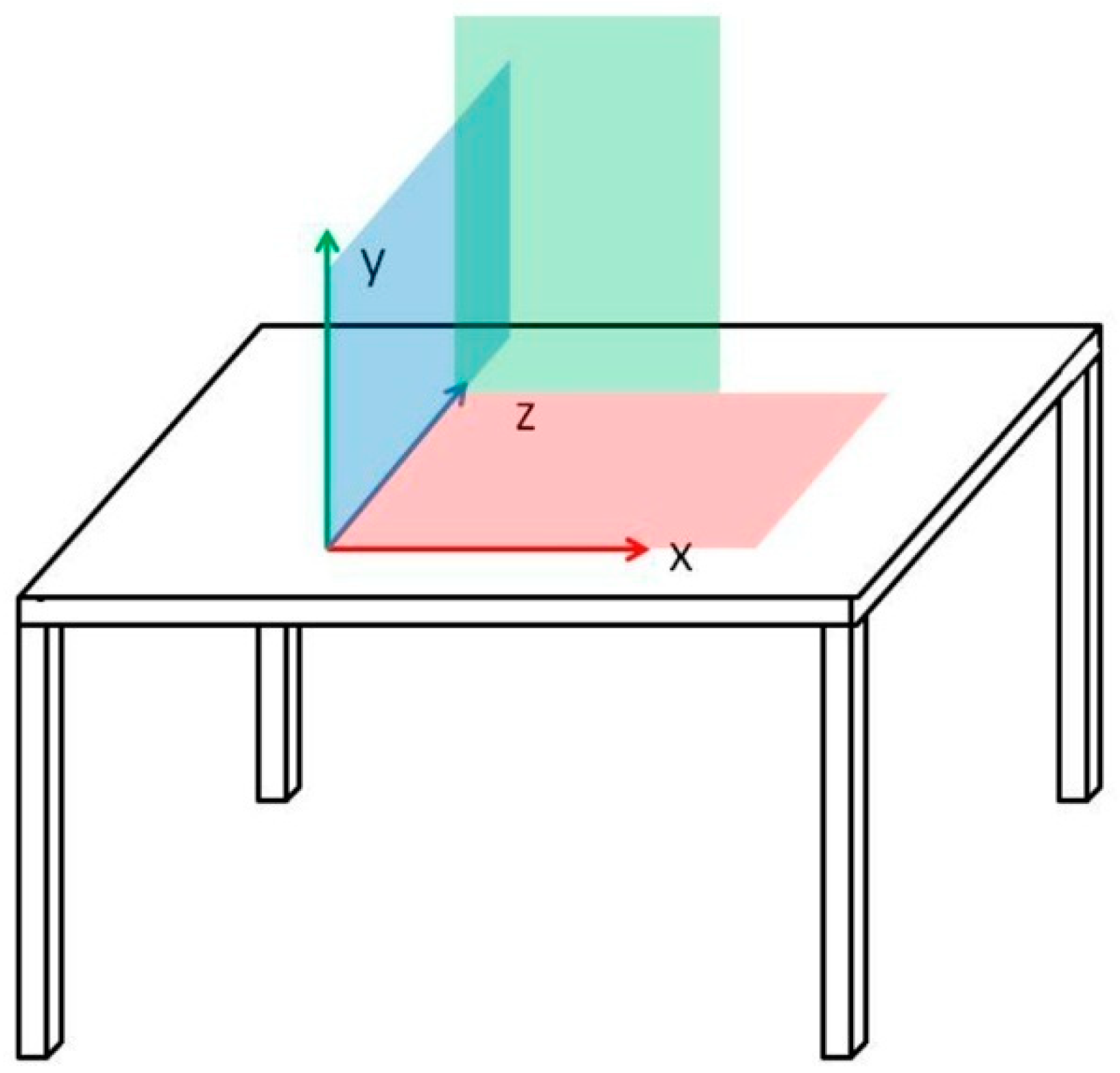

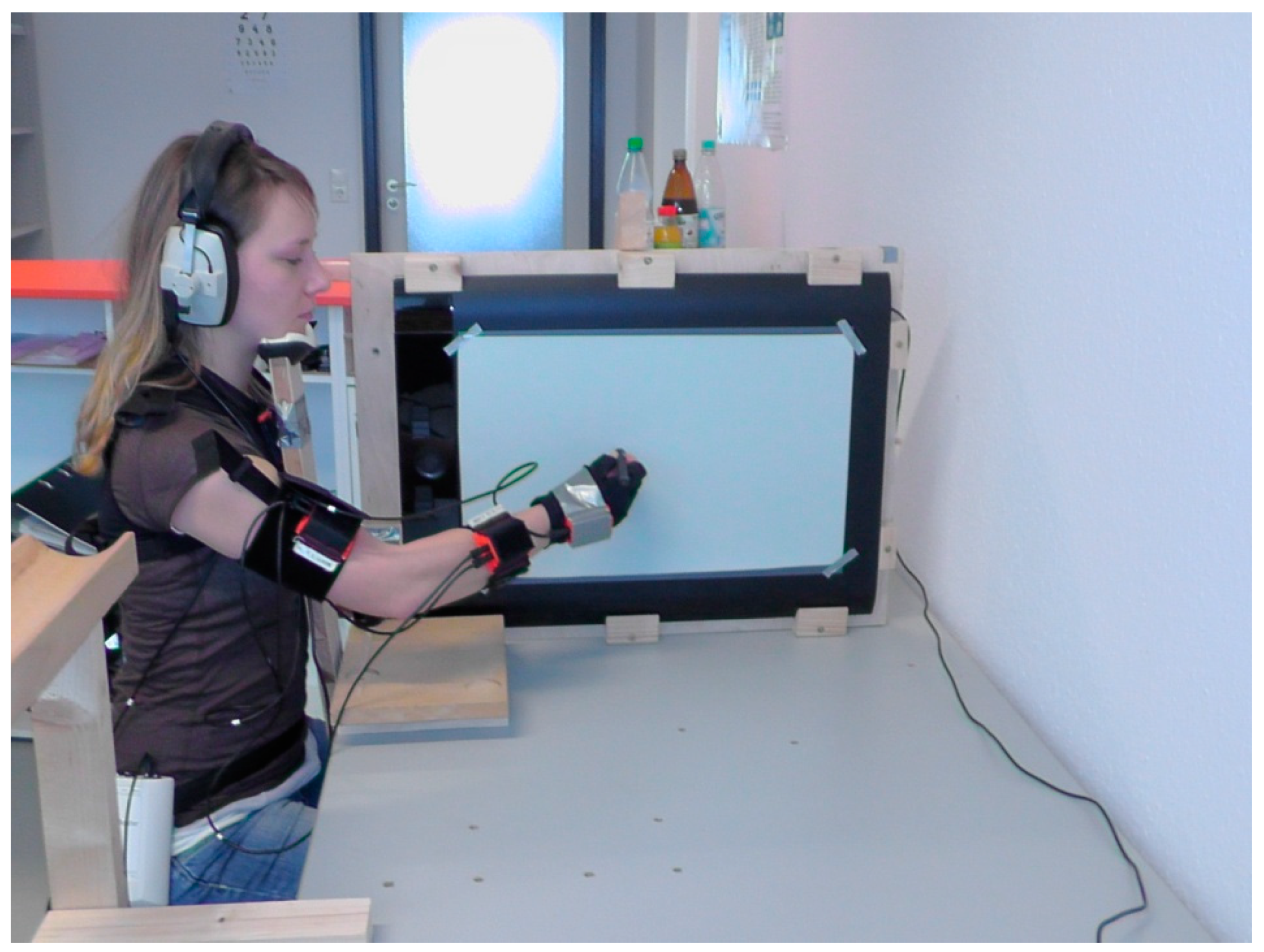

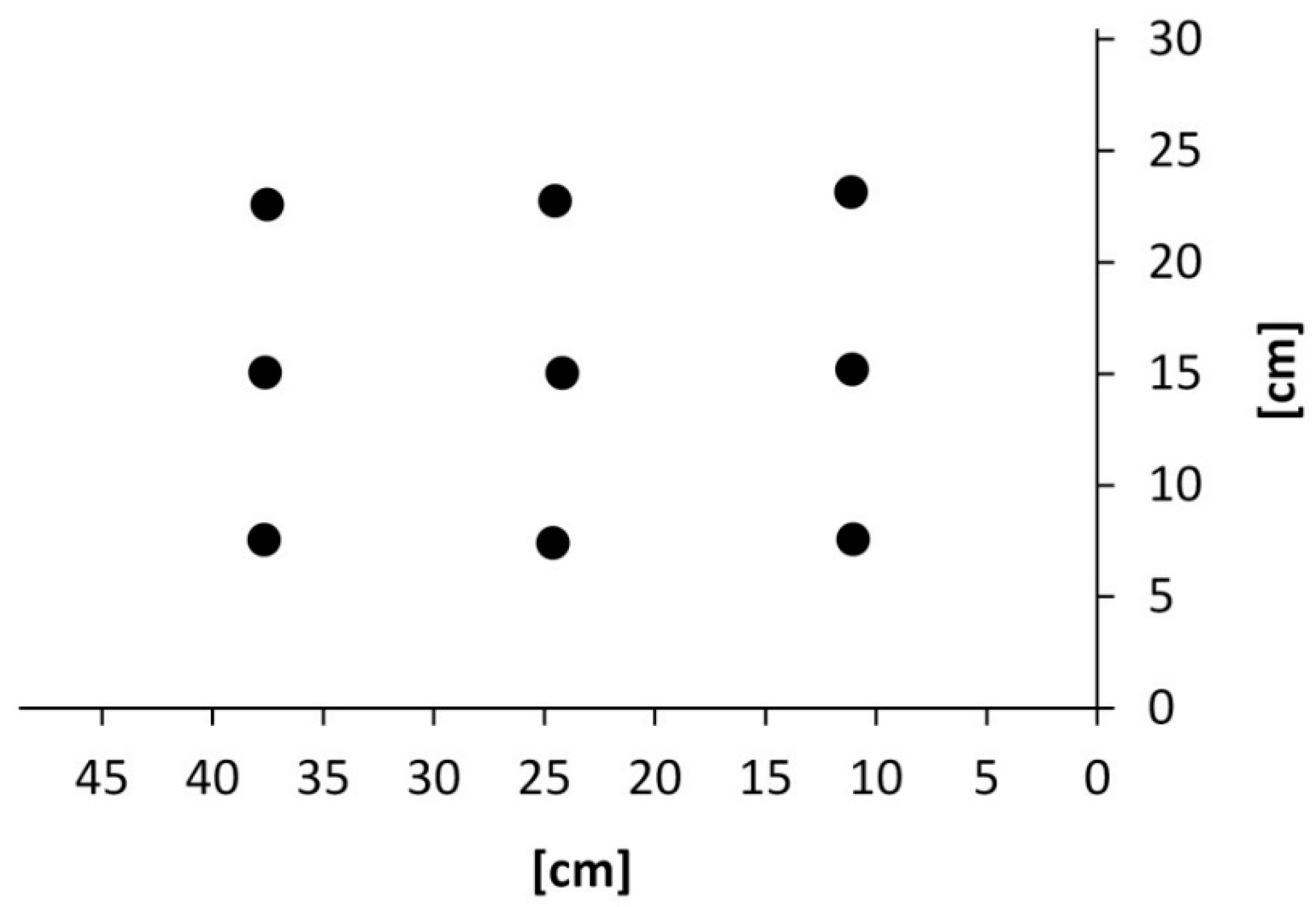

2.2. Experimental Setup

2.3. Stimulus Material

2.4. Experimental Conditions

- Continuous condition:
  - Instruction: Sonification of the model’s reaching movement towards the target positions, followed by sonification of the model’s final position for 1 s.
  - Feedback: Real-time sonification of the participant’s reaching movement, followed by sonification of the participant’s final position for 1 s.
- Discrete short condition:
  - Instruction: Sonification of the model’s final position for 1 s.
  - Feedback: Real-time sonification of the participant’s final position for 1 s.
- Discrete long condition:
  - Instruction: Sonification of the model’s final position for the duration of the model’s reaching movement plus 1 s (2.0–2.65 s in total).
  - Feedback: Real-time sonification of the participant’s final position for 1 s.
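The three conditions differ mainly in how long each sonification lasts. The following minimal Python sketch makes those timing rules explicit. It assumes that movement durations in seconds are known inputs. The condition labels, the `stimulus_durations` function and the `StimulusDurations` type are hypothetical illustrations, not part of the study's software. Because of how the conditions are defined, the continuous and discrete long instructions have the same length for a given model movement, while the discrete short instruction is fixed at 1 s.

```python
from dataclasses import dataclass

# Final-position sonification lasts 1 s in every condition.
FINAL_POSITION_S = 1.0


@dataclass(frozen=True)
class StimulusDurations:
    instruction_s: float  # length of the model's (instruction) sonification
    feedback_s: float     # length of the participant's (feedback) sonification


def stimulus_durations(condition: str, model_movement_s: float,
                       participant_movement_s: float) -> StimulusDurations:
    """Return instruction and feedback durations (s) for a hypothetical condition label."""
    if condition == "continuous":
        # Movement sonification followed by 1 s of final-position sound,
        # for both the model (instruction) and the participant (feedback).
        return StimulusDurations(model_movement_s + FINAL_POSITION_S,
                                 participant_movement_s + FINAL_POSITION_S)
    if condition == "discrete_short":
        # Only the final position is sonified, for 1 s each.
        return StimulusDurations(FINAL_POSITION_S, FINAL_POSITION_S)
    if condition == "discrete_long":
        # Final-position sound held for the model's movement time plus 1 s;
        # feedback is still the 1 s final-position sound.
        return StimulusDurations(model_movement_s + FINAL_POSITION_S,
                                 FINAL_POSITION_S)
    raise ValueError(f"unknown condition: {condition!r}")


if __name__ == "__main__":
    for cond in ("continuous", "discrete_short", "discrete_long"):
        print(cond, stimulus_durations(cond, model_movement_s=1.3,
                                       participant_movement_s=1.5))
```

Working backwards from the reported 2.0–2.65 s for the discrete long instruction, the model's reaching movements would have lasted roughly 1.0–1.65 s.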

2.5. Procedure

2.6. Data Acquisition and Analysis

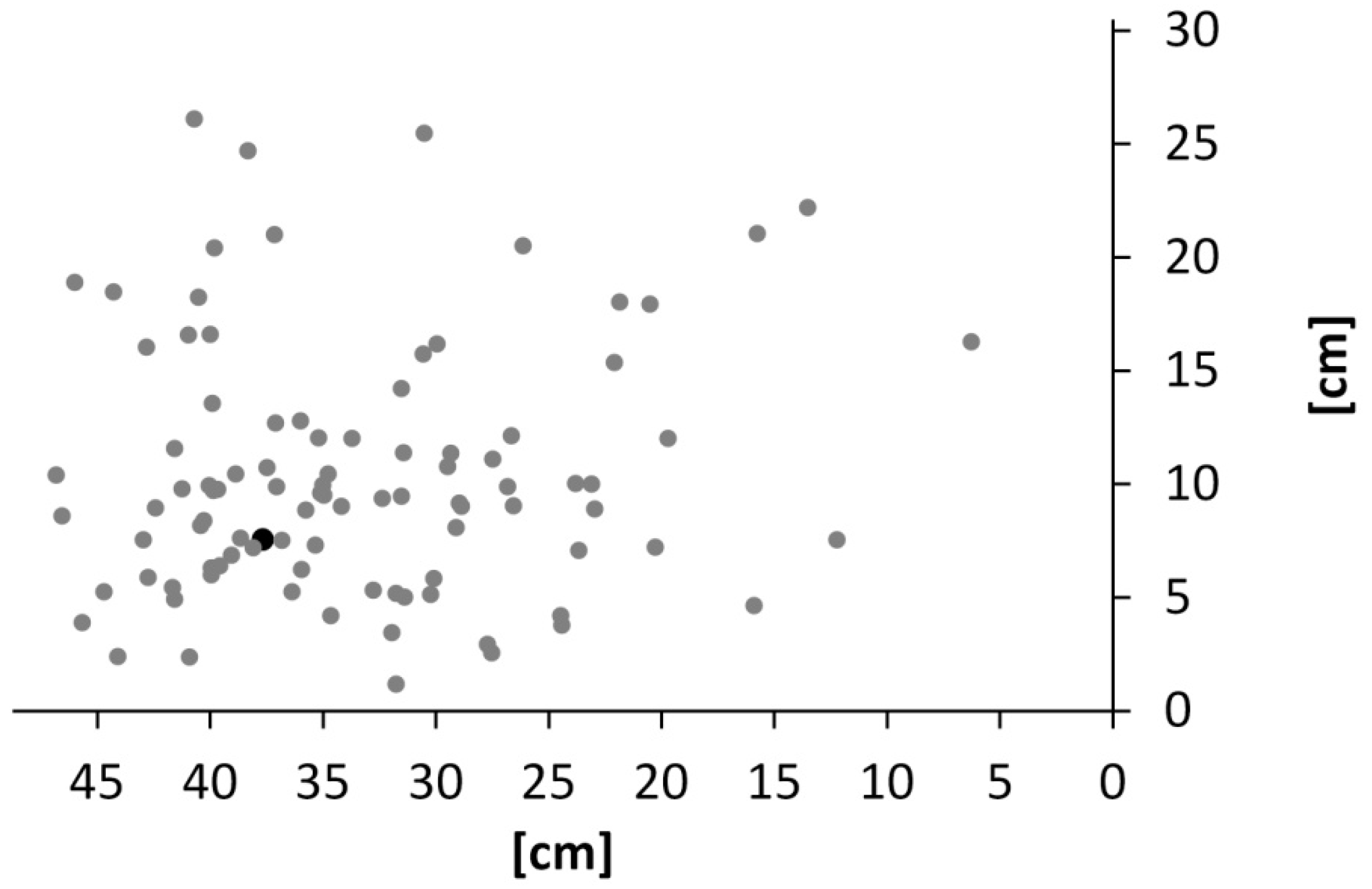

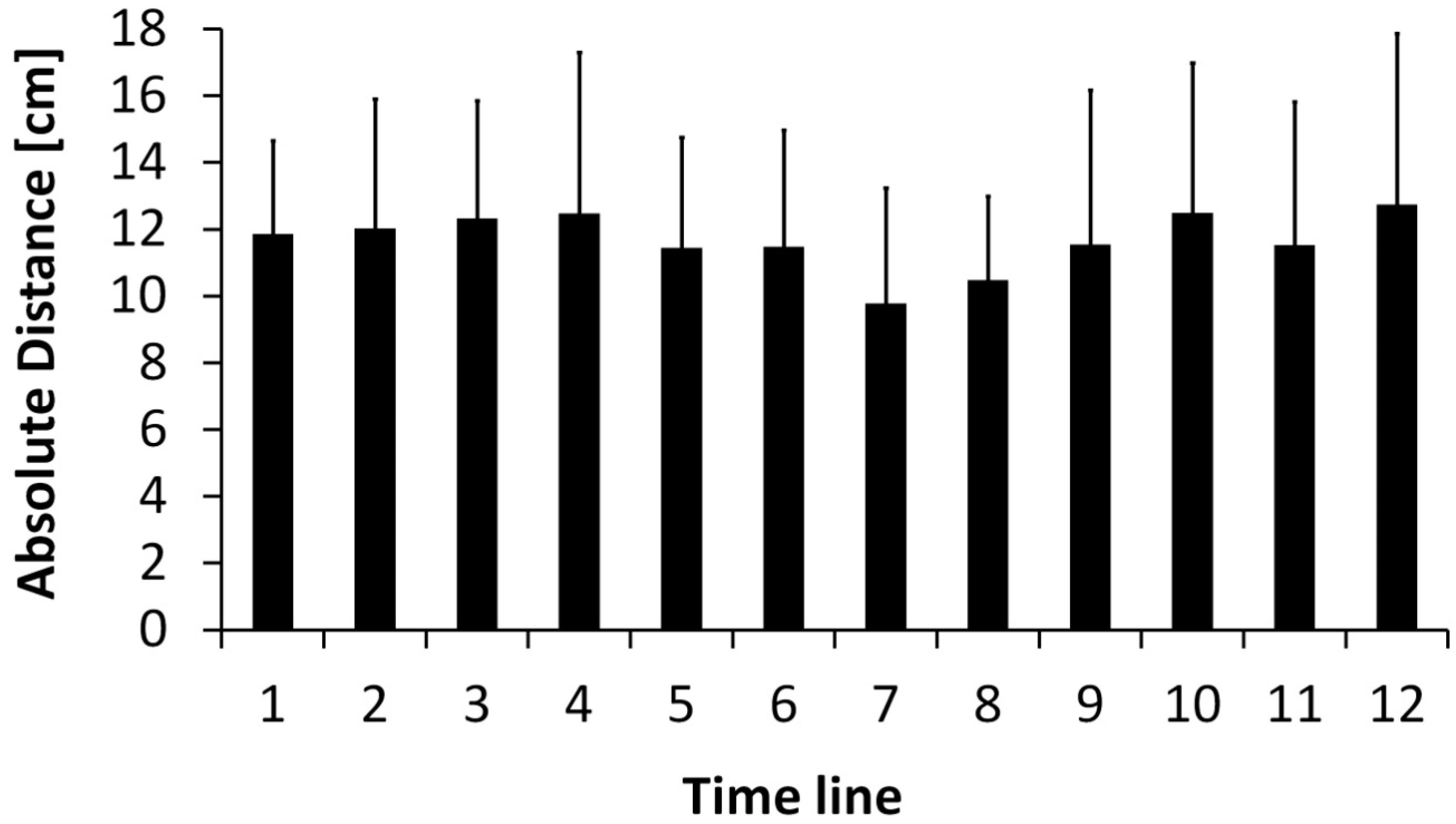

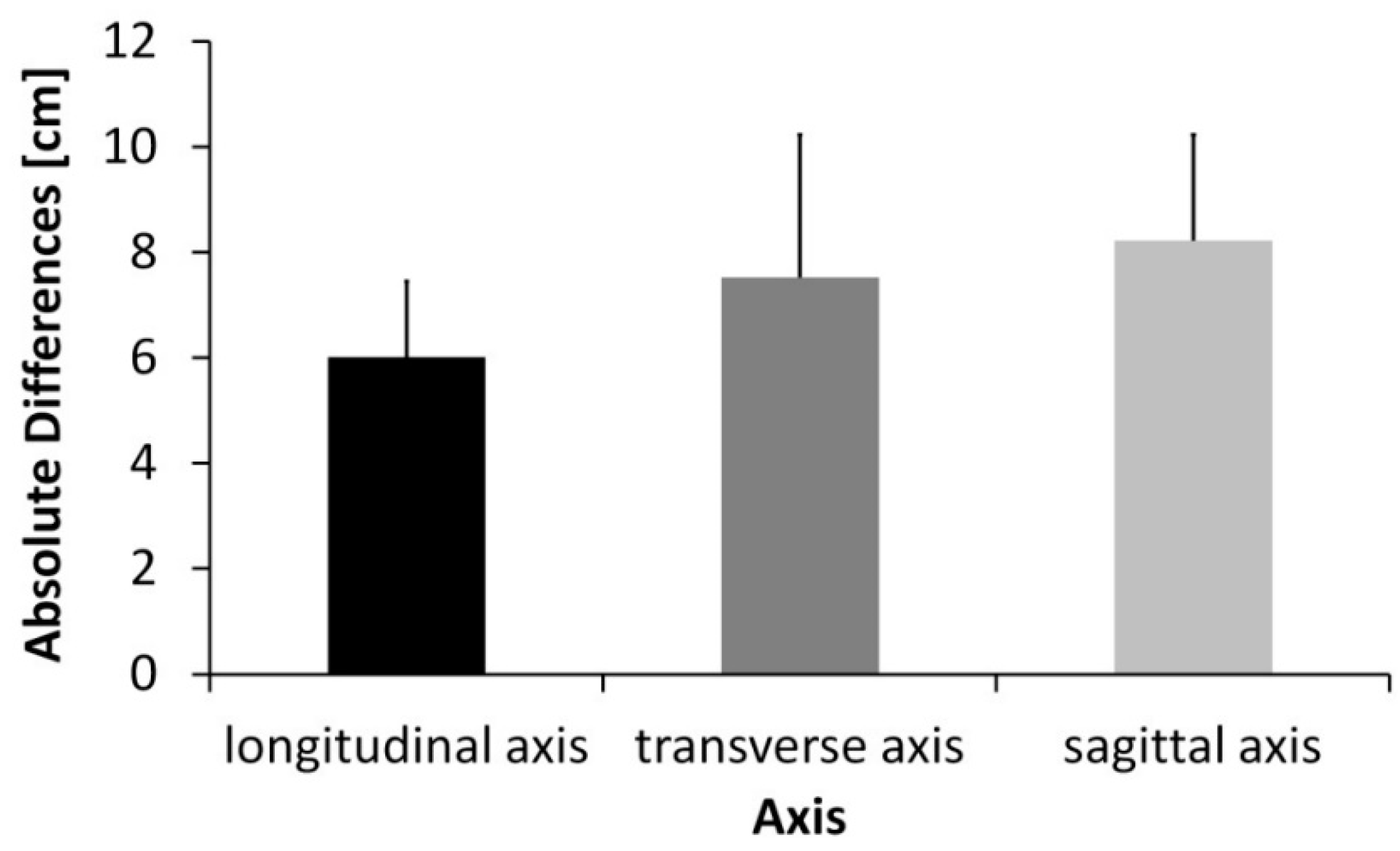

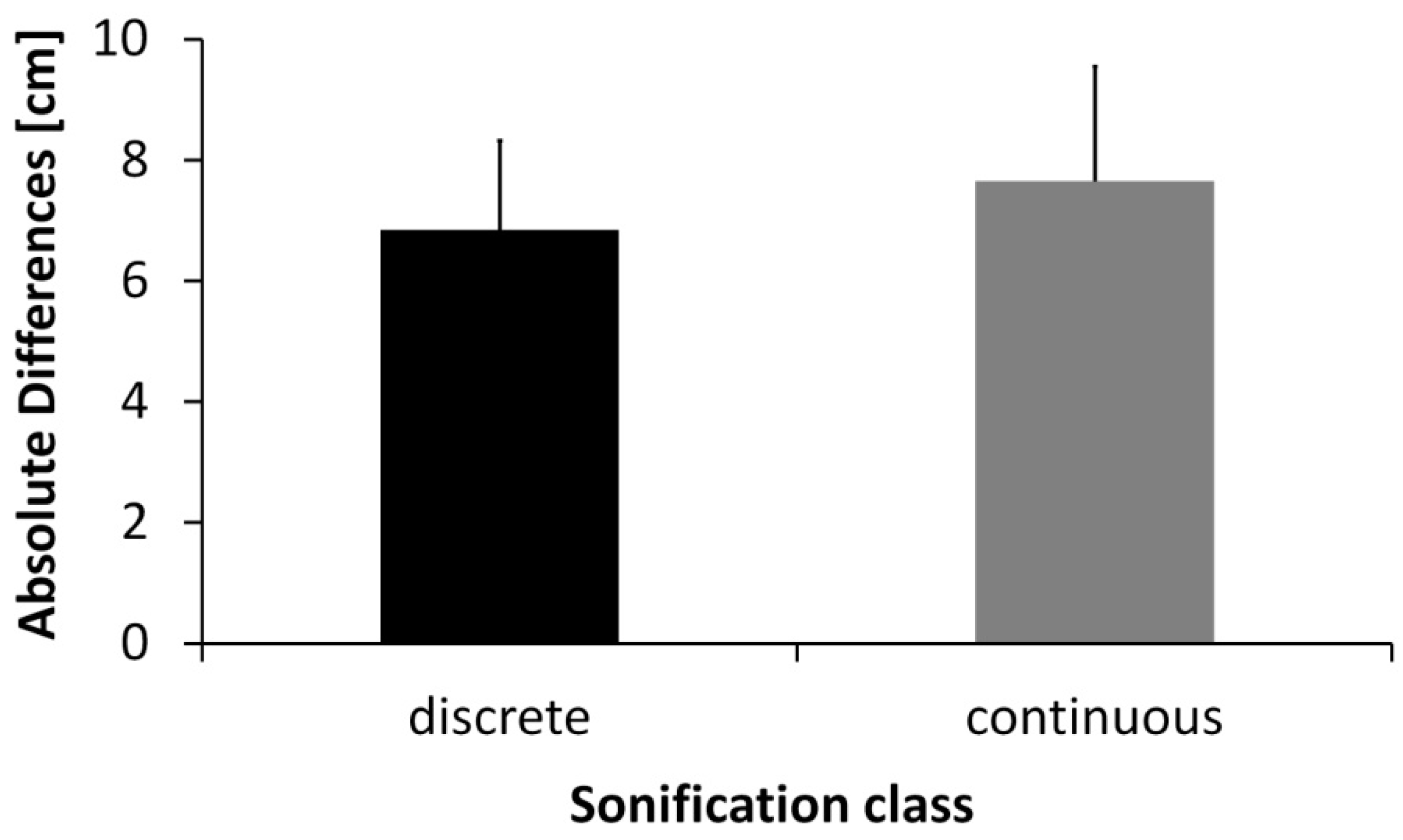

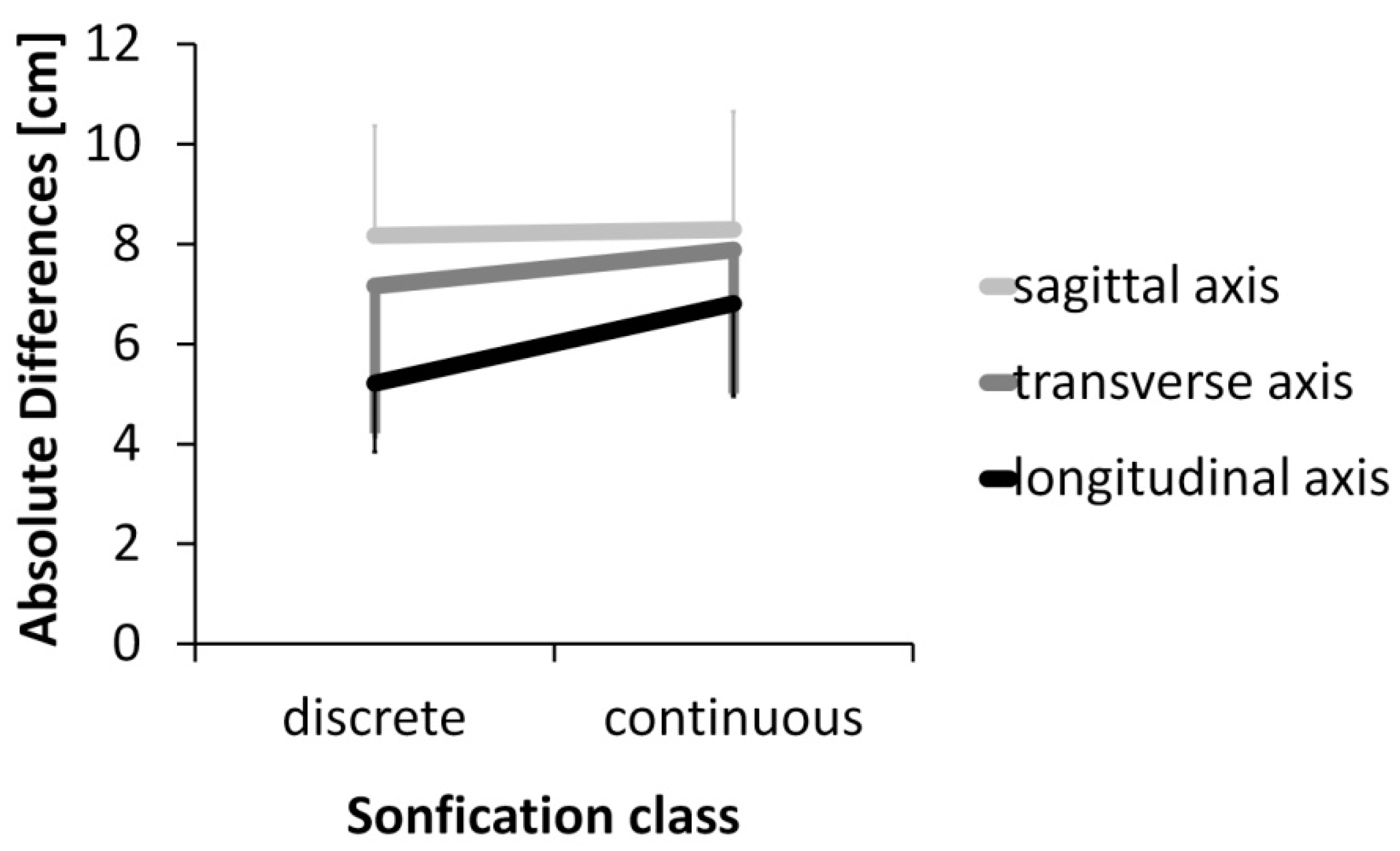

3. Results

4. Discussion

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Fehse, U.; Schmitz, G.; Hartwig, D.; Ghai, S.; Brock, H.; Effenberg, A.O. Auditory Coding of Reaching Space. Appl. Sci. 2020, 10, 429. https://doi.org/10.3390/app10020429