Featured Application

Assembly tasks with industrial robot manipulators.

Abstract

Industrial robot manipulators are playing a significant role in modern manufacturing industries. Though peg-in-hole assembly is a common industrial task that has been extensively researched, safely solving complex, high-precision assembly in an unstructured environment remains an open problem. Reinforcement-learning (RL) methods have proven to be successful in autonomously solving manipulation tasks. However, RL is still not widely adopted in real robotic systems because working with real hardware entails additional challenges, especially when using position-controlled manipulators. The main contribution of this work is a learning-based method to solve peg-in-hole tasks with hole-position uncertainty. We propose the use of an off-policy, model-free reinforcement-learning method, and we bootstrap training speed by using several transfer-learning techniques (sim2real) and domain randomization. Our proposed learning framework for position-controlled robots was extensively evaluated in contact-rich insertion tasks in a variety of environments.

1. Introduction

Autonomous robotic assembly is an essential component of industrial applications. Industrial robot manipulators are playing a significant role in modern manufacturing industries with the goal of improving production efficiency and reducing costs. Though peg-in-hole assembly is a common industrial task that has been extensively researched, safely solving complex, high-precision assembly in an unstructured environment remains an open problem [1].

Most common industrial robots are joint-position-controlled. For this type of robot, compliance control is necessary to safely attempt contact-rich tasks; otherwise, even tiny position errors can cause large, unsafe assembly forces. Compliant robotic assembly has been studied through two families of methods: passive and active. In passive methods, a mechanical device called a remote center compliance (RCC) [2] is placed between the robot’s wrist and gripper. The passive compliance provided by the RCC lets the gripper move perpendicularly to the peg’s axis and rotate freely so as to reduce resistance. However, passive methods do not work well for high-precision assembly [3]. Active compliant methods, on the other hand, correct assembly errors through sensor feedback. In general, these methods use force sensors to detect external forces and moments, and design control strategies on the basis of dynamic models of the task to minimize contact force [4]. Some active methods mimic human compliance during assembly [5]. Nevertheless, most of these assembly methods are impractical in real applications: model parameters need to be identified, and controller gains need to be tuned. In both cases, the process is manually engineered for specific tasks, which requires considerable time, effort, and expertise. These approaches are also not robust to uncertainties and do not generalize well to variations in the environment.

To reduce human involvement and increase robustness to uncertainties, recent research has focused on learning assembly skills either from human demonstrations [6] or directly from interactions with the environment [7]. The present work focuses on the latter.

Reinforcement-learning (RL) methods allow agents to learn complex behaviors through interaction with the surrounding environment by maximizing the rewards received from it; ideally, the agents’ behavior generalizes to unseen scenarios or tasks [7]. RL can therefore be applied to robotic agents to learn high-precision assembly skills rather than only transferring human skills to the robot program [8]. Recent studies have shown the importance of RL for robotic manipulation tasks [9,10,11], but none of these methods can be applied directly to high-precision industrial applications due to their lack of fine motion control.

In [12], an RL technique was used to learn a simple peg-in-hole insertion operation. Similarly, Inoue et al. [13] proposed a robot skill-acquisition approach that trains a recurrent neural network to learn a peg-in-hole assembly policy. However, these approaches discretize the action space into a finite number of actions, which has many limitations in continuous-control tasks [14] such as robot control, whose action space is continuous and high-dimensional.

Xu et al. [15] proposed learning dual peg insertion by using the deep deterministic policy gradient [16] (DDPG) algorithm with a fuzzy reward system. Similarly, Fan et al. [17] used DDPG combined with guided policy search (GPS) [18] to learn high-precision assembly tasks. Luo et al. [19] also used GPS to learn peg-in-hole tasks on a deformable surface. Nevertheless, these methods learn policies that control the motion trajectory only, and they require the manual tuning of force control gains; therefore, they do not scale well to variations of the environment.

Ren et al. [20] proposed using DDPG to simultaneously control position and force-control gains, but they assumed geometric knowledge of the insertion task, which made the learned policies difficult to apply to other insertion tasks. To solve high-precision assembly tasks, our approach focuses on learning policies that simultaneously control the robot’s motion trajectory and actively tune a compliant controller to unknown geometric constraints.

Buchli et al. [21] accomplished variable-stiffness skill learning on robot manipulators by using an RL algorithm called policy improvement with path integrals (PI2). However, the method was formulated for torque-controlled robots. Another approach is to use a flexible robot so as to focus only on the motion trajectory, as in [22]; however, rigid position-controlled robots are still more widely used. Therefore, we focus on industrial robot manipulators, which are mainly position-controlled.

Abu-Dakka et al. [23] proposed a learning method based on iterative learning control (ILC). Their method is focused on transferring manipulation skills from demonstrations that provide a reference trajectory and force profile. In this work, we present a method that can learn manipulation skills without prior knowledge of a reference trajectory or force profile. However, our method supports the use of such prior knowledge to speed up the learning phase.

The main contribution of this work is a robust learning-based framework for robotic peg-in-hole assembly given an uncertain goal position. Our method enables a position-controlled industrial robot manipulator to safely learn contact-rich manipulation tasks by controlling the nominal trajectory while, at the same time, learning variable force-control gains for each phase of the task. This work builds on our previous work [24]. More specifically, the contributions of this work are:

- A robust policy representation based on temporal convolutional networks (TCNs).

- Faster learning of control policies via domain transfer-learning techniques (sim2real), which greatly improve training efficiency on real robots.

- Improved generalization capabilities of the learned control policies via domain randomization during the training phase in simulations. Although the effects of domain randomization have been researched [25,26], to the best of our knowledge, we are the first to study the effects of sim2real with domain randomization on contact-rich, real-robot applications with position-controlled robots.

The effectiveness of the proposed method is shown through extensive evaluations with a real robotic system on a variety of contact-rich peg-in-hole insertion tasks.

Problem Statement

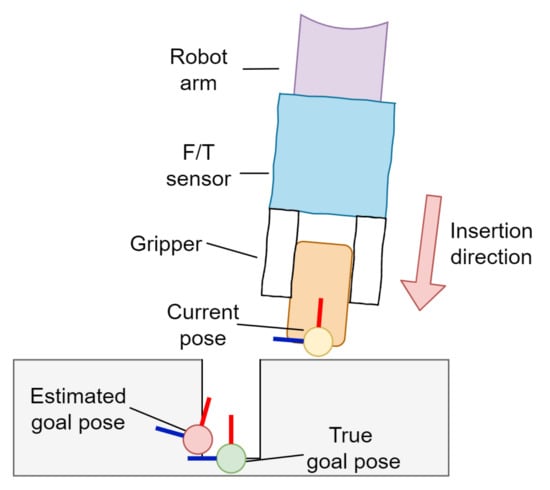

In the present study, we considered a peg-in-hole assembly task that required the mating of two components. One component was grasped and manipulated by the robot manipulator, while the second was held in a fixed position, either by fixtures on an environment surface or by a second robot manipulator. Figure 1 provides a 2D representation of the considered insertion tasks and the components assumed to be available to solve the task. The proposed method was designed for a position-controlled robot manipulator with a force/torque sensor at its wrist. Typically, these insertion tasks can be broadly divided into two main phases [27]: search and insertion. During the search phase, the robot aligns the peg within the clearance region of the hole. Initially, the peg is located at a distance from the center of the hole in a random direction; this distance is referred to as the “positional error”. During the insertion phase, the robot adjusts the orientation of the peg with respect to the hole orientation and pushes the peg to the desired position. We focused on both phases of the assembly task under the following assumptions:

Figure 1.

Insertion task with uncertain goal position.

- The manipulated object was already firmly grasped. However, slight changes of object orientation within the gripper were possible during manipulation.

- An imperfect prediction of the target end-effector pose (as shown in Figure 1) or a reference trajectory was available, together with its degree of uncertainty.

- The manipulated object was inserted in a direction parallel to the gripper’s orientation.

We considered the second assumption fair given advances in vision-recognition techniques, wherein the 6D poses of objects can be estimated from single RGB images [28,29] or from RGB images with depth maps (RGB-D) [30,31]. In many cases, these predictions are accurate enough for robot manipulation. Moreover, the second assumption covers the specific case of using an assembly planner [32,33]: even if the initial positions of the objects are known, inevitable errors throughout the manipulation (e.g., pick-and-place, grasping, and regrasping) make the positions and orientations of the manipulated objects uncertain during the insertion phase. A reference trajectory could similarly be obtained from demonstrations [34,35,36] when a complex motion is required to achieve the insertion. The last assumption allowed for defining a desired insertion force that may vary for different insertion tasks without loss of generality.

2. Materials and Methods

2.1. System Overview

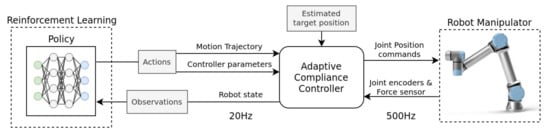

Our proposed system aims to solve assembly tasks with an uncertain goal pose. Figure 2 shows the overall system architecture, which has two control loops. The inner loop was an adaptive compliance controller; we chose a parallel position-force controller, which was shown to work well for this kind of contact-rich manipulation task [24]. The inner loop ran at a control frequency of 500 Hz, the maximum available on Universal Robots e-series robotic arms (Robot details at https://www.universal-robots.com/e-series/). Details of the parallel controller are provided in Section 2.2.3. The outer loop was an RL control policy running at 20 Hz that provided subgoal positions and the parameters of the compliance controller. The outer loop’s slower control frequency gave the policy time to process the robot state and compute the next action to be taken by the manipulator, while the inner loop’s precise high-frequency control sought to achieve and maintain the subgoal provided by the policy. Details of the RL algorithm and the policy architecture are provided in Section 2.2. Lastly, the input to the system was the estimated target position and orientation for the insertion task.
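The timing relationship between the two loops can be sketched as a nested loop: at 500 Hz and 20 Hz, each policy action is held for 25 controller ticks. This is a minimal sketch, assuming hypothetical `policy` and `controller_step` callables; on real hardware the inner loop runs inside the robot driver at a fixed rate rather than in Python.

```python
from typing import Callable, List

POLICY_HZ = 20    # outer loop: RL policy (from the text)
CONTROL_HZ = 500  # inner loop: compliance controller (from the text)
INNER_STEPS = CONTROL_HZ // POLICY_HZ  # 25 controller ticks per policy action

State = List[float]
Action = List[float]


def run_episode(policy: Callable[[State], Action],
                controller_step: Callable[[State, Action], State],
                state: State,
                num_policy_steps: int) -> State:
    """Nested two-rate loop: each policy action (subgoal + controller
    parameters) is held while the inner controller runs INNER_STEPS ticks."""
    for _ in range(num_policy_steps):
        action = policy(state)              # computed once every 50 ms
        for _ in range(INNER_STEPS):        # 2 ms ticks tracking the action
            state = controller_step(state, action)
    return state
```

For example, with a controller stub that only counts ticks, three policy steps correspond to 75 controller updates.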

Figure 2.

Our proposed framework. On the basis of estimated target position for an insertion task, our system learns a control policy that defines motion-trajectory and force-control parameters of an adaptive compliance controller to control an industrial robot manipulator.

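Motion commands sent to the adaptive compliance controller corresponded to the pose of the robot’s end effector. The pose was of the form $\mathbf{x} = [\mathbf{p}, \mathbf{q}]$, where $\mathbf{p} \in \mathbb{R}^3$ is the position vector and $\mathbf{q} \in \mathbb{S}^3$ is the orientation vector. The orientation vector was described using Euler parameters (unit quaternions), denoted as $\mathbf{q} = [\eta, \boldsymbol{\epsilon}]$, where $\eta \in \mathbb{R}$ is the scalar part of the quaternion and $\boldsymbol{\epsilon} \in \mathbb{R}^3$ the vector part.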
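The text does not state how the orientation part of a pose error is computed from two quaternions. One common choice, shown here only as an assumption, is the rotation vector (axis times angle) of the relative quaternion $\mathbf{q}_g \otimes \mathbf{q}^{-1}$, with quaternions stored scalar-first as $[\eta, \boldsymbol{\epsilon}]$ to match the notation above.

```python
import math
from typing import Tuple

Quat = Tuple[float, float, float, float]  # (eta, eps_x, eps_y, eps_z), unit norm


def quat_conj(q: Quat) -> Quat:
    eta, x, y, z = q
    return (eta, -x, -y, -z)  # inverse of a unit quaternion


def quat_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a ⊗ b, scalar part first."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def orientation_error(q_goal: Quat, q_current: Quat) -> Tuple[float, float, float]:
    """Rotation vector of the rotation taking q_current to q_goal."""
    w, x, y, z = quat_mul(q_goal, quat_conj(q_current))
    if w < 0.0:  # q and -q encode the same rotation; take the shorter one
        w, x, y, z = -w, -x, -y, -z
    norm_v = math.sqrt(x * x + y * y + z * z)
    if norm_v < 1e-12:
        return (0.0, 0.0, 0.0)
    angle = 2.0 * math.atan2(norm_v, w)
    return (angle * x / norm_v, angle * y / norm_v, angle * z / norm_v)
```

For a goal rotated 90° about z relative to the current orientation, the error is approximately $(0, 0, \pi/2)$.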

2.2. Learning Adaptive-Compliance Control

2.2.1. Reinforcement-Learning Algorithm

Robotic reinforcement learning is a control problem in which a robot, the agent, acts in a stochastic environment by sequentially choosing actions over a sequence of time steps. The goal is to maximize a cumulative reward. We modeled this problem as a Markov decision process. The environment is described by a state $s \in \mathcal{S}$. The agent can perform actions $a \in \mathcal{A}$ and perceives the environment through observations $o \in \mathcal{O}$ that may or may not be equal to $s$. We considered an episodic interaction with a limit of $T$ time steps per episode. The agent’s goal is to find a policy $\pi(a_t \mid o_t)$ that selects actions conditioned on observations to control the dynamical system. Given stochastic dynamics $p(s_{t+1} \mid s_t, a_t)$ and a reward function $r(s_t, a_t)$, the aim is to find a policy that maximizes the expected sum of future rewards, given by $R_t = \sum_{i=t}^{T} \gamma^{(i-t)} r(s_i, a_i)$, with $\gamma \in [0, 1]$ being a discount factor [7].
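The discounted return $R_t$ defined above can be computed for a finished episode by accumulating rewards backward from the last step. This is a minimal sketch; the function name is illustrative.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float, t: int = 0) -> float:
    """R_t = sum_{i=t}^{T} gamma^(i - t) * r_i over a finite episode."""
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must lie in [0, 1]")
    ret = 0.0
    for r in reversed(rewards[t:]):  # Horner-style: R_i = r_i + gamma * R_{i+1}
        ret = r + gamma * ret
    return ret
```

For three rewards of 1 and $\gamma = 0.5$, $R_0 = 1 + 0.5 + 0.25 = 1.75$.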

In this work, we used soft actor-critic (SAC) [37], a state-of-the-art RL algorithm whose high sample efficiency makes it well suited to real robotic applications. SAC is an off-policy, actor-critic deep RL algorithm based on maximal entropy: the agent optimizes a maximal-entropy objective that rewards both task success and policy entropy, with a temperature parameter $\alpha$ weighting the entropy term and thereby controlling exploration. The core idea of this method is to succeed at the task while acting as randomly as possible. Since SAC is an off-policy algorithm, it uses a replay buffer to reuse information from recent rollouts for sample-efficient training. Additionally, we used the distributed prioritized experience replay approach [38] for further improvement. Our implementation of the SAC algorithm was based on the TF2RL repository (TF2RL: Deep-reinforcement-learning library using TensorFlow 2.0. https://github.com/keiohta/tf2rl).
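The work uses a distributed variant of prioritized experience replay [38]. The single-process sketch below illustrates only the underlying proportional prioritization idea: transitions are sampled with probability proportional to $p_i^{\alpha}$ and reweighted by importance-sampling weights $(N P(i))^{-\beta}$. The class name and the defaults `alpha=0.6`, `beta=0.4` are illustrative assumptions, and a plain list replaces the usual sum-tree for readability.

```python
import random
from typing import Any, List, Tuple


class PrioritizedReplay:
    """Proportional prioritized replay buffer (O(N) sampling, for clarity)."""

    def __init__(self, capacity: int, alpha: float = 0.6, beta: float = 0.4,
                 seed: int = 0) -> None:
        self.capacity, self.alpha, self.beta = capacity, alpha, beta
        self.data: List[Any] = []
        self.priorities: List[float] = []
        self.pos = 0  # next slot to overwrite once the buffer is full
        self.rng = random.Random(seed)

    def add(self, transition: Any) -> None:
        # New transitions get the current maximum priority so each is replayed at least once.
        p = max(self.priorities, default=1.0)
        if len(self.data) < self.capacity:
            self.data.append(transition)
            self.priorities.append(p)
        else:
            self.data[self.pos] = transition
            self.priorities[self.pos] = p
        self.pos = (self.pos + 1) % self.capacity

    def sample(self, batch_size: int) -> Tuple[List[int], List[Any], List[float]]:
        scaled = [p ** self.alpha for p in self.priorities]
        total = sum(scaled)
        probs = [s / total for s in scaled]
        idx = self.rng.choices(range(len(self.data)), weights=probs, k=batch_size)
        n = len(self.data)
        weights = [(n * probs[i]) ** -self.beta for i in idx]
        max_w = max(weights)  # normalize so the largest weight is 1
        return idx, [self.data[i] for i in idx], [w / max_w for w in weights]

    def update_priorities(self, idx: List[int], td_errors: List[float],
                          eps: float = 1e-6) -> None:
        for i, err in zip(idx, td_errors):
            self.priorities[i] = abs(err) + eps  # eps keeps every priority positive
```

After each gradient step, the critic’s TD errors for the sampled batch would be passed to `update_priorities`.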

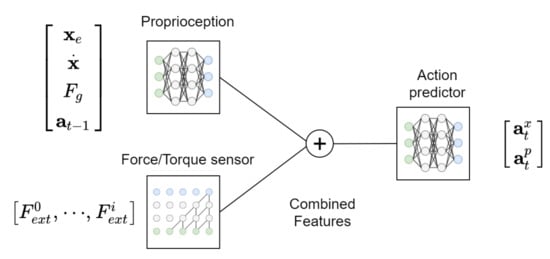

2.2.2. Multimodal Policy Architecture

The control policy was represented using neural networks, as shown in Figure 3. The policy input was the robot state, which included the proprioceptive and haptic information of the manipulator. Proprioception included the pose error $\mathbf{x}_e$ between the current end-effector pose and the predicted target pose, the end-effector velocity $\dot{\mathbf{x}}$, the desired insertion force $\mathbf{F}_g$, and the action taken in the previous time step, $\mathbf{a}_{t-1}$. Proprioceptive feedback was encoded by a neural network with two fully connected layers with ReLU activations to produce a 32-dimensional feature vector. For force-torque feedback, we considered the last 12 readings from the six-axis F/T sensor, filtered using a low-pass filter, as a 12 × 6 time series:

$$\left[\mathbf{F}_{t-11}, \mathbf{F}_{t-10}, \dots, \mathbf{F}_{t}\right]^{\top} \in \mathbb{R}^{12 \times 6}, \qquad \mathbf{F}_i = [f_x, f_y, f_z, \tau_x, \tau_y, \tau_z]_i.$$
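The 12 × 6 haptic input described above can be maintained as a fixed-length window of filtered readings. The text only says that a low-pass filter was used, so the first-order exponential filter, its coefficient `alpha`, and the zero-initialized window are assumptions in this sketch.

```python
from collections import deque
from typing import Deque, List, Sequence

WINDOW = 12  # number of past F/T readings (from the text)
AXES = 6     # fx, fy, fz, tx, ty, tz


class FTHistory:
    """Keeps the last WINDOW low-pass-filtered six-axis F/T readings."""

    def __init__(self, alpha: float = 0.2) -> None:
        self.alpha = alpha  # assumed filter coefficient in (0, 1]
        self.filtered = [0.0] * AXES
        # Start with zeros so the policy always receives a full 12 x 6 input.
        self.buffer: Deque[List[float]] = deque(
            [[0.0] * AXES for _ in range(WINDOW)], maxlen=WINDOW)

    def push(self, reading: Sequence[float]) -> None:
        if len(reading) != AXES:
            raise ValueError("expected a six-axis reading")
        # First-order low-pass: y_k = y_{k-1} + alpha * (x_k - y_{k-1})
        self.filtered = [y + self.alpha * (x - y)
                         for x, y in zip(reading, self.filtered)]
        self.buffer.append(list(self.filtered))  # oldest row drops out

    def as_matrix(self) -> List[List[float]]:
        """12 x 6 time series, oldest reading first."""
        return [list(row) for row in self.buffer]
```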

The F/T time series was fed to a temporal convolutional network (TCN) [39] to produce another 32-dimensional feature vector. The feature vectors from proprioception and haptic information were concatenated to obtain a 64-dimensional feature vector, and then fed to two fully connected layers to predict the next action.
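The building block of a TCN is a causal, dilated 1-D convolution: the output at time $t$ depends only on inputs at $t, t-d, t-2d, \dots$. The text does not give the TCN’s depth, kernel size, or dilations, so the sketch below shows only one such layer plus the receptive-field formula $1 + (k-1)\sum_l d_l$. The example choice of kernel size 3 with dilations 1, 2, 4 (receptive field 15, covering the 12-step window) is hypothetical.

```python
from typing import List

Matrix = List[List[float]]  # rows = time steps, columns = channels


def causal_dilated_conv1d(x: Matrix, kernel: List[Matrix], dilation: int) -> Matrix:
    """One causal dilated convolution layer (no bias, no activation).

    kernel[j][c_in][c_out] multiplies x[t - j * dilation][c_in]. Steps before
    the start of the window count as zeros (left padding), so the output at
    time t never depends on inputs after t.
    """
    c_in, c_out = len(x[0]), len(kernel[0][0])
    out = []
    for t in range(len(x)):
        y = [0.0] * c_out
        for j, w in enumerate(kernel):
            src = t - j * dilation
            if src < 0:
                continue  # implicit zero padding on the left
            for ci in range(c_in):
                for co in range(c_out):
                    y[co] += w[ci][co] * x[src][ci]
        out.append(y)
    return out


def receptive_field(kernel_size: int, dilations: List[int]) -> int:
    """Number of input steps visible to the last layer of a stack."""
    return 1 + (kernel_size - 1) * sum(dilations)
```

Stacking layers with growing dilation lets a shallow network see the whole F/T window without pooling.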

Figure 3.

Control policy consisting of three networks. First, proprioception information is processed through a 2-layer neural network. Second, force/torque information is processed with a temporal convolutional network. Lastly, extracted features from the first two networks are concatenated and processed on a 2-layer neural network to predict actions.

The policy outputs actions for a parallel position-force controller. It produces two types of actions, $\mathbf{a} = [\mathbf{a}_x, \mathbf{a}_p]$, where $\mathbf{a}_x$ are position/orientation subgoals and $\mathbf{a}_p$ are parameters of the parallel controller. The specific parameters controlled by $\mathbf{a}_p$ are described in Section 2.2.3.
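Taken together with the parameter count in Section 2.2.3 (18 controllable parameters) and the 24 actions plotted in Figure 7, the action vector splits into a 6-D subgoal $\mathbf{a}_x$ and an 18-D parameter vector $\mathbf{a}_p$. The ordering of the components below is a hypothetical layout chosen for illustration.

```python
from typing import Dict, List, Sequence

POSE_DIM = 6  # 3 position + 3 orientation components per Cartesian direction


def split_action(action: Sequence[float]) -> Dict[str, List[float]]:
    """Split a 24-D policy output into a_x (6) and a_p (18 = Kp_x, Kp_f, S)."""
    if len(action) != 4 * POSE_DIM:
        raise ValueError(f"expected {4 * POSE_DIM} actions, got {len(action)}")
    a = list(action)
    return {
        "a_x": a[0:6],     # position/orientation subgoal
        "kp_x": a[6:12],   # position-controller proportional gains (normalized)
        "kp_f": a[12:18],  # force-controller proportional gains (normalized)
        "s": a[18:24],     # selection-matrix diagonal (normalized)
    }
```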

2.2.3. Compliance Control in Task Space

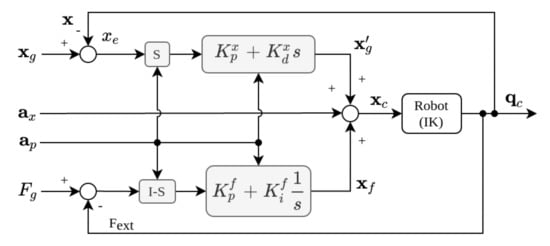

Our proposed method uses a common force-control scheme combined with a reinforcement-learning policy to learn contact-rich manipulations with a rigid position-controlled robot. For the family of contact-rich manipulation tasks that require some sort of insertion, parallel position-force control [40] performs better and can be learned faster than an admittance control scheme when combined with an RL policy [24].

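The implemented parallel controller is depicted in Figure 4. A parallel position-force controller was used, with the addition of a selection matrix that defines the degree of position and force control over each direction. The control law consisted of a PD action on position, a PI action on force, a selection matrix $S$, and the policy position action $\mathbf{a}_x$:

$$\mathbf{x}_c = S\left(K_p^x \mathbf{x}_e + K_d^x \dot{\mathbf{x}}_e\right) + \mathbf{a}_x + (I - S)\left(K_p^f \mathbf{F}_e + K_i^f \int \mathbf{F}_e \, dt\right),$$

where $\mathbf{F}_e = \mathbf{F}_g - \mathbf{F}_{ext}$, and $\mathbf{x}_c$ is the position command sent to the robot. The selection matrix is

$$S = \mathrm{diag}(s_1, s_2, s_3, s_4, s_5, s_6), \qquad s_j \in [0, 1],$$

where the values $s_j$ correspond to the degree of control that each controller has over a given direction.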
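Because $S$ is diagonal, the control law above decouples into six independent per-axis computations. This is a minimal sketch of one 500 Hz tick, assuming the error signals are already computed; the class name, the rectangular integration of the force error, and the sign conventions are assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Sequence


@dataclass
class ParallelController:
    """Per-axis x_c = S(Kp_x x_e + Kd_x dx_e) + a_x + (I - S)(Kp_f F_e + Ki_f ∫F_e dt)."""
    kp_x: List[float]
    kd_x: List[float]
    kp_f: List[float]
    ki_f: List[float]
    s: List[float]        # selection values in [0, 1]
    dt: float = 0.002     # one 500 Hz control tick
    f_int: List[float] = field(default_factory=lambda: [0.0] * 6)

    def step(self, x_e: Sequence[float], dx_e: Sequence[float],
             f_e: Sequence[float], a_x: Sequence[float]) -> List[float]:
        out = []
        for i in range(6):
            self.f_int[i] += f_e[i] * self.dt  # running integral of force error
            pos_term = self.kp_x[i] * x_e[i] + self.kd_x[i] * dx_e[i]
            force_term = self.kp_f[i] * f_e[i] + self.ki_f[i] * self.f_int[i]
            out.append(self.s[i] * pos_term + a_x[i]
                       + (1.0 - self.s[i]) * force_term)
        return out
```

Setting $s_j = 1$ makes axis $j$ purely position-controlled, and $s_j = 0$ hands it entirely to the force loop.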

Figure 4.

Adaptive parallel position-force control scheme [24]. Inputs are the estimated goal position, policy actions, and a desired contact force. Controller outputs the joint position commands for the robotic arm.

Our parallel control scheme had a total of 30 parameters: 12 from the position PD controller’s gains, 12 from the force PI controller’s gains, and 6 from selection matrix $S$. We reduced the number of controllable parameters to prevent unstable behavior and to reduce system complexity. For the PD controller, only the proportional gain $K_p^x$ was controllable, while the derivative gain $K_d^x$ was computed on the basis of $K_p^x$. $K_d^x$ was set to have a critically damped relationship as

$$K_d^x = 2\sqrt{K_p^x}.$$

Similarly, for the PI controller, only the proportional gain $K_p^f$ was controllable, and the integral gain $K_i^f$ was computed with respect to $K_p^f$; in our experiments, $K_i^f$ was empirically set to a fixed fraction of $K_p^f$. In total, 18 parameters were controllable. In summary, the policy actions regarding the parallel controller’s parameters are

$$\mathbf{a}_p = \left[K_p^x, K_p^f, S\right].$$
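The reduction from 30 to 18 parameters amounts to deriving $K_d^x$ and $K_i^f$ from the controllable proportional gains. In this sketch, the critically damped rule assumes a unit-mass second-order system, and `KI_RATIO` is a hypothetical value: the text only says $K_i^f$ is an empirically chosen fraction of $K_p^f$.

```python
import math
from typing import List, Tuple

TOTAL_PARAMS = 12 + 12 + 6  # PD gains + PI gains + selection diagonal
CONTROLLABLE = 6 + 6 + 6    # Kp_x, Kp_f, S
KI_RATIO = 0.01             # hypothetical fraction; not given in the text


def expand_gains(kp_x: List[float], kp_f: List[float]) -> Tuple[List[float], List[float]]:
    """Derive the non-controllable gains from the controllable ones."""
    kd_x = [2.0 * math.sqrt(k) for k in kp_x]  # critical damping, unit mass
    ki_f = [KI_RATIO * k for k in kp_f]        # fixed fraction of Kp_f
    return kd_x, ki_f
```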

As a safety measure, we narrowed the agent’s choices for the force-control parameters by imposing upper and lower limits on each parameter, assuming access to some baseline gain values $K_{base}$. We defined a range of potential values around each baseline value, with a constant $\kappa$ defining the size of the range, and mapped policy actions from the range $[-1, 1]$ to each parameter’s range. $K_{base}$ and $\kappa$ are hyperparameters of our method.
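Mapping a normalized action to a bounded gain is a clipped linear rescaling. The exact range formula is not given in the text, so the symmetric range $[K_{base}(1-\kappa), K_{base}(1+\kappa)]$ with $0 < \kappa < 1$ used here is a hypothetical choice for illustration.

```python
from typing import List


def action_to_gain(a: float, k_base: float, kappa: float) -> float:
    """Linearly map a normalized action a in [-1, 1] to [k_min, k_max].

    The range k_base * (1 -/+ kappa) is an assumed form; kappa < 1 keeps
    the lower limit positive.
    """
    a = max(-1.0, min(1.0, a))  # clip out-of-range policy outputs
    k_min, k_max = k_base * (1.0 - kappa), k_base * (1.0 + kappa)
    return k_min + (a + 1.0) * 0.5 * (k_max - k_min)


def actions_to_gains(actions: List[float], k_base: List[float], kappa: float) -> List[float]:
    return [action_to_gain(a, k, kappa) for a, k in zip(actions, k_base)]
```

Clipping means a misbehaving policy can never command a gain outside the safe range, which is the point of the limits.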

2.3. Reward Function

For all considered insertion tasks, the same reward function was used:

where $\mathbf{F}_g$ is the desired insertion force, $\mathbf{F}_{ext}$ is the contact force, and $F_{max}$ is the defined maximal allowed contact force. $L_m$ is a linear mapping to the range from 1 to 0; thus, the closer to the goal and the lower the contact force, the higher the reward obtained. $\|\cdot\|_{1,2}$ is an L1,2 norm based on [9]. $\rho$ is a reward term defined as follows:

During training, the task was considered completed if the Euclidean distance between the robot’s end-effector position and the true goal position was less than 1 mm. The agent was encouraged to complete the task as quickly as possible by receiving an extra reward for every unused time step with respect to the maximal number of time steps per episode, $T$. Moreover, we imposed a collision constraint wherein the agent was penalized for colliding with the environment with a negative reward and early termination of the episode. This collision constraint encourages safer exploration, as shown in our previous work [24]. We defined a collision as the contact force exceeding the force limit $F_{max}$; therefore, neither a collision detector nor geometric knowledge of the environment was necessary. Lastly, each component was weighted by a coefficient $w$; all weights were hyperparameters.
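The reward components described above can be combined as in the sketch below. It is not the paper’s exact reward equation: the functional forms, the default weights, the force term (taken as the deviation from the desired force), and the smooth L1/L2 penalty $\tfrac{1}{2} w d^2 + v\sqrt{\alpha + d^2}$ standing in for the norm of [9] are all assumptions. The success threshold (1 mm), the unused-time-step bonus, and the collision rule ($F_{ext} > F_{max}$ ends the episode with a penalty) follow the text.

```python
import math
from typing import Tuple


def l12_penalty(d: float, w: float = 1.0, v: float = 1.0, alpha: float = 1e-4) -> float:
    """Assumed smooth L1/L2 distance penalty: 0.5*w*d^2 + v*sqrt(alpha + d^2)."""
    return 0.5 * w * d * d + v * math.sqrt(alpha + d * d)


def linear_map(x: float, x_max: float) -> float:
    """L_m: 1 at x = 0, decreasing linearly to 0 at x >= x_max."""
    return max(0.0, 1.0 - x / x_max)


def reward(dist_mm: float, dist_max_mm: float, force_err: float, f_contact: float,
           f_max: float, t: int, T: int,
           weights: Tuple[float, float, float, float] = (1.0, 1.0, 1.0, 10.0)
           ) -> Tuple[float, bool]:
    """Return (reward, episode_done). Weights and shaping are hypothetical."""
    w_dist, w_force, w_time, w_collision = weights
    if f_contact > f_max:  # collision: negative reward and early termination
        return -w_collision, True
    r = (w_dist * linear_map(l12_penalty(dist_mm), l12_penalty(dist_max_mm))
         + w_force * linear_map(abs(force_err), f_max))
    if dist_mm < 1.0:  # success: within 1 mm of the true goal
        return r + w_time * (T - t), True  # bonus for each unused time step
    return r, False
```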

2.4. Speeding up Learning

Two strategies were adopted to speed up the learning process. First, we exploited prior knowledge using the idea of residual reinforcement learning. Second, we used a physics simulator to train the robot on a peg-insertion task and transferred the learned policy directly to the real robot (sim2real).

2.4.1. Residual Reinforcement Learning

To speed up the learning of the control policy for insertion tasks that require complex manipulation, we used residual reinforcement learning [41,42]. The goal is to accelerate training by exploiting prior knowledge. Given an estimated target position or a reference trajectory, we could manually define a controller $\pi_H$, whose signal is then combined with the policy action $\mathbf{a}_x$. The objective was to avoid training the policy from scratch and to avoid exploring the entire parameter space. The position command sent to the robot was

$$\mathbf{x}_c = \pi_H + \mathbf{a}_x + \mathbf{x}_f,$$

where $\pi_H$ is the reference trajectory processed through a PD controller, $\mathbf{a}_x$ is the policy signal on the position, and $\mathbf{x}_f$ is the response to the contact force, as shown in Figure 4. The first two terms came from the parallel controller. Therefore, the policy only needs to learn to adjust the reference trajectory to achieve the task.
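The core of residual RL is that the executed command is the prior controller’s output plus a learned correction, so a residual of zero reproduces the hand-designed behavior. This is a minimal, generic sketch; `base` and `learned` are hypothetical callables, not the paper’s controller.

```python
from typing import Callable, List

Obs = List[float]
Act = List[float]


def residual_policy(base: Callable[[Obs], Act],
                    learned: Callable[[Obs], Act]) -> Callable[[Obs], Act]:
    """Combine a hand-designed controller pi_H with a learned residual.

    The command is base(obs) + learned(obs); while the learned part outputs
    zeros, the robot simply follows the prior controller.
    """
    def act(obs: Obs) -> Act:
        b, r = base(obs), learned(obs)
        if len(b) != len(r):
            raise ValueError("base and residual actions must have equal length")
        return [bi + ri for bi, ri in zip(b, r)]
    return act
```

Starting from the prior controller means early exploration happens around a reasonable trajectory instead of over the whole action space.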

2.4.2. Sim2real

The proposed method works in the robot’s end-effector Cartesian task space, which makes it easier to transfer learned policies from simulation to the real robot, or even between robots [43]. For most insertion tasks, training was performed on a simple peg-insertion task in a physics simulator, Gazebo 9 [44]. To close the reality gap between the physics simulator and real-world dynamics, we used domain randomization [45]. During training in the simulator, the following aspects were randomized:

- Initial/goal end-effector position: Having random initial/goal positions helps the RL algorithm to find policies that generalize to a wide range of initial-position conditions.

- Object-surface stiffness: The RL agent also needs to learn to fine-tune the force-controller parameters to obtain a proper response to the contact force. Therefore, randomizing the stiffness of the manipulated objects helps it find policies that adapt to different dynamic conditions.

- Uncertainty error of goal-pose prediction: On a real robot, the prediction of the target pose comes from noisy sensory information, either from a vision-detection system or from known prior manipulations (grasping and regrasping). Thus, during training in simulation, we emulated this error by sampling from a zero-mean Gaussian distribution whose standard deviation was a maximal distance error (for position and orientation).

- Desired insertion force: For different insertion tasks, a specific contact force is necessary for insertion to succeed. As we considered insertion force an input to the policy, during training, we randomized this value for each episode.
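Per-episode domain randomization over the four aspects listed above can be sketched as a sampler that draws fresh conditions at each reset. All default ranges below are placeholders rather than the values in Table 1, and the field names and units (mm, degrees, N) are assumptions. Only the zero-mean Gaussian goal-pose error with a standard deviation equal to the maximal error follows the text directly.

```python
import random
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class EpisodeConditions:
    initial_offset_mm: Vec3   # start position relative to the goal
    surface_stiffness: float  # contact stiffness of the simulated objects
    goal_pos_noise_mm: Vec3   # error added to the predicted goal position
    goal_rot_noise_deg: Vec3  # error added to the predicted goal orientation
    desired_force_n: float    # desired insertion force fed to the policy


def sample_conditions(rng: random.Random,
                      offset_range_mm: float = 20.0,
                      stiffness_range: Tuple[float, float] = (1e3, 1e5),
                      max_pos_err_mm: float = 2.0,
                      max_rot_err_deg: float = 2.0,
                      force_range_n: Tuple[float, float] = (1.0, 10.0)) -> EpisodeConditions:
    """Draw one episode's randomized conditions (defaults are placeholders)."""
    def uniform3(half_width: float) -> Vec3:
        return (rng.uniform(-half_width, half_width),
                rng.uniform(-half_width, half_width),
                rng.uniform(-half_width, half_width))

    def gauss3(sigma: float) -> Vec3:
        # zero-mean Gaussian with sigma equal to the maximal error
        return (rng.gauss(0.0, sigma), rng.gauss(0.0, sigma), rng.gauss(0.0, sigma))

    return EpisodeConditions(
        initial_offset_mm=uniform3(offset_range_mm),
        surface_stiffness=rng.uniform(*stiffness_range),
        goal_pos_noise_mm=gauss3(max_pos_err_mm),
        goal_rot_noise_deg=gauss3(max_rot_err_deg),
        desired_force_n=rng.uniform(*force_range_n),
    )
```

Passing an explicitly seeded `random.Random` makes a training run’s sequence of environments reproducible.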

3. Experiments and Results

3.1. Experiment Setup

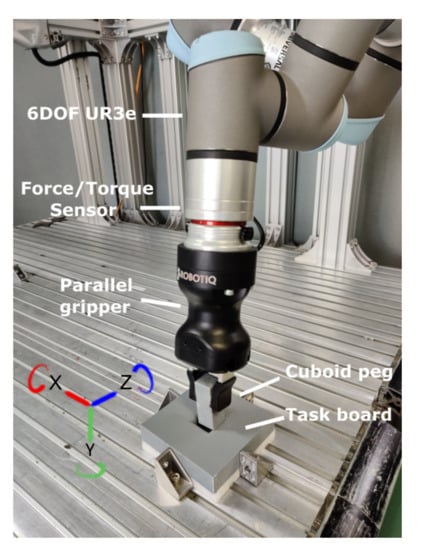

Experimental validation was performed in a simulated environment using the Gazebo simulator [44], version 9, and on real hardware using a Universal Robots UR3e (e-series) arm with a control frequency of up to 500 Hz. The robotic arm had a force/torque sensor mounted at its end effector and a Robotiq Hand-E parallel gripper. In both environments, the RL agent was trained on a computer with an Intel i9-9900K CPU and an NVIDIA RTX 2080 SUPER GPU. To control the robot, we used the Robot Operating System (ROS) [46] with the Universal Robot ROS Driver (ROS driver for Universal Robot robotic arms developed in collaboration between Universal Robots and the FZI Research Center for Information Technology https://github.com/UniversalRobots/Universal_Robots_ROS_Driver). The experiment environment of the real robot is shown in Figure 5.

Figure 5.

Real experiment environment with a 6-degree-of-freedom UR3e robotic arm. Cuboid peg and task board hole had a nonsmooth surface with 1.0 mm clearance.

3.2. Training

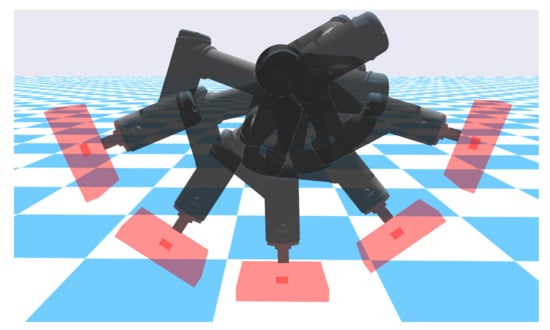

During the training phase, the agent’s task was to insert a cuboid peg into a task board in the simulated environment. The agent was trained for time steps, which, on average, took about 5 h to complete. During training, the environment was modified after each episode by randomizing one or several of the training conditions mentioned in Section 2.4.2. The range of values used for the randomization of the training conditions is shown in Table 1. The random goal position was selected from a defined set of possible insertion planes, as depicted in Figure 6.

Table 1.

Randomized training conditions.

Figure 6.

Simulation environment. Overlay of randomizable goal positions.

After training in simulation, the learned policy was refined by retraining on the real robot for 3% of the simulation time steps, which took about 20 min, to further account for the reality gap between simulated and real-world physics dynamics.

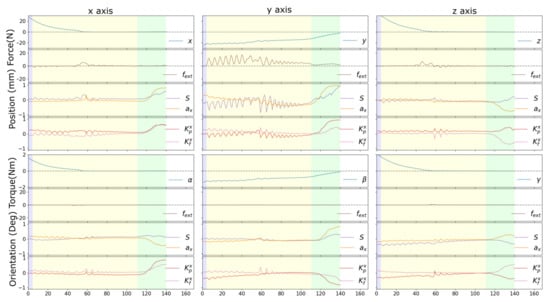

3.3. Evaluation

The learned policy was first evaluated on the real robot on a 3D-printed version of the cuboid-peg insertion task, given the true goal pose. During evaluation, observations and actions were recorded. Figure 7 shows the performance of the learned policy (sim2real + retrain): the position of the end effector relative to the goal position, the contact force, and the actions taken by the policy for each Cartesian direction, normalized to the range [−1, 1] as described in Section 2.2.3. As shown in Figure 1, the insertion direction was aligned with the y axis of the robot’s coordinate system. Figure 7 highlights three phases of the task: blue corresponds to the search phase in free space before contact with the surface, yellow to the search phase after initial contact with the environment, and green to the insertion phase. During the search phase, particularly in the insertion direction (y axis), the learned policy clearly reacted to contact with the environment by quickly adjusting the force-control parameters. Moreover, during the insertion phase, the learned policy changed its strategy from just minimizing contact force to a mostly position-controlled strategy to complete the insertion. This behavior is appropriate for this particular insertion task, as there is little resistance during the insertion phase, but it is not the desired behavior for other insertion tasks, as we discuss later in Section 3.4.2.1.

Figure 7.

Performance of learned policy (sim2real + retrain) on 3D-printed cuboid-peg-insertion task. Insertion direction was aligned with the y axis of the robot’s coordinate system. Relative distance from the robot’s end effector to goal position and contact force are shown. The 24 policy actions are also shown alongside the corresponding axes.

Additionally, we compared the performance of the learned policy (sim2real combined with refinement on the real robot) against learning only on the real robot and against directly transferring the policy learned in simulation (sim2real) without further training. We evaluated these policies on a 3D-printed version of the cuboid-peg insertion task. Each policy was tested 20 times from random initial positions, assuming a perfect estimate of the goal position (true goal). Table 2 shows the results of the evaluation. All three policies had very high success rates, but the policy transferred directly from simulation had difficulty with real-world physics dynamics. As expected, the policy pretrained in simulation and retrained on the real robot gave the best overall performance.

Table 2.

Comparison of learning from scratch, straightforward sim2real, and sim2real + retraining (ours). Test performed on a 3D-printed cuboid-peg insertion task assuming knowledge of the true goal position.

3.4. Generalization

To evaluate the generalization capabilities of our proposed learning framework, we used a series of environments with varying conditions.

3.4.1. Varying Degrees of Uncertainty Error

First, the learned policies were evaluated on the 3D-printed cuboid-peg insertion task with a degree of error in the estimate of the goal position. To clearly compare the performance of the different methods under different degrees of estimation error, we added an offset in position, or in orientation about the x axis, to the true goal pose. For completeness, we also evaluated the policies on goal poses with added random offsets in translation (in millimeters) and orientation in all directions. In each case, the policies were tested 20 times from random initial positions. Results are shown in Table 3.

Table 3.

Comparison of learning from scratch, straightforward sim2real, and sim2real + retraining (ours) with different degrees of goal-position uncertainty error. Tests performed on the 3D-printed cuboid-peg insertion task.

In all cases, the policy learned in simulation with domain randomization and fine-tuned on the real robot gave the best results. If the difference between simulated and real-world physics dynamics was too large, learning from scratch could yield better results than only transferring the policy from simulation, as can be seen for the largest orientation uncertainty error (5): friction with the environment makes the task much harder, and such contact dynamics are difficult to simulate.

3.4.2. Varying Environment Stiffness

Second, the learned policy was also evaluated in environments with different stiffness. Figure 8 shows the three environments considered for evaluation. High stiffness was the default environment. Medium stiffness was achieved by using a rubber band to hold the cuboid peg between the gripper fingers, adding a degree of static compliance. For the low-stiffness environment, a soft foam surface was additionally used to further decrease stiffness. The policies were evaluated from 20 different initial positions; results are reported in Table 4.

Figure 8.

High, medium, and low-stiffness environments (left to right).

Table 4.

Success rate of a 3D-printed-cuboid insertion task with different degrees of contact stiffness.

3.4.2.1. Varying Insertion Tasks

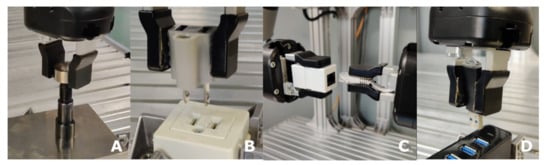

Lastly, we evaluated the learned policy through a series of novel insertion tasks, none seen during training, to assess its generalization capabilities. These insertion tasks included challenges such as adapting to a very hard surface (high stiffness), requiring a minimal insertion force to perform the insertion, and a complex peg shape for mating the parts. The different insertion scenarios are depicted in Figure 9.

Figure 9.

Several insertion tasks with different degrees of complexity. (A) Metal ring (high stiffness) with 0.2 mm of clearance. (B) Electric outlet requiring high insertion force. (C) Local-area-network (LAN) port, delicate with complex shape. (D) Universal serial bus (USB).

For each task, the learned policy was executed 20 times from random initial positions, assuming perfect estimation of the goal position. Table 5 shows the success rates of the learned policies in these novel tasks, along with the desired insertion force set for each task. As the insertion force was defined as a policy input, we could define a specific desired insertion force for each task. Even though the policy was trained only on the simpler cuboid-peg insertion task, mainly in simulation and then briefly refined on a real robot with a 3D-printed version of the same task, the learned policy achieved a high success rate in novel and complex insertion tasks.

Table 5.

Success rate of learned policy in several insertion tasks.

Compared to the cuboid-peg insertion task, in these novel insertion tasks the peg was more likely to become stuck during the search phase, as the surface near the hole was not smooth and may have had crevices. These extra challenges were not present during training, which reduced the ability of the learned policy to react appropriately. The LAN-port insertion was the most challenging task for the policy due to the complex shape of the LAN cable’s connector. If just one corner of the LAN adapter became stuck, the insertion could not be completed even if a large force was applied.

Additionally, we tested the policy on different insertion planes for the electric-outlet and LAN-port tasks. In both cases, the success rates were similar across planes, owing to training with randomized insertion planes. However, the policy performed slightly better on insertions on the y-axis plane, because retraining on the real robot was performed only on this axis.

3.5. Ablation Studies

In this section, we evaluate the individual contributions of some components added to the proposed learning framework.

3.5.1. Learning from Scratch vs. Sim2real

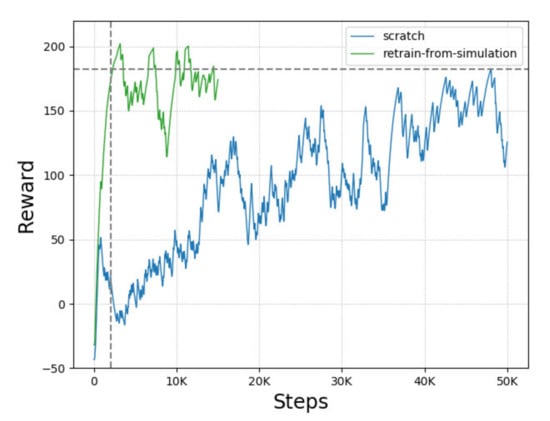

We evaluated the contribution of transfer learning from simulation to the real robot to the proposed learning framework. We compared the learning performance of training the agent on the real robot from scratch with that of training starting from a policy learned in simulation. Training from scratch was performed for 50,000 steps, while retraining the policy learned in simulation lasted 15,000 steps. Figure 10 shows the learning curves for both training sessions. Learning from scratch required at least 50,000 steps to succeed at the task most of the time. In contrast, starting from the policy pretrained in simulation achieved the same performance in under 5000 steps. The policy from simulation still required some training to fine-tune the controller to the real-world dynamics, which are difficult to simulate, as can be seen from the slow start and the drops in cumulative reward.
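
Statements such as "required at least 50,000 steps to succeed most of the time" depend on how a noisy learning curve is read. One way to make such comparisons reproducible from logged episodes is to smooth the cumulative reward with a moving average and report the first step at which it crosses a chosen success threshold. The sketch below assumes per-episode logs of (training step, cumulative reward). The function name, window size, and threshold are illustrative assumptions, not values taken from Figure 10.

```python
from typing import Optional, Sequence


def steps_to_threshold(steps: Sequence[int],
                       rewards: Sequence[float],
                       threshold: float,
                       window: int = 5) -> Optional[int]:
    """Return the first training step at which the moving average of the
    cumulative episode reward reaches `threshold`, or None if it never does.

    steps[i] is the training step at which episode i ended, and rewards[i]
    is that episode's cumulative reward.
    """
    if len(steps) != len(rewards):
        raise ValueError("steps and rewards must have the same length")
    if window < 1:
        raise ValueError("window must be at least 1")
    for i in range(window - 1, len(rewards)):
        # Average over the last `window` episodes to smooth out noisy episodes.
        avg = sum(rewards[i - window + 1:i + 1]) / window
        if avg >= threshold:
            return steps[i]
    return None
```

Applying the same function, window, and threshold to both runs (from scratch and from the simulation-pretrained policy) keeps the comparison consistent.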

Figure 10.

Comparison between learning from scratch and learning from a policy learned in the simulation: learning curve for the 3D-printed cuboid-peg insertion task in a real robot with random initial positions.

3.5.2. Policy Architecture

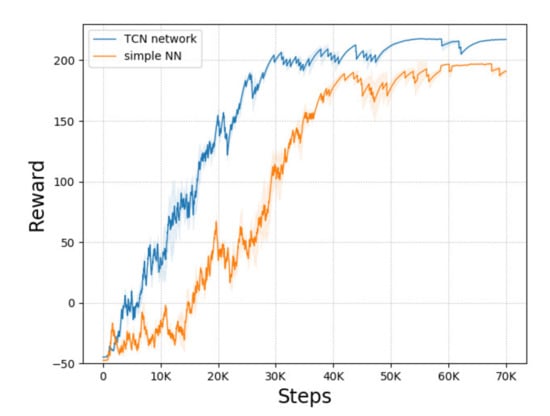

We evaluated the contribution of the policy architecture introduced in our method (see Section 2.2.2) by comparing it with a policy based on a simple neural network (NN) with two fully connected layers, as used in previous work [24]. We trained both policies on the cuboid-peg insertion task in simulation and compared their learning performance. Figure 11 shows the learning curves of both policy architectures over a training session of 70,000 time steps. The figure shows that, with our proposed TCN-based policy, the agent learned faster and obtained higher rewards. The TCN-based policy reached a successful policy after about 25,000 steps, roughly 15,000 steps earlier than the simple NN-based policy (about 40,000 steps). Additionally, the TCN-based policy converged to a higher cumulative reward than the simple NN-based policy did.
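
A TCN operates on a time sequence of inputs. Its core operation, as described by Bai et al., is the dilated causal convolution. The output at time t uses only inputs at t and earlier, and increasing the dilation from layer to layer lets a few layers cover a long history. The sketch below shows just this building block in plain Python for a single channel. It omits the residual connections, normalization, and multiple channels of a full TCN. It does not reproduce the layer sizes of the policy in Section 2.2.2, and the function names are hypothetical.

```python
from typing import List, Sequence


def causal_dilated_conv1d(x: Sequence[float],
                          kernel: Sequence[float],
                          dilation: int = 1) -> List[float]:
    """Single-channel dilated causal convolution.

    y[t] = sum_k kernel[k] * x[t - k * dilation], treating x[i] = 0 for i < 0
    (left zero-padding), so y[t] depends only on x[0..t].
    """
    if dilation < 1:
        raise ValueError("dilation must be at least 1")
    y = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(kernel):
            idx = t - k * dilation
            if idx >= 0:  # samples before the start of the sequence count as zero
                acc += w * x[idx]
        y.append(acc)
    return y


def receptive_field(kernel_size: int, dilations: Sequence[int]) -> int:
    """Number of time steps (including the current one) seen by a stack of
    dilated causal convolutions, one layer per entry in `dilations`."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)
```

For example, `receptive_field(3, [1, 2, 4, 8])` returns 31. Doubling the dilation at each layer makes the covered history grow exponentially with depth, while the number of weights grows only linearly.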

Figure 11.

Comparison between policy architectures: learning curve for the cuboid-peg insertion task with random initial positions.

3.5.3. Policy Inputs

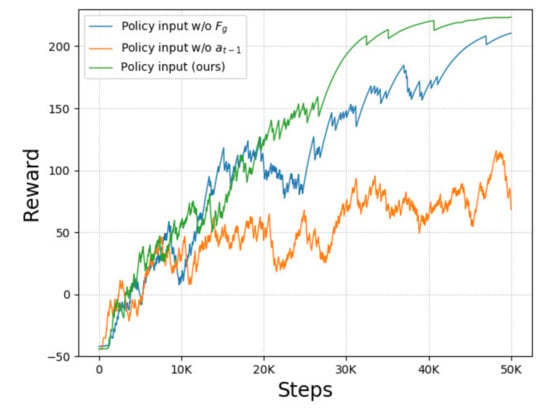

Lastly, we evaluated the choice of policy inputs. We compared our proposed policy architecture with all inputs, as defined in Section 2.2.2, against two variants. The first variant was a policy without the prior action as input. The second was a policy without knowledge of the desired insertion force. The training environment was the cuboid-peg insertion task in simulation with a random initial position and a random desired insertion force. For the policy that did not receive the desired insertion force as input, the cost function still accounted for the desired insertion force.
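
The two ablations only change what the policy observes. The hypothetical helper below assembles the policy input from state features, the prior action, and the desired insertion force, with one switch per ablation. It also shows how the prior action is carried from one control step to the next. The state features, their ordering, and the choice of a zero prior action at the start of an episode are illustrative assumptions, not the exact input definition of Section 2.2.2.

```python
from typing import List, Sequence


class PolicyInputBuilder:
    """Assemble the policy input vector, with switches for the two ablations.

    Hypothetical helper: the feature order and the zero initial prior action
    are assumptions made for illustration.
    """

    def __init__(self, action_dim: int,
                 use_prior_action: bool = True,
                 use_desired_force: bool = True) -> None:
        self.action_dim = action_dim
        self.use_prior_action = use_prior_action
        self.use_desired_force = use_desired_force
        self.reset()

    def reset(self) -> None:
        # Assumption: no action has been executed yet at the start of an episode.
        self.prior_action: List[float] = [0.0] * self.action_dim

    def record_action(self, action: Sequence[float]) -> None:
        # Store the action just executed so it becomes the next step's prior action.
        if len(action) != self.action_dim:
            raise ValueError("action has the wrong dimension")
        self.prior_action = list(action)

    def build(self, state: Sequence[float], desired_force: float) -> List[float]:
        # Concatenate state features, then the optional ablated inputs.
        obs = list(state)
        if self.use_prior_action:
            obs.extend(self.prior_action)
        if self.use_desired_force:
            obs.append(desired_force)
        return obs
```

Disabling an input removes it from the observation only. As stated above, the cost function can still use the desired insertion force when the policy cannot see it.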

Figure 12 shows the comparison of the learning curves. Most notable is the poor performance of the policy that lacked knowledge of the prior action. Prior-action information is critical for the agent to converge more quickly to an optimal policy. Additionally, knowledge of the desired insertion force enables the agent to learn faster and to find policies that yield higher cumulative rewards.

Figure 12.

Comparison of policies with different inputs. Learning curve for cuboid-peg insertion task with random initial positions and random desired insertion force.

4. Discussion

We proposed a learning framework for position-controlled robot manipulators to solve contact-rich manipulation tasks. The proposed method enables learning low-level, high-dimensional control policies on real robotic systems. The effectiveness of the learned policies was shown through an extensive experimental study. We showed that the learned policies achieved a high success rate on the insertion task under the assumption of a perfect estimation of the goal position. The policy correctly learned both the nominal trajectory and the appropriate force-control parameters to succeed at the task. The policy also achieved a high success rate under varying environmental conditions, namely uncertainty in the goal position, different environmental stiffness, and novel insertion tasks.

Although the model-free reinforcement-learning algorithm SAC was used in this work, the proposed framework can easily be adapted to other RL algorithms. SAC was chosen for its sample efficiency as an off-policy algorithm. Comparing the pros and cons of other learning algorithms would be interesting future work.

One limitation of our learning framework is the selection of the force-control parameter range (see Section 2.2.3). A wide range of values may allow the policy to adapt to very different environments, but it also makes the task harder to learn, as small variations in the action may cause undesired behaviors. This was the case during the first 20,000 to 30,000 steps of training (see Figure 11). On the other hand, a narrow range would make a task easier and faster to learn, but the policy may not generalize well to different environments. Defining a range is much easier than manually finding the optimal parameters for each task, but it is still a manual process. Therefore, another interesting future study would be to use demonstrations to learn a rough estimate of the optimal force parameters and further reduce training time.
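
The trade-off between wide and narrow parameter ranges can be made concrete under a common assumption for SAC-style policies: the policy outputs actions in [-1, 1], and each action is mapped linearly onto a force-control parameter's range. The mapping actually used in Section 2.2.3 may differ, and both function names below are hypothetical. The sketch only shows that, under a linear map, the sensitivity of a parameter to the action grows in proportion to the width of its range.

```python
def scale_action(a: float, low: float, high: float) -> float:
    """Map a normalized action a in [-1, 1] linearly onto [low, high].

    Assumption: linear mapping; out-of-range actions are clipped first.
    """
    if low > high:
        raise ValueError("low must not exceed high")
    a = max(-1.0, min(1.0, a))
    return low + (a + 1.0) * 0.5 * (high - low)


def parameter_change(delta_a: float, low: float, high: float) -> float:
    """Change in the parameter caused by an action change delta_a (away from
    the clipping bounds); the slope of the linear map is (high - low) / 2."""
    return delta_a * 0.5 * (high - low)
```

With the same action perturbation of 0.1, an illustrative range of [1, 2] moves the parameter by 0.05. A range of [1, 100] moves it by about 4.95, roughly 100 times more. This is why exploration noise that is harmless with a narrow range can produce undesired behaviors with a wide one.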

Author Contributions

Methodology, C.C.B.-H., D.P., I.G.R.-A. and K.H.; Software, C.C.B.-H.; Investigation, C.C.B.-H., D.P. and I.G.R.-A.; Writing—Original Draft Preparation, C.C.B.-H.; Writing—Review & Editing, C.C.B.-H.; Supervision, D.P., I.G.R.-A. and K.H.; Project Administration, K.H.; Funding Acquisition, K.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kroemer, O.; Niekum, S.; Konidaris, G. A review of robot learning for manipulation: Challenges, representations, and algorithms. arXiv 2019, arXiv:1907.03146.

- Whitney, D.E. Quasi-Static Assembly of Compliantly Supported Rigid Parts. J. Dyn. Syst. Meas. Control. 1982, 104, 65–77.

- Tsuruoka, T.; Fujioka, H.; Moriyama, T.; Mayeda, H. 3D analysis of contact in peg-hole insertion. In Proceedings of the 1997 IEEE International Symposium on Assembly and Task Planning (ISATP’97)-Towards Flexible and Agile Assembly and Manufacturing, Marina del Rey, CA, USA, 7–9 August 1997; pp. 84–89.

- Zhang, K.; Shi, M.; Xu, J.; Liu, F.; Chen, K. Force control for a rigid dual peg-in-hole assembly. Assem. Autom. 2017, 37, 200–207.

- Fukumoto, Y.; Harada, K. Force Control Law Selection for Elastic Part Assembly from Human Data and Parameter Optimization. In Proceedings of the 2018 IEEE-RAS 18th International Conference on Humanoid Robots (Humanoids), Beijing, China, 6–9 November 2018; pp. 1–7.

- Kyrarini, M.; Haseeb, M.A.; Ristić-Durrant, D.; Gräser, A. Robot learning of industrial assembly task via human demonstrations. Auton. Robots 2019, 43, 239–257.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction, 2nd ed.; MIT Press: Cambridge, MA, USA, 2018.

- Yang, C.; Zeng, C.; Cong, Y.; Wang, N.; Wang, M. A learning framework of adaptive manipulative skills from human to robot. IEEE Trans. Ind. Inform. 2018, 15, 1153–1161.

- Levine, S.; Pastor, P.; Krizhevsky, A.; Ibarz, J.; Quillen, D. Learning hand-eye coordination for robotic grasping with deep learning and large-scale data collection. Int. J. Robot. Res. 2018, 37, 421–436.

- Pinto, L.; Gupta, A. Supersizing self-supervision: Learning to grasp from 50k tries and 700 robot hours. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 3406–3413.

- Gu, S.; Holly, E.; Lillicrap, T.; Levine, S. Deep reinforcement learning for robotic manipulation with asynchronous off-policy updates. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3389–3396.

- Nuttin, M.; Van Brussel, H. Learning the peg-into-hole assembly operation with a connectionist reinforcement technique. Comput. Ind. 1997, 33, 101–109.

- Inoue, T.; De Magistris, G.; Munawar, A.; Yokoya, T.; Tachibana, R. Deep reinforcement learning for high precision assembly tasks. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 819–825.

- Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous control with deep reinforcement learning. arXiv 2015, arXiv:1509.02971.

- Xu, J.; Hou, Z.; Wang, W.; Xu, B.; Zhang, K.; Chen, K. Feedback deep deterministic policy gradient with fuzzy reward for robotic multiple peg-in-hole assembly tasks. IEEE Trans. Ind. Inform. 2018, 15, 1658–1667.

- Silver, D.; Lever, G.; Heess, N.; Degris, T.; Wierstra, D.; Riedmiller, M.A. Deterministic Policy Gradient Algorithms. In Proceedings of the International Conference on Machine Learning, Beijing, China, 22–24 June 2014.

- Fan, Y.; Luo, J.; Tomizuka, M. A learning framework for high precision industrial assembly. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 811–817.

- Levine, S.; Koltun, V. Guided policy search. In Proceedings of the International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; pp. 1–9.

- Luo, J.; Solowjow, E.; Wen, C.; Ojea, J.A.; Agogino, A.M. Deep reinforcement learning for robotic assembly of mixed deformable and rigid objects. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 2062–2069.

- Ren, T.; Dong, Y.; Wu, D.; Chen, K. Learning-based variable compliance control for robotic assembly. J. Mech. Robot. 2018, 10, 061008.

- Buchli, J.; Stulp, F.; Theodorou, E.; Schaal, S. Learning variable impedance control. Int. J. Robot. Res. 2011, 30, 820–833.

- Lee, M.A.; Zhu, Y.; Srinivasan, K.; Shah, P.; Savarese, S.; Fei-Fei, L.; Garg, A.; Bohg, J. Making sense of vision and touch: Self-supervised learning of multimodal representations for contact-rich tasks. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 8943–8950.

- Abu-Dakka, F.J.; Nemec, B.; Jørgensen, J.A.; Savarimuthu, T.R.; Krüger, N.; Ude, A. Adaptation of manipulation skills in physical contact with the environment to reference force profiles. Auton. Robot. 2015, 39, 199–217.

- Beltran-Hernandez, C.C.; Petit, D.; Ramirez-Alpizar, I.G.; Nishi, T.; Kikuchi, S.; Matsubara, T.; Harada, K. Learning Force Control for Contact-rich Manipulation Tasks with Rigid Position-controlled Robots. IEEE Robot. Autom. Lett. 2020, 5, 5709–5716.

- Chebotar, Y.; Handa, A.; Makoviychuk, V.; Macklin, M.; Issac, J.; Ratliff, N.; Fox, D. Closing the sim-to-real loop: Adapting simulation randomization with real world experience. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 8973–8979.

- Andrychowicz, O.M.; Baker, B.; Chociej, M.; Jozefowicz, R.; McGrew, B.; Pachocki, J.; Petron, A.; Plappert, M.; Powell, G.; Ray, A.; et al. Learning dexterous in-hand manipulation. Int. J. Robot. Res. 2020, 39, 3–20.

- Sharma, K.; Shirwalkar, V.; Pal, P.K. Intelligent and environment-independent peg-in-hole search strategies. In Proceedings of the 2013 International Conference on Control, Automation, Robotics and EMbedded Systems (CARE), Jabalpur, India, 16–18 December 2013; pp. 1–6.

- Zakharov, S.; Shugurov, I.; Ilic, S. DPOD: 6D pose object detector and refiner. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–3 November 2019; pp. 1941–1950.

- Peng, S.; Liu, Y.; Huang, Q.; Zhou, X.; Bao, H. PVNet: Pixel-wise voting network for 6DoF pose estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 4561–4570.

- Xiang, Y.; Schmidt, T.; Narayanan, V.; Fox, D. PoseCNN: A Convolutional Neural Network for 6D Object Pose Estimation in Cluttered Scenes. Robot. Sci. Syst. (RSS) 2018, 2018.

- Hodan, T.; Haluza, P.; Obdržálek, Š.; Matas, J.; Lourakis, M.; Zabulis, X. T-LESS: An RGB-D dataset for 6D pose estimation of texture-less objects. In Proceedings of the 2017 IEEE Winter Conference on Applications of Computer Vision (WACV), Santa Rosa, CA, USA, 24–31 March 2017; pp. 880–888.

- Harada, K.; Nakayama, K.; Wan, W.; Nagata, K.; Yamanobe, N.; Ramirez-Alpizar, I.G. Tool exchangeable grasp/assembly planner. In Proceedings of the International Conference on Intelligent Autonomous Systems; Springer: Berlin/Heidelberg, Germany, 2018; pp. 799–811.

- Masehian, E.; Ghandi, S. ASPPR: A new Assembly Sequence and Path Planner/Replanner for monotone and nonmonotone assembly planning. Comput.-Aided Des. 2020, 123, 102828.

- Nair, A.; McGrew, B.; Andrychowicz, M.; Zaremba, W.; Abbeel, P. Overcoming exploration in reinforcement learning with demonstrations. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 6292–6299.

- Gupta, A.; Kumar, V.; Lynch, C.; Levine, S.; Hausman, K. Relay Policy Learning: Solving Long-Horizon Tasks via Imitation and Reinforcement Learning. In Proceedings of the Conference on Robot Learning (CoRL) 2019, Osaka, Japan, 30 October–1 November 2019.

- Wang, Y.; Harada, K.; Wan, W. Motion planning of skillful motions in assembly process through human demonstration. Adv. Robot. 2020, 1–15.

- Haarnoja, T.; Zhou, A.; Abbeel, P.; Levine, S. Soft Actor-Critic: Off-Policy Maximum Entropy Deep Reinforcement Learning with a Stochastic Actor. arXiv 2018, arXiv:1801.01290.

- Horgan, D.; Quan, J.; Budden, D.; Barth-Maron, G.; Hessel, M.; van Hasselt, H.; Silver, D. Distributed Prioritized Experience Replay. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Bai, S.; Kolter, J.Z.; Koltun, V. An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv 2018, arXiv:1803.01271.

- Chiaverini, S.; Sciavicco, L. The parallel approach to force/position control of robotic manipulators. IEEE Trans. Robot. Autom. 1993, 9, 361–373.

- Johannink, T.; Bahl, S.; Nair, A.; Luo, J.; Kumar, A.; Loskyll, M.; Ojea, J.A.; Solowjow, E.; Levine, S. Residual Reinforcement Learning for Robot Control. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 6023–6029.

- Silver, T.; Allen, K.R.; Tenenbaum, J.B.; Kaelbling, L.P. Residual Policy Learning. arXiv 2018, arXiv:1812.06298.

- Bellegarda, G.; Byl, K. Training in Task Space to Speed Up and Guide Reinforcement Learning. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 2693–2699.

- Koenig, N.; Howard, A. Design and use paradigms for Gazebo, an open-source multi-robot simulator. In Proceedings of the 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Sendai, Japan, 28 September–2 October 2004; Volume 3, pp. 2149–2154.

- Tobin, J.; Fong, R.; Ray, A.; Schneider, J.; Zaremba, W.; Abbeel, P. Domain randomization for transferring deep neural networks from simulation to the real world. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 23–30.

- Quigley, M.; Conley, K.; Gerkey, B.; Faust, J.; Foote, T.; Leibs, J.; Wheeler, R.; Ng, A.Y. ROS: An open-source Robot Operating System. In Proceedings of the ICRA workshop on Open Source Software, Kobe, Japan, 12–17 May 2009; Volume 3, p. 5.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).