Featured Application

This study provides researchers and educators with the resources to set up and run a software tool for the extraction, processing, visualization, and analysis of student self- and peer assessment data in Moodle Workshops.

Abstract

Situated at the intersection of two emerging trends, online self- and peer assessment and learning analytics, this study explores the current landscape of software applications that support peer assessment activities and the requirements for completing the learning analytics cycle with the information collected by those applications. More specifically, the study focuses on Moodle Workshops and proposes the design and implementation of an application, the Moodle Workshop Data EXtractor (MWDEX), to overcome the data analysis and visualization shortcomings of the Moodle Workshop module. This paper details the architecture design, configuration, and use of the application, and proposes an initial validation of the tool based on the current peer assessment practices of a group of learning analytics experts. The results of the small-scale survey suggest that the use of software tools to support peer assessment is not as widespread as it might initially seem, but they also highlight the potential of MWDEX to take full advantage of Moodle Workshops.

1. Introduction

The emergence of massive open online courses (MOOCs) has had a dramatic impact on several aspects of learning. Two of the most important elements affected by MOOCs are the analysis of data (learning analytics) as a means to understand what is actually occurring in a massive online course, and the need for efficient assessment methods that work in large-scale settings. Both aspects have also been shaped by the rapid implementation of virtual campuses across higher education institutions and the shift toward student-centered learning with intensive use of information technologies. Nonetheless, academic researchers and practitioners have rarely explored the intersection of learning analytics and peer assessment software tools. This study aims to contribute to both topics by exploring the correspondence between software that supports peer assessment and the different stages of the learning analytics cycle, with a special focus on Moodle, the most widely used learning management system, and Moodle Workshop, a Moodle module that supports peer assessment.

The following introductory subsections outline the context, main motivations, and objectives of the study. The first subsection situates the research in the emerging field of learning analytics, focusing on one of the least explored stages of the learning analytics cycle: data preparation. The second subsection presents the learning context (peer assessment), analyzes the main functionalities of peer assessment tools, describes the operation of Moodle Workshops, and discusses their main advantages and current limitations. To overcome some of the shortcomings of the Moodle Workshop module for learning analytics purposes, particularly those related to the preparation, analysis, and visualization of peer assessment data, Section 2 details the design and implementation of an application for extracting, processing, and visualizing such data, the Moodle Workshop Data EXtractor (MWDEX), and explores an initial validation of the software. Finally, Section 3 summarizes the main conclusions and future lines of research.

1.1. Learning Analytics and Data Preparation

Learning analytics is an integral part of smart education, smart learning, and the smart classroom [1]. It is an emerging field in education whose main objective is to improve teaching and optimize learning processes, a promise that has given this relatively new discipline a central role in IT-supported education. Educational data are an essential element of learning analytics; more specifically, learning analytics focuses on how educational data associated with interactions between learning agents and supporting technologies can be captured, analyzed, represented, and made available to students and instructors as information that can be used for interventions and educational decision making. Learning analytics most often covers a wide range of IT-mediated learning methodologies, such as flipped classroom [2], blended learning [3,4,5], traditional online distance learning [6,7], mobile learning [8], MOOCs [9], serious games [10], and simulation-based learning [11], but it can also use data generated in traditional in-class learning environments, such as video captured during classroom activities, movement information from body sensors, or eye-tracking devices [12], among others.

Capturing the activity of teachers or learners through data collection is considered only the first step in the cycle of learning analytics processes [13,14,15,16]; it is followed by data processing and analysis, which can serve as a basis for potential interventions or changes in the learning design. According to [17], educational data are a proxy between social-cognitive processes and their outputs in learning analytics processes, where the outputs must be explained in terms of the results of the analysis. The authors identify four types of data (primary data, data resulting from measurements of artifacts, repurposed data, and transformed data) and two routes to educational intervention and outcome: direct analysis, using the first three types of data, and data transformation and analysis, using transformed data.

The first route generally does not require data manipulation, and its effectiveness mostly depends on the choice of data elements to be included in the analysis and presented to users, especially with large datasets or heterogeneous data. This distinction deserves attention because most current research on learning analytics focuses on the development of data mining techniques and algorithms, on the results of applying such techniques, and on the visual presentation of data. For example, in their systematic literature review on educational data mining, the authors of [18] identify 166 research articles published between 1983 and 2016 that deal with clustering methods (i.e., data mining algorithms and analysis techniques) in educational settings. The same is not true for data extraction and processing, as the mapping study in [19] confirms. In general, little attention is given to the internal operation of data processing, even though it is an essential part of the learning analytics cycle and increases the effectiveness of most learning analytics and educational data mining techniques [22]. Data preprocessing generally involves three types of operations as a prerequisite for data mining: data reduction, data projection [20], and data aggregation [21]; this research focuses specifically on the aggregation of peer assessment data as a means to facilitate visualization and to ensure extensibility and interoperability. Furthermore, even when the methods for data collection are reported, researchers often perform manual operations and then turn their attention to the analysis, rarely reporting exactly how the data were prepared and aggregated (e.g., [23,24,25]).
As Section 2 shows, different alternatives can be used for the extraction and processing (filtering, transformation, and aggregation) of educational data from learning management systems (LMS), but our knowledge about available tools or processes to prepare data for learning analytics purposes is still limited.

1.2. Peer Assessment

Peer assessment and peer grading refer to the evaluation of students’ assignments by their peers under a set of criteria defined by the instructors, most likely in the form of an assessment rubric. The term rubric refers to “a scoring guide used to evaluate the quality of students’ constructed responses” [26]; that is, a rubric lists the criteria for a piece of work and articulates gradations of quality for each criterion, from excellent to poor [27]. A rubric has three essential features: evaluative criteria (used to distinguish acceptable responses from unacceptable ones), quality definitions (describing how qualitative differences in students’ responses are to be judged), and a scoring strategy (either holistic/aggregated, or analytic, i.e., rendered criterion-by-criterion) [26]. There are two main drivers of the increasing use of peer assessment in higher education. On the one hand, the implementation of the Bologna Process and the European Higher Education Area, which emphasizes student-centered learning techniques and methodologies [28,29]; on the other hand, the rise of MOOCs, where scaling the assessment process is not feasible with other assessment methods (except perhaps tests and multiple-choice questions) [30,31], especially in writing domains [32]. Peer assessment is considered an adequate assessment method in collaborative learning settings [33,34] and a helpful tool to promote students’ critical thinking while fostering self-reflection about the work done. Peer assessment is also common in team-centered, project-based learning methodologies, where students are asked to assess the work done by their teammates, or teams assess other teams’ work; in addition, it can be complemented with self-assessment mechanisms in which students evaluate their own work [35].
The type of peer assessment can also vary depending on the learning goals, contexts, and technologies, and can be used both for formative and summative assessment, using different grading criteria to define the final grade in the case of the latter.
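The three rubric features described in [26] can be made concrete with a small data model. The sketch below is a hypothetical illustration, and the type and function names are ours rather than those of any tool discussed here. Each criterion lists its quality levels with their points. Analytic scoring picks one level per criterion, and aggregated scoring collapses the criterion scores into a single total.

```typescript
// Hypothetical data model for a rubric, following the three features in [26]:
// evaluative criteria, quality definitions (levels), and a scoring strategy.
interface QualityLevel {
  definition: string; // how this level of quality is recognized
  points: number;
}

interface Criterion {
  name: string;
  levels: QualityLevel[]; // ordered from poor to excellent
}

// Analytic scoring: one level is chosen per criterion (criterion-by-criterion).
function analyticScores(criteria: Criterion[], chosen: number[]): Record<string, number> {
  if (chosen.length !== criteria.length) {
    throw new Error("one level index is required per criterion");
  }
  const scores: Record<string, number> = {};
  criteria.forEach((c, i) => {
    const level = c.levels[chosen[i]];
    if (level === undefined) throw new Error(`invalid level for ${c.name}`);
    scores[c.name] = level.points;
  });
  return scores;
}

// Aggregated scoring: the criterion scores are collapsed into a single total.
function aggregatedScore(scores: Record<string, number>): number {
  return Object.keys(scores).reduce((sum, k) => sum + scores[k], 0);
}
```

Consider a two-criterion rubric scored at the top level for content (10 points) and the middle level for style (3 points). It yields the analytic scores `{ Content: 10, Style: 3 }` and an aggregated score of 13.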

The main benefits of peer assessment are generally associated with deeper learning experiences for students through reflection and self-criticism, higher student involvement with the course and with the team (as a consequence of their inclusion as an active part of the assessment and grading process), a better and deeper understanding of assessment criteria, and a higher perception of fairness through student empowerment [36,37]. For the instructor, the main benefits are an improved overall learning experience for students, but also time savings. There are also critical views of peer assessment, mostly related to different perceptions between students and instructors about fairness, workload, or anonymity in the assessment process [38]. In [38], the authors state that proper explanation or moderation by instructors can reduce the perception of unfairness and that, when used for summative assessment, peer grading can lower students’ perceived value of their degree or, as [39] point out, lead to grade inflation and a failure to differentiate high-contributing students from their counterparts. Nonetheless, using the particular case of Moodle Workshops, [38] argue in favor of using Moodle Workshops with both a formative and a summative approach.

1.2.1. Peer Assessment Software Tools

In general terms, peer assessment can be carried out without the need for, or the support of, specialized software. For instance, a face-to-face discussion of a student or group presentation can be considered a form of formative peer assessment, and feedback about an assignment can also be gathered in written form. However, there are several benefits associated with the use of dedicated software tools to support self- and peer assessment processes. First, all the information related to the process (assignment submission, self-assessment, peer grading, and feedback) is centralized in the same repository; second, anonymization is easily achieved by masking the identity of the student submitting the assignment and of the student performing the peer review; third, the random distribution of assignments among reviewers can be easily automated; fourth, the different phases of the process can be scheduled; finally, different levels of access can easily be granted to instructors and students based on permissions.
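Masking identities, the second benefit listed above, essentially means replacing real user identifiers with stable pseudonyms before reviews are displayed. The following sketch assumes hypothetical `Review` records holding numeric user IDs. It illustrates the idea and is not the anonymization mechanism of any particular platform.

```typescript
// Hypothetical sketch: mask user identities with stable pseudonyms so that
// reviewers and authors do not see each other's real identifiers.
interface Review {
  authorId: number;   // student who submitted the assignment
  reviewerId: number; // student who performed the review
  grade: number;
}

interface MaskedReview {
  author: string;
  reviewer: string;
  grade: number;
}

function pseudonymize(reviews: Review[]): MaskedReview[] {
  const labels: Record<string, string> = {};
  let next = 1;
  // The same real ID always maps to the same label within one call;
  // labels are handed out in order of first appearance.
  const label = (id: number): string => {
    const key = String(id);
    if (!(key in labels)) {
      labels[key] = `Student ${next}`;
      next += 1;
    }
    return labels[key];
  };
  return reviews.map((r) => ({
    author: label(r.authorId),
    reviewer: label(r.reviewerId),
    grade: r.grade,
  }));
}
```

Labels are assigned in order of first appearance, so a real system would also shuffle the input or use random labels. Otherwise the pseudonyms could leak submission order.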

Several software systems support online self- and peer assessment. Most often they are included in the most popular LMS or MOOC platforms (Self and Peer Assessment in Blackboard [40], Peer Review in Canvas [41,42,43], Open Response Assessments in edX [44], and the different options in Moodle detailed later on), but they can also be offered as standalone platforms (e.g., peerScholar [45], Peerstudio [46], TEAMMATES [47], PeerWise [48], or Access Peer Assess Pro (Xorro) [49]) or be used either independently or integrated in a learning management system (e.g., ComPAIR [50,51], Peerceptiv [52]). Most of these software tools differentiate the following four stages in the elaboration of a peer-graded assignment: creation and implementation of the activity in the platform, including (if necessary) the loading of information about participants; submission; reviewing and grading; and results and reflection. Table 1 below shows the main characteristics of the different software tools (it was not possible to check the availability of functionalities in some platforms).

Table 1.

Comparative table of the main software tools to support self- and peer assessment and their functionalities.

The main differences across the software solutions lie in how grades are calculated (e.g., median, average, composite scores) and in other aspects specific to each of them: Canvas’s Peer Review and peerScholar focus more strongly on feedback and self-reflection than on grading; ComPAIR’s peer assessment process follows a comparative approach in which students compare two assignments; PeerWise only allows peer review of assignments authored by students and includes gamification elements, such as badges and reputation; TEAMMATES and Peerceptiv have a strong orientation toward peer assessment of groups; and Access Peer Assess Pro offers a broad range of statistics associated with the assessment process.
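The choice of aggregation function matters when one reviewer deviates strongly from the rest. The following minimal sketch, with hypothetical helper names, computes the mean and the median of the peer grades received by one submission:

```typescript
// Two of the grade-calculation strategies mentioned in the text: the mean and
// the median of the peer grades received by one submission.
function mean(grades: number[]): number {
  if (grades.length === 0) throw new Error("no grades");
  return grades.reduce((a, b) => a + b, 0) / grades.length;
}

function median(grades: number[]): number {
  if (grades.length === 0) throw new Error("no grades");
  const sorted = grades.slice().sort((a, b) => a - b); // copy, then sort numerically
  const mid = Math.floor(sorted.length / 2);
  // Odd count: middle value; even count: average of the two middle values.
  return sorted.length % 2 === 1 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
}
```

With the grades 78, 80, 82, and 20, the mean drops to 65, whereas the median stays at 79. This illustrates how differently the two strategies react to a single outlying reviewer. Composite scores are platform-specific and are not shown.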

As Table 1 shows, most of the available options share the majority of functionalities, such as: (1) phased structure, by defining the peer assessment process as a sequence of phases or stages, which can be scheduled and generally consist of assignment preparation and initial setup of the activity, submission stage, assessment, grade calculation, and reporting; (2) grading scheme to assign quantitative grades during the assessment process; (3) self-assessment capabilities; (4) anonymity during the assessment process, including either single or blind review; (5) use of assessment rubrics, with or without an existing assessment provided as an example or grading guide; (6) provision of feedback in the form of comments; (7) presentation of results in visual form, with tables and graphs; (8) data export capabilities; (9) automated assignment of reviewers to the submissions; and (10) possibility to form different assessment groups, which could be useful for assessment of team-based activities.

1.2.2. Peer Assessment in Moodle

Table 1 purposely omits Moodle. Moodle is an open-source learning management system written in PHP (PHP: Hypertext Preprocessor) that supports the creation of online learning communities in different teaching and learning delivery modes (blended learning, online learning, flipped classroom, etc.). Moodle is the most widely used learning platform in the world, with 20 million registered courses, 175 million users, and 109,000 sites in 234 countries [53]. Moodle has a student-centered design that facilitates collaborative learning and interactions between students and instructors, as well as among students in the virtual classroom.

Three Moodle activity modules offer peer assessment functionalities: workshops, message boards, and the database module, each with its own advantages and disadvantages [54]. Of the three, Moodle Workshops are the most used for self- and peer assessment, as they offer by far the largest set of functionalities to support peer assessment activities.

In Moodle Workshops, students complete and submit their assignments following a series of five phases planned by the instructor which include: setup, submission, assessment, grade calculation, and closing of the activity. The progress of the activity is visible in the Workshop Planner Tool, along with all the tasks the user needs to perform during that phase and the duration of the phase. The peer assessment process is based on the idea that students submit their own assignments and then receive a predefined number of submissions from other students that they must review and assess, anonymously or not, according to the instructions and assessment criteria set by the instructor; if the instructor requests self-assessment, students must assess their own submissions too.
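The allocation rule described above gives each student a predefined number of peer submissions and, optionally, their own. It can be sketched as follows. This is not Moodle's allocation logic: the ring-based scheme, the function name `allocateReviews`, and the injectable random-number generator are assumptions chosen to keep the sketch short and testable. The students are shuffled, and each one reviews the next `n` students in the shuffled order. This guarantees three properties: every student reviews exactly `n` peers, every submission receives exactly `n` peer reviews, and nobody reviews their own work unless self-assessment is enabled.

```typescript
// Hypothetical sketch of random reviewer allocation (not Moodle's allocator).
// Student IDs are assumed to be distinct.
function allocateReviews(
  studentIds: number[],
  n: number,
  selfAssessment = false,
  random: () => number = Math.random,
): Record<string, number[]> {
  const k = studentIds.length;
  if (n < 0 || n >= k) throw new Error("n must be between 0 and (number of students - 1)");
  // Fisher-Yates shuffle of a copy, so that the allocation is random.
  const order = studentIds.slice();
  for (let i = k - 1; i > 0; i--) {
    const j = Math.floor(random() * (i + 1));
    const tmp = order[i];
    order[i] = order[j];
    order[j] = tmp;
  }
  // The student at position i reviews the next n students in the shuffled ring,
  // so each position is targeted by exactly n predecessors.
  const allocation: Record<string, number[]> = {};
  order.forEach((reviewer, i) => {
    const targets: number[] = [];
    for (let step = 1; step <= n; step++) targets.push(order[(i + step) % k]);
    if (selfAssessment) targets.push(reviewer); // own submission, if enabled
    allocation[String(reviewer)] = targets;
  });
  return allocation;
}
```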

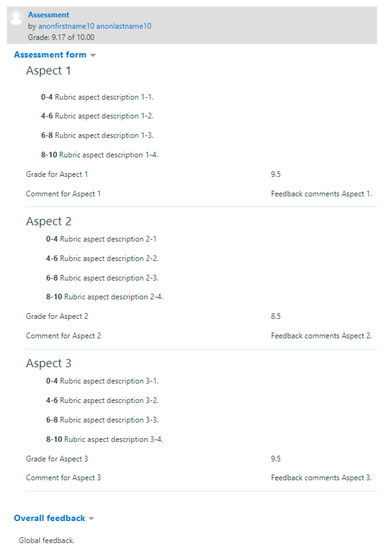

During the setup phase, instructors can modify all workshop settings: dates, grading strategy, information, assessment rubrics, etc. Students cannot perform any activity in the setup phase. In the submission phase, students are allowed to submit their assignments; the instructor can define the start and end dates of submission and check the current submission status of all students. The assessment phase is when students perform and submit their assessments of other students’ submissions (and of their own submission, if self-assessment is enabled) until the specified end date and time. Depending on the settings, students must either submit a final grade or grade different aspects of the assignment based on an assessment rubric; they can also provide specific feedback about the different aspects of the rubric, as well as global feedback about the submission. Assessment is followed by grading, which involves the calculation of final grades and the provision of feedback for students, both as authors and as reviewers. Submissions cannot be modified during this phase. Instructors can also add their own grades and publish the results. Moodle uses a grading method called “comparison with the best assessment”, which attempts to model what a hypothetical, absolutely fair assessment would look like and measures the “distance” of the other assessments from this best assessment; five options determine how strict the comparison should be: very lax, lax, fair, strict, and very strict. In this stage, instructors can also grade reviewers and provide feedback about their assessments. Closing means that the grades are written to Moodle’s Gradebook and made visible to students, who can then view their submissions and the reviews and feedback received, opening a space for self-reflection.
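The phase sequence can be summarized as a small state model. The sketch below simplifies under stated assumptions. The phases are traversed strictly in order. The student actions (`submit`, `assess`, `viewResults`) are hypothetical labels derived from the description above, not Moodle capability names. In Moodle, it is the instructor who switches between phases.

```typescript
// Hypothetical model of the five workshop phases described above and of what
// a student may do in each one (simplified from the description in the text).
type Phase = "setup" | "submission" | "assessment" | "grading" | "closed";

const PHASE_ORDER: Phase[] = ["setup", "submission", "assessment", "grading", "closed"];

const STUDENT_ACTIONS: Record<Phase, string[]> = {
  setup: [],                // students cannot perform any activity
  submission: ["submit"],   // students upload their assignment
  assessment: ["assess"],   // peer (and optional self-) assessment
  grading: [],              // submissions are frozen while grades are calculated
  closed: ["viewResults"],  // grades and feedback become visible
};

function nextPhase(current: Phase): Phase {
  const i = PHASE_ORDER.indexOf(current);
  // The closed phase is terminal.
  return i === PHASE_ORDER.length - 1 ? current : PHASE_ORDER[i + 1];
}

function canStudent(phase: Phase, action: string): boolean {
  return STUDENT_ACTIONS[phase].indexOf(action) !== -1;
}
```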

An interesting feature of Moodle Workshops is that, in addition to the submission grade (the grade given by one student to another), Moodle also calculates an assessment score, which indicates how close the grade given by a student is to the average grade received by the assessed student. This gives an idea of how “good”, or reliable, a student is at assessing the work of their peers; Moodle offers different ways to calculate assessment scores.
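A deliberately simplified sketch can illustrate the idea behind the assessment score. It is not Moodle's “comparison with the best assessment” algorithm, and its choices are assumptions. The reference assessment is simply the criterion-wise mean of all assessments of one submission. Distance is the root-mean-square difference in grade points. The factors associated with the five strictness levels are arbitrary values chosen for illustration.

```typescript
// Simplified illustration (NOT Moodle's actual algorithm) of scoring an
// assessment by its distance from a reference assessment. The reference is
// the criterion-wise mean of all assessments of one submission, and the
// strictness factors below are arbitrary illustrative values.
type Strictness = "veryLax" | "lax" | "fair" | "strict" | "veryStrict";

const FACTOR: Record<Strictness, number> = {
  veryLax: 0.5, lax: 1, fair: 2, strict: 3, veryStrict: 5,
};

// Each assessment is a list of criterion grades on a 0-100 scale.
function assessmentScores(assessments: number[][], strictness: Strictness): number[] {
  if (assessments.length === 0) return [];
  const dims = assessments[0].length;
  // Reference assessment: mean grade per criterion.
  const reference: number[] = [];
  for (let d = 0; d < dims; d++) {
    let sum = 0;
    assessments.forEach((a) => { sum += a[d]; });
    reference.push(sum / assessments.length);
  }
  return assessments.map((a) => {
    // Root-mean-square distance from the reference, in grade points.
    let sq = 0;
    for (let d = 0; d < dims; d++) sq += (a[d] - reference[d]) ** 2;
    const distance = Math.sqrt(sq / dims);
    // Stricter settings penalize the same distance more heavily.
    return Math.max(0, 100 - FACTOR[strictness] * distance);
  });
}
```

Consider three assessments of the same submission: (70, 70), (90, 90), and (80, 80). The reference is (80, 80). Under the “fair” factor, the first two assessments score 80 and the third scores 100. A stricter factor penalizes the same distance more heavily.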

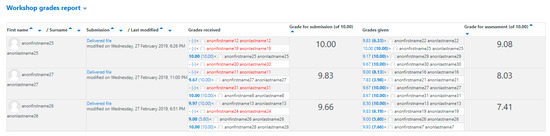

Compared with the functionalities of the tools and learning management systems discussed in the previous section, Moodle Workshops have three important drawbacks. First, the visualization of results is very limited: the results are displayed in a browsable but static table, in which instructors can only drill down to individual assessments by following hyperlinks (see Figure 1 and Figure 2). Second, a complete extraction of the activity data is complex (excluding course- and data-related tables, it involves collecting data from 14 different database tables related to the Workshop module). Third, although instructors can export final grades from the Moodle Gradebook or explore the information related to any assessment in their browser, the information about each individual assessment, partial grades (e.g., grades for specific aspects of a rubric), and written feedback cannot currently be exported.

Figure 1.

Overview of Moodle Workshop grading table summary.

Figure 2.

Detail of a submission review in Moodle Workshop.

Such exported data could be valuable for creating personalized reports using assessment rubrics or for performing different types of analysis of peer-assessed activities. Because Moodle is open source, its functionality can be extended through external plug-ins or web services, and the code and database structure of the LMS can be modified. Taking advantage of these extensibility features, extensions to the Moodle Workshop module have already been proposed; for instance, [55,56] propose an extension of the assessment score calculation using Bayesian networks, which requires code and database modifications and the design of an application programming interface (API). This approach to extending the Moodle Workshop module is discussed in Section 2.

1.3. Closing the Learning Cycle in Moodle Workshops

As seen in the previous section, Moodle Workshops have high potential for the application of learning analytics techniques but lack the elements needed to close the learning analytics cycle, i.e., a complete and effective method for data extraction, processing, and export, and some form of visualization to facilitate analysis, sensemaking, and decision making. Therefore, the main objective of this research is to overcome these limitations, which hinder the use of Moodle Workshops in general and for learning analytics purposes in particular. To achieve this objective, the remainder of this paper details the design, development, and implementation of the Moodle Workshop Data EXtractor (MWDEX), a tool for peer assessment data extraction, processing, and visualization in Moodle LMS.

2. MWDEX: Moodle Workshop Data EXtractor

2.1. Objectives and Design Approaches

Following the discussion in Section 1, MWDEX is designed with the main objective of extracting data from Moodle Workshops for further analysis through data export and visualization. By exporting data, MWDEX extends the functionality of Moodle Workshop, returning a dataset that instructors can edit, manipulate, combine, and analyze at their convenience in a browser or in external spreadsheet software. To facilitate the analysis, the dataset should include all the available and relevant information about the peer assessment of all the assignments; that is, the data should include a list of all the submissions of a given assignment with the following information: name or identifier of the submitter, name or identifier of the reviewer, and grade assigned/received. In addition, if the assignment uses an assessment rubric, the dataset should incorporate the grades given/received for each aspect of the rubric. If the assessment includes feedback comments, these should also be included in the dataset (both global feedback comments and feedback about specific aspects of the rubric, if any). Finally, when the assignment also includes self-assessment, the dataset should identify each record as pertaining to self- or peer assessment.
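The dataset requirements listed above translate naturally into a record type. The following sketch uses hypothetical field names, and MWDEX's actual output columns may differ. It derives the self/peer flag by comparing the submitter and reviewer identifiers, which assumes that those fields store identifiers rather than display names.

```typescript
// Hypothetical shape of one row of the exported dataset, following the
// requirements listed above. Field names are illustrative, not MWDEX's own.
interface AssessmentRecord {
  submitter: string;                      // identifier of the author
  reviewer: string;                       // identifier of the assessor
  grade: number | null;                   // overall grade (null if not yet graded)
  rubricGrades: Record<string, number>;   // grade per rubric aspect, if a rubric is used
  aspectFeedback: Record<string, string>; // feedback per rubric aspect, if any
  globalFeedback: string;                 // overall feedback comment
  type: "self" | "peer";                  // self- or peer assessment
}

function makeRecord(
  submitter: string,
  reviewer: string,
  grade: number | null,
  rubricGrades: Record<string, number> = {},
  aspectFeedback: Record<string, string> = {},
  globalFeedback = "",
): AssessmentRecord {
  return {
    submitter, reviewer, grade, rubricGrades, aspectFeedback, globalFeedback,
    // A record is a self-assessment when the reviewer is also the submitter.
    type: submitter === reviewer ? "self" : "peer",
  };
}
```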

When planning to extract data from, or extend the functionality of, a Moodle module, as in this case, there are three design alternatives (Table 2). The first approach is to craft a tailored SQL (Structured Query Language) query that returns a given dataset from the database based on the parameters used in the query; this option requires either direct access to the database and the use of additional drivers (as in [57]) or the use of custom reporting plug-ins (e.g., https://docs.moodle.org/37/en/Custom_SQL_queries_report), as well as the necessary privileges and knowledge of SQL syntax and of the structure of the Moodle database. Furthermore, it is highly dependent on the specific internal identifiers of the course and workshop, so the query must be adapted whenever a change affecting these identifiers occurs. Therefore, such a method can only be considered a temporary solution.

Table 2.

Comparison of design choices for data extraction of peer assessment in Moodle.

The second approach entails developing a Moodle local plug-in, generally written in PHP, or modifying the module files; this option can also be complemented with an external application that processes the data from the plug-in or the modified module. Examples of these procedures are the extensions of the Moodle Workshop module in [55,56]. Local plug-ins and modifications of Moodle modules have two important drawbacks. First, compatibility with newer versions of Moodle is not guaranteed, which requires checking (and, if necessary, updating) the plug-in after every LMS update, or reapplying the changes to the module files after every Moodle version update. Second, many LMS administrators are reluctant to install external plug-ins (and plainly refuse to modify original LMS files) due to security concerns.

A third way to extend Moodle functionalities is to use Moodle’s native web service infrastructure, which allows external applications to collect or manipulate Moodle data. Moodle currently offers a series of web service API functions (named core web service functions) that provide access to information about components of the LMS (e.g., the mod_workshop_get_submission_assessments function returns a list of all the assessments of a given submission). When a required function is not yet implemented as part of the core web service functions, the recommended procedure is to develop a plug-in that implements and exposes the function; this approach is followed in [58,59], but it is also affected by the drawbacks associated with local plug-in installation. However, when all the required functions are available in the core web service functions, it is possible to create a web service that allows an external application to access the information stored in the Moodle database through those functions. Because the web service API is maintained across Moodle versions and the access permissions can be managed directly by Moodle administrators, such a solution does not present the drawbacks associated with local plug-ins, which is why this approach was chosen for the development of MWDEX. An additional benefit of a web service-based approach is that developers have complete freedom in the design (programming language, user interface) of the external application that collects and processes the data.
To accomplish its goal, MWDEX must retrieve all the information available about a given peer assessment assignment (i.e., a Moodle Workshop activity), process and transform the data, and deliver three types of output: a human-readable file that is easy to manipulate, edit, and store and that offers analysis capabilities (e.g., an MS Excel spreadsheet); a file that any other external program can use for extended functionality (e.g., JSON (JavaScript Object Notation)); and visual representations of the information from the Moodle Workshop activity for analysis.

A final consideration in the design of the application is that the use of web services involves HTTP (Hypertext Transfer Protocol) requests to the Moodle web services infrastructure, which returns responses in JSON format, so the application must handle JSON data. The application must also ensure compatibility with different versions of the LMS (guaranteed by the use of Moodle web services), client platform independence (e.g., an online web application that can be accessed from any browser, regardless of the operating system), and high processing speed. Given its superior performance [60,61], the application is programmed in Node.js.
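Moodle's REST web service protocol is addressed through plain HTTP requests, which makes the request side easy to sketch. The endpoint paths (`/login/token.php` and `/webservice/rest/server.php`) belong to Moodle's web service infrastructure, as do the parameters `wstoken`, `wsfunction`, and `moodlewsrestformat`. The helper names, the example site URL, and the service short name `mwdex` used below are hypothetical. List parameters are encoded as `key[0]=value`. Failures are typically reported inside the JSON body rather than through HTTP status codes: the token endpoint returns an `error` field, and function calls return an `exception` field. The response must therefore be checked explicitly.

```typescript
// Sketch of URL construction for Moodle's REST web service endpoints.
// Helper names are hypothetical; endpoint paths and wstoken / wsfunction /
// moodlewsrestformat are those of Moodle's web service infrastructure.
type Params = Record<string, string | number | Array<string | number>>;

function encodeParams(params: Params): string {
  const parts: string[] = [];
  Object.keys(params).forEach((key) => {
    const value = params[key];
    if (Array.isArray(value)) {
      // List parameters are sent as key[0]=..., key[1]=...
      value.forEach((v, i) => {
        parts.push(`${encodeURIComponent(`${key}[${i}]`)}=${encodeURIComponent(String(v))}`);
      });
    } else {
      parts.push(`${encodeURIComponent(key)}=${encodeURIComponent(String(value))}`);
    }
  });
  return parts.join("&");
}

// URL used to obtain a user token for a given web service (short name).
function tokenUrl(base: string, username: string, password: string, service: string): string {
  return `${base}/login/token.php?${encodeParams({ username, password, service })}`;
}

// URL used to call one web service function with the user's token.
function functionUrl(base: string, token: string, wsfunction: string, params: Params = {}): string {
  const all: Params = { wstoken: token, wsfunction, moodlewsrestformat: "json" };
  Object.keys(params).forEach((k) => { all[k] = params[k]; });
  return `${base}/webservice/rest/server.php?${encodeParams(all)}`;
}

// Throws if the parsed JSON body carries an error or exception field.
function checkResponse<T>(body: unknown): T {
  const obj = body as { error?: string; exception?: string; message?: string };
  if (obj && typeof obj === "object" && (obj.error !== undefined || obj.exception !== undefined)) {
    throw new Error(obj.error ?? obj.message ?? "Moodle web service error");
  }
  return body as T;
}
```

In Node.js 18 or later, the resulting URLs could be passed to the built-in `fetch`, for example as `checkResponse(await (await fetch(url)).json())`.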

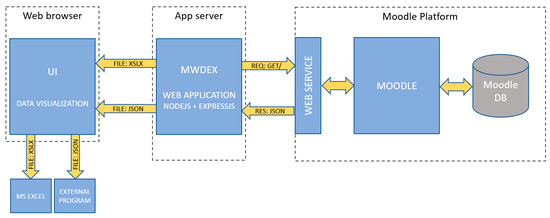

2.2. Architecture Design

On the basis of the design principles outlined in the previous section, MWDEX (https://github.com/TIGE-UPM/MWDEX) follows a model-view-controller approach and comprises three components: a Moodle web service, the application (and application server), and the front end or user interface (web browser). The web service enables access to the necessary Moodle core web service functions (detailed in Section 2.3), which communicate over the REST protocol with the web application (which can be run on a single computer as localhost or on a web server). The web application sends data requests to the LMS; each request is handled by the Moodle web services infrastructure, which returns the necessary data in JSON format. Upon receiving the data, the web application processes it and returns an MS Excel or JSON file with information about peer assessments for download; alternatively, the JSON data can be processed to produce an interactive visualization of the information in the web browser.
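Before an MS Excel file can be written, the nested assessment data must be flattened into rows and columns. The sketch below shows one hypothetical way to do this, with one row per assessment and one column per rubric aspect found in the data. Writing the actual .xlsx file would require a spreadsheet library and is not shown. For the JSON output, `JSON.stringify` on the same records would suffice.

```typescript
// Hypothetical sketch of the tabular step that precedes writing a spreadsheet:
// each assessment becomes one row, and every rubric aspect found in the data
// becomes its own column (empty cell when an assessment lacks that aspect).
interface Row {
  submitter: string;
  reviewer: string;
  grade: number | null;
  rubricGrades: Record<string, number>;
}

function toTable(rows: Row[]): (string | number)[][] {
  // Collect rubric aspects in order of first appearance.
  const aspects: string[] = [];
  rows.forEach((r) => {
    Object.keys(r.rubricGrades).forEach((a) => {
      if (aspects.indexOf(a) === -1) aspects.push(a);
    });
  });
  const header: (string | number)[] = ["Submitter", "Reviewer", "Grade"].concat(aspects);
  const body = rows.map((r) => {
    const cells: (string | number)[] = [r.submitter, r.reviewer, r.grade === null ? "" : r.grade];
    aspects.forEach((a) => {
      cells.push(a in r.rubricGrades ? r.rubricGrades[a] : "");
    });
    return cells;
  });
  return [header].concat(body);
}
```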

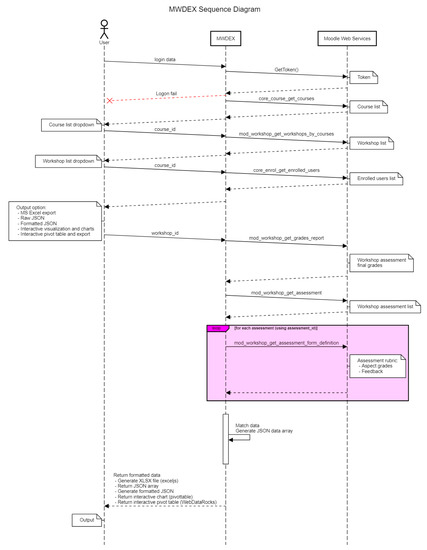

The process depicted in Figure 3, which summarizes the operation of MWDEX, can be explained in the following sequential stages:

Figure 3.

Graphic overview of Moodle Workshop Data EXtractor (MWDEX).

- The user (most likely, a teacher or course administrator) accesses the web application using a web browser (leftmost part of Figure 3), and enters his or her authentication details in Moodle, including the URL of the Moodle instance and the name of the web service. The web service needs to be activated in Moodle. The different functions of the web service are explained in further detail in Section 2.3.

- The HTTP request is passed from the browser to the web application.

- The web application requests the user’s token from Moodle.

- If the authentication credentials are correct, Moodle returns the token that the system uses for subsequent requests; otherwise, MWDEX shows a login error message.

- Using the token, the web application requests the list of courses available for that user in Moodle.

- Moodle returns the list of courses in JSON format. The list could be empty if the user cannot access any course or no course exists in the Moodle LMS.

- The web application retrieves the list of courses and displays it in the web browser.

- The user selects a course and sends a request for the workshops available in that course, which is passed to Moodle via the web application.

- If the user is not enrolled in the course, an error is returned. Otherwise, Moodle returns the list of workshops for that course in JSON format. The list can be empty if no workshops are defined in the course in the Moodle LMS.

- The web application requests the list of users enrolled in the course and the list of course workshops.

- The user selects what type of output he or she wants to receive. Upon user confirmation, the web application requests all the information of assessments for the selected workshop.

- Moodle returns workshop peer assessment information in JSON format.

- For each user, the web application populates a variable listing all the information about each submission assessed. This process is explained in more detail later on in this document.

- After collection of all data, the web application generates the output file (in MS Excel format or JSON).

- Depending on the type of output requested, the browser prompts the user for file download (MS Excel), displays a JSON file for download, or creates an interactive pivot table or graphs for data presentation and analysis using the JSON output.
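The per-user step in the list populates, for each user, a variable with every submission they assessed. It can be sketched as a grouping operation. The input shapes below are hypothetical simplifications of the parsed web service responses, not Moodle's exact response schema. The grouping is keyed by user ID because full names are not guaranteed to be unique.

```typescript
// Hypothetical sketch of the per-user aggregation step: raw assessment entries
// (as they might look after parsing the web service JSON) are grouped by
// reviewer ID, and author IDs are resolved to names from the enrolled users.
// The input field names are assumptions, not Moodle's exact response schema.
interface EnrolledUser { id: number; fullname: string; }
interface RawAssessment { assessmentid: number; authorid: number; reviewerid: number; grade: number | null; }
interface ReviewEntry { assessmentId: number; author: string; grade: number | null; self: boolean; }

function groupByReviewer(users: EnrolledUser[], assessments: RawAssessment[]): Record<string, ReviewEntry[]> {
  const names: Record<string, string> = {};
  users.forEach((u) => { names[String(u.id)] = u.fullname; });
  const nameOf = (id: number): string => names[String(id)] || `Unknown user ${id}`;

  const byReviewer: Record<string, ReviewEntry[]> = {};
  // Every enrolled user gets an entry, even if they reviewed nothing.
  users.forEach((u) => { byReviewer[String(u.id)] = []; });
  assessments.forEach((a) => {
    const key = String(a.reviewerid);
    if (!(key in byReviewer)) byReviewer[key] = [];
    byReviewer[key].push({
      assessmentId: a.assessmentid,
      author: nameOf(a.authorid),
      grade: a.grade,
      self: a.authorid === a.reviewerid, // self-assessment flag
    });
  });
  return byReviewer;
}
```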

2.3. Implementation of the Web Service

This section explains how to set up the web service in Moodle so that MWDEX can access the information needed to export or visualize peer assessment-related data. Even though web service activation in Moodle is a straightforward process, it is omitted from this document due to length limitations; we refer the reader to https://docs.moodle.org/37/en/Using_web_services for further information. Because all the required functions are provided natively by Moodle, there is no need to develop any external plug-in to access data. As mentioned earlier, a major advantage of using standard functions instead of a plug-in is that the code is easier to maintain and independent of changes in Moodle, unless the standard functions themselves change.

To access the workshop-related data from Moodle, the web service must be enabled and the REST protocol activated. These options appear in Moodle’s administration menu, under the “external services” section. To create the web service, the administrator adds a new service with the following functions:

- core_course_get_courses: Return course details. Required capabilities: moodle/course:view, moodle/course:update, moodle/course:viewhiddencourses.

- core_enrol_get_enrolled_users: Get enrolled users by course id. Required capabilities: moodle/user:viewdetails, moodle/user:viewhiddendetails, moodle/course:useremail, moodle/user:update, moodle/site:accessallgroups.

- mod_workshop_get_assessment: Retrieves the given assessment. Required capabilities: none.

- mod_workshop_get_assessment_form_definition: Retrieves the assessment form definition. Required capabilities: none.

- mod_workshop_get_grades_report: Retrieves the assessment grades report. Required capabilities: none.

- mod_workshop_get_workshops_by_courses: Returns a list of workshops in a provided list of courses; if no list is provided all workshops that the user can view will be returned. Required capabilities: mod/workshop:view.
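Some of these functions take array parameters (for instance, the list of course IDs passed to `mod_workshop_get_workshops_by_courses`). As we understand Moodle's REST conventions, such arrays are sent in PHP-style bracket notation (`courseids[0]=2&courseids[1]=5`). The following Python sketch flattens nested lists and dictionaries into that notation; `flatten_params` and `encode_query` are hypothetical helper names, and parameter names should be checked against the API documentation of the target Moodle site.

```python
from urllib.parse import urlencode


def flatten_params(params: dict, prefix: str = "") -> dict:
    """Flatten nested lists/dicts into Moodle REST 'name[key]' notation (hypothetical helper)."""
    flat = {}
    for key, value in params.items():
        name = f"{prefix}[{key}]" if prefix else str(key)
        if isinstance(value, dict):
            # Nested structure: recurse, extending the bracketed name.
            flat.update(flatten_params(value, name))
        elif isinstance(value, (list, tuple)):
            # Lists become 0-based indexed keys: name[0], name[1], ...
            flat.update(flatten_params(dict(enumerate(value)), name))
        else:
            flat[name] = value
    return flat


def encode_query(params: dict) -> str:
    """Percent-encode flattened parameters for use in a REST query string."""
    return urlencode(flatten_params(params))
```

For example, `flatten_params({"courseids": [2, 5]})` yields `{"courseids[0]": 2, "courseids[1]": 5}`; the brackets are percent-encoded in the final query string and decoded again on the Moodle side.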

The steps above activate the web service, but access authorization must also be configured. In the “external services” menu, administrators can authorize individual users to use the web service and, if required, generate access tokens for authorized users in the “manage tokens” section (alternatively, the web service can be configured to be accessible to all users).

2.4. Security and Access Configuration

As mentioned in Section 2.1, security is one of the main concerns of Moodle LMS administrators, which is why solutions based on plug-in installation are rarely adopted across institutions. The use of web services allows administrators to remain in complete control of the permissions and access levels of the different types of users, based on roles or individual permissions. Each function used by the web service requires a set of capabilities; in other words, if a user (or the role the user has in Moodle) lacks those capabilities, the functions, when called, return an error (“permission denied”) instead of the requested data. Because administrators have all the required capabilities by default, they only need a token to access the service; however, the teacher role generally assigned to instructors does not have the “moodle/webservice:createtoken” capability by default, which may prevent instructors from using MWDEX.
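Because a missing capability surfaces as an error payload rather than as data, a client needs to distinguish the two before processing a response. The Python sketch below assumes that Moodle's JSON error responses carry `exception`, `errorcode`, and `message` keys. `MoodleWSError` and `check_ws_response` are hypothetical names, and the exact `errorcode` returned for missing capabilities should be confirmed on the Moodle version in use.

```python
import json


class MoodleWSError(Exception):
    """Hypothetical exception type for Moodle web service error payloads."""

    def __init__(self, errorcode: str, message: str):
        super().__init__(f"{errorcode}: {message}")
        self.errorcode = errorcode


def check_ws_response(body: str):
    """Decode a REST JSON response and raise if Moodle signalled an error."""
    data = json.loads(body)
    # Successful calls may return a list or a dict; error payloads are
    # assumed to be dicts with an "exception" key plus "errorcode"/"message".
    if isinstance(data, dict) and "exception" in data:
        raise MoodleWSError(data.get("errorcode", "unknown"), data.get("message", ""))
    return data
```

Raising a dedicated exception lets the web application show a clear "permission denied" message to the instructor instead of silently rendering an empty course or workshop list.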

There are three valid solutions to this problem: (1) modifying the teacher role to grant it the missing capability, which would give this capability to all teachers in the LMS and could be considered a potential security flaw; (2) creating an “MWDEX teacher role” as a clone of the teacher role whose only difference is that the missing capability is enabled, and assigning that role to all teachers using Moodle Workshops and MWDEX, which limits the potential problem to the teachers holding the new role; (3) creating a web services user and a web services role with the appropriate capabilities allowed (in this case, moodle/webservice:createtoken and webservice/rest:use), and assigning that role to the web services user as a system role. The web services user is then added as an authorized user and assigned a token; administrators can then assign the web services role only to the teachers who will be using the web service, effectively limiting access to authorized users. The third option is the most restrictive and secure, and is therefore the one recommended for configuring the web service.

2.5. User Interface

The main component of MWDEX is the web application, programmed in JavaScript using Node.js and the Express framework, for the reasons mentioned in Section 2.1. Node.js allows its core functionality to be extended by importing external libraries. The four main external JavaScript libraries (with their corresponding dependencies) needed to fulfill the design requirements of the application are “jsonfile” (https://www.npmjs.com/package/jsonfile), to read and write JSON files; “request-promise” (https://github.com/request/request-promise), to add JavaScript promise support and control the flow of data processing; “exceljs/modern.nodejs” (https://github.com/exceljs), to export workshop data to MS Excel; and “pivottable” (https://github.com/nicolaskruchten/pivottable), for the interactive visual presentation layer. A typical MWDEX use case proceeds as follows:

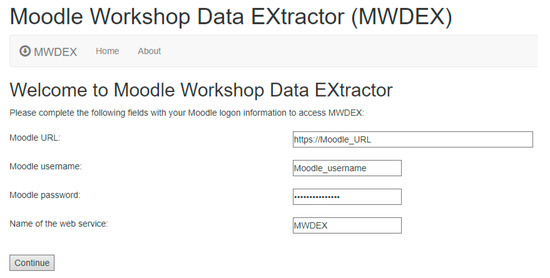

1. An instructor with a valid user account in the Moodle LMS instance accesses MWDEX with a web browser at the specified URL, either local or remote (by default, the application listens on port 3000, but it can be configured to listen on a different port). Once MWDEX loads in the browser, the interface prompts for the following logon credentials: username, password, URL of the Moodle LMS, and name of the web service (Figure 4). The application also allows the fields to be prepopulated using a URL with the following structure: https://[MWDEX_location]/#url=[MoodleURL]&username=[MoodleUsername]&password=[MoodlePassword]&service=[MWDEX_service_name_in_Moodle].
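The prepopulation URL places the logon fields in the URL fragment (after `#`), which browsers do not send to the server, so the fields have to be read on the client side. The following Python sketch shows the parsing logic only. MWDEX does this in the browser, and `prefill_fields` is a hypothetical name. It assumes that any `&` or `#` inside a field value (for example, in a Moodle URL with query parameters) is percent-encoded.

```python
from urllib.parse import urlsplit, parse_qs

# Field names taken from the URL structure described above.
LOGIN_FIELDS = ("url", "username", "password", "service")


def prefill_fields(address: str) -> dict:
    """Extract the logon fields from the fragment of an MWDEX address (hypothetical helper)."""
    fragment = urlsplit(address).fragment
    values = parse_qs(fragment, keep_blank_values=True)
    # parse_qs returns a list per key; keep the first value of each known field.
    return {field: values[field][0] for field in LOGIN_FIELDS if field in values}
```

An address without a fragment simply yields an empty dictionary, so the login form is shown blank.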

Figure 4.

MWDEX home page.

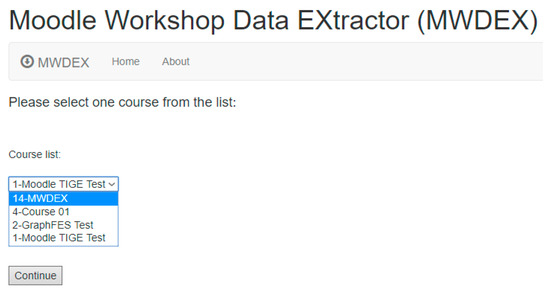

2. After authentication, the web interface of MWDEX displays a list of the available courses (Figure 5).

Figure 5.

MWDEX screen for course selection.

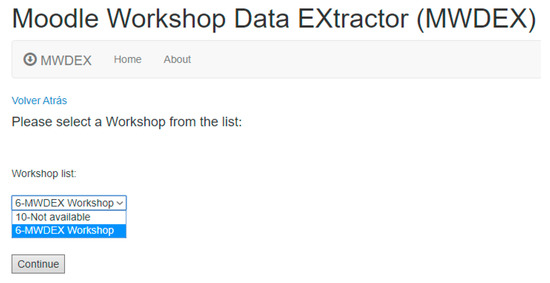

3. The instructor chooses one course and then MWDEX shows a list of the available workshops in that course (Figure 6).

Figure 6.

MWDEX screen for workshop selection.

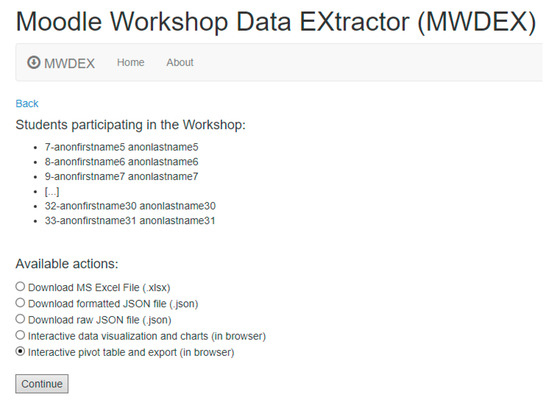

4. When a workshop is selected, the interface shows a list of all the students participating in the activity. The user must then choose the output format and confirm the selection (Figure 7). Five output options are available: an MS Excel file (.xlsx), a formatted JSON file (.json), a raw JSON file (.json), and two interactive visual presentations of the results for analysis (interactive data visualization and charts, and an interactive pivot table with export).

Figure 7.

List of enrolled students (edited due to size limitations) and output selection.

5. Once all data have been retrieved, they must be transformed so that each output file associates each user’s submission with its corresponding grades, reviewers, feedback, and assessments. This is where the main part of the MWDEX code is executed.

At this point, two main data structures are involved: an array of users, listing the students enrolled in the selected course, and a multidimensional array of heterogeneous data. This data array includes four subarrays: (1) an array with the different aspects of the assessment rubric (i.e., aspect grades and aspect feedback), which uses an assessment_id as identifier; (2) an array with the global feedback of each assessment, which also uses an assessment_id as identifier; (3) an array of submissions, with the submission IDs, assessment IDs, and the reviewing and reviewed (i.e., grading and graded) students; this array is not used to create the MS Excel file, but it is necessary to generate the formatted JSON output file; and (4) a data array including the identifiers of each submission, assessment, and user (submitter and reviewer), as well as the final grade and the average grade of each aspect of the rubric. Figure 8 summarizes the data extraction and visualization process.
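Because subarrays (1) and (2) share `assessment_id` as their key, they can be indexed once and looked up directly instead of being rescanned for every assessment. The Python sketch below illustrates that grouping. It deliberately swaps in dictionary lookups for the loop-based matching described for MWDEX, and the record layouts and field names (`aspect`, `grade`, `feedback`) are hypothetical sample data, not MWDEX's actual keys.

```python
from collections import defaultdict

# Hypothetical sample records mirroring subarrays (1) and (2) above.
aspects = [
    {"assessment_id": 10, "aspect": "Clarity", "grade": 8, "feedback": "Well structured"},
    {"assessment_id": 10, "aspect": "Depth", "grade": 6, "feedback": ""},
    {"assessment_id": 11, "aspect": "Clarity", "grade": 9, "feedback": "Good"},
]
global_feedback = [{"assessment_id": 10, "feedback": "Solid work"}]


def index_by_assessment(aspects, global_feedback):
    """Group rubric aspects and global feedback under each assessment_id."""
    # Each new assessment_id starts with no aspects and empty global feedback.
    by_assessment = defaultdict(lambda: {"aspects": [], "feedback": ""})
    for row in aspects:
        by_assessment[row["assessment_id"]]["aspects"].append(row)
    for row in global_feedback:
        by_assessment[row["assessment_id"]]["feedback"] = row["feedback"]
    return dict(by_assessment)
```

With the index in place, matching each entry of the data array (4) to its rubric aspects and global feedback becomes a single dictionary lookup per assessment.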

Figure 8.

Sequential diagram of the peer assessment data extraction and transformation process of MWDEX.

2.5.1. Data Export

The Excel file is generated (the formatted JSON file is generated similarly, with slight differences in data transformation) by a function that matches all the data and a variable that temporarily stores all the data before they are written to a file; this variable also includes an additional field that distinguishes peer-assessed from self-assessed submissions. The function goes through the data array and, for each element, runs three loops. The first traverses the user array and, through a nested loop, collects the names of the grading and graded students, the final grade, and the average grade of each aspect of the rubric. The second loop adds the global feedback of each submitted assessment to the result variable. Finally, the third loop adds the feedback and grade of each aspect of the rubric to the result variable. The resulting variable has the following form:

- [reviewer, submitter, final calculated grade, average grade of rubric aspects, global feedback, grade of each aspect, feedback of each aspect, self-/peer assessment].

This variable is then written to an MS Excel file using the “exceljs/modern.nodejs” library.
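The row layout listed above can be illustrated with a short Python sketch. It starts from already merged, hypothetical records (field names such as `reviewer_id` and `aspects` are assumptions). It writes CSV with the standard library as a stand-in for the exceljs-based Excel export, and it places all aspect grades before all aspect feedback, which is one reading of the listed order. Self-assessments are detected by comparing reviewer and submitter IDs, and missing values become blank cells.

```python
import csv
import io

users = {1: "Ana", 2: "Luis"}  # hypothetical user id -> display name

# Hypothetical merged records; "aspects" holds (grade, feedback) pairs per rubric aspect.
assessments = [
    {"reviewer_id": 1, "submitter_id": 2, "final_grade": 72.5,
     "feedback": "Solid work", "aspects": [(8, "Clear"), (6, "")]},
    {"reviewer_id": 2, "submitter_id": 2, "final_grade": None,
     "feedback": "", "aspects": [(None, ""), (None, "")]},
]

HEADER = ["reviewer", "submitter", "final_grade", "avg_aspect_grade", "global_feedback",
          "grade_aspect_1", "grade_aspect_2", "feedback_aspect_1", "feedback_aspect_2", "type"]


def build_rows(assessments, users):
    """Build one output row per assessment in the layout described above."""
    rows = []
    for a in assessments:
        grades = [g for g, _ in a["aspects"]]
        feedbacks = [f for _, f in a["aspects"]]
        present = [g for g in grades if g is not None]
        average = sum(present) / len(present) if present else None
        kind = "self" if a["reviewer_id"] == a["submitter_id"] else "peer"
        rows.append([users[a["reviewer_id"]], users[a["submitter_id"]],
                     a["final_grade"], average, a["feedback"],
                     *grades, *feedbacks, kind])
    return rows


def to_csv(rows, header):
    """Serialize rows to CSV text; csv.writer renders None as an empty cell."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(header)
    writer.writerows(rows)
    return buffer.getvalue()
```

The second sample record shows how a self-assessment that was never submitted ends up as a row of blank grade cells tagged "self".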

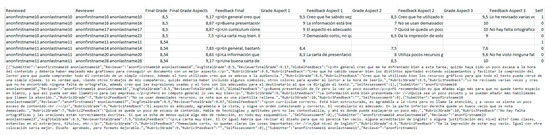

Once the application has finished processing the data and created the output file, the user can download it. The resulting file (in this example, a file with extension .xlsx) can be opened with an external application (e.g., MS Excel or OpenOffice Calc). Figure 9 shows an example of the .xlsx (top) and .json (bottom) outputs; in this case, each assignment had a maximum of three peer reviews and one self-assessment, and the assessment rubric graded three different aspects, with feedback for each aspect. Blank grade cells represent an assessment that was not submitted, and blank feedback cells indicate that no feedback was provided.

Figure 9.

Excerpts of the MS Excel (top) and raw JSON (bottom) output files returned by MWDEX.

With the output file, instructors have a complete view of all the results of the peer-review process, and they can also copy and paste the results into a master grading file, if they have one. Additionally, by taking advantage of the capabilities of spreadsheet software, instructors can gain insight into the results; for example, they can create offline graphs and analyze aggregated results using a simple pivot table.
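As an example of the kind of aggregation an instructor might build with a pivot table, the following Python sketch computes the average final grade each submitter received, separately for peer assessments and self-assessments, skipping blank (missing) grades. The sample rows and the function name `pivot_mean` are hypothetical. The row layout is a reduced version of the exported one (reviewer, submitter, final grade, type).

```python
from collections import defaultdict
from statistics import mean

# Hypothetical exported rows: (reviewer, submitter, final grade, type).
rows = [
    ("Ana", "Luis", 70.0, "peer"),
    ("Eva", "Luis", 80.0, "peer"),
    ("Luis", "Luis", 90.0, "self"),
    ("Luis", "Ana", None, "peer"),  # assessment not submitted
]


def pivot_mean(rows, kind="peer"):
    """Average final grade per submitter for one assessment type, skipping blanks."""
    groups = defaultdict(list)
    for _reviewer, submitter, grade, row_kind in rows:
        if row_kind == kind and grade is not None:
            groups[submitter].append(grade)
    return {submitter: mean(grades) for submitter, grades in groups.items()}
```

Comparing `pivot_mean(rows)` with `pivot_mean(rows, kind="self")` places peer and self-assessed grades side by side for each student, which is the same comparison a spreadsheet pivot table with a "type" column filter would produce.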

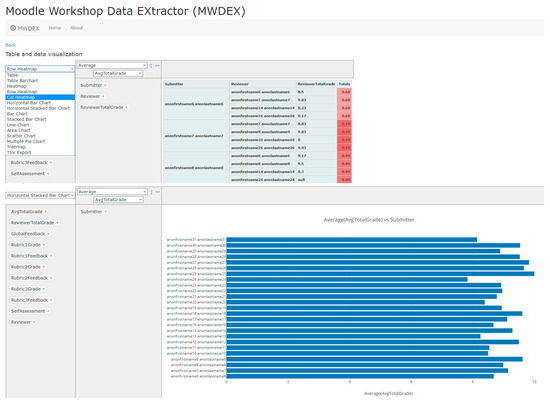

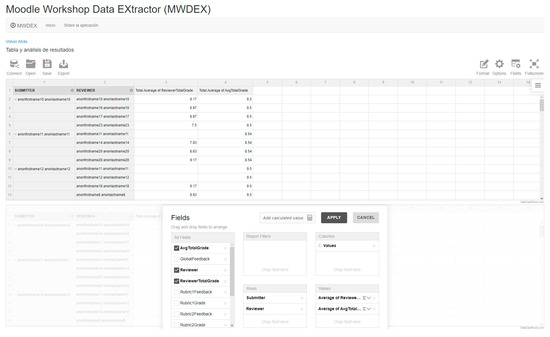

2.5.2. Data Visualization

In Section 1.3 we argued that data visualization is necessary to facilitate analysis, sensemaking, and decision making, thus effectively closing the learning analytics cycle. There are generally two approaches to data visualization for decision making: predefined and self-service visualizations, which can also be considered guided and non-guided visualizations. MWDEX provides two types of data visualization focused on self-service and data discovery, which therefore technically fall into the category of learning analytics dashboards, as they display the most important information needed on a single screen, with a rich user interface for users to interact with the data [62]. The visualization layer of MWDEX aims to cover at least the first two levels of self-service (usage of information and creation of information) [63] by offering two interactive data manipulation options. The first one (Figure 10) has a strong focus on visualization. Using the pivottable library with additional renderers, the interface initially shows an interactive pivot table that can be manipulated by dragging and dropping the different data fields, but it also offers more advanced data visualizations, such as bar charts, pie charts, heatmaps, or scatter plots, all of which support image manipulation actions such as zoom, pan, multiselection, or snapshot downloading. This flexibility enables users to analyze the data using the visualization they are most familiar with, or the one that is most adequate for each aspect of the data exploration and discovery process.
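Browser pivot components such as PivotTable.js and WebDataRocks accept, among other input formats, an array of flat JSON objects (one object per record). The text does not specify the exact format MWDEX passes to them, so the following Python sketch is only an assumption: it converts tabular export rows into such records. `to_records` and `to_json` are hypothetical names.

```python
import json


def to_records(header, rows):
    """Convert header + rows into flat objects, one per assessment row."""
    # Every record gets the same keys, so the pivot component sees a consistent schema.
    return [dict(zip(header, row)) for row in rows]


def to_json(header, rows) -> str:
    """Serialize the records; ensure_ascii=False keeps accented names readable."""
    return json.dumps(to_records(header, rows), ensure_ascii=False)
```

Missing grades (Python `None`) are serialized as JSON `null`, so the pivot component can tell a missing assessment apart from a grade of zero.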

Figure 10.

Interactive data visualization and charts of MWDEX.

The second visualization (Figure 11) uses the WebDataRocks (WDR) Web Pivot Table reporting tool (https://www.webdatarocks.com). Its objective is to let users conduct, directly in the web browser, the analysis they would otherwise perform in a spreadsheet application. The WDR pivot table not only allows users to configure the pivot table much as they would in spreadsheet software (e.g., report filters, different calculations of values, multiple rows and columns), but also supports extending the analysis with calculated values, conditional formatting, and saving and exporting data (both the session state and the data after analysis).

Figure 11.

WebDataRocks (WDR) based interactive pivot table in MWDEX (top, main data visualization screen and bottom, field options).

2.6. Initial Validation of the Tool

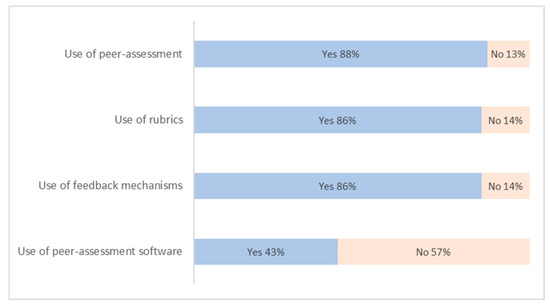

Once the design and implementation of MWDEX were completed (a demo of the English version is available at http://learning-analytics.tige.ior.etsit.upm.es/MWDEX/en/), a survey was conducted to give an initial measure of the potential use of the application in higher education. Because learning analytics is still not a widespread concept among instructors, the initial survey was sent to members of the Spanish Network of Learning Analytics (SNOLA), composed of university teachers. The survey asked the following questions sequentially, ending as soon as the answer to any of questions Q1 to Q3 was negative: (Q1) “Do you use peer assessment in your courses?” (Q2) “Do you use assessment rubrics or feedback comments in your peer-assessed activities?” (Q3) “Do you use software tools to support peer assessment activities?” (Q4, only for users of software tools) “Which software tools do you use to support peer assessment activities?” This question showed a list of the tools detailed in Table 1, plus the three tools available in Moodle, and MS Excel; (Q5, only for Moodle Workshop users) “How satisfied are you with Moodle Workshops, and which additional functionalities would you like to see implemented in Moodle Workshops?”

Moodle Workshop users were also prompted to access a demo of MWDEX hosted on the research team’s server and connected to a Moodle instance with anonymized student data from two courses. After using MWDEX, they were asked three additional questions, answered on a 0 to 10 scale (from strongly disagree to strongly agree): (Q6.1) “Do you find MWDEX useful to support peer assessment activities?” (Q6.2) “Do you find that MWDEX is easy to use?” (Q6.3) “Would you use MWDEX to support your peer assessment activities if you could?” The survey ended with an open-ended question asking for additional feedback about MWDEX and potential additional functionalities.

Although the final sample is very small (23 respondents, with 16 complete responses), the results of the survey (Figure 12) were quite surprising. Despite a majority of teachers using self- and peer assessment (87.5 percent), assessment rubrics (84.6 percent of those using peer assessment), and feedback comments (85.7 percent of those using peer assessment) in their courses, more than half of them (six responses, or 57.1 percent of those using peer assessment) do not use any software tool to support the self- and peer assessment processes. This result is quite striking, given the technological orientation of these teachers. Among instructors who use software tools, the applications in use are Blackboard’s Self and Peer Assessment (three responses, 50.0 percent); MS Excel or similar, Moodle Message Boards, and Moodle Workshops (two responses each, 33.3 percent); and Canvas’s Peer review and OpenMOOC (one response each, 16.7 percent). Given that most members of SNOLA use Moodle in their virtual campuses, the result shows an imbalance between the use of Blackboard’s peer assessment activities and Moodle modules, which could be a first indication that the current implementation of peer assessment activities in Moodle Workshops does not fulfill the needs of instructors. Even though the reasons for using or not using software tools were not asked, the main difference between Moodle Workshops and Blackboard’s Self and Peer Assessment lies in the presentation and export of results, which suggests that adding those functionalities to Moodle Workshops (for example, by using MWDEX with Moodle Workshops) might help increase its use among university teachers. Furthermore, some teachers still use MS Excel or a similar spreadsheet tool to support self- and peer assessment, which could support the design decision of implementing equivalent mechanisms in MWDEX (e.g., MS Excel export and interactive online pivot tables).

Finally, only two respondents stated that they use Moodle Workshops for self- and peer assessment activities, with an average satisfaction of 6.5 out of 10 (values of six and seven, respectively). Among the functionalities these two respondents desired, both mentioned complete and detailed data export and one mentioned graphs and data visualization, which seems to confirm that MWDEX covers the required functionalities that are currently missing in Moodle Workshops. After using the MWDEX demo, both teachers gave 10 out of 10 points to questions Q6.1, Q6.2, and Q6.3, which the authors consider quite promising regarding the potential success of implementing MWDEX across higher education institutions.

Figure 12.

Comparison of the use of peer assessment, rubrics, feedback mechanisms, and peer assessment software among respondents.

3. Conclusions

This study focuses on self- and peer assessment activities, a type of assessment that is gaining popularity due to the shift toward student-centered learning and its scalability in large courses, such as MOOCs. The article presents and explains the main benefits and uses of peer assessment activities and the different educational technologies and tools that support peer assessment processes. More particularly, the study considers peer assessment processes as part of the learning analytics cycle, by investigating the potential value in collecting, processing, and presenting data from peer assessment activities as part of the learning analytics cycle.

The analysis of tools to support peer assessment activities identifies most of the features present in this kind of system, such as: definition of the activity in different stages or phases; scheduling of activities; anonymity of submissions; use of assessment rubrics; grading and feedback; randomized, automated, or group-based assignment of reviews; self-assessment capabilities; and data presentation and export. The study then analyzes Moodle, the most-used LMS across higher education institutions, and more specifically Moodle Workshop, a module that supports peer assessment activities in Moodle.

To overcome the main limitations of Moodle Workshops (data export and visualization), we propose the design of a Moodle web services-based application, MWDEX. The study lays out the main requirements for such an application and details its design principles and implementation. On the basis of the initial validation among a reduced sample of learning analytics experts from the SNOLA network, we found mixed results regarding the potential success of campus-wide implementations of MWDEX.

On the positive side, the reception of the application, even though limited to a few participants, was very good and, despite the very reduced sample, the functionalities of MWDEX seem to cover the main limitations of Moodle Workshops; therefore, the application might facilitate a more intensive use of peer assessment activities supported by Moodle Workshops while providing instructors with useful and actionable information about the process. Naturally, these initial results must be further confirmed by pilot experiments in real learning settings and campus-wide implementations. Additionally, the current implementation of MWDEX is based on a non-guided, self-service data analysis and discovery approach, which gives instructors complete freedom to explore and analyze the information from the peer assessment process. Because not all instructors have developed data discovery skills, an interesting line of study is the definition of experience-based preset configurations that would take advantage of the many configuration options of the two visualizations currently available in MWDEX.

On the negative side, and again despite the small sample, the results also suggest a slow incorporation of software applications to support peer assessment into learning practices. This is in line with [38], who also discussed how both students and teaching staff can struggle with the transition from traditional offline assessment to online collaborative modes of assessment, but strongly advocated the potential of Moodle Workshop for developing student competences and capabilities. In addition, this study only evaluated MWDEX with data from a relatively small course; further performance tests should be conducted in large-scale courses to ensure the scalability and suitability of the application in massive courses.

Three main contributions emerge from this study. First, the study adds to the existing but scant literature that addresses the description of processes related to the extraction and transformation of educational data, with a special focus on peer assessment activities; the study also performs a comprehensive review of the software tools to support peer assessment and their functionalities. Second, from a methodological approach, the research explores the use of web services as a superior alternative to other means of LMS data collection, such as the use of SQL queries. Third, from a practical perspective, the software design detailed in this study addresses the instructors’ demands for some missing functionalities of the Moodle platform (which have remained unresolved for years [64,65]), by facilitating the extraction and analysis of data from Moodle Workshops.

As a consequence of the specific scope of the research, the study is not exempt from limitations. As mentioned above, the initial validation only included a reduced and selected group of learning analytics experts; future experimentation and analysis with a larger sample of Moodle Workshop users and non-users is required. Relatedly, the study does not analyze optimal configurations of the data visualizations, which should also be tested and confirmed by large-scale analysis, in a similar fashion to [66]. The reader may also notice that the study does not include the analysis of actual data from peer assessment activities (even though the data used for testing MWDEX were taken from a real course, after anonymization). This decision is intentional, for two reasons: first, manuscript length limitations; and second, following our initial argument, literature on learning analytics has primarily centered on the application of mining algorithms and data analysis, whereas data extraction and processing have been addressed only tangentially in most studies. Therefore, the authors intended to offer an alternative view that would redress this imbalance. This study does provide the necessary tools to perform these analyses, and follow-up research should take advantage of the functionalities provided by MWDEX to analyze peer-assessed activities (including, but not limited to, rater reliability, natural language processing of feedback comments, or research on skill development based on rubric aspects).

Finally, a very promising line of research for further studies is how to combine the peer assessment data returned by MWDEX with other educational data (activity from LMS logs, academic data, and informal learning data, among others) through interoperable and open systems, in order to achieve the full potential of learning analytics (in line with the idea of open learning analytics [21]) and to facilitate the transition toward true learning services-based technological ecosystems [67].

Author Contributions

Conceptualization, investigation, writing—original draft preparation, J.C.-P. and E.A.-N.; formal analysis, J.C.-P. and S.I.-P.; validation, writing—review and editing, S.I.-P. and F.J.R.-S.; resources and funding acquisition, F.J.R.-S. and E.A.-N.; methodology and supervision, J.C.-P.; software and data curation, S.I.-P.; visualization, F.J.R.-S.; project administration, E.A.-N. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partly funded by Universidad Politécnica de Madrid, grant number IE1819.0904. This paper is supported by the ROBOSTEAM Erasmus+ KA201 Project with reference 2018-1-ES01-KA201-050939.

Acknowledgments

The authors thank Ángel Hernández-García and Isabel Rodríguez-Ruiz for their support in the development of MWDEX.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

- Uskov, V.L.; Bakken, J.P.; Heinemann, C.; Rachakonda, R.; Guduru, V.S.; Thomas, A.B.; Bodduluri, D.P. Building Smart Learning Analytics System for Smart University. In Smart Education and e-Learning 2017. SEEL 2017. Smart Innovation, Systems and Technologies; Uskov, V., Howlett, R., Jain, L., Eds.; Springer International Publishing AG: Cham, Switzerland, 2018; Volume 75, pp. 191–204.

- Giannakos, M.N.; Chorianopoulos, K.; Chrisochoides, N. Making Sense of Video Analytics: Lessons Learned from Clickstream Interactions, Attitudes, and Learning Outcome in a Video-Assisted Course. Int. Rev. Res. Open Dis. 2015, 16, 260–283.

- Kotsiantis, S.; Tselios, N.; Filippidi, A.; Komis, V. Using learning analytics to identify successful learners in a blended learning course. Int. J. Technol. Enhanc. Learn. 2013, 5, 133–150.

- Tempelaar, D.T.; Rienties, B.; Giesbers, B. In search for the most informative data for feedback generation: Learning analytics in a data-rich context. Comput. Hum. Behav. 2015, 47, 157–167.

- Agudo-Peregrina, Á.F.; Iglesias-Pradas, S.; Conde-González, M.Á.; Hernández-García, Á. Can we predict success from log data in VLEs? Classification of interactions for learning analytics and their relation with performance in VLE-supported F2F and online learning. Comput. Hum. Behav. 2014, 31, 542–550.

- Ma, J.; Han, X.; Yang, J.; Cheng, J. Examining the necessary condition for engagement in an online learning environment based on learning analytics approach: The role of the instructor. Internet High. Educ. 2015, 24, 26–34.

- Hernández-García, Á.; González-González, I.; Jiménez-Zarco, A.I.; Chaparro-Peláez, J. Applying social learning analytics to message boards in online distance learning: A case study. Comput. Hum. Behav. 2015, 47, 68–80.

- Fulantelli, G.; Taibi, D.; Arrigo, M. A framework to support educational decision making in mobile learning. Comput. Hum. Behav. 2015, 47, 50–59.

- Khalil, M.; Ebner, M. Clustering patterns of engagement in Massive Open Online Courses (MOOCs): The use of learning analytics to reveal student categories. J. Comput. High. Educ. 2017, 29, 114–132.

- Alonso-Fernández, C.; Cano, A.R.; Calvo-Morata, A.; Freire, M.; Martínez-Ortiz, I.; Fernández-Manjón, B. Lessons learned applying learning analytics to assess serious games. Comput. Hum. Behav. 2019, 99, 301–309.

- Hernández-Lara, A.B.; Perera-Lluna, A.; Serradell-López, E. Applying learning analytics to students’ interaction in business simulation games. The usefulness of learning analytics to know what students really learn. Comput. Hum. Behav. 2019, 92, 600–612.

- Ochoa, X. Multimodal Learning Analytics. In Handbook of Learning Analytics, 1st ed.; Lang, C., Siemens, G., Wise, A., Gašević, D., Eds.; Society for Learning Analytics Research, 2017; pp. 129–141.

- Clow, D. The Learning Analytics Cycle: Closing the loop effectively. In Proceedings of the 2nd International Conference on Learning Analytics & Knowledge (LAK’12), Vancouver, BC, Canada, 29 April–2 May 2012; Shum, S.B., Gasevic, D., Ferguson, R., Eds.; ACM: New York, NY, USA, 2012; pp. 134–138.

- Gómez-Aguilar, D.A.; García-Peñalvo, F.J.; Therón, R. Analítica visual en e-learning. Prof. Inf. 2014, 23, 236–245.

- Khalil, M.; Ebner, M. Learning Analytics: Principles and Constraints. In Proceedings of the World Conference on Educational Media and Technology 2015 (EdMedia 2015), Montréal, QC, Canada, 22–24 June 2015; Carliner, S., Ostashewski, N., Eds.; Association for the Advancement of Computing in Education (AACE): Waynesville, NC, USA, 2015; pp. 1789–1799.

- Elias, T. Learning Analytics: Definitions, Processes and Potential; 2011.

- Nistor, N.; Hernández-García, Á. What types of data are used in learning analytics? An overview of six cases. Comput. Hum. Behav. 2018, 89, 335–338.

- Dutt, A.; Ismail, M.A.; Herawan, T. A Systematic Review on Educational Data Mining. IEEE Access 2017, 5, 15991–16005.

- Buenaño-Fernandez, D.; Villegas-CH, W.; Luján-Mora, S. The use of tools of data mining to decision making in engineering education—A systematic mapping study. Comput. Appl. Eng. Educ. 2019, 27, 744–758.

- Crone, S.F.; Lessmann, S.; Stahlbock, R. The impact of preprocessing on data mining: An evaluation of classifier sensitivity in direct marketing. Eur. J. Oper. Res. 2006, 173, 781–800.

- Chatti, M.A.; Muslim, A.; Schroeder, U. Toward an Open Learning Analytics Ecosystem. In Big Data and Learning Analytics in Higher Education. Current Theory and Practice; Daniel, B.K., Ed.; Springer: Cham, Switzerland, 2017; pp. 195–219.

- Costa, E.B.; Fonseca, B.; Almeida Santana, M.; Ferreira de Araújo, F.; Rego, J. Evaluating the effectiveness of educational data mining techniques for early prediction of students’ academic failure in introductory programming courses. Comput. Hum. Behav. 2017, 73, 247–256.

- Altujjar, Y.; Altamimi, W.; Al-Turaiki, I.; Al-Razgan, M. Predicting Critical Courses Affecting Students Performance: A Case Study. Procedia Comput. Sci. 2016, 82, 65–71. [Google Scholar] [CrossRef]

- Márquez-Vera, C.; Romero Morales, C.; Ventura Soto, S. Predicting School Failure and Dropout by Using Data Mining Techniques. IEEE Rev. Iberoam. Tecnol. Aprendiz. 2013, 18, 7–14.

- Shorfuzzaman, M.; Hossain, M.S.; Nazir, A.; Muhammad, G.; Alamri, A. Harnessing the power of big data analytics in the cloud to support learning analytics in mobile learning environment. Comput. Hum. Behav. 2019, 92, 578–588.

- Popham, W.J. What’s Wrong—and What’s Right—with Rubrics. Educ. Leadersh. 1997, 55, 72–75.

- Goodrich, H. Understanding rubrics. Educ. Leadersh. 1996, 54, 14–17.

- Bologna Declaration. Joint Declaration of the European Ministers of Education; The European Higher Education Area: Bologna, Italy, 1999.

- Pereira, D.; Flores, M.A.; Niklasson, L. Assessment revisited: A review of research in Assessment and Evaluation in Higher Education. Assess. Eval. High. Educ. 2016, 41, 1008–1032.

- Suen, H.K. Peer assessment for massive open online courses (MOOCs). Int. Rev. Res. Open Dis. 2014, 15, 312–327.

- Sánchez-Vera, M.M.; Prendes-Espinosa, M.P. Beyond objective testing and peer assessment: Alternative ways of assessment in MOOCs. Int. J. Educ. Technol. High. Educ. 2015, 12, 119–130.

- Ashton, S.; Davies, R.S. Using scaffolded rubrics to improve peer assessment in a MOOC writing course. Distance Educ. 2015, 36, 312–334.

- Chin, P. Peer assessment. New Dir. Teach. Phys. Sci. 2016, 3, 13–18.

- Casal, S. Cooperative Assessment for Learning in CLIL Contexts. Estudios Sobre Educ. 2016, 31, 139–157.

- Ibarra-Sáiz, M.S.; Rodríguez-Gómez, G.; Gómez Ruiz, M.A. Benefits of Peer Assessment and Strategies for Its Practice at University. Rev. Educ. 2012, 359, 206–231.

- Van Gennip, N.A.E.; Segers, M.S.R.; Tillema, H.H. Peer assessment for learning from a social perspective: The influence of interpersonal variables and structural features. Educ. Res. Rev. 2009, 4, 41–54.

- Adachi, C.; Hong-Meng Tai, J.; Dawson, P. Academics’ perceptions of the benefits and challenges of self and peer assessment in higher education. Assess. Eval. High. Educ. 2018, 43, 294–306.

- Wilson, M.J.; Diao, M.M.; Huang, L. ‘I’m not here to learn how to mark someone else’s stuff’: An investigation of an online peer-to-peer review workshop tool. Assess. Eval. High. Educ. 2015, 40, 15–32.

- Sridharan, B.; Tai, J.; Boud, D. Does the use of summative peer assessment in collaborative group work inhibit good judgement? High. Educ. 2019, 77, 853–870.

- Blackboard Help. Self and Peer Assessment. Available online: https://help.blackboard.com/Learn/Instructor/Assignments/Self_and_Peer_Assessment (accessed on 10 December 2019).

- Canvas LMS Community. How Do I Create a Peer Review Assignment? Available online: https://community.canvaslms.com/docs/DOC-10094 (accessed on 10 December 2019).

- Canvas LMS Community. How Do I Use Peer Review Assignments in a Course? Available online: https://community.canvaslms.com/docs/DOC-10256-4152719640 (accessed on 10 December 2019).

- Canvas LMS Community. How Do I Use Peer Review Discussions in a Course? Available online: https://community.canvaslms.com/docs/DOC-12946-4152719642 (accessed on 10 December 2019).

- Open Response Assessments. Available online: https://edx.readthedocs.io/projects/edx-partner-course-staff/en/latest/exercises_tools/open_response_assessments/ (accessed on 10 December 2019).

- PeerScholar. Available online: https://www.peerscholar.com (accessed on 10 December 2019).

- PeerStudio. Available online: https://www.peerstudio.org (accessed on 10 December 2019).

- Teammates. Available online: http://teammatesv4.appspot.com (accessed on 10 December 2019).

- PeerWise. Available online: https://peerwise.cs.auckland.ac.nz (accessed on 10 December 2019).

- Peer Assess Pro. Available online: https://www.peerassesspro.com (accessed on 10 December 2019).

- ComPAIR. Available online: https://isit.arts.ubc.ca/compair/ (accessed on 10 December 2019).

- ComPAIR. Available online: http://ubc.github.io/compair/ (accessed on 10 December 2019).

- Peerceptiv. Available online: https://www.peerceptiv.com (accessed on 10 December 2019).

- Moodle.net. Moodle Statistics. Available online: https://moodle.net/stats (accessed on 26 November 2019).

- EdTech Team. Peer Assessment Guide. Available online: https://sleguidance.atlassian.net/wiki/spaces/Moodle/pages/65307026/Peer+Assessment+Guide (accessed on 26 November 2019).

- Badea, G.; Popescu, E.; Sterbini, A.; Temperini, M. A Service-Oriented Architecture for Student Modeling in Peer Assessment Environments. In Emerging Technologies for Education. SETE 2018. Lecture Notes in Computer Science; Hao, T., Chen, W., Xie, H., Nadee, W., Lau, R., Eds.; Springer: Cham, Switzerland, 2018; Volume 11284, pp. 32–37.

- Badea, G.; Popescu, E.; Sterbini, A.; Temperini, M. Integrating Enhanced Peer Assessment Features in Moodle Learning Management System. In Foundations and Trends in Smart Learning. Lecture Notes in Educational Technology; Chang, M., Popescu, E., Kinshuk, Chen, N.-S., Jemni, M., Huang, R., Spector, J.M., Sampson, D.G., Eds.; Springer: Singapore, 2019; pp. 135–144.

- Azevedo, J.; Oliveira, E.; Beites, P. Using Learning Analytics to evaluate the quality of multiple-choice questions: A perspective with Classical Test Theory and Item Response Theory. Int. J. Inf. Educ. Technol. 2019, 36, 322–341.

- Chaparro-Peláez, J.; Acquila-Natale, E.; Iglesias-Pradas, S.; Suárez-Navas, I. A web services-based application for LMS data extraction and processing for social network analysis. In New Information and Communication Technologies for Knowledge Management in Organizations; Palacios-Marqués, D., Soriano, D.R., Huarng, K.H., Eds.; Springer: Cham, Switzerland, 2015; pp. 110–121.

- Hernández-García, Á.; Suárez-Navas, I. GraphFES: A Web Service and Application for Moodle Message Board Social Graph Extraction. In Big Data and Learning Analytics in Higher Education. Current Theory and Practice; Daniel, B.K., Ed.; Springer: Cham, Switzerland, 2017; pp. 167–194.

- Lei, K.; Ma, Y.; Tan, Z. Performance comparison and evaluation of web development technologies in PHP, Python, and Node.js. In Proceedings of the IEEE 17th International Conference on Computational Science and Engineering, Chengdu, China, 19–21 December 2014; Liu, X., Baz, D.E., Hsu, C.-H., Kang, K., Chen, W., Eds.; The Institute of Electrical and Electronics Engineers: Danvers, MA, USA, 2014; pp. 661–668.

- Chaniotis, I.K.; Kyriakou, K.I.D.; Tselikas, N.D. Is Node.js a viable option for building modern web applications? A performance evaluation study. Computing 2015, 97, 1023–1044.

- Zheng, J.G. Data visualization in business intelligence. In Global Business Intelligence; Munoz, J.M., Ed.; Routledge: New York, NY, USA, 2017; pp. 67–81.

- Alpar, P.; Schulz, M. Self-Service Business Intelligence. Bus. Inf. Syst. Eng. 2016, 58, 151–155.

- Moodle Tracker. Export Workshop Responses to a Spreadsheet. Available online: https://tracker.moodle.org/browse/MDL-20149 (accessed on 16 December 2019).

- Moodle Tracker. Need to be Able Export Entire Contents of Workshop Activity. Available online: https://tracker.moodle.org/browse/MDL-43542 (accessed on 16 December 2019).

- Villamañe, M.; Álvarez, A.; Larrañaga, M. EvalVis: Enriching Formative Assessment with Visual Learning Analytics. Int. J. Eng. Educ. 2018, 34, 1001–1012.

- García-Peñalvo, F.J.; Hernández-García, Á.; Conde, M.Á.; Fidalgo-Blanco, Á.; Sein-Echaluce, M.L.; Alier-Forment, M.; Llorens-Largo, F.; Iglesias-Pradas, S. Enhancing Education for the Knowledge Society Era with Learning Ecosystems. In Open Source Solutions for Knowledge Management and Technological Ecosystems; Garcia-Peñalvo, F.J., García-Holgado, A., Eds.; IGI Global: Hershey, PA, USA, 2017; pp. 1–24.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).