Why Do Swiss HR Departments Dislike Algorithms in Their Recruitment Process? An Empirical Analysis

Abstract

1. Introduction

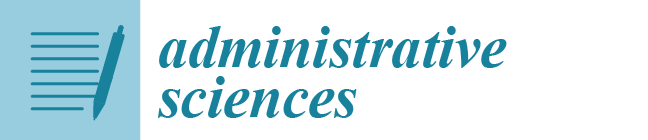

2. Literature Review, Theoretical Framework, and Hypotheses

2.1. AI and Algorithmic Decision-Making in HR

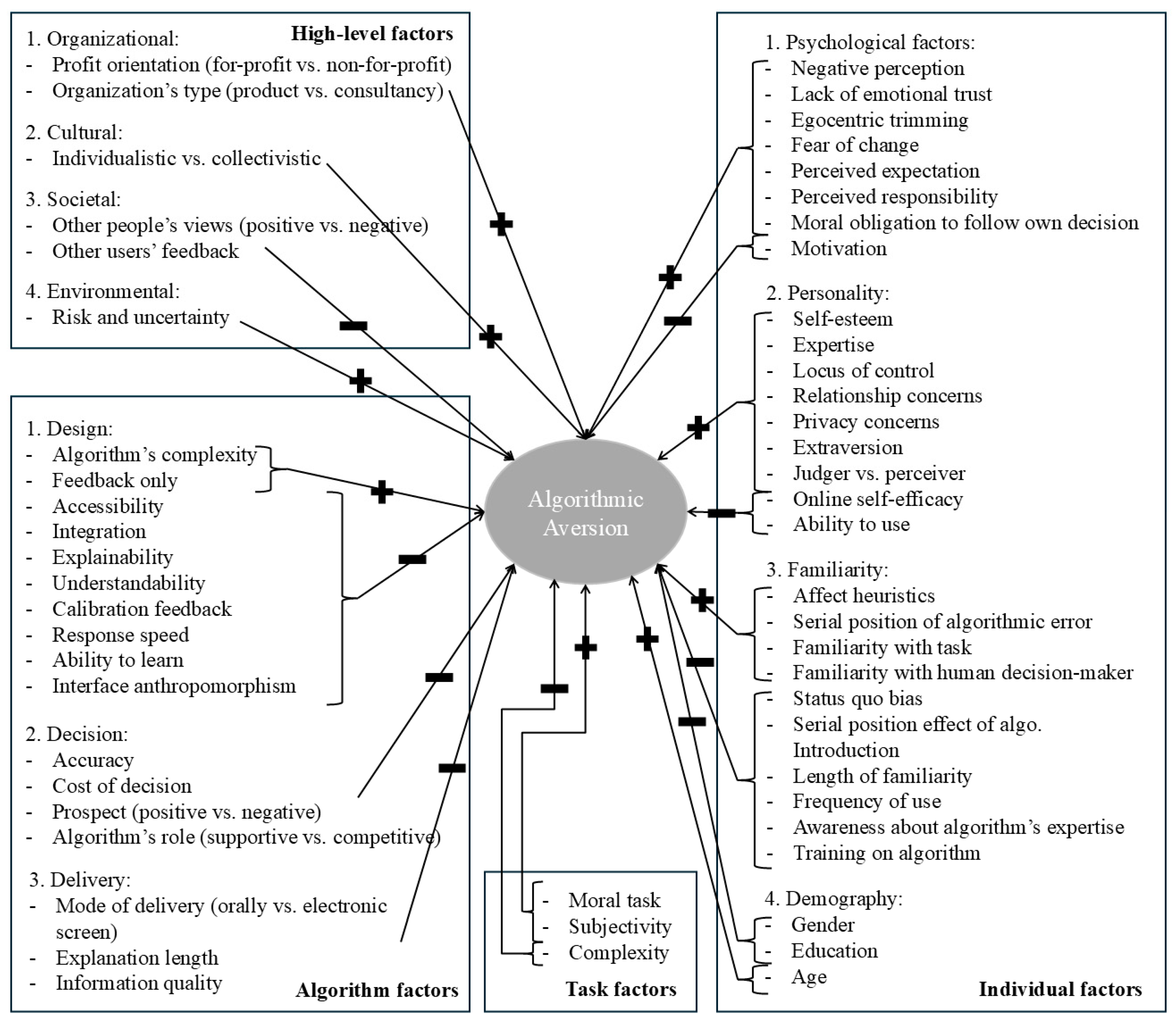

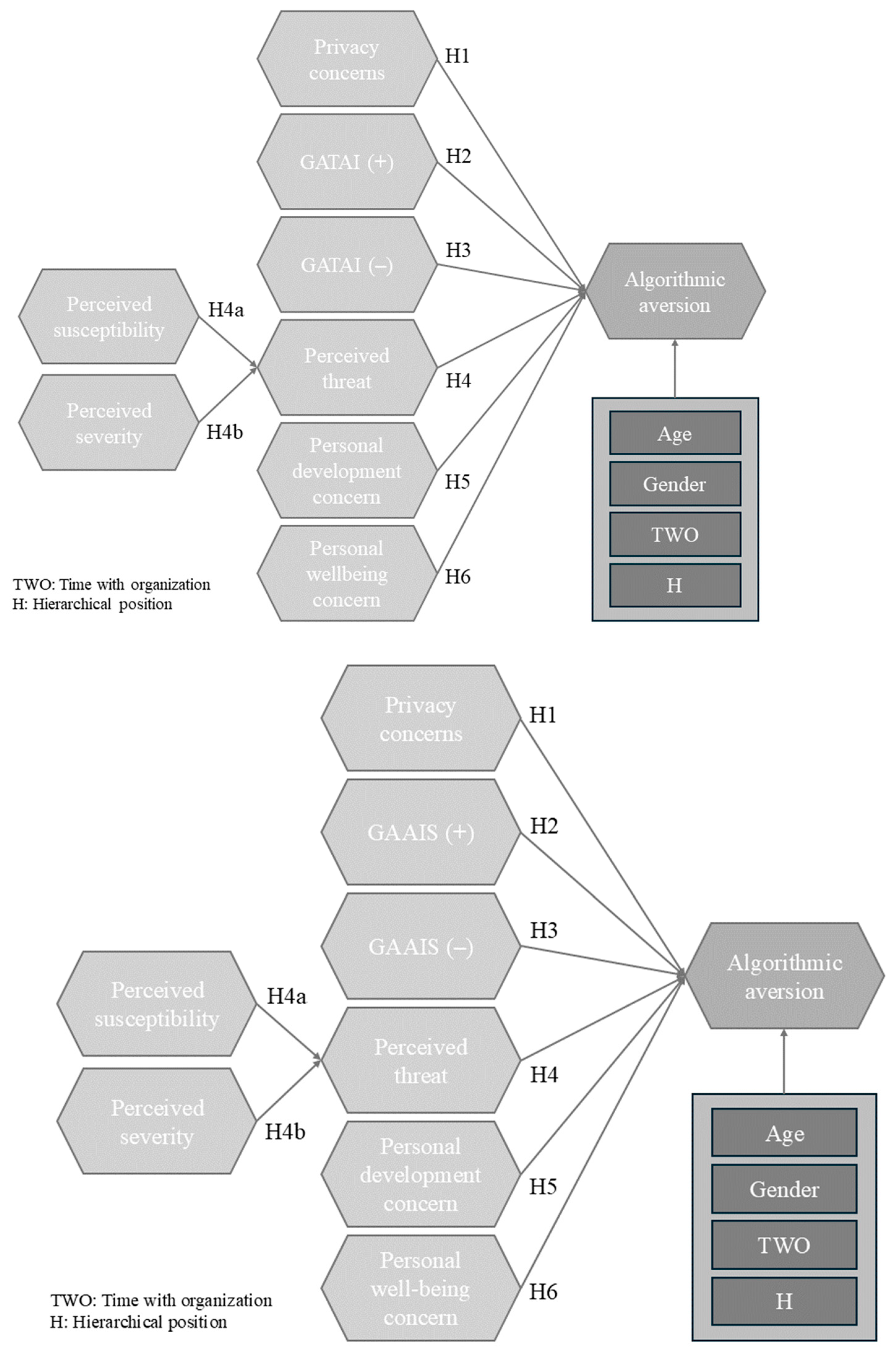

2.2. Research Model and Hypotheses

2.2.1. Hiring Process AA (Dependent Variable)

2.2.2. Explanatory Factors for AA in the Hiring Process (Independent Variables)

Privacy Concerns

General Attitude towards AI Scale

Perceived Threat and Its Determinants

Personal Development Concerns

Personal Well-Being Concerns

2.2.3. Control Variables

2.2.4. Final Research Model

3. Methods

3.1. Data Collection and Sample Characteristics

3.2. Preventing Bias

3.3. Measurements

3.3.1. Dependent Variable

3.3.2. Independent Variables

3.4. Analysis Procedure

3.4.1. Preliminary Considerations

3.4.2. Evaluation of Measurement Models

3.4.3. Evaluation of Structural Models

4. Results

4.1. Description of Results

4.1.1. Complete Database

4.1.2. Private Sector vs. Public Sector

4.2. Confirmed/Invalidated Assumptions

5. Interpretation of Results and Discussion

6. Conclusions

6.1. Practical Implications

6.2. Limitations and Future Research

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Variables, Mean, Standard Deviation, Skewness, and Kurtosis

| Concept | Item | Mean | SD | Skew. | Kurt. | Wording |
|---|---|---|---|---|---|---|
| Dependent variable | | | | | | |
| Algorithmic aversion (Melick 2020) | aa1 | 2.91 | 1.19 | 0.070 | −0.968 | Selecting new employees using professional judgement of the hiring manager is more effective than selecting new employees using a formula designed to predict job performance. |
| | aa2 | 2.93 | 1.22 | 0.023 | −1.03 | Using hiring managers’ review of resumes is more likely to identify high quality applicants than using computerized text analysis to screen resumes. |
| Independent variables | | | | | | |
| Privacy concerns (Baek and Morimoto 2012) | pc_1 (R) | 3.39 | 0.840 | −1.45 | 4.52 | I feel uncomfortable when information is shared without permission. |
| | pc_2 | 1.72 | 0.928 | 1.22 | 3.59 | I am concerned about misuse of personal information. |
| | pc_3 | 1.69 | 0.918 | 1.24 | 3.61 | I feel fear that information may not be safe while stored. |
| | pc_4 | 1.70 | 0.927 | 1.25 | 3.65 | I believe that personal information is often misused. |
| | pc_5 | 1.70 | 0.933 | 1.22 | 3.51 | I think my organization shares information without permission. |
| GAAIS+ (Schepman and Rodway 2020) | gaais_1 | 2.91 | 1.35 | 0.084 | −1.22 | For routine transactions, I would rather interact with an artificially intelligent system than with a human. |
| | gaais_2 | 2.95 | 1.36 | 0.017 | −1.23 | Artificial intelligence can provide new economic opportunities for this country. |
| | gaais_4 | 3.04 | 1.31 | −0.039 | −1.16 | Artificially intelligent systems can help people feel happier. |
| | gaais_5 | 2.92 | 1.33 | 0.025 | −1.18 | I am impressed by what artificial intelligence can do. |
| | gaais_7 | 3.00 | 1.35 | −0.058 | −1.23 | I am interested in using artificially intelligent systems in my daily life. |
| | gaais_11 | 3.04 | 1.35 | −0.048 | −1.22 | Artificial intelligence can have positive impacts on people’s wellbeing. |
| | gaais_12 | 3.07 | 1.38 | −0.068 | −1.27 | Artificial intelligence is exciting. |
| | gaais_13 | 3.12 | 1.34 | −0.085 | −1.19 | An artificially intelligent agent would be better than an employee in many routine jobs. |
| | gaais_14 | 3.08 | 1.39 | −0.076 | −1.28 | There are many beneficial applications of artificial intelligence. |
| | gaais_16 | 3.09 | 1.35 | −0.042 | −1.24 | Artificially intelligent systems can perform better than humans. |
| | gaais_17 | 3.07 | 1.36 | −0.049 | −1.25 | Much of society will benefit from a future full of artificial intelligence. |
| | gaais_18 | 3.07 | 1.36 | −0.089 | −1.20 | I would like to use artificial intelligence in my own job. |
| GAAIS− (Schepman and Rodway 2020) | gaais_3 | 2.90 | 1.24 | 0.063 | −1.05 | Organizations use artificial intelligence unethically. |
| | gaais_6 | 2.89 | 1.24 | 0.086 | −1.02 | I think artificially intelligent systems make many errors. |
| | gaais_8 | 2.90 | 1.27 | 0.097 | −1.07 | I find artificial intelligence sinister. |
| | gaais_9 | 2.90 | 1.26 | 0.049 | −1.08 | Artificial intelligence might take control of people. |
| | gaais_10 | 2.85 | 1.25 | 0.128 | −1.05 | I think artificial intelligence is dangerous. |
| | gaais_15 | 2.84 | 1.29 | 0.146 | −1.10 | I shiver with discomfort when I think about future uses of artificial intelligence. |
| | gaais_19 | 2.85 | 1.29 | 0.119 | −1.12 | People like me will suffer if artificial intelligence is used more and more. |
| | gaais_20 | 2.91 | 1.27 | 0.065 | −1.09 | Artificial intelligence is used to spy on people. |
| Perceived threat (Cao et al. 2021) | pt_1 | 2.92 | 1.25 | −0.006 | −1.10 | My fear of exposure to AI in HR’s risks is high. |
| | pt_2_R (R) | 2.96 | 1.30 | 0.097 | −1.15 | The extent of my worry about AI in HR’s risks is low. |
| | pt_3 | 2.90 | 1.27 | −4.93 | −1.11 | The extent of my anxiety about potential loss due to AI in HR’s risks is high. |
| | pt_4 | 2.97 | 1.30 | −0.023 | −1.16 | I’m very concerned about the potential misuse of HR AI. |
| Perceived susceptibility (Cao et al. 2021) | psus_1 | 3.02 | 1.32 | −0.05 | −1.19 | AI is likely to make bad HR decisions in the future. |
| | psus_2 | 2.94 | 1.31 | 0.023 | −1.15 | The chances of AI making bad HR decisions are great. |
| | psus_3 | 3.00 | 1.40 | −0.022 | −1.31 | AI may make bad HR decisions at some point. |
| Perceived severity (Cao et al. 2021) | psev_1 | 2.92 | 1.32 | 0.033 | −1.20 | AI in HR may perpetuate cultural stereotypes in available data. |
| | psev_2 | 2.89 | 1.36 | 0.100 | −1.25 | AI in HR may amplify discrimination in available data. |
| | psev_3 | 3.04 | 1.34 | −0.065 | −1.21 | AI in HR may be prone to reproducing institutional biases in available data. |
| | psev_4 | 2.95 | 1.34 | 0.024 | −1.20 | AI in HR may have a propensity for intensifying systemic bias in available data. |
| | psev_5 | 2.92 | 1.34 | 0.041 | −1.19 | AI in HR may have the wrong objective due to the difficulty of specifying the objective explicitly. |
| | psev_6 | 2.98 | 1.39 | −0.004 | −1.31 | AI in HR may use inadequate structures such as problematic models. |
| | psev_7 | 2.98 | 1.34 | 0.006 | −1.22 | AI in HR may perform poorly due to insufficient training. |
| Personal development concerns (Cao et al. 2021) | pdev_1_R (R) | 3.03 | 1.30 | −0.051 | −1.10 | AI HR supported or automatised decision-making would have a positive impact on my learning ability. |
| | pdev_2_R (R) | 2.98 | 1.34 | −0.035 | −1.19 | AI HR supported or automatised decision-making would have a positive impact on my career development. |
| | pdev_3 | 3.00 | 1.31 | −0.040 | −1.13 | I would hesitate to use HR AI for fear of losing control of my personal development. |
| | pdev_4 | 2.97 | 1.32 | 0.001 | −1.14 | It scares me to think that I could lose the opportunity to learn from my own experience using AI HR supported or automatised decision-making. |
| Personal well-being concerns (Cao et al. 2021) | pwb_1 (R) | 3.12 | 1.35 | −0.081 | −1.22 | AI in HR makes me feel relaxed. |
| | pwb_2 | 2.87 | 1.39 | 0.106 | −1.27 | AI in HR makes me feel anxious. |
| | pwb_3 | 2.94 | 1.37 | 0.056 | −1.24 | AI in HR makes me feel redundant. |
| | pwb_4 | 2.91 | 1.38 | 0.076 | −1.25 | AI in HR makes me feel useless. |
| | pwb_5 | 2.85 | 1.37 | 0.061 | −1.26 | AI in HR makes me feel inferior. |
| | pwb_6 (R) | 3.03 | 1.36 | −0.051 | −1.22 | AI in HR would increase my job satisfaction. |
| Control variables | | | | | | |
| Private/Public | PP | 1.49 | 0.500 | 0.019 | 1.00 | Your organization is: 1: Private 2: Public or semi-public |
| Age | age | 4.98 | 0.822 | −0.526 | 2.78 | What age bracket do you belong to? 1: Under 18 2: 18–25 years old 3: 26–34 years old 4: 35–44 years old 5: 45–54 years old 6: 55–64 years old 7: 65 and over |
| Gender | genre | 0.53 | 0.499 | −0.139 | 1.01 | Please indicate your gender below: 1: Male 2: Female 3: I don’t recognize myself in any of these categories |
| Time with organization | anciennete | 3.60 | 1.31 | −0.507 | 2.01 | How long have you worked for your organization? 1: Less than one year 2: From more than 1 to less than 3 years 3: From more than 3 to less than 5 years 4: From more than 5 to less than 10 years 5: More than 10 years |
| Hierarchical position | hierarchie | 2.83 | 1.24 | −0.543 | 1.65 | What position do you occupy in your organization’s hierarchy? 1: HR manager/HR specialist (without managerial function) 2: Local manager 3: Middle management 4: Executive |

Appendix B. Analyses

| Indicators | PC | GAAIS+ | GAAIS− | PT | PDEV | PWB | PSUS | PSEV | AA |
|---|---|---|---|---|---|---|---|---|---|
| Model 1: both private and public respondents (N = 324) | | | | | | | | | |
| pc_1 | 0.955 | | | | | | | | |
| pc_2 | 0.787 | | | | | | | | |
| pc_3 | 0.864 | | | | | | | | |
| pc_4 | 0.823 | | | | | | | | |
| pc_5 | 0.846 | | | | | | | | |
| gaais_1 | | 0.975 | | | | | | | |
| gaais_2 | | 0.754 | | | | | | | |
| gaais_4 | | 0.744 | | | | | | | |
| gaais_17 | | 0.764 | | | | | | | |
| gaais_7 | | 0.727 | | | | | | | |
| gaais_11 | | 0.795 | | | | | | | |
| gaais_18 | | 0.727 | | | | | | | |
| gaais_13 | | 0.795 | | | | | | | |
| gaais_14 | | 0.713 | | | | | | | |
| gaais_16 | | 0.722 | | | | | | | |
| gaais_5 | | 0.786 | | | | | | | |
| gaais_12 | | 0.802 | | | | | | | |
| gaais_3 | | | 0.963 | | | | | | |
| gaais_6 | | | 0.837 | | | | | | |
| gaais_8 | | | 0.811 | | | | | | |
| gaais_9 | | | 0.784 | | | | | | |
| gaais_10 | | | 0.765 | | | | | | |
| gaais_15 | | | 0.760 | | | | | | |
| gaais_19 | | | 0.766 | | | | | | |
| gaais_20 | | | 0.752 | | | | | | |
| pt_1 | | | | 0.962 | | | | | |
| pt_2 | | | | 0.840 | | | | | |
| pt_3 | | | | 0.877 | | | | | |
| pt_4 | | | | 0.890 | | | | | |
| psus_1 | | | | | | | 0.959 | | |
| psus_2 | | | | | | | 0.916 | | |
| psus_3 | | | | | | | 0.888 | | |
| psev_1 | | | | | | | | 0.975 | |
| psev_2 | | | | | | | | 0.833 | |
| psev_3 | | | | | | | | 0.816 | |
| psev_4 | | | | | | | | 0.874 | |
| psev_5 | | | | | | | | 0.864 | |
| psev_6 | | | | | | | | 0.835 | |
| psev_7 | | | | | | | | 0.832 | |
| pdev_1 | | | | | 0.969 | | | | |
| pdev_2 | | | | | 0.896 | | | | |
| pdev_3 | | | | | 0.887 | | | | |
| pdev_4 | | | | | 0.882 | | | | |
| pwb_1 | | | | | | 0.974 | | | |
| pwb_2 | | | | | | 0.860 | | | |
| pwb_3 | | | | | | 0.879 | | | |
| pwb_4 | | | | | | 0.844 | | | |
| pwb_5 | | | | | | 0.863 | | | |
| pwb_6 | | | | | | 0.874 | | | |
| aa_1 | | | | | | | | | 0.963 |
| aa_2 | | | | | | | | | 0.952 |

| Constructs | α | rhoC | AVE | rhoA |

|---|---|---|---|---|

| Model 1: both private and public respondents (N = 324) | ||||

| Privacy concerns | 0.909 | 0.932 | 0.734 | 0.923 |

| GAAIS+ | 0.851 | 0.920 | 0.608 | 0.947 |

| GAAIS− | 0.922 | 0.937 | 0.652 | 0.935 |

| Perceived threat | 0.915 | 0.940 | 0.798 | 0.929 |

| Personal development concerns | 0.929 | 0.950 | 0.826 | 0.951 |

| Personal well-being concerns | 0.943 | 0.955 | 0.781 | 0.952 |

| Perceived susceptibility | 0.911 | 0.944 | 0.850 | 0.926 |

| Perceived severity | 0.942 | 0.953 | 0.745 | 0.952 |

| Algorithmic aversion | 0.910 | 0.957 | 0.917 | 0.919 |
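The AVE and rhoC columns can be cross-checked against the loadings reported in the first table of this appendix. The sketch below is illustrative only: it assumes the loadings are standardized and uses the usual textbook formulas (AVE as the mean squared loading, rhoC as composite reliability); the function names `ave` and `rho_c` are hypothetical and are not taken from the authors' analysis scripts.

```python
def ave(loadings):
    """Average variance extracted: mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def rho_c(loadings):
    """Composite reliability, assuming standardized loadings so that each
    indicator's error variance is 1 - loading**2."""
    total = sum(loadings)
    error = sum(1 - l ** 2 for l in loadings)
    return total ** 2 / (total ** 2 + error)


# Loadings copied from the Model 1 loadings table in this appendix.
pc = [0.955, 0.787, 0.864, 0.823, 0.846]  # privacy concerns
aa = [0.963, 0.952]                       # algorithmic aversion

for name, loadings in (("Privacy concerns", pc), ("Algorithmic aversion", aa)):
    print(name, round(ave(loadings), 3), round(rho_c(loadings), 3))
```

Under these assumptions, the two constructs shown reproduce the tabulated AVE and rhoC values to three decimals (0.734 and 0.932 for privacy concerns, 0.917 and 0.957 for algorithmic aversion).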

| | PC | GAAIS+ | GAAIS− | PT | PDEV | PWB | AGE | TWO | H | G | PSUS | PSEV |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Model 1: both private and public respondents (N = 324) | ||||||||||||

| GAAIS+ | 0.065 | |||||||||||

| GAAIS− | 0.134 | 0.348 | ||||||||||

| PT | 0.069 | 0.216 | 0.311 | |||||||||

| PDEV | 0.060 | 0.111 | 0.150 | 0.230 | ||||||||

| PWB | 0.036 | 0.284 | 0.230 | 0.221 | 0.163 | |||||||

| AGE | 0.046 | 0.069 | 0.066 | 0.014 | 0.044 | 0.028 | ||||||

| TWO | 0.013 | 0.093 | 0.080 | 0.075 | 0.018 | 0.068 | 0.127 | |||||

| H | 0.027 | 0.036 | 0.044 | 0.121 | 0.019 | 0.068 | 0.300 | 0.266 | ||||

| G | 0.085 | 0.044 | 0.056 | 0.062 | 0.075 | 0.085 | 0.149 | 0.154 | 0.345 | |||

| PSUS | 0.068 | 0.144 | 0.160 | 0.536 | 0.232 | 0.162 | 0.038 | 0.055 | 0.043 | 0.014 | ||

| PSEV | 0.066 | 0.116 | 0.151 | 0.498 | 0.116 | 0.061 | 0.022 | 0.068 | 0.109 | 0.033 | 0.272 | |

| AA | 0.177 | 0.487 | 0.661 | 0.445 | 0.353 | 0.420 | 0.035 | 0.072 | 0.012 | 0.112 | 0.234 | 0.199 |

| Model 1: Private and Public Respondents (N = 324) | |

|---|---|

| With AA: | |

| PC: 1.030 | PWB: 1.145 |

| GAAIS+: 1.196 | AGE: 1.118 |

| GAAIS−: 1.236 | TWO: 1.106 |

| PT: 1.187 | H: 1.306 |

| PDEV: 1.079 | G: 1.165 |

| With PT: | |

| PSUS: 1.069 | PSEV: 1.068 |

| Bootstrapped Paths: Model 1 (N = 324) | ||||||

|---|---|---|---|---|---|---|

| Path | Est. | Boot. Mean | Boot. SD | T-Stat. | 2.5% CI | 97.5% CI |

| PC → AA | 0.074 | 0.077 | 0.031 | 2.391 * | 0.016 | 0.137 |

| GAAIS+ → AA | −0.211 | −0.212 | 0.049 | −4.251 *** | −0.310 | −0.115 |

| GAAIS− → AA | 0.414 | 0.413 | 0.053 | 7.765 *** | 0.311 | 0.519 |

| PT → AA | 0.169 | 0.170 | 0.049 | 3.410 *** | 0.073 | 0.266 |

| PDEV → AA | 0.177 | 0.175 | 0.047 | 3.702 *** | 0.082 | 0.269 |

| PWB → AA | 0.181 | 0.180 | 0.050 | 3.630 *** | 0.085 | 0.280 |

| AGE → AA | −0.005 | −0.006 | 0.038 | −0.150 | −0.080 | 0.068 |

| TWO → AA | −0.022 | −0.021 | 0.038 | −0.583 | −0.095 | 0.052 |

| H → AA | −0.018 | −0.018 | 0.037 | −0.492 | −0.091 | 0.056 |

| G → AA | −0.044 | −0.043 | 0.039 | −1.117 | −0.120 | 0.034 |

| PSUS → PT | 0.401 | 0.402 | 0.055 | 7.268 *** | 0.294 | 0.510 |

| PSEV → PT | 0.369 | 0.370 | 0.054 | 6.787 *** | 0.263 | 0.475 |
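One way to read the bootstrap table is to check, for each path, whether the 95% percentile interval excludes zero, and to approximate the t-statistic as the estimate divided by the bootstrap standard deviation. The sketch below is a reading aid under those assumptions, not the authors' procedure; the dictionary keys and helper name are hypothetical, and the values are the rounded figures from the table, so recomputed t-values differ slightly from the reported ones.

```python
# (estimate, bootstrap SD, 2.5% bound, 97.5% bound), keyed by predictor.
# Values are copied from the bootstrapped paths table for Model 1.
paths = {
    "PC": (0.074, 0.031, 0.016, 0.137),
    "GAAIS+": (-0.211, 0.049, -0.310, -0.115),
    "GAAIS-": (0.414, 0.053, 0.311, 0.519),
    "PT": (0.169, 0.049, 0.073, 0.266),
    "PDEV": (0.177, 0.047, 0.082, 0.269),
    "PWB": (0.181, 0.050, 0.085, 0.280),
    "AGE": (-0.005, 0.038, -0.080, 0.068),
    "TWO": (-0.022, 0.038, -0.095, 0.052),
    "H": (-0.018, 0.037, -0.091, 0.056),
    "G": (-0.044, 0.039, -0.120, 0.034),
    "PSUS": (0.401, 0.055, 0.294, 0.510),
    "PSEV": (0.369, 0.054, 0.263, 0.475),
}


def ci_excludes_zero(lower, upper):
    """True when both interval bounds lie on the same side of zero."""
    return lower > 0 or upper < 0


significant = [name for name, (_, _, lo, hi) in paths.items()
               if ci_excludes_zero(lo, hi)]

# Approximate t-statistics from the rounded table values.
approx_t = {name: est / sd for name, (est, sd, _, _) in paths.items()}

print("CI excludes zero:", significant)
print("Approximate t for PC:", round(approx_t["PC"], 2))
```

In this reading, the paths whose interval excludes zero are the same ones flagged with asterisks in the table, while the four control variables (AGE, TWO, H, G) have intervals that span zero.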

| Model 1 | |||||||||||

| PLS out-of-sample metrics: | |||||||||||

| | aa_1 | aa_2 | pt_1 | pt_2 | pt_3 | pt_4 | |||||

| RMSE: | 0.791 | 0.910 | 0.946 | 1.094 | 1.033 | 1.085 | |||||

| LM out-of-sample metrics: | |||||||||||

| RMSE: | 0.834 | 0.955 | 0.914 | 1.170 | 1.049 | 1.140 | |||||

| Model 2 | |||||||||||

| PLS out-of-sample metrics: | |||||||||||

| RMSE: | 0.777 | 0.800 | 0.989 | 1.115 | 1.033 | 1.111 | |||||

| LM out-of-sample metrics: | |||||||||||

| RMSE: | 0.943 | 0.970 | 1.009 | 1.308 | 1.229 | 1.249 | |||||

| Model 3 | |||||||||||

| PLS out-of-sample metrics: | |||||||||||

| RMSE: | 0.814 | 0.968 | 0.909 | 1.075 | 1.034 | 1.043 | |||||

| LM out-of-sample metrics: | |||||||||||

| RMSE: | 1.005 | 1.130 | 0.866 | 1.095 | 0.905 | 1.092 | |||||
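The out-of-sample table is usually read by comparing, indicator by indicator, the PLS RMSE with the RMSE of the linear-model (LM) benchmark. The sketch below counts the indicators for which PLS has the lower error and maps the count to a commonly cited rule of thumb (all indicators: high; a majority: medium; a minority: low; none: no predictive power). It assumes that the Model 2 and Model 3 columns follow the same indicator order as Model 1; the helper names and the handling of an exact tie at one half are hypothetical choices, and the paper may apply a different criterion.

```python
indicators = ["aa_1", "aa_2", "pt_1", "pt_2", "pt_3", "pt_4"]

# RMSE values copied from the table; column order for Models 2 and 3 is
# assumed to match Model 1.
rmse = {
    "Model 1": {"pls": [0.791, 0.910, 0.946, 1.094, 1.033, 1.085],
                "lm": [0.834, 0.955, 0.914, 1.170, 1.049, 1.140]},
    "Model 2": {"pls": [0.777, 0.800, 0.989, 1.115, 1.033, 1.111],
                "lm": [0.943, 0.970, 1.009, 1.308, 1.229, 1.249]},
    "Model 3": {"pls": [0.814, 0.968, 0.909, 1.075, 1.034, 1.043],
                "lm": [1.005, 1.130, 0.866, 1.095, 0.905, 1.092]},
}


def pls_wins(pls, lm):
    """Indicators for which the PLS model has a lower RMSE than the LM benchmark."""
    return [name for name, p, l in zip(indicators, pls, lm) if p < l]


def predictive_power(n_wins, n_total):
    """Rule-of-thumb label; exactly half is treated as 'low' (an assumption)."""
    if n_wins == n_total:
        return "high"
    if n_wins > n_total / 2:
        return "medium"
    if n_wins > 0:
        return "low"
    return "none"


for model, values in rmse.items():
    wins = pls_wins(values["pls"], values["lm"])
    print(model, len(wins), "of", len(indicators),
          predictive_power(len(wins), len(indicators)))
```

With the tabulated values, PLS has the lower RMSE for five of six indicators in Model 1, all six in Model 2, and four of six in Model 3.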

| 1 | Strohmeier (2020, p. 54) differentiates between “algorithmic decision-making in HRM” (ADM) and “human decision-making in HRM” (HDM). |

| 2 | In its simplest sense, an algorithm is a set of instructions expressed in a particular computer language such as Java, Python, or C++ that are used to solve a well-defined problem (Casilli 2019; Introna 2016, p. 21). Just like a recipe, it is then used to produce a result based on instructions. This conception is similar to that of Gillespie (2014, p. 1), who defines an algorithm as a “set of encoded procedures for transforming input data into a desired output, based on specified calculations”. Note that, depending on the data they are required to process, algorithms are divided into several fields that constitute artificial intelligence techniques such as natural language processing or machine learning (Prikshat et al. 2023, p. 5; Strohmeier 2022). |

| 3 | Mahmud et al. (2023, p. 17) differentiate between “rule-based AI decision systems” and “machine-learning-based decision systems”. The first term refers to the definition of an algorithm by Gillespie (2014). The latter refers to the evolutionary capacity of the decision system, enabling it to evolve over time. |

| 4 | For a non-HR reference, see Acharya et al. (2018). |

| 5 | “Job design ambiguity” (Böhmer and Schinnenburg 2023, p. 17). |

| 6 | “Transparency ambiguity” (Böhmer and Schinnenburg 2023, p. 18). |

| 7 | “Performance ambiguity” (ibid.). |

| 8 | “Data ambiguity” (ibid.). |

| 9 | Note, for example, Lee (2018). More recent studies specifically concerned with AA in HRM, such as those by Cecil et al. (2024), Fleiss et al. (2024), or Dargnies et al. (2024), appeared after the synthesis by Mahmud et al. (2022). However, these studies do not examine the same predictors, the same type of decision, or the same actors as our work. |

| 10 | Mahmud et al. (2022, p. 11) defined the latter as: “Individual factors related to algorithm aversion consist of a wide range of factors including psychological factors, personality traits, demography, and individuals’ familiarity with algorithms and tasks. By psychological factors, we imply those factors related to individuals’ reasoning, logic, thinking, and emotion in connection to algorithmic decisions”. |

| 11 | The original definition of the latter is: “The process by which the organization defines the profiles of the positions to be filled, selects the most suitable people to fill them and then ensures their integration” (Emery and Gonin 2009, p. 43). |

| 12 | |

| 13 | This distinction was confirmed three years later by Schepman and Rodway (2023). |

| 14 | “For the general attitudes, items that loaded onto the positive factor expressed societal or personal benefits of AI, or a preference of AI over humans in some contexts (e.g., in routine transactions), with some items capturing emotional matters (AI being exciting, impressive, making people happier, enhancing their wellbeing)” (Schepman and Rodway 2020, p. 11). |

| 15 | “In the negative subscale, more items were eliminated from the initial pool, and those that were retained were dominated by emotions (sinister, dangerous, discomfort, suffering for “people like me”), and dystopian views of the uses of AI (unethical, error prone-ness, taking control, spying)”. (ibid.) |

| 16 | Attitude is defined as “an individual’s positive or negative feelings about using AI for organizational decision-making” (Cao et al. 2021, p. 5). |

| 17 | Generally, for the impacts of the introduction of new IT and AI tools on individuals, see Agogo and Hess (2018), Vimalkumar et al. (2021), and Zhang (2013). |

| 18 | For the details: Older people tend to perceive algorithmic decisions as less useful (Araujo et al. 2020) and, as a result, to trust them less (Lourenço et al. 2020). Thurman et al. (2019) show, for example, that older people prefer news recommendations made to them by human beings rather than by algorithms. However, this link between age and algorithm aversion is not totally unequivocal. In the medical field, Ho et al. (2005) show that older people trust algorithms more than humans when it comes to decision support systems. Logg et al. (2019), on the other hand, point out that there is no association between age and algorithm aversion. In addition to these considerations, there are also studies in the field of AI-related digital inequalities. Lutz (2019) suggests that age is not in itself a barrier to the propensity of individuals to be comfortable with technology, but that context could explain a large part of individuals’ appetite for technical objects. In this respect, the Swiss context appears to be relatively favorable to the use of new technologies (Equey and Fragnière 2008). That said, we believe that the relative novelty of algorithms (Strohmeier 2022) encourages mistrust and, thus, the AA of older individuals. |

| 19 | For the details: A higher hierarchical level of respondents is sometimes associated with a greater appetite for information technology (El-Attar 2006). According to Campion (1989) or Sainsaulieu and Leresche (2023, p. 19), this is essentially due to the desire of managers, executives, or hierarchical superiors to increase control over their employees’ activities. The introduction of decision-making algorithms into the employee engagement process would enable them to do that. |

| 20 | For the details: The introduction of new technologies can represent a challenge for long-serving employees with well-established work routines. For example, new technologies can lead to changes in job responsibilities, increased workloads, additional training to learn how to use them, and even have a profound impact on an organization’s policies. People with certain unique or rare skills now threatened or called into question by a technical system may see its introduction as a challenge to their power (Crozier and Friedberg 1977). In short, since AI-based decision-making systems necessarily introduce change into organizations (Malik et al. 2020, 2023), and since the most senior individuals within organizations are the most likely to have mastered the rules, to possess habits, or to control areas of uncertainty (which, incidentally, they would not like to see called into question), we believe that seniority is positively associated with algorithmic aversion. |

| 21 | 324 × 100/2892 = 11.203%, where 324 is the total number of responses out of a potential 2892 respondents. Respondents took an average of 25 min to complete the survey. |

| 22 | A four-point Likert scale, unlike an odd-point scale—such as a five-point scale—does not offer a mid-point. This forces respondents to express a clearer opinion, avoiding the tendency to choose a neutral position. This is particularly useful for measuring attitudes, sentiments or opinions about sensitive subjects such as, here, privacy concerns related to AI, where neutrality could mask real feelings (Fowler 2013). |

| 23 | For 13 missing observations. |

| 24 | The remaining 44.4% of the variance, which the model leaves unexplained, suggests the presence of other influential factors not captured by this model. To address this, several potential drivers of algorithmic aversion could be considered. Those interested in completing our analysis could thus refer to the determinants identified by Mahmud et al. (2022), which are, however, not summarized in full in our theoretical framework, for the sake of brevity. The same reasoning applies to the other variances reported below. |

| 25 | The average latent construct in the private sector is 1.72, compared with 1.62 in the public sector. |

| 26 | “Misuse of personal data” (Tiwari 2022). |

| 27 | This stricter regulatory framework in public organizations might indeed lead to lower concerns about data security (LIPAD 2020; LPrD 2007). For example, research by Veale and Edwards (2018) on the implementation of General Data Protection Regulation (GDPR) in public institutions shows that compliance with data protection laws is often more rigorous in the public sector due to higher levels of accountability and transparency requirements. Furthermore, studies focusing on public administration, such as those by Bannister and Connolly (2014), emphasize that public sector employees typically operate under stricter internal audit and compliance measures, reducing their perceived vulnerability to data breaches. This could explain why, despite a higher coefficient of association between PC and AA, the relationship is not statistically significant in the public sector. On the other hand, private organizations, while also subject to GDPR, may not always apply the same level of stringent data protection practices, especially when balancing cost efficiency and compliance (Tiwari 2022). Therefore, HR employees in the private sector may experience higher concerns regarding algorithmic decision-making, especially in relation to the potential misuse of personal data during recruitment processes (Kim and Bodie 2020). |

| 28 | Such as the Cambridge Analytica scandal (Isaak and Hanna 2018). |

| 29 | Such as bias reduction in hiring (Köchling and Wehner 2020), predictive analytics for workforce planning (Alabi et al. 2024), and improvements in talent management and employee engagement (Tambe et al. 2019; Meijerink et al. 2021). |

| 30 | As our use of latent constructs illustrates, HR issues such as motivation and commitment are difficult to measure in the real world. |

| 31 | In other words, make them accessible to the public. |

| 32 | According to Seeman (1967), job alienation consists of five dimensions: powerlessness, meaninglessness, normlessness, isolation, and self-estrangement. This definition has inspired numerous studies, including Soffia et al. (2022). |

| 33 | Emery and Gonin (2009, pp. 332–33) briefly review the different ways in which humans are viewed within organizations: first, as an economic individual driven by greed (Taylor [1911] 1957); then, as a social individual drawn to and nourished by the interactions they experience within organizations (Deal and Kennedy 1983; Schein 2010); afterwards, as an individual in search of self-fulfillment (Emery and Gonin 2009, p. 332); and finally, as a complex person whose motivations within their organization are influenced by factors as diverse and varied as their individual career path, their hopes, their ambitions, and so on. This complexity is not without its contradictions, as Wüthrich et al. (2008) explain, requiring the organization to adapt its management to each individual and to personalize working conditions as much as possible. |

| 34 | The average latent construct in the private sector is 2.90, compared with 3.06 in the public sector. |

| 35 |

References

- Acharya, Abhilash, Sanjay Kumar Singh, Vijay Pereira, and Poonam Singh. 2018. Big data, knowledge co-creation and decision making in fashion industry. International Journal of Information Management 42: 90–101. [Google Scholar] [CrossRef]

- Acquisti, Alessandro, Laura Brandimarte, and George Loewenstein. 2015. Privacy and human behavior in the age of information. Science 347: 509–14. [Google Scholar] [CrossRef] [PubMed]

- Agogo, David, and Traci J. Hess. 2018. “How does tech make you feel?” a review and examination of negative affective responses to technology use. European Journal of Information Systems 27: 570–99. [Google Scholar] [CrossRef]

- Agrawal, Ajay, Joshua Gans, and Avi Goldfarb. 2017. How AI will change the way we make decisions. Harvard Business Review 26: 1–7. [Google Scholar]

- Alabi, Khadijat Oyindamola, Adegoke A. Adedeji, Samia Mahmuda, and Sunday Fowomo. 2024. Predictive Analytics in HR: Leveraging AI for Data-Driven Decision Making. International Journal of Research in Engineering, Science and Management 7: 137–43. [Google Scholar]

- Amadieu, Jean-François. 2013. DRH: Le Livre Noir. Paris: Média Diffusion. [Google Scholar]

- Anderfuhren-Biget, Simon, Frédéric Varone, David Giauque, and Adrian Ritz. 2010. Motivating employees of the public sector: Does public service motivation matter? International Public Management Journal 13: 213–46. [Google Scholar] [CrossRef]

- Araujo, Theo, Natali Helberger, Sanne Kruikemeier, and Claes H. de Vreese. 2020. In AI we trust? Perceptions about automated decision-making by artificial intelligence. AI & Society 35: 611–23. [Google Scholar]

- Arif, Imtiaz, Wajeeha Aslam, and Yujong Hwang. 2020. Barriers in adoption of internet banking: A structural equation modeling-Neural network approach. Technology in Society 61: 101231. [Google Scholar] [CrossRef]

- Azoulay, Eva, Pascal Lefebvre, and Rachel Marlin. 2020. Using artificial intelligence to diversify the recruitment process at L’Oréal. Le Journal de l’école de Paris du Management 142: 16–22. [Google Scholar]

- Bader, Jon, John Edwards, Chris Harris-Jones, and David Hannaford. 1988. Practical engineering of knowledge-based systems. Information and Software Technology 30: 266–77. [Google Scholar] [CrossRef]

- Baek, Tae Hyun, and Mariko Morimoto. 2012. Stay away from me. Journal of Advertising 41: 59–76. [Google Scholar] [CrossRef]

- Bannister, Frank, and Regina Connolly. 2014. ICT, public values and transformative government: A framework and programme for research. Government Information Quarterly 31: 119–28. [Google Scholar] [CrossRef]

- Baudoin, Emmanuel, Caroline Diard, Myriam Benabid, and Karim Cherif. 2019. Digital Transformation of the HR Function. Paris: Dunod. [Google Scholar]

- Berger, Benedikt, Martin Adam, Alexander Rühr, and Alexander Benlian. 2021. Watch me improve-algorithm aversion and demonstrating the ability to learn. Business & Information Systems Engineering 63: 55–68. [Google Scholar]

- Binns, Reuben, Max Van Kleek, Michael Veale, Ulrik Lyngs, Jun Zhao, and Nigel Shadbolt. 2018. ‘It’s Reducing a Human Being to a Percentage’ Perceptions of Justice in Algorithmic Decisions. Paper presented at 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, April 21–26; pp. 1–14. [Google Scholar]

- Blaikie, Norman. 2003. Analyzing Quantitative Data: From Description to Explanation. London: Sage. [Google Scholar]

- Blum, Benjamin, and Friedemann Kainer. 2019. Rechtliche Aspekte beim Einsatz von KI in HR: Wenn Algorithmen entscheiden. Personal Quarterly 71: 22–27. [Google Scholar]

- Bogert, Eric, Aaron Schecter, and Richard T. Watson. 2021. Humans rely more on algorithms than social influence as a task becomes more difficult. Scientific Reports 11: 8028. [Google Scholar] [CrossRef] [PubMed]

- Böhmer, Nicole, and Heike Schinnenburg. 2023. Critical exploration of AI-driven HRM to build up organizational capabilities. Employee Relations: The International Journal 45: 1057–82. [Google Scholar] [CrossRef]

- Borsboom, Denny, Gideon J. Mellenbergh, and Jaap van Heerden. 2004. The concept of validity. Psychological Review 111: 1061. [Google Scholar] [CrossRef]

- Broecke, Stijn. 2023. Artificial intelligence and labor market matching. OECD Working Papers on Social Issues, Employment and Migration 284: 1–52. [Google Scholar]

- Brougham, David, and Jarrod Haar. 2018. Smart technology, artificial intelligence, robotics, and algorithms (STARA): Employees’ perceptions of our future workplace. Journal of Management & Organization 24: 239–57. [Google Scholar]

- Browning, Vicky, Fiona Edgar, Brendan Gray, and Tony Garrett. 2009. Realising competitive advantage through HRM in New Zealand service industries. The Service Industries Journal 29: 741–60. [Google Scholar] [CrossRef]

- Brynjolfsson, Erik, and Andrew McAfee. 2014. The Second Machine Age: Work, Progress, and Prosperity in a Time of Brilliant Technologies. New York: W. W. Norton & Company. [Google Scholar]

- Campion, Emily D., and Michael A. Campion. 2024. Impact of machine learning on personnel selection. Organizational Dynamics 53: 101035. [Google Scholar] [CrossRef]

- Campion, Michael G. 1989. Technophilia and technophobia. Australasian Journal of Educational Technology 5: 1–10. [Google Scholar] [CrossRef][Green Version]

- Cao, Guangming, Yanqing Duan, John S. Edwards, and Yogesh K. Dwivedi. 2021. Understanding managers’ attitudes and behavioral intentions toward using artificial intelligence for organizational decision-making. Technovation 106: 102312. [Google Scholar] [CrossRef]

- Carpenter, Darrell, Diana K. Young, Paul Barrett, and Alexander J. McLeod. 2019. Refining technology threat avoidance theory. Communications of the Association for Information Systems 44: 1–40. [Google Scholar] [CrossRef]

- Casilli, Antonio. A. 2019. Waiting for the Robots-Investigating the Work of the Click. Paris: Média Diffusion. [Google Scholar]

- Cecil, Julia, Eva Lermer, Matthias F. C. Hudecek, Jan Sauer, and Susanne Gaube. 2024. Explainability does not mitigate the negative impact of incorrect AI advice in a personnel selection task. Scientific Reports 14: 9736. [Google Scholar] [CrossRef]

- Chen, Yan, and Fatemeh Mariam Zahedi. 2016. Individuals’ internet security perceptions and behaviors. MIS Quarterly 40: 205–22. [Google Scholar] [CrossRef]

- Chen, Zhisheng. 2023. Ethics and discrimination in artificial intelligence-enabled recruitment practices. Humanities and Social Sciences Communications 10: 567. [Google Scholar] [CrossRef]

- Cheung, Gordon W., Helena D. Cooper-Thomas, Rebecca S. Lau, and Linda C. Wang. 2023. Reporting reliability, convergent and discriminant validity with structural equation modeling: A review and best-practice recommendations. Asia Pacific Journal of Management 41: 745–783. [Google Scholar] [CrossRef]

- Coltman, Tim, Timothy M. Devinney, David F. Midgley, and Sunil Venaik. 2008. Formative versus reflective measurement models: Two applications of formative measurement. Journal of Business Research 61: 1250–62. [Google Scholar] [CrossRef]

- Connelly, Lynne M. 2016. Cross-sectional survey research. Medsurg Nursing 25: 369. [Google Scholar]

- Coron, Clotilde. 2023. Quantifying Human Resources: The Contributions of Economics and Sociology of Conventions. In Handbook of Economics and Sociology of Conventions. Cham: Springer International Publishing, pp. 1–19. [Google Scholar]

- Crozier, Michel, and Erhard Friedberg. 1977. L’acteur et le système. Paris: Seuil. [Google Scholar]

- Dargnies, Marie-Pierre, Rustamdjan Hakimov, and Dorothea Kübler. 2024. Aversion to hiring algorithms: Transparency, gender profiling, and self-confidence. Management Science. [Google Scholar] [CrossRef]

- Dastin, Jeffrey. 2022. Amazon scraps secret AI recruiting tool that showed bias against women. In Ethics of Data and Analytics. Boca Raton: Auerbach Publications, pp. 296–99. [Google Scholar]

- Datta, Amit, Michael Carl Tschantz, and Anupam Datta. 2014. Automated experiments on ad privacy settings: A tale of opacity, choice, and discrimination. arXiv:1408.6491. [Google Scholar]

- Davenport, Thomas H., and Rajeev Ronanki. 2018. Artificial intelligence for the real world. Harvard Business Review 96: 108–16. [Google Scholar]

- Deal, Terrence E., and Allan A. Kennedy. 1983. Corporate cultures: The rites and rituals of corporate life. Business Horizons 26: 82–85. [Google Scholar] [CrossRef]

- Delaney, Rob, and Robert D’Agostino. 2015. The Challenges of Integrating New Technology into an Organization. Philadelphia: La Salle University. [Google Scholar]

- Dietvorst, Berkeley J., Joseph P. Simmons, and Cade Massey. 2015. Algorithm aversion: People erroneously avoid algorithms after seeing them err. Journal of Experimental Psychology: General 144: 114. [Google Scholar] [CrossRef]

- Dietvorst, Berkeley J., Joseph P. Simmons, and Cade Massey. 2018. Overcoming algorithm aversion: People will use imperfect algorithms if they can (even slightly) modify them. Management Science 64: 1155–70. [Google Scholar] [CrossRef]

- Duggan, James, Ultan Sherman, Ronan Carbery, and Anthony McDonnell. 2020. Algorithmic management and app-work in the gig economy: A research agenda for employment relations and HRM. Human Resource Management Journal 30: 114–32. [Google Scholar] [CrossRef]

- Dwivedi, Yogesh K., Laurie Hughes, Elvira Ismagilova, Gert Aarts, Crispin Coombs, Tom Crick, Yanqing Duan, Rohita Dwivedi, John Edwards, Aled Eirug, et al. 2021. Artificial Intelligence (AI): Multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. International Journal of Information Management 57: 101994. [Google Scholar] [CrossRef]

- El-Attar, Sanabel El-Hakeem. 2006. User involvement and perceived usefulness of information technology. Ph.D. thesis, Mississippi State University, Mississippi State, MS, USA. [Google Scholar]

- Emery, Yves, and David Giauque. 2016. Administration and public agents in a post-bureaucratic environment. In The Actor and Bureaucracy in the 21st Century. Los Angeles: University of California, pp. 33–62. [Google Scholar]

- Emery, Yves, and David Giauque. 2023. Chapter 12: Human resources management. In Modèle IDHEAP d’administration publique: Vue d’ensemble. Edited by N. Soguel, P. Bundi, T. Mettler and S. Weerts. Lausanne: EPFL PRESS. [Google Scholar]

- Emery, Yves, and François Gonin. 2009. Gérer les Ressources Humaines: Des Théories aux Outils, un Concept Intégré par Processus, Compatible avec les Normes de Qualité. Lausanne: PPUR Presses Polytechniques. [Google Scholar]

- Engels, Friedrich. 1845. The Condition of the Working Class. Leipzig: Otto Wigand. [Google Scholar]

- Equey, Catherine, and Emmanuel Fragnière. 2008. Elements of perception regarding the implementation of ERP systems in Swiss SMEs. International Journal of Enterprise Information Systems (IJEIS) 4: 1–8. [Google Scholar] [CrossRef][Green Version]

- European Commission. 2020. White Paper on Artificial Intelligence—A European Approach to Excellence and Trust. Available online: https://commission.europa.eu/publications/white-paper-artificial-intelligence-european-approach-excellence-and-trust_en (accessed on 15 May 2024).

- Fleiss, Jürgen, Elisabeth Bäck, and Stefan Thalmann. 2024. Mitigating algorithm aversion in recruiting: A study on explainable AI for conversational agents. ACM SIGMIS Database: The DATABASE for Advances in Information Systems 55: 56–87. [Google Scholar] [CrossRef]

- Floridi, Luciano, and Josh Cowls. 2022. A unified framework of five principles for AI in society. In Machine Learning and the City: Applications in Architecture and Urban Design. Hoboken: Wiley, pp. 535–45. [Google Scholar]

- Fowler, Floyd J., Jr. 2013. Survey Research Methods, 4th ed. Thousand Oaks: SAGE Publications. [Google Scholar]

- Fritts, Megan, and Frank Cabrera. 2021. AI recruitment algorithms and the dehumanization problem. Ethics and Information Technology 23: 791–801. [Google Scholar] [CrossRef]

- Gao, Shuqing, Lingnan He, Yue Chen, Dan Li, and Kaisheng Lai. 2020. Public perception of artificial intelligence in medical care: Content analysis of social media. Journal of Medical Internet Research 22: e16649. [Google Scholar] [CrossRef] [PubMed]

- García-Arroyo, José A., Amparo Osca Segovia, and José M. Peiró. 2019. Meta-analytical review of teacher burnout across 36 societies: The role of national learning assessments and gender egalitarianism. Psychology & Health 34: 733–53. [Google Scholar]

- Ghosh, Adarsh, and Devasenathipathy Kandasamy. 2020. Interpretable artificial intelligence: Why and when. American Journal of Roentgenology 214: 1137–38. [Google Scholar] [CrossRef] [PubMed]

- Gillespie, Tarleton. 2014. The relevance of algorithms. In Media Technologies: Essays on Communication, Materiality, and Society. Cambridge: MIT Press, pp. 167–94. [Google Scholar]

- Grosz, Barbara J., Russ Altman, Eric Horvitz, Alan Mackworth, Tom Mitchell, Deirdre Mulligan, and Yoav Shoham. 2016. Artificial Intelligence and Life in 2030: One Hundred Year Study on Artificial Intelligence. Stanford: Stanford University. [Google Scholar]

- Hair, Joseph F., Jr., G. Tomas M. Hult, Christian M. Ringle, Marko Sarstedt, Nicholas P. Danks, and Soumya Ray. 2021. Partial Least Squares Structural Equation Modeling (PLS-SEM) Using R: A Workbook. Berlin: Springer Nature, p. 197. [Google Scholar]

- Hanafiah, Mohd Hafiz. 2020. Formative vs. reflective measurement model: Guidelines for structural equation modeling research. International Journal of Analysis and Applications 18: 876–89. [Google Scholar]

- Henseler, Jörg, Christian M. Ringle, and Marko Sarstedt. 2015. A new criterion for assessing discriminant validity in variance-based structural equation modeling. Journal of the Academy of Marketing Science 43: 115–35. [Google Scholar] [CrossRef]

- Hill, Stephen. 1981. Competition and Control at Work. London: Heinemann Educational Books. [Google Scholar]

- Ho, Geoffrey, Dana Wheatley, and Charles T. Scialfa. 2005. Age differences in trust and reliance of a medication management system. Interacting with Computers 17: 690–710. [Google Scholar] [CrossRef]

- Höddinghaus, Miriam, Dominik Sondern, and Guido Hertel. 2021. The automation of leadership functions: Would people trust decision algorithms? Computers in Human Behavior 116: 106635. [Google Scholar] [CrossRef]

- Huff, Jonas, and Thomas Götz. 2020. Was datengestütztes Personalmanagement kann und darf. Personalmagazin 1: 48–52. [Google Scholar]

- Introna, Lucas D. 2016. Algorithms, governance, and governmentality: On governing academic writing. Science, Technology, & Human Values 41: 17–49. [Google Scholar]

- Isaak, Jim, and Mina J. Hanna. 2018. User data privacy: Facebook, Cambridge Analytica, and privacy protection. Computer 51: 56–59. [Google Scholar] [CrossRef]

- Jantan, Hamidah, Abdul Razak Hamdan, and Zulaiha Ali Othman. 2010a. Human talent prediction in HRM using C4.5 classification algorithm. International Journal on Computer Science and Engineering 2: 2526–34. [Google Scholar]

- Jantan, Hamidah, Abdul Razak Hamdan, and Zulaiha Ali Othman. 2010b. Intelligent techniques for decision support system in human resource management. Decision Support Systems 78: 261–76. [Google Scholar]

- Jia, Qiong, Yue Guo, Rong Li, Yurong Li, and Yuwei Chen. 2018. A conceptual artificial intelligence application framework in human resource management. Paper presented at 18th International Conference on Electronic Business, ICEB, Guilin, China, December 2–6; pp. 106–14. [Google Scholar]

- Jia, Qiong, Shan Wang, and Wenxue Chen. 2023. Research on the relationship between digital job crafting, algorithm aversion, and job performance in the digital transformation. Paper presented at International Conference on Electronic Business, Chiayi, Taiwan, October 19–23. [Google Scholar]

- Johnson, Brad A. M., Jerrell D. Coggburn, and Jared J. Llorens. 2022. Artificial intelligence and public human resource management: Questions for research and practice. Public Personnel Management 51: 538–62. [Google Scholar] [CrossRef]

- Kang, In Gu, Ben Croft, and Barbara A. Bichelmeyer. 2020. Predictors of turnover intention in U.S. federal government workforce: Machine learning evidence that perceived comprehensive HR practices predict turnover intention. Public Personnel Management 50: 009102602097756. [Google Scholar] [CrossRef]

- Kawaguchi, Kohei. 2021. When will workers follow an algorithm? A field experiment with a retail business. Management Science 67: 1670–95. [Google Scholar] [CrossRef]

- Kim, Pauline T., and Matthew T. Bodie. 2020. Artificial intelligence and the challenges of workplace discrimination and privacy. ABA Journal of Labor & Employment Law 35: 289. [Google Scholar]

- Kim, Sunghoon, Ying Wang, and Corine Boon. 2021. Sixty years of research on technology and human resource management: Looking back and looking forward. Human Resource Management 60: 229–47. [Google Scholar] [CrossRef]

- Köchling, Alina, and Marius Claus Wehner. 2020. Discriminated by an algorithm: A systematic review of discrimination and fairness by algorithmic decision-making in the context of HR recruitment and HR development. Business Research 13: 795–848. [Google Scholar] [CrossRef]

- Kozlowski, Steve W. J. 1987. Technological innovation and strategic HRM: Facing the challenge of change. Human Resource Planning 10: 69. [Google Scholar]

- Ladner, Andreas, and Alexander Haus. 2021. Aufgabenerbringung der Gemeinden in der Schweiz: Organisation, Zuständigkeiten und Auswirkungen. Lausanne: IDHEAP Institut de Hautes Études en Administration Publique. [Google Scholar]

- Ladner, Andreas, Nicolas Keuffer, Harald Baldersheim, Nikos Hlepas, Pawel Swianiewicz, Kristof Steyvers, and Carmen Navarro. 2019. Patterns of Local Autonomy in Europe. London: Palgrave Macmillan, pp. 229–30. [Google Scholar]

- Lalive D’Épinay, Christian, and Carlos Garcia. 1988. Le Mythe du Travail en Suisse. Genève: Georg éditeur. [Google Scholar]

- Lambrecht, Anja, and Catherine Tucker. 2019. Algorithmic bias? An empirical study of apparent gender-based discrimination in the display of STEM career ads. Management Science 65: 2966–81. [Google Scholar] [CrossRef]

- Langer, Markus, and Cornelius J. König. 2023. Introducing a multi-stakeholder perspective on opacity, transparency and strategies to reduce opacity in algorithm-based human resource management. Human Resource Management Review 33: 100881. [Google Scholar] [CrossRef]

- Lawler, John J., and Robin Elliot. 1993. Artificial intelligence in HRM: An experimental study of an expert system. Paper presented at 1993 Conference on Computer Personnel Research, St Louis, MO, USA, April 1–3; pp. 473–80. [Google Scholar]

- Lee, Min Kyung. 2018. Understanding perception of algorithmic decisions: Fairness, trust, and emotion in response to algorithmic management. Big Data and Society 5: 2053951718756684. [Google Scholar] [CrossRef]

- Leicht-Deobald, Ulrich, Thorsten Busch, Christoph Schank, Antoinette Weibel, Simon Schafheitle, Isabelle Wildhaber, and Gabriel Kasper. 2022. The challenges of algorithm-based HR decision-making for personal integrity. In Business and the Ethical Implications of Technology. Cham: Springer Nature Switzerland, pp. 71–86. [Google Scholar]

- Lennartz, Simon, Thomas Dratsch, David Zopfs, Thorsten Persigehl, David Maintz, Nils Große Hokamp, and Daniel Pinto dos Santos. 2021. Use and control of artificial intelligence in patients across the medical workflow: Single-center questionnaire study of patient perspectives. Journal of Medical Internet Research 23: e24221. [Google Scholar] [CrossRef]

- Leong, Lai Ying, Teck Soon Hew, Keng-Boon Ooi, and June Wei. 2020. Predicting mobile wallet resistance: A two-staged structural equation modeling-artificial neural network approach. International Journal of Information Management 51: 102047. [Google Scholar] [CrossRef]

- LIPAD. 2020. Law on Public Information, Access to Documents and Protection of Personal Data. Available online: https://silgeneve.ch/legis/index.aspx (accessed on 5 July 2024).

- Litterscheidt, Rouven, and David J. Streich. 2020. Financial education and digital asset management: What’s in the black box? Journal of Behavioral and Experimental Economics 87: 101573. [Google Scholar] [CrossRef]

- Logg, Jennifer M., Julia A. Minson, and Don A. Moore. 2019. Algorithm appreciation: People prefer algorithmic to human judgment. Organizational Behavior and Human Decision Processes 151: 90–103. [Google Scholar] [CrossRef]

- Lourenço, Carlos J. S., Benedict G. C. Dellaert, and Bas Donkers. 2020. Whose algorithm says so: The relationships between type of firm, perceptions of trust and expertise, and the acceptance of financial robo-advice. Journal of Interactive Marketing 49: 107–24. [Google Scholar] [CrossRef]

- LPrD. 2007. Personal Data Protection Act. Available online: https://prestations.vd.ch/pub/blv-publication/actes/consolide/172.65?key=1543934892528&id=cf9df545-13f7-4106-a95b-9b3ab8fa8b01 (accessed on 4 July 2024).

- Luo, Xueming, Siliang Tong, Zheng Fang, and Zhe Qu. 2019. Frontiers: Machines vs. humans: The impact of artificial intelligence chatbot disclosure on customer purchases. Marketing Science 38: 937–47. [Google Scholar] [CrossRef]

- Lutz, Christoph. 2019. Digital inequalities in the age of artificial intelligence and big data. Human Behavior and Emerging Technologies 1: 141–48. [Google Scholar] [CrossRef]

- Mahmud, Hasan, A. K. M. Najmul Islam, and Ranjan Kumar Mitra. 2023. What drives managers towards algorithm aversion and how to overcome it? Mitigating the impact of innovation resistance through technology readiness. Technological Forecasting and Social Change 193: 122641. [Google Scholar] [CrossRef]

- Mahmud, Hasan, A. K. M. Najmul Islam, Syed Ishtiaque Ahmed, and Kari Smolander. 2022. What influences algorithmic decision-making? A systematic literature review on algorithm aversion. Technological Forecasting and Social Change 175: 121390. [Google Scholar] [CrossRef]

- Malik, Ashish, Pawan Budhwar, and Bahar Ali Kazmi. 2023. Artificial intelligence (AI)-assisted HRM: Towards an extended strategic framework. Human Resource Management Review 33: 100940. [Google Scholar] [CrossRef]

- Malik, Ashish, N. R. Srikanth, and Pawan Budhwar. 2020. Digitisation, artificial intelligence (AI) and HRM. In Human Resource Management: Strategic and International Perspectives, 3rd ed. Edited by Jonathan Crawshaw, Pawan Budhwar and Angela Davis. Los Angeles: SAGE Publications Ltd., pp. 88–111. [Google Scholar]

- Meijerink, Jeroen, Mark Boons, Anne Keegan, and Janet Marler. 2021. Algorithmic human resource management: Synthesizing developments and cross-disciplinary insights on digital HRM. The International Journal of Human Resource Management 32: 2545–62. [Google Scholar] [CrossRef]

- Melick, Sarah Ruth. 2020. Development and Validation of a Measure of Algorithm Aversion. Bowling Green: Bowling Green State University. [Google Scholar]

- Mer, Akansha, and Amarpreet Singh Virdi. 2023. Navigating the paradigm shift in HRM practices through the lens of artificial intelligence: A post-pandemic perspective. In The Adoption and Effect of Artificial Intelligence on Human Resources Management, Part A. Bingley: Emerald Publishing Limited, pp. 123–54. [Google Scholar]

- Merhbene, Ghofrane, Sukanya Nath, Alexandre R. Puttick, and Mascha Kurpicz-Briki. 2022. BurnoutEnsemble: Augmented intelligence to detect indications for burnout in clinical psychology. Frontiers in Big Data 5: 863100. [Google Scholar] [CrossRef]

- Metcalf, Lynn, David A. Askay, and Louis B. Rosenberg. 2019. Keeping humans in the loop: Pooling knowledge through artificial swarm intelligence to improve business decision making. California Management Review 61: 84–109. [Google Scholar] [CrossRef]

- Meuter, Matthew L., Amy L. Ostrom, Mary Jo Bitner, and Robert Roundtree. 2003. The influence of technology anxiety on consumer use and experiences with self-service technologies. Journal of Business Research 56: 899–906. [Google Scholar] [CrossRef]

- Mohamed, Syaiful Anwar, Moamin A. Mahmoud, Mohammed Najah Mahdi, and Salama A. Mostafa. 2022. Improving efficiency and effectiveness of robotic process automation in human resource management. Sustainability 14: 3920. [Google Scholar] [CrossRef]

- Nagtegaal, Rosanna. 2021. The impact of using algorithms for managerial decisions on public employees’ procedural justice. Government Information Quarterly 38: 101536. [Google Scholar] [CrossRef]

- Newell, Sue, and Marco Marabelli. 2020. Strategic opportunities (and challenges) of algorithmic decision-making: A call for action on the long-term societal effects of ‘datification’. In Strategic Information Management. London: Routledge, pp. 430–49. [Google Scholar]

- Nolan, Kevin P., Nathan T. Carter, and Dev K. Dalal. 2016. Threat of technological unemployment: Are hiring managers discounted for using standardized employee selection practices? Personnel Assessment and Decisions 2: 4. [Google Scholar] [CrossRef]

- OFS. 2021. Regional Portraits and Key Figures for All Municipalities. Available online: https://www.bfs.admin.ch/bfs/fr/home/statistiques/statistique-regions/portraits-regionaux-chiffres-cles/cantons/uri.html (accessed on 3 November 2023).

- Önkal, Dilek, Paul Goodwin, Mary Thomson, Sinan Gönül, and Andrew Pollock. 2009. The relative influence of advice from human experts and statistical methods on forecast adjustments. Journal of Behavioral Decision Making 22: 390–409. [Google Scholar] [CrossRef]

- Ozili, Peterson K. 2023. The acceptable R-square in empirical modelling for social science research. In Social Research Methodology and Publishing Results: A Guide to Non-Native English Speakers. Hershey: IGI Global, pp. 134–43. [Google Scholar]

- Pandey, Sanjay K., Bradley E. Wright, and Donald P. Moynihan. 2008. Public service motivation and interpersonal citizenship behavior in public organizations: Testing a preliminary model. International Public Management Journal 11: 89–108. [Google Scholar] [CrossRef]

- Park, Hyanghee, Daehwan Ahn, Kartik Hosanagar, and Joonhwan Lee. 2021. Human-AI interaction in human resource management: Understanding why employees resist algorithmic evaluation at workplaces and how to mitigate burdens. Paper presented at 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, May 8–13; pp. 1–15. [Google Scholar]

- Pee, L. G., Shan L. Pan, and Lili Cui. 2019. Artificial intelligence in healthcare robots: A social informatics study of knowledge embodiment. Journal of the Association for Information Science and Technology 70: 351–69. [Google Scholar] [CrossRef]

- Persson, Marcus, and Andreas Wallo. 2022. Automation and public service values in human resource management. In Service Automation in the Public Sector: Concepts, Empirical Examples and Challenges. Cham: Springer International Publishing, pp. 91–108. [Google Scholar]

- Pessach, Dana, Gonen Singer, Dan Avrahami, Hila Chalutz Ben-Gal, Erez Shmueli, and Irad Ben-Gal. 2020. Employees recruitment: A prescriptive analytics approach via machine learning and mathematical programming. Decision Support Systems 134: 113290. [Google Scholar] [CrossRef]

- Podsakoff, Philip M., Scott B. MacKenzie, and Nathan P. Podsakoff. 2012. Sources of method bias in social science research and recommendations on how to control it. Annual Review of Psychology 63: 539–69. [Google Scholar] [CrossRef]

- Prahl, Andrew, and Lyn Van Swol. 2017. Understanding algorithm aversion: When is advice from automation discounted? Journal of Forecasting 36: 691–702. [Google Scholar] [CrossRef]

- Prahl, Andrew, and Lyn Van Swol. 2021. Out with the humans, in with the machines?: Investigating the behavioral and psychological effects of replacing human advisors with a machine. Human-Machine Communication 2: 209–34. [Google Scholar] [CrossRef]

- Prikshat, Verma, Ashish Malik, and Pawan Budhwar. 2023. AI-augmented HRM: Antecedents, assimilation and multilevel consequences. Human Resource Management Review 33: 100860. [Google Scholar] [CrossRef]

- Raisch, Sebastian, and Sebastian Krakowski. 2021. Artificial intelligence and management: The automation–augmentation paradox. Academy of Management Review 46: 192–210. [Google Scholar] [CrossRef]

- Ram, Sudha, and Jagdish N. Sheth. 1989. Consumer resistance to innovations: The marketing problem and its solutions. Journal of Consumer Marketing 6: 5–14. [Google Scholar] [CrossRef]

- Raub, McKenzie. 2018. Bots, Bias and Big Data: Artificial Intelligence, Algorithmic Bias and Disparate Impact Liability in Hiring Practices. Arkansas Law Review 71: 529. Available online: https://scholarworks.uark.edu/alr/vol71/iss2/7 (accessed on 8 July 2024).

- Ringle, Christian M., Marko Sarstedt, Rebecca Mitchell, and Siegfried P. Gudergan. 2020. Partial least squares structural equation modeling in HRM research. The International Journal of Human Resource Management 31: 1617–43. [Google Scholar] [CrossRef]

- Ritz, Adrian, Kristina S. Weißmüller, and Timo Meynhardt. 2022. Public value at cross points: A comparative study on employer attractiveness of public, private, and nonprofit organizations. Review of Public Personnel Administration 43: 528–56. [Google Scholar] [CrossRef]

- Rodgers, Waymond, James M. Murray, Abraham Stefanidis, William Y. Degbey, and Shlomo Y. Tarba. 2023. An artificial intelligence algorithmic approach to ethical decision-making in human resource management processes. Human Resource Management Review 33: 100925. [Google Scholar] [CrossRef]

- Rodney, Harriet, Katarina Valaskova, and Pavol Durana. 2019. The artificial intelligence recruitment process: How technological advancements have reshaped job application and selection practices. Psychosociological Issues in Human Resource Management 7: 42–47. [Google Scholar]

- Rouanet, Henri, and Bruno Leclerc. 1970. The role of the normal distribution in statistics. Mathématiques et Sciences Humaines 32: 57–74. [Google Scholar]

- Sainsaulieu, Ivan, and Jean-Philippe Leresche. 2023. C’est qui ton chef?! Sociologie du leadership en Suisse. Lausanne: PPUR Presses Polytechniques. [Google Scholar]

- Saukkonen, Juha, Pia Kreus, Nora Obermayer, Óscar Rodríguez Ruiz, and Maija Haaranen. 2019. AI, RPA, ML and other emerging technologies: Anticipating adoption in the HRM field. Paper presented at ECIAIR 2019 European Conference on the Impact of Artificial Intelligence and Robotics, Oxford, UK, October 31–November 1; Manchester: Academic Conferences and Publishing Limited, vol. 287. [Google Scholar]

- Schein, Edgar H. 2010. Organizational Culture and Leadership. Hoboken: John Wiley & Sons, vol. 2. [Google Scholar]

- Schepman, Astrid, and Paul Rodway. 2020. Initial validation of the general attitudes toward Artificial Intelligence Scale. Computers in Human Behavior Reports 1: 100014. [Google Scholar] [CrossRef]

- Schepman, Astrid, and Paul Rodway. 2023. The General Attitudes toward Artificial Intelligence Scale (GAAIS): Confirmatory validation and associations with personality, corporate distrust, and general trust. International Journal of Human-Computer Interaction 39: 2724–41. [Google Scholar] [CrossRef]

- Schinnenburg, Heike, and Christoph Brüggemann. 2018. Predictive HR-Analytics. Möglichkeiten und Grenzen des Einsatzes im Personalbereich. ZFO-Zeitschrift Führung und Organisation 87: 330–36. [Google Scholar]

- Schuetz, Sebastian, and Viswanath Venkatesh. 2020. The rise of human machines: How cognitive computing systems challenge assumptions of user-system interaction. Journal of the Association for Information Systems 21: 460–82. [Google Scholar] [CrossRef]

- Sciarini, Pascal. 2023. Politique Suisse: Institutions, Acteurs, Processus. Lausanne: Presses Polytechniques et Universitaires Romandes. [Google Scholar]

- Seeman, Melvin. 1967. On the personal consequences of alienation in work. American Sociological Review 32: 273–85. [Google Scholar] [CrossRef]

- Shrestha, Yash Raj, Shiko M. Ben-Menahem, and Georg von Krogh. 2019. Organizational decision-making structures in the age of artificial intelligence. California Management Review 61: 66–83. [Google Scholar] [CrossRef]

- Soffia, Magdalena, Alex J. Wood, and Brendan Burchell. 2022. Alienation is not ‘Bullshit’: An empirical critique of Graeber’s theory of BS jobs. Work, Employment and Society 36: 816–40. [Google Scholar] [CrossRef]

- Song, Yuegang, and Ruibing Wu. 2021. Analysing human-computer interaction behaviour in human resource management system based on artificial intelligence technology. Knowledge Management Research & Practice 19: 1–10. [Google Scholar]

- Spyropoulos, Basilis, and George Papagounos. 1995. A theoretical approach to artificial intelligence systems in medicine. Artificial Intelligence in Medicine 7: 455–65. [Google Scholar] [CrossRef] [PubMed]

- Stone, Dianna L., and Kimberly M. Lukaszewski. 2024. Artificial intelligence can enhance organizations and our lives: But at what price? Organizational Dynamics 53: 101038. [Google Scholar] [CrossRef]

- Strohmeier, Stefan, ed. 2022. Handbook of Research on Artificial Intelligence in Human Resource Management. Cheltenham: Edward Elgar Publishing. [Google Scholar]

- Strohmeier, Stefan. 2020. Algorithmic Decision Making in HRM. In Encyclopedia of Electronic HRM. Berlin and Boston: De Gruyter Oldenbourg, pp. 54–60. [Google Scholar]

- Strohmeier, Stefan, and Franca Piazza. 2015. Artificial intelligence techniques in human resource management—A conceptual exploration. In Intelligent Techniques in Engineering Management: Theory and Applications. Berlin: Springer, pp. 149–72. [Google Scholar]

- Sultana, Sharifa, Md. Mobaydul Haque Mozumder, and Syed Ishtiaque Ahmed. 2021. Chasing Luck: Data-driven Prediction, Faith, Hunch, and Cultural Norms in Rural Betting Practices. Paper presented at 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, May 8–13; pp. 1–17. [Google Scholar]

- Sutherland, Steven C., Casper Harteveld, and Michael E. Young. 2016. Effects of the advisor and environment on requesting and complying with automated advice. ACM Transactions on Interactive Intelligent Systems (TiiS) 6: 1–36. [Google Scholar] [CrossRef]

- Syam, Sid S., and James F. Courtney. 1994. The case for research in decision support systems. European Journal of Operational Research 73: 450–57. [Google Scholar] [CrossRef]

- Tambe, Prasanna, Peter Cappelli, and Valery Yakubovich. 2019. Artificial intelligence in human resources management: Challenges and a path forward. California Management Review 61: 15–42. [Google Scholar] [CrossRef]

- Tarafdar, Monideepa, Cary L. Cooper, and Jean-François Stich. 2019. The technostress trifecta—techno eustress, techno distress and design: Theoretical directions and an agenda for research. Information Systems Journal 29: 6–42. [Google Scholar] [CrossRef]

- Taylor, Frederick Winslow. 1957. La Direction Scientifique des Entreprises. Paris: Dunod. First published 1911. [Google Scholar]

- Thurman, Neil, Judith Moeller, Natali Helberger, and Damian Trilling. 2019. My friends, editors, algorithms, and I: Examining audience attitudes to news selection. Digital Journalism 7: 447–69. [Google Scholar] [CrossRef]

- Tiwari, Prakhar. 2022. Misuse of personal data by social media giants. Jus Corpus LJ 3: 1041. [Google Scholar]

- United Nations Development Programme. 2024. Human Development Report 2023/2024: Unstuck: Rethinking Cooperation in a Polarized World. Available online: https://hdr.undp.org/system/files/documents/global-report-document/hdr2023-24snapshoten.pdf (accessed on 4 July 2024).

- van den Broek, Elmira, Anastasia Sergeeva, and Marleen Huysman. 2019. Hiring algorithms: An ethnography of fairness in practice. In Association for Information Systems. Athens: AIS Electronic Library (AISeL). [Google Scholar]

- van Dongen, Kees, and Peter-Paul van Maanen. 2013. A framework for explaining reliance on decision aids. International Journal of Human-Computer Studies 71: 410–24. [Google Scholar] [CrossRef]

- van Esch, Patrick, J. Stewart Black, and Denni Arli. 2021. Job candidates’ reactions to AI-enabled job application processes. AI and Ethics 1: 119–30. [Google Scholar] [CrossRef]

- Varma, Arup, Vijay Pereira, and Parth Patel. 2024. Artificial intelligence and performance management. Organizational Dynamics 53: 101037. [Google Scholar] [CrossRef]

- Veale, Michael, and Lilian Edwards. 2018. Clarity, surprises, and further questions in the Article 29 Working Party draft guidance on automated decision-making and profiling. Computer Law & Security Review 34: 398–404. [Google Scholar]

- Venkatesh, Viswanath. 2022. Adoption and use of AI tools: A research agenda grounded in UTAUT. Annals of Operations Research 308: 641–52. [Google Scholar] [CrossRef]

- Venkatesh, Viswanath, Michael G. Morris, Gordon B. Davis, and Fred D. Davis. 2003. User acceptance of information technology: Toward a unified view. MIS Quarterly 27: 425–78. [Google Scholar] [CrossRef]

- Venkatesh, Viswanath, James Y. L. Thong, and Xin Xu. 2016. Unified theory of acceptance and use of technology: A synthesis and the road ahead. Journal of the Association for Information Systems 17: 328–76. [Google Scholar] [CrossRef]

- Vimalkumar, M., Sujeet Kumar Sharma, Jang Bahadur Singh, and Yogesh K. Dwivedi. 2021. ‘Okay Google, what about my privacy?’: User’s privacy perceptions and acceptance of voice-based digital assistants. Computers in Human Behavior 120: 106763. [Google Scholar] [CrossRef]

- Vrontis, Demetris, Michael Christofi, Vijay Pereira, Shlomo Tarba, Anna Makrides, and Eleni Trichina. 2021. Artificial intelligence, robotics, advanced technologies and human resource management: A systematic review. The International Journal of Human Resource Management 33: 1237–66. [Google Scholar] [CrossRef]

- Williams, Larry J., and Ernest H. O’Boyle, Jr. 2008. Measurement models for linking latent variables and indicators: A review of human resource management research using parcels. Human Resource Management Review 18: 233–42. [Google Scholar] [CrossRef]

- Workman, Michael. 2005. Expert decision support system use, disuse, and misuse: A study using the theory of planned behavior. Computers in Human Behavior 21: 211–31. [Google Scholar] [CrossRef]

- Wüthrich, Hans A., Dirk Osmetz, and Stefan Kaduk. 2008. Musterbrecher: Führung neu Leben. Berlin: Springer. [Google Scholar]

- Xiao, Qijie, Jiaqi Yan, and Greg J. Bamber. 2023. How does AI-enabled HR analytics influence employee resilience: Job crafting as a mediator and HRM system strength as a moderator. Personnel Review.

- Yamakawa, Yasuhiro, Mike W. Peng, and David L. Deeds. 2008. What drives new ventures to internationalize from emerging to developed economies? Entrepreneurship Theory and Practice 32: 59–82.

- Zahedi, Fatemeh Mariam, Ahmed Abbasi, and Yan Chen. 2015. Fake-website detection tools: Identifying elements that promote individuals’ use and enhance their performance. Journal of the Association for Information Systems 16: 2.

- Zhang, Lixuan, Iryna Pentina, and Yuhong Fan. 2021. Who do you choose? Comparing perceptions of human vs robo-advisor in the context of financial services. Journal of Services Marketing 35: 634–46.

- Zhang, Ping. 2013. The affective response model: A theoretical framework of affective concepts and their relationships in the ICT context. MIS Quarterly 37: 247–74.

- Zhou, Yu, Lijun Wang, and Wansi Chen. 2023. The dark side of AI-enabled HRM on employees based on AI algorithmic features. Journal of Organizational Change Management 36: 1222–41.

- Zu, Shicheng, and Xiulai Wang. 2019. Resume information extraction with a novel text block segmentation algorithm. International Journal on Natural Language Computing 8: 29–48.

- Zuboff, Shoshana. 2019. Surveillance capitalism and the challenge of collective action. In New Labor Forum. Los Angeles: SAGE Publications, vol. 28, pp. 10–29.

| Variable | % | Variable | % |
|---|---|---|---|
| Gender | | Linguistic area | |
| Woman | 49.69 | French-speaking Switzerland | 44.44 |
| Man | 43.21 | German-speaking Switzerland | 42.90 |
| Other | 0.00 | Italian-speaking Switzerland | 6.17 |
| NA | 7.10 | NA | 6.48 |
| Hierarchical pos. | | Time with organization | |
| Employee | 25.31 | <1 | 7.10 |
| Proximity exec. | 4.32 | 1–3 | 15.43 |
| Middle manager | 23.77 | 3–5 | 15.74 |
| Exec. manager | 39.51 | 5–10 | 22.22 |
| NA | 7.10 | >10 | 31.48 |
| | | NA | 8.02 |
| Age | | Level of activity (federalism) | |
| <18 | 0.00 | International | 30.86 |
| 18–25 | 0.00 | Federal | 27.47 |
| 26–34 | 4.63 | Cantonal | 14.51 |
| 35–44 | 17.59 | Communal | 20.06 |
| 45–54 | 43.83 | NA | 7.10 |
| 55–64 | 25.93 | Private/Public | |
| >65 | 0.00 | Private | 48.46 |
| NA | 8.02 | Public or semi-public | 47.53 |
| | | NA | 4.01 |

| | AA | PT |
|---|---|---|
| R² | 0.569 | 0.372 |
| R² adjusted | 0.556 | 0.369 |
| PC | 0.074 * | / |
| GAAIS+ | −0.211 *** | / |
| GAAIS− | 0.414 *** | / |
| PT | 0.169 *** | / |
| PDEV | 0.177 *** | / |
| PWB | 0.181 *** | / |
| AGE | −0.006 | / |
| TWO | −0.022 | / |
| H | −0.019 | / |
| G | −0.044 | / |
| PSUS | / | 0.402 *** |
| PSEV | / | 0.369 *** |

| | AA: Private Sector | AA: Public Sector | PT: Private Sector | PT: Public Sector |
|---|---|---|---|---|
| R² | 0.675 | 0.533 | 0.367 | 0.392 |
| R² adjusted | 0.653 | 0.501 | 0.358 | 0.384 |
| PC | 0.079 * | 0.092 | / | / |
| GAAIS+ | −0.101 | −0.296 *** | / | / |
| GAAIS− | 0.521 *** | 0.373 *** | / | / |
| PT | 0.269 *** | 0.091 | / | / |
| PDEV | 0.100 | 0.249 *** | / | / |
| PWB | 0.153 * | 0.172 * | / | / |
| AGE | −0.030 | −0.011 | / | / |
| TWO | 0.031 | −0.047 | / | / |
| H | 0.052 | −0.035 | / | / |
| G | 0.042 | −0.085 | / | / |
| PSUS | / | / | 0.430 *** | 0.400 *** |
| PSEV | / | / | 0.352 *** | 0.364 *** |

| Hypothesis | Wording | Confirmed |
|---|---|---|
| H1 | HR employees’ PC are positively associated with their AA. | Yes |
| H1 (private) | | Yes |
| H1 (public) | | No |
| H2 | HR employees’ GAAIS+ is negatively associated with their AA. | Yes |
| H2 (private) | | No |
| H2 (public) | | Yes |
| H3 | HR employees’ GAAIS− is positively associated with their AA. | Yes |
| H3 (private) | | Yes |
| H3 (public) | | Yes |
| H4 | HR employees’ PT is positively associated with their AA. | Yes |
| H4 (private) | | Yes |
| H4 (public) | | No |
| H4a | HR employees’ PSUS is positively associated with their PT. | Yes |
| H4a (private) | | Yes |
| H4a (public) | | Yes |
| H4b | HR employees’ PSEV is positively associated with their PT. | Yes |
| H4b (private) | | Yes |
| H4b (public) | | Yes |
| H5 | HR employees’ PDEV are positively associated with their AA. | Yes |
| H5 (private) | | No |
| H5 (public) | | Yes |
| H6 | HR employees’ PWB are positively associated with their AA. | Yes |
| H6 (private) | | Yes |
| H6 (public) | | Yes |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Revillod, G. Why Do Swiss HR Departments Dislike Algorithms in Their Recruitment Process? An Empirical Analysis. Adm. Sci. 2024, 14, 253. https://doi.org/10.3390/admsci14100253
