Permissibility, Moral Emotions, and Perceived Moral Agency in Autonomous Driving Dilemmas: An Investigation of Pedestrian-Sacrifice and Driver-Sacrifice Scenarios in the Third-Person Perspective

Abstract

1. Introduction

1.1. The Moral Decision-Making of AVs

1.2. Moral Emotions and Moral Decision-Making

1.3. Moral Agency and Moral Decision-Making

1.4. Current Research

2. Experiment 1

2.1. Materials and Methods

2.1.1. Participants

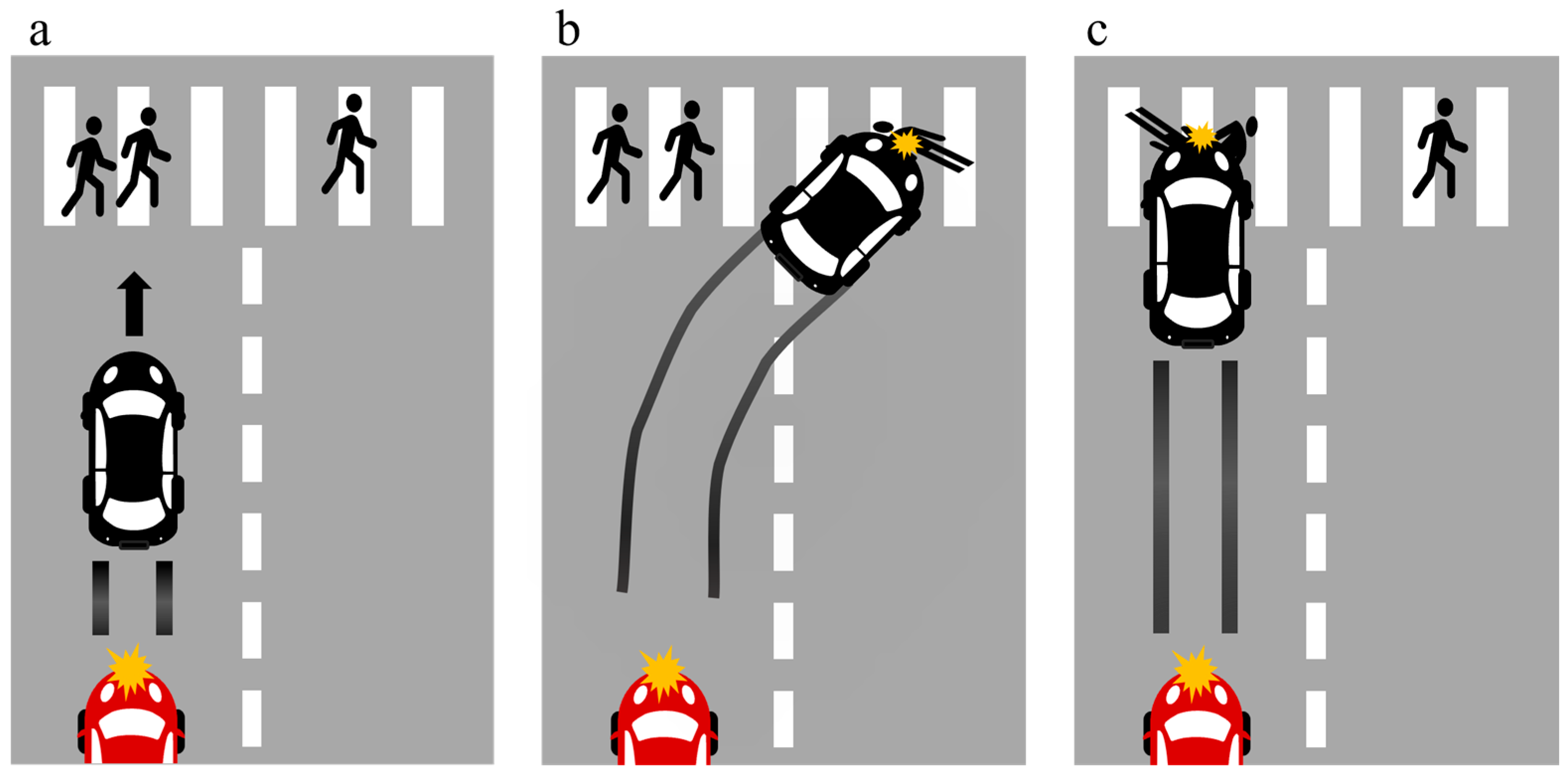

2.1.2. Experiment Design

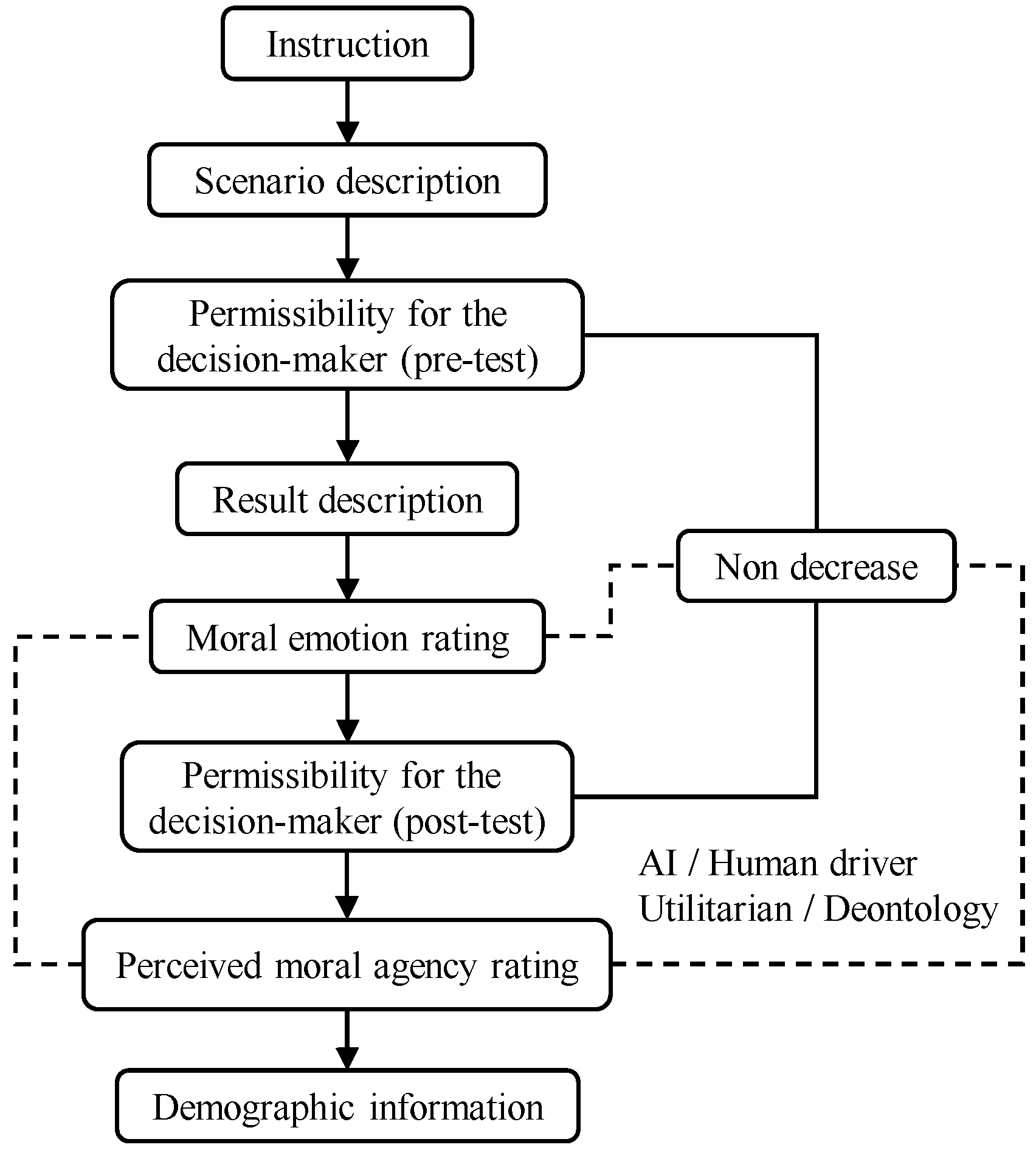

2.1.3. Procedure

2.1.4. Measures

2.1.5. Data Analysis

2.2. Results

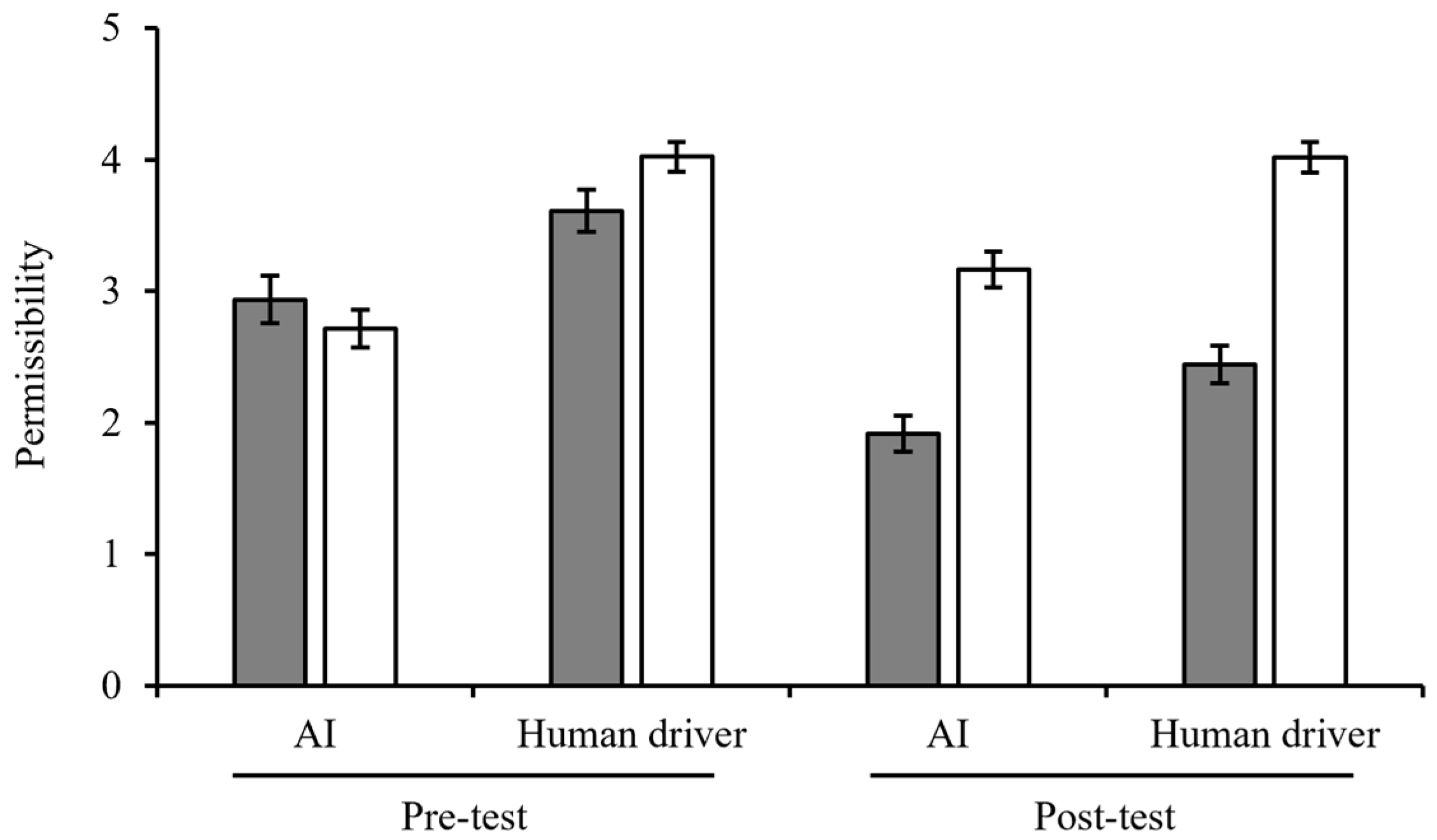

2.2.1. Permissibility for the Decision-Maker

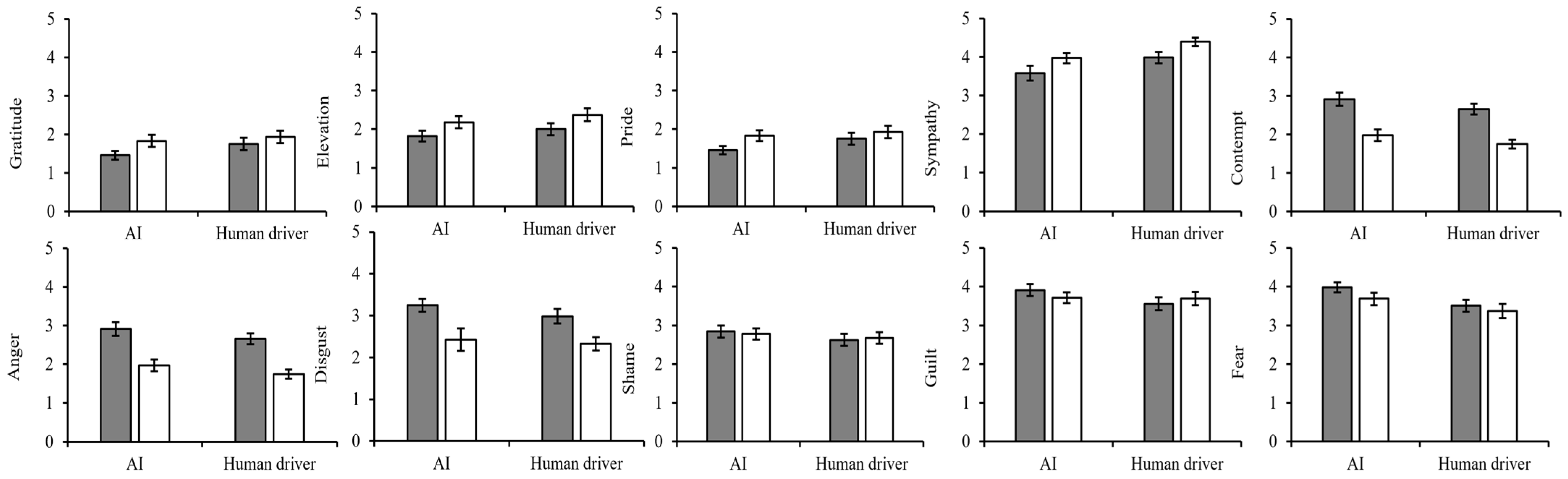

2.2.2. Perceived Moral Emotions

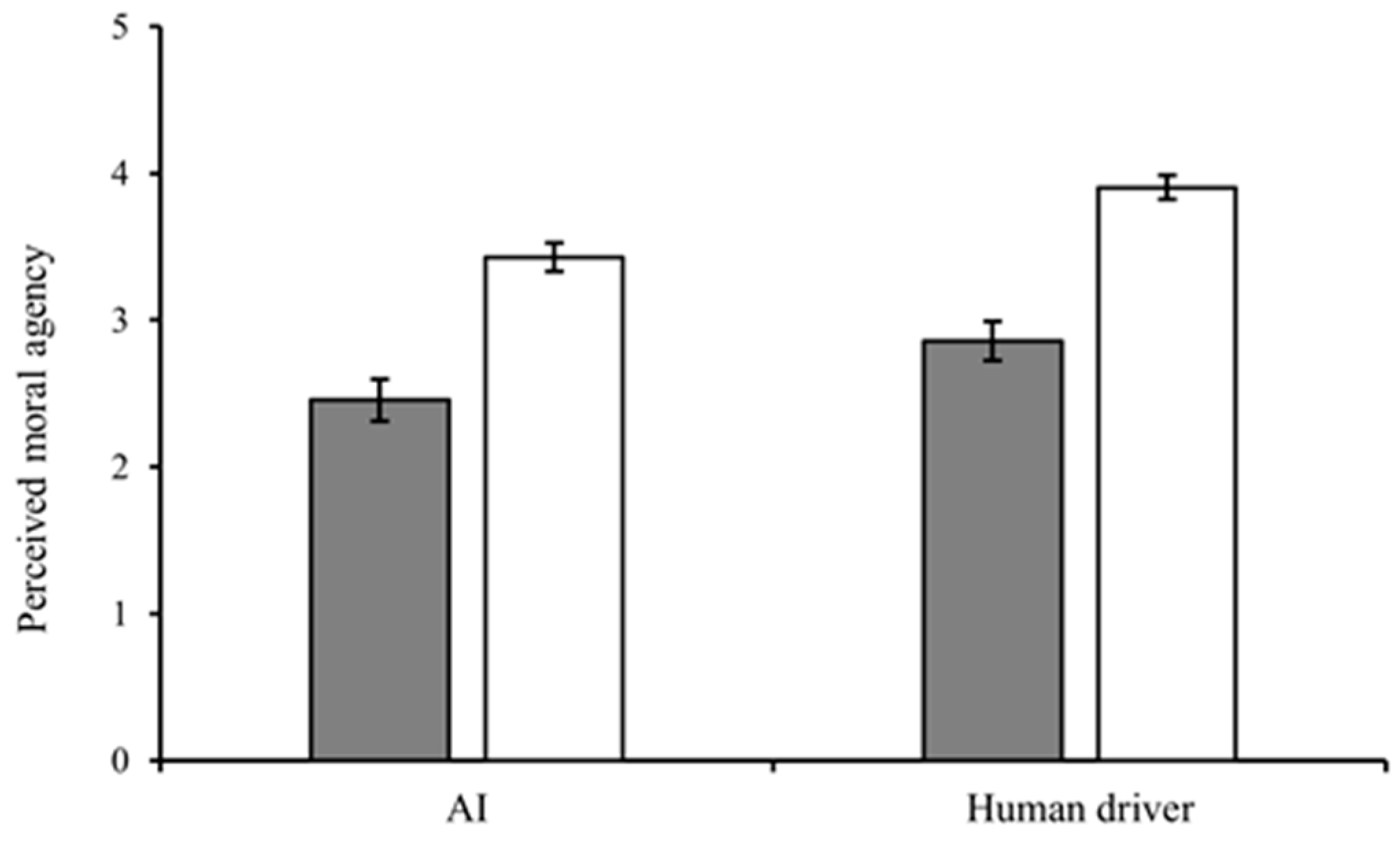

2.2.3. Perceived Moral Agency

2.2.4. Prediction of Non-Decrease in Permissibility

3. Experiment 2

3.1. Materials and Methods

3.1.1. Participants

3.1.2. Experiment Design

3.1.3. Procedure

3.1.4. Measures

3.1.5. Data Analysis

3.2. Results

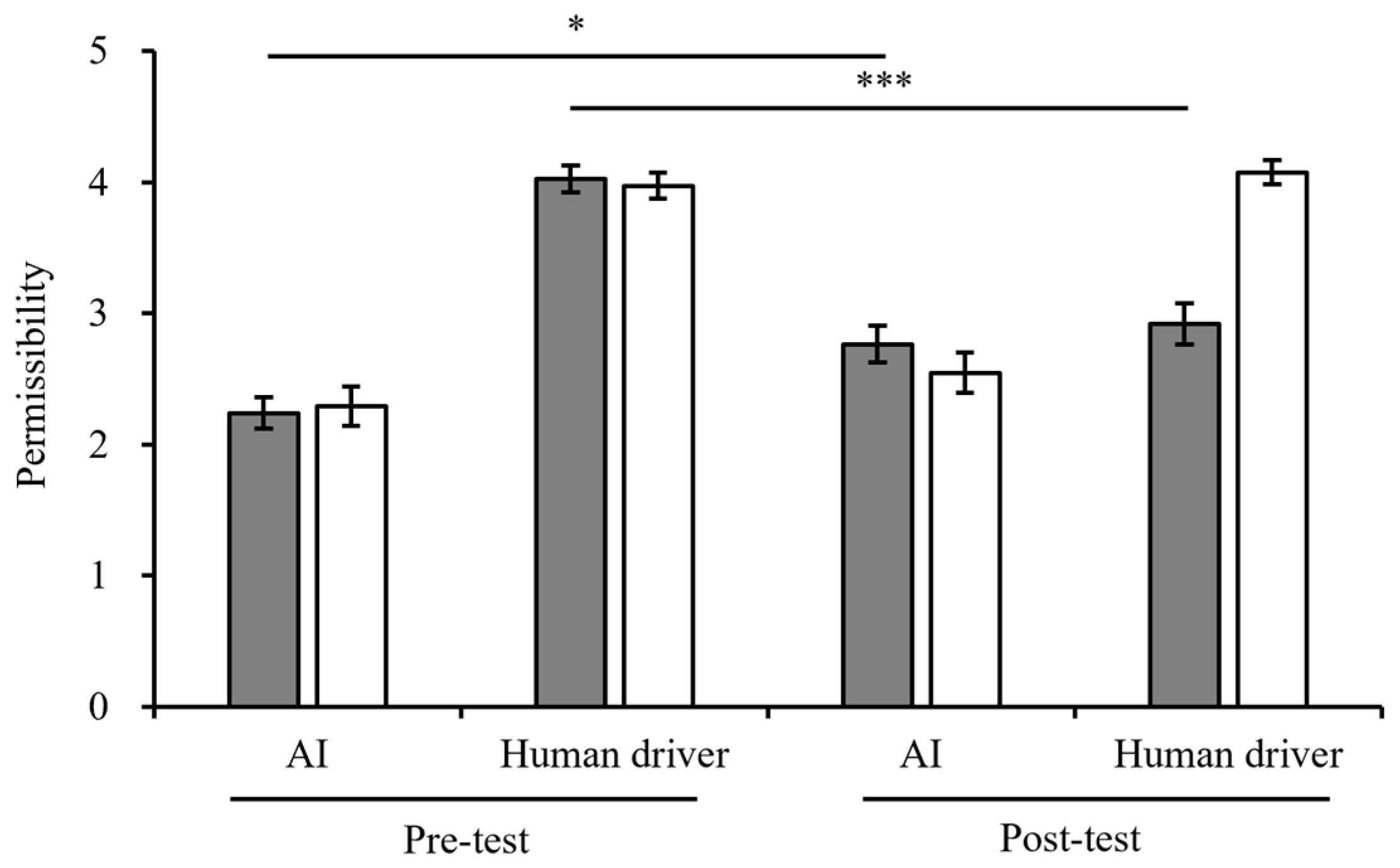

3.2.1. Permissibility for the Decision-Maker

3.2.2. Perceived Moral Emotions

3.2.3. Perceived Moral Agency

3.2.4. Prediction of Non-Decrease in Permissibility

4. Discussion

4.1. The Permissibility of Decision-Makers

4.2. The Moral Emotions

4.3. The Perceived Moral Agency

4.4. The Prediction of the Non-Decrease in Permissibility Ratings

4.5. Theoretical and Practical Implications

4.6. Limitations and Future Research

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AI | Artificial intelligence |
| AVs | Automated vehicles |

Appendix A

Result Description

| | Mr. “Y” × Deontology | Mr. “Y” × Utilitarianism | ADS “X” × Deontology | ADS “X” × Utilitarianism |
|---|---|---|---|---|
| Experiment 1 | Mr. Y decided to keep the current lane, sacrificing the two pedestrians to save the pedestrian on the right in front of the car. | Mr. Y decided to make a sharp turn to the right, sacrificing one pedestrian to save the two pedestrians in front of the vehicle. | The ADS “X” decided to keep the current lane, sacrificing the two pedestrians to save the pedestrian on the right in front of the car. | The ADS “X” decided to make a sharp turn to the right, sacrificing one pedestrian to save the two pedestrians in front of the car. |
| Experiment 2 | Mr. Y decided to keep the current lane, sacrificing the two pedestrians to save himself. | Mr. Y decided to make a sharp turn to the right, sacrificing himself to save the two pedestrians in front of the vehicle. | The ADS “X” decided to keep the current lane, sacrificing the two pedestrians to save Mr. Y. | The ADS “X” decided to make a sharp turn to the right, sacrificing Mr. Y to save the two pedestrians in front of the vehicle. |

Appendix B

Appendix C

| Experiment | Decision-Maker | Moral Belief | Pre-Test M(SD) | Post-Test M(SD) | N |
|---|---|---|---|---|---|
| 1 | AI | Deontology | 2.94(1.40) | 1.92(1.05) | 57 |
| 1 | AI | Utilitarianism | 2.71(1.26) | 3.16(1.22) | 77 |
| 1 | Human driver | Deontology | 3.61(1.26) | 2.44(1.29) | 61 |
| 1 | Human driver | Utilitarianism | 4.02(0.89) | 4.02(0.91) | 59 |
| 2 | AI | Deontology | 2.24(1.05) | 2.77(1.19) | 71 |
| 2 | AI | Utilitarianism | 2.29(1.32) | 2.55(1.36) | 75 |
| 2 | Human driver | Deontology | 4.03(0.81) | 2.92(1.24) | 61 |
| 2 | Human driver | Utilitarianism | 3.97(0.79) | 4.08(0.74) | 62 |
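As a rough consistency check, the Experiment 1 cell means in this appendix can be combined into overall pre-test and post-test means and compared with the values reported in the paper's ANOVA summary (3.28 and 2.90). The sketch below assumes those summary values are N-weighted averages of the cell means. That is an assumption, since the authors may have reported estimated marginal means instead, and the helper name `weighted_mean` is hypothetical.

```python
def weighted_mean(cells):
    """Return the N-weighted average of (mean, n) pairs."""
    total_n = sum(n for _, n in cells)
    return sum(mean * n for mean, n in cells) / total_n


# (cell mean, cell N) pairs for Experiment 1, copied from the appendix table.
exp1_pre = [(2.94, 57), (2.71, 77), (3.61, 61), (4.02, 59)]
exp1_post = [(1.92, 57), (3.16, 77), (2.44, 61), (4.02, 59)]

print(f"Pre-test:  {weighted_mean(exp1_pre):.2f}")
print(f"Post-test: {weighted_mean(exp1_post):.2f}")
```

Because the cell means are themselves rounded to two decimals, the recomputed values can differ from the reported ones in the last digit.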

Appendix D

| Moral Emotion | Decision-Maker | Moral Belief | Experiment 1 M(SD) | Experiment 1 N | Experiment 2 M(SD) | Experiment 2 N |
|---|---|---|---|---|---|---|
| Gratitude | AI | Deontology | 1.53(0.89) | 57 | 3.07(1.42) | 71 |
| Gratitude | AI | Utilitarianism | 2.22(1.20) | 77 | 2.35(1.25) | 75 |
| Gratitude | Human driver | Deontology | 1.89(1.29) | 61 | 1.89(1.05) | 61 |
| Gratitude | Human driver | Utilitarianism | 2.36(1.27) | 59 | 3.63(1.30) | 62 |
| Elevation | AI | Deontology | 1.82(1.10) | 57 | 2.30(1.34) | 71 |
| Elevation | AI | Utilitarianism | 2.18(1.23) | 77 | 2.77(1.38) | 75 |
| Elevation | Human driver | Deontology | 2.00(1.22) | 61 | 1.84(1.05) | 61 |
| Elevation | Human driver | Utilitarianism | 2.37(1.31) | 59 | 3.02(1.36) | 62 |
| Pride | AI | Deontology | 1.46(0.83) | 57 | 1.77(1.02) | 71 |
| Pride | AI | Utilitarianism | 1.83(1.08) | 77 | 2.76(1.44) | 75 |
| Pride | Human driver | Deontology | 1.75(1.22) | 61 | 1.54(0.92) | 61 |
| Pride | Human driver | Utilitarianism | 1.93(1.26) | 59 | 2.97(1.35) | 62 |
| Sympathy | AI | Deontology | 3.58(1.46) | 57 | 3.70(1.13) | 71 |
| Sympathy | AI | Utilitarianism | 3.97(1.04) | 77 | 2.45(1.21) | 75 |
| Sympathy | Human driver | Deontology | 3.98(1.18) | 61 | 3.48(1.16) | 61 |
| Sympathy | Human driver | Utilitarianism | 4.39(0.85) | 59 | 4.05(1.08) | 62 |
| Contempt | AI | Deontology | 2.91(1.35) | 57 | 2.13(1.18) | 71 |
| Contempt | AI | Utilitarianism | 1.97(1.14) | 77 | 2.49(1.57) | 75 |
| Contempt | Human driver | Deontology | 2.66(1.35) | 61 | 2.77(1.35) | 61 |
| Contempt | Human driver | Utilitarianism | 1.75(0.92) | 59 | 1.60(0.91) | 62 |
| Anger | AI | Deontology | 3.35(1.23) | 57 | 2.49(1.24) | 71 |
| Anger | AI | Utilitarianism | 2.78(1.26) | 77 | 2.72(1.55) | 75 |
| Anger | Human driver | Deontology | 3.20(1.30) | 61 | 3.07(1.25) | 61 |
| Anger | Human driver | Utilitarianism | 2.27(1.20) | 59 | 2.06(1.21) | 62 |
| Disgust | AI | Deontology | 3.25(1.20) | 57 | 2.49(1.23) | 71 |
| Disgust | AI | Utilitarianism | 2.43(1.19) | 77 | 2.71(1.48) | 75 |
| Disgust | Human driver | Deontology | 2.98(1.40) | 61 | 2.82(1.25) | 61 |
| Disgust | Human driver | Utilitarianism | 2.32(1.24) | 59 | 1.74(1.16) | 62 |
| Shame | AI | Deontology | 2.84(1.18) | 57 | 3.25(1.33) | 71 |
| Shame | AI | Utilitarianism | 2.78(1.31) | 77 | 1.92(1.21) | 75 |
| Shame | Human driver | Deontology | 2.62(1.23) | 61 | 3.05(1.31) | 61 |
| Shame | Human driver | Utilitarianism | 2.68(1.18) | 59 | 1.69(0.97) | 62 |
| Guilt | AI | Deontology | 3.91(1.21) | 57 | 3.82(1.23) | 71 |
| Guilt | AI | Utilitarianism | 3.71(1.22) | 77 | 1.88(1.09) | 75 |
| Guilt | Human driver | Deontology | 3.56(1.31) | 61 | 3.64(1.13) | 61 |
| Guilt | Human driver | Utilitarianism | 3.69(1.32) | 59 | 2.76(1.24) | 62 |
| Fear | AI | Deontology | 3.98(1.01) | 57 | 3.51(1.30) | 71 |
| Fear | AI | Utilitarianism | 3.69(1.24) | 77 | 3.71(1.27) | 75 |
| Fear | Human driver | Deontology | 3.51(1.22) | 61 | 3.41(1.20) | 61 |
| Fear | Human driver | Utilitarianism | 3.37(1.46) | 59 | 3.02(1.22) | 62 |

Appendix E

| Experiment | Decision-Maker | Moral Belief | Perceived Moral Agency M(SD) | N |
|---|---|---|---|---|
| 1 | AI | Deontology | 2.46(1.10) | 57 |
| 1 | AI | Utilitarianism | 3.43(0.87) | 77 |
| 1 | Human driver | Deontology | 2.86(1.08) | 61 |
| 1 | Human driver | Utilitarianism | 3.90(0.65) | 59 |
| 2 | AI | Deontology | 2.80(0.99) | 71 |
| 2 | AI | Utilitarianism | 3.30(1.06) | 75 |
| 2 | Human driver | Deontology | 2.68(0.97) | 61 |
| 2 | Human driver | Utilitarianism | 4.34(0.47) | 62 |



| Factor | F(df) | p | ηp² | Results and M ± SD |
|---|---|---|---|---|
| Decision-maker | 46.53 (1,250) | <0.001 | 0.157 | AI (2.72 ± 1.31) < human driver (3.52 ± 1.28) |
| Moral belief | 37.31 (1,250) | <0.001 | 0.130 | Deontology (2.74 ± 1.40) < utilitarianism (3.41 ± 1.24) |
| Pre-test and post-test | 27.15 (1,250) | <0.001 | 0.098 | Pre-test (3.28 ± 1.32) > post-test (2.90 ± 1.36) |
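The partial eta squared values in the table above can be recovered from the reported F statistics and degrees of freedom with the standard identity: partial eta squared = (F × df_effect) / (F × df_effect + df_error). The following is a minimal sketch of that arithmetic, not the authors' analysis code. The function name `partial_eta_squared` is hypothetical, and the inputs are copied from the table.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (F, df_effect, df_error, reported partial eta squared), copied from the table above.
reported = {
    "Decision-maker": (46.53, 1, 250, 0.157),
    "Moral belief": (37.31, 1, 250, 0.130),
    "Pre-test and post-test": (27.15, 1, 250, 0.098),
}

for factor, (f_value, df_effect, df_error, eta_reported) in reported.items():
    eta = partial_eta_squared(f_value, df_effect, df_error)
    print(f"{factor}: computed {eta:.3f}, reported {eta_reported:.3f}")
```

For these three rows the recomputed values agree with the reported ones to three decimals. The same check can be applied to any row of the other ANOVA tables to spot transcription slips.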

| Item | Factor | F(df) | p | ηp² | Results and M ± SD |
|---|---|---|---|---|---|
| Gratitude | Decision-maker | 2.75 (1,250) | 0.098 | 0.011 | AI (1.93 ± 1.13), human driver (2.12 ± 1.30) |
| Gratitude | Moral belief | 15.31 (1,250) | <0.001 | 0.058 | Deontology (1.71 ± 1.13) < utilitarianism (2.28 ± 1.23) |
| Elevation | Decision-maker | 1.41 (1,250) | 0.237 | 0.006 | AI (2.03 ± 1.19), human driver (2.18 ± 1.28) |
| Elevation | Moral belief | 5.59 (1,250) | 0.019 | 0.022 | Deontology (1.92 ± 1.67) < utilitarianism (2.27 ± 1.27) |
| Pride | Decision-maker | 2.02 (1,250) | 0.156 | 0.008 | AI (1.67 ± 1.00), human driver (1.84 ± 1.24) |
| Pride | Moral belief | 3.89 (1,250) | 0.050 | 0.015 | Deontology (1.61 ± 1.05) < utilitarianism (1.88 ± 1.16) |
| Sympathy | Decision-maker | 8.06 (1,250) | 0.005 | 0.031 | AI (3.81 ± 1.25) < human driver (4.18 ± 1.05) |
| Sympathy | Moral belief | 7.69 (1,250) | 0.006 | 0.030 | Deontology (3.79 ± 1.33) < utilitarianism (4.15 ± 0.98) |
| Contempt | Decision-maker | 2.60 (1,250) | 0.111 | 0.108 | AI (2.37 ± 1.31), human driver (2.21 ± 1.24) |
| Contempt | Moral belief | 37.19 (1,250) | <0.001 | 0.130 | Deontology (2.78 ± 1.35) > utilitarianism (1.88 ± 1.05) |
| Anger | Decision-maker | 4.38 (1,250) | 0.037 | 0.017 | AI (3.02 ± 1.28) > human driver (2.74 ± 1.33) |
| Anger | Moral belief | 22.41 (1,250) | <0.001 | 0.082 | Deontology (3.27 ± 1.27) > utilitarianism (2.56 ± 1.26) |
| Disgust | Decision-maker | 1.35 (1,250) | 0.246 | 0.005 | AI (2.78 ± 1.25), human driver (2.66 ± 1.36) |
| Disgust | Moral belief | 21.76 (1,250) | <0.001 | 0.080 | Deontology (3.11 ± 1.31) > utilitarianism (2.38 ± 1.21) |
| Shame | Decision-maker | 9.95 (1,250) | 0.002 | 0.038 | AI (2.81 ± 1.25) > human driver (2.65 ± 1.20) |
| Shame | Moral belief | 0.00 (1,250) | 0.980 | 0.000 | Deontology (2.73 ± 1.20), utilitarianism (2.74 ± 1.26) |
| Guilt | Decision-maker | 1.37 (1,250) | 0.243 | 0.005 | AI (3.80 ± 1.22), human driver (3.63 ± 1.31) |
| Guilt | Moral belief | 0.04 (1,250) | 0.850 | 0.000 | Deontology (3.73 ± 1.27), utilitarianism (3.71 ± 1.26) |
| Fear | Decision-maker | 6.31 (1,250) | 0.013 | 0.025 | AI (3.81 ± 1.15) > human driver (3.44 ± 1.34) |
| Fear | Moral belief | 1.87 (1,250) | 0.173 | 0.007 | Deontology (3.74 ± 1.14), utilitarianism (3.55 ± 1.34) |

| Factor | F(df) | p | ηp² | Results and M ± SD |
|---|---|---|---|---|
| Decision-maker | 150.02 (1,265) | <0.001 | 0.361 | AI (2.46 ± 1.20) < human driver (3.75 ± 1.03) |
| Moral belief | 4.98 (1,265) | 0.026 | 0.018 | Deontology (2.95 ± 1.26) < utilitarianism (3.15 ± 1.33) |
| Pre-test and post-test | 0.53 (1,265) | 0.469 | 0.002 | Pre-test (3.06 ± 1.30), post-test (3.04 ± 1.30) |

| Item | Factor | F(df) | p | ηp² | Results and M ± SD |
|---|---|---|---|---|---|
| Gratitude | Decision-maker | 0.10 (1,265) | 0.754 | 0.000 | AI (2.70 ± 1.38), human driver (2.76 ± 1.4) |
| Gratitude | Moral belief | 10.84 (1,265) | <0.001 | 0.200 | Deontology (2.52 ± 1.39) < utilitarianism (2.93 ± 1.42) |
| Elevation | Decision-maker | 0.47 (1,265) | 0.495 | 0.002 | AI (2.54 ± 1.38), human driver (2.43 ± 1.35) |
| Elevation | Moral belief | 27.28 (1,265) | <0.001 | 0.093 | Deontology (2.08 ± 1.23) < utilitarianism (2.88 ± 1.37) |
| Pride | Decision-maker | 0.01 (1,265) | 0.930 | 0.000 | AI (2.28 ± 1.34), human driver (2.26 ± 1.36) |
| Pride | Moral belief | 66.10 (1,265) | <0.001 | 0.200 | Deontology (1.67 ± 0.98) < utilitarianism (2.85 ± 1.40) |
| Sympathy | Decision-maker | 23.62 (1,265) | <0.001 | 0.082 | AI (3.06 ± 1.32) < human driver (3.76 ± 1.15) |
| Sympathy | Moral belief | 5.81 (1,265) | 0.017 | 0.021 | Deontology (3.60 ± 1.44) > utilitarianism (3.18 ± 1.40) |
| Contempt | Decision-maker | 0.64 (1,265) | 0.424 | 0.002 | AI (2.32 ± 1.40), human driver (2.18 ± 1.29) |
| Contempt | Moral belief | 6.54 (1,266) | 0.011 | 0.024 | Deontology (2.42 ± 1.30) > utilitarianism (2.09 ± 1.39) |
| Anger | Decision-maker | 0.07 (1,265) | 0.799 | 0.000 | AI (2.61 ± 1.41), human driver (2.56 ± 1.33) |
| Anger | Moral belief | 5.66 (1,266) | 0.018 | 0.021 | Deontology (2.76 ± 1.27) > utilitarianism (2.42 ± 1.44) |
| Disgust | Decision-maker | 4.07 (1,256) | 0.045 | 0.015 | AI (2.60 ± 1.36) > human driver (2.28 ± 1.31) |
| Disgust | Moral belief | 12.46 (1,256) | 0.007 | 0.027 | Deontology (2.64 ± 1.24) > utilitarianism (2.27 ± 1.42) |
| Shame | Decision-maker | 2.10 (1,265) | 0.149 | 0.008 | AI (2.57 ± 1.43), human driver (2.37 ± 1.33) |
| Shame | Moral belief | 81.77 (1,265) | <0.001 | 0.236 | Deontology (3.16 ± 1.32) > utilitarianism (1.82 ± 1.06) |
| Guilt | Decision-maker | 5.96 (1,256) | 0.015 | 0.022 | AI (2.82 ± 1.51) < human driver (3.20 ± 1.26) |
| Guilt | Moral belief | 96.47 (1,257) | <0.001 | 0.267 | Deontology (3.74 ± 1.18) > utilitarianism (2.28 ± 1.24) |
| Fear | Decision-maker | 6.31 (1,256) | 0.013 | 0.024 | AI (3.61 ± 1.28) > human driver (3.21 ± 1.22) |
| Fear | Moral belief | 0.40 (1,256) | 0.527 | 0.002 | Deontology (3.46 ± 1.25), utilitarianism (3.39 ± 1.29) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dong, C.; You, X.; Li, Y. Permissibility, Moral Emotions, and Perceived Moral Agency in Autonomous Driving Dilemmas: An Investigation of Pedestrian-Sacrifice and Driver-Sacrifice Scenarios in the Third-Person Perspective. Behav. Sci. 2025, 15, 1038. https://doi.org/10.3390/bs15081038