Does Covert Retrieval Benefit Adolescents’ Learning in 8th Grade Science Classes?

Abstract

1. Introduction

2. Materials and Methods

2.1. Educational Context

2.2. Design and Participants

2.3. Materials

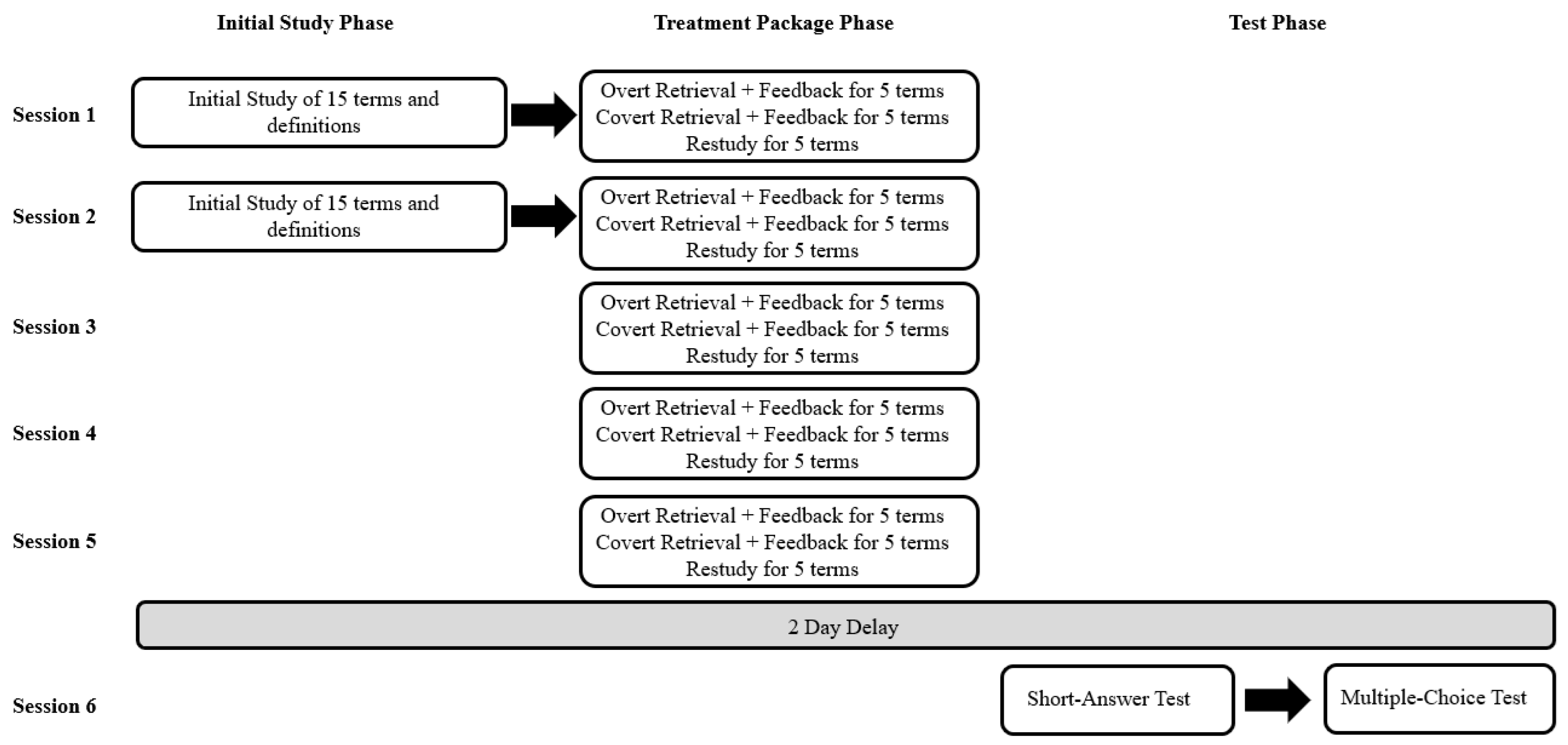

2.4. Procedure

“We selected 15 science terms that you need to learn for your science class. Specifically, you will learn terms that are important for understanding Earth’s oceans and atmosphere. They are also important for learning about energy and matter as well as cause and effect.

Your task is to learn the definition to each term so that you will be able to remember it on a future memory test. The test will be short-answer format, which means that you will be given the terms and will be asked to write the definition to each.

It is very important that you try your hardest to learn each definition. Remember, these are concepts that you need to learn for your class, so if you work hard at learning them now, you may benefit later! You will be given 15 s to study each definition, which we will present on the screen and read aloud. Do you have any questions before we begin?”

“You are now going to take a practice test on some of the terms and definitions. To do so, you will practice retrieving the definition to each term in preparation for the final memory test.

You will be presented with each term one-at-a-time. For this test, your task is to write down the definition to each on the paper provided. You will be given 30 s to write each definition.

IMPORTANT: You should do your best to recall and write down as much of the definition as you can. Think about this like short answer questions on a test, in which you are asked to write down the whole definition for a term. The important thing is to really try to recall and write down each definition. When you have finished writing the definition for the term, we will provide you with feedback as to the correct definition for it, which we will provide on the screen and read aloud.”

“You are going to take a practice test on some of the terms and definitions. To do so, you will practice retrieving the definition to each term in preparation for the final memory test.

You will be presented with each term one-at-a-time. For this test, your task is to silently retrieve the definition to each term. You will be given 30 s to think of the definition for each.

IMPORTANT: You should do your best to recall as much of the definitions as you can. Think about this as if you and a friend were quizzing each other on terms for an upcoming test in your class—if your friend gave you a term, you’d try to come up with the entire definition. Or, think about it like a practice test, in which you were asked to retrieve the entire definition for each term. In both cases, you would try to retrieve the entire definition for each term. That is what we’d like you to do on each trial here.

The important thing is to really try to recall each definition. On each trial, you should not just read the term and assume that you know it because it seems familiar. Rather, use the term as a cue to try to silently recall the definition from memory. When you have finished retrieving the definition for the term, we will provide you with feedback as to the correct definition for it, which we will provide on the screen and read aloud.

Remember, you are learning the definitions for each term in preparation for a future memory test. On that test you will be provided with the terms, and you will be required to write the full and correct definition for each. Do you have any questions before we begin?”

“You are going to restudy some of the terms and definitions. To do so, you will study each term and definition in preparation for the final memory test. The terms will be presented one-at-a-time on the screen and will be read aloud. You will be given 30 s to study each definition. Do you have any questions before we begin?”

“First, you are going to take a final short-answer test over the terms and definitions that you have studied last week. For each question, your task is to write the full and correct definition for each term. So, please do your best to recall as much as you can for each question!

Important: you must do your best to answer EVERY question on this test. You cannot leave any blank. Once you have your paper, you are free to begin! If you have any questions, simply raise your hand and a researcher will come to you.”

“Next, you are going to take a final multiple-choice test over the terms and definitions that you have studied last week. For each question, your task is to select the definition that best corresponds to the term.

Important: you must do your best to answer EVERY question on this test. You cannot leave any blank. Once you have your paper, you are free to begin! If you have any questions, simply raise your hand and a researcher will come to you.”

2.5. Data Scoring

3. Results

3.1. Analytic Plan

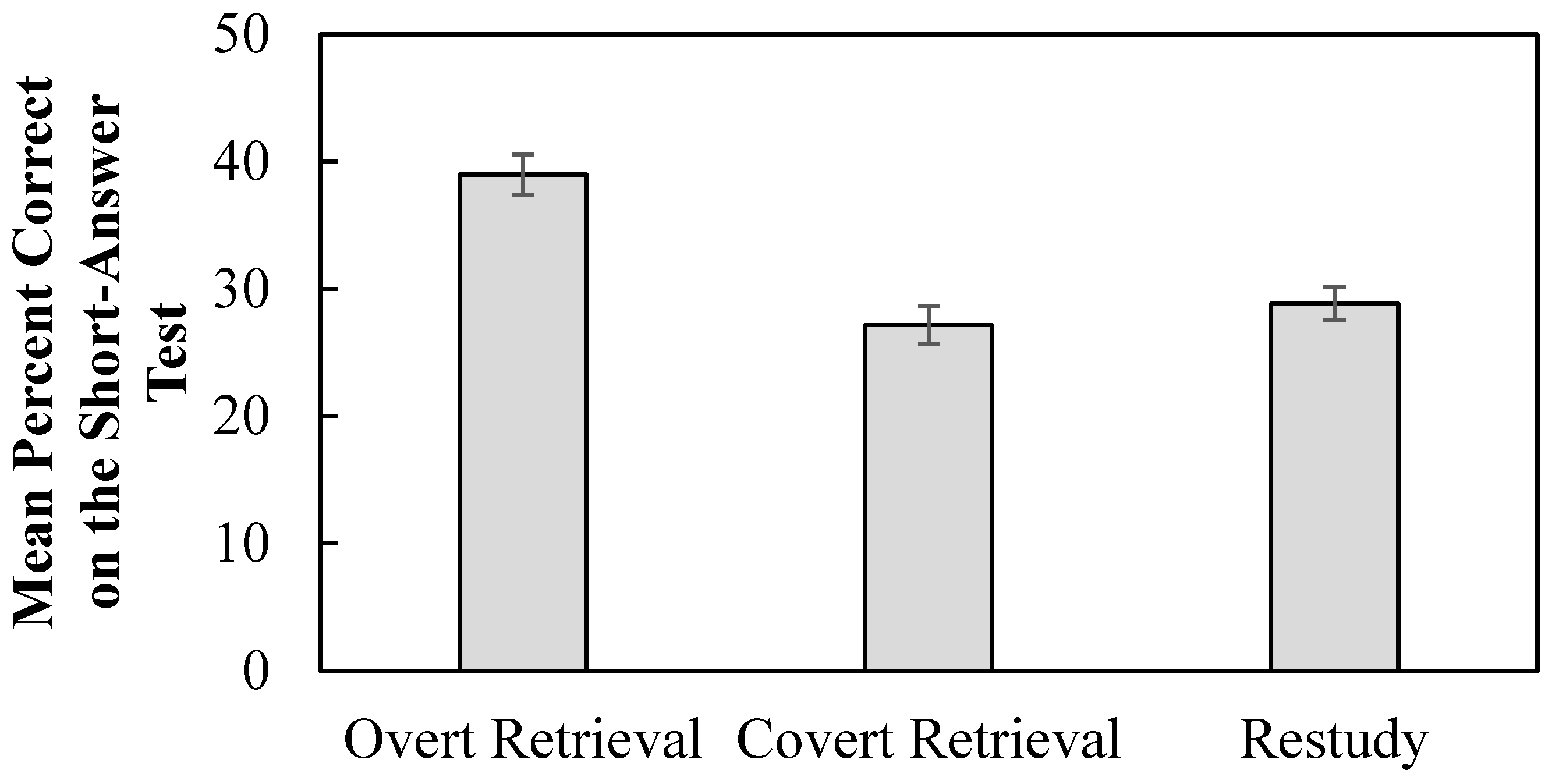

3.2. Percent Correct on the Final Short-Answer Test

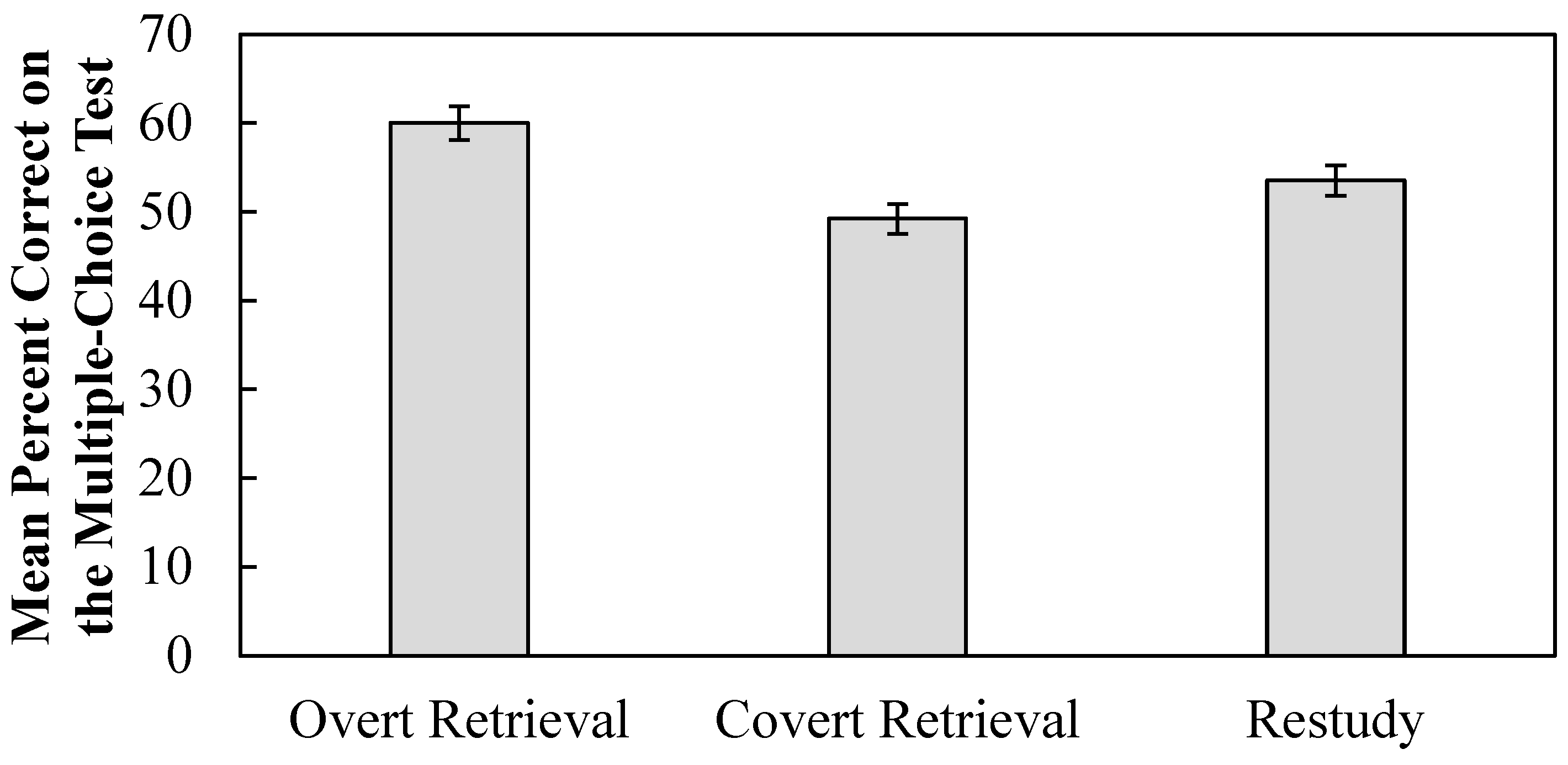

3.3. Percent Correct on the Final Multiple-Choice Test

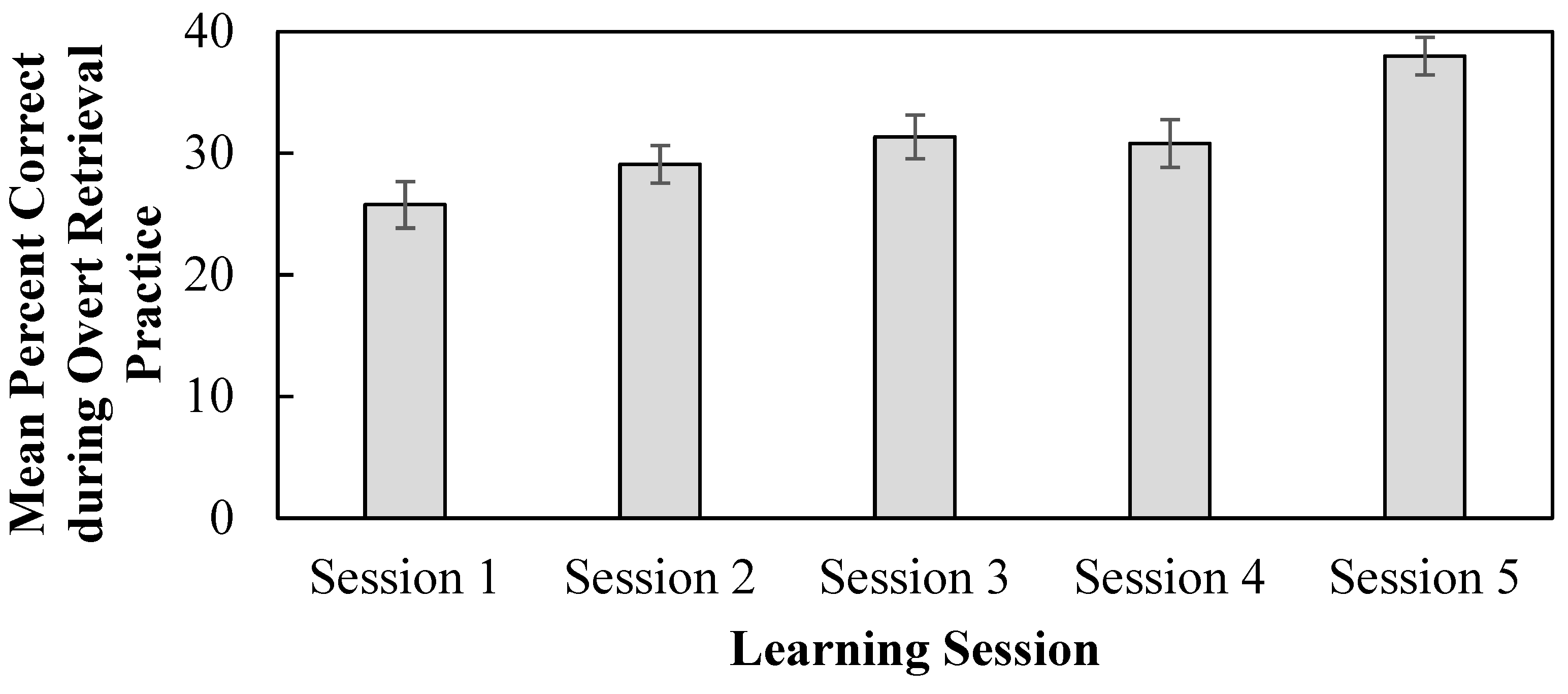

3.4. Percent Correct During Overt Retrieval Practice

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Abel, M., & Roediger, H. L. (2018). The testing effect in a social setting: Does retrieval practice benefit a listener? Journal of Experimental Psychology: Applied, 24(3), 347–359.

- Adesope, O. O., Trevisan, D. A., & Sundararajan, N. (2017). Rethinking the use of tests: A meta-analysis of practice testing. Review of Educational Research, 87(3), 659–701.

- Agarwal, P. K., Bain, P. M., & Chamberlain, R. W. (2012). The value of applied research: Retrieval practice improves classroom learning and recommendations from a teacher, a principal, and a scientist. Educational Psychology Review, 24(3), 437–448.

- Agarwal, P. K., D’Antonio, L., Roediger, H. L., McDermott, K. B., & McDaniel, M. A. (2014). Classroom-based programs of retrieval practice reduce middle school and high school students’ test anxiety. Journal of Applied Research in Memory and Cognition, 3(3), 131–139.

- Agarwal, P. K., Nunes, L. D., & Blunt, J. R. (2021). Retrieval practice consistently benefits student learning: A systematic review of applied research in schools and classrooms. Educational Psychology Review, 33(4), 1409–1453.

- Ariel, R., Karpicke, J. D., Witherby, A. E., & Tauber, S. K. (2021). Do judgments of learning directly enhance learning of educational materials? Educational Psychology Review, 33(2), 693–712.

- Bangert-Drowns, R. L., Kulik, C. L. C., Kulik, J. A., & Morgan, A. (1991). The instructional effect of feedback in test-like events. Review of Educational Research, 61, 213–238.

- Carpenter, S. K., Pan, S. C., & Butler, A. C. (2022). The science of effective learning with spacing and retrieval practice. Nature Reviews Psychology, 1(9), 496–511.

- Coyne, J. H., Borg, J. M., DeLuca, J., Glass, L., & Sumowski, J. F. (2015). Retrieval practice as an effective memory strategy in children and adolescents with traumatic brain injury. Archives of Physical Medicine and Rehabilitation, 96(4), 742–745.

- Darabi, A. A., Nelson, D. W., & Palanki, S. (2007). Acquisition of troubleshooting skills in computer simulation: Worked example vs. conventional problem solving instructional strategies. Computers in Human Behavior, 23(4), 1809–1819.

- Faul, F., Erdfelder, E., Lang, A.-G., & Buchner, A. (2007). G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39(2), 175–191.

- Faulkenberry, T. J., Ly, A., & Wagenmakers, E.-J. (2020). Bayesian inference in numerical cognition: A tutorial using JASP. Journal of Numerical Cognition, 6, 231–259.

- Fritz, C. O., Morris, P. E., Acton, M., Voelkel, A. R., & Etkind, R. (2007). Comparing and combining retrieval practice and the keyword mnemonic for foreign vocabulary learning. Applied Cognitive Psychology, 21(4), 499–526.

- Higham, P. A., Fastrich, G. M., Potts, R., Murayama, K., Pickering, J. S., & Hadwin, J. A. (2023). Spaced retrieval practice: Can restudying trump retrieval? Educational Psychology Review, 35, 98.

- JASP Team. (2024). JASP (Version 0.18.3, Computer software). JASP Team, University of Amsterdam.

- Jönsson, F. U., Kubik, V., Sundqvist, M. L., Todorov, I., & Jonsson, B. (2014). How crucial is the response format for the testing effect? Psychological Research, 78, 623–633.

- Kang, S. H. K., McDaniel, M. A., & Pashler, H. (2011). Effects of testing on learning of functions. Psychonomic Bulletin & Review, 18(5), 998–1005.

- Kruschke, J. K. (2013). Bayesian estimation supersedes the t test. Journal of Experimental Psychology: General, 142, 573–603.

- Kubik, V., Jönsson, F. U., de Jonge, M., & Arshamian, A. (2020). Putting action into testing: Enhanced retrieval benefits long-term retention more than covert retrieval. Quarterly Journal of Experimental Psychology, 73(12), 2093–2105.

- Kubik, V., Koslowski, K., Schubert, T., & Aslan, A. (2022). Metacognitive judgments can potentiate new learning: The role of covert retrieval. Metacognition and Learning, 17(3), 1057–1077.

- Loftus, G. R., & Masson, M. E. J. (1994). Using confidence intervals in within-subject designs. Psychonomic Bulletin & Review, 1(4), 476–490.

- McDaniel, M. A., Agarwal, P. K., Huelser, B. J., McDermott, K. B., & Roediger, H. L. (2011). Test-enhanced learning in a middle school science classroom: The effects of quiz frequency and placement. Journal of Educational Psychology, 103(2), 399–414.

- McDaniel, M. A., Anderson, J. L., Derbish, M. H., & Morrisette, N. (2007). Testing the testing effect in the classroom. European Journal of Cognitive Psychology, 19(4–5), 494–513.

- McDaniel, M. A., Thomas, R. C., Agarwal, P. K., McDermott, K. B., & Roediger, H. L. (2013). Quizzing in middle-school science: Successful transfer performance on classroom exams. Applied Cognitive Psychology, 27(3), 360–372.

- McDermott, K. B. (2021). Practicing retrieval facilitates learning. Annual Review of Psychology, 72(1), 609–633.

- McDermott, K. B., Agarwal, P. K., D’Antonio, L., Roediger, H. L., & McDaniel, M. A. (2014). Both multiple-choice and short-answer quizzes enhance later exam performance in middle and high school classes. Journal of Experimental Psychology: Applied, 20(1), 3–21.

- Moreira, B. F. T., Pinto, T. S. S., Starling, D. S. V., & Jaeger, A. (2019). Retrieval practice in classroom settings: A review of applied research. Frontiers in Education, 4, 1–16.

- Pan, S. C., Gopal, A., & Rickard, T. C. (2016). Testing with feedback yields potent, but piecewise, learning of history and biology facts. Journal of Educational Psychology, 108(4), 563–575.

- Pan, S. C., Hutter, S., D’Andrea, D., Unwalla, D., & Rickard, T. C. (2019). In search of transfer following cued recall practice: The case of process-based biology concepts. Applied Cognitive Psychology, 33(4), 629–645.

- Pashler, H., Cepeda, N. J., Wixted, J. T., & Rohrer, D. (2005). When does feedback facilitate learning of words? Journal of Experimental Psychology: Learning, Memory, and Cognition, 31, 3–8.

- Putnam, A. L., & Roediger, H. L. (2013). Does response mode affect amount recalled or the magnitude of the testing effect? Memory & Cognition, 41(1), 36–48.

- Rhodes, M. G., & Tauber, S. K. (2011). The influence of delaying judgments of learning on metacognitive accuracy: A meta-analytic review. Psychological Bulletin, 137(1), 131–148.

- Roediger, H. L., Agarwal, P. K., McDaniel, M. A., & McDermott, K. B. (2011). Test-enhanced learning in the classroom: Long-term improvements from quizzing. Journal of Experimental Psychology: Applied, 17(4), 382–395.

- Roediger, H. L., & Karpicke, J. D. (2006a). Test-enhanced learning: Taking memory tests improves long-term retention. Psychological Science, 17(3), 249–255.

- Roediger, H. L., & Karpicke, J. D. (2006b). The power of testing memory: Basic research and implications for educational practice. Perspectives on Psychological Science, 1(3), 181–210.

- Rowland, C. A. (2014). The effect of testing versus restudy on retention: A meta-analytic review of the testing effect. Psychological Bulletin, 140(6), 1432–1463.

- Schwieren, J., Barenberg, J., & Dutke, S. (2017). The testing effect in the psychology classroom: A meta-analytic perspective. Psychology Learning & Teaching, 16(2), 179–196.

- Smith, M. A., Roediger, H. L., & Karpicke, J. D. (2013). Covert retrieval practice benefits retention as much as overt retrieval practice. Journal of Experimental Psychology: Learning, Memory, and Cognition, 39(6), 1712–1725.

- Sumeracki, M. A., & Castillo, J. (2022). Covert and overt retrieval practice in the classroom. Translational Issues in Psychological Science, 8(2), 282–293.

- Sundqvist, M. L., Mäntylä, T., & Jönsson, F. U. (2017). Assessing boundary conditions of the testing effect: On the relative efficacy of covert vs. overt retrieval. Frontiers in Psychology, 8, 1018–1033.

- Tauber, S. K., Dunlosky, J., & Rawson, K. A. (2015). The influence of retrieval practice versus delayed judgments of learning on memory: Resolving a memory-metamemory paradox. Experimental Psychology, 62(4), 254–263.

- Tauber, S. K., Witherby, A. E., Dunlosky, J., Rawson, K. A., Putnam, A. L., & Roediger, H. L. (2018). Does covert retrieval benefit learning of key-term definitions? Journal of Applied Research in Memory and Cognition, 7(1), 106–115.

- van Ravenzwaaij, D., & Etz, A. (2021). Simulation studies as a tool to understand Bayes factors. Advances in Methods and Practices in Psychological Science, 4, 1–21.

- Wissman, K. T., Rawson, K. A., & Pyc, M. A. (2011). The interim test effect: Testing prior material can facilitate learning of new material. Psychonomic Bulletin & Review, 18(6), 1140–1147.

- Witherby, A. E., Babineau, A. L., & Tauber, S. K. (2025). Students’, teachers’, and parents’ knowledge about and perceptions of learning strategies. Behavioral Sciences, 15(2), 160.

- Witherby, A. E., & Tauber, S. K. (2019). The current status of students’ note-taking: Why and how do students take notes? Journal of Applied Research in Memory and Cognition, 8(2), 139–153.

Treatment Package Order

| Session | O, R, C | O, C, R | R, O, C | R, C, O | C, O, R | C, R, O |
|---|---|---|---|---|---|---|
| 1 | 12 | 18 | 20 | 0 | 0 | 15 |
| 2 | 0 | 12 | 0 | 15 | 18 | 20 |
| 3 | 15 | 0 | 12 | 18 | 20 | 0 |
| 4 | 20 | 0 | 18 | 12 | 15 | 0 |
| 5 | 0 | 15 | 0 | 20 | 12 | 18 |
| Total | 47 | 45 | 50 | 65 | 65 | 53 |
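The six order columns are exactly the 3! = 6 possible orderings of the three treatments. The Python sketch below transcribes the table, confirms that each ordering appears once, recomputes the Total row, and shows that every session row sums to 65. It assumes that O, R, and C abbreviate overt retrieval, restudy, and covert retrieval (matching the treatment labels used in the other tables); the function names are hypothetical and are not taken from the authors' materials.

```python
from itertools import permutations

# Assumed abbreviations: O = overt retrieval, R = restudy, C = covert retrieval.
TREATMENTS = ("O", "R", "C")

# Order columns in the sequence they appear in the table header.
ORDERS = [("O", "R", "C"), ("O", "C", "R"), ("R", "O", "C"),
          ("R", "C", "O"), ("C", "O", "R"), ("C", "R", "O")]

# Transcribed rows: one row per session, one count per order column.
SESSIONS = {
    1: [12, 18, 20, 0, 0, 15],
    2: [0, 12, 0, 15, 18, 20],
    3: [15, 0, 12, 18, 20, 0],
    4: [20, 0, 18, 12, 15, 0],
    5: [0, 15, 0, 20, 12, 18],
}
REPORTED_TOTALS = [47, 45, 50, 65, 65, 53]


def column_totals(sessions):
    """Sum each order column across all sessions."""
    return [sum(col) for col in zip(*sessions.values())]


def row_totals(sessions):
    """Sum each session row across the six orders."""
    return {session: sum(counts) for session, counts in sessions.items()}


if __name__ == "__main__":
    # Every one of the 3! = 6 orderings appears exactly once as a column.
    print(sorted(permutations(TREATMENTS)) == sorted(ORDERS))  # True
    print(column_totals(SESSIONS) == REPORTED_TOTALS)          # True
    print(row_totals(SESSIONS))  # {1: 65, 2: 65, 3: 65, 4: 65, 5: 65}
```

Because each row sums to the same value, the five sessions carry equal totals; the grand total of the Total row is 325.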

Treatment Package Type

| Term | Overt | Covert | Restudy |
|---|---|---|---|
| Cold Front | 35 | 18 | 12 |
| Convection | 18 | 32 | 15 |
| Convection Current | 20 | 18 | 27 |
| Coriolis Effect | 18 | 32 | 15 |
| Deep Current | 32 | 18 | 15 |
| Global Winds | 18 | 27 | 20 |
| Jet Stream | 27 | 20 | 18 |
| Local Winds | 18 | 35 | 12 |
| Meteorology | 0 * | 15 | 50 * |
| Ocean Current | 32 | 15 | 18 |
| Surface Current | 15 | 32 | 18 |
| Upwelling | 30 | 15 | 20 |
| Warm Front | 32 | 18 | 15 |
| Weather | 15 | 12 | 38 |
| Weather Map | 15 | 18 | 32 |
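The counts in this term-by-treatment table can be checked with a few lines of Python. The dictionary below is only a transcription of the table (the asterisks on the Meteorology cells are dropped). Every term row sums to 65, and each treatment column sums to 325, the same value as the sum of the Total row in the session-by-order table. That pattern is consistent with each of 65 students having 5 of the 15 terms assigned to each treatment, but the table does not state this, so treat that reading as an assumption.

```python
# Transcription of the term-by-treatment counts: (overt, covert, restudy).
COUNTS = {
    "Cold Front": (35, 18, 12),
    "Convection": (18, 32, 15),
    "Convection Current": (20, 18, 27),
    "Coriolis Effect": (18, 32, 15),
    "Deep Current": (32, 18, 15),
    "Global Winds": (18, 27, 20),
    "Jet Stream": (27, 20, 18),
    "Local Winds": (18, 35, 12),
    "Meteorology": (0, 15, 50),
    "Ocean Current": (32, 15, 18),
    "Surface Current": (15, 32, 18),
    "Upwelling": (30, 15, 20),
    "Warm Front": (32, 18, 15),
    "Weather": (15, 12, 38),
    "Weather Map": (15, 18, 32),
}

# Total per term (across treatments) and per treatment (across terms).
row_sums = {term: sum(counts) for term, counts in COUNTS.items()}
col_sums = [sum(column) for column in zip(*COUNTS.values())]

print(set(row_sums.values()))  # {65}
print(col_sums)                # [325, 325, 325]
```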

Treatment Package Type

| Term | Idea Units | Overt | Covert | Restudy | Overall |
|---|---|---|---|---|---|
| Cold Front | 4 | 42.86 (4.98) | 35.29 (7.29) | 52.08 (10.64) | 42.58 (3.88) |
| Convection | 3 | 11.76 (3.12) | 8.85 (2.59) | 8.89 (2.75) | 9.64 (1.65) |
| Convection Current | 3 | 13.33 (3.75) | 9.80 (3.52) | 17.28 (4.02) | 14.06 (2.26) |
| Coriolis Effect + | 4 | 15.28 (3.58) | 5.08 (2.62) | 8.33 (2.64) | 8.65 (1.80) |
| Deep Current * | 3 | 58.85 (6.03) | 37.25 (6.31) | 43.33 (6.04) | 49.48 (3.87) |
| Global Winds | 1 | 52.78 (8.55) | 62.96 (5.72) | 42.50 (7.50) | 53.85 (4.15) |
| Jet Stream + | 2 | 40.74 (7.45) | 18.75 (6.25) | 20.83 (7.34) | 28.46 (4.31) |
| Local Winds | 2 | 13.24 (3.79) | 7.14 (2.19) | 16.67 (5.62) | 10.55 (1.91) |
| Meteorology | 3 | | 47.78 (8.74) | 38.67 (4.22) | 40.77 (3.82) |
| Ocean Current | 2 | 76.56 (5.01) | 66.67 (7.97) | 65.28 (8.37) | 71.15 (3.85) |
| Surface Current | 3 | 32.22 (7.71) | 24.48 (3.89) | 16.67 (5.35) | 24.22 (3.40) |
| Upwelling | 3 | 23.56 (6.22) | 34.44 (10.72) | 8.33 (5.05) | 21.35 (4.21) |
| Warm Front | 4 | 42.97 (5.83) | 42.65 (9.10) | 50.00 (8.80) | 44.53 (4.26) |
| Weather | 5 | 47.33 (5.11) | 34.17 (7.33) | 23.51 (4.03) | 31.09 (3.17) |
| Weather Map | 5 | 32.67 (5.97) | 21.76 (5.51) | 23.13 (3.40) | 25.00 (2.65) |
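The Overall column appears to be the mean over all students tested on a term, regardless of treatment. That is the same as weighting each treatment mean by its group size, and, if every student is scored against the same number of idea units for a term, the same as pooling idea units. The Python sketch below illustrates that assumption; it is not the authors' analysis code, and `pooled_percent` is a hypothetical name. In the Meteorology row, where the term-by-treatment counts list no overt students, the percentages 47.78 and 38.67 belong to the 15 covert and 50 restudy students, and weighting them by those group sizes gives 40.77, the last value printed in that row.

```python
def pooled_percent(groups):
    """Combine per-treatment mean percentages into one overall percentage.

    groups: iterable of (mean_percent, n_students) pairs. Weighting each mean
    by its group size equals the mean over all individual students; it also
    equals pooling idea units when every student has the same number of idea
    units for the term.
    """
    groups = list(groups)  # allow a generator to be iterated twice
    total_n = sum(n for _, n in groups)
    if total_n == 0:
        raise ValueError("no students in any group")
    return sum(mean * n for mean, n in groups) / total_n


# Meteorology, short-answer test: covert (n = 15) and restudy (n = 50), no overt group.
meteorology = [(47.78, 15), (38.67, 50)]
print(f"{pooled_percent(meteorology):.2f}")  # 40.77
```

The same weighting reproduces 66.15 from 80.00 and 62.00 in the Meteorology row of the multiple-choice table.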

Treatment Package Type

| Term | Idea Units | Overt | Covert | Restudy | Overall |
|---|---|---|---|---|---|
| Cold Front | 4 | 65.71 (8.14) | 66.67 (11.43) | 75.00 (13.06) | 67.69 (5.85) |
| Convection | 3 | 44.44 (12.05) | 31.25 (8.32) | 46.67 (13.33) | 38.46 (6.08) |
| Convection Current | 3 | 25.00 (9.93) | 33.33 (11.43) | 29.63 (8.96) | 29.23 (5.69) |
| Coriolis Effect * | 4 | 61.11 (11.82) | 25.00 (7.78) | 60.00 (13.09) | 43.08 (6.19) |
| Deep Current | 3 | 65.63 (8.53) | 61.11 (11.82) | 86.67 (9.09) | 69.23 (5.77) |
| Global Winds + | 1 | 44.44 (12.05) | 55.56 (9.75) | 20.00 (9.18) | 41.54 (6.16) |
| Jet Stream | 2 | 74.07 (8.59) | 55.00 (11.41) | 66.67 (11.43) | 66.15 (5.91) |
| Local Winds | 2 | 44.44 (12.05) | 34.29 (8.14) | 58.33 (14.86) | 41.54 (6.16) |
| Meteorology | 3 | | 80.00 (10.69) | 62.00 (6.93) | 66.15 (5.91) |
| Ocean Current + | 2 | 83.33 (11.24) | 48.57 (8.57) | 72.22 (10.86) | 61.54 (6.08) |
| Surface Current | 3 | 66.67 (12.60) | 43.75 (8.91) | 50.00 (12.13) | 50.77 (6.25) |
| Upwelling | 3 | 43.33 (9.20) | 46.67 (13.33) | 20.00 (9.18) | 36.92 (6.03) |
| Warm Front | 4 | 71.89 (8.08) | 55.56 (12.05) | 66.67 (12.60) | 66.15 (5.91) |
| Weather * | 5 | 73.33 (11.82) | 91.67 (8.33) | 42.11 (8.12) | 58.46 (6.16) |
| Weather Map + | 5 | 100.00 (0.00) | 72.22 (10.86) | 68.75 (8.32) | 76.92 (5.27) |
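On a multiple-choice item each student scores 0 or 1, so a cell's percentage is the share of students who chose the correct definition. The parenthetical values appear to be standard errors of the mean computed with the sample standard deviation; the Python sketch below reproduces the Cold Front row under that assumption. The correct-answer counts it uses (23 of 35, 12 of 18, 9 of 12) are back-calculated from the reported percentages and the group sizes in the term-by-treatment counts table, so they are an inference rather than reported data, and `item_summary` is a hypothetical helper name.

```python
import math


def item_summary(n_correct, n_students):
    """Percent correct and its standard error, both in percentage points, for 0/1 scores.

    The SE is the sample SD (n - 1 denominator) divided by sqrt(n), which for
    0/1 data simplifies to sqrt(p * (1 - p) / (n - 1)).
    """
    if n_students < 2:
        raise ValueError("need at least two students for a sample SD")
    p = n_correct / n_students
    se = math.sqrt(p * (1 - p) / (n_students - 1))
    return 100 * p, 100 * se


# Cold Front, multiple-choice test; correct counts are back-calculated (an inference).
groups = {"Overt": (23, 35), "Covert": (12, 18), "Restudy": (9, 12)}
for name, (k, n) in groups.items():
    pct, se = item_summary(k, n)
    print(f"{name}: {pct:.2f} ({se:.2f})")

# Overall: pool all students who were tested on the term.
k_all = sum(k for k, _ in groups.values())
n_all = sum(n for _, n in groups.values())
pct, se = item_summary(k_all, n_all)
print(f"Overall: {pct:.2f} ({se:.2f})")
```

The printed values are 65.71 (8.14), 66.67 (11.43), 75.00 (13.06), and 67.69 (5.85), matching the Cold Front row. When every student in a group answers correctly, p = 1 and the standard error is 0, which fits the Weather Map overt cell.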

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Witherby, A.E.; Northern, P.E.; Tauber, S.K. Does Covert Retrieval Benefit Adolescents’ Learning in 8th Grade Science Classes? Behav. Sci. 2025, 15, 843. https://doi.org/10.3390/bs15070843
