Durability of Students’ Learning Strategies Use and Beliefs Following a Classroom Intervention

Abstract

1. Introduction

2. Study 1

2.1. Method

2.1.1. Participants

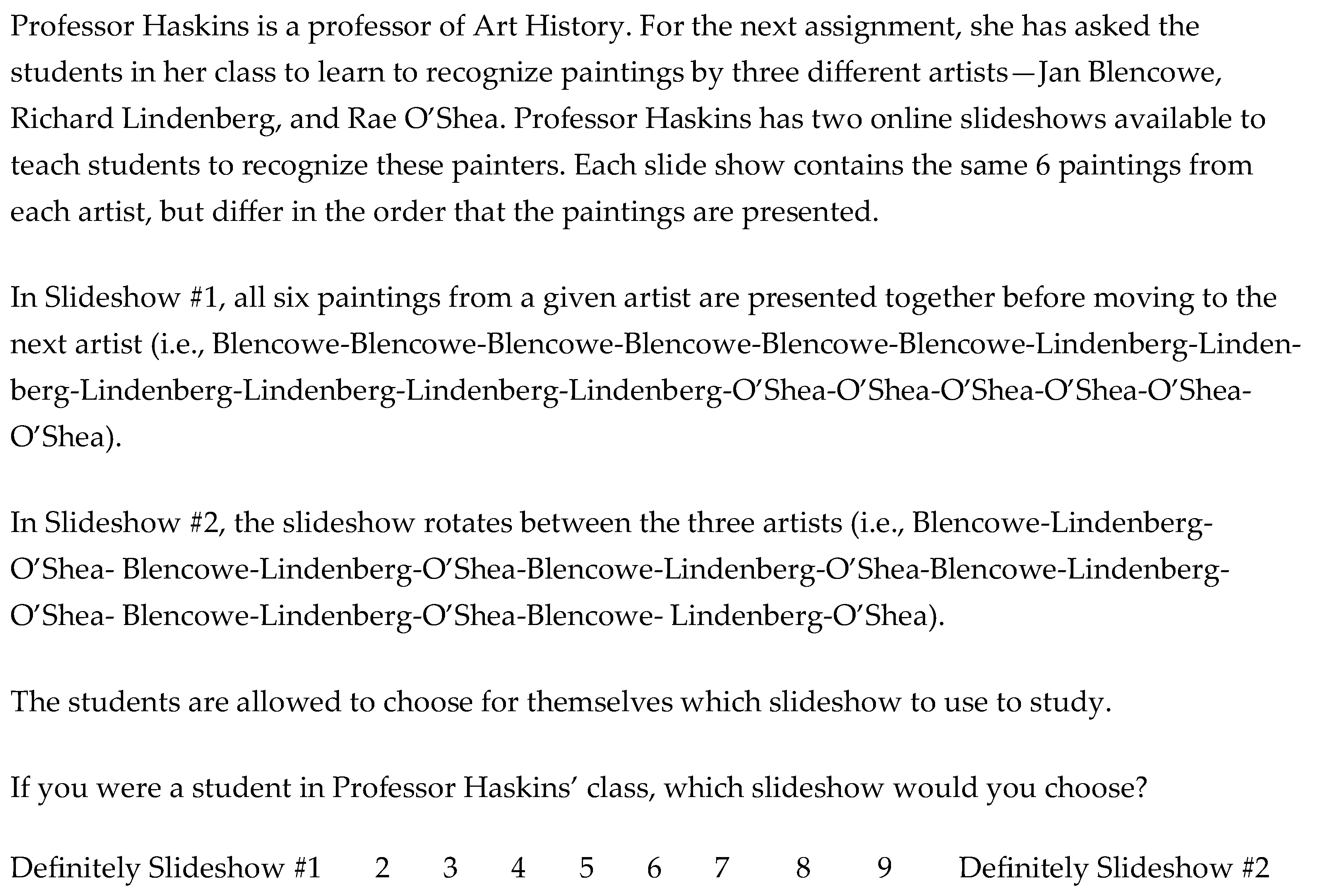

2.1.2. Procedure

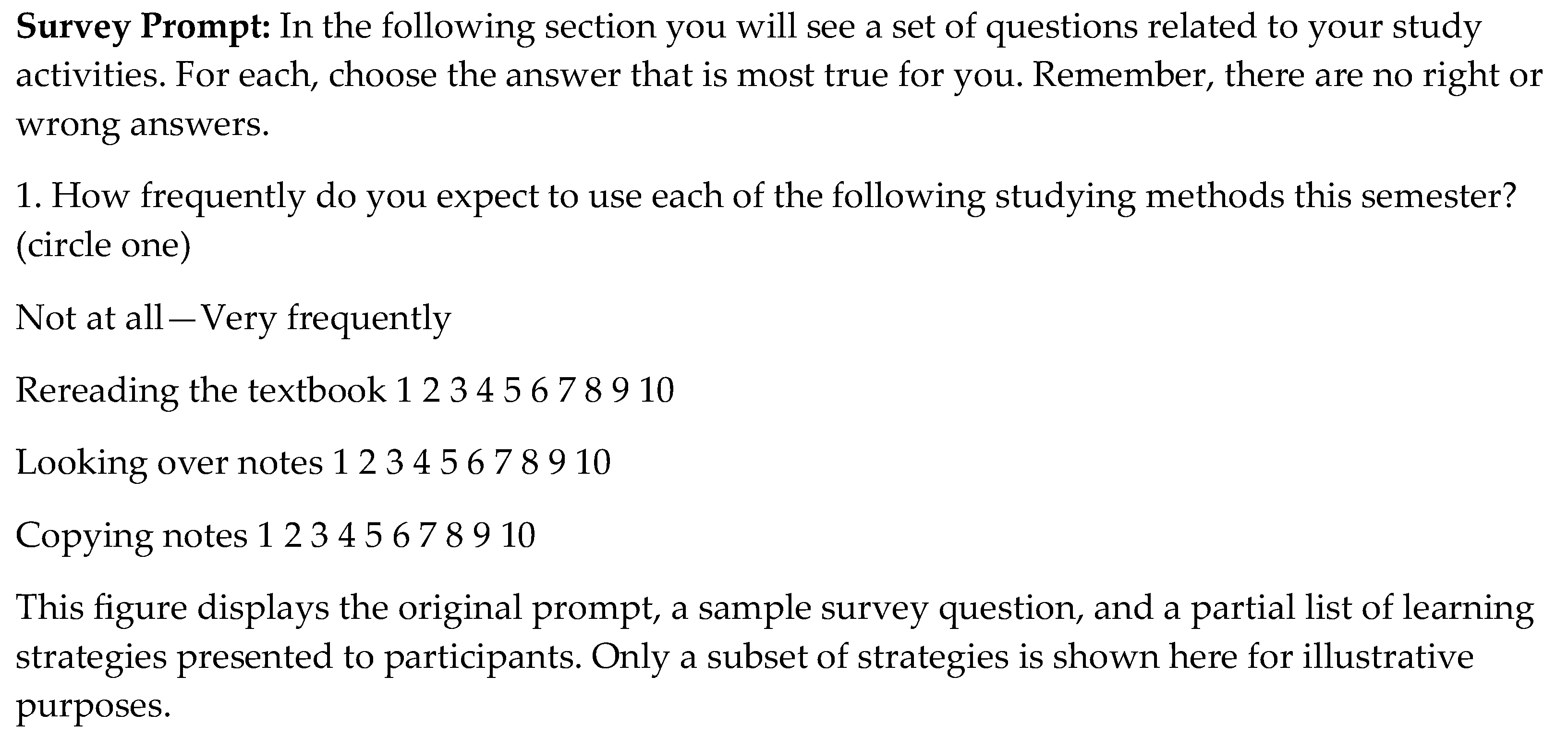

2.1.3. Assessments

2.2. Results

2.2.1. Pre-Intervention Comparison of Groups

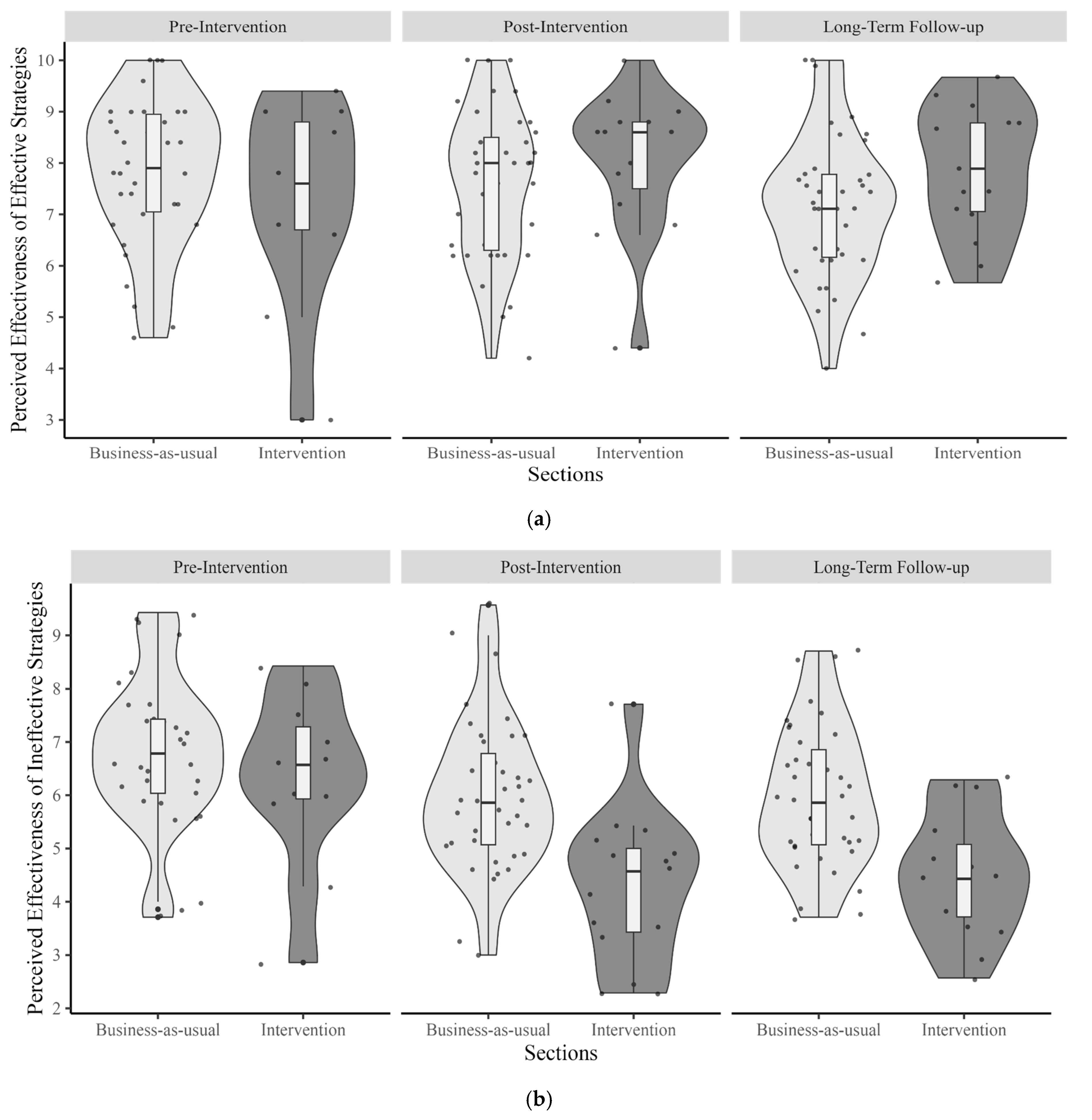

2.2.2. Use and Perceived Effectiveness of Effective Learning Strategies

3. Study 2

3.1. Method

3.1.1. Participants

3.1.2. Assessments

3.2. Results

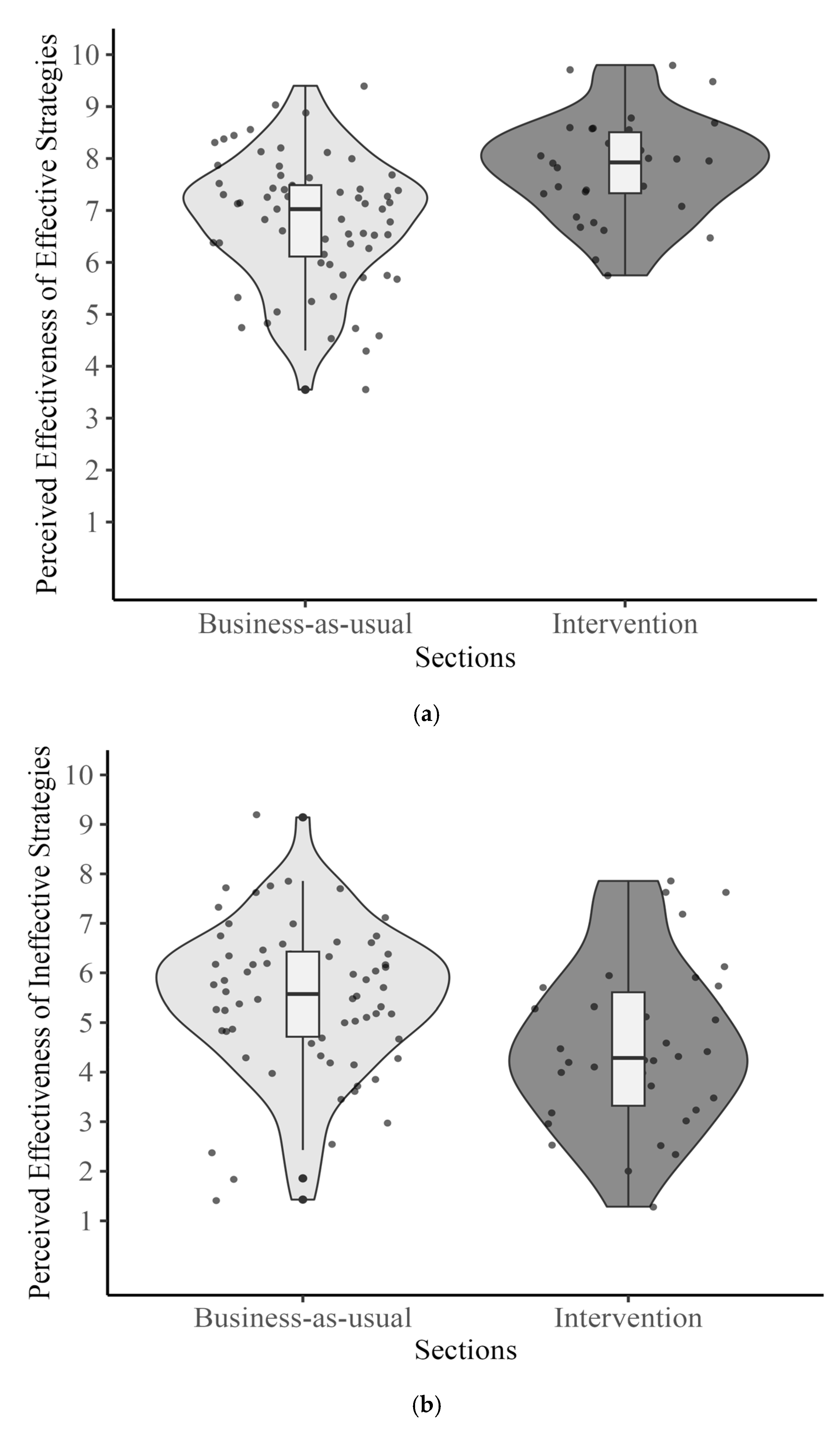

3.2.1. Quantitative Assessments

3.2.2. Open-Ended Questions

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1

| Learning Strategies | Intervention: Pre-Intervention | Intervention: Post-Intervention | Intervention: Long-Term | Business-as-Usual: Pre-Intervention | Business-as-Usual: Post-Intervention | Business-as-Usual: Long-Term | Group × Time p-Value |

|---|---|---|---|---|---|---|---|

| Reading Textbook | 6.36 | 4.06 | 2.88 | 6.79 | 6.69 | 5.83 | 0.052 |

| Taking Tests | 8.36 | 9.88 | 9.71 | 9.15 | 9.53 | 9.28 | 0.046 * |

| Using Life Examples | 7.82 | 9.12 | 8.29 | 8.06 | 8.62 | 7.91 | 0.547 |

| Looking at Notes | 7.91 | 6.59 | 5.29 | 8.41 | 7.49 | 7.91 | 0.030 * |

| Highlighting Notes | 5.64 | 2.53 | 3.29 | 7.15 | 4.51 | 5.19 | 0.773 |

| Creating Outlines | 6.27 | 6.41 | 6.53 | 7.76 | 5.38 | 7.02 | 0.588 |

| Copying Notes | 6.00 | 4.94 | 5.53 | 6.53 | 6.09 | 6.91 | 0.526 |

| Highlighting Textbook | 5.73 | 1.71 | 2.12 | 6.47 | 4.20 | 4.63 | 0.150 |

| Cramming | 4.73 | 3.29 | 3.65 | 3.79 | 3.84 | 3.85 | 0.421 |

| Summarizing Notes | 8.00 | 7.00 | 8.35 | 8.38 | 7.76 | 7.85 | 0.283 |

| Using Flashcards | 6.82 | 7.12 | 7.24 | 7.59 | 7.76 | 7.59 | 0.718 |

| Group Study | 6.36 | 7.65 | 6.88 | 6.76 | 6.11 | 5.98 | 0.365 |

| Learning Strategies | Intervention: Pre-Intervention | Intervention: Post-Intervention | Intervention: Long-Term | Business-as-Usual: Pre-Intervention | Business-as-Usual: Post-Intervention | Business-as-Usual: Long-Term | Group × Time p-Value |

|---|---|---|---|---|---|---|---|

| Reading Textbook | 5.91 | 3.59 | 3.41 | 6.24 | 6.24 | 4.74 | 0.59 |

| Taking Tests | 8.00 | 9.52 | 8.47 | 8.50 | 7.53 | 7.21 | 0.17 |

| Using Life Examples | 6.82 | 7.76 | 7.12 | 7.79 | 6.75 | 6.15 | 0.09 |

| Looking at Notes | 8.91 | 8.00 | 8.35 | 8.47 | 8.46 | 8.33 | 0.74 |

| Highlighting Notes | 6.00 | 2.47 | 4.00 | 7.15 | 4.77 | 4.37 | 0.47 |

| Creating Outlines | 5.90 | 4.00 | 5.35 | 6.64 | 4.48 | 5.04 | 0.42 |

| Copying Notes | 5.45 | 5.53 | 6.41 | 5.09 | 4.93 | 6.35 | 0.81 |

| Highlighting Textbook | 5.81 | 1.23 | 2.11 | 5.14 | 2.31 | 2.54 | 0.44 |

| Cramming | 6.00 | 6.35 | 6.17 | 5.44 | 7.72 | 6.84 | 0.36 |

| Summarizing Notes | 7.36 | 5.94 | 7.35 | 7.70 | 6.71 | 6.35 | 0.19 |

| Using Flashcards | 5.54 | 3.64 | 4.47 | 5.50 | 5.68 | 4.73 | 0.99 |

| Group Study | 5.82 | 3.53 | 5.29 | 5.18 | 3.93 | 4.13 | 0.57 |

Appendix A.2

| Response | Business-as-Usual | Intervention | Total |

|---|---|---|---|

| Not Enough Time | 2 | 0 | 2 |

| No Desire | 1 | 0 | 1 |

| Lack of Resources | 4 | 1 | 5 |

| Unsure Effectiveness | 13 | 3 | 16 |

| Procrastination | 3 | 1 | 4 |

| Distractions | 11 | 5 | 16 |

| Hard to Change | 2 | 0 | 2 |

| Nobody Else Available | 1 | 1 | 2 |

| Mental Health | 1 | 2 | 3 |

| Response | Business-as-Usual | Intervention | Total |

|---|---|---|---|

| Retrieval Practice | 8 | 3 | 11 |

| Rereading | 1 | 1 | 2 |

| Cramming | 5 | 0 | 5 |

| Time Management | 3 | 1 | 4 |

| Spacing | 1 | 0 | 1 |

| Using Flashcards | 3 | 1 | 4 |

| Studying in Advance | 9 | 3 | 12 |

| Copying Notes | 5 | 0 | 5 |

| Creating Outline | 2 | 1 | 3 |

| Group Study | 1 | 0 | 1 |

| Active Notetaking | 5 | 0 | 5 |

| Metacognitive Monitoring | 1 | 0 | 1 |

| Watching Videos | 3 | 0 | 3 |

| Self-Explanation | 13 | 5 | 18 |

| Summarization | 3 | 1 | 4 |

| Using Planner | 4 | 2 | 6 |

| Visualization | 3 | 0 | 3 |

| Environment Optimization | 1 | 0 | 1 |

| Error Analysis | 1 | 0 | 1 |

| Attending Office Hours | 5 | 0 | 5 |

| Studying in Different Places | 3 | 1 | 4 |

| Highlighting | 1 | 0 | 1 |

| Learning From Examples | 1 | 0 | 1 |

| Listening to Audiobooks | 1 | 0 | 1 |

| Interleaved Practice | 1 | 0 | 1 |

Appendix B

Appendix B.1

| Semester | Participants: Business-as-Usual | Participants: Intervention | Participants: Total |

|---|---|---|---|

| 2019–2020 Fall | 10 | 0 | 10 |

| 2019–2020 Spring | 2 | 0 | 2 |

| 2020–2021 Fall | 10 | 5 | 15 |

| 2020–2021 Spring | 2 | 3 | 5 |

| 2021 Summer | 2 | 0 | 2 |

| 2021–2022 Fall | 15 | 4 | 19 |

| 2021–2022 Spring | 9 | 0 | 9 |

| 2022 Summer | 1 | 0 | 1 |

| 2022–2023 Fall | 8 | 22 | 30 |

| 2022–2023 Spring | 9 | 0 | 9 |

Appendix B.2

| Learning Strategies | Intervention | Business-as-Usual | p Value |

|---|---|---|---|

| Reading Textbook | 3.74 | 5.00 | 0.87 |

| Looking at Notes | 6.41 | 7.44 | 0.94 |

| Copying Notes | 5.24 | 6.10 | 0.20 |

| Summarizing Notes | 6.44 | 7.12 | 0.80 |

| Taking Tests | 9.26 | 8.81 | 0.06 |

| Highlighting Notes | 3.09 | 4.53 | 0.23 |

| Highlighting Textbook | 2.79 | 3.66 | 0.73 |

| Using Flashcards | 7.09 | 6.87 | 0.24 |

| Using Life Examples | 8.29 | 7.50 | 0.10 |

| Creating Outlines | 7.00 | 6.53 | 0.098 |

| Cramming | 3.82 | 4.54 | 0.278 |

| Group Study | 7.32 | 5.6 | 0.01 * |

| Studying in Different Places | 8.15 | 5.81 | 0.0001 ** |

| Spacing | 8.15 | 7.29 | 0.45 |

| Self-Explanation | 9.29 | 8.59 | 0.13 |

| Interleaving | 5.88 | 4.37 | 0.002 ** |

| Learning Strategies | Intervention | Business-as-Usual | p Value |

|---|---|---|---|

| Reading Textbook | 4.24 | 4.43 | 0.17 |

| Looking at Notes | 8.15 | 8.35 | 0.86 |

| Copying Notes | 5.59 | 5.82 | 0.48 |

| Summarizing Notes | 5.47 | 5.99 | 0.69 |

| Taking Tests | 8.44 | 7.46 | 0.15 |

| Highlighting Notes | 3.06 | 5.04 | 0.21 |

| Highlighting Textbook | 2.50 | 3.18 | 0.71 |

| Using Flashcards | 5.12 | 5.29 | 0.76 |

| Using Life Examples | 6.62 | 6.32 | 0.73 |

| Creating Outlines | 5.56 | 5.49 | 0.57 |

| Cramming | 6.29 | 6.43 | 0.51 |

| Group Study | 5.62 | 4.35 | 0.11 |

| Studying in Different Places | 7.29 | 5.37 | 0.01 * |

| Spacing | 6.44 | 5.84 | 0.56 |

| Self-Explanation | 6.88 | 5.79 | 0.14 |

| Interleaving | 6.41 | 4.79 | 0.007 ** |

Appendix C


Perceived Effectiveness

| Groups | Effective Strategies: Pre-Intervention | Effective Strategies: Post-Intervention | Effective Strategies: Long-Term Follow-Up | Ineffective Strategies: Pre-Intervention | Ineffective Strategies: Post-Intervention | Ineffective Strategies: Long-Term Follow-Up |

|---|---|---|---|---|---|---|

| Business-as-usual | 7.83 (1.44) | 7.59 (1.45) | 7.14 (1.39) | 6.80 (1.42) | 5.97 (1.41) | 5.96 (1.32) |

| Intervention | 7.24 (1.92) | 8.05 (1.36) | 7.87 (1.25) | 6.31 (1.63) | 4.28 (1.44) | 4.49 (1.16) |

Reported Frequency of Use

| Groups | Effective Strategies: Pre-Intervention | Effective Strategies: Post-Intervention | Effective Strategies: Long-Term Follow-Up | Ineffective Strategies: Pre-Intervention | Ineffective Strategies: Post-Intervention | Ineffective Strategies: Long-Term Follow-Up |

|---|---|---|---|---|---|---|

| Business-as-usual | 6.68 (1.27) | 5.33 (1.76) | 5.39 (1.48) | 6.40 (1.37) | 5.74 (1.55) | 5.78 (1.21) |

| Intervention | 6.55 (1.97) | 5.73 (1.36) | 6.34 (1.13) | 6.70 (1.73) | 4.74 (1.29) | 5.39 (1.35) |

| Groups | Perceived Effectiveness: Effective Strategies | Perceived Effectiveness: Ineffective Strategies | Reported Frequency of Use: Effective Strategies | Reported Frequency of Use: Ineffective Strategies | Scenarios |

|---|---|---|---|---|---|

| Business-as-usual | 6.79 (1.22) | 5.48 (1.47) | 5.62 (1.38) | 5.60 (1.34) | 6.80 (1.02) |

| Intervention | 7.83 (0.96) | 4.50 (1.63) | 6.51 (1.29) | 5.04 (1.52) | 8.28 (1.18) |

| Response | Business-as-Usual | Intervention | Total |

|---|---|---|---|

| Retrieval Practice | 37/76 (49%) | 15/35 (43%) | 52/111 (47%) |

| Rereading | 33/76 (43%) | 14/35 (40%) | 47/111 (42%) |

| Cramming | 21/76 (28%) | 8/35 (23%) | 29/111 (26%) |

| Time Management | 22/76 (29%) | 7/35 (20%) | 29/111 (26%) |

| Spacing | 20/76 (26%) | 8/35 (23%) | 28/111 (25%) |

| Using Flashcards | 22/76 (29%) | 4/35 (11%) | 26/111 (23%) |

| Studying in Advance | 6/76 (8%) | 9/35 (26%) | 15/111 (14%) |

| Copying Notes | 8/76 (11%) | 4/35 (11%) | 12/111 (11%) |

| Creating Outline | 8/76 (11%) | 0/35 (0%) | 8/111 (7%) |

| Group Study | 4/76 (5%) | 3/35 (9%) | 7/111 (6%) |

| Active Notetaking | 2/76 (3%) | 4/35 (11%) | 6/111 (5%) |

| Metacognitive Monitoring | 3/76 (4%) | 3/35 (9%) | 6/111 (5%) |

| Watching Videos | 4/76 (5%) | 2/35 (6%) | 6/111 (5%) |

| Self-Explanation | 3/76 (4%) | 1/35 (3%) | 4/111 (4%) |

| Summarization | 3/76 (4%) | 1/35 (3%) | 4/111 (4%) |

| Using Planner | 2/76 (3%) | 1/35 (3%) | 3/111 (3%) |

| Visualization | 2/76 (3%) | 1/35 (3%) | 3/111 (3%) |

| Environment Optimization | 1/76 (1%) | 1/35 (3%) | 2/111 (2%) |

| Error Analysis | 2/76 (3%) | 0/35 (0%) | 2/111 (2%) |

| Attending Office Hours | 1/76 (1%) | 1/35 (3%) | 2/111 (2%) |

| Studying in Different Places | 0/76 (0%) | 2/35 (6%) | 2/111 (2%) |

| Highlighting | 1/76 (1%) | 0/35 (0%) | 1/111 (1%) |

| Learning From Examples | 1/76 (1%) | 0/35 (0%) | 1/111 (1%) |

| Listening to Audiobooks | 1/76 (1%) | 0/35 (0%) | 1/111 (1%) |

| Interleaved Practice | 0/76 (0%) | 1/35 (3%) | 1/111 (1%) |

| Response | Business-as-Usual | Intervention | Total |

|---|---|---|---|

| Yes | 58/80 (73%) | 28/37 (76%) | 86/117 (74%) |

| Unsure | 15/80 (19%) | 4/37 (11%) | 19/117 (16%) |

| No | 7/80 (9%) | 5/37 (14%) | 12/117 (10%) |
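The percentages and the Total column in the table above follow directly from the reported counts. The short sketch below recomputes them as a consistency check. It is only an illustration: the helper names `percent_half_up` and `summarize` are hypothetical, and rounding halves up to whole percentages is an assumed convention, chosen because it reproduces the reported 73% for 58/80.

```python
# Counts copied from the Yes / Unsure / No table above:
# response -> ((count, n) for business-as-usual, (count, n) for intervention)
ROWS = {
    "Yes": ((58, 80), (28, 37)),
    "Unsure": ((15, 80), (4, 37)),
    "No": ((7, 80), (5, 37)),
}


def percent_half_up(count: int, n: int) -> int:
    """Whole-number percentage, rounding halves up (assumed convention).

    Integer arithmetic avoids Python's round(), which rounds halves to even.
    """
    return (200 * count + n) // (2 * n)


def summarize(rows):
    """Return {response: (bau_pct, intervention_pct, total_count, total_n, total_pct)}."""
    out = {}
    for response, ((c1, n1), (c2, n2)) in rows.items():
        total_c, total_n = c1 + c2, n1 + n2
        out[response] = (
            percent_half_up(c1, n1),
            percent_half_up(c2, n2),
            total_c,
            total_n,
            percent_half_up(total_c, total_n),
        )
    return out


if __name__ == "__main__":
    for response, values in summarize(ROWS).items():
        print(response, values)
```

Under these assumptions, every recomputed percentage and total matches the corresponding cell in the table.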

| Response | Business-as-Usual | Intervention | Total |

|---|---|---|---|

| There Are More Effective Strategies | 11/17 (64.7%) | 2/6 (33.3%) | 13/23 (56.5%) |

| Procrastination | 3/17 (17.6%) | 3/6 (50.0%) | 6/23 (26.1%) |

| Not Organized | 4/17 (23.5%) | 1/6 (16.7%) | 5/23 (21.7%) |

| Lack of Information | 3/17 (17.6%) | 0/6 (0.0%) | 3/23 (13.0%) |

| Response | Business-as-Usual | Intervention | Total |

|---|---|---|---|

| Good Grades | 22/65 (33.8%) | 10/32 (31.3%) | 32/97 (33.0%) |

| Learn Effectively | 17/65 (26.2%) | 14/32 (43.8%) | 31/97 (32.0%) |

| Understand, Not Memorize | 12/65 (18.5%) | 4/32 (12.5%) | 16/97 (16.5%) |

| Good Representation of Exams | 7/65 (10.8%) | 2/32 (6.3%) | 9/97 (9.3%) |

| Helps with Thinking Through Problems | 4/65 (6.2%) | 4/32 (12.5%) | 8/97 (8.2%) |

| Save Time | 5/65 (7.7%) | 3/32 (9.4%) | 8/97 (8.2%) |

| Feels Productive | 5/65 (7.7%) | 2/32 (6.3%) | 7/97 (7.2%) |

| Stay Organized | 5/65 (7.7%) | 0/32 (0.0%) | 5/97 (5.2%) |

| Help Fix Weaknesses | 0/65 (0.0%) | 2/32 (6.3%) | 2/97 (2.1%) |

| Response | Business-as-Usual | Intervention | Total |

|---|---|---|---|

| Retrieval Practice | 30/76 (39.5%) | 17/36 (47.2%) | 47/112 (42.0%) |

| Time Management | 23/76 (30.3%) | 11/36 (30.6%) | 34/112 (30.4%) |

| Using Flashcards | 25/76 (32.9%) | 7/36 (19.4%) | 32/112 (28.6%) |

| Rereading | 20/76 (26.3%) | 10/36 (27.8%) | 30/112 (26.8%) |

| Spacing | 12/76 (15.8%) | 8/36 (22.2%) | 20/112 (17.9%) |

| Group Study | 13/76 (17.1%) | 5/36 (13.9%) | 18/112 (16.1%) |

| Copying Notes | 13/76 (17.1%) | 4/36 (11.1%) | 17/112 (15.2%) |

| Study More | 10/76 (13.2%) | 4/36 (11.1%) | 14/112 (12.5%) |

| Studying in Different Places | 5/76 (6.6%) | 6/36 (16.7%) | 11/112 (9.8%) |

| Studying in Advance | 8/76 (10.5%) | 3/36 (8.3%) | 11/112 (9.8%) |

| Environment Optimization | 6/76 (7.9%) | 4/36 (11.1%) | 10/112 (8.9%) |

| Creating Outline | 7/76 (9.2%) | 2/36 (5.6%) | 9/112 (8.0%) |

| Metacognitive Monitoring | 7/76 (9.2%) | 2/36 (5.6%) | 9/112 (8.0%) |

| Visualization | 6/76 (7.9%) | 3/36 (8.3%) | 9/112 (8.0%) |

| Self-Explanation | 3/76 (3.9%) | 5/36 (13.9%) | 8/112 (7.1%) |

| Active Note-Taking | 2/76 (2.6%) | 5/36 (13.9%) | 7/112 (6.3%) |

| Office Hours | 7/76 (9.2%) | 0/36 (0.0%) | 7/112 (6.3%) |

| Summarization | 6/76 (7.9%) | 1/36 (2.8%) | 7/112 (6.3%) |

| Using Planner | 6/76 (7.9%) | 1/36 (2.8%) | 7/112 (6.3%) |

| Watching Videos | 5/76 (6.6%) | 1/36 (2.8%) | 6/112 (5.4%) |

| Cramming | 4/76 (5.3%) | 0/36 (0.0%) | 4/112 (3.6%) |

| Interleaved Practice | 1/76 (1.3%) | 3/36 (8.3%) | 4/112 (3.6%) |

| Highlighting | 3/76 (3.9%) | 0/36 (0.0%) | 3/112 (2.7%) |

| Real-Life Examples | 3/76 (3.9%) | 0/36 (0.0%) | 3/112 (2.7%) |

| Mnemonics | 1/76 (1.3%) | 1/36 (2.8%) | 2/112 (1.8%) |

| Listening to Audiobooks | 1/76 (1.3%) | 0/36 (0.0%) | 1/112 (0.9%) |

| Elaborative Interrogation | 0/76 (0.0%) | 1/36 (2.8%) | 1/112 (0.9%) |

| Error Analysis | 0/76 (0.0%) | 1/36 (2.8%) | 1/112 (0.9%) |

| Learning from Examples | 0/76 (0.0%) | 1/36 (2.8%) | 1/112 (0.9%) |

| Response | Business-as-Usual | Intervention | Total |

|---|---|---|---|

| Not Enough Time | 31/67 (46.3%) | 17/30 (56.7%) | 48/97 (49.5%) |

| No Desire | 21/67 (31.3%) | 9/30 (30.0%) | 30/97 (30.9%) |

| Lack of Resources | 14/67 (20.9%) | 5/30 (16.7%) | 19/97 (19.6%) |

| Unsure Effectiveness | 9/67 (13.4%) | 5/30 (16.7%) | 14/97 (14.4%) |

| Procrastination | 9/67 (13.4%) | 3/30 (10.0%) | 12/97 (12.4%) |

| Distractions | 7/67 (10.4%) | 4/30 (13.3%) | 11/97 (11.3%) |

| Hard to Change | 4/67 (6.0%) | 5/30 (16.7%) | 9/97 (9.3%) |

| Nobody Else Available | 2/67 (3.0%) | 4/30 (13.3%) | 6/97 (6.2%) |

| Mental Health | 5/67 (7.5%) | 0/30 (0.0%) | 5/97 (5.2%) |

| Schedule Conflicts | 2/67 (3.0%) | 3/30 (10.0%) | 5/97 (5.2%) |

| Lack of Flow | 3/67 (4.5%) | 1/30 (3.3%) | 4/97 (4.1%) |

| Variety Requirement | 2/67 (3.0%) | 2/30 (6.7%) | 4/97 (4.1%) |

| Distance | 3/67 (4.5%) | 0/30 (0.0%) | 3/97 (3.1%) |

| Introversion | 3/67 (4.5%) | 0/30 (0.0%) | 3/97 (3.1%) |

| Monetary Restrictions | 1/67 (1.5%) | 1/30 (3.3%) | 2/97 (2.1%) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yüksel, E.M.; Green, C.S.; Vlach, H.A. Durability of Students’ Learning Strategies Use and Beliefs Following a Classroom Intervention. Behav. Sci. 2025, 15, 706. https://doi.org/10.3390/bs15050706

Yüksel EM, Green CS, Vlach HA. Durability of Students’ Learning Strategies Use and Beliefs Following a Classroom Intervention. Behavioral Sciences. 2025; 15(5):706. https://doi.org/10.3390/bs15050706

Chicago/Turabian Style: Yüksel, Ezgi M., C. Shawn Green, and Haley A. Vlach. 2025. "Durability of Students’ Learning Strategies Use and Beliefs Following a Classroom Intervention" Behavioral Sciences 15, no. 5: 706. https://doi.org/10.3390/bs15050706

APA Style: Yüksel, E. M., Green, C. S., & Vlach, H. A. (2025). Durability of Students’ Learning Strategies Use and Beliefs Following a Classroom Intervention. Behavioral Sciences, 15(5), 706. https://doi.org/10.3390/bs15050706