Interpolated Retrieval of Relevant Material, Not Irrelevant Material, Enhances New Learning of a Video Lecture In-Person and Online

Abstract

1. Introduction

2. The Current Study

3. Experiment 1: Laboratory Study

3.1. Methods

3.1.1. Design and Participants

3.1.2. Materials and Procedure

3.2. Results

Do All Interpolated Retrieval Tasks Boost New Learning?

4. Experiment 2: Online Study

4.1. Methods

4.1.1. Design and Participants

4.1.2. Materials and Procedure

4.2. Results

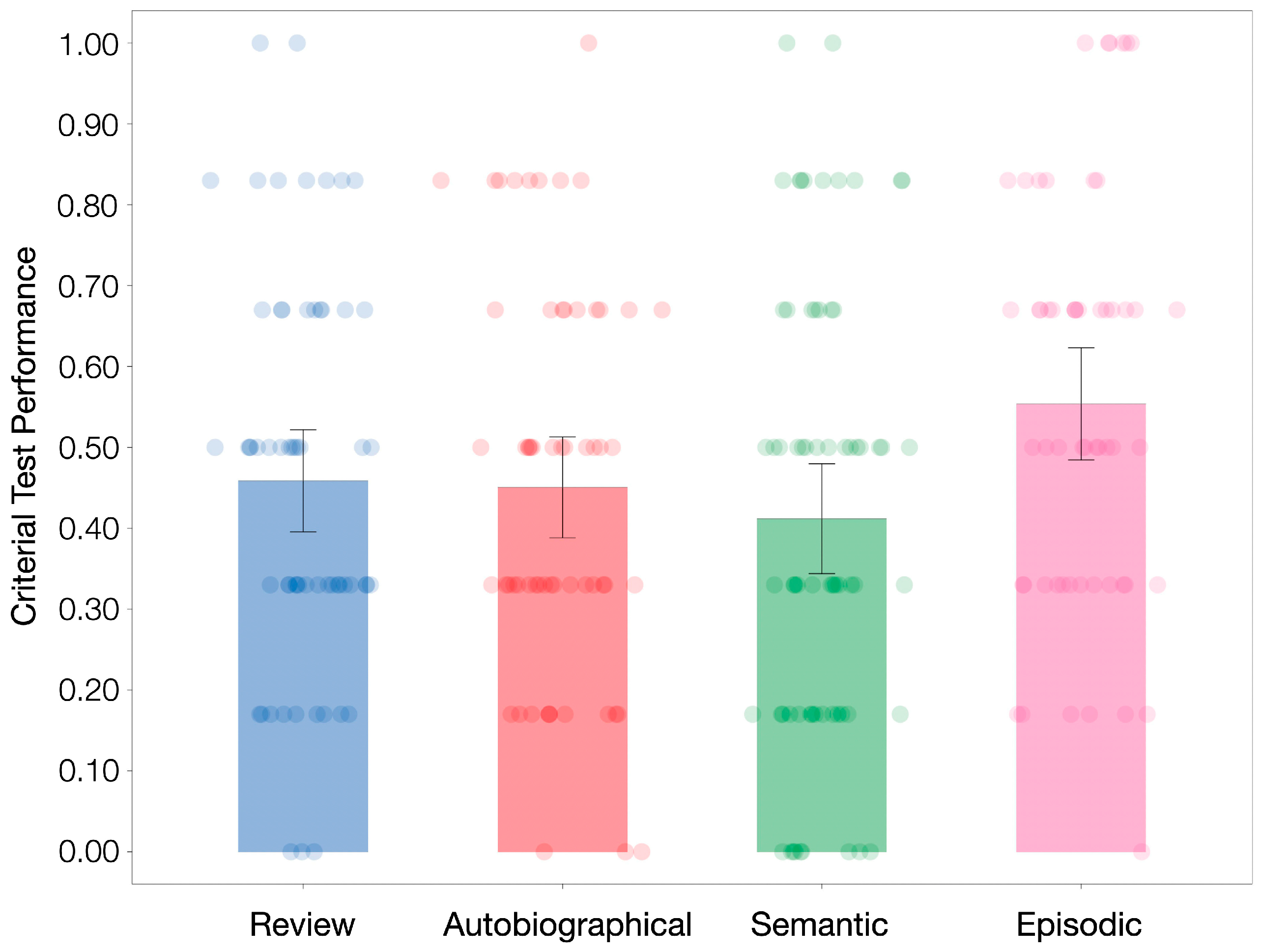

Only Content-Relevant Episodic Retrieval Enhanced New Learning

5. Exploratory Analyses

5.1. Lecture Relevance as a Determinant of Test-Potentiated New Learning

5.2. Measuring Context Change and Its Impact on New Learning

6. Discussion

6.1. Interpolated Retrieval Online and In-Lab

6.2. Interpolated Retrieval, Strategy Change, and Context Change

6.3. Limitations and Constraints on Generality

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| IRP | Interpolated retrieval practice |
| FTE | Forward testing effect |
| PI | Proactive interference |
| EEG | Electroencephalogram |
| ANOVA | Analysis of variance |


| Race | N |
|---|---|
| Asian/Asian American | 20 |
| Black/African American | 7 |
| Hispanic/Latinx | 17 |
| Multi-racial | 2 |
| Other | 2 |
| White | 207 |
| Decline to disclose | 3 |

| Gender | N |
|---|---|
| Man | 80 |
| Non-binary | 2 |
| Woman | 166 |
| Decline to disclose | 1 |

| Race | N |
|---|---|
| Asian/Asian American | 21 |
| Black/African American | 50 |
| Hispanic/Latinx | 22 |
| Multi-racial | 7 |
| Other | 4 |
| White | 148 |
| Decline to disclose | 4 |

| Gender | N |
|---|---|
| Agender | 1 |
| Man | 121 |
| Non-binary | 4 |
| Woman | 115 |
| Transgender | 2 |
| Decline to disclose | 3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Assadipour, Z.; Ahn, D.; Chan, J.C.K. Interpolated Retrieval of Relevant Material, Not Irrelevant Material, Enhances New Learning of a Video Lecture In-Person and Online. Behav. Sci. 2025, 15, 668. https://doi.org/10.3390/bs15050668
