Metacognitive Prompts Influence 7- to 9-Year-Olds’ Executive Function at the Levels of Task Performance and Neural Processing

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Procedure

2.3. Measures

2.3.1. Parent Questionnaires

2.3.2. Child Questionnaires

2.3.3. Child Interview

2.3.4. Metacognitive DCCS

2.3.5. EEG Acquisition and Preprocessing

2.4. Data Analysis

2.4.1. Data Preparation

2.4.2. ERP Analyses

2.4.3. Analytic Plan

3. Results

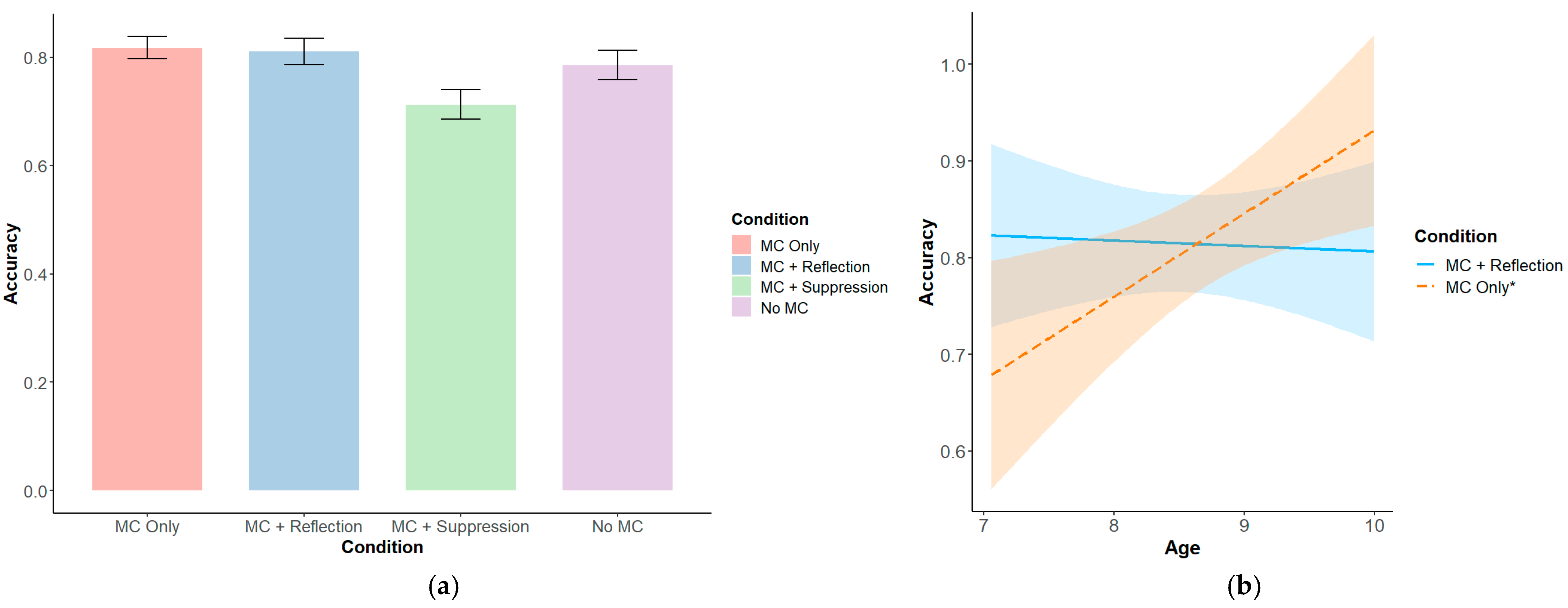

3.1. Task-Level Results

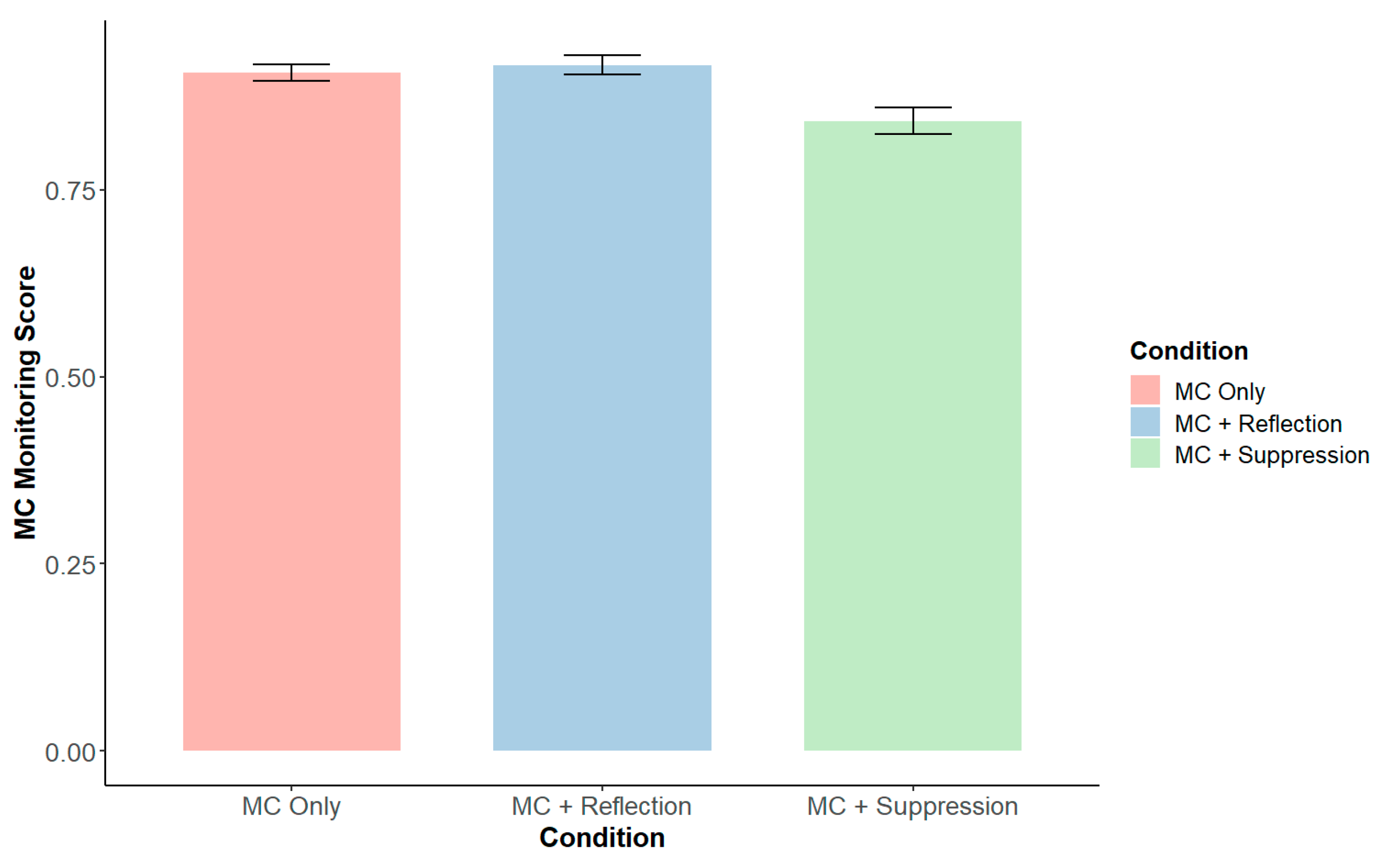

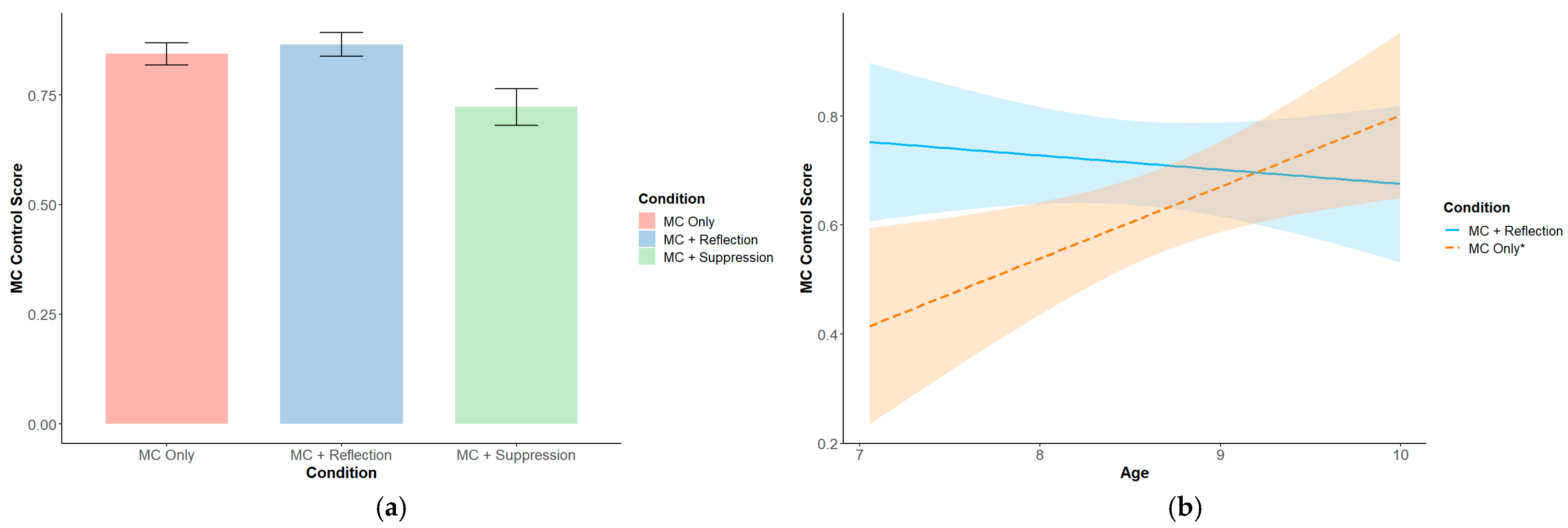

3.2. Metacognitive-Level Results

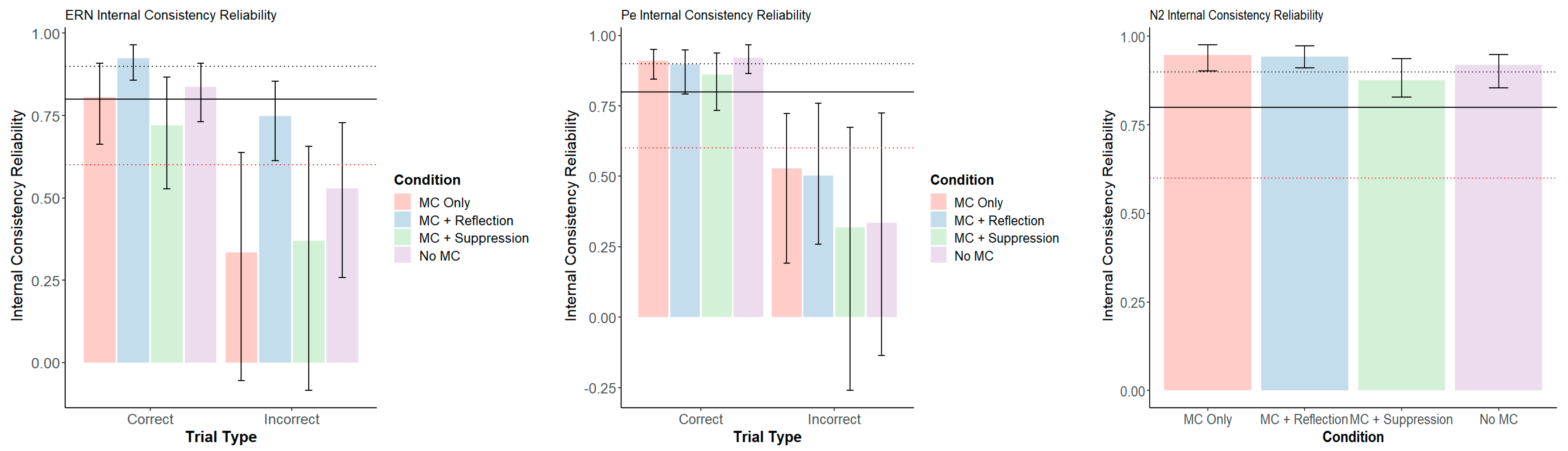

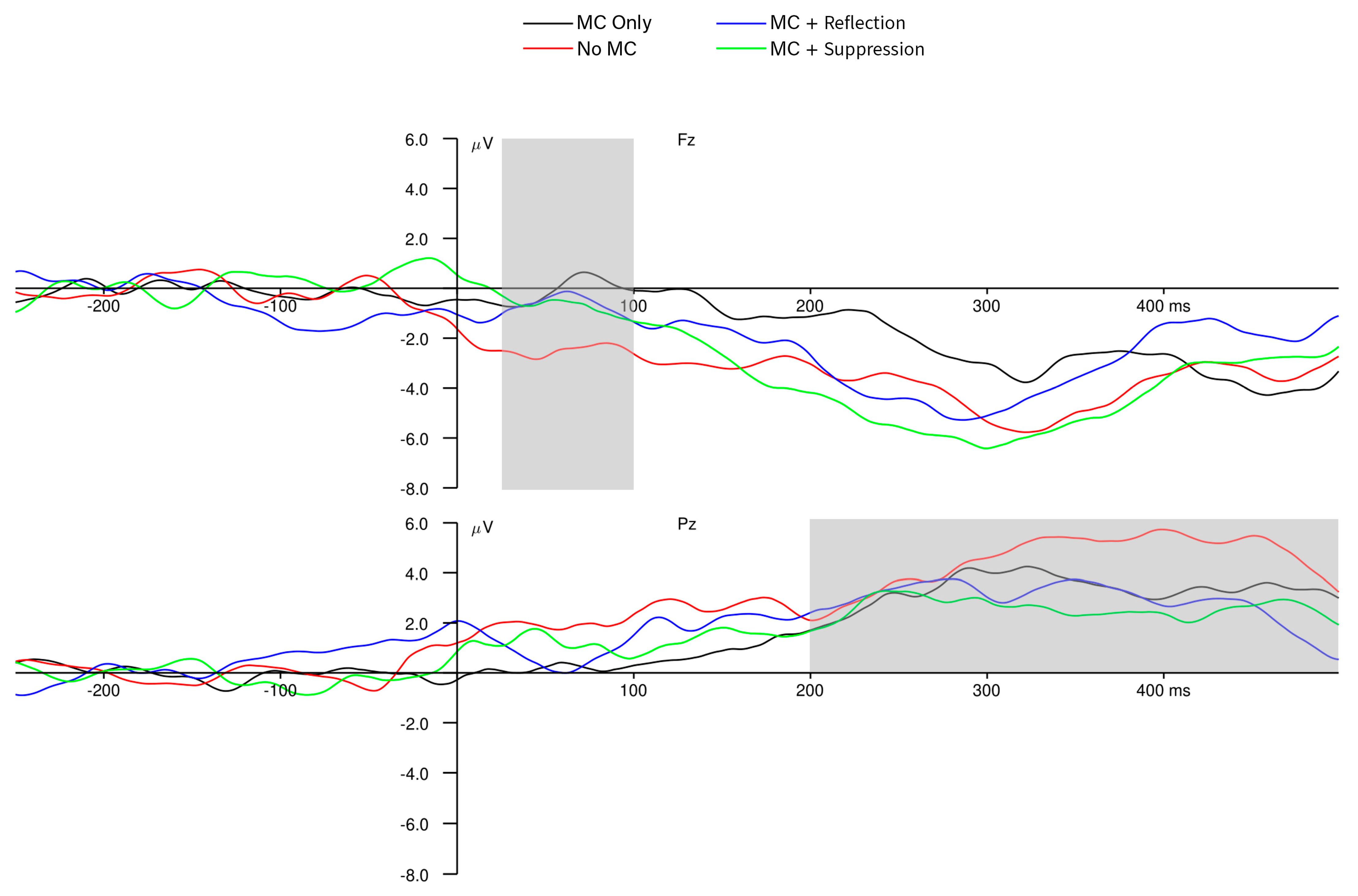

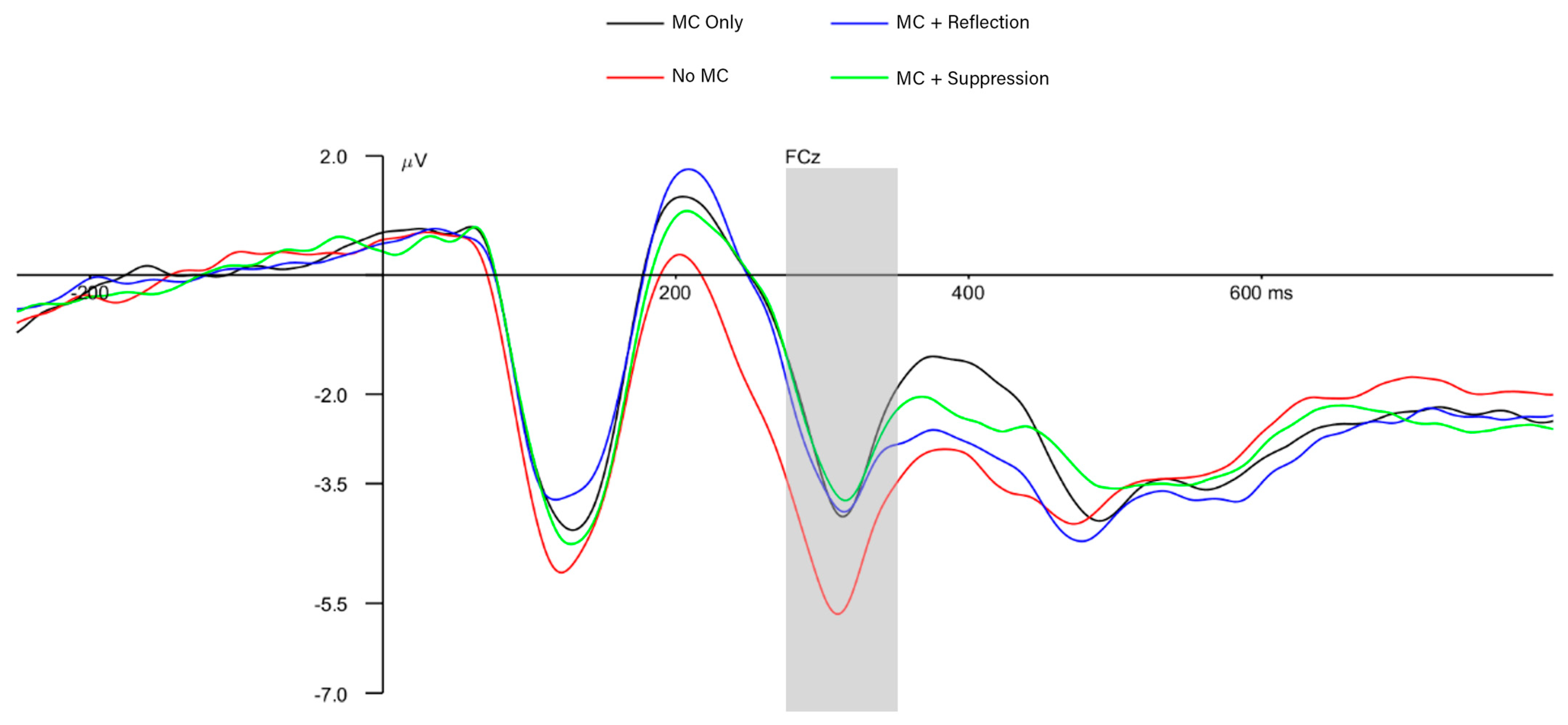

3.3. Neural-Level Results

3.4. Residual Correlations Among Multi-Level Dependent Variables

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| aSME | Analytic Standard Measurement Error |
| DCCS | Dimensional Change Card Sort |
| EEG | Electroencephalography |
| EF | Executive Function |
| ERN | Error-related Negativity |
| ERP | Event-related Potential |
| HAPPE | Harvard Automated Processing Pipeline for Electroencephalography |
| MAAS | Mindful Attention Awareness Scale |
| MC | Metacognitive |
| McKI | Metacognitive Knowledge Interview |
| Pe | Error Positivity |
| RT | Reaction Time |
| SCARED | Screen for Child Anxiety Related Disorders |

Appendix A

| | N | No-MC | No-MC × Age | Reflection | Reflection × Age | Suppression | Suppression × Age | Age | Anxiety | Mindfulness | MC Knowledge | Gender | Income | Ethnicity |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DCCS Accuracy | 107 | −0.18 | 0.03 | 0.07 | −0.64 * | −0.70 ** | −0.43 | 0.61 * | −0.05 | 0.23 * | 0.11 | −0.20 | −0.01 | −0.46 |
| DCCS RT | 107 | 0.09 | 0.05 | −0.04 | −0.28 | 0.24 | −0.23 | 0.03 | 0.03 | 0.06 | 0.04 | −0.39 | 0.02 | −0.06 |
| DCCS PES | 104 | 0.30 | −0.00 | 0.28 | −0.16 | −0.00 | 0.39 | 0.11 | −0.02 | 0.09 | 0.11 | −0.08 | −0.03 | 0.10 |
| MC Monitoring | 82 | - | - | 0.25 | −0.13 | −0.76 ** | −0.35 | 0.13 | −0.08 | 0.01 | 0.18 | 0.06 | 0.02 | 0.22 |
| MC Control | 82 | - | - | 0.47 | −0.72 * | −0.35 | −0.25 | 0.60 * | 0.15 | 0.22 * | 0.32 ** | −0.39 | −0.02 | 0.10 |
| ERN amplitude | 96 | −0.67 * | −0.42 | −0.20 | −0.11 | −0.13 | −0.20 | 0.04 | 0.12 | 0.06 | −0.04 | 0.16 | −0.05 | 0.03 |
| Pe amplitude | 96 | 0.56 | 0.10 | −0.12 | 0.07 | 0.08 | −0.03 | 0.15 | 0.40 *** | 0.00 | 0.02 | 0.01 | 0.03 | −0.29 |
| N2 amplitude | 106 | −0.93 ** | 0.30 | −0.55 | −0.03 | −0.40 | 0.05 | −0.29 | −0.02 | 0.01 | −0.15 | 0.14 | −0.07 * | 0.07 |


| | N | Mean | SD | 1. | 2. | 3. | 4. | 5. | 6. | 7. | 8. |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. DCCS accuracy | 119 | 0.78 | 0.14 | - | | | | | | | |
| 2. DCCS RT | 119 | 708 | 219 | 0.25 * | - | | | | | | |
| 3. DCCS PES | 116 | 51 | 242 | −0.07 | 0.06 | - | | | | | |
| 4. MC monitoring | 90 | 0.89 | 0.18 | 0.61 *** | 0.14 | −0.06 | - | | | | |
| 5. MC control | 90 | 0.81 | 0.19 | 0.41 ** | 0.11 | −0.13 | 0.42 *** | - | | | |
| 6. ERN amplitude | 107 | −0.87 | 5.04 | −0.07 | −0.02 | 0.05 | 0.03 | 0.33 * | - | | |
| 7. Pe amplitude | 107 | 3.50 | 4.44 | 0.21 | 0.21 | −0.22 | 0.07 | 0.13 | −0.16 | - | |
| 8. N2 amplitude | 118 | −3.35 | 3.22 | 0.01 | 0.18 | 0.03 | −0.09 | 0.15 | 0.11 | 0.07 | - |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Drexler, C.; Zelazo, P.D. Metacognitive Prompts Influence 7- to 9-Year-Olds’ Executive Function at the Levels of Task Performance and Neural Processing. Behav. Sci. 2025, 15, 644. https://doi.org/10.3390/bs15050644
