Feel the Music!—Audience Experiences of Audio–Tactile Feedback in a Novel Virtual Reality Volumetric Music Video

Abstract

1. Introduction

- Haptic feedback, as vibrotactile stimuli, can enhance factors of user experience in virtual reality volumetric music video experiences.

- Haptic feedback, as vibrotactile stimuli, can influence subjective evaluations of the contributing aspects of presence experiences in virtual reality volumetric music video experiences.

2. Background and Related Work

2.1. Immersive Virtual Environments and Presence

2.1.1. Multimodal Stimuli

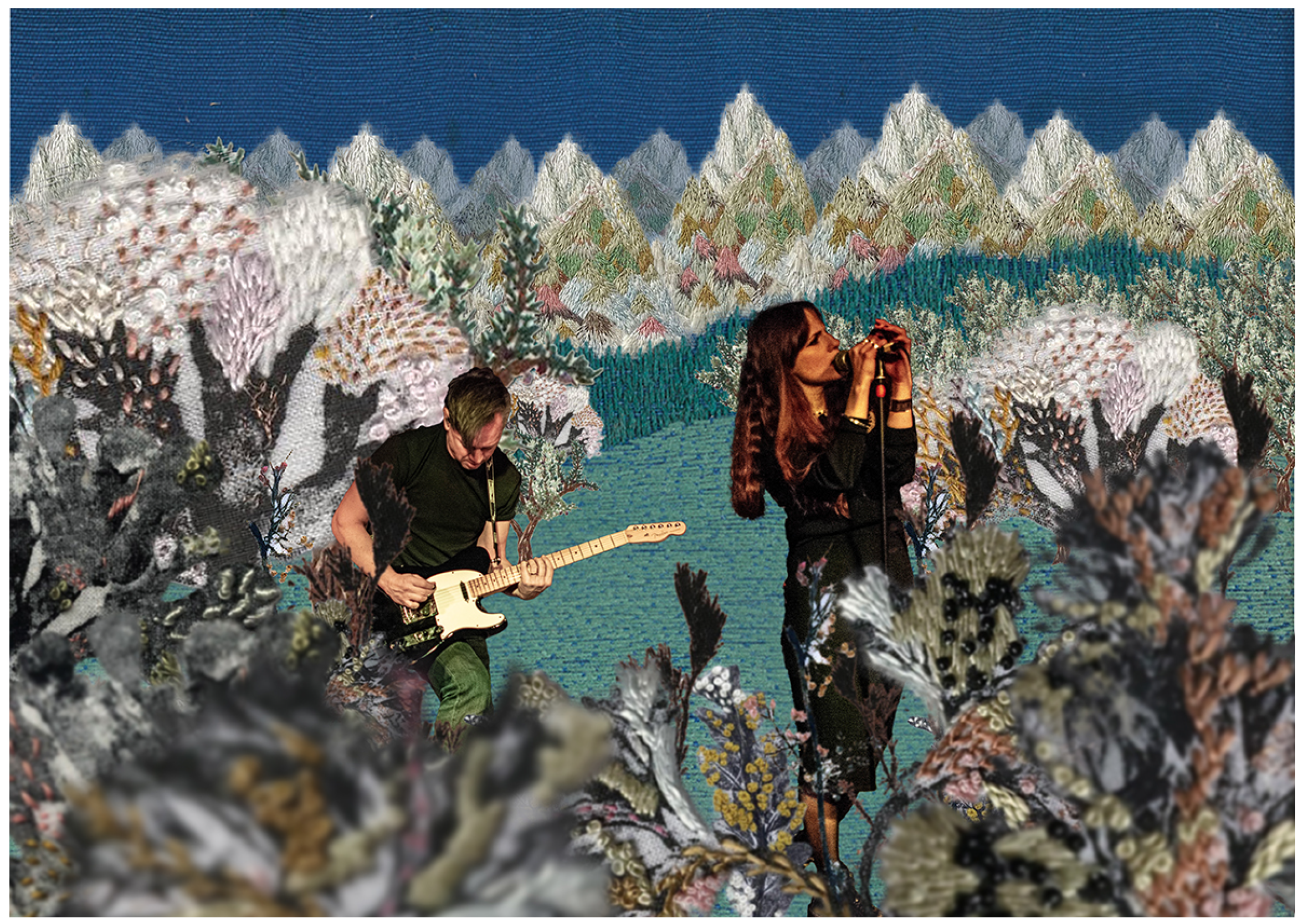

2.1.2. Volumetric Video

2.1.3. Spatial Sound

2.1.4. Haptics

2.1.5. VR Performance

3. Methodology

3.1. Experiment Task and Measurement Tools

- Baseline Scenario (B0)—participants view a volumetric music video via a VR device;

- Experiment Scenario 1 (S1)—participants view a volumetric music video via a VR device with an additional vibrotactile stimulus.

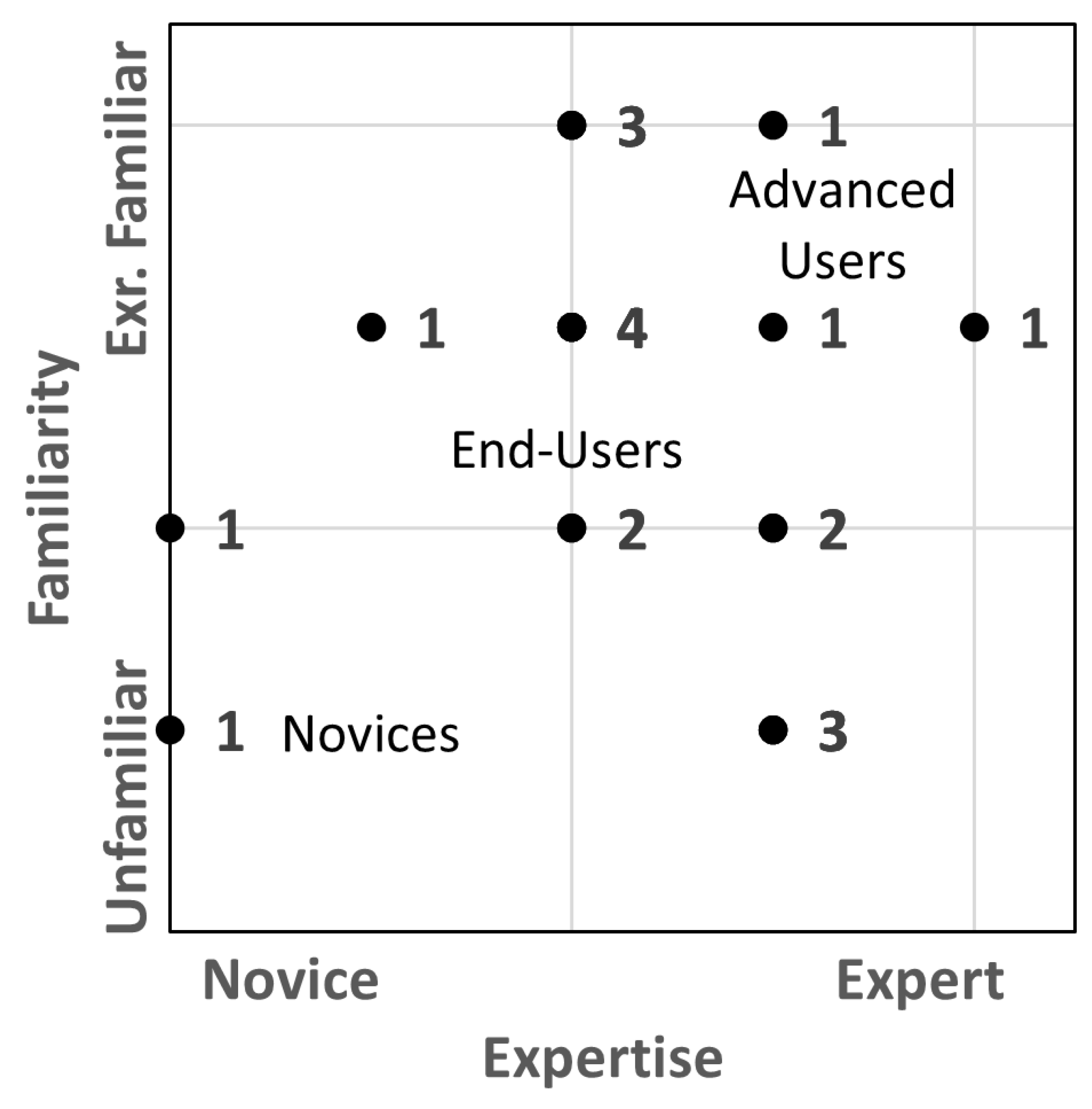

3.2. Participants

4. Results

4.1. UEQ

4.2. Presence Questionnaire

4.3. Qualitative Feedback

5. Discussion

5.1. Familiarity with Musical Content

5.2. User Experiences

5.3. Feelings of Presence

“VR offers a medium for impressive and awe-inspiring performances; therefore, it shouldn’t be wasted on conventional stages (normal-sized people and statues). The live Travis Scott concert in the online video game Fortnite is a good example of what should be aimed for in the virtual scene.”

5.4. The Use of Volumetric Video

“I think both can co-exist and complete each other, although this new type of performance can benefit musicians (advertising, music discovery, etc.).”

“People might prefer concerts in their living room, like Netflix streaming, instead of going to the cinema.”

“I don’t think VV music videos will affect the live music scene—they cannot yet capture the liveness integral to gigs. It is perhaps more likely that they will compete with commercial music videos and be used similarly.”

“They’d need to have a higher definition to become more popular, and they would need to be easily accessible (online hub?) through VR platforms or AR marketplaces on smartphones.”

“You lose part of the immersion, brushing shoulders with another human being at a concert. It’s odd watching a performance when you cannot physically interact with the world. However, the vibrations on my hands did improve my immersion overall.”

“A huge group of people’s engagement is very different from a person with a headset. A live concert is more touching and exciting, and VR is more supervised.”

“I can imagine there would be a market for people to attend a Beatles concert or Elvis Presley concert since these are things we can’t do anymore, but people can participate in regular shows or watch them on TV.”

6. Constraints and Future Work

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Scale | B0 Mean | B0 Std. Dev. | B0 Confidence | B0 Alpha | S1 Mean | S1 Std. Dev. | S1 Confidence | S1 Alpha | t | Sig. |
|---|---|---|---|---|---|---|---|---|---|---|
| Attractiveness | 0.55 | 1.03 | 0.64 | 0.92 | 1.57 | 0.80 | 0.50 | 0.85 | 2.46 | 0.02 |
| Perspicuity | 1.08 | 0.67 | 0.41 | 0.49 | 1.65 | 0.84 | 0.52 | 0.80 | 1.67 | 0.11 |
| Efficiency | 0.12 | 0.74 | 0.46 | 0.68 | 0.85 | 0.85 | 0.53 | 0.86 | 0.06 | 0.05 |
| Dependability | 0.33 | 0.78 | 0.48 | 0.61 | 1.00 | 0.86 | 0.53 | 0.71 | 1.84 | 0.08 |
| Stimulation | 0.30 | 1.18 | 0.73 | 0.89 | 1.57 | 0.72 | 0.45 | 0.61 | 2.89 | 0.01 |
| Novelty | 0.73 | 1.09 | 0.68 | 0.90 | 1.69 | 0.54 | 0.34 | 0.64 | 2.51 | 0.02 |
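
As a rough illustration of how the Confidence and t columns of the UEQ table relate to the reported means and standard deviations, the sketch below recomputes both for the Attractiveness scale. The group size of 10 per condition is an assumption: it is not stated in this section and is only inferred from the Confidence column. The function names are hypothetical, and the unpooled standard error used here is one common choice that may differ from the authors' exact procedure.

```python
import math


def ci_half_width(sd, n, z=1.96):
    """Half-width of a normal-approximation 95% confidence interval for a mean."""
    return z * sd / math.sqrt(n)


def t_from_summary(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Two-sample t statistic computed from summary statistics.

    Uses the unpooled standard error; with equal group sizes this gives
    the same statistic as the pooled (Student) version.
    """
    se = math.sqrt(sd_a ** 2 / n_a + sd_b ** 2 / n_b)
    return (mean_b - mean_a) / se


# ASSUMPTION: 10 participants per condition (inferred, not stated in the text).
N = 10

# Attractiveness row of the UEQ table: B0 versus S1.
b0_mean, b0_sd = 0.55, 1.03
s1_mean, s1_sd = 1.57, 0.80

# Half-width comes out near the 0.64 listed under B0 Confidence.
print(round(ci_half_width(b0_sd, N), 2))

# t comes out near the 2.46 listed in the table; small differences are
# expected because the inputs are already rounded to two decimals.
print(round(t_from_summary(b0_mean, b0_sd, N, s1_mean, s1_sd, N), 2))
```

Working from summary statistics keeps the check independent of the raw questionnaire data, which is not reproduced here.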

| Presence Questionnaire Categories | B0 Mean | B0 SD | S1 Mean | S1 SD | t | Sig. |
|---|---|---|---|---|---|---|
| Total | 114.10 | 14.10 | 113.60 | 11.51 | 0.09 | 0.93 |
| Realism | 29.00 | 7.13 | 26.50 | 5.46 | 0.88 | 0.39 |
| Possibility to act | 18.40 | 3.57 | 19.00 | 3.13 | 0.40 | 0.69 |
| Quality of interface | 16.50 | 2.68 | 17.10 | 3.67 | 0.42 | 0.68 |
| Possibility to examine | 15.20 | 2.35 | 16.80 | 2.57 | 1.45 | 0.16 |
| Self-evaluation of performance | 11.60 | 2.46 | 12.60 | 2.12 | 0.97 | 0.34 |
| Sounds | 16.50 | 2.64 | 16.30 | 3.06 | 0.16 | 0.88 |
| Haptic | 6.90 | 2.42 | 5.30 | 2.79 | 1.37 | 0.19 |

| Previous Experience | Advantages and Disadvantages | Improvements |
|---|---|---|
| • Nothing or experience with: | • New performances | • Level of detail |
| • Volumetric videos in 2D | • Cost/availability | • VV shaders |
| • Using the Volu app | • Analogue vs. digital | • Locomotion |
| • Cinema | • Audience perspectives | • Accessibility |
| • AR/VR HMDs | • Audience/artist interaction | • Shared presence |
| Examples: | | |
| “None; I hadn’t heard of volumetric music videos.” | “If some artists are against hi-tech, they may reject the adaption.” | “A greater level of detail and color in the models.” |
| “I don’t have any experience with 3D music videos.” | “People go to a concert for the experience itself, not only for the music and the performance.” | “Compared to the live concert, maybe the main difference is the atmosphere.” |
| “I have some limited experience using the Volu app.” | “Audiences have limited interactions at a live concert.” | “A huge group of people’s engagement is very different from a person with a headset.” |
| “Not sure if Franz Ferdinand’s music video counts, but it stuck with me.” | “I could see it being a barrier for new artists.” | “AR/VR concerts offer an inexpensive and accessible way to enjoy performances.” |
| “I might only see volumetric scenes from some films.” | “I think volumetric music videos introduce costs for artists struggling to break even.” | “It feels lonely without the accompany of real people.” |
| “Bjork’s VR music videos.” | “Passivity is sometimes lovely; not everyone will want to have everything interactive.” | “The experience was more like an artwork; what it lacks over a real concert is the company of people.” |
| “I have seen scans of actors at a conference, and Kpop artist scans for an MR stage performance.” | “I feel safer in the front row, but I don’t think you can replace a real concert with that.” | “I see great potential in music videos because the possibility of interaction is unlimited.” |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Young, Gareth W., Néill O’Dwyer, Mauricio Flores Vargas, Rachel Mc Donnell, and Aljosa Smolic. 2023. “Feel the Music!—Audience Experiences of Audio–Tactile Feedback in a Novel Virtual Reality Volumetric Music Video.” Arts 12, no. 4: 156. https://doi.org/10.3390/arts12040156