1. Introduction

Generative artificial intelligence (GenAI), particularly large language models (LLMs), offers significant potential for automating information-intensive tasks in the architecture, engineering, and construction (AEC) sector. Recent studies show that LLMs can support design reasoning, regulatory querying, document interpretation, construction scheduling, and administrative tasks without requiring programming expertise [1, 2, 3, 4, 5]. LLM-enabled workflows therefore lower the digital-skill barrier and facilitate broader adoption of AI tools among practitioners.

Despite these advances, construction cost code verification remains largely untouched by LLM-based assistance. In Vietnam, verification is legally mandatory for all state-funded construction projects and plays a central role in design approval, procurement, payment appraisal, and public investment governance. The process requires verifying hundreds or thousands of bill-of-quantity items, checking the correctness of work item codes, confirming units of measurement, and validating material-labor-machinery (MTR-LBR-MCR) price components. Prior research highlights the semantic ambiguity, procedural complexity, and high human-error rates inherent in cost checking [6, 7].

In practice, many inconsistencies arise from simple human mistakes: mis-typed or outdated codes, copy-and-paste errors, incorrect units of measurement (UoMs), or manual adjustments to price components without updating the corresponding codes. Because the cost code determines both the normative UoM and the MTR-LBR-MCR structure, a single incorrect code can propagate errors throughout the verification workflow. Identifying the intended code becomes especially difficult in large spreadsheets where mis-entries may go unnoticed.

These challenges underscore that effective verification requires not only deterministic rule checking but also mechanisms for recognizing mis-typed or mis-assigned codes. However, any such mechanism must remain strictly deterministic, reproducible, and grounded in normative datasets to satisfy auditability requirements in public-sector workflows. Unlike many LLM applications that rely on semantic interpretation or similarity-based retrieval, cost verification in the Vietnamese regulatory context permits only rule-governed operations and exact numerical comparison. Most importantly, strict equality in this context refers to deterministically defined numerical equivalence rather than unexamined raw-value identity, to allow regulatory practices, such as documented rounding, to be accommodated without compromising auditability.

To address this constraint, we developed a GPT-based assistant that functions strictly as a controlled rule-execution engine rather than an open-ended generative model. Embedded within the GPT environment, the assistant executes the verification sequence (e.g., code lookup, UoM checking, and price comparison) exclusively through Python-based operations. This approach explicitly prohibits semantic inference or approximate matching. When a project item’s code fails to match any unit price book (UPB) entry, the assistant performs an exact-match comparison of the UoM and the full MTR-LBR-MCR price vector against all normative items. The system identifies a corresponding normative code only when a perfect numerical match is found; otherwise, the item is conservatively classified as “unlisted” or “TT” (derived from the Vietnamese term tam tinh, meaning “self-estimated”), in accordance with professional practice.

Against this backdrop, the study applies an Action Research methodology to co-develop, test, and refine a deterministic GPT-based assistant tailored to Vietnam’s cost-verification environment. The study pursues three objectives:

(1) To examine the semantic, procedural, and computational challenges that shape current verification workflows, focusing on the dominance of code-related errors and the limitations of existing software tools.

(2) To design and iteratively refine a GPT-based assistant that operationalizes verification rules through deterministic Python-executed logic, including exact-match diagnostics for identifying mis-typed or mis-assigned codes.

(3) To establish a transparent, audit-ready framework for LLM-supported verification, demonstrating how LLMs can be constrained to behave as dependable rule-execution systems rather than probabilistic inference engines.

This study distinguishes itself from prior LLM applications in the AEC domain by demonstrating that when tightly controlled through system prompts, guardrails, and Python-executed computations, LLMs can perform compliance-critical verification tasks with full transparency and reproducibility. As elaborated in Section 4.2, the selected system architecture (the GPT Code Interpreter combined with structured UPB datasets) enables deterministic rule execution without relying on semantic interpretation or similarity-based reasoning. This approach ensures that the assistant remains aligned with regulatory expectations while offering a replicable framework for integrating AI tools into public-sector cost management.

In summary, this study makes three major contributions. First, it offers a methodological contribution by demonstrating how LLMs can be constrained to function as deterministic rule-execution engines rather than probabilistic or semantic reasoners in compliance-critical contexts. Second, it provides a practical contribution by operationalizing Vietnam’s regulatory cost-verification logic into an auditable, Python-governed verification workflow that reflects real public-sector practice. Third, it introduces an exact-match and controlled comparison framework that enables typographical error recovery and systematic handling of rounding-induced boundary conditions while preserving strict auditability.

2. Background

2.1. Regulatory Context and the Practice of Cost Verification in Vietnam

Cost estimation and verification in state-funded projects in Vietnam are governed by a comprehensive legal framework, including the Law on Construction (No. 50/2014/QH13 [8] and its amended version No. 62/2020/QH14 [9]), Decree 10/2021/ND-CP [10], and Circulars 11/2021/TT-BXD [11], 12/2021/TT-BXD [12], and 09/2024/TT-BXD [13]. For most publicly financed projects, verification is mandatory prior to budget appraisal and design approval, serving as a compliance mechanism to ensure that item descriptions, units of measurement (UoMs), and MTR-LBR-MCR unit prices align with provincial unit price books (UPBs).

In practice, the verification process begins with validating the work item code because it determines the legally valid UoM and normative price components required for direct cost appraisal. Only after a code is confirmed as valid can UoM and unit-price checks be meaningfully performed. However, because UPBs cannot cover every possible work item, some project-specific tasks may be classified as unlisted (or TT) items. These cases require evaluators to determine whether the item is genuinely not listed or the result of an incorrectly selected or mis-entered code. This inherently increases the cognitive burden of verification.

Vietnam’s reliance on detailed normative datasets, combined with manual spreadsheet-based workflows, makes cost verification both labor-intensive and error-prone, particularly when hundreds or thousands of items must be processed. The accuracy of direct costs significantly affects downstream components—indirect costs, pre-taxable income, and value-added tax—reinforcing the importance of reliable code checking early in the appraisal process.

2.2. Nature of Direct Cost Verification Workflows

Construction estimate verification primarily focuses on direct costs, as other major cost components are calculated as percentages of the direct costs, with the exception of a few minor special costs [

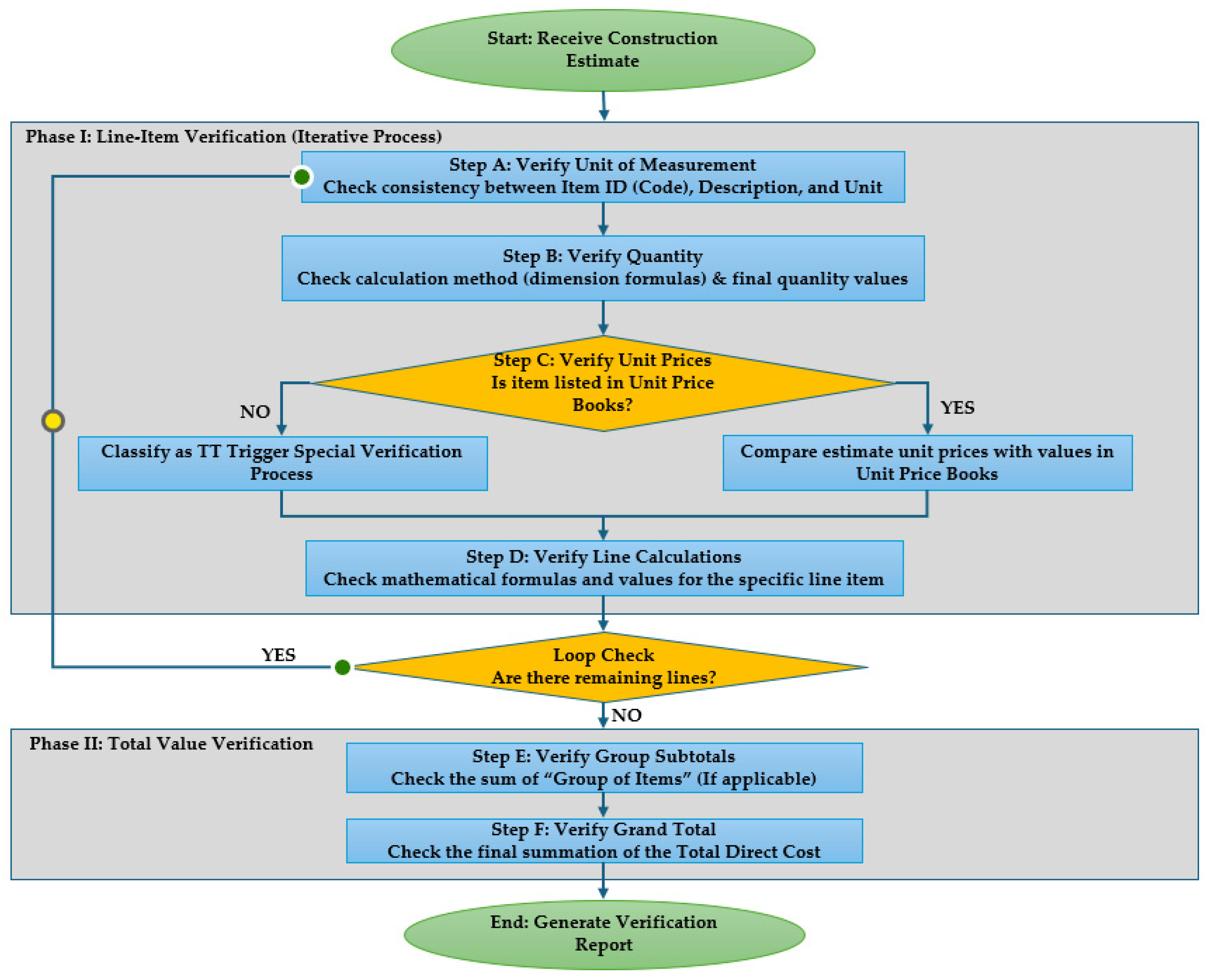

11]. The verification of direct costs is conducted in two main phases: line-item verification and total value verification. In Vietnam, construction estimates, whether generated by specialized software or otherwise, are consistently presented in Microsoft Excel spreadsheet format. Line-item verification begins with validating the unit of measurement against the item code and description, followed by checking calculation methods and construction quantities. Subsequently, the unit price for each item code is cross-referenced with the corresponding value in the official unit price books. Items not listed in these books are classified as TT (i.e., either unlisted or provisional items) and undergo a separate verification procedure. Mathematical calculations for individual lines and work groups are verified for both formula accuracy and numerical values until all line items are processed. Finally, the verification extends to the total values, including subtotals for item groups (if applicable) and the grand total of the direct cost estimate. The resulting verification report must detail any discrepancies regarding item codes, descriptions, units of measurement, unit prices, line or group calculations, and total values (

Figure 1).
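The two phases described above can be illustrated with a minimal Python sketch. It is not the procedure used in the study: the field names (`quantity`, `unit_price`, `amount`), the item codes, and all figures are hypothetical, and the sketch assumes that a line amount is simply quantity multiplied by unit price.

```python
from decimal import Decimal


def check_line_amounts(lines):
    """Return (code, stated, expected) for each line whose amount != quantity * unit price."""
    issues = []
    for line in lines:
        expected = line["quantity"] * line["unit_price"]
        if line["amount"] != expected:
            issues.append((line["code"], line["amount"], expected))
    return issues


def check_grand_total(lines, stated_total):
    """Recompute the total from the stated line amounts and compare it exactly."""
    computed = sum((line["amount"] for line in lines), Decimal("0"))
    return computed == stated_total, computed


# Hypothetical estimate rows; codes and figures are invented for illustration.
estimate = [
    {"code": "AB.11111", "quantity": Decimal("10"),
     "unit_price": Decimal("1500"), "amount": Decimal("15000")},
    {"code": "AF.22222", "quantity": Decimal("2.5"),
     "unit_price": Decimal("4000"), "amount": Decimal("10500")},
]

line_issues = check_line_amounts(estimate)
total_ok, computed_total = check_grand_total(estimate, Decimal("25500"))
```

In this example the grand total agrees with the stated line amounts even though the second line is arithmetically wrong, which suggests why line-item and total value verification are treated as separate phases.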

In the context of construction estimate verification, validating work item codes and the associated parameters derived from UPBs—including work descriptions, UoM, and unit prices—constitutes a critical task. Beyond the standard items explicitly listed in the UPBs, analyzing codes that exhibit characteristics similar to non-standard items (hereafter referred to as “TT” items) is also valuable, as it enables the extraction of cost data that can serve as a reference benchmark for verifiers. The workflow for verifying work item codes in construction estimates generally comprises the following components:

(1) Code verification: Confirming whether the work item code exists in the provincial UPB.

(2) UoM verification: Ensuring that the project-applied unit of measurement (UoM) aligns with the normative UoM of the corresponding UPB item.

(3) Normative price comparison: Checking whether the material, labor, and machinery unit price components match the official normative values for the verified codes.

(4) Handling of unmatched codes: When a code cannot be found in the UPBs, the assistant must check whether the item corresponds to an existing normative item that has been mis-typed or incorrectly coded. This requires cross-checking the estimate’s UoM and full price vector (MTR-LBR-MCR) against the entire UPB to identify exact matches.

If an exact match is found, the system classifies the item as a typographical or mis-assigned code and suggests the correct code to replace the erroneous one.

If no exact match is found, the item is classified as a TT item and routed to the TT verification pathway, where compliance is determined through human evaluation of supporting price quotations, which are reported by the system but not automatically validated.
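Components (1) to (3) of the workflow above can be sketched as follows. This is an illustrative sketch under stated assumptions rather than the assistant's actual code: the UPB is assumed to be a dictionary keyed by work item code, the field names `uom`, `mtr`, `lbr`, and `mcr` are hypothetical, and the sample code and prices are invented. Component (4) is only signalled here by `code_found` being false.

```python
from decimal import Decimal

COMPONENTS = ("mtr", "lbr", "mcr")  # material, labor, machinery


def run_checks(item, upb):
    """Apply code, UoM and component-wise price checks in the prescribed order."""
    norm = upb.get(item["code"])
    if norm is None:
        # Without a valid code, UoM and price checks are not meaningful.
        return {"code_found": False, "uom_ok": None, "price_ok": None}
    return {
        "code_found": True,
        "uom_ok": item["uom"] == norm["uom"],
        "price_ok": {c: item[c] == norm[c] for c in COMPONENTS},
    }


# Hypothetical UPB entry and project items.
upb = {
    "AB.11111": {"uom": "m3", "mtr": Decimal("0"),
                 "lbr": Decimal("52000"), "mcr": Decimal("8000")},
}
edited_item = {"code": "AB.11111", "uom": "m3", "mtr": Decimal("0"),
               "lbr": Decimal("55000"), "mcr": Decimal("8000")}
unknown_item = {"code": "AB.11112", "uom": "m3", "mtr": Decimal("0"),
                "lbr": Decimal("52000"), "mcr": Decimal("8000")}

edited_result = run_checks(edited_item, upb)
unknown_result = run_checks(unknown_item, upb)
```

Returning one flag per price component, rather than a single pass or fail value, keeps the evidence needed for a line-by-line report.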

As noted in prior studies, the fragmented, spreadsheet-driven nature of construction documentation tends to cause inconsistencies and error propagation [14, 15, 16].

Since the entire verification process relies on the accuracy of these item codes, the fragmented nature of spreadsheet data poses unique challenges, giving rise to specific operational difficulties that are examined in the following section on code-centered verification.

2.3. Key Challenges in Code-Centered Verification

International literature highlights several challenges inherent in construction cost checking, including semantic ambiguity, manual data handling, and the complexity of aligning project-specific descriptions with standardized cost norms [6, 7, 17, 18]. In Vietnam, these issues are magnified due to the structure of UPBs and the heavy use of Excel-based workflows. The following challenges are particularly consequential:

- Mis-typed or mis-entered codes: These represent the most frequent and disruptive errors. Even minor deviations, e.g., extra spaces, missing digits, outdated codes, or carry-over errors from old spreadsheets, prevent linkage with normative datasets. Given that a single mis-typed code invalidates UoM and price checking, this issue accounts for a large proportion of verification inconsistencies [17, 19].

- Partial modifications to price components: Designers may update material, labor, or machinery prices to reflect new assumptions but forget to update the work item code. Since each normative item has a characteristic price structure, such partial edits often produce mismatches undetectable by rule-based software tools.

- Semantic ambiguity in descriptions: Project-specific descriptions often diverge from the standardized nomenclature used in UPBs. This creates semantic drift that forces evaluators to rely on experience rather than deterministic matching, especially when codes are missing or inconsistent [14, 20].

- Limitations of existing software tools: Popular Vietnamese estimating tools (e.g., G8, GXD, F1, ETA, etc.) primarily perform formula checking or code existence lookup [21, 22, 23]. They do not recover intended codes, interpret price-structure anomalies, or identify nearest matches, forcing evaluators to diagnose errors manually.

- Time pressure and workload: Verification is often conducted under compressed timelines linked to appraisal cycles or procurement deadlines, increasing the likelihood of oversight [24, 25].

These challenges highlight the need for intelligent tools that combine deterministic rule checking with diagnostic reasoning when inconsistencies arise.

2.4. Why Determining the Intended Code Matters

The correctness of the work item code is foundational to the entire verification process because it determines the normative UoM and MTR-LBR-MCR price components. Errors at this stage would invalidate subsequent checks. Four commonly encountered error categories include:

(1) typographical deviations;

(2) outdated or incorrect codes carried over from previous designs;

(3) partial edits to unit price components without updating the code; and

(4) intentional code manipulation for strategic reasons.

A key practical observation is that designers seldom modify all three price components simultaneously. Instead, adjustments are usually applied to one or two components, so the underlying price vector retains a characteristic profile associated with the intended normative item. This makes the MTR-LBR-MCR structure a valuable diagnostic signal for code recovery, often more reliable than semantic similarity when descriptions are ambiguous or incomplete.

This insight aligns with recent research on numerical feature-based retrieval and vector similarity in construction information extraction [17, 18, 26]. However, in the context of formal verification, such numerical profiles can only be operationalized through strict deterministic comparison rather than approximate or similarity-based inference. Accordingly, automated recovery of intended codes must rely on exact equality between the project item’s UoM and full MTR-LBR-MCR price vector and the normative UPB entries. When this full vector matches a normative item perfectly, the discrepancy can be attributed to a mis-typed or mis-assigned code; when no such match exists, the item must be treated as unlisted (or TT) and handled through the appropriate procedural pathway.

Thus, while the underlying price vector may retain a distinctive numerical signature, the system developed in this study employs only exact-match comparison to maintain auditability and avoid unsupported inference. Deterministic equality—not similarity—is the sole basis for automated intended-code recovery.
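A minimal sketch of exact-match recovery, assuming the UPB is held as a dictionary keyed by code, is given below. The field names and sample entries are hypothetical. The treatment of several normative items sharing one signature is also an assumption of this sketch: all matching codes are returned for human review, since the text does not specify that case.

```python
from collections import defaultdict
from decimal import Decimal


def build_signature_index(upb):
    """Map each (UoM, MTR, LBR, MCR) signature to the codes that carry it."""
    index = defaultdict(list)
    for code, norm in upb.items():
        index[(norm["uom"], *norm["prices"])].append(code)
    return index


def recover_codes(item, index):
    """Return codes whose full signature equals the item's; an empty list means TT."""
    return sorted(index.get((item["uom"], *item["prices"]), []))


# Hypothetical UPB entries; prices are (MTR, LBR, MCR) tuples.
upb = {
    "AB.11111": {"uom": "m3", "prices": (Decimal("0"), Decimal("52000"), Decimal("8000"))},
    "AF.22222": {"uom": "m3", "prices": (Decimal("910000"), Decimal("76000"), Decimal("31000"))},
}
index = build_signature_index(upb)

# Mis-typed code, untouched prices: the signature still identifies the item.
mistyped = {"code": "AB.1111", "uom": "m3",
            "prices": (Decimal("0"), Decimal("52000"), Decimal("8000"))}
# One component edited: no exact match, so the item is treated as TT.
edited = {"code": "AB.1111", "uom": "m3",
          "prices": (Decimal("0"), Decimal("55000"), Decimal("8000"))}

mistyped_matches = recover_codes(mistyped, index)
edited_matches = recover_codes(edited, index)
```

The second item shows the conservative side of the rule: a partially edited vector may still resemble a normative item, yet under exact equality it yields no suggestion.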

Given the variability of project conditions, evolving construction methods, and non-standard spreadsheet practices, deterministic rule-based tools remain essential for the foundational stages of verification. Exact-match diagnostics complement these rules by enabling transparent, reproducible identification of typographical or mis-assigned codes without introducing probabilistic behavior or semantic interpretation.

3. Literature Review

3.1. Cost Verification and Norm Alignment: The Central Role of Code Correctness

Cost verification functions as a critical quality-assurance process in construction projects, especially in public-sector environments where transparency and accountability are required [27]. Prior research consistently shows that verification workflows are highly vulnerable to inaccuracies arising from human interpretation and fragmented documentation [28]. A recurring issue concerns misclassification, in which project-specific descriptions fail to align with standardized cost norms [17], leading to inconsistent item mapping and distorted budgeting [18].

However, as demonstrated in Section 2, the most consequential verification failures in Vietnam arise not from semantic discrepancies but from incorrect work item codes, particularly mis-typed, mis-entered, or outdated ones. Because the code determines the normative units of measurement (UoMs) and the material-labor-machinery (MTR-LBR-MCR) structure, even minor deviations could invalidate large portions of the estimate. Such errors, though common in practice, have received limited attention in prior verification research.

A second recurring issue arises when unit price components are partially modified without updating the corresponding code, resulting in price profiles that no longer align with normative items. Although existing studies acknowledge that inconsistent unit prices undermine estimate reliability [6, 29], they rarely examine the underlying behavioral pattern driving these inconsistencies: practitioners often modify only one or two components, leaving the residual price profile largely intact.

This insight is critical for intended-code recovery. The MTR-LBR-MCR price vector often retains a distinctive “signature” of the intended normative item, even when the code or description is incorrect. Existing digital approaches, including BIM-based rule checking, ontology-driven classification, and automated quantity take-off [30, 31, 32], do not address this numerical mechanism. Likewise, existing Vietnamese estimation software focuses primarily on deterministic checks and lacks diagnostic inference capabilities [21, 22, 23].

These studies point to the need for methods that can detect code inconsistencies and determine whether a project item exactly corresponds to a normative entry based on strict numerical equality, rather than similarity-based inference, which provides the conceptual motivation for this research. In this context, strict numerical equality refers to deterministically defined equivalence under explicitly specified rules, rather than unexamined raw-value identity.

3.2. Digital and AI Approaches for Code Matching and Numerical Similarity

Recent research in digital construction has shown significant advances in natural language processing (NLP), machine learning, and structured data extraction for tasks such as classification, compliance checking, and information retrieval [20, 33]. While these methods effectively handle unstructured documents, they do not address the structured numerical reasoning that is central to cost verification.

Research on similarity-based retrieval provides a complementary direction. Embedding methods, clustering, and vector similarity have been used to compare construction attributes or identify related tasks [17, 18]. When adapted to cost verification workflows, these approaches enable comparison of unit-price vectors (i.e., the MTR-LBR-MCR vectors) to identify the most similar normative items. This direction is especially relevant in Vietnam because, as shown in Section 2, practitioners frequently adjust only one or two price components, producing a partially intact price signature that can be leveraged for inferential matching.

Machine-learning studies also highlight the potential of structured numerical features for matching construction tasks [26, 34]. Although these studies do not explicitly target unit-price matching, they demonstrate the feasibility of vector-based retrieval methods in construction informatics. However, in the context of regulated cost verification, such numerical similarity cannot be used for automated decision-making. Instead, all computational methods must rely on strict deterministic comparison rather than approximate or nearest-neighbor matching. Such deterministic comparison may operate on values that have been transformed through explicitly declared, rule-governed operations (e.g., rounding), provided that equivalence is evaluated strictly after transformation and not through tolerance-based approximation.
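The difference between equality after a declared transformation and tolerance-based approximation can be shown with a short sketch. The rounding rule used here (half-up, to a stated number of decimal places, applied to both sides) is an assumption chosen for illustration; the text does not state which rounding convention applies.

```python
from decimal import Decimal, ROUND_HALF_UP


def apply_declared_rounding(value, places=0):
    """Round a decimal string half-up to `places` decimals (assumed rule)."""
    quantum = Decimal(1).scaleb(-places)  # places=2 gives Decimal('0.01')
    return Decimal(value).quantize(quantum, rounding=ROUND_HALF_UP)


def equal_after_rounding(project_value, norm_value, places=0):
    """Exact equality, evaluated only after both values are rounded."""
    return (apply_declared_rounding(project_value, places)
            == apply_declared_rounding(norm_value, places))


# 12345.4 rounds to 12345, so it equals the normative value exactly.
accepted = equal_after_rounding("12345.4", "12345")
# 12345.5 rounds to 12346, so it is rejected ...
rejected = equal_after_rounding("12345.5", "12345")
# ... although a tolerance of 1 would have accepted it.
within_tolerance = abs(Decimal("12345.5") - Decimal("12345")) <= Decimal("1")
```

Values are passed as strings so that no binary floating-point error enters before rounding; the outcome depends only on the declared rule, which keeps the check reproducible.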

Recent advances in transformer-based large language models (LLMs) extend this potential by enabling multi-step reasoning and integration of external datasets [35, 36]. Nevertheless, deterministic verification workflows, such as those required for code checking under Vietnamese norms, prohibit probabilistic inference or similarity-based suggestions. Thus, LLMs can support tasks only when their behavior is constrained to rule execution and exact numerical comparison, rather than semantic or similarity-based interpretation.

While existing literature confirms the feasibility of vector comparison, our system implements these ideas strictly through exact-match numerical equality. This approach ensures auditability by eliminating reliance on similarity-based inference.

Despite these developments, no existing research integrates LLM-driven reasoning with numerical similarity for cost code verification. Current systems remain focused on textual interpretation or deterministic rule-checking, leaving a clear gap in workflows involving mis-typed codes, partially edited price components, or unlisted (or TT) items.

The framework proposed in this study directly addresses this gap by combining LLM-based procedural orchestration with Python-executed deterministic unit-price vector equality checks, supporting code verification and intended-code recovery without approximate reasoning.

3.3. Large Language Models and Their Potential for Structured Verification Tasks

Advances in AI have transformed how construction information is interpreted and used to support engineering decision-making. Reviews show increasing adoption of machine learning, deep learning, and natural language processing (NLP) across construction management tasks [35], with text mining frequently applied to extract structured information from heterogeneous documents [36].

Earlier reviews, including Wang et al. [20], highlight that construction documents typically contain mixed-format and inconsistently structured information, creating opportunities for NLP to automate repetitive interpretation tasks. However, existing NLP and text-mining applications mainly target unstructured textual data (e.g., drawings, specifications, daily reports, and regulatory documents) and do not address the structured numerical reasoning required in cost verification.

Large language models (LLMs) extend the capabilities of NLP by enabling multi-step reasoning, rule interpretation, and procedural task execution. Unlike traditional NLP pipelines, which extract or classify information through predefined features, LLMs can combine instructions, external references, and structured datasets within a single reasoning process. This allows LLMs to perform tasks such as:

- checking whether entries align with external rules or normative datasets;

- identifying inconsistencies in tabular values;

- verifying multi-component numerical structures; and

- generating transparent explanations that reflect human-like verification logic.

LLMs are therefore well-suited for verification tasks requiring contextual reasoning, step-by-step checking, and dataset interpretation.

However, it is important to note that existing LLM applications in the architecture, engineering, construction, and operations (AECO) sector have focused primarily on document understanding, knowledge extraction, and high-level reasoning. No current studies apply LLMs to structured numerical comparisons, such as checking cost codes, verifying unit-of-measurement validity, or evaluating the consistency of unit price components (materials, labor, machinery). Similarly, existing AI research does not explore the potential of LLMs to support intended code recovery, a task requiring both detection of mis-entered data and inference of the most plausible normative item based on numerical similarity.

In compliance-critical workflows such as cost verification, however, these higher-level reasoning capabilities cannot be used for automated decision-making unless they are tightly constrained. LLMs must be restricted to deterministic rule execution, with all numerical comparisons performed through exact, Python-executed equality checks rather than probabilistic or similarity-based reasoning.

This gap positions LLMs as a novel enabler for structured verification workflows. By integrating procedural rules with reference datasets and numerical checking methods, LLMs can assist verifiers in diagnosing errors, validating price consistency, and retrieving the nearest normative items when mismatches occur. In the system developed in this study, such assistance is limited to orchestrating the verification sequence, while all computations that determine verification outcomes are grounded in deterministic Python logic to ensure full auditability and eliminate unsupported inference. This conceptual foundation motivates the design of the GPT-based assistant developed in this study and informs the Action Research methodology described in Section 4.

3.4. Research Gap

Despite significant advances in AI for construction engineering and management, several critical gaps remain. Existing digital methods focus primarily on text-based interpretation, offering limited support for the numerical reasoning required in cost verification [20, 36]. Prior research does not examine the detection of mis-typed or mis-assigned codes, even though these constitute one of the most frequent and consequential errors in practice.

Despite its strong practical relevance, no AI study leverages unit-price-vector similarity to infer intended codes or to benchmark TT (i.e., unlisted) items, even though practitioners typically modify only part of a price structure and thereby leave a recognizable numerical profile. In compliance-critical settings, however, such vector-based analysis must be operationalized through strict deterministic equality rather than similarity-based inference. Moreover, LLMs have not yet been applied to structured verification tasks that require deterministic reasoning, dataset grounding, and transparent justification.

This study addresses these gaps by developing a GPT-based verification assistant that is capable of deterministic code checking, units of measurement (UoM) and unit-price validation, intended-code recovery, and TT (or unlisted items) benchmarking, operationalized through an Action Research methodology aligned with Vietnam’s regulatory environment.

4. Methodology: Action Research Design

4.1. Rationale for Choosing Action Research

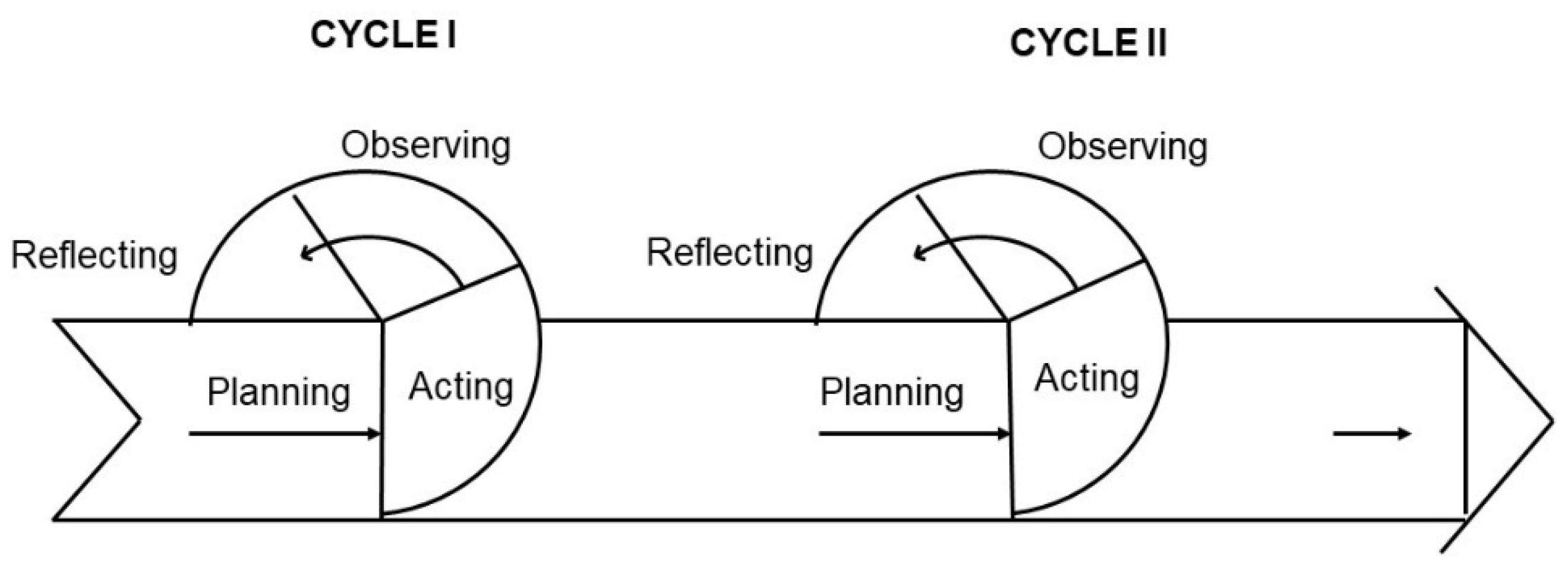

Action Research is a cyclical and collaborative methodology involving planning, acting, observing, and reflecting (Figure 2), through which researchers and practitioners iteratively refine processes and systems [37]. Some authors [38, 39] extend this cycle by introducing diagnosing as an initial step aimed at identifying or defining the problem. Action Research is ideal for socio-technical contexts requiring domain expertise, evolving rules, and iterative calibration. Because Action Research prioritizes continuous learning and real-world experimentation, it provides a robust methodological foundation for developing a GPT-based assistant that must be incrementally aligned with professional verification practices.

In construction cost verification, project conditions, spreadsheet structures, normative datasets, and practitioner interpretations vary widely across contexts. These factors make it unrealistic to design a fully deterministic AI system from the outset. Instead, the verification logic, prompt structures, and grounding mechanisms must be progressively refined based on inconsistencies observed in real projects and feedback from evaluators. Action Research supports this evolution by enabling systematic adjustments after each development cycle, ensuring that the assistant remains practical, auditable, and aligned with regulatory workflows.

Accordingly, this study adopts the Action Research framework to structure the development of the verification assistant. Each cycle contributes to identifying system limitations, refining reasoning patterns, and enhancing dataset-grounded behavior. The resulting framework adapts to practitioner insights, mirroring the actual logic of cost checking.

Most importantly, such refinements occur through adjustments to deterministic rule specifications, system prompts, and Python-based procedures rather than through any form of model learning or probabilistic adaptation in this study.

4.2. Planning Phase

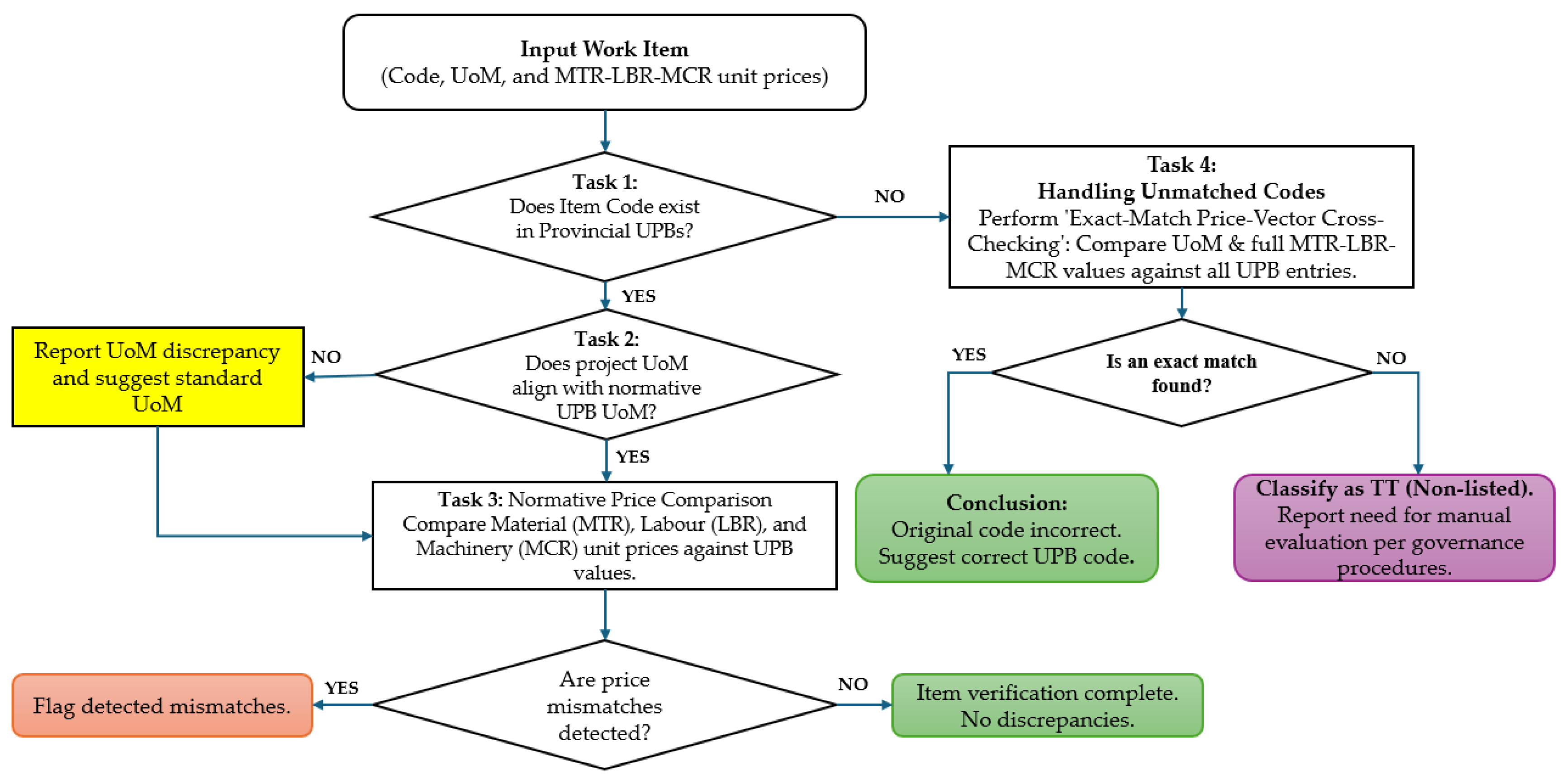

The planning phase focused on translating established verification rules into a structured framework that could be operationalized within a GPT-based assistant. As cost verification in Vietnam follows a strict rule sequence, beginning with code verification, followed by UoM checking, normative price comparison, and finally the treatment of unmatched codes (see Section 2 and Figure 3), the first step is to formalize these rules as deterministic system instructions.

Figure 3 summarizes the deterministic verification sequence as initially specified during the planning phase, which serves as a stable reference framework for iterative refinement across subsequent Action Research cycles. These instructions define the boundaries within which the GPT must operate and articulate the conditions under which each verification step may proceed or terminate.

Within this phase, particular attention was given to the handling of unmatched codes. Since many inconsistencies in practice arise from mis-typed or mis-assigned codes, the assistant must determine whether a given item corresponds to an existing normative entry, despite the absence of a matching code. This required specifying an exact-match protocol in which the project item’s UoM and its full MTR-LBR-MCR unit-price vector are compared deterministically against every item in the UPB. A perfect match is interpreted as evidence of a typographical or mis-assigned code, allowing the correct normative code to be reported. If no such match exists, the item is identified as TT (i.e., an unlisted item) and routed accordingly. The assistant does not attempt to validate price quotations associated with TT items, as quotation evaluation is considered a professional responsibility outside the scope of the automated workflow.

The design and planning phase served a dual purpose within the Action Research framework: it not only formalized the full sequence of verification rules but also established the foundational system architecture for the first development cycle, to provide a structured basis for iterative refinement in subsequent cycles. Given the stringent auditability and numerical precision required for construction cost-code verification, the study evaluated three feasible GPT-based system architectures: (i) fine-tuned GPT models, (ii) Retrieval-Augmented Generation (RAG), and (iii) GPT integrated with the Code Interpreter (Python) and a structured unit-price database. The third approach was selected as the only viable option in this research. In contrast to semantic-search-driven RAG, which is prone to hallucinations and unsuitable for precise numerical comparisons, the Python-enabled architecture facilitates deterministic rule execution, exact equality matching, and reproducible logic—capabilities that are essential for cost-estimation verification.

Following the architectural decision, a customized GPT model—referred to as Cost Code Verifier—was constructed using ChatGPT Plus Model 5.1. The UPBs were uploaded into the model’s knowledge environment, forming the retrieval-ready dataset to be accessed through Python-based queries. The next step was to encode the entire verification workflow into a single, comprehensive system prompt so that the assistant would consistently behave as a deterministic rule-execution engine rather than as an open-ended generative model.

At a high level, the system prompt is organized into seven sections: file and data-model rules, header detection, line-item detection, the four-task verification workflow, final classification, reporting, and safety constraints. It specifies that (i) all files stored in the Knowledge environment are treated as UPBs and must be loaded into a unified master table; (ii) all user-uploaded files are treated as single-sheet estimates from which the assistant must detect the header row, identify valid line items, and extract the code, description, UoM, quantity (if available), and the three unit price components (mtr_unit_price, lbr_unit_price, mcr_unit_price); and (iii) all extraction, normalization, and numerical comparisons must be executed exclusively through Python 3.7, and never through LLM “guessing” or internal numerical reasoning.

The prompt further encodes the four-task deterministic workflow: (1) code existence checking, (2) UoM verification, (3) component-wise comparison of the three price fields against UPB values, and (4) an exception flow for items whose codes do not exist in the UPB. For this exception flow, the prompt instructs the assistant to perform exact equality checks on the full UoM and MTR-LBR-MCR price vector against all UPB entries and to suggest a corrected code only when a perfect match is found; otherwise, the item must be classified as TT (i.e., an unlisted item). Final outcomes are mapped into a finite set of statuses (e.g., exact_match, uom_mismatch, price_mismatch, code_not_found, code_corrected_by_diagnostic, out_of_library/tt, parsing_issue), and the reporting section requires line-by-line explanations detailing the rules applied and the evidence used.
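The ordering of the four tasks can be illustrated with a minimal Python sketch. It is not the authors' system prompt or production code: the `Item` structure, the `verify_item` function, the in-memory `upb` dictionary, and the `DEMO.*` codes are hypothetical names introduced here for illustration, and prices are assumed to be integer VND values. Only the status labels are taken from the list above.

```python
from typing import Dict, NamedTuple, Optional, Tuple

# (mtr_unit_price, lbr_unit_price, mcr_unit_price), assumed to be integer VND
PriceVector = Tuple[int, int, int]


class Item(NamedTuple):
    code: str
    uom: str
    prices: PriceVector


def verify_item(
    item: Item, upb: Dict[str, Tuple[str, PriceVector]]
) -> Tuple[str, Optional[str]]:
    """Return (status, suggested_code) following the four-task order."""
    # Task 1: code existence check
    if item.code in upb:
        norm_uom, norm_prices = upb[item.code]
        # Task 2: UoM verification
        if item.uom != norm_uom:
            return ("uom_mismatch", None)
        # Task 3: component-wise price comparison (tuple equality)
        if item.prices != norm_prices:
            return ("price_mismatch", None)
        return ("exact_match", None)
    # Task 4: exception flow, reached only when the code is not in the UPB.
    # Assumption: the first full match is reported; the source does not
    # state how several identical UPB entries would be handled.
    for code, (uom, prices) in upb.items():
        if uom == item.uom and prices == item.prices:
            return ("code_corrected_by_diagnostic", code)
    return ("out_of_library/tt", None)


# Hypothetical two-entry UPB used only for this example
upb = {
    "DEMO.001": ("m3", (500000, 120000, 30000)),
    "DEMO.002": ("m2", (80000, 45000, 0)),
}
mistyped = Item("DEMO.01", "m3", (500000, 120000, 30000))
print(verify_item(mistyped, upb))
```

In this sketch a valid code never reaches the exception flow, which mirrors the separation between the deterministic pathway and the exact-match diagnostic described in the text.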

Crucially, the system prompt establishes strict guardrails to prohibit non-auditable behaviors, including the generation of hypothetical codes, inference of normative values, or similarity-based matching. Through this structured design process, the logical model of the verification assistant was fully specified before implementation, ensuring that all subsequent Action Research cycles remained anchored in transparent, deterministic, and regulation-aligned decision rules.

Subsequent execution revealed that Python could not access UPB files stored in the Knowledge environment; this implementation constraint and the resulting workflow adjustments are discussed in Cycle 1 (Section 4.3).

4.3. Acting Phase

During the acting phase, the planned verification framework was implemented as a functional GPT-based assistant and iteratively refined through cycles of prompt engineering, dataset integration, and behavioral calibration. The assistant was designed to parse heterogeneous spreadsheet structures by using pattern-guided extraction instructions that enable it to recognize work item codes, UoMs, and MTR-LBR-MCR fields even when presented in varying layouts. These extraction mechanisms normalize dissimilar header formats and irregular cell structures, ensuring that verification proceeds from consistent and reliable inputs.

The deterministic rule sequence formalized in the planning phase was then embedded into the assistant’s operational workflow. Rather than relying on LLM-style procedural reasoning or interpretation, the assistant executed each verification step through Python-controlled logic, ensuring that all operations followed the deterministic sequence exactly as specified. The model executed the verification steps in strict order and was prevented from bypassing or reordering them. All numerical comparisons, rule evaluation, and exception handling were performed by Python, not by the LLM’s internal reasoning processes.

When encountering an unlisted code, the system activated the exact-match protocol, in which the extracted UoM and price vector were compared against all UPB entries. This comparison was carried out entirely through deterministic equality matching in Python, and only a complete match led to the identification of the correct normative code; otherwise, the item was conservatively classified as TT. In addition, the assistant clearly distinguished between verified items, UoM inconsistencies, normative price deviations, typographical errors, and TT outcomes, presenting each result in an auditable and practitioner-oriented format. Its role in this phase was limited to orchestrating the workflow and reporting outcomes, without performing any form of probabilistic inference or LLM-driven diagnostic reasoning.

These mechanisms ensured that the GPT’s behavior remained transparent and compliant with the deterministic logic required for public-sector cost verification.

During the first acting cycle, execution revealed a critical platform constraint: Python could not access files stored in the GPT’s Knowledge environment. Since deterministic verification depended on Python loading and comparing UPB datasets, this limitation prevented the prototype from constructing the normative tables required for rule execution. Instead, the assistant returned runtime errors requesting manual UPB uploads. This observation required a redesign of the data-loading workflow, prompting Cycle 2 to remove dependence on Knowledge-stored UPBs and to incorporate explicit instructions for runtime dataset uploads.

4.4. Observing Phase

The observing phase assessed how the GPT-based assistant performed when executing verification tasks across both real project estimates and the controlled error-injection cases. Across all trials, the assistant consistently applied the deterministic rule sequence, reliably identifying valid codes, UoM mismatches, and deviations between project and normative MTR-LBR-MCR values. Because every decision was grounded in the UPB datasets and executed through Python-based equality checks, the assistant did not hallucinate codes, interpolate missing values, or introduce interpretive judgments beyond the prescribed workflow.

A critical component examined during this phase was the system’s behavior when encountering invalid or non-existent codes. In these situations, the deterministic pathway triggered the exact-match diagnostic, in which the project item’s UoM and full price vector were compared—using strict Python equality—against every entry in the UPB. When an exact match existed, the assistant correctly identified the intended normative code, reproducing the manual recovery step typically carried out by human verifiers. When no match existed, the item was conclusively classified as TT, and no suggestion, ranking, or approximate resemblance was generated. This behavior aligned directly with regulatory expectations prohibiting probabilistic or similarity-driven matching.

The observing phase also highlighted several categories of mismatches where exact-match diagnostics were intentionally not permitted, including wrong-but-valid codes and altered price components. When a code existed in the UPB but its MTR-LBR-MCR values differed from normative data, the assistant would not attempt any reverse recovery, because the presence of a valid code indicated that the discrepancy originated from edits to the price components rather than from a typographical error. Such items were therefore flagged deterministically as “normative price mismatches” and were not routed to the exact-match layer, which maintained a clear separation between the deterministic and diagnostic pathways.

The evaluation further revealed boundary cases arising from spreadsheet formatting inconsistencies, such as rounding differences or text-format numbers. In all such cases, the assistant correctly refrained from recovery: because numerical identity was not satisfied, no deterministic recovery could be triggered, and the system classified the item solely according to rule-based conditions—either as inconsistent (if the code was valid) or TT (if the code was invalid). This behavior confirmed that the system applies no partial, approximate, or threshold-based logic.

In parallel with technical observation, practitioner feedback was systematically collected during this phase through structured walkthrough sessions. Professional cost verifiers (including two authors) reviewed line-by-line verification outputs generated from real project estimates and discussed the clarity, interpretability, and regulatory acceptability of the system’s classifications. These sessions were complemented by targeted follow-up discussions focusing on auditability, boundary conditions, and alignment with established verification practices. Qualitative observations from these interactions were recorded as part of the Action Research process and used to identify recurring patterns and conceptual tensions rather than to evaluate user satisfaction or system usability.

Practitioner feedback obtained through these structured interactions reinforced these observations. Reviewers emphasized that the deterministic explanations reduced the effort required to identify typographical errors—the only class of errors eligible for exact-match recovery—while also preventing misclassification of items that merely resembled normative entries numerically. They also confirmed that no part of the assistant’s behavior resembled inference, interpretation, or similarity reasoning; all outcomes stemmed exclusively from dataset-grounded deterministic execution.

Overall, the observing phase confirmed that the assistant’s behavior strictly adhered to the decision tree encoded during system design. Exact-match recovery applied only to invalid codes. All other cases—including valid codes with altered prices, incorrect UoMs, or TT items—were handled exclusively by deterministic rules.

4.5. Reflecting Phase: Refining Verification Rules and Reasoning Patterns

Reflections across the development cycles informed refinements to both the assistant’s extraction mechanisms and the deterministic rule specifications governing its behavior. Early cycles highlighted the need for more robust structural parsing, leading to improved handling of multi-row headers and non-standard layouts. Subsequent reflections focused on strengthening the enforcement of deterministic rules, including clearer sequencing constraints and tighter grounding instructions to prevent the model from attempting approximate matches. The exact-match procedure for detecting typographical errors was likewise refined to ensure consistency across datasets.

Practitioner feedback played a critical role in this reflective process. Insights from walkthrough sessions and follow-up discussions were used to identify conceptual boundary conditions—most notably, cases in which strict raw-value equality conflicted with documented rounding practices in real estimates. Importantly, such feedback informed refinements to deterministic rule definitions and system prompts, rather than introducing any form of probabilistic learning, tolerance-based reasoning, or adaptive model behavior.

Through this reflective process, the GPT assistant increasingly mirrored the step-by-step logic used by human verifiers, producing outputs that were more interpretable, auditable, and compliant with regulatory expectations without relying on any form of LLM-style inference or internal reasoning. All refinements applied in this phase concerned adjustments to system prompts and Python-based rule execution rather than changes to the model’s reasoning processes.

4.6. Fourth Action Research Cycle: Addressing Rounding-Induced Equality Conflicts

The fourth Action Research cycle was initiated after the core deterministic verification logic had been stabilized through the first three cycles and deployed across real construction estimates. Unlike earlier cycles, which primarily addressed structural parsing, rule sequencing, and exact-match diagnostics for typographical errors, this cycle was triggered by a conceptual boundary condition observed during practical use rather than by implementation failure.

4.6.1. Observing: Emergence of Rounding-Induced Equality Conflicts

During verification of real project estimates, several cases were observed in which unit price components differed marginally from the corresponding UPB values despite reflecting no substantive modification of normative prices. These discrepancies were attributable to systematic rounding practices applied during estimate preparation, such as rounding unit prices to the nearest thousand, hundred, or ten Vietnamese Dong. Importantly, these deviations were consistent across items and aligned with documented estimation conventions, rather than arising from arbitrary edits or calculation errors.

Under the existing deterministic design, which relied exclusively on absolute numerical equality, such cases were conservatively classified as normative price mismatches or TT items, depending on code validity. While this behavior was technically correct within the original rule specification, practitioner feedback indicated that it could generate false negatives in situations where rounding practices were transparent, intentional, and professionally accepted. This observation highlighted a tension between strict numerical equality and faithful representation of real-world estimation workflows.

4.6.2. Reflecting: Reframing Equality Without Introducing Approximation

Reflection on these observations led to a reassessment of how numerical equality should be operationalized in a compliance-critical context. Crucially, the issue was not one of tolerating numerical deviation or introducing approximate reasoning, both of which would undermine auditability. Rather, it concerned whether deterministic equality should be evaluated on raw numerical values or on values that had been transformed in a transparent and rule-governed manner consistent with professional practice.

Accordingly, the verification framework was refined to incorporate a user-selectable comparison mode, explicitly distinguishing between two deterministic verification pathways. In the absolute comparison mode, the assistant preserves the original behavior, requiring exact numerical equality between project and UPB unit price components. In the relative comparison mode, both project values and UPB values are deterministically transformed using a predefined rounding operation before equality evaluation. The rounding precision (thousands, hundreds, or tens of VND) must be explicitly declared by the user prior to execution. The verifier thus supports both strict absolute matching and controlled rounding-based matching at discrete monetary rounding levels, reflecting practical estimation conventions while preserving auditability.

This refinement did not introduce tolerance thresholds, epsilon-based comparisons, similarity metrics, or probabilistic inference. Equality was still assessed strictly, but only after both values had undergone an identical, user-declared transformation. Rounding was thus treated as a deterministic preprocessing step rather than as an approximation mechanism, preserving full traceability and auditability of the verification process.
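The distinction between the two modes can be made concrete with a short Python sketch. The names `round_vnd` and `prices_equal` are hypothetical, and the half-up rounding rule is an assumption made here for illustration; the source specifies only that the rounding operation is predefined and applied identically to both sides.

```python
from decimal import Decimal, ROUND_HALF_UP

ALLOWED_STEPS = (10, 100, 1000)  # tens, hundreds, thousands of VND


def round_vnd(value, step):
    """Round a VND amount to a multiple of `step`.

    Assumption: half-up rounding; the paper does not name the rule.
    """
    if step not in ALLOWED_STEPS:
        raise ValueError("step must be 10, 100, or 1000")
    scaled = Decimal(str(value)) / step
    return int(scaled.quantize(Decimal("1"), rounding=ROUND_HALF_UP) * step)


def prices_equal(project, upb, mode="absolute", step=None):
    """Strict equality on raw values, or on identically rounded values."""
    if mode == "absolute":
        return tuple(project) == tuple(upb)
    if mode == "relative":
        if step is None:
            # The precision is never inferred from the data
            raise ValueError("rounding precision must be declared by the user")
        rounded_project = tuple(round_vnd(p, step) for p in project)
        rounded_upb = tuple(round_vnd(u, step) for u in upb)
        return rounded_project == rounded_upb
    raise ValueError("mode must be 'absolute' or 'relative'")


normative = (123456, 45678, 0)
print(prices_equal((123000, 46000, 0), normative))                    # raw values differ
print(prices_equal((123000, 46000, 0), normative, "relative", 1000))  # equal after rounding
```

Both sides pass through the same function with the same declared step, and the final test is still `==`, so no tolerance or epsilon appears anywhere in the comparison.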

4.6.3. Acting: Integrating Controlled Comparison Modes into Deterministic Execution

Following this conceptual clarification, the deterministic rule set and system prompts were updated to operationalize the two comparison modes within the existing Python-executed verification engine. The assistant was explicitly instructed to apply only the comparison mode selected by the user and to document the selected mode and rounding precision in its structured output. No automatic inference of rounding conventions was permitted; the system did not attempt to detect or guess appropriate rounding behavior from the data.

All numerical comparisons continue to be executed exclusively through Python, ensuring reproducibility and eliminating any reliance on internal LLM reasoning. By constraining the assistant to user-declared, deterministic transformations, this cycle extends the verification framework while maintaining the conservative philosophy underpinning the earlier cycles.

To ensure methodological transparency, the implementation of rounding-aware exact-match recovery was formalized explicitly at the system-instruction level. The assistant supported two deterministic price-comparison modes: strict absolute equality and a user-declared rounding-based mode, in which both project and UPB unit price components were transformed using an identical, predefined rounding operation prior to equality evaluation. The rounding precision (thousands, hundreds, or tens of VND) had to be explicitly specified by the user; no tolerance thresholds, similarity metrics, or implicit inference were permitted. The effect of this mechanism was verified using controlled error-injection cases, confirming that exact-match recovery was triggered exclusively for invalid codes with fully matching price vectors and that false recovery was prevented in all other scenarios.

The fourth Action Research cycle therefore represented a conceptual refinement rather than a technical correction. It demonstrated how deterministic verification logic could be incrementally aligned with professional estimation practices without compromising regulatory integrity, reinforcing the role of Action Research in mediating between formal audit requirements and empirical realities observed during system deployment.

4.7. Summary of Action Research Cycles

Across the four Action Research cycles, the system evolved from a rule specification to a fully implemented GPT-based verification assistant capable of executing deterministic checks and identifying typographical errors through exact-match price-vector comparison. The first cycle established a robust data-extraction layer and ensured that spreadsheet variability did not impede downstream reasoning. The second cycle refined the assistant’s internal logic, embedding deterministic sequencing and exact-match rules directly into its operational instructions. The third cycle focused on improving TT classification and consolidating conservative behaviors that prevent unsupported inference. The fourth cycle addressed a conceptual boundary condition observed during real-world deployment, refining how deterministic equality is operationalized in the presence of systematic rounding practices without tolerance-based or similarity-driven reasoning.

Specifically, the verifier now supports both strict absolute matching and controlled rounding-based matching defined over discrete monetary precision levels, while preserving strict determinism and auditability.

The integrated outcome of these cycles is a verification assistant whose operation is transparent, grounded in authoritative datasets, and aligned with the regulatory logic of Vietnam’s cost verification practice. Across all four cycles, improvements were achieved through adjustments to system prompts, deterministic rule definitions, and Python-based procedures, rather than through any form of model learning or LLM-style inference.

By the end of the fourth cycle, all verification outcomes were generated exclusively through deterministic rule execution, ensuring full auditability and eliminating reliance on internal LLM reasoning.

5. System Implementation

The system was implemented to operationalize the verification logic defined during the planning stage of the Action Research cycles. Following iterative refinement across four Action Research cycles, this implementation reflects the final deterministic verification logic resulting from both structural stabilization and conceptual rule refinement. Whereas the planning phase established the rule structure and behavioral constraints of the assistant, the implementation phase focused on translating these design specifications into a functioning GPT-based verification tool. The resulting system integrates deterministic rule execution, exact-match diagnostics, and structured reporting, enabling the assistant to replicate the reasoning sequence used by professional verifiers while remaining fully grounded in the provincial UPBs.

The architecture comprises three coordinated components: a data extraction and normalization layer, a deterministic verification engine, and an exact-match module for handling codes that do not exist in the UPB. These components are embedded within a controlled GPT configuration that restricts the model’s behavior to auditable, rule-based operations. By integrating GPT’s natural language capabilities with strict procedural constraints, the system delivers explainable verification outputs without relying on probabilistic inference, semantic approximation, or any attempt to “guess” intended codes when a valid UPB code is already present.

5.1. Data Extraction and Normalization

Cost estimates from real projects often exhibit substantial structural variability, including displaced headers, merged cells, heterogeneous naming conventions, and intermittent non-data rows. Before verification can occur, the system must interpret these structures and extract relevant fields reliably. In this implementation, all extraction, parsing, and header normalization operations are performed in Python, rather than through the LLM’s internal interpretation, to ensure deterministic and reproducible handling of input spreadsheets. This is achieved by guiding the GPT-based assistant through a series of pattern-oriented parsing instructions designed to identify the work item code, description, unit of measurement, and the three unit price components corresponding to material, labor, and machinery.

During extraction, the assistant converts the input into a standardized internal representation. Header inconsistencies are resolved through normalization rules, and irrelevant or narrative rows are filtered out. Python-driven routines enforce strict column detection, datatype parsing, and canonicalization, ensuring that no inference or heuristic reasoning from the LLM influences the extracted values. This ensures that subsequent verification steps rely on standardized data, irrespective of the original spreadsheet layout. Where formatting is ambiguous, the system applies conservative Python-based extraction behavior to preserve auditability and avoid any reliance on LLM-generated assumptions.
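As an illustration of strict, rule-based column detection, the following Python sketch scans the first rows of a sheet for a header row that contains every required field. The alias table, the `detect_header` function, and the 30-row scan limit are assumptions introduced here; the actual labels recognized by the system are not listed in the source.

```python
import re

# Hypothetical alias table: normalized header label -> internal field name
HEADER_ALIASES = {
    "code": {"code", "item code", "work item code"},
    "description": {"description", "work description"},
    "uom": {"unit", "uom"},
    "mtr_unit_price": {"material", "mtr"},
    "lbr_unit_price": {"labor", "labour", "lbr"},
    "mcr_unit_price": {"machinery", "machine", "mcr"},
}
REQUIRED = {"code", "uom", "mtr_unit_price", "lbr_unit_price", "mcr_unit_price"}


def normalize_label(cell):
    """Collapse whitespace and lower-case a header cell; None becomes ''."""
    return re.sub(r"\s+", " ", str(cell or "")).strip().lower()


def detect_header(rows, max_scan=30):
    """Return (row_index, {field: column_index}) or None if no row qualifies."""
    for i, row in enumerate(rows[:max_scan]):
        mapping = {}
        for j, cell in enumerate(row):
            label = normalize_label(cell)
            for field, aliases in HEADER_ALIASES.items():
                # Exact alias membership only; no fuzzy or similarity matching
                if label in aliases and field not in mapping:
                    mapping[field] = j
        if REQUIRED.issubset(mapping):
            return i, mapping
    return None


rows = [
    ["Project estimate", None, None, None, None, None, None],
    ["No.", "Item  Code", "Description", "Unit", "Material", "Labor", "Machinery"],
    [1, "DEMO.001", "Concrete works", "m3", 500000, 120000, 30000],
]
print(detect_header(rows))
```

When no row satisfies the requirement the function returns `None` rather than guessing, which corresponds to the conservative behavior described above for ambiguous formatting.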

5.2. Deterministic Verification Operations

Once the input data have been normalized, the system executes the deterministic verification workflow defined in the planning phase. This workflow replicates established verification procedures used in Vietnam and reflects the regulatory logic underlying the UPB framework. All verification steps (including code lookup, UoM comparison, and evaluation of MTR-LBR-MCR price components) are executed exclusively through Python to ensure deterministic, reproducible outcomes consistent with the system architecture described in Section 4.2. Equality is assessed strictly, either on raw numerical values or on values that have undergone an identical, user-declared deterministic transformation (e.g., rounding), depending on the selected verification mode.

The system first determines whether the work item code exists in the UPB. If the code is valid, the assistant does not attempt any form of reverse lookup, intended code recovery, or approximate matching. Instead, it retrieves the corresponding normative UoM and compares it with the project-applied UoM. Any discrepancy triggers an immediate classification of inconsistency. When both code and UoM are consistent, the system compares the project’s unit price components with the normative values. Items exhibiting deviations are classified strictly as “normative price mismatches”; no diagnostic recovery is triggered when a valid UPB code is already present.

Throughout this process, the GPT assistant is constrained by system-level instructions that prohibit reordering steps, inferring missing information, or generating codes not present in the UPB. The assistant does not perform any numerical reasoning internally; all rule evaluations and numerical comparisons are computed by Python rather than by the LLM. All decisions must be traceable to regulatory data, and all reasoning must occur within the deterministic structure defined in the design phase.

5.3. Exact-Match Detection for Mis-Typed or Mis-Assigned Codes

A substantial proportion of verification inconsistencies in practice arise from typographical errors in work item codes, rather than from semantic mis-assignment. To address these scenarios without resorting to probabilistic similarity methods, the system implements an exact-match module. This module is activated only when the project code does not exist in the UPB. In such cases, the module performs a deterministic equality comparison, implemented in Python, between the project item’s UoM and full price vector and all entries in the UPB.

A perfect match is treated as evidence that the estimator intended to reference the corresponding normative item but entered the code incorrectly. In these cases, the assistant reports the correct normative code and provides an explanation of the mismatch. No inference, no approximate matching, and no semantic interpretation are applied—only exact numerical identity qualifies for recovery. When relative comparison is selected, exact-match recovery is evaluated after both project and UPB price vectors have undergone the same user-declared deterministic transformation, preserving strict equality while accommodating documented rounding practices.

If no exact match is found, the system concludes that the item is genuinely non-listed (TT) and routes it accordingly. The assistant does not assess or interpret price quotations associated with TT items, as quotation appraisal falls outside the scope of automated verification. This strict reliance on Python-based deterministic equality checks ensures that the module remains consistent with regulatory requirements and avoids any behavior resembling LLM-driven interpretation or inference.
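One way to organize the exact-match module is to index the UPB by the pair (UoM, price vector) and look the project item up by exact key equality. The sketch below is an assumption-laden illustration, not the authors' implementation: `build_index`, `recover_code`, `to_thousand`, and the `DEMO.*` entries are hypothetical, prices are assumed to be non-negative integer VND values, and returning every matching code (rather than a single one) is a design choice made here because the source does not describe how duplicate vectors are resolved.

```python
from collections import defaultdict


def identity(value):
    return value


def to_thousand(value):
    """Half-up rounding of a non-negative integer VND amount to thousands
    (the rounding rule itself is an assumption)."""
    return ((value + 500) // 1000) * 1000


def build_index(upb, transform=identity):
    """Map (uom, transformed price vector) -> list of UPB codes."""
    index = defaultdict(list)
    for code, (uom, prices) in upb.items():
        key = (uom, tuple(transform(p) for p in prices))
        index[key].append(code)
    return index


def recover_code(item_uom, item_prices, index, transform=identity):
    """Return all UPB codes whose key equals the item's key; [] means TT."""
    key = (item_uom, tuple(transform(p) for p in item_prices))
    return sorted(index.get(key, []))


# Hypothetical UPB used only for this example
upb = {
    "DEMO.001": ("m3", (500000, 120400, 30000)),
    "DEMO.002": ("m2", (80000, 45000, 0)),
}

absolute_index = build_index(upb)
print(recover_code("m3", (500000, 120000, 30000), absolute_index))

rounded_index = build_index(upb, to_thousand)
print(recover_code("m3", (500000, 120000, 30000), rounded_index, to_thousand))
```

The same transform must be passed to both `build_index` and `recover_code`; this is what keeps the rounding-based mode an equality test on identically transformed values rather than a tolerance check.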

5.4. Non-Listed Item Classification and Structured Verification Output

Once an item is classified as TT, the assistant provides structured outputs explaining the basis for classification and the required procedural steps for compliance. These outputs specify whether the TT status arises from the absence of a corresponding UPB code, the lack of an exact-match price vector, or both. As quotation appraisal falls outside the scope of automated verification, the assistant simply signals the need for supporting evidence rather than attempting to validate it.

Across all verification outcomes—compliant items, UoM mismatches, normative price deviations, typographical corrections, and TT items—the assistant provides line-by-line explanations of the rules applied and the evidence used. Because the system performs no semantic reasoning, no similarity assessment, and no inference beyond UPB data, all outputs remain auditable and fully aligned with regulatory expectations. This structured reporting format supports transparency, facilitates audit review, and allows the system to be incorporated directly into formal verification workflows for state-funded projects.

6. Results

6.1. Dataset and Test Setup

This subsection describes the dataset used to evaluate the GPT-based verification assistant, including the real cost estimates, the preprocessing procedures applied to standardize their heterogeneous structures, and the controlled error cases designed to test the full spectrum of the verification logic.

A total of 20 construction cost estimates were collected from state-funded projects in Vietnam. These spreadsheets, prepared by project design consultants, exhibit substantial structural variation that directly affects automated code extraction, UoM verification, and construction of price vectors for deterministic comparison. Across the dataset, 16,100 work-item rows were analyzed, with individual estimates ranging from 27 to 4677 rows, providing wide coverage across project types and levels of complexity.

Several characteristics of the real dataset were particularly relevant to code-focused verification. A total of 35 header configurations were identified, including multi-row headers, merged cells, displaced header rows, and heterogeneous labels for MTR-LBR-MCR fields. These structural variants influence the assistant’s ability to correctly identify the columns containing work item codes, units of measurement, and price components. In addition, 39 subtotal or non-data rows were detected and removed to avoid false error detection. The dataset also included 222 TT items, which provided a useful basis for evaluating how the price-vector engine behaves under TT-type conditions. These characteristics are summarized in Table 1, which outlines the structural and formatting patterns most relevant to automated code verification and deterministic comparison.

The 20 cost estimates span a diverse set of state-funded project types, reflecting the breadth of construction contexts in which code-level verification is required in practice. These include educational buildings (e.g., schools and associated facilities), healthcare facilities, office buildings and administrative headquarters, warehouses and military-related facilities, industrial plants, residential buildings, and infrastructure and public works. Across these projects, the integrated construction packages cover a wide range of work categories, including structural works, architectural and finishing works, foundation and basement construction, fire protection systems, and specialized utility and auxiliary facilities. This diversity ensures that the dataset captures a broad spectrum of coding practices, unit-of-measurement conventions, and price-vector structures relevant to deterministic verification. Importantly, project type was not treated as an analytical variable, as the verification logic operates at the level of work item codes and price structures rather than project typology.

Further variability occurred in the representation of codes, UoMs, and price fields. Many codes appeared with extra spaces, hyphens, or appended characters, or were embedded in merged cells. UoMs were expressed using multiple variants (e.g., “m2,” “M2,” “m²”), while price components often contained missing values, zeros, or inconsistent numeric formatting. These irregularities required robust preprocessing mechanisms (canonicalization of codes, normalization of UoMs, deterministic numeric parsing, and consistent extraction of material–labor–machinery price fields) to ensure accurate rule-based verification and stable construction of price vectors for subsequent processing.
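A minimal sketch of such preprocessing is given below. The function names and the specific rules are assumptions for illustration only: codes are upper-cased with whitespace removed (hyphens and appended characters are left untouched because the source does not state a policy for them), UoMs are folded with Unicode NFKC normalization so that a superscript two becomes a plain digit, and prices are accepted only when they can be parsed without ambiguity.

```python
import re
import unicodedata
from decimal import Decimal


def canonical_code(raw):
    """Remove all whitespace and upper-case the code (assumed canonical form)."""
    return re.sub(r"\s+", "", str(raw)).upper()


def normalize_uom(raw):
    """Fold compatibility characters (e.g. a superscript 2), trim, lower-case."""
    return unicodedata.normalize("NFKC", str(raw)).strip().lower()


def parse_price(raw):
    """Return a Decimal for unambiguous numeric input, otherwise None.

    Assumption: strings containing separators such as '.' or ',' are not
    interpreted, since their meaning (thousands vs. decimal) is ambiguous;
    such cells would be reported as a parsing issue instead of being guessed.
    """
    if isinstance(raw, bool):
        return None
    if isinstance(raw, int):
        return Decimal(raw)
    if isinstance(raw, float):
        if raw != raw:  # NaN from an empty spreadsheet cell
            return None
        return Decimal(str(raw))
    if isinstance(raw, str):
        text = re.sub(r"\s+", "", raw)
        if re.fullmatch(r"\d+", text):
            return Decimal(text)
    return None


print(canonical_code(" demo. 001 "))
print(normalize_uom(" M\u00b2 "))
print(parse_price("120 000"))
print(parse_price("1.234.567"))
```

Returning `None` for ambiguous input keeps the parser deterministic: a text-format number is either parsed by a fixed rule or surfaced for review, never interpreted heuristically.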

To ensure full coverage of verification scenarios that may appear infrequently in practice, an additional controlled set of 12 injected error cases was constructed. These represent six error types—mis-typed codes, valid codes with altered MTR-LBR-MCR values, incorrect UoMs, invalid codes with no exact match, TT-type synthetic items, and mixed-pattern deviations—each with two representative samples. The injected set was designed to test both the deterministic components of the verification workflow (code existence, UoM correctness, normative price matching) and the strict exact-match mechanism, which activates only when the code does not exist in the UPB.

Table 2 summarizes the structure and purpose of this error-injection dataset.

Each work item, both real and injected, was processed through the full verification pipeline:

(1) data ingestion and extraction of the code, UoM, and MTR-LBR-MCR values;

(2) deterministic checks for code existence, UoM alignment, and normative price consistency;

(3) conditional activation of exact-match diagnostics only when codes are invalid; and

(4) structured reporting of the deterministic classification and, when applicable, the exact-match recovery result.

All similarity-based or approximate benchmarking processes present in earlier prototypes were fully removed to preserve deterministic, auditable behavior.

6.2. Deterministic Verification Performance

The deterministic components of the verification workflow were evaluated across the full combined dataset, including the 20 real construction estimates and the controlled error cases described in Section 6.1. These components operate strictly on rule-based logic, verifying whether (1) the work item code exists in the applicable Unit Price Book, (2) the UoM aligns with the normative specification, and (3) the MTR-LBR-MCR price components match the official values. Together, these checks form the foundational requirements of code-focused verification and determine whether the exact-match module must be activated for invalid codes only.

To clarify how these deterministic checks translate into system outputs, the verification outcomes were grouped into four categories that capture the complete range of results produced by the rule-based pathway. The previous category describing “strong price-pattern deviation” was removed, as the system does not perform threshold-based or heuristic deviation analysis and cannot generate diagnostic signaling unless the code itself is invalid.

Table 3 summarizes these four outcome categories, the conditions under which each category is triggered, and the corresponding system behavior. These categories collectively define the decision boundaries for deterministic verification and determine whether corrective or exception handling pathways (e.g., exact-match recovery or TT classification) should be invoked.

The four deterministic verification outcome categories in Table 3 include:

Valid Code—Full Normative Match,

Valid Code—UoM Mismatch,

Valid Code—Normative Price Mismatch, and

Invalid or Non-existent Code.

The system does not include any outcome category based on inferred or approximate assessments of price deviation; any deviation in price components under a valid code is treated solely as a deterministic mismatch rather than a trigger for diagnostic recovery. Diagnostic behavior is activated only when the code is invalid.

These outcome categories form the complete range of deterministic behaviors that the system can exhibit during verification, ensuring that all items are classified solely according to rule-based checks and not through any form of approximate or nearest-match diagnostics.
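The classification described in this section can be sketched in Python as follows. This is an illustration under stated assumptions rather than the study's code: the names `Outcome`, `UpbEntry`, and `classify` are hypothetical, the inputs are assumed to be already normalized, and the order of checks (UoM before prices) is an assumption that determines which category is reported when both differ.

```python
from dataclasses import dataclass
from decimal import Decimal
from enum import Enum
from typing import Dict, Tuple

PriceVector = Tuple[Decimal, Decimal, Decimal]  # (material, labor, machinery)


class Outcome(Enum):
    FULL_MATCH = "valid code, full normative match"
    UOM_MISMATCH = "valid code, UoM mismatch"
    PRICE_MISMATCH = "valid code, normative price mismatch"
    INVALID_CODE = "invalid or non-existent code"


@dataclass(frozen=True)
class UpbEntry:
    """One normative Unit Price Book record (hypothetical structure)."""
    uom: str
    prices: PriceVector


def classify(code: str, uom: str, prices: PriceVector,
             upb: Dict[str, UpbEntry]) -> Outcome:
    """Assign exactly one outcome using only lookups and equality tests."""
    entry = upb.get(code)
    if entry is None:
        # Only this branch may hand the item on to exact-match diagnostics.
        return Outcome.INVALID_CODE
    if uom != entry.uom:
        return Outcome.UOM_MISMATCH
    if prices != entry.prices:
        # Any difference counts as a mismatch; no threshold is applied.
        return Outcome.PRICE_MISMATCH
    return Outcome.FULL_MATCH
```

Because every branch is a dictionary lookup or an equality comparison, the same input always yields the same category, and a price difference under a valid code can never trigger recovery.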

6.3. Exact-Match Recovery Performance

The revised diagnostic mechanism relies exclusively on exact numerical matching between a project item’s unit-price structure and entries in the provincial UPBs. This mechanism reflects the deterministic procedure used by human verifiers when attempting to recover typographical errors in invalid codes, relying strictly on numerical identity rather than semantic resemblance. Unlike earlier conceptual formulations, the evaluation of this module was conducted solely on the twelve controlled error-injection cases rather than on the full set of twenty real estimates, ensuring that its performance was assessed under clearly defined and reproducible test conditions.

Across these twelve injected cases, the assistant encountered scenarios intentionally constructed to trigger exact-match behavior, including mis-typed codes, invalid codes with no normative correspondence, TT-type items, and mixed-pattern deviations. Only when a code was not found in the UPB did the deterministic engine activate the exact-match comparison, in which the project item’s UoM and complete MTR-LBR-MCR price vector were evaluated for strict equality against all UPB entries. In cases where perfect equality was observed, the assistant recovered the correct normative code and reported the detected typographical error. All recovery outcomes resulted entirely from Python-executed equality checks, with no inference or approximate matching by the LLM.
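A minimal Python sketch of this activation rule and equality lookup is given below. It is an assumption-laden illustration, not the study's implementation: `build_index` and `recover_code` are hypothetical names, the UPB is modeled as a plain dictionary, and the handling of several normative codes sharing one UoM and price vector (all candidates are returned) is an assumption, since the text does not describe that situation.

```python
from collections import defaultdict
from decimal import Decimal
from typing import Dict, List, Tuple

PriceVector = Tuple[Decimal, Decimal, Decimal]  # (material, labor, machinery)
UpbTable = Dict[str, Tuple[str, PriceVector]]   # code -> (uom, prices)
MatchKey = Tuple[str, PriceVector]


def build_index(upb: UpbTable) -> Dict[MatchKey, List[str]]:
    """Index normative codes by their exact (UoM, price vector) pair."""
    index: Dict[MatchKey, List[str]] = defaultdict(list)
    for code, (uom, prices) in upb.items():
        index[(uom, prices)].append(code)
    return dict(index)


def recover_code(code: str, uom: str, prices: PriceVector,
                 upb: UpbTable, index: Dict[MatchKey, List[str]]) -> List[str]:
    """Return normative codes whose UoM and full price vector equal the item's.

    The lookup runs only for codes absent from the UPB. An empty result for an
    invalid code means no recovery is possible.
    """
    if code in upb:
        return []  # valid code: the exact-match comparison is never activated
    return sorted(index.get((uom, prices), []))
```

The dictionary lookup is equivalent to comparing the item against every UPB entry with `==`, because `Decimal` values that are numerically equal also hash equally, so `Decimal("100")` and `Decimal("100.0")` land on the same key.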

The results demonstrated that exact-match recovery performs reliably for the narrow class of errors it is designed to address: typographical deviations in codes whose price vector remains fully normative. All mis-typed code cases in the injected dataset were successfully recovered, and one mixed-pattern case also produced a valid recovery because its price vector remained intact despite the malformed code. In all other injected scenarios, no recovery was performed, as intended. Items with valid codes but altered prices, or valid codes with incorrect UoMs, were deterministically classified as inconsistent without activating the exact-match comparison. Items containing invalid codes whose price vectors did not match any normative entry were routed to the TT pathway. These cases are distinct from TT-type synthetic items, which represent genuinely non-listed construction tasks rather than code-entry errors.

Boundary conditions also emerged in which rounding, formatting anomalies, or number-encoding differences produced marginal deviations in price vectors. In accordance with the system’s strict design principles, the assistant refrained from recovery in all such cases, as exact numerical identity is the sole permissible condition for code correction. This strict determinism is essential for auditability in state-funded verification workflows, where probabilistic, semantic, or similarity-based reasoning is not acceptable.

Practitioner feedback affirmed the interpretability of the exact-match module. Reviewers noted that deterministic explanations improved traceability and reduced the time required to identify typographical errors without encouraging overreach into semantic interpretation. The module therefore functions strictly as an exact-match detector rather than a reasoning engine, ensuring that all corrections arise from rule-based evaluation grounded entirely in normative data.

It is important to note that exact-match recovery is not evaluated using aggregate accuracy metrics or similarity thresholds. Instead, its effect is verified through strict activation control: the mechanism is triggered exclusively when a code is invalid and full equality is satisfied across the UoM and the complete MTR-LBR-MCR price vector, following the explicitly declared comparison mode. This design ensures zero false recovery outside the intended error class and preserves auditability by preventing any tolerance-based or inferential behavior.

The performance of the exact-match recovery mechanism across the twelve injected cases is summarized in Table 4, which reports the recovery outcomes and deterministic classifications for each error category.

6.4. Improvements Across Action Research Cycles

Improvements to the GPT-based verification assistant emerged progressively through the three Action Research cycles, with each cycle revealing different classes of inconsistencies present in real estimates and prompting refinements to both extraction logic and deterministic rule execution. Across these cycles, the assistant evolved from a preliminary prototype with basic rule-following capabilities into a stable, auditable system aligned with the procedural expectations of Vietnamese cost verifiers. No inference-based or similarity-driven behavior was retained; all refinements strengthened deterministic, data-grounded processing.

During the first cycle, substantial variation in spreadsheet structures—particularly displaced headers, merged cells, and non-standard column naming—made clear the need for more robust extraction patterns. The assistant’s initial difficulties in identifying price fields under inconsistent labels prompted enhancements to header detection and normalization rules, resulting in a more stable representation of MTR-LBR-MCR components across heterogeneous files. These changes strengthened deterministic parsing stability and ensured that later verification steps operated on fully normalized, unambiguous inputs.
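One way to make header detection deterministic under displaced headers and variant column names is a fixed alias table scanned over the first rows of a sheet. The Python sketch below illustrates that idea only. The alias lists in `HEADER_ALIASES`, the function names, and the `max_scan` limit are hypothetical and are not taken from the system described here.

```python
import unicodedata
from typing import Dict, Optional, Sequence, Tuple

# Hypothetical alias lists, written without diacritics and in lower case.
HEADER_ALIASES = {
    "code": ("ma hieu", "code"),
    "uom": ("don vi", "don vi tinh", "unit"),
    "material": ("vat lieu", "material"),
    "labor": ("nhan cong", "labor"),
    "machinery": ("may", "may thi cong", "machinery"),
}
LABEL_TO_FIELD = {alias: field
                  for field, aliases in HEADER_ALIASES.items()
                  for alias in aliases}


def normalize_label(cell: object) -> str:
    """Strip diacritics, lower-case, and collapse whitespace in a header cell."""
    text = unicodedata.normalize("NFD", str(cell or ""))
    text = text.replace("đ", "d").replace("Đ", "D")  # no canonical decomposition
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    return " ".join(text.lower().split())


def find_header_row(rows: Sequence[Sequence[object]],
                    max_scan: int = 30) -> Optional[Tuple[int, Dict[str, int]]]:
    """Return (row index, field -> column index) for the first complete header row.

    A row qualifies only if every required field is matched by an exact alias,
    so metadata rows and partial headers are skipped rather than guessed at.
    """
    for row_idx, row in enumerate(rows[:max_scan]):
        mapping: Dict[str, int] = {}
        for col_idx, cell in enumerate(row):
            field = LABEL_TO_FIELD.get(normalize_label(cell))
            if field is not None and field not in mapping:
                mapping[field] = col_idx
        if set(mapping) == set(HEADER_ALIASES):
            return row_idx, mapping
    return None
```

Returning `None` when no row contains all required fields leaves the file for manual review instead of producing a partial, possibly wrong field mapping.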

The second cycle focused on the interaction between deterministic checks and the diagnostic layer. In early iterations, the system occasionally surfaced behavior that resembled interpretive matching, especially when attempting to compare project items with normative data that were numerically similar but not identical. These behaviors were eliminated by reinforcing guardrails that required all diagnostic operations to rely exclusively on Python-based exact equality checks. As a result, exact-match recovery became strictly limited to invalid codes whose price vectors were identical to a normative entry, with no possibility of approximate or interpretive matching.

The third cycle emphasized the treatment of TT items and the clarity of classification boundaries. Earlier behavior in which the assistant suggested speculative alignments for non-matching items was entirely eliminated. TT classification was redefined to occur only when (1) the project code does not exist in the UPB and (2) no exact-match equality is found—ensuring clean separation between typographical error recovery and genuinely non-listed items. Additionally, refinements were made to the system’s structured reporting format, ensuring that all TT decisions reflect the deterministic decision tree and do not resemble any form of inference or recommendation.

Together, the improvements achieved across the Action Research cycles enhanced the system’s reliability, transparency, and alignment with real-world verification workflows. By the end of the third cycle, the assistant’s behavior was fully deterministic at every stage—parsing, verification, diagnostic comparison, and classification—executed through Python-based equality checks and strict rule sequencing with no reliance on LLM reasoning, similarity-based inference, or speculative judgment.

In a subsequent fourth Action Research cycle, this deterministic behavior was preserved while refining how numerical equality is operationalized in the presence of systematic rounding practices. Selected cases previously classified as normative price mismatches were re-evaluated under the relative comparison mode when rounding precision was explicitly declared, restoring deterministic equality without expanding the diagnostic scope or introducing tolerance-based reasoning. No new recovery categories were added, and all verification outcomes continued to be produced exclusively through Python-executed rule evaluation.
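The text does not specify how the comparison mode is computed, so the following Python sketch shows only one possible reading: when a rounding precision is explicitly declared, both values are rounded to that precision with a fixed rule and then compared with strict equality. The function name, the `declared_places` parameter, and the choice of half-up rounding are assumptions made for illustration.

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import Optional, Sequence


def vectors_equal(project: Sequence[Decimal], normative: Sequence[Decimal],
                  declared_places: Optional[int] = None) -> bool:
    """Compare two price vectors under an explicitly declared comparison mode.

    declared_places=None compares raw values. Otherwise both sides are rounded
    to the declared number of decimal places before the equality test. No
    tolerance band is involved in either mode.
    """
    if len(project) != len(normative):
        return False
    if declared_places is None:
        return all(p == n for p, n in zip(project, normative))
    quantum = Decimal(1).scaleb(-declared_places)  # e.g. 2 places -> 0.01
    return all(
        p.quantize(quantum, rounding=ROUND_HALF_UP)
        == n.quantize(quantum, rounding=ROUND_HALF_UP)
        for p, n in zip(project, normative)
    )
```

Under this reading the outcome still depends only on the inputs and the declared precision, so two runs on the same item cannot disagree, and a value outside the declared rounding remains a mismatch.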

6.5. Practitioner-Facing Insights from Real Estimates

Analysis of the twenty collected estimates also yielded several insights into the practical challenges that arise during verification and the implications these challenges hold for the deployment of the GPT-based assistant. A consistent observation was the extent of structural heterogeneity across spreadsheets. Header configurations varied widely not only in layout but also in terminology and ordering, and several files included non-standard header rows, repeated project metadata, or partially merged cells. These inconsistencies confirmed the need for a robust extraction and normalization layer capable of interpreting variant structures while avoiding erroneous field mappings. The assistant’s ability to parse these heterogeneous formats deterministically—without relying on LLM inference—was essential for ensuring that downstream verification remained stable and auditable.

A second insight relates to the prevalence of malformed or non-standard codes, which appeared more frequently than initially anticipated. Many of these arose from simple copy-and-paste errors or ad hoc formatting adjustments, leading to missing digits, additional characters, or truncated prefixes. Because such errors often blended into the surrounding spreadsheet context, they could remain unnoticed during manual verification. The assistant’s exact-match recovery mechanism proved effective in resolving these situations only when the corresponding price vector matched a normative UPB entry exactly. Practitioners reported that this capability reduced the time spent tracing numerical inconsistencies and helped prevent false TT classification caused solely by typographical errors. Importantly, users recognized that these corrections resulted from deterministic equality checks that were executed in Python, not from any interpretive or inferential behavior by the LLM.

At the same time, the evaluation highlighted several cases where discrepancies stemmed not from obvious data-entry errors but from partial or incremental edits to unit price components. These included deliberate or inadvertent adjustments to only one or two elements of the MTR-LBR-MCR structure, leaving the remaining values untouched. In these scenarios, because the code was valid, the assistant did not invoke any recovery logic; instead, such items were deterministically classified as normative price mismatches. Practitioners noted that these cases often required closer human scrutiny, because the assistant, by design, applies no approximate matching, similarity reasoning, or semantic interpretation beyond strict rule checks.

Another recurring insight involved the handling of TT items, which occurred frequently across the dataset, particularly in estimates for specialized or project-specific works. Practitioners emphasized that the assistant’s conservative behavior—classifying TT items only after confirming both the absence of the code in the UPB and the absence of any exact-match correspondence—was essential to maintaining methodological integrity. Reviewers also appreciated that the assistant did not attempt to evaluate the plausibility of supporting quotations or the appropriateness of TT pricing, reflecting the fact that quotation appraisal lies outside the permissible scope of deterministic automated verification. They further noted that the assistant clearly documented the reasoning path leading to a TT classification, thereby simplifying subsequent manual appraisal.