HFE-YOLO: Hybrid Feature Enhancement with Multi-Attention Mechanisms for Construction Site Object Detection

Abstract

1. Introduction

2. Theoretical Background and Related Work

2.1. Object Detection Foundations

2.2. Multi-Task Learning Framework

2.3. Construction Monitoring Approaches

3. Methodology

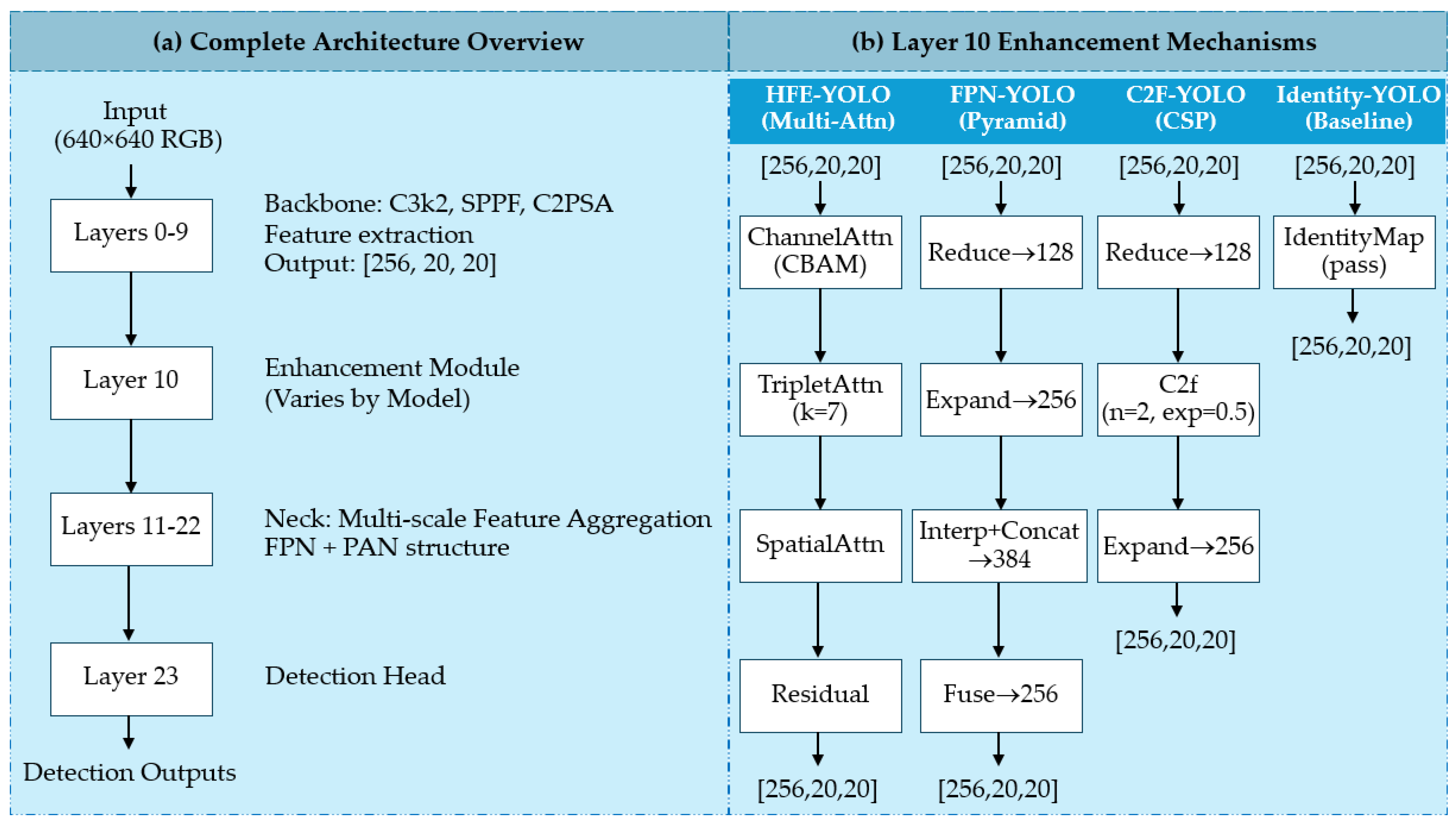

3.1. YOLOv11 Architecture Overview

3.2. Enhancement Mechanisms

3.2.1. HFE-YOLO: Multi-Attention Processing

3.2.2. Identity-YOLO: Minimal Intervention Baseline

3.2.3. FPN-YOLO: Feature Pyramid Enhancement

3.2.4. C2F-YOLO: Cross-Stage Partial Enhancement

3.2.5. Comparative Analysis and Architectural Trade-Offs

3.3. Datasets and Distribution Characteristics

3.4. Experimental Environment

3.5. Performance Metrics

3.5.1. Intersection over Union (IoU)
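
As a minimal sketch of the metric named in this heading, the function below computes IoU for two axis-aligned boxes. The `(x1, y1, x2, y2)` corner format and the function name `iou` are assumptions made for illustration, not details taken from the paper.

```python
def iou(box_a, box_b):
    """Intersection over Union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlap; clamp to zero when boxes are disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    # Union = sum of both areas minus the overlap counted twice.
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
```

Two 2×2 boxes offset by one unit overlap in a 1×1 square, so the ratio is 1/7.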

3.5.2. Precision and Recall
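
The standard definitions behind this heading can be sketched as follows. The counts of true positives, false positives, and false negatives are hypothetical inputs; the paper's own matching rule (for example, the IoU threshold that decides what counts as a true positive) is not reproduced here.

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) from detection counts.

    tp: detections matched to a ground-truth box
    fp: detections with no matching ground-truth box
    fn: ground-truth boxes that no detection matched
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical counts, for illustration only.
p, r = precision_recall(tp=90, fp=10, fn=30)
print(f"precision={p:.3f} recall={r:.3f}")
```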

3.5.3. Average Precision (AP) and Mean Average Precision (mAP)

3.5.4. mAP@50 and mAP@50–95
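
A minimal sketch of how the two summary metrics relate, assuming the common COCO-style convention in which mAP@50–95 is the mean of mAP over IoU thresholds from 0.50 to 0.95 in steps of 0.05. The per-threshold values below are hypothetical, and thresholds are keyed as integer percentages to avoid floating-point key mismatches.

```python
# IoU thresholds 0.50, 0.55, ..., 0.95 expressed as integer percentages.
IOU_THRESHOLDS_PCT = list(range(50, 100, 5))


def map_50_95(map_by_threshold):
    """Average class-mean AP over the ten IoU thresholds."""
    return sum(map_by_threshold[t] for t in IOU_THRESHOLDS_PCT) / len(IOU_THRESHOLDS_PCT)


# Hypothetical per-threshold mAP values that fall as the IoU requirement tightens.
example = {t: 1.0 - (t - 50) / 100 for t in IOU_THRESHOLDS_PCT}
print("mAP@50    =", example[50])
print("mAP@50-95 =", round(map_50_95(example), 3))
```

Because the stricter thresholds are included in the average, mAP@50–95 is never higher than mAP@50 when per-threshold scores decrease monotonically.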

3.5.5. Loss Functions

4. Experimental Results

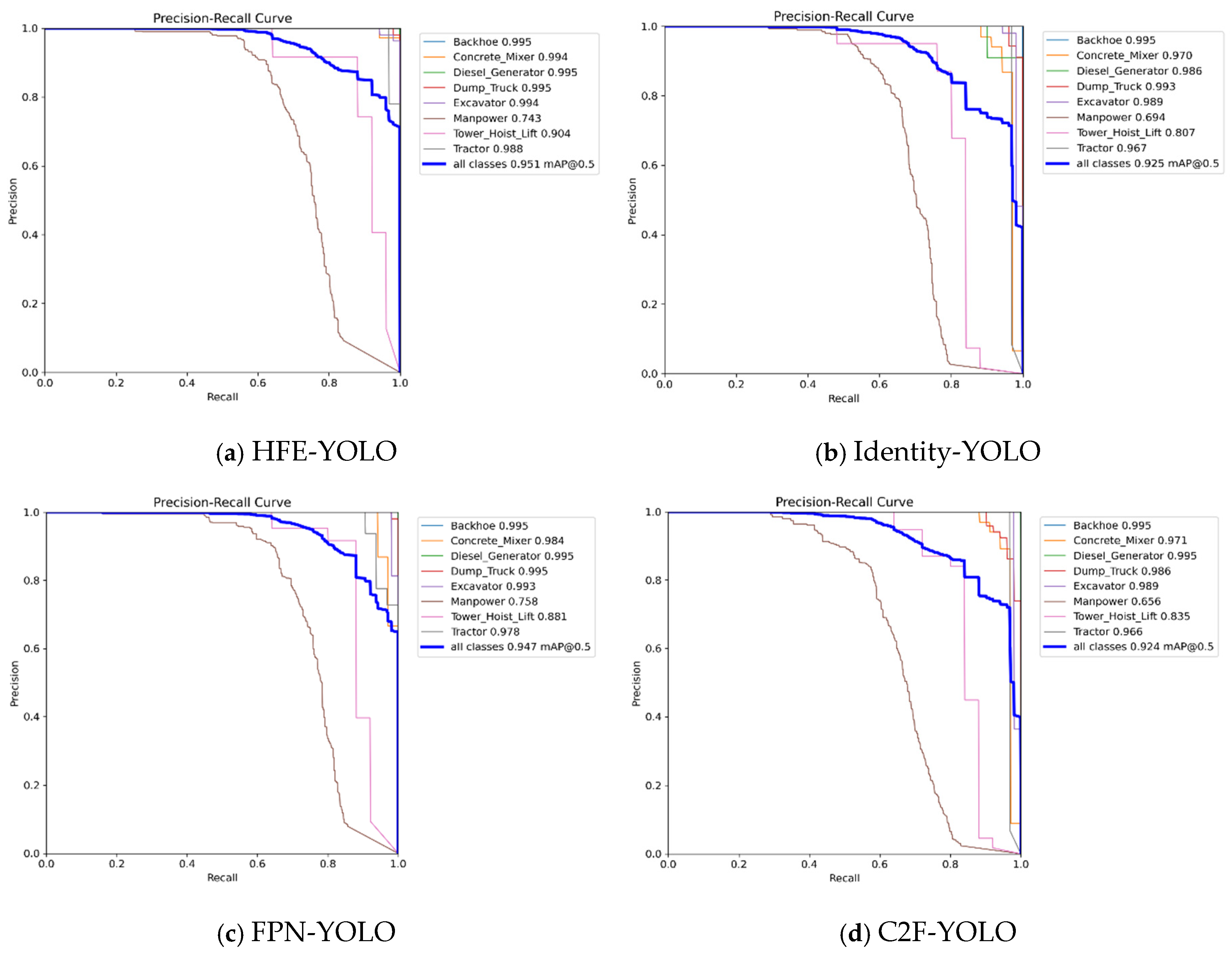

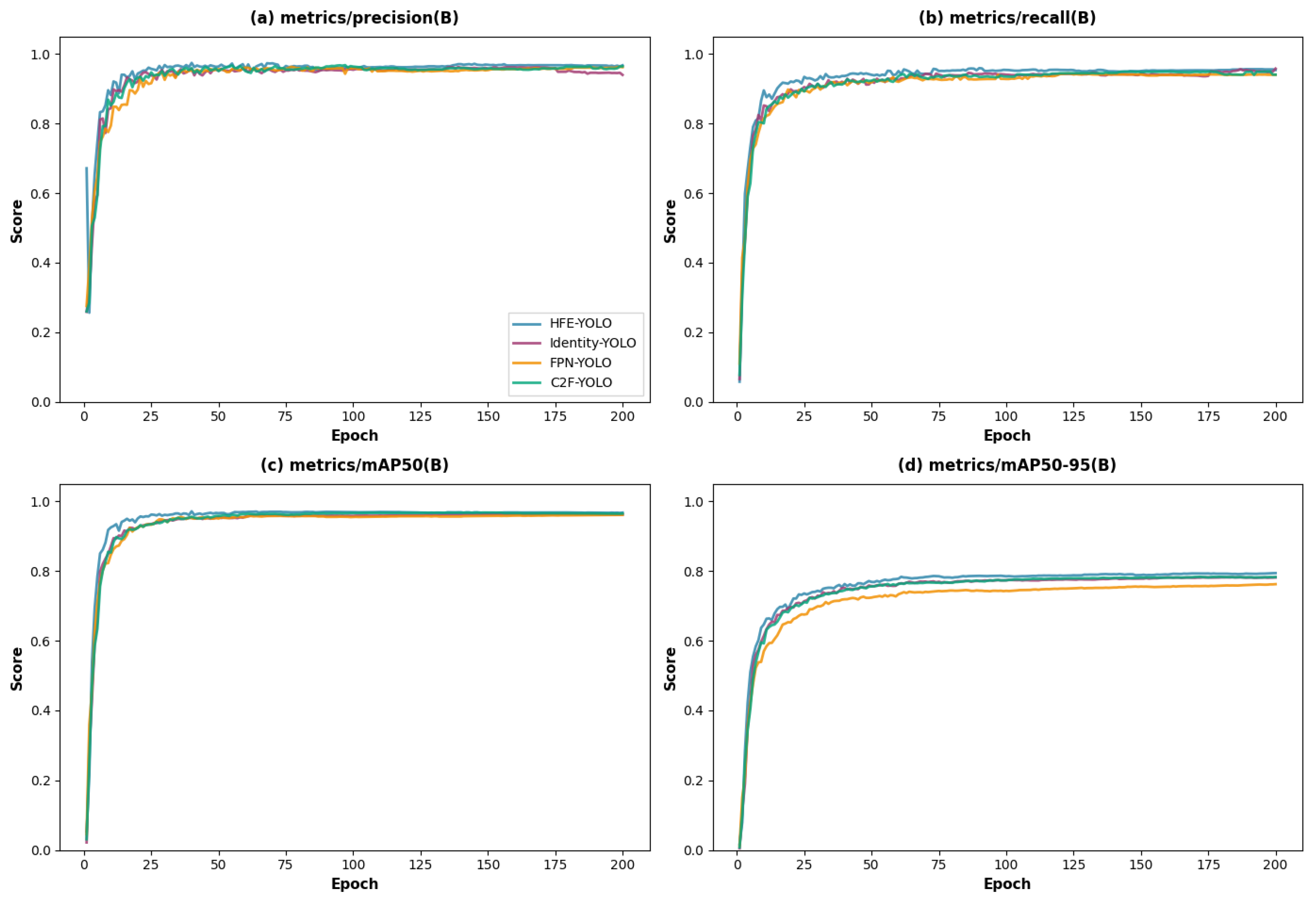

4.1. Performance on CS Dataset

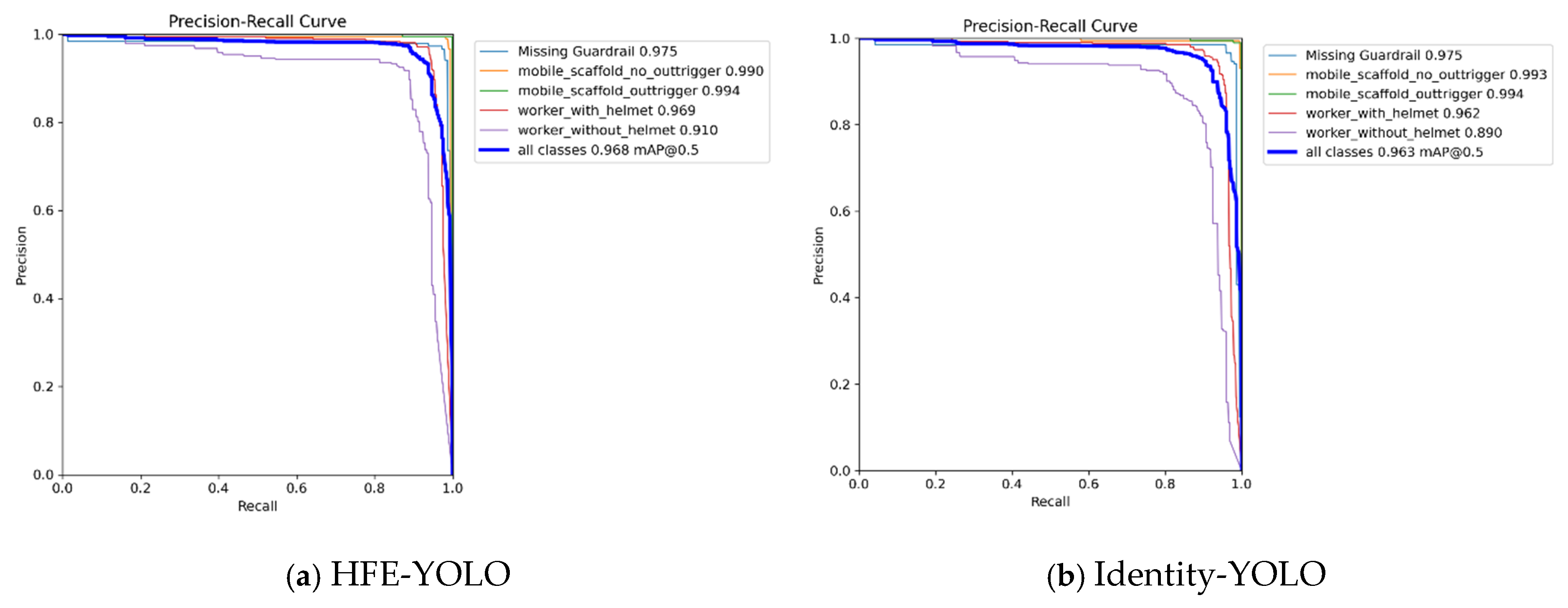

4.2. Performance on SG Dataset

4.3. Enhancement Mechanism Comparison at Layer 10

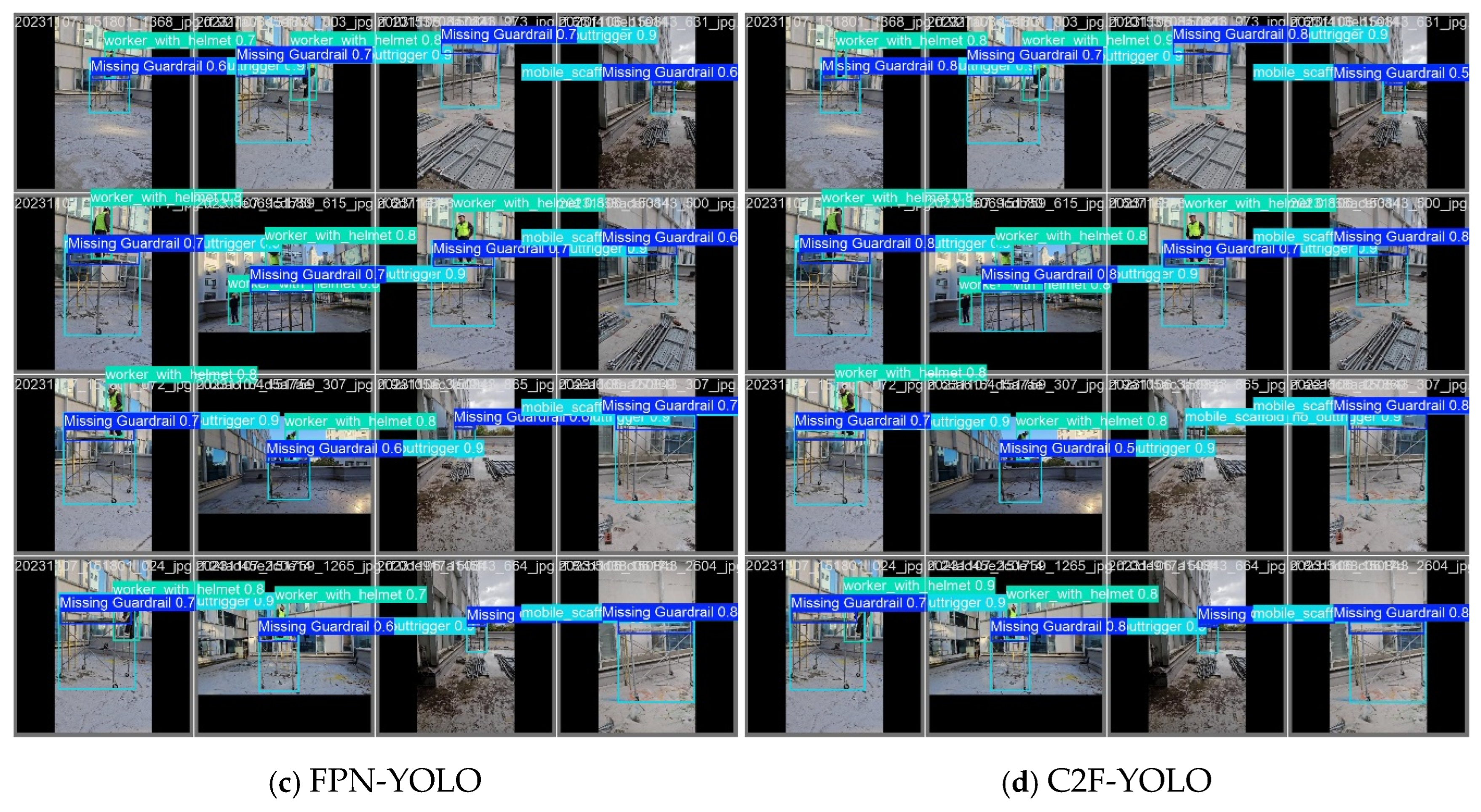

4.3.1. CS Enhancement Comparison

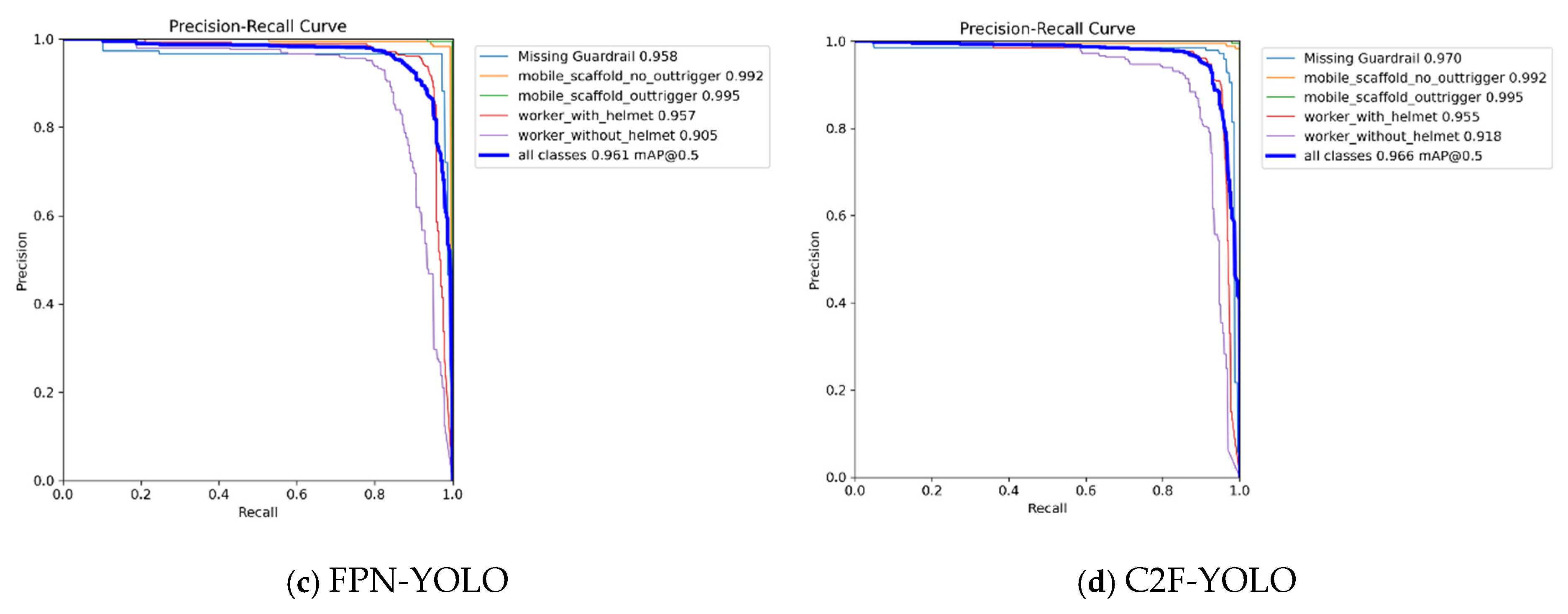

4.3.2. SG Enhancement Comparison

4.3.3. Enhancement Mechanism Analysis

5. Discussion

5.1. Enhancement Mechanism Effectiveness

5.2. Class-Specific Performance

5.3. Comparative Benchmarking with Prior Literature

5.4. Broader Implications and Limitations

6. Conclusions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Study | Detection Target | Base Architecture | Key Technical Innovations | Classes | Dataset (Size) | mAP@0.5 (%) | Notable Contribution | Limitations |
|---|---|---|---|---|---|---|---|---|
| [8] | Construction machinery | Faster-RCNN-ResNet101 | First construction-dataset (ACID); 4-algorithm benchmark | 10 classes: Excavator, dump truck, compactor, dozer, grader, concrete mixer truck, wheel loader, backhoe loader, tower crane, mobile crane | 10,000 images | 89.2 | First comprehensive machine dataset with systematic methodology | Limited 10 machine types; annotation quality issues |
| [28] | MOCS: Moving objects | Large-scale real sites | 174 sites across multiple countries and project types | 13 classes: Worker, tower crane, hanging hook, vehicle crane, roller, bulldozer, excavator, truck, loader, pump truck, concrete mixer truck, pile driver, other vehicle | 41,668 images | 51.04 | Largest moving object dataset; 174-site diversity; systematic benchmark | Scaffold work mentioned but no safety monitoring class |
| [31] | SODA: 4M1E construction | Multi-device collection | UAV + handheld cameras; multiple construction phases; privacy protection | 15 classes: Worker, helmet, vest, board, wood, rebar, brick, scaffold, handcart, cutter, electric box, hopper, hook, fence, slogan | 19,846 images (10 sites: Guangzhou, Shenzhen, Dongguan) | 81.47 | Largest 4M1E dataset; per-pixel segmentation; publicly available | Scaffold lacks safety compliance specifications |
| [5] | Machinery swarm operations | Improved YOLOv4 | K-means anchors; hybrid dilated convolution; Focal loss | 4 classes: Loader, excavator, truck, person | Self-built swarm operations (11,250 instances) | 97.03 | First swarm operations focus; maintained 31.11 FPS | Occlusion limitations; dataset not public |
| [9] | Multi-scale site monitoring | YOLOv5s + SOD | Small object detection; edge computing deployment | 16 classes: Excavator, payloader, forklift, dump truck, mixer truck, pump car, pile driver, truck, person, bicycle, car, motorcycle, bus, scissor lift, tower crane, aerial lift truck | COCO + AI Hub (299,655 images, 575,913 instances) | 75.6 | First edge computing implementation | Manual configuration; limited equipment-safety integration |
| [1] | Heavy equipment benchmark | YOLOv5l | K-means anchors; systematic augmentation | 9 classes: Bulldozers, dump trucks, excavators, forklifts, loaders, MEWP, mobile cranes, Remicon, scrapers | Web-crawled + onsite (10,294 images) | 98.93 (mAP@0.5:0.95: 90.26) | Highest reported accuracy (96.61–99.79% per-class AP) | Poor low-light performance; dataset not public |
| [25] | Occluded object detection | YOLOv7 + ECA | Efficient Channel Attention; SIoU loss; DIoU-NMS | 6 classes: Workers, machinery, vehicles, excavators, raw materials, construction components | MOCS dataset (17,000 images) | 85.4 | Comprehensive occlusion handling mechanisms | Poor small object performance; no real-world validation |
| [26] | Multi-task safety monitoring | Multi-task YOLOv8 | Unified detection + segmentation + pose + tracking | 7 classes: Workers, excavators, cranes, trucks, loaders, concrete mixers, helmets | SODA (19,846), MOCS (23,404), Excavator Pose Dataset (8380) | 73.2 (detect), 63.7 (segment), 99.5 (pose) | First comprehensive multi-task framework; Unity-generated virtual data | Lower accuracy vs. specialized models; tracking inconsistencies |
| [30] | Heavy construction equipment | Custom CNN | Sequential CNN architecture | 12 classes: Excavator, dump truck, concrete mixer machine/truck, asphalt roller, boom lift, forklift, loader, motor grader, pile driving machine, scissor lift, telescopic handler | Self-collected (10,846 images) | Not reported (P: 0.71–0.87, R: 0.73–0.86, F1: 0.75–0.86) | Most extensive category coverage (12 equipment types) | No multi-site validation; class imbalance |
| [27] | Multi-object segmentation | YOLOv11x-Seg | C3K2 modules; C2PSA attention | 13 classes: Bulldozer, concrete mixer, crane, excavator, hanging head, loader, other vehicle, pile driving, pump truck, roller, static crane, truck, worker | SODA + MOCS combined (40,659 images) | 80.8 | First YOLOv11-Seg application; 13-category coverage | Uneven class performance; no safety integration |
| [32] | Hierarchical safety classification | SRGAN + RT-DETR-X + DINOv2 | Super-resolution; 3-level hierarchical classification | 5 classes: Backhoe, dump truck, excavator, bulldozer, wheel loader | YouTube videos (44,295 images) | 95.0 | First super-resolution cascade learning; hierarchical safety status | High computational demands (256 GB RAM); limited to 5 classes |
| [29] | Heavy equipment | YOLOv10 + Swin Transformer | Transformer backbone integration; dual-label assignment | 5 classes: Loader, dump truck, excavator, Remicon, crane | Korea construction sites (21,772 images) | 89.72 | First YOLOv10-transformer integration; real-time 35 FPS | Domain adaptation challenges; Korea-only validation |
| [10] | Worker–equipment collision prevention | MSP-YOLO (YOLOv12n-based) | Small Object Enhancement Pyramid; Multi-axis Gated Attention | 7 classes: Workers, excavators, loaders, dump trucks, mobile cranes, rollers, bulldozers | Fujian Province, China (22,798 images) | 82.5 | Multi-scale small object detection; open-source | Computational overhead; dataset not public |
| [4] | Scaffolding safety | YOLOv12 + RealSense D455 | 3D depth reasoning; temporal analysis; regulatory compliance | 5 classes: Missing guardrail, mobile scaffold (no outrigger), mobile scaffold (outrigger), worker with helmet, worker without helmet, z-Tag | 4868 images | 97.9 | Only scaffolding structural safety study; 3D depth integration | Controlled environment only; no equipment integration |
| [62] | Comprehensive safety monitoring | Feature Fusion (InceptionV3 + MobileNetV2) | Model truncation; multi-scale fusion; SE attention | 10 classes: Machinery, vehicle, hardhat, no hardhat, safety vest, no safety vest, person, mask, no mask, safety cone | 5780 images | 81–90 | Negative class detection (no hardhat, no vest) | High computational requirements; untested deployment |
| [60] | Drone-based monitoring | GS-LinYOLOv10 | GSConv; Linformer attention; IoT sensor fusion | 16 classes: Excavator, wheel loader, dump truck, mixer truck, pump car, scissor lift, tower crane, aerial lift truck, person, hardhat, safety vest, bicycle, car, motorcycle, bus, truck | COCO (200,000+) + Construction Safety (2801) | 89.4–90.3 | Highest accuracy + fastest inference (92.41 FPS); drone deployment | Small object limitations; no structural safety detection |
| [61] | Mobile resource tracking | YOLOv8 + PANet | PANet features; twice-association tracking; Enhanced Kalman Filter | 13 classes: Workers, dump trucks, excavators, concrete mixers, concrete pump trucks, compactors, wheel loaders, tank trucks, forklifts, semitrucks, sprinklers, crawler cranes, tower cranes | 11,039 images | Not reported (Tracking: MOTA 96.49%, IDF1 95.63%, HOTA 90.20%) | First 13-category tracking; fastest real-time (123.6 FPS) | 2D tracking only; prolonged occlusion failures |
| This Study (2025) | Equipment + structural safety | YOLOv11n + 4 variants | Systematic backbone–neck comparison (HFE, FPN, C2F, Identity); dual-dataset design | 13 classes: 8 equipment (backhoe, concrete mixer, diesel generator, dump truck, excavator, manpower, tower hoist, tractor) + 5 safety (missing guardrail, mobile scaffold variants, helmet compliance) | ConstructSight (2195 images) + SafeGuard (12,645 images) | 92.4–96.8 | Distribution-dependent effectiveness quantified; class imbalance impact on architecture selection | Worker detection challenges; Layer 10 focus only |

References

1. Shin, Y.; Choi, Y.; Won, J.; Hong, T.; Koo, C. A new benchmark model for the automated detection and classification of a wide range of heavy construction equipment. J. Manag. Eng. 2024, 40, 04023069.

2. Li, X.; Ji, H. Enhanced safety helmet detection through optimized YOLO11: Addressing complex scenarios and lightweight design. J. Real-Time Image Process. 2025, 22, 128.

3. Huang, K.; Abisado, M.B. Lightweight construction safety behavior detection model based on improved YOLOv8. Discov. Appl. Sci. 2025, 7, 326.

4. Abbas, M.S.; Hussain, R.; Zaidi, S.F.A.; Lee, D.; Park, C. Computer Vision-Based Safety Monitoring of Mobile Scaffolding Integrating Depth Sensors. Buildings 2025, 15, 2147.

5. Hou, L.; Chen, C.; Wang, S.; Wu, Y.; Chen, X. Multi-Object Detection Method in Construction Machinery Swarm Operations Based on the Improved YOLOv4 Model. Sensors 2022, 22, 7294.

6. Shanti, M.Z.; An, B.; Yeun, C.Y.; Cho, C.S.; Damiani, E.; Kim, T.Y. Enhancing Worker Safety at Heights: A Deep Learning Model for Detecting Helmets and Harnesses using DETR Architecture. IEEE Access 2025, 13, 12345–12356.

7. Sun, L.; Li, H.; Wang, L. HWD-YOLO: A New Vision-Based Helmet Wearing Detection Method. Comput. Mater. Contin. 2024, 80, 4543–4560.

8. Xiao, B.; Kang, S.C. Development of an image data set of construction machines for deep learning object detection. J. Comput. Civ. Eng. 2021, 35, 05020005.

9. Kim, S.; Hong, S.H.; Kim, H.; Lee, M.; Hwang, S. Small object detection (SOD) system for comprehensive construction site safety monitoring. Autom. Constr. 2023, 156, 105103.

10. Zhang, Y.; Lin, C.; Chen, G. Efficient multi-scale detection of construction workers and vehicles based on deep learning. J. Real-Time Image Process. 2025, 22, 127.

11. Rahimian, F.P.; Seyedzadeh, S.; Oliver, S.; Rodriguez, S.; Dawood, N. On-demand monitoring of construction projects through a game-like hybrid application of BIM and machine learning. Autom. Constr. 2020, 110, 103012.

12. Data Subjects, Digital Surveillance, AI and the Future of Work: Study; European Parliament: Luxembourg, 2020.

13. Abraha, H.H. A pragmatic compromise? The role of Article 88 GDPR in upholding privacy in the workplace. Int. Data Priv. Law 2022, 12, 276–296.

14. Saif, W.; Williams, T.; Wong, C.; Dobos, J.; Martinez, P.; Kassem, M. Digital Twin for Safety on Construction Sites: A Real-time Risk Monitoring System Combining Wearable Sensors and 4D BIM. In Proceedings of the 2024 European Conference on Computing in Construction, Chania, Greece, 4–7 July 2024.

15. Niu, Z.; Zhong, G.; Yu, H. A review on the attention mechanism of deep learning. Neurocomputing 2021, 452, 48–62.

16. Guo, M.H.; Xu, T.X.; Liu, J.J.; Liu, Z.N.; Jiang, P.T.; Mu, T.J.; Zhang, S.K.; Martin, R.R.; Cheng, M.M.; Hu, S.M. Attention mechanisms in computer vision: A survey. Comput. Vis. Media 2022, 8, 331–368.

17. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

18. Fang, Y.; Ma, Y.; Zhang, X.; Wang, Y. Enhanced YOLOv5 algorithm for helmet wearing detection via combining bi-directional feature pyramid, attention mechanism and transfer learning. Multimed. Tools Appl. 2023, 82, 28617–28641.

19. Liu, Y.; Zhang, J.; Shi, L.; Huang, M.; Lin, L.; Zhu, L.; Wang, Z.; Zhang, C. Detection method of the seat belt for workers at height based on UAV image and YOLO algorithm. Array 2024, 22, 100340.

20. Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data (General Data Protection Regulation). Off. J. Eur. Union 2016, 119, 1–88. Available online: http://data.europa.eu/eli/reg/2016/679/oj (accessed on 20 June 2025).

21. Council Directive 89/391/EEC of 12 June 1989 on the introduction of measures to encourage improvements in the safety and health of workers at work. Off. J. Eur. Communities 1989, 183, 1–8. Available online: http://data.europa.eu/eli/dir/1989/391/oj (accessed on 20 June 2025).

22. Martínez-Aires, M.D.; López-Alonso, M.; Aguilar-Aguilera, A.; de la Hoz-Torres, M.L.; Costa, N.; Arezes, P. The General Principles of Prevention in the Framework Directive 89/391/EEC of Occupational Risk Prevention for the Building Construction. In Occupational and Environmental Safety and Health VI; Studies in Systems, Decision and Control; Springer: Cham, Switzerland, 2025; Volume 230, pp. 293–302.

23. Council Directive 92/57/EEC of 24 June 1992 on the implementation of minimum safety and health requirements at temporary or mobile construction sites. Off. J. Eur. Communities 1992, 245, 6–22. Available online: http://data.europa.eu/eli/dir/1992/57/oj (accessed on 20 June 2025).

24. Regulation (EU) 2024/1689 of the European Parliament and of the Council of 13 June 2024 laying down harmonised rules on artificial intelligence and amending Regulations (EC) No 300/2008, (EU) No 167/2013, (EU) No 168/2013, (EU) 2018/858, (EU) 2018/1139 and (EU) 2019/2144 and Directives 2014/90/EU, (EU) 2016/797 and (EU) 2020/1828 (Artificial Intelligence Act). Off. J. Eur. Union 2024, 1689, 1–144. Available online: http://data.europa.eu/eli/reg/2024/1689/oj (accessed on 20 June 2025).

25. Wang, Q.; Liu, H.; Peng, W.; Tian, C.; Li, C. A vision-based approach for detecting occluded objects in construction sites. Neural Comput. Appl. 2024, 36, 10825–10837.

26. Liu, L.; Guo, Z.; Liu, Z.; Zhang, Y.; Cai, R.; Hu, X.; Yang, R.; Wang, G. Multi-Task Intelligent Monitoring of Construction Safety Based on Computer Vision. Buildings 2024, 14, 2429.

27. He, L.; Zhou, Y.; Liu, L.; Ma, J. Research and Application of YOLOv11-Based Object Segmentation in Intelligent Recognition at Construction Sites. Buildings 2024, 14, 3777.

28. Xuehui, A.; Li, Z.; Zuguang, L.; Chengzhi, W.; Pengfei, L.; Zhiwei, L. Dataset and benchmark for detecting moving objects in construction sites. Autom. Constr. 2021, 122, 103482.

29. Eum, I.; Kim, J.; Wang, S.; Kim, J. Heavy Equipment Detection on Construction Sites Using You Only Look Once (YOLO-Version 10) with Transformer Architectures. Appl. Sci. 2025, 15, 2320.

30. Yamany, M.S.; Elbaz, M.M.; Abdelaty, A.; Elnabwy, M.T. Leveraging convolutional neural networks for efficient classification of heavy construction equipment. Asian J. Civ. Eng. 2024, 25, 6007–6019.

31. Duan, R.; Deng, H.; Tian, M.; Deng, Y.; Lin, J. SODA: A large-scale open site object detection dataset for deep learning in construction. Autom. Constr. 2022, 142, 104499.

32. Kim, B.; An, E.J.; Kim, S.; Sri Preethaa, K.R.; Lee, D.E.; Lukacs, R.R. SRGAN-enhanced unsafe operation detection and classification of heavy construction machinery using cascade learning. Artif. Intell. Rev. 2024, 57, 206.

33. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

34. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

35. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision – ECCV 2016, Proceedings of the 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Lecture Notes in Computer Science, Volume 9905; Springer: Cham, Switzerland, 2016; pp. 21–37.

36. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All You Need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Curran Associates Inc.: Red Hook, NY, USA, 2017; pp. 6000–6010.

37. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

38. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Computer Vision – ECCV 2018, Proceedings of the 15th European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Lecture Notes in Computer Science, Volume 11211; Springer: Cham, Switzerland, 2018; pp. 3–19.

39. Caruana, R. Multitask learning. Mach. Learn. 1997, 28, 41–75.

40. Ruder, S. An overview of multi-task learning in deep neural networks. arXiv 2017, arXiv:1706.05098.

41. Xiao, F.; Mao, Y.; Tian, G.; Chen, G.S. Partial-model-based damage identification of long-span steel truss bridge based on stiffness separation method. Struct. Control Health Monit. 2024, 2024, 5530300.

42. Xiao, F.; Mao, Y.; Sun, H.; Chen, G.S.; Tian, G. Stiffness separation method for reducing calculation time of truss structure damage identification. Struct. Control Health Monit. 2024, 2024, 5171542.

43. Mao, Y.; Xiao, F.; Tian, G.; Xiang, Y. Sensitivity analysis and sensor placement for damage identification of steel truss bridge. Structures 2025, 73, 108310.

44. Khanam, R.; Hussain, M. Yolov11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

45. Saeheaw, T. SC-YOLO: A Real-Time CSP-Based YOLOv11n Variant Optimized with Sophia for Accurate PPE Detection on Construction Sites. Buildings 2025, 15, 2854.

46. Alkhammash, E.H. Multi-Classification Using YOLOv11 and Hybrid YOLO11n-MobileNet Models: A Fire Classes Case Study. Fire 2025, 8, 17.

47. Misra, D.; Nalamada, T.; Arasanipalai, A.U.; Hou, Q. Rotate to Attend: Convolutional Triplet Attention Module. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2021; pp. 3139–3148.

48. Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A New Backbone that Can Enhance Learning Capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 390–391.

49. AI IN CIVIL. Construction Project Monitoring Dataset. Roboflow Universe. 2024. Available online: https://universe.roboflow.com/ai-in-civil/02.-construction-project-monitoring (accessed on 20 June 2025).

50. CombinedMobileScaffolding. MobileScaffoldingCheck Dataset. Roboflow Universe. 2024. Available online: https://universe.roboflow.com/combinedmobilescaffolding/mobilescaffoldingcheck (accessed on 20 June 2025).

51. Oksuz, K.; Cam, B.C.; Kalkan, S.; Akbas, E. Imbalance problems in object detection: A review. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3388–3415.

52. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Computer Vision – ECCV 2014, Proceedings of the 13th European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Lecture Notes in Computer Science, Volume 8693; Springer: Cham, Switzerland, 2014; pp. 740–755.

53. Johnson, J.M.; Khoshgoftaar, T.M. Survey on deep learning with class imbalance. J. Big Data 2019, 6, 27.

54. Buda, M.; Maki, A.; Mazurowski, M.A. A systematic study of the class imbalance problem in convolutional neural networks. Neural Netw. 2018, 106, 249–259.

55. Lyu, Y.; Yang, X.; Guan, A.; Wang, J.; Dai, L. Construction personnel dress code detection based on YOLO framework. CAAI Trans. Intell. Technol. 2024, 9, 709–721.

56. Yipeng, L.; Junwu, W. Personal protective equipment detection for construction workers: A novel dataset and enhanced YOLOv5 approach. IEEE Access 2024, 12, 47338–47358.

57. Yang, G.; Hong, X.; Sheng, Y.; Sun, L. YOLO-Helmet: A novel algorithm for detecting dense small safety helmets in construction scenes. IEEE Access 2024, 12, 107170–107180.

58. Chen, J.; Zhu, J.; Li, Z.; Yang, X. YOLOv7-WFD: A novel convolutional neural network model for helmet detection in High-Risk workplaces. IEEE Access 2023, 11, 113580–113592.

59. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

60. Song, Y.; Chen, Z.; Yang, H.; Liao, J. GS-LinYOLOv10: A drone-based model for real-time construction site safety monitoring. Alex. Eng. J. 2025, 120, 62–73.

61. Deng, R.; Wang, K.; Mao, Y. Real-Time Monitoring of Mobile Construction Resources Based on Multiple Object Tracking. J. Comput. Civ. Eng. 2025, 39, 04025059.

62. Sharifzada, H.; Wang, Y.; Sadat, S.I.; Javed, H.; Akhunzada, K.; Javed, S.; Khan, S. An Image-Based Intelligent System for Addressing Risk in Construction Site Safety Monitoring Within Civil Engineering Projects. Buildings 2025, 15, 1362.

| Dataset/Study | Total Images | Equipment Classes | Safety-Related Classes | Safety Annotation Type | Equipment-Safety Integration |
|---|---|---|---|---|---|
| ACID [8] | 10,000 | 10 (machinery) | 0 | None | No |
| MOCS [28] | 41,668 | 13 (moving objects) | 0 | None | No |
| SODA [31] | 19,846 | 12 (equipment + materials) a | 3 (worker, helmet, vest) | PPE only | Partial (no structural safety) |
| [1] | 10,294 | 9 (heavy equipment) | 0 | None | No |
| [26] | 51,630 b | 6 (equipment) c | 2 (workers, helmets) | PPE only | No (multi-task but no structural) |
| [32] | 44,295 | 5 (heavy equipment) | 0 | None | No |
| [29] | 21,772 | 5 (heavy equipment) | 0 | None | No |
| [4] | 4868 | 0 | 5 (guardrails, scaffolds, helmets) | Structural + PPE | Safety-only (no equipment) |
| This Study | 14,840 d | 8 (specialized equipment) | 5 (structural + PPE) | Structural + PPE | Yes (unified framework) |

| Model | Enhancement Type | Key Operations | Strengths | Limitations |
|---|---|---|---|---|
| HFE-YOLO | Multi-attention | CBAM (r = 16) + Triplet (k = 7) + Spatial + Residual | Multi-dimensional attention, sequential processing | Higher computational cost |
| Identity-YOLO | Pass-through | Identity mapping | Minimal overhead, direct gradient flow | No feature enhancement |
| FPN-YOLO | Lightweight FPN | Reduce (128) → Expand (256) → Interp + Concat (384) → Fuse (256) | Structured processing, multi-stream fusion | Limited attention mechanisms |
| C2F-YOLO | Cross-stage partial | Reduce (128) → C2f (n = 2, exp = 0.5) → Expand (256) | Bottleneck design, structured processing | Moderate complexity |
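
The channel widths listed for FPN-YOLO in the table above can be traced with a few lines of arithmetic. This is only a bookkeeping sketch: it assumes that the 384-channel concatenation joins the 128-channel reduced stream with the 256-channel expanded stream, which the table suggests but does not state, and the function name `fpn_channel_trace` is hypothetical.

```python
def fpn_channel_trace(reduced=128, expanded=256, fused=256):
    """Return (stage, output_channels) pairs for the lightweight FPN block.

    Assumption: the concat stage stacks the reduced and expanded streams
    along the channel axis, so its width is their sum.
    """
    concat = reduced + expanded
    return [
        ("reduce", reduced),
        ("expand", expanded),
        ("interp+concat", concat),
        ("fuse", fused),
    ]


for stage, channels in fpn_channel_trace():
    print(f"{stage:>14}: {channels} channels")
```

Under that assumption the concatenated width is 128 + 256 = 384, matching the table, and the fuse step returns the block to 256 channels.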

| Task | Dataset | # Classes | # Images | # Instances | Object Classes |
|---|---|---|---|---|---|
| Equipment Detection | ConstructSight (CS) | 8 | 2195 | 7980 | Backhoe, concrete mixer, diesel generator, dump truck, excavator, manpower, tower hoist lift, tractor |
| Safety Compliance | SafeGuard (SG) | 5 | 12,645 | 26,601 | Missing guardrail, mobile scaffold (no outrigger), mobile scaffold (outrigger), worker with helmet, worker without helmet |

| Class | Train | Val | Test | Total | % |
|---|---|---|---|---|---|
| Backhoe | 502 | 20 | 34 | 556 | 7.0 |
| Concrete Mixer | 356 | 34 | 21 | 411 | 5.2 |
| Diesel Generator | 124 | 10 | 7 | 141 | 1.8 |
| Dump Truck | 592 | 51 | 26 | 669 | 8.4 |
| Excavator | 526 | 52 | 27 | 605 | 7.6 |
| Manpower | 4108 | 417 | 291 | 4816 | 60.4 |
| Tower Hoist Lift | 206 | 25 | 12 | 243 | 3.0 |
| Tractor | 470 | 32 | 37 | 539 | 6.8 |
| Total Instances | 6884 | 641 | 455 | 7980 | 100.0 |
| Total Images | 1930 | 147 | 118 | 2195 | n/a |
| Split Ratio (%) | 87.9 | 6.7 | 5.4 | 100.0 | n/a |
| Avg Inst./Image | 3.57 | 4.36 | 3.86 | 3.64 | n/a |

| Class | Train | Val | Test | Total | % |
|---|---|---|---|---|---|
| Missing Guardrail | 3723 | 146 | 169 | 4038 | 15.2 |
| Mobile Scaffold (No Outrigger) | 3897 | 176 | 114 | 4187 | 15.7 |
| Mobile Scaffold (Outrigger) | 4435 | 186 | 173 | 4794 | 18.0 |
| Worker with Helmet | 6124 | 328 | 442 | 6894 | 25.9 |
| Worker without Helmet | 5983 | 224 | 481 | 6688 | 25.1 |
| Total Instances | 24,162 | 1060 | 1379 | 26,601 | 100.0 |
| Total Images | 11,679 | 483 | 483 | 12,645 | n/a |
| Split Ratio (%) | 92.4 | 3.8 | 3.8 | 100.0 | n/a |
| Avg Inst./Image | 2.07 | 2.19 | 2.86 | 2.10 | n/a |
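
To make the difference in class balance between the two datasets concrete, the sketch below recomputes class shares and a simple max-to-min imbalance ratio from the "Total" columns of the two per-class tables above. The ratio is one common summary chosen here for illustration; the source tables do not say which imbalance measure, if any, the paper uses.

```python
# Per-class instance totals copied from the CS and SG distribution tables.
CS_COUNTS = {
    "Backhoe": 556, "Concrete Mixer": 411, "Diesel Generator": 141,
    "Dump Truck": 669, "Excavator": 605, "Manpower": 4816,
    "Tower Hoist Lift": 243, "Tractor": 539,
}
SG_COUNTS = {
    "Missing Guardrail": 4038, "Mobile Scaffold (No Outrigger)": 4187,
    "Mobile Scaffold (Outrigger)": 4794, "Worker with Helmet": 6894,
    "Worker without Helmet": 6688,
}


def class_shares(counts):
    """Fraction of all instances that belongs to each class."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


def imbalance_ratio(counts):
    """Largest class size divided by smallest class size."""
    return max(counts.values()) / min(counts.values())


for label, counts in (("CS", CS_COUNTS), ("SG", SG_COUNTS)):
    top = max(counts, key=counts.get)
    print(f"{label}: ratio={imbalance_ratio(counts):.1f}, "
          f"largest class={top} ({class_shares(counts)[top]:.1%})")
```

On these totals the CS ratio is roughly 34:1 (Manpower versus Diesel Generator), while the SG ratio stays below 2:1.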

| Model | Precision | Recall | mAP@50 | mAP@50–95 |
|---|---|---|---|---|
| HFE-YOLO | 0.959 | 0.912 | 0.950 | 0.826 |
| FPN-YOLO | 0.950 | 0.907 | 0.948 | 0.824 |
| Identity-YOLO | 0.939 | 0.890 | 0.925 | 0.744 |
| C2F-YOLO | 0.913 | 0.897 | 0.924 | 0.721 |

| Model | Precision | Recall | mAP@50 | mAP@50–95 |
|---|---|---|---|---|
| HFE-YOLO | 0.964 | 0.956 | 0.968 | 0.794 |
| FPN-YOLO | 0.962 | 0.940 | 0.961 | 0.761 |
| C2F-YOLO | 0.958 | 0.948 | 0.966 | 0.782 |
| Identity-YOLO | 0.949 | 0.948 | 0.963 | 0.781 |

| Model | Enhancement Type | mAP@50–95 | Δ vs. Identity | Precision | Recall | mAP@50 |
|---|---|---|---|---|---|---|
| Identity-YOLO | Minimal (pass-through) | 0.744 | reference | 0.939 | 0.890 | 0.925 |
| C2F-YOLO | Cross-stage partial | 0.721 | −0.023 | 0.913 | 0.897 | 0.924 |
| FPN-YOLO | Feature pyramid | 0.824 | +0.080 | 0.950 | 0.907 | 0.948 |
| HFE-YOLO | Multi-attention | 0.826 | +0.082 | 0.959 | 0.912 | 0.950 |

| Model | Enhancement Type | mAP@50–95 | Δ vs. Identity | Precision | Recall | mAP@50 |
|---|---|---|---|---|---|---|
| Identity-YOLO | Minimal (pass-through) | 0.781 | reference | 0.949 | 0.948 | 0.963 |
| C2F-YOLO | Cross-stage partial | 0.782 | +0.001 | 0.958 | 0.948 | 0.966 |
| FPN-YOLO | Feature pyramid | 0.761 | −0.020 | 0.962 | 0.940 | 0.961 |
| HFE-YOLO | Multi-attention | 0.794 | +0.013 | 0.964 | 0.956 | 0.968 |
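
The "Δ vs. Identity" columns in the two comparison tables above are plain differences in mAP@50–95 against the Identity-YOLO baseline. A small sketch that recomputes them from the tabulated scores follows; the dictionary layout and the function name `delta_vs_identity` are illustrative choices, not part of the paper.

```python
# mAP@50-95 values copied from the CS and SG enhancement comparison tables.
MAP_50_95 = {
    "CS": {"Identity-YOLO": 0.744, "C2F-YOLO": 0.721,
           "FPN-YOLO": 0.824, "HFE-YOLO": 0.826},
    "SG": {"Identity-YOLO": 0.781, "C2F-YOLO": 0.782,
           "FPN-YOLO": 0.761, "HFE-YOLO": 0.794},
}


def delta_vs_identity(scores, baseline="Identity-YOLO"):
    """Difference of each model's score from the baseline, to 3 decimals."""
    base = scores[baseline]
    return {m: round(s - base, 3) for m, s in scores.items() if m != baseline}


for dataset, scores in MAP_50_95.items():
    for model, delta in delta_vs_identity(scores).items():
        print(f"{dataset} {model}: {delta:+.3f}")
```

The recomputed differences agree with the tables: HFE-YOLO gains 0.082 on CS but only 0.013 on SG, and FPN-YOLO moves from +0.080 on CS to −0.020 on SG.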

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Saeheaw, T. HFE-YOLO: Hybrid Feature Enhancement with Multi-Attention Mechanisms for Construction Site Object Detection. Buildings 2025, 15, 4274. https://doi.org/10.3390/buildings15234274
