1. Introduction

Since the early 2000s, civil engineering has increasingly used soft computing techniques, such as artificial neural networks, genetic algorithms, fuzzy logic, and wavelets, for numerical problems [1]. Kicinger et al. [2] analyzed evolutionary computation for structural design, particularly in topological optimization, while Saka and Geem [3] focused on mathematical modeling to optimize steel frame structures. Recent advances in high-performance computing have further expanded computational applications in earthquake engineering by enabling the efficient processing of large datasets. Xie et al. [4] reviewed developments in seismic hazard analysis, system identification, damage detection, fragility assessment, and structural control. Within this context, machine learning (ML) techniques now offer data-driven approaches to modeling and predicting structural dynamic responses, with numerous studies demonstrating their effectiveness for linear, nonlinear, and soil–structure interaction behaviors [5,6,7,8,9,10,11,12,13].

Oliver Richard de Lautour et al. [5] proposed an artificial neural network (ANN) method to predict seismic damage in 2D reinforced concrete (RC) frames using extensive structural and ground motion parameters. Trained on nonlinear FEM simulations, the ANN accurately mapped input features to damage indices, outperforming traditional vulnerability curves. Byung Kwan Oh et al. [6] developed a convolutional neural network (CNN) to predict displacement responses from acceleration histories, validated on the ASCE benchmark and RC frame experiments, achieving high accuracy even with overlapping datasets. Sadjad Gharehbaghi et al. [7] compared an ANN and wavelet-weighted least squares support vector machines (WWLSSVMs) for predicting inelastic seismic responses of an 18-story RC frame, finding that the ANN was slightly more accurate and robust with limited training data. Similarly, Pritam Hait et al. [8] combined the Park–Ang method with ANN to evaluate seismic damage in low-rise RC buildings, introducing a simplified global damage index (GDI) that the ANN efficiently predicted with reduced error. Taeyong Kim et al. [9] trained deep neural network (DNN) models on modified Bouc-Wen-Baber-Noori (m-BWBN) data, which capture degradation and pinching effects, demonstrating superior accuracy over regression methods in predicting peak seismic responses.

ML methods have also proven effective for seismic signal characterization [14,15] and structural performance prediction using neural networks [16,17], genetic programming, tree-based models, and hybrid approaches [18,19,20]. These studies highlight ML’s versatility in structural dynamics, including vibration assessment, structural health monitoring, and predictive maintenance [21,22,23,24]. Traditional analytical methods, such as solving second-order differential equations, offer elegant solutions under ideal conditions but struggle with real-world complexity, where uncertain initial conditions, noisy or sparse measurements, and parameter variability degrade predictive accuracy.

This study employed physics-informed neural networks (PINNs). Unlike black-box predictors, PINNs embed the governing physical laws directly into the learning process, so they remain data-efficient and enable accurate parameter estimation even with sparse, noisy, or incomplete observations. This capability is critical for real-time monitoring, adaptivity, and robustness, especially when analyzing single-degree-of-freedom (SDOF) systems near resonance. Moreover, ML provides scalable solutions for parameter identification that can adapt to evolving system dynamics rather than relying solely on fixed code expressions (e.g., Eurocode 8). Combining ML with optimization yields a robust framework for structural dynamics analysis that improves the precision of vibration assessments and response predictions.
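The core idea can be illustrated with the composite loss that a PINN minimizes: a data misfit at a few sensor samples plus a penalty on the equation-of-motion residual at dense collocation points. The sketch below is not the implementation used in this study. It evaluates the loss for two candidate displacement histories instead of training a network, uses central finite differences where a real PINN would use automatic differentiation, and borrows the Section 3 example values (m = 15,000 kg, ξ = 0.03, load-test stiffness, P0 = 700 N, f = 0.9 Hz). The function names, the weight `lam`, the 20-sample sensor set, and the noise level are illustrative assumptions.

```python
import numpy as np

# Hypothetical SDOF values taken from the Section 3 example (assumed known here).
m, k, xi = 15000.0, 2000.0 / 0.007, 0.03
p0, omega = 700.0, 2.0 * np.pi * 0.9
c = 2.0 * xi * np.sqrt(k * m)  # c = 2 * xi * m * omega_n


def steady_state(t, k_val):
    """Closed-form steady-state displacement of m u'' + c u' + k_val u = p0 sin(omega t)."""
    a = k_val - m * omega**2
    den = a**2 + (c * omega) ** 2
    return p0 * (a * np.sin(omega * t) - c * omega * np.cos(omega * t)) / den


def physics_residual(u, t):
    """Equation-of-motion residual divided by k (units of metres), using central
    finite differences in place of automatic differentiation."""
    dt = t[1] - t[0]
    u_dot = (u[2:] - u[:-2]) / (2.0 * dt)
    u_ddot = (u[2:] - 2.0 * u[1:-1] + u[:-2]) / dt**2
    r = m * u_ddot + c * u_dot + k * u[1:-1] - p0 * np.sin(omega * t[1:-1])
    return r / k


def composite_loss(u_pred, t, obs_idx, u_obs, lam=1.0):
    """PINN-style loss: misfit at sparse sensor samples + lam * physics misfit everywhere."""
    data_term = np.mean((u_pred[obs_idx] - u_obs) ** 2)
    phys_term = np.mean(physics_residual(u_pred, t) ** 2)
    return data_term + lam * phys_term


t = np.linspace(0.0, 10.0, 10001)                      # dense collocation grid, dt = 1 ms
rng = np.random.default_rng(0)
obs_idx = rng.choice(t.size, size=20, replace=False)   # only 20 noisy sensor readings
u_obs = steady_state(t[obs_idx], k) + rng.normal(0.0, 1e-4, size=20)

loss_true = composite_loss(steady_state(t, k), t, obs_idx, u_obs)
loss_wrong = composite_loss(steady_state(t, 1.2 * k), t, obs_idx, u_obs)
print(f"loss (correct k) = {loss_true:.3e}, loss (k + 20%) = {loss_wrong:.3e}")
```

In an actual PINN, `u_pred` would be the output of a neural network, and the same loss would be minimized over the network weights and, for parameter identification, over unknown quantities such as k.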

Recent work has emphasized hybrid strategies that blend physical modeling with data-driven methods. The integration of physics-based models with intelligent algorithms has advanced complex modeling, classification, and parameter estimation [25,26,27,28,29,30,31,32]. For instance, predicting structural responses in RC structures often depends on accurately modeling bond behavior [33]. Amini Pishro et al. [25] showed that combining ANNs with multiple linear regression improves predictive accuracy compared to traditional methods. Building on these efforts, hybrid ML approaches [28] have enhanced predictions across various loading conditions by merging data-driven techniques with physical insights. Similarly, PINNs have successfully simulated bond behavior in ultra-high-performance concrete (UHPC) under monotonic loading [29], demonstrating the value of incorporating physical constraints into ML, a strategy central to this study’s PINN-based modeling.

Machine learning has also been applied to predict structural behavior under combined loads, such as failure mechanisms in RC beams strengthened with fiber-reinforced polymers (FRPs) under torsion, shear, and bending [30,31]. Additionally, hybrid ML methods have advanced system-level modeling and optimization in spatial–temporal systems, including rail transit station classification [26,27,32]. These examples highlight ML’s potential for addressing multi-parameter, nonlinear structural responses beyond the scope of traditional tools.

Traditional approaches for analyzing harmonic excitation, which solve second-order differential equations, remain essential for understanding transient and steady-state responses but are limited by uncertainties and sensitivity to initial conditions [34,35]. To overcome these limitations, recent studies have analyzed nonlinear responses in SDOF systems, focusing on different structural types and seismic protection systems [36,37,38,39]. N. Asgarkhani et al. [36] trained ML-based models on nonlinear time history and incremental dynamic analyses of Buckling-Restrained Brace Frames (BRBFs) to predict inter-story drift (ID) and residual inter-story drift (RID) with up to 98.7% accuracy, outperforming conservative estimates like FEMA P-58. Davit Shahnazaryan et al. [37] developed Decision Tree and XGBoost models to improve nonlinear response prediction using next-generation intensity measures, such as average spectral acceleration (Sa_avg); these models outperformed empirical methods in predicting collapse behavior across periods. Payán O. et al. [38] used deep learning to predict the seismic responses of RC buildings, focusing on maximum inter-story drifts. Their models effectively estimated ductility and hysteretic energy, although careful tuning was required to avoid overfitting. Similarly, Hoang D. Nguyen et al. [39] compared six ML methods, including ANN and random forest (RF), to predict peak lateral displacements of seismic isolation systems, with RF achieving R² values of 0.9930 (training) and 0.9498 (testing) using 234,000 OpenSees-generated data points. Practical applications, including a GUI tool, confirmed the model’s utility at the design level.

ANN-based methods have repeatedly proven reliable for rapid predictions of SDOF system responses [40,41,42]. Other algorithms, such as RF, eXtreme Gradient Boosting, and Stochastic Gradient Boosting, have also been applied successfully in seismic modeling [43]. Gentile and Galasso [44] extended this line of work by employing Gaussian Process Regression for probabilistic seismic demand modeling, highlighting the versatility of ML in capturing complex dynamics. Accurate characterization of seismic signals remains essential for improving response predictions [45,46], yet many ML studies overlook key parameters that drive reliability.

These developments underscore the ongoing need to modernize seismic design codes such as Eurocode 8 (BS EN 1998) [47,48,49], which are evolving toward performance-based and resilience-oriented frameworks. Eurocode 8 [47,48] establishes prescriptive formulations for seismic design, defining elastic response spectra for a reference viscous damping ratio of 5% (other damping levels are handled through a simple correction factor) and providing simplified expressions for structural dynamic amplification through static and modal combination procedures [47,48,49]. These provisions are efficient for conventional elastic design but rely on predefined spectral shapes and simplified damping assumptions that may not accurately capture transient or nonlinear system behavior under variable excitation conditions.

In contrast, the adaptive Dynamic Magnification Factor (DMF) approach proposed in this study dynamically computes amplification from the instantaneous system response using a coupled PINN and EKF framework. This enables continuous updating of stiffness, damping, and response parameters, thereby extending the Eurocode 8 concept of spectral amplification toward a data-driven, real-time formulation suitable for modern performance-based design and structural health monitoring.

In this study, Eurocode 8 is referenced as a contextual motivation for adaptive dynamic analysis rather than as a direct performance benchmark. The present linear-harmonic SDOF formulation provides an isolated, well-understood setting for validating the proposed PINN–EKF framework prior to extending it to transient seismic loading conditions. More direct comparison with Eurocode 8 response spectrum provisions will be addressed in future work.

The proposed adaptive framework also builds on prior experimental and numerical investigations of structural interfaces. Recent studies have demonstrated how structural mechanisms can significantly influence stiffness and energy dissipation under cyclic or dynamic loading conditions [28]. Related findings highlight the potential for composite systems to achieve enhanced ductility and load-bearing capacity through improved material–interface performance [29]. These insights reinforce the motivation for developing adaptive analytical approaches capable of incorporating material-level nonlinearities and interface effects into global dynamic models.

Integrating partial differential equations with ML has already proven effective for modeling multi-scale systems beyond the limits of analytical methods [50]. Building on this, hybrid methods such as the Extended Kalman Filter (EKF) and PINNs provide promising bridges between traditional models and data-driven approaches: the EKF enables real-time state estimation in noisy environments, and PINNs embed physical constraints to preserve accuracy even with limited data. This integration is particularly valuable for dynamic systems such as SDOF structures under harmonic excitation, where jointly estimating the response states and unknown parameters is a nonlinear problem. These advances suggest that ML-driven structural dynamics analysis can capture nonlinear behavior and keep pace with evolving design philosophies, supporting the transition toward next-generation Eurocode 8 standards [47,48,49]. The growing role of artificial intelligence in advancing design codes marks a shift toward data-driven decision-making and automated optimization, enabling continuous refinement of structural standards such as Eurocode 8 through predictive analytics and real-time calibration with experimental data. In this context, machine learning complements established design frameworks by uncovering hidden patterns, improving predictive performance, and bridging the gap between empirical code formulations and modern computational intelligence [51].
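To make the EKF's role concrete, the sketch below shows one common way to use an EKF for joint state and parameter estimation: the unknown stiffness is appended to the state vector, so noisy displacement readings update it together with displacement and velocity. It is a minimal illustration under stated assumptions, not the framework proposed in this paper. The unknown is θ = k/m, the mass and damping coefficient are treated as known, the model is discretized with explicit Euler steps, and the synthetic data, noise level, initial guess (80% of the true stiffness), and covariance settings are hypothetical choices based on the Section 3 example.

```python
import numpy as np

# Hypothetical test case built from the Section 3 example: m, c and the forcing are
# treated as known; the unknown parameter is theta = k / m (= omega_n ** 2).
m, xi, p0, omega = 15000.0, 0.03, 700.0, 2.0 * np.pi * 0.9
k_true = 2000.0 / 0.007
theta_true = k_true / m
a = 2.0 * xi * np.sqrt(theta_true)       # c / m, assumed known
dt, n_steps = 0.002, 15000               # 30 s of 500 Hz displacement data


def f(x, t):
    """One explicit-Euler step of the augmented state x = [u, v, theta]."""
    u, v, theta = x
    acc = p0 / m * np.sin(omega * t) - a * v - theta * u
    return np.array([u + dt * v, v + dt * acc, theta])


def jacobian(x):
    """Jacobian of f with respect to [u, v, theta], evaluated at x."""
    u, v, theta = x
    return np.array([[1.0, dt, 0.0],
                     [-dt * theta, 1.0 - dt * a, -dt * u],
                     [0.0, 0.0, 1.0]])


# Synthetic "measured" displacements: same model with the true theta, plus sensor noise.
rng = np.random.default_rng(1)
sigma = 2e-4                             # noise standard deviation in metres
x_true = np.array([0.0, 0.0, theta_true])
z = np.empty(n_steps)
for i in range(n_steps):
    x_true = f(x_true, i * dt)
    z[i] = x_true[0] + rng.normal(0.0, sigma)

# EKF started from a deliberately wrong stiffness guess (80% of the true value).
x = np.array([0.0, 0.0, 0.8 * theta_true])
P = np.diag([1e-8, 1e-8, 25.0])          # initial covariance (theta std = 5 rad^2/s^2)
Q = np.diag([1e-12, 1e-10, 1e-8])        # small process noise keeps the filter adaptive
R = sigma**2
H = np.array([1.0, 0.0, 0.0])            # only displacement is measured
for i in range(n_steps):
    F = jacobian(x)                      # linearize about the previous estimate
    x = f(x, i * dt)                     # prediction step
    P = F @ P @ F.T + Q
    S = H @ P @ H + R                    # innovation variance (scalar)
    K = P @ H / S                        # Kalman gain
    x = x + K * (z[i] - H @ x)           # correction with the new measurement
    P = (np.eye(3) - np.outer(K, H)) @ P
    P = 0.5 * (P + P.T)                  # keep the covariance symmetric

k_est = x[2] * m
print(f"k_true = {k_true:.0f} N/m, k_est = {k_est:.0f} N/m")
```

Because θ multiplies the displacement in the equation of motion, the augmented model is nonlinear even though the structure itself is linear, which is why a linearized (extended) filter is used here.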

This paper proposes a hybrid framework that combines classical structural dynamics with ML and optimization to analyze SDOF systems under harmonic excitation. We address the limitations of Eurocode-style steady-state formulas for handling noisy, sparse measurements by proposing a physics-informed ML framework that estimates SDOF parameters in real time and improves displacement prediction under resonance. Analytical formulations are combined with EKF and PINNs to evaluate the effects of key parameters, such as damping ratio and frequency ratio, on the dynamic response. Numerical simulations explore parameter uncertainty and measurement noise, using EKF and PINNs for parameter identification and prediction to leverage both data assimilation and physics-guided learning.

Traditional approaches in structural dynamics often exhibit high sensitivity to initial conditions and parameter uncertainties, perform poorly with sparse or noisy data, and lack adaptivity for real-time monitoring. Simplified Eurocode 8 assumptions, while effective for elastic design, may fail to capture transient, nonlinear, or site-specific effects observed in real structures. Recent technical reports and code-development discussions emphasize the need to incorporate nonlinear response modeling, refined soil–structure interaction [52], and improved representation of site-dependent spectra. These evolving directions highlight the growing demand for analytical and computational tools capable of handling parameter uncertainty, data assimilation, and adaptive modeling under dynamic loading. The proposed hybrid framework addresses these challenges by integrating analytical formulations, PINNs, the EKF, and optimization algorithms to improve SDOF response prediction under harmonic excitation, as a basis for later extension to seismic excitation. This adaptive, data-driven methodology is intended to complement Eurocode-based design analysis and aligns with the broader modernization trends in seismic performance assessment.

While Eurocode 8 provides prescriptive formulations for seismic design based on elastic response spectra, the present study focuses on the fundamental dynamic mechanisms represented by a linear SDOF system under harmonic excitation. This simplified setting allows the proposed PINN–EKF framework to be validated under controlled conditions before extending it to transient and nonlinear seismic inputs in future work.

The remainder of the paper, which demonstrates the efficiency of the proposed methodology, is organized as follows. Section 2 introduces the governing equations of the structural dynamic system and formulates the SDOF model used throughout this study. The subsequent sections describe the implementation of the PINN and EKF algorithms, followed by validation and comparative analysis with classical solutions and Eurocode-based response predictions.

2. Structural Dynamic System

2.1. Harmonic Excitation

Many natural forces can be approximated by a series of harmonic forces, a representation known as Fourier decomposition. A harmonic force is the simplest mathematical representation of a periodic force.
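As a small illustration of this decomposition, the sketch below extracts the amplitudes and phases of the first few harmonics of one sampled period of a periodic force using NumPy's real FFT (`np.fft.rfft`). The square-wave load is a hypothetical example chosen because its Fourier series is known in closed form (odd harmonics of amplitude 4/(jπ), no even harmonics); each extracted harmonic could then be treated as a separate harmonic excitation of the kind analyzed in this section.

```python
import numpy as np


def harmonic_components(force, n_harmonics):
    """Mean, amplitudes and phases of the first n_harmonics of one sampled period,
    so that force(t) ~ mean + sum_j amp_j * cos(j * w * t + phase_j)."""
    n = force.size
    coeffs = np.fft.rfft(force) / n
    amps = 2.0 * np.abs(coeffs[1:n_harmonics + 1])
    phases = np.angle(coeffs[1:n_harmonics + 1])
    return coeffs[0].real, amps, phases


# Illustrative load (assumption): a unit square wave sampled over one period.
n = 1024
t = np.arange(n) / n                      # one period, T = 1 s
square = np.where(t < 0.5, 1.0, -1.0)
mean, amps, phases = harmonic_components(square, 7)
print(np.round(amps, 4))                  # odd harmonics near 4/(j*pi), even ones near 0
```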

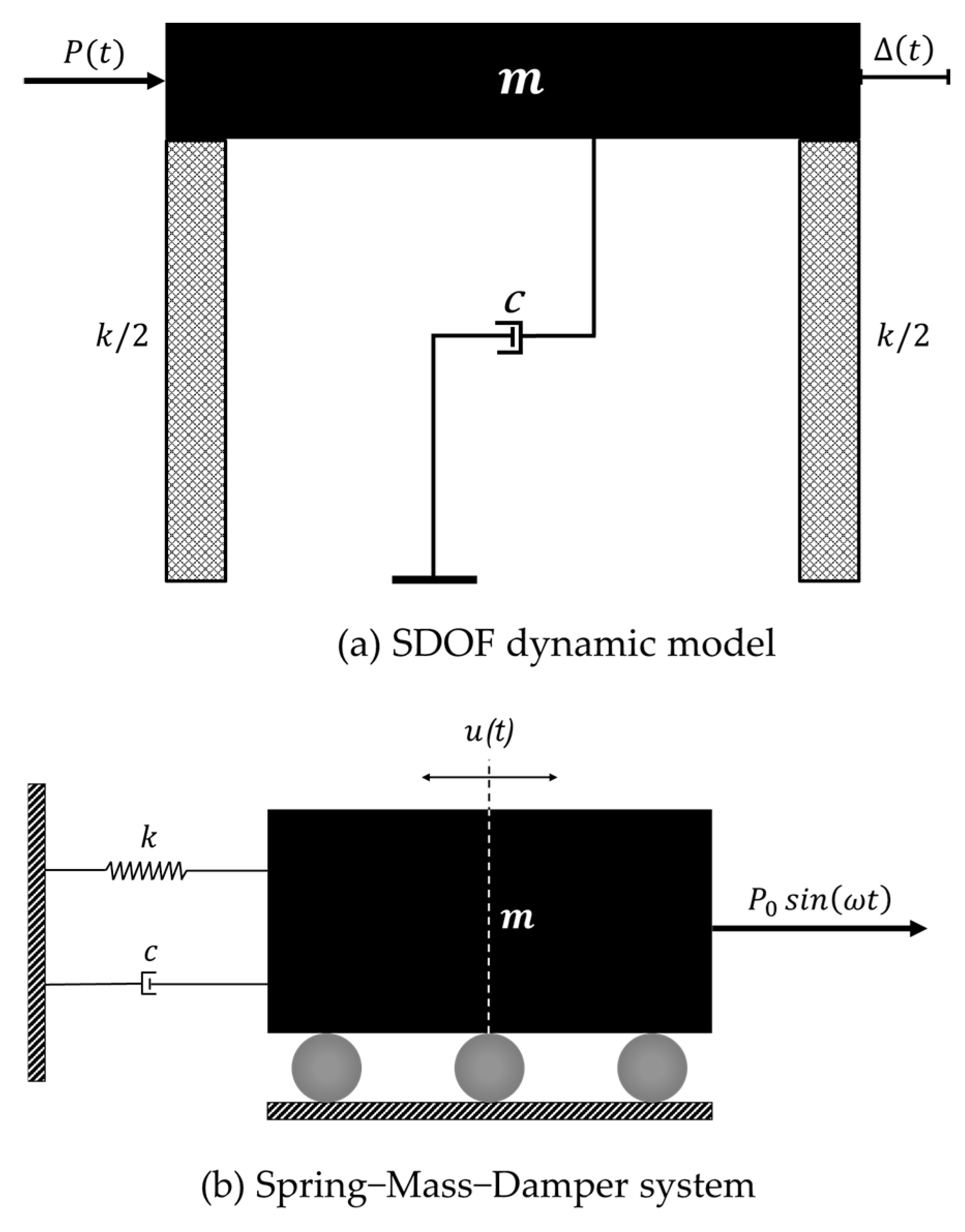

Figure 1 presents a spring-mass-damper system subjected to a harmonic force.

By examining the equation of motion for the spring-mass-damper system, its response to an external harmonic forcing function can be analyzed. This analysis reveals how the system behaves under periodic excitation, capturing both the transient response, which fades over time due to damping, and the steady-state response, which persists with characteristics dependent on the system’s parameters and the forcing frequency. The system is subjected to a sinusoidal force of the form $P_0\sin\omega t$, where $P_0$ represents the amplitude of the applied force and $\omega$ denotes the angular frequency of the forcing function.

The equation of motion for the spring-mass-damper system with harmonic forcing can be expressed as

$$m\ddot{u}(t) + c\,\dot{u}(t) + k\,u(t) = P_0\sin\omega t \tag{1}$$

where $m$ represents the mass of the system, $c$ indicates the damping coefficient, and $k$ is the spring constant. Moreover, $u(t)$ denotes the displacement of the mass as a function of time, and $\dot{u}(t)$ and $\ddot{u}(t)$ are the first and second time derivatives of $u(t)$, representing velocity and acceleration, respectively.

Equation (1) represents the dynamic response of a system subjected to an external harmonic force. Its solution generally comprises two components: the transient response, which diminishes over time due to damping and oscillates at the damped natural frequency $\omega_d$, and the steady-state response, which persists as long as the harmonic force is applied. The steady-state solution follows a sinusoidal pattern with the same frequency as the forcing function, but with a phase shift and an amplitude determined by the system’s parameters and the forcing frequency $\omega$.

The analysis of this system is crucial in understanding phenomena such as resonance, where the amplitude of the system’s response becomes significantly large when the forcing frequency approaches the system’s natural frequency $\omega_n$. Damping plays a critical role in limiting the amplitude of the response, especially near resonance.

The inclusion of the harmonic forcing function introduces a dynamic driving mechanism that significantly influences the system’s behavior, leading to important insights into the interplay between external forces and the system’s inherent properties.

Equation (1) is a non-homogeneous second-order differential equation. The objective now is to solve it to derive an expression for the displacement as a function of time, $u(t)$. Its solution consists of two components: the complementary solution, which solves the associated homogeneous equation presented in Equation (2), and the particular solution, which accounts for the effects of the non-homogeneous term.

$$m\ddot{u} + c\,\dot{u} + k\,u = 0 \tag{2}$$

The damping coefficient is related to the damping ratio $\xi$ through $c = 2\xi m\omega_n$, where $\omega_n = \sqrt{k/m}$ is the undamped natural circular frequency of the system. The general solution of Equation (1) is

$$u(t) = u_c(t) + u_p(t) \tag{3}$$

Equation (3) is composed of two parts: the complementary solution and the particular solution. The complementary solution, $u_c(t)$, solves the homogeneous equation in which the external forcing term is absent and represents the system’s natural response, also known as the free vibration or transient response. It depends on the system’s initial conditions and damping, and it decays over time. The particular solution, $u_p(t)$, accounts for the external forcing function and represents the forced vibration or steady-state response, which persists as long as the external force is applied. Together, these two components fully describe the system’s displacement over time: the transient response gradually diminishes, and the steady-state response determines the long-term behavior of the system under external excitation.

Because the right-hand side of Equation (1) is sinusoidal, the trial solution is chosen to be a generalized sinusoid, as shown in Equation (4). This assumes that the system’s response also follows a sinusoidal pattern, with potentially different amplitude and phase. The trial solution is differentiated, as described by Equation (5), and the resulting expressions are substituted back into the original differential equation to determine the unknown constants, and hence the amplitude and phase of the steady-state response under the given sinusoidal forcing.

$$u_p(t) = A\sin\omega t + B\cos\omega t \tag{4}$$

$$\dot{u}_p(t) = A\omega\cos\omega t - B\omega\sin\omega t, \qquad \ddot{u}_p(t) = -A\omega^2\sin\omega t - B\omega^2\cos\omega t \tag{5}$$

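Applying Equations (4) and (5) in Equation (1) results in

$$m\left(-A\omega^2\sin\omega t - B\omega^2\cos\omega t\right) + c\left(A\omega\cos\omega t - B\omega\sin\omega t\right) + k\left(A\sin\omega t + B\cos\omega t\right) = P_0\sin\omega t \tag{6}$$

or

$$\left[(k - m\omega^2)A - c\omega B\right]\sin\omega t + \left[c\omega A + (k - m\omega^2)B\right]\cos\omega t = P_0\sin\omega t \tag{7}$$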

Expressions for the constants A and B can be derived by grouping the sine terms, as shown in Equation (8), and the cosine terms, as shown in Equation (9). This isolates the unknown constants by equating the coefficients of the sine and cosine terms on both sides of the equation.

$$(k - m\omega^2)A - c\omega B = P_0 \tag{8}$$

$$c\omega A + (k - m\omega^2)B = 0 \tag{9}$$

This yields two equations in two unknowns, which are solved simultaneously for A and B, as shown in Equations (10) and (11).

$$A = \frac{P_0\,(k - m\omega^2)}{(k - m\omega^2)^2 + (c\omega)^2} \tag{10}$$

$$B = \frac{-P_0\,c\omega}{(k - m\omega^2)^2 + (c\omega)^2} \tag{11}$$
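The simultaneous solution can be checked numerically. The sketch below uses the Section 3 example values as illustrative inputs, solves the two coefficient equations obtained by matching sine and cosine terms, (k − mω²)A − cωB = P0 and cωA + (k − mω²)B = 0, with `np.linalg.solve`, and compares the result with the closed-form expressions A = P0(k − mω²)/[(k − mω²)² + (cω)²] and B = −P0cω/[(k − mω²)² + (cω)²].

```python
import numpy as np

# Illustrative values from the Section 3 example (load test gives k = P / Delta).
m, xi, p0 = 15000.0, 0.03, 700.0
k = 2000.0 / 0.007
omega = 2.0 * np.pi * 0.9
c = 2.0 * xi * np.sqrt(k * m)           # c = 2 * xi * m * omega_n

# Matching sine and cosine coefficients gives two linear equations in A and B:
#   (k - m w^2) A - c w B = p0      (sine terms)
#   c w A + (k - m w^2) B = 0       (cosine terms)
a = k - m * omega**2
M = np.array([[a, -c * omega],
              [c * omega, a]])
A, B = np.linalg.solve(M, np.array([p0, 0.0]))

# Closed-form solution of the same system for comparison.
den = a**2 + (c * omega) ** 2
A_cf, B_cf = p0 * a / den, -p0 * c * omega / den
print(f"A = {A:.4e} m, B = {B:.4e} m, amplitude = {np.hypot(A, B):.4e} m")
```

Both constants come out negative here because the forcing frequency lies above the natural frequency, so the response is almost in antiphase with the force.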

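Applying the constants $A$ and $B$ in Equation (4) results in

$$u_p(t) = \frac{P_0}{(k - m\omega^2)^2 + (c\omega)^2}\left[(k - m\omega^2)\sin\omega t - c\omega\cos\omega t\right] \tag{12}$$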

Considering Equation (4) and the complementary and particular solutions, the complete expression for the response of the Single Degree of Freedom (SDOF) system under harmonic excitation is obtained as Equation (13), which includes the transient and steady-state responses.

The dimensionless response equation offers an alternative representation of the complete response described in Equation (13). This formulation uses dimensionless ratios to simplify analysis and facilitate generalization across diverse systems. One key dimensionless parameter is the frequency ratio, denoted $\beta$, which is defined as the ratio of the excitation frequency $\omega$ to the system’s natural frequency $\omega_n$, as expressed in Equation (14).

$$\beta = \frac{\omega}{\omega_n} \tag{14}$$

By introducing this dimensionless parameter, the response equation can be rewritten in a form that highlights the influence of excitation frequency relative to the system’s natural frequency. This approach facilitates comparisons between different systems and provides deeper insights into resonance behavior, damping effects, and the overall dynamic characteristics of the SDOF system under harmonic excitation. The steady-state response is often represented in dimensionless form. Applying the frequency ratio $\beta$ in Equation (12), and noting that $k - m\omega^2 = k(1-\beta^2)$ and $c\omega = 2\xi\beta k$, yields

$$u_p(t) = \frac{P_0}{k}\,\frac{1}{(1-\beta^2)^2 + (2\xi\beta)^2}\left[(1-\beta^2)\sin\omega t - 2\xi\beta\cos\omega t\right] \tag{15}$$

Therefore, the final response equation can be restated as presented in Equation (16).

2.2. Phase Angle

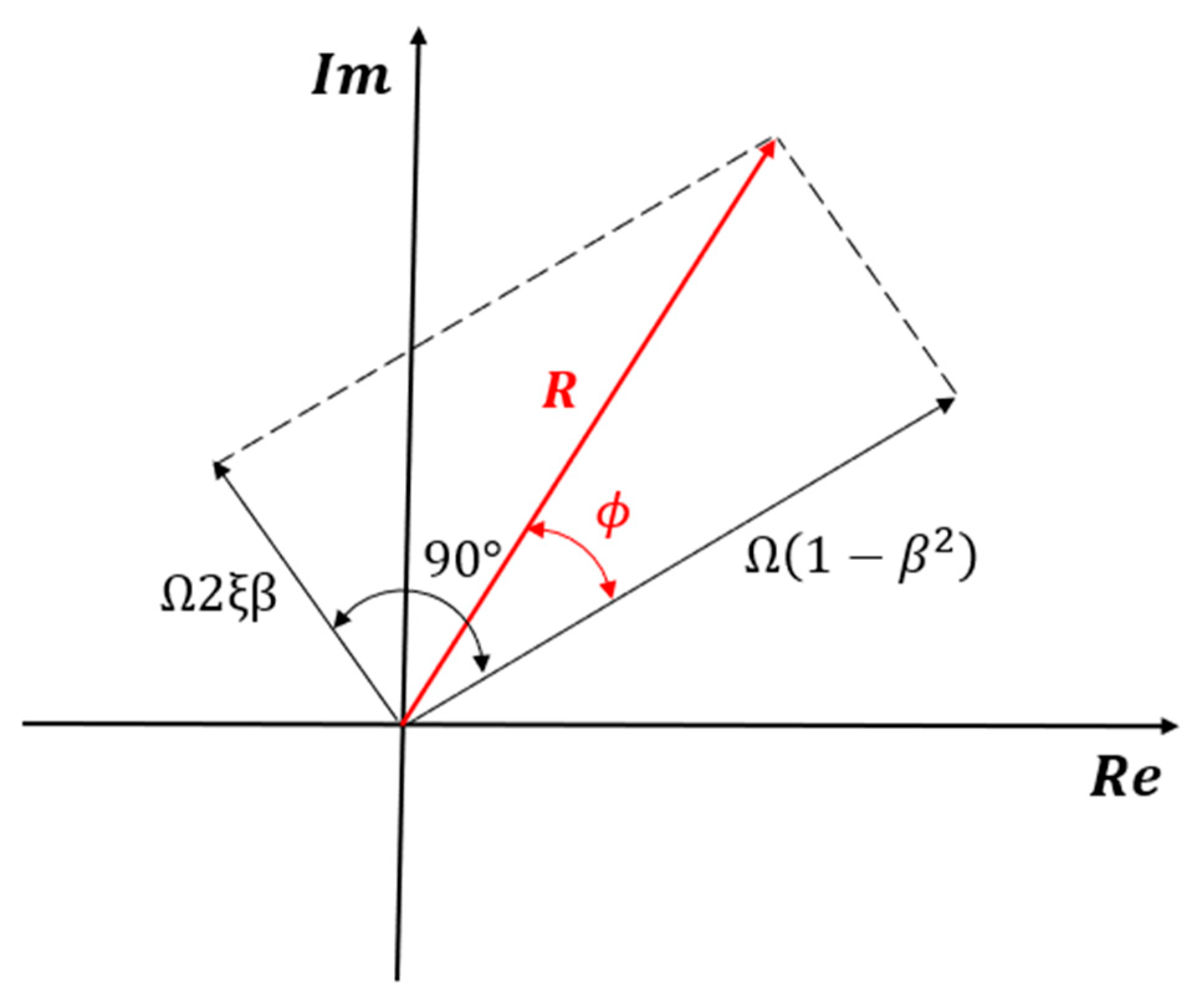

In the steady-state response given by Equation (15), the term $\frac{P_0}{k}\frac{1-\beta^2}{(1-\beta^2)^2+(2\xi\beta)^2}\sin\omega t$ can be designated Component 1, while the term $-\frac{P_0}{k}\frac{2\xi\beta}{(1-\beta^2)^2+(2\xi\beta)^2}\cos\omega t$ is referred to as Component 2. This decomposition allows a more straightforward interpretation of the system’s response by distinguishing between contributions from different dynamic effects. The steady-state response therefore includes two components: Component 1 is in phase with the applied harmonic force, while Component 2 is 90 degrees out of phase.

Since $\sin\omega t$ appears in both the harmonic forcing function $P_0\sin\omega t$ and Component 1, the two share the same phase and oscillate at the same frequency. Component 1 is therefore driven directly by, and remains synchronized with, the external forcing function.

Component 2 of the steady-state response arises from damping, which introduces a phase shift relative to the external forcing function. This component, which is proportional to $2\xi\beta\cos\omega t$, accounts for energy dissipation in the system and modifies both the amplitude and the phase of the overall response. Because $\cos\omega t$ is 90 degrees out of phase with $\sin\omega t$, Components 1 and 2 are orthogonal to each other. As a result, they can be represented as two separate vectors in a phase plane with a 90° phase difference. This vector representation, shown in Figure 2, clarifies how damping affects the system’s steady-state response by introducing a component that is phase-shifted relative to the external forcing function.

According to Figure 2, the red vector R is the vector sum of the two black component vectors and represents the actual steady-state response. Its length is the amplitude of the steady-state response, and the displacement at any given moment is obtained by projecting this rotating vector onto the real axis.

Since the applied force is in phase with the leading response vector (Component 1), the phase angle $\theta$ indicates how much the system’s response (red vector) lags the applied harmonic force.

As the damping level increases, the trailing vector (Component 2), whose length is proportional to $2\xi\beta$, becomes longer relative to the leading vector. As a result, below resonance (β < 1), the overall response vector lags further behind the applied force, increasing the phase shift.

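The amplitude of the steady-state response is the magnitude of the total response vector, $|R|$, as expressed in Equation (17).

$$|R| = \sqrt{\left[\frac{P_0}{k}\,\frac{1-\beta^2}{(1-\beta^2)^2+(2\xi\beta)^2}\right]^2 + \left[\frac{P_0}{k}\,\frac{2\xi\beta}{(1-\beta^2)^2+(2\xi\beta)^2}\right]^2} \tag{17}$$

Equation (17) can be restated by expanding and simplifying the terms under the square root:

$$|R| = \frac{P_0}{k}\,\frac{1}{\sqrt{(1-\beta^2)^2+(2\xi\beta)^2}} \tag{18}$$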

The phase lag, denoted $\theta$, is the angle between the applied force and the system’s response, as presented in Equation (19); it quantifies the phase shift between the external forcing function and the resulting motion of the system.

$$\theta = \tan^{-1}\left(\frac{2\xi\beta}{1-\beta^2}\right), \qquad 0 \le \theta \le \pi \tag{19}$$

This phase difference arises from damping and the system dynamics. Below resonance (β < 1), a higher damping ratio increases the phase lag, meaning the system reaches its peak displacement later relative to the driving force; above resonance, θ lies between 90° and 180°, and higher damping moves it back toward 90°. In all cases, the steady-state response lags the applied harmonic force by the phase angle $\theta$, so that $u_p(t) = |R|\sin(\omega t - \theta)$.
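The vector construction of Figure 2 can be reproduced numerically. This sketch uses hypothetical inputs (β = 0.8, ξ = 0.10, and a static deflection normalized to 1, chosen below resonance to match the vector picture), computes the two component amplitudes from the dimensionless steady-state solution, combines them into the resultant amplitude and phase lag, and checks that their sum equals a single sinusoid lagging the force by θ. Using `np.arctan2` rather than a plain arctangent keeps θ in the correct quadrant (0 to π) above resonance.

```python
import numpy as np


def steady_state_components(beta, xi, u_static):
    """Amplitudes of Component 1 (multiplies sin wt) and Component 2 (multiplies -cos wt)
    in the dimensionless steady-state solution."""
    d = (1.0 - beta**2) ** 2 + (2.0 * xi * beta) ** 2
    return u_static * (1.0 - beta**2) / d, u_static * (2.0 * xi * beta) / d


# Hypothetical inputs below resonance, with the static deflection normalized to 1.
beta, xi, u_static = 0.8, 0.10, 1.0
c1, c2 = steady_state_components(beta, xi, u_static)
R = np.hypot(c1, c2)             # length of the resultant (red) vector
theta = np.arctan2(c2, c1)       # phase lag, kept in [0, pi] by arctan2

# The two orthogonal components add up to one sinusoid lagging the force by theta.
wt = np.linspace(0.0, 4.0 * np.pi, 400)
assert np.allclose(c1 * np.sin(wt) - c2 * np.cos(wt), R * np.sin(wt - theta))
print(f"|R| = {R:.3f} x static deflection, theta = {np.degrees(theta):.1f} deg")
```

Repeating the calculation with a larger damping ratio at the same β < 1 gives a larger θ, which is the lengthening of the trailing vector described above.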

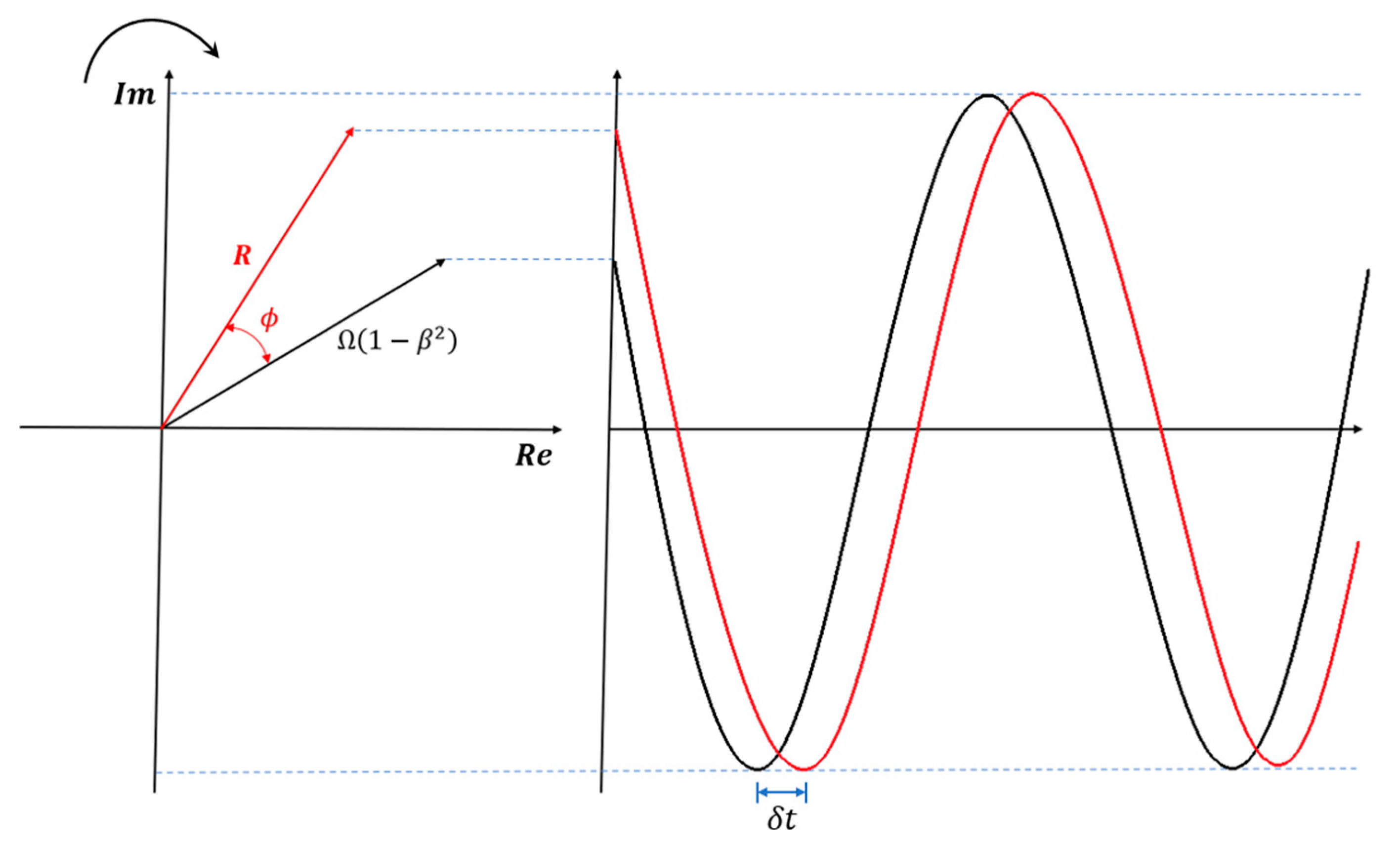

The black line in Figure 3 illustrates the waveform representing the frequency and phase of the applied force, $P_0\sin\omega t$, and the red graph presents the corresponding response waveform, $u_p(t)$, which lags the force by the phase angle $\theta$. By understanding the nature of the applied force or the specific harmonic force acting on the system, the steady-state response can be fully characterized, including the frequency and phase relationships between the applied force and the resulting motion. This allows for a comprehensive understanding of how the system behaves under harmonic excitation.

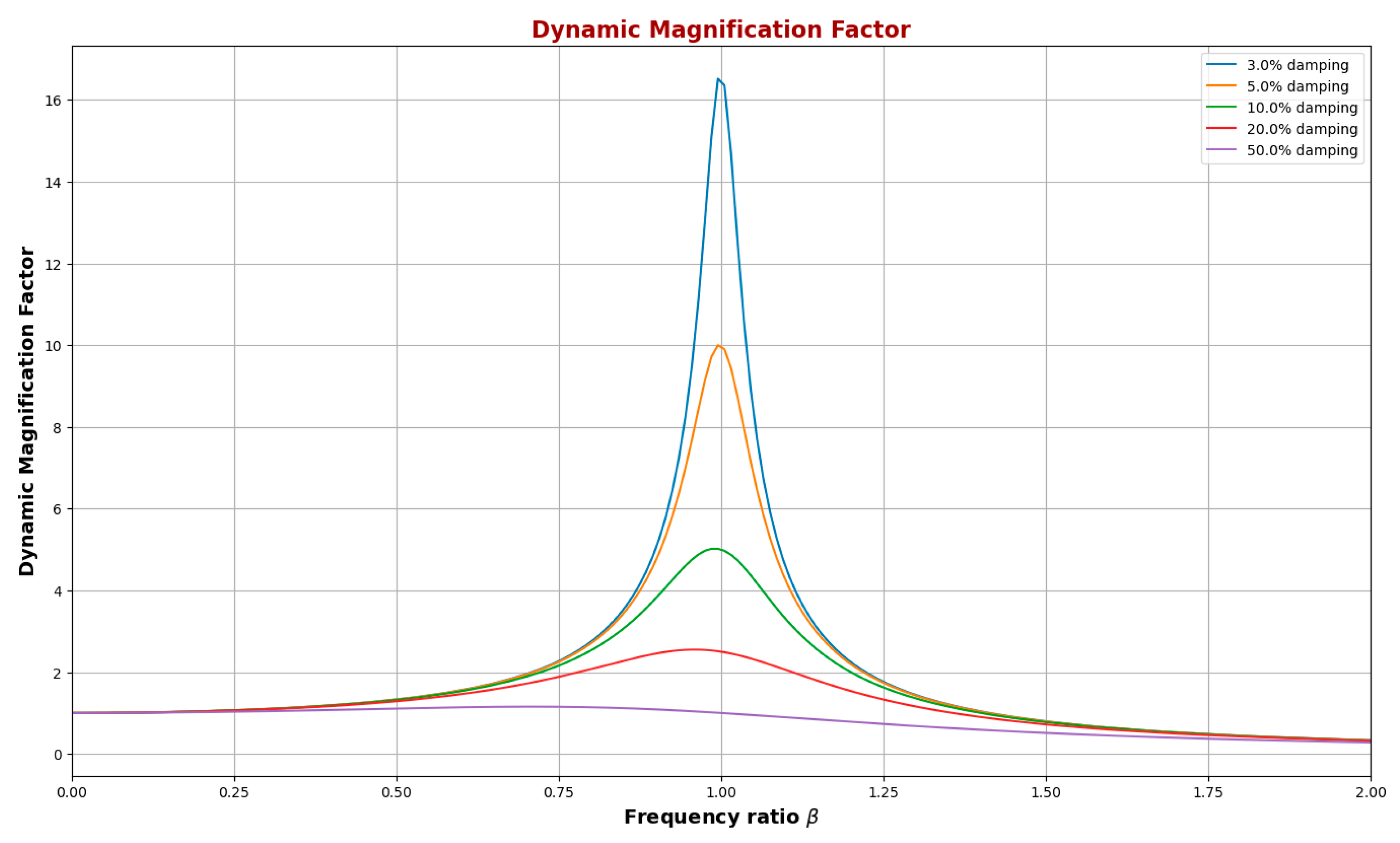

2.3. Dynamic Magnification Factor (DMF) and Resonance

The Dynamic Magnification Factor (DMF) is a crucial parameter in structural dynamics, defined as the ratio between the amplitude of the steady-state dynamic response and the deflection caused by a static load of the same magnitude. This factor serves as a powerful tool for assessing the extent to which dynamic loading amplifies a structure’s response compared to its static counterpart. It provides engineers with insights into the influence of oscillatory forces, helping them predict potential resonance effects and ensure the structural integrity and serviceability of engineering systems. Mathematically, it is expressed as

$$\text{DMF} = \frac{|R|}{P_0/k} \tag{20}$$

Considering Equations (18) and (20), the DMF can be stated as:

$$\text{DMF} = \frac{1}{\sqrt{(1-\beta^2)^2+(2\xi\beta)^2}} \tag{21}$$

The DMF and the phase angle are key parameters that define the behavior of a structure or mechanical system under harmonic excitation. Both depend on the forcing frequency $\omega$, the system’s natural frequency $\omega_n$, and the level of damping $\xi$. The DMF quantifies the amplification of displacement caused by dynamic effects, indicating how much a harmonic force magnifies the structural response beyond its static deflection. The phase angle represents the lag between the applied force and the system’s response. Together, these parameters fully characterize the steady-state response when the harmonic excitation’s frequency and amplitude are known.

Resonance occurs when a system is subjected to a periodic force whose frequency matches its natural frequency, leading to a significant increase in vibration amplitude. This occurs because the external force continuously supplies energy in sync with the system’s natural oscillations, leading to a buildup of motion that can cause structural damage or failure if not adequately controlled.

Figure 4 depicts the relationship between the frequency ratio (β) and the dynamic magnification factor (DMF) for various damping levels, ranging from 3% to 50%. The x-axis represents the frequency ratio β (dimensionless), while the y-axis shows the DMF (dimensionless).

The graph demonstrates the typical behavior of a damped system under harmonic excitation. As the frequency ratio approaches 1 (resonance), the DMF increases sharply and reaches its maximum at $\beta = \sqrt{1-2\xi^2}$, just below β = 1 for lightly damped systems. This peak indicates the resonance condition, where the oscillation amplitude is largest relative to the static deflection.

For the lowest damping values, 3% (blue curve) and 5% (orange curve), the peaks are the highest and sharpest around β = 1, reaching approximately 16.7 and 10.0, respectively, consistent with the peak value $1/(2\xi\sqrt{1-\xi^2})$. These sharp peaks reflect the high amplification of oscillations at resonance when damping is low. As damping increases, the peak value decreases and the curve flattens. For 10% damping (green curve), the peak drops to about 5, and the response becomes less sensitive to frequency variations around resonance. With 20% damping (red curve) and 50% damping (purple curve), the peaks fall to about 2.6 and 1.15, respectively, and the DMF remains moderate across the frequency range, illustrating a significant reduction in amplification as damping increases.
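The peak values read from Figure 4 can be cross-checked against Equation (21). The sketch below evaluates the DMF on a fine grid of frequency ratios for the five damping levels in the figure and compares each numerical maximum with the standard closed-form result, a peak of 1/(2ξ√(1 − ξ²)) at β = √(1 − 2ξ²), which holds for ξ < 1/√2. The β range of 0 to 3 is an assumption for illustration.

```python
import numpy as np


def dmf(beta, xi):
    """Dynamic magnification factor of Equation (21)."""
    return 1.0 / np.sqrt((1.0 - beta**2) ** 2 + (2.0 * xi * beta) ** 2)


beta = np.linspace(0.0, 3.0, 300001)      # assumed plotting range, step 1e-5
for xi in (0.03, 0.05, 0.10, 0.20, 0.50):
    curve = dmf(beta, xi)
    i = np.argmax(curve)
    peak_cf = 1.0 / (2.0 * xi * np.sqrt(1.0 - xi**2))   # closed form, xi < 1/sqrt(2)
    beta_cf = np.sqrt(1.0 - 2.0 * xi**2)
    print(f"xi = {xi:.2f}: numerical peak {curve[i]:.3f} at beta = {beta[i]:.4f}; "
          f"closed form {peak_cf:.3f} at beta = {beta_cf:.4f}")
```

At exactly β = 1 the DMF reduces to 1/(2ξ), which is why lightly damped systems are so sensitive to resonance.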

This trend highlights the role of damping in mitigating the system’s resonant response. Higher damping reduces the amplitude of oscillations at resonance and spreads the amplification over a broader, flatter band of frequencies. Systems with higher damping are therefore less likely to experience excessive oscillations or resonance-induced damage, making damping an essential design factor for structures subjected to dynamic loads.

The DMF quantifies this effect by indicating how much the system’s response is amplified under dynamic loading. As the forcing frequency approaches the natural frequency, the DMF reaches its peak, highlighting the risk of excessive displacement, while the phase angle shifts from in-phase behavior at low frequencies to out-of-phase behavior at higher frequencies. Understanding the relationship between the DMF, phase angle, and system parameters therefore provides critical insights into resonance, energy dissipation, and overall vibratory behavior, and enables engineers to assess the effects of dynamic loading and anticipate potential resonance issues. To mitigate excessive vibrations and minimize resonance risks, structural design strategies can incorporate damping mechanisms, modify system stiffness, or adjust excitation conditions to achieve optimal performance.

While Eurocode 8 defines seismic design spectra for transient ground motions, the present study adopts a linear single-degree-of-freedom (SDOF) system under harmonic excitation as a conceptual and computational benchmark. This simplification enables systematic validation of the proposed hybrid PINN–EKF framework under well-controlled dynamic conditions, where the analytical solution is known and the effects of parameter uncertainty and noise can be rigorously assessed. The insights gained from this harmonic analysis provide a foundational step toward extending the method to nonlinear and multi-degree-of-freedom (MDOF) systems driven by recorded or spectrum-compatible seismic excitations, thereby supporting future code calibration and performance-based design developments.

3. Conventional Numerical Analysis of Structural Dynamics

In this section, structural dynamic principles are applied to analyze the response of a lightweight steel frame supporting heavy machinery with a total mass of m = 15,000 kg; the frame’s self-weight is assumed negligible. An experimental impact test estimates the inherent structural damping as ξ = 0.03. The structure is laterally constrained, which prevents twisting and significant vertical movement, so it can be modeled as an SDOF system under harmonic excitation. A load test shows that a lateral force of P = 2000 N produces a lateral displacement of Δ = 7 mm, giving a lateral stiffness of k = P/Δ ≈ 285.7 kN/m. For a harmonic force of magnitude P0 = 700 N and a forcing frequency of f = 0.9 Hz, the dynamic magnification factor and the phase shift between the applied force and the steady-state response were determined. Additionally, the structural response over t ∈ [0, 60] s was analyzed, and the position of the mass at t = 45 s was determined for the case in which the harmonic force is removed at t = 10 s.
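The derived system properties follow directly from these data. The sketch below computes the stiffness from the load test, the undamped and damped natural frequencies, the damping coefficient from c = 2ξmω_n, the frequency ratio, and the static deflection under P0; the variable names are illustrative.

```python
import numpy as np

# Data from the example: mass, damping ratio, load test and harmonic load.
m, xi = 15000.0, 0.03          # kg, -
P, delta = 2000.0, 0.007       # N, m (static load test)
p0, f = 700.0, 0.9             # N, Hz (harmonic force)

k = P / delta                              # lateral stiffness, N/m
omega_n = np.sqrt(k / m)                   # undamped natural circular frequency, rad/s
omega_d = omega_n * np.sqrt(1.0 - xi**2)   # damped natural frequency, rad/s
c = 2.0 * xi * m * omega_n                 # damping coefficient, N*s/m
omega = 2.0 * np.pi * f                    # forcing circular frequency, rad/s
beta = omega / omega_n                     # frequency ratio
u_static = p0 / k                          # static deflection under p0, m

print(f"k = {k:.0f} N/m, f_n = {omega_n / (2 * np.pi):.3f} Hz, "
      f"c = {c:.0f} N*s/m, beta = {beta:.3f}, u_static = {u_static:.5f} m")
```

Since β > 1, the frame is excited above its natural frequency, which is consistent with the large phase lag reported in Section 3.1.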

In the next section, machine learning and optimization algorithms are introduced to predict and refine the system’s parameters, and their performance is compared with the structural dynamic approach to evaluate their effectiveness in analyzing and optimizing the structural response.

3.1. DMF and Phase Shift

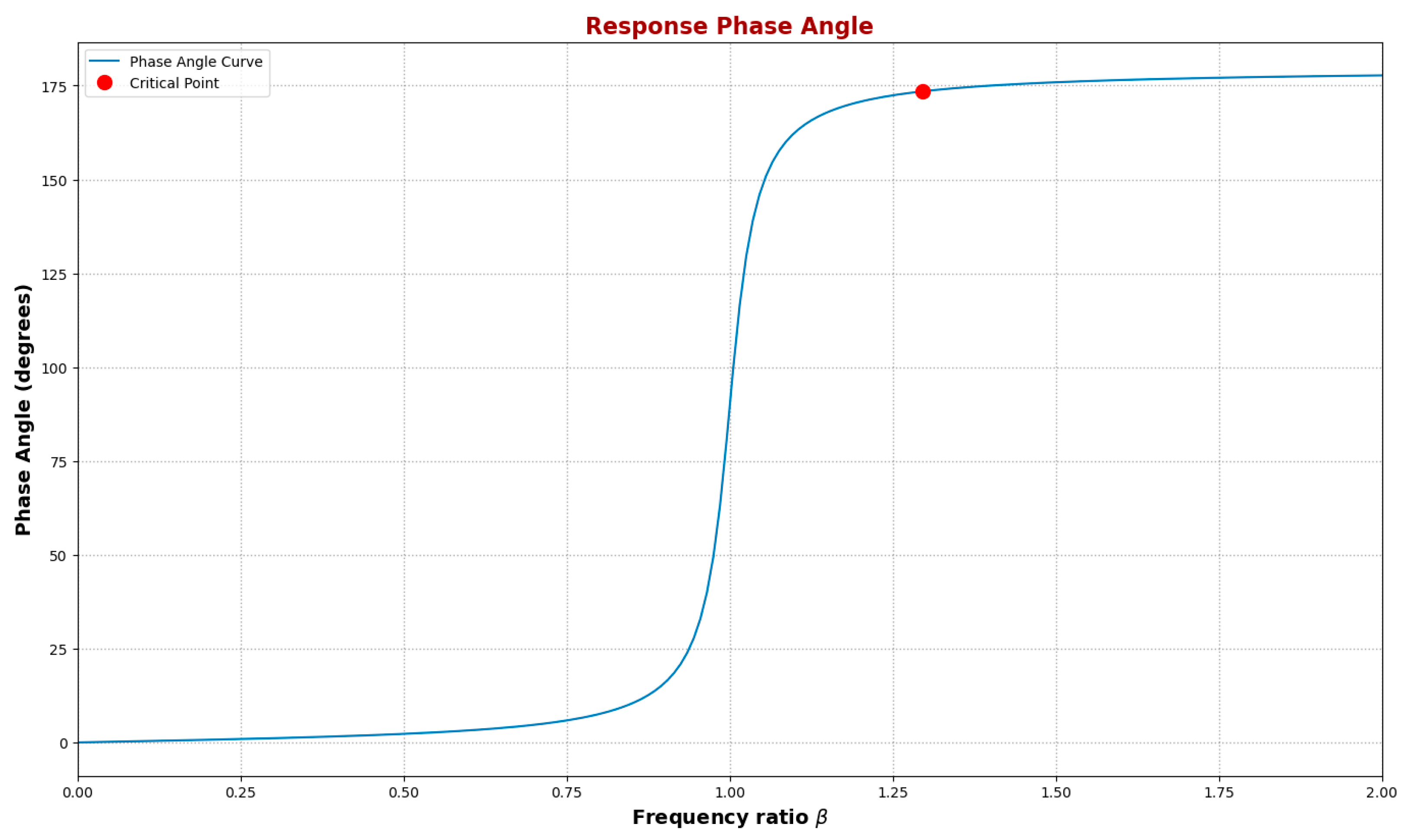

Figure 5 is obtained by applying Equation (21) for the dynamic magnification factor (DMF) and Equation (19) for the phase shift between the applied force and the system's response.

Figure 5 illustrates the Response Phase Angle as a function of the frequency ratio (β) in a harmonically excited SDOF system, where the phase angle represents the lag between the applied force and the system’s response. It illustrates the expected phase behavior of a damped SDOF system under harmonic excitation, with a key characteristic being the transition from in-phase to out-of-phase response. Beyond the resonance region, the system’s response significantly lags the excitation.

At low frequency ratios (β < 1.0), the phase angle remains close to 0°, indicating that the system's response is nearly in phase with the applied force. As the frequency ratio approaches β = 1.0, a rapid transition occurs, marking the resonance region. In this range, the phase angle shifts from near 0° to near 180°, passing through 90° at β = 1.0, so the system transitions from being in phase with the applied force to almost entirely out of phase.

Beyond the resonance region (β > 1.0), the phase angle approaches 180°, indicating that the response lags the applied force by nearly half a cycle. The operating point of the present system, marked at β ≈ 1.296, corresponds to a phase difference of approximately 173.5° (3.028 radians), a dynamic magnification factor (DMF) of 1.463, and a steady-state dynamic amplitude of 0.0036 m. The static deflection, obtained by dividing the force magnitude by the stiffness, is 0.0025 m.
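The operating-point values can be checked numerically from the standard SDOF expressions for the DMF and phase angle. This is a minimal sketch with illustrative names; `arctan2` is used so that the angle falls in the correct quadrant above resonance, where 1 − β² is negative.

```python
import numpy as np

m, xi = 15_000.0, 0.03
k = 2000.0 / 0.007                   # stiffness from the load test [N/m]
P0, omega = 700.0, 2 * np.pi * 0.9   # force amplitude [N], forcing frequency [rad/s]
beta = omega / np.sqrt(k / m)        # frequency ratio

# Dynamic magnification factor and phase lag of the steady-state response
dmf = 1.0 / np.sqrt((1 - beta**2) ** 2 + (2 * xi * beta) ** 2)
phase = np.arctan2(2 * xi * beta, 1 - beta**2)   # lag in [0, pi] rad

u_static = P0 / k                    # static deflection [m]
u_dynamic = dmf * u_static           # steady-state amplitude [m]

print(f"DMF = {dmf:.4f}, phase = {np.degrees(phase):.1f} deg ({phase:.3f} rad)")
print(f"static = {u_static:.5f} m, dynamic amplitude = {u_dynamic:.5f} m")
```

The script gives a DMF of about 1.46, a phase lag of about 173.5° (3.028 rad), a static deflection of 0.00245 m, and a steady-state amplitude of about 0.0036 m.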

Table 1 summarizes the results presented above for the damped SDOF system.

3.2. Structural Response

Equation (16) is used to calculate the system's response to the harmonic loading. To do so, the two constants of the transient component must first be determined from the initial conditions: at t = 0, both the position and the velocity are zero. Applying the velocity condition requires differentiating the displacement expression, so some substitutions are first introduced to make the equation more manageable.

Applying Equations (22) and (23), Equation (16) is restated in terms of these substitutions.

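Each of the four terms of the restated displacement expression can then be differentiated individually: the two transient terms, each the product of a decaying exponential and a harmonic function, are differentiated using the product rule, whereas the two steady-state harmonic terms are differentiated using the chain rule.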

The velocity can therefore be formulated as presented in Equation (31).
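For reference, if Equation (16) takes the standard textbook form for a force P0 sin(ωt) (an assumption here, since the restated equations are not reproduced in this section; the coefficient names A, B, C, D are illustrative), the displacement, the velocity, and the constants fixed by the zero initial conditions read:

$$
\begin{aligned}
u(t) &= e^{-\xi\omega_n t}\big(A\cos\omega_D t + B\sin\omega_D t\big) + C\sin\omega t + D\cos\omega t,\\
\dot u(t) &= e^{-\xi\omega_n t}\Big[(\omega_D B - \xi\omega_n A)\cos\omega_D t - (\omega_D A + \xi\omega_n B)\sin\omega_D t\Big] + C\omega\cos\omega t - D\omega\sin\omega t,\\
C &= \frac{P_0}{k}\,\frac{1-\beta^2}{(1-\beta^2)^2 + (2\xi\beta)^2}, \qquad D = \frac{P_0}{k}\,\frac{-2\xi\beta}{(1-\beta^2)^2 + (2\xi\beta)^2},\\
u(0) = 0 &\;\Rightarrow\; A = -D, \qquad \dot u(0) = 0 \;\Rightarrow\; B = \frac{\xi\omega_n A - C\omega}{\omega_D},
\end{aligned}
$$

with $\omega_D = \omega_n\sqrt{1-\xi^2}$. Under this assumption and with the Section 3 data, A ≈ 4.08 × 10⁻⁴ m, B ≈ 4.63 × 10⁻³ m, C ≈ −3.56 × 10⁻³ m, and D ≈ −4.08 × 10⁻⁴ m.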

Substituting the displacement and velocity expressions into the zero initial conditions, u(0) = 0 and u̇(0) = 0, yields the two unknown constants of the transient component.

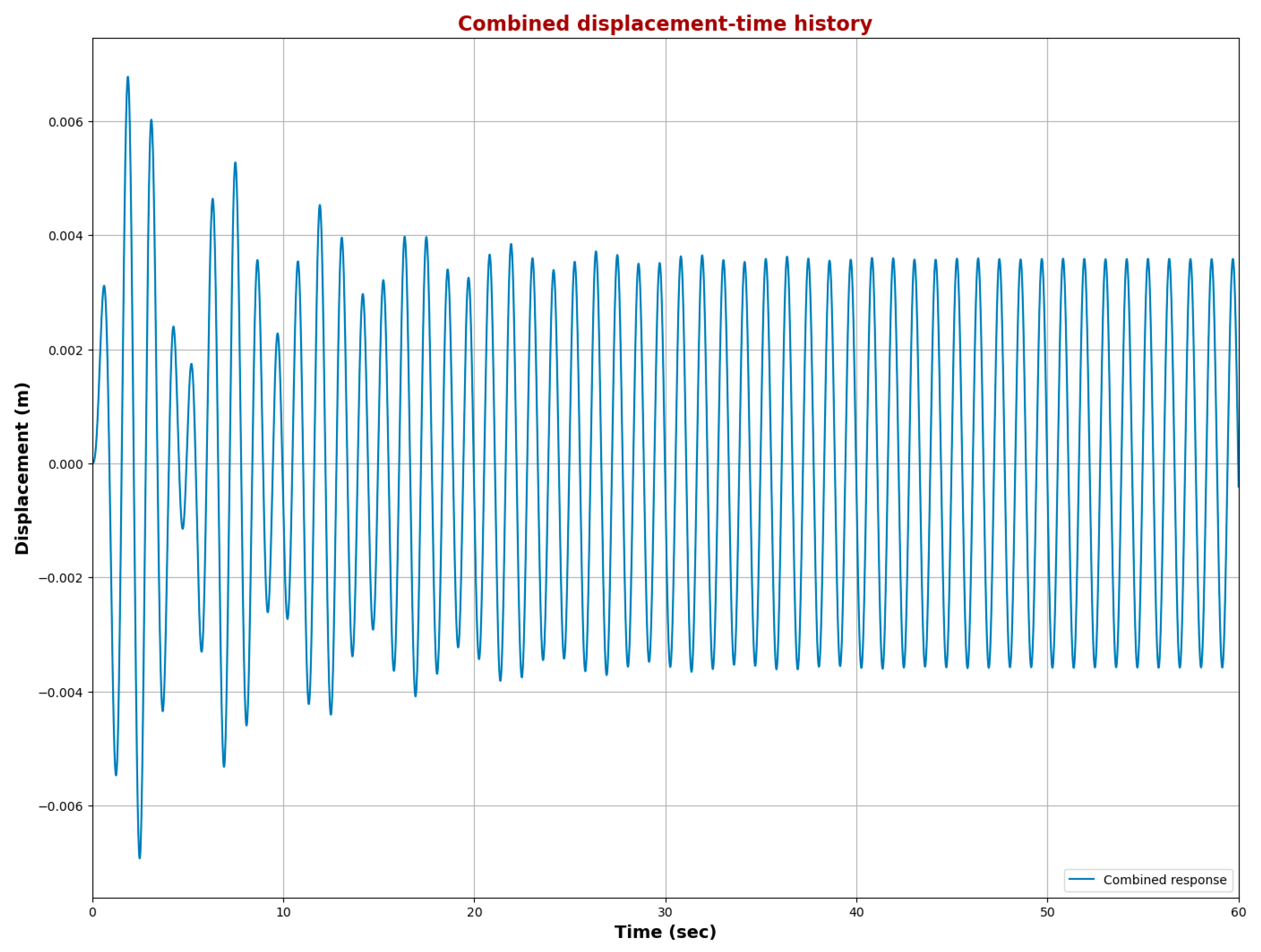

As shown in Figure 6, the transient response gradually diminishes over time due to damping, so the long-term behavior is characterized by the steady-state response. Once the transient effects dissipate, the system settles into periodic oscillations at the forcing frequency, with an amplitude governed by the force magnitude, stiffness, damping, and frequency ratio; the steady-state response is therefore the primary focus for analysis and practical applications.

The displacement-time history in the figure captures both transient and steady-state components. Initially, the transient response exhibits oscillations with an amplitude of approximately ±0.004 m, which progressively decay as energy dissipates. By t ≈ 20 s, the transient effects become negligible, and the system transitions into steady-state behavior.

In this steady-state phase, the displacement follows a sinusoidal pattern with a nearly constant amplitude of about ±0.0036 m, equal to the DMF times the static deflection. The static displacement is also plotted as a reference, along with the steady-state amplitude, which marks the stabilized oscillation level.

This response confirms that after an initial disturbance, the system undergoes damped oscillatory motion before settling into a stable vibration pattern. The well-defined steady-state oscillations and the decay of the transient component align with theoretical expectations, illustrating the balance between external forcing and damping.
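The combined response discussed for Figures 6 and 7 can be reproduced from the closed-form solution. The sketch below assumes the force is P0 sin(ωt) (the forcing phase is not stated in this section) and uses illustrative names A, B, C, D for the transient and steady-state coefficients; it is a check of the theory, not the authors' plotting code.

```python
import numpy as np

# Section 3 data; the force is assumed to be P0*sin(omega*t)
m, xi, k = 15_000.0, 0.03, 2000.0 / 0.007
P0, omega = 700.0, 2 * np.pi * 0.9
omega_n = np.sqrt(k / m)
omega_d = omega_n * np.sqrt(1 - xi**2)
beta = omega / omega_n

# Steady-state (particular) coefficients
den = (1 - beta**2) ** 2 + (2 * xi * beta) ** 2
C = (P0 / k) * (1 - beta**2) / den
D = (P0 / k) * (-2 * xi * beta) / den
# Transient constants from u(0) = 0 and du/dt(0) = 0
A = -D
B = (xi * omega_n * A - C * omega) / omega_d

def transient(t):
    return np.exp(-xi * omega_n * t) * (A * np.cos(omega_d * t) + B * np.sin(omega_d * t))

def steady(t):
    return C * np.sin(omega * t) + D * np.cos(omega * t)

t = np.linspace(0.0, 60.0, 6001)        # 0.01 s resolution over [0, 60] s
u = transient(t) + steady(t)            # total displacement [m]

amp_transient0 = np.hypot(A, B)         # initial transient amplitude [m]
amp_steady = np.hypot(C, D)             # steady-state amplitude [m]
decay_20s = np.exp(-xi * omega_n * 20.0)  # fraction of transient envelope left at 20 s
print(f"transient amplitude at t=0: {amp_transient0:.4f} m")
print(f"steady-state amplitude: {amp_steady:.4f} m")
print(f"transient envelope remaining at 20 s: {decay_20s:.1%}")
```

Under these assumptions the steady-state amplitude equals DMF × P0/k ≈ 0.0036 m, and the transient envelope e^(−ξω_n t) has decayed to about 7% of its initial value by t = 20 s, consistent with the transient becoming negligible around that time.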

Figure 7 illustrates the combined displacement-time history of a dynamic system, capturing both the transient and steady-state responses. Initially, the displacement exhibits high-amplitude oscillations, reaching approximately ±0.0065 m, which gradually diminish over time due to damping. This transient phase, evident in the first 20 s, is characterized by a reduction in oscillation magnitude as energy dissipates from the system.

Beyond t ≈ 20 s, the transient response becomes negligible, and the system settles into a periodic steady-state oscillation with a nearly constant amplitude of approximately ±0.0036 m. In this phase, the system oscillates at the excitation frequency (0.9 Hz), with an amplitude set by the force magnitude, stiffness, damping, and frequency ratio.

The observed behavior aligns with theoretical expectations: an initial disturbance triggers a damped transient response, followed by a steady-state vibration governed by the balance between external forcing and damping. The results confirm that after an initial phase of energy dissipation, the system exhibits predictable harmonic motion with a stable amplitude, making the steady-state response the primary focus for long-term analysis.

3.3. Free Vibration Response

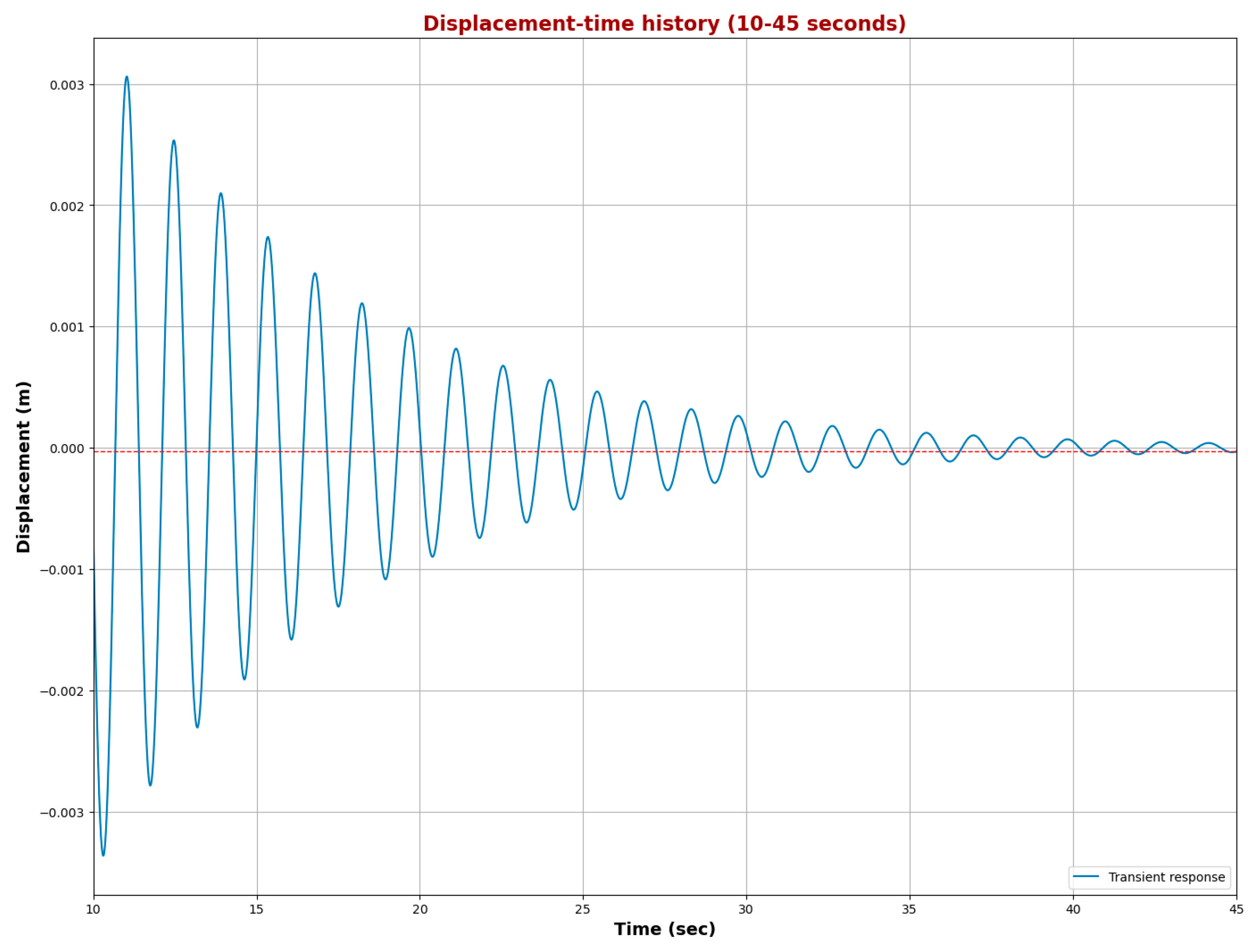

The harmonic excitation is removed at t = 10 s, leading to a change in the system’s dynamic response. Initially, the system exhibits forced vibration behavior due to the applied harmonic force, characterized by oscillations influenced by both transient and steady-state responses. Once the external force is removed, the system transitions into free vibration, where its natural frequency and damping characteristics govern its motion.

To determine the position of the mass at t = 45 s, the displacement must be obtained from the governing equation of motion in two stages. Before the force is removed, the displacement is the sum of the transient and steady-state components. After t = 10 s, the system undergoes damped free vibration, in which the amplitude decays exponentially at a rate set by the damping ratio and the natural frequency (e^(−ξω_n t)). Evaluating the free-vibration displacement at t = 45 s therefore gives the position of the mass, reflecting the long-term behavior of the system without external excitation.

The displacement and velocity at t = 10 s obtained in the previous step now serve as the initial conditions for the free-vibration response. From this point on, only the transient (free) response is of interest: the steady-state component no longer exists, and the motion evolves solely from these new initial conditions.

To proceed, the two constants that characterize the system's transient response must be determined. These constants depend on the initial displacement and velocity established in the previous step, so the general expressions for displacement and velocity are restated with the new initial conditions. This allows the motion to be characterized at any time during the free-vibration phase, including t = 45 s. Evaluating the displacement and velocity of the forced response at t = 10 s and applying them as initial conditions to both expressions yields two simultaneous equations.

Expressing these two equations in matrix form yields Equation (37). Solving Equation (37) gives the two constants, and evaluating the free-vibration expression at t = 45 s gives a displacement of approximately −0.00003 m, shown by the red line in Figure 8.

Figure 8 shows the displacement-time history of the system from 10 to 45 s, illustrating its transient response. The displacement is plotted on the vertical axis in meters, while time is represented on the horizontal axis in seconds. The system undergoes oscillations with progressively decreasing amplitude, indicating the damping effect over time. Initially, there is a significant peak around 10 s, where the displacement reaches approximately 0.003 m. The displacement then decreases with each subsequent peak and trough, showing an oscillatory pattern. By the end of the graph at 45 s, the oscillations have nearly damped out, and the displacement approaches zero, indicating that the system has stabilized. According to the analysis above, the displacement response at t = 45 s is −0.00003 m. This final displacement value is indicated by the red line in the graph, which shows the system’s near-zero displacement at this time, confirming that the system’s oscillations have significantly damped and that the transient phase has ended.
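The two-stage calculation of Section 3.3 (forced response up to t = 10 s, then free vibration) can be sketched as below. It assumes a force P0 sin(ωt) and measures free-vibration time from the release instant, τ = t − 10 s, so the 2 × 2 system solved here, [[1, 0], [−ξω_n, ω_D]]·[A_f, B_f]ᵀ = [u(10), u̇(10)]ᵀ, is a local-time analogue of Equation (37) and may differ in form from the authors' version. All function names are illustrative.

```python
import numpy as np

# Section 3 data; force assumed to be P0*sin(omega*t), removed at t_off = 10 s
m, xi, k = 15_000.0, 0.03, 2000.0 / 0.007
P0, omega = 700.0, 2 * np.pi * 0.9
omega_n = np.sqrt(k / m)
omega_d = omega_n * np.sqrt(1 - xi**2)
a = xi * omega_n                       # exponential decay rate [1/s]
beta = omega / omega_n

# Forced response from rest (closed-form coefficients)
den = (1 - beta**2) ** 2 + (2 * xi * beta) ** 2
C = (P0 / k) * (1 - beta**2) / den
D = (P0 / k) * (-2 * xi * beta) / den
A = -D
B = (a * A - C * omega) / omega_d

def forced_state(t):
    """Displacement and velocity of the forced response at time t."""
    e = np.exp(-a * t)
    cd, sd = np.cos(omega_d * t), np.sin(omega_d * t)
    u = e * (A * cd + B * sd) + C * np.sin(omega * t) + D * np.cos(omega * t)
    v = (e * ((omega_d * B - a * A) * cd - (omega_d * A + a * B) * sd)
         + C * omega * np.cos(omega * t) - D * omega * np.sin(omega * t))
    return u, v

def free_vibration(tau, u0, v0):
    """Damped free vibration a time tau after release from state (u0, v0)."""
    # Local-time initial-condition system: A_f = u0, -a*A_f + omega_d*B_f = v0
    M = np.array([[1.0, 0.0], [-a, omega_d]])
    A_f, B_f = np.linalg.solve(M, np.array([u0, v0]))
    return np.exp(-a * tau) * (A_f * np.cos(omega_d * tau) + B_f * np.sin(omega_d * tau))

t_off, t_eval = 10.0, 45.0
u10, v10 = forced_state(t_off)
u45 = free_vibration(t_eval - t_off, u10, v10)
print(f"u(10) = {u10:.3e} m, v(10) = {v10:.3e} m/s, u(45) = {u45:.2e} m")
```

Under these assumptions the script gives u(10) ≈ −7.4 × 10⁻⁴ m, u̇(10) ≈ −1.48 × 10⁻² m/s, and u(45) ≈ −3.1 × 10⁻⁵ m, in line with the value of about −0.00003 m reported above.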

4. Data Assimilation Model for Fitting Displacement Curves

In the preceding analyses, the numerical solution of the single-degree-of-freedom (SDOF) harmonically excited system was obtained under the ideal assumption that all model parameters were known exactly. In real-world engineering, however, many of these parameters—such as mass, damping coefficient, stiffness, excitation amplitude, and frequency—must be estimated through measurements, which are inherently prone to noise and uncertainty. These discrepancies between measured and actual values result in deviations in the predicted displacement-time response from the ideal numerical solution. The closer the estimated parameters are to their exact values, the more accurately the system’s dynamic behavior can be reproduced, making parameter estimation a critical task in engineering applications.

Despite the existence of an analytical solution to the linear second-order equation of motion, its direct application in practice often encounters several challenges:

Parameter Uncertainty: Real-world parameters vary due to manufacturing tolerances, aging, and environmental effects, which reduces the reliability of purely analytical predictions.

Sensitivity to Initial Conditions: Analytical solutions are highly dependent on accurate initial conditions, which are challenging to measure precisely in practice.

Measurement Noise and Sparse Sampling: Observational data are typically noisy and collected at discrete intervals, limiting the resolution and reliability of direct comparisons with model predictions.

Model Idealization Bias: Simplified models often overlook complex behaviors, such as nonlinear damping and stochastic external forces, thereby failing to capture the full dynamics of real structures.

To overcome these limitations, data assimilation techniques, such as the Extended Kalman Filter (EKF), are employed. These methods integrate numerical models with observational data, correcting predictions by accounting for parameter uncertainties and measurement errors. EKF enhances system identification by providing real-time updates, quantifying estimation uncertainty, and improving the accuracy and robustness of vibration monitoring and control systems. Even when analytical solutions are available, data assimilation remains a vital tool due to its adaptability and reliability in complex, uncertain environments.

4.1. The EKF Method

The Extended Kalman Filter (EKF) is an extension of the classical Kalman Filter (KF) designed for state estimation in nonlinear systems. The standard KF is derived for linear systems with Gaussian noise, whereas real-world engineering systems often exhibit nonlinear behavior, which precludes its direct application.

The core idea of the EKF is to preserve the recursive structure of the KF by locally linearizing the nonlinear system. This is achieved by a first-order Taylor expansion of the nonlinear state and observation functions around the current state estimate, neglecting higher-order terms. The partial derivatives of these functions with respect to the state variables form the Jacobian matrices, which serve as linear approximations of the system dynamics and observation models.

In the EKF framework, both the state transition and observation equations are linearized independently. The estimation process consists of two sequential steps. In the prediction step, the algorithm uses the system model and the previous state estimate to forecast the current state and its associated covariance. This predicted state is then refined in the update step, where actual observational data are incorporated to correct the prediction, yielding an improved, more accurate state estimate.

Through this approach, the EKF extends Kalman filtering to a broad class of nonlinear dynamic systems, enabling more accurate and robust estimation under realistic conditions. Consider a general nonlinear system governed by the following state and observation equations:

$$
\mathbf{x}_k = f(\mathbf{x}_{k-1}, \mathbf{u}_{k-1}) + \mathbf{w}_{k-1}, \qquad \mathbf{z}_k = h(\mathbf{x}_k) + \mathbf{v}_k,
$$

where $\mathbf{x}_k$ is the state vector at time step $k$; $\mathbf{u}_{k-1}$ is the control input at time step $k-1$; $\mathbf{z}_k$ is the observation vector at time step $k$; $f(\cdot)$ is the nonlinear state transition function; $h(\cdot)$ is the nonlinear observation function; $\mathbf{w}_{k-1} \sim \mathcal{N}(\mathbf{0}, \mathbf{Q})$ is the process noise with covariance matrix $\mathbf{Q}$; and $\mathbf{v}_k \sim \mathcal{N}(\mathbf{0}, \mathbf{R})$ is the observation noise with covariance matrix $\mathbf{R}$.

The basic EKF procedure can be summarized as follows. First, the state estimate and covariance are initialized:

$$
\hat{\mathbf{x}}_0 = \mathbb{E}[\mathbf{x}_0], \qquad \mathbf{P}_0 = \mathbb{E}\big[(\mathbf{x}_0 - \hat{\mathbf{x}}_0)(\mathbf{x}_0 - \hat{\mathbf{x}}_0)^{\mathsf T}\big],
$$

where $\hat{\mathbf{x}}_0$ is the initial state estimate and $\mathbf{P}_0$ is the initial state covariance matrix. At each time step, the prediction step (state prediction and covariance prediction) is performed first, as given in Equations (40) and (41).

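$$
\hat{\mathbf{x}}_{k|k-1} = f(\hat{\mathbf{x}}_{k-1|k-1}, \mathbf{u}_{k-1}), \qquad \mathbf{P}_{k|k-1} = \mathbf{F}_{k-1}\,\mathbf{P}_{k-1|k-1}\,\mathbf{F}_{k-1}^{\mathsf T} + \mathbf{Q},
$$

where $\hat{\mathbf{x}}_{k|k-1}$ is the a priori state estimate at time step $k$, $\mathbf{P}_{k|k-1}$ is the a priori covariance matrix, and $\mathbf{F}_{k-1} = \partial f / \partial \mathbf{x}$ is the Jacobian matrix of the state transition function $f$ evaluated at $\hat{\mathbf{x}}_{k-1|k-1}$. The update step, which corrects the state estimate and covariance using the observed data, is then performed according to Equations (43)–(45).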

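$$
\begin{aligned}
\mathbf{K}_k &= \mathbf{P}_{k|k-1}\mathbf{H}_k^{\mathsf T}\big(\mathbf{H}_k\mathbf{P}_{k|k-1}\mathbf{H}_k^{\mathsf T} + \mathbf{R}\big)^{-1},\\
\hat{\mathbf{x}}_{k|k} &= \hat{\mathbf{x}}_{k|k-1} + \mathbf{K}_k\big(\mathbf{z}_k - h(\hat{\mathbf{x}}_{k|k-1})\big),\\
\mathbf{P}_{k|k} &= (\mathbf{I} - \mathbf{K}_k\mathbf{H}_k)\,\mathbf{P}_{k|k-1},
\end{aligned}
$$

where $\mathbf{K}_k$ denotes the Kalman gain, $\hat{\mathbf{x}}_{k|k}$ is the a posteriori state estimate at time step $k$, $\mathbf{P}_{k|k}$ represents the a posteriori covariance matrix, and $\mathbf{H}_k = \partial h / \partial \mathbf{x}$ is the Jacobian matrix of the observation function $h$ evaluated at $\hat{\mathbf{x}}_{k|k-1}$.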
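The prediction and update equations can be collected into a single reusable function. This is a minimal NumPy sketch, not the authors' implementation: the control input is assumed to be folded into `f` (for example, through a closure over the current time), and the function name `ekf_step` is illustrative.

```python
import numpy as np

def ekf_step(x, P, z, f, F_jac, h, H_jac, Q, R):
    """One EKF cycle: predict with f, then update with observation z (None = no observation)."""
    # Prediction: a priori estimate and covariance
    F = F_jac(x)                        # Jacobian of f at the previous estimate
    x_pred = f(x)
    P_pred = F @ P @ F.T + Q
    if z is None:                       # no measurement at this step
        return x_pred, P_pred
    # Update: Kalman gain, a posteriori estimate and covariance
    H = H_jac(x_pred)                   # Jacobian of h at the predicted state
    S = H @ P_pred @ H.T + R            # innovation covariance
    K = P_pred @ H.T @ np.linalg.inv(S)
    x_post = x_pred + K @ (np.atleast_1d(z) - h(x_pred))
    P_post = (np.eye(len(x)) - K @ H) @ P_pred
    return x_post, P_post

# Usage: a 1-D state observed directly (f and h are identities, so the EKF reduces to the KF)
x1, P1 = ekf_step(np.array([0.0]), np.eye(1), 1.0,
                  f=lambda x: x, F_jac=lambda x: np.eye(1),
                  h=lambda x: x, H_jac=lambda x: np.eye(1),
                  Q=np.zeros((1, 1)), R=np.eye(1))
print(x1, P1)
```

In the usage example the prior and measurement variances are equal, so the gain is 0.5 and the estimate moves halfway toward the observation while the variance halves.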

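For this case, the parameters $\theta = \{c, P_0, \omega\}$ are treated as fixed, known quantities and are used directly in the system model. The EKF therefore estimates only the state vector $\mathbf{x} = [u,\ \dot{u}]^{\mathsf T}$. Because only the displacement $u$ is observed, the observation matrix is $\mathbf{H} = [1\ \ 0]$. The vector form of the continuous-time state equation is

$$
\dot{\mathbf{x}} = \begin{bmatrix} \dot{u} \\ \big(P_0\sin(\omega t) - c\,\dot{u} - k\,u\big)/m \end{bmatrix},
$$

and the state Jacobian is

$$
\mathbf{J} = \frac{\partial \dot{\mathbf{x}}}{\partial \mathbf{x}} = \begin{bmatrix} 0 & 1 \\ -k/m & -c/m \end{bmatrix}.
$$

The discretization is approximated to first order in the time step, $\mathbf{F} \approx \mathbf{I} + \mathbf{J}\,\Delta t$.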

In this experiment, the initial state estimate and its covariance are specified, the process noise covariance is set to Q = diag(1 × 10⁻³, 1 × 10⁻³), and the observation noise variance is R = 1 × 10⁻⁶.

While the EKF extends the classical Kalman framework to nonlinear systems, its performance relies on several assumptions that may limit its applicability to complex structural dynamics problems. The EKF linearizes the nonlinear state-transition and observation models using a first-order Taylor expansion, which can introduce approximation errors when the system exhibits strong nonlinearities such as yielding, hysteretic damping, or stiffness degradation. Moreover, the EKF assumes that both process and measurement noise follow zero-mean Gaussian distributions with known covariances. In practice, seismic or experimental data often include non-Gaussian noise, bias, or outliers, which can degrade estimation accuracy or lead to divergence. Under such conditions, alternatives such as the Unscented Kalman Filter (UKF), Ensemble Kalman Filter (EnKF), or Particle Filter (PF) may offer improved robustness. In this study, the EKF is applied to a linear SDOF system whose state equation is linear in the states, so the first-order linearization introduces no additional approximation error. To address the limitations above in more general settings, the complementary Physics-Informed Neural Network (PINN) is intended to enhance stability and accuracy under noisy or partially nonlinear conditions, yielding a balanced hybrid estimation framework.

4.2. Numerical Experiment

The numerical experiments are based on the assumptions developed in Section 3, as summarized in Table 2.

A time segment is divided into 0.1 s intervals over the first 20 s, yielding 200 time steps. The true values of the solution at each time point

are computed. Gaussian noise with a standard deviation (

), following the distribution

, is superimposed on the actual values. To simulate sparse and noisy observations, data are sampled every 3 time steps, yielding

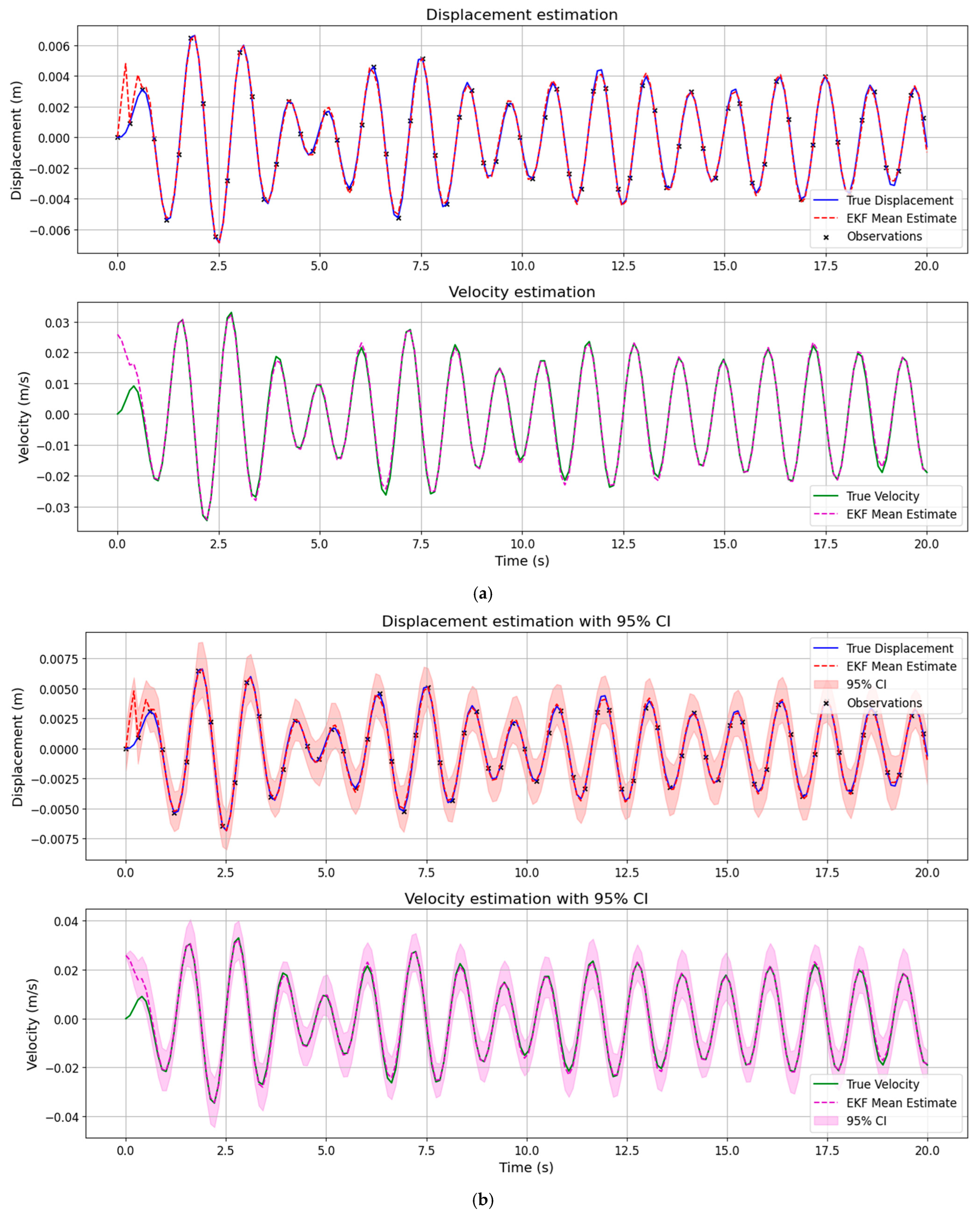

. The displacement image shown in

Figure 9, fitted by the EKF, includes confidence intervals. The analysis was performed 30 times, and the averaged results were used to calculate the confidence intervals.
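The experiment can be sketched in NumPy as a single run. This is an illustrative reconstruction under stated assumptions, not the authors' code: the sine form of the force, the random seed, the initial guess x̂₀ = [0.01, 0] and the initial covariance diag(10⁻², 10⁻²) are hypothetical, and the forward-Euler discretization F ≈ I + JΔt is likewise assumed. The step size, number of steps, noise level, sampling interval, Q, and R follow the text; the 30-run averaging is not reproduced.

```python
import numpy as np

# System data from Section 3; forcing assumed to be P0*sin(omega*t)
m, xi, k = 15_000.0, 0.03, 2000.0 / 0.007
P0, omega = 700.0, 2 * np.pi * 0.9
c = 2 * xi * np.sqrt(k * m)
wn = np.sqrt(k / m)
wd = wn * np.sqrt(1 - xi**2)

def true_displacement(t):
    """Analytical forced response from rest, used as the reference 'truth'."""
    beta = omega / wn
    den = (1 - beta**2) ** 2 + (2 * xi * beta) ** 2
    C = (P0 / k) * (1 - beta**2) / den
    D = (P0 / k) * (-2 * xi * beta) / den
    A, B = -D, (xi * wn * (-D) - C * omega) / wd
    transient = np.exp(-xi * wn * t) * (A * np.cos(wd * t) + B * np.sin(wd * t))
    return transient + C * np.sin(omega * t) + D * np.cos(omega * t)

# Synthetic data: 200 steps of 0.1 s, noise sigma = 1e-3 m, observation every 3rd step
dt, n_steps, every, sigma = 0.1, 200, 3, 1e-3
t = dt * np.arange(n_steps)
u_true = true_displacement(t)
rng = np.random.default_rng(0)
z = u_true + rng.normal(0.0, sigma, n_steps)
obs_idx = np.arange(0, n_steps, every)

# Linear state-space model with state x = [u, du/dt]
J = np.array([[0.0, 1.0], [-k / m, -c / m]])   # state Jacobian
F = np.eye(2) + dt * J                           # first-order (Euler) discretization
H = np.array([[1.0, 0.0]])                       # only displacement is observed
Q = np.diag([1e-3, 1e-3])
R = np.array([[1e-6]])

x = np.array([0.01, 0.0])                        # hypothetical initial guess
P = np.diag([1e-2, 1e-2])                        # hypothetical initial covariance
x_hist = np.zeros((n_steps, 2))
sd_hist = np.zeros((n_steps, 2))
for i in range(n_steps):
    if i > 0:  # prediction from step i-1; the known force enters as an input
        x = F @ x + dt * np.array([0.0, P0 * np.sin(omega * t[i - 1]) / m])
        P = F @ P @ F.T + Q
    if i % every == 0:  # update only where an observation exists
        S = H @ P @ H.T + R
        K = P @ H.T @ np.linalg.inv(S)
        x = x + K @ (z[i : i + 1] - H @ x)
        P = (np.eye(2) - K @ H) @ P
    x_hist[i] = x
    sd_hist[i] = np.sqrt(np.diag(P))

lower = x_hist[:, 0] - 1.96 * sd_hist[:, 0]      # 95% band for displacement
upper = x_hist[:, 0] + 1.96 * sd_hist[:, 0]
rmse_obs = np.sqrt(np.mean((x_hist[obs_idx, 0] - u_true[obs_idx]) ** 2))
print(f"displacement RMSE at observation times: {rmse_obs:.2e} m")
```

With Q much larger than R, the predicted displacement variance at each observation far exceeds the measurement variance, so the gain on displacement is close to one and the posterior estimate is pulled almost entirely onto the measurement.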

The EKF is then used to estimate the system states from these noisy, sparse observations, and the results are compared graphically with the true response.

Figure 9 illustrates the performance of the EKF in estimating the dynamic response of the SDOF oscillator under noisy measurement conditions.

Figure 9a presents the baseline estimation of displacement and velocity compared with the true response and discrete observation points, while Figure 9b shows the same analysis with added 95% confidence intervals (CIs) derived from the EKF covariance update.

In Figure 9a, the displacement plot (top) indicates that the EKF converges to the true response within the first few oscillation cycles, accurately reproducing both the amplitude and phase of the true displacement signal. After convergence, the deviation between the estimated and true displacements remains below 3 × 10⁻⁴ m, even with a measurement noise amplitude of 10⁻³ m. Similarly, the velocity estimate (bottom plot) tracks the true signal with minimal lag and a maximum absolute deviation below 1.5 × 10⁻³ m/s. The close alignment of the red dashed line (EKF mean estimate) with the blue solid line (true response) demonstrates the filter's stability and consistency over the 20 s window, despite sparse and noisy observations.

Figure 9b further incorporates the uncertainty bounds of the EKF estimates. The shaded regions represent the ±1.96σ (95%) confidence intervals computed from the estimated state covariance matrix, with process noise covariance Q = diag(1 × 10⁻³, 1 × 10⁻³) and measurement noise covariance R = 1 × 10⁻⁶. The narrow confidence bands, approximately ±6 × 10⁻⁴ m for displacement and ±4 × 10⁻³ m/s for velocity, confirm the high reliability of the filter. The true responses remain within these bounds throughout the simulation, indicating that the EKF estimates are both accurate and statistically consistent.

Overall, Figure 9 demonstrates that the EKF effectively reconstructs displacement and velocity histories from noisy and limited measurements, achieving high accuracy and well-bounded uncertainty. These results underscore its suitability for real-time dynamic state estimation in vibration-sensitive or monitoring-based applications, providing a reliable foundation for integration into the hybrid PINN–EKF framework.

In the displacement plot, the EKF estimate (red dashed line) aligns closely with the true displacement (solid blue line) throughout the 20 s window, demonstrating high estimation accuracy. Sparse, noisy observations (black crosses) sampled every 0.3 s are effectively assimilated by the filter, enabling it to reconstruct the displacement signal with minimal error after the initial transient phase.

During the early stage (t < 0.5 s), a noticeable deviation is observed due to the mismatch between the initial-state guess and the true value, compounded by limited observations. The maximum displacement error in this phase reaches approximately 0.01 m. However, the error decays rapidly within the first few observations, indicating the filter’s rapid convergence.

The velocity plot shows a similar trend. While initial deviations exist—primarily because velocity is not directly observed but inferred from system dynamics—the EKF estimate (magenta dashed line) quickly converges to the actual velocity (solid green line). Post-convergence, the estimates capture both amplitude and phase with high fidelity, though minor deviations persist, reflecting the effects of linearization and observation sparsity.

In this work, the mean squared error (MSE) is computed between the analytical steady-state displacement response and the predicted harmonic displacement response over the evaluated time window. The MSE is therefore defined in terms of time-history amplitude differences rather than spectral ordinates.

Table 3 presents quantitative metrics for evaluating the accuracy of the EKF in estimating displacement and velocity: the mean absolute error (MAE), mean squared error (MSE), root mean squared error (RMSE), and maximum absolute error. The low error values confirm that the EKF provides reliable and accurate state estimation for both displacement and velocity, even in the presence of noise and sparse measurements.

For displacement, the EKF achieves an MAE of 7.50 × 10⁻⁴ m and an RMSE of 1.47 × 10⁻³ m. The corresponding MSE of 2.16 × 10⁻⁶ m² indicates that the squared errors are small on average. The maximum absolute displacement error is 1.08 × 10⁻² m; although much larger than the average errors, it occurs during the initial transient period and reflects the effect of initialization uncertainty.

Velocity estimation errors are higher, with an MAE of 3.70 × 10⁻³ m/s and an RMSE of 5.80 × 10⁻³ m/s. This increase is expected, because velocity is not observed directly but is inferred through the system dynamics. The MSE for velocity is 3.36 × 10⁻⁵ m²/s², and the maximum absolute error reaches 3.02 × 10⁻² m/s, likely due to the combined effects of model linearization and sparse observation intervals.
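The four metrics in Table 3 can be computed from any pair of time histories as below. This is a generic sketch with an illustrative function name; it also confirms that the reported RMSE and MSE values are mutually consistent (RMSE² ≈ MSE).

```python
import numpy as np

def error_metrics(estimate, truth):
    """MAE, MSE, RMSE and maximum absolute error between two time histories."""
    err = np.asarray(estimate, dtype=float) - np.asarray(truth, dtype=float)
    mse = np.mean(err**2)
    return {
        "MAE": np.mean(np.abs(err)),
        "MSE": mse,
        "RMSE": np.sqrt(mse),
        "MaxAE": np.max(np.abs(err)),
    }

# Small illustrative check with hand-picked numbers (errors: 1, -2, 3)
m_demo = error_metrics([1.0, 0.0, 3.0], [0.0, 2.0, 0.0])
print(m_demo)

# Consistency of the reported Table 3 values: RMSE**2 should match MSE
for rmse, mse in [(1.47e-3, 2.16e-6), (5.80e-3, 3.36e-5)]:
    print(f"RMSE^2 = {rmse**2:.3e} vs reported MSE = {mse:.2e}")
```

For the hand-picked errors the MAE is 2, the MSE is 14/3, and the maximum absolute error is 3; for the reported values, 1.47 × 10⁻³ squared is about 2.16 × 10⁻⁶ and 5.80 × 10⁻³ squared is about 3.36 × 10⁻⁵.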

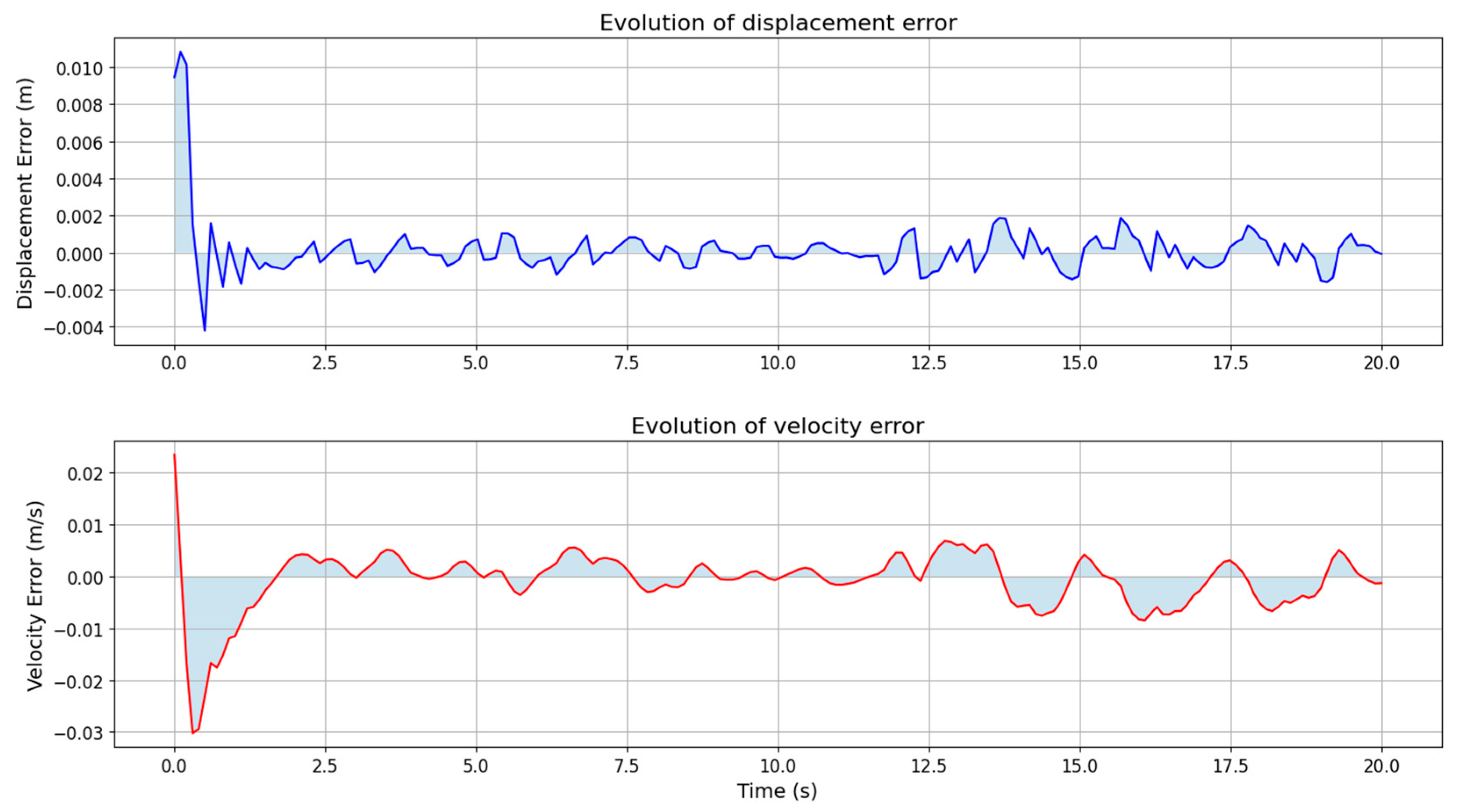

According to Figure 10, the EKF rapidly corrects initial discrepancies and provides reliable state estimates. The shaded areas highlight error bounds, reinforcing the filter's stability and robustness across the entire time span.

The error profile varies sharply at the beginning and stabilizes after approximately 1 s, with small oscillations around zero. Initially, the velocity error is large and predominantly negative (an underestimation of the initial velocity), but it returns to near zero as observations are assimilated. After 2 s, the velocity error fluctuates around zero at roughly the frequency of the external excitation, indicating a small lag and residual in the filter's tracking of the forced response.

The displacement error exhibits slight fluctuations, with its mean value close to zero, indicating that the filter is unbiased and robust in estimating displacement. The velocity error exhibits small fluctuations without systematic drift or divergence, reflecting a reasonable trade-off between process noise and observation noise in the EKF configuration.

Figure 10 shows the time evolution of estimation errors for displacement (top) and velocity (bottom) from the EKF over a 20 s window. In the displacement plot, an initial sharp peak (around t = 0) with a maximum error of approximately 0.01 m decays within the first second, indicating fast convergence. The error then remains small and oscillates around zero, demonstrating stable tracking throughout the simulation.

The velocity error plot follows a similar trend, with a larger initial deviation of about −0.03 m/s because velocity is not measured directly. As more displacement observations are incorporated, the velocity error converges toward zero, although residual fluctuations persist between about 5 s and 15 s. Despite these fluctuations, the velocity error remains relatively low, confirming the EKF's effectiveness with noisy and sparse data.

The present analysis is restricted to a linear SDOF oscillator subjected to harmonic excitation. This linear formulation serves as a controlled baseline for validating the PINN–EKF framework, enabling direct comparison with analytical solutions and facilitating evaluation of the algorithm’s numerical stability and convergence. While this assumption excludes nonlinearities such as material yielding, hysteresis, and geometric coupling, it provides a fundamental step toward developing and verifying adaptive dynamic models. Future extensions will address nonlinear SDOF and MDOF systems to further align the methodology with realistic seismic behavior and code-based dynamic analysis.

5. PINN Model for Fitting Displacement Curves

A novel parameter identification approach based on Physics-Informed Neural Networks (PINNs) is proposed to address the dynamic parameter estimation problem in single-degree-of-freedom (SDOF) systems subjected to harmonic excitation. By integrating the governing equation of motion directly into the neural network's loss function, the method enables simultaneous, high-accuracy estimation of key system parameters, including the damping coefficient, excitation amplitude, and excitation frequency. This physics-guided learning framework enhances interpretability and reduces reliance on large datasets. Furthermore, the numerical results indicate that the method is robust to observational noise, maintaining accuracy under non-ideal measurement conditions. These findings suggest that the proposed PINN-based approach offers a promising alternative to traditional parameter identification methods for dynamic mechanical systems, particularly when data are sparse or noisy.

Consider the equation of motion of a linear SDOF vibration system subjected to harmonic excitation,

$$
m\ddot{u}(t) + c\dot{u}(t) + ku(t) = P_0\sin(\omega t),
$$

where $m$ and $k$ represent the known mass and stiffness of the system, respectively. The parameters to be estimated are the damping coefficient $c$, the excitation amplitude $P_0$, and the excitation frequency $\omega$. The system is assumed to start from rest, with initial conditions $u(0) = 0$ and $\dot{u}(0) = 0$.

Over the time interval $[0, T]$, the system response is sampled at $N$ discrete time points $t_i$, yielding observations $\{(t_i, \tilde{u}_i)\}_{i=1}^{N}$. Because measurements are subject to noise, each observed displacement $\tilde{u}_i$ deviates from the actual displacement $u(t_i)$. These data serve as the basis for a data-driven inverse problem: a parameter estimation model is constructed to recover $c$, $P_0$, and $\omega$ despite the presence of noise. This inverse-problem framework enables robust identification of the excitation characteristics and damping behavior under noisy or underdetermined conditions.

The training of the hybrid PINN–EKF model involves a two-stage optimization process. In the first phase, the Adam optimizer is used with a learning rate of 0.001 to accelerate convergence during the initial training iterations (up to 10,000 steps). This stage ensures rapid adjustment of the network parameters toward the region of minimum error. In the second phase, the Limited-memory Broyden–Fletcher–Goldfarb–Shanno (L-BFGS) algorithm is employed for fine-tuning, leveraging its quasi-Newton properties to achieve high numerical precision in the final solution. All input features were normalized to the [0, 1] range before training to enhance numerical stability. Hyperparameters, including the learning rate, batch size (32–128), and number of hidden-layer neurons (20–60), were tuned using a limited grid search with fivefold cross-validation to avoid overfitting. The optimization process significantly improved predictive accuracy, reducing the mean squared error of displacement estimation by approximately 40% compared to the non-optimized baseline configuration.
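The two-stage schedule (Adam followed by L-BFGS) can be illustrated on a toy objective. The sketch below is not the PINN training loop: it minimizes SciPy's Rosenbrock test function, uses a hand-written Adam update with the stated learning rate and iteration count, and swaps in SciPy's L-BFGS-B (the bound-constrained L-BFGS variant) for the second stage.

```python
import numpy as np
from scipy.optimize import minimize, rosen, rosen_der

def adam(grad, x0, lr=1e-3, steps=10_000, b1=0.9, b2=0.999, eps=1e-8):
    """Plain Adam with bias-corrected first and second moment estimates."""
    x = np.array(x0, dtype=float)
    m1 = np.zeros_like(x)
    m2 = np.zeros_like(x)
    for t in range(1, steps + 1):
        g = grad(x)
        m1 = b1 * m1 + (1 - b1) * g
        m2 = b2 * m2 + (1 - b2) * g**2
        m1_hat = m1 / (1 - b1**t)
        m2_hat = m2 / (1 - b2**t)
        x -= lr * m1_hat / (np.sqrt(m2_hat) + eps)
    return x

# Stage 1: Adam (lr = 0.001, 10,000 iterations) moves quickly toward a low-loss region
x0 = np.array([-1.2, 1.0])
x_adam = adam(rosen_der, x0)
# Stage 2: quasi-Newton refinement starting from the Adam result
res = minimize(rosen, x_adam, jac=rosen_der, method="L-BFGS-B")
print("after Adam:", x_adam, " after L-BFGS-B:", res.x)
```

The design mirrors the text: the first-order method handles the early, poorly conditioned phase robustly, and the quasi-Newton method then uses curvature information to converge precisely near the minimum, here (1, 1).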

5.1. Data Preparation

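Based on the preceding analysis, an analytical solution to the model is available. Assuming zero initial conditions, $u(0) = 0$ and $\dot{u}(0) = 0$, and using the parameter values specified in Section 3, the true values for the lightweight steel frame are

$$
m = 15{,}000\ \text{kg},\quad k = \frac{P}{\Delta} = \frac{2000}{0.007} \approx 2.857\times10^{5}\ \text{N/m},\quad c = 2\xi\sqrt{km} \approx 3.93\times10^{3}\ \text{N s/m},\quad P_0 = 700\ \text{N},\quad \omega = 2\pi(0.9) \approx 5.655\ \text{rad/s}.
$$

Under these parameters, the exact solution of the model can be evaluated at any time $t$. Although the total simulation time is 60 s, only the first 10 s are uniformly sampled at discrete time instants $t_i$ to generate a noise-free dataset $\{(t_i, u(t_i))\}$.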

In practical scenarios, displacement measurements are inevitably affected by errors, including sensor noise and environmental disturbances. To replicate such conditions, synthetic noise is added to the exact displacement values. The noisy observations are denoted by $\tilde{u}_i$, where

$$
\tilde{u}_i = u(t_i) + \varepsilon_i, \qquad \varepsilon_i \sim \mathcal{N}\big(0, (\eta\,\sigma_u)^2\big).
$$

Here, $\sigma_u$ represents the standard deviation of the true displacement sequence $\{u(t_i)\}$, and $\eta$ defines the noise level. The Gaussian error simulates realistic measurement disturbances, ensuring that the parameter-estimation process is evaluated under conditions representative of real-world engineering applications.
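The data-preparation step can be sketched as follows. The sample count N = 200, the noise level η = 0.05, the random seed, and the sine form of the force are hypothetical choices for illustration only, since their values are not given here; the physical parameters are those of Section 3.

```python
import numpy as np

# True parameters (Section 3); the forcing form P0*sin(omega*t) is an assumption
m, k = 15_000.0, 2000.0 / 0.007
xi = 0.03
c_true = 2 * xi * np.sqrt(k * m)
P0_true, omega_true = 700.0, 2 * np.pi * 0.9

def exact_u(t, c, P0, omega):
    """Closed-form response from rest for m*u'' + c*u' + k*u = P0*sin(omega*t)."""
    wn = np.sqrt(k / m)
    z = c / (2 * np.sqrt(k * m))           # damping ratio implied by c
    wd = wn * np.sqrt(1 - z**2)
    beta = omega / wn
    den = (1 - beta**2) ** 2 + (2 * z * beta) ** 2
    C = (P0 / k) * (1 - beta**2) / den
    D = (P0 / k) * (-2 * z * beta) / den
    A, B = -D, (z * wn * (-D) - C * omega) / wd
    decay = np.exp(-z * wn * t)
    return decay * (A * np.cos(wd * t) + B * np.sin(wd * t)) + C * np.sin(omega * t) + D * np.cos(omega * t)

N, eta = 200, 0.05                      # hypothetical sample count and noise level
t_obs = np.linspace(0.0, 10.0, N)       # uniform samples over the first 10 s
u_clean = exact_u(t_obs, c_true, P0_true, omega_true)
rng = np.random.default_rng(42)
sigma_u = np.std(u_clean)               # std of the true displacement sequence
u_noisy = u_clean + rng.normal(0.0, eta * sigma_u, N)
```

Scaling the noise by the standard deviation of the clean signal keeps η a dimensionless, relative noise level, so the same setting remains meaningful if the response amplitude changes.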

In engineering practice, the ease of obtaining different physical parameters varies significantly. Properties such as mass and stiffness can be measured directly, whereas the damping coefficient $c$, excitation amplitude $P_0$, and excitation frequency $\omega$ are not directly measurable and must be inferred. This challenge highlights the advantage of using PINNs, which combine physical models with observed data to estimate parameters that are otherwise difficult to measure accurately.

To simulate uncertainty in the starting point of the identification, the initial guesses for $c$, $P_0$, and $\omega$ are perturbed away from their true values, and these perturbed values serve as the starting estimates. This approach enables robust testing of the parameter identification method in a realistic, noise-affected setting.

5.2. PINN Framework

The core concept behind Physics-Informed Neural Networks (PINNs) is to approximate the true solution of a physical system, in this case the displacement response $u(t)$ of a vibrating structure, using a neural network $\hat{u}(t;\Theta)$, where $\Theta$ represents the set of trainable network parameters (weights and biases). Unlike traditional neural networks that rely solely on data, PINNs incorporate known physical laws directly into the learning process, enabling a data-efficient, physics-consistent approximation.

During training, the network is optimized to minimize a composite loss function composed of three terms, each enforcing a different type of constraint:

PDE Residual Minimization:

$$
\mathcal{L}_{\text{PDE}} = \frac{1}{N_f}\sum_{j=1}^{N_f}\Big[m\,\ddot{\hat{u}}(t_j) + c\,\dot{\hat{u}}(t_j) + k\,\hat{u}(t_j) - P_0\sin(\omega t_j)\Big]^2
$$

This term ensures that the neural network solution approximately satisfies the governing differential equation of the SDOF harmonic oscillator. Here, $N_f$ is the number of collocation points $t_j$ used to evaluate the differential equation, and $\ddot{\hat{u}}$, $\dot{\hat{u}}$, and $\hat{u}$ are the second, first, and zeroth time derivatives of the neural network output, respectively, computed using automatic differentiation. By minimizing this residual, the model adheres to the physical law governing the system.

In the PINN residual formulation, Equation (52), the mass $m$ and stiffness $k$ are treated as known, fixed quantities obtained from experimental characterization of the tested system. In contrast, the damping coefficient $c$, excitation amplitude $P_0$, and excitation frequency $\omega$ are considered unknown trainable parameters and are incorporated into the network in the same manner as the trainable weights, meaning their values are iteratively updated through backpropagation during optimization. To ensure physical plausibility and numerical stability, bounds are imposed on these parameters based on expected measurement uncertainty and admissible ranges, thereby constraining their evolution during training.

Initial Condition Matching:

$$
\mathcal{L}_{\text{IC}} = \hat{u}(0)^2 + \dot{\hat{u}}(0)^2
$$

This term enforces the known initial conditions of the system, namely that the displacement and velocity are both zero at $t = 0$. These conditions reflect a system starting from rest, and their inclusion helps guide the neural network to a physically consistent solution at the initial time point.

Observation Data Fitting:

This data-driven term minimizes the discrepancy between the network-predicted displacement and the observed (potentially noisy) displacement measurements, averaged over all time instances at which measurements are available. This keeps the model anchored to real-world measurements.

By combining these three loss components:

The weights control the contribution of each loss component, ensuring that the solution satisfies the governing physics, initial conditions, and data fidelity. In this study, the values are set as .
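As a minimal sketch, the weighted total loss can be assembled from its components as shown below. The function name and argument layout are hypothetical; the initial-condition terms assume the at-rest conditions $u(0) = 0$ and $\dot{u}(0) = 0$, and the example weights follow the first-stage weighting described in Section 5.3 (unit weights for the residual and data terms, 100 for each initial-condition term).

```python
def composite_loss(pde_loss, u0_pred, v0_pred, u_pred, u_obs, weights):
    """Weighted sum of the PDE, initial-condition and data losses.

    weights = (w_pde, w_u0, w_v0, w_data). The initial-condition terms
    assume the system starts from rest: u(0) = 0 and du/dt(0) = 0.
    """
    w_pde, w_u0, w_v0, w_data = weights
    ic_u = u0_pred ** 2  # squared deviation from u(0) = 0
    ic_v = v0_pred ** 2  # squared deviation from du/dt(0) = 0
    data = sum((p - o) ** 2 for p, o in zip(u_pred, u_obs)) / len(u_obs)
    return w_pde * pde_loss + w_u0 * ic_u + w_v0 * ic_v + w_data * data


# First-stage weighting: unit PDE/data weights, 100 for each IC term.
stage1_weights = (1.0, 100.0, 100.0, 1.0)
total = composite_loss(0.5, 0.1, 0.0, [1.0, 2.0], [1.0, 1.0], stage1_weights)
print(total)  # approximately 2.0
```

Passing the weights as an argument keeps the function usable for any other weighting scheme.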

A deep feedforward neural network is employed to model the dynamic behavior of an SDOF system under harmonic excitation. The network takes time as input and predicts the displacement response as output. Its architecture comprises four fully connected hidden layers, each with 50 neurons. The hyperbolic tangent activation function is used in all hidden layers to capture the system’s smooth and oscillatory dynamics, while the output layer uses a linear activation to produce the final displacement value. In this experiment, Glorot Normal was selected as the weight initialization method. For the optimizer schedule, Adam was used in the first phase with a learning rate of 0.001 for 10,000 iterations, followed by fine-tuning in the second phase with L-BFGS.
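The architecture can be illustrated with a dependency-free sketch: input $t$, four hidden layers of 50 tanh neurons, and a linear output, with Glorot-normal weights (standard deviation $\sqrt{2/(n_\text{in}+n_\text{out})}$). This is not the study's implementation. It omits automatic differentiation and training, which a framework such as PyTorch or TensorFlow would supply; the helper names are hypothetical, biases start at zero as an assumption, and a plain normal distribution is used, whereas Keras' `GlorotNormal` draws from a truncated normal.

```python
import math
import random


def glorot_normal(fan_in, fan_out, rng):
    """Weight matrix (fan_out x fan_in) with std sqrt(2 / (fan_in + fan_out))."""
    std = math.sqrt(2.0 / (fan_in + fan_out))
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]


def build_mlp(sizes, seed=0):
    """List of (weights, biases) per layer; biases start at zero."""
    rng = random.Random(seed)
    return [(glorot_normal(n_in, n_out, rng), [0.0] * n_out)
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]


def forward(layers, t):
    """Map a scalar time t to a scalar displacement prediction."""
    h = [t]
    for idx, (w, b) in enumerate(layers):
        z = [sum(wij * hj for wij, hj in zip(row, h)) + bj
             for row, bj in zip(w, b)]
        # tanh on hidden layers, identity (linear) on the output layer
        h = z if idx == len(layers) - 1 else [math.tanh(zi) for zi in z]
    return h[0]


def count_params(layers):
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)


net = build_mlp([1, 50, 50, 50, 50, 1])  # input t, 4 x 50 hidden, output u
print(count_params(net))  # 7801
print(forward(net, 0.5))
```

The network therefore has 7801 trainable weights and biases, to which the three physical parameters are added during training.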

The unknown physical parameters (the damping coefficient, excitation amplitude, and excitation frequency) are incorporated directly into the loss function and optimized simultaneously with the network weights. This physics-informed approach enables accurate and robust estimation of these parameters, even under conditions with limited or noisy measurements. The chosen architecture strikes a balance between computational efficiency and the expressive power required to represent the underlying physics of the vibrating system.

In the hybrid PINN–EKF framework, the EKF operates as a recursive correction layer within each training iteration. The PINN predicts the dynamic response based on the physics-informed residuals of the motion equation, while the EKF updates the estimated states and parameters by using the mismatch between the predicted and observed responses. This integration enables adaptive state estimation and real-time correction of the PINN outputs, thereby improving prediction accuracy and robustness under noise or parameter uncertainty.
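The text does not specify the EKF's state vector, process model, or noise covariances, so the following is only a generic sketch under explicit assumptions. It augments the state with the damping coefficient, $x = [u, \dot{u}, c]$, propagates it with an explicit-Euler discretization of the equation of motion, treats $c$ as a random walk, and corrects the state with a displacement measurement. The names `ekf_step`, `q`, and `r` are hypothetical, the load value is assumed known at each step, and in the hybrid framework the prediction would come from the PINN rather than from this Euler step.

```python
def ekf_step(x, P, z, force, m, k, dt, q, r):
    """One EKF predict/update cycle for the augmented state x = [u, v, c].

    Assumptions: explicit-Euler process model, damping c as a random walk,
    process noise q * I, displacement measurement z with variance r, and a
    known load value `force` at this step.
    """
    u, v, c = x
    a = (force - c * v - k * u) / m  # acceleration from the equation of motion
    x_pred = [u + dt * v, v + dt * a, c]
    # Jacobian of the Euler transition with respect to (u, v, c)
    F = [[1.0, dt, 0.0],
         [-dt * k / m, 1.0 - dt * c / m, -dt * v / m],
         [0.0, 0.0, 1.0]]
    FP = [[sum(F[i][l] * P[l][j] for l in range(3)) for j in range(3)]
          for i in range(3)]
    P_pred = [[sum(FP[i][l] * F[j][l] for l in range(3)) + (q if i == j else 0.0)
               for j in range(3)] for i in range(3)]
    # Measurement model H = [1, 0, 0]: only displacement is observed
    s = P_pred[0][0] + r                      # innovation variance
    K = [P_pred[i][0] / s for i in range(3)]  # Kalman gain
    innovation = z - x_pred[0]
    x_new = [x_pred[i] + K[i] * innovation for i in range(3)]
    P_new = [[P_pred[i][j] - K[i] * P_pred[0][j] for j in range(3)]
             for i in range(3)]
    return x_new, P_new


# A measurement that matches the prediction leaves the state unchanged.
I3 = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
x1, P1 = ekf_step([0.0, 0.0, 5.0], I3, z=0.0, force=0.0,
                  m=1.0, k=1.0, dt=0.01, q=1e-6, r=1e-4)
print(x1)  # [0.0, 0.0, 5.0]
```

The mismatch between predicted and observed displacement (the innovation) is what drives the correction of both the states and the damping estimate.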

5.3. Experimental Implementation and Data Synthesis

To evaluate the effectiveness of the proposed parameter estimation method, a synthetic dataset is generated from the analytical model of a linear SDOF system. The simulation is conducted over a finite time interval that is discretized uniformly into sampling points, providing sufficient resolution for both training and validation. The benchmark solution $u(t)$ is obtained using the fourth-order Runge–Kutta method, which ensures high numerical accuracy for solving ordinary differential equations. To mimic real-world measurement conditions and assess the robustness of the neural network model, Gaussian white noise is added to the true displacement. The noisy observations are constructed as $\tilde{u}(t_i) = u(t_i) + \varepsilon_i$, where $\varepsilon_i$ follows a zero-mean normal distribution whose standard deviation is proportional to $\sigma_u$, the standard deviation of the true displacement $u$. This synthetic noisy dataset is used both to train the network and to test its parameter inference capabilities.
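The data synthesis step can be sketched as follows. The sketch assumes the harmonic load takes the form $F_0 \sin(\omega t)$ and that the noise standard deviation is a fixed fraction `noise_ratio` of $\sigma_u$; the function names are hypothetical, and the study's actual interval length, sample count, mass, stiffness, and noise level are not reproduced here.

```python
import math
import random
import statistics


def simulate_sdof_rk4(m, c, k, f0, omega, t_end, n, u0=0.0, v0=0.0):
    """Integrate m*u'' + c*u' + k*u = f0*sin(omega*t) with classical RK4.

    Returns n uniformly spaced times on [0, t_end] and the displacements.
    Assumption: sinusoidal load form; the default start is at rest.
    """
    dt = t_end / (n - 1)

    def deriv(t, u, v):
        return v, (f0 * math.sin(omega * t) - c * v - k * u) / m

    times = [i * dt for i in range(n)]
    u, v = u0, v0
    disp = [u]
    for i in range(n - 1):
        t = times[i]
        k1u, k1v = deriv(t, u, v)
        k2u, k2v = deriv(t + dt / 2, u + dt / 2 * k1u, v + dt / 2 * k1v)
        k3u, k3v = deriv(t + dt / 2, u + dt / 2 * k2u, v + dt / 2 * k2v)
        k4u, k4v = deriv(t + dt, u + dt * k3u, v + dt * k3v)
        u += dt / 6 * (k1u + 2 * k2u + 2 * k3u + k4u)
        v += dt / 6 * (k1v + 2 * k2v + 2 * k3v + k4v)
        disp.append(u)
    return times, disp


def add_noise(disp, noise_ratio, seed=0):
    """u_obs = u + eps with eps ~ N(0, (noise_ratio * std(u))^2)."""
    rng = random.Random(seed)
    sigma = noise_ratio * statistics.pstdev(disp)
    return [ui + rng.gauss(0.0, sigma) for ui in disp]


# Sanity check: undamped free vibration u = cos(t) reaches -1 at t = pi.
times, u = simulate_sdof_rk4(1.0, 0.0, 1.0, 0.0, 1.0, math.pi, 1001, u0=1.0)
noisy = add_noise(u, 0.05, seed=1)
```

With the true damping, amplitude, and frequency reported in Section 6.1 (3927.922 Ns/m, 700 N, 5.655 rad/s), a call such as `simulate_sdof_rk4(m, 3927.922, k, 700.0, 5.655, t_end, n)` would generate the benchmark once `m`, `k`, `t_end`, and `n` are taken from the experimental setup.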

To ensure the physical plausibility of the network-estimated parameters, appropriate constraint formulations are incorporated into the model. In particular, the damping coefficient $c$, which must be non-negative ($c \geq 0$), is reparametrized using the softplus transformation:

$$c = \operatorname{softplus}(\hat{c}) = \ln\left(1 + e^{\hat{c}}\right),$$

where $\hat{c}$ is the unconstrained trainable variable. This transformation preserves differentiability while enforcing positivity. Similarly, the excitation amplitude and the excitation frequency are constrained to be strictly positive using the same softplus function.

These constraints help guide the optimization process within a physically meaningful solution space, preventing the neural network from converging to unfeasible parameter values.
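A minimal sketch of the softplus reparametrization and its inverse follows. The inverse is useful for turning a physically meaningful initial guess into the raw trainable variable; the function names are hypothetical. Both functions use numerically stable forms, because a naive $\ln(1+e^x)$ overflows for raw values in the thousands, such as those needed for a damping coefficient near 3900 Ns/m.

```python
import math


def softplus(x):
    """Stable ln(1 + e^x): avoids overflow of exp for large x."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def inverse_softplus(y):
    """Raw value x with softplus(x) = y, for y > 0 (stable for large y)."""
    if y <= 0:
        raise ValueError("softplus output must be positive")
    return y + math.log(-math.expm1(-y))


# Initialize the raw damping variable from a 1%-perturbed guess.
true_c = 3927.922
raw_c = inverse_softplus(1.01 * true_c)
print(round(softplus(raw_c), 1))  # 3967.2
```

The same pair of functions applies to the excitation amplitude and frequency.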

A two-stage training strategy is implemented to ensure both fast convergence and precise parameter estimation. In the first stage, the Adam optimizer is employed for its robustness and adaptive learning rate. The initial learning rate is set to $10^{-3}$, and the optimizer is run for 10,000 iterations. During this stage, the components of the total loss function are weighted to reflect their importance: the residual of the physical equation and the observation data loss are given unit weights, while the initial displacement and velocity constraints are each given a higher weight of 100.

This weighting scheme emphasizes enforcing initial conditions, which is critical for dynamic systems.
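For reference, the standard Adam update used in the first stage can be sketched in a few lines. The defaults mirror the stated learning rate ($10^{-3}$) and iteration count (10,000), while the remaining hyperparameters are the usual Adam defaults and are assumptions. A toy quadratic with a known minimum stands in for the PINN loss, and the function names are hypothetical.

```python
import math


def adam(grad, x0, lr=1e-3, steps=10_000, b1=0.9, b2=0.999, eps=1e-8):
    """Minimize a differentiable function with the standard Adam update.

    grad(x) must return the gradient as a list with the same length as x.
    """
    x = list(x0)
    m = [0.0] * len(x)  # first-moment (mean) estimates
    v = [0.0] * len(x)  # second-moment (uncentered variance) estimates
    for t in range(1, steps + 1):
        g = grad(x)
        for i in range(len(x)):
            m[i] = b1 * m[i] + (1 - b1) * g[i]
            v[i] = b2 * v[i] + (1 - b2) * g[i] ** 2
            m_hat = m[i] / (1 - b1 ** t)  # bias correction
            v_hat = v[i] / (1 - b2 ** t)
            x[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


# Toy quadratic with its minimum at (3, -1), standing in for the PINN loss.
def toy_grad(p):
    return [2.0 * (p[0] - 3.0), 2.0 * (p[1] + 1.0)]


x_opt = adam(toy_grad, [0.0, 0.0], lr=1e-2, steps=5000)
```

For the second, L-BFGS stage, an existing implementation such as `scipy.optimize.minimize(..., method="L-BFGS-B")` would typically be used instead of a hand-written optimizer.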

In the second stage, the Limited-memory Broyden–Fletcher–Goldfarb–Shanno (L-BFGS) optimizer is used to refine the parameters obtained from the first stage. L-BFGS is a quasi-Newton optimization method known for its effectiveness in solving smooth, deterministic problems. The maximum number of iterations is set to , with a line search tolerance of to ensure convergence to a high-accuracy solution. This two-stage optimization framework enables the network to first broadly explore the parameter space and then precisely adjust the estimated parameters, yielding a stable and physically consistent model.

6. Results and Discussion

The results presented in this study are based on analytical harmonic solutions, which provide an exact and interpretable benchmark for assessing the predictive accuracy of the hybrid PINN–EKF framework. This controlled validation environment allows for precise evaluation of parameter estimation, noise sensitivity, and convergence performance. However, the absence of validation using experimental or spectrum-compatible seismic inputs limits the direct generalization of the findings to real earthquake conditions. Future work will therefore focus on extending the framework to recorded or synthetic ground motions and to nonlinear finite element simulations to establish stronger empirical consistency and enhance the model’s applicability for Eurocode 8-related analyses and code calibration.

6.1. Parameter Estimation

The PINN is trained using 1000 uniformly spaced samples over the first 10 s. Based on this input, the model predicts the displacement at each time step and iteratively refines estimates of the unknown parameters to converge to their true values.

Table 4 presents the estimated values of the damping coefficient $c$, excitation amplitude, and excitation frequency at every 1000 iterations of the Adam optimizer.

The results in Table 4 show the PINN model's training behavior over 10,000 iterations of the Adam optimizer. At the start (iteration 0), no parameter estimates are available, and all loss terms are undefined. Once training begins, the loss values and parameter estimates evolve rapidly. The physics-informed (PDE residual) loss exhibits considerable fluctuations throughout training. This is expected, because the model must balance physical constraints against data fitting, particularly under noisy conditions. Despite these fluctuations, the displacement and velocity initial condition losses quickly drop to very small values, indicating that the model rapidly satisfies the initial displacement and velocity constraints.

The data loss remains consistently low throughout training, reflecting strong alignment with the observed displacement values. As the optimizer proceeds, the parameter estimates evolve gradually. For instance, the damping coefficient starts from an initial estimate of approximately 3967.2 Ns/m and decreases only slightly, to 3966.6 Ns/m, by iteration 10,000, remaining within 1% of the true value of 3927.922 Ns/m. The excitation amplitude decreases steadily from 706.05 N to 697.26 N, and the excitation frequency shifts slightly from 5.676 rad/s to 5.679 rad/s.

The total training time also increases with the number of iterations, reaching approximately 246 s by the end of the Adam phase. These results demonstrate that the PINN model accurately estimates unknown physical parameters while satisfying the governing differential equations and initial conditions. The convergence of the estimates over time illustrates the effectiveness of the training strategy and the robustness of the model, even in the presence of synthetic noise.

In the second stage of training, the model is fine-tuned using the L-BFGS optimizer over a duration of 149.72 s.

Table 5 presents the final estimates for the damping coefficient $c$, excitation amplitude, and excitation frequency: 3966.629 Ns/m, 697.262 N, and 5.694 rad/s, respectively. Compared to the true values (3927.922 Ns/m, 700.000 N, and 5.655 rad/s), the relative errors are 0.985%, 0.391%, and 0.692%, all below 1%. These results confirm the PINN's high accuracy in recovering unknown parameters. To simulate realistic measurement uncertainty, the initial guesses were intentionally perturbed by 1%. That the final errors fall below this threshold highlights the model's robustness and reliable convergence, even when starting from imprecise initial guesses. The low estimation errors further demonstrate the method's effectiveness in identifying parameters that are difficult to measure directly in practical settings. The estimates reported in Table 5 correspond to deterministic runs under controlled harmonic excitation, with the true parameter values prescribed in the analytical model; repeated stochastic realizations and confidence bounds are reserved for future work.
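The relative errors can be recomputed directly from the tabulated values, as in the short check below. The dictionary keys are illustrative labels. Recomputing from the three-decimal values gives 0.690% for the frequency rather than the reported 0.692%, presumably because the tabulated estimates are rounded. The last line confirms that a 1% perturbation of the true damping reproduces the initial guess of about 3967.2 Ns/m seen in Table 4.

```python
def relative_error_pct(estimate, true):
    """Relative error |estimate - true| / |true|, in percent."""
    return abs(estimate - true) / abs(true) * 100.0


true_values = {"c": 3927.922, "F0": 700.000, "omega": 5.655}
final_estimates = {"c": 3966.629, "F0": 697.262, "omega": 5.694}

errors = {name: relative_error_pct(final_estimates[name], true_values[name])
          for name in true_values}
for name, err in errors.items():
    print(f"{name}: {err:.3f}%")
# prints c: 0.985%, then F0: 0.391%, then omega: 0.690%

print(round(1.01 * true_values["c"], 1))  # 3967.2
```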

An examination of Table 4 indicates that the damping coefficient $c$ changes only slightly from its initial guess, highlighting the importance of a well-chosen starting point for this parameter. In contrast, the excitation amplitude adjusts rapidly during training, suggesting lower sensitivity to its initial value, although prolonged, unchecked training may cause it to drift. The excitation frequency adjusts at a moderate rate, refining gradually as training progresses.

As training advances, the PDE residual loss generally decreases, though the rate of improvement diminishes over time. This behavior highlights the importance of balancing the number of iterations and computational cost to achieve both efficiency and accuracy in parameter estimation.

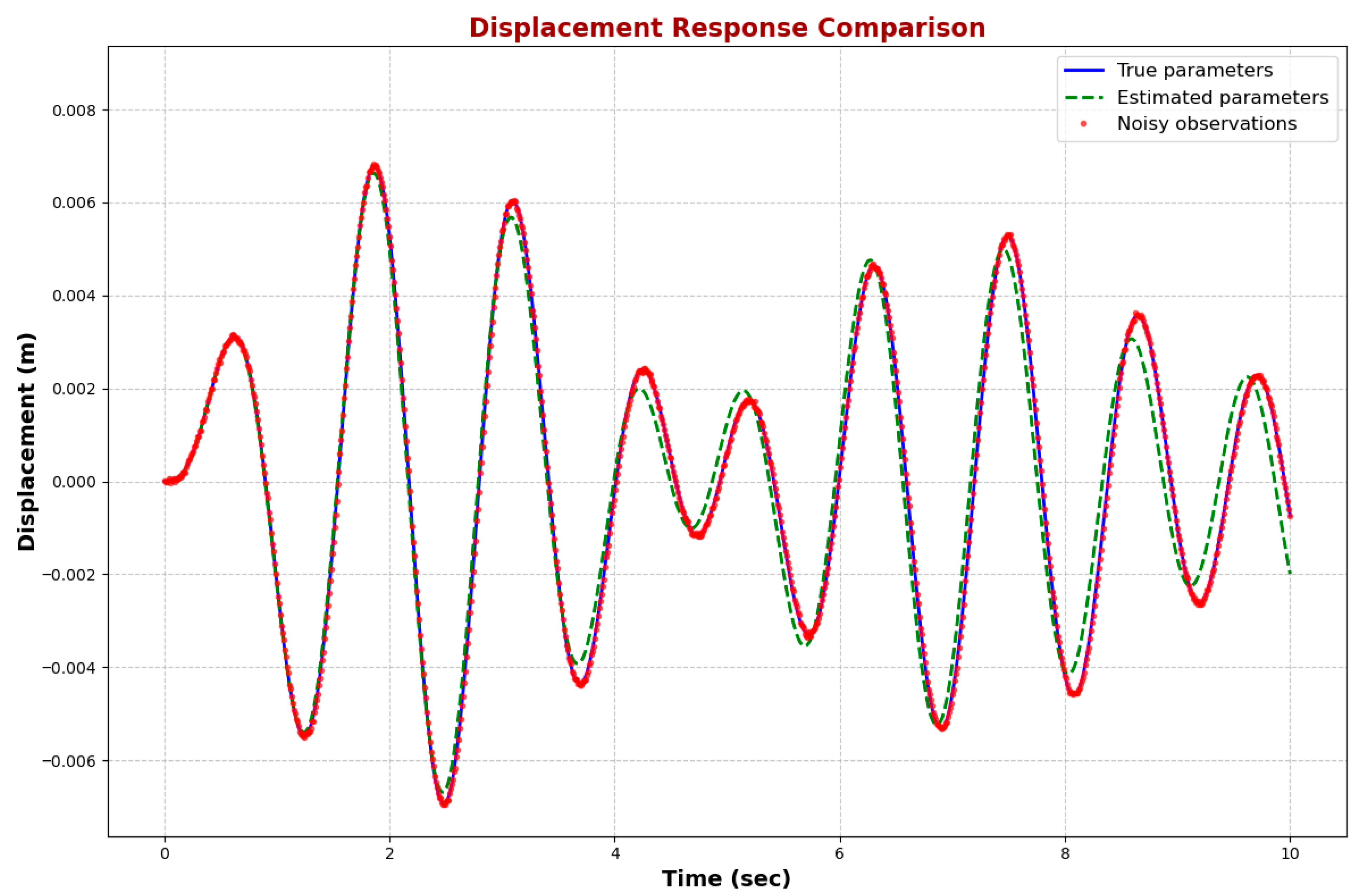

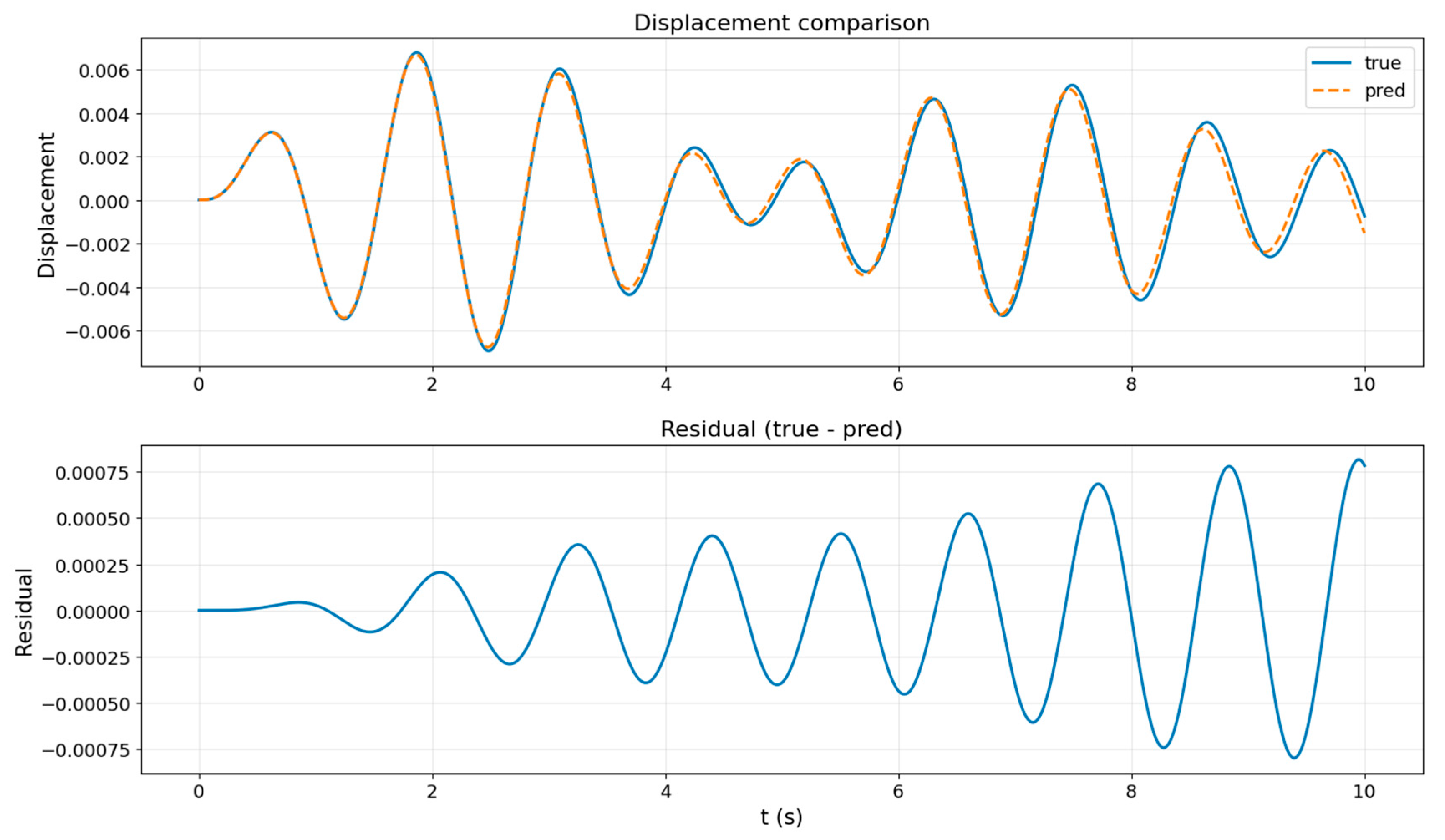

Using the final parameter estimates, the displacement–time response is plotted in Figure 11, with the predicted curve overlaid on the ground-truth solution and the noisy observational data.

Table 6 reports the relative displacement errors at the first ten sampled time points, while the mean squared error across all 1000 samples further confirms the model’s ability to replicate the true dynamic behavior with high precision.
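The error metrics behind Table 6 can be sketched as follows. The function name and the `floor` value are assumptions: the text does not state how near-zero displacements are handled, but some safeguard is needed because a system starting from rest has exactly zero true displacement at $t = 0$, where a pointwise relative error is otherwise undefined.

```python
def displacement_errors(u_pred, u_true, floor=1e-12):
    """Pointwise relative errors and the mean squared error.

    The denominator is floored because the true displacement is exactly
    zero at t = 0 for a system starting from rest.
    """
    rel = [abs(p - t) / max(abs(t), floor) for p, t in zip(u_pred, u_true)]
    mse = sum((p - t) ** 2 for p, t in zip(u_pred, u_true)) / len(u_true)
    return rel, mse


rel, mse = displacement_errors([1.1, 2.0, 0.0], [1.0, 2.0, 0.0])
```

The mean squared error is not affected by this issue, which makes it the more robust summary across all samples.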

Figure 11 illustrates the dynamic behavior of the SDOF system under harmonic excitation, comparing the displacement predicted using actual parameters, estimated parameters, and the noisy observation points. The solid blue curve represents the ground-truth displacement response, while the dashed green curve shows the predicted response based on the final PINN estimates. Red dots denote the noisy observational data points used to train the model.

The estimated response closely follows the true curve throughout the 10 s time window, with both curves exhibiting nearly identical amplitude and frequency characteristics. This close alignment reflects the accuracy of the final estimates of the damping coefficient, excitation amplitude, and excitation frequency. Minor deviations between the estimated and true curves, particularly toward the end of the time interval, are negligible, demonstrating the robustness of the model even in the presence of synthetic measurement noise.

The red observation points are densely distributed and consistently lie along both curves, confirming that the PINN successfully reconciles physical laws with empirical data. The visual agreement among the true response, the model prediction, and the noisy data confirms that the trained network not only fits the data but also generalizes to represent the system's underlying dynamics. This result reinforces the model's effectiveness in capturing the system's actual behavior from limited, noisy measurements.

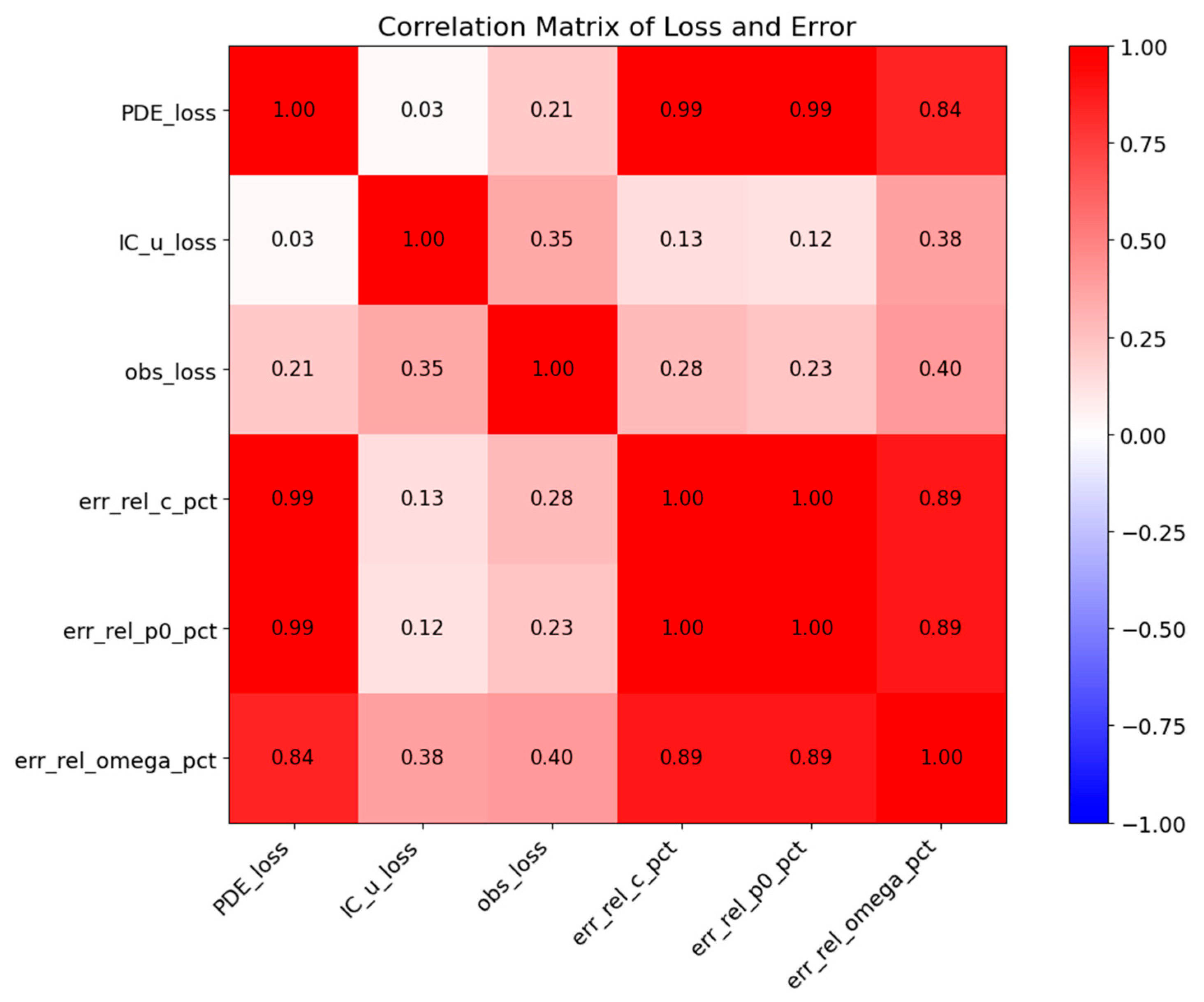

Variables in Figure 12 include the PDE loss, initial condition loss, observation loss, and the relative errors of the three estimated parameters. The color scale and numerical values denote Pearson correlation coefficients ranging from −1 to 1. The results show that the PDE loss and observation loss are strongly and negatively correlated with the parameter errors, indicating that these two losses are closely linked to estimation accuracy. In contrast, the initial condition loss exhibits only a weak positive correlation with the parameter errors, suggesting a minimal influence on accuracy. Consequently, the PDE loss (enforcing physical constraints) and the observation loss (ensuring data fidelity) emerge as the primary optimization objectives for error reduction. This outcome is consistent with the adopted loss weighting λ = [0.5, 0.02, 1.0], supporting the design of the PINN loss function. The negligible impact of the initial condition term further supports the choice to assign it a lower weight.
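A correlation matrix like the one in Figure 12 can be computed from logged training histories. The sketch below uses hypothetical series names and illustrative numbers, not the study's logs. A positive coefficient means that two quantities rise and fall together, so a loss whose reduction accompanies smaller parameter errors shows up with a positive sign. (Python 3.10+ also provides `statistics.correlation` for the pairwise coefficient.)

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correlation_matrix(series):
    """Pairwise Pearson coefficients for a dict of logged training series."""
    names = list(series)
    return names, [[pearson(series[a], series[b]) for b in names] for a in names]


# Hypothetical training logs (illustrative numbers only).
logs = {
    "pde_loss": [4.0, 3.0, 2.0, 1.0],
    "err_c": [0.8, 0.6, 0.4, 0.2],
    "err_omega": [0.1, 0.3, 0.5, 0.7],
}
names, R = correlation_matrix(logs)
```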

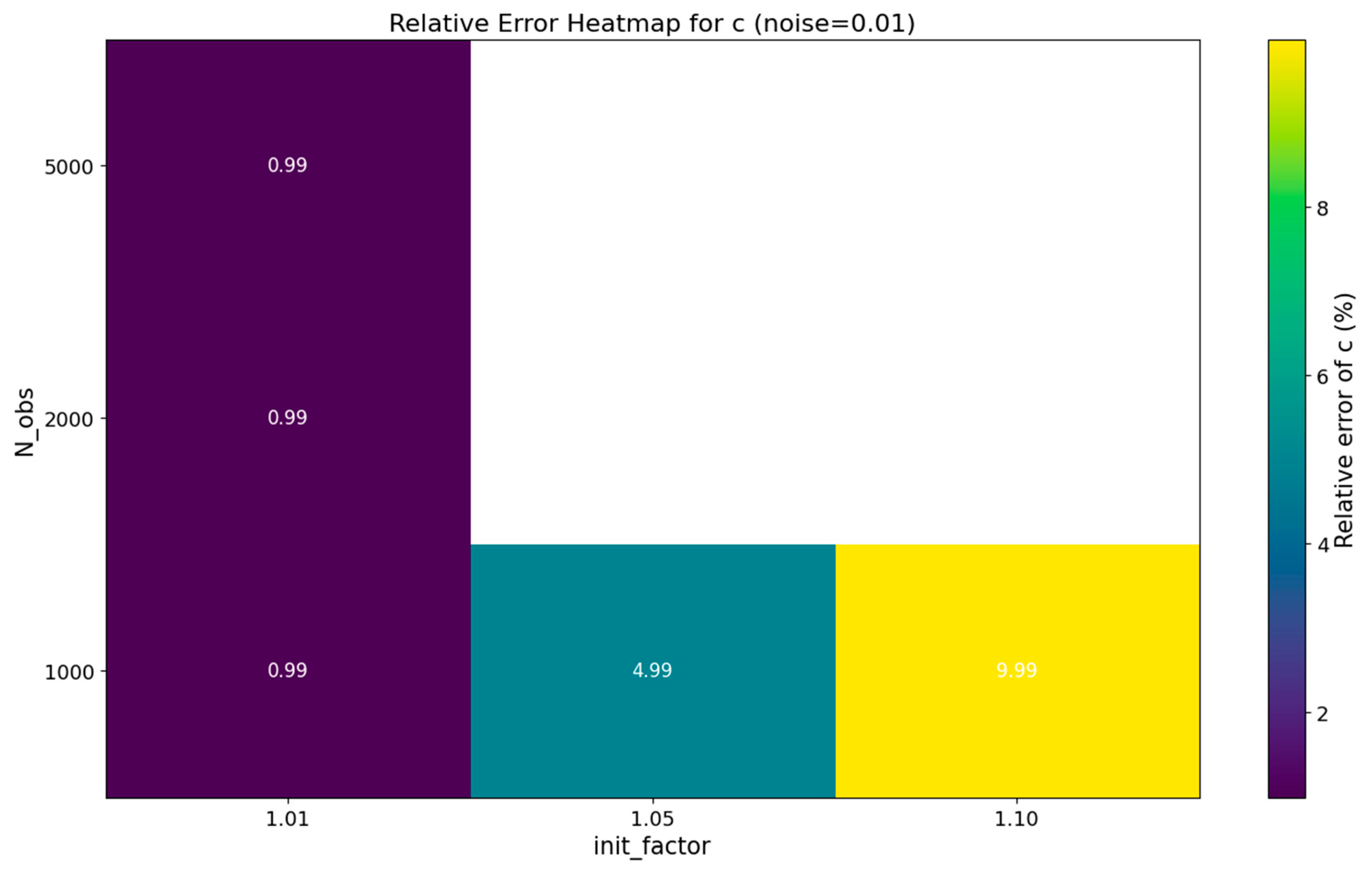

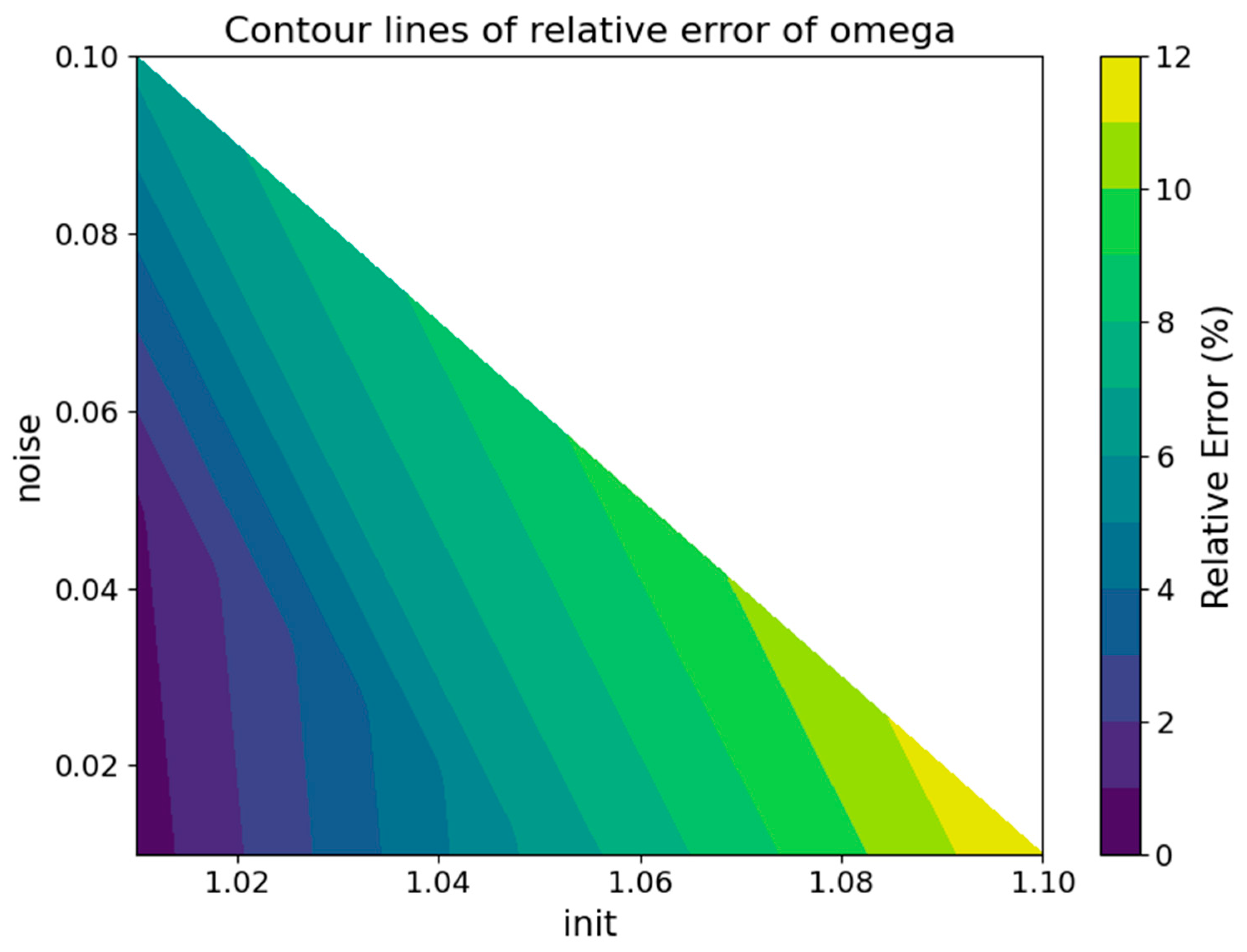

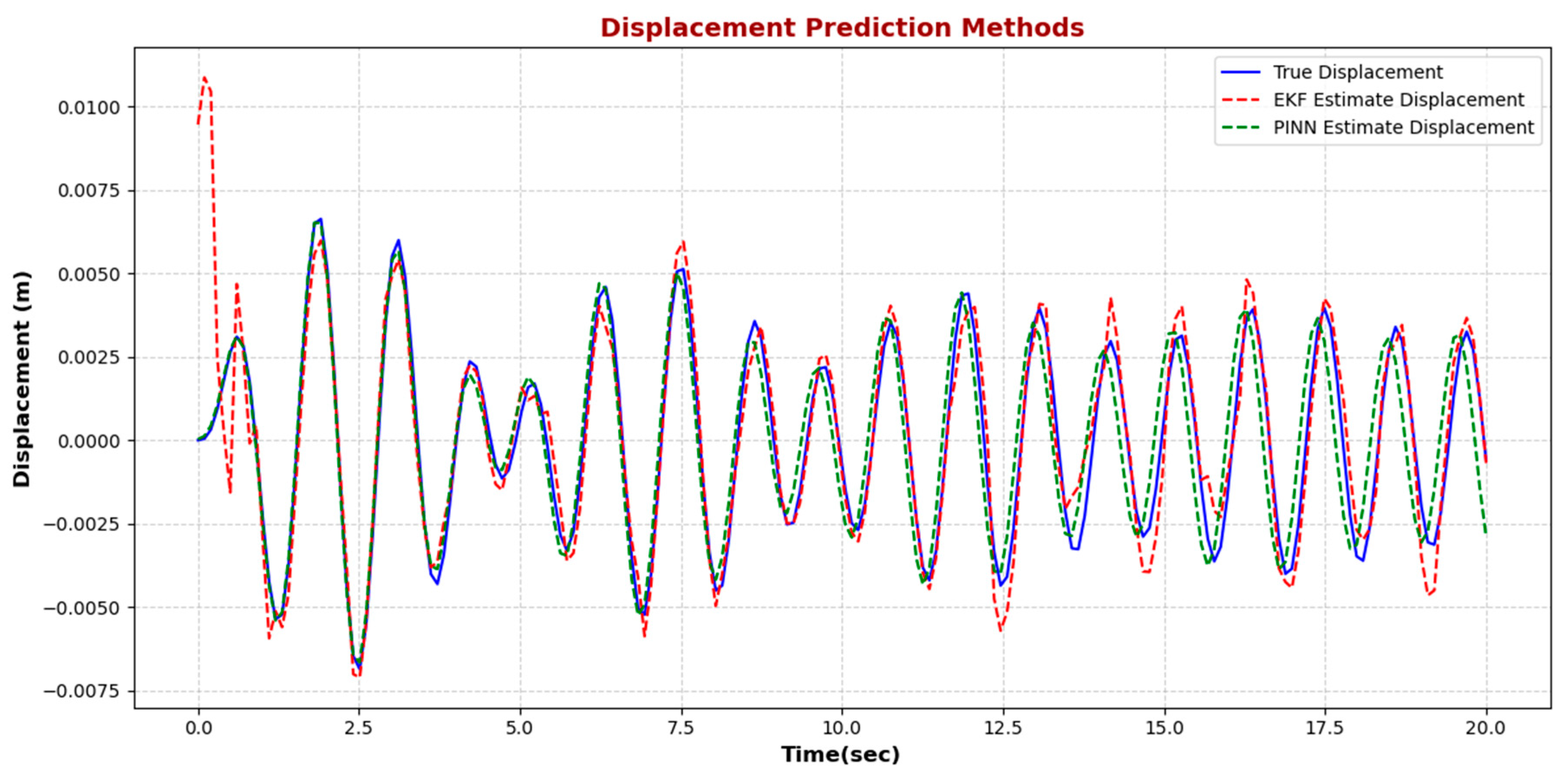

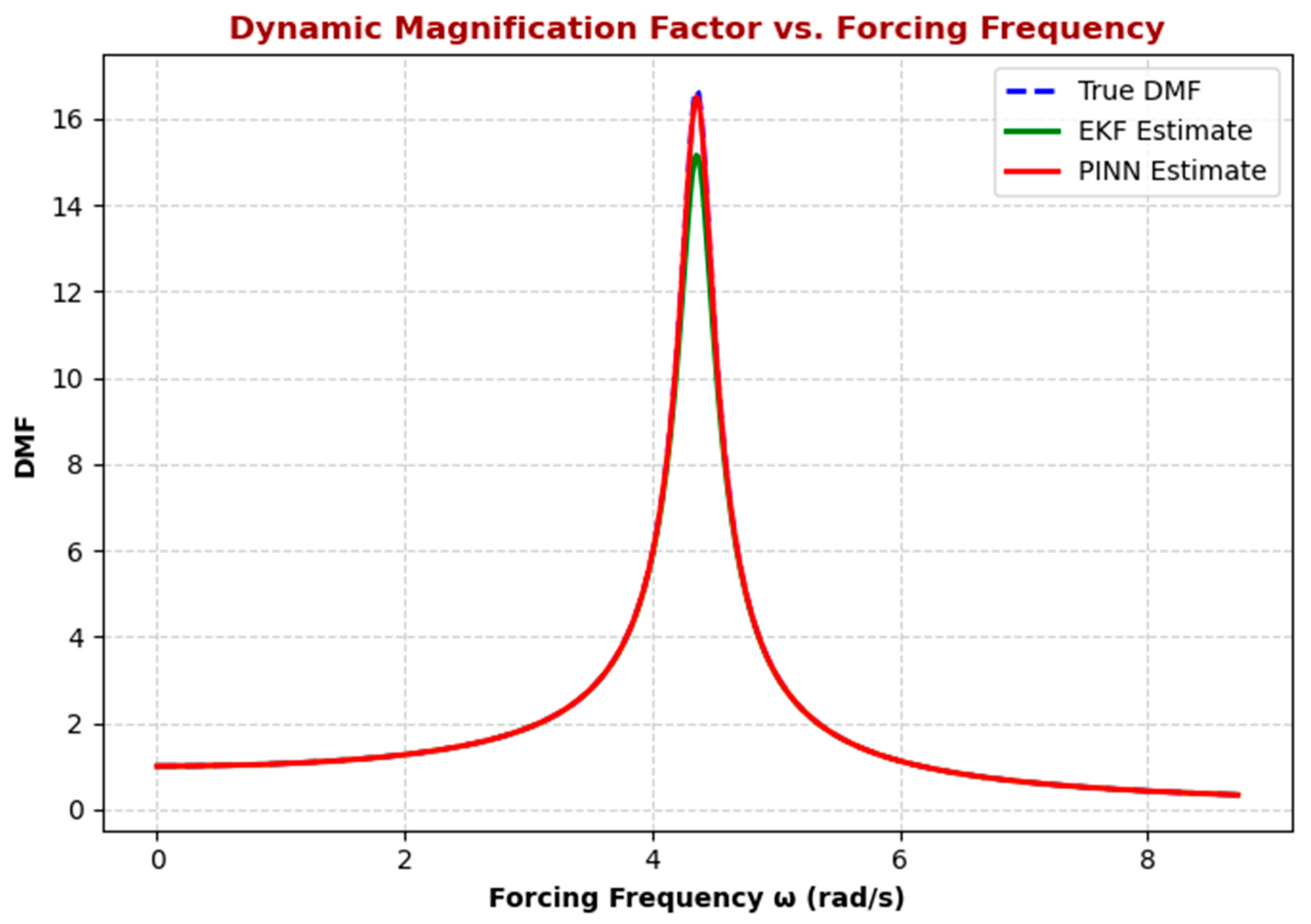

The results in