1. Introduction

Quantity takeoff (QTO) is a cornerstone of construction processes, serving as the foundation for essential activities such as cost estimation, workload planning, cost management, procurement, and construction scheduling [1,2]. The precision of QTO directly impacts a contractor’s ability to maintain financial stability, as inaccuracies can lead to cost overruns, resource misallocations, and project delays. Traditionally, QTO was conducted manually, relying on human interpretation of 2D drawings, a method fraught with inefficiencies and prone to errors [3]. As construction projects grow increasingly complex, traditional methods have become insufficient, prompting the need for advanced systems to streamline processes, reduce costs, and enhance accuracy [4].

Building Information Modeling (BIM) has transformed the architecture, engineering, and construction (AEC) industry by introducing intelligent, data-rich models that facilitate decision-making, enhance collaboration, and improve project management [3,5,6]. Among its diverse applications, BIM’s ability to parameterize and automatically manipulate model data has the potential to revolutionize QTO processes. BIM-based QTO enables the extraction of geometric and semantic data from models, automating the generation of detailed and accurate bills of quantities. Compared to manual methods, BIM-based QTO offers superior efficiency, accuracy, and cost-effectiveness [7,8,9,10], which is especially beneficial during early design phases where reliable cost estimates are crucial for informed decision-making [8].

Despite these advantages, BIM-based QTO faces significant challenges. The accuracy of quantities derived from BIM models depends heavily on the quality and completeness of the model, including its geometric representations and associated metadata [11]. Deficiencies such as incomplete details, inconsistent modeling practices, and misaligned parameters often lead to deviations in QTO outputs [12,13]. For instance, Khosakitchalert [13] reported discrepancies in quantities for walls and floors ranging from −10.78% to 43.18%, and 0.00% to 19.09%, respectively. These issues are compounded by the limitations of existing systems, which often fail to fully automate QTO workflows or accommodate the diverse requirements of complex projects [3].

The adoption of the Industry Foundation Classes (IFC) data schema has alleviated some interoperability issues, but it also has limitations. Critical data required for accurate QTO can be lost during IFC transformations, affecting output reliability [14,15]. Furthermore, the information stored in BIM models is often insufficient for comprehensive QTO, necessitating enhanced integration to minimize data loss and improve accuracy [16].

Several studies have attempted to address these challenges. Vassen [17] analyzed the capabilities of BIM-based QTO systems in delivering accurate and reliable cost estimates, emphasizing their potential to streamline workflows while addressing data integrity issues. Plebankiewicz et al. [18] developed a BIM-based QTO and cost estimation system but identified significant limitations in detecting inconsistencies within models. Zima [19] explored how modeling methods and data impact QTO accuracy, while Choi et al. [16] proposed a prototype system to improve QTO reliability during the design phase. Vitásek and Matejka [20] demonstrated the potential of automating QTO and cost estimation for transport infrastructure projects, illustrating how BIM models can significantly reduce labor-intensive tasks.

Research by Eroglu [21] revealed that many BIM-based tools are inadequate for the advanced data manipulations required for reliable quantity calculations. Incomplete or inaccurate BIM models often lead to quantities that are inadequate, excessive, or incorrect [3,12]. Sherafat et al. [22] demonstrated that leveraging programming interfaces to automate QTO processes can significantly enhance accuracy while addressing interoperability issues. Similarly, Vitásek and Matejka [20] highlighted the scalability offered by linking BIM models with external databases to enable robust and adaptable QTO solutions.

Recent advancements have pushed the boundaries of automation. Sherafat et al. [22] showed how programming interfaces reduce inconsistencies in material and geometry data, while Valinejadshoubi et al. [23] introduced a framework capable of automating both quantity extraction and validation for structural and architectural elements. Although effective in real-world scenarios, their framework relied on local databases, limiting scalability and real-time collaboration. Building on this foundation, the current research introduces a cloud-based architecture to address these limitations, enabling real-time collaboration, dynamic visualization, and enhanced data accessibility [20,22,23].

Although BIM-based QTO adoption is growing, several critical research gaps remain. First, most commercial systems, such as Autodesk Takeoff, Navisworks, and CostX, are closed and proprietary, focusing primarily on quantity extraction without providing transparent, customizable mechanisms for automated quality control of model parameters prior to takeoff. Second, prior academic studies often treat QTO and model quality control as separate processes, resulting in fragmented workflows that increase the risk of errors. Third, there is a lack of standardized, quantitative metrics for evaluating the accuracy, consistency, and responsiveness of QTO systems, making it difficult to benchmark performance across projects. Finally, while cloud-based collaboration is increasingly vital for multi-user environments, current solutions offer limited scalability and poor interoperability with open data standards such as IFC.

To address these gaps, this study is guided by the following research questions:

(1) How can QTO and QPC processes be integrated into a unified, cloud-based workflow to improve accuracy and efficiency?

(2) What standardized metrics can be developed to evaluate the reliability and responsiveness of automated QTO systems?

(3) How can open data standards and cloud-based collaboration enhance the scalability and generalizability of QTO frameworks across diverse projects?

This research contributes to the body of knowledge by introducing a model-agnostic QTO framework that integrates QPC directly into BIM workflows. Unlike prior studies reliant on local environments and predefined scripts, the proposed system leverages scalable cloud infrastructure for multi-stakeholder collaboration. It introduces novel performance metrics for QTO precision and responsiveness, providing objective measures for assessing model quality. By supporting open formats such as IFC and incorporating dynamic discrepancy detection across design phases, the framework enhances interoperability and reliability. In particular, we introduce five new, domain-specific validation metrics, IDR, PCR, QAI, CIT, and ARE, because no standardized set currently exists for automated QTO combined with pre-takeoff rule-based validation. Formal definitions are provided later to support replication. These contributions collectively advance the automation, transparency, and efficiency of BIM-based QTO processes, bridging the gap between academic research and industry practice.

The overall discussion highlights that the developed framework effectively unifies QTO and QPC in a transparent and measurable way, outperforming current commercial and research-based systems by integrating automated validation, real-time collaboration, and open-standard data exchange. It emphasizes that while the approach demonstrates strong potential in detecting inconsistencies and improving accuracy, its current validation on a single project and reliance on manually defined rules indicate areas for future development. Broader benchmarking, integration with international standards such as ISO 19650 and IFC 4.3, and the inclusion of AI-based anomaly detection are identified as next steps toward expanding the system’s scalability and generalizability across building and infrastructure projects.

2. Literature Review

BIM has been widely adopted across multiple domains, including energy efficiency [24,25], seismic risk assessment [26], Structural Health Monitoring (SHM) [27,28,29], operational monitoring [30,31], and QTO. Its integration enhances project planning and execution through intelligent, data-rich models [8]. Traditionally, QTO was manually conducted, with quantities extracted from 2D drawings and validated manually, a process that is time-intensive, error-prone, and inefficient [32]. Vassen [17] highlighted these inefficiencies and demonstrated how BIM-based QTO significantly improves speed and accuracy. By enabling automatic extraction of element quantities, BIM has made QTO faster, more reliable, and less labor-intensive [33,34].

Early research investigated BIM integration into QTO workflows. Plebankiewicz et al. [18] developed a BIM-based system for quantity and cost estimation, showing its potential to streamline workflows but also noting issues with inconsistent parameters. Zima [19] examined how modeling methods and data structures affect QTO accuracy, showing that poor modeling practices lead to discrepancies. Choi et al. [16] proposed a prototype leveraging Open BIM principles to improve estimation reliability during early design phases. Vitásek and Matejka [20] explored QTO automation for transport infrastructure, demonstrating scalability for large and complex projects. Vieira et al. [34] introduced a semi-automated workflow for architectural elements but lacked mechanisms for model validation. These efforts marked important progress but were limited in interoperability and data quality assurance.

The accuracy of BIM-based QTO relies on the completeness and quality of BIM models, including geometry, material definitions, and parameter consistency [35]. Khosakitchalert et al. [12] found that insufficient detail in compound elements like walls and floors leads to significant inaccuracies. Kim et al. [36] identified discrepancies arising from inconsistent material naming conventions and geometric data and proposed strategies to reduce them, particularly for interior components, demonstrating their impact throughout the project lifecycle [37]. Golaszewska and Salamak [38] highlighted challenges such as interoperability issues, inconsistent element descriptions, and poor team communication, emphasizing the need for standardized protocols. Smith [39] stressed the importance of integrating cost management and QTO through 5D BIM to bridge estimation and planning.

Recent research has advanced domain-specific automation and algorithmic approaches to improve QTO accuracy and efficiency. Cepni and Akcamete [40] developed an automated QTO system for formwork calculations, which showed reductions in manual errors but was limited to a single domain. Taghaddos et al. [41] leveraged application programming interfaces (APIs) to enhance automation, while Sherafat et al. [22] addressed interoperability issues by improving cross-platform data extraction accuracy. Fürstenberg et al. [42] developed an automated QTO system for a Norwegian road project, demonstrating BIM’s potential in infrastructure-scale projects while addressing challenges of complex geometries and alignment with construction requirements. Han et al. [43] proposed a 3D model-based QTO system to reduce manual workloads but faced interoperability limitations. Yang et al. [44] introduced the Automation Extraction Method (AEM), achieving an error of less than 1% in controlled environments, though scalability for complex projects remains uncertain. Akanbi and Zhang [45] presented IFC-based algorithms for volumetric and areal extractions independent of proprietary software, though handling complex geometries remains a challenge. Pham et al. [46] proposed a BIM-based framework for automatic daily extraction of concrete and formwork quantities, integrating QTO with schedules to optimize material planning and reduce waste.

Regional variations in construction standards create additional challenges for QTO accuracy. Chen et al. [47] introduced a BIM-based QTO Code Mapping (BQTCM) method to align BIM data with local classification systems, reducing information loss. Similarly, Liu et al. [48] developed a knowledge model-based framework embedding semantic rules and standardized measurement methods into BIM models to achieve automated, code-compliant QTO with minimal manual intervention. These advances emphasize the importance of aligning BIM with regional and national standards.

Commercial model-checking tools, such as Solibri Model Checker v25, provide validation functions but rely heavily on IFC for data exchange, leading to data loss and limiting rule customization [49]. Eilif [49] and Seib [50] highlighted the need for flexible, project-specific rule sets to overcome these limitations. Khosakitchalert et al. [12] improved QTO accuracy for compound elements but did not address broader issues of data alignment. Alathamneh et al. [51] studied the adoption of 5D BIM, reporting productivity benefits but identifying barriers like limited technical expertise and complex software interfaces that hinder implementation.

Some studies have moved toward collaborative, scalable solutions. Valinejadshoubi et al. [23] presented a framework capable of both quantity extraction and validation for structural and architectural models. While this is a significant improvement, reliance on local databases limits scalability and real-time multi-user collaboration. Alathamneh et al. [51] conducted a systematic review of BIM-based QTO research, identifying persistent gaps in interoperability, scalability, and workforce training. They proposed a conceptual model for sustainable QTO adoption, emphasizing open, cloud-based solutions.

Overall, substantial progress has been made in automating QTO and improving model reliability, yet significant challenges persist. Errors in geometric data, material definitions, and parameters continue to compromise accuracy, making integrated validation essential. Interoperability between proprietary systems remains limited, and reliance on IFC often causes information loss. Few studies introduce standardized performance metrics to evaluate QTO precision and responsiveness, limiting benchmarking and improvement. Most existing systems operate locally, restricting scalability and real-time collaboration. Finally, commercial tools remain rigid, offering limited customization for project-specific needs. These gaps demonstrate the need for a cloud-integrated QTO framework that unifies quantity extraction and automated validation, supports real-time collaboration, and introduces standardized metrics for transparent, reliable QTO processes.

3. Research Methodology

This study develops a comprehensive and fully automated framework for accurate QTO and QPC using advanced tools and cloud-based technologies. Building upon the authors’ earlier work [23], this framework introduces several major enhancements, including cloud-based data storage, real-time visualization, and improved automation through the Feature Manipulation Engine (FME). Unlike the earlier Dynamo- and Excel-based workflow [23], the updated system leverages a cloud-hosted MySQL database for scalability, real-time collaboration, and automated quality validation. Dynamo is a visual programming and computational design tool used for automation [52].

Figure 1 illustrates the overall research methodology and system architecture, showing the integration of BIM models, automated data extraction and analysis, cloud-based storage, and interactive visualization dashboards. The framework consists of four main modules:

(1) BIM Models: The source for QTO and QPC.

(2) Data Extraction and Analysis Module: To automate parameter extraction and consistency checks using FME.

(3) Data Storage Module: To organize validated data in a cloud-based MySQL database.

(4) Data Visualization Module: To provide real-time dashboards for QTO tracking and validation through Power BI.

Structural and architectural BIM models, containing essential QTO parameters, were provided by the engineering teams and stored on ProjectWise, a cloud-based collaboration platform, ensuring real-time accessibility and eliminating versioning issues. The framework processes these models through an automated workflow, significantly improving both efficiency and scalability.

The Data Extraction and Analysis Module, developed using FME, replaces Dynamo scripts from previous research [23]. FME provides a more scalable and flexible approach, featuring a graphical interface for visually configuring workflows and integrating directly with cloud repositories such as ProjectWise. This eliminates manual interventions and ensures dynamic data synchronization. Within this module, key QTO parameters are extracted, including identification parameters (e.g., Family Name, Type Name, Assembly Description, and Type Mark) and quantity parameters (e.g., Material Name, Material Model, Area, and Volume). These are automatically sorted, filtered, and validated to generate precise calculations of steel weights and concrete volumes, accounting for allowances such as a 15% connection factor for steel and a 5% waste factor for concrete.
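The allowance step described above can be illustrated with a minimal Python sketch. It is not the FME workspace used in the study. The `Element` record, the assumption that Nominal Weight is a mass per unit length (kg/m) multiplied by Cut Length, and the function names are all hypothetical; only the 15% and 5% factors come from the text.

```python
from dataclasses import dataclass

# Allowance factors quoted in the text; treated here as adjustable defaults.
STEEL_CONNECTION_FACTOR = 0.15
CONCRETE_WASTE_FACTOR = 0.05


@dataclass
class Element:
    """Hypothetical record for one extracted BIM element."""
    element_id: str
    material: str                 # "steel" or "concrete" (illustrative labels)
    nominal_weight: float = 0.0   # assumed unit: kg per metre (steel only)
    cut_length: float = 0.0       # assumed unit: metres (steel only)
    volume: float = 0.0           # cubic metres (concrete only)


def steel_tonnes(elements, factor=STEEL_CONNECTION_FACTOR):
    """Sum weight-per-length times cut length, then add the connection allowance."""
    base_kg = sum(e.nominal_weight * e.cut_length
                  for e in elements if e.material == "steel")
    return base_kg * (1 + factor) / 1000.0


def concrete_m3(elements, factor=CONCRETE_WASTE_FACTOR):
    """Sum concrete volumes, then add the waste allowance."""
    base = sum(e.volume for e in elements if e.material == "concrete")
    return base * (1 + factor)
```

Keeping the factors as function arguments mirrors the statement that the allowances can be adjusted per project.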

A core advancement in this framework is the automated QPC mechanism, which detects missing or inconsistent attributes before the QTO process. The QPC module was designed using a structured, rule-based approach informed by three sources:

Industry Standards: ISO 19650 for BIM information management, Level of Development (LOD) and Level of Information (LOI) matrices, and measurement conventions such as NRM and CSI MasterFormat.

Prior Literature: Studies on rule-based model checking and automated validation frameworks [22,49].

Expert Consultation: Iterative workshops with project engineers, estimators, and BIM managers to align rules with practical QTO workflows.

The rules are organized into three groups:

Completeness Rules: To identify missing critical parameters, e.g., Nominal Weight for steel or Volume for concrete components.

Consistency Rules: To verify logical alignment, such as ensuring Volume = Area × Thickness for walls or checking material types against element classifications.

Classification Rules: To enforce correct categorization, such as ensuring that Worksets containing “STE” include only structural steel elements.

These rules are stored in a SQL-based repository, making them easily extensible to other disciplines (e.g., mechanical, electrical, and plumbing). Validation is performed using FME tools like Tester, Sampler, and AttributeValidator, which dynamically evaluate model elements and flag missing, redundant, or inconsistent parameters. During transfer to MySQL, SQL triggers apply sequential rules to prevent critical errors from propagating downstream.
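The three rule groups can be sketched in plain Python as follows. This is an illustrative stand-in rather than the FME and SQL implementation described in the text: the dictionary keys, the 1% tolerance, and the function names are assumptions introduced for the example.

```python
def completeness(element):
    """Completeness rule: flag a missing critical parameter for the material."""
    required = {"steel": "nominal_weight", "concrete": "volume"}
    key = required.get(element.get("material"))
    if key and element.get(key) is None:
        return [f"missing {key}"]
    return []


def consistency(element, rel_tol=0.01):
    """Consistency rule: for walls, Volume should match Area x Thickness.

    The relative tolerance is a hypothetical value, not taken from the study.
    """
    if element.get("category") != "wall":
        return []
    area, thickness, volume = (element.get(k) for k in ("area", "thickness", "volume"))
    if None in (area, thickness, volume):
        return []  # missing inputs are left to the completeness rules
    expected = area * thickness
    if abs(volume - expected) > rel_tol * max(abs(expected), 1e-9):
        return ["volume != area x thickness"]
    return []


def classification(element):
    """Classification rule: Worksets containing 'STE' hold only steel.

    Note this is a naive substring test; a real rule would likely match a
    stricter naming pattern.
    """
    if "STE" in element.get("workset", "") and element.get("material") != "steel":
        return ["non-steel element in STE workset"]
    return []


RULES = (completeness, consistency, classification)


def run_qpc(elements):
    """Return {element id: [issues]} for every element that fails a rule."""
    report = {}
    for el in elements:
        issues = [msg for rule in RULES for msg in rule(el)]
        if issues:
            report[el["id"]] = issues
    return report
```

Keeping each rule as an independent function that returns a list of messages makes it straightforward to append discipline-specific rules to `RULES`, in the spirit of the extensible rule repository described above.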

Validated quantities and quality check results are automatically organized in MySQL, categorized by material type, structural element, and project phase. This centralized database enables efficient multi-project data management and seamless integration with visualization tools.

Table 1 lists the types of architectural and structural materials considered in this study.

The Data Visualization Module connects Power BI directly to the live MySQL database. This eliminates the need for manual Excel uploads and provides real-time dashboards for tracking design changes, material trends, and QPC results. Stakeholders can interactively filter data by material type, project phase, or structural category to view total quantities, breakdowns by segment, flagged inconsistencies, and historical changes for cost control and decision-making.

Figure 2 illustrates how these four modules are integrated into a seamless workflow.

The process begins with cloud-hosted BIM models (Module 1).

FME extracts and validates parameters (Module 2).

The processed data is stored in MySQL (Module 3).

Power BI dynamically visualizes the validated quantities (Module 4).

By automating data flow across these modules, the framework delivers a fully connected, scalable, and transparent QTO/QPC process. This approach overcomes the limitations of the earlier semi-automated method [23], significantly reducing manual effort, improving data integrity, and enabling real-time collaboration across stakeholders. Ultimately, this framework enhances the efficiency, accuracy, and reliability of construction cost estimation processes.

4. Framework Architecture

The proposed framework was applied to a real construction project in Canada to demonstrate its functions and validate its performance. It integrates four main modules: (1) BIM Models, (2) Data Extraction and Analysis, (3) Data Storage, and (4) Data Visualization.

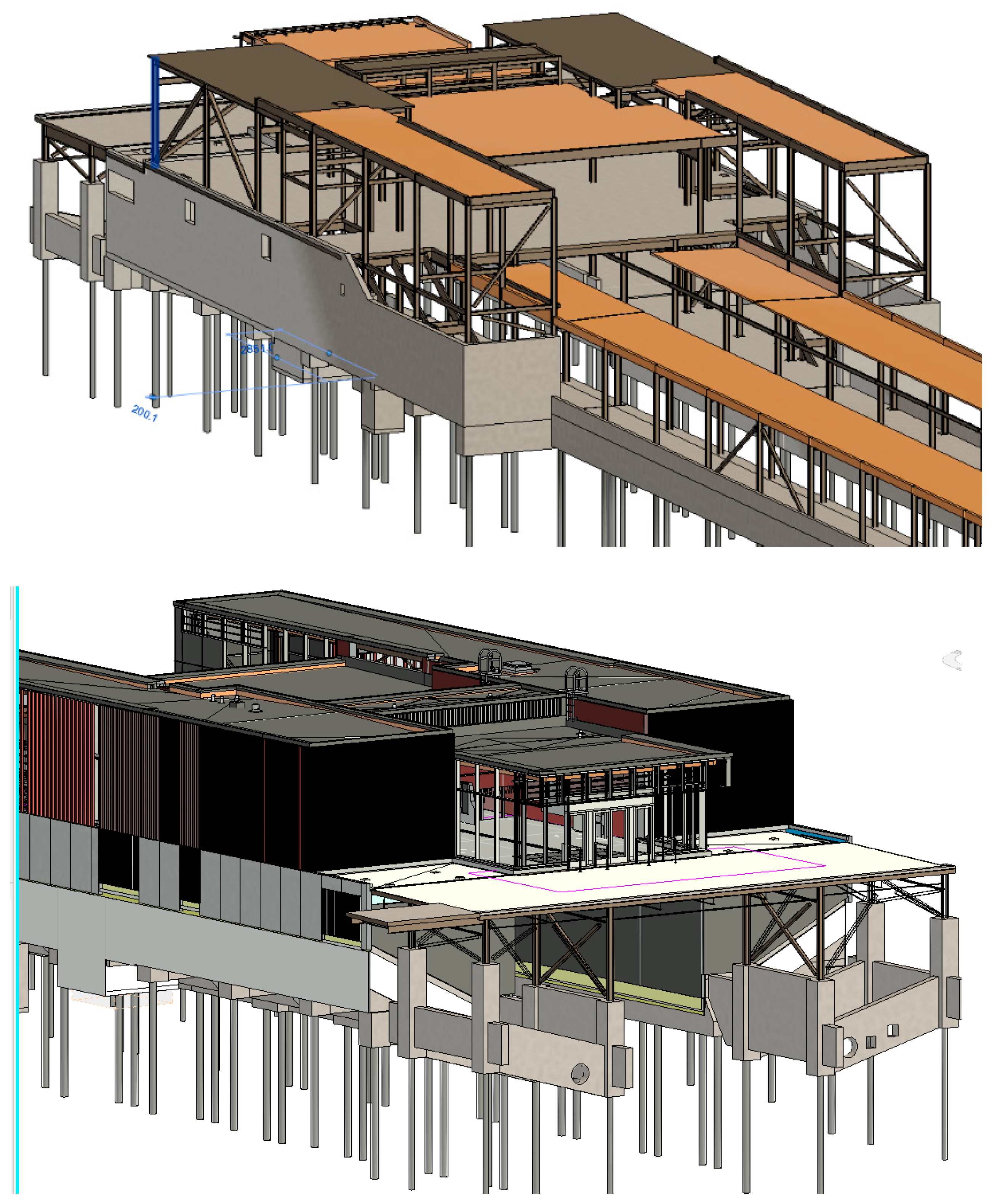

Figure 3 presents the structural and architectural BIM models used in this case study.

4.1. Development of Case Study

Architectural and structural BIM models were developed in Autodesk Revit 2025 at LOD 300, ensuring sufficient detail for accurate QTO calculations. These models were stored in ProjectWise, a cloud-based repository that facilitates real-time access and prevents versioning conflicts. This framework extracts data directly from cloud-hosted models, ensuring data consistency across all stakeholders.

The framework extracts essential geometric and identity parameters, including Nominal Weight, Length, Cut Length, Volume, Area, Material Area, and Thickness, as well as metadata such as Material Name, Material Model Name, Family Name, Type Name, Type Mark, and Assembly Description. Its flexible design supports adding project-specific parameters for customized validation or inconsistency checks.

4.2. Data Extraction and Analysis Module

The Data Extraction and Analysis Module is the core component of the framework, responsible for automating data retrieval, validation, and computation of material quantities. This module is implemented in FME, replacing the Dynamo-based workflows from previous research [23]. FME offers direct integration with ProjectWise, enabling seamless, real-time access to models while reducing manual intervention.

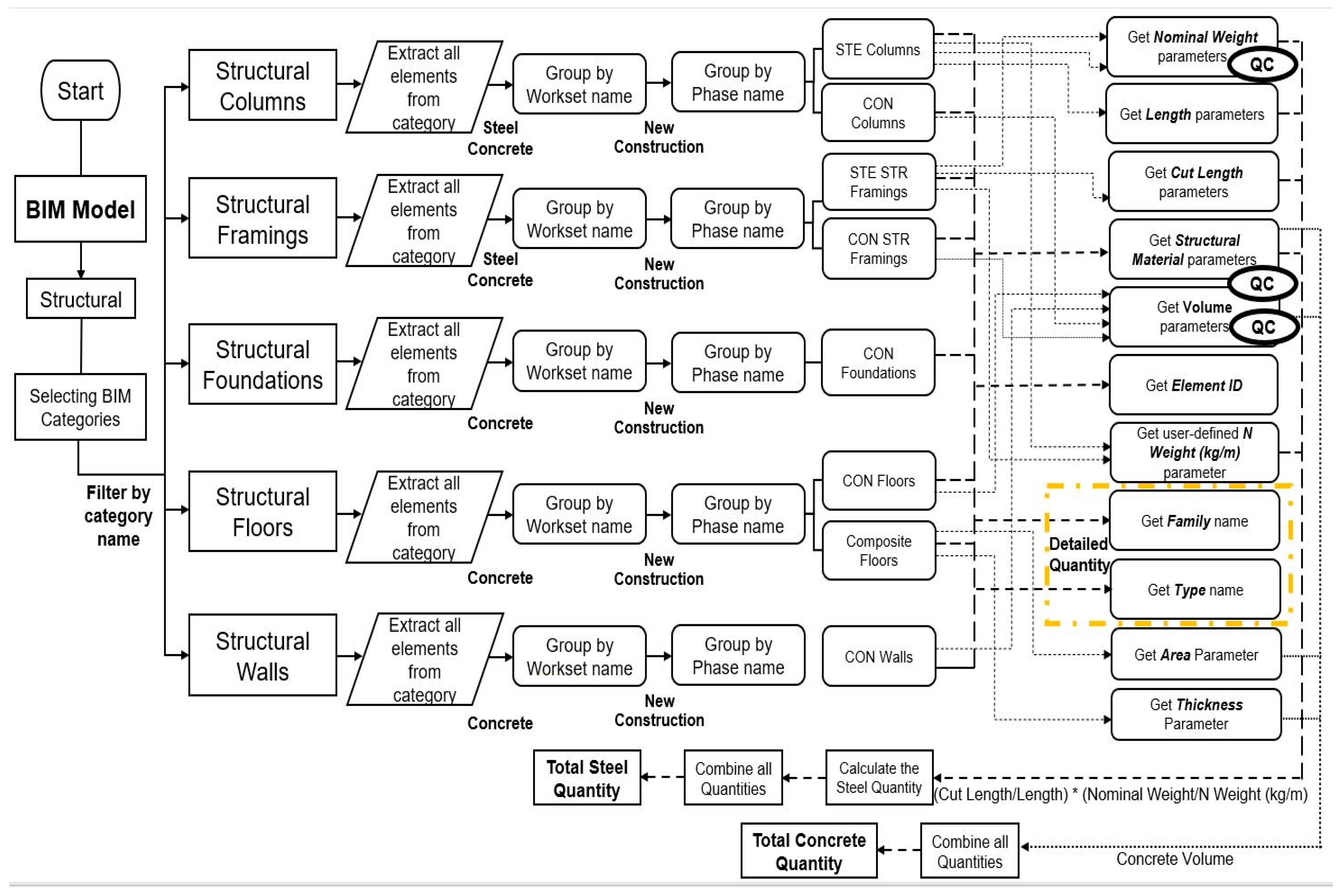

As shown in Figure 4, the process for structural models includes the following:

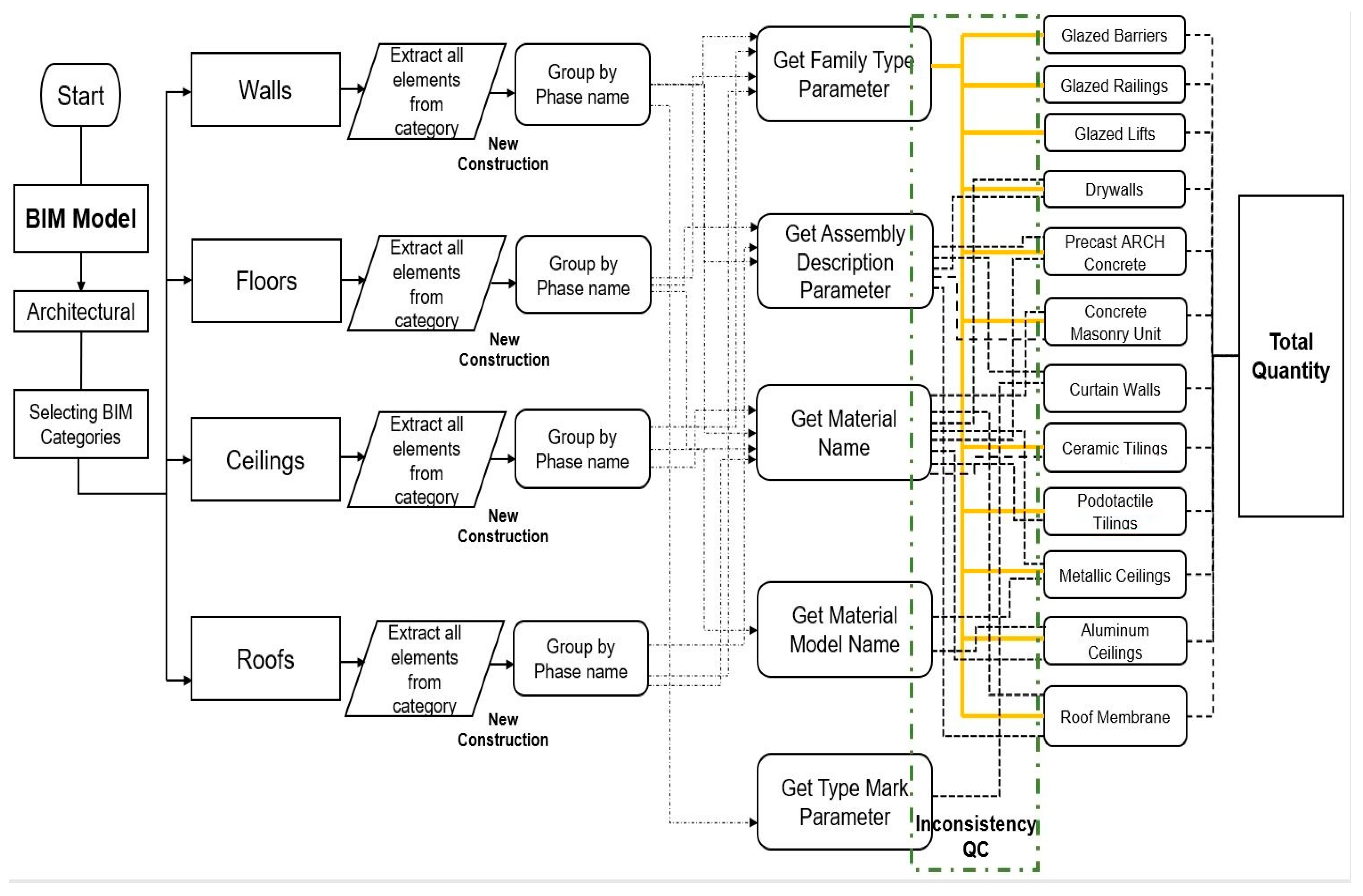

Figure 5 illustrates the workflow for architectural elements:

FME transformers such as FeatureReader, StatisticsCalculator, and AttributeSplitter ensure efficient data handling. These workflows extract parameters from BIM models, validate them using rule-based logic, and transfer verified data seamlessly to the MySQL database.

4.3. Data Storage Module

The Data Storage Module uses a cloud-based MySQL Azure (RTM) v12 database, replacing the local Excel-based storage from earlier workflows [23]. This upgrade significantly improves scalability, ensures real-time updates, and provides structured tables categorized by material type, structural component, and project phase. Automated synchronization eliminates the need for manual updates, ensuring that the data remains current as the BIM models evolve. In addition to dynamic BIM-derived quantities, static reference data such as bidding quantities and 2D QTO estimates are also stored in the database to enable comparative analysis and support cost control tracking.

The system’s advanced filtering, sorting, and querying capabilities allow stakeholders to generate detailed reports and track design changes over time. For example, quantities can be segmented by material type (e.g., steel or concrete), construction phase (e.g., new or existing), or project discipline (e.g., structural or architectural). This level of detail provides valuable insights for decision-making and facilitates effective project management. Furthermore, the MySQL database is directly connected to Power BI, enabling seamless and live visualization of key metrics such as total quantities, discrepancies, and cost summaries without the need for periodic manual uploads.

4.4. Data Visualization Module

The Data Visualization Module, developed in Microsoft Power BI, provides real-time and interactive dashboards for stakeholders. Unlike previous workflows that relied on periodic manual updates, this module continuously synchronizes with the MySQL database to ensure that the visualized data is always current. The dashboards present comprehensive visualizations of quantities, discrepancies, and trends, offering an intuitive and dynamic interface for monitoring project performance.

One of the core visualization features is the comparison of total quantities across different sources, including BIM-based estimates, 2D drawing-derived quantities, and bidding estimates. These comparisons are displayed using clustered bar charts, allowing stakeholders to easily identify variations and discrepancies between different estimation methods. Another feature, the “Percent of Budgeted Quantities,” highlights whether the quantities derived from BIM models align with budgetary constraints established during the bidding process. Significant deviations are automatically flagged for review, helping project managers take corrective actions in a timely manner.
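A minimal sketch of the "Percent of Budgeted Quantities" logic is given below, assuming the measure is the BIM-derived quantity expressed as a percentage of the bidding quantity. The 10% tolerance and the function names are hypothetical; the study does not state the threshold used for flagging.

```python
def percent_of_budget(bim_qty, budget_qty):
    """BIM-derived quantity as a percentage of the budgeted (bidding) quantity."""
    if budget_qty <= 0:
        raise ValueError("budget quantity must be positive")
    return 100.0 * bim_qty / budget_qty


def flag_deviations(rows, tolerance_pct=10.0):
    """Return (item, percent) pairs whose percent differs from 100 by more
    than the tolerance. `rows` is an iterable of (item, bim_qty, budget_qty).
    The default tolerance is an assumed, illustrative value.
    """
    flagged = []
    for item, bim_qty, budget_qty in rows:
        pct = percent_of_budget(bim_qty, budget_qty)
        if abs(pct - 100.0) > tolerance_pct:
            flagged.append((item, round(pct, 1)))
    return flagged
```

In a dashboard setting the same comparison would typically be expressed as a measure over the database tables; the Python form is used here only to make the arithmetic explicit.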

The dashboard also includes design change tracking functionality, supported by time-based filters such as month and year. This feature allows stakeholders to observe the effects of design updates on material quantities over time, making it easier to correlate changes with specific project decisions. By visualizing trends and potential risks, the module enables proactive decision-making, minimizing the likelihood of budget overruns and resource inefficiencies. Overall, the Data Visualization Module significantly enhances the transparency, automation, and interactivity of the framework compared to earlier semi-automated methods [23].
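The month-and-year change tracking described above can be approximated with the following sketch. It assumes, hypothetically, that each stored record carries a year, a month, a material label, and a quantity, and that records for the same period are summed; the actual dashboard logic is not detailed in the text.

```python
from collections import defaultdict


def monthly_totals(snapshots):
    """Aggregate quantities per material and (year, month) period.

    `snapshots` is an iterable of (year, month, material, quantity) tuples,
    a hypothetical record layout used only for this illustration.
    """
    totals = defaultdict(lambda: defaultdict(float))
    for year, month, material, qty in snapshots:
        totals[material][(year, month)] += qty
    return totals


def month_over_month(totals_for_material):
    """Return [(period, change versus the previous recorded period)]."""
    periods = sorted(totals_for_material)
    return [
        (cur, totals_for_material[cur] - totals_for_material[prev])
        for prev, cur in zip(periods, periods[1:])
    ]
```

Sorting `(year, month)` tuples gives chronological order directly, so consecutive pairs correspond to successive recorded periods.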

As illustrated earlier in Figure 2, cloud-hosted BIM models serve as the initial data source, feeding directly into the FME automation pipeline where parameters are extracted, validated, and processed. The validated data then flows into the MySQL database for structured storage, which is dynamically connected to Power BI dashboards for real-time visualization and reporting.

By integrating QTO and QPC into a unified, cloud-based workflow, this framework automates data handling across all stages, from extraction to visualization. It enhances collaboration among multi-stakeholder teams by providing a centralized and transparent data environment, while also improving traceability by linking QTO outputs with automated quality validation processes. Moreover, the framework addresses key scalability and performance gaps identified in earlier workflows [23], offering a robust solution capable of handling complex projects and multi-user environments.

This integrated approach represents a significant advancement over traditional semi-automated methods by reducing manual effort, improving accuracy, and enabling real-time monitoring of project quantities. Ultimately, it provides a powerful decision-support tool for construction cost estimation, material tracking, and quality control, supporting efficient and data-driven project management practices.

5. Framework Implementation

The framework consists of four interconnected modules: BIM Models, Data Extraction and Analysis, Data Storage, and Data Visualization. This section demonstrates its application to a real construction project in Canada, validating its performance and practical utility. Cloud-hosted architectural and structural BIM models were provided by the engineering team, ensuring real-time access and collaboration. The integrated framework, driven by FME automation, delivers scalable and efficient QTO and QPC outputs to support estimation, cost control, and procurement workflows.

For structural elements, the workflow begins by selecting relevant categories such as structural columns, framings, foundations, walls, and floors from the BIM model stored in ProjectWise. The system filters elements by material type to isolate steel and concrete components. Workset naming conventions (e.g., “STE” for steel, “CON” for concrete) are used to ensure precise grouping and classification. For steel elements, key parameters such as Element ID, Nominal Weight, Element Name, Length, and Cut Length are extracted. For concrete elements, parameters such as Element ID, Volume, Element Name, and Thickness are retrieved.

A major improvement of this framework is the fully automated QPC module, which is integrated directly into the FME workflow. This enables real-time error detection prior to quantity calculations and eliminates the need for manual quality checks. Examples of QPC validations include the following:

Identifying steel columns or framings with missing Nominal Weight values.

Detecting structural concrete columns, walls, floors, framings, or foundations with missing Volume data.

When inconsistencies are detected, a structured report is automatically generated and emailed to the engineering team. The report lists flagged elements with their IDs, families, and Worksets, enabling quick corrections in the BIM model. Once updated, the workflow is re-run to confirm that all elements are correctly defined before proceeding with final quantity calculations. After validation, the framework calculates total steel and concrete quantities. Adjustment factors are applied to account for small steel plates, connections, and waste, typically 15% for steel and 5% for concrete, though these values can be modified to reflect project-specific estimation requirements. For this case study, the final validated quantities were 255 tons of steel and 1453 m³ of concrete, ensuring that all components were included and consistent with the project’s LOD and LOI standards.

The full FME automation process is shown in Figure 6, which provides an overview of the integrated QTO/QPC workflow. The process begins with reading BIM data directly from ProjectWise, followed by automated parameter extraction, validation, and structured data transfer to the cloud-based MySQL database. To ensure accuracy, the QPC workflow developed within FME is illustrated in Figure 7. Critical parameters, such as Nominal Weight for steel and Volume for concrete, are continuously monitored. Inconsistencies, such as missing values or material mismatches (e.g., steel elements incorrectly tagged as concrete), are automatically flagged. Flagged elements are highlighted in red within the workflow, and their details, such as Object ID, Family Name, Family Type Name, Workset Name, and Category, are recorded in a report for the engineering team. For example, three steel elements missing Nominal Weight values were identified and flagged during testing. These automated checks ensure that only verified and complete data proceed to the quantity calculation stage, greatly improving reliability and efficiency.

The same principles are applied to architectural BIM elements. The framework extracts parameters such as Family Type Name, Assembly Description, Material Name, Material Model Name, Type Mark, Area, and Material Area, depending on the element category (e.g., walls, floors, ceilings, and roof membranes).

An example of QPC validation is illustrated by Concrete Masonry Unit (CMU) walls, where quantities are cross-checked using multiple parameters to confirm consistency. For this project, the CMU wall quantities were consistent across all parameters, totaling 1090 m². However, in the precast architectural concrete category, discrepancies were detected. The Assembly Description parameter recorded only 339 m², while calculations based on Family Type Name and Material Name produced 580 m². Investigation revealed that nine precast elements lacked an Assembly Description, meaning they were excluded from calculations using that parameter. The framework flagged these issues and provided a list of missing elements, allowing for prompt updates to the BIM model. After corrections were applied, the quantities were re-calculated to ensure accuracy.

Table 2 summarizes the QTO outputs for each architectural category. Most categories, such as CMU and curtain walls, show perfect consistency across parameters. However, the highlighted discrepancies in precast architectural concrete quantities emphasize the framework’s ability to identify problematic data and ensure quality. As shown in Table 2, the quantity calculated using the Assembly Description parameter (339 m2) significantly deviates from the quantities derived using Family Type Name (580 m2) and Material Name (580 m2). This inconsistency, highlighted in red, represents a 41.5% deviation and indicates missing or incorrect parameter values in the BIM model. Such discrepancies require manual review and correction to ensure data integrity.
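A minimal sketch of this cross-parameter check follows. It assumes the deviation is measured as the spread between the largest and smallest totals relative to the largest, which gives roughly 41.5% for the reported 339 m2 and 580 m2 figures. The function names and the two sample panels are hypothetical.

```python
def total_by_parameter(elements, parameter):
    """Sum Area over the elements that carry a non-empty value for `parameter`."""
    return sum(e["Area"] for e in elements if e.get(parameter))


def deviation_percent(totals):
    """Spread between the largest and smallest total, relative to the largest."""
    largest, smallest = max(totals.values()), min(totals.values())
    return 0.0 if largest == 0 else (largest - smallest) / largest * 100.0


def missing(elements, parameter):
    """Object IDs of elements that lack a value for `parameter`."""
    return [e["Object ID"] for e in elements if not e.get(parameter)]


# Hypothetical elements (not project data): P2 lacks an Assembly Description.
panels = [
    {"Object ID": "P1", "Family Type Name": "Precast Panel",
     "Material Name": "Precast Concrete",
     "Assembly Description": "Precast Architectural Concrete", "Area": 30.0},
    {"Object ID": "P2", "Family Type Name": "Precast Panel",
     "Material Name": "Precast Concrete",
     "Assembly Description": "", "Area": 20.0},
]

checked = ("Assembly Description", "Family Type Name", "Material Name")
sample_totals = {p: total_by_parameter(panels, p) for p in checked}
sample_deviation = deviation_percent(sample_totals)
sample_missing = missing(panels, "Assembly Description")

# Totals reported in the text for precast architectural concrete (m2).
reported = {"Assembly Description": 339.0, "Family Type Name": 580.0, "Material Name": 580.0}
reported_deviation = deviation_percent(reported)

# CMU walls agree across all parameters, so the deviation is zero.
cmu_deviation = deviation_percent({p: 1090.0 for p in checked})
```

Returning the IDs of elements that lack the parameter alongside the deviation mirrors the list of missing elements that the framework hands back to the modeling team.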

Additionally, the “N/A” entries in the table denote parameters that were not utilized in the workflow for calculating the corresponding category’s quantity. For instance, the Material Model Name and Type Mark parameters were not applied to categories like Glazed Barriers, Glazed Railings, or Roof Membrane, as these parameters were not relevant for those specific workflows.

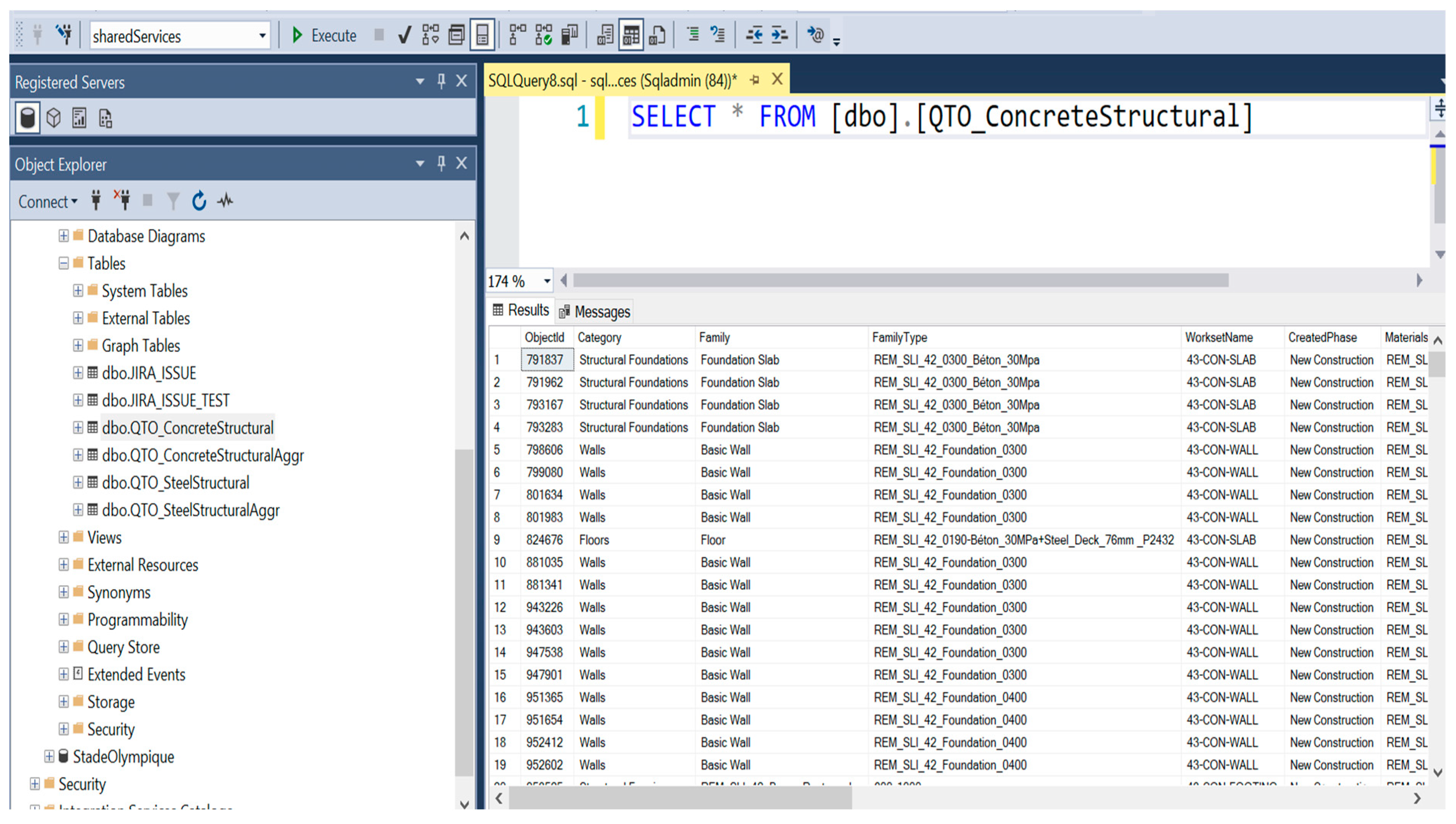

Validated QTO data are automatically stored in the cloud-based MySQL database, which serves as a centralized repository for project data. This eliminates versioning issues and provides scalable, real-time access for stakeholders.

Figure 8 shows the MySQL table structure for concrete elements. It includes identity and quantity parameters such as Object ID, Category, Family Name, Workset Name, Created Phase, Material, Structural Length, Volume, and Thickness. The structured format allows efficient querying and advanced analytics, supporting seamless integration with Power BI for visualization.
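For illustration, the table described above could be laid out as follows. Python's built-in `sqlite3` module stands in for the cloud-hosted MySQL instance so that the sketch runs without a server. The table name, column names, column types, units, and sample rows are assumptions and are not taken from Figure 8.

```python
import sqlite3

# In-memory SQLite database as a stand-in for the MySQL repository.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE concrete_elements (
        object_id         TEXT PRIMARY KEY,
        category          TEXT NOT NULL,
        family_name       TEXT,
        workset_name      TEXT,
        created_phase     TEXT,
        material          TEXT,
        structural_length REAL,  -- assumed unit: m
        volume            REAL,  -- assumed unit: m3
        thickness         REAL   -- assumed unit: m
    )
    """
)

# Hypothetical rows, not project data.
rows = [
    ("C-001", "Structural Columns", "Concrete Column", "43-CON-COLUMN",
     "New Construction", "Concrete", 3.6, 0.9, None),
    ("W-001", "Walls", "Basic Wall", "43-CON-WALL",
     "New Construction", "Concrete", None, 4.2, 0.3),
    ("W-002", "Walls", "Basic Wall", "43-CON-WALL",
     "New Construction", "Concrete", None, 2.8, 0.3),
]
conn.executemany("INSERT INTO concrete_elements VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?)", rows)

# Per-category volume totals, the kind of aggregate a dashboard would query.
totals = dict(
    conn.execute("SELECT category, SUM(volume) FROM concrete_elements GROUP BY category")
)

# Elements in a single workset, mirroring an interactive filter.
wall_ids = [
    r[0]
    for r in conn.execute(
        "SELECT object_id FROM concrete_elements WHERE workset_name = ? ORDER BY object_id",
        ("43-CON-WALL",),
    )
]
```

One row per element, with identity and quantity columns side by side, keeps both the aggregate and the filter queries to a single table scan.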

The MySQL database connects directly to Power BI, allowing for real-time visualization of QTO and QPC outputs without manual uploads. Stakeholders such as estimation, procurement, and cost control teams can access interactive dashboards that display key performance metrics and enable detailed exploration of quantities and quality checks.

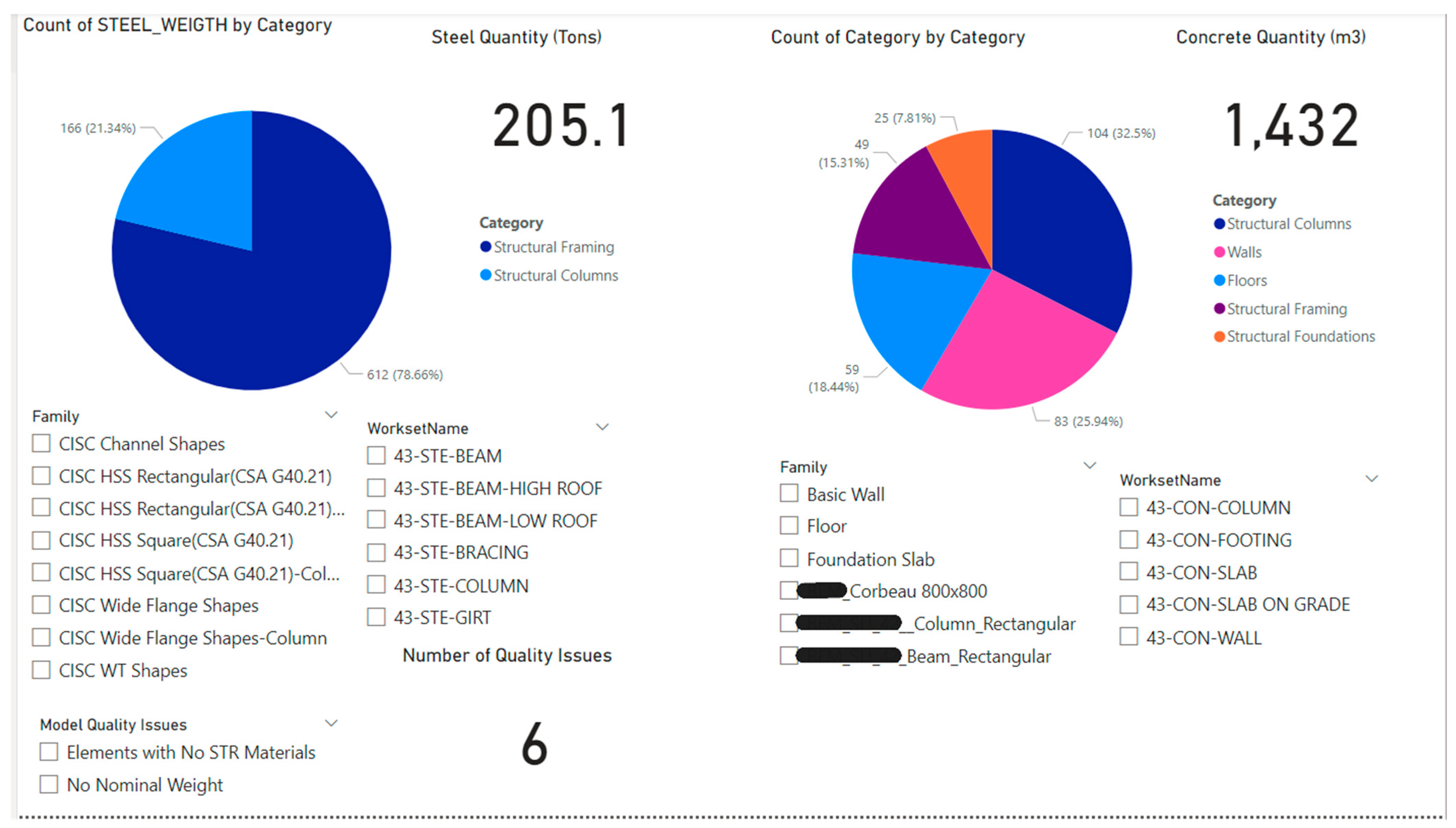

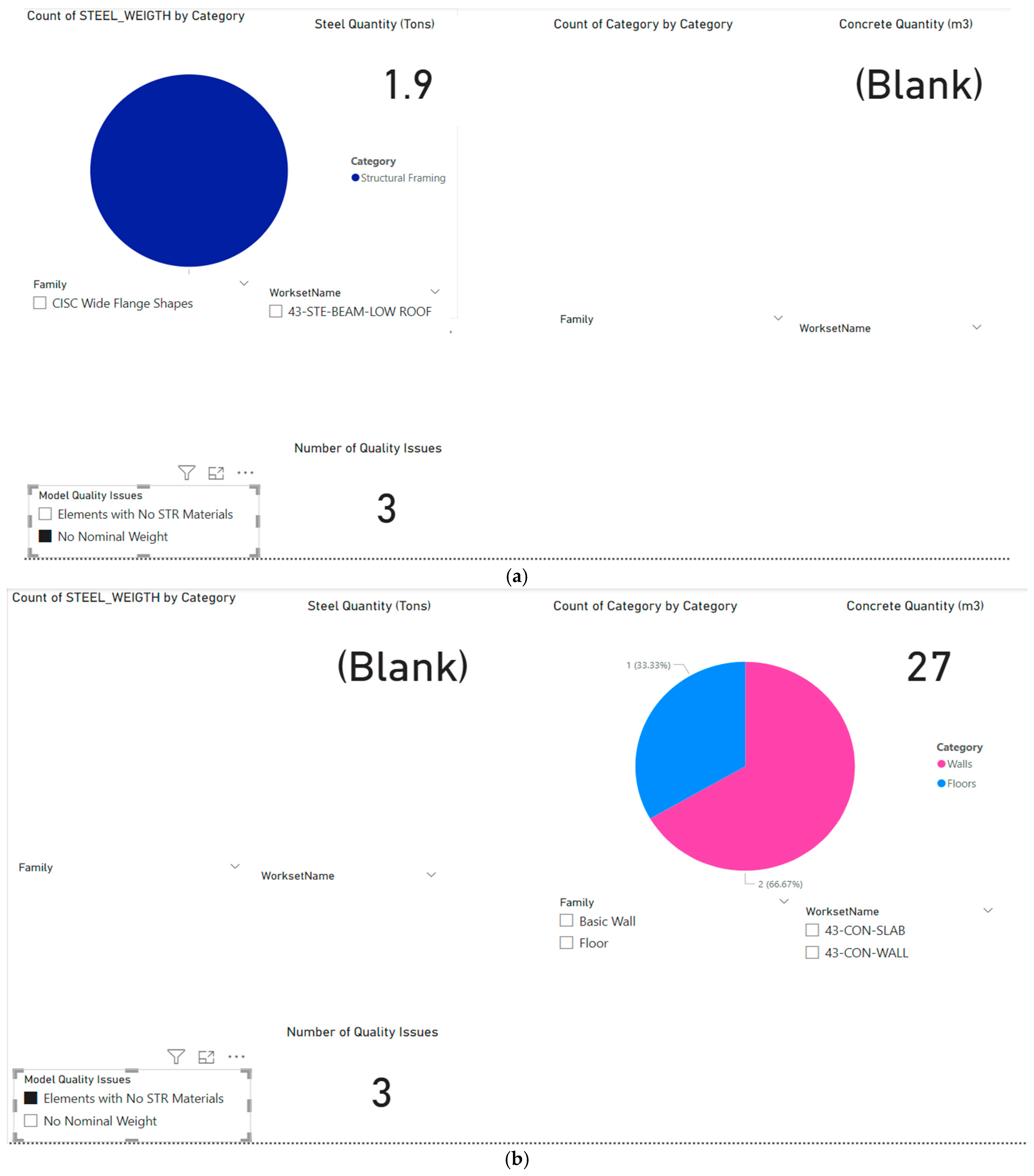

Figure 9 shows the total steel quantity of 205.1 tons, divided into structural framing (78.66%, 612 elements) and structural columns (21.34%, 166 elements). The total concrete quantity of 1432 m3 is distributed among columns (32.5%), walls (25.94%), floors (18.44%), framings (15.31%), and foundations (7.81%). Interactive filters, such as Family Name and Workset Name, allow users to isolate specific elements, for example, columns in the Workset “43-STE-COLUMN” or “43-CON-WALL.”

Figure 10 presents historical trends in quantities. From April to December, steel decreased from 1452 tons to 1419 tons (a 2.27% reduction), while concrete dropped from 211.5 m3 to 196.7 m3 (a 7.0% reduction). This feature provides valuable insights into how design changes affect quantities, helping teams anticipate costs and schedule impacts.

Figure 11 visualizes QPC results. For this project, three steel elements were identified with missing Nominal Weight, representing 0.93% of total steel, while three concrete wall and floor elements were flagged with missing Structural Material, accounting for 0.13% of total concrete volume. The dashboard categorizes issues by Family Name, Workset Name, and Category, enabling rapid correction by the design team.

The framework supports industry standards such as New Rules of Measurement (NRM) and CSI MasterFormat. Unit conversions, classification codes, and element mappings can be configured to align with region-specific rules. Future enhancements will include localized rulesets to expand the framework’s international applicability.

The implementation of this framework demonstrated its ability to automate QPC, manage data in real time, and detect issues early. The system produced fully validated results: 205.1 tons of steel, 1432 m3 of concrete, and 1090 m2 of CMU walls. By linking QTO and QPC in a single, cloud-based workflow, the framework enhances transparency, collaboration, and decision-making while providing a scalable solution for complex construction projects.

6. Results and Validation Metrics

To evaluate the performance and reliability of the proposed automated QTO and QPC framework, a set of validation metrics was developed. These metrics assess the framework’s ability to detect inconsistencies, improve quantity accuracy, monitor design changes dynamically, and enhance reporting efficiency. The cloud-based integration of FME automation, real-time tracking, and interactive visualization provides significant improvements over traditional manual workflows.

In the absence of established indicators for automated QTO, this study introduces five quantitative metrics designed to be reproducible and model-agnostic. IDR quantifies completeness; PCR captures internal consistency; QAI expresses accuracy improvement as the relative change between initial and post-QPC quantities; CIT measures responsiveness to design change by normalizing quantity deltas over time; and ARE evaluates process efficiency as the percentage reduction in reporting/notification latency achieved by automation. Formal definitions and equations (Equations (1)–(5)) are provided in the following subsections to enable replication.
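Read literally, the verbal definitions above suggest forms along the following lines. These are one plausible reading of the prose under stated assumptions, not a reproduction of the numbered equations. All symbols are introduced here for illustration: $N$ denotes element counts, $Q$ a quantity, $t$ a point in time, and $T$ a reporting latency. The PCR form is the most speculative, since the text characterizes it only as a measure of internal consistency; it is written as the complement of the relative spread between per-parameter totals.

```latex
\begin{align*}
\mathrm{IDR} &= \frac{N_{\text{flagged}}}{N_{\text{total}}} \times 100\% \\
\mathrm{PCR} &= \left(1 - \frac{Q_{\max} - Q_{\min}}{Q_{\max}}\right) \times 100\% \\
\mathrm{QAI} &= \frac{\lvert Q_{\text{post}} - Q_{\text{init}} \rvert}{Q_{\text{init}}} \times 100\% \\
\mathrm{CIT} &= \frac{Q_{t_2} - Q_{t_1}}{t_2 - t_1} \\
\mathrm{ARE} &= \frac{T_{\text{manual}} - T_{\text{auto}}}{T_{\text{manual}}} \times 100\%
\end{align*}
```

Under this reading, a category whose per-parameter totals all agree has a PCR of 100%, and a negative CIT indicates a quantity that is shrinking over the tracked period.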

6.1. Inconsistency Detection Rate (IDR)

The Inconsistency Detection Rate (IDR) (Equation (1)) measures the percentage of BIM elements flagged with inconsistencies relative to the total elements processed. In this case study, the framework successfully identified three structural steel elements with a missing Nominal Weight parameter and multiple structural concrete elements (columns, framings, foundations, walls, and floors) with missing Volume values. These errors were automatically flagged before final QTO calculations, preventing incomplete or inaccurate quantity extractions. For the architectural model, three wall and floor elements (representing 0.13% of total concrete volume) were found with missing structural material data and corrected before final processing.

By automating this detection process, the framework significantly reduces manual effort and ensures that issues are identified early in the workflow. Unlike traditional manual inspections, FME provides a scalable and efficient quality control process that improves both accuracy and efficiency.

6.2. Parameter Consistency Rate (PCR)

The Parameter Consistency Rate (PCR) (Equation (2)) evaluates the consistency of QTO-related parameters across different extraction methods. A 41.5% deviation was detected in precast architectural concrete quantities due to missing or inconsistent parameters. For example, the quantity calculated from the Assembly Description parameter was 339 m2, while the Family Type Name and Material Name parameters each yielded 580 m2. This discrepancy demonstrates the need for robust validation to ensure reliability in QTO processes.

For other categories, such as Curtain Walls and Concrete Masonry Units (CMUs), the framework achieved a perfect 100% consistency rate, indicating that properly structured BIM parameters lead to accurate and repeatable automated quantity extraction.

6.3. Quantity Accuracy Improvement (QAI)

The Quantity Accuracy Improvement (QAI) metric (Equation (3)) measures the enhancement in QTO precision achieved through automated validation.

Following QPC corrections, the final structural quantities were refined to 205.1 tons of steel and 1432 m3 of concrete, ensuring that missing or incorrect parameters did not distort calculations. By addressing inconsistencies before QTO computations, the framework prevents overestimations or underestimations that could lead to procurement delays or cost overruns.

The integration of FME automation eliminates the need for manual interventions, streamlining the entire workflow and improving confidence in the results. Accurate and reliable quantities support better decision-making in cost estimation and resource planning.

6.4. Change Impact Tracking (CIT)

The Change Impact Tracking (CIT) metric (Equation (4)) quantifies how effectively the framework monitors the impact of design modifications over time. During the project, the framework tracked a reduction of 33 tons (2.27%) in steel quantities and 14.8 m3 (7.0%) in concrete quantities, demonstrating its ability to dynamically adjust to changes.
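The reported reductions can be reproduced from the start and end values of the April to December trend. The sketch below assumes that normalizing over time means dividing the change by the number of elapsed calendar months. The function name is hypothetical, and the year in the dates is a placeholder, since the source gives only the months.

```python
from datetime import date


def change_impact(q_start, q_end, start, end):
    """Absolute change, relative change (%), and change per elapsed month."""
    delta = q_end - q_start
    months = (end.year - start.year) * 12 + (end.month - start.month)
    return {
        "delta": delta,
        "percent": delta / q_start * 100.0,
        "per_month": delta / months,
    }


# Placeholder year: the text states only "April to December".
april, december = date(2024, 4, 1), date(2024, 12, 1)

# Start and end values as reported for the quantity trends.
steel = change_impact(1452.0, 1419.0, april, december)    # tons
concrete = change_impact(211.5, 196.7, april, december)   # m3
```

Negative values indicate a reduction. Dividing by elapsed months makes trends from periods of different length comparable.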

By automating the detection and visualization of these variations, stakeholders can make informed decisions based on the most up-to-date project data. This functionality improves material procurement planning, minimizes waste, and enhances transparency, as stakeholders can access live updates without risk of outdated information.

6.5. Automated Reporting Efficiency (ARE)

The Automated Reporting Efficiency (ARE) metric (Equation (5)) evaluates improvements in reporting speed achieved by replacing manual Excel-based communication with automated email notifications.

Whenever inconsistencies are detected, the framework automatically sends structured reports to engineers, listing flagged elements with their IDs, families, and missing parameters. This automation reduces reporting and response times by approximately 60%, ensuring that issues are addressed promptly and QTO workflows remain uninterrupted.
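A structured notification of this kind could be assembled as sketched below with Python's standard `email.message.EmailMessage`. The addresses, subject wording, and report layout are assumptions; the study's notifications are generated by the FME workflow, whose exact format is not described here.

```python
from email.message import EmailMessage


def build_qpc_report(flagged, sender, recipients):
    """Compose a plain-text report listing each flagged element."""
    msg = EmailMessage()
    msg["Subject"] = f"QPC report: {len(flagged)} element(s) flagged"
    msg["From"] = sender
    msg["To"] = ", ".join(recipients)
    lines = ["Object ID | Family Name | Missing parameter"]
    lines += [
        f'{e["object_id"]} | {e["family_name"]} | {e["missing"]}' for e in flagged
    ]
    msg.set_content("\n".join(lines))
    return msg


# Hypothetical flagged elements and addresses.
flagged = [
    {"object_id": "E2", "family_name": "Steel HSS Column", "missing": "Nominal Weight"},
    {"object_id": "W7", "family_name": "Basic Wall", "missing": "Structural Material"},
]
message = build_qpc_report(flagged, "qpc@example.com", ["engineer@example.com"])
body = message.get_content()
# Sending is left out on purpose; smtplib.SMTP(...).send_message(message)
# would deliver the report once a mail server is configured.
```

Building the message separately from sending it lets the report content be tested without a mail server.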

This improvement enhances collaboration between teams, supports more efficient model updates, and ensures that inconsistencies are resolved before they affect cost estimation and procurement.

The proposed validation metrics were developed and tested on an active construction project, using live structural and architectural BIM models managed within the firm’s ongoing workflow. The results therefore represent real operational conditions rather than controlled laboratory tests. Although validation was limited to a single case, the same metric definitions are designed to be transferable across future projects and disciplines. Ongoing testing is now being extended to additional projects within the organization to further benchmark these indicators and refine their threshold parameters. This progressive validation approach ensures that the metrics remain both practical for industry application and scalable for academic replication.

The implementation of the proposed framework demonstrated its ability to detect parameter errors at early stages, preventing inaccurate QTO outputs and ensuring reliable results. A notable finding was the 41.5% deviation detected in precast architectural concrete quantities, emphasizing the necessity for robust parameter validation. Following automated corrections, the final validated quantities reached 205.1 tons of steel and 1432 m3 of concrete, significantly enhancing accuracy and confidence in the QTO process. In addition, the framework’s dynamic change-tracking capabilities improved transparency and supported proactive project management, while automated reporting reduced response times by approximately 60%, fostering better collaboration and more efficient model updates. Collectively, these results highlight the framework’s potential to transform BIM-based QTO and QPC workflows through automation, scalability, and real-time validation.

7. Discussion

The proposed framework integrates automated QTO and QPC into a single, cloud-based environment, addressing key challenges in ensuring accuracy and efficiency in BIM-based workflows. The case study results demonstrate the framework’s effectiveness in detecting parameter inconsistencies and improving QTO accuracy. To position these results in the broader context, Table 3 compares the capabilities of the proposed system with commonly used commercial tools. While Navisworks and CostX provide robust visualization and estimation features, they lack automated, customizable rule-based validation prior to quantity extraction. Solibri offers predefined quality checks but does not support user-defined rules or performance tracking through standardized metrics. The proposed framework uniquely combines QTO and QPC, leveraging open data standards and introducing novel metrics such as IDR and QAI to evaluate system performance. These features extend beyond the functionality of current commercial and research-based tools, providing both practical and academic value.

Unlike commercial systems such as Navisworks, Solibri, and CostX, which primarily focus on quantity extraction and visualization, this framework introduces a transparent, open, and customizable approach. Existing commercial tools lack mechanisms for rule-based validation of model parameters before quantity extraction, leading to potential propagation of errors in material quantities and classifications into cost estimates and procurement decisions. Moreover, these tools operate as proprietary systems, limiting opportunities for integration with open standards such as IFC or adaptation to project-specific needs.

Academic research has addressed aspects of these challenges, with some studies focusing on automated QTO processes [18,20] and others on model checking and validation workflows [22,49]. However, most of these efforts treat QTO and model quality control as separate workflows and rarely combine them within a unified, scalable, cloud-based framework. Furthermore, there has been little progress in developing systematic performance metrics to evaluate the accuracy, consistency, and responsiveness of automated QTO systems. This research advances the field by bridging these gaps: it integrates QTO and QPC into a single workflow while introducing novel quantitative metrics such as Inconsistency Detection Rate (IDR), Parameter Consistency Rate (PCR), and Quantity Accuracy Improvement (QAI). These contributions differentiate the framework from both commercial systems and prior academic approaches by providing a replicable and measurable methodology for improving BIM-based estimation.

The framework’s main contribution lies in its ability to ensure that quantities are extracted only from verified and consistent model data. A second significant advantage is its real-time collaboration capability, enabled through the cloud-hosted MySQL database and its live connection to Power BI. This architecture ensures that multiple stakeholders (estimators, designers, and project managers) can simultaneously access and visualize the same dataset without version conflicts or latency issues. As soon as an engineer updates the BIM model, the automated FME process extracts, validates, and pushes the new data to the database, and the dashboard refreshes for all users. This continuous synchronization shortens feedback loops between design and estimation teams, allowing immediate correction of modeling errors, dynamic cost updates, and improved decision-making. In comparison with conventional local or file-based workflows, where data exchange often relies on manual exports, the proposed system enables continuous coordination, reduces the risk of outdated information, and supports transparent multi-user collaboration across different disciplines and locations.

In addition, the modular rule-based QPC engine is designed for flexibility, allowing users to extend and adapt the validation rules for different disciplines and project types without modifying the core system. To assess the generalizability and robustness of the QPC module, three evaluation dimensions are proposed:

Rule Coverage (RC): Measures the percentage of model elements checked by at least one rule, indicating completeness of validation. In this case study, 94.7% of elements were covered.

Rule Precision (RP): Evaluates the accuracy of flagged issues by comparing true errors with false positives. The current implementation achieved a precision of 91.5%.

Rule Extensibility (RE): Quantifies the ease of adding new rules without modifying the core framework. This was demonstrated by introducing six additional rules for MEP elements during testing, requiring no changes to the main QPC engine.
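The first two dimensions reduce to simple set arithmetic, sketched below. The function names and the sample IDs are hypothetical, and the sketch assumes that precision is computed against a manually confirmed list of true errors; it does not reproduce the 94.7% and 91.5% figures, whose underlying counts are not given.

```python
def rule_coverage(all_ids, checked_ids):
    """Percentage of model elements checked by at least one rule."""
    universe = set(all_ids)
    return len(universe & set(checked_ids)) / len(universe) * 100.0


def rule_precision(flagged_ids, confirmed_error_ids):
    """Percentage of flagged elements that are confirmed true errors."""
    flagged = set(flagged_ids)
    if not flagged:
        return 100.0  # nothing flagged, so no false positives
    true_positives = flagged & set(confirmed_error_ids)
    return len(true_positives) / len(flagged) * 100.0


# Hypothetical model of 20 elements, 19 of which match at least one rule.
all_ids = [f"E{i}" for i in range(1, 21)]
checked_ids = all_ids[:19]

# Hypothetical review outcome: 4 flags raised, 3 confirmed as real errors.
flagged_ids = ["E2", "E5", "E9", "E14"]
confirmed_error_ids = ["E2", "E5", "E14"]

coverage = rule_coverage(all_ids, checked_ids)
precision = rule_precision(flagged_ids, confirmed_error_ids)
```

Rule Extensibility has no comparable formula in the text; it is demonstrated by adding rules without changing the engine rather than measured as a ratio.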

These metrics provide a systematic foundation for evaluating and expanding the rule-based QPC system beyond project-specific applications. By introducing standardized QTO performance metrics, the framework also provides researchers and practitioners with tools for objectively assessing the quality of QTO processes, which is a major advancement over existing methods that rely primarily on qualitative evaluations or proprietary software outputs.

Despite these strengths, several limitations must be acknowledged.

Validation was conducted on a single case study involving structural and architectural BIM models at LOD 300, which may not capture the complexities of other disciplines such as mechanical, electrical, and plumbing (MEP) or large-scale infrastructure projects.

The QPC rules were manually defined for this project, and while they are extensible, further development is needed to align them with international standards such as ISO 19650, IFC 4.3, and IDS templates.

The reliance on cloud infrastructure also introduces potential challenges related to data privacy, cybersecurity, and network reliability, which must be addressed for broader adoption.

User feedback was limited to an internal project team; larger-scale testing with diverse stakeholders is required to refine usability and assess organizational readiness for implementation.

Although these comparisons highlight conceptual differences, future research should include direct experimental benchmarking with commercial systems to quantify improvements in speed, accuracy, and scalability.

The design of the framework supports scalability and generalizability beyond the specific project studied. Its compatibility with the open IFC format and with authoring platforms such as Civil 3D and Tekla allows integration with multiple BIM authoring tools and deployment across various project types, from vertical buildings to horizontal infrastructure. The modular nature of the QPC engine enables the addition of discipline-specific rules, making it adaptable to specialized domains such as MEP systems or transportation networks. The cloud-based architecture further supports multi-project management, allowing companies to implement the framework across entire portfolios and leverage cross-project benchmarking. Future enhancements could incorporate AI-driven anomaly detection and predictive analytics to automate rule creation and improve system intelligence.

In summary, this study addresses long-standing challenges in BIM-based QTO by combining automated validation, real-time collaboration, and measurable performance evaluation within a single, scalable process. While the initial implementation focused on structural and architectural models, its modular, cloud-based design supports expansion to other domains such as MEP and infrastructure projects. By refining rule sets, incorporating international standards, and integrating AI-driven anomaly detection, the framework can evolve into a comprehensive platform for data-driven construction management. These advancements will improve accuracy, reduce costs, and accelerate the digital transformation of the construction industry.

8. Conclusions

This study presented a comprehensive, cloud-based framework for automating QTO and QPC in BIM-based workflows. Accurate QTO is essential for project planning, cost estimation, procurement, and scheduling, yet current practices often face challenges such as inconsistent parameter definitions, fragmented data, and limited real-time collaboration. Traditional or semi-automated BIM-based QTO processes still rely on manual interventions and local file management, which create inefficiencies and increase the risk of inaccurate outputs. The framework developed in this research addresses these long-standing issues by integrating QTO and QPC into a unified, fully automated process, significantly improving both accuracy and scalability.

The proposed system leverages FME for workflow automation, a cloud-hosted MySQL database for centralized data storage, and Power BI dashboards for real-time visualization. A key innovation is the rule-based QPC engine, which validates BIM parameters before quantity extraction, ensuring that all quantities are derived from consistent and complete data. This prevents errors from propagating into downstream processes such as procurement and cost management. By introducing a structured rule hierarchy based on international standards, the literature, and expert consultation, the framework provides a flexible and extensible mechanism for quality control that can evolve alongside project needs.

The case study validation demonstrated the framework’s effectiveness and practical relevance. The system automatically detected inconsistencies such as missing Nominal Weight and Volume parameters, which, if left unresolved, would have resulted in inaccurate QTO outputs. After applying automated corrections, the final validated quantities were 205.1 tons of steel and 1432 m3 of concrete. Additionally, the framework reduced reporting and response times by approximately 60% through automated notifications sent directly to design teams, eliminating the need for manual Excel-based communication. A dynamic tracking feature monitored design-driven changes, capturing a 2.27% reduction in steel quantities and a 7.0% reduction in concrete quantities over time. These outcomes demonstrate measurable improvements in accuracy, efficiency, and transparency compared to traditional approaches and provide stakeholders with actionable insights for cost control and project planning.

Another significant contribution of this study is the introduction of standardized performance metrics to evaluate and benchmark QTO/QPC workflows. Metrics such as Inconsistency Detection Rate (IDR), Parameter Consistency Rate (PCR), and Quantity Accuracy Improvement (QAI) offer objective ways to measure the quality of BIM data and the effectiveness of automated validation. These metrics bridge the gap between qualitative evaluations used in industry and the need for reproducible, quantitative research measures. This advancement addresses a critical gap identified in the literature and enables researchers and practitioners to monitor continuous improvements in QTO workflows over time.

Several future research directions are proposed to enhance the framework’s capabilities.

Integrating artificial intelligence (AI) and machine learning could enable automated rule generation and anomaly detection, allowing the system to adapt dynamically to different project contexts.

Expanding interoperability with emerging BIM platforms and GIS-integrated systems would improve collaboration across multiple disciplines and support applications in mega projects and infrastructure developments.

Linking validated QTO data with external cost estimation databases would provide real-time budget tracking and automated procurement decision-making.

Incorporating pre-QTO model health checks could ensure models meet LOD/LOI requirements before takeoff begins, reducing downstream errors.

Integrating sustainability analytics, such as embodied carbon calculations, would help project teams optimize material usage for environmental performance.

In conclusion, the proposed framework delivers a scalable, measurable, and transparent solution for BIM-based QTO and QPC. By combining automated validation, centralized data management, and real-time visualization, it significantly improves the accuracy and efficiency of construction workflows. The framework addresses critical gaps identified in both academic research and industry practice, offering a replicable methodology that integrates open standards and supports continuous performance monitoring. With further refinement, broader testing across diverse project types, and integration of advanced technologies, this framework has the potential to transform digital construction management by reducing costs, improving collaboration, and enabling data-driven decision-making for more efficient and sustainable construction practices.