Post-Earthquake Damage Detection and Safety Assessment of the Ceiling Panoramic Area in Large Public Buildings Using Image Stitching

Abstract

1. Introduction

2. Image Stitching and Quality Evaluation Methods

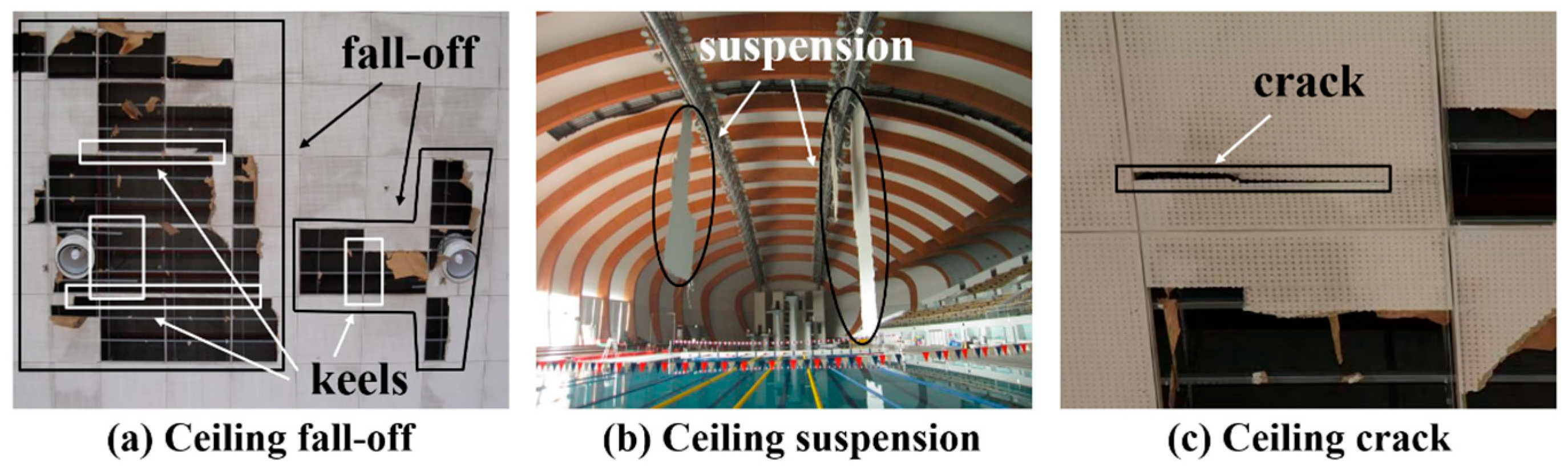

2.1. Image Feature Extraction Algorithms

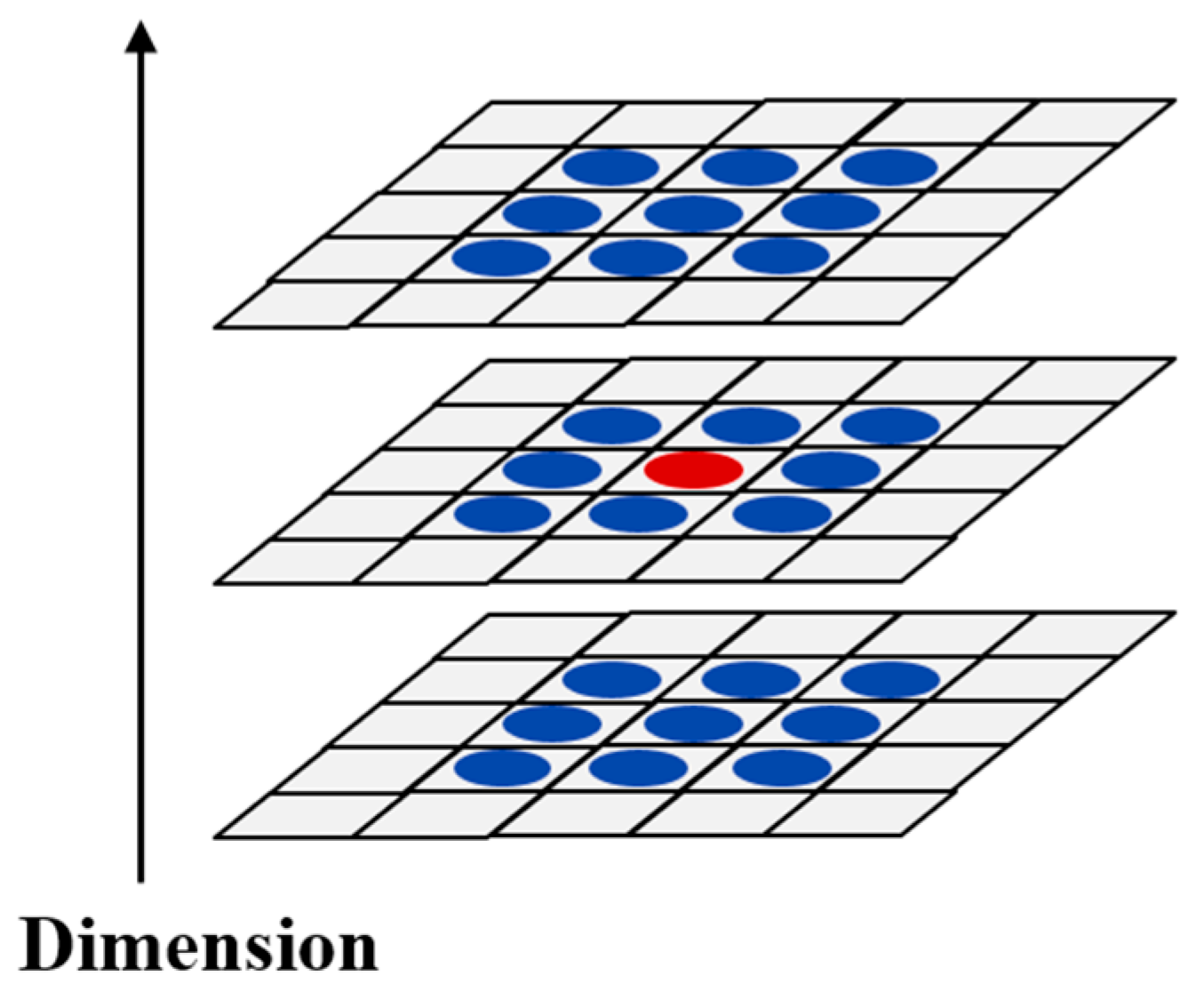

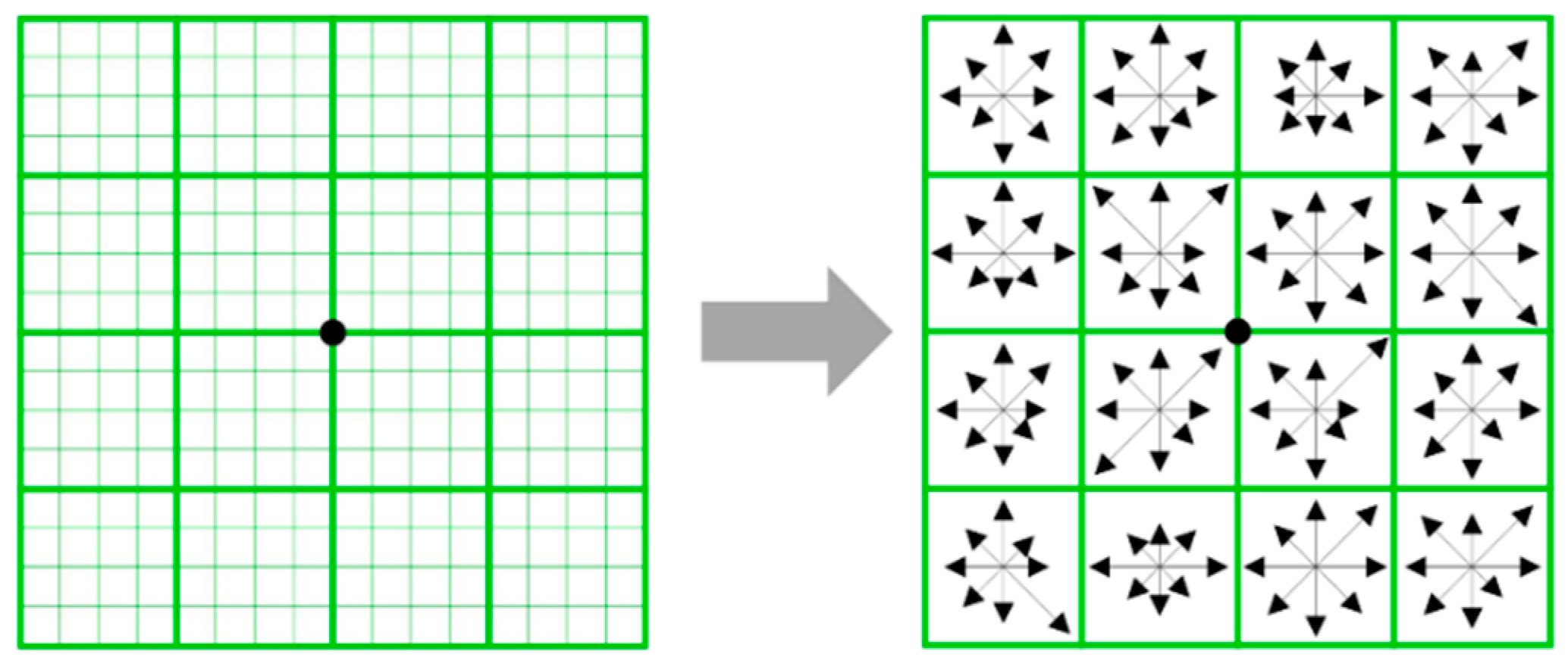

2.1.1. Keypoint Detection

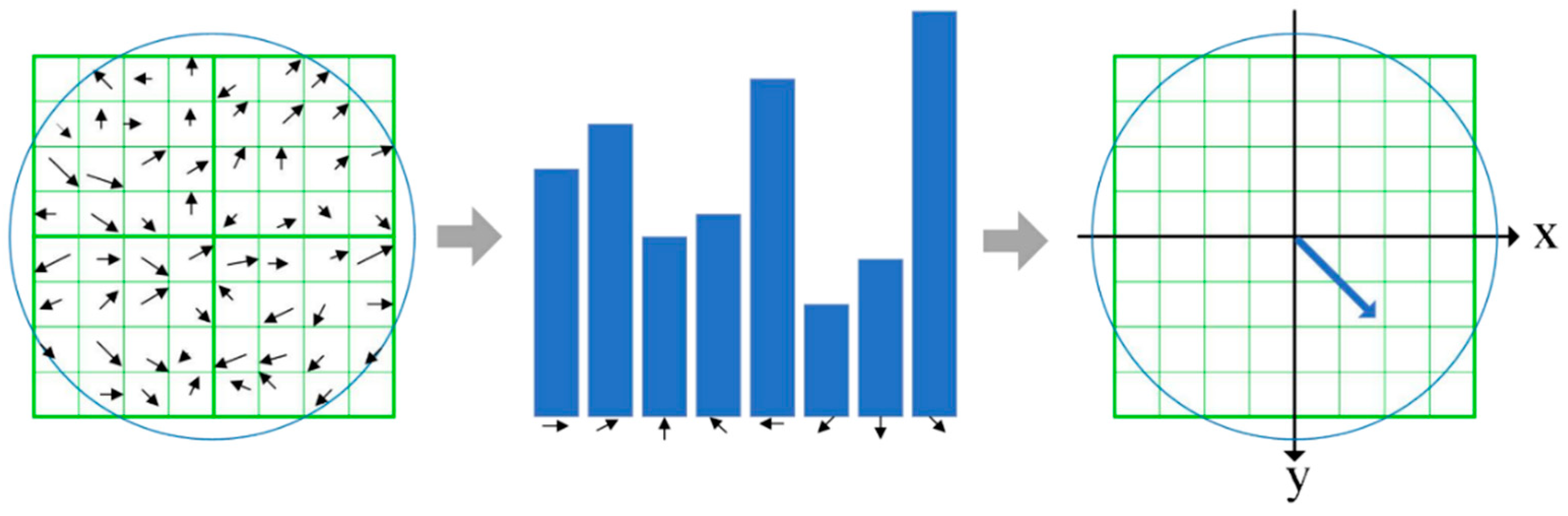

2.1.2. Feature Point Localization and Orientation Determination

2.1.3. Generation of Feature Descriptors

2.1.4. Feature Vector Matching

2.2. Image Quality Assessment (IQA) Methods for Stitching Quality Evaluation

3. Panoramic Image Creation

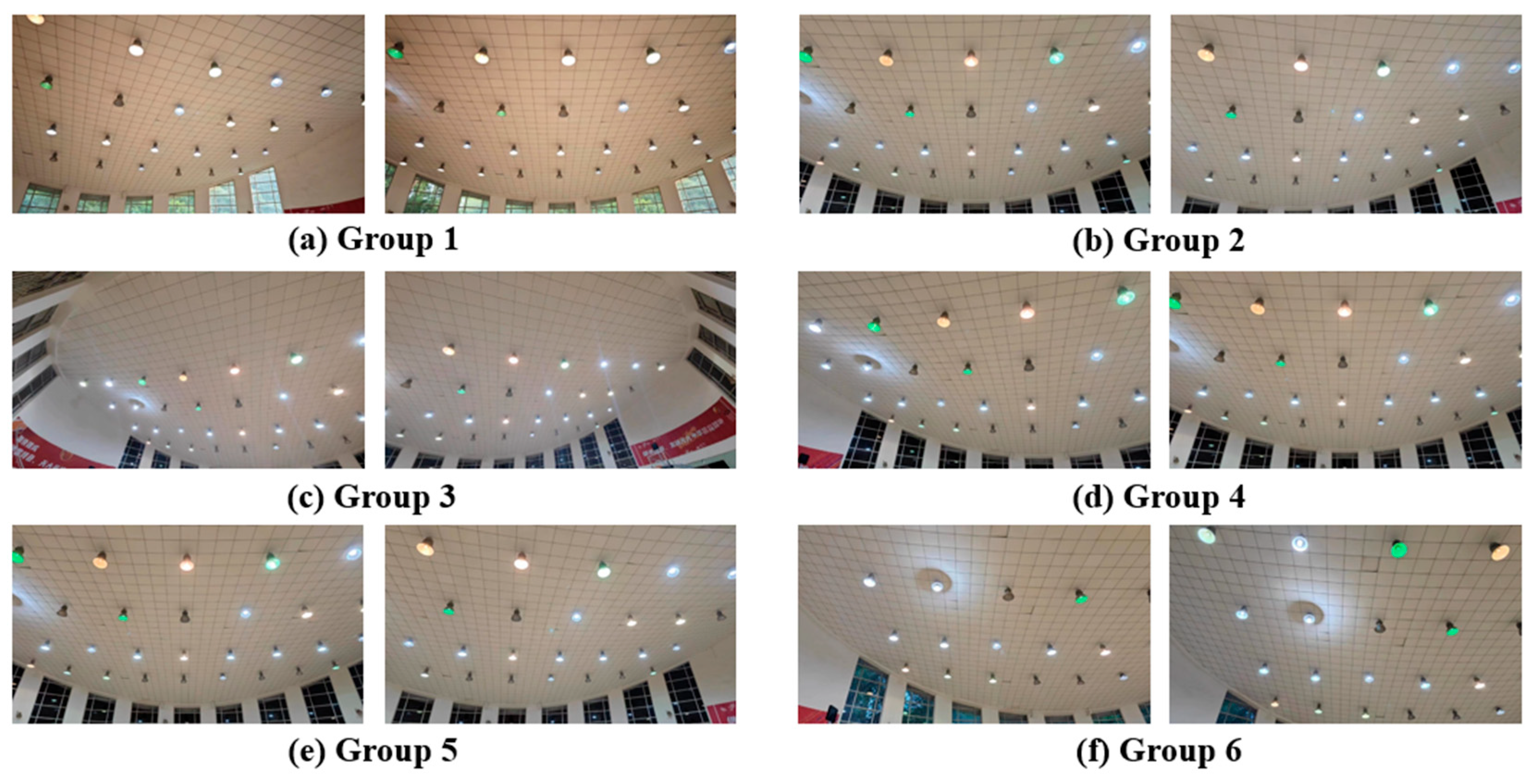

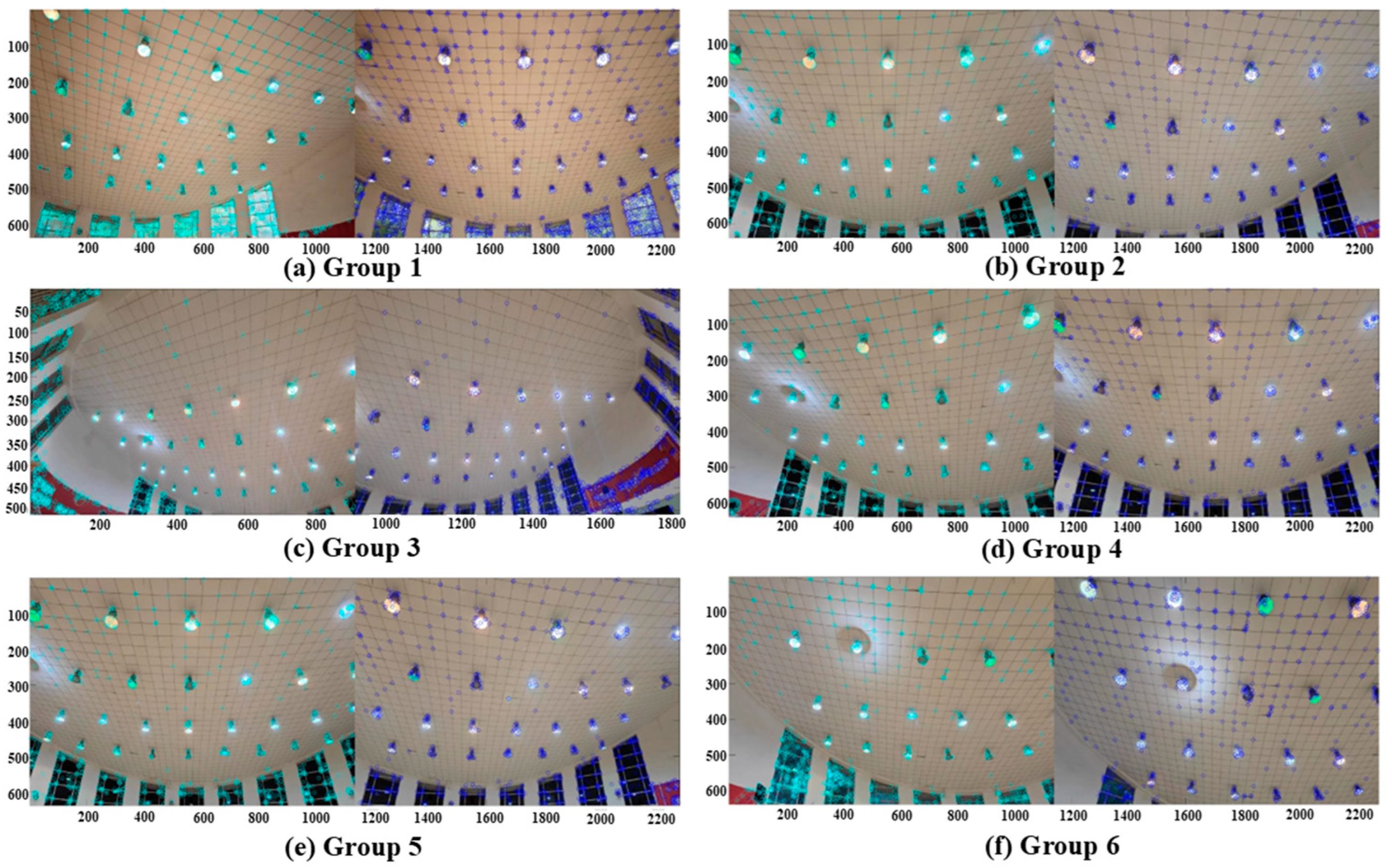

3.1. Detection of Image Feature Points

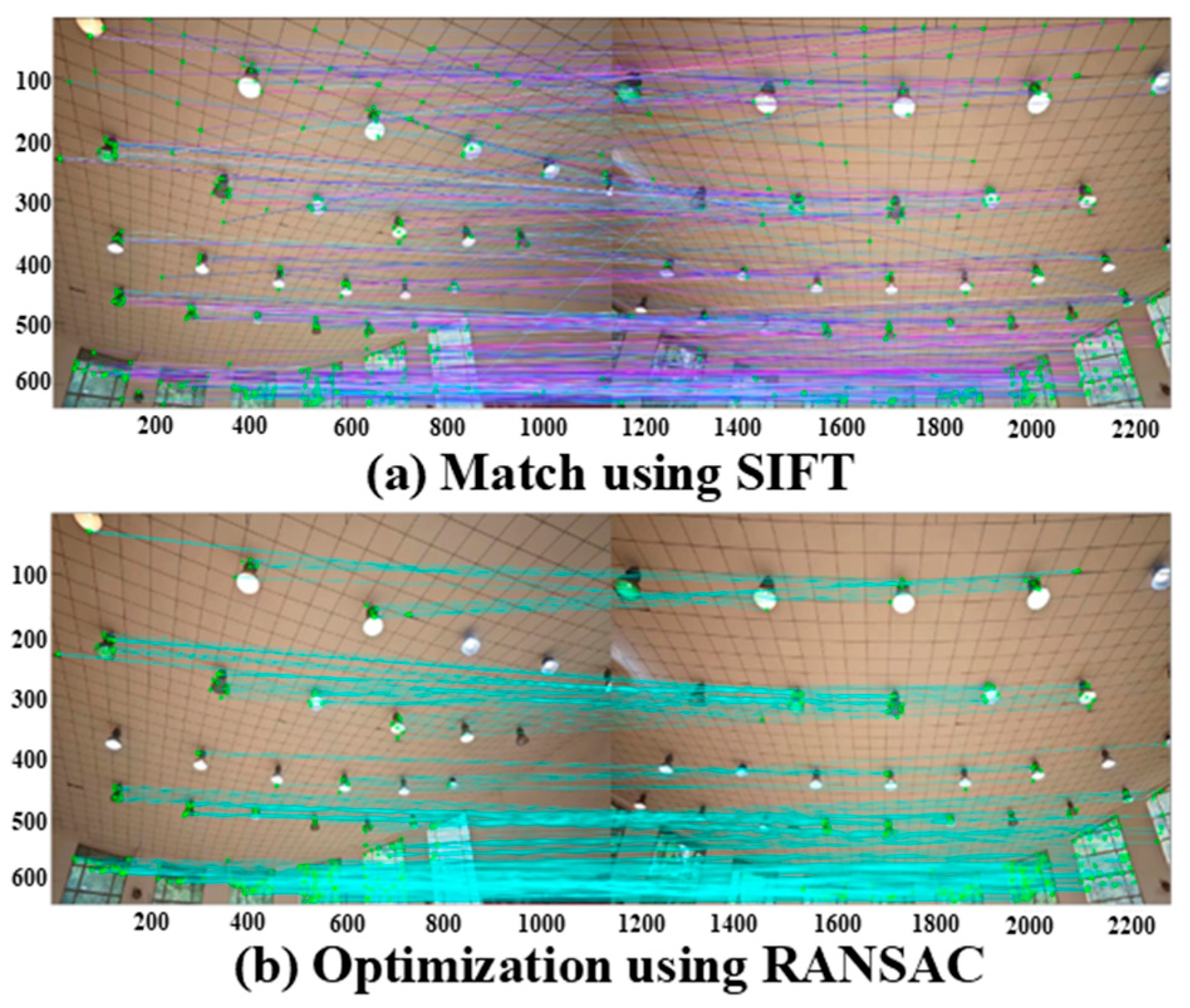

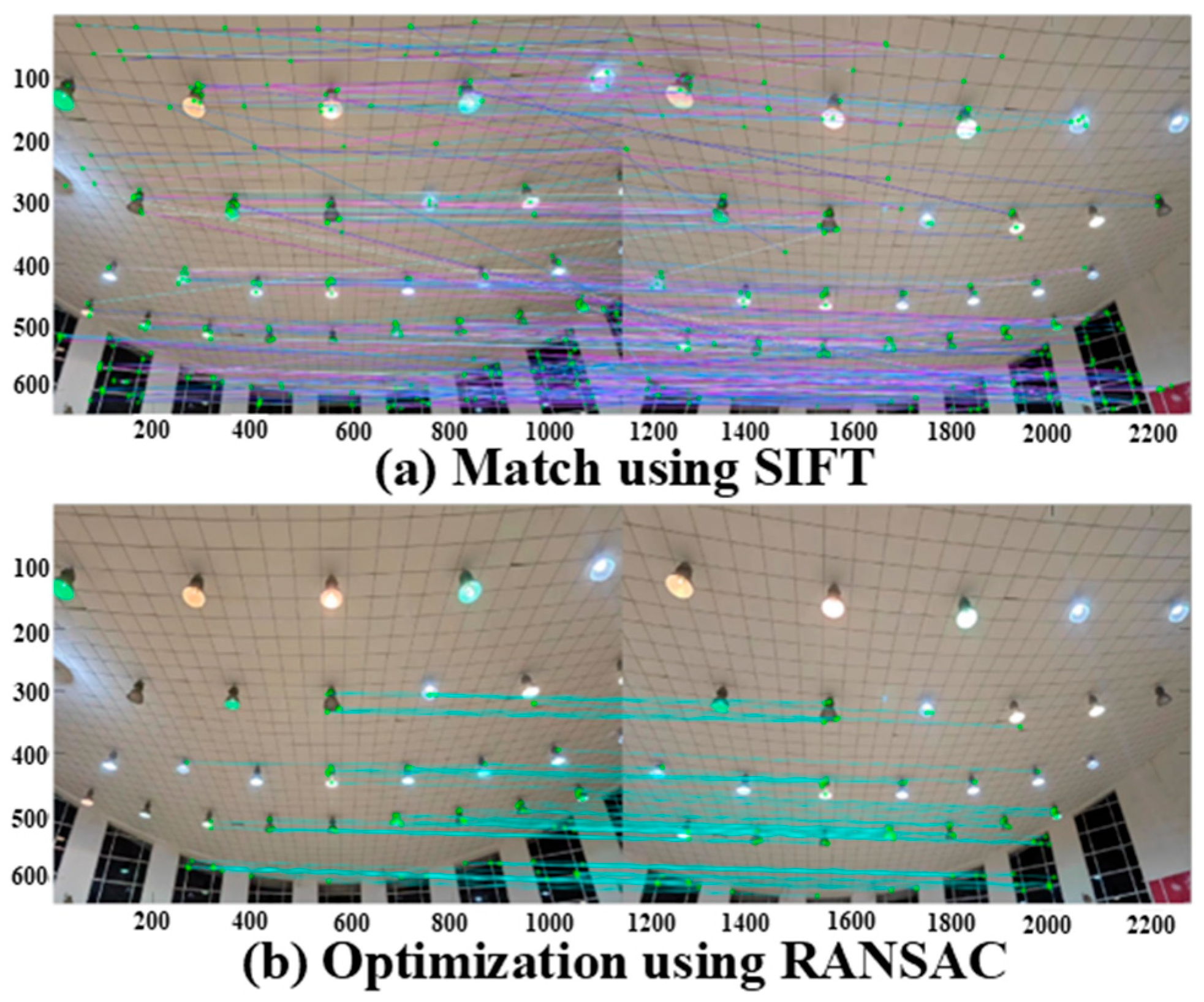

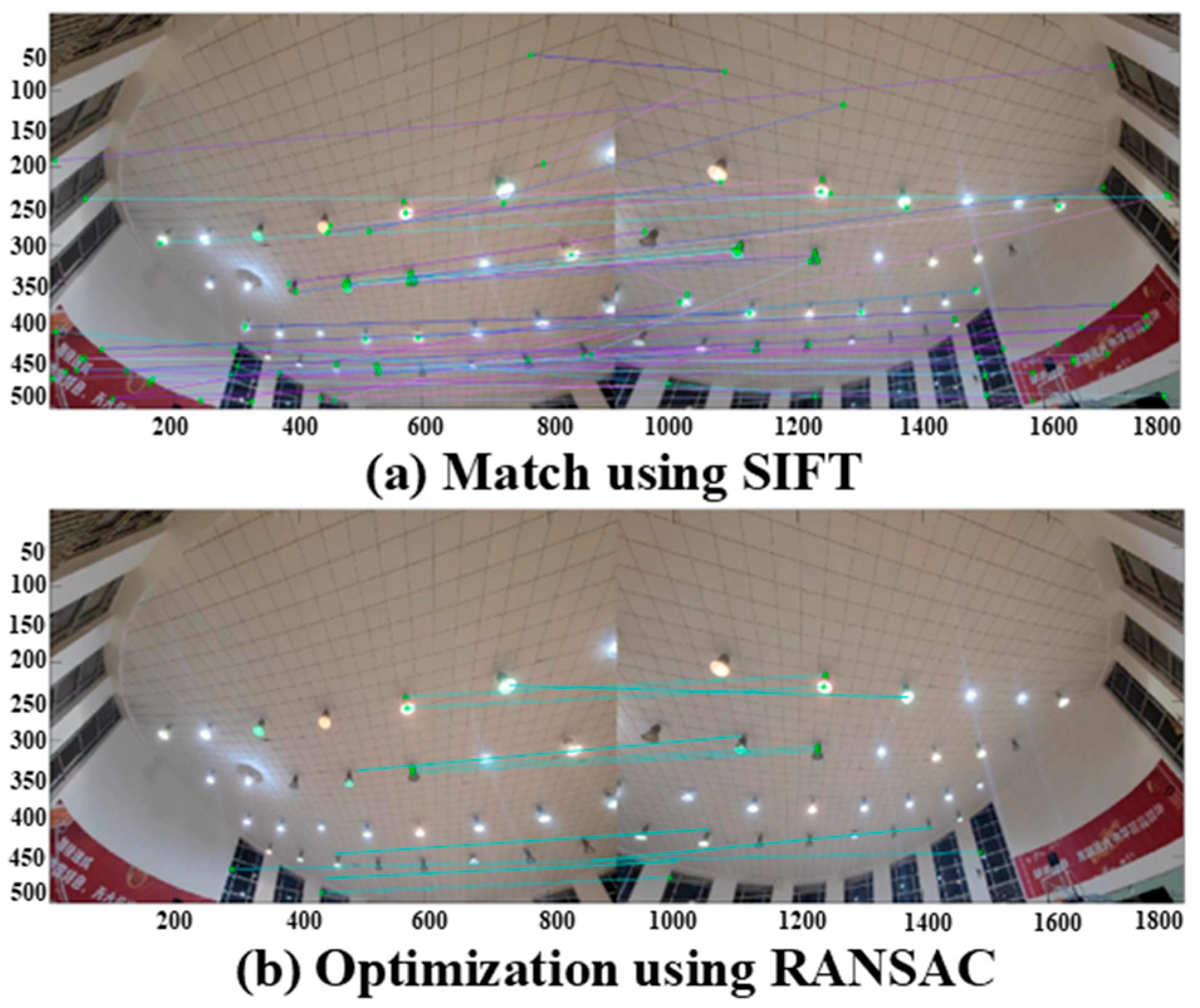

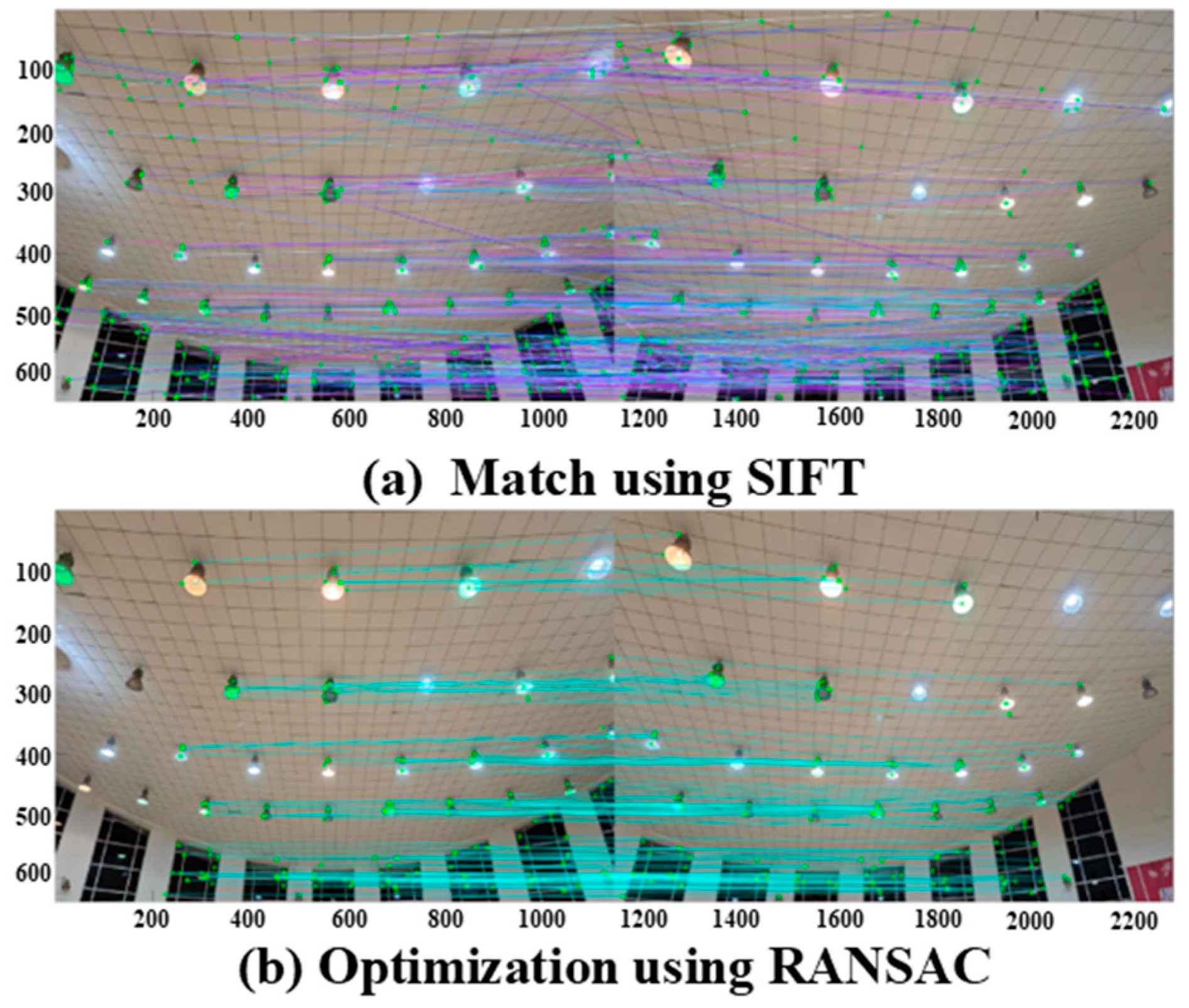

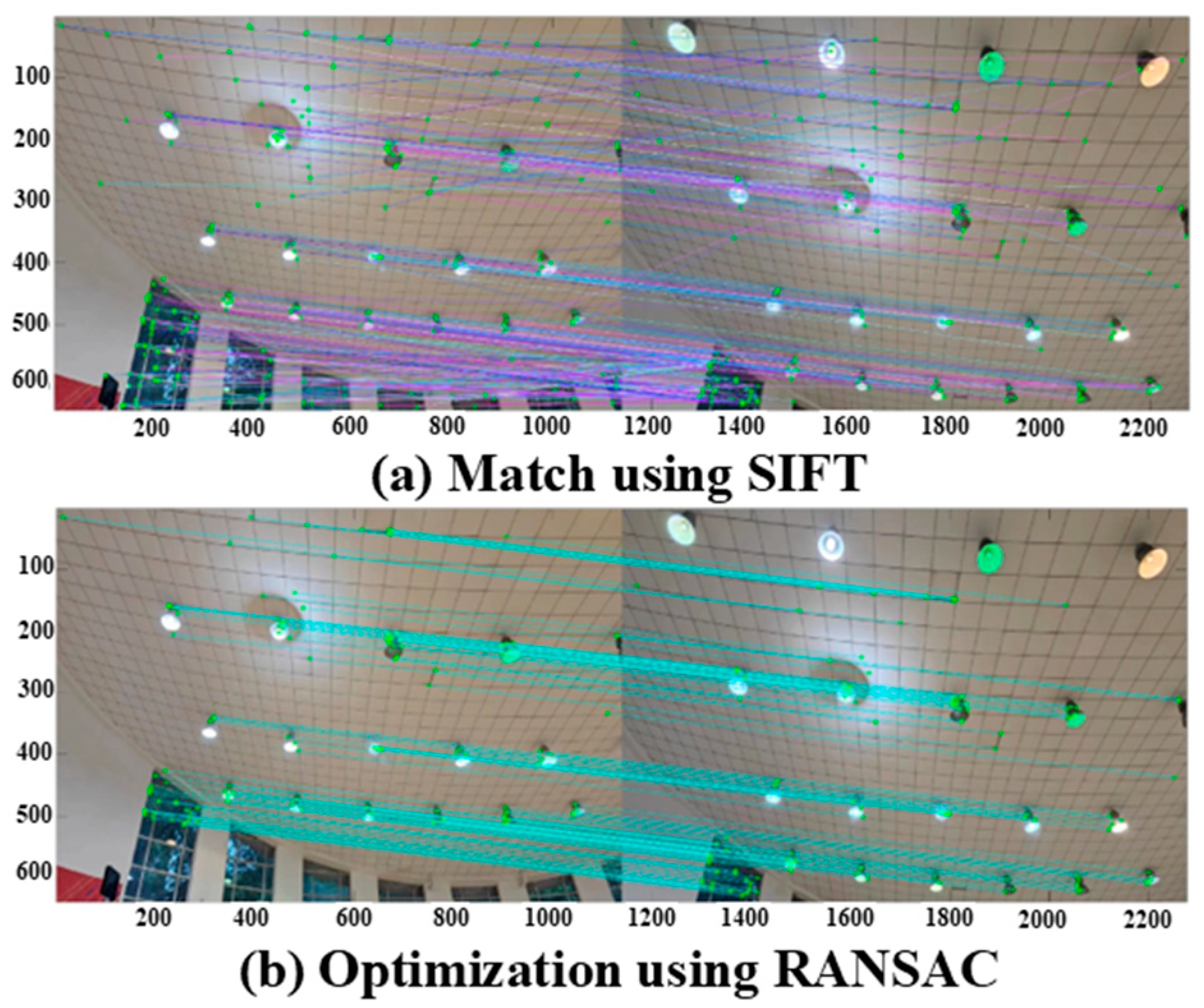

3.2. Feature Point Matching of Images

3.3. Image Stitching Quality

4. Results and Discussion

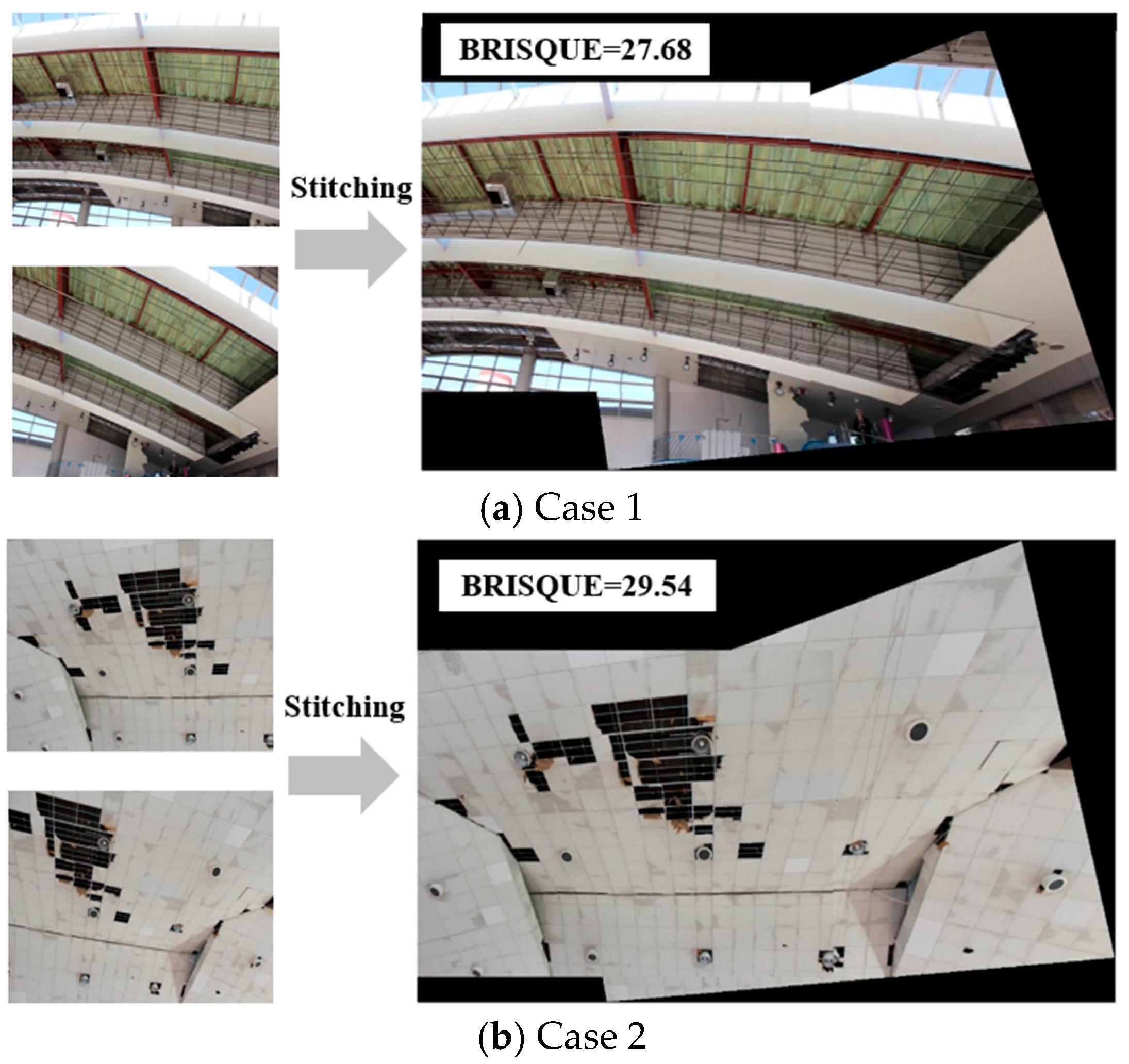

4.1. Image Stitching of the Test Set

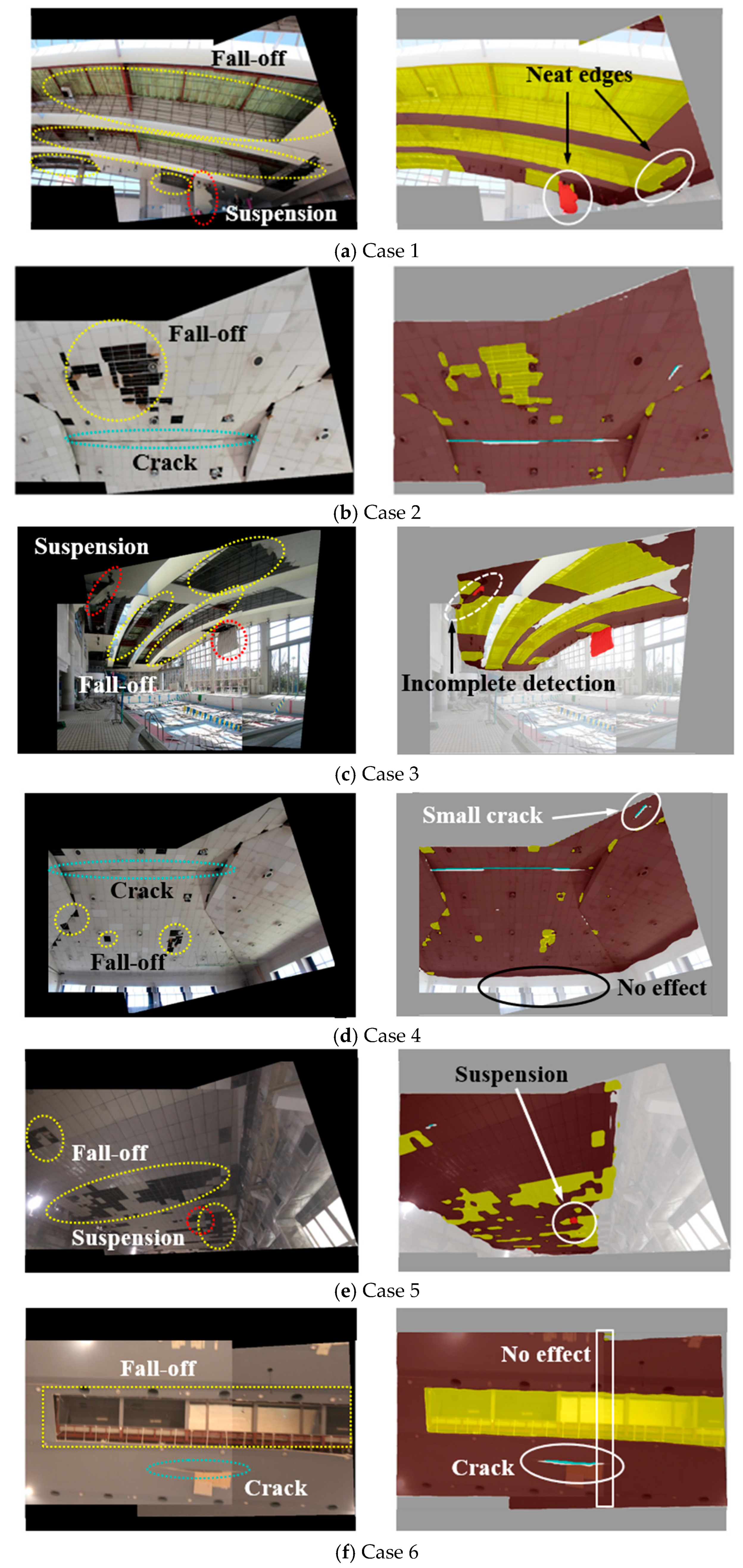

4.2. Safety Assessment of Stitched Ceiling Images

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Set | Number of Feature Points in Target Image | Number of Feature Points in Registered Image |
|---|---|---|
| 1 | 1331 | 1547 |
| 2 | 942 | 817 |
| 3 | 884 | 1128 |
| 4 | 890 | 1027 |
| 5 | 1027 | 987 |
| 6 | 1019 | 832 |

| Set | Registration Points Using SIFT | Optimized Registration Points Using RANSAC | Inlier Rate (%) |
|---|---|---|---|
| 1 | 382 | 252 | 65.9 |
| 2 | 286 | 111 | 38.8 |
| 3 | 63 | 16 | 25.4 |
| 4 | 269 | 122 | 45.4 |
| 5 | 332 | 153 | 46.1 |
| 6 | 255 | 107 | 41.9 |
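
The inlier rates in the registration table appear to be the share of SIFT registration points that survive RANSAC filtering. The sketch below recomputes them from the tabulated counts under that assumption; the function name `inlier_rate` and the dictionary layout are illustrative and do not come from the paper. The recomputed values agree with the reported ones to within 0.1 percentage point (sets 1 and 6 come out as 65.97% and 41.96%, which suggests the reported figures were truncated rather than rounded).

```python
# Counts copied from the registration table:
# set id -> (SIFT registration points, points kept by RANSAC, reported inlier rate in %)
registration = {
    1: (382, 252, 65.9),
    2: (286, 111, 38.8),
    3: (63, 16, 25.4),
    4: (269, 122, 45.4),
    5: (332, 153, 46.1),
    6: (255, 107, 41.9),
}


def inlier_rate(sift_points: int, ransac_points: int) -> float:
    """Percentage of SIFT matches retained by RANSAC (assumed definition)."""
    return 100.0 * ransac_points / sift_points


for set_id, (sift, ransac, reported) in registration.items():
    computed = inlier_rate(sift, ransac)
    print(f"set {set_id}: computed {computed:.2f}%, reported {reported}%")
```

Set 3 stands out in this view: it has both the fewest initial matches (63) and the lowest inlier rate, leaving only 16 point pairs after filtering.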

| No. | Category | Number of Pixels for Fall-Off | Number of Pixels for Suspension | Number of Pixels for Crack | Fall-Off Rate (%) | Result |
|---|---|---|---|---|---|---|
| Case 1 | True | 755,869 | 13,846 | 0 | 70.59 | Danger |
| Case 1 | Detection | 746,290 | 13,392 | 0 | 68.86 | Danger |
| Case 1 | Error/Accuracy | 1.3% | 3.3% | 0 | 2.5% | Accurate |
| Case 2 | True | 90,228 | 0 | 4510 | 6.51 | Danger |
| Case 2 | Detection | 87,497 | 0 | 4726 | 6.35 | Danger |
| Case 2 | Error/Accuracy | 3.0% | 0 | 4.6% | 2.5% | Accurate |
| Case 3 | True | 292,300 | 19,756 | 0 | 50.12 | Danger |
| Case 3 | Detection | 291,641 | 17,866 | 0 | 55.11 | Danger |
| Case 3 | Error/Accuracy | 0.2% | 9.6% | 0 | 9.1% | Accurate |
| Case 4 | True | 22,770 | 0 | 4500 | 2.04 | Danger |
| Case 4 | Detection | 23,207 | 0 | 4864 | 2.13 | Danger |
| Case 4 | Error/Accuracy | 1.9% | 0 | 7.5% | 4.4% | Accurate |
| Case 5 | True | 144,011 | 724 | 852 | 15.04 | Danger |
| Case 5 | Detection | 134,237 | 932 | 624 | 16.53 | Danger |
| Case 5 | Error/Accuracy | 6.8% | 28.7% | 26.7% | 9.9% | Accurate |
| Case 6 | True | 325,350 | 0 | 1763 | 25.01 | Danger |
| Case 6 | Detection | 325,865 | 0 | 2255 | 25.06 | Danger |
| Case 6 | Error/Accuracy | 0.2% | 0 | 27.9% | 0.2% | Accurate |
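
The Error/Accuracy rows in the safety assessment table can be read as relative errors between the true and detected values. The source does not state the formula, so the sketch below assumes the absolute difference divided by the true value; the helper name `relative_error` is illustrative. Under this assumption the three Case 1 entries (1.3%, 3.3%, 2.5%) are reproduced. Some entries in other cases come out slightly differently (for example, the Case 2 crack pixels give about 4.8% rather than the tabulated 4.6%), so a different denominator may have been used there.

```python
def relative_error(true_value: float, detected_value: float) -> float:
    """Relative error in percent, taking the true value as reference (assumed)."""
    return 100.0 * abs(true_value - detected_value) / true_value


# Case 1 values copied from the table: quantity -> (true, detection)
case_1 = {
    "fall-off pixels": (755_869, 746_290),
    "suspension pixels": (13_846, 13_392),
    "fall-off rate": (70.59, 68.86),
}

for name, (true_value, detected_value) in case_1.items():
    error = relative_error(true_value, detected_value)
    print(f"{name}: relative error {error:.1f}%")
```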

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, L.; Liang, Y.; Yan, S. Post-Earthquake Damage Detection and Safety Assessment of the Ceiling Panoramic Area in Large Public Buildings Using Image Stitching. Buildings 2025, 15, 3922. https://doi.org/10.3390/buildings15213922
