A Transformer-Based Multi-Scale Semantic Extraction Change Detection Network for Building Change Application

Abstract

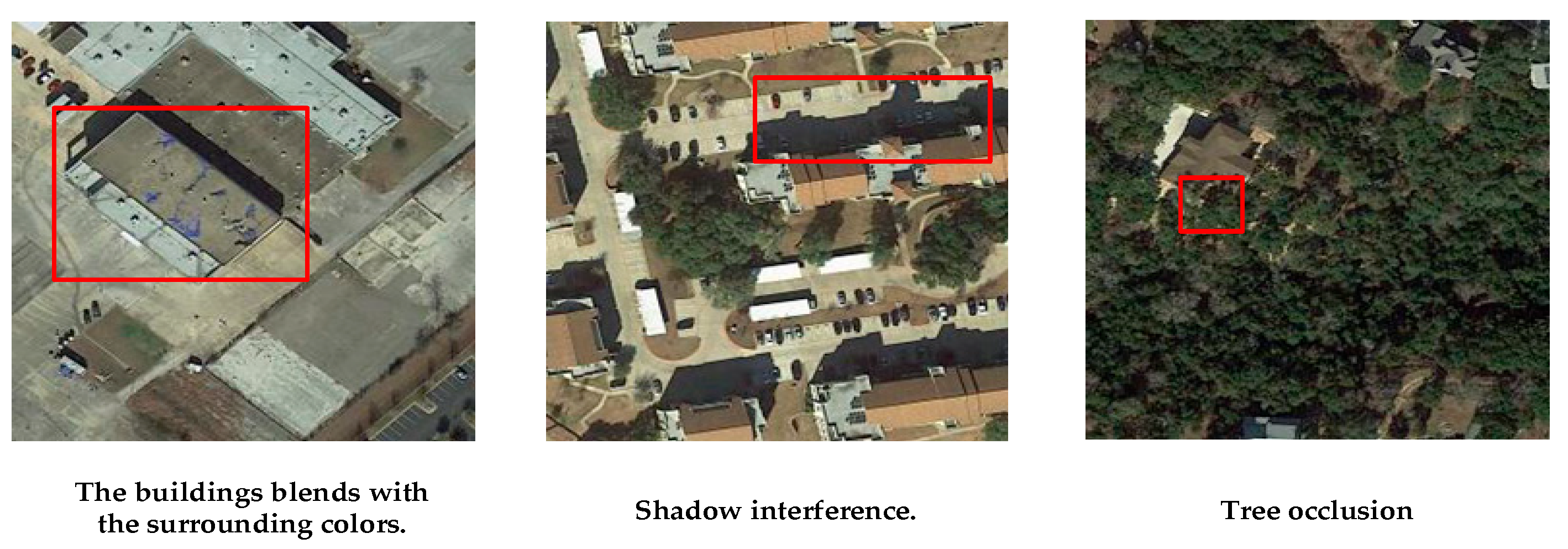

1. Introduction

- (1)

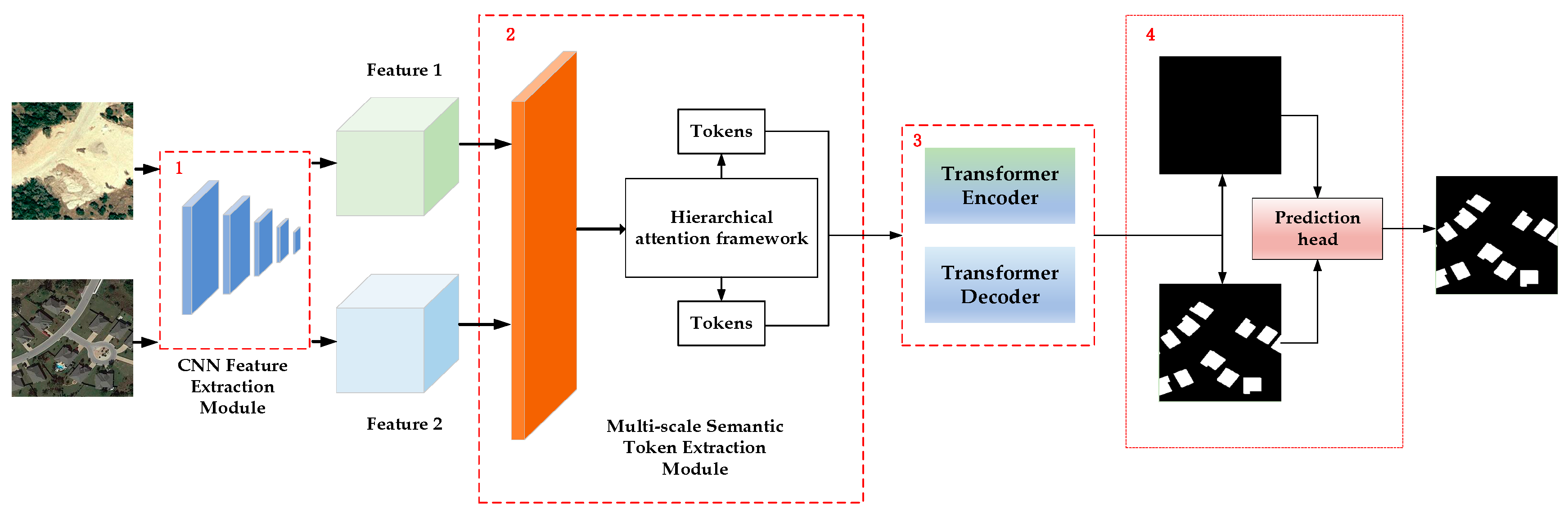

- We propose a Transformer-based change detection network (MSSE-CDNet) for detecting changed building areas in urban environments. The network demonstrates significantly enhanced semantic information extraction capability in complex environments compared to other models.

- (2)

- A multi-scale feature extraction mechanism is proposed to select different building feature extraction approaches across varying scene complexities. Unlike traditional single-scale extraction methods, this approach employs multi-scale building feature extraction in complex scenarios to enhance the detail of local features.

- (3)

- We formulate an adaptive feature fusion mechanism to interpolate and enhance features across different scales. This mechanism integrates multi-scale building features in complex environments, and the fused features serve as input for subsequent feature analysis.

- (4)

- Validation experiments on the LEVIR-CD dataset demonstrate that the proposed building change detection model outperforms existing methods in prediction accuracy.
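Contributions (2) and (3) describe multi-scale extraction and adaptive fusion only at a high level. As a rough illustration of the general idea, the following minimal NumPy sketch coarsens a feature map to several scales, interpolates each branch back to the input resolution, and fuses the branches with softmax-normalized weights. It is not the MSSE-CDNet implementation: the scale set (1, 2, 4), average pooling, nearest-neighbour interpolation, softmax weighting, and every function name are hypothetical choices, and the rule that selects single- or multi-scale extraction by scene complexity is not modelled.

```python
import numpy as np

# Hypothetical helpers: a simplified stand-in for multi-scale extraction and
# adaptive fusion, NOT the MSSE-CDNet implementation.


def avg_pool(x: np.ndarray, s: int) -> np.ndarray:
    """Average-pool a (C, H, W) feature map by an integer factor s."""
    c, h, w = x.shape
    if h % s or w % s:
        raise ValueError("H and W must be divisible by the pooling factor")
    # Split each spatial axis into (blocks, s) and average inside each s x s block.
    return x.reshape(c, h // s, s, w // s, s).mean(axis=(2, 4))


def upsample_nearest(x: np.ndarray, s: int) -> np.ndarray:
    """Nearest-neighbour interpolation of a (C, H, W) map by factor s."""
    return x.repeat(s, axis=1).repeat(s, axis=2)


def multi_scale_features(x: np.ndarray, scales=(1, 2, 4)) -> list:
    """Coarsen the input to each scale, then interpolate back to full resolution."""
    return [upsample_nearest(avg_pool(x, s), s) for s in scales]


def adaptive_fuse(features: list, logits) -> tuple:
    """Weighted sum of same-sized maps; softmax keeps weights positive and summing to 1.

    In a trained network the logits would be learnable parameters (assumption).
    """
    logits = np.asarray(logits, dtype=float)
    if logits.shape != (len(features),):
        raise ValueError("need one logit per scale branch")
    weights = np.exp(logits - logits.max())  # subtract max for numerical stability
    weights /= weights.sum()
    fused = sum(w * f for w, f in zip(weights, features))
    return fused, weights


rng = np.random.default_rng(0)
feat = rng.standard_normal((32, 64, 64))  # hypothetical (C, H, W) CNN feature map
branches = multi_scale_features(feat)
fused, weights = adaptive_fuse(branches, logits=[0.0, 0.0, 0.0])
print(fused.shape)  # (32, 64, 64)
```

Fixed logits are used here only to keep the example deterministic; making the weights learnable (or predicting them from the features themselves) is what would make such a fusion adaptive in practice.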

2. Related Work

3. Methodology

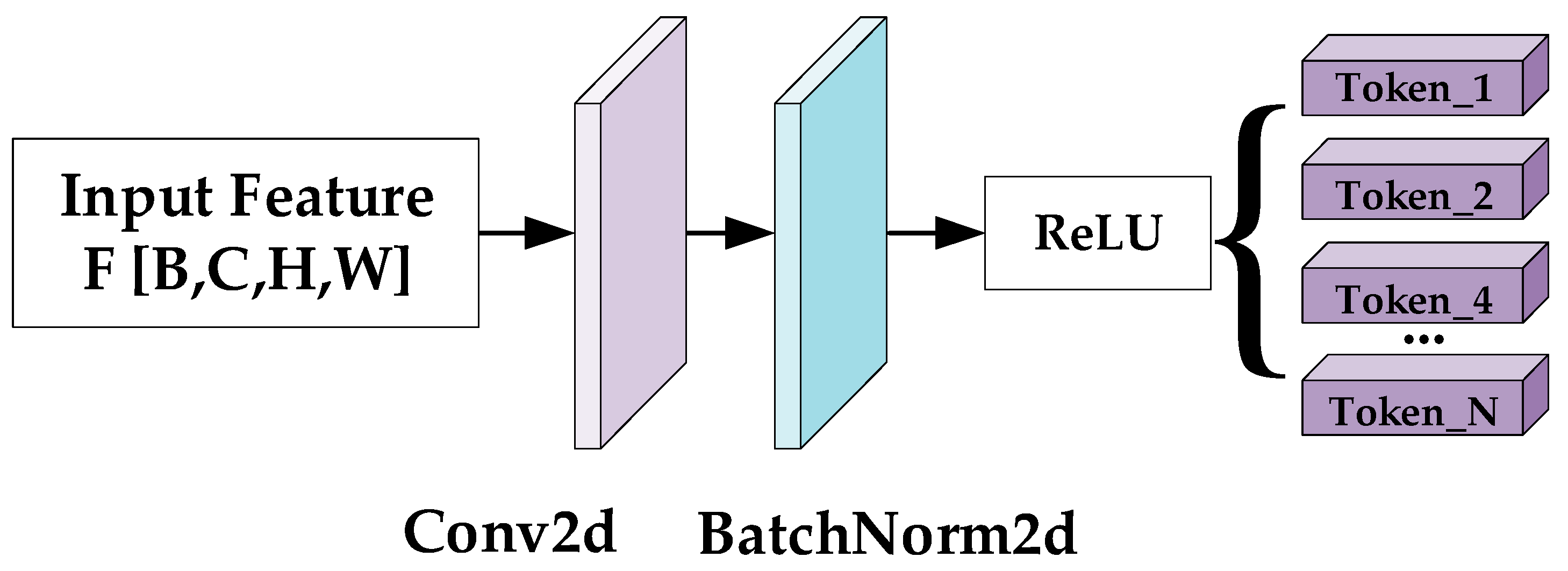

3.1. CNN Feature Extraction Module

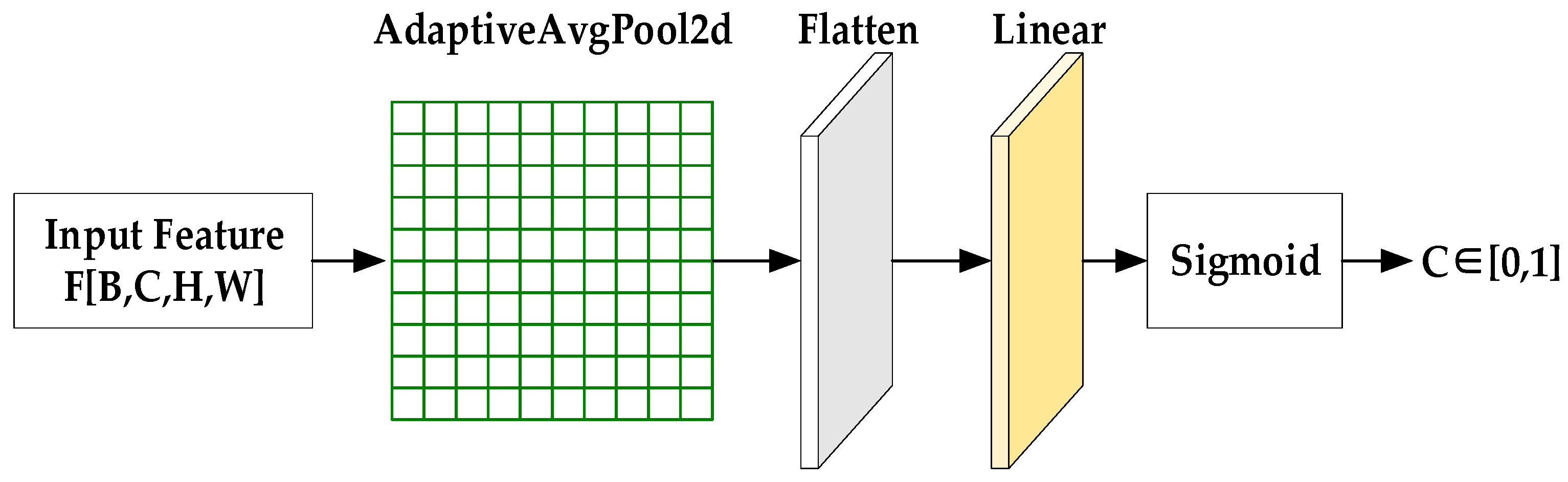

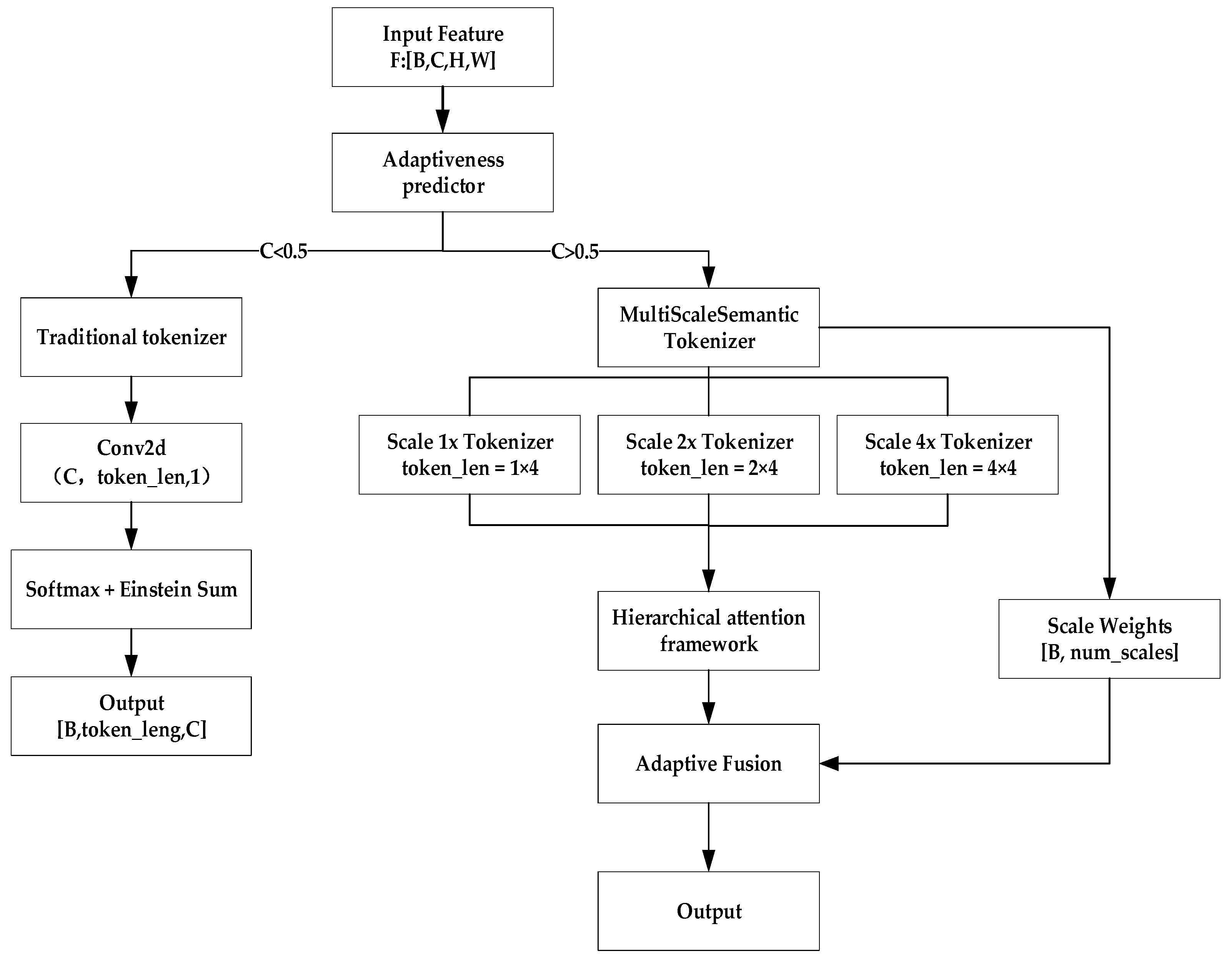

3.2. Multi-Scale Semantic Extraction Module

3.2.1. Multi-Scale Extraction

3.2.2. Adaptive Fusion

3.3. Transformer Encoder and Decoder Module

3.4. Prediction Head Module

4. Experiment

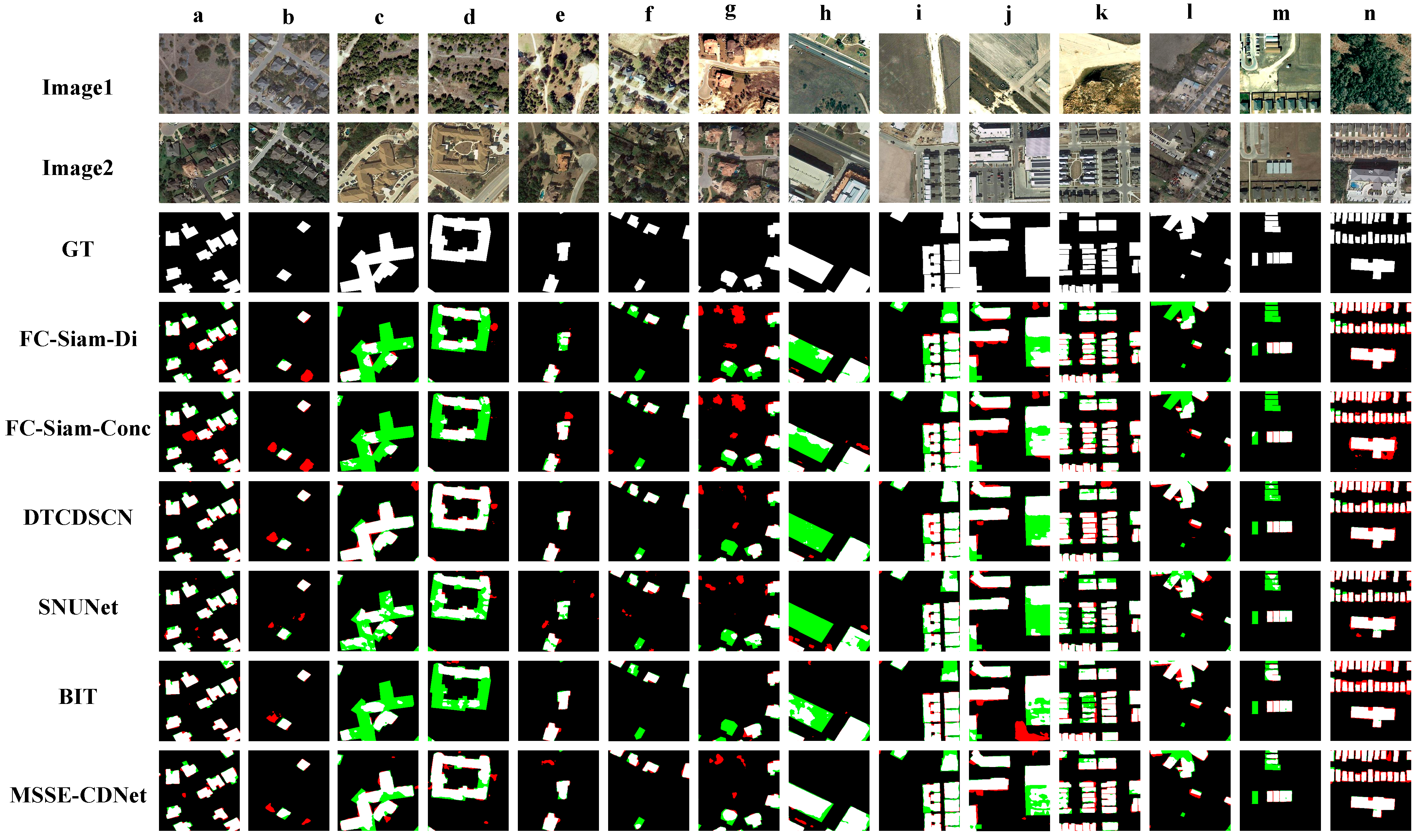

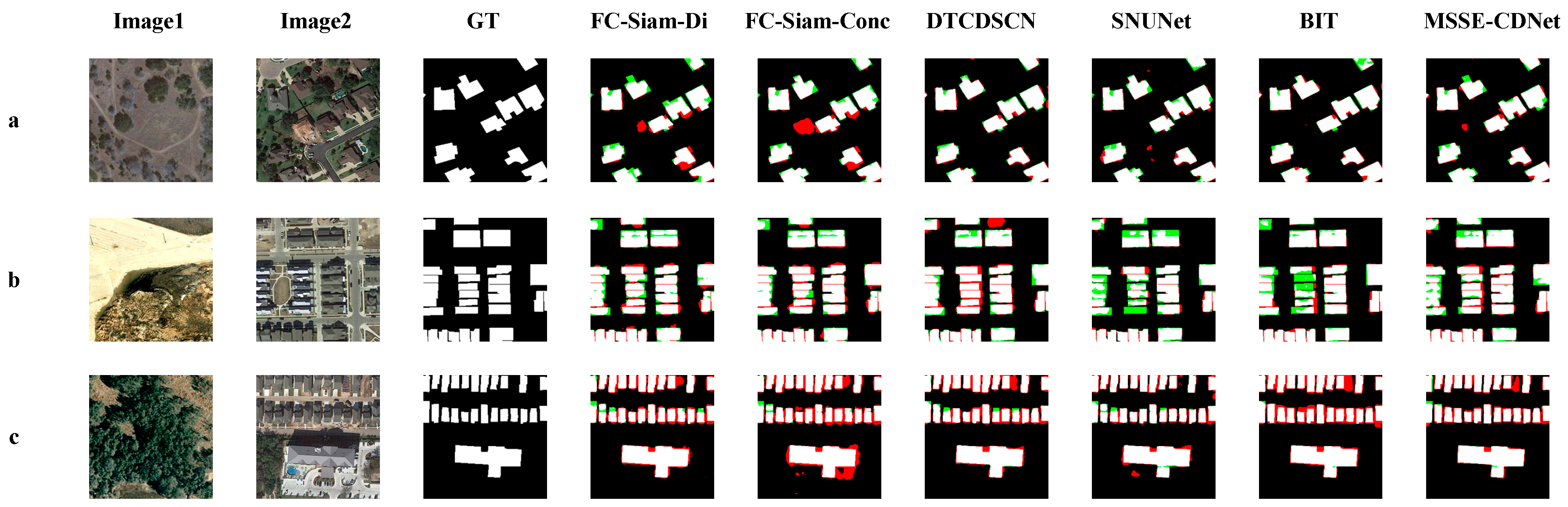

4.1. Model Accuracy Comparison

4.1.1. Dataset

4.1.2. Evaluation Metrics

4.1.3. Comparison of Experimental Results

4.2. Model Efficiency and Effectiveness

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Salah, H.S.; Goldin, S.E.; Rezgui, A.; El Islam, B.N.; Ait-Aoudia, S. What Is a Remote Sensing Change Detection Technique? Towards a Conceptual Framework. Int. J. Remote Sens. 2020, 41, 1788–1812.

2. Zhang, H.; Wang, M.; Wang, F.; Yang, G.; Zhang, Y.; Jia, J.; Wang, S. A Novel Squeeze-and-Excitation W-Net for 2D and 3D Building Change Detection with Multi-Source and Multi-Feature Remote Sensing Data. Remote Sens. 2021, 13, 440.

3. Zhu, Z.; Woodcock, C.E. Continuous Change Detection and Classification of Land Cover Using All Available Landsat Data. Remote Sens. Environ. 2014, 144, 152–171.

4. Lv, Z.; Wang, F.; Cui, G.; Benediktsson, J.A.; Lei, T.; Sun, W. Spatial–Spectral Attention Network Guided with Change Magnitude Image for Land Cover Change Detection Using Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12.

5. Luo, H.; Liu, C.; Wu, C.; Guo, X. Urban Change Detection Based on Dempster–Shafer Theory for Multitemporal Very High-Resolution Imagery. Remote Sens. 2018, 10, 980.

6. He, C.; Zhao, Y.; Dong, J.; Xiang, Y. Use of GAN to Help Networks to Detect Urban Change Accurately. Remote Sens. 2022, 14, 5448.

7. Wang, L.; Zhang, M.; Shen, X.; Shi, W. Landslide Mapping Using Multilevel-Feature-Enhancement Change Detection Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 3599–3610.

8. Liu, S.; Zheng, Y.; Dalponte, M.; Tong, X. A Novel Fire Index-Based Burned Area Change Detection Approach Using Landsat-8 OLI Data. Eur. J. Remote Sens. 2020, 53, 104–112.

9. Gao, F.; Liu, X.; Dong, J.; Zhong, G.; Jian, M. Change Detection in SAR Images Based on Deep Semi-NMF and SVD Networks. Remote Sens. 2017, 9, 435.

10. Shi, J.; Liu, W.; Yin, P.; Cao, Z.; Wang, Y.; Shan, H.; Zhang, Z. Vector Boundary Constrained Land Use Vector Polygon Change Detection Method Based on Deep Learning and High-Resolution Remote Sensing Images. Remote Sens. Technol. Appl. 2024, 39, 753–763.

11. Yang, M.; Zhou, Y.; Feng, Y.; Huo, S. Edge-Guided Hierarchical Network for Building Change Detection in Remote Sensing Images. Appl. Sci. 2024, 14, 5415.

12. Jiang, K.; Zhao, Z.; Ma, L.; Ma, C. A Review of Development on Changing Detection Methods of Remote Sensing Images Based on Deep Learning. Radio Eng. 2025, 55, 343–356.

13. Shafique, A.; Cao, G.; Khan, Z.; Asad, M.; Aslam, M. Deep Learning-Based Change Detection in Remote Sensing Images: A Review. Remote Sens. 2022, 14, 871.

14. Wang, L.; Zhang, M.; Gao, X.; Shi, W. Advances and Challenges in Deep Learning-Based Change Detection for Remote Sensing Images: A Review through Various Learning Paradigms. Remote Sens. 2024, 16, 804.

15. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Curran Associates Inc.: Long Beach, CA, USA, 2017; pp. 6000–6010.

16. Chen, H.; Qi, Z.; Shi, Z. Remote Sensing Image Change Detection with Transformers. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

17. Guo, Q.; Zhang, J.; Zhu, S.; Zhong, C.; Zhang, Y. Deep Multiscale Siamese Network with Parallel Convolutional Structure and Self-Attention for Change Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12.

18. Ke, Q.; Zhang, P. Hybrid-TransCD: A Hybrid Transformer Remote Sensing Image Change Detection Network Via Token Aggregation. ISPRS Int. J. Geo-Inf. 2022, 11, 263.

19. Bandara, W.G.C.; Patel, V.M. A Transformer-Based Siamese Network for Change Detection. In Proceedings of the IGARSS 2022–2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022.

20. Zhang, C.; Wang, L.; Cheng, S.; Li, Y. SwinSUNet: Pure Transformer Network for Remote Sensing Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

21. Feng, J.; Yang, X.; Gu, Z.; Zeng, M.; Zheng, W. SMBCNet: A Transformer-Based Approach for Change Detection in Remote Sensing Images through Semantic Segmentation. Remote Sens. 2023, 15, 3566.

22. Teng, Y.; Liu, S.; Sun, W.; Yang, H.; Wang, B.; Jia, J. A VHR Bi-Temporal Remote-Sensing Image Change Detection Network Based on Swin Transformer. Remote Sens. 2023, 15, 2645.

23. Feng, Y.; Jiang, J.; Xu, H.; Zheng, J. Change Detection on Remote Sensing Images Using Dual-Branch Multilevel Intertemporal Network. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–15.

24. Xu, X.; Li, J.; Chen, Z.; Hua, Z. TCIANet: Transformer-Based Context Information Aggregation Network for Remote Sensing Image Change Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 1951–1971.

25. Yang, K.; Xia, G.-S.; Liu, Z.; Du, B.; Yang, W.; Pelillo, M.; Zhang, L. Asymmetric Siamese Networks for Semantic Change Detection in Aerial Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–18.

26. Zhuo, L.; Yu, W.; Jia, T.; Li, J. Research Progress of Transformer-Based Remote Sensing Image Change Detection. J. Beijing Univ. Technol. 2025, 51, 851–866.

27. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

28. Chen, H.; Shi, Z. A Spatial-Temporal Attention-Based Method and a New Dataset for Remote Sensing Image Change Detection. Remote Sens. 2020, 12, 1662.

29. Wang, Z.; Peng, C.; Zhang, Y.; Wang, N.; Luo, L. Fully Convolutional Siamese Networks Based Change Detection for Optical Aerial Images with Focal Contrastive Loss. Neurocomputing 2021, 457, 155–167.

30. Liu, Y.; Pang, C.; Zhan, Z.; Zhang, X.; Yang, X. Building Change Detection for Remote Sensing Images Using a Dual-Task Constrained Deep Siamese Convolutional Network Model. IEEE Geosci. Remote Sens. Lett. 2021, 18, 811–815.

31. Fang, S.; Li, K.; Shao, J.; Li, Z. SNUNet-CD: A Densely Connected Siamese Network for Change Detection of VHR Images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

| Model | Pre (%) | Rec (%) | F1 (%) | IoU (%) | OA (%) |
|---|---|---|---|---|---|
| FC-Siam-Di [29] | 89.53 | 83.31 | 86.31 | 75.92 | 98.67 |
| FC-Siam-Conc [29] | 91.99 | 76.77 | 83.69 | 71.96 | 98.49 |
| DTCDSCN [30] | 88.53 | 86.83 | 87.67 | 78.05 | 98.77 |
| BIT [16] | 89.24 | 89.37 | 89.31 | 80.68 | 98.92 |
| SNUNet [31] | 89.18 | 87.17 | 88.16 | 78.83 | 98.82 |
| MSSE-CDNet | 91.25 | 89.83 | 90.53 | 82.70 | 98.97 |
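The comparison uses the abbreviations common in change-detection papers: precision (Pre), recall (Rec), F1 score, intersection over union (IoU), and overall accuracy (OA), reported in percent. Since the text of Section 4.1.2 is not reproduced here, the following Python sketch assumes the standard binary definitions with "changed" as the positive class. It computes the five metrics from pixel counts and uses two identities to cross-check the tabulated rows: F1 = 2 * Pre * Rec / (Pre + Rec), and, for a single class, IoU = F1 / (2 - F1). The second identity holds exactly only when F1 and IoU are computed from the same pooled TP, FP, and FN totals. Function and variable names are illustrative.

```python
def metrics_from_confusion(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Binary change-detection metrics from pixel counts (changed = positive class)."""
    pre = tp / (tp + fp)
    rec = tp / (tp + fn)
    f1 = 2 * pre * rec / (pre + rec)
    iou = tp / (tp + fp + fn)
    oa = (tp + tn) / (tp + fp + fn + tn)
    return {"Pre": pre, "Rec": rec, "F1": f1, "IoU": iou, "OA": oa}


def f1_from_pr(pre: float, rec: float) -> float:
    """F1 is the harmonic mean of precision and recall."""
    return 2 * pre * rec / (pre + rec)


def iou_from_f1(f1: float) -> float:
    """Single-class identity: F1 = 2TP/(2TP+FP+FN) implies IoU = F1 / (2 - F1)."""
    return f1 / (2 - f1)


# (Pre, Rec, F1, IoU) in percent, copied from the comparison table.
REPORTED = {
    "FC-Siam-Di": (89.53, 83.31, 86.31, 75.92),
    "FC-Siam-Conc": (91.99, 76.77, 83.69, 71.96),
    "DTCDSCN": (88.53, 86.83, 87.67, 78.05),
    "BIT": (89.24, 89.37, 89.31, 80.68),
    "SNUNet": (89.18, 87.17, 88.16, 78.83),
    "MSSE-CDNet": (91.25, 89.83, 90.53, 82.70),
}

for name, (pre, rec, f1, iou) in REPORTED.items():
    # Recompute F1 from Pre/Rec and IoU from F1, then compare with the table.
    f1_hat = 100 * f1_from_pr(pre / 100, rec / 100)
    iou_hat = 100 * iou_from_f1(f1 / 100)
    print(f"{name:<13} F1 {f1_hat:6.2f} vs {f1:6.2f}   IoU {iou_hat:6.2f} vs {iou:6.2f}")
```

For every row, the recomputed F1 and IoU agree with the tabulated values to within 0.01 percentage points. The small residuals come from rounding the inputs to two decimals, and the agreement is consistent with the metrics having been computed from pooled pixel counts on the changed class.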

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, L.; Di, S.; Wang, Z.; Liu, Y. A Transformer-Based Multi-Scale Semantic Extraction Change Detection Network for Building Change Application. Buildings 2025, 15, 3549. https://doi.org/10.3390/buildings15193549


Chicago/Turabian Style: Hu, Lujin, Senchuan Di, Zhenkai Wang, and Yu Liu. 2025. "A Transformer-Based Multi-Scale Semantic Extraction Change Detection Network for Building Change Application." Buildings 15, no. 19: 3549. https://doi.org/10.3390/buildings15193549
