Rock Surface Crack Recognition Based on Improved Mask R-CNN with CBAM and BiFPN

Abstract

1. Introduction

2. Materials and Methods

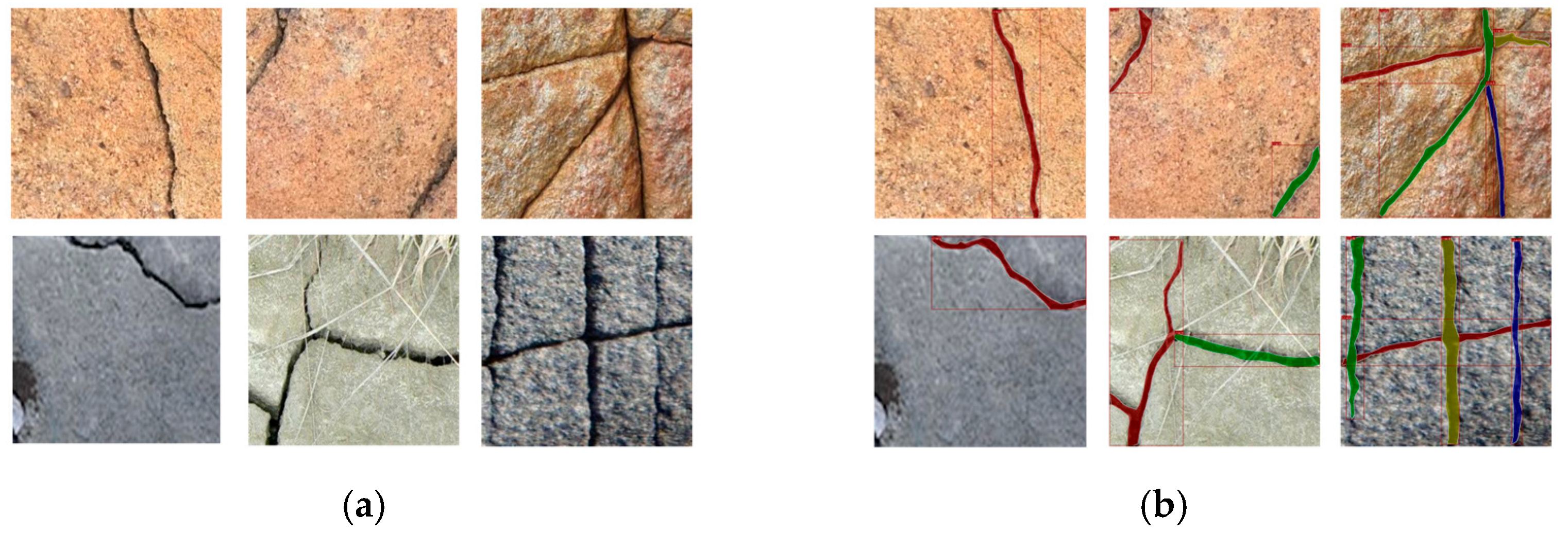

2.1. Dataset Construction

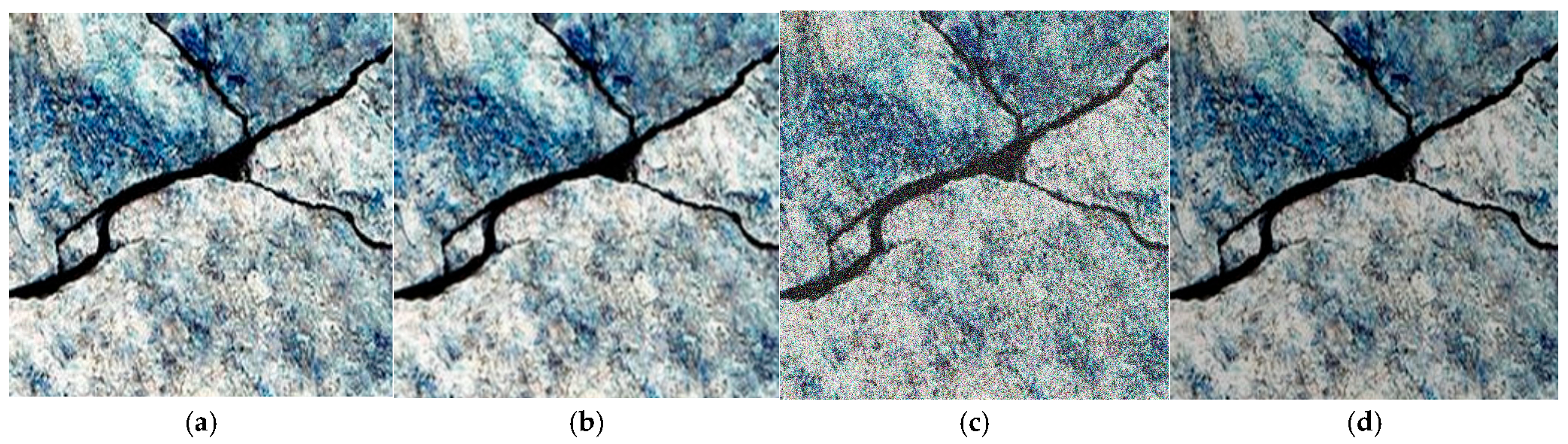

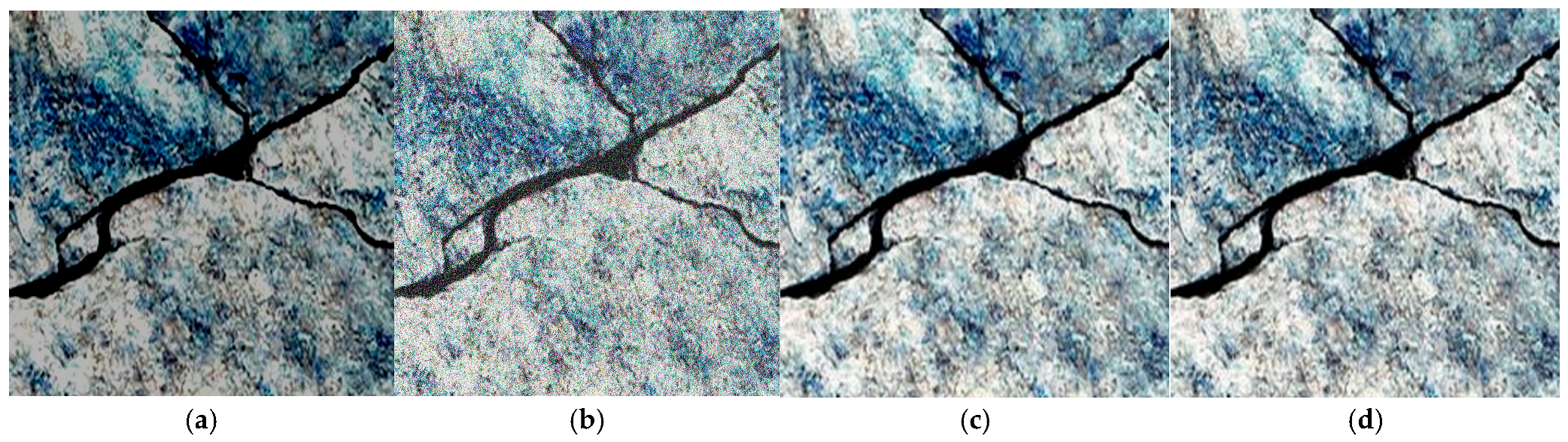

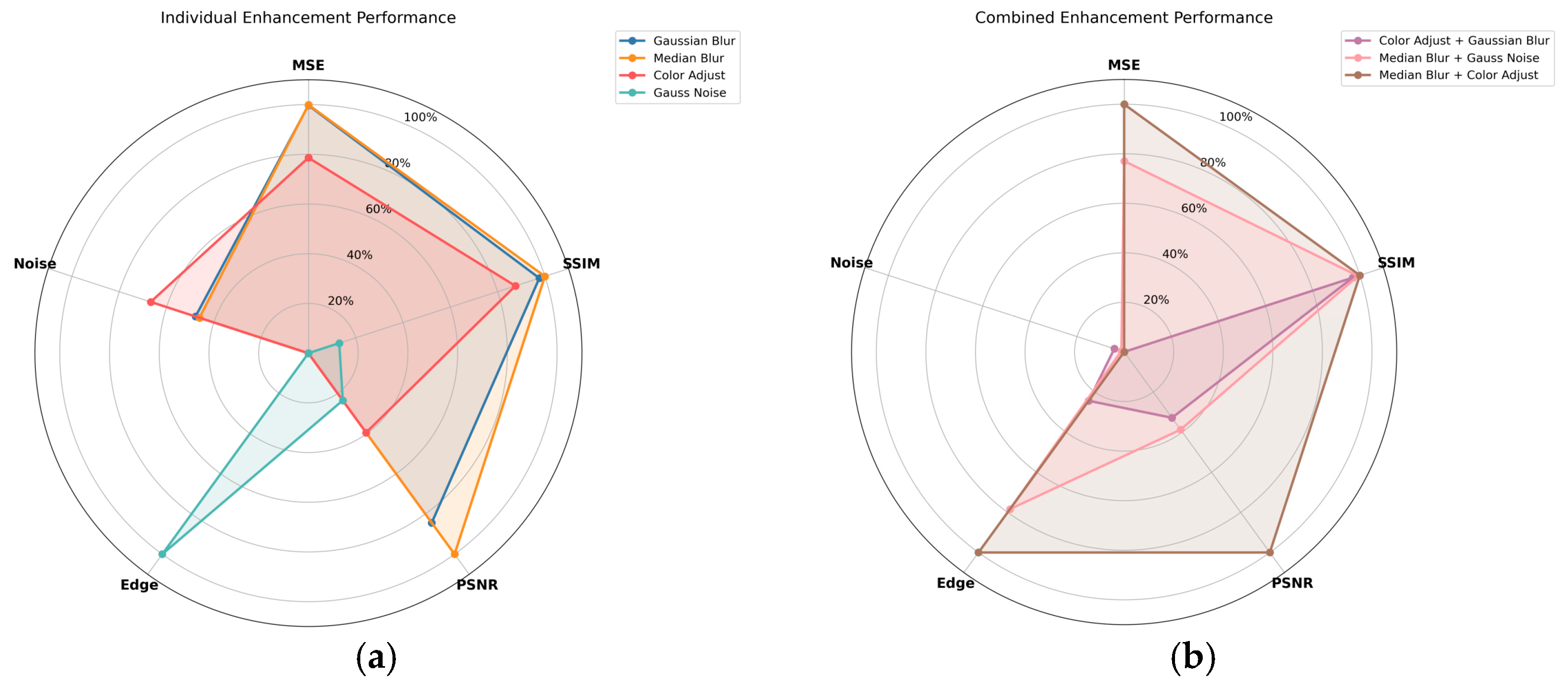

2.2. Raw Image Preprocessing

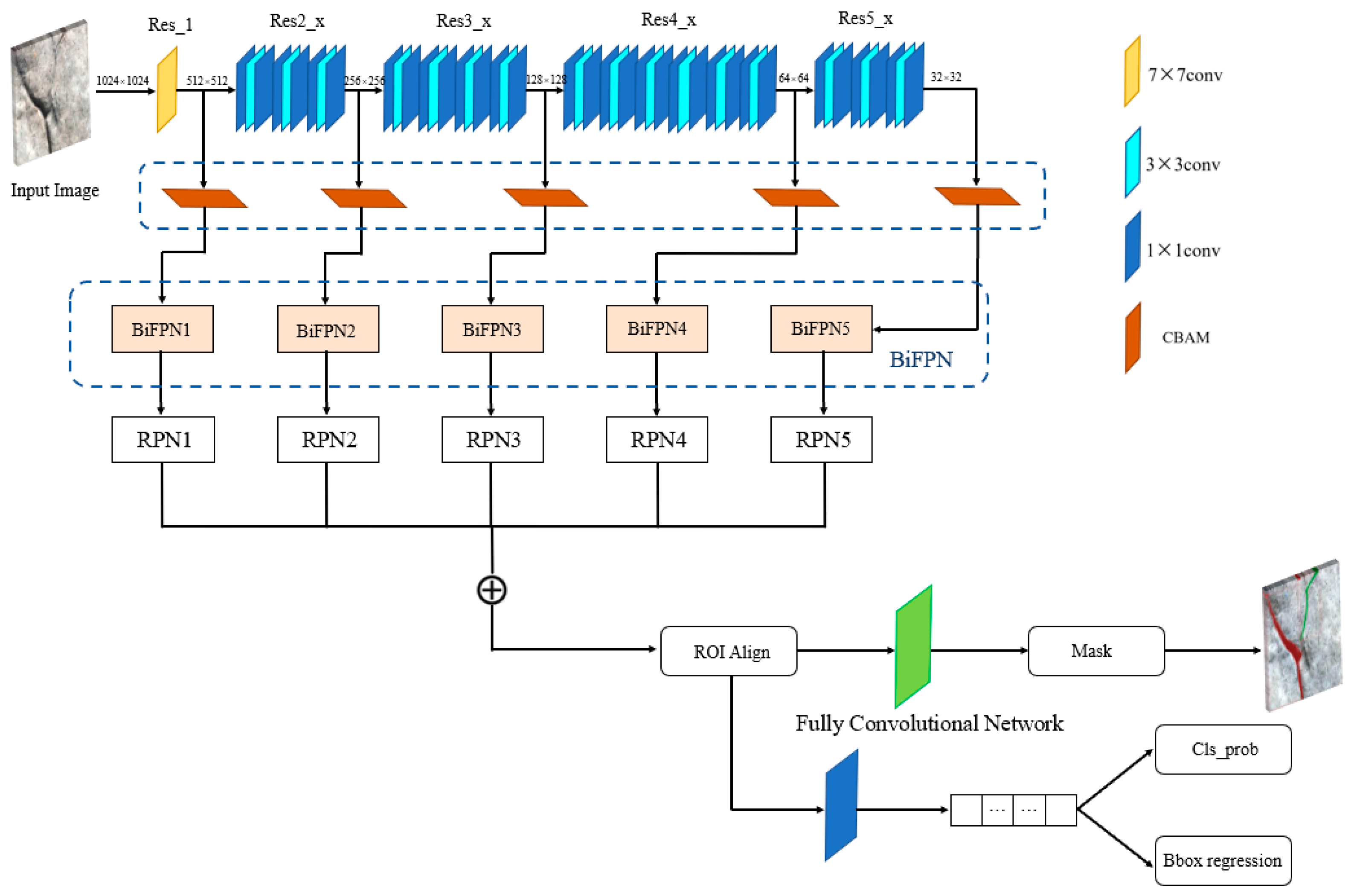

2.3. CBAM-BiFPN-Mask R-CNN Network

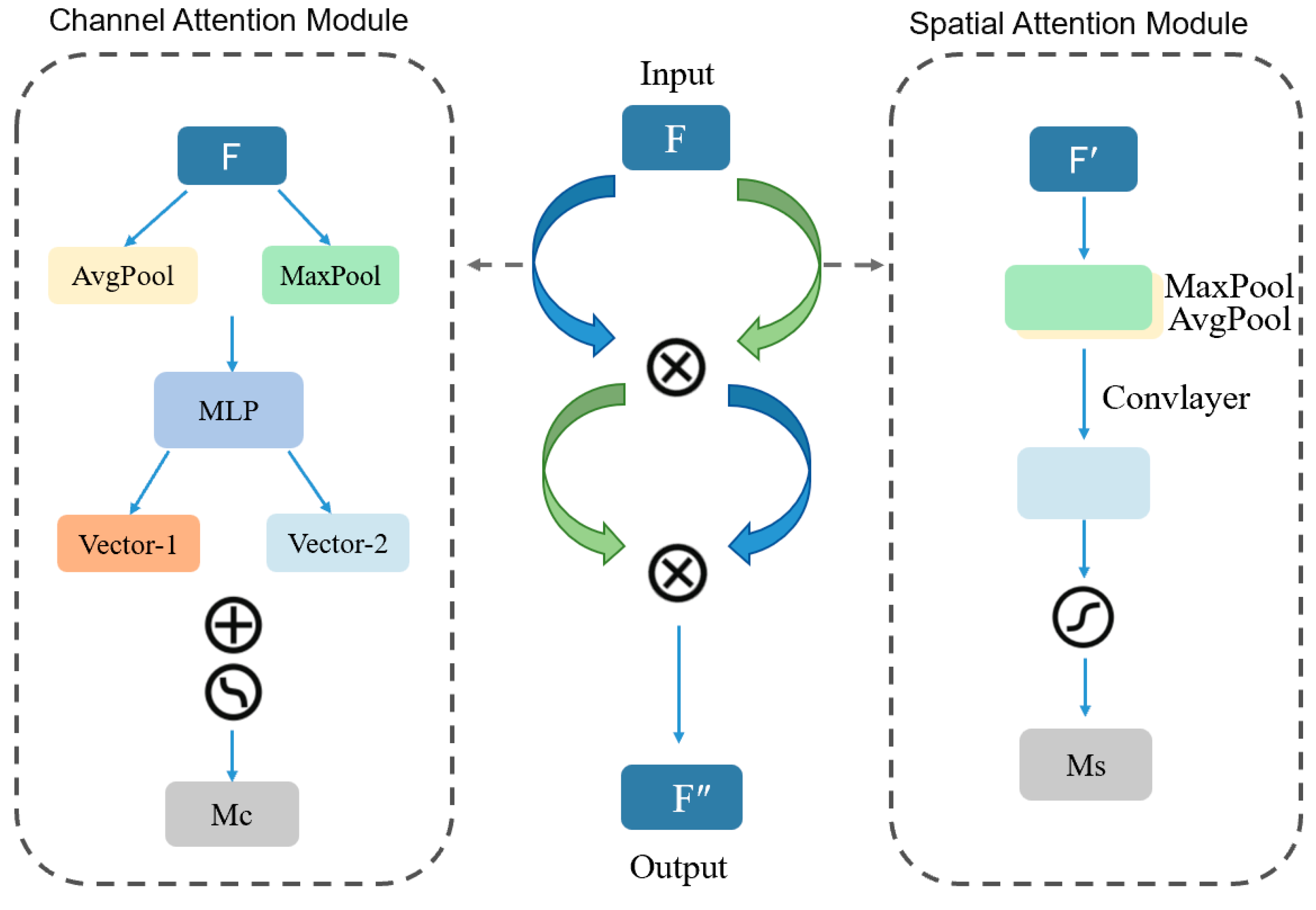

2.3.1. CBAM Attention Mechanism

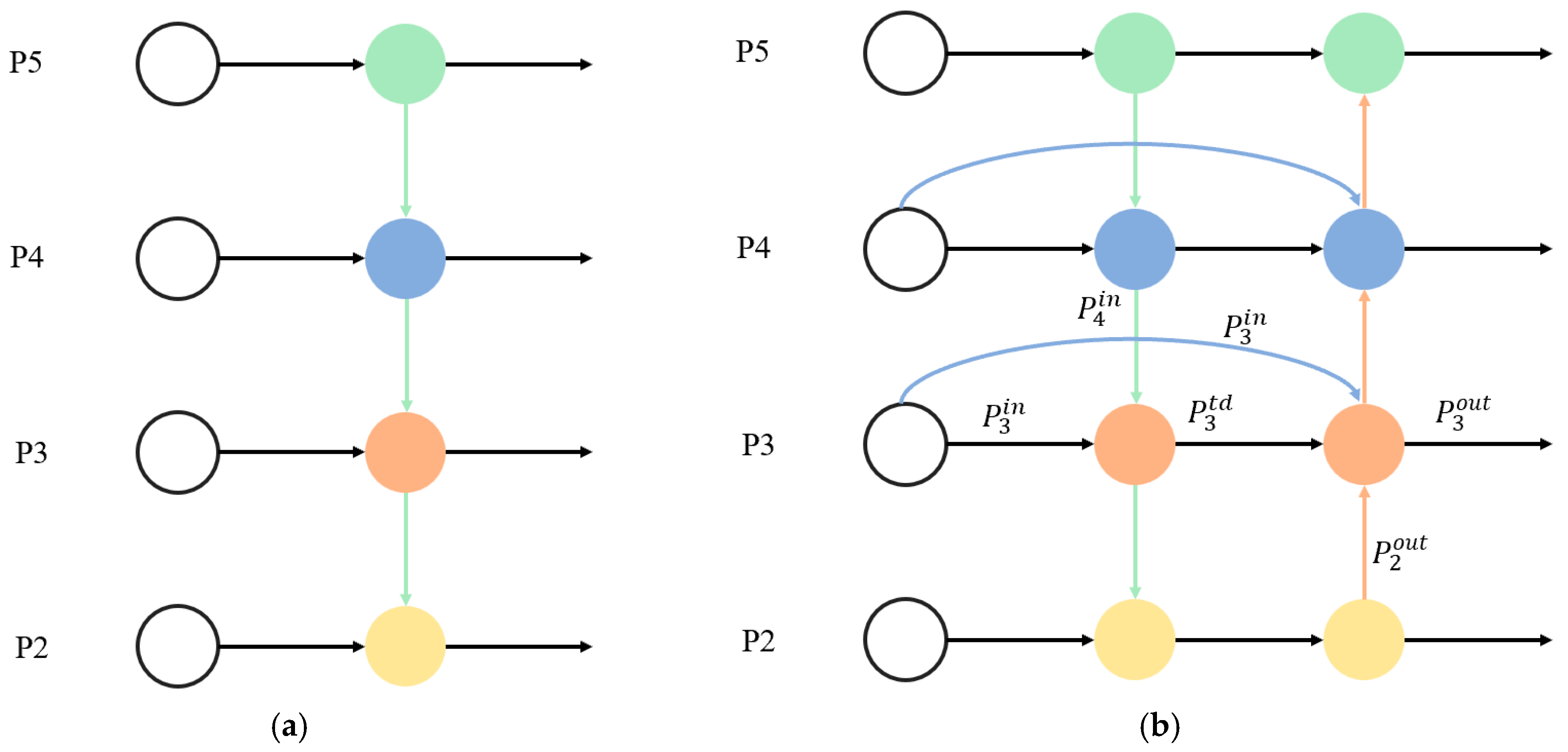

2.3.2. Weighted Bidirectional Feature Pyramid Network

3. Experiment

3.1. Experimental Environment

3.2. Evaluation Indicators

3.3. Experimental Setup

4. Result Analysis

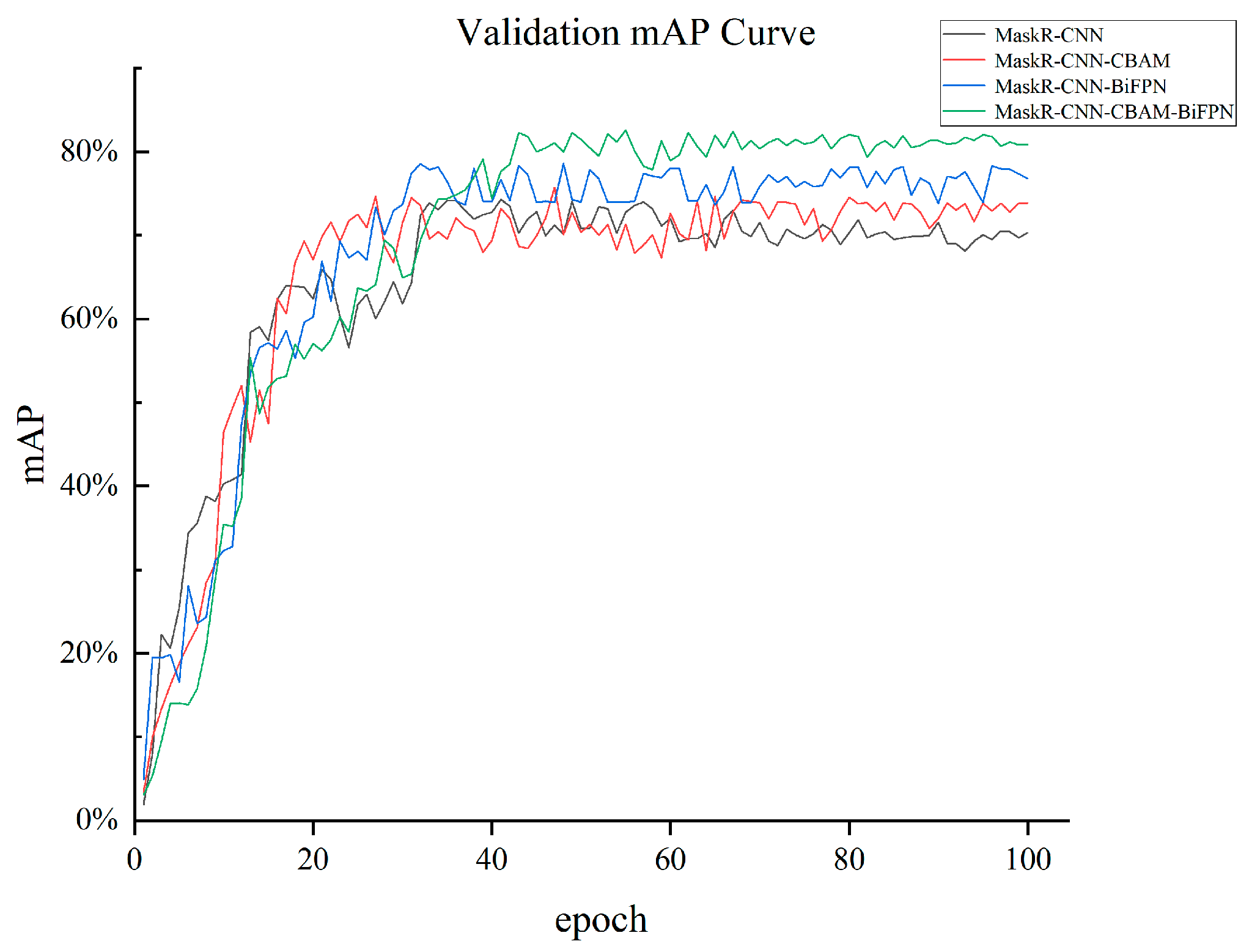

4.1. Model Training Results Analysis

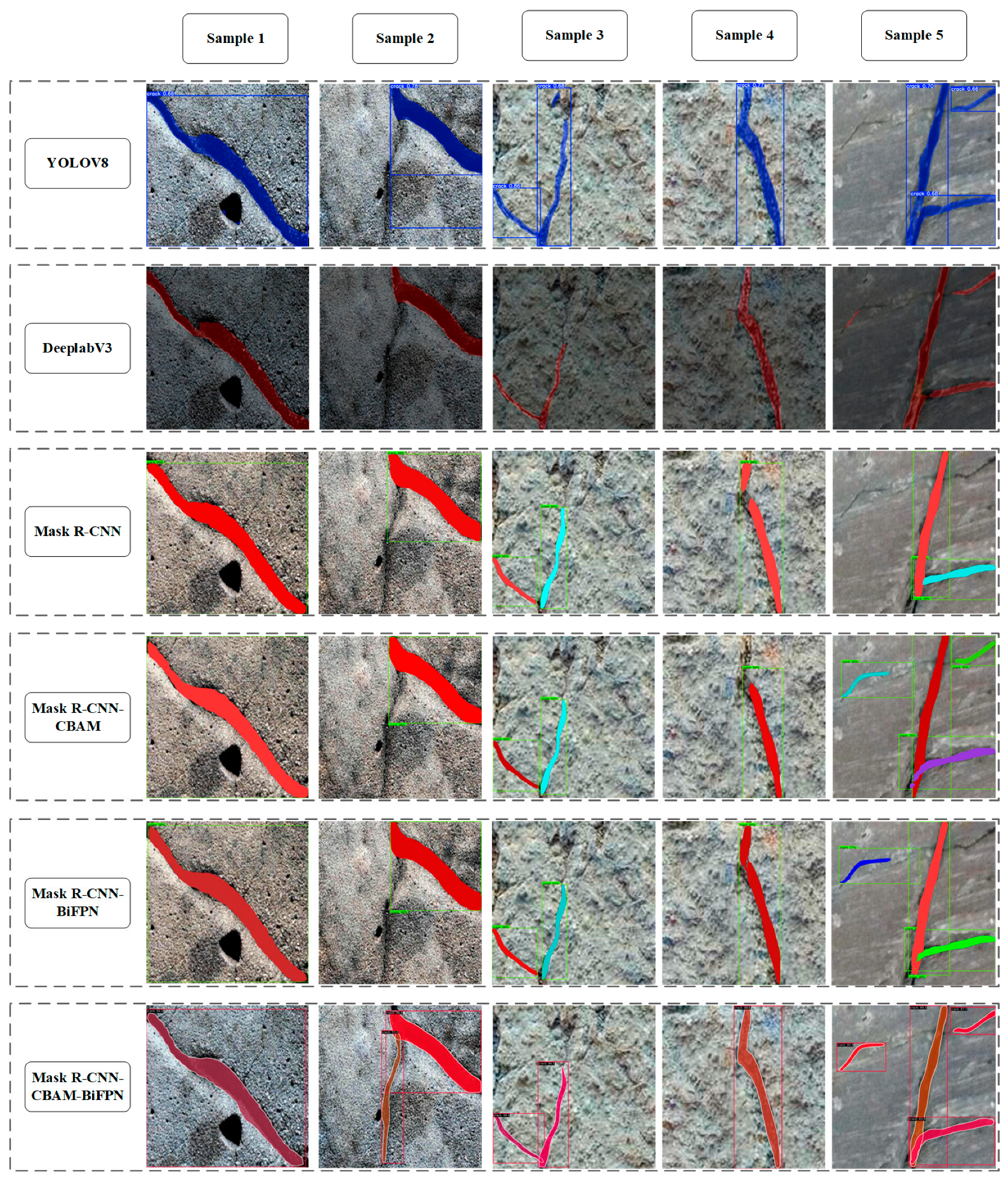

4.2. Recognition Result Analysis

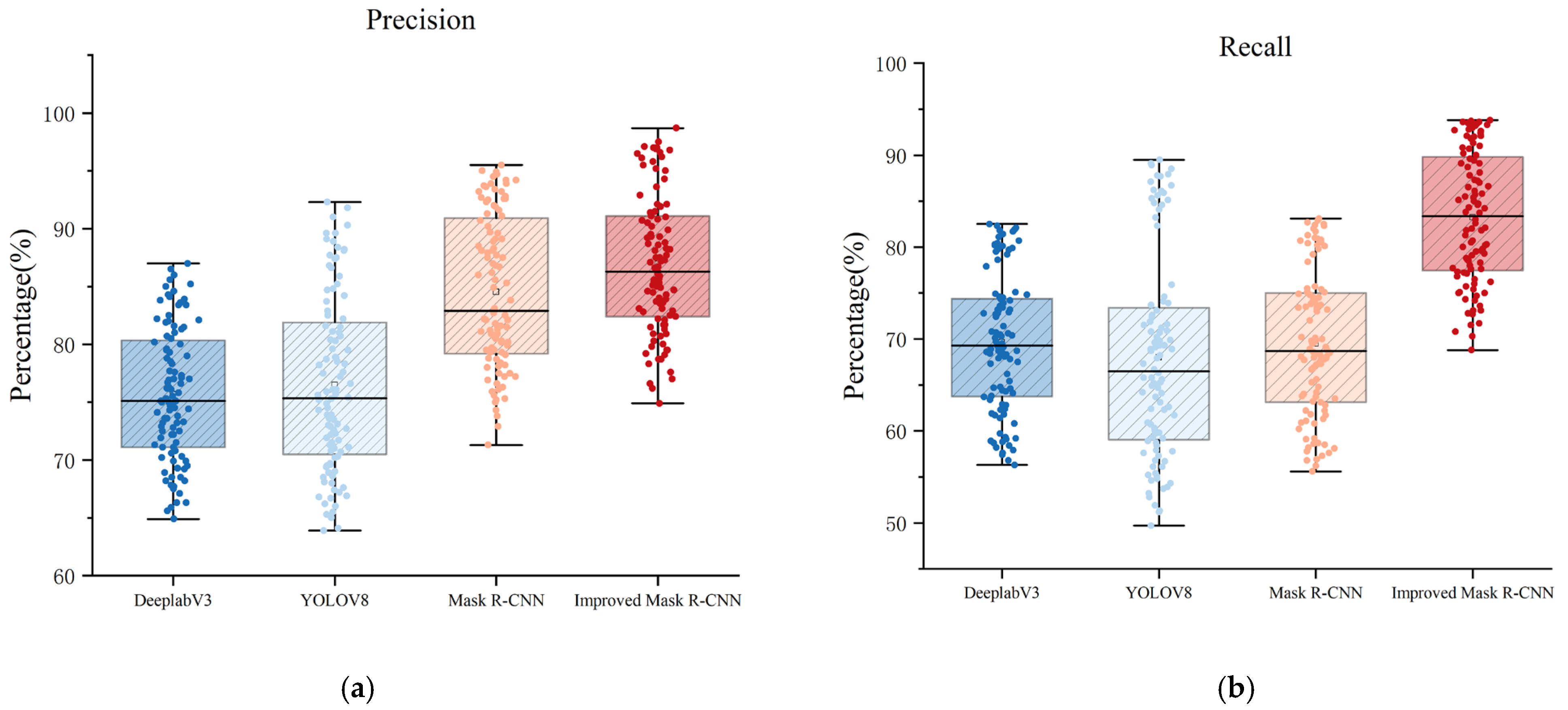

4.3. Model Performance Analysis

5. Conclusions

- (1) The improved model outperforms the baseline Mask R-CNN on the key crack-recognition metrics of precision, recall, and mAP. Its precision (P) reaches 82.6% and its recall (R) reaches 80.3%, which are 9.1 and 12.7 percentage points higher than those of the baseline model, respectively. Its mAP@0.5 improves by 8.36 percentage points, which raises overall recognition performance.

- (2) The CBAM module combines channel attention and spatial attention to adaptively enhance crack-related discriminative features and suppress background noise. This improves the model's recognition ability under low-contrast and complex lighting conditions. The BiFPN module uses bidirectional cross-layer feature fusion with learnable fusion weights to integrate multi-scale features efficiently. As a result, the model retains the fine detail of microcracks while preserving the global continuity of large cracks. Together, the two modules make the model more stable and robust across different complex scenarios.

- (3) The proposed CBAM-BiFPN-Mask R-CNN model achieves higher accuracy and robustness when detecting cracks against complex backgrounds, under low contrast, and at fine scales. It can determine the presence and location of cracks in images more quickly and accurately.

- (4) The current self-constructed dataset is of considerable scale and diversity, but there is still room to expand it. The model was trained solely on this custom dataset, so its generalizability (transferability) to other rock types or extreme environments still needs to be validated. Future research should build a larger and more diverse cross-scenario rock crack dataset to systematically evaluate and improve the model's generalization and robustness. In addition, semi-supervised or self-supervised learning strategies could exploit the large volume of unlabeled field images, reducing reliance on large-scale annotated datasets.
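Item (2) attributes part of the gain to CBAM, which applies channel attention followed by spatial attention. The plain-Python sketch below illustrates that two-step refinement on a toy feature map. It follows the general design of Woo et al.: average- and max-pooled channel descriptors pass through a shared two-layer MLP, and a 7×7 convolution then runs over the channel-wise average and max maps. This is a hypothetical, simplified illustration and not the authors' implementation. Biases and batch normalization are omitted, the reduction ratio r = 2 and all weights are arbitrary random values, and the function names are invented for this example.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def shared_mlp(v, w1, w2):
    """Two-layer MLP (C -> C/r -> C) with ReLU; biases omitted for brevity."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, v))) for row in w1]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w2]


def channel_attention(fmap, w1, w2):
    """One weight in (0, 1) per channel from average- and max-pooled descriptors."""
    avg = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]
    mx = [max(max(row) for row in ch) for ch in fmap]
    return [sigmoid(a + m) for a, m in zip(shared_mlp(avg, w1, w2), shared_mlp(mx, w1, w2))]


def spatial_attention(fmap, kernel):
    """One weight in (0, 1) per pixel: k x k conv over channel-wise avg and max maps."""
    C, H, W = len(fmap), len(fmap[0]), len(fmap[0][0])
    avg = [[sum(fmap[c][i][j] for c in range(C)) / C for j in range(W)] for i in range(H)]
    mx = [[max(fmap[c][i][j] for c in range(C)) for j in range(W)] for i in range(H)]
    pooled = (avg, mx)
    k = len(kernel[0])
    pad = k // 2  # zero padding keeps the output at H x W
    out = [[0.0] * W for _ in range(H)]
    for i in range(H):
        for j in range(W):
            s = 0.0
            for c in range(2):
                for di in range(k):
                    for dj in range(k):
                        y, x = i + di - pad, j + dj - pad
                        if 0 <= y < H and 0 <= x < W:
                            s += kernel[c][di][dj] * pooled[c][y][x]
            out[i][j] = sigmoid(s)
    return out


def cbam(fmap, w1, w2, kernel):
    """Channel attention first, then spatial attention (CBAM's sequential layout)."""
    ca = channel_attention(fmap, w1, w2)
    refined = [[[v * ca[c] for v in row] for row in ch] for c, ch in enumerate(fmap)]
    sa = spatial_attention(refined, kernel)
    return [[[refined[c][i][j] * sa[i][j] for j in range(len(sa[0]))]
             for i in range(len(sa))] for c in range(len(refined))]


# Toy example with arbitrary random weights: C = 4 channels, 5 x 5 map, r = 2.
rng = random.Random(0)
C, H, W, r = 4, 5, 5, 2
fmap = [[[rng.uniform(-1, 1) for _ in range(W)] for _ in range(H)] for _ in range(C)]
w1 = [[rng.gauss(0, 0.5) for _ in range(C)] for _ in range(C // r)]
w2 = [[rng.gauss(0, 0.5) for _ in range(C // r)] for _ in range(C)]
kernel = [[[rng.gauss(0, 0.1) for _ in range(7)] for _ in range(7)] for _ in range(2)]
out = cbam(fmap, w1, w2, kernel)
print(len(out), len(out[0]), len(out[0][0]))  # 4 5 5
```

Both attention maps lie in (0, 1), so the refined map can only re-weight (never amplify) each activation. This is how the module suppresses background responses while keeping the feature-map shape unchanged.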
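For BiFPN, item (2) refers to learnable weights in cross-layer fusion. EfficientDet (Tan et al.) fuses inputs with "fast normalized fusion", O = Σ_i w_i·I_i / (ε + Σ_j w_j). A ReLU keeps each w_i non-negative, and ε = 0.0001. The plain-Python sketch below applies that rule in one top-down and one bottom-up step between two toy pyramid levels. It is an illustration under stated assumptions, not the paper's network. The per-node convolutions are omitted, nearest-neighbour upsampling and 2×2 max pooling stand in for the resize steps, and the helper names and weight values are hypothetical.

```python
def fast_normalized_fusion(inputs, weights, eps=1e-4):
    """O = sum_i w_i * I_i / (eps + sum_j w_j), with ReLU keeping every w_i >= 0."""
    w = [max(0.0, x) for x in weights]
    total = eps + sum(w)
    H, W = len(inputs[0]), len(inputs[0][0])
    return [[sum(wk * m[i][j] for wk, m in zip(w, inputs)) / total for j in range(W)]
            for i in range(H)]


def upsample2x(m):
    """Nearest-neighbour upsampling (stand-in for the resize step)."""
    out = []
    for row in m:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def downsample2x(m):
    """2 x 2 max pooling with stride 2 (stand-in for the resize step)."""
    return [[max(m[i][j], m[i][j + 1], m[i + 1][j], m[i + 1][j + 1])
             for j in range(0, len(m[0]) - 1, 2)]
            for i in range(0, len(m) - 1, 2)]


# Two toy pyramid levels: P4 is coarser (2 x 2), P3 is finer (4 x 4).
p4_in = [[1.0, 2.0], [3.0, 4.0]]
p3_in = [[0.0] * 4 for _ in range(4)]

# Top-down: the fine level receives the upsampled coarse level.
p3_td = fast_normalized_fusion([p3_in, upsample2x(p4_in)], weights=[1.0, 1.0])
# Bottom-up: the coarse level receives its own input plus the downsampled fine result.
p4_out = fast_normalized_fusion([p4_in, downsample2x(p3_td)], weights=[1.0, 1.0])
print([round(v, 3) for v in p4_out[1]])  # [2.25, 3.0]
```

Because the weights are normalized by their own sum, training can shift emphasis between levels without letting the fused magnitude grow unboundedly. A weight driven negative is clipped to zero, which removes that input from the fusion.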

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Augmentation Type | Method | Parameter Settings |
|---|---|---|
| Blurring | Gaussian blur | blur_limit = (3, 7) |
| | Median blur | blur_limit = 3 |
| | Mean blur | blur_limit = 3 |
| Noise | Gaussian noise | var_limit = (10.0, 50.0) |
| Color adjustment | Saturation | saturation = 0.5 |
| | Contrast | contrast = 0.5 |
| | Brightness | brightness = 0.3 |
| Combined | Random 2-transform combination | Random selection of 2 methods |
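The last row of the augmentation table combines two randomly chosen transforms. A minimal sketch of that sampling step follows, with the table's settings transcribed into a dictionary. The table does not say how each setting becomes a concrete value, so that part is assumed here. The sketch picks odd kernel sizes within blur_limit, draws the noise variance uniformly, and draws a colour factor from [max(0, 1 − s), 1 + s], following common augmentation-library conventions. The dictionary keys and function names are hypothetical.

```python
import random

# Single-transform settings transcribed from the augmentation table (keys are hypothetical).
AUGMENTATIONS = {
    "gaussian_blur": {"blur_limit": (3, 7)},
    "median_blur": {"blur_limit": 3},
    "mean_blur": {"blur_limit": 3},
    "gaussian_noise": {"var_limit": (10.0, 50.0)},
    "saturation": {"saturation": 0.5},
    "contrast": {"contrast": 0.5},
    "brightness": {"brightness": 0.3},
}


def sample_combination(rng, k=2):
    """'Random 2-transform combination': k distinct transforms chosen uniformly."""
    return rng.sample(sorted(AUGMENTATIONS), k)


def draw_params(name, rng):
    """Turn a table setting into one concrete value (interpretation assumed)."""
    cfg = AUGMENTATIONS[name]
    if name == "gaussian_blur":
        lo, hi = cfg["blur_limit"]
        return {"ksize": rng.choice([k for k in range(lo, hi + 1) if k % 2 == 1])}
    if name in ("median_blur", "mean_blur"):
        return {"ksize": cfg["blur_limit"]}
    if name == "gaussian_noise":
        lo, hi = cfg["var_limit"]
        return {"variance": rng.uniform(lo, hi)}
    # Colour adjustments: multiplicative factor drawn from [max(0, 1 - s), 1 + s].
    s = next(iter(cfg.values()))
    return {"factor": rng.uniform(max(0.0, 1.0 - s), 1.0 + s)}


rng = random.Random(42)
for name in sample_combination(rng):
    print(name, draw_params(name, rng))
```

Sampling without replacement guarantees that the two transforms in a combination are different, for example a blur paired with a brightness change, rather than the same blur applied twice.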

| Component | Specification |
|---|---|
| CPU | Intel Core i9-13900HX |
| GPU | NVIDIA GeForce RTX 4060 Laptop GPU |
| Operating system | Windows 10 |
| Memory | 32 GB |
| Hard disk | 1 TB |

| Method | mAP@[0.5:0.95] (%) | Precision (%) | Recall (%) | F1 Score |
|---|---|---|---|---|
| DeepLabV3 | 38.5 | 72.3 | 65.8 | 0.689 |
| YOLOv8 | 35.6 | 70.6 | 62.7 | 0.664 |
| Mask R-CNN | 38.1 | 73.5 | 67.6 | 0.704 |
| Mask R-CNN-CBAM | 39.5 | 75.9 | 70.4 | 0.731 |
| Mask R-CNN-BiFPN | 42.7 | 78.8 | 75.5 | 0.771 |
| Mask R-CNN-CBAM-BiFPN | 45.9 | 82.6 | 80.3 | 0.814 |
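The precision and recall gains quoted in conclusion (1), and the F1 column, can be checked directly against this comparison table. The sketch below transcribes the table, recomputes F1 = 2PR/(P + R), and reports each method's percentage-point gains over the baseline Mask R-CNN. The recomputed F1 values match the table to within 0.001. The small gap for Mask R-CNN-CBAM (0.730 recomputed versus 0.731 tabulated) presumably comes from rounding of P and R. The table reports mAP@[0.5:0.95], whose gain over the baseline is 7.8 points, so the 8.36-point mAP@0.5 gain cited in the conclusions cannot be derived from it. Names in the code are illustrative.

```python
# (mAP@[0.5:0.95] %, precision %, recall %, tabulated F1), transcribed from the table.
RESULTS = {
    "DeepLabV3": (38.5, 72.3, 65.8, 0.689),
    "YOLOv8": (35.6, 70.6, 62.7, 0.664),
    "Mask R-CNN": (38.1, 73.5, 67.6, 0.704),
    "Mask R-CNN-CBAM": (39.5, 75.9, 70.4, 0.731),
    "Mask R-CNN-BiFPN": (42.7, 78.8, 75.5, 0.771),
    "Mask R-CNN-CBAM-BiFPN": (45.9, 82.6, 80.3, 0.814),
}


def f1(precision_pct, recall_pct):
    """Harmonic mean of precision and recall, returned as a fraction."""
    p, r = precision_pct / 100, recall_pct / 100
    return 2 * p * r / (p + r)


def gains_over(method, baseline="Mask R-CNN"):
    """Percentage-point differences in (mAP, P, R) relative to the baseline."""
    return tuple(round(a - b, 1) for a, b in zip(RESULTS[method][:3], RESULTS[baseline][:3]))


for name, (_, p, r, f1_table) in RESULTS.items():
    print(f"{name:22s} F1 recomputed {f1(p, r):.3f}  tabulated {f1_table:.3f}")
print(gains_over("Mask R-CNN-CBAM-BiFPN"))  # (7.8, 9.1, 12.7)
```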
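The table's headline metric, mAP@[0.5:0.95], uses COCO-style notation. It averages average precision (AP) over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05. The sketch below shows that averaging for instance masks on a single image with one class. Masks are sets of pixel coordinates, predictions are matched greedily to ground truth in descending score order, and AP uses all-point interpolation. This is a simplified stand-in, not the authors' evaluation code. The official COCO evaluator (pycocotools) uses 101-point interpolation and accumulates results over many images, so its numbers will differ somewhat. All function names here are hypothetical.

```python
# IoU thresholds 0.50, 0.55, ..., 0.95.
THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def mask_iou(a, b):
    """IoU of two binary masks given as sets of (row, col) foreground pixels."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def average_precision(preds, gts, thr):
    """AP at one IoU threshold for one image and one class (all-point interpolation).

    preds: list of (score, mask); gts: list of ground-truth masks.
    """
    if not gts:
        return 0.0
    matched, hits = set(), []
    for _, mask in sorted(preds, key=lambda p: p[0], reverse=True):
        best, best_iou = None, thr
        for g, gt in enumerate(gts):
            if g not in matched:
                iou = mask_iou(mask, gt)
                if iou >= best_iou:
                    best, best_iou = g, iou
        if best is not None:
            matched.add(best)  # each ground-truth mask can be matched only once
        hits.append(best is not None)
    precisions, recalls, tp = [], [], 0
    for k, hit in enumerate(hits, start=1):
        tp += hit
        precisions.append(tp / k)
        recalls.append(tp / len(gts))
    ap, prev_recall = 0.0, 0.0
    for k, recall in enumerate(recalls):
        # Interpolated precision: best precision at this rank or any later rank.
        ap += (recall - prev_recall) * max(precisions[k:])
        prev_recall = recall
    return ap


def map_50_95(preds, gts):
    """Mean of AP over the ten IoU thresholds."""
    return sum(average_precision(preds, gts, t) for t in THRESHOLDS) / len(THRESHOLDS)


# Toy crack mask: a 5 x 5 square; the prediction is shifted one pixel to the right.
gt = {(r, c) for r in range(5) for c in range(5)}
pred = {(r, c) for r in range(5) for c in range(1, 6)}
print(round(mask_iou(pred, gt), 3))    # 0.667
print(map_50_95([(0.9, pred)], [gt]))  # 0.4 (counts as a hit only at 0.50 to 0.65)
```

The example shows why mAP@[0.5:0.95] is stricter than mAP@0.5. A one-pixel offset on a thin structure already drops IoU to about 0.67, so the same prediction scores AP = 1 at the 0.5 threshold but contributes only 0.4 to the averaged metric.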

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hu, Y.; Deng, N.; Ye, F.; Zhang, Q.; Yan, Y. Rock Surface Crack Recognition Based on Improved Mask R-CNN with CBAM and BiFPN. Buildings 2025, 15, 3516. https://doi.org/10.3390/buildings15193516