Use and Potential of AI in Assisting Surveyors in Building Retrofit and Demolition—A Scoping Review

Abstract

1. Introduction

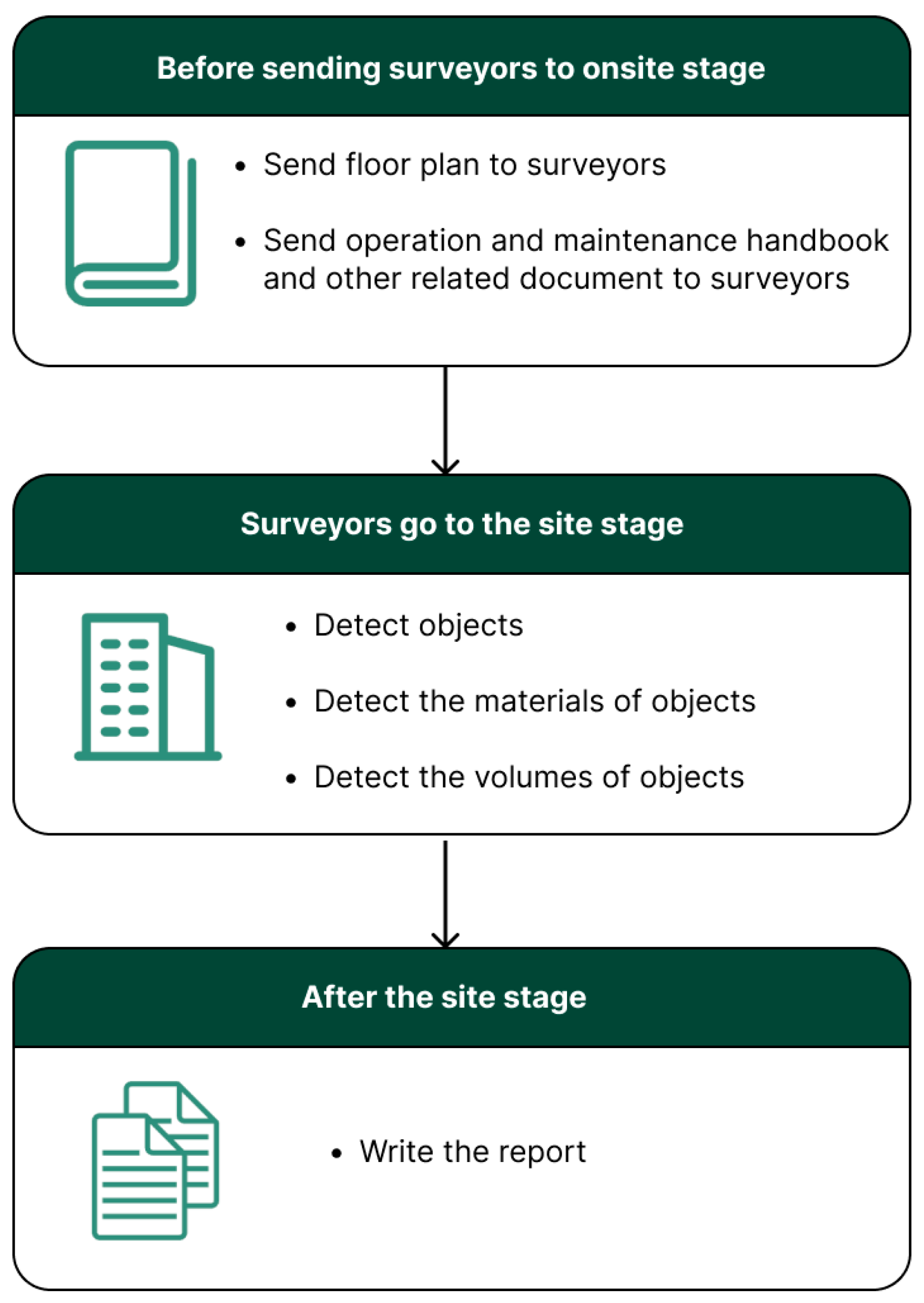

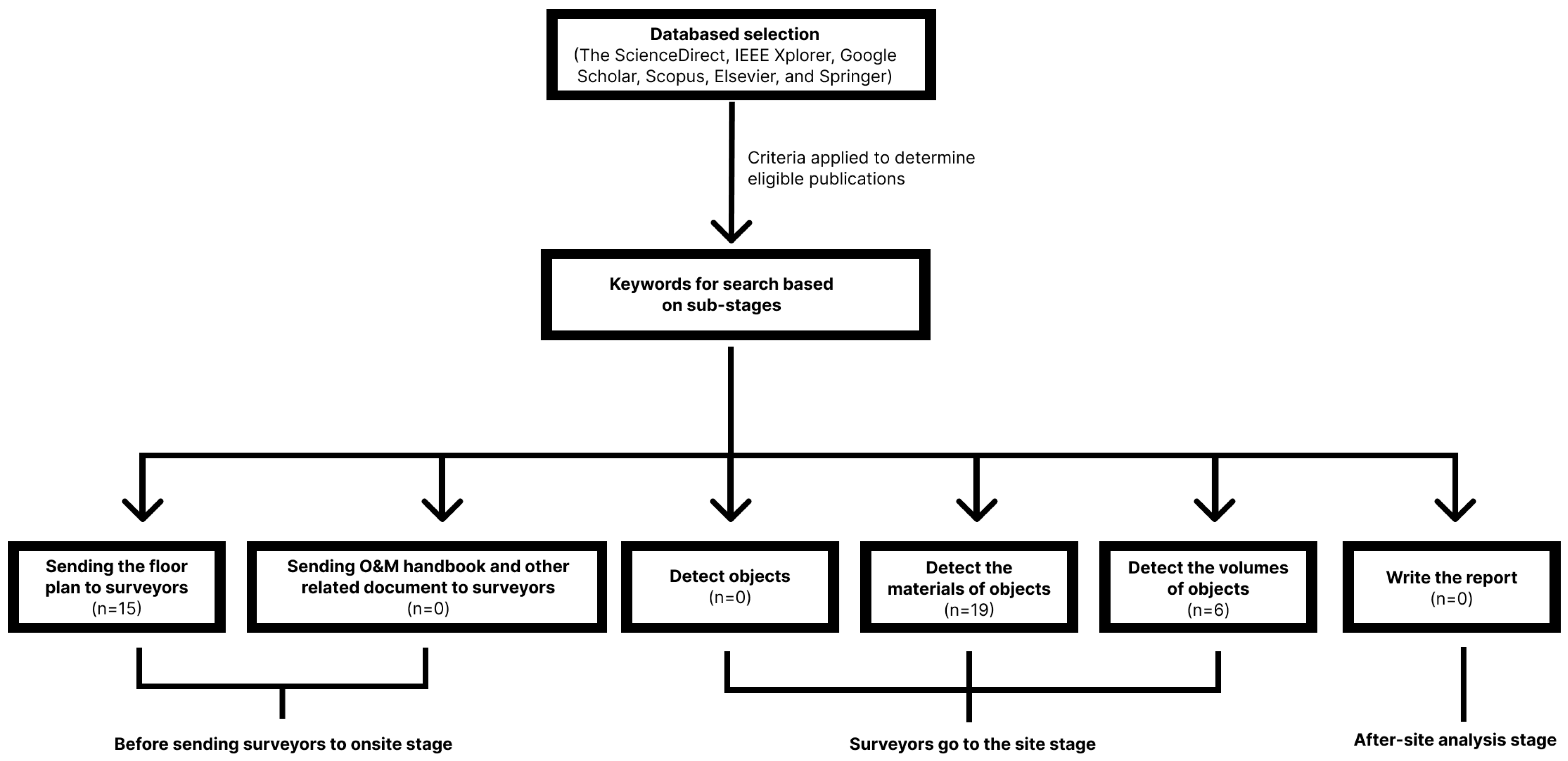

2. Methodology

2.1. Search Processes

- Articles, books, and conference papers in English related to the sub-processes

- Document is available in electronic form

- Published before 31 March 2025

- The paper should relate wholly or mainly to the structural elements of a building, but may also cover non-structural elements.

- The paper relates to the use of AI in PRA/PDA.
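The inclusion criteria above act as a conjunctive filter: a record is retained only if every criterion holds. A minimal sketch of that screening step follows, assuming a hypothetical `Record` type whose field names are invented here for illustration (the review does not describe its screening tooling).

```python
from dataclasses import dataclass
from datetime import date

# Cut-off taken from the criterion "Published before 31 March 2025".
CUTOFF = date(2025, 3, 31)
# Document types named in the first criterion.
ALLOWED_TYPES = {"article", "book", "conference paper"}


@dataclass
class Record:
    """Hypothetical bibliographic record; all field names are assumptions."""
    doc_type: str
    language: str
    available_electronically: bool
    published: date
    relates_to_subprocess: bool   # relevant to a PRA/PDA sub-process
    meets_element_scope: bool     # focuses mainly on the building elements in scope
    uses_ai_in_pra_pda: bool


def meets_inclusion_criteria(r: Record) -> bool:
    """Return True only if the record satisfies every listed criterion."""
    return (
        r.doc_type in ALLOWED_TYPES
        and r.language == "English"
        and r.relates_to_subprocess
        and r.available_electronically
        and r.published < CUTOFF      # strictly before the cut-off date
        and r.meets_element_scope
        and r.uses_ai_in_pra_pda
    )
```

Judgement-based criteria (scope and relevance) are modelled as booleans that a reviewer would set by hand; only the type, language, and date checks are mechanical.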

2.2. Scope of AI Topics

2.3. Preliminary Results

3. Discussion

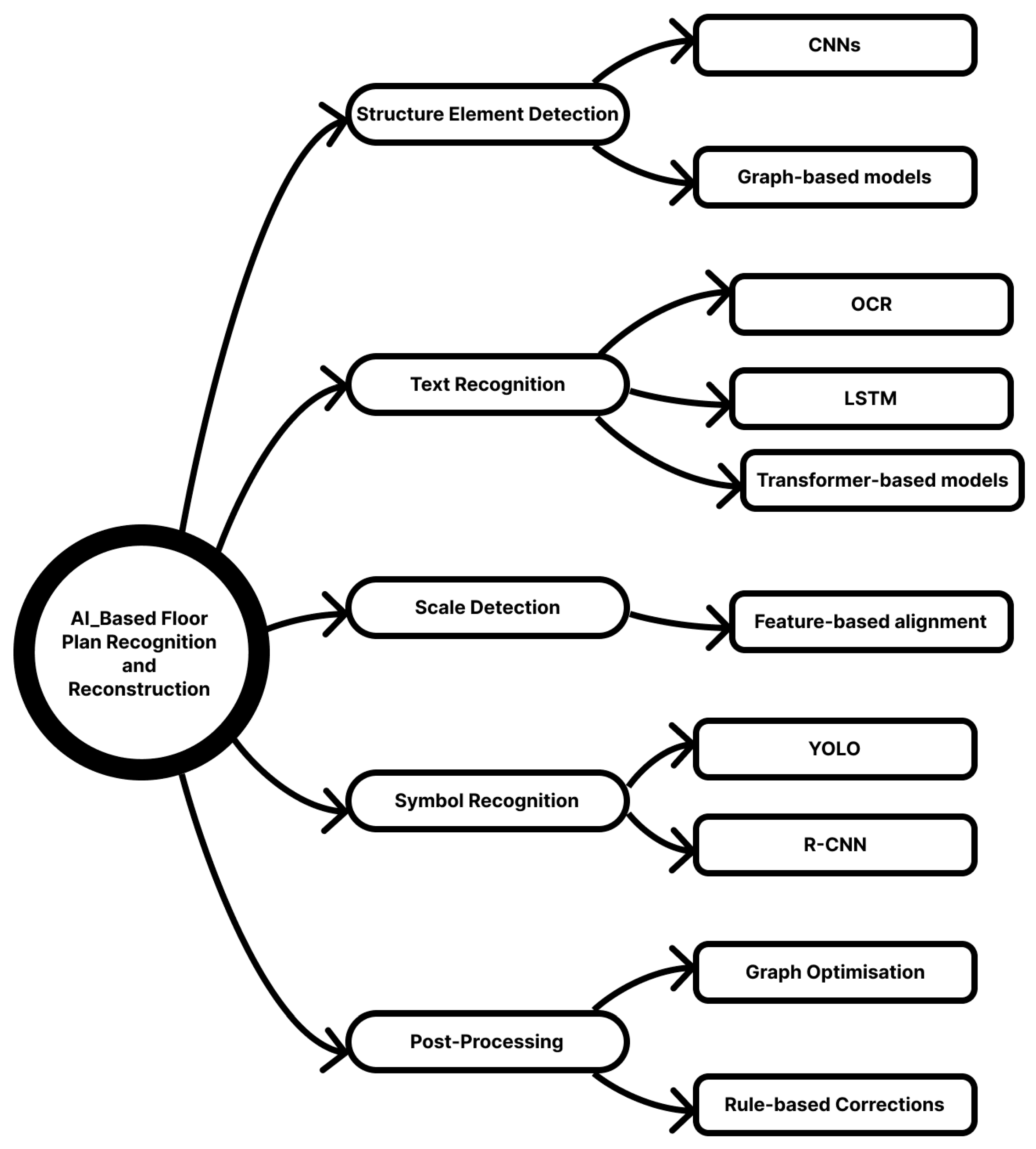

3.1. AI for PRA/PDA-Related Floor Plan Recognition

3.1.1. AI-Based Floor Plan Recognition and Reconstruction Processes

3.1.2. AI Technologies for Floor Plan Recognition and Transformation

Deep Learning-Based Image Recognition

Generative Adversarial Network (GAN)-Based Methodologies

Rule-Based Vectorization Methods for Automated Floor Plan Processing

Hybrid AI and Procedural Modeling

3.1.3. Decision-Making Flowchart to Select AI Used in PRA/PDA-Related Floor Plan Recognition

3.1.4. Summary

3.2. AI for PRA/PDA-Related Document Detection

3.2.1. Natural Language Processing (NLP)

3.2.2. Optical Character Recognition (OCR)

3.2.3. Computer Vision

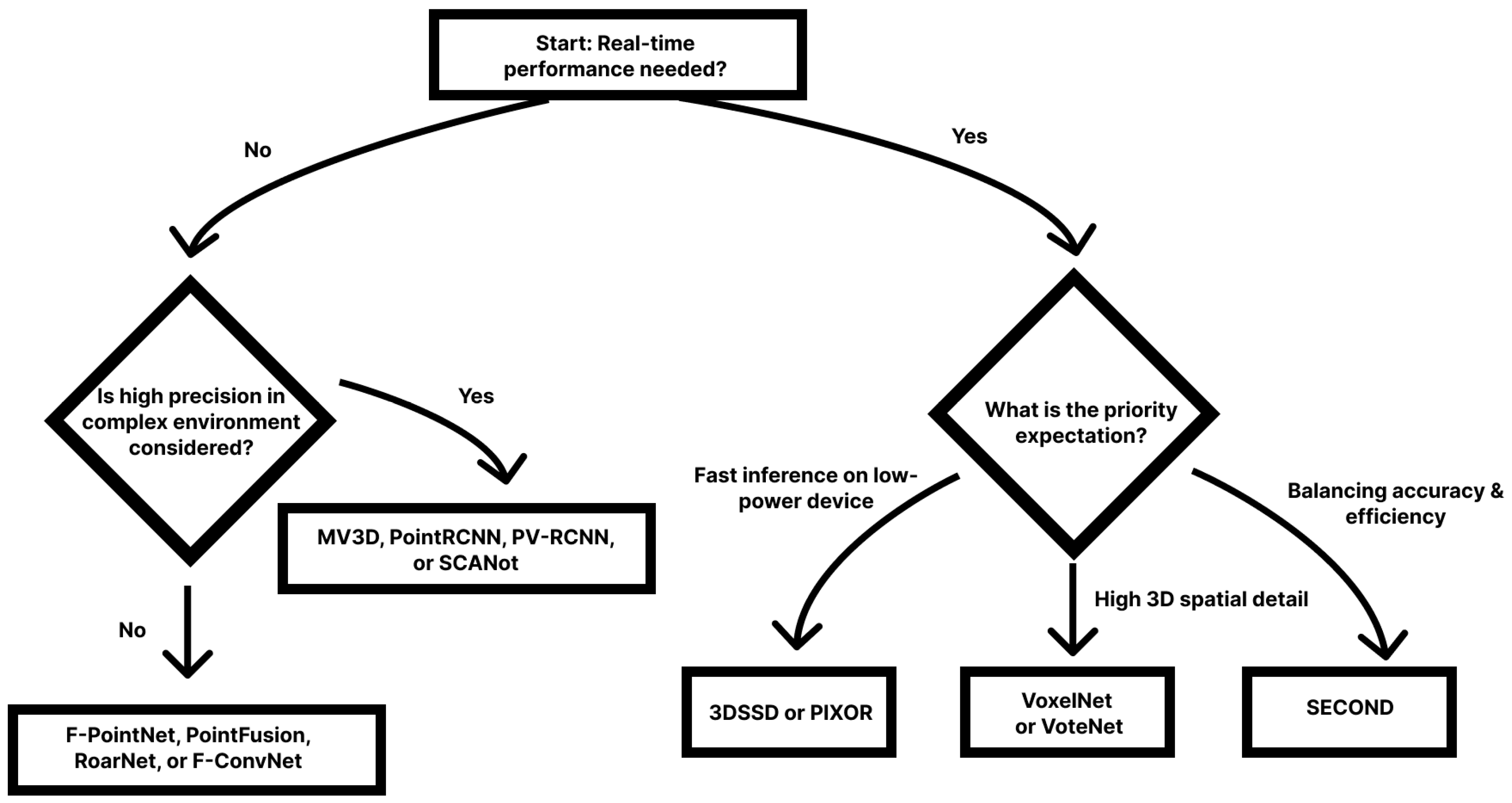

3.3. AI for PRA/PDA-Related Object Detection

3.3.1. Types of Input Data for Object Detection

3.3.2. AI and Machine Learning Techniques in PRA/PDA-Related Object Detection

Region Proposal-Based Methods

Single-Shot Detection Methods

3.3.3. Decision-Making Flowchart to Select AI Used in PRA/PDA-Related Object Detection

3.3.4. Object Detection Summary

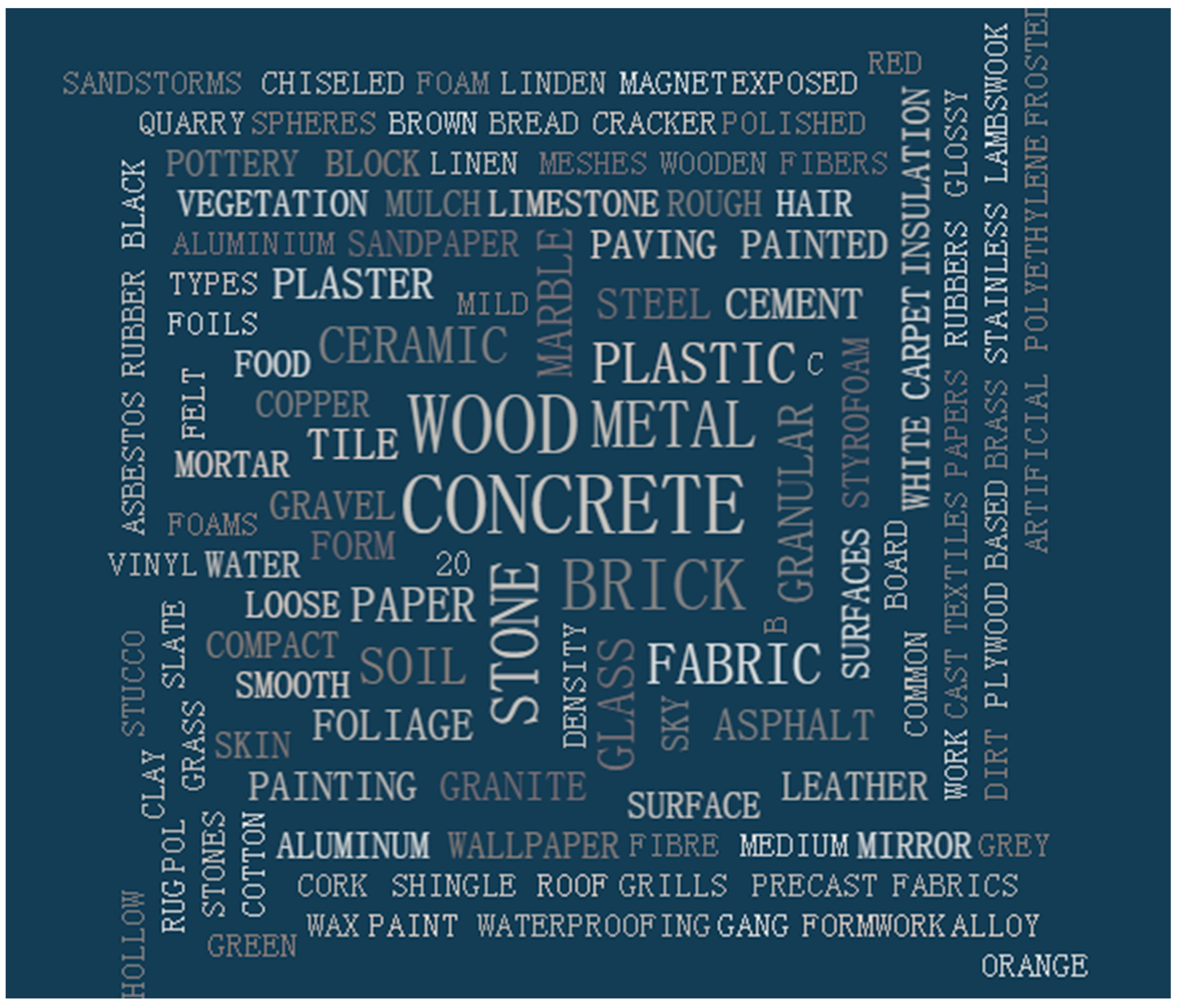

3.4. AI for PRA/PDA-Related Material Detection

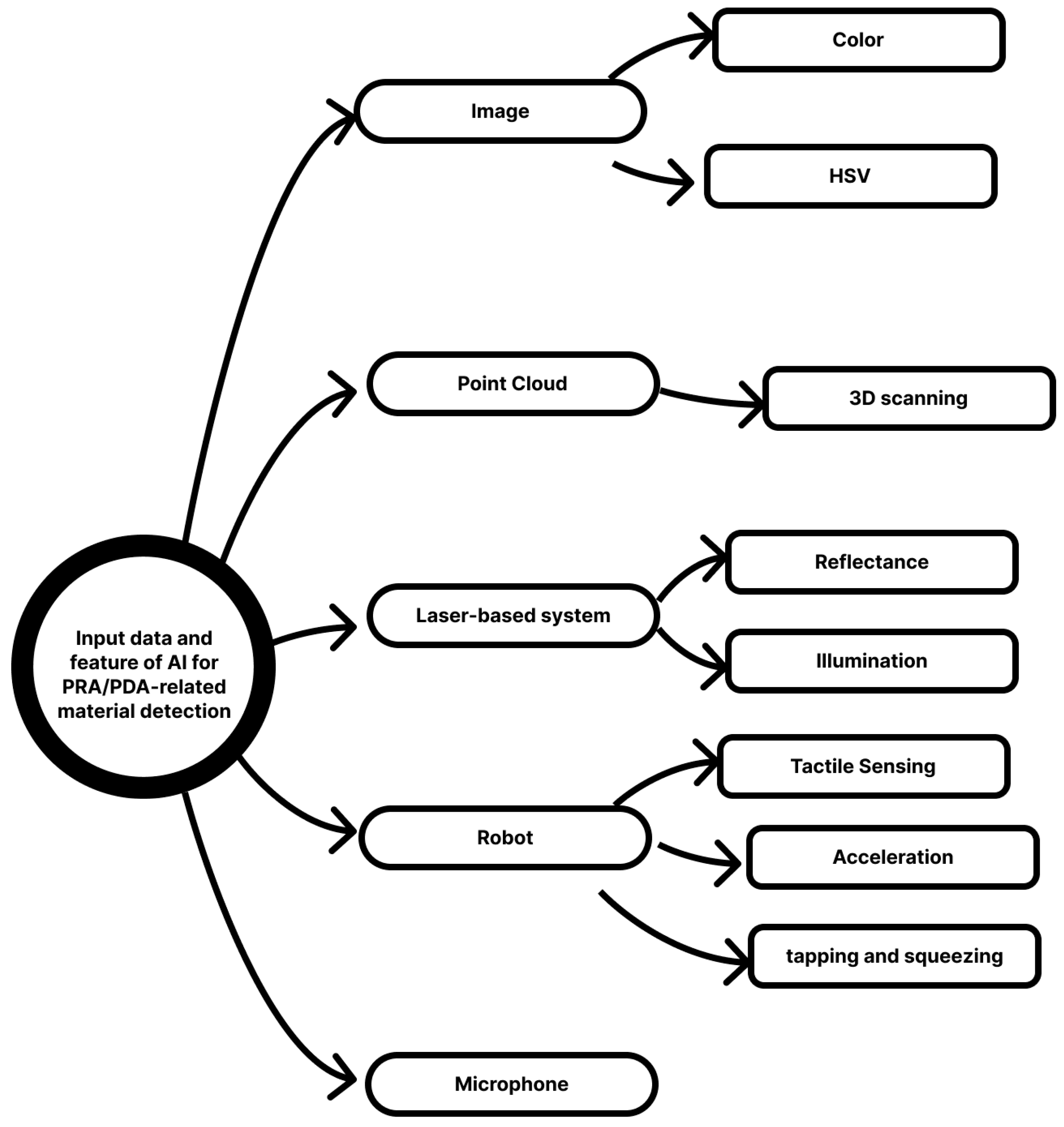

3.4.1. Input Data Types of Material Detection

Image and Camera

Point Cloud

Laser-Based Systems

Robot

Microphone

3.4.2. Features for Material Detection

Color

Hue-Saturation-Value (HSV) Color Model

Reflectance (Surface Roughness)

Illumination Effects

Features from Physical Robots

3.4.3. Algorithms for Material Detection

Support Vector Machines (SVM)

Neural Networks

Other Algorithms

3.4.4. Hazardous Materials Detection

3.4.5. Summary on AI-Driven Material Detection in PRA/PDA

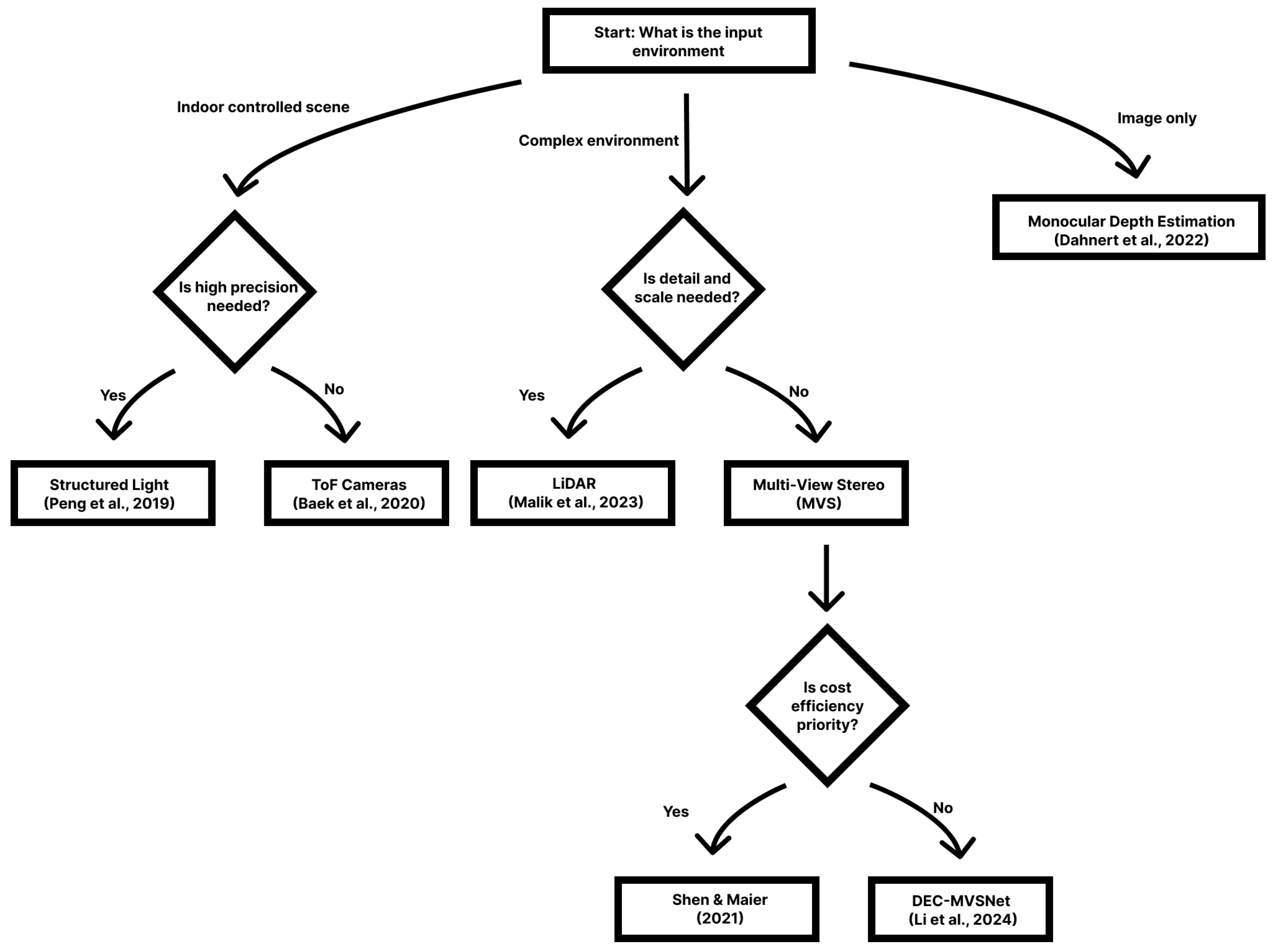

3.5. AI for PRA/PDA-Related Volume Detection

3.5.1. AI Technologies for Volume Detection in PRA/PDA

Structured Light

Time-of-Flight (ToF) Cameras

LiDAR

Multi-View Stereo (MVS)

Monocular Depth Estimation

3.5.2. Decision-Making Flowchart to Select AI Used in PRA/PDA-Related Volume Detection

3.5.3. Discussion on Volume Detection in PRA/PDA Using AI

3.6. Report Writing

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 3DSSD | 3D Single Shot Detector |
| AI | Artificial Intelligence |
| ANN | Artificial Neural Network |
| BEV | Bird’s Eye View |
| BIM | Building Information Modeling |
| BRDF | Bidirectional Reflectance Distribution Function |
| CBAM | Convolutional Block Attention Module |
| cGAN | Conditional Generative Adversarial Network |
| CNN | Convolutional Neural Network |
| DEC-MVSNet | Dilated ECA-Net MVSNet |
| EIA | Environmental Impact Assessment |
| EPC | Energy Performance Certificate |
| FCN | Fully Convolutional Network |
| FP | Feature Propagation |
| GAN | Generative Adversarial Network |
| GNN | Graph Neural Network |
| HSV | Hue-Saturation-Value |
| IFC | Industry Foundation Classes |
| KDP | Key Demolition Products |
| LiDAR | Light Detection and Ranging |
| LSTM | Long Short-Term Memory |
| MEP | Mechanical, Electrical, and Plumbing |
| MRF | Markov Random Field |
| MV3D | Multi-View 3D Object Detection Network |
| MVS | Multi-View Stereo |
| NFS | Neuro-Fuzzy System |
| NLP | Natural Language Processing |
| O&M | Operation and Maintenance |
| OCR | Optical Character Recognition |
| PDA | Pre-Demolition Auditing |
| PIXOR | Pixel-wise Oriented Region-based Detector |
| PRA | Pre-Retrofit Auditing |
| PVDF | Polyvinylidene Fluoride |
| R-CNN | Region-Based Convolutional Neural Network |
| RGB | Red-Green-Blue color model |
| RPN | Region Proposal Network |
| RT3D | Real-Time 3D Detector |
| SECOND | Sparsely Embedded Convolutional Detection |
| STFT | Short-Time Fourier Transform |
| SVM | Support Vector Machine |
| ToF | Time-of-Flight |
| VFE | Voxel Feature Encoding |
| VoteNet | Hough Voting-based Network for 3D Detection |
| VoxelNet | Voxel-based Neural Network for 3D Detection |
| VSA | Voxel Set Abstraction |
| YOLO | You Only Look Once |

Appendix A. Explanation of AI Topics and Their Definitions

| AI Topic | Description |
|---|---|
| Machine learning (ML) | ML enables systems to learn from data and improve their performance over time without being explicitly programmed. By identifying patterns and relationships in large datasets, ML algorithms can make predictions, classify data, or support decision-making. |
| Deep learning (DL) | DL is a subset of ML that uses artificial neural networks with multiple layers to process and analyze complex data. These networks simulate the human brain’s ability to recognize patterns and extract features from raw data such as images, audio, or text. |
| Neural networks (NN) | NNs are computational models inspired by the human brain’s structure and function. They consist of interconnected layers of nodes that process and transmit information. |
| Natural language processing (NLP) | NLP focuses on enabling computers to understand, interpret, and generate human language. NLP combines computational linguistics with ML to perform tasks such as text analysis, language translation, sentiment analysis, and automated report generation. It bridges the gap between human communication and machine understanding. |
| Pattern recognition | Pattern recognition is the ability of AI systems to identify patterns, regularities, or trends in data. This field often overlaps with ML and computer vision. |
| Machine vision | Machine vision enables machines to interpret and process visual data, such as images or video, to extract meaningful information. This involves techniques like image processing, object detection, and 3D modeling. |
| Expert systems | Expert systems are AI systems designed to simulate the decision-making abilities of a human expert in a specific domain. These systems rely on a knowledge base of facts and rules and use inference engines to solve problems or make decisions. |
| Fuzzy logic | Fuzzy logic is an approach to reasoning that allows for degrees of truth, or partial truth values, rather than binary true-or-false logic. It is particularly useful for handling uncertainty and imprecise information. |
| Genetic algorithms | Genetic algorithms are optimization techniques inspired by the principles of natural selection and evolution. These algorithms iteratively improve solutions to complex problems by simulating biological processes such as mutation, crossover, and selection. |
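The "degrees of truth" in the fuzzy logic entry can be made concrete with a membership function. The sketch below uses a triangular membership function and the common min/max/complement operators; these are one standard choice among several, and the function names are illustrative rather than taken from any reviewed paper.

```python
def triangular(x: float, a: float, b: float, c: float) -> float:
    """Membership degree in [0, 1] for a triangular fuzzy set.

    The set starts at a, peaks (degree 1.0) at b, and ends at c.
    Assumes a < b < c.
    """
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)   # rising edge
    return (c - x) / (c - b)       # falling edge


def fuzzy_and(u: float, v: float) -> float:
    """Conjunction of two truth degrees (minimum operator)."""
    return min(u, v)


def fuzzy_or(u: float, v: float) -> float:
    """Disjunction of two truth degrees (maximum operator)."""
    return max(u, v)


def fuzzy_not(u: float) -> float:
    """Complement of a truth degree."""
    return 1.0 - u
```

For example, `triangular(2.5, 0, 5, 10)` evaluates to `0.5`: the input is halfway up the rising edge, so the statement is "half true" rather than simply true or false.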

Appendix B. A Summary of the Papers Highlighted Relating to AI-Based Floor Plan Detection in PRA/PDA

| Paper | AI Method | Algorithm | Dataset | Results/Accuracy | Additional Notes |
|---|---|---|---|---|---|
| So et al. (1998) [36] | Rule-Based | N/A (Extrusion from 2D CAD) | N/A | N/A | Focus: Base technique |
| Or et al. (2005) [37] | Rule-Based | RAG + Symbol Recognition | N/A | N/A | Focus: 3D structure |
| Chandler (2006) [38] | Rule-Based | SVG (parsing to .obj) | N/A | N/A | Focus: Layered parsing |
| Moloo et al. (2011) [31] | Deep Learning | OpenCV + CNN | N/A | 85–90% accuracy, 4 s model generation | |
| Mello et al. (2012) [39] | Rule-Based | Hough + Contour Segmentation | N/A | N/A | Focus: Historical plans |
| Liu et al. (2015) [41] | Hybrid AI + Procedural Modelling | AI + Procedural Modelling | Rent3D (MRF + images) | 90% layout estimation | |
| Wu (2015) [40] | Rule-Based | IndoorOSM + Vectorization | N/A | N/A | Focus: GIS integration |
| Zak & Macadam (2017) [42] | Hybrid AI + Procedural Modelling | BIM + AI vision | N/A | 89% | Input quality dependency issues/challenges |
| Kim et al. (2021) [33] | GAN-Based | cGAN (for style normalization) | EAIS | 12% room detection, 95% room matching | |
| Park & Kim (2021) [35] | Hybrid AI + Procedural Modelling | 3DPlanNet (CNN + Heuristics) | 30 training samples | 95% wall, 97% object accuracy | |
| Vidanapathirana et al. (2021) [24] | GAN-Based | GAN + GNN for realistic textures | N/A | N/A | Focus: hand-drawn plans to immersive 3D |
| Cheng et al. (2022) [32] | Deep Learning | YOLOv5 + DRN + OCR | RJFM (2000 images) | 98% | |
| Pizarro et al. (2022) [29] | Hybrid AI + Procedural Modelling | CNN + RL refinement | N/A | 90%+ | Focus: multi-unit residential models |
| Barreiro et al. (2023) [30] | Deep Learning | CNN + Hough Transform | CubiCasa5k | 81% IoU (walls), 80% vector accuracy | |
| Usman et al. (2024) [34] | GAN-Based | GAN + CNN (Unity3D) | N/A | N/A | Focus: scene realism, not model vectorization |

Appendix C. Documentation That Can Be Accessed by Surveyors Before Going on Site and Its Context (Apart from Floor Plans)

| Documentation | Context Included |
|---|---|
| Operation and Maintenance (O&M) Book | Equipment, systems, components, maintenance schedules, past repairs, warranties, operational guidelines. |
| Building Survey Reports | Structural details, age, material specifications, condition assessment. |
| As-Built Drawings | Layout, dimensions, material specifications, utilities (HVAC, electrical, plumbing). |
| Material Inventory Report | Construction materials, hazardous substances (asbestos, lead), recyclables. |
| Energy Performance Certificates (EPCs) | Energy efficiency ratings, insulation, heating/cooling systems. |
| Hazardous Material Survey | Asbestos, lead paint, mold, and other hazardous substances. |
| Structural Assessment Report | Load-bearing elements, foundation integrity, risk of structural failure. |
| Environmental Impact Assessment (EIA) | Environmental effects, waste disposal, emissions, biodiversity impact. |
| Mechanical, Electrical, and Plumbing (MEP) Reports | Existing conditions of mechanical, electrical, and plumbing systems. |
| Fire Safety Reports | Fire resistance, evacuation routes, fire protection measures. |
| Occupancy and Usage Records | Historical/current usage, modifications, maintenance history. |
| Geotechnical Reports | Soil composition, ground stability, foundation conditions. |
| Waste Management Plan | Strategies for waste handling, recycling, and disposal during demolition or renovation. |
| Regulatory Compliance Documentation | Permits, adherence to local building codes, environmental regulations. |

Appendix D. An Overview on Potential AI Methods That Can Be Used in PRA/PDA-Related Object Detection

| Paper | AI Method | Sub-Method | Dataset | Result/Accuracy | Notes |
|---|---|---|---|---|---|
| Beltran et al. (2018) [58] | Single-Shot (BirdNet) | BEV + CNN | KITTI | SoTA at time | Less vertical accuracy |
| Chen et al. (2017) [44] | Deep Learning | Multi-View (MV3D) | KITTI | 99.1% recall (IoU 0.25) | High accuracy, not real-time |
| Lehner et al. (2019) [56] | Voxel-based | Patch Refinement | N/A | N/A | Local voxel refinement |
| Liang et al. (2019) [50] | Deep Learning | Multi-Sensor Fusion | KITTI | N/A | Robust in occlusion |
| Lu et al. (2019) [51] | Attention-Based DL (SCANet) | Multi-Level Fusion | KITTI | SoTA, 11 FPS | Computationally complex |
| Qi et al. (2019) [45] | Point-based | VoteNet | ScanNet, SUN RGB-D | SoTA | High complexity |
| Shi et al. (2019) [48] | Segmentation-based | PointRCNN | KITTI | High recall | Heavy computation |
| Shi et al. (2020) [25] | Hybrid | PV-RCNN | KITTI, Waymo | High efficiency | Combines voxel + point |
| Shin et al. (2019) [54] | Hybrid (RoarNet) | Image + LiDAR Fusion | KITTI | High accuracy | Works with unsynced sensors |
| Wang et al. (2019) [55] | Deep Learning | F-ConvNet | KITTI, SUN RGB-D | Top-ranked | Sliding frustum-based |
| Xu et al. (2018) [46] | Deep Learning | PointFusion | KITTI, SUN RGB-D | SoTA on datasets | High computation |
| Yan et al. (2018) [59] | Sparse CNN | SECOND | N/A | N/A | Faster than VoxelNet |
| Yang et al. (2018) [57] | Single-Shot (PIXOR) | BEV-based | KITTI | 28 FPS | Loses height info |
| Yang et al. (2020) [49] | Point-based | 3DSSD | KITTI, nuScenes | >25 FPS | Lacks detail on small objects |
| Zeng et al. (2018) [52] | Deep Learning | Real-time 3D (RT3D) | KITTI | Fastest at time (11.1 FPS) | Real-time capable |
| Zhao et al. (2018) [53] | Attention-based | Point-SENet | N/A | Better than F-PointNet | Focus on feature quality |
| Zhou & Tuzel (2018) [47] | Voxel-based | VoxelNet | N/A | N/A | Heavy computation |
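Several results in this table are stated relative to an intersection-over-union (IoU) threshold, for example recall at IoU 0.25. As a rough illustration of what such a figure measures, the sketch below computes IoU for axis-aligned 3D boxes and a simplified recall. It is not any paper's evaluation code: real benchmarks such as KITTI use oriented boxes and stricter matching rules, and the names here are illustrative.

```python
from typing import Sequence, Tuple

# Axis-aligned box: (xmin, ymin, zmin, xmax, ymax, zmax). Orientation is ignored.
Box = Tuple[float, float, float, float, float, float]


def box_volume(b: Box) -> float:
    """Volume of an axis-aligned box (zero if the box is degenerate)."""
    return (max(0.0, b[3] - b[0])
            * max(0.0, b[4] - b[1])
            * max(0.0, b[5] - b[2]))


def iou_3d(a: Box, b: Box) -> float:
    """Intersection volume divided by union volume."""
    inter = 1.0
    for i in range(3):
        overlap = min(a[i + 3], b[i + 3]) - max(a[i], b[i])
        inter *= max(0.0, overlap)   # no overlap on any axis gives zero
    union = box_volume(a) + box_volume(b) - inter
    return inter / union if union > 0 else 0.0


def recall_at_iou(ground_truth: Sequence[Box],
                  predictions: Sequence[Box],
                  threshold: float) -> float:
    """Fraction of ground-truth boxes overlapped by at least one prediction.

    Simplification: a prediction may match several ground-truth boxes.
    """
    if not ground_truth:
        return 0.0
    hits = sum(
        1 for g in ground_truth
        if any(iou_3d(g, p) >= threshold for p in predictions)
    )
    return hits / len(ground_truth)
```

A lower threshold counts looser overlaps as hits, which is why a recall quoted at IoU 0.25 is not directly comparable with one quoted at 0.5 or 0.7.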

Appendix E. An Overview on Existing AI-Driven Material Detection Methods Used in PRA/PDA

| Reference | Material | Main Input | Algorithm | Features | Accuracy |
|---|---|---|---|---|---|
| Penumuru et al., 2020 [82] | Aluminum, Copper, Medium-density fiberboard, Mild steel, Sandpaper, Styrofoam, Cotton, and Linen | Camera | SVM | RGB | 100% with small sample |
| Strese et al., 2017 [69] | Meshes, Stones, Glossy surfaces, Wooden surfaces, Rubbers, Fibers, Foams, Foils/Papers, Fabrics/Textiles | Robot touch, Camera, Microphone (sound signals), and FSR | Naive Bayes classifier | Acceleration, Friction force, Sound, Image | 74% |
| Dimitrov et al., 2014 [60] | Asphalt, Brick, Cement-Granular, Cement-Smooth, Concrete-Cast, Concrete-Precast, Foliage, Form Work, Grass, Gravel, Marble, Metal-Grills, Paving, Soil-Compact, Soil-Vegetation, Soil-Loose, Soil-Mulch, Stone-Granular, Stone-Limestone, Wood | Single image | SVM | Edges, Spots, Waves, Hue-Saturation-Value (HSV) color values | 97.1% |
| Zhu et al., 2010 [74] | Concrete | Image | ANN | Color, Texture | Around 80% |
| Leung and Malik, 2001 [70] | Felt, Rough plastic, Sandpaper, Plaster, Rough paper, Roof shingle, Cork, Rug, Styrofoam, Lambswool, Quarry tile, Insulation plaster, Slate, Painted spheres, Brick, Concrete, Brown bread, Cracker | Image | K-means | Reflectance, Surface normal | 97% |
| Bian et al., 2018 [83] | Brick, Carpet, Ceramic, Fabric, Foliage, Food, Hair, Leather, Metal, Mirror, Painted, Paper, Plastic, Polished stone, Skin, Sky, Stone, Tile, Wallpaper, Water, Wood, Other | Image | CNN, Softmax, SVM, Random Forest | N/A | 80–85% |
| Liu and Gu, 2014 [26] | Metal, Aluminum, Alloy, Steel, Stainless steel, Brass, Copper, Plastic, Ceramic, Fabric, Wood | Image | SVM | Illumination | 51–61% for dented aluminum and stainless steel plates; 94–97% for varnished and unvarnished paints |
| Bell et al., 2013 [68] | Wood, Tile, Marble, Granite | Image | Supervised learning classification | Material parameters (reflectance, material names), Texture information (surface normals, rectified textures), Contextual information (scene category and object names) | Below 50% |
| Bell et al., 2015 [84] | Brick, Carpet, Ceramic, Fabric, Foliage, Food, Glass, Hair, Leather, Metal, Mirror, Painted, Paper, Plastic, Polished stone, Skin, Sky, Stone, Tile, Wallpaper, Water, Wood | Image | CNN | N/A | 85.2% |
| Yuan et al., 2020 [73] | Concrete, Mortar, Stone, Metal, Painting, Wood, Plaster, Pottery, Ceramic | A terrestrial laser scanner (TLS) with a built-in camera | SVM | Material reflectance, HSV color values, and Surface roughness | 96.7% |
| Han and Golparvar-Fard, 2015 [72] | Asphalt, Brick, Granular and Smooth Cement-based surfaces, Concrete, Foliage, Formwork (Gang Form and Plywood Form), Gravel, Insulation, Marble, Metal, Paving, Soil (Compact, Dirt, Vegetation, Loose, and Mulch), Stone (Granular, Limestone), Waterproofing Paint, and Wood | 4D Building Information Models (BIM), 3D point cloud models | SVM | RGB | Average accuracy of 92.4% |
| Mahami et al., 2021 [87] | Sandstorms, Paving, Gravel, Stone, Cement-granular, Brick, Soil, Wood, Asphalt, Clay hollow block, and Concrete block | Camera, Microphone | VGG16 | N/A | 97.35% |
| Mengiste et al., 2024 [96] | Concrete surface, Chiseled concrete, Mortar plaster | Image | CNN and GLCM | Textural and CNN | 95% for the limited (208 images) data set; 71% for the very small (70 images) data set |
| Li et al., 2022 [88] | Wood, Metal, Fabric, Marble, Ceramic, Glass, Leather, Plastic, Rubber, Granite, Wax | 3D model and Point cloud | ResNet-50 | N/A | 76.82% |
| Erickson et al., 2020 [85] | Ceramic, Fabric, Foam, Glass, Metal, Paper, Plastic, and Wood | A spectrometer and near-field camera | CNN (ImageNet) | N/A | 80.0% |
| Olgun and Türkoğlu, 2022 [62] | Aluminum, Black fabric, Frosted glass, Glass, Pottery, Granite, Linden, Magnet, Polyethylene, and Artificial marble | Laser light | LSTM deep learning model | N/A | An average of 93.63% |
| Lu et al., 2018 [61] | Exposed concrete, Concrete-green painting, Grey brick, Concrete-white painting, Orange brick, Red brick, White brick, Wood | Image | Neuro-Fuzzy System (NFS) | Projection results from two directions, Ratio values, RGB color values, Roughness values, and Hue value | 88–96% |
| Son et al., 2014 [64] | Concrete, Steel, and Wood | Image | Compare | N/A | 82.28–96.70% |
| Erickson et al., 2019 [65] | Plastic, Fabric, Paper, Wood, and Metal | Micro spectrometers | Neural network | N/A | 94.6% when given only one spectral sample per object; 79.1% via leave-one-object-out cross-validation |
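Several of the listed methods use HSV color values as input features. As a minimal sketch of how such a feature could be derived from RGB pixels, the function below averages HSV over an image patch using Python's standard `colorsys` module. Treating hue as an angle and taking its circular mean is an assumption made here for correctness near the red wrap-around; none of the reviewed papers is claimed to compute the feature this way.

```python
import colorsys
import math
from typing import Iterable, Tuple


def mean_hsv(pixels: Iterable[Tuple[int, int, int]]) -> Tuple[float, float, float]:
    """Average HSV of 8-bit RGB pixels; each returned component lies in [0, 1].

    Hue is averaged on the color circle so that hues just either side of
    red (near 0.0 and near 1.0) average to red rather than to cyan.
    Gray pixels have no defined hue; colorsys reports hue 0.0 for them,
    so a patch dominated by gray will bias the mean hue towards red.
    """
    sin_sum = cos_sum = s_sum = v_sum = 0.0
    count = 0
    for r, g, b in pixels:
        h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        angle = 2.0 * math.pi * h
        sin_sum += math.sin(angle)
        cos_sum += math.cos(angle)
        s_sum += s
        v_sum += v
        count += 1
    if count == 0:
        raise ValueError("at least one pixel is required")
    mean_h = (math.atan2(sin_sum, cos_sum) / (2.0 * math.pi)) % 1.0
    return mean_h, s_sum / count, v_sum / count
```

The resulting three-component vector could then be passed, alongside texture or reflectance features, to a classifier such as an SVM.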

Appendix F. A Summary of Papers Highlighted Relating to AI-Based Volume Detection in PRA/PDA

| Paper | Technology Used | Input Data | Object Volume Detected | Accuracy Rate |
|---|---|---|---|---|
| Shen and Maier (2021) [93] | Multi-View Stereo (MVS) | Multiple camera images, 3D tube midline reconstruction | Tube geometry during freeform bending | 0.30–0.61% |
| Peng et al. (2019) [89] | Structured Light | Laser line grid projector images, IHED deep learning model | Box volume measurement | Measures box dimensions ranging from 100 mm to 1800 mm with an accuracy of ±5.0 mm |
| Baek et al. (2020) [90] | Time-of-Flight (ToF) Cameras | Infrared light pulses, depth data from ToF sensors | Distance measurement for surfaces | Error correction improves precision significantly |
| Malik et al. (2023) [91] | LiDAR | LiDAR-based depth maps, transient neural radiance fields (NeRF) | 3D scene reconstruction and synthetic view generation | High precision in 3D rendering |
| Li et al. (2024) [92] | Multi-View Stereo (MVS) | Multi-view images, DEC-MVSNet, deep learning features | 3D structural reconstruction | Higher completeness and quality than traditional MVS |
| Dahnert et al. (2022) [95] | Monocular Depth Estimation | Single RGB image, convolutional networks for depth estimation | 3D scene reconstruction | Significant improvement over separate task processing |
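Most of the technologies in this table produce depth or height measurements rather than a volume directly. As a hedged illustration of one way such data can be turned into a volume, the sketch below integrates a regular grid of heights above a reference plane. The function name, the grid representation, and the top-down viewing assumption are introduced here for illustration and are not taken from any of the reviewed papers.

```python
from typing import Sequence


def volume_from_height_map(heights: Sequence[Sequence[float]],
                           cell_size_m: float) -> float:
    """Approximate the volume (m^3) under a height map.

    heights: rows of per-cell heights in metres above a reference plane,
             e.g. reference depth minus measured depth from a sensor
             looking straight down (an assumption of this sketch).
    cell_size_m: side length of each square grid cell in metres.

    Negative heights (noise below the plane) are clamped to zero.
    """
    if cell_size_m <= 0:
        raise ValueError("cell_size_m must be positive")
    cell_area = cell_size_m * cell_size_m
    total_height = sum(max(0.0, h) for row in heights for h in row)
    return total_height * cell_area
```

This treats each grid cell as a column of constant height, so accuracy depends on grid resolution and on how well the sensor view avoids occlusion. Overhangs and hollow objects are not handled.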

Appendix G. Context for Sections in PRA/PDA Reports

| Section | Description |
|---|---|
| Executive summary | Provides a succinct overview of the project’s scope, objectives, key findings, and actionable recommendations to facilitate quick comprehension and decision-making. |
| Project introduction | Outlines project background, objectives, audit boundaries, and detailed information on the structure or site, including geographical location, historical context, usage history, age, and current physical condition. |
| Methodology | Describes methodologies used, including assessment methods, techniques, instruments, and adherence to established standards (e.g., ISO guidelines) to ensure transparency, reproducibility, and validity. |
| Building characterization | Details structural and architectural features such as floor plans, elevations, total area, construction materials, and historical modifications to enable precise auditing. |
| Inventory of materials | Systematically identifies, quantifies, and classifies all materials within the structure (e.g., concrete, timber, metals, plastics), supporting resource recovery and waste management planning. |
| Hazardous material identification | Systematically identifies hazardous substances (e.g., asbestos, lead-based paints, mercury, PCBs, mold) for effective hazard mitigation, compliance, and safety planning. |
| Waste management and resource recovery analysis | Evaluates potential opportunities for material reuse, recycling, or recovery, providing detailed recommendations for selective demolition and handling to enhance resource efficiency and minimize environmental impacts. |
| Environmental and economic assessment | Assesses environmental impacts, mitigation measures, and economic considerations, including cost-benefit analyses, to balance sustainability objectives and project viability. |
| Regulatory compliance | Reviews applicable regulatory frameworks (local, national, international guidelines) to ensure recommendations align with legal and industry standards, thereby minimizing legal and regulatory risks. |
| Conclusions and strategic recommendations | Synthesizes audit findings into prioritized, actionable recommendations addressing safety, sustainability, economic efficiency, and regulatory compliance for subsequent project phases. |
| Appendix | Includes supplementary materials (e.g., detailed inventories, sampling methods, lab analyses, site photographs, reference documentation) supporting and validating the main report findings. |

References

- Amoah, C.; Smith, J. Barriers to the Green Retrofitting of Existing Residential Buildings. J. Facil. Manag. 2024, 22, 194–209.

- Iwuanyanwu, O.; Gil-Ozoudeh, I.; Okwandu, A.C.; Ike, C.S. Retrofitting Existing Buildings for Sustainability: Challenges and Innovations. Eng. Sci. Technol. J. 2024, 5, 2616–2631.

- Miran, F.D.; Husein, H.A. Introducing a Conceptual Model for Assessing the Present State of Preservation in Heritage Buildings: Utilizing Building Adaptation as an Approach. Buildings 2023, 13, 859.

- Farneti, E.; Cavalagli, N.; Venanzi, I.; Salvatore, W.; Ubertini, F. Residual Service Life Prediction for Bridges Undergoing Slow Landslide-Induced Movements Combining Satellite Radar Interferometry and Numerical Collapse Simulation. Eng. Struct. 2023, 293, 116628.

- Grecchi, M. Building Renovation: How to Retrofit and Reuse Existing Buildings to Save Energy and Respond to New Needs; Springer Nature: Berlin/Heidelberg, Germany, 2022.

- Wang, Z.; Liu, Q.; Zhang, B. What Kinds of Building Energy-Saving Retrofit Projects Should Be Preferred? Efficiency Evaluation with Three-Stage Data Envelopment Analysis (DEA). Renew. Sustain. Energy Rev. 2022, 161, 112392.

- Geldenhuys, H.J. The Development of an Implementation Framework for Green Retrofitting of Existing Buildings in South Africa. Ph.D. Thesis, Stellenbosch University, Stellenbosch, South Africa, 2017. Available online: https://scholar.sun.ac.za/handle/10019.1/100867 (accessed on 26 March 2025).

- Zaharieva, R.; Kancheva, Y.; Evlogiev, D.; Dinov, N. Pre-Demolition Audit as a Tool for Appropriate CDW Management–a Case Study of a Public Building. E3S Web Conf. 2024, 550, 01045.

- Ann, T.W.; Wong, I.; Mok, K.S. Effectiveness and Barriers of Pre-Refurbishment Auditing for Refurbishment and Renovation Waste Management. Environ. Chall. 2021, 5, 100231.

- Xu, K.; Shen, G.Q.; Liu, G.; Martek, I. Demolition of Existing Buildings in Urban Renewal Projects: A Decision Support System in the China Context. Sustainability 2019, 11, 491.

- Pan, Y.; Zhang, L. Roles of Artificial Intelligence in Construction Engineering and Management: A Critical Review and Future Trends. Autom. Constr. 2021, 122, 103517.

- Abioye, S.O.; Oyedele, L.O.; Akanbi, L.; Ajayi, A.; Delgado, J.M.D.; Bilal, M.; Akinade, O.O.; Ahmed, A. Artificial Intelligence in the Construction Industry: A Review of Present Status, Opportunities and Future Challenges. J. Build. Eng. 2021, 44, 103299.

- Li, Y.; Chen, H.; Yu, P.; Yang, L. A Review of Artificial Intelligence in Enhancing Architectural Design Efficiency. Appl. Sci. 2025, 15, 1476.

- Beyan, E.V.P.; Rossy, A.G.C. A Review of AI Image Generator: Influences, Challenges, and Future Prospects for Architectural Field. J. Artif. Intell. Archit. 2023, 2, 53–65.

- Rane, N. Role of ChatGPT and Similar Generative Artificial Intelligence (AI) in Construction Industry. 2023. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4598258 (accessed on 26 March 2025).

- Momade, M.H.; Durdyev, S.; Estrella, D.; Ismail, S. Systematic Review of Application of Artificial Intelligence Tools in Architectural, Engineering and Construction. Front. Eng. Built Environ. 2021, 1, 203–216.

- Salehi, H.; Burgueño, R. Emerging Artificial Intelligence Methods in Structural Engineering. Eng. Struct. 2018, 171, 170–189.

- Tapeh, A.T.G.; Naser, M.Z. Artificial Intelligence, Machine Learning, and Deep Learning in Structural Engineering: A Scientometrics Review of Trends and Best Practices. Arch. Comput. Methods Eng. 2023, 30, 115–159.

- Thai, H.-T. Machine Learning for Structural Engineering: A State-of-the-Art Review. Structures 2022, 38, 448–491.

- Mulero-Palencia, S.; Álvarez-Díaz, S.; Andrés-Chicote, M. Machine Learning for the Improvement of Deep Renovation Building Projects Using As-Built BIM Models. Sustainability 2021, 13, 6576.

- Ajayi, S.O.; Oyedele, L.O.; Bilal, M.; Akinade, O.O.; Alaka, H.A.; Owolabi, H.A. Critical Management Practices Influencing On-Site Waste Minimization in Construction Projects. Waste Manag. 2017, 59, 330–339.

- Baset, A.; Jradi, M. Data-Driven Decision Support for Smart and Efficient Building Energy Retrofits: A Review. Appl. Syst. Innov. 2025, 8, 5.

- Yamusa, M.; Dauda, J.; Ajayi, S.; Saka, A.B.; Oyegoke, A.S.; Adebisi, W.; Jagun, Z.T. Automated Compliance Checking in the AEC Industry: A Review of Current State, Opportunities and Challenges; Social Science Research Network: Rochester, NY, USA, 2024.

- Vidanapathirana, M.; Wu, Q.; Furukawa, Y.; Chang, A.X.; Savva, M. Plan2Scene: Converting Floorplans to 3D Scenes. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; IEEE: New York, NY, USA, 2021; pp. 10728–10737.

- Shi, S.; Guo, C.; Jiang, L.; Wang, Z.; Shi, J.; Wang, X.; Li, H. PV-RCNN: Point-Voxel Feature Set Abstraction for 3D Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; IEEE: New York, NY, USA, 2020; pp. 10526–10535.

- Liu, C.; Gu, J. Discriminative Illumination: Per-Pixel Classification of Raw Materials Based on Optimal Projections of Spectral BRDF. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 86–98.

- Panchalingam, R.; Chan, K.C. A State-of-the-Art Review on Artificial Intelligence for Smart Buildings. Intell. Build. Int. 2021, 13, 203–226.

- Aguilar, J.; Garces-Jimenez, A.; R-Moreno, M.D.; García, R. A Systematic Literature Review on the Use of Artificial Intelligence in Energy Self-Management in Smart Buildings. Renew. Sustain. Energy Rev. 2021, 151, 111530.

- Pizarro, P.N.; Hitschfeld, N.; Sipiran, I.; Saavedra, J.M. Automatic Floor Plan Analysis and Recognition. Autom. Constr. 2022, 140, 104348.

- Barreiro, A.C.; Trzeciakiewicz, M.; Hilsmann, A.; Eisert, P. Automatic Reconstruction of Semantic 3D Models from 2D Floor Plans. In Proceedings of the 2023 18th International Conference on Machine Vision and Applications (MVA), Hamamatsu, Japan, 23–25 July 2023; IEEE: New York, NY, USA, 2023; pp. 1–5.

- Moloo, R.K.; Dawood, M.A.S.; Auleear, A.S. 3-Phase Recognition Approach to Pseudo 3D Building Generation from 2D Floor Plan. arXiv 2011, arXiv:1107.3680.

- Cheng, X.; Ding, X.; Zhang, J.; Liu, M.; Chang, K.; Wang, J.; Zhou, C.; Yuan, J. Multi-Stage Floor Plan Recognition and 3D Reconstruction. In Proceedings of the 8th International Conference on Computing and Artificial Intelligence, Tianjin, China, 18–21 March 2022; ACM: New York, NY, USA, 2022; pp. 387–393.

- Kim, S.; Park, S.; Kim, H.; Yu, K. Deep Floor Plan Analysis for Complicated Drawings Based on Style Transfer. J. Comput. Civ. Eng. 2021, 35, 04020066.

- Usman, M.; Almulhim, A.; Alaseri, M.; Alzahran, M.; Luqman, H.; Anwar, S. Sketch–to–3D: Transforming Hand-Sketched Floorplans into 3D Layouts. In Proceedings of the 2024 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Perth, Australia, 27–29 November 2024; IEEE: New York, NY, USA, 2024; pp. 359–366.

- Park, S.; Kim, H. 3DPlanNet: Generating 3D Models from 2D Floor Plan Images Using Ensemble Methods. Electronics 2021, 10, 2729.

- So, C.; Baciu, G.; Sun, H. Reconstruction of 3D Virtual Buildings from 2D Architectural Floor Plans. In Proceedings of the ACM Symposium on Virtual Reality Software and Technology, Taipei, Taiwan, 2–5 November 1998; ACM: New York, NY, USA, 1998; pp. 17–23.

- Or, S.; Wong, K.-H.; Yu, Y.; Chang, M.M.; Kong, H. Highly Automatic Approach to Architectural Floorplan Image Understanding & Model Generation. Pattern Recognit. 2005, 25–32.

- Chandler, M.; Genetti, J.; Chappell, G. 3D Interior Model Extrusion from 2D Floor Plans. 2006. Available online: https://www.cs.uaf.edu/2012/ms_project/MS_CS_Matt_Chandler.pdf (accessed on 26 March 2025).

- Mello, C.A.B.; Costa, D.C.; Santos, T.J.D. Automatic Image Segmentation of Old Topographic Maps and Floor Plans. In Proceedings of the 2012 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Seoul, Republic of Korea, 14–17 October 2012; IEEE: New York, NY, USA, 2012; pp. 132–137.

- Wu, H. Integration of 2D Architectural Floor Plans into Indoor Open-StreetMap for Reconstructing 3D Building Models. Ph.D. Thesis, Delft University of Technology, Delft, The Netherlands, 2015. Available online: https://repository.tudelft.nl/file/File_962bcbb6-7230-4539-bcac-cee5f600191a?preview=1 (accessed on 26 March 2025).

- Liu, C.; Schwing, A.G.; Kundu, K.; Urtasun, R.; Fidler, S. Rent3D: Floor-Plan Priors for Monocular Layout Estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3413–3421.

- Zak, J.; Macadam, H. Utilization of Building Information Modeling in Infrastructure’s Design and Construction. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Busan, Republic of Korea, 25–27 August 2017; IOP Publishing: Bristol, UK, 2017; Volume 236, p. 012108.

- Bocaneala, N.; Mayouf, M.; Vakaj, E.; Shelbourn, M. Artificial Intelligence Based Methods for Retrofit Projects: A Review of Applications and Impacts. Arch. Comput. Methods Eng. 2025, 32, 899–926.

- Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-View 3D Object Detection Network for Autonomous Driving. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: New York, NY, USA, 2017; pp. 6526–6534.

- Qi, C.R.; Litany, O.; He, K.; Guibas, L. Deep Hough Voting for 3D Object Detection in Point Clouds. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; IEEE: New York, NY, USA, 2019; pp. 9276–9285.

- Xu, D.; Anguelov, D.; Jain, A. PointFusion: Deep Sensor Fusion for 3D Bounding Box Estimation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; IEEE: New York, NY, USA, 2018; pp. 244–253. [Google Scholar] [CrossRef]

- Zhou, Y.; Tuzel, O. VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; IEEE: New York, NY, USA, 2018; pp. 4490–4499. [Google Scholar] [CrossRef]

- Shi, S.; Wang, X.; Li, H. PointRCNN: 3D Object Proposal Generation and Detection From Point Cloud. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; IEEE: New York, NY, USA, 2019; pp. 770–779. [Google Scholar] [CrossRef]

- Yang, Z.; Sun, Y.; Liu, S.; Jia, J. 3DSSD: Point-Based 3D Single Stage Object Detector. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; IEEE: New York, NY, USA, 2020; pp. 11037–11045. [Google Scholar] [CrossRef]

- Liang, M.; Yang, B.; Chen, Y.; Hu, R.; Urtasun, R. Multi-Task Multi-Sensor Fusion for 3D Object Detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; IEEE: New York, NY, USA, 2019; pp. 7337–7345. [Google Scholar] [CrossRef]

- Lu, H.; Chen, X.; Zhang, G.; Zhou, Q.; Ma, Y.; Zhao, Y. SCANet: Spatial-Channel Attention Network for 3D Object Detection. In Proceedings of the ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; IEEE: New York, NY, USA, 2019; pp. 1992–1996. [Google Scholar]

- Zeng, Y.; Hu, Y.; Liu, S.; Ye, J.; Han, Y.; Li, X.; Sun, N. RT3D: Real-Time 3-D Vehicle Detection in LiDAR Point Cloud for Autonomous Driving. IEEE Robot. Autom. Lett. 2018, 3, 3434–3440. [Google Scholar] [CrossRef]

- Zhao, X.; Liu, Z.; Hu, R.; Huang, K. 3D Object Detection Using Scale Invariant and Feature Reweighting Networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 9267–9274. [Google Scholar]

- Shin, K.; Kwon, Y.P.; Tomizuka, M. Roarnet: A Robust 3d Object Detection Based on Region Approximation Refinement. In Proceedings of the 2019 IEEE intelligent vehicles symposium (IV), Paris, France, 9–12 June 2019; IEEE: New York, NY, USA, 2019; pp. 2510–2515. [Google Scholar]

- Wang, H.; Xu, T.; Liu, Q.; Lian, D.; Chen, E.; Du, D.; Wu, H.; Su, W. MCNE: An End-to-End Framework for Learning Multiple Conditional Network Representations of Social Network. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; ACM: New York, NY, USA, 2019; pp. 1064–1072. [Google Scholar] [CrossRef]

- Lehner, J.; Mitterecker, A.; Adler, T.; Hofmarcher, M.; Nessler, B.; Hochreiter, S. Patch Refinement—Localized 3D Object Detection. arXiv 2019, arXiv:1910.04093. [Google Scholar]

- Yang, B.; Luo, W.; Urtasun, R. PIXOR: Real-Time 3D Object Detection from Point Clouds. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; IEEE: New York, NY, USA, 2018; pp. 7652–7660. [Google Scholar] [CrossRef]

- Beltrán, J.; Guindel, C.; Moreno, F.M.; Cruzado, D.; Garcia, F.; De La Escalera, A. Birdnet: A 3d Object Detection Framework from Lidar Information. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; IEEE: New York, NY, USA, 2018; pp. 3517–3523. [Google Scholar]

- Yan, Y.; Mao, Y.; Li, B. Second: Sparsely Embedded Convolutional Detection. Sensors 2018, 18, 3337. [Google Scholar] [CrossRef]

- Dimitrov, A.; Golparvar-Fard, M. Vision-Based Material Recognition for Automated Monitoring of Construction Progress and Generating Building Information Modeling from Unordered Site Image Collections. Adv. Eng. Inform. 2014, 28, 37–49. [Google Scholar] [CrossRef]

- Lu, Q.; Lee, S.; Chen, L. Image-Driven Fuzzy-Based System to Construct as-Is IFC BIM Objects. Autom. Constr. 2018, 92, 68–87. [Google Scholar] [CrossRef]

- Olgun, N.; Türkoğlu, İ. Defining Materials Using Laser Signals from Long Distance via Deep Learning. Ain Shams Eng. J. 2022, 13, 101603. [Google Scholar] [CrossRef]

- Erickson, Z.; Luskey, N.; Chernova, S.; Kemp, C.C. Classification of Household Materials via Spectroscopy. IEEE Robot. Autom. Lett. 2019, 4, 700–707. [Google Scholar] [CrossRef]

- Schwab, J.; Wachinger, J.; Munana, R.; Nabiryo, M.; Sekitoleko, I.; Cazier, J.; Ingenhoff, R.; Favaretti, C.; Subramonia Pillai, V.; Weswa, I.; et al. Design Research to Embed mHealth into a Community-Led Blood Pressure Management System in Uganda: Protocol for a Mixed Methods Study. JMIR Res. Protoc. 2023, 12, e46614. [Google Scholar] [CrossRef]

- Son, H.; Kim, C.; Hwang, N.; Kim, C.; Kang, Y. Classification of Major Construction Materials in Construction Environments Using Ensemble Classifiers. Adv. Eng. Inform. 2014, 28, 1–10. [Google Scholar] [CrossRef]

- Yu, Z.; Sadati, S.H.; Perera, S.; Hauser, H.; Childs, P.R.; Nanayakkara, T. Tapered Whisker Reservoir Computing for Real-Time Terrain Identification-Based Navigation. Sci. Rep. 2023, 13, 5213. [Google Scholar] [CrossRef] [PubMed]

- Bell, S.; Upchurch, P.; Snavely, N.; Bala, K. OpenSurfaces: A Richly Annotated Catalog of Surface Appearance. ACM Trans. Graph. 2013, 32, 1–17. [Google Scholar] [CrossRef]

- Yu, Z.; Childs, P.R.; Ge, Y.; Nanayakkara, T. Whisker Sensor for Robot Environments Perception: A Review. IEEE Sens. J. 2024, 24, 28504–28521. [Google Scholar] [CrossRef]

- Abeid Neto, J.; Arditi, D.; Evens, M.W. Using Colors to Detect Structural Components in Digital Pictures. Comput.-Aided Civ. Infrastruct. Eng. 2002, 17, 61–67. [Google Scholar] [CrossRef]

- Han, K.K.; Golparvar-Fard, M. Appearance-Based Material Classification for Monitoring of Operation-Level Construction Progress Using 4D BIM and Site Photologs. Autom. Constr. 2015, 53, 44–57. [Google Scholar] [CrossRef]

- Yuan, L.; Guo, J.; Wang, Q. Automatic Classification of Common Building Materials from 3D Terrestrial Laser Scan Data. Autom. Constr. 2020, 110, 103017. [Google Scholar] [CrossRef]

- Zhu, Z.; Brilakis, I. Parameter Optimization for Automated Concrete Detection in Image Data. Autom. Constr. 2010, 19, 944–953. [Google Scholar] [CrossRef]

- Han, Y.; Salido-Monzú, D.; Butt, J.A.; Schweizer, S.; Wieser, A. A Feature Selection Method for Multimodal Multispectral LiDAR Sensing. ISPRS J. Photogramm. Remote Sens. 2024, 212, 42–57. [Google Scholar] [CrossRef]

- Lee, S.; Kim, J.; Lim, H.; Ahn, S.C. Surface Reflectance Estimation and Segmentation from Single Depth Image of ToF Camera. Signal Process. Image Commun. 2016, 47, 452–462. [Google Scholar] [CrossRef]

- Jamali, N.; Sammut, C. Majority Voting: Material Classification by Tactile Sensing Using Surface Texture. IEEE Trans. Robot. 2011, 27, 508–521. [Google Scholar] [CrossRef]

- Romano, J.M.; Kuchenbecker, K.J. Methods for Robotic Tool-Mediated Haptic Surface Recognition. In Proceedings of the 2014 IEEE Haptics Symposium (HAPTICS), Houston, TX, USA, 24–27 February 2014; IEEE: New York, NY, USA, 2014; pp. 49–56. [Google Scholar] [CrossRef]

- Oddo, C.M.; Beccai, L.; Wessberg, J.; Wasling, H.B.; Mattioli, F.; Carrozza, M.C. Roughness Encoding in Human and Biomimetic Artificial Touch: Spatiotemporal Frequency Modulation and Structural Anisotropy of Fingerprints. Sensors 2011, 11, 5596–5615. [Google Scholar] [CrossRef]

- Strese, M.; Schuwerk, C.; Iepure, A.; Steinbach, E. Multimodal Feature-Based Surface Material Classification. IEEE Trans. Haptics 2017, 10, 226–239. [Google Scholar] [CrossRef]

- Takamuku, S.; Gomez, G.; Hosoda, K.; Pfeifer, R. Haptic Discrimination of Material Properties by a Robotic Hand. In Proceedings of the 2007 IEEE 6th International Conference on Development and Learning, London, UK, 1–13 July 2007; pp. 1–6. [Google Scholar] [CrossRef]

- Yu, Z.; Sadati, S.H.; Hauser, H.; Childs, P.R.; Nanayakkara, T. A Semi-Supervised Reservoir Computing System Based on Tapered Whisker for Mobile Robot Terrain Identification and Roughness Estimation. IEEE Robot. Autom. Lett. 2022, 7, 5655–5662. [Google Scholar] [CrossRef]

- Penumuru, D.P.; Muthuswamy, S.; Karumbu, P. Identification and Classification of Materials Using Machine Vision and Machine Learning in the Context of Industry 4.0. J. Intell. Manuf. 2020, 31, 1229–1241. [Google Scholar] [CrossRef]

- Rahman, K.; Ghani, A.; Alzahrani, A.; Tariq, M.U.; Rahman, A.U. Pre-trained model-based NFR classification: Overcoming limited data challenges. IEEE Access 2023, 11, 81787–81802. [Google Scholar] [CrossRef]

- Bian, P.; Li, W.; Jin, Y.; Zhi, R. Ensemble Feature Learning for Material Recognition with Convolutional Neural Networks. EURASIP J. Image Video Process. 2018, 2018, 64. [Google Scholar] [CrossRef]

- Bell, S.; Upchurch, P.; Snavely, N.; Bala, K. Material Recognition in the Wild with the Materials in Context Database. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; IEEE: New York, NY, USA, 2015; pp. 3479–3487. [Google Scholar] [CrossRef]

- Erickson, Z.; Xing, E.; Srirangam, B.; Chernova, S.; Kemp, C.C. Multimodal Material Classification for Robots Using Spectroscopy and High Resolution Texture Imaging. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; IEEE: New York, NY, USA, 2020; pp. 10452–10459. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556. [Google Scholar] [CrossRef]

- Mahami, H.; Ghassemi, N.; Darbandy, M.T.; Shoeibi, A.; Hussain, S.; Nasirzadeh, F.; Alizadehsani, R.; Nahavandi, D.; Khosravi, A.; Nahavandi, S. Material Recognition for Automated Progress Monitoring Using Deep Learning Methods. arXiv 2020, arXiv:2006.16344. [Google Scholar]

- Li, Y.; Upadhyay, U.; Slim, H.; Abdelreheem, A.; Prajapati, A.; Pothigara, S.; Wonka, P.; Elhoseiny, M. 3D CoMPaT: Composition of Materials on Parts of 3D Things. In Computer Vision—ECCV 2022; Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Lecture Notes in Computer Science; Springer Nature: Cham, Switzerland, 2022; Volume 13668, pp. 110–127. [Google Scholar] [CrossRef]

- Leung, T.; Malik, J. Representing and Recognizing the Visual Appearance of Materials Using Three-Dimensional Textons. Int. J. Comput. Vis. 2001, 43, 29–44. [Google Scholar] [CrossRef]

- Peng, T.; Zhang, Z.; Song, Y.; Chen, F.; Zeng, D. Portable System for Box Volume Measurement Based on Line-Structured Light Vision and Deep Learning. Sensors 2019, 19, 3921. [Google Scholar] [CrossRef]

- Baek, E.-T.; Yang, H.-J.; Kim, S.-H.; Lee, G.; Jeong, H. Distance Error Correction in Time-of-Flight Cameras Using Asynchronous Integration Time. Sensors 2020, 20, 1156. [Google Scholar] [CrossRef] [PubMed]

- Malik, A.; Mirdehghan, P.; Nousias, S.; Kutulakos, K.N.; Lindell, D.B. Transient Neural Radiance Fields for Lidar View Synthesis and 3D Reconstruction. In Proceedings of the Advances in Neural Information Processing Systems 36 (NeurIPS 2023), New Orleans, LA, USA, 10–16 December 2023; Volume 36, pp. 71569–71581. [Google Scholar]

- Li, G.; Li, K.; Zhang, G.; Zhu, Z.; Wang, P.; Wang, Z.; Fu, C. Enhanced Multi View 3D Reconstruction with Improved MVSNet. Sci. Rep. 2024, 14, 14106. [Google Scholar] [CrossRef]

- Shen, J.; Maier, D. A Methodology for Camera-Based Geometry Measurement During Freeform Bending with a Movable Die. Master’s Thesis, Technische Universität München, München, Germany, 2021. [Google Scholar]

- Dahnert, M.; Hou, J.; Nießner, M.; Dai, A. Panoptic 3D Scene Reconstruction From a Single RGB Image. arXiv 2022, arXiv:2111.02444. [Google Scholar] [CrossRef]

- Mengiste, E.; Mannem, K.R.; Prieto, S.A.; Garcia De Soto, B. Transfer-Learning and Texture Features for Recognition of the Conditions of Construction Materials with Small Data Sets. J. Comput. Civ. Eng. 2024, 38, 04023036. [Google Scholar] [CrossRef]

| Stages | Sub-Stages | Keywords for Searching * | Number of Papers Finally Found |
|---|---|---|---|
| Before sending surveyors to onsite stage | Sending the floor plan to surveyors | | 15 |
| Before sending surveyors to onsite stage | Sending operation and maintenance handbook and other related documents to surveyors | | 0 |
| Surveyors go to the site stage | Detect objects | | 0 |
| Surveyors go to the site stage | Detect the materials of objects | | 19 |
| Surveyors go to the site stage | Detect the volumes of objects | | 6 |
| After-site analysis stage | Write the report | | 0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yin, Y.; Zuo, H.; Jennings, T.; Jain, S.; Cartwright, B.; Buhagiar, J.; Williams, P.; Adams, K.; Hazeri, K.; Childs, P. Use and Potential of AI in Assisting Surveyors in Building Retrofit and Demolition—A Scoping Review. Buildings 2025, 15, 3448. https://doi.org/10.3390/buildings15193448
