Comparative Study of Linear and Non-Linear ML Algorithms for Cement Mortar Strength Estimation

Abstract

1. Introduction

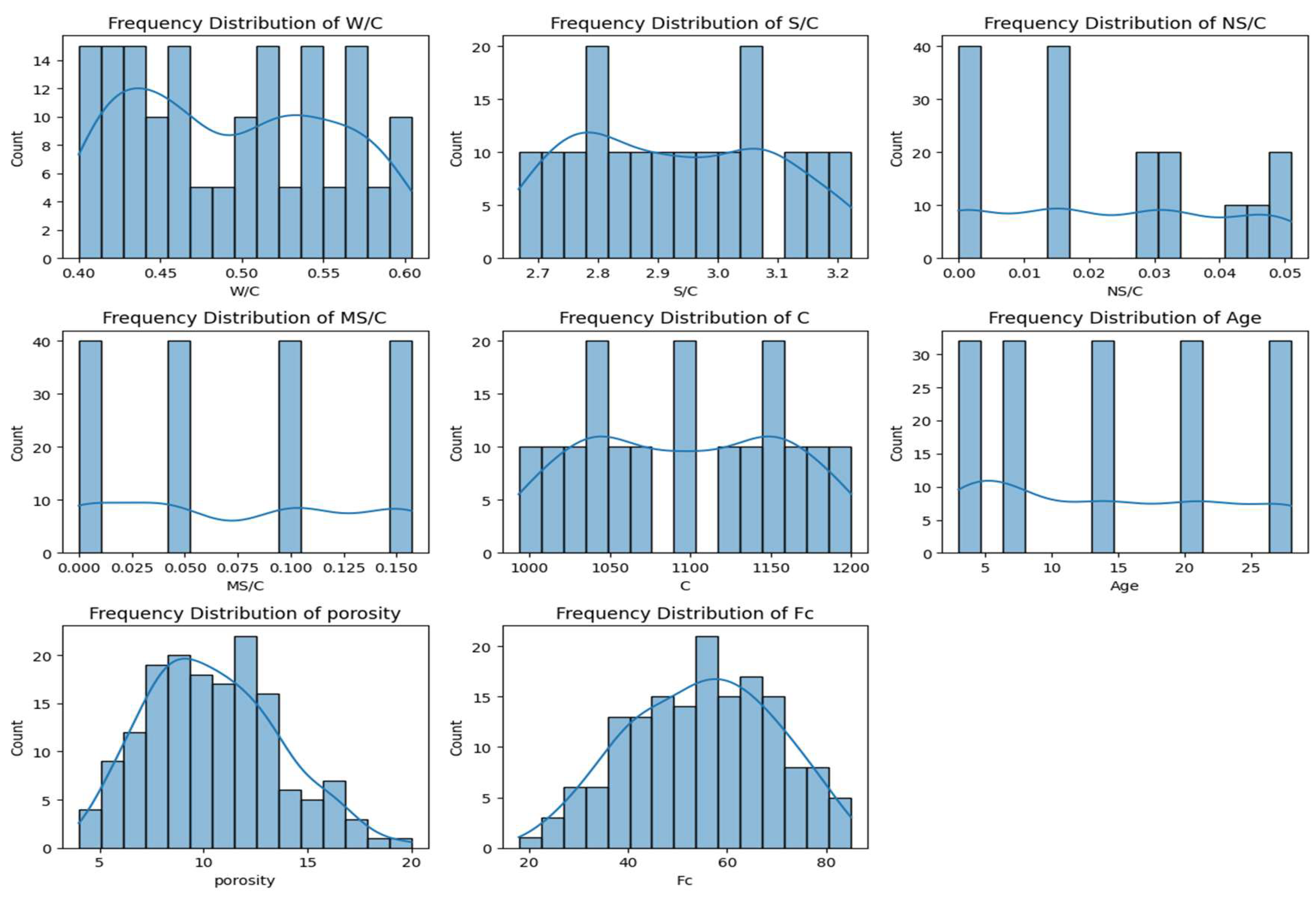

2. Data Description

2.1. Linear Machine Learning Models

2.2. Non-Linear Machine Learning Models

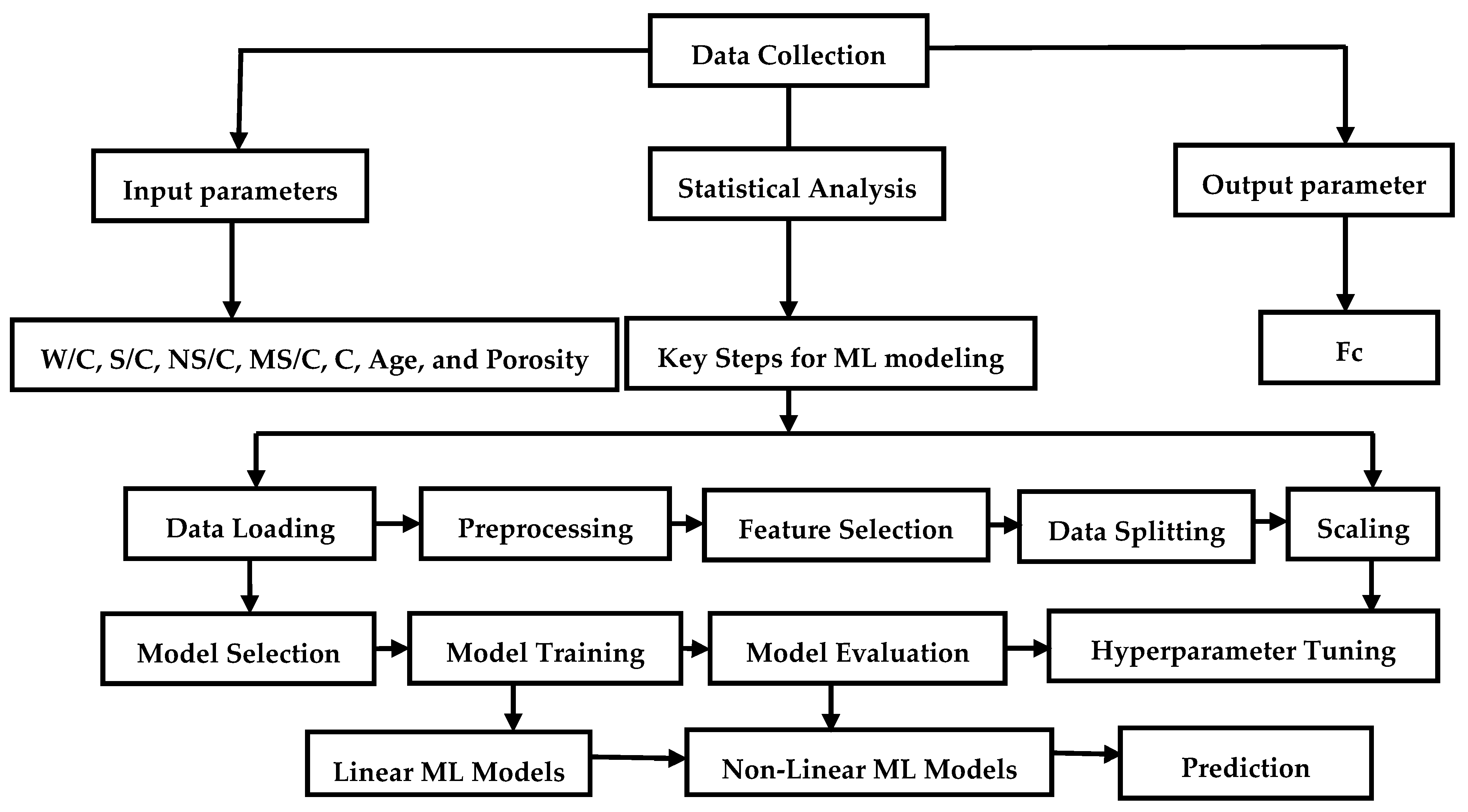

3. Methods

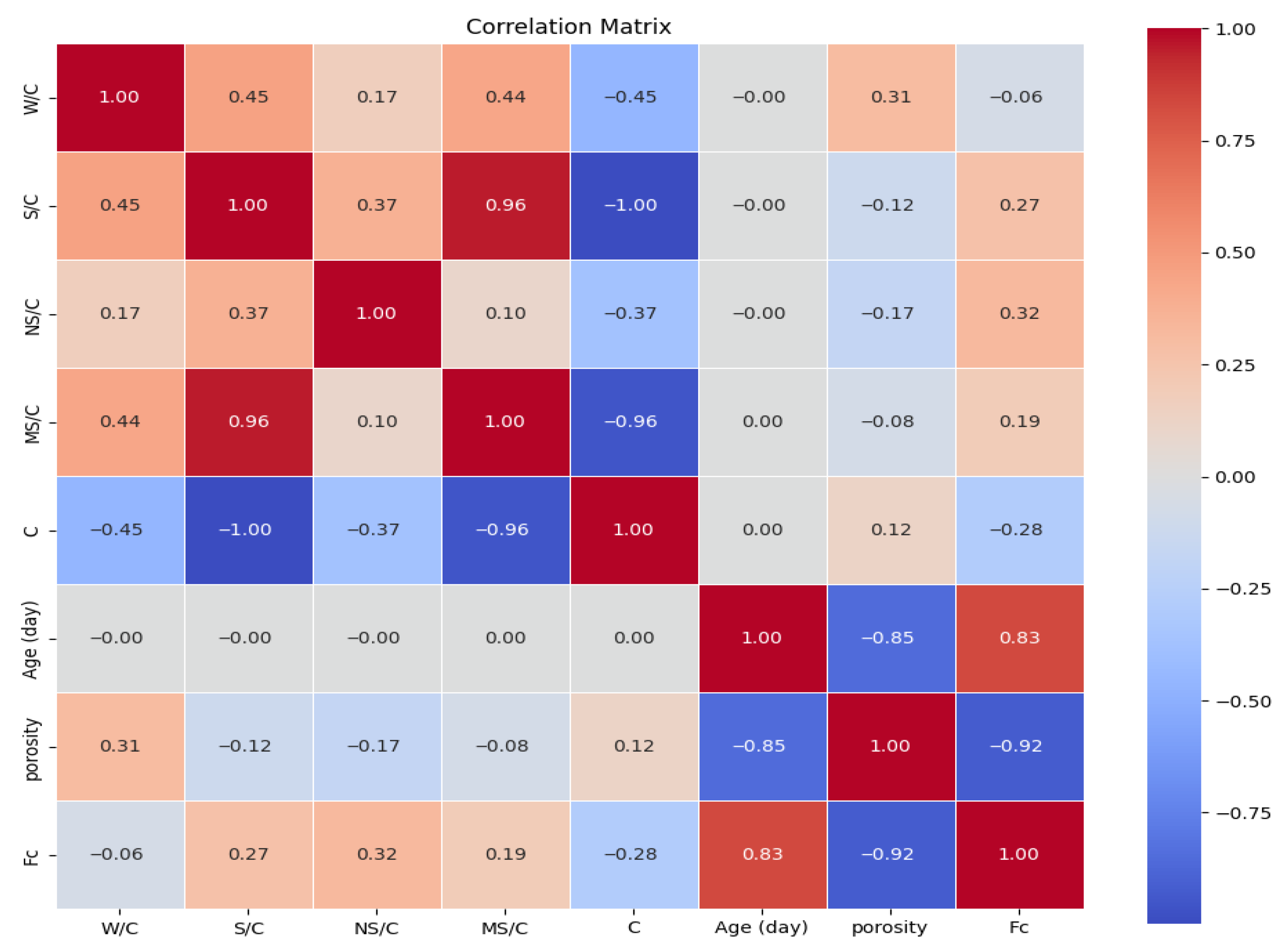

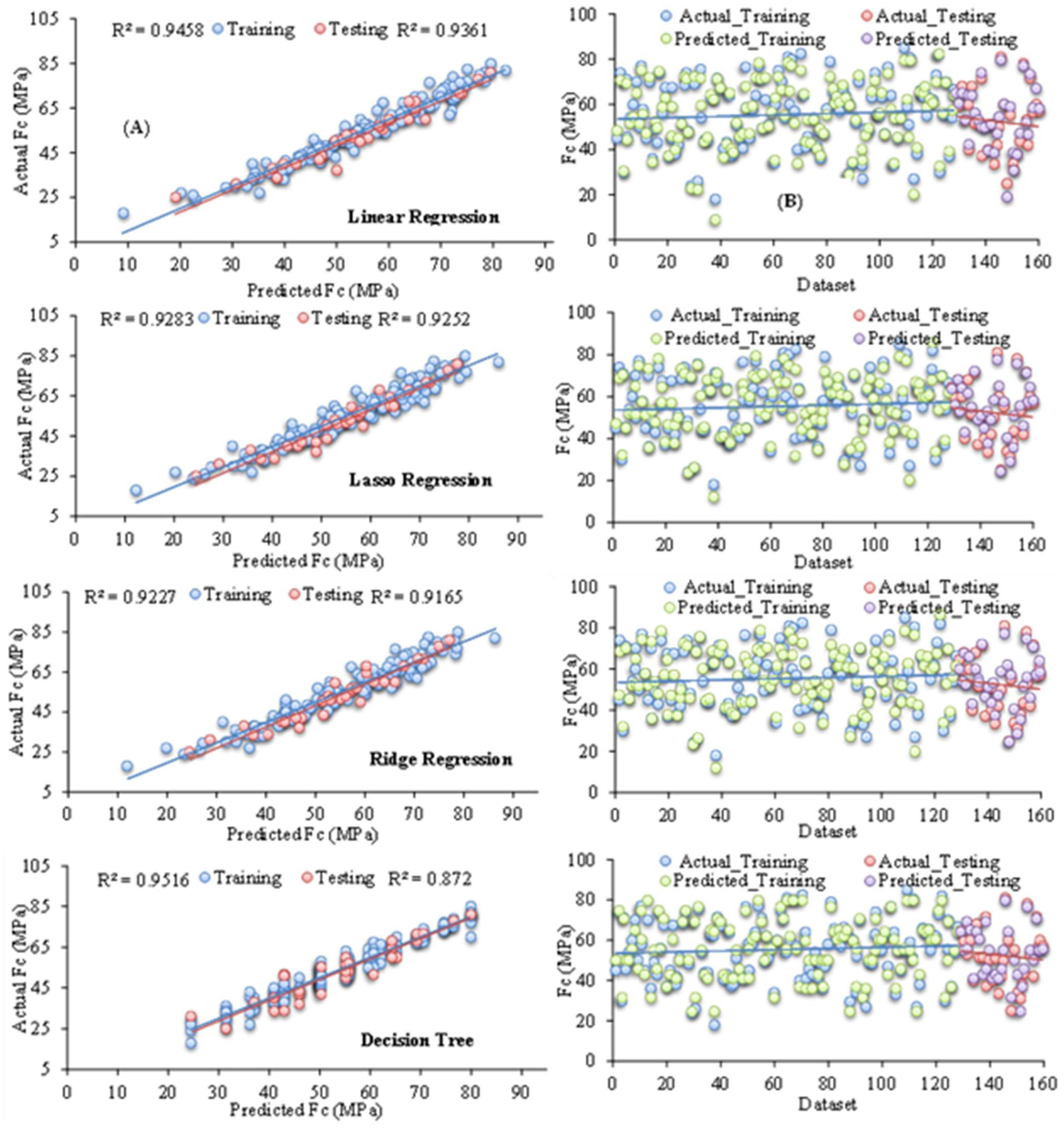

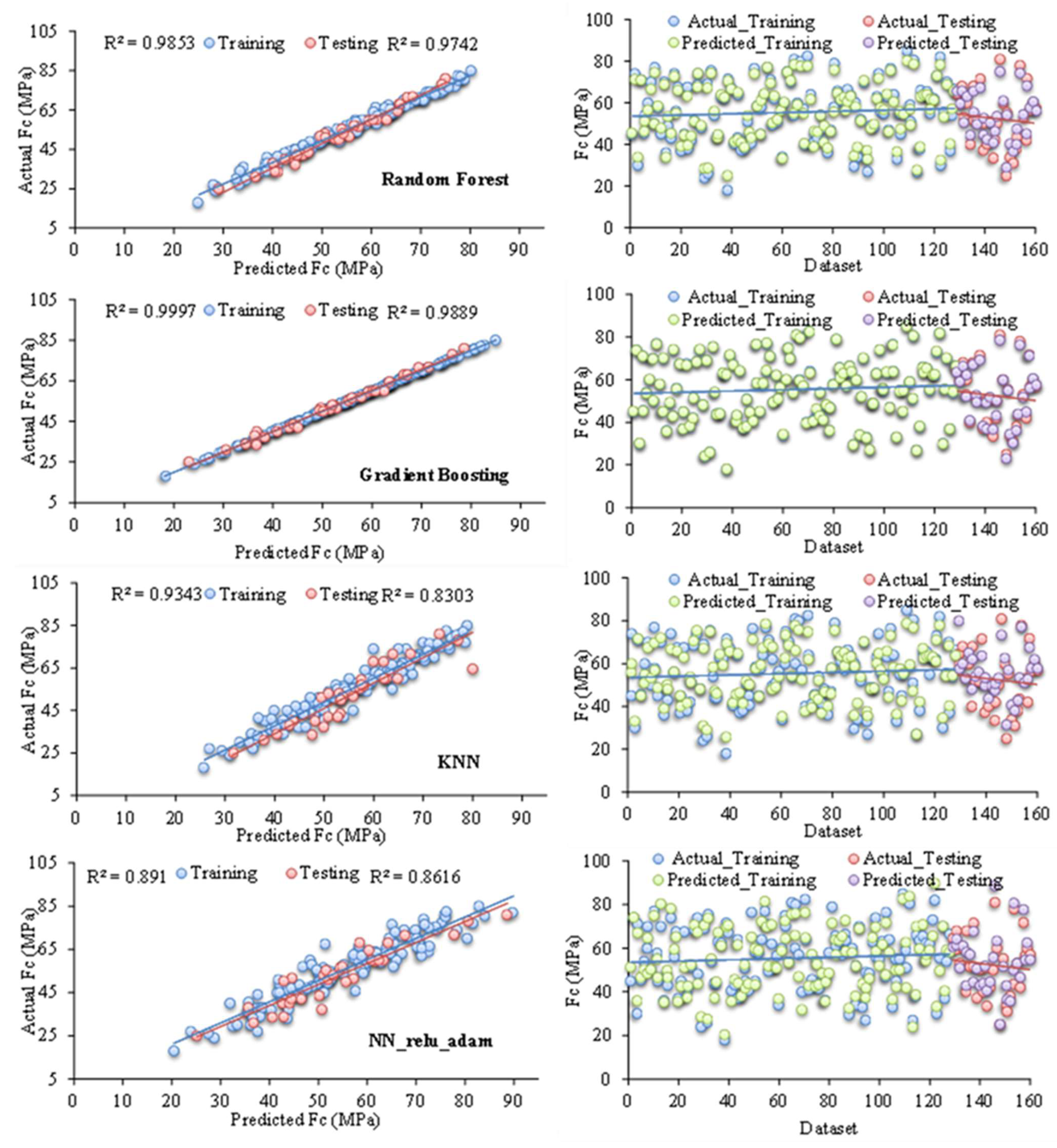

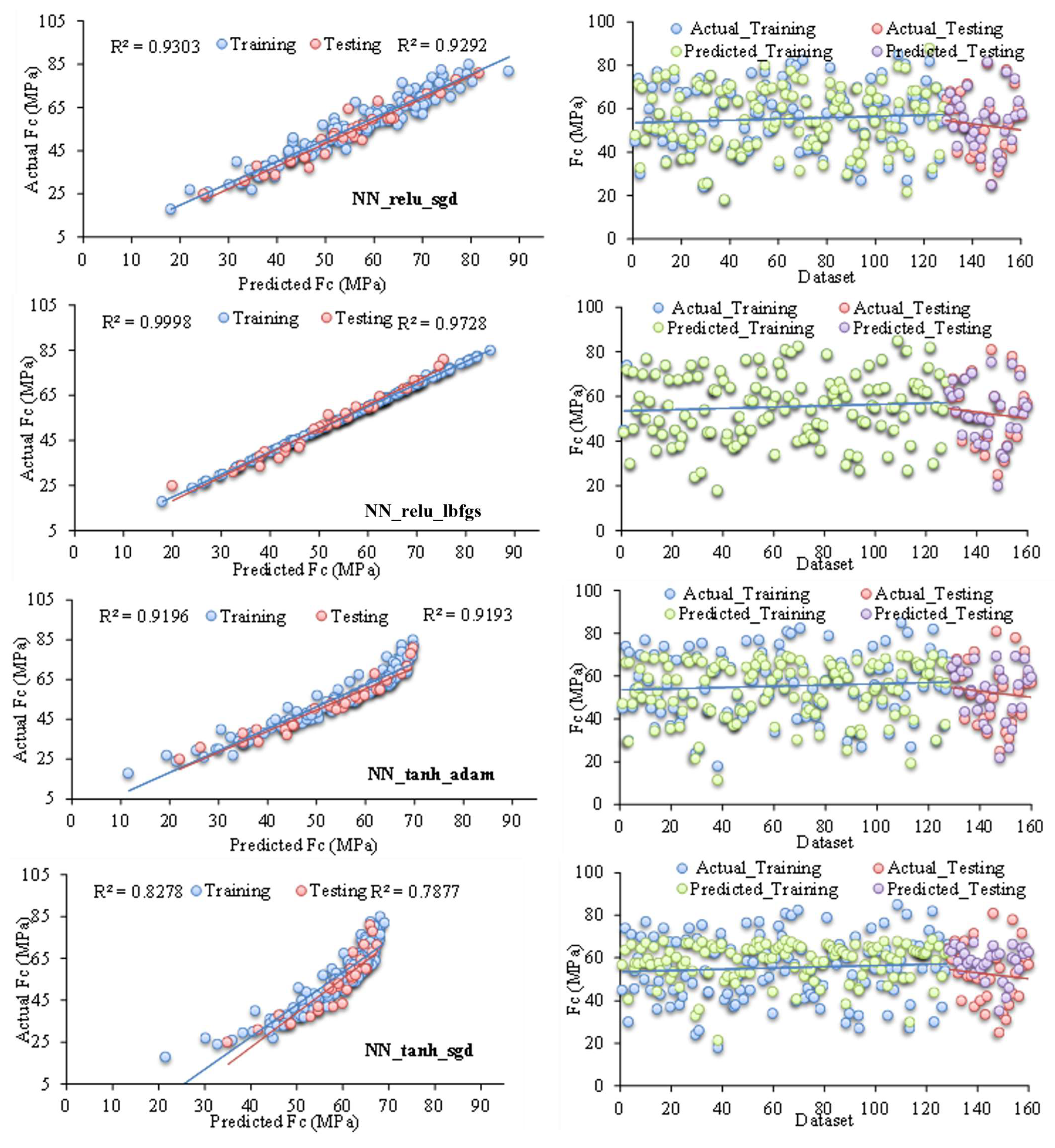

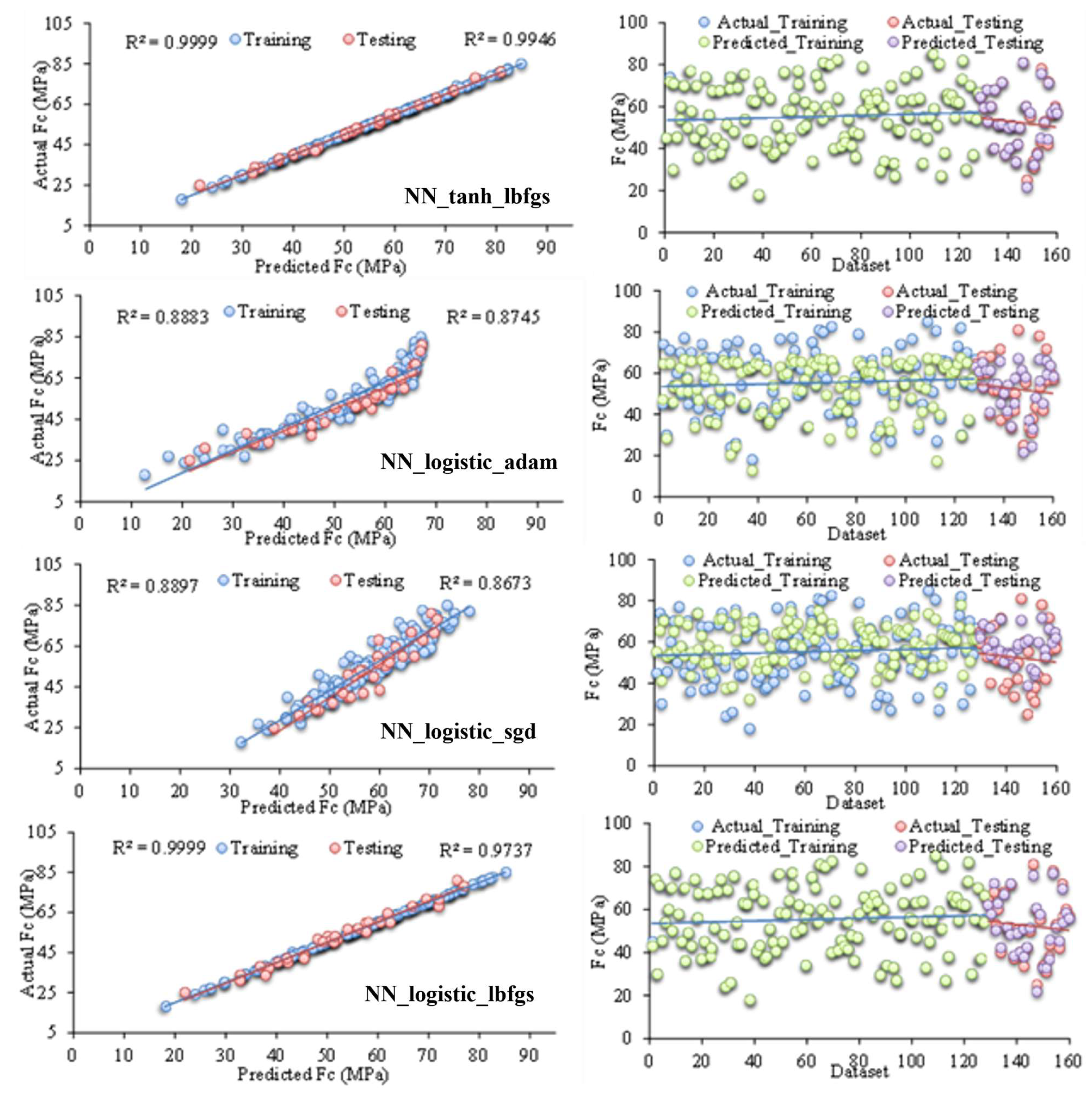

4. Results and Discussion

Validation of the NN_tanh_lbfgs Model Performance

5. Conclusions

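- The best-performing nonlinear ML models outperform the linear models by capturing complex, nonlinear relationships within the dataset, which yields better generalization and higher prediction precision (testing R² = 0.9946 for NN_tanh_lbfgs and 0.9889 for gradient boosting, versus 0.9361 for linear regression). This advantage is not universal: KNN (testing R² = 0.8303) and the decision tree (testing R² = 0.8720) generalize worse than linear regression.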

- The NN_tanh_lbfgs model exhibits outstanding predictive performance, attaining near-perfect training metrics (R² = 0.9999, RMSE = 0.0083 MPa, MAE = 0.0063 MPa) while maintaining excellent generalization on the testing dataset (R² = 0.9946, RMSE = 1.5032 MPa, MAE = 1.2545 MPa), which underscores its accuracy and robustness.

- The NN_logistic_lbfgs, gradient boosting, and NN_relu_lbfgs models also generalize well and achieve high accuracy, with only a small drop in R² between training and testing (testing R² = 0.9737, 0.9889, and 0.9728, respectively). In contrast, NN_tanh_sgd and NN_logistic_sgd underperform (testing R² = 0.7877 and 0.8673) because of unsuitable optimizer and activation function combinations, which results in poor generalization.

- Key hyperparameters, in particular the choice of solver (L-BFGS) and activation function (tanh, logistic, or ReLU), critically influence neural network accuracy and generalization; with suitable settings, advanced models such as gradient boosting and NN_tanh_lbfgs outperform the linear methods.

- ML offers a cost-effective, efficient, and scalable alternative to traditional and experimental methods, substantially reducing the time and resources required for material testing.

- Both the linear and nonlinear analyses show that curing age and NS/C have a positive effect on Fc (compressive strength), whereas porosity has the strongest negative effect; SHAP analysis further clarifies these relationships.
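The training-to-testing drop discussed in these conclusions can be quantified directly from the reported metrics. The sketch below hard-codes the R² values copied from the training/testing results table for the models named above and computes the gap ΔR² = R²(train) − R²(test). The helper names `r2_gap` and `rank_by_test_r2`, and the choice of ΔR² as the gap measure, are illustrative assumptions rather than the authors' procedure.

```python
# R² values (train, test) copied from the training/testing results table.
REPORTED_R2 = {
    "NN_tanh_lbfgs": (0.9999, 0.9946),
    "Gradient Boosting": (0.9997, 0.9889),
    "NN_logistic_lbfgs": (0.9999, 0.9737),
    "NN_relu_lbfgs": (0.9998, 0.9728),
    "NN_logistic_sgd": (0.8897, 0.8673),
    "NN_tanh_sgd": (0.8278, 0.7877),
    "Linear Regression": (0.9458, 0.9361),
}


def r2_gap(train_r2: float, test_r2: float) -> float:
    """Hypothetical helper: drop in R² from training to testing, rounded to 4 d.p."""
    return round(train_r2 - test_r2, 4)


def rank_by_test_r2(table):
    """Return (model, test R², gap) tuples sorted from best to worst test R²."""
    rows = [(name, test, r2_gap(train, test)) for name, (train, test) in table.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    for name, test, gap in rank_by_test_r2(REPORTED_R2):
        print(f"{name:<18} test R2 = {test:.4f}  gap = {gap:.4f}")
```

With these numbers, NN_tanh_lbfgs has both the highest testing R² and the smallest gap (0.0053) of the models listed. The gap alone does not separate the SGD variants, however: NN_logistic_sgd drops by 0.0224 versus 0.0262 for NN_logistic_lbfgs, so its weakness lies mainly in the low testing accuracy itself.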

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Li, H.; Xiao, H.-G.; Yuan, J.; Ou, J. Microstructure of cement mortar with nano-particles. Compos. Part B Eng. 2004, 35, 185–189.

- Cho, Y.K.; Jung, S.H.; Choi, Y.C. Effects of chemical composition of fly ash on compressive strength of fly ash cement mortar. Constr. Build. Mater. 2019, 204, 255–264.

- Brzozowski, P.; Horszczaruk, E.; Hrabiuk, K. The influence of natural and nano-additives on early strength of cement mortars. Procedia Eng. 2017, 172, 127–134.

- Jasiczak, J.; Zielinski, K. Effect of protein additive on properties of mortar. Cem. Concr. Compos. 2006, 28, 451–457.

- Khan, K.; Ullah, M.F.; Shahzada, K.; Amin, M.N.; Bibi, T.; Wahab, N.; Aljaafari, A. Effective use of micro-silica extracted from rice husk ash for the production of high-performance and sustainable cement mortar. Constr. Build. Mater. 2020, 258, 119589.

- Zhang, B.; Tan, H.; Shen, W.; Xu, G.; Ma, B.; Ji, X. Nano-silica and silica fume modified cement mortar used as Surface Protection Material to enhance the impermeability. Cem. Concr. Compos. 2018, 92, 7–17.

- Li, L.; Zhu, J.; Huang, Z.; Kwan, A.; Li, L. Combined effects of micro-silica and nano-silica on durability of mortar. Constr. Build. Mater. 2017, 157, 337–347.

- Wang, X.; Dong, S.; Ashour, A.; Zhang, W.; Han, B. Effect and mechanisms of nanomaterials on interface between aggregates and cement mortars. Constr. Build. Mater. 2020, 240, 117942.

- Kaplan, S.A. Factors affecting the relationship between rate of loading and measured compressive strength of concrete. Mag. Concr. Res. 1980, 32, 79–88.

- Chen, X.; Wu, S.; Zhou, J. Influence of porosity on compressive and tensile strength of cement mortar. Constr. Build. Mater. 2013, 40, 869–874.

- Jo, B.-W.; Kim, C.-H.; Tae, G.-H.; Park, J.-B. Characteristics of cement mortar with nano-SiO2 particles. Constr. Build. Mater. 2007, 21, 1351–1355.

- Ltifi, M.; Guefrech, A.; Mounanga, P.; Khelidj, A. Experimental study of the effect of addition of nano-silica on the behaviour of cement mortars. Procedia Eng. 2011, 10, 900–905.

- Oltulu, M.; Şahin, R. Effect of nano-SiO2, nano-Al2O3 and nano-Fe2O3 powders on compressive strengths and capillary water absorption of cement mortar containing fly ash: A comparative study. Energy Build. 2013, 58, 292–301.

- Qing, Y.; Zenan, Z.; Deyu, K.; Rongshen, C. Influence of nano-SiO2 addition on properties of hardened cement paste as compared with silica fume. Constr. Build. Mater. 2007, 21, 539–545.

- Artelt, C.; Garcia, E. Impact of superplasticizer concentration and of ultra-fine particles on the rheological behaviour of dense mortar suspensions. Cem. Concr. Res. 2008, 38, 633–642.

- Park, C.; Noh, M.; Park, T. Rheological properties of cementitious materials containing mineral admixtures. Cem. Concr. Res. 2005, 35, 842–849.

- Ammar, M.M. The effect of nano-silica on the performance of Portland Cement Mortar. Master’s Thesis, American University in Cairo, Cairo Governorate, Egypt, 2013.

- Sobuz, M.H.R.; Khatun, M.; Kabbo, M.K.I.; Sutan, N.M. An explainable machine learning model for encompassing the mechanical strength of polymer-modified concrete. Asian J. Civ. Eng. 2025, 26, 931–954.

- Chaabene, W.B.; Flah, M.; Nehdi, M.L. Machine learning prediction of mechanical properties of concrete: Critical review. Constr. Build. Mater. 2020, 260, 119889.

- Hosseinzadeh, M.; Dehestani, M.; Hosseinzadeh, A.J. Prediction of mechanical properties of recycled aggregate fly ash concrete employing machine learning algorithms. J. Build. Eng. 2023, 76, 107006.

- Koya, B.P.; Aneja, S.; Gupta, R.; Valeo, C. Comparative analysis of different machine learning algorithms to predict mechanical properties of concrete. Mech. Adv. Mater. Struct. 2022, 29, 4032–4043.

- Zhang, J.; Stang, H.; Li, V.C. Fatigue life prediction of fiber reinforced concrete under flexural load. Int. J. Fatigue 1999, 21, 1033–1049.

- Xu, Y.; Liu, X.; Cao, X.; Huang, C.; Liu, E.; Qian, S.; Liu, X.; Wu, Y.; Dong, F.; Qiu, C.-W.; et al. Artificial intelligence: A powerful paradigm for scientific research. Innovation 2021, 2, 100179.

- Kapoor, N.R.; Kumar, A.; Kumar, A.; Kumar, A.; Arora, H.C. Artificial intelligence in civil engineering: An immersive view. In Artificial Intelligence Applications for Sustainable Construction; Elsevier: Amsterdam, The Netherlands, 2024; pp. 1–74.

- Poluektova, V.; Poluektov, M. Artificial Intelligence in Materials Science and Modern Concrete Technologies: Analysis of Possibilities and Prospects. Inorg. Mater. Appl. Res. 2024, 15, 1187–1198.

- Kaveh, A. Applications of Artificial Neural Networks and Machine Learning in Civil Engineering; Springer: Cham, Switzerland, 2024.

- Mei, L.; Wang, Q. Structural optimization in civil engineering: A literature review. Buildings 2021, 11, 66.

- Azad, M.M.; Kim, H.S. Noise robust damage detection of laminated composites using multichannel wavelet-enhanced deep learning model. Eng. Struct. 2025, 322, 119192.

- Kongala, S.G.R. Explainable Structural Health Monitoring (SHM) for Damage Classification Based on Vibration. Master’s Thesis, University of Twente, Enschede, The Netherlands, 2024.

- Jueyendah, S.; Lezgy-Nazargah, M.; Eskandari-Naddaf, H.; Emamian, S. Predicting the mechanical properties of cement mortar using the support vector machine approach. Constr. Build. Mater. 2021, 291, 123396.

- Jueyendah, S.; Martins, C.H. Optimal Design of Welded Structure Using SVM. Comput. Eng. Phys. Model. 2024, 7, 84–107.

- Amin, M.N.; Alkadhim, H.A.; Ahmad, W.; Khan, K.; Alabduljabbar, H.; Mohamed, A. Experimental and machine learning approaches to investigate the effect of waste glass powder on the flexural strength of cement mortar. PLoS ONE 2023, 18, e0280761.

- Alahmari, T.S.; Ashraf, J.; Sobuz, M.H.R.; Uddin, M.A. Predicting the compressive strength of fiber-reinforced self-consolidating concrete using a hybrid machine learning approach. Innov. Infrastruct. Solut. 2024, 9, 446.

- Jamal, A.S.; Ahmed, A.N. Estimating compressive strength of high-performance concrete using different machine learning approaches. Alex. Eng. J. 2025, 114, 256–265.

- Nguyen, M.H.; Ly, H.-B. Development of machine learning methods to predict the compressive strength of fiber-reinforced self-compacting concrete and sensitivity analysis. Constr. Build. Mater. 2023, 367, 130339.

- Shafighfard, T.; Kazemi, F.; Bagherzadeh, F.; Mieloszyk, M.; Yoo, D.Y. Chained machine learning model for predicting load capacity and ductility of steel fiber–reinforced concrete beams. Comput.-Aided Civ. Infrastruct. Eng. 2024, 39, 3573–3594.

- Jain, D.; Bhadauria, S.S.; Kushwah, S.S. Analysis and prediction of plastic waste composite construction material properties using machine learning techniques. Environ. Prog. Sustain. Energy 2023, 42, e14094.

- Guan, Q.T.; Tong, Z.L.; Amin, M.N.; Iftikhar, B.; Qadir, M.T.; Khan, K. Analyzing the efficacy of waste marble and glass powder for the compressive strength of self-compacting concrete using machine learning strategies. Rev. Adv. Mater. Sci. 2024, 63, 20240043.

- Fei, Z.; Liang, S.; Cai, Y.; Shen, Y. Ensemble machine-learning-based prediction models for the compressive strength of recycled powder mortar. Materials 2023, 16, 583.

- Wang, H.; Ding, Y.; Kong, Y.; Sun, D.; Shi, Y.; Cai, X. Predicting the Compressive Strength of Sustainable Portland Cement–Fly Ash Mortar Using Explainable Boosting Machine Learning Techniques. Materials 2024, 17, 4744.

- Aamir, H.; Aamir, K.; Javed, M.F. Linear and Non-Linear Regression Analysis on the Prediction of Compressive Strength of Sodium Hydroxide Pre-Treated Crumb Rubber Concrete. Eng. Proc. 2023, 44, 5.

- Kovačević, M.; Hadzima-Nyarko, M.; Grubeša, I.N.; Radu, D.; Lozančić, S. Application of artificial intelligence methods for predicting the compressive strength of green concretes with rice husk ash. Mathematics 2023, 12, 66.

- Bin, F.; Hosseini, S.; Chen, J.; Samui, P.; Fattahi, H.; Jahed Armaghani, D. Proposing optimized random forest models for predicting compressive strength of geopolymer composites. Infrastructures 2024, 9, 181.

- Maulud, D.; Abdulazeez, A.M. A review on linear regression comprehensive in machine learning. J. Appl. Sci. Technol. Trends 2020, 1, 140–147.

- Sinnakaudan, S.; Ghani, A.A.; Ahmad, M.; Zakaria, N. Multiple linear regression model for total bed material load prediction. J. Hydraul. Eng. 2006, 132, 521–528.

- Esmaeili, B.; Hallowell, M.R.; Rajagopalan, B. Attribute-based safety risk assessment. II: Predicting safety outcomes using generalized linear models. J. Constr. Eng. Manag. 2015, 141, 04015022.

- Myers, R.H.; Montgomery, D.C.; Vining, G.G.; Robinson, T.J. Generalized Linear Models: With Applications in Engineering and the Sciences; John Wiley & Sons: Hoboken, NJ, USA, 2012.

- Kouvaritakis, B.; Cannon, M. Non-Linear Predictive Control: Theory and Practice; IET: Hertfordshire, UK, 2001.

- Clark, L.A.; Pregibon, D. Tree-based models. In Statistical Models in S; Routledge: Oxfordshire, UK, 2017; pp. 377–419.

- Natekin, A.; Knoll, A. Gradient boosting machines, a tutorial. Front. Neurorobot. 2013, 7, 21.

- Kramer, O. K-Nearest Neighbors. In Dimensionality Reduction with Unsupervised Nearest Neighbors; Springer: Berlin/Heidelberg, Germany, 2013; pp. 13–23.

- Rhodes, C.; Morari, M. The false nearest neighbors algorithm: An overview. Comput. Chem. Eng. 1997, 21, S1149–S1154.

- Deepa, C.; Sathiya Kumari, K.; Pream Sudha, V. A tree based model for high performance concrete mix design. Int. J. Eng. Sci. Technol. 2010, 2, 4640–4646.

- Dabiri, H.; Farhangi, V.; Moradi, M.J.; Zadehmohamad, M.; Karakouzian, M. Applications of decision tree and random forest as tree-based machine learning techniques for analyzing the ultimate strain of spliced and non-spliced reinforcement bars. Appl. Sci. 2022, 12, 4851.

- Alzubi, Y.; Alqawasmeh, H.; Al-Kharabsheh, B.; Abed, D. Applications of nearest neighbor search algorithm toward efficient rubber-based solid waste management in concrete. Civ. Eng. J. 2022, 8, 695–709.

- Adeli, H. Neural networks in civil engineering: 1989–2000. Comput.-Aided Civ. Infrastruct. Eng. 2001, 16, 126–142.

- Emamian, S.A.; Eskandari-Naddaf, H. Effect of porosity on predicting compressive and flexural strength of cement mortar containing micro and nano-silica by ANN and GEP. Constr. Build. Mater. 2019, 218, 8–27.

| Variable | Minimum | Maximum | Mean | Std. Dev. |
|---|---|---|---|---|
| W/C (ratio) | 0.400 | 0.604 | 0.494 | 0.062 |
| S/C (ratio) | 2.667 | 3.222 | 2.927 | 0.166 |
| NS/C (ratio) | 0.000 | 0.051 | 0.023 | 0.018 |
| MS/C (ratio) | 0.000 | 0.157 | 0.074 | 0.058 |
| C (g) | 993.300 | 1200.000 | 1096.725 | 62.037 |
| Age (days) | 3.000 | 28.000 | 14.600 | 9.091 |
| Porosity (%) | 4.000 | 20.000 | 10.456 | 3.160 |
| Fc (MPa) | 18.000 | 85.000 | 54.823 | 14.648 |
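The summary statistics in this table (minimum, maximum, mean, standard deviation) can be recomputed from a raw data column with Python's standard `statistics` module. The source does not state whether the sample (n − 1) or population (n) standard deviation is reported, so the sketch below returns both; the helper name `describe` and the example column are hypothetical and are not the study's data.

```python
import statistics


def describe(values):
    """Hypothetical helper: min, max, mean and both standard deviations of one column."""
    return {
        "min": min(values),
        "max": max(values),
        "mean": statistics.mean(values),
        # Sample standard deviation (divides by n - 1).
        "std_sample": statistics.stdev(values),
        # Population standard deviation (divides by n).
        "std_population": statistics.pstdev(values),
    }


if __name__ == "__main__":
    # Hypothetical curing ages in days; NOT the study's dataset.
    ages = [3, 7, 28, 3, 7, 28]
    print(describe(ages))
```

For a dataset of a few hundred specimens the two standard deviations differ only slightly, but stating which one is used removes the ambiguity.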

| Model | Hyperparameters | Activation | Solver |
|---|---|---|---|
| Linear Regression | fit_intercept = True | – | – |
| Ridge Regression | fit_intercept = True, solver = auto, alpha = 1.0 | – | – |
| Lasso Regression | fit_intercept = True, alpha = 0.1, max_iter = 1000, selection = cyclic | – | – |
| Decision Tree | max_depth = 5, min_samples_split = 2, min_samples_leaf = 4, criterion = squared_error, random_state = 42 | – | – |
| Random Forest | n_estimators = 100, min_samples_split = 4, max_depth = 10, min_samples_leaf = 2, max_features = sqrt, random_state = 42, bootstrap = True | – | – |
| Gradient Boosting | n_estimators = 300, learning_rate = 0.115, max_depth = 3, min_samples_split = 2, random_state = 42, subsample = 0.8 | – | – |
| KNN | n_neighbors = 5, metric = minkowski, p = 2, leaf_size = 30 | – | – |
| NN_relu_adam | MLPRegressor with hidden_layer_sizes = (50,), max_iter = 10000, alpha = 0.01, learning_rate_init = 0.0005, validation_fraction = 0.2, n_iter_no_change = 10, random_state = 42 (shared by all NN models) | relu | adam |
| NN_relu_sgd | Same as NN_relu_adam | relu | sgd |
| NN_relu_lbfgs | Same as NN_relu_adam | relu | lbfgs |
| NN_tanh_adam | Same as NN_relu_adam | tanh | adam |
| NN_tanh_sgd | Same as NN_relu_adam | tanh | sgd |
| NN_tanh_lbfgs | Same as NN_relu_adam | tanh | lbfgs |
| NN_logistic_adam | Same as NN_relu_adam | logistic | adam |
| NN_logistic_sgd | Same as NN_relu_adam | logistic | sgd |
| NN_logistic_lbfgs | Same as NN_relu_adam | logistic | lbfgs |
| NN_identity_adam | Same as NN_relu_adam | identity | adam |
| NN_identity_sgd | Same as NN_relu_adam | identity | sgd |
| NN_identity_lbfgs | Same as NN_relu_adam | identity | lbfgs |
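The twelve neural-network variants in this table form a full grid of four activation functions × three solvers that share one set of `MLPRegressor` settings. The sketch below generates the model names and keyword-argument dictionaries from that grid using only the standard library; the parameter values are copied from the table, while the helper name `build_nn_configs` is hypothetical. The keys match scikit-learn's `MLPRegressor` parameter names, so each dictionary could be passed as `MLPRegressor(**params)` where scikit-learn is installed (not done here).

```python
from itertools import product

# Settings shared by every NN model, copied from the hyperparameter table.
SHARED_MLP_PARAMS = {
    "hidden_layer_sizes": (50,),
    "max_iter": 10000,
    "alpha": 0.01,
    "learning_rate_init": 0.0005,
    "validation_fraction": 0.2,
    "n_iter_no_change": 10,
    "random_state": 42,
}

ACTIVATIONS = ("relu", "tanh", "logistic", "identity")
SOLVERS = ("adam", "sgd", "lbfgs")


def build_nn_configs():
    """Hypothetical helper: map 'NN_<activation>_<solver>' to MLPRegressor kwargs."""
    configs = {}
    for activation, solver in product(ACTIVATIONS, SOLVERS):
        params = dict(SHARED_MLP_PARAMS)  # copy so the variants do not share one dict
        params.update(activation=activation, solver=solver)
        configs[f"NN_{activation}_{solver}"] = params
    return configs


if __name__ == "__main__":
    for name, params in build_nn_configs().items():
        print(name, params["activation"], params["solver"])
```

One point worth checking when reproducing the table: in scikit-learn, `learning_rate_init` and `n_iter_no_change` only take effect for the `sgd` and `adam` solvers, and `validation_fraction` is used only when `early_stopping=True`, which the table does not list. For the L-BFGS variants these settings are therefore inert.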

| Model | Training R² | Training RMSE (MPa) | Training MAE (MPa) | Testing R² | Testing RMSE (MPa) | Testing MAE (MPa) |
|---|---|---|---|---|---|---|
| Linear Regression | 0.9458 | 3.4444 | 2.5498 | 0.9361 | 3.4772 | 2.5336 |
| Ridge Regression | 0.9283 | 3.9631 | 3.1118 | 0.9252 | 3.8396 | 2.9846 |
| Lasso Regression | 0.9227 | 4.1143 | 3.2687 | 0.9165 | 4.0191 | 3.1724 |
| Decision Tree | 0.9516 | 3.2564 | 2.5564 | 0.8720 | 5.0196 | 4.1672 |
| Random Forest | 0.9853 | 2.2540 | 1.7457 | 0.9742 | 3.6311 | 3.0649 |
| Gradient Boosting | 0.9997 | 0.2370 | 0.1878 | 0.9889 | 1.5176 | 1.2563 |
| KNN | 0.9343 | 3.7902 | 2.9463 | 0.8303 | 5.6828 | 4.4394 |
| NN_relu_adam | 0.891 | 4.9126 | 3.8437 | 0.8616 | 5.3229 | 4.4137 |
| NN_relu_sgd | 0.9303 | 3.9113 | 3.0407 | 0.9292 | 3.6176 | 2.7816 |
| NN_relu_lbfgs | 0.9998 | 0.0161 | 0.0128 | 0.9728 | 2.4332 | 1.8011 |
| NN_tanh_adam | 0.9196 | 4.7176 | 3.2797 | 0.9193 | 3.9913 | 3.1923 |
| NN_tanh_sgd | 0.8278 | 8.2095 | 7.0393 | 0.7877 | 9.8173 | 8.6113 |
| NN_tanh_lbfgs | 0.9999 | 0.0083 | 0.0063 | 0.9946 | 1.5032 | 1.2545 |
| NN_logistic_adam | 0.8883 | 5.6788 | 4.0814 | 0.8745 | 4.9320 | 3.8481 |
| NN_logistic_sgd | 0.8897 | 7.1799 | 6.0262 | 0.8673 | 8.8247 | 7.3645 |
| NN_logistic_lbfgs | 0.9999 | 0.0292 | 0.0215 | 0.9737 | 2.1460 | 1.7765 |
| NN_identity_adam | 0.9328 | 4.0534 | 3.0960 | 0.9255 | 3.2970 | 2.6260 |
| NN_identity_sgd | 0.9186 | 4.3113 | 3.5019 | 0.9099 | 4.4797 | 3.5332 |
| NN_identity_lbfgs | 0.9458 | 3.4445 | 2.5479 | 0.9389 | 3.4737 | 2.5329 |
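The three metrics in this table have standard definitions: R² = 1 − Σ(yᵢ − ŷᵢ)² / Σ(yᵢ − ȳ)², RMSE = √(Σ(yᵢ − ŷᵢ)² / n) and MAE = Σ|yᵢ − ŷᵢ| / n. The plain-Python sketch below implements them. It assumes the authors used these textbook forms (the same quantities computed by scikit-learn's `r2_score`, `mean_squared_error` and `mean_absolute_error`), which the source does not state explicitly; the helper name `regression_metrics` and the example values are hypothetical.

```python
import math


def regression_metrics(y_true, y_pred):
    """Hypothetical helper: R², RMSE and MAE for observed vs. predicted Fc values."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)
    mean_obs = sum(y_true) / n
    residuals = [obs - pred for obs, pred in zip(y_true, y_pred)]
    ss_res = sum(r * r for r in residuals)                  # residual sum of squares
    ss_tot = sum((obs - mean_obs) ** 2 for obs in y_true)   # total sum of squares
    if ss_tot == 0:
        raise ValueError("R² is undefined when all observed values are equal")
    return {
        "R2": 1.0 - ss_res / ss_tot,
        "RMSE": math.sqrt(ss_res / n),  # same unit as Fc (MPa)
        "MAE": sum(abs(r) for r in residuals) / n,
    }


if __name__ == "__main__":
    # Hypothetical observed and predicted strengths in MPa.
    print(regression_metrics([40.0, 50.0, 60.0], [42.0, 50.0, 57.0]))
```

Because RMSE squares the residuals, it penalizes a few large errors more heavily than MAE does, so reporting both (as the table does) shows whether a model's errors are uniform or dominated by outliers.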

| Study | Method | Training R² | Training RMSE (MPa) | Testing R² | Testing RMSE (MPa) |
|---|---|---|---|---|---|
| Current study | NN_tanh_lbfgs | 0.9999 | 0.0083 | 0.9946 | 1.5032 |
| Jueyendah et al. [30] | SVM-RBF | 0.9987 | 1.297 | 0.9772 | 2.664 |
| Jueyendah et al. [30] | MLP | 0.9733 | 2.350 | 0.9621 | 3.327 |
| Jueyendah et al. [30] | RBF Network | 0.9772 | 2.172 | 0.947 | 3.800 |
| Jueyendah et al. [30] | GRNN | 0.9808 | 2.431 | 0.9198 | 4.830 |
| Emamian and Eskandari-Naddaf [52] | ANN | 0.9965 | 0.862 | 0.9467 | 3.558 |
| Emamian and Eskandari-Naddaf [52] | GEP | 0.9601 | 2.967 | 0.9429 | 3.386 |
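To put this comparison on a common scale, the relative reduction in testing RMSE achieved by NN_tanh_lbfgs over each earlier model can be computed as (RMSE_ref − RMSE_current) / RMSE_ref. The sketch below applies this to the testing RMSE values copied from the table; the percentage-reduction measure and the helper name `relative_reduction` are illustrative choices, not ones used in the source.

```python
# Testing RMSE values (MPa) copied from the comparison table.
CURRENT_TEST_RMSE = 1.5032  # NN_tanh_lbfgs, current study
REFERENCE_TEST_RMSE = {
    "SVM-RBF [30]": 2.664,
    "MLP [30]": 3.327,
    "RBF Network [30]": 3.800,
    "GRNN [30]": 4.830,
    "ANN [52]": 3.558,
    "GEP [52]": 3.386,
}


def relative_reduction(reference: float, current: float) -> float:
    """Hypothetical helper: percentage by which `current` RMSE is below `reference`."""
    return 100.0 * (reference - current) / reference


if __name__ == "__main__":
    for model, rmse in REFERENCE_TEST_RMSE.items():
        pct = relative_reduction(rmse, CURRENT_TEST_RMSE)
        print(f"{model:<16} {pct:5.1f} % lower testing RMSE")
```

Against the strongest earlier model (SVM-RBF) the reduction is about 43.6%. Unless the earlier studies used identical train/test splits, which the table does not indicate, these percentages are indicative rather than a strict head-to-head comparison.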

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jueyendah, S.; Yaman, Z.; Dere, T.; Çavuş, T.F. Comparative Study of Linear and Non-Linear ML Algorithms for Cement Mortar Strength Estimation. Buildings 2025, 15, 2932. https://doi.org/10.3390/buildings15162932
