Real-Time Progress Monitoring of Bricklaying

Abstract

1. Introduction

1.1. Automated Progress Monitoring

1.2. Automated Progress Monitoring Methods

1.3. Bricklaying Operations and Progress Monitoring

1.4. Computer Vision and Digital Twin

1.5. Research Gap, Novelty, and Objectives

- Development of a Data Acquisition System;

- Implementation of CV Algorithms for Brick Detection;

- Integration of Data into the BIM Environment;

- Progress Visualization.

2. Literature Review

2.1. Computer Vision

2.2. Image-Based Method for Indoor Construction Activities

2.3. Object Detection Importance

2.4. YOLO Object Detection Algorithm

2.5. Digital Twin

3. Proposed Model Methodology

4. Proposed Model Development

4.1. Data Acquisition

4.2. Wall Mapping and Brick Quantification

4.2.1. Digital Twin Wall Mapping

4.2.2. Brick Quantification

4.2.3. Implementation

4.3. Computer Vision Algorithm for Brick Detection

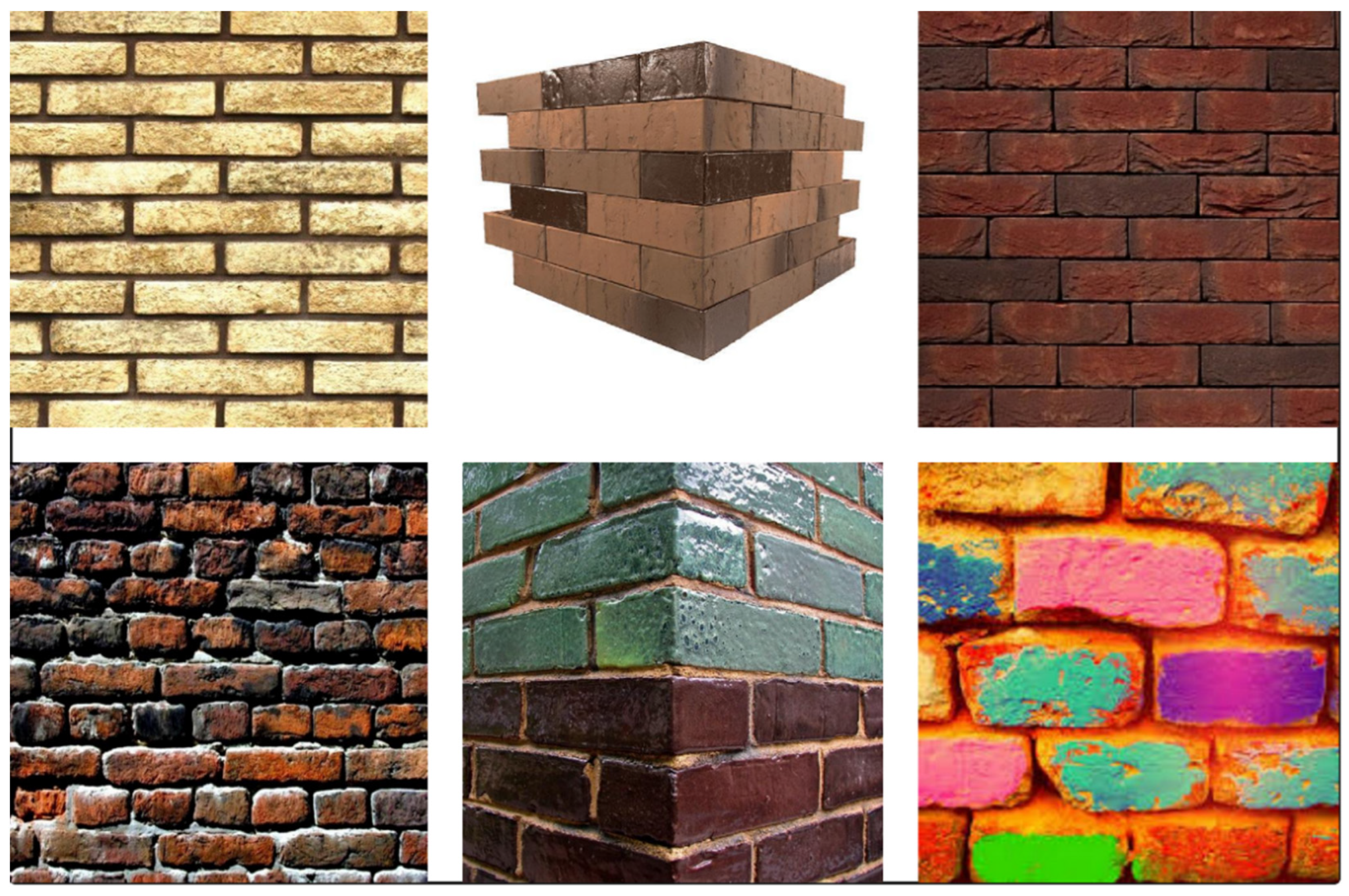

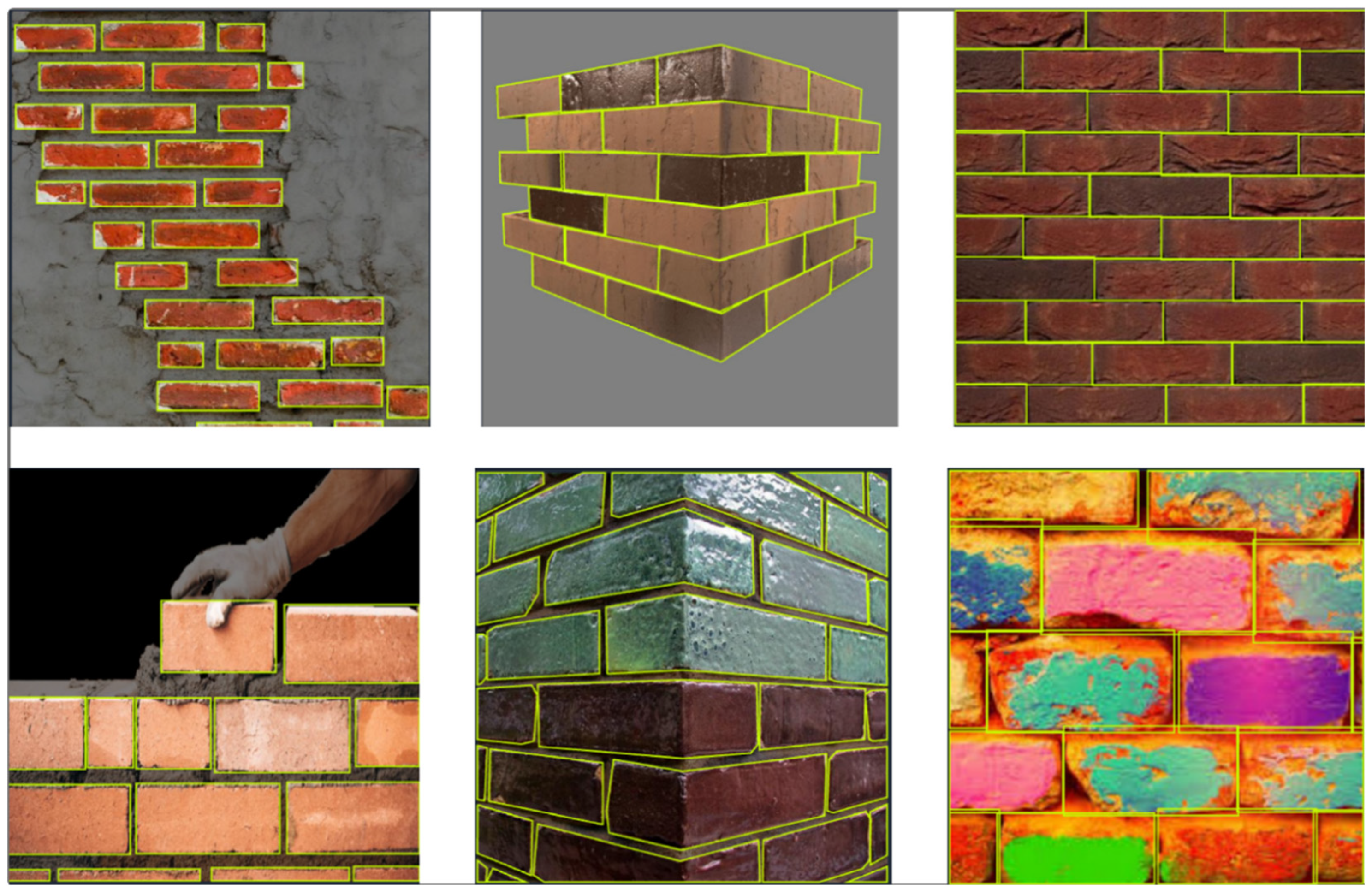

4.3.1. Dataset Creation

4.3.2. Dataset Annotation and Labeling

4.3.3. Data Augmentation and Dataset Partitioning

4.3.4. Computer Vision Algorithm Implementation

Dataset Preparation and Preprocessing

Model Architecture

- Backbone: Extracts features from the input image using convolution layers;

- Neck: Aggregates extracted features to focus on different scales of bricks;

- Head: Outputs the bounding box coordinates, label, and confidence score for each detected brick.
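As a rough illustration of what the head's output looks like to downstream code, the sketch below models one detection as a box, a label, and a confidence score, then counts the bricks in a frame. The `Detection` class, the `count_bricks` helper, the 0.5 threshold, and the sample values are all hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Detection:
    """One head output: box corners in pixels, class label, confidence score."""
    box: Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)
    label: str
    confidence: float


def count_bricks(detections: List[Detection], min_confidence: float = 0.5) -> int:
    """Count detections labeled 'brick' at or above the confidence threshold."""
    return sum(
        1
        for d in detections
        if d.label == "brick" and d.confidence >= min_confidence
    )


# Hypothetical detections for a single frame (illustrative values only).
frame = [
    Detection((10, 20, 110, 60), "brick", 0.93),
    Detection((115, 20, 215, 60), "brick", 0.88),
    Detection((220, 20, 320, 60), "brick", 0.31),  # below threshold, ignored
]
print(count_bricks(frame))
```

Filtering on confidence before counting is one simple way to keep low-certainty boxes out of the brick tally; the appropriate threshold would need to be tuned on validation data.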

Hyperparameter Tuning

1. Model Architecture: Prioritizing Inference Speed

2. Epochs: Improving Generalization

3. Batch Size: Choosing the Most Balanced Model

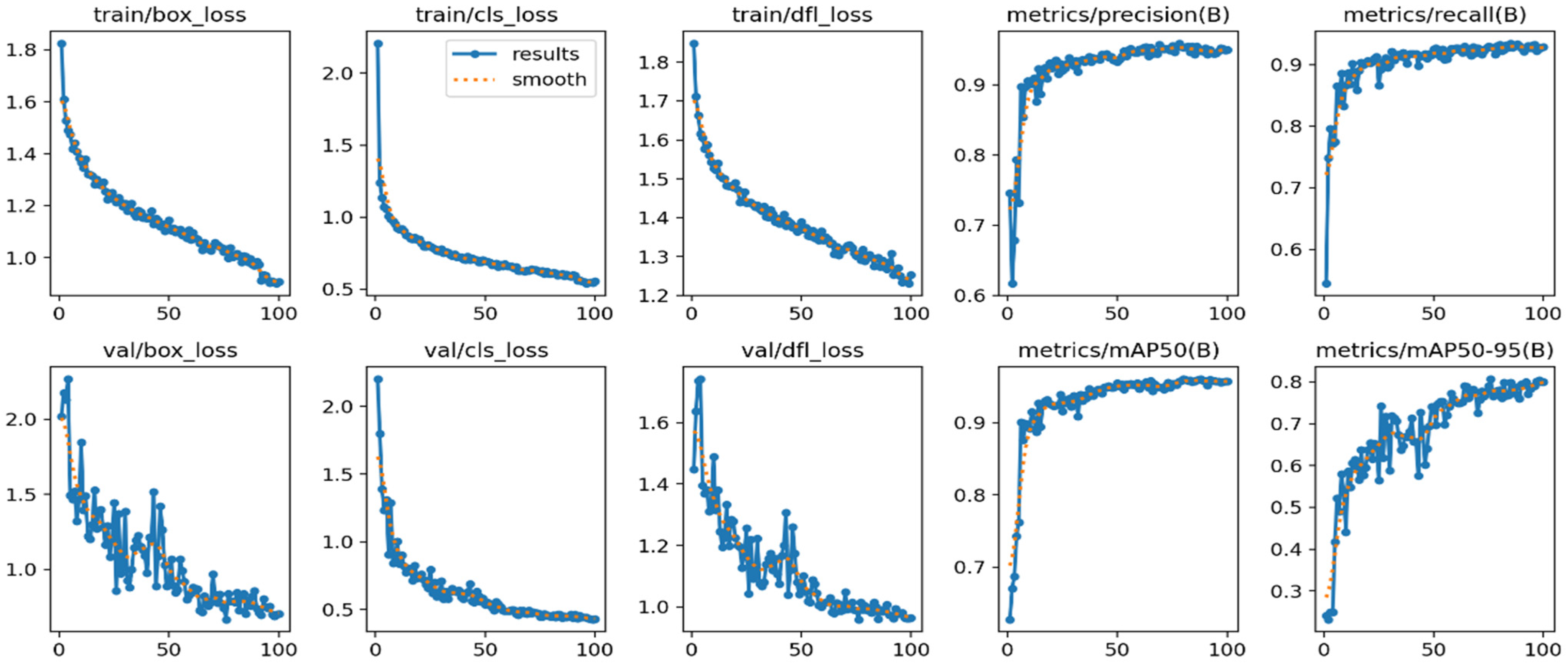

- Epochs: The model is trained over one hundred (100) epochs to ensure adequate learning while avoiding overfitting;

- Batch Size: A batch size of thirty-two (32) is used to process multiple images at once, balancing computational efficiency with model performance;

- Learning Rate: The learning rate is set to 0.01;

- Image Size: Images are resized to 640 × 640 pixels, optimizing detection accuracy while maintaining training efficiency;

- Early Stopping: Training halts after 10 consecutive epochs without improvement, to prevent overfitting.
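The settings listed above could be passed to the Ultralytics training API roughly as follows. This is a sketch under assumptions: the dataset file `bricks.yaml` is a hypothetical name, the `yolov8n.pt` starting weights are inferred from the YOLOv8n rows of the comparison table, and mapping the stated learning rate to the `lr0` (initial learning rate) argument is an assumption.

```python
def build_train_args(data_yaml: str) -> dict:
    """Collect the listed hyperparameters as Ultralytics `train` keyword arguments."""
    return {
        "data": data_yaml,  # path to the dataset YAML (hypothetical file name)
        "epochs": 100,      # maximum number of training epochs
        "batch": 32,        # images per batch
        "lr0": 0.01,        # initial learning rate (assumed mapping)
        "imgsz": 640,       # images resized to 640 x 640 pixels
        "patience": 10,     # stop after 10 epochs without improvement
    }


def train(data_yaml: str = "bricks.yaml", weights: str = "yolov8n.pt"):
    """Launch training; needs `pip install ultralytics` and a prepared dataset."""
    # Imported lazily so the configuration can be inspected without the package.
    from ultralytics import YOLO

    model = YOLO(weights)
    return model.train(**build_train_args(data_yaml))


if __name__ == "__main__":
    print(build_train_args("bricks.yaml"))
```

Keeping the hyperparameters in one dictionary makes it easy to rerun the same configuration with a different architecture or batch size when comparing variants.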

Training the Model

- Localization Loss: Measures the error in bounding box coordinates;

- Classification Loss: Measures the error in classifying the detected brick;

- Confidence Loss: Measures the error in the confidence score assigned to each prediction.
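To make the three loss components concrete, the following sketch combines an IoU-based localization term with cross-entropy terms for classification and confidence. It is a simplified illustration, not the exact formulation used inside YOLOv8; the function names, the equal weights, and the use of `1 - IoU` for localization are assumptions.

```python
import math


def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def binary_cross_entropy(p, target, eps=1e-7):
    """Cross-entropy between a predicted probability and a 0/1 target."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def total_loss(pred_box, true_box, class_prob, confidence,
               w_box=1.0, w_cls=1.0, w_conf=1.0):
    """Weighted sum of the three terms for one matched brick (weights assumed)."""
    localization = 1.0 - iou(pred_box, true_box)           # box coordinate error
    classification = binary_cross_entropy(class_prob, 1.0)  # true class is 'brick'
    conf = binary_cross_entropy(confidence, 1.0)            # an object is present
    return w_box * localization + w_cls * classification + w_conf * conf


# A well-aligned, confident prediction yields a small loss.
print(total_loss((0, 0, 10, 5), (0, 0, 10, 5), class_prob=0.99, confidence=0.95))
```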

Training Performance and Early Stopping

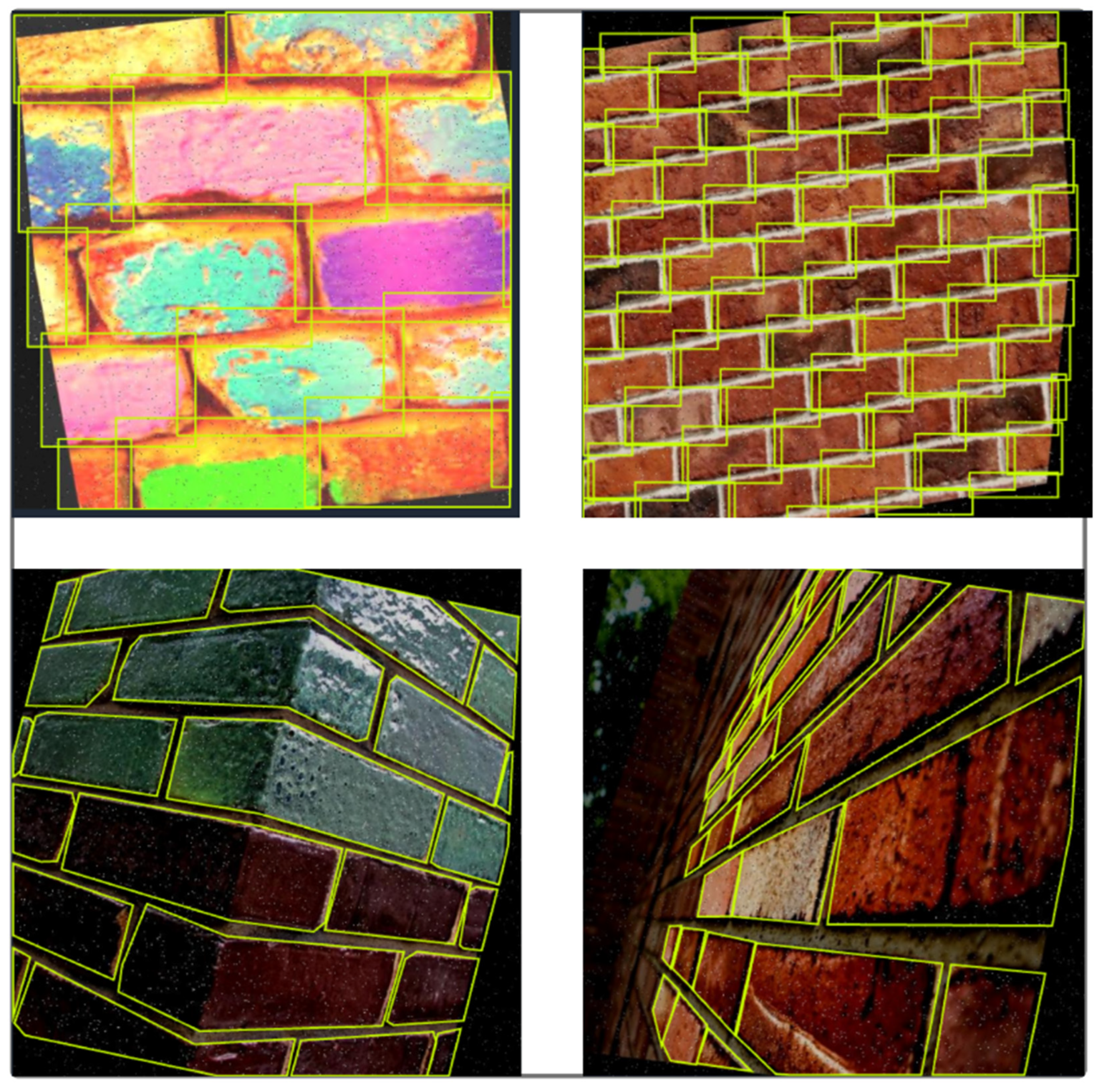

Inference and Prediction

Real-Time BIM Integration Logic and Error Handling

4.4. Progress Monitoring

4.5. Validation and Performance Metrics

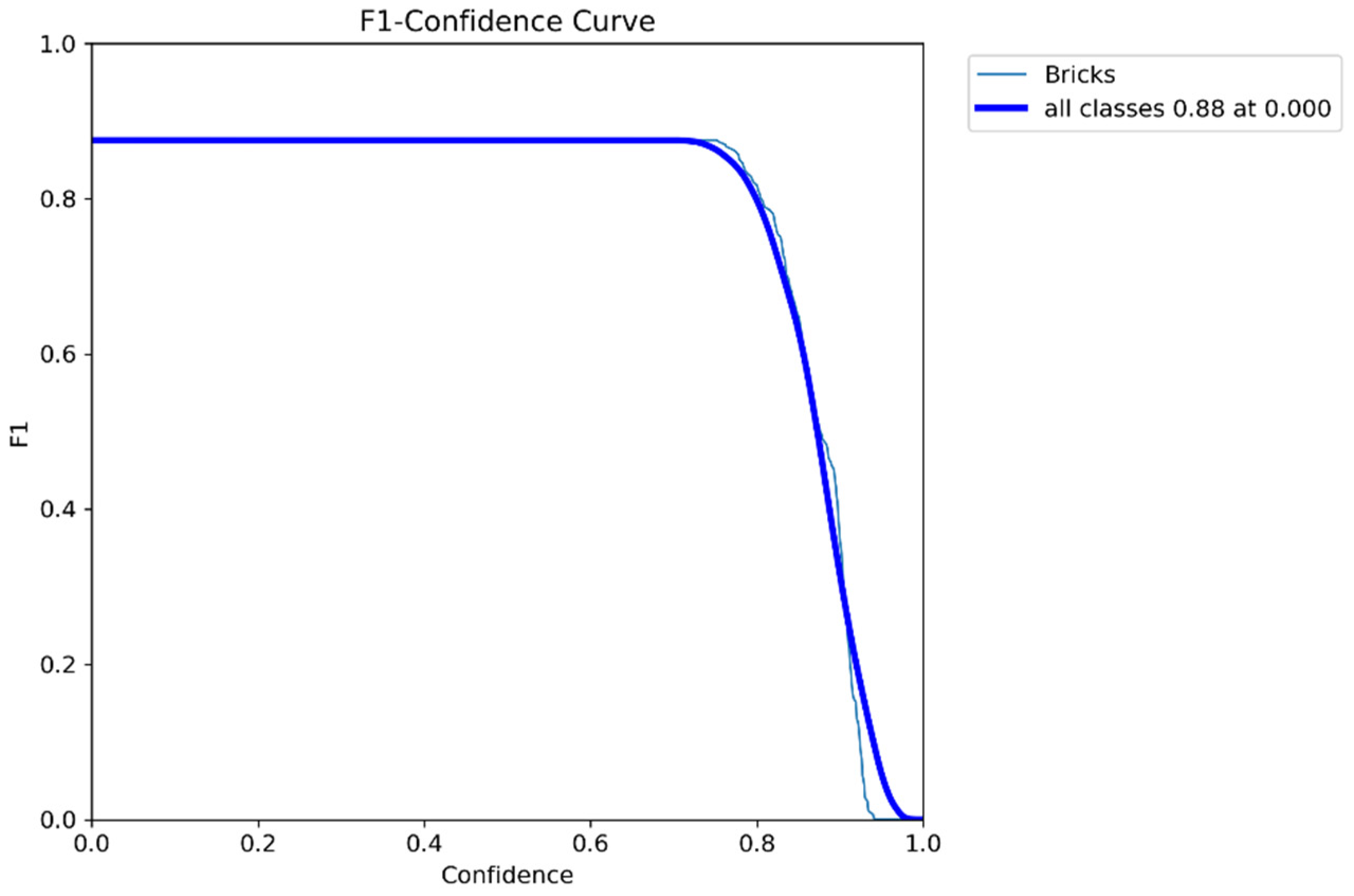

4.5.1. YOLOv8 Testing and Validation

4.5.2. Generalization Evaluation

- Precision: 0.996,

- Recall: 0.781,

- mAP50: 0.889,

- mAP50-95: 0.767.
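For readers who want a single figure summarizing the precision and recall listed above, the sketch below shows how both metrics follow from detection counts and how they combine into an F1 score. The F1 value is derived here for illustration and is not reported in the paper; the function names are hypothetical.

```python
def precision_recall(true_pos: int, false_pos: int, false_neg: int):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    return precision, recall


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall from the generalization evaluation above.
print(round(f1_score(0.996, 0.781), 3))  # 0.875
```

The gap between the two metrics suggests that, on unseen data, almost every reported brick is a real brick while roughly one in five bricks is missed.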

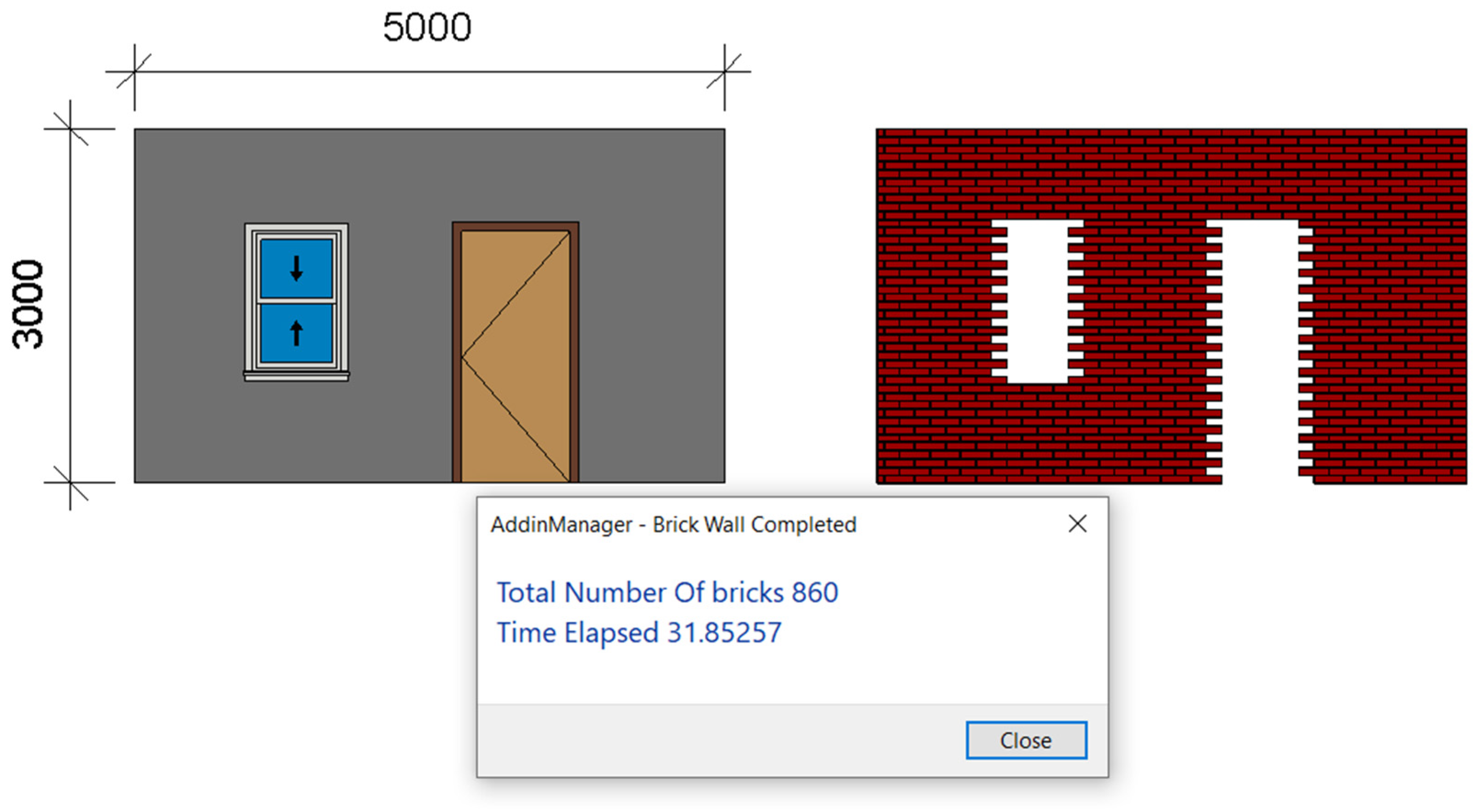

4.5.3. Autodesk Revit© Plug-in Validation

- Performance Metrics

5. Discussion

- Reduction of human error through real-time, automated detection and quantification of bricklaying progress;

- Accelerated updates to project schedules, enabling more responsive decision-making based on live site data;

- Improved resource allocation and cost control through accurate progress tracking and integration into the BIM environment.

5.1. Proposed Model

- Model-level validation: The YOLOv8 model was evaluated using a dedicated test set to confirm detection accuracy;

- System-level validation: The complete framework—including the Autodesk Revit© plug-in—was assessed in a simulated BIM environment.

Despite the promising results, this research has several limitations:

- Dataset source limitations: Images were sourced online and from local construction sites, which may not comprehensively reflect the variability of actual bricklaying tasks across different projects;

- Indoor environment focus: The proposed model has not been tested in outdoor settings, where dynamic lighting and weather could affect performance;

- Task specificity: While the approach is optimized for bricklaying, extending it to other construction tasks would require further customization.

5.2. Comparative Analysis

6. Conclusions and Recommendations

6.1. Conclusions

- High Detection Accuracy: The YOLOv8 model achieved a mean Average Precision of 95.4% (mAP50) and 80.7% (mAP50-95) on the test dataset, ensuring reliable, brick-level detection suitable for real-time tracking in complex indoor construction environments.

- Automation and Speed: The Autodesk Revit© plug-in demonstrated rapid performance by quantifying bricks in a 5 m × 3 m wall model in approximately 32 s, compared to traditional manual quantification methods that typically require much more effort and time.

- Productivity Gains: The automated monitoring process increases productivity by a factor of 5 to 10, reducing reliance on manual inspections and reporting. These gains directly translate into cost savings and faster project cycle times, especially in labor-intensive indoor activities such as bricklaying.

- Enhanced BIM Integration: By linking detected brick counts to BIM’s as-planned models, the system enables real-time updates to 4D schedules. This facilitates accurate comparisons between planned and actual progress, leading to more informed forecasting and better resource planning.
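The planned-versus-actual comparison mentioned in the last point can be reduced to a simple ratio. The sketch below is a minimal illustration, assuming the as-planned brick count comes from the BIM model and the detected count from the vision model; the function name and the sample numbers are hypothetical.

```python
def progress_percent(detected_bricks: int, planned_bricks: int) -> float:
    """Share of the as-planned brick count that has been detected as laid."""
    if planned_bricks <= 0:
        raise ValueError("planned_bricks must be positive")
    # Cap at 100% so over-detection does not report more than a complete wall.
    return min(100.0, 100.0 * detected_bricks / planned_bricks)


# Hypothetical wall: 1200 bricks planned, 300 detected so far.
print(progress_percent(300, 1200))
```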

6.2. Recommendations for Future Work

- Dataset Expansion: Augmenting the training data with more diverse site conditions to improve model adaptability and generalization.

- On-site Validation: Conducting field experiments on active construction sites to evaluate real-world performance under varying environmental conditions.

- Broader Applicability: Adapting the YOLO-based detection framework for other indoor tasks (e.g., drywall, tiling) to expand its utility across multiple trades.

- Integration with Broader Monitoring Systems: Combining this system with additional sensors or IoT devices to create a more holistic, multi-source progress monitoring platform.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Characteristic | Category | Percentage |
|---|---|---|
| Occlusions | Bricks without Occlusions | 89% |
| | Bricks with Occlusions | 11% |
| Material | Concrete Bricks | 26% |
| | Clay Bricks | 27% |
| | Rocks | 9% |
| | Sand Lime Bricks | 38% |
| Shape | Square | 4% |
| | Rectangular | 87% |
| | Combined | 9% |

| Metric (%) | YOLOv8n, Epochs = 20, Batch Size = 32 | YOLOv8s, Epochs = 20, Batch Size = 32 | YOLOv8n, Epochs = 100, Batch Size = 32 | YOLOv8n, Epochs = 100, Batch Size = 16 |
|---|---|---|---|---|
| mAP50 | 94.6 | 94.3 | 95.4 | 95.6 |
| mAP50-95 | 71.8 | 73.8 | 80.7 | 80.5 |
| Precision | 94 | 93.7 | 94.6 | 95 |
| Recall | 92.4 | 92.7 | 93 | 93.1 |

| Brightness | Precision | Recall | mAP50 | mAP50-95 |
|---|---|---|---|---|
| −100 | 0.902 | 0.881 | 0.915 | 0.751 |
| −50 | 0.905 | 0.903 | 0.927 | 0.825 |
| 50 | 0.906 | 0.881 | 0.93 | 0.844 |
| 100 | 0.852 | 0.881 | 0.927 | 0.788 |
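One plausible way to produce test images at the brightness levels in the table is an additive pixel shift with clamping, sketched below in plain Python. Treating the brightness values as offsets on an 8-bit (0 to 255) intensity scale is an assumption, as is the `shift_brightness` helper; the paper's actual adjustment procedure may differ.

```python
def shift_brightness(pixels, offset):
    """Add `offset` to every 8-bit intensity, clamping the result to [0, 255]."""
    return [[min(255, max(0, value + offset)) for value in row] for row in pixels]


# Tiny hypothetical grayscale image (two rows, three pixels each).
image = [
    [0, 100, 250],
    [30, 128, 200],
]

for offset in (-100, -50, 50, 100):
    print(offset, shift_brightness(image, offset))
```

Clamping matters at the extremes: large positive or negative shifts saturate bright or dark regions, which removes texture and may explain part of the metric drop at −100 and 100.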


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Magdy, R.; Hamdy, K.A.; Essawy, Y.A.S. Real-Time Progress Monitoring of Bricklaying. Buildings 2025, 15, 2456. https://doi.org/10.3390/buildings15142456
